Artificial Cognition for Detection of Mental Disability: A Vision Transformer Approach for Alzheimer’s Disease

Abstract

1. Introduction

2. Literature Review

2.1. Machine Learning Methods

2.2. Deep Learning Methods

3. Proposed Methodology

3.1. Data

3.2. Data Preparation

3.3. Preprocessing

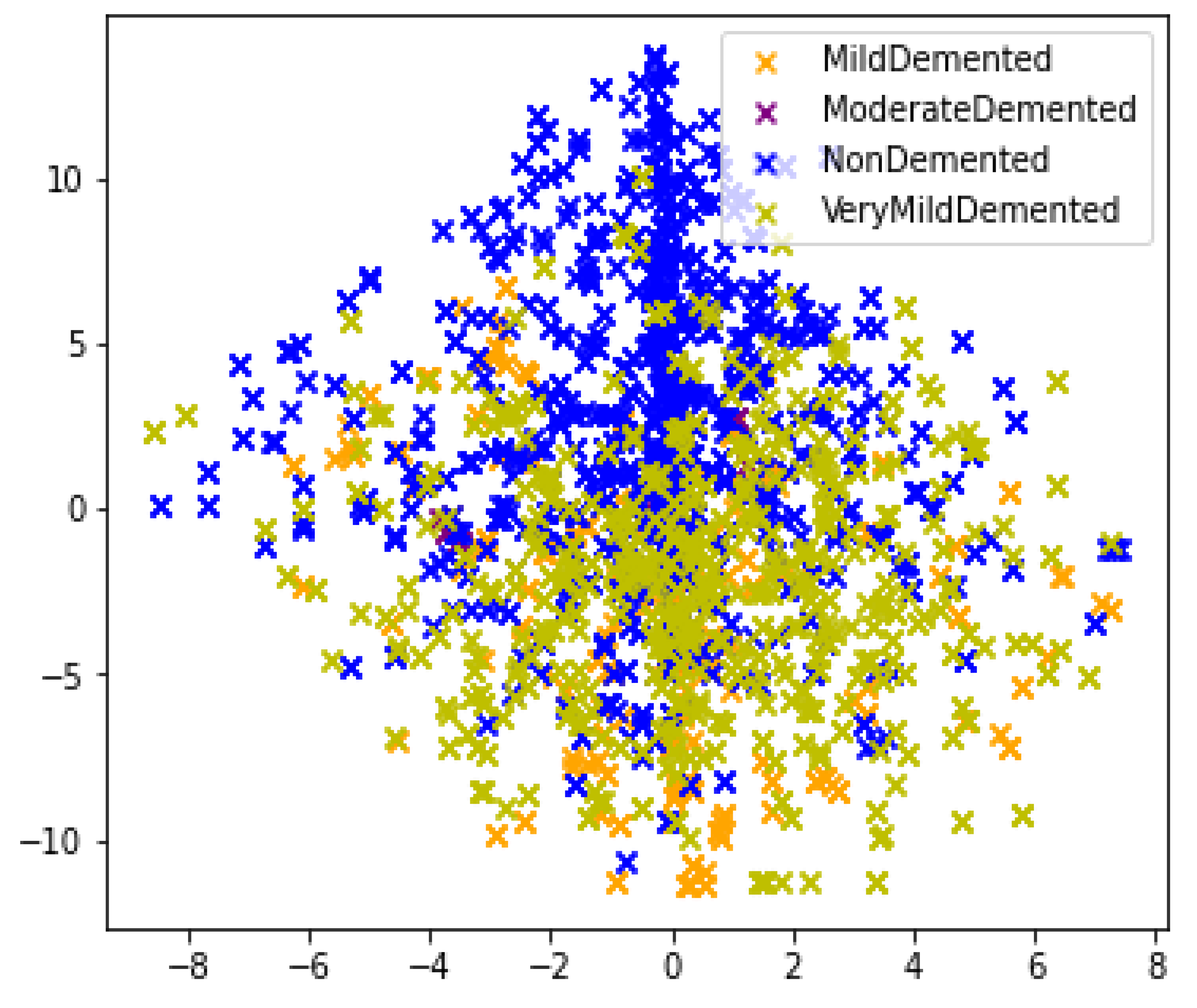

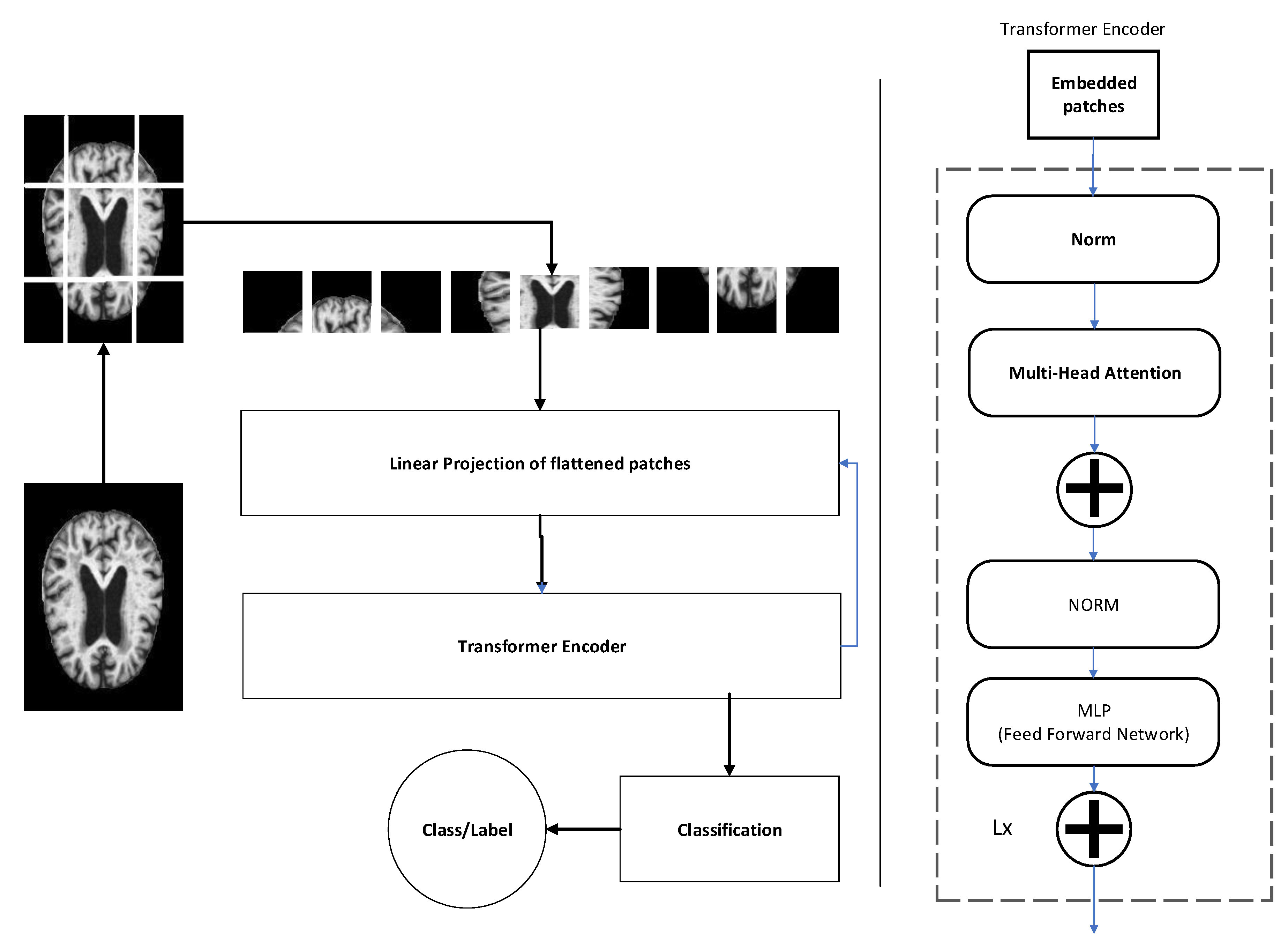

3.4. Feature Extraction

3.5. Classification

4. Evaluation Metrics

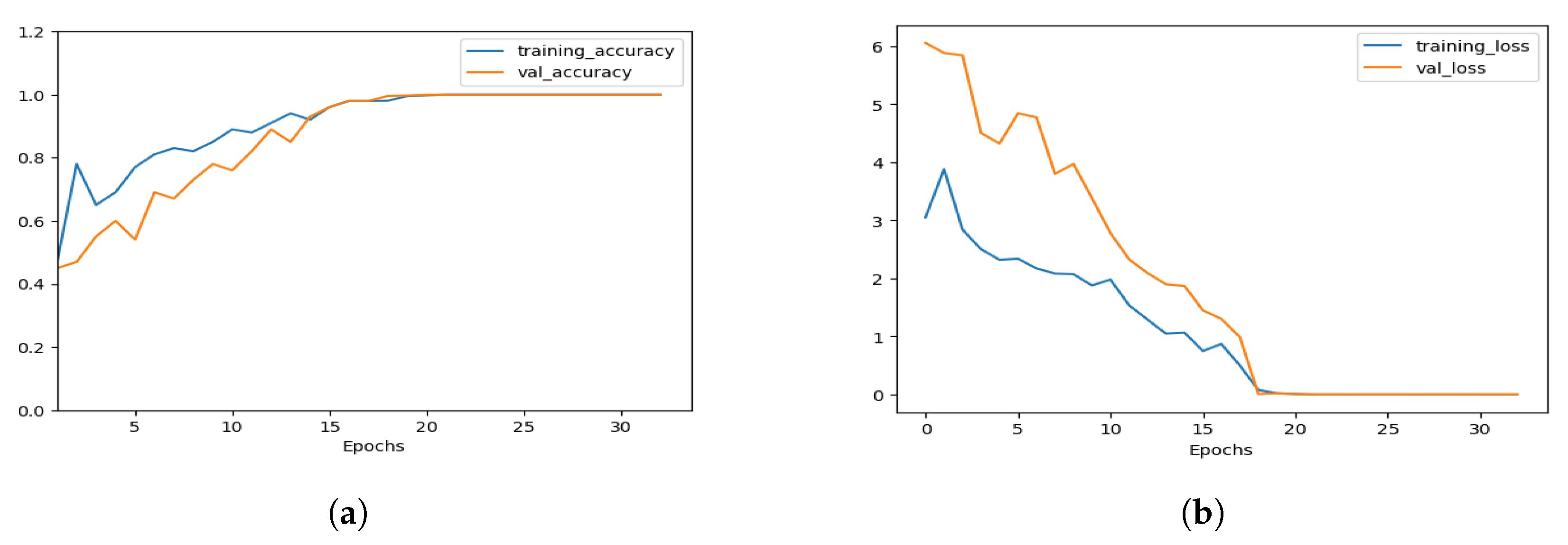

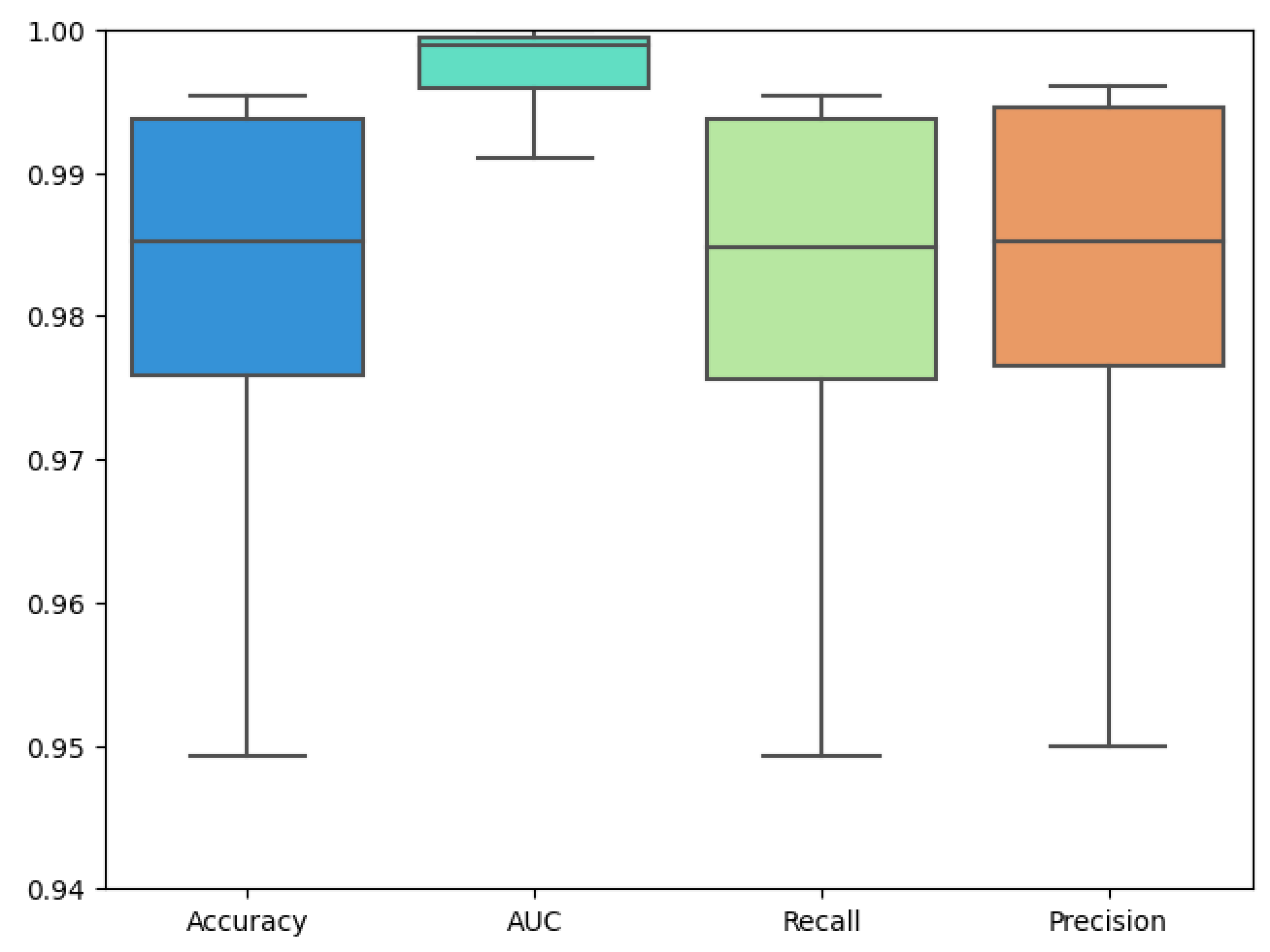

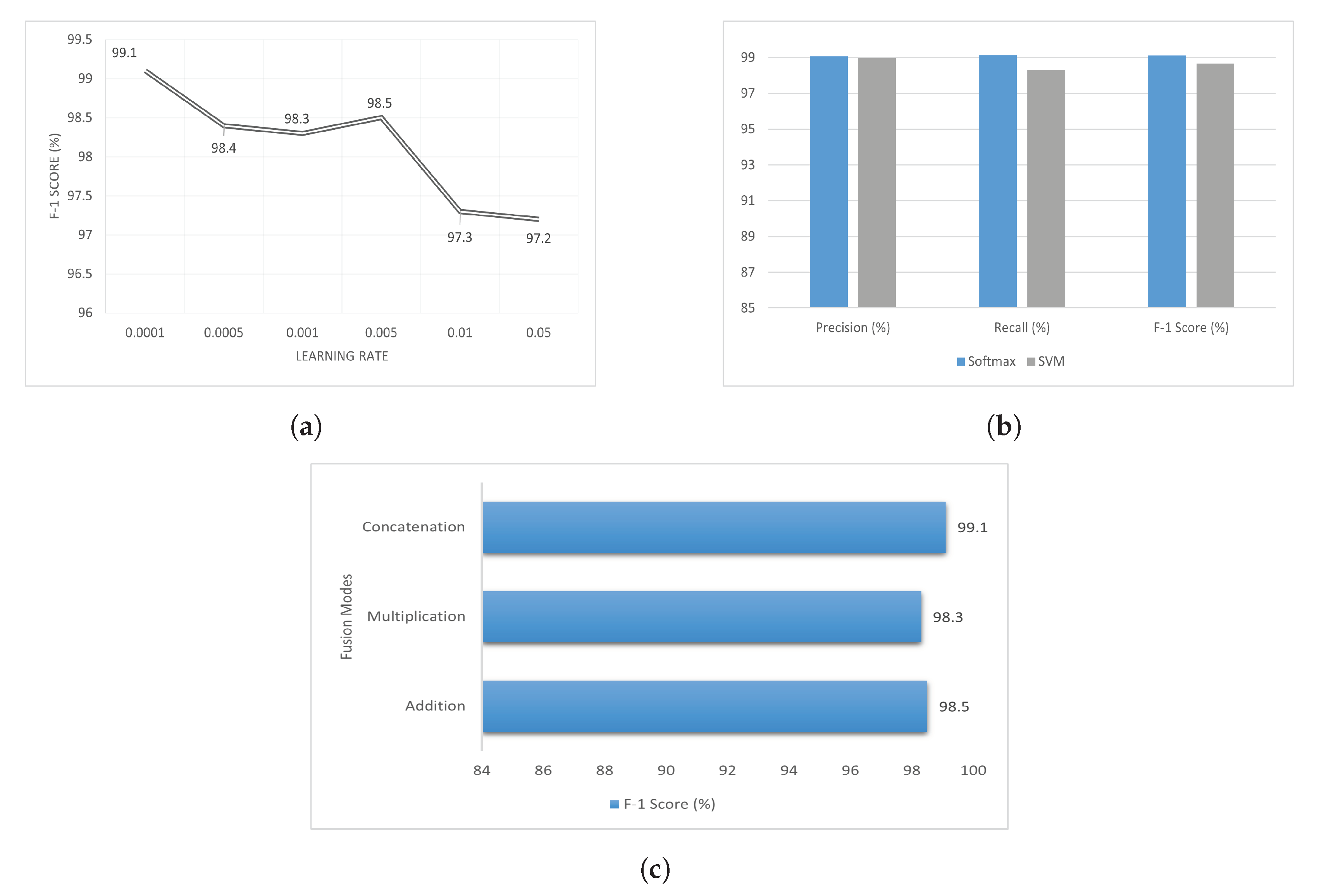
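The result tables in this article report precision, recall, and F1-score for each class. As a reference sketch, the standard definitions of these metrics are given here, where $TP_c$, $FP_c$, and $FN_c$ (symbols assumed for illustration, not taken from the article) denote the true positives, false positives, and false negatives for class $c$:

$$
\mathrm{Precision}_c = \frac{TP_c}{TP_c + FP_c}, \qquad
\mathrm{Recall}_c = \frac{TP_c}{TP_c + FN_c}, \qquad
\mathrm{F1}_c = \frac{2\,\mathrm{Precision}_c\,\mathrm{Recall}_c}{\mathrm{Precision}_c + \mathrm{Recall}_c}
$$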

5. Result and Analysis

6. Limitations and Future Recommendations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Tomassini, S.; Sbrollini, A.; Covella, G.; Sernani, P.; Falcionelli, N.; Müller, H.; Morettini, M.; Burattini, L.; Dragoni, A.F. Brain-on-Cloud for automatic diagnosis of Alzheimer’s disease from 3D structural magnetic resonance whole-brain scans. Comput. Methods Programs Biomed. 2022, 227, 107191.

- Hamza, M.; Tehsin, S.; Humayun, M.; Almufareh, M.F.; Alfayad, M. A Comprehensive Review of Face Morph Generation and Detection of Fraudulent Identities. Appl. Sci. 2022, 12, 12545.

- Tehsin, S.; Kausar, S.; Jameel, A.; Humayun, M.; Almofarreh, D.K. Satellite Image Categorization Using Scalable Deep Learning. Appl. Sci. 2023, 13, 5108.

- Alrayes, F.S.; Asiri, M.M.; Maashi, M.S.; Nour, M.K.; Rizwanullah, M.; Osman, A.E.; Drar, S.; Zamani, A.S. Waste classification using vision transformer based on multilayer hybrid convolution neural network. Urban Clim. 2023, 49, 101483.

- Almufareh, M.F.; Tehsin, S.; Humayun, M.; Kausar, S. A Transfer Learning Approach for Clinical Detection Support of Monkeypox Skin Lesions. Diagnostics 2023, 13, 1503.

- Loh, H.W.; Ooi, C.P.; Seoni, S.; Barua, P.D.; Molinari, F.; Acharya, U.R. Application of explainable artificial intelligence for healthcare: A systematic review of the last decade (2011–2022). Comput. Methods Programs Biomed. 2022, 226, 107161.

- Dicuonzo, G.; Donofrio, F.; Fusco, A.; Shini, M. Healthcare system: Moving forward with artificial intelligence. Technovation 2023, 120, 102510.

- Klöppel, S.; Stonnington, C.M.; Chu, C.; Draganski, B.; Scahill, R.I.; Rohrer, J.D.; Fox, N.C.; Jack, C.R.; Ashburner, J.; Frackowiak, R.S.J. Automatic classification of MR scans in Alzheimer’s disease. Brain 2008, 131, 681–689.

- Gray, K.R.; Aljabar, P.; Heckemann, R.A.; Hammers, A.; Rueckert, D. Random forest-based similarity measures for multi-modal classification of Alzheimer’s disease. NeuroImage 2013, 65, 167–175.

- Neati, S.; Abdellafou, K.B.; Jael, I.; Taouali, O.; Bouzrara, K. An improved machine learning technique based on downsized KPCA for Alzheimer’s disease classification. Int. J. Imaging Syst. Technol. 2019, 29, 121–131.

- Ahmed, O.B.; Benois-Pineau, J.; Allard, M.; Amar, C.B.; Catheline, G. Classification of Alzheimer’s disease subjects from MRI using hippocampal visual features. Multimed. Tools Appl. 2014, 74, 1249–1266.

- El-Dahshan, E.S.A.; Hosny, T.; Salem, A.B.M. Hybrid intelligent techniques for MRI brain images classification. Digit. Signal Process. 2010, 20, 433–441.

- Wang, S.-H.; Zhang, Y.; Li, Y.-J.; Jia, W.-J.; Liu, F.-Y.; Yang, M.-M.; Zhang, Y.-D. Single slice based detection for Alzheimer’s disease via wavelet entropy and multilayer perceptron trained by biogeography-based optimization. Multimed. Tools Appl. 2018, 77, 10393–10417.

- Li, F.; Tran, L.; Thung, K.H.; Ji, S.; Shen, D.; Li, J. A robust deep model for improved classification of AD/MCI patients. IEEE J. Biomed. Health Inform. 2015, 19, 1610–1616.

- Böhle, M.; Eitel, F.; Weygandt, M.; Ritter, K. Layer-wise relevance propagation for explaining deep neural network decisions in MRI-based Alzheimer’s disease classification. Front. Aging Neurosci. 2019, 11, 194.

- Yang, K.; Mohammed, E.A. A review of artificial intelligence technologies for early prediction of Alzheimer’s disease. arXiv 2020, arXiv:2101.01781.

- Govindaraj, V.V. High performance multiple sclerosis classification by data augmentation and AlexNet transfer learning model. J. Med. Imaging Health Inform. 2019, 9, 2012–2021.

- Lu, S. Pathological brain detection based on AlexNet and transfer learning. J. Comput. Sci. 2019, 30, 41–47.

- Nguyen, M.; He, T.; An, L.; Alexander, D.C.; Feng, J.; Yeo, B.T.T.; Alzheimer’s Disease Neuroimaging Initiative. Predicting Alzheimer’s disease progression using deep recurrent neural networks. Neuroimage 2020, 222, 117203.

- Alessandrini, M.; Biagetti, G.; Crippa, P.; Falaschetti, L.; Luzzi, S.; Turchetti, C. EEG-Based Alzheimer’s disease recognition using robust-PCA and LSTM recurrent neural network. Sensors 2022, 22, 3696.

- Aqeel, A.; Hassan, A.; Khan, M.A.; Rehman, S.; Tariq, U.; Kadry, S.; Majumdar, A.; Thinnukool, O. A long short-term memory biomarker-based prediction framework for Alzheimer’s disease. Sensors 2022, 22, 1475.

- Ren, F.; Yang, C.; Qiu, Q.; Zeng, N.; Cai, C.; Hou, C.; Zou, Q. Exploiting discriminative regions of brain slices based on 2D CNNs for Alzheimer’s disease classification. IEEE Access 2019, 7, 181423–181433.

- Zhang, Y.; Teng, Q.; Liu, Y.; Liu, Y.; He, X. Diagnosis of Alzheimer’s disease based on regional attention with sMRI gray matter slices. J. Neurosci. Methods 2021, 365, 109376.

- Suk, H.I.; Wee, C.Y.; Lee, S.W.; Shen, D. State-space model with deep learning for functional dynamics estimation in resting-state fMRI. NeuroImage 2016, 129, 292–307.

- Shi, B.; Chen, Y.; Zhang, P.; Smith, C.D.; Liu, J.; Alzheimer’s Disease Neuroimaging Initiative. Nonlinear feature transformation and deep fusion for Alzheimer’s Disease staging analysis. Pattern Recognit. 2017, 63, 487–498.

- Faturrahman, M.; Wasito, I.; Hanifah, N.; Mufidah, R. Structural MRI classification for Alzheimer’s disease detection using deep belief network. In Proceedings of the 11th International Conference on Information & Communication Technology and System (ICTS), Surabaya, Indonesia, 31 October 2017; pp. 37–42.

- Shakeri, M.; Lombaert, H.; Tripathi, S.; Kadoury, S.; Alzheimer’s Disease Neuroimaging Initiative. Deep spectral-based shape features for Alzheimer’s disease classification. In Proceedings of the International Workshop on Spectral and Shape Analysis in Medical Imaging, Athens, Greece, 21 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 15–24.

- Lu, D.; Popuri, K.; Ding, G.W.; Balachandar, R.; Beg, M.F.; Alzheimer’s Disease Neuroimaging Initiative. Multiscale deep neural network based analysis of FDG-PET images for the early diagnosis of Alzheimer’s disease. Med. Image Anal. 2018, 46, 26–34.

- Kushol, R.; Masoumzadeh, A.; Huo, D.; Kalra, S.; Yang, Y.H. Addformer: Alzheimer’s disease detection from structural mri using fusion transformer. In Proceedings of the 2022 IEEE 19th International Symposium On Biomedical Imaging (ISBI), Kolkata, India, 28–31 March 2022; IEEE: Piscataway Township, NJ, USA, 2022; pp. 1–5.

- Miltiadous, A.; Gionanidis, E.; Tzimourta, K.D.; Giannakeas, N.; Tzallas, A.T. DICE-net: A Novel Convolution-Transformer Architecture for Alzheimer Detection in EEG Signals. IEEE Access 2023, 11, 71840–71858.

- Islam, J.; Zhang, Y. A novel deep learning based multi-class classification method for Alzheimer’s disease detection using brain MRI data. In Proceedings of the Brain Informatics: International Conference, BI 2017, Beijing, China, 16–18 November 2017; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 213–222.

- OASIS Alzheimer’s Detection. Available online: https://www.kaggle.com/datasets/ninadaithal/imagesoasis (accessed on 10 June 2023).

- OASIS-1: Cross-Sectional: Principal Investigators: D. Marcus, R. Buckner, J. Csernansky, J. Morris; P50 AG05681, P01 AG03991, P01 AG026276, R01 AG021910, P20 MH071616, U24 RR021382. Available online: https://www.oasis-brains.org/ (accessed on 10 June 2023).

- The Mini-Mental State Exam for Alzheimer’s. Available online: https://www.verywellhealth.com/mini-mental-state-exam-as-an-alzheimers-screening-test-98623 (accessed on 25 May 2023).

- Saba, T.; Khan, S.U.; Islam, N.; Abbas, N.; Rehman, A.; Javaid, N.; Anjum, A. Cloud-based decision support system for the detection and classification of malignant cells in breast cancer using breast cytology images. Microsc. Res. Tech. 2019, 82, 775–785.

- Ayadi, M.; Ksibi, A.; Al-Rasheed, A.; Soufiene, B.O. COVID-AleXception: A deep learning model based on a deep feature concatenation approach for the detection of COVID-19 from chest X-ray images. Healthcare 2022, 10, 2072.

- Kothadiya, D.; Rehman, A.; Abbas, S.; Alamri, F.S.; Saba, T. Attention-based deep learning framework to recognize diabetes disease from cellular retinal images. Biochem. Cell Biol. 2023. ahead of print.

- Hernández-Guedes, A.; Santana-Perez, I.; Arteaga-Marrero, N.; Fabelo, H.; Callico, G.M.; Ruiz-Alzola, J. Performance Evaluation of Deep Learning Models for Image Classification Over Small Datasets: Diabetic Foot Case Study. IEEE Access 2022, 10, 124373–124386.

- Hussain, Z.; Gimenez, F.; Yi, D.; Rubin, D. Differential data augmentation techniques for medical imaging classification tasks. AMIA Annu. Symp. Proc. 2017, 2017, 979.

- Shah, M.; Xiao, Y.; Subbanna, N.; Francis, S.; Arnold, D.L.; Collins, D.L.; Arbel, T. Evaluating intensity normalization on MRIs of human brain with multiple sclerosis. Med. Image Anal. 2011, 15, 267–282.

- Boyes, R.G.; Gunter, J.L.; Frost, C.; Janke, A.L.; Yeatman, T.; Hill, D.L.G.; Bernstein, M.A.; Thompson, P.M.; Weiner, M.W.; Schuff, N.; et al. Intensity non-uniformity correction using N3 on 3-T scanners with multichannel phased array coils. Neuroimage 2008, 39, 1752–1762.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–15.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Tolstikhin, I.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J.; et al. Mlp-mixer: An all-mlp architecture for vision. Adv. Neural Inf. Process. Syst. 2021, 34, 24261–24272.

- Kabir, A.; Kabir, F.; Mahmud, M.A.H.; Sinthia, S.A.; Azam, S.R.; Hussain, E.; Parvez, M.Z. Multi-Classification based Alzheimer’s Disease Detection with Comparative Analysis from Brain MRI Scans using Deep Learning. In Proceedings of the TENCON 2021–2021 IEEE Region 10 Conference (TENCON), Auckland, New Zealand, 7–10 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 905–910.

- Qureshi, S.A.; Raza, S.E.A.; Hussain, L.; Malibari, A.A.; Nour, M.K.; Rehman, A.U.; Al-Wesabi, F.N.; Hilal, A.M. Intelligent ultra-light deep learning model for multi-class brain tumor detection. Appl. Sci. 2022, 12, 3715.

- Qureshi, S.A.; Hussain, L.; Ibrar, U.; Alabdulkreem, E.; Nour, M.K.; Alqahtani, M.S.; Nafie, F.M.; Mohamed, A.; Mohammed, G.P.; Duong, T.Q. Radiogenomic classification for MGMT promoter methylation status using multi-omics fused feature space for least invasive diagnosis through mpMRI scans. Sci. Rep. 2023, 13, 3291.

| Disease | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| ND | 100 | 100 | 100 |
| VMD | 99.99 | 100 | 99.99 |
| MID | 100 | 100 | 100 |
| MOD | 100 | 100 | 100 |

| Methodology | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| Kabir et al. (2021) [45] | 92.78 | 90.78 | 0.94 |
| EfficientNetV2B1 | 90.37 | 89.76 | 90.06 |
| InceptionResnetV2 | 97.4 | 94.76 | 95.80 |
| InceptionV3 | 98.13 | 97.72 | 98.05 |
| Proposed | 99.99 | 99.99 | 99.99 |
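As a quick illustration of how the F1 column relates to the other two, the sketch below computes F1 as the harmonic mean of precision and recall and applies it to the EfficientNetV2B1 row. The helper name `f1_score` is hypothetical and is not taken from the article. Rows need not reproduce exactly this way if the reported values are averages of per-class scores.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (result is in the inputs' units)."""
    if precision + recall == 0:
        # Avoid division by zero when both inputs are zero.
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (in percent) copied from the EfficientNetV2B1 row.
efficientnet_f1 = f1_score(90.37, 89.76)
print(round(efficientnet_f1, 2))  # prints 90.06, matching the table entry
```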

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Almufareh, M.F.; Tehsin, S.; Humayun, M.; Kausar, S. Artificial Cognition for Detection of Mental Disability: A Vision Transformer Approach for Alzheimer’s Disease. Healthcare 2023, 11, 2763. https://doi.org/10.3390/healthcare11202763
