Clinicians’ Perceptions of Artificial Intelligence: Focus on Workload, Risk, Trust, Clinical Decision Making, and Clinical Integration

Abstract

1. Introduction

2. Methods and Materials

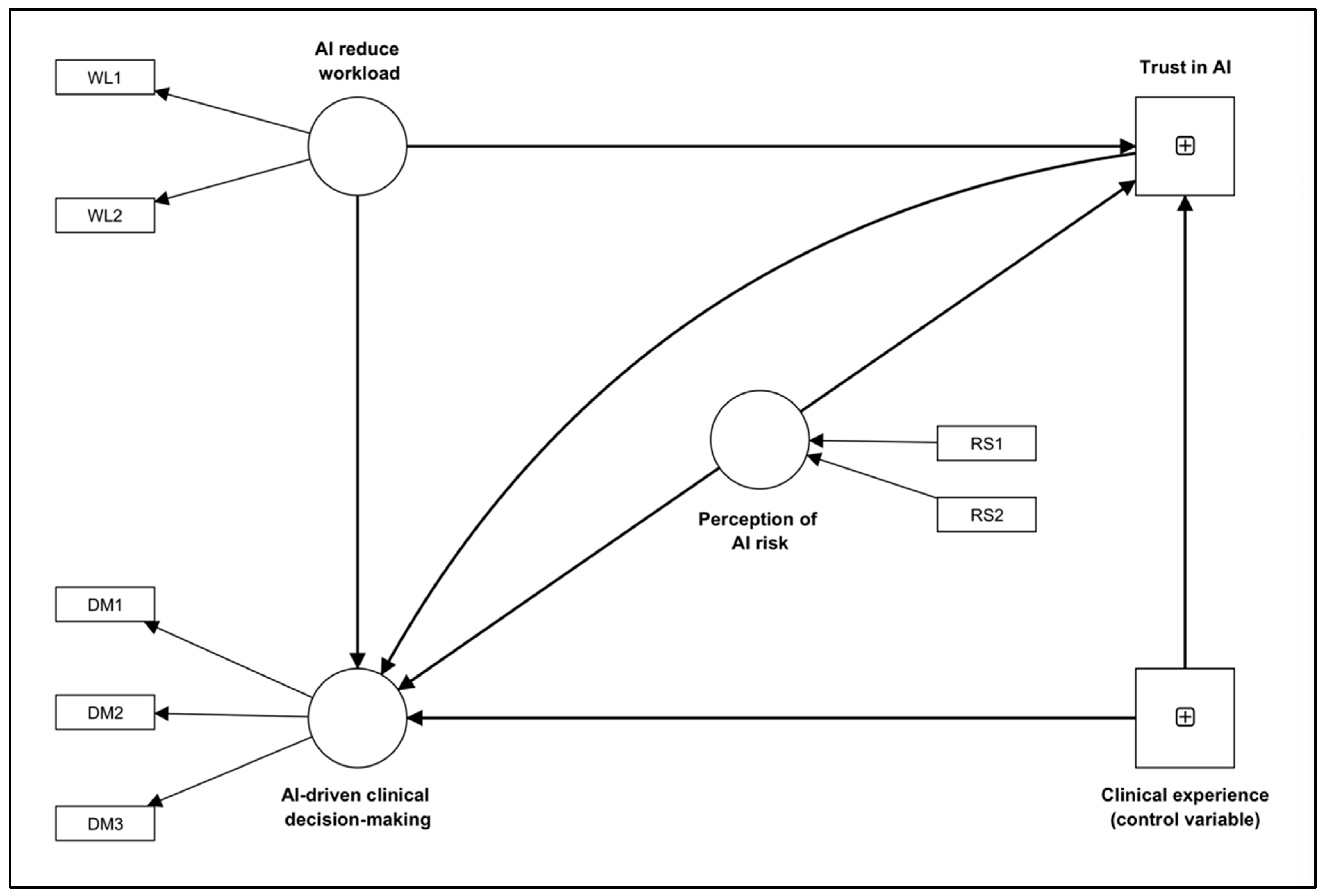

2.1. Survey Items and Variables

2.2. Statistical Analysis

3. Results

3.1. Respondents

3.2. Measurement Model

3.3. Structural Model

4. Discussion

4.1. Trust in AI

4.2. Decision-Making Using AI

4.3. Recommendation for Better AI Integration to Support AI-Driven Decision-Making

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Survey Items | Likert Scale | Standard Deviation |
|---|---|---|
| I think using AI would improve my clinical decision-making skills/abilities. (DM1) | 7 | 1.448 |
| + I think using AI would confuse me and hinder my clinical decision-making skills. (DM2) | 7 | 1.399 |
| I think using AI would allow me to accomplish clinical tasks more quickly. (DM3) | 7 | 1.378 |
| I think AI in healthcare is trustworthy. (TR) | 7 | 1.354 |
| I think using AI for my clinical work will put my patients (health) at risk. (RS1) | 7 | 1.350 |
| I think using AI will put my patients’ privacy at risk. (RS2) | 7 | 1.549 |
| Overall, I think using AI to complete clinical tasks will be: (very demanding to very easy). (WL1) | 7 | 1.323 |
| I think using AI in my clinical practice will reduce my overall workload. (WL2) | 7 | 1.483 |
| For approximately how many years have you been serving in your current position? | 5 | 1.462 |

| No. | Survey Items |
|---|---|
| 1 | With which gender do you identify yourself with? |
| 2 | With which race do you identify yourself with? |
| 3 | What is your clinical expertise? |
| 4 | What is your designation? |
| 5 | For approximately how many years have you been serving in your current position? |
| 6 | Have you ever used any AI in your work or research? |
| 7 | How was your overall experience of using AI? |
| 8 | Given a chance, how do you want AI to assist you in clinical tasks? |
| 9 | What can the government do to motivate you to adopt AI in your clinical practice? |
| 10 | What are the factors preventing you from using AI? |

| Constructs | Items | Factor Loading | Variance Inflation Factor | Cronbach’s Alpha | Composite Reliability | Average Variance Extracted |
|---|---|---|---|---|---|---|
| Perception of AI risk (RS) * | RS1 | 0.98 | 1.30 | na | na | na |
| | RS2 | 0.65 | 1.30 | | | |
| AI reduces workload (WL) | WL1 | 0.66 | 1.43 | 0.71 | 0.74 | 0.57 |
| | WL2 | 0.84 | 1.43 | | | |
| AI-driven decision making (DM) | DM1 | 0.82 | 2.15 | 0.75 | 0.81 | 0.54 |
| | DM2 | 0.51 | 1.24 | | | |
| | DM3 | 0.83 | 2.03 | | | |
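
The final column of the measurement-model table can be checked against the item loadings. The sketch below assumes the conventional definition of average variance extracted (the mean of the squared standardized loadings of a construct) and that the reported loadings are standardized; the function name is illustrative and is not taken from the article.

```python
def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings of one construct."""
    if not loadings:
        raise ValueError("at least one loading is required")
    return sum(value * value for value in loadings) / len(loadings)


# Loadings copied from the measurement-model table above.
wl_loadings = [0.66, 0.84]        # WL1, WL2
dm_loadings = [0.82, 0.51, 0.83]  # DM1, DM2, DM3

ave_wl = average_variance_extracted(wl_loadings)
ave_dm = average_variance_extracted(dm_loadings)

print(f"AI reduces workload (WL): {ave_wl:.2f}")
print(f"AI-driven decision making (DM): {ave_dm:.2f}")
```

Under these assumptions the two-decimal results agree with the tabulated 0.57 (WL) and 0.54 (DM). The composite reliability column is left out on purpose: it depends on which reliability coefficient the software reported, and the table does not say.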

| Conceptualized Paths | Standardized Path Coefficient | Standard Deviation | T Statistics | p Values |
|---|---|---|---|---|
| Direct effects | | | | |
| AI reduces workload → AI-driven clinical decision making | 0.659 | 0.108 | 6.089 | <0.001 |
| AI reduces workload → Trust in AI | 0.661 | 0.080 | 8.252 | <0.001 |
| Clinical experience (control variable) → AI-driven clinical decision making | 0.026 | 0.045 | 0.588 | 0.557 |
| Clinical experience (control variable) → Trust in AI | 0.049 | 0.056 | 0.888 | 0.375 |
| Perception of AI risk → AI-driven clinical decision making | −0.346 | 0.063 | 5.477 | <0.001 |
| Perception of AI risk → Trust in AI | −0.062 | 0.070 | 0.854 | 0.393 |
| Trust in AI → AI-driven clinical decision making | 0.114 | 0.091 | 1.252 | 0.210 |
| Total indirect effects | | | | |
| AI reduces workload → AI-driven clinical decision making | 0.070 | 0.061 | 1.227 | 0.220 |
| Clinical experience (control variable) → AI-driven clinical decision making | 0.005 | 0.008 | 0.665 | 0.506 |
| Perception of AI risk → AI-driven clinical decision making | −0.008 | 0.012 | 0.555 | 0.579 |
| Specific indirect effects | | | | |
| Clinical experience (control variable) → Trust in AI → AI-driven clinical decision making | 0.005 | 0.008 | 0.665 | 0.506 |
| Perception of AI risk → Trust in AI → AI-driven clinical decision making | −0.008 | 0.012 | 0.555 | 0.579 |
| AI reduces workload → Trust in AI → AI-driven clinical decision making | 0.070 | 0.061 | 1.227 | 0.220 |
| Total effects | | | | |
| AI reduces workload → AI-driven clinical decision making | 0.739 | 0.069 | 10.688 | <0.001 |
| AI reduces workload → Trust in AI | 0.660 | 0.080 | 8.252 | <0.001 |
| Clinical experience (control variable) → AI-driven clinical decision making | 0.031 | 0.046 | 0.703 | 0.482 |
| Clinical experience (control variable) → Trust in AI | 0.048 | 0.056 | 0.888 | 0.375 |
| Perception of AI risk → AI-driven clinical decision making | −0.347 | 0.067 | 5.287 | <0.001 |
| Perception of AI risk → Trust in AI | −0.062 | 0.070 | 0.854 | 0.393 |
| Trust in AI → AI-driven clinical decision making | 0.109 | 0.091 | 1.252 | 0.210 |
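
The T statistics and p values in the structural-model table are related to the first two numeric columns in a simple way, which the following sketch illustrates. It assumes that each T statistic is the absolute path coefficient divided by its standard deviation and that the p value is two-tailed under a standard normal approximation. Both are common conventions for bootstrapped path models, but the table itself does not state them, and the helper names are hypothetical.

```python
import math


def t_statistic(coefficient, standard_deviation):
    """Absolute coefficient divided by its (bootstrap) standard deviation."""
    return abs(coefficient) / standard_deviation


def two_tailed_p(t_value):
    """Two-tailed p-value under a standard normal approximation (assumption)."""
    return math.erfc(abs(t_value) / math.sqrt(2))


# (path, coefficient, standard deviation, reported T) copied from the
# direct-effects rows of the table above.
rows = [
    ("Trust in AI -> AI-driven clinical decision making", 0.114, 0.091, 1.252),
    ("Perception of AI risk -> Trust in AI", -0.062, 0.070, 0.854),
    ("Clinical experience -> Trust in AI", 0.049, 0.056, 0.888),
]

for path, coefficient, sd, reported_t in rows:
    ratio = t_statistic(coefficient, sd)  # recomputed from rounded entries
    p_value = two_tailed_p(reported_t)    # derived from the reported T
    print(f"{path}: ratio={ratio:.3f}, reported T={reported_t}, p={p_value:.3f}")
```

Because the tabulated coefficients and standard deviations are rounded to three decimals, the recomputed ratio only approximates the reported T statistic. Applying the normal approximation to the reported T statistics gives p values close to those tabulated for these three rows.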

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shamszare, H.; Choudhury, A. Clinicians’ Perceptions of Artificial Intelligence: Focus on Workload, Risk, Trust, Clinical Decision Making, and Clinical Integration. Healthcare 2023, 11, 2308. https://doi.org/10.3390/healthcare11162308