Abstract

Ultrasound (US) imaging is a medical imaging modality that uses the reflection of sound in the range of 2–18 MHz to image internal body structures. In US, the frequency bandwidth (BW) is directly associated with image resolution. BW is a property of the transducer, and more bandwidth comes at a higher cost. Methods that can transform strongly band-limited ultrasound data into broadband data are therefore highly desirable. In this work, we propose a deep learning (DL) technique that improves the image quality for a given bandwidth by learning features provided by broadband data of the same field of view. To this end, the performance of several DL architectures and conventional state-of-the-art techniques for image quality improvement and artifact removal has been compared on in vitro US datasets. Two training losses have been utilized on three different architectures: a super resolution convolutional neural network (SRCNN), U-Net, and a residual encoder decoder network (REDNet). The models have been trained to transform low-bandwidth image reconstructions into high-bandwidth image reconstructions, to reduce artifacts, and to make the reconstructions visually more attractive. Experiments were performed for 20%, 40%, and 60% fractional bandwidth on the original images and showed improvements as high as 45.5% in RMSE and 3.85 dB in PSNR for datasets with a 20% bandwidth limitation.

1. Introduction

Ultrasound (US) imaging is the modality of choice for many diagnostic purposes [1,2]. US is a cost-effective, versatile, and non-invasive imaging modality and is therefore applied to various imaging tasks, such as cardiac imaging [3], abdominal imaging [4], and obstetrics [5], among many other applications. The amount of bandwidth (BW) necessary for imaging varies from task to task [6]. The frequency range of the transducer is a key factor in determining the resolution of B-mode imaging [7,8,9]: the temporal bandwidth of the transducer is directly associated with image resolution and image quality. A larger bandwidth therefore makes finer details visible in both the axial and the lateral direction. Certain tasks require a higher-bandwidth transducer to image structures that are not clearly distinguishable with lower-bandwidth transducers, as in the case of intravascular microvessel imaging [10]. Intravascular ultrasound (IVUS) based methods for imaging the microvessels require high-frequency transducers that are not commercially available [10]. High-frequency transducers are also required in various clinical applications and in small animal studies for scanning rats, mice, and zebrafish [11,12]. Furthermore, for intravascular or intracardiac US techniques, the frequency response of PZT crystals of a given thickness also limits the bandwidth [13]. A reconstruction method that transforms low-bandwidth image reconstructions into high-bandwidth image reconstructions could potentially make these tasks feasible with lower-bandwidth transducers. Traditional methods for bandwidth improvement in US already exist. For example, in ultrafast imaging, where the bandwidth of the transducer limits the image quality of the reconstruction, resolution enhancement compression (REC) has been used to enhance the bandwidth [14]. In Ref. [15], a pre-filtering technique was used to increase the output signal bandwidth of US transmit-receive systems. These methods are not data driven and hence do not take into account the probability distributions of actual US data.

In this work, we propose a new technique for bandwidth improvement based on deep learning (DL). DL has already been successful in addressing a wide range of problems, such as speech recognition [16], language processing [17], image classification [18], and image segmentation [19]. Furthermore, DL has been used in various medical applications [20,21,22], such as computed tomography (CT) [23,24], US imaging [25,26,27,28], and magnetic resonance imaging (MRI) [29,30], to improve image quality. Its applications include image denoising, image analysis, and super-resolution. In super-resolution, a low-resolution image is transformed into a higher-resolution image; bandwidth enhancement in ultrasound is closely related to this class of problems in computer vision. In ultrasound imaging, DL methods have been applied to various image enhancement tasks. Perdios et al. [31] proposed a residual CNN based method, using multiscale and multichannel filtering properties, to transform a low-quality estimate into a high-quality image for ultrafast US imaging. Yoon et al. [32] created a DL-based model that transforms a sub-sampled radiofrequency (RF) signal directly into a normal-quality B-mode image. However, none of these works explicitly targeted bandwidth improvement.

In this work, we assess for the first time the performance of several popular DL architectures for bandwidth improvement on five different US datasets consisting of B-mode images. The three model architectures compared are the super resolution convolutional neural network (SRCNN) [33], U-Net [34], and a Residual Encoder Decoder network (REDNet) based on the work by Gholizadeh-Ansari et al. [35]. We also introduce two loss functions, namely PL1 (a scaled mean square error) and PL2 (a combination of root mean square error (RMSE) and perceptual loss), to improve the results of these architectures. To show that these models genuinely improve the reconstruction and bandwidth, we also compare them with conventional histogram-based methods for contrast enhancement, namely histogram equalization (HE) and contrast limited adaptive histogram equalization (CLAHE). The main contributions of the work can be summarized as follows:

- A DL-based method is proposed for directly transforming a low-bandwidth image reconstruction into a broadband image reconstruction. Transducers are inherently band-limited, and this band-limitation directly affects the resolution of the US image. The DL-based technique transforms the low-bandwidth reconstruction into a high-bandwidth reconstruction, which helps remove artifacts and improves resolution and image quality in general.

- A data-driven method for bandwidth improvement in US imaging using DL is proposed for the first time. The proposed DL model is lightweight, has low computational complexity, and can be utilized in real settings. Error analysis, frequency domain comparisons, and speckle characteristic comparisons were performed to show the benefits of the proposed method compared with other state-of-the-art networks.

- A scaled mean square error loss is introduced for training the DL network to address the vanishing gradient problem. The models were trained on a single training dataset and tested on five datasets with different properties to demonstrate the generalizability and scalability of the proposed model in real settings.

The rest of this article is organized as follows. Section 2 briefly introduces the deep learning methods compared in this work, the training strategy, and the loss functions. Section 3 describes the datasets and experiments, and Section 4 presents the figures of merit. Section 5 presents the results, Section 6 discusses and analyzes the results of all the models, and Section 7 concludes the work.

2. Material and Methods

This section describes the DL-based methods and the two loss functions that we evaluate for enhancing the bandwidth of band-limited US images.

2.1. Deep Learning Techniques

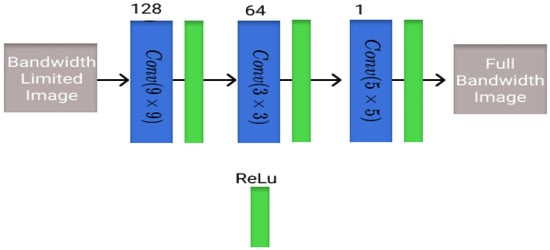

2.1.1. SRCNN

The first architecture evaluated is the super-resolution convolutional neural network (SRCNN), one of the first DL architectures to learn an end-to-end mapping from low-resolution to high-resolution images in computer vision [33]. The model architecture is shown in Figure 1 with the corresponding blocks and the number of filters in each layer.
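To give a feel for how compact SRCNN is, the sketch below counts the weights and biases of the classic three-layer "9-1-5" SRCNN configuration (kernel sizes 9, 1, and 5 with 64 and 32 filters on a single-channel input) and computes its receptive field. These kernel sizes and filter counts are assumptions taken from the original SRCNN design; the configuration in Figure 1 may differ, so the numbers are illustrative and not those reported in Table 4.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights plus biases of a 2D convolution with a square kernel."""
    return kernel * kernel * in_channels * out_channels + out_channels


def receptive_field(kernels):
    """Receptive field (pixels) of stacked stride-1, undilated convolutions."""
    return 1 + sum(k - 1 for k in kernels)


# ASSUMPTION: classic 9-1-5 SRCNN on grayscale input, as (kernel size, filters) per layer.
SRCNN_LAYERS = [(9, 64), (1, 32), (5, 1)]


def srcnn_summary(layers=SRCNN_LAYERS, in_channels=1):
    """Total trainable parameters and receptive field of a plain conv stack."""
    total, channels = 0, in_channels
    for kernel, filters in layers:
        total += conv_params(kernel, channels, filters)
        channels = filters
    return total, receptive_field([k for k, _ in layers])


if __name__ == "__main__":
    params, rf = srcnn_summary()
    print(f"parameters: {params}, receptive field: {rf}x{rf} pixels")
    # prints "parameters: 8129, receptive field: 13x13 pixels"
```

The same helper can be pointed at other layer lists to compare model sizes in the spirit of Table 4, as long as the layers are plain stride-1 convolutions.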

Figure 1.

Visualization of the SRCNN architecture utilized in this study with different blocks and the number of filters present in each layer.

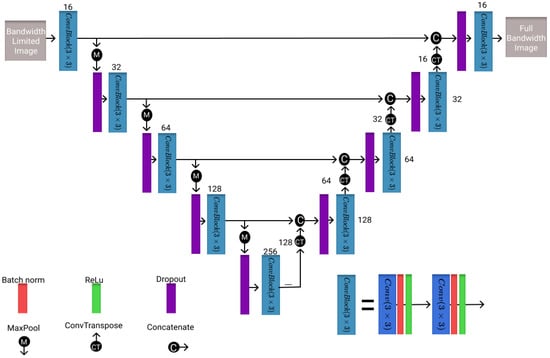

2.1.2. U-Net

The second architecture evaluated is U-Net, which is often used for medical image segmentation [34] but has also been applied to super resolution and denoising to improve image quality or resolution [36,37]. Therefore, U-Net is chosen as the benchmark model. For bandwidth improvement, the model is slightly adjusted: the sigmoid activation in the last layer is replaced by a ReLU activation. The model architecture is shown in Figure 2 with the number of filters in each layer and the corresponding block representations.

Figure 2.

Visualization of the U-Net architecture utilized in this study with different blocks and the number of filters present in each layer.

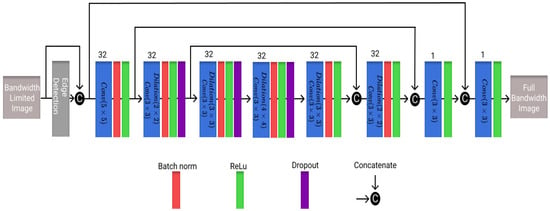

2.1.3. REDNet

The third architecture is a residual encoder decoder network (REDNet), originally developed to transform low-dose CT images into high-dose CT images [35]. As can be seen in Figure 3, the architecture includes an edge detection layer that uses a Sobel filter for feature extraction. The model uses dilated convolutional layers to increase its receptive field, which is more computationally efficient than increasing either the number of filters or the kernel size [38]. As a small adjustment to the model, dropout layers with a rate of 0.05 were added to reduce over-fitting. The model architecture is shown in Figure 3 with the number of filters in each layer and the corresponding block representations.
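Two of the REDNet ingredients described above can be illustrated in isolation: a Sobel edge map and the receptive-field gain of dilated convolutions. The sketch below assumes the standard 3 × 3 Sobel kernels and uses hypothetical dilation rates [1, 2, 4, 8]; the actual Sobel layer and dilation rates of REDNet are those in Figure 3 and Ref. [35], which are not reproduced here.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal gradient
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical gradient


def correlate3x3(image, kernel):
    """'Valid' 3x3 cross-correlation, the operation a CNN convolution layer computes."""
    rows, cols = len(image), len(image[0])
    return [[sum(kernel[a][b] * image[i + a][j + b] for a in range(3) for b in range(3))
             for j in range(cols - 2)]
            for i in range(rows - 2)]


def sobel_magnitude(image):
    """Gradient magnitude from the two Sobel responses (an edge map)."""
    gx = correlate3x3(image, SOBEL_X)
    gy = correlate3x3(image, SOBEL_Y)
    return [[(x * x + y * y) ** 0.5 for x, y in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


def dilated_receptive_field(kernel, dilations):
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    return 1 + sum((kernel - 1) * d for d in dilations)


if __name__ == "__main__":
    step = [[0, 0, 1, 1] for _ in range(4)]          # vertical step edge
    print(sobel_magnitude(step))                      # strong response everywhere
    print(dilated_receptive_field(3, [1, 1, 1, 1]))   # 9: four plain 3x3 layers
    print(dilated_receptive_field(3, [1, 2, 4, 8]))   # 31: same number of weights
```

Four 3 × 3 layers have the same number of weights whatever their dilation, yet the hypothetical dilated stack sees a 31-pixel window instead of 9, which is the efficiency argument made above.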

Figure 3.

Visualization of the REDNet architecture utilized in this work with different blocks and the number of filters present in each layer.

2.2. Loss Functions

We evaluated the models with two different loss functions:

- PL1 loss: a scaled mean square error loss. A squared-error loss is used because it is sensitive to outliers (more so than an absolute-error loss) and is the most commonly used loss function [39]. The network was trained using the proposed PL1 (scaled-MSE) loss function, defined as:

$$\mathcal{L}_{\mathrm{PL1}} = \frac{\alpha}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2 \qquad (1)$$

where $\hat{y}_i$ are the predictions of the model, $y_i$ are the true signals, $n$ is the number of samples, and $\alpha$ is the scaling factor [40].

- PL2 loss: perceptual loss has recently gained wide popularity because it compares images in a feature space and can thus capture differences in content, style, and high-level structure between images [35]. Hence, we defined PL2 as the combination of RMSE and perceptual loss, combined in a ratio of 30:70 [35].
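The two losses can be sketched in plain Python as follows. The scaling factor is called `alpha` here (a name we introduce; its value is not given in this section), and `toy_features` is a hypothetical stand-in for the pretrained network a perceptual loss normally uses (often VGG). We also assume that the 30:70 ratio means 0.3 × RMSE + 0.7 × perceptual loss. Because the gradient of alpha × MSE with respect to each prediction is 2·alpha·(ŷ_i − y_i)/n, the scaling factor enlarges the gradients proportionally, which is the motivation given for PL1.

```python
import math


def mse(a, b):
    """Mean squared difference between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def pl1_scaled_mse(y_pred, y_true, alpha):
    """PL1 sketch: the MSE multiplied by a scaling factor alpha (a free hyperparameter)."""
    return alpha * mse(y_pred, y_true)


def toy_features(signal):
    """HYPOTHETICAL stand-in for a pretrained feature extractor such as VGG:
    first differences, i.e. crude edge features."""
    return [b - a for a, b in zip(signal, signal[1:])]


def pl2_loss(y_pred, y_true, features=toy_features, w_rmse=0.3, w_perceptual=0.7):
    """PL2 sketch: weighted sum of RMSE and a feature-space (perceptual) MSE.
    ASSUMPTION: the 30:70 ratio applies to RMSE:perceptual in that order."""
    rmse = math.sqrt(mse(y_pred, y_true))
    perceptual = mse(features(y_pred), features(y_true))
    return w_rmse * rmse + w_perceptual * perceptual


if __name__ == "__main__":
    target, prediction = [0.0, 0.0, 3.0], [0.0, 0.0, 0.0]
    print(pl1_scaled_mse(prediction, target, alpha=10.0), pl2_loss(prediction, target))
```

In an actual Keras training loop both functions would be written with tensor operations so that gradients can flow; the list-based version only shows the arithmetic.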

2.3. Model Specifications

The models are implemented in TensorFlow [41] using the Keras [42] API. All models are trained with a batch size of 16 for up to 300 epochs, stopping early if the validation loss stops improving. From each image, 64 overlapping patches of 128 × 128 pixels are extracted at random locations. With 400 training and 135 validation images, this yields 25,600 training patches (400 × 64) and 8640 validation patches (135 × 64). The Adam optimizer [43] is used with the following parameters: learning rate (lr) = …, β1 = 0.9, β2 = 0.999, ε = None, and decay = 0. The models are trained only for the 20% bandwidth limitation using the Phantom I dataset and tested on all test datasets (Table 1) and on the other bandwidth scenarios, to show that the models generalize across datasets and bandwidth scenarios.
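A minimal sketch of the patch sampling described above, assuming uniformly random top-left corners (the sampling distribution is not specified) and images stored as lists of rows. The 801 × 401 image size is taken from Section 3.1; the function names are hypothetical.

```python
import random


def random_patch_corners(height, width, patch=128, count=64, rng=None):
    """Top-left corners of `count` randomly placed, possibly overlapping patches.
    ASSUMPTION: corners are drawn uniformly so every patch lies inside the image."""
    rng = rng or random.Random()
    return [(rng.randint(0, height - patch), rng.randint(0, width - patch))
            for _ in range(count)]


def extract_patches(image, corners, patch=128):
    """Crop patch x patch windows from a 2D image stored as a list of rows."""
    return [[row[c:c + patch] for row in image[r:r + patch]] for r, c in corners]


if __name__ == "__main__":
    corners = random_patch_corners(801, 401, rng=random.Random(0))
    print(len(corners), "patches per image")
    print("training patches:", 400 * 64, "validation patches:", 135 * 64)
```

Seeding the generator, as in the usage example, makes the patch set reproducible between runs.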

Table 1.

The number of training, validation and test images for each dataset included in the experiments.

3. Datasets and Experiments

3.1. Datasets

We acquired five different phantom or in vitro datasets for our experiments, as described below. Table 1 gives the distribution of the images in each dataset for training, validation, and testing. A Verasonics Vantage research system (Verasonics, Kirkland, WA, USA) was employed for data acquisition. With a Verasonics L11-5v linear array transducer, 101 plane waves (PWs) were transmitted at equi-spaced angles ranging from … to …. After careful consideration, the range was reduced to … to … (61 PWs, step of …) in post-processing to reduce grating lobes [44]. The data that support the findings of this study are available on request from the corresponding author.

3.1.1. Phantom I

A Bloom 300 (8%) gelatin solution was infused with high concentrations of silicon carbide (SiC) scatterers [45]. Three batches with different SiC concentrations (1 g/dL, 8 g/dL, and 16 g/dL) were produced for this dataset. After solidification, the latter two batches were diced into cubes of roughly 1 cm³ and embedded in the first batch while it was still fluid. In addition, fresh mandarin segments and raspberries were added to provide complex structures in the image data. A total of 669 full-bandwidth US images were acquired and band-limited to different fractional bandwidths. The images were split into training, validation, and test sets of 400, 135, and 134 images, respectively, i.e., an approximately 60/20/20 split (Table 1).

3.1.2. Phantom II

Based on previous experience, a second multi-purpose phantom was created that consists only of canned mandarins embedded in gelatin with a lower concentration of SiC scatterers (0.5 g/dL). The mandarins were processed to remove the connective layers and membranes, which would otherwise absorb or reflect much of the acoustic energy. A total of 90 images were used for testing the model developed for bandwidth improvement (Table 1).

3.1.3. Commercial Phantom

This commercially available phantom (Model 539, Multipurpose US Phantom, CIRS, Inc., Norfolk, VA, USA) is designed for quantitative US quality measurements. It provides a combination of tissue-mimicking target structures of varying sizes and contrasts and monofilament line targets for distance measurements. This dataset consists of 31 US images acquired at the full available bandwidth (3.04 MHz passband) and derived datasets band-limited to fractional bandwidths of 20%, 40%, and 60% (Table 1).

3.1.4. Carotid Artery

Porcine carotid arteries (CAs), with most of the surrounding tissue removed, were embedded in the low-concentration (0.5 g/dL) scattering emulsion. The arteries were tied off at both ends and pressurized with water. This dataset consists of 70 full-bandwidth US images of two porcine carotid arteries, which were band-limited to fractional bandwidths of 20%, 40%, and 60% (Table 1).

3.1.5. In Vivo Carotid Mimicking Setup

For this setup, another two carotid arteries were prepared in the same way. This time, however, they were embedded in skin-on muscle and adipose tissue of the porcine abdomen and neck. With the tissue placed skin side down, cuts of approximately half the tissue thickness were made, and the arteries were placed inside these cuts. This dataset consists of 239 images and was used only for testing the model (Table 1).

Experiments were performed for three band-limited scenarios (20%, 40%, and 60% bandwidth) on the five test datasets to show the effectiveness of the proposed techniques in improving the frequency content of band-limited images. A bandwidth limitation of 20% means that the frequency content is reduced to only 20% of the full bandwidth; the resulting band-limited image is used as the input to the DL-based model. These bandwidths were chosen because a 60% bandwidth limitation is realistic, whereas the 40% and 20% limitations probe how severe a bandwidth limitation can still be handled in US imaging. All images were processed in MATLAB (Release 2019a, The MathWorks, Inc., Natick, MA, USA) to obtain pairs of limited-bandwidth and full-bandwidth reconstructions. The RF data are band-pass filtered, and band-limited as well as full-bandwidth images are reconstructed from the processed RF data. Envelope detection and log compression are applied to the reconstructed RF images to obtain B-mode data. The resulting images have a size of 801 × 401 pixels.
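The per-line processing chain (band-pass filtering, envelope detection, log compression) can be sketched with an ideal frequency-domain filter and a DFT-based Hilbert transform. This is not the authors' MATLAB code: the ideal filter, the sampling rate, the center frequency, and the 60 dB dynamic range are hypothetical choices, and we assume the conventional definition of fractional bandwidth (passband width divided by center frequency). If the limitation is instead defined relative to the full bandwidth, only the `half_bw` line changes. The naive O(n²) DFT keeps the block dependency-free; in practice `numpy.fft` and `scipy.signal.hilbert` would be used.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform (illustration only)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
            for f in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)) / n
            for k in range(n)]


def bandpass(rf_line, fs, fc, fractional_bw):
    """Ideal band-pass keeping bins whose |frequency| lies within fc +/- fc*fractional_bw/2.
    ASSUMPTION: fractional bandwidth = passband width / center frequency."""
    n = len(rf_line)
    half_bw = fc * fractional_bw / 2
    kept = []
    for f, value in enumerate(dft(rf_line)):
        freq = (f if f <= n // 2 else f - n) * fs / n   # signed bin frequency in Hz
        kept.append(value if abs(abs(freq) - fc) <= half_bw else 0)
    return [v.real for v in idft(kept)]


def envelope(signal):
    """Magnitude of the analytic signal (DFT-based Hilbert transform); even length only."""
    n = len(signal)
    weights = [1.0] + [2.0] * (n // 2 - 1) + [1.0] + [0.0] * (n // 2 - 1)
    return [abs(v) for v in idft([s * w for s, w in zip(dft(signal), weights)])]


def log_compress(env, dynamic_range_db=60.0):
    """Envelope in dB relative to its peak, clipped at -dynamic_range_db."""
    peak = max(env)
    return [max(20 * math.log10(max(e, 1e-12) / peak), -dynamic_range_db) for e in env]


if __name__ == "__main__":
    fs, n = 40e6, 64                      # hypothetical sampling rate and line length
    t = [k / fs for k in range(n)]
    rf = [math.cos(2 * math.pi * 5e6 * tk) + math.cos(2 * math.pi * 7.5e6 * tk) for tk in t]
    narrow = bandpass(rf, fs, fc=7.5e6, fractional_bw=0.2)   # removes the 5 MHz tone
    bmode_line = log_compress(envelope(narrow))
    print(max(bmode_line), min(bmode_line))
```

In the usage example only the 7.5 MHz tone survives the 20% passband, so its envelope is flat and the log-compressed line stays at about 0 dB.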

3.2. Experiments

We compared the proposed DL-based methods with conventional histogram-based methods.

3.2.1. Histogram Equalization (HE)

HE has been widely used to enhance image contrast in various applications, such as radar image processing and medical image processing [46,47]. HE flattens the intensity histogram of the image, spreading out the most frequent gray levels, and thereby enhances its contrast [48,49]. However, the technique can introduce artifacts and degrade visual quality; HE therefore does not always improve results and can amplify noise [50].
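Global HE maps each gray level through the normalized cumulative histogram. A minimal sketch for 8-bit images stored as lists of rows follows; it is a textbook variant written for illustration, and library routines such as OpenCV's `cv2.equalizeHist` or scikit-image's `skimage.exposure.equalize_hist` may normalize slightly differently.

```python
def histogram_equalize(image, levels=256):
    """Global HE for an integer gray-level image given as a list of rows."""
    flat = [p for row in image for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)   # CDF at the darkest occupied level
    n = len(flat)
    if n == cdf_min:                          # constant image: nothing to spread
        return [row[:] for row in image]
    # Stretch the CDF so the darkest occupied level maps to 0 and the brightest to levels-1.
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (n - cdf_min))) for c in cdf]
    return [[lut[p] for p in row] for row in image]


if __name__ == "__main__":
    print(histogram_equalize([[52, 55], [61, 59]]))   # prints [[0, 85], [255, 170]]
```

Because the mapping depends only on global pixel counts, a large uniform background also gets stretched, which is consistent with the over-enhancement discussed in Section 5.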

3.2.2. Contrast Limited Adaptive Histogram Equalization (CLAHE)

CLAHE is another histogram equalization technique and consists of the following four steps [51]:

- Partitioning the image into non-overlapping, contiguous patches (tiles).

- Clipping the histogram of each patch at a threshold and redistributing the clipped pixels over all gray levels.

- Applying HE to each patch.

- Interpolating the mappings between neighboring patches.

Although CLAHE usually performs better than HE, it also amplifies noise and does not provide a one-to-one correspondence between the input and the enhanced image [51]. Please refer to Ref. [51] for more details about the method.
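The clipping and per-patch equalization steps (the second and third steps listed above) can be sketched for a single tile as follows. This is a simplified illustration, not a full CLAHE: tiling and the interpolation between neighboring tiles are omitted, the clip limit is an absolute bin count (an assumption; OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` uses a relative limit), and the excess is redistributed in a single pass, so a bin may end up slightly above the limit.

```python
def clip_histogram(hist, clip_limit):
    """Clip every bin at clip_limit and spread the clipped excess evenly over all bins.
    Single pass: bins near the limit may end up slightly above it afterwards."""
    excess = sum(max(h - clip_limit, 0) for h in hist)
    share = excess / len(hist)
    return [min(h, clip_limit) + share for h in hist]


def tile_lut(tile, levels=256, clip_limit=4.0):
    """Gray-level mapping for ONE tile: clipped histogram -> CDF -> [0, levels - 1].
    ASSUMPTION: clip_limit is an absolute bin count (hypothetical default)."""
    hist = [0] * levels
    for row in tile:
        for p in row:
            hist[p] += 1
    clipped = clip_histogram(hist, clip_limit)
    total = sum(clipped)
    lut, cdf = [], 0.0
    for h in clipped:
        cdf += h
        lut.append(round((levels - 1) * cdf / total))
    return lut


if __name__ == "__main__":
    print(clip_histogram([10, 0, 0, 0], clip_limit=4))
    print(tile_lut([[0, 0], [0, 3]], levels=4, clip_limit=2))
```

Clipping limits the slope of the mapping, so a dominant gray level (for example, a homogeneous background) cannot be stretched as aggressively as with global HE.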

4. Figures of Merit

The reconstructions obtained with all techniques were evaluated using the root mean square error (RMSE), peak signal-to-noise ratio (PSNR), and Pearson correlation (PC).

4.1. RMSE

RMSE is the square root of the mean squared difference between the pixel intensities of two images [52]:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2} \qquad (2)$$

Equation (2) gives the RMSE metric, with $\hat{y}$ being the image generated by the technique, $y$ being the full bandwidth image, and $n$ being the number of pixels in each image. RMSE is sensitive to outliers and is a measure of the accuracy of the model. This metric quantifies the error of the obtained reconstructions with respect to the full-bandwidth reconstructions.

4.2. PSNR

PSNR is the ratio, expressed in decibels, between the squared maximum value of the full bandwidth image and the mean square error between the model-predicted image and the full bandwidth image [53]:

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}_{y}^{2}}{\mathrm{MSE}(\hat{y}, y)}\right) \qquad (3)$$

Equation (3) gives the PSNR metric, with $\mathrm{MAX}_{y}$ the maximum pixel intensity of the full bandwidth image $y$ and $\hat{y}$ the image generated by the technique, where the mean square error (MSE) is the square of the RMSE. PSNR is often used to quantify reconstruction quality [37].
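Equations (2) and (3) translate directly into code. The sketch below works on images flattened into equal-length sequences and, following the text, takes MAX from the full bandwidth reference unless a fixed peak (e.g., 255 for 8-bit images) is passed; which of the two conventions the reported numbers use is an assumption here.

```python
import math


def rmse(pred, ref):
    """Equation (2): square root of the mean squared pixel difference (flattened images)."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def psnr(pred, ref, max_value=None):
    """Equation (3): 10*log10(MAX^2 / MSE), MAX defaulting to the reference maximum."""
    peak = max(ref) if max_value is None else max_value
    mse = rmse(pred, ref) ** 2
    if mse == 0:
        return math.inf          # identical images
    return 10 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    full_bw = [0, 255, 255, 0]           # hypothetical flattened reference
    output = [10, 245, 255, 0]           # hypothetical model output
    print(rmse(output, full_bw), psnr(output, full_bw))
```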

4.3. PC

PC is a measure of the linear correlation between two quantities [54,55]. Here, it is computed between the frequency-domain amplitude of the full bandwidth image, $A_y$, and that of the reconstructed image (e.g., the band-limited image), $A_{\hat{y}}$:

$$\mathrm{PC} = \frac{\mathrm{cov}(A_y, A_{\hat{y}})}{\sigma_{A_y}\,\sigma_{A_{\hat{y}}}} \qquad (4)$$

where cov represents the covariance and $\sigma$ represents the standard deviation. PC $\in [-1, 1]$, where 1 denotes complete positive linear correlation, 0 denotes no linear correlation, and $-1$ denotes complete negative linear correlation. This metric shows how well the frequency content of the obtained reconstruction correlates with that of the full bandwidth reconstruction.
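A sketch of the spectral PC for small images, using a naive 2D DFT so that the block has no dependencies. Whether the raw amplitude, a log amplitude, or a centered spectrum is correlated is not stated; this sketch assumes the raw amplitude. Centering (fft-shifting) would not change the result, because it permutes both amplitude vectors in the same way and Pearson correlation is invariant to such a joint reordering; a log amplitude, by contrast, would.

```python
import cmath
import math


def dft2_amplitude(img):
    """|2D DFT| of a small image, flattened row by row (naive sum; illustration only)."""
    h, w = len(img), len(img[0])
    return [abs(sum(img[y][x] * cmath.exp(-2j * math.pi * (u * y / h + v * x / w))
                    for y in range(h) for x in range(w)))
            for u in range(h) for v in range(w)]


def pearson(a, b):
    """Pearson correlation: covariance divided by the product of standard deviations."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b)) / n
    sa = math.sqrt(sum((x - ma) ** 2 for x in a) / n)
    sb = math.sqrt(sum((y - mb) ** 2 for y in b) / n)
    return cov / (sa * sb)


def spectral_pc(reconstruction, full_bw):
    """PC between the frequency-domain amplitudes of two equally sized images."""
    return pearson(dft2_amplitude(reconstruction), dft2_amplitude(full_bw))


if __name__ == "__main__":
    full_bw = [[1, 2], [3, 4]]                       # hypothetical toy images
    recon = [[1, 2], [3, 5]]
    print(spectral_pc(recon, full_bw))
```

Since PC ignores overall scale, it complements RMSE and PSNR: it rewards a spectrum with the right shape even when absolute intensities differ.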

5. Results

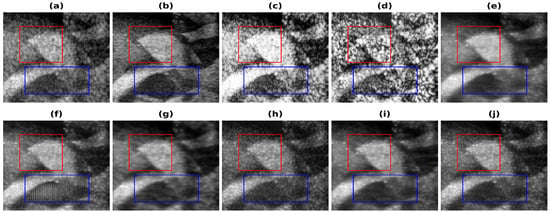

5.1. Results—20% Bandwidth

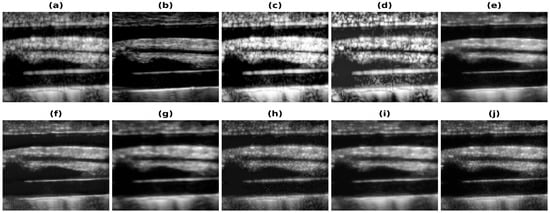

In Figure 4, the results obtained using Phantom I as test data are presented for the different models. Figure 4a shows the band-limited reconstruction obtained after reducing the frequency information to 20%, and Figure 4b shows the full bandwidth reconstruction. The speckle variation is reduced in the full bandwidth reconstruction compared to the band-limited reconstruction. The results obtained using HE and CLAHE are shown in Figure 4c,d. These techniques enhance the contrast but over-enhance it, which leads to information loss. As seen from these reconstructions, the background is also enhanced; hence, the RMSE values are even higher, and the PSNR values lower, than those of the band-limited reconstruction (Table 2). The results obtained using the U-Net model are shown in Figure 4e and Figure 4f for the loss functions PL1 and PL2, respectively. The reconstructions obtained using SRCNN are shown in Figure 4g and Figure 4h, and those obtained using REDNet in Figure 4i and Figure 4j, again for PL1 and PL2, respectively. The reconstructions obtained with the PL1 loss are better than those obtained with the PL2 loss. With the REDNet model and the PL1 loss function, RMSE improves by 35.5% and PSNR by 3.85 dB compared to the band-limited reconstruction.
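The RMSE and PSNR improvements are linked: with the same peak value MAX in both PSNRs, PSNR = 20·log10(MAX/RMSE), so reducing RMSE by a fraction r raises PSNR by −20·log10(1 − r) dB. The check below applies this to the 35.5% RMSE improvement reported above. Exact agreement with the reported 3.85 dB is not expected, since PSNR is presumably averaged per image rather than derived from an averaged RMSE (an assumption).

```python
import math


def psnr_gain_db(rmse_reduction):
    """PSNR gain in dB for a fractional RMSE reduction, assuming the same MAX in both PSNRs."""
    if not 0 <= rmse_reduction < 1:
        raise ValueError("rmse_reduction must lie in [0, 1)")
    return -20 * math.log10(1 - rmse_reduction)


print(f"{psnr_gain_db(0.355):.2f} dB")  # prints "3.81 dB"
```

The result of about 3.81 dB is consistent with the 3.85 dB reported for REDNet with PL1.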

Figure 4.

Results of the bandwidth improvement for Phantom I for different models (a) Band-limited image (b) Full Bandwidth image (c) Histogram Equalization (d) Contrast Limited Adaptive Histogram Equalization (e) U-Net-PL1 (f) U-Net-PL2 (g) SRCNN-PL1 (h) SRCNN-PL2 (i) REDNet-PL1 (j) REDNet-PL2. Red and blue boxes show the different marked regions.

Table 2.

The average metric values for all test datasets, for all the models and state-of-the-art techniques, for 20% bandwidth. The best scores for each dataset are written in bold font.

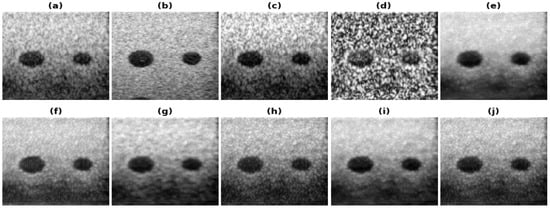

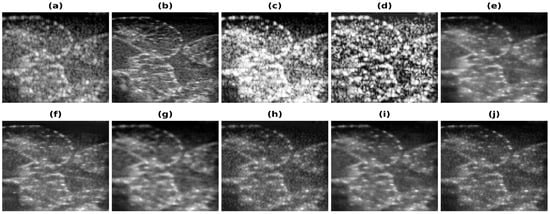

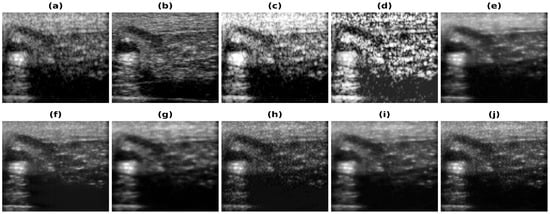

Figure 5, Figure 6, Figure 7 and Figure 8 show the reconstructions obtained for the Carotid artery, Commercial phantom, Phantom II, and in vivo carotid mimicking setup datasets with 20% band-limited data as input. The metric values and the improvements in RMSE and PSNR relative to the band-limited reconstruction are shown in Table 2 and Table 3, respectively. The REDNet model with PL1 loss performs best in 3 out of 4 of these datasets. A plausible explanation is the Sobel filter built into REDNet, which supplies explicit edge features to the subsequent layers and may be why REDNet gives the best results among the three models.

Figure 5.

Results of the bandwidth improvement for Carotid artery phantom for different models (a) Band-limited image (b) Full Bandwidth image (c) Histogram Equalization (d) Contrast Limited Adaptive Histogram Equalization (e) U-Net-PL1 (f) U-Net-PL2 (g) SRCNN-PL1 (h) SRCNN-PL2 (i) REDNet-PL1 (j) REDNet-PL2.

Figure 6.

Results of the bandwidth improvement for Commercial phantom for different models (a) Band-limited image (b) Full Bandwidth image (c) Histogram Equalization (d) Contrast Limited Adaptive Histogram Equalization (e) U-Net-PL1 (f) U-Net-PL2 (g) SRCNN-PL1 (h) SRCNN-PL2 (i) REDNet-PL1 (j) REDNet-PL2.

Figure 7.

Results of the bandwidth improvement for Phantom II for different models (a) Band-limited image (b) Full Bandwidth image (c) Histogram Equalization (d) Contrast Limited Adaptive Histogram Equalization (e) U-Net-PL1 (f) U-Net-PL2 (g) SRCNN-PL1 (h) SRCNN-PL2 (i) REDNet-PL1 (j) REDNet-PL2.

Figure 8.

Results of the bandwidth improvement for in vivo carotid mimicking setup for different models (a) Band-limited image (b) Full Bandwidth image (c) Histogram Equalization (d) Contrast Limited Adaptive Histogram Equalization (e) U-Net-PL1 (f) U-Net-PL2 (g) SRCNN-PL1 (h) SRCNN-PL2 (i) REDNet-PL1 (j) REDNet-PL2.

Table 3.

The improvements obtained for all test datasets with the different models and state-of-the-art techniques for 20%, 40% and 60% Bandwidth.

The improvements are also visible in the reconstructions, which show fewer artifacts and sharper edges. The red box in Figure 4 highlights the improved edge information and reduced artifacts: the edges are clearer in the full-bandwidth reconstruction (Figure 4b) than in the band-limited reconstruction (Figure 4a). The histogram-based methods (Figure 4c,d) lose edge information, while the DL-based methods improve the edges and hence the reconstruction (Figure 4e–j). The PL1 loss outperforms the PL2 loss in most cases. The reconstruction obtained using the U-Net-PL2 model contains an artifact (Figure 4f), highlighted by the blue box in Figure 4; this artifact is introduced by the PL2 loss and makes PL2 less suitable for reconstruction. Thus, PL1 is the loss function of choice, although in some cases the PL2 loss function gives better image quality and image metrics.

5.2. Results—40% and 60% Bandwidth

Next, we performed the same experiments for the 40% and 60% bandwidth limitation scenarios. The goal of this set of experiments was to investigate whether the model also works for other bandwidth limitations. Again, the model was trained only on the Phantom I dataset (with the 20% bandwidth limitation) and then tested on all the datasets.

We then limited the US images of all test datasets to 40% and 60% bandwidth. The RMSE and PSNR values for the 40% and 60% bandwidth reconstructions are provided in Table 2, and the improvements obtained for all test datasets at each bandwidth are shown in Table 3. The reconstructions obtained using the DL-based methods improve both RMSE and PSNR. They also reduce the artifacts present in the band-limited reconstructions, thereby improving image quality. The improvement for the 60% bandwidth limitation is lower than for the 40% bandwidth limitation. This is expected: the 60% band-limited reconstructions are already closer to the full bandwidth reconstructions than the 40% ones, which leaves less room for improvement.

6. Discussion/Analysis of the Results

The purpose of this study was to develop and investigate DL-based methods for enhancing the US bandwidth. We evaluated several architectures with different loss functions in their ability to improve the reconstruction. In terms of the metrics, all training pipelines with the PL1 loss improve on the band-limited reconstructions, while the PL2 loss brings no improvement for networks other than REDNet. No pipeline outperforms all others on every metric, but in general, the REDNet model with the PL1 loss performs best on RMSE and PSNR across the datasets. The model parameters and model sizes are shown in Table 4. The REDNet model has the fewest parameters as well as the smallest size, and gives the best performance in most cases. The developed models and code are available at https://github.com/navchetan-awasthi/US-Bandwidth-Improvement (accessed on 5 December 2022).

Table 4.

Total parameters including the Model parameters (Trainable Parameters and Non-trainable Parameters) and Model Size are shown for all the models.

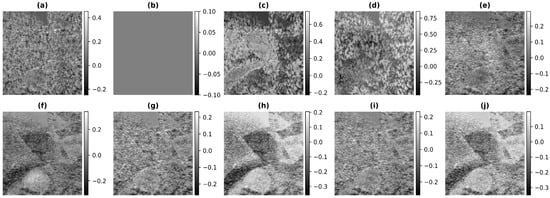

6.1. Error Analysis

We performed an error analysis to quantify how much the reconstructions obtained with the different methods differ from the full bandwidth reconstruction for Phantom I. Figure 9 presents the error between the output image and the target image for the different models, using Phantom I as the test dataset. Figure 9a shows the difference between the full bandwidth reconstruction (Figure 4b) and the band-limited reconstruction obtained after reducing the frequency information to 20% of the full bandwidth. The errors obtained using histogram equalization and contrast limited adaptive histogram equalization are shown in Figure 9c,d. As can be seen from these error maps, their dynamic range is larger than that of the band-limited image because of over-enhancement, so the error is higher for the histogram-based methods. The errors obtained using U-Net are shown in Figure 9e and Figure 9f, those obtained using SRCNN in Figure 9g and Figure 9h, and those obtained using REDNet in Figure 9i and Figure 9j, for the loss functions PL1 and PL2, respectively. The dynamic range of the error (see the colorbar) reflects the improvement in the reconstruction, as it is smaller for the DL-based methods. Furthermore, less visible structure in the error map indicates a better reconstruction. Figure 9e,g,i show less error than Figure 9f,h,j, which implies that the reconstruction errors obtained with the PL1 loss are lower than those obtained with the PL2 loss, as also reflected in Table 2.
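The error maps and their colorbar range can be sketched as follows, with the error defined as full bandwidth minus reconstruction. The sign convention is an assumption and does not affect the range; the toy images are hypothetical.

```python
def error_map(reconstruction, full_bw):
    """Pixel-wise error: full bandwidth reference minus reconstruction."""
    return [[f - r for r, f in zip(rec_row, ref_row)]
            for rec_row, ref_row in zip(reconstruction, full_bw)]


def error_range(err):
    """Dynamic range (max - min) of an error map, i.e. the span of its colorbar."""
    flat = [e for row in err for e in row]
    return max(flat) - min(flat)


if __name__ == "__main__":
    full_bw = [[0, 10], [20, 30]]          # hypothetical toy images
    coarse = [[5, 5], [25, 25]]            # stands in for a band-limited image
    refined = [[1, 9], [21, 29]]           # stands in for a model output
    print(error_range(error_map(coarse, full_bw)), error_range(error_map(refined, full_bw)))
    # prints "10 2"
```

A smaller range alone does not guarantee a better image, which is why the text also looks at the amount of visible structure in the error maps.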

Figure 9.

Results of the error of the bandwidth improvement for Phantom I for the different models (a) Band-limited image (b) Full Bandwidth image (c) Histogram Equalization (d) Contrast Limited Adaptive Histogram Equalization (e) U-Net-PL1 (f) U-Net-PL2 (g) SRCNN-PL1 (h) SRCNN-PL2 (i) REDNet-PL1 (j) REDNet-PL2.

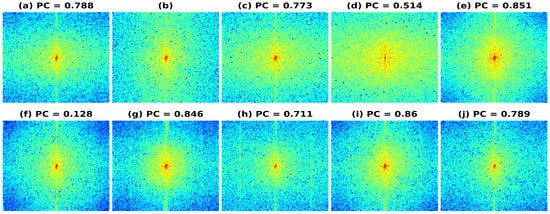

6.2. Frequency Domain Comparison

We also investigated the frequency spectra of the reconstructions to understand the improvement in their spatial bandwidth. Figure 10 plots the frequency spectra obtained for the different models using Phantom I reconstructed data as input. Figure 10a shows the frequency spectrum of the band-limited reconstruction, obtained after reducing the frequency information to 20% of the full bandwidth spectrum (shown in Figure 10b). The frequency spectra obtained using HE and CLAHE are shown in Figure 10c and Figure 10d, respectively. Their lower PC values indicate a reduced correlation of the frequency components, so these reconstructions do not improve over the band-limited reconstruction. The frequency spectra obtained using U-Net, SRCNN, and REDNet are shown in Figure 10e–j. The reconstruction errors obtained with the PL1 loss are lower than those obtained with the PL2 loss, which is also reflected in higher PC values for PL1 and lower PC values for PL2. The PC values are consistent with the RMSE and PSNR values in Table 2: a high RMSE and low PSNR coincide with a low PC, and vice versa. The spectra of the reconstructions obtained using the DL-based methods with the PL1 loss are similar to the spectrum of the full bandwidth reconstruction.

Figure 10.

Results of the frequency spectrum of the bandwidth improvement for Phantom II for the different models (a) Band-limited image (b) Full Bandwidth image (c) Histogram Equalization (d) Contrast Limited Adaptive Histogram Equalization (e) U-Net-PL1 (f) U-Net-PL2 (g) SRCNN-PL1 (h) SRCNN-PL2 (i) REDNet-PL1 (j) REDNet-PL2.

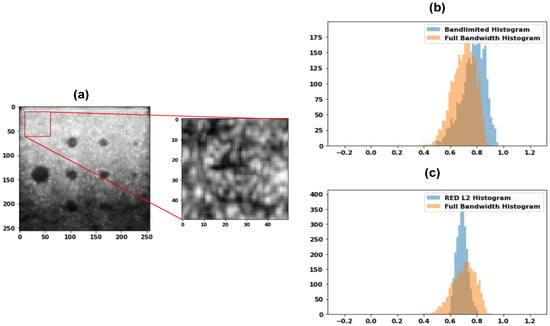

6.3. Histogram Analysis

Finally, we analyzed the first-order speckle statistics, i.e., the histogram of the speckle in the envelope images, for the band-limited data, the full-bandwidth data, and the images obtained using the DL-based techniques. Figure 11a shows the commercial phantom used to characterize the speckle, together with the image region used for the histogram comparison. The histograms of the band-limited and the full-bandwidth data are compared in Figure 11b, and those of the REDNet-PL2 model and the full-bandwidth data in Figure 11c. The histogram overlap is larger for the REDNet-PL2 output than for the band-limited reconstruction, i.e., its speckle is statistically more similar to that of the full-bandwidth reconstruction, which brings its visual quality closer to the full-bandwidth image. Next, we analyzed the speckle size. The autocorrelation of the speckle pattern is computed, and its full width at half maximum (FWHM) is taken as the speckle size. Because the US imaging resolution is anisotropic, the speckle is elliptical, and the uniformity of the resolution is quantified by the eccentricity of the ellipse whose axes are the longest and the shortest speckle dimensions. This first eccentricity is given by:

$$e = \sqrt{1 - \frac{a^2}{b^2}}$$

where $a$ and $b$ are the shortest and the longest axis, respectively.
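The speckle-size analysis can be sketched as follows, using the first eccentricity e = sqrt(1 - a²/b²) with a the shortest and b the longest axis. This is a minimal sketch under assumptions: the autocorrelation is computed on a mean-subtracted envelope patch via the FFT (Wiener-Khinchin theorem), the FWHM is measured along the row and column through the autocorrelation peak with linear interpolation, and rows are taken as the axial (depth) direction. All function names are hypothetical.

```python
import numpy as np


def autocorrelation_2d(patch):
    """Normalised linear 2-D autocorrelation of a patch via the FFT (Wiener-Khinchin)."""
    p = np.asarray(patch, dtype=float)
    p = p - p.mean()
    padded = [2 * s for s in p.shape]                 # zero-pad to avoid circular wrap-around
    power = np.abs(np.fft.fft2(p, s=padded)) ** 2
    ac = np.fft.fftshift(np.fft.ifft2(power).real)   # move zero lag to the centre
    return ac / ac.max()


def fwhm_1d(profile):
    """Full width at half maximum (in samples), linearly interpolated."""
    y = np.asarray(profile, dtype=float)
    peak = int(np.argmax(y))
    half = y[peak] / 2.0
    left, right = peak, peak
    while left > 0 and y[left - 1] >= half:
        left -= 1
    while right < len(y) - 1 and y[right + 1] >= half:
        right += 1
    if left == 0 or right == len(y) - 1:
        raise ValueError("profile does not fall below half maximum on both sides")
    x_left = left - 1 + (half - y[left - 1]) / (y[left] - y[left - 1])
    x_right = right + (y[right] - half) / (y[right] - y[right + 1])
    return x_right - x_left


def first_eccentricity(a, b):
    """e = sqrt(1 - a^2 / b^2) with a the shortest and b the longest axis."""
    return float(np.sqrt(1.0 - (a / b) ** 2))


def speckle_eccentricity(patch):
    """Return (eccentricity, axial FWHM, lateral FWHM) of the speckle autocorrelation.

    Assumes rows are depth (axial) and columns are lateral positions.
    """
    ac = autocorrelation_2d(patch)
    cy, cx = np.unravel_index(np.argmax(ac), ac.shape)
    axial = fwhm_1d(ac[:, cx])
    lateral = fwhm_1d(ac[cy, :])
    a, b = sorted((axial, lateral))
    return first_eccentricity(a, b), axial, lateral
```

Computing `speckle_eccentricity` for each frame and taking the mean and standard deviation over the frames gives values in the mean ± standard deviation form reported in this section.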

Figure 11.

(a) Sample commercial phantom image and the patch area analyzed; (b) comparison of the histograms of the band-limited and full-bandwidth data; (c) comparison of the histograms of the REDNet-PL2 model output and the full-bandwidth data.
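The section does not state how the histogram overlap is quantified. One common choice, used here purely as an assumption, is the histogram intersection: both envelope patches are binned on shared bin edges, each histogram is normalised to unit sum, and the overlap is the sum of the bin-wise minima. The hypothetical helper below implements that; in the usage test, Rayleigh-distributed samples stand in for fully developed speckle.

```python
import numpy as np


def histogram_overlap(patch_a, patch_b, bins=64):
    """Histogram intersection of two envelope patches.

    Both histograms use the same bin edges and are normalised to unit sum;
    the result lies in [0, 1], with 1 meaning identical histograms.
    """
    a = np.ravel(patch_a).astype(float)
    b = np.ravel(patch_b).astype(float)
    lo = min(a.min(), b.min())
    hi = max(a.max(), b.max())
    if hi == lo:  # both patches constant and equal
        return 1.0
    edges = np.linspace(lo, hi, bins + 1)
    hist_a, _ = np.histogram(a, bins=edges)
    hist_b, _ = np.histogram(b, bins=edges)
    p = hist_a / hist_a.sum()
    q = hist_b / hist_b.sum()
    return float(np.minimum(p, q).sum())
```

Using shared bin edges matters: binning each patch on its own range would make histograms of differently scaled envelopes look artificially similar.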

For isotropic speckle the ellipse reduces to a circle and the eccentricity is zero; because the resolution is anisotropic, the speckle is not circular and a value of zero is not reached in practice, especially at larger depths. We computed the eccentricity before and after bandwidth improvement, averaged over a set of 10 frames. The mean eccentricity was 0.458 ± 0.073 for the band-limited images and 0.237 ± 0.205 for the full-bandwidth images. For the images obtained with the REDNet model it was 0.296 ± 0.117, i.e., closer to the full-bandwidth value than that of the band-limited images.

In addition, numerous DL models have recently been proposed for image super-resolution, and these could also be used to improve the bandwidth of US images. A super-resolution network based on residual self-encoding and an attention mechanism was proposed in Ref. [56], and a multi-scale convolution-based model in Ref. [57]. An efficient model based on neural architecture search and multiple attention mechanisms was proposed in Ref. [58]. Other encoder-decoder networks are also available, such as the binocular rivalry oriented predictive autoencoding network for blind stereoscopic image quality measurement [59] and the wavelet-based deep auto encoder-decoder (WDAED) for image compression [60]; with suitable modifications, these could also be applied to bandwidth improvement. All of these models have been shown to improve PSNR and the structural similarity index measure (SSIM) on natural images, and they could be explored in future research on bandwidth improvement in US images.

6.4. Discussion

The models were trained only on the Phantom I dataset and tested on the other datasets. Their performance on these unseen datasets indicates that they generalize well, which suggests that they could be applied in practice without retraining. As can be seen from Table 2, the REDNet-PL1 model performs best in most cases, across the different bandwidth limitations and test datasets. The REDNet-PL1 model is therefore a suitable choice for improving band-limited reconstructions.

The following factors should be considered for potential practical applications of the architectures.

- Firstly, the degree of bandwidth limitation applied to the full-bandwidth data. A strongly band-limited reconstruction may lose too much information for the model to predict certain structures that were present in the original images. These structures are then completely or partially omitted from the model-enhanced reconstructions, which results in a markedly worse metric score, depending on the dataset and the bandwidth limitation.

- Secondly, the models have difficulty predicting the speckle in US images, which leads to smoothed images in some of the results. The loss of speckle can reduce the usefulness of the images, because speckle is often used for diagnostic purposes, for example to characterize the tissue type [61]. However, most US systems already apply some speckle reduction in post-processing, so this should not be a major problem for routine B-mode imaging, provided that resolution and image quality improve.

For future research, several directions could be considered. Firstly, the models currently use either an MSE loss or a combination of a VGG16 perceptual loss and MSE. Because the models smooth the images in some cases, other loss functions could be considered, for example a texture loss as in [62]. A texture loss might produce less smoothed images whose texture is closer to the original, and thus retain the speckle. Secondly, the current models cannot predict certain structures under severe bandwidth limitation. The current models are trained with a 20% fractional bandwidth; training a generalized network on all data, with inputs at different bandwidth limitations, would show for which range of bandwidth limitation these structures can or cannot be predicted. Lastly, similar to multi-noise-level image denoising, where inputs with various noise levels are used [63], including images with different bandwidth limitations in the input set could make the models more robust to different degrees of bandwidth limitation.
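The texture loss suggested above can be sketched with Gram matrices of feature maps, in the spirit of EnhanceNet [62]. This is a minimal NumPy sketch under assumptions: the feature extractor (for example selected VGG16 layers) is not included, EnhanceNet's patch-wise computation on specific layers is omitted, and the function names are hypothetical. In a Keras/TensorFlow training loop the same operations would be written with framework tensor ops.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of a (C, H, W) feature map, normalised by the number of positions."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


def texture_loss(feat_pred, feat_ref):
    """Mean squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(feat_pred) - gram_matrix(feat_ref)
    return float(np.mean(diff ** 2))


# Example: random arrays standing in for feature maps of a prediction and a reference.
rng = np.random.default_rng(2)
feat_ref = rng.standard_normal((8, 16, 16))
feat_pred = rng.standard_normal((8, 16, 16))
loss = texture_loss(feat_pred, feat_ref)
```

Because the Gram matrix discards spatial arrangement, a spatially shuffled feature map gives (numerically) zero texture loss while its pixel-wise MSE is large. Such a loss therefore penalises the loss of speckle statistics, for example through smoothing, without requiring the speckle pattern to be reproduced pixel by pixel.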

7. Conclusions

In conclusion, DL-based architectures trained with two different losses were evaluated for transforming low-bandwidth images into high-bandwidth images and compared with histogram-based techniques. The results show that the DL-based methods transform the band-limited reconstruction into an image comparable to one obtained with the full US bandwidth, and can thus be used to improve reconstruction. The REDNet architecture shows improvements as high as 45.5% in RMSE and 3.85 dB in PSNR for a 20% bandwidth limitation. It is lightweight compared to U-Net and has a lower computational cost, which makes it a candidate for real-time use.

Author Contributions

Conceptualization, algorithm development, data analysis, methodology, writing—original draft preparation, writing—review and editing, N.A.; algorithm development and data analysis, L.v.A.; experimental setup and data acquisition, G.J.; experimental setup, data acquisition and manuscript writing, H.-M.S.; editing, supervision, funding acquisition and manuscript writing, J.P.W.P. and R.G.P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is funded in part by the 4TU Precision Medicine program supported by High Tech for a Sustainable Future, a framework commissioned by the four Universities of Technology of the Netherlands.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors have no conflict of interest.

References

1. Carovac, A.; Smajlovic, F.; Junuzovic, D. Application of Ultrasound in Medicine. Acta Inform. Medica 2011, 19, 168–171.

2. Wells, P.N. Ultrasound imaging. Phys. Med. Biol. 2006, 51, R83.

3. Cikes, M.; Tong, L.; Sutherland, G.R.; D’Hooge, J. Ultrafast cardiac ultrasound imaging: Technical principles, applications, and clinical benefits. JACC Cardiovasc. Imaging 2014, 7, 812–823.

4. Nicolau, C.; Ripollés, T. Contrast-enhanced ultrasound in abdominal imaging. Abdom. Imaging 2012, 37, 1–19.

5. Woo, J. A short history of the development of ultrasound in obstetrics and gynecology. Hist. Ultrasound Obstet. Gynecol. 2002, 3, 1–25.

6. Szabo, T.L.; Lewin, P.A. Ultrasound transducer selection in clinical imaging practice. J. Ultrasound Med. 2013, 32, 573–582.

7. Szabo, T.L. Diagnostic Ultrasound Imaging: Inside Out; Academic Press: New York, NY, USA, 2004.

8. Szabo, T.L.; Lewin, P.A. Piezoelectric materials for imaging. J. Ultrasound Med. 2007, 26, 283–288.

9. Reid, J.; Lewin, P. Ultrasound imaging transducers. Encycl. Electr. Electron. Eng. 1999, 22, 664–672.

10. Maresca, D.; Renaud, G.; van Soest, G.; Li, X.; Zhou, Q.; Shung, K.K.; De Jong, N.; Van der Steen, A.F. Contrast-Enhanced Intravascular Ultrasound Pulse Sequences for Bandwidth-Limited Transducers. Ultrasound Med. Biol. 2013, 39, 706–713.

11. Wong, C.M.; Chen, Y.; Luo, H.; Dai, J.; Lam, K.H.; Chan, H.L.W. Development of a 20-MHz wide-bandwidth PMN-PT single crystal phased-array ultrasound transducer. Ultrasonics 2017, 73, 181–186.

12. Foster, F.; Zhang, M.; Zhou, Y.; Liu, G.; Mehi, J.; Cherin, E.; Harasiewicz, K.; Starkoski, B.; Zan, L.; Knapik, D.; et al. A new ultrasound instrument for in vivo microimaging of mice. Ultrasound Med. Biol. 2002, 28, 1165–1172.

13. Rashid, M.W.; Carpenter, T.; Tekes, C.; Pirouz, A.; Jung, G.; Cowell, D.; Freear, S.; Ghovanloo, M.; Degertekin, F.L. Front-end electronics for cable reduction in Intracardiac Echocardiography (ICE) catheters. In Proceedings of the 2016 IEEE International Ultrasonics Symposium, IUS, Tours, France, 18–21 September 2016.

14. Benane, Y.M.; Lavarello, R.; Bujoreanu, D.; Cachard, C.; Varray, F.; Savoia, A.S.; Franceschini, E.; Basset, O. Ultrasound bandwidth enhancement through pulse compression using a CMUT probe. In Proceedings of the 2017 IEEE International Ultrasonics Symposium, IUS, Washington, DC, USA, 6–9 September 2017.

15. Costa-Felix, R.; Machado, J.C. Output bandwidth enhancement of a pulsed ultrasound system using a flat envelope and compensated frequency-modulated input signal: Theory and experimental applications. Measurement 2015, 69, 146–154.

16. Sainath, T.N.; Kingsbury, B.; Saon, G.; Soltau, H.; Mohamed, A.R.; Dahl, G.; Ramabhadran, B. Deep Convolutional Neural Networks for Large-scale Speech Tasks. Neural Netw. 2015, 64, 39–48.

17. Bordes, A.; Chopra, S.; Weston, J. Question answering with subgraph embeddings. In Proceedings of the EMNLP 2014—2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014.

18. Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-net: Imagenet classification using binary convolutional neural networks. In Computer Vision—ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016.

19. Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3523–3542.

20. Zhang, Y.; Li, K.; Li, K.; Zhong, B.; Fu, Y. Residual non-local attention networks for image restoration. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019.

21. Tai, Y.; Yang, J.; Liu, X. Image super-resolution via deep recursive residual network. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.

22. Cheng, G.; Matsune, A.; Li, Q.; Zhu, L.; Zang, H.; Zhan, S. Encoder-decoder residual network for real super-resolution. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

23. Mao, X.J.; Shen, C.; Yang, Y.B. Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

24. Shiri, I.; Akhavanallaf, A.; Sanaat, A.; Salimi, Y.; Askari, D.; Mansouri, Z.; Shayesteh, S.P.; Hasanian, M.; Rezaei-Kalantari, K.; Salahshour, A.; et al. Ultra-low-dose chest CT imaging of COVID-19 patients using a deep residual neural network. Eur. Radiol. 2021, 31, 1420–1431.

25. Christensen-Jeffries, K.; Couture, O.; Dayton, P.A.; Eldar, Y.C.; Hynynen, K.; Kiessling, F.; O’Reilly, M.; Pinton, G.F.; Schmitz, G.; Tang, M.X.; et al. Super-resolution ultrasound imaging. Ultrasound Med. Biol. 2020, 46, 865–891.

26. Van Sloun, R.J.; Cohen, R.; Eldar, Y.C. Deep learning in ultrasound imaging. Proc. IEEE 2019, 108, 11–29.

27. Awasthi, N.; Vermeer, L.; Fixsen, L.S.; Lopata, R.G.; Pluim, J.P. LVNet: Lightweight Model for Left Ventricle Segmentation for Short Axis Views in Echocardiographic Imaging. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2022, 69, 2115–2128.

28. Awasthi, N.; Dayal, A.; Cenkeramaddi, L.R.; Yalavarthy, P.K. Mini-COVIDNet: Efficient lightweight deep neural network for ultrasound based point-of-care detection of COVID-19. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2021, 68, 2023–2037.

29. Chaudhari, A.S.; Fang, Z.; Kogan, F.; Wood, J.; Stevens, K.J.; Gibbons, E.K.; Lee, J.H.; Gold, G.E.; Hargreaves, B.A. Super-resolution musculoskeletal MRI using deep learning. Magn. Reson. Med. 2018, 80, 2139–2154.

30. Chun, J.; Zhang, H.; Gach, H.M.; Olberg, S.; Mazur, T.; Green, O.; Kim, T.; Kim, H.; Kim, J.S.; Mutic, S.; et al. MRI super-resolution reconstruction for MRI-guided adaptive radiotherapy using cascaded deep learning: In the presence of limited training data and unknown translation model. Med. Phys. 2019, 46, 4148–4164.

31. Perdios, D.; Vonlanthen, M.; Martinez, F.; Arditi, M.; Thiran, J.P. CNN-based image reconstruction method for ultrafast ultrasound imaging. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2021, 69, 1154–1168.

32. Yoon, Y.H.; Khan, S.; Huh, J.; Ye, J.C. Efficient B-Mode Ultrasound Image Reconstruction From Sub-Sampled RF Data Using Deep Learning. IEEE Trans. Med. Imaging 2019, 38, 325–336.

33. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

34. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, Proceedings of the MICCAI 2015—18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015.

35. Gholizadeh-Ansari, M.; Alirezaie, J.; Babyn, P. Deep learning for low-dose CT denoising using perceptual loss and edge detection layer. J. Digit. Imaging 2020, 33, 504–515.

36. Heinrich, M.P.; Stille, M.; Buzug, T.M. Residual U-Net convolutional neural network architecture for low-dose CT denoising. Curr. Dir. Biomed. Eng. 2018, 4, 297–300.

37. Awasthi, N.; Jain, G.; Kalva, S.K.; Pramanik, M.; Yalavarthy, P.K. Deep neural network-based sinogram super-resolution and bandwidth enhancement for limited-data photoacoustic tomography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2020, 67, 2660–2673.

38. Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning deep CNN denoiser prior for image restoration. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.

39. Wang, Q.; Ma, Y.; Zhao, K.; Tian, Y. A comprehensive survey of loss functions in machine learning. Ann. Data Sci. 2022, 9, 187–212.

40. Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.; Elsen, E.; Garcia, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed precision training. arXiv 2017, arXiv:1710.03740.

41. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems, 2015. Software. Available online: https://tensorflow.org (accessed on 1 March 2021).

42. Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 1 March 2021).

43. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

44. Jansen, G.; Awasthi, N.; Schwab, H.M.; Lopata, R. Enhanced Radon Domain Beamforming Using Deep-Learning-Based Plane Wave Compounding. In Proceedings of the 2021 IEEE International Ultrasonics Symposium (IUS), Xi’an, China, 11–16 September 2021; pp. 1–4.

45. Parker, N.; Povey, M. Ultrasonic study of the gelation of gelatin: Phase diagram, hysteresis and kinetics. Food Hydrocoll. 2012, 26, 99–107.

46. Li, Y.; Wang, W.; Yu, D. Application of adaptive histogram equalization to X-ray chest images. In Proceedings of the Second International Conference on Optoelectronic Science and Engineering’94, Beijing, China, 15–18 August 1994; Volume 2321, pp. 513–514.

47. Zimmerman, J.B.; Pizer, S.M.; Staab, E.V.; Perry, J.R.; McCartney, W.; Brenton, B.C. An evaluation of the effectiveness of adaptive histogram equalization for contrast enhancement. IEEE Trans. Med. Imaging 1988, 7, 304–312.

48. Lim, J.S. Two-Dimensional Signal and Image Processing; Prentice Hall: Englewood Cliffs, NJ, USA, 1990.

49. Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Pearson Education (Singapore) Pte. Ltd.: Delhi, India, 2002.

50. Kim, Y.T. Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans. Consum. Electron. 1997, 43, 1–8.

51. Zuiderveld, K. Contrast limited adaptive histogram equalization. In Graphics Gems; Academic Press Professional, Inc.: Cambridge, MA, USA, 1994; pp. 474–485.

52. Pai, P.P.; De, A.; Banerjee, S. Accuracy enhancement for noninvasive glucose estimation using dual-wavelength photoacoustic measurements and kernel-based calibration. IEEE Trans. Instrum. Meas. 2018, 67, 126–136.

53. Horé, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010.

54. Awasthi, N.; Kalva, S.K.; Pramanik, M.; Yalavarthy, P.K. Dimensionality reduced plug and play priors for improving photoacoustic tomographic imaging with limited noisy data. Biomed. Opt. Express 2021, 12, 1320–1338.

55. Benesty, J.; Chen, J.; Huang, Y.; Cohen, I. Pearson correlation coefficient. In Noise Reduction in Speech Processing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–4.

56. Yang, X.; Wang, S.; Han, J.; Guo, Y.; Li, T. RSAMSR: A deep neural network based on residual self-encoding and attention mechanism for image super-resolution. Optik 2021, 245, 167736.

57. Yang, X.; Zhu, Y.; Guo, Y.; Zhou, D. An image super-resolution network based on multi-scale convolution fusion. Vis. Comput. 2022, 38, 4307–4317.

58. Yang, X.; Fan, J.; Wu, C.; Zhou, D.; Li, T. NasmamSR: A fast image super-resolution network based on neural architecture search and multiple attention mechanism. Multimed. Syst. 2022, 28, 321–334.

59. Xu, J.; Zhou, W.; Chen, Z.; Ling, S.; Le Callet, P. Binocular rivalry oriented predictive autoencoding network for blind stereoscopic image quality measurement. IEEE Trans. Instrum. Meas. 2020, 70, 1–13.

60. Mishra, D.; Singh, S.K.; Singh, R.K. Wavelet-based deep auto encoder-decoder (wdaed)-based image compression. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 1452–1462.

61. Damerjian, V.; Tankyevych, O.; Souag, N.; Petit, E. Speckle characterization methods in ultrasound images—A review. IRBM 2014, 35, 202–213.

62. Sajjadi, M.S.; Scholkopf, B.; Hirsch, M. EnhanceNet: Single image super-resolution through automated texture synthesis. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

63. Agostinelli, F.; Anderson, M.R.; Lee, H. Adaptive multi-column deep neural networks with application to robust image denoising. In Proceedings of the Advances in Neural Information Processing Systems 2013, Lake Tahoe, NV, USA, 5–10 December 2013.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).