Abstract

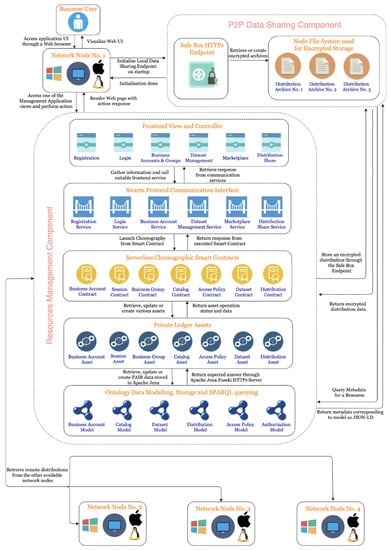

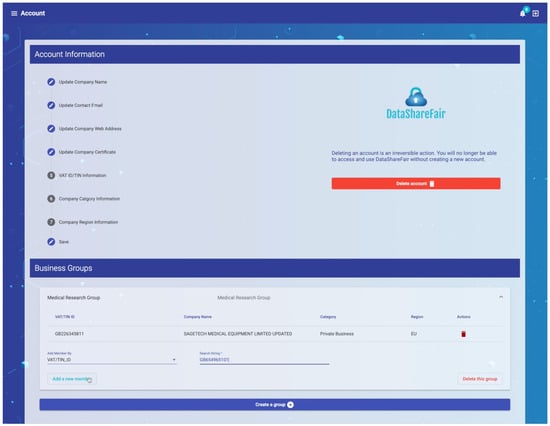

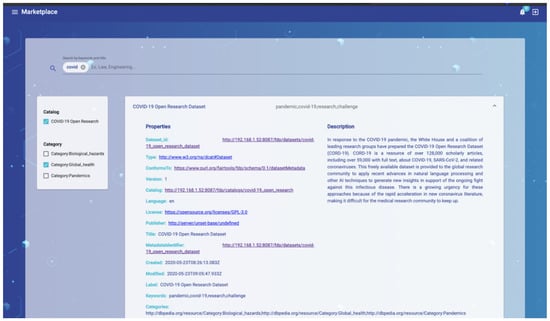

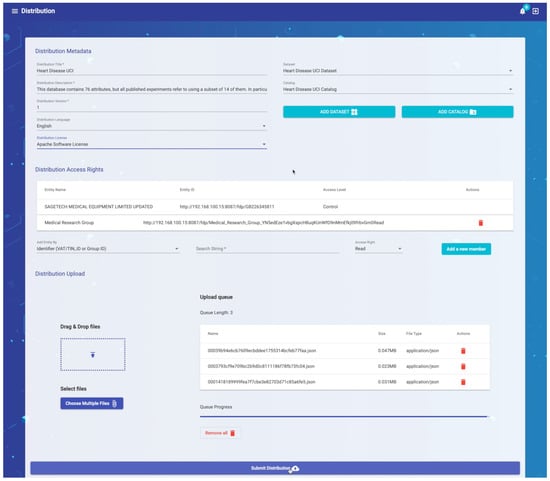

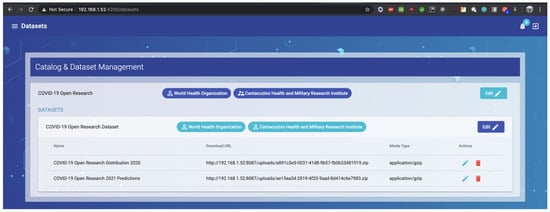

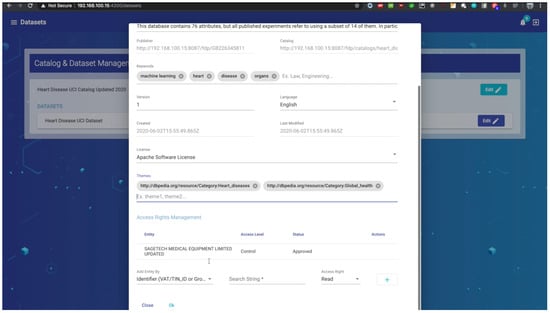

Sharing data along the economic supply/demand chain is a catalyst for improving the performance of a digitized business sector. In this context, designing automatic mechanisms for structured data exchange, which should also ensure the proper development of B2B processes in a regulated environment, becomes a necessity. Even though the data format used for sharing can be modelled using the open data methodology, we propose the use of FAIR principles to additionally offer business entities a way to define commonly agreed upon supply, access and ownership procedures. To manage the FAIR-modelled metadata, we propose a series of methodologies, which we integrated in a data marketplace platform developed to ensure they are properly applied. For its design, we modelled a decentralized architecture based on our own blockchain mechanisms. In our proposal, each business entity can host and structure its metadata in catalog, dataset and distribution assets. In order to offer businesses full control over the data supplied through our system, we designed and implemented a sharing mechanism based on access policies defined by the business entity directly in our data marketplace platform. In the proposed approach, metadata-based assets can be shared between two or more businesses, which are able to access the data manually in the management interface and programmatically through an authorized data point. Business-specific transactions proposed to modify the semantic model are validated using our own blockchain-based technologies. As a result, the security and integrity of the FAIR data in the collaboration process are ensured. From an architectural point of view, the lack of a central authority managing the circulated data ensures that businesses have full control of the terms and conditions under which their data is used.

1. Cross-Sector B2B Data Sharing and Its Impact on the Companies Ecosystem

For a long time, companies relied strictly on traditional Business-to-Business (B2B) transactions to evolve. These transactions refer to purchasing and selling physical raw goods, with the goal of completing the product manufacturing process []. Usually, the resulting product forms the basis of Business-to-Consumer (B2C) transactions. In order to ensure the success of B2C transactions, all companies involved in the B2B collaboration chain need to have an overview of the demand for raw goods and of their supply capacity in comparison to similar businesses []. As a result, data sharing between companies has become a key aspect of growing business opportunities in recent years. B2B data sharing refers to “making data available to or accessing data from other companies for business purposes” [], either for free or in exchange for a payment to the data holder. The business owner can choose with whom to share the data and under which conditions.

In [], the author emphasizes the need to share data between well-established companies in order to encourage innovation. Data sharing is a prerequisite, since one company alone cannot see the complete picture of the economic supply/demand chain. When data from multiple businesses is gathered through this process, customer experience in relation to several products can also be easily identified. This helps business owners adjust their plans and products to fulfill customer needs and expectations. Companies which involve themselves in B2B processes with their suppliers and their buyers will be able to see issues in new and existing business initiatives from multiple perspectives. As a result, business representatives will understand each other’s difficulties in proposed growth plans and will focus on finding solutions that benefit everyone.

In order to achieve a high-performing business model, designing efficient B2B data sharing processes is crucial. One of the main difficulties encountered in their implementation is the dependency between the business users and the technology department. The latter is required to provide data adapters with custom logic, which depends on the data provider. Another issue in B2B data sharing implementation arises from the diversity of data formats, protocols and standards through which each provider chooses to deliver its data. On a platform specifically designed for the B2B data sharing use case, a large number of transactions over the datasets are performed concurrently by the collaborating businesses. Consequently, synchronization and consensus mechanisms need to be implemented in the data sharing process [,].

Companies which engage in B2B data sharing processes tend to estimate the effort needed to integrate custom mechanisms in the existing business model, in order to assess the feasibility of the whole process. In the B2B perspectives report [], drawn up by the European Commission, several categories of techniques and strategies for the exchange of shared data are outlined:

- Data monetization: companies are willing to share part of their data in order to increase their business revenues; according to a Gartner report on “Magic Quadrant for Analytics and Business Intelligence Platforms” [], several corporations consider data sharing for profit an important part of their business strategy, amongst which Microsoft and Tableau are leaders, Oracle and Salesforce are visionaries, while IBM and Alibaba Cloud can be considered niche players; there are also businesses such as MicroStrategy and Looker which constantly challenge the established techniques for B2B, which leads to improvements and new discoveries;

- Public data marketplaces: rely on public trusted entities that connect both data sellers and data buyers in one environment; usually, a transaction fee is charged for all exchanges in order to keep the platform alive;

- Industrial data platforms: a secure and private environment restricted to a group of companies which voluntarily exchange data for free in order to facilitate the development of new products and services;

- Technical enablers: businesses which specialize in creating custom data sharing flows for companies;

- Open data policy: companies sharing part of their data in a completely open manner.

From a technical point of view, companies involved in creating public data marketplaces, industrial data platforms and technical enablers offer solutions which mediate the B2B data sharing process. We analyzed the most representative technical solutions, together with their advantages and disadvantages.

A major player which monetizes B2B data sharing processes through its public data marketplace is DataPace []. This company offers a decentralized global-scale marketplace to facilitate the trade of data collected from a variety of sensors and IoT devices. Businesses can sell and buy sensor-collected data through DataPace, much like on e-store web sites, in a secure environment powered by the Hyperledger Fabric blockchain technology. Some advantages of this approach are the ability to tokenize the value of data (through platform specific tokens), to ensure its integrity through hashes stored in the blockchain and to enable smart contract capabilities. The stakeholders of this platform are the data sellers, data buyers and validators. The validators are entities responsible for validating the blocks created through the transactions of the other stakeholders in the network. Network security is ensured through the PBFT consensus algorithm, which keeps the system fault tolerant as long as fewer than one third of the nodes are faulty or malicious. The actual data sharing process is facilitated by smart contracts defined in the system between companies, which contain the set of conditions under which data is exchanged. This approach reduces the transaction costs associated with creating a traditional legal contract and enhances the security of the transaction. The solution is highly scalable, a characteristic ensured by the underlying decentralized architecture. A strong downside of this solution is that no privacy enhancing mechanisms are included by default. This aspect must be ensured by the companies developing the smart contracts. Data governance mechanisms are not available by default either, which may result in issues of ownership and control of usage over the shared data. Furthermore, the shared data is not annotated to be reusable and interoperable, which may lead to redundancy issues and the need to build a custom integration for each B2B process. Another limitation of this platform is that the shared data is strictly collected from IoT sensors and systems, which limits its usage in other B2B contexts.
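The fault-tolerance bound and the hash-based integrity check mentioned above can be illustrated with a short sketch. It does not reproduce DataPace's implementation: the function names `max_faulty_nodes`, `fingerprint` and `is_intact` are hypothetical, SHA-256 is an assumed choice of hash function, and the only protocol fact relied upon is the standard PBFT requirement that a network of n nodes tolerates f faulty nodes when n ≥ 3f + 1.

```python
import hashlib


def max_faulty_nodes(n: int) -> int:
    """Largest f such that n >= 3f + 1 (the standard PBFT bound)."""
    if n < 1:
        raise ValueError("a network needs at least one node")
    return (n - 1) // 3


def fingerprint(payload: bytes) -> str:
    """SHA-256 digest that a ledger could store instead of the raw data."""
    return hashlib.sha256(payload).hexdigest()


def is_intact(payload: bytes, stored_digest: str) -> bool:
    """Check a received payload against the digest recorded earlier."""
    return fingerprint(payload) == stored_digest


if __name__ == "__main__":
    for n in (4, 7, 10):
        print(n, "validators tolerate", max_faulty_nodes(n), "faulty node(s)")

    reading = b'{"sensor": "t-01", "celsius": 21.5}'  # made-up sample payload
    digest = fingerprint(reading)
    print(is_intact(reading, digest))         # unchanged payload
    print(is_intact(reading + b" ", digest))  # tampered payload
```

Storing only the digest on the ledger keeps the payload itself off-chain, so a buyer can verify what was received without the ledger ever holding the data.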

An emerging technical enabler for B2B data sharing is proposed by Epimorphics [], which provides a suite of services and tools for linked data management. These services offer support for commercial customers to model their data and build applications relying on the obtained linked datasets. They also offer consultancy services with customizations depending on each business’s requests. The data management platform has a three-tiered architecture, comprising load balancing and routing, application services and storage. Load balancing is ensured by providing a company with multiple RDF servers, which provide access through an API and a user interface to the linked data belonging to the business. Linked data accessible through the API is updated and maintained through a data management service, which is responsible for the conversion of unstructured data to structured data. Since the conversion process is specific to each business, Epimorphics develops different converters for each business in order to maximize the findability, accessibility, interoperability and reusability of their data, according to the FAIR principles. Epimorphics’s business model focuses on supporting companies in preparing their data for integration in B2B processes, rather than on facilitating the discovery of linked data and enabling new partnerships between companies. A significant downside of this solution is the lack of privacy centered data curation, which may lead to businesses subsequently sharing private user data. This leaves the business exposed to legal issues in terms of compliance with data confidentiality laws, such as GDPR. Another downside of this approach is the lack of integrated data governance mechanisms. Companies are able to share their datasets with their partners by providing access directly to an RDF API, authenticated through OAuth2 tokens. Nonetheless, API security through OAuth2 authentication does not guarantee that data usage can be controlled or that ownership can be asserted by the owner company.

A promising technology offering an integrated public data marketplace is iGrant.io []. It is a cloud-based data exchange and consent mediation platform, which aims to help businesses monetize the personal data they collect about users, while preserving the user privacy rights granted through GDPR and other data protection regulations. In comparison with the other B2B data platforms, iGrant.io offers data collection mechanisms for products which are user-centered. Business customers can closely manage their data within a wallet, controlling how their data is used and shared by the company they provided access to. In turn, companies can define their own terms of data usage in the available enterprise management platform, which are then submitted to users for review and consent. These features are facilitated by commercial software named MyData Operator, which companies can integrate in their software services. The operator provides business processes with access to its own indexed metadata registry, which stores references to the data in a distributed ledger. Using this registry, businesses can improve their own products and services by integrating datasets of user-consented data. A downside of the data exchange process facilitated by iGrant.io is that data governance is only addressed from the point of view of the consumer, who can choose the third party companies to share data with. No specific mechanisms are developed to ensure companies have control over the data they make available in the registry, nor can they claim ownership and control access rights over it. Other aspects to be improved are the interoperability and reusability of the data, which are not included in the current version of the software. The possibility to create formal agreements between companies in the B2B sharing process is also needed, in order to further develop privacy compliant enterprise data governance mechanisms.

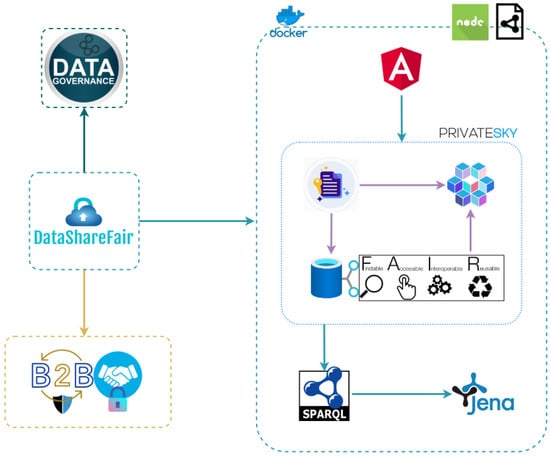

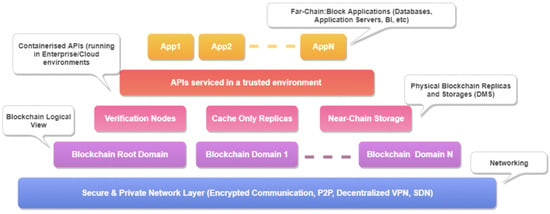

Our proposal, which we called DataShareFair, aims to provide a viable solution, with respect to data governance principles, for the commonly encountered issues of B2B data sharing strategies. It provides the advantages of public data marketplaces, while also including key features of industrial data platforms. Our solution has several original key approaches. First, its architecture follows the ‘privacy-by-design’ principles, as the platform’s functionalities are developed using our own blockchain solution centered on data confidentiality mechanisms. Second, data reusability, traceability and findability are addressed by implementing in DataShareFair a set of methodologies we proposed, based on the FAIR principles. Third, formalization of legal agreements is possible through direct and group data sharing, based on dataset specific access policies defined in the platform. Data governance mechanisms are built on top of the FAIR compliant metadata modelling and our own blockchain solution. They are included in the DataShareFair platform, whose decentralized architecture ensures businesses have full control of the terms and conditions under which their data is used.
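To make the idea of dataset specific access policies with direct and group sharing more concrete, a minimal sketch is given below. It is only an illustration under our own assumptions and not the DataShareFair implementation: the `AccessPolicy` class, its fields, the `can_access` function and the business and group names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical access policy attached to a single dataset."""
    dataset_id: str
    owner: str
    allowed_businesses: frozenset = frozenset()  # direct sharing
    allowed_groups: frozenset = frozenset()      # group sharing


def can_access(policy: AccessPolicy, business: str, member_of=()) -> bool:
    """Grant access to the owner, to directly listed businesses,
    or to members of at least one listed group."""
    if business == policy.owner:
        return True
    if business in policy.allowed_businesses:
        return True
    return bool(policy.allowed_groups & set(member_of))


if __name__ == "__main__":
    policy = AccessPolicy(
        dataset_id="ds-1",
        owner="acme",
        allowed_businesses=frozenset({"globex"}),
        allowed_groups=frozenset({"retail-consortium"}),
    )
    print(can_access(policy, "globex"))                          # direct share
    print(can_access(policy, "initech", ["retail-consortium"]))  # group share
    print(can_access(policy, "initech", ["other-group"]))        # no grant
```

Keeping the policy next to the dataset it protects means the data owner, rather than a central authority, decides who appears in either set.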

2. Data Modelling in B2B Processes

Digital data accessibility and timely sharing have proven to be key aspects in the innovation process of any research area. In Wilkinson (2016), the authors outline the importance of improving the currently used digital ecosystem in order to support automatic reuse of scholarly data. Since many stakeholders could benefit from this initiative, such as academia, industry, funding agencies and scholarly publishers, the FAIR data principles were established.

2.1. FAIR Principles Overview

The first formal steps towards better data management and governance were made when the “FAIR Guiding Principles for Scientific Data Management and Stewardship” [] paper was published. Its authors proposed written guidelines to improve the findability, accessibility, interoperability, and reuse of digital assets []. These principles can be used to automate the process of extracting metadata from available data. This is a very important aspect, considering the increase in complexity and volume of the information collected these days. According to [], the European Commission’s Open Research Data Pilot [] tried to incorporate these principles only a few months after their publication. The pilot’s goal is to make research results more visible in the community and easier to share between institutions. However, extensive research still needs to be performed before the FAIRification process can be applied successfully to the variety of open data currently available on the Web [].

The main rules proposed in the FAIR data principles paper should be used in the process of FAIRification, whose purpose is to make existing data compliant with the FAIR model.

The first characteristic of a dataset should be findability. To achieve this, the metadata extracted from data and provided by the authors should be rich in terms of volume and references to already standardized concepts. As an additional measure to increase its findability in the long term, a unique and persistent identifier should be provided for the shared data.

Accessibility is another key requirement when sharing and reusing the published data, according to the FAIR model. Metadata and data should be easily processed by both machines and humans, while being made accessible for manual and automatic discovery in a repository. FAIR data does not necessarily mean Open Data: the data repository and its contents may be made public under certain sharing agreement licenses, while also requiring the user to authenticate and receive an authorization to access the data. Following the aforementioned principles when implementing a data management system also contributes to the data governance process [,], one of the main issues in the case of Open Data.

Since one of the main goals of sharing data is integrating it with existing datasets used in processing workflows, interoperability is another important property. The constructed metadata should be formally defined using an accessible and broadly applicable knowledge representation language, in order to be uniform in structure and easy to integrate in existing processing and storage tools.

The main purpose of the FAIR methodology is to “optimize data reuse”. Combination of data in different contexts can only be achieved if the data and infrastructure are described by rich metadata. All data collections should provide clear information about usage licenses in order to enable references in the new metadata. The data governance process is facilitated by having accurate information about the provenance and distribution license.
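The four groups of requirements described above can be summarized as a simple completeness check over a metadata record. The sketch below is only illustrative and is not an official FAIR metric: the field names (`identifier`, `keywords`, `access_url`, `vocabulary`, `license`, `provenance` and so on) and their grouping per principle are our own assumptions, chosen to mirror the text.

```python
# Hypothetical mapping from each FAIR principle to the metadata fields
# that the surrounding text associates with it.
REQUIRED_BY_PRINCIPLE = {
    "findable": ("identifier", "title", "keywords"),
    "accessible": ("access_url", "access_protocol"),
    "interoperable": ("format", "vocabulary"),
    "reusable": ("license", "provenance"),
}


def fair_gaps(record: dict) -> dict:
    """Return, per principle, the fields that are missing or empty."""
    gaps = {}
    for principle, fields in REQUIRED_BY_PRINCIPLE.items():
        missing = [name for name in fields if not record.get(name)]
        if missing:
            gaps[principle] = missing
    return gaps


if __name__ == "__main__":
    record = {  # made-up example record
        "identifier": "https://example.org/dataset/42",
        "title": "Monthly raw material demand",
        "keywords": ["supply", "demand"],
        "access_url": "https://example.org/api/dataset/42",
        "access_protocol": "HTTPS",
        "format": "text/turtle",
        "vocabulary": "DCAT",
        "provenance": "Collected by the supplier's ERP system",
        # no "license" entry: reuse conditions are unclear
    }
    print(fair_gaps(record))
```

A record that passes such a check is not automatically FAIR, but an empty `license` or `provenance` entry is an early sign that reuse and governance will be difficult.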

2.2. Open Data Debates Approached in the FAIR Data Model

All automatic processes have one thing in common that they need in order to produce relevant results: an abundance of data from various sources. When it comes to improving the current living standards of our society, the most important data publishers are public sector actors, such as governmental institutions and NGOs. For the European Union member countries, the data sharing and disclosure process is subject to regulations imposed both at national and at EU level.

Modelling the information produced by public representatives in accordance with the open standards is part of the European Data Portal Initiative [], which intends to make the data freely available and accessible for reuse for any purpose. Even though this initiative is partially regulated by the “Public Sector Information Directive” [] and brings many benefits, such as free “flow of data, transparency and fair competition” [], several legal and technical issues arise from disclosure and uncontrolled usage.

As stated in [], the first issue that arises in the context of open data implementation and usage is governance []. Data supply, access and ownership procedures are not clearly stated, which leads to the creation of ad-hoc requirements established in custom negotiations between parties. This behavior undermines the basic characteristics of open data.

Even though the main goal of open data is to be accessible by all, sensitive information collected by governmental institutions should be kept private and disclosed only with the user’s consent, according to GDPR regulations []. Using open data to increase the reusability of existing information and to exploit research opportunities does not guarantee users the privacy they are entitled to; therefore, it raises issues of trust and creates legal barriers.

In terms of FAIR data, these issues can be partially solved by first following the Reusability guidelines, where detailed provenance of the data and metadata must be supplied. Metadata should only be released under a clear usage license. Secondly, the accessibility principles should be followed, since a protocol with support for authentication and authorization is needed to control which entities have access to data and under which circumstances. Availability of data is ensured by adhering to the findability and accessibility rules proposed in the FAIR paper []. Effective data governance cannot be applied to open data. There is no way to prove its consistency and trustworthiness, while also tracking its usage.

Another issue that arises when trying to create open data is the lack of standardization amongst existing datasets []. This leads to poor quality data that cannot be easily associated with other data in automated processes, since labels and time indicators are not used in a consistent manner. Open data guarantees neither interoperability between old and new data, nor provenance of data, since there are no written requirements for the shared metadata. This aspect is addressed by the FAIR methodology through its interoperability and reusability principles, which clearly state the metadata categories and how they should be modelled in order to obtain a processing-ready dataset.

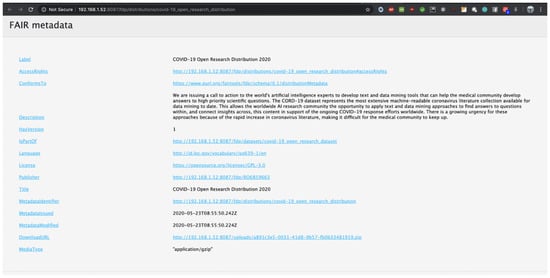

According to [], finding and accessing open data is another issue that has not been thought through. The authors emphasize the need to easily locate the data, while also keeping track of the time data were made available and last updated. To achieve these goals, they propose creating open data registries structured relative to location, which should contain datasets identified by various keywords. This solution is already similarly modelled in the FAIR Data Point specification []. A FAIR Data Point represents a registry of FAIR datasets and their metadata served through a REST API, among which we can also find information about the required time variables and keywords []. The main purpose of a FAIR Data Point is to ensure data discovery, access, publication and metrics.
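The registry idea described above can be sketched as a small in-memory structure. This is a simplified stand-in and not the FAIR Data Point specification, which serves metadata through a REST API: the `DatasetEntry` and `MetadataRegistry` names are hypothetical, and the `issued`, `modified` and `keywords` fields are our own assumptions, chosen to represent the time variables and keywords mentioned in the text.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DatasetEntry:
    """Hypothetical metadata entry for one dataset."""
    identifier: str
    title: str
    keywords: set
    issued: date    # when the data was made available
    modified: date  # when the data was last updated


class MetadataRegistry:
    """In-memory stand-in for a registry of dataset metadata."""

    def __init__(self):
        self._entries = {}

    def publish(self, entry: DatasetEntry) -> None:
        # Refuse duplicates so every identifier stays unique.
        if entry.identifier in self._entries:
            raise ValueError(f"identifier already registered: {entry.identifier}")
        self._entries[entry.identifier] = entry

    def find_by_keyword(self, keyword: str) -> list:
        """Case-insensitive keyword lookup, most recently updated first."""
        wanted = keyword.lower()
        hits = [e for e in self._entries.values()
                if wanted in {k.lower() for k in e.keywords}]
        return sorted(hits, key=lambda e: e.modified, reverse=True)


if __name__ == "__main__":
    registry = MetadataRegistry()
    registry.publish(DatasetEntry("ds-1", "Steel demand", {"steel", "demand"},
                                  date(2020, 1, 10), date(2020, 3, 1)))
    registry.publish(DatasetEntry("ds-2", "Steel supply", {"Steel", "supply"},
                                  date(2020, 2, 5), date(2020, 6, 15)))
    for entry in registry.find_by_keyword("steel"):
        print(entry.identifier, entry.modified.isoformat())
```

In a deployed data point the same lookup would be exposed through the API rather than a method call, but the information needed to answer it is the same.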

Several issues arise when it comes to sustaining the storage, delivery and maintenance costs of open data. There is no standardized way to keep metadata while erasing stale data in order to reduce costs. This situation is foreseen by the FAIR principles, which state that metadata should not be erased from a FAIR Data Point, even when the data is no longer available. The FAIR approach guarantees a way to easily find historical records of data and its publishers, while also minimizing the costs of keeping perishable data alive.
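The separation between erasing data and keeping its metadata can be illustrated as follows. The sketch is a minimal example under our own assumptions: the `Record` class, its `data_location` field and the `retire_payload` helper are hypothetical and are not taken from the FAIR Data Point specification.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Record:
    """Hypothetical metadata record that points at a stored payload."""
    identifier: str
    title: str
    publisher: str
    data_location: Optional[str]  # None once the payload has been erased


def retire_payload(record: Record) -> Record:
    """Drop the pointer to the data but keep every descriptive field."""
    return replace(record, data_location=None)


def describe(record: Record) -> str:
    status = "available" if record.data_location else "data no longer available"
    return f"{record.identifier}: {record.title} ({record.publisher}) - {status}"


if __name__ == "__main__":
    live = Record("ds-7", "Sensor readings 2019", "Acme",
                  "https://example.org/files/ds-7.csv")  # made-up location
    archived = retire_payload(live)
    print(describe(live))
    print(describe(archived))
```

The archived record still answers who published the dataset and what it contained, which is the historical trace the text refers to, while the storage cost of the payload itself disappears.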

Data redundancy also appears to be a recurrent problem in the usage of open data. Since no clear methodology has been established on how to annotate data so that it is uniquely identifiable, there is no guarantee that open means unique. The FAIR data ontology modelling guarantees uniqueness through the reusability principles, which state that each piece of metadata and data has its own identifier.

5. Conclusions

The current article, located at the intersection of B2B, Semantic Web, Blockchain and Privacy, brings both theoretical and practical contributions.

Following a comprehensive introduction to B2B data sharing, data modelling and their impact on the business ecosystem in the first two sections, in Section 3 we propose a series of data governance methodologies to manage metadata structured according to our FAIR compliant ontologies. The resulting model can be traded by companies in the B2B data sharing platform which we described in Section 3, while in Section 4 we discuss the impact of our proposal on B2B processes. The system’s features rely on our own privacy and security centered blockchain mechanisms.

As for future directions, DataShareFair should not only facilitate the B2B data sharing process, but also offer businesses opportunities to monetize their data. Given our blockchain based solution, this is possible by introducing wallet resources for each user into the current mechanisms for granting access to data. As a result, companies will be able to make their data available to others in exchange for payment in various cryptocurrencies.

Another direction for our future work is to make the platform able to automatically translate formal legal contracts between partner businesses into authorizations and access rights over the data traded through our platform.

From a technical point of view, an aspect to improve is the FAIR extraction process, by introducing tokenization and NLP techniques to extract relevant intrinsic metadata. Using these techniques, we can build and continually improve a knowledge representation model based on our FAIR ontologies. This representation model can be used to compute appropriate solutions to specific questions a business has regarding the efficiency, scalability and profitability of its model.

Using DataShareFair as a platform for data sharing and reuse in the context of business to business processes would significantly improve their efficiency and reliability. The proposed solution manages to overcome technical impediments such as interoperability and discovery issues and also addresses several legal concerns, which result in a major reduction of the associated costs. As a result, more major players from significant industries would perceive B2B data sharing processes as the road towards innovative opportunities, rather than a costly investment with potential liabilities.

Author Contributions

Conceptualization, C.G.C. and L.A.; data curation, C.G.C. and L.A.; methodology, C.G.C. and L.A.; project administration, L.A.; resources, C.G.C. and L.A.; software, C.G.C.; supervision, L.A.; validation, L.A.; visualization, C.G.C.; writing—original draft, C.G.C. and L.A.; writing—review and editing, C.G.C. and L.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

This research is partially supported by POC-A1-A1.2.3-G-2015 program, as part of the PrivateSky Project (P_40_371/13/01.09.2016) and by the Competitiveness Operational Programme Romania under Project Number SMIS 124759—RaaS-IS (Research as a Service Iasi).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gartner. New B2B Buying Journey & Its Implication for Sales. Available online: https://www.gartner.com/en/sales/insights/b2b-buying-journey (accessed on 18 April 2021).

- Stefansson, G. Business-to-business data sharing: A source for integration of supply chains. Int. J. Prod. Econ. 2002, 75, 135–146.

- Directorate-General for Communications Networks, Content and Technology. Study on Data Sharing between Companies in Europe (2018). Available online: https://op.europa.eu/en/publication-detail/-/publication/8b8776ff-4834-11e8-be1d-01aa75ed71a1/language-en (accessed on 20 April 2021).

- Myler, L. Forbes. Available online: https://www.forbes.com/sites/larrymyler/2017/09/11/data-sharing-can-be-a-catalyst-for-b2b-innovation/ (accessed on 13 September 2020).

- Informatica. B2B Data Exchange—Streamline Multi-Enterprise Data Integration 2020. Available online: https://www.informatica.com/content/dam/informatica-com/en/collateral/brochure/b2b-data-exchange_brochure_6828.pdf (accessed on 23 April 2021).

- Euro Banking Association. B2B Data Sharing: Digital Consent Management as a Driver for Data Opportunities. 2018. Available online: https://eba-cms-prod.azurewebsites.net/media/azure/production/1815/eba_2018_obwg_b2b_data_sharing.pdf (accessed on 20 April 2020).

- Gartner. Magic Quadrant for Analytics and Business Intelligence Platforms. Available online: https://www.gartner.com/en/documents/3980852/magic-quadrant-for-analytics-and-business-intelligence-p (accessed on 11 February 2020).

- Datapace. 2021. Available online: https://datapace.io (accessed on 18 April 2021).

- Epimorphics. Epimorphics. 2021. Available online: https://www.epimorphics.com/services/ (accessed on 18 April 2021).

- iGrant.io. iGrant.io. 2021. Available online: https://igrant.io/ (accessed on 18 April 2021).

- Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 160018.

- Fair, R.T. FAIR Principles. 2020. Available online: FAIR Principles (accessed on 16 May 2020).

- Association of European Research Libraries (A. o. E. R. Libraries). Implementing FAIR Data Principles: The Role of Libraries. 2017. Available online: https://libereurope.eu/wp-content/uploads/2017/12/LIBER-FAIR-Data.pdf (accessed on 20 April 2020).

- Commission, E. Guidelines on FAIR Data Management in Horizon 2020. Available online: http://ec.europa.eu/research/participants/data/ref/h2020/grants_manual/hi/oa_pilot/h2020-hi-oa-data-mgt_en.pdf (accessed on 22 April 2020).

- Gartner. Data Governance. 2021. Available online: https://www.gartner.com/en/information-technology/glossary/data-governance (accessed on 18 April 2021).

- Talend. What is Data Governance. 2021. Available online: https://www.talend.com/resources/what-is-data-governance/ (accessed on 19 March 2021).

- European Data Portal. 2020. Available online: https://www.europeandataportal.eu/en/training/what-open-data (accessed on 22 April 2020).

- European Legislation on Open Data and the Re-use of Public. 2020. Available online: https://ec.europa.eu/digital-single-market/en/european-legislation-reuse-public-sector-information (accessed on 15 April 2020).

- Link, G.J.; Lumbard, K.; Conboy, K.; Feldman, M.; Feller, J.; George, J.; Germonprez, M.; Goggins, S.; Jeske, D.; Kiely, G.; et al. Contemporary Issues of Open Data in Information Systems Research: Considerations and Recommendations. Commun. Assoc. Inf. Syst. 2017, 41.

- Mohan, S. Building a Comprehensive Data Governance Program. Available online: https://www.gartner.com/en/documents/3956689/building-a-comprehensive-data-governance-program (accessed on 27 August 2019).

- Calancea, C.G.; Alboaie, L.; Panu, A. A SwarmESB Based Architecture for an European Healthcare Insurance System in Compliance with GDPR. In International Conference on Parallel and Distributed Computing: Applications and Technologies; PDCAT 2018. In Communications in Computer and Information Science; Springer: Singapore, 2018; p. 931.

- Cowan, D.; Alencar, P.; McGarry, F. Perspectives on Open Data: Issues and Opportunities. In Proceedings of the 2014 IEEE International Conference on Software Science, Technology and Engineering, Ramat Gan, Israel, 11–12 June 2014.

- Fair, R.T. FAIR Data Point Specification. 2020. Available online: https://github.com/FAIRDataTeam/FAIRDataPoint-Spec (accessed on 18 May 2020).

- Fair, R.T. FAIR Data Point Metadata Specification. 2020. Available online: https://github.com/FAIRDataTeam/FAIRDataPoint-Spec/blob/master/spec.md (accessed on 18 May 2020).

- W3C. “WebAccessControl”. 2016. Available online: https://www.w3.org/wiki/WebAccessControl (accessed on 19 March 2021).

- PrivateSky Project. 2020. Available online: https://profs.info.uaic.ro/~ads/PrivateSky/ (accessed on 18 April 2021).

- Alboaie, S.; Ursache, N.C.; Alboaie, L. Self-Sovereign Applications: Return control of data back to people. In Proceedings of the 24th International Conference on Knowledge-Based and Intelligent Information & Engineering Systems, Verona, Italy, 16–18 September 2020.

- Alboaie, S.; Alboaie, L.; Zeev, P.; Adrian, I. Secret Smart Contracts in Hierarchical Blockchains. In Proceedings of the 28th International Conference on Information Systems Development (ISD2019), Toulon, France, 28–30 August 2019.

- Angular. 2020. Available online: https://angular.io (accessed on 15 May 2020).

- Apache Jena. 2020. Available online: https://jena.apache.org (accessed on 16 May 2020).

- Apache Jena Fuseki. 2020. Available online: https://jena.apache.org/documentation/fuseki2/index.html (accessed on 16 May 2020).

- Docker. 2020. Available online: https://www.docker.com/ (accessed on 20 May 2020).

- Alboaie, L.; Alboaie, S.; Panu, A. Swarm Communication—A Messaging Pattern Proposal for Dynamic Scalability in Cloud. In Proceedings of the 2013 IEEE 10th International Conference on High Performance Computing and Communications & 2013 IEEE International Conference on Embedded and Ubiquitous Computing, Zhangjiajie, China, 13–15 November 2013.

- Alboaie, L. Towards a Smart Society through Personal Assistants Employing Executable Choreographies. In Proceedings of the Information Systems Development: Advances in Methods, Tools and Management (ISD2017 Proceedings), Larnaca, Cyprus, 6–8 September 2017.

- Voshmgir, S. Token Economy: How Blockchains and Smart Contracts Revolutionize the Economy; BlockchainHub: Berlin, Germany, 27 June 2019; ISBN/EAN: 9783982103822.

- PrivateSky. PrivateSky EDFS Explained. 2020. Available online: https://privatesky.xyz/?API/edfs/overview (accessed on 18 April 2021).

- PrivateSky. What is Swarm Communication? 2020. Available online: https://privatesky.xyz/?Overview/swarms-explained (accessed on 18 April 2021).

- PrivateSky. PrivateSky Secret Smart Contracts. 2020. Available online: https://privatesky.xyz/?Overview/Blockchain/secret-smart-contracts (accessed on 18 April 2021).

- PrivateSky. PrivateSky Architecture. 2020. Available online: https://privatesky.xyz/?Overview/architecture (accessed on 18 April 2021).

- PrivateSky. PrivateSky Interactions. 2020. Available online: https://privatesky.xyz/?API/interactions (accessed on 18 April 2021).

- Calancea, C.; Miluț, C.; Alboaie, L.; Iftene, A. iAssistMe—Adaptable Assistant for Persons with Eye Disabilities. In Proceedings of the Knowledge-Based and Intelligent Information & Engineering Systems: Proceedings of the 23rd International Conference KES2019, Budapest, Hungary, 4–6 September 2019.

- DataShareFair Source Code. 2020. Available online: https://bitbucket.org/meoweh/datasharefair/src/master/ (accessed on 15 July 2020).

- VAT. Identification Numbers. 2020. Available online: https://ec.europa.eu/taxation_customs/business/vat/eu-vat-rules-topic/vat-identification-numbers_en (accessed on 10 April 2020).

- DBpedia. 2020. Available online: https://wiki.dbpedia.org (accessed on 15 July 2020).

- Wikidata. 2020. Available online: https://www.wikidata.org/wiki/Wikidata:Main_Page (accessed on 15 July 2020).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).