Abstract

In this research, we study the convex minimization problem given by the sum of two proper, lower-semicontinuous, convex functions. We introduce a new projected forward-backward algorithm that uses linesearch and inertial techniques, and we establish a weak convergence theorem under mild conditions. Image processing problems such as inpainting can be modeled as the constrained minimization of a sum of convex functions, so we apply the proposed method to image inpainting. We also compare it with other methods in the literature; the proposed algorithm outperforms them in terms of the number of iterations. Finally, we analyze the parameters that are assumed in our hypotheses.

1. Introduction

Let H be a real Hilbert space. The unconstrained minimization problem of the sum of two convex functions is modeled in the following form:

$$\min_{x \in H} \; f(x) + g(x), \tag{1}$$

where $f$ and $g : H \to \mathbb{R} \cup \{+\infty\}$ are proper, lower semi-continuous, and convex functions. If f is differentiable on H, we know that problem (1) can be described by the fixed point equation, that is,

$$x = \mathrm{prox}_{\alpha g}\big(x - \alpha \nabla f(x)\big), \tag{2}$$

where $\alpha > 0$ and $\mathrm{prox}_{g}$ is the proximal operator of g, i.e., $\mathrm{prox}_{g} = (\mathrm{Id} + \partial g)^{-1}$, where $\mathrm{Id}$ is the identity operator in H and $\partial g$ is the subdifferential of g. Therefore, the forward-backward algorithm was defined in the following manner:

$$x_{k+1} = \mathrm{prox}_{\alpha_k g}\big(x_k - \alpha_k \nabla f(x_k)\big), \tag{3}$$

where $\alpha_k > 0$ is the step size. Related work on the forward-backward method for convex optimization problems can be found in [1,2,3,4,5,6]. This method covers the gradient method [7,8,9] and the proximal algorithm [10,11,12]. Combettes and Wajs [13] introduced a relaxed forward-backward method (Algorithm 1).

Bello Cruz and Nghia [14] suggested a forward-backward method using a linesearch approach (Algorithm 2), which does not need the Lipschitz constant in its implementation.

It was shown that the generated sequence $(x_k)$ converges weakly to a minimizer of $f + g$.
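To make the linesearch idea concrete, here is a minimal sketch in Python of a forward-backward iteration with a backtracking step size. It is an illustration under stated assumptions, not the exact scheme of [14]: the acceptance test, the parameter names `sigma`, `shrink` and `delta`, and the diagonal toy problem are all chosen here for illustration. The step size is shrunk until $\alpha \|\nabla f(J) - \nabla f(x)\| \le \delta \|J - x\|$, where $J$ is the trial forward-backward point, so no Lipschitz constant is supplied.

```python
import math

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1, applied componentwise."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]

def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))

def fb_linesearch(grad_f, prox_g, x0, sigma=1.0, shrink=0.5, delta=0.4, iters=500):
    """Forward-backward iteration with a backtracking step size (illustrative)."""
    x = list(x0)
    for _ in range(iters):
        gx = grad_f(x)
        alpha = sigma
        while True:
            # Trial forward-backward point for the current step size.
            trial = prox_g([xi - alpha * gi for xi, gi in zip(x, gx)], alpha)
            g_diff = [a - b for a, b in zip(grad_f(trial), gx)]
            step = [a - b for a, b in zip(trial, x)]
            if alpha * norm(g_diff) <= delta * norm(step):
                break
            alpha *= shrink  # reject the step size and try a smaller one
        x = trial
    return x

# Hypothetical toy problem: f(x) = 0.5 * sum((d_i x_i - b_i)^2), g = lam * ||x||_1.
d = [2.0, 1.0, 3.0]
b = [3.0, 0.2, -6.0]
lam = 0.5
grad_f = lambda x: [di * (di * xi - bi) for di, xi, bi in zip(d, x, b)]
prox_g = lambda v, alpha: soft_threshold(v, alpha * lam)

x_hat = fb_linesearch(grad_f, prox_g, [0.0, 0.0, 0.0])
# Closed-form minimizer of this separable problem, used only as a check.
x_star = [s / (di * di)
          for s, di in zip(soft_threshold([bi * di for bi, di in zip(b, d)], lam), d)]
```

Because the toy problem is separable, its minimizer is available in closed form, which makes it easy to confirm that the iterates approach it.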

Now, inspired by Bello Cruz and Nghia [14], we suggest a new projected forward-backward algorithm for solving the constrained convex minimization problem, which is modeled as follows:

$$\min_{x \in \Omega} \; f(x) + g(x), \tag{4}$$

where $\Omega$ is a nonempty closed convex subset of H, and f and g are convex functions on H with f differentiable on H. We denote by $S^*$ the solution set of (4).

To obtain a faster convergence rate, Polyak [15] introduced the heavy ball method for solving smooth convex minimization problems. In the case $g = 0$, Nesterov [16] modified the heavy ball method as follows.

In this work, motivated by Algorithm 1 [13], Algorithm 2 [14], Algorithm 3 [16] and Algorithm 4 [17], we design a new projected forward-backward algorithm for solving the constrained convex minimization problem (4) and establish its convergence theorem. We also apply our method to image inpainting and provide comparisons and numerical results. Finally, we show the effect of each parameter in the proposed algorithm.

| Algorithm 1 Ref. [13] Let and For , define |

| Algorithm 2 Let and . Let . For define |

| Algorithm 3 Let and . For define |

In 2003, Moudafi and Oliny [17] suggested the inertial forward-backward splitting as follows:

| Algorithm 4 Let and . For define |
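The inertial idea can be sketched in a few lines of Python. This is a minimal illustration, assuming the update $x_{k+1} = \mathrm{prox}_{\alpha g}\big(x_k + \theta (x_k - x_{k-1}) - \alpha \nabla f(x_k)\big)$ with a constant step size `alpha` and a constant inertial weight `theta`; the exact parameter conditions of Algorithm 4 are not reproduced here, and the toy problem is hypothetical.

```python
import math

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1, applied componentwise."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]

def inertial_fb(grad_f, prox_g, x0, alpha, theta, iters=300):
    """Forward-backward step with an added inertial (momentum) term."""
    x_prev = list(x0)
    x = list(x0)
    for _ in range(iters):
        gx = grad_f(x)
        # Forward step from x_k, shifted by the inertial term theta * (x_k - x_{k-1}).
        v = [xi + theta * (xi - pi) - alpha * gi
             for xi, pi, gi in zip(x, x_prev, gx)]
        x_prev, x = x, prox_g(v, alpha)
    return x

# Hypothetical toy problem: f(x) = 0.5 * ||x - b||^2, g = lam * ||x||_1.
b = [2.0, -0.3, 1.0]
lam = 0.5
grad_f = lambda x: [xi - bi for xi, bi in zip(x, b)]
prox_g = lambda v, alpha: soft_threshold(v, alpha * lam)

x_inertial = inertial_fb(grad_f, prox_g, [0.0, 0.0, 0.0], alpha=0.5, theta=0.3)
x_expected = soft_threshold(b, lam)  # closed-form minimizer of the toy problem
```

Setting `theta = 0` recovers the plain forward-backward iteration, which is a convenient way to compare the two on the same problem.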

This paper is organized as follows: In Section 2, we recall some preliminaries and mathematical tools. In Section 3, we prove the weak convergence theorem of the proposed method. In Section 4, we provide numerical experiments in image inpainting to validate the convergence theorem and, finally, in Section 5, we give the conclusions of this paper.

2. Preliminaries

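Let us review some important definitions and lemmas for proving the convergence theorem. Let H be a real Hilbert space with inner product $\langle \cdot, \cdot \rangle$ and norm $\|\cdot\|$. Let $h : H \to \mathbb{R} \cup \{+\infty\}$ be a proper, lower semicontinuous (l.s.c.), and convex function. The domain of h is defined by $\mathrm{dom}\, h = \{u \in H : h(u) < +\infty\}$. For any $u \in H$, we know that the orthogonal projection of u onto a nonempty, closed and convex subset C of H is defined by

$$P_C(u) = \arg\min_{v \in C} \|u - v\|^2.$$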

Lemma 1

([21]). Let C be a nonempty, closed and convex subset of a real Hilbert space H. Then, for any , we have

- (i)

- for all;

- (ii)

- for all;

- (iii)

- for all.
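As a numerical illustration of how such projection properties can be checked, the sketch below tests the standard variational characterization $\langle u - P_C u, v - P_C u \rangle \le 0$ for all $v \in C$ on two sets with closed-form projections. It assumes this characterization is among the properties intended in Lemma 1; the sets, the sampling scheme, and all function names are hypothetical choices made here.

```python
import math
import random

def project_box(u, lo, hi):
    """Projection onto the box [lo, hi]^n (componentwise clipping)."""
    return [min(max(ui, lo), hi) for ui in u]

def project_ball(u, radius):
    """Projection onto the closed ball of the given radius centered at 0."""
    n = math.sqrt(sum(ui * ui for ui in u))
    return list(u) if n <= radius else [radius * ui / n for ui in u]

def inner(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def worst_violation(project, trials=1000, dim=3, seed=0):
    """Largest observed value of <u - P(u), v - P(u)> over random u and v in C."""
    rng = random.Random(seed)
    worst = -math.inf
    for _ in range(trials):
        u = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        # Projecting a random point is a simple way to obtain a point of C.
        v = project([rng.uniform(-5.0, 5.0) for _ in range(dim)])
        pu = project(u)
        value = inner([a - c for a, c in zip(u, pu)],
                      [a - c for a, c in zip(v, pu)])
        worst = max(worst, value)
    return worst

box_gap = worst_violation(lambda u: project_box(u, -1.0, 1.0))
ball_gap = worst_violation(lambda u: project_ball(u, 2.0))
```

Both values should be nonpositive up to rounding error, which is what the characterization predicts.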

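The directional derivative of h at u in the direction d is

$$h'(u; d) = \lim_{t \to 0^+} \frac{h(u + t d) - h(u)}{t}.$$

Definition 1.

The subdifferential of h at u is defined by

$$\partial h(u) = \{ v \in H : \langle v, x - u \rangle \le h(x) - h(u) \ \text{for all } x \in H \}.$$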

It is known that is maximal monotone and if h is differentiable, then is the gradient of h denoted by . Moreover, is monotone, that is, for all . From (4), we also know that

where and .

From [14], we know that

Lemma 2

([22]). $\partial h$ is demiclosed, i.e., if the sequence $(u_k, a_k)$ with $a_k \in \partial h(u_k)$ satisfies that $(u_k)$ converges weakly to u and $(a_k)$ converges strongly to a, then $a \in \partial h(u)$.

Lemma 3.

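Let $(a_k)$, $(b_k)$ and $(c_k)$ be real positive sequences such that

$$a_{k+1} \le (1 + c_k)\, a_k + b_k, \quad k \ge 1.$$

If $\sum_{k=1}^{\infty} c_k < +\infty$ and $\sum_{k=1}^{\infty} b_k < +\infty$, then $\lim_{k \to \infty} a_k$ exists.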

Lemma 4

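([23]). Let $(a_k)$ and $(\theta_k)$ be real positive sequences such that

$$a_{k+1} \le (1 + \theta_k)\, a_k + \theta_k\, a_{k-1}, \quad k \ge 1.$$

Then, $a_{k+1} \le K \prod_{j=1}^{k} (1 + 2\theta_j)$, where $K = \max\{a_1, a_2\}$. Moreover, if $\sum_{k=1}^{\infty} \theta_k < +\infty$, then $(a_k)$ is bounded.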

Definition 2.

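Let S be a nonempty subset of H. A sequence $(x_k)$ in H is said to be quasi-Fejér convergent to S if and only if for all $x \in S$ there exists a positive sequence $(\varepsilon_k)$ such that $\sum_{k=1}^{\infty} \varepsilon_k < +\infty$ and $\|x_{k+1} - x\|^2 \le \|x_k - x\|^2 + \varepsilon_k$ for all $k \ge 1$. When $(\varepsilon_k)$ is a null sequence, we say that $(x_k)$ is Fejér convergent to S.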

Lemma 5

([21,24]). If $(x_k)$ is quasi-Fejér convergent to S, then we have:

- (i) $(x_k)$ is bounded.

- (ii) If all weak accumulation points of $(x_k)$ are in S, then $(x_k)$ weakly converges to a point in S.

3. Results

In this section, we suggest a new projected forward-backward algorithm and establish the weak convergence. The following conditions are assumed:

- (A1) $f, g : H \to \mathbb{R} \cup \{+\infty\}$ are proper, l.s.c., convex functions on H, and f is differentiable on H.

- (A2) $\nabla f$ is uniformly continuous on bounded subsets of H and is bounded on any bounded subset of H.

Next, we prove the weak convergence theorem for the proposed algorithm.

Theorem 1.

Letbe defined by Algorithm 5. Suppose. Then,weakly converges to a point in.

| Algorithm 5 Let be a nonempty closed convex subset of H. Given , , and . Let and define |

Proof.

Let be a solution in . Thus, we obtain

By the definition of proximal operator and , we have

By the convexity of g, we get

The convexity of f gives

This yields

Since , it follows that

Since f is convex, we have . This implies that

Setting , we obtain

Hence,

This shows that . By Lemma 4, we have

where . Since , is bounded. Thus, . By Lemma 3, therefore exists.

Next, we consider

This gives

Since and exists, it follows that and . It is easily seen that and hence . On the other hand, we see that

It follows that is Fejér convergent to . Thus, we have

where . Since is bounded, the set of weak accumulation points is nonempty. Take any weak accumulation point of . Thus, there is a subsequence of weakly converging to . Moreover, also weakly converges to . Since is bounded and , from , we obtain

By passing in (19), we get from (18) and Lemma 2 that . Thus, By Lemma 5 (ii), we conclude that weakly converges to a point in . □

4. Numerical Experiments

In this section, we aim to apply our result for solving an image inpainting problem which is of the following mathematical model:

where , A is a linear map that selects a subset of the entries of an matrix by setting each unknown entry in the matrix to 0, u is matrix of known entries , and is regularization parameter.

In particular, we investigate the image inpainting problem [25,26]:

where is the Frobenius matrix norm, and is the nuclear matrix norm. Here, we define as follows:

The optimization problem (21) relates to (4). In fact, let and . Then, is 1-Lipschitz continuous. Moreover, is obtained by the singular value decomposition (SVD) [27].
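For reference, the SVD-based evaluation of the proximal operator of the nuclear norm (singular value thresholding, see [27]) can be written out explicitly. The symbols $\mu$ for the regularization parameter and $\alpha$ for the step size are assumptions made here for illustration:

```latex
X = U \,\mathrm{diag}(\sigma_1, \dots, \sigma_r)\, V^{\top}
\;\Longrightarrow\;
\mathrm{prox}_{\alpha \mu \|\cdot\|_*}(X)
  = U \,\mathrm{diag}\big(\max\{\sigma_i - \alpha\mu,\, 0\}\big)\, V^{\top}.
```

For example, if $X = \mathrm{diag}(3, 1)$ and $\alpha\mu = 2$, the singular values 3 and 1 are shrunk to 1 and 0, so the result is $\mathrm{diag}(1, 0)$, a matrix of lower rank. This is why the nuclear norm term promotes low-rank reconstructions.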

From Algorithm 5, we obtain the Algorithm 6 for image inpainting.

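To measure the quality of images, we consider the signal-to-noise ratio (SNR) and the structural similarity index (SSIM) [28], which are given by

$$\mathrm{SNR} = 20 \log_{10} \frac{\|u\|_F}{\|u - u_r\|_F}$$

and

$$\mathrm{SSIM} = \frac{(2\mu_u \mu_{u_r} + c_1)(2\sigma_{u u_r} + c_2)}{(\mu_u^2 + \mu_{u_r}^2 + c_1)(\sigma_u^2 + \sigma_{u_r}^2 + c_2)},$$

where u is the original image, $u_r$ is the restored image, $\mu_u$ and $\mu_{u_r}$ are the mean values of the original image u and restored image $u_r$, respectively, $\sigma_u^2$ and $\sigma_{u_r}^2$ are the variances, $\sigma_{u u_r}$ is the covariance of the two images, $c_1 = (K_1 L)^2$ and $c_2 = (K_2 L)^2$ with small positive constants $K_1$ and $K_2$, and L is the dynamic range of pixel values. SSIM ranges from 0 to 1, and 1 means perfect recovery. Next, we analyze the convergence of Algorithm 5, including the effects of its parameters. We now present the corresponding numerical results (Iter denotes the number of iterations and CPU denotes the CPU time).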
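The SNR measure is simple to compute. The following minimal Python sketch assumes the common definition $\mathrm{SNR} = 20 \log_{10}(\|u\|_F / \|u - u_r\|_F)$ in decibels, with images stored as lists of rows; the function names are hypothetical and the tiny arrays are made-up values, not data from the experiments.

```python
import math

def frobenius(a):
    """Frobenius norm of a matrix given as a list of rows."""
    return math.sqrt(sum(v * v for row in a for v in row))

def snr_db(u, u_r):
    """Signal-to-noise ratio (in dB) of the restored image u_r against u."""
    diff = [[x - y for x, y in zip(row_u, row_r)] for row_u, row_r in zip(u, u_r)]
    err = frobenius(diff)
    if err == 0.0:
        return math.inf  # perfect recovery
    return 20.0 * math.log10(frobenius(u) / err)

# Made-up 1 x 2 example: ||u||_F = 5 and ||u - u_r||_F = 0.5, so the ratio is 10.
example_snr = snr_db([[3.0, 4.0]], [[3.0, 4.5]])
```

A tenfold ratio between the signal norm and the error norm corresponds to 20 dB, so larger values indicate a restored image closer to the original.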

First, we investigate the effect . Set parameters as follows:

where is a sequence defined by and .

| Algorithm 6 Forward-backward algorithm for image inpainting. |

| Step 1: Input and . |

| Step 2: Compute

|

| Step 3: (Linesearch rule) Set |

| While |

| End while. |

| Step 4: Compute

|

| and |

| Set go to Step 2. |
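The overall loop structure (inertial step, linesearch, forward-backward step, projection) can be sketched on a small vector problem. This is a hedged illustration only: the precise update formulas of Algorithms 5 and 6 are not reproduced, and the order of the projection, the linesearch test, the summable inertial weights `inertia(k)`, and the toy problem with a box constraint are all assumptions made here.

```python
import math

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1, applied componentwise."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]

def project_box(v, lo, hi):
    """Projection onto the box [lo, hi]^n."""
    return [min(max(vi, lo), hi) for vi in v]

def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))

def inertial_projected_fb(grad_f, prox_g, project, x0, inertia,
                          sigma=1.0, shrink=0.5, delta=0.4, iters=300):
    x_prev, x = list(x0), list(x0)
    for k in range(1, iters + 1):
        # Inertial extrapolation, projected back onto the constraint set (assumed form).
        y = project([xi + inertia(k) * (xi - pi) for xi, pi in zip(x, x_prev)])
        gy = grad_f(y)
        # Backtracking linesearch: shrink alpha until the acceptance test holds.
        alpha = sigma
        while True:
            z = prox_g([yi - alpha * gi for yi, gi in zip(y, gy)], alpha)
            g_diff = [a - c for a, c in zip(grad_f(z), gy)]
            step = [a - c for a, c in zip(z, y)]
            if alpha * norm(g_diff) <= delta * norm(step):
                break
            alpha *= shrink
        # Project the forward-backward point to stay feasible (assumed form).
        x_prev, x = x, project(z)
    return x

# Hypothetical toy problem on the box [0, 1]^3.
b = [2.0, -0.3, 1.0]
lam = 0.5
grad_f = lambda x: [xi - bi for xi, bi in zip(x, b)]
prox_g = lambda v, alpha: soft_threshold(v, alpha * lam)
project = lambda v: project_box(v, 0.0, 1.0)
inertia = lambda k: 0.5 / (k + 1) ** 2  # summable inertial weights (assumption)

x_box = inertial_projected_fb(grad_f, prox_g, project, [0.0, 0.0, 0.0], inertia)
```

For this separable toy problem the constrained minimizer is the clipped soft-thresholded vector `[1.0, 0.0, 0.5]`, which gives a simple sanity check. For image inpainting, the vector operations would be replaced by matrix operations and the soft-thresholding by the SVD-based proximal step.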

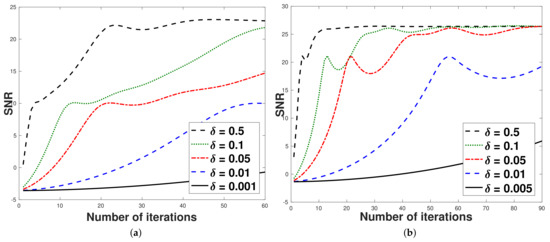

From Table 1, we observe that SNR and SSIM of Algorithm 5 have been getting larger when the parameter approaches . Moreover, the CPU of Algorithm 5 is decreasing when tends to .

Table 1.

The convergence results of Algorithm 5 for each .

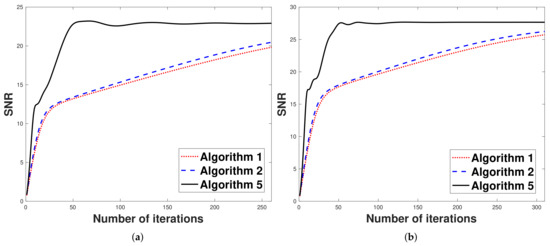

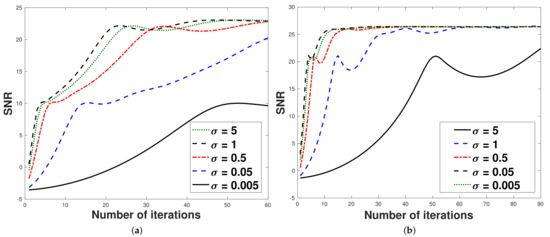

Figure 1, Figure 2, Figure 3 and Figure 4 show the SNR and the reconstructed images for each case of dimensions.

Figure 1.

Graph of the number of iterations and SNR for the parameter . (a) Number of iterations and SNR with N = 227, M = 340; (b) number of iterations and SNR with N = 480, M = 360.

Figure 2.

Graph of number of iterations and SNR for the parameter . (a) Number of iterations and SNR with N = 227, M = 340; (b) number of iterations and SNR with N = 480, M = 360.

Figure 3.

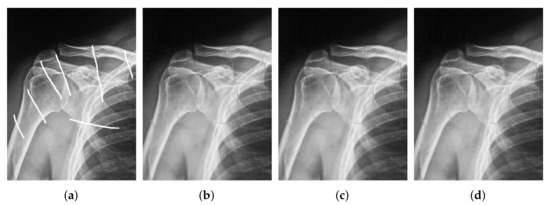

The painted image and restored images with the real image N = 227, M = 340. (a) The painted image; (b) restored images in Table 1 for (SNR = 22.8626, SSIM = 0.9476); (c) restored images in Table 2 for (SNR = 23.0594, SSIM = 0.9479); (d) restored images in Table 3 for (SNR = 22.9865, SSIM = 0.9477).

Figure 4.

The painted image and restored images with the real image N = 480, M = 360. (a) The painted image; (b) restored images in Table 1 for (SNR = 26.3994, SSIM = 0.9210); (c) restored images in Table 2 for (SNR = 26.4002, SSIM = 0.9210); (d) restored images in Table 3 for (SNR = 26.4084, SSIM = 0.9210).

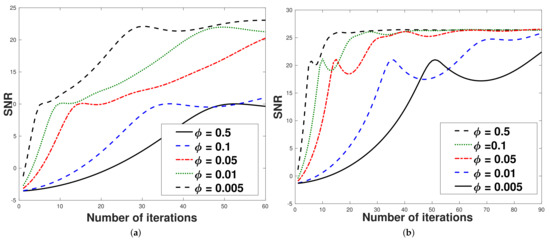

Next, we discuss the effect of . The numerical experiments are given in Table 2.

Table 2.

The convergence results of Algorithm 5 for each .

From Table 2, we observe that SNR, SSIM, and CPU time of Algorithm 5 have been getting larger when the parameter approaches .

Next, we discuss the effect . The numerical experiments are given in Table 3. From Table 3, we observe that SNR, SSIM, and the CPU time of Algorithm 5 have been getting larger if increases. The SNR and the reconstructed images are shown in Figure 3, Figure 4 and Figure 5.

Table 3.

The convergence results of Algorithm 5 for each .

Figure 5.

Graph of the number of iterations and SNR for the parameter . (a) Number of iterations and SNR with N = 227, M = 340; (b) number of iterations and SNR with N = 480, M = 360.

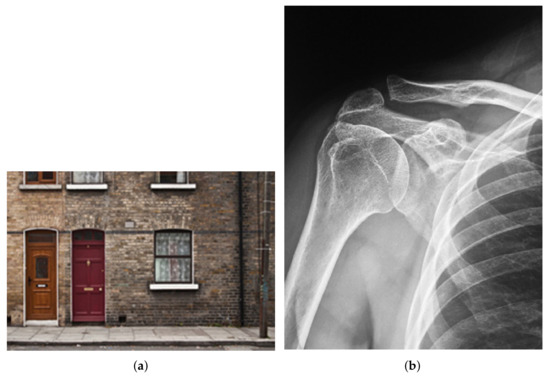

The original images are shown in Figure 6, and the input (painted) images and the reconstructed images are shown in Figure 3 and Figure 4.

Figure 6.

The original images. (a) The original image of size N = 227, M = 340; (b) the original image of size of N = 480, M = 360.

Now, we present the performance of Algorithms 5 and its comparison to the projected version of Algorithm 1 [13] and Algorithm 2 [14]. The initial point and are chosen to be zero and let and in Algorithm 1. Let , and be defined by (25) in Algorithms 2 and 5, respectively. The numerical results are shown in Table 4.

Table 4.

Computational results for solving (21).

From Table 4, we see that the experimental results of Algorithm 5 are better than those of Algorithms 1 and 2 in terms of SNR and SSIM in all cases.

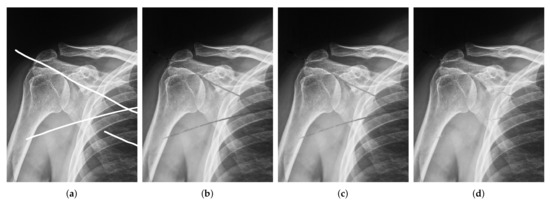

The inpainted images at the 260th and 310th iterations are shown in Figure 7, Figure 8 and Figure 9.

Figure 7.

The painted image and restored images. (a) The painted image; (b) image restored by Algorithm 1 (SNR = 19.8276, SSIM = 0.9278); (c) image restored by Algorithm 2 (SNR = 20.4704, SSIM = 0.9402); (d) image restored by Algorithm 5 (SNR = 22.9158, SSIM = 0.9477).

Figure 8.

The painted image and restored images. (a) The painted image; (b) image restored by Algorithm 1 (SNR = 25.7210, SSIM = 0.9363); (c) image restored by Algorithm 2 (SNR = 26.2362, SSIM = 0.9373); (d) image restored by Algorithm 5 (SNR = 27.6581, SSIM = 0.9400).

5. Conclusions

In this research, we investigated an inertial projected forward-backward algorithm using a linesearch for constrained minimization problems. Weak convergence was proved under suitable control conditions. The proposed algorithm does not need to compute the Lipschitz constant of the gradient. We applied our results to image inpainting and presented the effects of all parameters that are assumed in our method.

For our future research, we aim to find a new linesearch technique that does not require the Lipschitz continuity assumption on the gradient of the function. We note that the proposed algorithm depends on the computation of the projection, which is not easy to compute in some cases. It would be interesting to construct new algorithms that do not involve the projection.

Author Contributions

Funding acquisition and supervision, S.S.; writing—original draft preparation, K.K.; writing—review and editing and software, P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Chiang Mai University and Thailand Science Research and Innovation under the project IRN62W007.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank reviewers and the editor for valuable comments for improving the original manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bauschke, H.H.; Borwein, J.M. Dykstra’s alternating projection algorithm for two sets. J. Approx. Theory 1994, 79, 418–443.

- Chen, G.H.; Rockafellar, R.T. Convergence rates in forward–backward splitting. SIAM J. Optim. 1997, 7, 421–444.

- Cholamjiak, W.; Cholamjiak, P.; Suantai, S. An inertial forward–backward splitting method for solving inclusion problems in Hilbert spaces. J. Fixed Point Theory Appl. 2018, 20, 1–7.

- Dong, Q.; Jiang, D.; Cholamjiak, P.; Shehu, Y. A strong convergence result involving an inertial forward–backward algorithm for monotone inclusions. J. Fixed Point Theory Appl. 2017, 19, 3097–3118.

- Bauschke, H.H.; Burachik, R.S.; Combettes, P.L.; Elser, V.; Luke, D.R.; Wolkowicz, H. (Eds.) Fixed-Point Algorithms for Inverse Problems in Science and Engineering; Springer: Berlin/Heidelberg, Germany, 2011.

- Kankam, K.; Pholasa, N.; Cholamjiak, P. Hybrid Forward-Backward Algorithms Using Linesearch Rule for Minimization Problem. Thai J. Math. 2019, 17, 607–625.

- Dunn, J.C. Convexity, monotonicity, and gradient processes in Hilbert space. J. Math. Anal. Appl. 1976, 53, 145–158.

- Wang, C.; Xiu, N. Convergence of the gradient projection method for generalized convex minimization. Comput. Optim. Appl. 2000, 16, 111–120.

- Xu, H.K. Averaged mappings and the gradient-projection algorithm. J. Optim. Theory Appl. 2011, 150, 360–378.

- Güler, O. On the convergence of the proximal point algorithm for convex minimization. SIAM J. Control Optim. 1991, 29, 403–419.

- Martinet, B. Régularisation d’inéquations variationnelles par approximations successives. Rev. Française Informat. Rech. Opérationnelle 1970, 4, 154–158.

- Rockafellar, R.T. Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 1976, 14, 877–898.

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Bello Cruz, J.Y.; Nghia, T.T. On the convergence of the forward–backward splitting method with linesearches. Optim. Methods Softw. 2016, 31, 1209–1238.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–7.

- Nesterov, Y.E. A method for solving the convex programming problem with convergence rate O(1/k^2). Dokl. Akad. Nauk SSSR 1983, 269, 543–547.

- Moudafi, A.; Oliny, M. Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 2003, 155, 447–454.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Suantai, S.; Pholasa, N.; Cholamjiak, P. The modified inertial relaxed CQ algorithm for solving the split feasibility problems. J. Ind. Manag. Optim. 2018, 14, 1595.

- Shehu, Y.; Cholamjiak, P. Iterative method with inertial for variational inequalities in Hilbert spaces. Calcolo 2019, 56, 1–21.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: New York, NY, USA, 2011; Volume 408.

- Burachik, R.S.; Iusem, A.N. Enlargements of Monotone Operators. In Set-Valued Mappings and Enlargements of Monotone Operators; Springer: Boston, MA, USA, 2008; pp. 161–220.

- Hanjing, A.; Suantai, S. A fast image restoration algorithm based on a fixed point and optimization method. Mathematics 2020, 8, 378.

- Iusem, A.N.; Svaiter, B.F.; Teboulle, M. Entropy-like proximal methods in convex programming. Math. Oper. Res. 1994, 19, 790–814.

- Cui, F.; Tang, Y.; Yang, Y. An inertial three-operator splitting algorithm with applications to image inpainting. arXiv 2019, arXiv:1904.11684.

- Davis, D.; Yin, W. A three-operator splitting scheme and its optimization applications. Set-Valued Var. Anal. 2017, 25, 829–858.

- Cai, J.F.; Candès, E.J.; Shen, Z. A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 2010, 20, 1956–1982.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).