Abstract

A novel semi-supervised learning method is proposed to better utilize labeled and unlabeled samples to improve classification performance. However, there is exists the limitation that Laplace regularization in a semi-supervised extreme learning machine (SSELM) tends to lead to poor generalization ability and it ignores the role of labeled information. To solve the above problems, a Joint Regularized Semi-Supervised Extreme Learning Machine (JRSSELM) is proposed, which uses Hessian regularization instead of Laplace regularization and adds supervised information regularization. In order to solve the problem of slow convergence speed and the easy to fall into local optimum of marine predator algorithm (MPA), a multi-strategy marine predator algorithm (MSMPA) is proposed, which first uses a chaotic opposition learning strategy to generate high-quality initial population, then uses adaptive inertia weights and adaptive step control factor to improve the exploration, utilization, and convergence speed, and then uses neighborhood dimensional learning strategy to maintain population diversity. The parameters in JRSSELM are then optimized using MSMPA. The MSMPA-JRSSELM is applied to logging oil formation identification. The experimental results show that MSMPA shows obvious superiority and strong competitiveness in terms of convergence accuracy and convergence speed. Also, the classification performance of MSMPA-JRSSELM is better than other classification methods, and the practical application is remarkable.

1. Introduction

Swarm intelligence algorithms [1] mainly simulate the behavior of a group of animals in search of food in a cooperative manner, where each member of the group learns from his or her own experience and the experience of the whole group, and changes the direction of the prey search accordingly [2]. Swarm intelligence algorithms can effectively solve many complex and challenging optimization problems in the field of artificial intelligence [3], and are mainly applied to combinatorial optimization [4], feature selection [5], image processing [6], data mining [7], and other fields.

In recent years, new meta-heuristic algorithms have been proposed to mimic the natural behavior of birds, bees, fish, and other groups of organisms, including Particle Swarm Optimization (PSO) [8], Gray Wolf Optimization (GWO) [9], Moth Flame Optimization (MFO) [10], Seagull Optimization Algorithm (SOA) [11], Sine Cosine Algorithm (SCA) [12], Whale Optimization Algorithm (WOA) [13], Coyote Optimization Algorithm (COA) [14], Carnivorous Plant Algorithm (CPA) [15], Transient Search Algorithm (TSA) [16], and more. In 2020, Faramarzi et al. [17] proposed novel meta-heuristic algorithms that mimic marine hunting behavior, and they believe that the Lévy flight strategy and the Brownian motion strategy that balance the movement of marine organisms can effectively solve the optimization problem. Each hunting process is divided into three stages. The first stage is the high-speed movement of the prey; the predator keeps a low-speed movement, and the second stage high-speed movement is carried out for both the prey and the predator. In the third stage, the predator moves much faster than the prey. According to the vortex formation and the fish gathering device effect, it can prevent premature convergence.

Since MPA exhibits significant superiority in solving optimization problems, it is now used to solve both continuous and engineering optimization problems, exhibiting good performance. Elaziz et al. [18] proposed a hybrid MPA and MFO algorithm (MPAMFO) to solve the problem of MPA’s weak ability to avoid local optimality, replacing the local search method of MPA with MFO, effectively improving the development capability of MPA, and using it for the multi-level threshold segmentation (MLT) of images with excellent performance. Abdel et al. [19] proposed an improved marine predator algorithm (IMPA) that introduces a ranking-based diversity reduction (RDR) strategy to directly replace the position of continuously poorly adapted search agents with the best individual position, improving the performance of the MPA and speeding up the convergence. Naga et al. [20] proposed a hybrid MPA and an adaptive differential evolution algorithm based on success history, which improves the global search and local search capabilities of finding the optimal solution, and is used to solve the optimal parameter combination in the single diode model. To address the limitations of the MPA’s exploratory and development capabilities, Ridha [21] combined MPA with Lambert W-functions and applied this method to solve the problem of optimizing parameter combinations in single-diode and dual-diode PV models. Yousri et al. [22] proposed an MPA-based robust photovoltaic array reconfiguration strategy for solar PV arrays that need to ensure both maximum power under weak lighting conditions and no damage under intense heat conditions. Soliman et al. [23] proposed a phototransistor (TDPV) model for the problem of photovoltaic losses in photovoltaic power plants, but it has nine parameters and cannot be solved directly. They proposed using MPA to solve the optimal parameter distribution, and finally a new high-precision TDPV model was obtained. For the optimal reactive power dispatch (ORPD) of ground efficiency due to highly stochastic wind speed and solar illumination, Ebeed et al. [24] propose an optimal combination of parameters to solve the ORPD by MPA to ensure that the system’s resource waste is minimized. For the deployment and training of convolutional neural network (CNN), it is challenging to perform feature extraction quickly. Sahlol et al. [25] proposed a feature extraction method combining CNN and swarm intelligence algorithm; the combined fractional order algorithm and ocean predator algorithm (FO- MPA) is used to improve the optimization performance and convergence speed of MPA. The proposed feature extraction method not only has good performance but also reduces the computational complexity.

The classification methods that have been widely used are Random Forests (RF) [26], Support Vector Machines (SVM) [27], and Extreme Learning Machines (ELM) [28]. To address the shortcomings of the traditional missing data filling methods, Deng et al. [29] proposed an improved Random Forest filling algorithm, which combines linear interpolation, matrix combination, and matrix transformation to solve the filling problem of the large amount of missing data of electricity. To utilize the minimum number of trees for classification, Paul et al. [30] proposed an improved random forest classifier based on the number of important and unimportant features, which iteratively removes some unimportant features. To improve the protein structure prediction performance, Kalaiselvi et al. [31] introduced an improved random forest classification (WPC-IRFC) technique based on weighted Pearson correlation, which has higher accuracy and shorter time. In response to the fact that SVMs do not adequately consider the distinction between numerical and nominal attributes, Peng et al. [32] proposed a novel SVM algorithm for heterogeneous data learning, which embeds nominal attributes into the real space by minimizing the estimated generalization error. To address the lack and contamination of supervised information, Dong et al. [33] proposed a robust semi-supervised classification using a novel correlated entropy loss function and Laplace SVM (LapSVM).

Huang et al. [34] proposed a semi-supervised extreme learning machine (SSELM) based on manifold regularization and extended ELM to semi-supervised learning. On various data sets, when auxiliary unlabeled data is available, SSELM is always better than purely supervised learning algorithms such as Support Vector Machines (SVM) and ELM. To address the strong sensitivity of the classification performance of SSELM to the quality of popular graphs, She et al. [35] proposed a regularized SSELM based on balanced graphs, combining label consistency graph (LCG) and sample similarity graph (SSG), and then optimizing the weight ratio of these two graphs to obtain an optimal neighborhood graph. To address the shortcoming that SSELM cannot mine information from nonlinear data, Zhou et al. [36] proposed a semi-supervised extreme learning machine (LRR-SSELM) based on a low-rank representation, which introduces a nonlinear classifier and a low-rank representation (LRR), and the LRR can maintain the popular structure of the original data. To enhance the feature extraction and classification performance of SSELM, She et al. [37] proposed a new hierarchical semi-supervised extremal learning machine (HSSELM) that uses the HELM method for automatic feature extraction of deep structures and then uses SSELM for classification tasks. To address the problem that manifold graph are only pre-constructed before classification and not changed later, which leads to poor model performance robustness, Ma et al. [38] proposed an adaptive safety semi-supervised extreme learning machine, which allows the model to adaptively compute the safety of unlabeled samples and adaptively construct manifold graphs. To address the problem that SSELM is sensitive to outliers in labeled samples, Pei et al. [39] proposed a new robust SSELM that uses a nonconvex squared loss function to impose a constant penalty on outliers and mitigate their possible negative effects.

SSELM is based on the ELM with the addition of Laplace regularization, and mitigates the drawback that sample imbalance tends to lead to poor generalization performance by assigning weights to different classes [34]. SSELM greatly expands the practicality of the ELM algorithm, and also retains all the advantages of ELM, such as high training efficiency and the simple implementation of multi-class classification problems. Laplace regularization [40] utilizes the L2 norm of the function gradient, so when solving classification or regression problems, its minimization makes the optimal function close to the constant function, further destroying the local topology and inferred power between samples. Hessian regularization [41] utilizes the L2 norm of the Hessian generic function of a function whose minimization will make the optimal function close to a linear function, capable of adaptively changing the geodesic function with distance, with a more effective preservation of the local topology between samples as well as the ability to characterize the intrinsic local geometric properties. Hessian regularization shows superior performance over Laplace regularization in predicting data points outside the region boundaries.

Therefore, in this paper, a novel semi-supervised learning method is proposed to extract the hidden information of large amount of unlabeled data and to obtain an efficient classification method with only a small number of labeled samples for training. Since SSELM takes a Laplace regularization with poor inferential ability and does not further exploit label information, resulting in its limited classification performance and poor generalization, the Joint Regularized Semi-Supervised Extreme Learning Machine (JRSSELM) is proposed. Using Hessian regularization with better inferencing power and better local prevalence structure, a supervised information regularization term that further exploits the information of tagged samples is introduced. Since JRSSELM and SSELM maintain consistency in parameter selection, with input weights and hidden layer thresholds chosen randomly and not adjusted subsequently, this, along with the selection of a grid of hyperparameters, can lead to limited performance and poor generalization. A Multi-Strategy Marine Predator Algorithm is proposed to address the limitations of MPA development and exploration capabilities, as well as the slow convergence rate. First, to address the problem that the traditional random initialization of populations is prone to generate low-quality initial populations, the initialization of populations is based on chaotic tent mapping and opposing learning strategies to ensure the generation of high-quality initial populations. Secondly, to balance the global extensive search in the early stage and the local fine search in the later stage, adaptive inertial weights and adaptive step control factors are adopted, which effectively enhance the exploration and exploitation capability of the algorithm. Furthermore, as the number of iterations increases, individuals in the population tend to lose their diversity and it is difficult to avoid the local optimum, so the proposed neighbor dimensional learning strategy ensures the diversity of the population in each iteration.

In order to verify the superiority of MSMPA, it is compared with 7 well-known algorithms (MPA, PSO, GWO, WOA, MFO, SOA, and SCA) on 18 classic benchmark test functions and CEC2017 competition test functions. The experimental results indicate that MSMPA shows significant competitiveness, enhances global search and local search capabilities, and accelerates the convergence speed. MSMPA is proposed to optimize the JRSSELM model with respect to the parameters mentioned in JRSSELM. JRSSELM and MSMPA-JRSSELM are applied to logging oil layer identification. The results show that JRSSELM and MSMPA-JRSSELM are significantly better than other classification method in terms of performance, especially MSMPA-JRSSELM has higher classification accuracy and stability compared to other models while ensuring not overfitting.

The rest of the paper is structured as follows. Section 2 describes the introduction of the ocean predator algorithm and its improvements. Section 3 presents and analyzes the experimental results of MSMPA. Section 4 describes the Semi-Supervised Extreme Learning Machine and its improvements. Section 5 presents and analyzes the experimental results for oil logging applications. Section 6 summarizes the work of this paper and provides an outlook for the future. This is followed by the Abbreviations section and the Appendix A section.

2. Marine Predator Algorithm and Its Improvement

2.1. Basic Marine Predator Algorithm

2.1.1. Population Location Initialization

MPA is a novel meta-heuristic algorithm that mimics marine predation by adopting a randomized localization rule for the initial population, with the following mathematical expression:

where represents the coordinates of the j-th dimension of the i-th population, n is the number of populations, d is the dimension, ub and lb are the upper and lower boundaries of the search space, respectively, and r is a random number between [0, 1].

Based on the location of the search agent, a matrix of prey can be constructed, as follows:

Inspired by the law of the jungle of survival of the fittest, it is believed that the best hunters are gifted in predation, so the optimal individual is used as the ontology to replicate n − 1 identical predators, and these n hunters are formed into an elite matrix, as shown in the following equation:

where denotes the optimal individual vector. The search agent includes both predators and prey. As such, they are both searching for their food, and after each iteration, the elite position is updated based on adaptation.

2.1.2. Exploratory Phase of High-Speed Ratio

The standard Brownian motion [31] is a stochastic process for which the step is given by a probability function defined by the zero mean (μ = 0) and unit variance (σ2 = 1) of the normal (Gaussian) distribution. The control density function of the motion at point x is as follows:

At the beginning of an iteration, i.e., the period, where is the current iteration and the maximum number of iterations, the prey is frantically searching for food, while the predator adopts a no-movement strategy. Thus, this is the high-speed ratio case, where the prey movement trajectory proceeds as follows.

where is a vector of random numbers resulting from the normal distribution of Brownian motion, is an element-by-element multiplication, P is a step control factor of constant 0.5, and is a vector of random numbers between [0, 1]. The multiplicative simulates the high-speed movement of prey.

2.1.3. Mid-Speed Ratio Transition Phase

In the middle stage, , the predator takes the same rate of position update as the prey, and since exploration in the previous section has been going on for some time, this time transitions the behavior of half of the population to the exploitation stage. Obviously, both exploration and exploitation are equally important, and at this point, the prey is primarily responsible for exploitation, while the hunter is responsible for exploration. In this phase, then, the prey updates its position according to the Lévy movement, and the predator takes the Brownian movement.

Lévy flight is a special kind of random walking strategy in which the distribution of walking steps obeys a probability distribution of heavy-tailed features, called the Lévy distribution [42]. It can usually be approximated as a simple power function distribution , where , is the step length, and is the probability of a moving step s. In the algorithm of Mantegna et al. [43], the Lévy flight step can be defined as:

where u and v are random numbers that are normally distributed, i.e., .

For the first half of the population, the position is updated by the following formula:

where is a vector of random numbers generated by the Lévy flight step. It can be seen that the Lévy stride is very useful for development.

For the latter half of the population, the exploration behavior is still performed, and the simulated motion is updated by the following formula:

where CF is an adaptive parameter used to control the predator’s moving stride.

2.1.4. Low-Speed Ratio Development Phase

The later stages, i.e., , are also called the low ratio stages because they set the prey to move much slower than the hunter. The predator is eager to hunt prey, and the movement of the population is all exploitation, so the Lévy stride strategy is adopted and the position is updated as follows:

2.1.5. Eddy Current Formation and Fish Aggregation Device Effects (FADS)

In order to circumvent the local optimal solution, Faramazi et al., taking into account that other external environmental disturbances may affect the movement of the population to a greater or lesser extent, proposed the following mechanism of position updating based on the eddy current formation and the fish aggregating device effect (FADS), which is mathematically expressed as follows:

where FADS is specified to represent the probability of influencing the search process and is set equal to 0.2, is a vector consisting of the maximum value of the search boundary, is a vector consisting of the minimum value of the search boundary, and the subscripts r1 and r2 represent the random index of the predation matrix, which is a binary vector consisting of 0 and 1.

Because marine predators have a good memory, they are guaranteed to visit prey-rich areas after a successful hunt. Adaptation is calculated for the population after each iteration and the elite matrix is replaced if there is a better-adapted position, a process that ensures that the quality of the elite increases with the number of iterations.

2.2. Multi-Strategy Marine Predator Algorithm—MSMPA

2.2.1. Chaotic Opposition Learning Strategy

Chaotic mappings have been widely used in the optimization of intelligent algorithms due to their regularity, randomness, and traversability [44], but different chaotic mappings have a great influence on the chaotic optimization process [45]. The Tent mapping has good traversal uniformity and fast iterations and produces a uniform distribution of chaotic sequences between [0, 1]. The Tent mapping expression is as follows:

where is the number of chaos at the t-th iteration, T is the maximum number of iterations, and is a customizable parameter between (0, 1). Note that when , the system shows a short-period state, which is not suitable for population mapping, so is chosen in this experiment.

The resulting chaotic variable λ is used for the initial population generation according to the search boundary , as follows:

where is the j-th dimensional coordinate of the i-th search agent, and is the coordinate of the j-th dimension of .

Even if the chaotic sequence is capable of generating a diverse and well-distributed population, it is undeniable that there may be better search agents on opposing sides of the search space, and the same number of opposing populations will be generated again, as follows:

where is the opposing position of the i-th agent and is the position of the i-th individual.

Then, we merge them into a 2*n population, calculate the adaptation values for each individual, and rank them in order from smallest to largest, with the top n best-adapted individuals as the initial population and the best-adapted individuals as the parent of the Elite matrix.

2.2.2. Adaptive Inertial Weights and Step Control Factors

The inertia weights are inspired by the particle swarm algorithm, which plays a decisive role in the algorithm’s search ability and convergence speed. In the original MPA algorithm, the inertia weights are constant values, which constrain the algorithm’s global and local search capabilities [46].

For early iterations, large inertia weights enhance the global search capability; in later iterations, the algorithm needs to perform local fine search, so small inertia weights enhance the local search capability and speed up convergence [47].

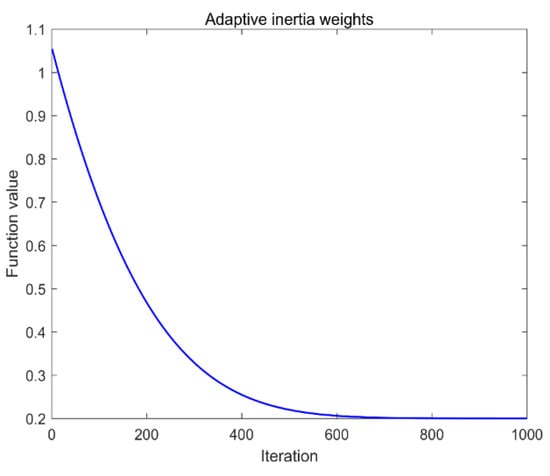

In the MPA search process, the choice of inertia weighting strategy is crucial, and thus a strategy is proposed that adaptively changes the inertia weights according to the number of iterations. In the early iterations, the inertia weights are large and decrease rapidly, which is focused on global search; in the later iterations, the inertia weights are small and decrease slowly, which is to be more detailed in the local fine search [48]. The adaptive inertia weights are expressed as follows:

where , b, and c are optional parameters. After the experimental analysis, , b, and c were set to 20, 12, and 0.2, respectively.

The adaptive inertia weight curves are shown in Figure 1.

Figure 1.

The plot of Adaptive Weights.

From Figure 1, it can be seen that after the introduction of adaptive inertial weights, the position of the prey will be updated by the following equation.

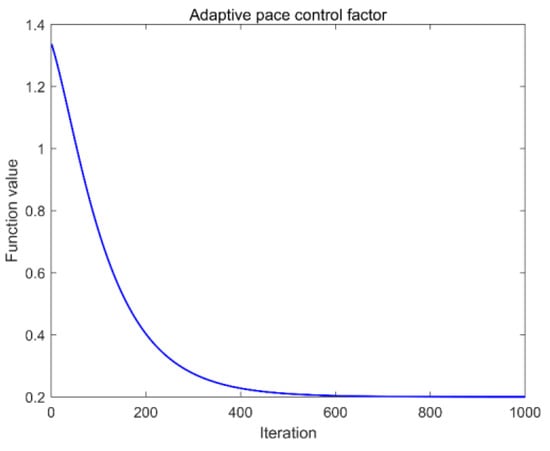

Also, the step length control is particularly important for MPA, in the early hope that the step length influence is as large as possible to make the prey better traversal search space; later, a small step length influence is needed to avoid falling into the local optimum, but also local fine search, while in the original MPA, the step length control factor is constant 0.5, which will limit the performance of the algorithm. Therefore, in this paper, an adaptive step length control factor is proposed, which not only ensures the global search demand of the early traversability but also enhances the local fine search capability at the later stage. The mathematical expression is as follows:

where m, n, p, and q are optional parameters. After experimental analysis, m, n, p, and q were set to be 1.2, 10, 2, and 0.2, respectively.

The curve of the adaptive step control factor is shown in Figure 2.

Figure 2.

The plot of the adaptive step control factor.

From Figure 2, it can be seen that the early values are large and rapidly decreasing, which enhances the influence of step size, i.e., strengthening the traversability of the global search, and the values are small and slowly decreasing in order to take into account the later local fine search.

2.2.3. Neighborhood Dimensional Learning Strategy (NDL)

The population diversity plays a key role in the convergence speed and accuracy of the algorithm. The population diversity of the original MPA decreases gradually with the iteration of the algorithm, which tends to lead to the local optimum and not the global optimum when solving high-dimensional complex problems [49]. Therefore, in order to ensure that the population diversity remains rich in each iteration, a Neighborhood Dimensional Learning strategy is proposed.

First, at the end of each iteration of the original MPA, a candidate population of the same size is generated for the original population, and it is taken to relocate the new population using either optimal individual information or information about itself, as follows:

where is the position vector of the i-th search agent, is the position vector of the i-th candidate individuals, and is the position vector of the elite individuals, and p is the random probability.

Second, based on the Euclidean distance between the current position and the candidate position , a neighborhood radius is computed by the following equation:

Then, according to , the Euclidean distance which is less than the radius search agent is successively selected from the population, and these individuals are saved as neighbors of the i-th individual. The mathematical expression is as follows:

where denotes the set of individual neighbors and denotes performing a European distance operation.

The next step in neighborhood dimension learning is to update the dimensional coordinates of the current individual using some dimensional information from the population of neighbors and the dimensional information of an individual randomly selected from the entire population, as expressed mathematically as follows:

where represents the d-dimensional information of the i-th search agent, represents the new d-dimensional information after passing the NDL, represents the d-dimensional information of the neighboring individuals, and represents the d-dimensional information of the randomly selected individuals.

Finally, the fitness of the candidate population and the NDL population is calculated to select the newer individuals, as shown by the following mathematical expression.

The pseudo-code of the proposed MSMPA is shown in Algorithm 1.

| Algorithm 1: Pseudo-code of the MSMPA |

| Input: Number of Search Agents: N, Dim, Max_Iter |

| Output: The optimum fitness value |

| Generate chaotic tent mapping sequences by the Equation (11) |

| Initialized populations by the Equation (12) |

| Generation of Opposing Populations by the Equation (13) |

| Selection of the first N well-adapted individuals as the first generation of the population |

| While Iter < Max_Iter |

| Calculating Adaptive Inertia Weights by the Equation (14) |

| Calculating Adaptive Step Control Factors by the Equation (17) |

| Calculate fitness values and construct an elite matrix |

| If Iter < Max_Iter/3 |

| Update prey by the Equation (15) |

| Else if Max_Iter/3 < Iter < 2∗Max_Iter/3 |

| For the first half of the populations (i = 1, …, n/2) |

| Update prey by the Equation (15) |

| For the other half of the populations (i = n/2, …, n) |

| Update prey by the Equation (16) |

| Else if Iter > 2∗Max_Iter/3 |

| Update prey by the Equation (16) |

| End If |

| Generation of candidate populations by the Equation (18) |

| Calculating Neighborhood Radius by the Equation (19) |

| Finding Neighborhood Populations by the Equation (20) |

| Calculation of NDL populations by the Equation (21) |

| Calculate fitness values to update population position by the Equation (22) |

| Updating Memory and Applying FADs effect and update by the Equation (10) |

| Calculate the fitness value of the population by the fitness function |

| Update the current optimum fitness value and the position of the best Search Agent |

| End while |

| Return the optimum fitness value |

3. Simulation Experiments and Comparative Analysis

3.1. Experimental Environment and Algorithm Parameters

In this section, in order to verify the superiority of the proposed MSMPA in solving the large-scale optimization problem, it is shown that the proposed MSMPA can be used to solve the large-scale optimization problem. Eighteen classical benchmarking functions [50] and 30 optimization functions from the CEC2017 competition [51] were selected and tested against seven popular algorithms (MPA [17], PSO [8], GWO [9], WOA [13], MFO [10], SOA [11], and SCA [12]). In order to ensure the fairness of the comparison between evolutionary and swarm intelligence algorithms, the number of iterations is chosen to be replaced by the number of function evaluations in this paper. The population size of the experiment is 30, the maximal number of function evaluation is 30,000 with 30 independent runs on each function. The experimental environment is Windows 10 64 bit, MATLAB R2016A, Intel(R) Core CPU (i5-10210U 2.1GHz), 16G RAM. To ensure the fairness of the comparison experiments, the parameter settings in the original literature are maintained in this paper for each competing algorithm. The specific parameter settings are shown in Table 1.

Table 1.

Parameter settings for each algorithm.

3.2. Benchmark Test Functions

The details of the 18 benchmark functions are shown in Table 2. Table 2 includes seven high-dimensional single-peak functions (F1–F7), six high-dimensional multi-peak functions (F8–F13), and five fixed-dimensional multi-peak functions (F14–F18). Since the high-dimensional single-peak function has only one peak, it is used to test the local convergence and convergence speed of the algorithm; the high-dimensional multipeak function and the fixed-dimensional multipeak function have multiple peaks and only one global optimum, so they are used to test the algorithm’s global optimum search and the ability to jump out of the local optimum.

Table 2.

Description of the 18 classical benchmarking functions.

To further test the performance of the algorithm, the CEC2017 Numerical Optimization Competition Suite functions were also selected, including 3 single-peak functions, 7 multi-peak functions, 10 mixed functions, and 10 composite functions. CEC2017 Numerical Optimization functions are shown in Table 3. The dimensionality of all functions is set to 10.

Table 3.

CEC2017 numerical optimization functions.

3.3. Experimental Results and Analysis

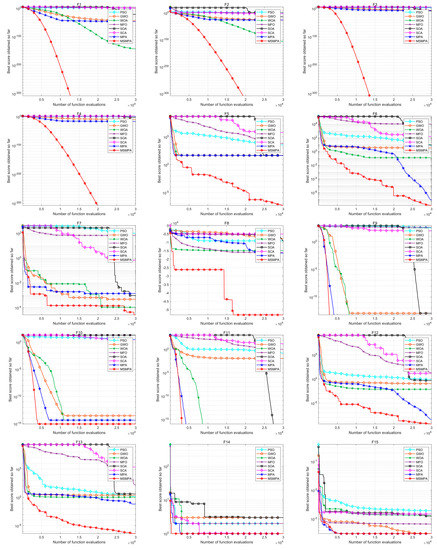

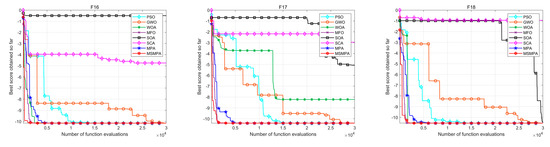

The convergence curve is a visual representation of the ability to evaluate the development and speed of convergence of the algorithm to find the best, and the convergence curves of MSMPA and seven other comparison algorithms on 18 benchmark functions are shown in Figure A1 in Appendix A. From Figure A1, we can see that the convergence speed of MSMPA on F1 to F4 is obviously faster than other algorithms and the end of the convergence curve is also significantly lower than other algorithms; the fastest convergence on F5 to F8, F12 and F13 is not the fastest, but the convergence accuracy of other algorithms is significantly worse than MSMPA; on F9 to F11, the convergence speed and convergence accuracy of MSMPA show significant superiority. MSMPA converges faster than the other algorithms on solid-dimensional multimodal functions, except on F15, where the convergence accuracy is poorer than that of MPA, and MSMPA exhibits significant competitiveness.

In this experiment, the average (Ave), standard deviation (Std), maximum (Max), and minimum (Min) are evaluated. The Friedman’s test [52], a popular statistical test for non-parametric tests, is also used to make it easier to detect differences in the performance of individual algorithms, to compare the average performance of each method across all experimental results, and to place the results at the end of the statistical table of experimental results.

The test results of the 18 benchmark functions are shown in Table A1 in Appendix A, where the bold data are the optimal values of all the methods in the same function performance index. From Table A1, it can be seen that MSMPA performs optimally on the high-dimensional single-peak functions, especially on F1~F4, where MSMPA achieves the theoretical best value. This demonstrates the superiority of MSMPA in local search capability. In the high-dimensional multimode function, MSMPA shows obvious superiority, except for the standard deviation on F8, where SCA is the best performer, MSMPA is the best performer in other performance indexes, especially on F9 and F11, where MSMPA achieves the theoretical global optimum. On the fixed-dimensional multimode functions, except for F15, which is second to MPA, MSMPA is the best performer on all other functions, especially on F16–F18, where MSMPA is optimized to the theoretical extremes and its standard deviation is zero. MSMPA is the first place in the average rank of all functions ranked by Friedman’s test.

The experimental results of CEC2017 are shown in Table A2 in Appendix A, in which the bold font in the table is the optimal value of the current function under the same evaluation index. From Table A2, it can be concluded that, in addition to the non-optimal performance on F16, F21, F22, F24, and F25, the best performance is achieved on the other functions, so the overall performance is optimal, and it is noteworthy that the theoretical extremes were achieved on CF2. The average ranking of MSMPA in the Friedman test is 1.6167, which is still the best performance, followed by MPA.

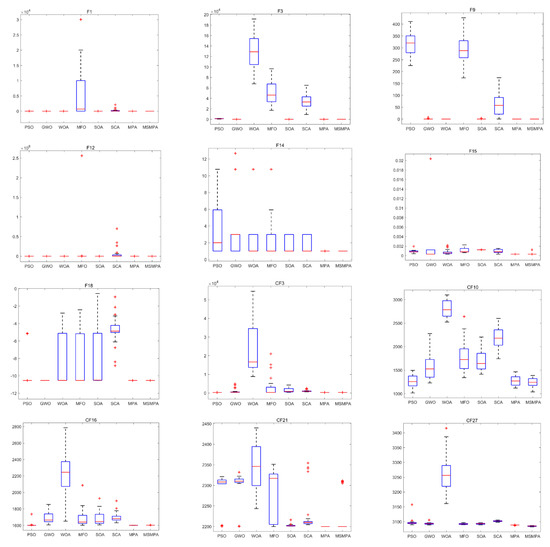

3.4. Algorithmic Stability Analysis

In order to show the stability of MSMPA and other algorithms more visually, this experiment uses a box line diagram to show the distribution of the results of each function after 30 independent tests. The numerical distribution of some of the selected functions is selected from the test functions, and the test result box diagram of the selected functions is shown in Figure A2 in Appendix A.

From Figure A2, it can be seen that MSMPA exhibits remarkable stability and superior performance over the other algorithms for all functions, as shown by the fact that the lower edge, upper edge, and median are always lower than the other algorithms for the same function.

3.5. Wilcoxon Rank Sum Test Analysis

Since there are too many random factors affecting the performance of the algorithm, a statistical test is used to compare the difference in performance between MSMPA and other algorithms. The widely used Wilcoxon’s rank sum test [53] was selected, and 5% was set as the index of significance, and p-values were obtained from the two-rank sum analysis of MSMPA and other algorithms. If the p-value is greater than 5% or NaN, it means that MSMPA is not statistically significantly different on this function. Wilcoxon’s rank-sum tests for MSMPA and other algorithms yielded the p-values shown in Table A3 in Appendix A, where the bold text in the table indicates values greater than 5% or NaN.

From Table A3, it can be concluded that on F9, the MSMPA, MPA, and WOA tests show results for NaN because on F9, all three algorithms take the theoretical optimum; both MSMPA and MPA took the theoretical optimum on F11 and CF2, with no statistically significant differences between MSMPA and GWO and WOA on F11; MSMPA was not statistically significantly different from MPA only on F14; No statistically significant differences between MSMPA and MFO on F16, F17, CF3, CF9, and CF22; No statistically significant differences between MSMPA and PSO on CF2, CF11, CF20, CF23, and CF28; MSMPA was statistically significantly different from SOA only on CF21. In general, MSMPA is statistically significantly different from other algorithms in most functions, demonstrating its superior ability to find the global optimum and jump out of the local optimum.

3.6. High-Dimensional Functional Test Analysis

MPA performs poorly when dealing with complex high-dimensional problems and tends to fall into local optimality. In order to verify the better performance of the proposed MSMPA in global search and local optimization avoidance, the dimensions of F1–F13 are set to 100, 200, and 500, respectively. It is stills run independently with the other seven algorithms at the population size of 30 and the maximum number of iterations of 1000 for 30 times, and the results are counted and used in the Friedman test. The test results for each high-dimensional function with dimensions of 100, 200, and 500 are shown in Table A4, Table A5 and Table A6 in Appendix A, respectively.

It can be seen from Table A4, Table A5 and Table A6 that, apart from the second better performance of MSMPA on F2 with a dimension of 500 than WOA, MSMPA is significantly more competitive than other algorithms in dealing with complex and high-dimensional problems. The final result of its Friedman test for the mean is ranked first. It is worth noting that, on F1~F4, F9 and F11, MSMPA achieved the theoretical optimal value. These just prove the stability and effectiveness of MSMPA in solving complex high-dimensional problems.

4. Semi-Supervised Extreme Learning Machines and Its Improvements

4.1. Semi-Supervised Extreme Learning Machine

The mathematical expression for the objective function of SSELM is defined by introducing a Laplacian regularization term and using information from unlabeled samples as follows:

The solution to SSELM can be obtained with the following expression:

where is the unit matrix of nh.

If the number of crypto neurons is greater than the number of labeled samples, then the following solution can be adopted, with the following expression:

where is the unit matrix of .

4.2. Joint Hessian and Supervised Information Regularization Semi-Supervised Extreme Learning Machine

4.2.1. Hessian Regularization

The Hessian regularization term [41] provides a simple way to establish the relationship between mappings and manifolds, which is derived from the Eells energy. The Hessian energy can also be estimated by applying a simplified form of the derivative of the second order of regular coordinates. Let be the set of k hypotenuse samples at sample point Xi, and estimate the Hessian of f at Xi by computing H. The Hessian can be approximated as follows:

The above equation can be solved by using linear least squares for a second-order polynomial. The ideal form of . Hessian’s estimate of the Frobenius paradigm is thus obtained:

where is the Hessian energy to complete all the estimates.

Given a European clustering expression for Hessian at point Xi:

where matrix B is the sum of all data points, and n represents the number of all labeled and unlabeled data.

4.2.2. Supervisory Information Regularization

Assuming that the labels of Xi and Xj are the same, then, assuming that the coefficients at this time, the difference between their decision functions is also small, then the optimization problem can be defined as with the following expression for the elements of the coefficient matrix LS:

where Ls is the number of combinations of all labeled consistent samples, others include the case where one of the comparative combinations exists with or without labeled samples, and i,j = 1, 2, ..., l, ..., l + u.

Assuming that the labels of Xi and Xj are different at the same time, then the product of the decision function (f(Xi)*f(Xj)) is small, assuming that the coefficients . The optimization problem can be defined as , with the following expression for the elements of the coefficient matrix LD:

where Ld is the number of combinations of all label inconsistent samples, and the others include the cases where one of the comparative combinations has an unlabeled sample.

The mathematical expression defining the regularization term of the supervision constraint is as follows:

where LS _D is the diagonal matrix of the matrix LS.

4.2.3. Joint Regularization SSELM

In order to make full use of the direct associations of various categories contained in the label information, a regularization term of supervision information is introduced to enhance SSELM. Then, the Laplacian regularization in the original SSELM is replaced with the joint Hessian regularization and supervision information regularization term, hence, JRSSELM is proposed.

Assume that the training set consists of L labeled data and U unlabeled data, represented by and , respectively. Among the L labeled data, there are Ls combinations with the same label, and Ld combinations with different labels. The Hessian regularization and supervision information regularization terms are introduced to replace the original Laplace regularization terms, and the objective function optimized by JRSSELM can be obtained. The mathematical expression is defined as follows:

where and are tradeoff parameters.

Substituting the constraints into the objective function, a new optimized objective function can be obtained, and its expression is as follows:

where represents the training target to be reinforced, C is a -dimensional matrix whose first l diagonal element is , and all the other elements are zero.

Then the derivative of the objective function to β is expressed as follows:

If the derivative value is set to zero, then the JRSSELM solution can be obtained, and its expression is as follows:

If the number of hidden layer neurons is greater than the number of labeled samples, the following solutions can be adopted, and the expression is as follows:

where is the unit matrix of .

4.3. Hybrid MSMPA and Joint Regularized Semi-Supervised Extreme Learning Machine

The input weights and hidden layer deviations in the original SSELM are generated by randomization and will not be adjusted in the future. The grid selection of its hyperparameters often leads to limited performance and poor stability of the model. The values of hyperparameters are all {10−5, 10−4, …, 104, 105}, which can be calculated by the following mathematical expressions:

where i and j are integers between [0, 11].

JRSSELM and SSELM maintain consistency in parameter settings. The input weights and hidden layer deviations are also randomly generated and will not be updated in the future. The hyperparameters are also selected for gridding, and the value range remains the same as in SSELM. Its mathematical expression is as follows:

where l and m are integers between [0, 11].

MSMPA is proposed to optimize the selection of various parameters in JRSSELM. To further improve the classification performance and robustness of JRSSELM, first, input weight w, hidden layer deviation b, , , and as the coordinates of the search agent. If the number of hidden layers at this time is hn, and the number of neurons in the input layer is hi, then each prey dimension is . It is worth noting that the first -dimensional coordinates represent the input weight and hidden layer threshold, so its value range is [−1, 1], and the last three dimensions represent the position information of , and , respectively. The range is set to [−5, 5]. To be consistent with the value range of JRSSELM, the calculation can be performed as follows:

where is the total dimension and represents the M-dimensional coordinates of the i-th search agents.

Secondly, the selection of the fitness function is particularly important. In order to make a fair comparison with SSELM and JRSSELM, both SSELM and JRSSELM select the optimal hyperparameter combination by calculating the prediction error of the test set. Therefore, the fitness function is also selected as the test set prediction error in MSMPA, and its mathematical expression is as follows:

where TP stands for true positive, TN stands for true negative, FP stands for false positive, and FN stands for false negative.

The pseudo code for the MSMPA and JRSSELM mix is shown in Algorithm 2.

| Algorithm 2. Pseudo-code of the MSMPA-JRSSELM |

| Input: L labeled samples |

| U unlabeled samples, |

| Number of Search Agents: N, Dim, Max_Iter |

| Output: The best mapping function of JRSSELM:f: |

| Step 1: Constructing Hessian regularization terms and supervisory information regularization terms via and . |

| Step 2: Generate chaotic tent mapping sequences by the Equation (11) |

| Initialized populations by the Equation (12) |

| Generation of Opposing Populations by the Equation (13) |

| Selection of the first N well-adapted individuals as the first generation of the population |

| Step 3: |

| If |

| Calculate the output weights by Equation (35) |

| Else if |

| Calculate the output weights by Equation (36) |

| Step 4: |

| While Iter < Max_Iter |

| Update all population positions by MSMPA |

| Repeat step 3 |

| Calculate the fitness of each search agent by the Equation (44) |

| End while |

| Step 5: Outputs the best search agent position and optimal value |

| Step 6: Optimal mapping functions of JLSSELM: |

5. Oil Logging Oil Layer Identification Applications

5.1. Design of Oil Layer Identification System

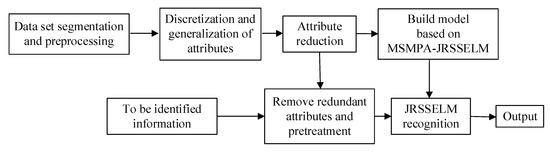

In oil logging, the oil layer identification technology is a very complex dynamic research technology, and it is also a very critical element. Many factors affect the oil layer distribution, such as reservoir thickness, oil pressure, permeability, water content, reservoir pressure, storage effective thickness, and so on. The most important thing is the accuracy of the identification, which can assist the oil layer exploration and provide effective information for the engineers to make the decision. The MSMPA-JRSSELM proposed in this paper is highly accurate in classification and can make accurate predictions of oil layer distribution on a test set using a small number of marker samples and a large number of unmarked samples. Therefore, MSMPA-JRSSELM is applied to oil logging to verify the effectiveness of this algorithm by using oil data provided by a Chinese oil field. The block diagram of the oil layer identification system is shown in Figure 3.

Figure 3.

Block diagram of MSMPA-SSELM oil layer identification system.

From Figure 3, it can be seen that there are several major steps in oil layer identification.

Phase 1 (Data set delineation and pre-processing). The selection of the dataset should be complete and comprehensive and should be closely related to the oil layer evaluation. The data set is divided into two parts: training sample and test sample, in which a small portion of samples from the training set are selected as labeled samples.

Phase 2 (Attribute discretization and generalization). In order to approximate the attributes of the sample information, the decision attributes = {},, where −1 and 1 represent the dry layer and the oil layer, respectively. For the discretization of the conditional attributes, in order to conform to the actual geophysical characteristics, the continuous attributes are discretized by the curvilinear inflection point method [54], in which each attribute is discretized separately in turn, i.e., first, the attribute values are arranged from small to large to find the possible inflection point locations, and then the appropriate discretization points are filtered out according to the target layer range constraints.

Phase 3 (Attribute reduction of data information). Since the degree of decisiveness of each conditional attribute on the oil layer distribution is not consistent, there are more than ten conditional attributes in the logging data, but only a few conditional attributes play a decisive role, so it is necessary to eliminate other redundant attributes to avoid algorithm redundancy. In this paper, we adopt a Rough Set based on consistent coverage for attribute reduction [55].

Phase 4 (Training the MSMPA-JRSSELM classifier). In the MSMPA-JRSSELM model, the sample information is input after attribute reduction, and training is carried out using the JRSSELM model. The MSMPA is used to find better training parameters to improve the model recognition accuracy until the iterations are completed, and the optimal MSMPA-JRSSELM classification model is obtained.

Phase 5 (Test Set Prediction Task). The trained MSMPA-JRSSELM model is used to predict the formation for the entire well oil layer of the test set and output the results. To validate the validity and stability of the model, we selected data from two wells for the experimental comparative analysis.

5.2. Practical Applications

In order to verify the application effect of the semi-supervised model optimized by the improved algorithm, three logging data were selected from the database for training and testing, and they were recorded as well 1 and well 2. The data set division of these two wells is shown in Table 4. It can be seen from Table 4 that the oil layer and dry layer distribution range in the training set and test set. In addition, the attribute reduction results of the two wells are shown in Table 5. It can be seen from Table 5 that there are many redundant conditional attributes in the original data. After Rough Set attribute reduction, important attributes can be selected, which greatly simplifies the complexity of the algorithm. The value range of attributes after attribute reduction is shown in Table 6.

Table 4.

Details of the data set division between the two wells.

Table 5.

Results of attribute reduction.

Table 6.

Range of values for each attribute after attribute reduction.

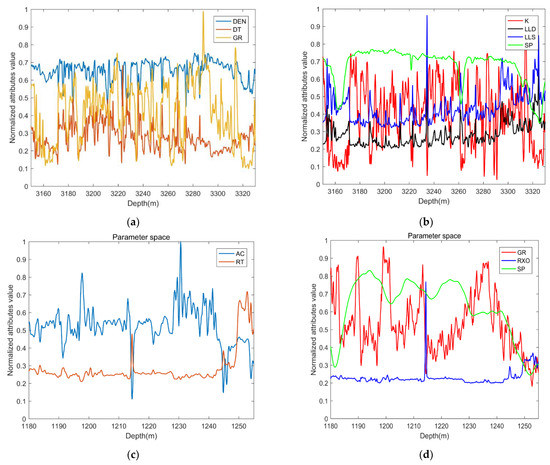

From Table 6, we can see the range of values for each of the well 1 and well 2 properties, where GR stands for natural gamma, DT stands for acoustic time difference, SP stands for natural potential, LLD stands for deep lateral resistivity, LLS stands for shallow lateral resistivity, DEN stands for compensation density, and K stands for potassium. AC stands for acoustic time difference, RT stands for in situ ground resistivity, and RXO stands for flush zone resistivity. For the simplified conditional attributes, since the units of each attribute are different and the value ranges are different, the data should be normalized first, so that the range of the sample data is between [0, 1], and then the normalized influencing factor data should be substituted into the network for training and testing to obtain the results. The formula for normalizing the sample is as follows:

where , is the minimum value of the data sample attribute, and is the maximum value of the data sample attribute.

After normalizing the reduced attributes, the logging curve is shown in Figure 4, where the horizontal axis represents the depth and the vertical axis represents the normalized value. It can be seen from Figure 4 that the logging curves of each condition attribute are completely different, and effective information cannot be obtained directly from the logging curves.

Figure 4.

Normalized curves for each conditional property: (a,b) belong to well 1; (c,d) belong to well 2.

To evaluate the performance of the recognition model, in addition to the test set prediction accuracy, we defined the following performance metrics:

where and y are the predicted and desired output values, respectively.

The RMSE is a measure of the deviation between the predicted value and the true value, and the MAE is the average of the absolute errors. The smaller the RMSE and MAE, the better the performance of the algorithmic model. Therefore, RMSE is used as a criterion for evaluating the accuracy of each algorithmic model, and MAE is often used as the actual prediction and prediction error because it better reflects the actual error of the predicted value.

In order to comprehensively test the superiority of the proposed JRSSELM and MSMPA-JRSSELM models on semi-supervised learning, we divided the labeled samples into two categories of 10% and 20% in the training set and conducted comparison experiments with the supervised learning model ELM and other semi-supervised learning models, including LapSVM [56], SSELM, and MPA-JRSSELM. They were run independently for 30 times, the number of hidden layer neurons was 100, and the maximum number of iterations was 100. Then, the average of the individual performance metrics was calculated. The classification performance of each model on well 1 is shown in Table 7. It should be noted that although the number of labeled samples has different ratios, the training set is invariant for ELM, so its ACC, MAE, and RMSE are consistent across the labeled samples.

Table 7.

Performance of each model on well 1.

From Table 7, it can be seen that LapSVM performs the worst, with the proposed JRSSELM improving its classification accuracy by nearly 3% over SSELM. With the increase in the number of labeled samples in the training set, the MSMPA-JRSSELM performance was further improved after MSMPA optimized selection parameters, with nearly 5% higher than SSELM and 2% higher than JRSSELM, and with the lowest MAE and RMSE, reflecting the significant competitive advantage of MSMPA-JRSSELM on well 1.

The classification performance of each model on Well 2 is shown in Table 8. It can be seen from Table 8 that LapSVM performs the worst, and the classification performance of SSELM is inferior to ELM, while the classification accuracy of JRSSELM proposed by us is slightly higher than that of ELM, and it is more noteworthy that MSMPA-JRSSELM improves the classification accuracy by nearly 8% compared to SSELM, reaching about 98% classification accuracy, and its MAE and RMSE are also the lowest values, which is helpful for well discrimination decision making. It has engineering applications, which reflect the obvious advantages of the proposed classifier for oil layer identification in oil logging.

Table 8.

Performance of each model on well 2.

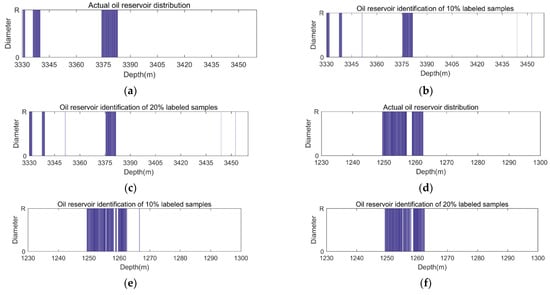

In order to more intuitively observe the original oil test conclusions and predicted oil layer distribution of the test set, the original and predicted oil layer distributions of the two wells are shown in Figure 5. It can be seen from Figure 5 that for Well 1, as the proportion of labeled samples increases, the oil layer prediction is significantly more accurate, and the oil layer distribution position is basically consistent with the oil test conclusion. For Well 2, whether it is a training model with 10% or 20% of the training set of labeled samples, its predictions are almost consistent with the oil test conclusions, which has application significance for auxiliary oil logging.

Figure 5.

Original and predicted oil layer distribution for two wells, where (a–c) is the distribution for well 1, and (d–f) is the distribution for well 2.

6. Conclusions

In this paper, in order to better utilize only a small number of labeled samples and a large number of unlabeled samples to obtain better classification performance, a novel semi-supervised classification model, namely MSMPA-JRSSELM, is proposed.

First, the MSMPA is designed to address the shortcomings of the original MPA in solving global optimization problems such as slow convergence and poor ability to avoid local optima. There are 3 efficient strategies introduced in MPA. A chaotic opposition learning strategy is used to ensure high quality initial populations, adaptive inertial weights, and adaptive step control factors are adopted to enhance early global exploration and later fine-grained exploitation behavior and speed up convergence, and a neighbor dimensional learning strategy is proposed to ensure population diversity in each iteration. Among the 18 classical benchmark functions and 30 CEC2017 competition functions, MSMPA exhibits significant superiority over other algorithms, especially in solving high-dimensional complex problems, MSMPA exhibits strong global search and the ability to avoid local optimality.

Secondly, since SSELM uses Laplace regularization with weaker inferential power, Hessian regularization with stronger extrapolation power and the ability to maintain the manifold structure is used instead of Laplace regularization. Third, in response to the SSELM’s inability to fully utilize the valid information embedded in the labeled samples, a supervised regularization term that assigns new coefficient weights to the given labeled information is proposed. Hessian regularization and supervised regularization are added to ELM to propose JRSSELM. finally, to further improve the classification performance of JRSSELM, MSMPA is proposed to optimize the selection of input weights, implied thresholds and hyperparameters in JRSSELM. To verify the effectiveness of JRSSELM and MSMPA-JRSSELM, they are applied to oil layer identification in logging. The experimental results show that JRSSELM and MSMPA-JRSSELM outperform SSELM and other popular classification methods in ACC, MAE and RMSE, especially MSMPA-JRSSELM shows the most excellent classification performance.

Further research and applications of MPA and SSELM are warranted, considering the combination of MPA with other meta-heuristics, the introduction of cost-sensitive learning in SSELM, and the application of MPA and SSELM to other complex engineering problems. We consider combining SSELM with deep learning, adding self-encoder, convolution, down sampling, and other deep learning methods, which can realize automatic feature extraction and finally apply to regression problems such as short-term power load forecasting, meteorological load forecasting and wind power interval forecasting.

Author Contributions

W.Y.: Conceptualization, Methodology, Software, Writing—Original Draft, Writing—Review & Editing, Supervision. K.X.: Validation, Investigation, Project administration. T.L.: Data Curation, Resources, Funding acquisition. M.X.: Formal analysis. F.S.: Visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No.U1813222, No.42075129), Tianjin Natural Science Foundation (No.18JCYBJC16500) and Key Research and Development Project from Hebei Province(No.19210404D, No.20351802D).

Data Availability Statement

All data are available upon request from the corresponding author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The main abbreviations in this paper are shown below.

| ELM | Extreme Learning Machines |

| SSELM | Semi-Supervised Extreme Learning Machine |

| JRSSELM | Joint Regularized Semi-Supervised Extreme Learning Machine |

| MPA | Marine Predator Algorithm |

| MSMPA | Multi-Strategy Marine Predator Algorithm |

| PSO | Particle Swarm Optimization |

| GWO | Gray Wolf Optimization |

| MFO | Moth Flame Optimization |

| SOA | Seagull Optimization Algorithm |

| SCA | Sine Cosine Algorithm |

| WOA | Whale Optimization Algorithm |

| COA | Coyote Optimization Algorithm |

| CPA | Carnivorous Plant Algorithm |

| TSA | Transient Search Algorithm |

| RF | Random Forests |

| SVM | Support Vector Machines |

| LapSVM | Laplace Support Vector Machines |

| FADS | Fish Aggregation Device Effects |

| NDL | Neighborhood Dimensional Learning |

| GR | natural gamma |

| DT | acoustic time difference |

| SP | natural potential |

| LLD | deep lateral resistivity |

| LLS | shallow lateral resistivity |

| DEN | compensation density |

| K | Potassium |

| AC | acoustic time difference |

| RT | in situ ground resistivity |

| RXO | flush zone resistivity |

Appendix A

The experimental results in Section 3 are presented below, including statistics for each algorithm, Wilcoxon’s rank sum test results, convergence curves, and box line plots.

Figure A1.

Convergence curves for 18 benchmark functions.

Figure A2.

Box line diagram of partially selected functions.

Table A1.

Test results of 18 benchmark functions.

Table A1.

Test results of 18 benchmark functions.

| Function | Criteria | MSMPA | MPA | PSO | GWO | WOA | MFO | SOA | SCA |

|---|---|---|---|---|---|---|---|---|---|

| F1 | Ave | 0.00 × 10+00 | 2.69 × 10−46 | 6.83 × 10+00 | 6.68 × 10−44 | 1.04 × 10−147 | 7.07 × 10+03 | 1.51 × 10−20 | 2.49 × 10+02 |

| Std | 0.00 × 10+00 | 5.14 × 10−46 | 2.36 × 10+00 | 6.83 × 10−44 | 5.56 × 10−147 | 8.97 × 10+03 | 6.40 × 10−20 | 4.54 × 10+02 | |

| Max | 0.00 × 10+00 | 2.51 × 10−45 | 1.32 × 10+01 | 2.82 × 10−43 | 3.10 × 10−146 | 3.00 × 10+04 | 3.58 × 10−19 | 2.10 × 10+03 | |

| Min | 0.00 × 10+00 | 8.73 × 10−50 | 2.58 × 10+00 | 1.17 × 10−45 | 7.10 × 10−169 | 5.58 × 10−01 | 8.03 × 10−24 | 3.35 × 10−01 | |

| F2 | Ave | 0.00 × 10+00 | 1.54 × 10−26 | 1.07 × 10+01 | 4.86 × 10−26 | 1.53 × 10−102 | 7.23 × 10+01 | 5.22 × 10−14 | 3.62 × 10−02 |

| Std | 0.00 × 10+00 | 1.84 × 10−26 | 1.99 × 10+00 | 7.31 × 10−26 | 7.40 × 10−102 | 3.59 × 10+01 | 4.46 × 10−14 | 1.07 × 10−01 | |

| Max | 0.00 × 10+00 | 6.62 × 10−26 | 1.43 × 10+01 | 4.06 × 10−25 | 4.13 × 10−101 | 1.60 × 10+02 | 1.68 × 10−13 | 6.03 × 10−01 | |

| Min | 0.00 × 10+00 | 2.69 × 10−29 | 6.18 × 10+00 | 6.58 × 10−27 | 2.77 × 10−113 | 1.02 × 10+01 | 2.92 × 10−15 | 1.68 × 10−04 | |

| F3 | Ave | 0.00 × 10+00 | 1.41 × 10−07 | 1.14 × 10+03 | 4.79 × 10−05 | 1.26 × 10+05 | 5.10 × 10+04 | 5.27 × 10−08 | 3.40 × 10+04 |

| Std | 0.00 × 10+00 | 4.73 × 10−07 | 2.68 × 10+02 | 2.41 × 10−04 | 3.33 × 10+04 | 2.18 × 10+04 | 1.54 × 10−07 | 1.27 × 10+04 | |

| Max | 0.00 × 10+00 | 1.94 × 10−06 | 1.72 × 10+03 | 1.35 × 10−03 | 1.92 × 10+05 | 9.65 × 10+04 | 7.80 × 10−07 | 6.49 × 10+04 | |

| Min | 0.00 × 10+00 | 1.15 × 10−15 | 4.71 × 10+02 | 9.28 × 10−10 | 6.77 × 10+04 | 1.72 × 10+04 | 2.45 × 10−13 | 9.15 × 10+03 | |

| F4 | Ave | 0.00 × 10+00 | 1.34 × 10−17 | 3.27 × 10+00 | 1.54 × 10−09 | 6.31 × 10+01 | 8.44 × 10+01 | 2.51 × 10−01 | 5.97 × 10+01 |

| Std | 0.00 × 10+00 | 1.04 × 10−17 | 3.66 × 10−01 | 1.77 × 10−09 | 2.58 × 10+01 | 4.68 × 10+00 | 7.91 × 10−01 | 8.01 × 10+00 | |

| Max | 0.00 × 10+00 | 4.37 × 10−17 | 4.44 × 10+00 | 7.16 × 10−09 | 9.30 × 10+01 | 9.22 × 10+01 | 4.09 × 10+00 | 7.34 × 10+01 | |

| Min | 0.00 × 10+00 | 2.09 × 10−18 | 2.30 × 10+00 | 1.35 × 10−10 | 6.17 × 10+00 | 7.73 × 10+01 | 6.95 × 10−07 | 4.50 × 10+01 | |

| F5 | Ave | 7.20 × 10−06 | 4.44 × 10+01 | 3.46 × 10+03 | 4.73 × 10+01 | 4.77 × 10+01 | 1.34 × 10+07 | 4.84 × 10+01 | 6.32 × 10+05 |

| Std | 1.10 × 10−05 | 7.72 × 10−01 | 1.57 × 10+03 | 7.86 × 10−01 | 5.31 × 10−01 | 3.62 × 10+07 | 3.98 × 10−01 | 9.81 × 10+05 | |

| Max | 4.12 × 10−05 | 4.77 × 10+01 | 7.11 × 10+03 | 4.86 × 10+01 | 4.86 × 10+01 | 1.60 × 10+08 | 4.88 × 10+01 | 4.37 × 10+06 | |

| Min | 3.86 × 10−12 | 4.32 × 10+01 | 1.32 × 10+03 | 4.61 × 10+01 | 4.67 × 10+01 | 4.03 × 10+02 | 4.72 × 10+01 | 4.06 × 10+03 | |

| F6 | Ave | 7.49 × 10−07 | 1.13 × 10−02 | 6.38 × 10+00 | 2.40 × 10+00 | 4.11 × 10−01 | 8.09 × 10+03 | 7.19 × 10+00 | 1.75 × 10+02 |

| Std | 9.73 × 10−07 | 4.31 × 10−02 | 2.08 × 10+00 | 5.60 × 10−01 | 2.24 × 10−01 | 9.11 × 10+03 | 5.36 × 10−01 | 3.22 × 10+02 | |

| Max | 3.87 × 10−06 | 2.16 × 10−01 | 1.25 × 10+01 | 3.25 × 10+00 | 1.04 × 10+00 | 4.02 × 10+04 | 8.13 × 10+00 | 1.22 × 10+03 | |

| Min | 1.05 × 10−08 | 3.24 × 10−08 | 3.04 × 10+00 | 1.25 × 10+00 | 8.74 × 10−02 | 1.08 × 10+00 | 5.89 × 10+00 | 8.70 × 10+00 | |

| F7 | Ave | 2.48 × 10−05 | 6.96 × 10−04 | 1.63 × 10+02 | 1.24 × 10−03 | 1.50 × 10−03 | 1.56 × 10+01 | 1.62 × 10−03 | 9.81 × 10−01 |

| Std | 1.95 × 10−05 | 3.03 × 10−04 | 6.65 × 10+01 | 6.62 × 10−04 | 1.77 × 10−03 | 1.87 × 10+01 | 1.59 × 10−03 | 1.29 × 10+00 | |

| Max | 8.65 × 10−05 | 1.17 × 10−03 | 3.12 × 10+02 | 2.75 × 10−03 | 7.74 × 10−03 | 7.84 × 10+01 | 7.56 × 10−03 | 5.10 × 10+00 | |

| Min | 8.50 × 10−07 | 1.76 × 10−04 | 4.01 × 10+01 | 4.42 × 10−04 | 2.61 × 10−06 | 4.46 × 10−01 | 2.76 × 10−04 | 2.77 × 10−02 | |

| F8 | Ave | −4.67 × 10+04 | −1.51 × 10+04 | −9.99 × 10+03 | −9.71 × 10+03 | −1.86 × 10+04 | −1.31 × 10+04 | −7.25 × 10+03 | −5.02 × 10+03 |

| Std | 1.12 × 10+04 | 6.77 × 10+02 | 2.01 × 10+03 | 8.12 × 10+02 | 2.60 × 10+03 | 1.27 × 10+03 | 1.09 × 10+03 | 3.08 × 10+02 | |

| Max | −1.85 × 10+04 | −1.39 × 10+04 | −4.30 × 10+03 | −8.11 × 10+03 | −1.32 × 10+04 | −1.10 × 10+04 | −5.82 × 10+03 | −4.61 × 10+03 | |

| Min | −5.45 × 10+04 | −1.63 × 10+04 | −1.25 × 10+04 | −1.12 × 10+04 | −2.09 × 10+04 | −1.51 × 10+04 | −1.00 × 10+04 | −5.68 × 10+03 | |

| F9 | Ave | 0.00 × 10+00 | 0.00 × 10+00 | 3.18 × 10+02 | 3.24 × 10−01 | 0.00 × 10+00 | 2.98 × 10+02 | 1.16 × 10−01 | 6.41 × 10+01 |

| Std | 0.00 × 10+00 | 0.00 × 10+00 | 4.37 × 10+01 | 1.39 × 10+00 | 0.00 × 10+00 | 6.59 × 10+01 | 6.23 × 10−01 | 4.72 × 10+01 | |

| Max | 0.00 × 10+00 | 0.00 × 10+00 | 4.11 × 10+02 | 7.48 × 10+00 | 0.00 × 10+00 | 4.26 × 10+02 | 3.47 × 10+00 | 1.74 × 10+02 | |

| Min | 0.00 × 10+00 | 0.00 × 10+00 | 2.25 × 10+02 | 0.00 × 10+00 | 0.00 × 10+00 | 1.73 × 10+02 | 0.00 × 10+00 | 1.32 × 10−01 | |

| F10 | Ave | 8.88 × 10−16 | 4.32 × 10−15 | 3.18 × 10+00 | 3.32 × 10−14 | 3.73 × 10−15 | 1.95 × 10+01 | 2.00 × 10+01 | 1.94 × 10+01 |

| Std | 0.00 × 10+00 | 6.38 × 10−16 | 3.72 × 10−01 | 4.71 × 10−15 | 2.81 × 10−15 | 5.73 × 10−01 | 9.30 × 10−04 | 3.73 × 10+00 | |

| Max | 8.88 × 10−16 | 4.44 × 10−15 | 4.04 × 10+00 | 4.35 × 10−14 | 7.99 × 10−15 | 2.00 × 10+01 | 2.00 × 10+01 | 2.05 × 10+01 | |

| Min | 8.88 × 10−16 | 8.88 × 10−16 | 2.49 × 10+00 | 2.22 × 10−14 | 8.88 × 10−16 | 1.76 × 10+01 | 2.00 × 10+01 | 9.91 × 10−02 | |

| F11 | Ave | 0.00 × 10+00 | 0.00 × 10+00 | 1.90 × 10−01 | 2.40 × 10−03 | 8.03 × 10−03 | 7.33 × 10+01 | 4.70 × 10−03 | 1.31 × 10+00 |

| Std | 0.00 × 10+00 | 0.00 × 10+00 | 7.04 × 10−02 | 7.28 × 10−03 | 2.42 × 10−02 | 7.82 × 10+01 | 1.35 × 10−02 | 7.09 × 10−01 | |

| Max | 0.00 × 10+00 | 0.00 × 10+00 | 4.10 × 10−01 | 2.71 × 10−02 | 8.76 × 10−02 | 2.71 × 10+02 | 6.16 × 10−02 | 4.82 × 10+00 | |

| Min | 0.00 × 10+00 | 0.00 × 10+00 | 9.82 × 10−02 | 0.00 × 10+00 | 0.00 × 10+00 | 7.64 × 10−01 | 0.00 × 10+00 | 6.90 × 10−01 | |

| F12 | Ave | 2.33 × 10−09 | 1.88 × 10−04 | 1.96 × 10−01 | 7.81 × 10−02 | 1.15 × 10−02 | 1.71 × 10+07 | 4.92 × 10−01 | 5.61 × 10+06 |

| Std | 2.83 × 10−09 | 6.10 × 10−04 | 3.31 × 10−01 | 2.68 × 10−02 | 7.35 × 10−03 | 6.39 × 10+07 | 1.00 × 10−01 | 1.42 × 10+07 | |

| Max | 1.42 × 10−08 | 2.96 × 10−03 | 1.88 × 10+00 | 1.54 × 10−01 | 3.48 × 10−02 | 2.56 × 10+08 | 7.93 × 10−01 | 7.01 × 10+07 | |

| Min | 2.10 × 10−12 | 1.78 × 10−09 | 3.36 × 10−02 | 3.38 × 10−02 | 3.39 × 10−03 | 2.27 × 10+00 | 3.42 × 10−01 | 5.09 × 10+00 | |

| F13 | Ave | 4.59 × 10−08 | 9.99 × 10−02 | 1.96 × 10+00 | 1.92 × 10+00 | 6.35 × 10−01 | 4.10 × 10+07 | 3.95 × 10+00 | 3.43 × 10+06 |

| Std | 5.09 × 10−08 | 9.80 × 10−02 | 5.29 × 10−01 | 3.47 × 10−01 | 2.93 × 10−01 | 1.23 × 10+08 | 2.44 × 10−01 | 5.41 × 10+06 | |

| Max | 2.06 × 10−07 | 3.73 × 10−01 | 2.82 × 10+00 | 2.45 × 10+00 | 1.41 × 10+00 | 4.10 × 10+08 | 4.46 × 10+00 | 2.59 × 10+07 | |

| Min | 5.46 × 10−11 | 8.88 × 10−08 | 1.14 × 10+00 | 7.63 × 10−01 | 1.92 × 10−01 | 2.27 × 10+01 | 3.54 × 10+00 | 6.89 × 10+02 | |

| F14 | Ave | 9.98 × 10−01 | 9.98 × 10−01 | 3.30 × 10+00 | 3.52 × 10+00 | 2.08 × 10+00 | 2.25 × 10+00 | 1.59 × 10+00 | 1.59 × 10+00 |

| Std | 0.00 × 10+00 | 5.73 × 10−17 | 2.67 × 10+00 | 3.54 × 10+00 | 2.45 × 10+00 | 2.10 × 10+00 | 9.09 × 10−01 | 9.08 × 10−01 | |

| Max | 9.98 × 10−01 | 9.98 × 10−01 | 1.08 × 10+01 | 1.27 × 10+01 | 1.08 × 10+01 | 1.08 × 10+01 | 2.98 × 10+00 | 2.98 × 10+00 | |

| Min | 9.98 × 10−01 | 9.98 × 10−01 | 9.98 × 10−01 | 9.98 × 10−01 | 9.98 × 10−01 | 9.98 × 10−01 | 9.98 × 10−01 | 9.98 × 10−01 | |

| F15 | Ave | 0.0004 | 0.0003 | 0.0008 | 0.0038 | 0.0007 | 0.001 | 0.0012 | 0.0009 |

| Std | 0.0002 | 0 | 0.0003 | 0.0074 | 0.0005 | 0.0004 | 0 | 0.0004 | |

| Max | 0.0012 | 0.0003 | 0.0019 | 0.0204 | 0.0022 | 0.0023 | 0.0012 | 0.0015 | |

| Min | 0.0003 | 0.0003 | 0.0004 | 0.0003 | 0.0003 | 0.0006 | 0.0012 | 0.0003 | |

| F16 | Ave | −10.1532 | −10.1532 | −7.7963 | −9.6475 | −8.6505 | −8.0628 | −3.5614 | −2.885 |

| Std | 0 | 0 | 2.7564 | 1.5157 | 2.5831 | 3.0377 | 4.3677 | 1.8174 | |

| Max | −10.1532 | −10.1532 | −2.6305 | −5.1003 | −0.881 | −2.6305 | −0.3507 | −0.4965 | |

| Min | −10.1532 | −10.1532 | −10.1532 | −10.1531 | −10.153 | −10.1532 | −10.1373 | −4.9475 | |

| F17 | Ave | −10.4028 | −10.4028 | −10.2258 | −10.2267 | −8.5934 | −8.7168 | −6.0807 | −4.1795 |

| Std | 0 | 0 | 0.9541 | 0.9467 | 2.7846 | 3.065 | 4.5085 | 2.4846 | |

| Max | −10.4028 | −10.4028 | −5.0877 | −5.1284 | −2.7655 | −2.7659 | −0.3724 | −0.5224 | |

| Min | −10.4028 | −10.4028 | −10.4028 | −10.4028 | −10.4028 | −10.4028 | −10.4005 | −9.5476 | |

| F18 | Ave | −10.5363 | −10.5363 | −9.8201 | −10.536 | −9.014 | −8.2934 | −8.0033 | −4.7824 |

| Std | 0 | 0 | 1.8263 | 0.0002 | 2.5492 | 3.225 | 3.938 | 1.5385 | |

| Max | −10.5363 | −10.5363 | −5.1285 | −10.5354 | −2.8064 | −2.4273 | −0.5542 | −0.9448 | |

| Min | −10.5363 | −10.5363 | −10.5363 | −10.5363 | −10.5363 | −10.5363 | −10.5342 | −8.8356 | |

| Friedman Average Rank | 1.6551 | 2.4101 | 4.9058 | 4.4775 | 4.3225 | 5.3986 | 5.7667 | 7.0638 | |

| Rank | 1 | 2 | 5 | 4 | 3 | 6 | 7 | 8 | |

Table A2.

CEC2017 optimization function results.

Table A2.

CEC2017 optimization function results.

| Function | Criteria | MSMPA | MPA | PSO | GWO | WOA | MFO | SOA | SCA |

|---|---|---|---|---|---|---|---|---|---|

| CF1 | Ave | 1.00 × 10+02 | 1.00 × 10+02 | 2.67 × 10+03 | 2.46 × 10+06 | 1.37 × 10+10 | 4.68 × 10+06 | 1.84 × 10+08 | 6.00 × 10+08 |

| Std | 1.58 × 10−05 | 6.00 × 10−03 | 2.86 × 10+03 | 6.44 × 10+06 | 5.50 × 10+09 | 1.75 × 10+07 | 1.83 × 10+08 | 2.07 × 10+08 | |

| CF2 | Ave | 2.00 × 10+02 | 2.00 × 10+02 | 2.00 × 10+02 | 3.68 × 10+06 | 1.57 × 10+12 | 1.04 × 10+08 | 1.29 × 10+07 | 7.48 × 10+06 |

| Std | 0.00 × 10+00 | 0.00 × 10+00 | 0.00 × 10+00 | 1.08 × 10+07 | 5.97 × 10+12 | 4.01 × 10+08 | 1.78 × 10+07 | 1.45 × 10+07 | |

| CF3 | Ave | 3.00 × 10+02 | 3.00 × 10+02 | 3.00 × 10+02 | 8.85 × 10+02 | 2.36 × 10+04 | 3.13 × 10+03 | 1.47 × 10+03 | 1.06 × 10+03 |

| Std | 4.01 × 10−10 | 2.83 × 10−08 | 2.29 × 10−08 | 1.15 × 10+03 | 1.33 × 10+04 | 4.97 × 10+03 | 1.08 × 10+03 | 4.75 × 10+02 | |

| CF4 | Ave | 4.00 × 10+02 | 4.00 × 10+02 | 4.03 × 10+02 | 4.12 × 10+02 | 1.50 × 10+03 | 4.09 × 10+02 | 4.40 × 10+02 | 4.33 × 10+02 |

| Std | 1.20 × 10−07 | 8.73 × 10−08 | 1.07 × 10+00 | 1.37 × 10+01 | 6.46 × 10+02 | 1.94 × 10+01 | 3.81 × 10+01 | 1.11 × 10+01 | |

| CF5 | Ave | 5.06 × 10+02 | 5.08 × 10+02 | 5.08 × 10+02 | 5.12 × 10+02 | 6.22 × 10+02 | 5.24 × 10+02 | 5.24 × 10+02 | 5.44 × 10+02 |

| Std | 2.00 × 10+00 | 2.36 × 10+00 | 3.43 × 10+00 | 4.20 × 10+00 | 2.05 × 10+01 | 8.03 × 10+00 | 8.55 × 10+00 | 5.94 × 10+00 | |

| CF6 | Ave | 6.00 × 10+02 | 6.00 × 10+02 | 6.00 × 10+02 | 6.00 × 10+02 | 6.74 × 10+02 | 6.00 × 10+02 | 6.08 × 10+02 | 6.16 × 10+02 |

| Std | 3.28 × 10−02 | 1.02 × 10−04 | 4.34 × 10−11 | 7.31 × 10−01 | 1.50 × 10+01 | 9.98 × 10−01 | 4.30 × 10+00 | 3.22 × 10+00 | |

| CF7 | Ave | 7.12 × 10+02 | 7.19 × 10+02 | 7.17 × 10+02 | 7.26 × 10+02 | 9.65 × 10+02 | 7.35 × 10+02 | 7.53 × 10+02 | 7.70 × 10+02 |

| Std | 2.42 × 10+00 | 2.65 × 10+00 | 4.55 × 10+00 | 9.34 × 10+00 | 1.01 × 10+02 | 1.24 × 10+01 | 1.49 × 10+01 | 7.80 × 10+00 | |

| CF8 | Ave | 8.05 × 10+02 | 8.06 × 10+02 | 8.08 × 10+02 | 8.10 × 10+02 | 8.92 × 10+02 | 8.26 × 10+02 | 8.24 × 10+02 | 8.37 × 10+02 |

| Std | 2.27 × 10+00 | 1.98 × 10+00 | 3.04 × 10+00 | 4.00 × 10+00 | 1.98 × 10+01 | 1.21 × 10+01 | 6.17 × 10+00 | 5.99 × 10+00 | |

| CF9 | Ave | 9.00 × 10+02 | 9.00 × 10+02 | 9.00 × 10+02 | 9.04 × 10+02 | 2.74 × 10+03 | 9.38 × 10+02 | 9.84 × 10+02 | 9.72 × 10+02 |

| Std | 1.40 × 10−04 | 2.01 × 10−08 | 6.56 × 10−14 | 9.47 × 10+00 | 9.39 × 10+02 | 1.01 × 10+02 | 9.03 × 10+01 | 2.60 × 10+01 | |

| CF10 | Ave | 1.19 × 10+03 | 1.27 × 10+03 | 1.28 × 10+03 | 1.57 × 10+03 | 2.80 × 10+03 | 1.79 × 10+03 | 1.71 × 10+03 | 2.19 × 10+03 |

| Std | 1.19 × 10+02 | 9.66 × 10+01 | 1.23 × 10+02 | 2.44 × 10+02 | 1.70 × 10+02 | 3.19 × 10+02 | 2.22 × 10+02 | 1.99 × 10+02 | |

| CF11 | Ave | 1.10 × 10+03 | 1.10 × 10+03 | 1.10 × 10+03 | 1.13 × 10+03 | 5.46 × 10+03 | 1.13 × 10+03 | 1.20 × 10+03 | 1.19 × 10+03 |

| Std | 1.26 × 10+00 | 7.50 × 10−01 | 2.34 × 10+00 | 1.06 × 10+01 | 5.34 × 10+03 | 3.89 × 10+01 | 7.76 × 10+01 | 6.08 × 10+01 | |

| CF12 | Ave | 1.20 × 10+03 | 1.20 × 10+03 | 1.95 × 10+04 | 4.05 × 10+05 | 4.95 × 10+08 | 9.56 × 10+05 | 2.58 × 10+06 | 8.06 × 10+06 |

| Std | 1.68 × 10+01 | 5.14 × 10+00 | 1.91 × 10+04 | 5.72 × 10+05 | 4.98 × 10+08 | 2.16 × 10+06 | 2.43 × 10+06 | 6.55 × 10+06 | |

| CF13 | Ave | 1.30 × 10+03 | 1.30 × 10+03 | 5.92 × 10+03 | 1.07 × 10+04 | 3.07 × 10+07 | 1.17 × 10+04 | 1.67 × 10+04 | 2.28 × 10+04 |

| Std | 1.84 × 10+00 | 1.92 × 10+00 | 6.17 × 10+03 | 6.91 × 10+03 | 5.54 × 10+07 | 1.25 × 10+04 | 1.13 × 10+04 | 1.85 × 10+04 | |

| CF14 | Ave | 1.40 × 10+03 | 1.40 × 10+03 | 1.43 × 10+03 | 2.16 × 10+03 | 5.72 × 10+03 | 2.25 × 10+03 | 1.55 × 10+03 | 1.57 × 10+03 |

| Std | 1.95 × 10+00 | 2.07 × 10+00 | 1.10 × 10+01 | 1.40 × 10+03 | 8.46 × 10+03 | 9.25 × 10+02 | 6.39 × 10+01 | 5.21 × 10+01 | |

| CF15 | Ave | 1.50 × 10+03 | 1.50 × 10+03 | 1.53 × 10+03 | 3.10 × 10+03 | 2.09 × 10+04 | 4.43 × 10+03 | 2.26 × 10+03 | 2.09 × 10+03 |

| Std | 4.36 × 10−01 | 4.79 × 10−01 | 2.45 × 10+01 | 1.90 × 10+03 | 2.58 × 10+04 | 2.65 × 10+03 | 7.50 × 10+02 | 5.56 × 10+02 | |

| CF16 | Ave | 1.61 × 10+03 | 1.60 × 10+03 | 1.61 × 10+03 | 1.69 × 10+03 | 2.24 × 10+03 | 1.68 × 10+03 | 1.68 × 10+03 | 1.70 × 10+03 |

| Std | 2.97 × 10+01 | 4.22 × 10−01 | 3.35 × 10+01 | 5.79 × 10+01 | 2.38 × 10+02 | 9.95 × 10+01 | 7.44 × 10+01 | 5.33 × 10+01 | |

| CF17 | Ave | 1.71 × 10+03 | 1.71 × 10+03 | 1.71 × 10+03 | 1.75 × 10+03 | 2.01 × 10+03 | 1.74 × 10+03 | 1.77 × 10+03 | 1.77 × 10+03 |

| Std | 6.04 × 10+00 | 6.48 × 10+00 | 9.17 × 10+00 | 2.50 × 10+01 | 1.60 × 10+02 | 1.76 × 10+01 | 3.37 × 10+01 | 9.68 × 10+00 | |

| CF18 | Ave | 1.80 × 10+03 | 1.80 × 10+03 | 4.59 × 10+03 | 2.62 × 10+04 | 3.92 × 10+07 | 2.38 × 10+04 | 3.88 × 10+04 | 6.88 × 10+04 |

| Std | 1.41 × 10+00 | 1.17 × 10+00 | 2.47 × 10+03 | 1.43 × 10+04 | 7.49 × 10+07 | 1.82 × 10+04 | 1.08 × 10+04 | 3.97 × 10+04 | |

| CF19 | Ave | 1.90 × 10+03 | 1.90 × 10+03 | 1.92 × 10+03 | 4.64 × 10+03 | 8.76 × 10+05 | 8.04 × 10+03 | 7.50 × 10+03 | 2.68 × 10+03 |

| Std | 2.72 × 10−01 | 4.28 × 10−01 | 2.52 × 10+01 | 4.73 × 10+03 | 2.30 × 10+06 | 1.03 × 10+04 | 6.35 × 10+03 | 1.64 × 10+03 | |

| CF20 | Ave | 2.01 × 10+03 | 2.01 × 10+03 | 2.01 × 10+03 | 2.06 × 10+03 | 2.29 × 10+03 | 2.04 × 10+03 | 2.09 × 10+03 | 2.09 × 10+03 |

| Std | 4.48 × 10+00 | 8.41 × 10+00 | 1.06 × 10+01 | 4.01 × 10+01 | 7.52 × 10+01 | 2.26 × 10+01 | 5.71 × 10+01 | 2.08 × 10+01 | |

| CF21 | Ave | 2.24 × 10+03 | 2.20 × 10+03 | 2.29 × 10+03 | 2.30 × 10+03 | 2.35 × 10+03 | 2.27 × 10+03 | 2.20 × 10+03 | 2.23 × 10+03 |

| Std | 5.24 × 10+01 | 1.29 × 10−05 | 4.64 × 10+01 | 3.84 × 10+01 | 5.63 × 10+01 | 6.23 × 10+01 | 2.77 × 10+00 | 4.56 × 10+01 | |

| CF22 | Ave | 2.30 × 10+03 | 2.24 × 10+03 | 2.30 × 10+03 | 2.31 × 10+03 | 3.32 × 10+03 | 2.30 × 10+03 | 2.45 × 10+03 | 2.35 × 10+03 |

| Std | 1.84 × 10+01 | 4.78 × 10+01 | 1.51 × 10+01 | 4.85 × 10+00 | 4.75 × 10+02 | 2.19 × 10+01 | 3.61 × 10+02 | 1.83 × 10+01 | |

| CF23 | Ave | 2.51 × 10+03 | 2.58 × 10+03 | 2.61 × 10+03 | 2.61 × 10+03 | 2.73 × 10+03 | 2.63 × 10+03 | 2.63 × 10+03 | 2.65 × 10+03 |

| Std | 2.17 × 10+00 | 8.39 × 10+01 | 4.08 × 10+00 | 6.63 × 10+00 | 2.71 × 10+01 | 8.45 × 10+00 | 6.90 × 10+00 | 6.25 × 10+00 | |

| CF24 | Ave | 2.70 × 10+03 | 2.49 × 10+03 | 2.72 × 10+03 | 2.75 × 10+03 | 2.90 × 10+03 | 2.76 × 10+03 | 2.75 × 10+03 | 2.76 × 10+03 |

| Std | 7.88 × 10+01 | 2.49 × 10+01 | 5.98 × 10+01 | 1.03 × 10+01 | 6.38 × 10+01 | 9.34 × 10+00 | 9.90 × 10+00 | 6.77 × 10+01 | |

| CF25 | Ave | 2.91 × 10+03 | 2.86 × 10+03 | 2.92 × 10+03 | 2.93 × 10+03 | 4.01 × 10+03 | 2.93 × 10+03 | 2.94 × 10+03 | 2.95 × 10+03 |

| Std | 2.00 × 10+01 | 1.01 × 10+02 | 2.34 × 10+01 | 1.89 × 10+01 | 4.44 × 10+02 | 2.80 × 10+01 | 2.61 × 10+01 | 1.51 × 10+01 | |

| CF26 | Ave | 2.90 × 10+03 | 2.70 × 10+03 | 2.93 × 10+03 | 2.93 × 10+03 | 4.47 × 10+03 | 2.98 × 10+03 | 3.06 × 10+03 | 3.06 × 10+03 |

| Std | 2.44 × 10−04 | 1.05 × 10+02 | 1.69 × 10+02 | 1.78 × 10+02 | 3.99 × 10+02 | 5.46 × 10+01 | 2.37 × 10+02 | 2.22 × 10+01 | |

| CF27 | Ave | 3.08 × 10+03 | 3.09 × 10+03 | 3.10 × 10+03 | 3.09 × 10+03 | 3.26 × 10+03 | 3.09 × 10+03 | 3.09 × 10+03 | 3.10 × 10+03 |

| Std | 1.14 × 10+00 | 5.67 × 10−01 | 1.15 × 10+01 | 2.90 × 10+00 | 6.07 × 10+01 | 1.95 × 10+00 | 1.91 × 10+00 | 1.90 × 10+00 | |

| CF28 | Ave | 3.11 × 10+03 | 3.07 × 10+03 | 3.23 × 10+03 | 3.35 × 10+03 | 3.69 × 10+03 | 3.25 × 10+03 | 3.23 × 10+03 | 3.25 × 10+03 |

| Std | 3.23 × 10+01 | 9.00 × 10+01 | 1.59 × 10+02 | 8.68 × 10+01 | 1.15 × 10+02 | 8.01 × 10+01 | 8.22 × 10+01 | 5.64 × 10+01 | |

| CF29 | Ave | 3.14 × 10+03 | 3.13 × 10+03 | 3.16 × 10+03 | 3.17 × 10+03 | 3.58 × 10+03 | 3.20 × 10+03 | 3.18 × 10+03 | 3.21 × 10+03 |

| Std | 7.29 × 10+00 | 9.20 × 10+00 | 1.90 × 10+01 | 1.98 × 10+01 | 1.34 × 10+02 | 4.05 × 10+01 | 2.57 × 10+01 | 2.17 × 10+01 | |

| CF30 | Ave | 3.31 × 10+03 | 3.40 × 10+03 | 6.02 × 10+05 | 5.65 × 10+05 | 1.23 × 10+07 | 5.64 × 10+05 | 7.35 × 10+04 | 6.44 × 10+05 |

| Std | 3.66 × 10+01 | 4.54 × 10+00 | 8.68 × 10+05 | 6.42 × 10+05 | 1.03 × 10+07 | 5.53 × 10+05 | 1.25 × 10+05 | 4.64 × 10+05 | |

| Friedman Average Rank | 1.6167 | 2.4489 | 2.9056 | 4.6561 | 7.9300 | 4.7728 | 5.3978 | 6.2722 | |

| Rank | 1 | 2 | 3 | 4 | 8 | 5 | 6 | 7 | |

Table A3.

p-values obtained from Wilcoxon’s rank sum test for MSMPA and other algorithms.

Table A3.

p-values obtained from Wilcoxon’s rank sum test for MSMPA and other algorithms.

| Function | MSMPA VS. | |||||||

|---|---|---|---|---|---|---|---|---|

| MPA | PSO | GWO | WOA | MFO | SOA | SCA | ||

| F1 | p-value | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 |

| F2 | p-value | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 |

| F3 | p-value | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 |

| F4 | p-value | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 | 1.21 × 10−12 |