1. Introduction

A growing research interest in neural networks (NNs) is directed towards the efficient representation of weights and activations by means of quantization [1,2,3,4,5,6,7,8,9,10,11,12,13,14]. Quantization, as a bit-width compression method, is a desirable mechanism that can dictate the performance of the entire NN [10,12]. In other words, the reduction in overall network complexity provided by quantization can lead to a commensurate reduction in overall accuracy if the path toward this reduction is not chosen prudently. Quantization is particularly beneficial for NN implementation on resource-limited devices, since it can fit the whole NN model into the on-chip memory of edge devices, thereby mitigating the high overhead caused by off-chip memory access [9]. Namely, a standard NN implementation assumes a 32-bit full-precision (FP32) representation of NN parameters, which requires complex and expensive hardware. By quantizing FP32 weights and activations to low bit-widths, that is, by thoughtfully choosing a quantizer model for NN parameters, one can significantly reduce the bit-width required for their digital representation. This greatly reduces the overall complexity of the NN at the cost of some degradation in network accuracy [2,3,5,6,8,9]. For that reason, several new quantizer models and quantization methodologies have been proposed, for instance in [4,5,11,13]. Their main objective is to enable quantized NNs to achieve an accuracy that is only slightly degraded, or almost the same as that of their full-precision counterparts.

In general, to optimize a quantizer model, one has to know the statistical distribution of the input signal, so that the quantizer can be adapted as closely as possible to the statistical characteristics of the signal itself. The symmetric Laplacian probability density function (pdf), with its pronounced peak and heavy tails, has been successfully used for modelling signals in many practical applications [8,10,13,15,16,17]. Furthermore, it is arguably the most suitable pdf for speech and audio signals, because it fits many distinctive attributes of these signals [15,16,17,18,19]. In addition, transformed signals and other quantities derived from original signals often follow the Laplacian pdf [16]. As it is commonly encountered in many applications, in this paper we favor signal modelling by the Laplacian pdf.

It is well known that a nonuniform quantizer model, well matched to the signal's amplitude dynamics and to a nonuniform pdf, has a lower quantization error than the uniform quantizer (UQ) model with an equal number of quantization levels or an equal bit-rate [2,11,13,18,20,21,22,23,24,25,26,27]. However, since the UQ is the simplest quantizer model, it has been studied intensively, for instance in [23,24,28,29,30,31,32]. Moreover, the high complexity of nonuniform quantizers can outweigh their potential performance advantages over uniform quantizers [21]. A promising direction is therefore the use of a single well-designed piecewise uniform quantizer (PWUQ). Such a quantizer is composed of an optimized pair of UQs with equal bit-rates, capable of accommodating the statistical characteristics of the assumed Laplacian pdf in predefined amplitude regions. For that reason, in this paper we address the parameterization of a PWUQ, with the goal of providing beneficial performance improvements over the existing UQ solutions.

By following [24,25], we adopt the definition of the quantizer's support region as the region separating the signal amplitudes into a granular region and an overload region, or alternatively, into an inner and an outer region. For a symmetric quantizer, such as the one we propose in this paper, these regions are separated by the support region thresholds, or clipping thresholds, denoted by $\pm x_{\mathrm{clip}}$ (see Figure 1). These thresholds define the quantizer's support region $[-x_{\mathrm{clip}}, x_{\mathrm{clip}}]$, where the distortion due to quantization and clipping is bounded. The problem of determining $x_{\mathrm{clip}}$ of the binary scalar quantizer for the assumed Laplacian pdf has recently been addressed in [33]. Since the Laplacian pdf is long-tailed, a large percentage of the samples are concentrated around the mean value. Only a small percentage of the samples lie in the granular region near the support region threshold, or outside the granular region. Observe that shrinking the support region for a fixed number of quantization levels reduces the granular distortion, while at the same time causing an unwanted increase of the overload distortion [22]. Conversely, for a given number of quantization levels, widening the support region reduces the overload region, and hence the overload distortion, at the expense of an increase in the granular distortion. In that regard, the main trade-off in quantizer design is to make the support region wide enough to accommodate the signal's amplitude dynamics, while keeping it narrow enough to minimize the quantizer distortion.
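To make this trade-off concrete, the Python sketch below evaluates both distortion components of a symmetric $N$-level uniform quantizer for a zero-mean, unit-variance Laplacian source, $p(x) = e^{-\sqrt{2}|x|}/\sqrt{2}$, as the support region threshold grows. It is an illustration under stated assumptions, not the procedure of [22]. The granular part uses the common high-resolution approximation $\Delta^2/12$ times the granular probability. The overload part is integrated numerically with a midpoint rule, assuming the outermost representation level lies at the midpoint of the last granular cell. The helper names `laplace_pdf` and `uq_distortions` are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def laplace_pdf(x: float) -> float:
    """Zero-mean, unit-variance Laplacian pdf p(x) = exp(-sqrt(2)|x|) / sqrt(2)."""
    return math.exp(-SQRT2 * abs(x)) / SQRT2


def uq_distortions(x_max: float, n_levels: int, tail: float = 40.0, steps: int = 20000):
    """Return (granular, overload) distortion of a symmetric N-level uniform quantizer.

    Granular part: high-resolution approximation Delta^2 / 12 * P(|x| <= x_max).
    Overload part: 2 * integral of (x - y_N)^2 p(x) over (x_max, x_max + tail),
    with y_N = x_max - Delta / 2 the outermost level (midpoint rule; the mass
    beyond x_max + tail is negligible for this pdf).
    """
    delta = 2.0 * x_max / n_levels
    granular = delta ** 2 / 12.0 * (1.0 - math.exp(-SQRT2 * x_max))
    y_outer = x_max - delta / 2.0
    h = tail / steps
    overload = sum(
        (x - y_outer) ** 2 * laplace_pdf(x) * h
        for x in (x_max + (k + 0.5) * h for k in range(steps))
    )
    return granular, 2.0 * overload


# Widening the support region: granular distortion grows, overload distortion shrinks.
for x_max in (2.0, 4.0, 6.0, 8.0):
    g, o = uq_distortions(x_max, n_levels=32)
    print(f"x_max = {x_max:3.1f}: granular = {g:.3e}, overload = {o:.3e}")
```

With $N = 32$, the granular column increases and the overload column decreases as $x_{\max}$ grows, which is the trade-off described above.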

By inspecting the specific features of the Laplacian pdf, we propose a novel PWUQ whose granular region is wide enough that the overload distortion can be nullified. This granular region is properly divided into two non-overlapping regions, the central granular region (CGR) and the peripheral granular region (PGR), and the simplest uniform quantization is used within each of them. In particular, we assume a narrow region around the mean for the CGR, covering the peak of the Laplacian pdf. For the PGR we specify a wider region, namely the rest of the granular region, where the pdf is tailed. These two regions are separated by the granular region border thresholds, denoted by $\pm x_b$, which are placed symmetrically around the mean. Our goal is to minimize the overall distortion, especially the granular distortion. Parameterizing our PWUQ so that most of the samples (99.99%) from the assumed Laplacian pdf belong to the granular region can therefore be considered a challenging optimization task. Nevertheless, we have found a convenient iterative way to solve it, explained in detail below.

In brief, the novelty of this paper in the field of quantization is reflected in the following:

- by a careful inspection of the shape of the Laplacian pdf, a novel idea of partitioning the amplitude range of the quantizer into two regions, CGR and PGR, is proposed;
- for given $x_{\mathrm{clip}}$ values, the widths of these two regions are optimized using our iterative algorithm so that the distortion of the PWUQ is minimal;
- the simplest uniform quantizer model is applied, with equal bit-rates, in each of the two regions, which makes the design of our model much simpler than that of many nonuniform quantizer models available in the literature (for instance, see [21,22,27]);
- a significant gain in the signal-to-quantization-noise ratio (SQNR) is achieved over the uniform quantizer, which justifies the meaningfulness of our idea.

The paper is organized as follows:

Section 2 describes the iterative algorithm for the parameterization of our novel PWUQ that is optimized for the given support region threshold and the assumed Laplacian pdf.

Section 3 discusses the performance gain achieved with the proposed quantizer when compared to the UQ.

Section 4 summarizes and concludes our research results.

2. Iterative Parameterization of PWUQ

At the beginning of this section, we briefly recall the basic theory of quantization. An $N$-level quantizer $Q_N$ is defined by the mapping $Q_N: \mathbb{R} \to Y$ [25]. Here $\mathbb{R}$ is the set of real numbers, and $Y = \{y_{-N/2}, \ldots, y_{-1}, y_1, \ldots, y_{N/2}\} \subset \mathbb{R}$ is the code book of size $N$ containing the representation levels $y_i$, where $N = 2^r$ and $r$ is the bit-rate. With the $N$-level quantizer $Q_N$, $\mathbb{R}$ is partitioned into $N$ bounded granular cells $\Re_i$ and two unbounded overload cells. The $i$-th granular cell is given by $\Re_i = \{x \mid x \in [-x_{\mathrm{clip}}, x_{\mathrm{clip}}],\ Q_N(x) = y_i\}$, where $\Re_i \cap \Re_j = \emptyset$ for $i \neq j$. In other words, $y_i$ specifies the $i$-th codeword and is the only representative of all real values $x$ from $\Re_i$.

Let us define our novel symmetrical PWUQ, which consists of two UQs with the same number of quantization levels, $N/2 = K$. One quantizer is used for the amplitudes belonging to the CGR, $[-x_b, x_b]$, and the other one for the PGR, $[-x_{\mathrm{clip}}, -x_b) \cup (x_b, x_{\mathrm{clip}}]$ (see Figure 1). Let us also assume that the amplitudes belonging to the two overload cells, that is, to $(-\infty, -x_{\mathrm{clip}}) \cup (x_{\mathrm{clip}}, +\infty)$, are clipped. Further, we define the CGR and PGR as

$$\Re_{\mathrm{CGR}} = [-x_b, x_b], \qquad \Re_{\mathrm{PGR}} = [-x_{\mathrm{clip}}, -x_b) \cup (x_b, x_{\mathrm{clip}}],$$

which are separated by the border thresholds $\pm x_b$, for which it holds that $\pm x_b = \pm x_{K/2}$. We design our PWUQ for the Laplacian pdf of zero mean and variance $\sigma^2 = 1$,

$$p(x) = \frac{1}{\sqrt{2}}\, e^{-\sqrt{2}\,|x|}. \tag{2}$$

Due to the symmetry of this pdf, the decision thresholds and representation levels of our quantizer are placed symmetrically about the mean value. Without loss of generality, we restrict our attention to the $K$ positive counterparts, whereas the negative counterparts trivially follow from symmetry.

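In particular, the non-negative decision thresholds of our PWUQ, composed of two UQs with the same number of quantization levels $N/2 = K$, are calculated for given $K$, $x_{\mathrm{clip}}$, and $x_b$ from

$$x_i = \begin{cases} \dfrac{2i}{K}\, x_b, & i = 0, 1, \ldots, K/2, \\[2mm] x_b + \left(\dfrac{2i}{K} - 1\right)(x_{\mathrm{clip}} - x_b), & i = K/2 + 1, \ldots, K. \end{cases}$$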

In other words, both regions, the CGR covering $[-x_b, x_b]$ and the PGR covering $[-x_{\mathrm{clip}}, -x_b) \cup (x_b, x_{\mathrm{clip}}]$, are symmetrically partitioned into $K$ uniform cells (see Figure 2, where the transfer characteristic of the PWUQ is shown for $N = 16$). Due to symmetry, this equivalently means that each of the regions $[0, x_b]$ and $(x_b, x_{\mathrm{clip}}]$ is partitioned into $K/2$ uniform cells. Since these two UQs compose our PWUQ, the indices of the decision thresholds in the CGR end with $K/2$, whereas the indices of the decision thresholds in the PGR increase up to $K$.
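The threshold layout above can be sketched in Python as follows. This is a minimal illustration under assumptions, not the authors' implementation. The function names are hypothetical, and $N$ is assumed to be a multiple of 4 so that $K/2$ is an integer. Each cell is taken as the half-open interval $(x_{i-1}, x_i]$ with $y_i$ at its midpoint. Amplitudes beyond $x_{\mathrm{clip}}$ are assumed to map to the outermost level $y_K$.

```python
import bisect


def pwuq_design(n_levels: int, x_clip: float, x_b: float):
    """Return (Delta_CGR, Delta_PGR, [x_0..x_K], [y_1..y_K]) for the non-negative half.

    K = N/2 cells per sign: K/2 cells of width Delta_CGR on [0, x_b] and
    K/2 cells of width Delta_PGR on (x_b, x_clip]. Negative thresholds and
    levels follow from symmetry: x_{-i} = -x_i, y_{-i} = -y_i.
    """
    if n_levels % 4 != 0 or not 0.0 < x_b < x_clip:
        raise ValueError("need N divisible by 4 and 0 < x_b < x_clip")
    k = n_levels // 2
    half = k // 2
    d_cgr = 2.0 * x_b / k
    d_pgr = 2.0 * (x_clip - x_b) / k
    thresholds = [i * d_cgr for i in range(half + 1)]                         # x_0..x_{K/2}
    thresholds += [x_b + (i - half) * d_pgr for i in range(half + 1, k + 1)]  # up to x_K
    levels = [(thresholds[i - 1] + thresholds[i]) / 2.0 for i in range(1, k + 1)]
    return d_cgr, d_pgr, thresholds, levels


def pwuq_quantize(x: float, thresholds: list, levels: list) -> float:
    """Map x to the midpoint of its cell (x_{i-1}, x_i]; |x| > x_clip is clipped to y_K."""
    a = min(abs(x), thresholds[-1])
    i = max(bisect.bisect_left(thresholds, a), 1)
    return levels[i - 1] if x >= 0 else -levels[i - 1]


d_cgr, d_pgr, xs, ys = pwuq_design(16, 6.5127, 1.7415)  # N = 16 as in Figure 2
print([round(v, 4) for v in xs])
print(pwuq_quantize(0.3, xs, ys), pwuq_quantize(-3.0, xs, ys), pwuq_quantize(9.0, xs, ys))
```

For example, `pwuq_design(16, 6.5127, 1.7415)` returns $K + 1 = 9$ non-negative thresholds, with $x_4 = x_b$ and $x_8 = x_{\mathrm{clip}}$. The two threshold values used are the GR-case values reported in Section 3.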

In what follows, we describe the other parameters of our PWUQ, derive its distortion, and optimize it with respect to $\psi = x_b/x_{\mathrm{clip}}$ for a given $x_{\mathrm{clip}}$ by using an iterative algorithm. In other words, for a given $x_{\mathrm{clip}}$, we optimize the widths of the CGR and PGR by minimizing the distortion with respect to the border–clipping threshold scaling ratio $\psi$. This yields an iterative formula enabling the concrete design of our PWUQ.

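Let us define the quantization step sizes $\Delta_{\mathrm{CGR}}$ and $\Delta_{\mathrm{PGR}}$ as the uniform widths of the cells $\Re_i$ from $\Re_{\mathrm{CGR}}$ and $\Re_{\mathrm{PGR}}$, respectively:

$$\Delta_{\mathrm{CGR}} = \frac{2x_b}{K}, \tag{5}$$

$$\Delta_{\mathrm{PGR}} = \frac{2(x_{\mathrm{clip}} - x_b)}{K}. \tag{6}$$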

We introduce the parameter $\psi$, the border–clipping threshold scaling ratio, specified as $\psi = x_b/x_{\mathrm{clip}}$. In accordance with our PWUQ model definition (see Figure 1), it holds that $\psi < 1$, or more precisely $\psi < 0.5$. Observing the area under the Laplacian pdf, we opt to increase the quantization step size in $\Re_{\mathrm{PGR}}$, since this is counterbalanced by a corresponding decrease of the step size in $\Re_{\mathrm{CGR}}$. Our symmetrical PWUQ maps a real value $x \in \mathbb{R}$ to one of the representation levels $y_i$, that is, $Q_N(x) = y_i$ for $x \in \Re_i$. Each $y_i$ is determined as the midpoint of the corresponding quantization cell, $y_i = (x_{i-1} + x_i)/2$, with $\Re_i \subset \Re_{\mathrm{CGR}}$ for $i = 1, \ldots, K/2$ and $\Re_i \subset \Re_{\mathrm{PGR}}$ for $i = K/2 + 1, \ldots, K$.

Determining the decision thresholds and representation levels of a quantizer specifies its entire performance. The more suitably they are chosen, the smaller the overall distortion, which translates to a reduction in the number of bits the quantizer requires to achieve a certain distortion. The main idea behind our PWUQ design is to improve the overall quantization performance by the prudent application of the simplest uniform quantization. To assess the performance of our PWUQ for a given bit-rate $r$ ($r = \log_2 N$) and a given support region threshold, we have to specify its distortion. Specifically, in accordance with our PWUQ model, we have to determine the sum of the granular distortions originating from quantization in $\Re_{\mathrm{CGR}}$ and $\Re_{\mathrm{PGR}}$, that is, $D_g^{\mathrm{CGR}}$ and $D_g^{\mathrm{PGR}}$. Applying the well-known $\Delta^2/12$ approximation of the granular distortion within each uniform region gives

$$D_g^{\mathrm{PWUQ}} = D_g^{\mathrm{CGR}} + D_g^{\mathrm{PGR}} = \frac{\Delta_{\mathrm{CGR}}^2}{12}\, P_{\mathrm{CGR}} + \frac{\Delta_{\mathrm{PGR}}^2}{12}\, P_{\mathrm{PGR}}, \tag{10}$$

where $P_{\mathrm{CGR}}$ and $P_{\mathrm{PGR}}$ denote the probabilities that the input sample $x$ belongs to $\Re_{\mathrm{CGR}}$ and $\Re_{\mathrm{PGR}}$, respectively:

$$P_{\mathrm{CGR}} = \int_{-x_b}^{x_b} p(x)\,dx, \tag{11}$$

$$P_{\mathrm{PGR}} = 2\int_{x_b}^{x_{\mathrm{clip}}} p(x)\,dx. \tag{12}$$

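Let us recall that the mean is a measure of central tendency that specifies where the values of $x$ tend to cluster. The standard deviation $\sigma$ is a measure of dispersion that indicates how the data are spread out around the mean [25]. Also, let us recall that on an unbounded amplitude domain, the cumulative distribution function (CDF), denoted by $F_{\mathrm{CDF}}$, is given by

$$F_{\mathrm{CDF}}(x) = \int_{-\infty}^{x} p(t)\,dt, \tag{13}$$

where the CDF satisfies $F_{\mathrm{CDF}}(\infty) = 1$. For a symmetric pdf, such as the pdf given in (2), it holds that

$$\Phi(-b, b) = F_{\mathrm{CDF}}(b) - F_{\mathrm{CDF}}(-b) = 2F_{\mathrm{CDF}}(b) - 1, \tag{14}$$

where $\Phi(-b, b)$ is the probability that the input sample $x$ with pdf $p(x)$ belongs to the interval $[-b, b]$. By invoking (2), (13), and (14) for $P_{\mathrm{CGR}}$ and $P_{\mathrm{PGR}}$, we have

$$P_{\mathrm{CGR}} = \Phi(-x_b, x_b) = 1 - e^{-\sqrt{2}\,\psi x_{\mathrm{clip}}}, \tag{15}$$

$$P_{\mathrm{PGR}} = \Phi(-x_{\mathrm{clip}}, x_{\mathrm{clip}}) - \Phi(-x_b, x_b) = e^{-\sqrt{2}\,\psi x_{\mathrm{clip}}} - e^{-\sqrt{2}\,x_{\mathrm{clip}}}. \tag{16}$$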
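As a quick numerical sanity check of the closed-form probabilities for the unit-variance Laplacian pdf, $\Phi(-b, b) = 1 - e^{-\sqrt{2}b}$, the Python sketch below compares them with a midpoint-rule integral of $p(x)$. It then evaluates $P_{\mathrm{CGR}}$ and $P_{\mathrm{PGR}}$ for the GR-case thresholds reported in Section 3 ($x_{\mathrm{clip}} = 6.5127$, $x_b = 1.7415$). The helper names are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def laplace_pdf(x: float) -> float:
    """Zero-mean, unit-variance Laplacian pdf."""
    return math.exp(-SQRT2 * abs(x)) / SQRT2


def phi_closed(b: float) -> float:
    """Phi(-b, b) = 2 F_CDF(b) - 1 = 1 - exp(-sqrt(2) b) for the Laplacian pdf."""
    return 1.0 - math.exp(-SQRT2 * b)


def phi_numeric(b: float, steps: int = 100_000) -> float:
    """Phi(-b, b) = 2 * integral_0^b p(x) dx via the midpoint rule (symmetric pdf)."""
    h = b / steps
    return 2.0 * h * sum(laplace_pdf((k + 0.5) * h) for k in range(steps))


x_clip, x_b = 6.5127, 1.7415                  # GR-case thresholds from Section 3
p_cgr = phi_closed(x_b)                       # P_CGR
p_pgr = phi_closed(x_clip) - phi_closed(x_b)  # P_PGR
print(f"closed {p_cgr:.6f} vs numeric {phi_numeric(x_b):.6f}")
print(f"P_CGR = {p_cgr:.4f}, P_PGR = {p_pgr:.4f}, lambda = {p_cgr + p_pgr:.4f}")
```

The resulting probabilities should be close to the values $\Phi(-x_b^{\mathrm{GR}}, x_b^{\mathrm{GR}}) = 0.9148$ and $\lambda_{\mathrm{GR}} = 0.9999$ quoted in Section 3.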

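Substituting (5), (6), (15), and (16) into (10) yields

$$D_g^{\mathrm{PWUQ}} = C\left[\psi^2\left(1 - e^{-\sqrt{2}\,\psi x_{\mathrm{clip}}}\right) + (1-\psi)^2\left(e^{-\sqrt{2}\,\psi x_{\mathrm{clip}}} - e^{-\sqrt{2}\,x_{\mathrm{clip}}}\right)\right], \tag{17}$$

where $C = 4x_{\mathrm{clip}}^2/(3N^2)$. By further setting the first derivative of $D_g^{\mathrm{PWUQ}}$ with respect to $\psi$ equal to zero,

$$\frac{\partial D_g^{\mathrm{PWUQ}}}{\partial \psi} = 0, \tag{18}$$

we obtain

$$2\psi\left(1 - e^{-\sqrt{2}\,x_{\mathrm{clip}}}\right) - 2\left(e^{-\sqrt{2}\,\psi x_{\mathrm{clip}}} - e^{-\sqrt{2}\,x_{\mathrm{clip}}}\right) - \sqrt{2}\,x_{\mathrm{clip}}\,(1 - 2\psi)\,e^{-\sqrt{2}\,\psi x_{\mathrm{clip}}} = 0. \tag{19}$$

Since (19) cannot be solved for $x_b$ or $\psi$ in closed form, an iterative numerical method, given by (20), is required.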

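Taking the second derivative of (17) with respect to $\psi$ yields

$$\frac{\partial^2 D_g^{\mathrm{PWUQ}}}{\partial \psi^2} = C\left[2\left(1 - e^{-\sqrt{2}\,x_{\mathrm{clip}}}\right) + \sqrt{2}\,x_{\mathrm{clip}}\, e^{-\sqrt{2}\,\psi x_{\mathrm{clip}}}\left(4 + \sqrt{2}\,x_{\mathrm{clip}}(1 - 2\psi)\right)\right]. \tag{21}$$

As it holds that $x_b \le x_{\mathrm{clip}}/2$, that is, $1 - 2\psi \ge 0$, all terms in (21) are positive, so that $\partial^2 D_g^{\mathrm{PWUQ}}/\partial\psi^2 > 0$. We can therefore conclude that $D_g^{\mathrm{PWUQ}}$ is a convex function of $\psi$, and consequently of the border threshold $x_b \in (0, x_{\mathrm{clip}}/2]$. Hence, for a given $x_{\mathrm{clip}}$, there exists one unique optimal value of $x_b$, and one unique optimal value of $\psi$, that minimizes $D_g^{\mathrm{PWUQ}}$.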

The pseudo-code in Algorithm 1 summarizes our iterative algorithm for the concrete design and parameterization of the PWUQ for a given bit-rate and clipping threshold. PWUQ parameterization implies specifying the clipping threshold $x_{\mathrm{clip}}$ and iteratively determining the border threshold $x_b^*$ and the border–clipping threshold scaling ratio $\psi^* = x_b^*/x_{\mathrm{clip}}$. From these, the following are obtained: $\Delta_{\mathrm{CGR}}$ and $\Delta_{\mathrm{PGR}}$, the uniform step sizes in $\Re_{\mathrm{CGR}}$ and $\Re_{\mathrm{PGR}}$; $\{y_{-i}, y_i\}$, $i = 1, 2, \ldots, K$, the symmetrical representation levels; and $\{x_{-i}, x_i\}$, $i = 1, 2, \ldots, K$, the symmetrical decision thresholds.

We initialize our PWUQ model with the UQ model, that is, with $x_b^{(0)} = x_{\mathrm{clip}}/2$, in order to track the performance improvement achieved by the iterative algorithm. In other words, we assume $\psi^{(0)} = 0.5$, since for this value of $\psi$ and the same number of levels $N$, the PWUQ and UQ models are matched. The stopping criterion of the iterative algorithm is satisfied when the absolute error

$$\varepsilon^{(i)} = \left|\psi^{(i)} - \psi^{(i-1)}\right| \tag{22}$$

is less than $\varepsilon_{\min} = 10^{-4}$.

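Recalling (11)–(13), we can calculate $\lambda$, the probability that an input sample $x$, whose pdf $p(x)$ is defined on an unbounded domain, belongs to the granular region. For the Laplacian pdf (2), this probability is

$$\lambda = P_{\mathrm{CGR}} + P_{\mathrm{PGR}} = \Phi(-x_{\mathrm{clip}}, x_{\mathrm{clip}}) = 1 - e^{-\sqrt{2}\,x_{\mathrm{clip}}}.$$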

We should also highlight here that $x_{\mathrm{clip}}$, $x_b$, and $N$ have a direct effect on the distortion. If the clipping threshold $x_{\mathrm{clip}}$ is very small, the quantization accuracy may decrease because too many samples are clipped [24]. Note that the clipping effect nullifies the overload distortion, but it can indeed degrade the granular distortion if the clipping threshold is not appropriately specified. Accordingly, setting a suitable clipping threshold is crucial for achieving the best possible performance in a given quantization task. We can anticipate that, for a given clipping threshold, the border threshold $x_b$ has a large impact on the granular distortion. This is because the CGR and PGR distortions, which compose the total granular distortion, behave in opposite ways with respect to $x_b$. In particular, for the fixed and equal number of quantization levels assumed in $\Re_{\mathrm{CGR}}$ and $\Re_{\mathrm{PGR}}$, decreasing the border threshold reduces the CGR distortion at the expense of an increase in the PGR distortion. Namely, shrinking $\Re_{\mathrm{CGR}}$ can significantly reduce the distortion in the CGR, while at the same time causing an unwanted but expected increase of the distortion in the tailed PGR. Therefore, tuning the border thresholds $\pm x_b$ and the clipping thresholds $\pm x_{\mathrm{clip}}$ is one of the key challenges when the heavy-tailed Laplacian pdf is considered. In what follows, we show that, for the Laplacian pdf given in (2), our PWUQ composed of only two UQs provides significantly better performance than a single UQ. That is, it provides a higher signal-to-quantization-noise ratio (SQNR),

$$\mathrm{SQNR}_{\mathrm{PWUQ}} = 10\log_{10}\frac{\sigma^2}{D_g^{\mathrm{PWUQ}}}\ \mathrm{dB},$$

compared to the UQ for the same bit-rate.

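It is interesting to notice that if we assume equal support region thresholds, or clipping thresholds, for the PWUQ and UQ, $x_{\mathrm{clip}} = x_{\mathrm{UQ}}$, we end up with a simple closed-form formula providing detailed insight into the performance gain. Namely, the UQ with step size $\Delta_{\mathrm{UQ}} = 2x_{\mathrm{clip}}/N$ has the granular distortion $D_g^{\mathrm{UQ}} = \Delta_{\mathrm{UQ}}^2\lambda/12 = C\lambda/4$. The SQNR gain achievable with our PWUQ over the UQ can therefore be calculated from

$$\delta = \mathrm{SQNR}_{\mathrm{PWUQ}} - \mathrm{SQNR}_{\mathrm{UQ}} = 10\log_{10}\frac{\lambda}{4\left[\psi^2 P_{\mathrm{CGR}} + (1-\psi)^2 P_{\mathrm{PGR}}\right]}\ \mathrm{dB}. \tag{27}$$

Obviously, for $\psi = 0.5$ and the same number of levels $N$, the PWUQ and UQ models are matched, so that $\mathrm{SQNR}_{\mathrm{PWUQ}}$ equals $\mathrm{SQNR}_{\mathrm{UQ}}$.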
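The closed-form gain can be evaluated directly. The Python sketch below assumes the granular-distortion model for the unit-variance Laplacian pdf, with $D_g^{\mathrm{UQ}} = C\lambda/4$ as the $\psi = 0.5$ special case and $D_g^{\mathrm{PWUQ}} = C[\psi^2 P_{\mathrm{CGR}} + (1-\psi)^2 P_{\mathrm{PGR}}]$. The function name `sqnr_gain_db` is hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def sqnr_gain_db(psi: float, x_clip: float) -> float:
    """Gain delta = SQNR_PWUQ - SQNR_UQ in dB for equal x_clip and equal N.

    Uses D_UQ = C * lambda / 4 and D_PWUQ = C * (psi^2 P_CGR + (1 - psi)^2 P_PGR),
    so the common factor C = 4 x_clip^2 / (3 N^2), and hence N, cancels.
    """
    e_b = math.exp(-SQRT2 * psi * x_clip)     # exp(-sqrt(2) x_b)
    e_clip = math.exp(-SQRT2 * x_clip)        # exp(-sqrt(2) x_clip)
    p_cgr, p_pgr = 1.0 - e_b, e_b - e_clip
    lam = p_cgr + p_pgr
    return 10.0 * math.log10(lam / (4.0 * (psi ** 2 * p_cgr + (1.0 - psi) ** 2 * p_pgr)))


print(f"psi = 0.5:    {sqnr_gain_db(0.5, 6.5127):.3f} dB")     # PWUQ reduces to UQ
print(f"psi = 0.2674: {sqnr_gain_db(0.2674, 6.5127):.3f} dB")  # GR-case values, Section 3
```

With the GR-case values reported in Section 3 ($\psi = 0.2674$, $x_{\mathrm{clip}} = 6.5127$), the second call gives about 3.52 dB. This is consistent with the 3.523 dB reported there, while $\psi = 0.5$ gives no gain.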

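| Algorithm 1. PWUQ Laplacian ($N$, $x_{\mathrm{clip}}$, $\varepsilon_{\min}$): iterative parameterization of the PWUQ for the Laplacian pdf and the given $x_{\mathrm{clip}}$, determining $\psi^*$ and $\Phi(-x_b^*, x_b^*)$ |
|---|
| Input: total number of quantization levels $N$, predefined clipping threshold $x_{\mathrm{clip}}$, $\varepsilon_{\min} \ll 1$ |
| 1st Output: $\psi^*$, $\Phi(-x_b^*, x_b^*)$ |
| 2nd Output: $\Delta_{\mathrm{CGR}}$, $\Delta_{\mathrm{PGR}}$, $\{y_{-i}, y_i\}$, $\{x_{-i}, x_i\}$, $i = 1, 2, \ldots, K$ |
| 1: initialize $i \leftarrow 0$ |
| 2: $\psi^* \leftarrow 0.5$ |
| 3: $\varepsilon^{(0)} \leftarrow 0.5$ |
| 4: while $\varepsilon^{(i)} > \varepsilon_{\min}$ do |
| 5: $i \leftarrow i + 1$ |
| 6: $\psi^{(i-1)} \leftarrow \psi^*$ |
| 7: compute $\psi^{(i)}$ using (20) |
| 8: $\psi^* \leftarrow \psi^{(i)}$ |
| 9: compute $\varepsilon^{(i)}$ using (22) |
| 10: end while |
| 11: $x_b^* \leftarrow \psi^* \times x_{\mathrm{clip}}$ |
| 12: compute $\Phi(-x_b^*, x_b^*)$ using (15) |
| 13: calculate $\Delta_{\mathrm{CGR}}$, $\Delta_{\mathrm{PGR}}$, $\{y_{-i}, y_i\}$, $\{x_{-i}, x_i\}$, $i = 1, 2, \ldots, K$ |
| 14: return $\psi^*$, $\Phi(-x_b^*, x_b^*)$, and the 2nd output |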
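A runnable Python sketch of Algorithm 1 is given below; it is not the authors' code. Because the update formula (20) is not reproduced in this text, step 7 is replaced by one bisection step on the sign of $\partial D_g^{\mathrm{PWUQ}}/\partial\psi$ over $(0, 0.5]$. This substitution is legitimate because $D_g^{\mathrm{PWUQ}}$ is convex in $\psi$. The derivative is the one of $D_g^{\mathrm{PWUQ}}/C = \psi^2 P_{\mathrm{CGR}} + (1-\psi)^2 P_{\mathrm{PGR}}$ for the Laplacian pdf, with the common factor $C$ dropped. The initialization $\psi^{(0)} = 0.5$, $\varepsilon^{(0)} = 0.5$ and the stopping rule $|\psi^{(i)} - \psi^{(i-1)}| \le \varepsilon_{\min}$ follow the algorithm. The iteration count of this bisection variant therefore need not match the counts reported in Section 3. Function and dictionary key names are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def d_prime(psi: float, x_clip: float) -> float:
    """dD_g/dpsi divided by C for the Laplacian pdf, where
    D_g / C = psi^2 (1 - e^{-a psi}) + (1 - psi)^2 (e^{-a psi} - e^{-a}), a = sqrt(2) x_clip.
    It is negative at psi = 0 and positive at psi = 0.5."""
    a = SQRT2 * x_clip
    e_b, e_clip = math.exp(-a * psi), math.exp(-a)
    return 2.0 * psi * (1.0 - e_clip) - 2.0 * (e_b - e_clip) - a * (1.0 - 2.0 * psi) * e_b


def pwuq_laplacian(n_levels: int, x_clip: float, eps_min: float = 1e-4) -> dict:
    """Sketch of Algorithm 1 with a bisection update in place of formula (20)."""
    lo, hi = 0.0, 0.5
    psi = 0.5                      # step 2: start from the UQ model, psi(0) = 0.5
    err = 0.5                      # step 3: eps(0) = 0.5
    i = 0
    while err > eps_min:           # step 4
        i += 1
        psi_prev = psi             # step 6
        psi = 0.5 * (lo + hi)      # step 7 (assumed bisection update)
        if d_prime(psi, x_clip) > 0.0:
            hi = psi               # minimum lies to the left
        else:
            lo = psi               # minimum lies to the right
        err = abs(psi - psi_prev)  # step 9: eps(i) = |psi(i) - psi(i-1)|
    x_b = psi * x_clip                          # step 11
    phi_b = 1.0 - math.exp(-SQRT2 * x_b)        # step 12: Phi(-x_b*, x_b*)
    k = n_levels // 2
    d_cgr, d_pgr = 2.0 * x_b / k, 2.0 * (x_clip - x_b) / k  # step 13 (step sizes)
    return {"psi": psi, "x_b": x_b, "phi": phi_b,
            "d_cgr": d_cgr, "d_pgr": d_pgr, "iterations": i}


result = pwuq_laplacian(256, math.log(1e4) / SQRT2)  # GR case, lambda = 0.9999
print(result)
```

For the GR case, `pwuq_laplacian(256, math.log(1e4) / math.sqrt(2))` gives $\psi^* \approx 0.2674$ and $\Phi(-x_b^*, x_b^*) \approx 0.9148$. Both values agree with those reported in Section 3. The thresholds and levels of the 2nd output can then be obtained from the returned $x_b^*$ and step sizes.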

Let us highlight that we have performed our analysis for the Laplacian source, and similar analyses can be derived for other sources. That is, one can first specify some other pdf in (2) and then substitute it into (11) and (12), which further affects the derivation of the formulas starting from (15). However, not every pdf allows the derivation of closed-form expressions. Moreover, to provide an iterative algorithm similar to the one in this paper, the distortion should be a convex function of $\psi$, so this should be taken into consideration as well. In brief, as the Laplacian source is one of the most widely used sources, the analysis presented in this paper is indeed significant.
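This remark can be illustrated with a generic, purely numerical variant of the optimization. In this hypothetical sketch, the pdf enters only through $\Phi(-b, b)$. The normalized granular distortion $\psi^2 P_{\mathrm{CGR}} + (1-\psi)^2 P_{\mathrm{PGR}}$, that is, $D_g^{\mathrm{PWUQ}}/C$, is minimized by golden-section search over $\psi \in (0, 0.5]$ instead of an iterative formula. Unimodality in $\psi$ is assumed rather than proved, and this is exactly the property that has to be checked for a new pdf. For the unit-variance Gaussian pdf, $\Phi$ is expressed through the error function `math.erf`, a non-elementary special function. The Gaussian clipping threshold of 4.0 is an arbitrary illustrative value.

```python
import math
from typing import Callable


def normalized_distortion(psi: float, x_clip: float, phi: Callable[[float], float]) -> float:
    """D_g / C = psi^2 P_CGR + (1 - psi)^2 P_PGR, where phi(b) = Phi(-b, b)."""
    p_cgr = phi(psi * x_clip)
    p_pgr = phi(x_clip) - p_cgr
    return psi ** 2 * p_cgr + (1.0 - psi) ** 2 * p_pgr


def optimal_psi(x_clip: float, phi: Callable[[float], float], tol: float = 1e-6) -> float:
    """Golden-section search on (0, 0.5]; assumes the distortion is unimodal in psi."""
    inv_golden = (math.sqrt(5.0) - 1.0) / 2.0
    lo, hi = 0.0, 0.5
    c, d = hi - inv_golden * (hi - lo), lo + inv_golden * (hi - lo)
    while hi - lo > tol:
        if normalized_distortion(c, x_clip, phi) < normalized_distortion(d, x_clip, phi):
            hi, d = d, c                     # minimum lies in [lo, old d]
            c = hi - inv_golden * (hi - lo)
        else:
            lo, c = c, d                     # minimum lies in [old c, hi]
            d = lo + inv_golden * (hi - lo)
    return 0.5 * (lo + hi)


def laplacian_phi(b: float) -> float:
    """Phi(-b, b) for the unit-variance Laplacian pdf (closed form)."""
    return 1.0 - math.exp(-math.sqrt(2.0) * b)


def gaussian_phi(b: float) -> float:
    """Phi(-b, b) for the unit-variance Gaussian pdf, via the error function."""
    return math.erf(b / math.sqrt(2.0))


print(optimal_psi(math.log(1e4) / math.sqrt(2.0), laplacian_phi))  # Laplacian, GR x_clip
print(optimal_psi(4.0, gaussian_phi))  # Gaussian, illustrative x_clip = 4.0
```

For the Laplacian pdf, this numerical route reproduces $\psi \approx 0.267$ for the GR clipping threshold.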

Let us also highlight that our piecewise uniform quantizer, with the border threshold between its two segments determined iteratively, can be considered a piecewise linear quantizer if we view its realization from the standpoint of a companding technique. Taking this into account, our novel model can be related to adaptive rejection sampling, as used in [34,35,36,37,38,39] for the generation of samples from a given pdf. There, piecewise linearization is performed with linear segments as tangents to the log pdf, with iteratively calculated nodes specifying the linear segments. This piecewise linear approximation approach is especially useful for pdfs such as the Gaussian pdf, for which the integrals in our analysis cannot be solved in closed form. Due to the widespread use of both the Laplacian and Gaussian pdfs, our future work will focus on designing a quantizer based on the piecewise linear approximation of the Gaussian pdf, using a technique similar to the one proposed in [34,35,36,37,38,39].

3. Numerical Results

The most important step in designing our PWUQ is determining the value of its key parameter $\psi$. For a given $x_{\mathrm{clip}}$, we can calculate $\psi^*$ using the above algorithm. Then, we can calculate $x_b^* = \psi^* \cdot x_{\mathrm{clip}}$ and the other parameters of the PWUQ, as well as its performance ($\mathrm{SQNR}_{\mathrm{PWUQ}}$). Since the Laplacian pdf is long-tailed and accordingly unbounded, we can assume that most of the samples are in the granular region for $\lambda_{\mathrm{GR}} = P_{\mathrm{CGR}} + P_{\mathrm{PGR}} = 0.9999$. We analyse the case where the clipping and border thresholds, $x_{\mathrm{clip}}^{\mathrm{GR}}$ and $x_b^{\mathrm{GR}}$, as well as $\psi^{\mathrm{GR}}$, are determined from $\lambda_{\mathrm{GR}} = 0.9999$, where the GR notation indicates the granular region. By invoking (15) and (16) for $\lambda_{\mathrm{GR}} = 0.9999$, we have

$$x_{\mathrm{clip}}^{\mathrm{GR}} = -\frac{1}{\sqrt{2}}\ln\left(1 - \lambda_{\mathrm{GR}}\right) = \frac{\ln 10^4}{\sqrt{2}} \approx 6.5127. \tag{28}$$

For medium and high bit-rates, where $r$ ranges from 5 to 8 bit/sample, we have calculated $\psi^*$, $x_b^* = \psi^* \cdot x_{\mathrm{clip}}$, $\Phi(-x_b^*, x_b^*)$, $\lambda^*$, $\mathrm{SQNR}_{\mathrm{UQ}}$, $\mathrm{SQNR}_{\mathrm{PWUQ}}$, and $\delta$ for different $x_{\mathrm{clip}}$ values (see Table 1). In particular, along with $x_{\mathrm{clip}}^{\mathrm{GR}}$ determined from (28), we assume the $x_{\mathrm{clip}}$ values determined by Hui [24] and Jayant [25]. To distinguish between these three cases, we use the notation [H], [J], and [GR] (or just GR) in the line or in the superscript. From (5) and (6), it follows that $\Delta_{\mathrm{CGR}}/\Delta_{\mathrm{PGR}} = \psi^*/(1 - \psi^*)$, and, as we can see from Figure 2, it holds that $\Delta_{\mathrm{PGR}} \approx 2\Delta_{\mathrm{CGR}}$. Since the two regions together span the whole support region, $K(\Delta_{\mathrm{PGR}} + \Delta_{\mathrm{CGR}}) = 2K\Delta_{\mathrm{UQ}}$. This implies $\Delta_{\mathrm{UQ}} = \Delta_{\mathrm{CGR}}/(2\psi^*) = \Delta_{\mathrm{PGR}}/(2(1-\psi^*))$, that is, $\Delta_{\mathrm{UQ}} \approx 1.5\,\Delta_{\mathrm{CGR}}$ and $\Delta_{\mathrm{UQ}} \approx 0.75\,\Delta_{\mathrm{PGR}}$. Accordingly, in comparison to the UQ, more precise quantization is enabled in $\Re_{\mathrm{CGR}}$, to which most of the samples from the assumed Laplacian pdf belong. In $\Re_{\mathrm{PGR}}$, where the pdf is predominantly tailed, the UQ provides slightly better performance, since $\Delta_{\mathrm{UQ}} < \Delta_{\mathrm{PGR}}$. In other words, keeping in mind the shape of the Laplacian pdf, we show that it makes sense to favor a more meticulous quantization of the dominant number of samples, namely those concentrated around the mean in $\Re_{\mathrm{CGR}}$. Since we assume $\psi^* < 0.5$, it follows from $\Delta_{\mathrm{CGR}}/\Delta_{\mathrm{PGR}} = \psi^*/(1 - \psi^*)$ that $\Delta_{\mathrm{CGR}} < \Delta_{\mathrm{PGR}}$. This confirms that smaller quantization errors indeed occur in the narrower $\Re_{\mathrm{CGR}}$, to which most of the samples from the assumed Laplacian pdf belong. In brief, taking into account the shape of the assumed Laplacian pdf and the manner in which we parameterize our PWUQ, the overall SQNR gain achieved with the PWUQ over the UQ is fully justified.

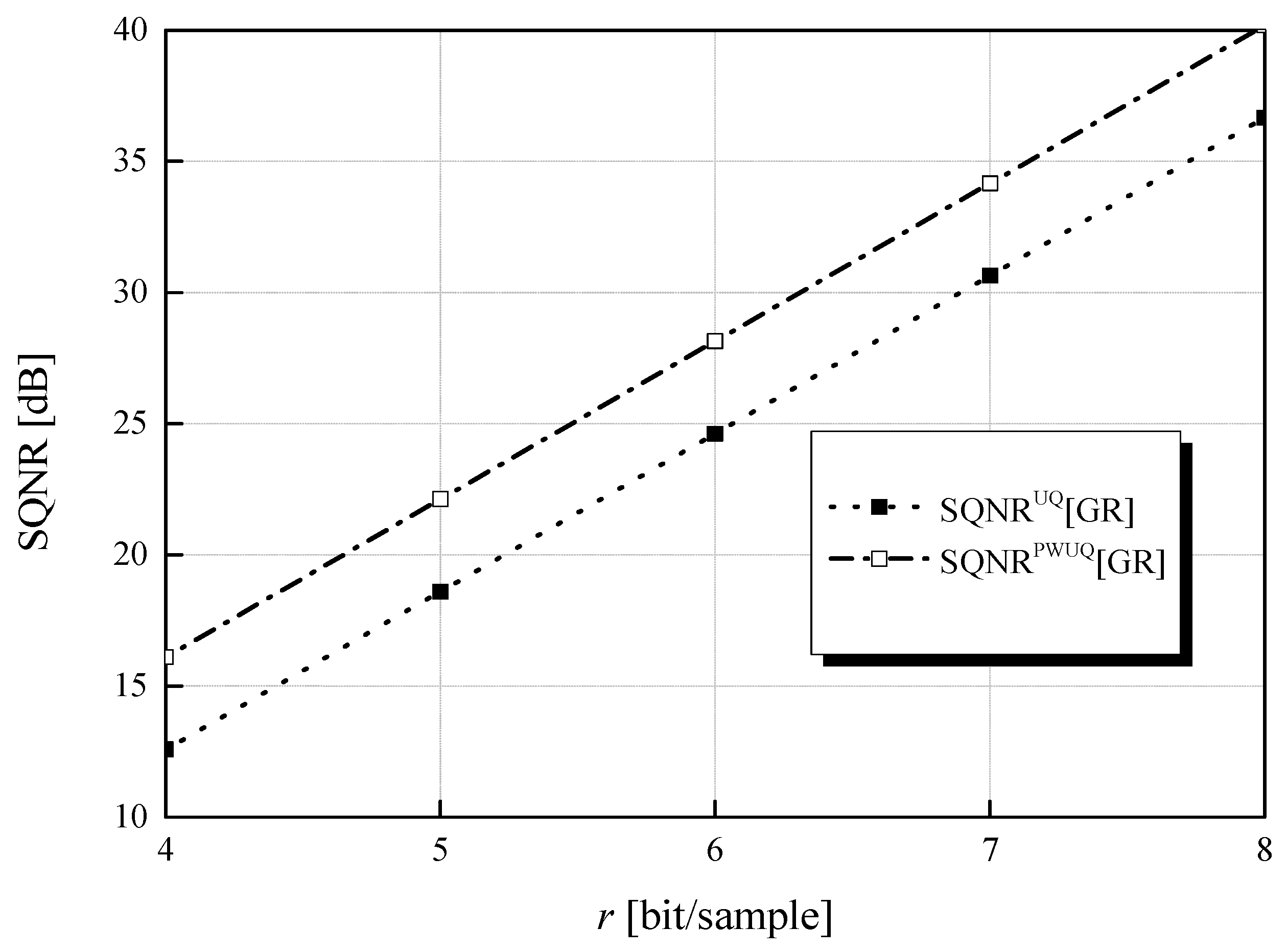

Let us observe that for $x_{\mathrm{clip}}$ calculated from (28), so that $\lambda_{\mathrm{GR}} = 0.9999$, and for $\varepsilon < 10^{-4}$, $\psi^{\mathrm{GR}}$ amounts to 0.2674 and does not depend on the bit-rate. Namely, we have calculated the following fixed values: $x_{\mathrm{clip}} = x_{\mathrm{clip}}^{\mathrm{GR}} = 6.5127$, $\psi^{\mathrm{GR}} = 0.2674$, $x_b^{\mathrm{GR}} = 1.74150$, and $\Phi(-x_b^{\mathrm{GR}}, x_b^{\mathrm{GR}}) = 0.9148$, whereas $\mathrm{SQNR}_{\mathrm{PWUQ}}$ strictly depends on $r$. It is interesting to notice that, although $\mathrm{SQNR}_{\mathrm{PWUQ}}$ depends on $r$, the SQNR gain achieved by the PWUQ over the UQ in the so-called GR case is constant and amounts to 3.523 dB (see Figure 3 for the GR case). This constant gain follows directly from (27), in which $N$ does not appear, for the given fixed values of $\psi^{\mathrm{GR}}$, $x_{\mathrm{clip}}^{\mathrm{GR}}$, and $x_b^{\mathrm{GR}}$. Finally, we can notice that for $r = 8$ bit/sample, $\mathrm{SQNR}_{\mathrm{PWUQ}}^{[\mathrm{GR}]}$, determined for $x_{\mathrm{clip}} = x_{\mathrm{clip}}^{\mathrm{GR}} = 6.5127$, is higher than $\mathrm{SQNR}_{\mathrm{PWUQ}}^{[\mathrm{H}]}$ [24] and $\mathrm{SQNR}_{\mathrm{PWUQ}}^{[\mathrm{J}]}$ [25]. One reason is the suitable clipping performed in the GR case, in accordance with the assumption $\lambda_{\mathrm{GR}} = 0.9999$. Another is that in [24,25] the optimization of the support region threshold, here considered as the clipping threshold, has been performed without nullifying the overload distortion, i.e., without a clipping effect.
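The constant GR-case gain can be checked with the short Python sketch below. It evaluates $\mathrm{SQNR} = 10\log_{10}(\sigma^2/D_g)$ with $\sigma^2 = 1$ for the UQ and the PWUQ from the granular-distortion model, using the reported $\psi^{\mathrm{GR}} = 0.2674$ and $x_{\mathrm{clip}}^{\mathrm{GR}} = 6.5127$. The UQ distortion is taken as the $\psi = 0.5$ special case of the PWUQ model. The printed SQNR values come from this model only and are not copied from Table 1; the point is that $N$ cancels in the difference. The function name `sqnr_db` is hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)
PSI_GR, X_CLIP_GR = 0.2674, 6.5127  # GR-case values reported above


def sqnr_db(r: int, psi: float, x_clip: float) -> tuple:
    """Return (SQNR_UQ, SQNR_PWUQ) in dB for sigma^2 = 1, granular distortion only."""
    n = 2 ** r
    c = 4.0 * x_clip ** 2 / (3.0 * n ** 2)
    e_b, e_clip = math.exp(-SQRT2 * psi * x_clip), math.exp(-SQRT2 * x_clip)
    p_cgr, p_pgr = 1.0 - e_b, e_b - e_clip
    d_uq = c / 4.0 * (p_cgr + p_pgr)                       # psi = 0.5 special case
    d_pwuq = c * (psi ** 2 * p_cgr + (1.0 - psi) ** 2 * p_pgr)
    return -10.0 * math.log10(d_uq), -10.0 * math.log10(d_pwuq)


for r in range(5, 9):
    uq, pw = sqnr_db(r, PSI_GR, X_CLIP_GR)
    print(f"r = {r}: SQNR_UQ = {uq:6.2f} dB, SQNR_PWUQ = {pw:6.2f} dB, gain = {pw - uq:.3f} dB")
```

Each additional bit raises both SQNR values by $20\log_{10}2 \approx 6.02$ dB, while the printed gain stays the same for every $r$.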

It is worthy to notice from

Table 1 that the Laplacian pdf is expected to be in the defined granular regions with

λ*[J] = 0.9982,

λ*[H] = 0.9990 for

r = 5 bit/sample, whereas for

r = 8 bit/sample

λ*[J] and

λ*[H] are equal to

λGR = 0.9999. By calculating

λ* and

Φ(−

xb*,

xb*) for our three different cases, we confirm that with a convenient choice of clipping values, only a few quantized samples are in the granular region near to the support or clipping threshold or out of the granular region. From

Table 1, we can also conclude that the probability of belonging samples

x to ℜ

CGR grows with the bit-rate since values of

xclip[J] and

xclip[H] increase with the bit-rate. Also, we can notice that

Φ(−

xbGR,

xbGR) shows dominance compared to

Φ(−

xb*[J],

xb*[J]) and

Φ(−

xb*[H],

xb*[H]) for

r equal to 5 bit/sample and 6 bit/sample, whereas

Φ(−

xbGR,

xbGR) takes close values to

Φ(−

xb*[J],

xb*[J]) and

Φ(−

xb*[H],

xb*[H]) for

r = 7 bit/sample and

r = 8 bit/sample. Since the values of

Φ(−

xbGR,

xbGR) and

xclipGR are constant and for

r = 7 bit/sample it holds

xclipGR <

xclip[H], as a result we have SQNR

PWUQ[GR] > SQNR

PWUQ[H]. Similarly, for

r = 8 bit/sample, from

xclipGR <

xclip[J], it implies that SQNR

PWUQ[GR] > SQNR

PWUQ[J]. For the highest observed bit-rate,

r = 8 bit/sample, along with the values of the key design parameters and performances of UQ and PWUQ given in the last row of

Table 1 for the GR case, we present additional descriptive information, given in

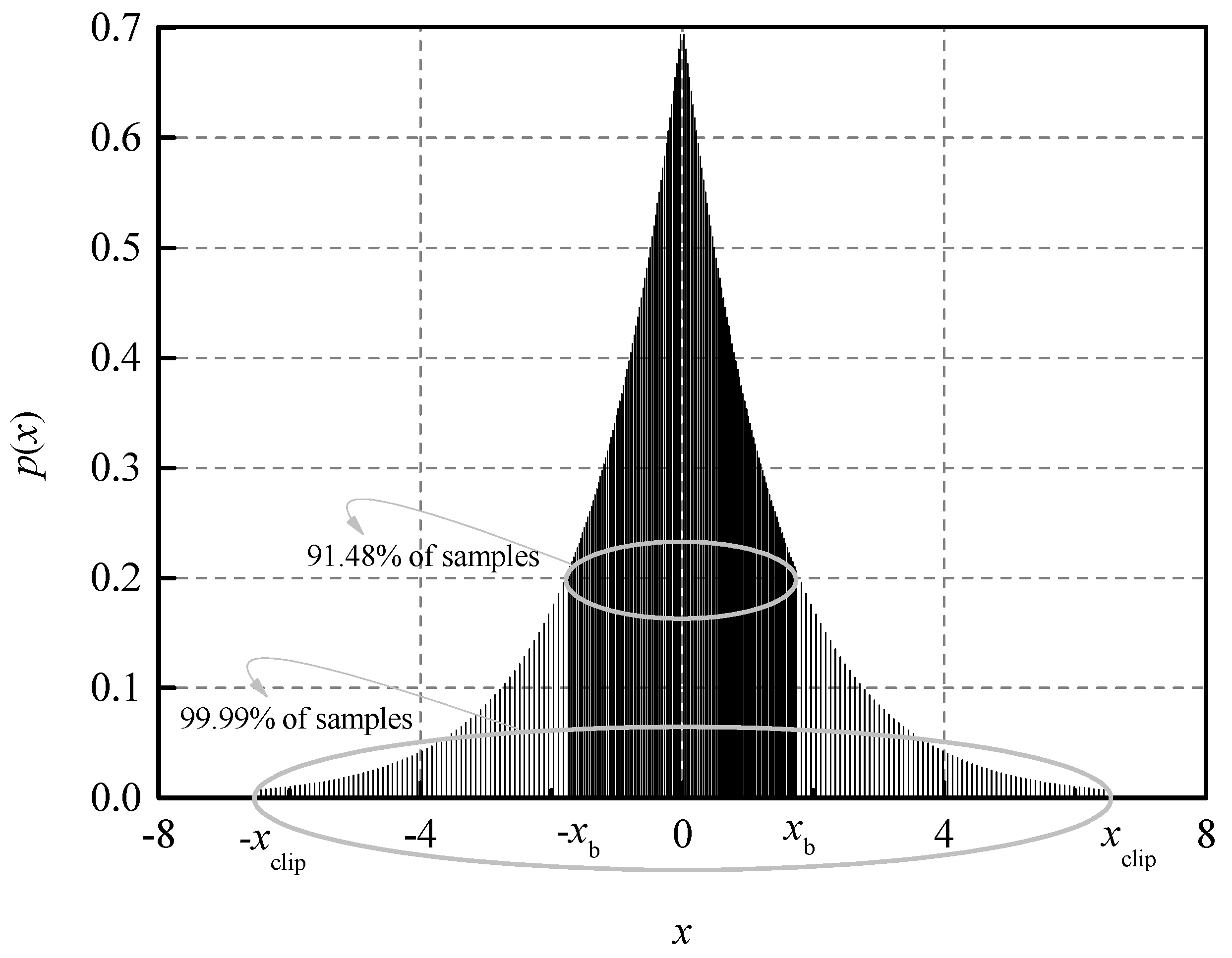

Figure 4. From

Figure 4, we can notice that 91.48% of the samples belonged to ℜ

CGR, whereas 99.99% of samples belonged to ℜ

CGR ∪ ℜ

PGR, meaning that we have determined the value of

ψ =

ψGR so that a huge percentage of the samples do belong to ℜ

CGR. In the case with

r = 8 bit/sample we have determined the smallest values of

ψ* in [J] and [H] cases. That is, we have determined the largest absolute differences from the initial value

ψ(0) = 0.5. In these two cases, the number of iterations for determining

ψ* ranges up to 25, where the values of

ψ* matches with the results of the numerical distortion optimization per

ψ, meaning that our algorithm converges fast.

To illustrate the importance of determining not only the optimized value of $\psi$ but also a suitable $x_{\mathrm{clip}}$, Figure 5 shows the dependence of $\mathrm{SQNR}_{\mathrm{PWUQ}}$ on $\psi$ for $r = 6$ bit/sample in the three considered cases, where $x_{\mathrm{clip}}$ is equal to $x_{\mathrm{clip}}^{\mathrm{GR}}$, $x_{\mathrm{clip}}^{[\mathrm{H}]}$, and $x_{\mathrm{clip}}^{[\mathrm{J}]}$. Let us highlight that the values of $\psi$ obtained by our iterative algorithm for these three cases, marked with asterisks in Figure 5, are indeed optimal. They give the corresponding maximum SQNR of the PWUQ in each case. To select one of the $x_{\mathrm{clip}}$ values for a given bit-rate $r$, we can choose the one giving the highest SQNR. Finally, we can conclude that, although the range of values of $\psi$ is relatively narrow, its selection is very significant, since an unfavorable choice of this parameter can significantly degrade the performance of our PWUQ. This observation further justifies the importance of the proposal described in this paper.
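A curve of the same kind as the GR curve in Figure 5 can be approximated with the Python sketch below. It sweeps $\psi$ over a grid and evaluates $\mathrm{SQNR}_{\mathrm{PWUQ}}$ for $r = 6$ bit/sample and $x_{\mathrm{clip}}^{\mathrm{GR}} = \ln(10^4)/\sqrt{2}$ from the granular-distortion model for the unit-variance Laplacian pdf. The [H] and [J] curves are not reproduced, because their $x_{\mathrm{clip}}$ values are only available in Table 1. The grid resolution of 0.001 is an arbitrary choice, and the function name is hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def sqnr_pwuq_db(psi: float, x_clip: float, r: int) -> float:
    """SQNR_PWUQ = 10 log10(1 / D_g) for a unit-variance Laplacian source."""
    n = 2 ** r
    c = 4.0 * x_clip ** 2 / (3.0 * n ** 2)
    e_b, e_clip = math.exp(-SQRT2 * psi * x_clip), math.exp(-SQRT2 * x_clip)
    d_g = c * (psi ** 2 * (1.0 - e_b) + (1.0 - psi) ** 2 * (e_b - e_clip))
    return -10.0 * math.log10(d_g)


x_clip_gr = math.log(1e4) / SQRT2          # x_clip from lambda_GR = 0.9999
grid = [i / 1000 for i in range(50, 501)]  # psi from 0.050 to 0.500
curve = [(psi, sqnr_pwuq_db(psi, x_clip_gr, r=6)) for psi in grid]
best_psi, best_sqnr = max(curve, key=lambda pair: pair[1])
print(f"best psi = {best_psi:.3f}, SQNR = {best_sqnr:.2f} dB")
for psi in (0.15, 0.2674, 0.40, 0.50):
    print(f"psi = {psi:.4f}: SQNR = {sqnr_pwuq_db(psi, x_clip_gr, 6):.2f} dB")
```

The grid maximum lands next to $\psi^{\mathrm{GR}} = 0.2674$, and moving $\psi$ toward either end of the interval lowers the SQNR. This mirrors the sensitivity to $\psi$ discussed above.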