1. Introduction

Recently, fractional differential equations have become an active research area due to their applications in a wide range of fields including mechanics, computer science, and biology [1,2,3,4]. Several kinds of fractional derivatives, e.g., Caputo, Riemann–Liouville, and Riesz, have been studied extensively in the literature. The Hadamard fractional derivative is also important and is used to model various physical problems [5,6,7,8,9,10,11].

The Hadamard fractional derivative was suggested as early as 1892 [12]. More recently, a Caputo-type modification of the Hadamard derivative, known as the Caputo–Hadamard derivative, was suggested [8]. The aim of this paper is to study and analyze some useful numerical methods for solving Caputo–Hadamard fractional differential equations with graded and non-uniform meshes under weak smoothness assumptions on the Caputo–Hadamard fractional derivative, e.g.,

with

.

We thus consider the following Caputo–Hadamard fractional differential equation, with

, [8]

where

is a nonlinear function with respect to

, and the initial values

are given and

. Here the fractional derivative

denotes the Caputo–Hadamard derivative defined by

with

and where

denotes the smallest integer greater than or equal to

[8].
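As a numerical illustration of this definition, the following minimal sketch evaluates the Caputo–Hadamard derivative for an order between 0 and 1. It assumes the standard form of the definition, namely the integral of (log(t/s))^(-alpha) times s y'(s) against ds/s over [a, t], divided by Gamma(1 - alpha). The function name `caputo_hadamard`, its parameters, and the choice of Gauss–Legendre quadrature are illustrative assumptions and do not come from the paper.

```python
import math
import numpy as np

def caputo_hadamard(delta_y, t, alpha, a=1.0, nodes=40):
    """Approximate the Caputo-Hadamard derivative of order 0 < alpha < 1 at t.

    delta_y(s) must return s * y'(s) and accept a NumPy array.
    Assumed form: (1/Gamma(1-alpha)) * int_a^t (log(t/s))^(-alpha) * delta_y(s) ds/s.
    """
    U = math.log(t / a)
    # With u = log(s/a), the integral is int_0^U (U-u)^(-alpha) g(u) du.
    # Substituting v = (U-u)^(1-alpha) removes the weak singularity at u = U.
    V = U ** (1.0 - alpha)
    x, w = np.polynomial.legendre.leggauss(nodes)
    v = 0.5 * V * (x + 1.0)                 # map nodes from [-1, 1] to [0, V]
    u = U - v ** (1.0 / (1.0 - alpha))
    s = a * np.exp(u)
    integral = 0.5 * V * np.dot(w, delta_y(s)) / (1.0 - alpha)
    return integral / math.gamma(1.0 - alpha)

# Check against a known closed form: for y(t) = (log t)^2 with a = 1,
# the derivative of order alpha is Gamma(3)/Gamma(3-alpha) * (log t)^(2-alpha).
approx = caputo_hadamard(lambda s: 2.0 * np.log(s), 2.0, 0.5)
exact = math.gamma(3.0) / math.gamma(2.5) * math.log(2.0) ** 1.5
print(abs(approx - exact))
```

The change of variable is only one way to handle the weakly singular kernel; a product-integration rule in the logarithmic variable would serve equally well.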

To ensure that (1) has a unique solution, we assume that the function f is continuous and satisfies the following Lipschitz condition with respect to the second variable y [7,13]

For recent existence and uniqueness results for Caputo–Hadamard fractional differential equations, we refer the reader to [14,15,16] and the references therein.

It is well known that Equation (1) is equivalent to the following Volterra integral equation, with

, [5]

Let us review some numerical methods for solving (1). Gohar et al. [7] studied the existence and uniqueness of the solution of (1) and considered Euler and predictor-corrector methods. Gohar et al. [13] further considered the rectangular, trapezoidal, and predictor-corrector methods for solving (1) with uniform meshes under a smoothness assumption on the fractional derivative, e.g.,

with

. There are also some numerical methods for solving Caputo–Hadamard time fractional partial differential equations [7,17]. In this paper, we shall assume that

with

and assume that

behaves as

with

which implies that the derivatives of

have the singularities at

. In such a case, we cannot expect numerical methods with uniform meshes to attain the optimal convergence orders. To obtain the optimal convergence orders, we shall use graded and non-uniform meshes, as in Liu et al. [18,19] for solving Caputo fractional differential equations. We shall show that the predictor-corrector method has the optimal convergence orders with the graded meshes

for some suitable

. We also show that the rectangular and trapezoidal methods attain the optimal convergence orders with some non-uniform meshes

.

For recent work on numerical methods for solving fractional differential equations with graded and non-uniform meshes, we refer to [17,20,21,22]. In particular, Stynes et al. [23,24] applied a graded mesh in a finite difference method for solving subdiffusion equations whose solutions are not sufficiently smooth. Liu et al. [18,19] applied a graded mesh for solving Caputo fractional differential equations by a fractional Adams method, under the assumption that the solution is not sufficiently smooth. The aim of this work is to extend the ideas of Liu et al. [18,19] for Caputo fractional differential equations to Caputo–Hadamard fractional differential equations.

The paper is organized as follows. In Section 2 we consider the error estimates of the predictor-corrector method for solving (1) with graded meshes. In Section 3 we consider the error estimates of the rectangular and trapezoidal methods for solving (1) with non-uniform meshes. In Section 4 we provide several numerical examples which support the theoretical conclusions of Section 2 and Section 3.

Throughout this paper, we denote by C a generic constant depending on , but independent of and N, which could be different at different occurrences.

2. Predictor-Corrector Method with Graded Meshes

In this section, we shall consider the error estimates of the predictor-corrector method for solving (1) with graded meshes. We first recall the following smoothness properties of the solutions to (1).

Theorem 1 ([25]). Let . Assume that where G is a suitable set. Define . Then there exists a function and some constants such that the solution y of (1) can be expressed in the following form

An example of this would be when

. We would have

and

This implies that the solution

y of (

1) would behave as

. As such the solution

.

Theorem 2 ([25]). If for some and , then where and with .

With the above two theorems, we can see that if one of y and is sufficiently smooth, then the other will not be sufficiently smooth unless some special conditions are met.

Recall that, by (3), the solution of (1) can be written in the following form, with

and

,

Therefore it is natural to introduce the following smoothness assumptions for the fractional derivative

in (1).

Assumption 1. Let and . Let y be the solution of (1). Assume that can be expressed as a function of , that is, there exists a smooth function such that

Further we assume that satisfies the following smoothness assumptions, with ,

where and denote the first- and second-order derivatives of , respectively. Hence assumption (6) is equivalent to, with ,

which is similar to the smoothness assumptions given in Liu et al. [18] for the Caputo fractional derivative .

Remark 1. Assumption 1 gives the behavior of near and implies that has a singularity near . It is obvious that . For example, we may choose with .

Let N be a positive integer and let

be the partition on

. We define the following graded mesh on

with

such that, with

,

which implies that

When

we have

. Further we have

Denote

the approximation of

. Let us introduce the different numerical methods for solving (3) with

below. Similarly we may define the numerical methods for solving (3) with

. The fractional rectangular method for solving (3) is defined as

where the weights

are defined as

The fractional trapezoidal method for solving (3) is defined as

where the weights

for

are defined as

The predictor-corrector Adams method for solving (3) is defined as, with

,

where the weights

and

are defined as above.
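The construction above can be sketched in code for an order between 0 and 1. The sketch below is not the authors' implementation. It assumes the graded mesh is the usual one in the logarithmic variable, log(t_j/a) = (j/N)^r log(T/a), that the predictor uses the rectangular weights (piecewise constant interpolation of f in log s), and that the corrector uses the trapezoidal weights (piecewise linear interpolation in log s). The names `graded_log_mesh` and `predictor_corrector` are hypothetical.

```python
import math
import numpy as np

def graded_log_mesh(a, T, N, r):
    """Nodes t_0 = a < ... < t_N = T with log(t_j/a) = (j/N)^r * log(T/a) (assumed form)."""
    j = np.arange(N + 1)
    return a * (T / a) ** ((j / N) ** r)

def predictor_corrector(f, y_a, alpha, t):
    """Fractional Adams-type predictor-corrector sketch for 0 < alpha < 1."""
    u = np.log(t)                       # work in the logarithmic variable
    N = len(t) - 1
    y = np.empty(N + 1)
    fv = np.empty(N + 1)
    y[0], fv[0] = y_a, f(t[0], y_a)
    g = math.gamma(alpha)
    for n in range(N):
        A = u[n + 1] - u[:n + 1]        # u_{n+1} - u_j,     j = 0..n
        B = u[n + 1] - u[1:n + 2]       # u_{n+1} - u_{j+1}, j = 0..n
        h = u[1:n + 2] - u[:n + 1]
        I0 = (A**alpha - B**alpha) / alpha                    # kernel integral
        I1 = (A**(alpha + 1) - B**(alpha + 1)) / (alpha + 1)  # first moment
        left = (I1 - B * I0) / h        # trapezoidal weight on f_j
        right = (A * I0 - I1) / h       # trapezoidal weight on f_{j+1}
        # Predictor: rectangular rule using the known values f_0..f_n.
        y_pred = y_a + np.dot(I0, fv[:n + 1]) / g
        # Corrector: trapezoidal rule, with f at t_{n+1} taken at the predictor.
        hist = np.dot(left, fv[:n + 1]) + np.dot(right[:-1], fv[1:n + 1])
        y[n + 1] = y_a + (hist + right[-1] * f(t[n + 1], y_pred)) / g
        fv[n + 1] = f(t[n + 1], y[n + 1])
    return y

# Illustrative test problem (not from the paper): exact solution y = (log t)^2, a = 1.
alpha, beta = 0.5, 2.0
c = math.gamma(beta + 1) / math.gamma(beta - alpha + 1)
rhs = lambda t, y: c * math.log(t) ** (beta - alpha) + math.log(t) ** beta - y
t = graded_log_mesh(1.0, 2.0, 128, 1.0)
y = predictor_corrector(rhs, 0.0, alpha, t)
print(np.max(np.abs(y - np.log(t) ** beta)))
```

Setting r = 1 gives a mesh that is uniform in log t, and r > 1 clusters the nodes near t = a, where the singular behavior is assumed to occur.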

If we assume that satisfies Assumption 1, we shall prove the following error estimate.

Theorem 3. Assume that satisfies Assumption 1. Further assume that and are the solutions of (3) and (13), respectively. If , then we have

Proof of Theorem 3

In this subsection, we prove Theorem 3, starting with some preliminary lemmas. Lemma 1 gives the error estimate between and the piecewise linear function for both and . This is used to estimate one of the terms in the main proof.

Lemma 1. Assume that satisfies Assumption 1

If , then we have If , then we have where is the piecewise linear function defined by,

Proof. Note that, with

,

Note that, with

,

which implies that, by Assumption 1,

Note that there exists a constant

such that

which follows from

Thus we have, for

,

For

we have, with

and

,

where we have used the following fact, with

,

which can be seen easily by noting

and (

7).

By Assumption 1 and by using [24] (Section 5.2), we have, with

,

where

denotes the ceiling function defined as before. For each of these integrals we shall consider the cases when

and when

.

For

, when

, we have, with

,

Note that, with

,

and

Case 1. If

, we have

Case 2. If

, we have

Case 3. If

, we have

Thus, we have that for

Next we consider the case when

, we have, with

,

Thus, we have that for

,

For

, we have, noting that with

,

which implies that

Note that

we get, with

and

,

For

, we have, with

,

By Assumption 1, we then have, with

,

Obviously the bound for is stronger than the bound for . Together these estimates complete the proof of this lemma. □

In Lemma 2 below, we state that the weights and are positive for all values of j.

Lemma 2. Let . We have

where are the weights defined in (12), where are the weights defined in (10).

Proof. The proof is straightforward, so we omit it. □

Lemma 3 gives an upper bound for , which is used in the main proof when addressing the term.

Lemma 3. Let . We have, with , where is defined in (12).

Proof. We have, by (12), with

,

□

Lemma 4 gives the error estimate between and the piecewise constant function for both and . This is used to estimate one of the terms in the main proof.

Lemma 4. Assume that satisfies Assumption 1.

If , then we have

where is the piecewise constant function defined as below, with

Proof. The proof is similar to the proof of Lemma 1. Note that

For

, by Assumption 1, we have

For

, we have, with

,

For

, if

, then we have, with

,

Note that

for any

. Hence, we have

Noting that, with

,

we have, with

,

For

, we have, with

,

Together these estimates complete the proof of this Lemma. □

Lemma 5 gives an upper bound for the sums of the weights, which is used in the main proof to simplify several terms.

Lemma 5. Let . There exists a positive constant C such that

where and are defined by (12) and (10), respectively.

Proof. We only prove (21). The proof of (22) is similar. Note that

where

is the remainder term. Let

, we have

Thus, (21) follows from the fact

in Lemma 2. □
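The positivity of the weights and the boundedness of their sums can also be observed numerically. The sketch below assumes an order between 0 and 1, the graded mesh log(t_j/a) = (j/N)^r log(T/a), and weights obtained by piecewise constant (rectangular) and piecewise linear (trapezoidal) interpolation in log s. Under these assumptions both sets of weights sum to (log(t_{n+1}/a))^alpha / Gamma(alpha + 1), which is bounded independently of N. The function names are hypothetical and the check is an illustration, not a substitute for the proofs.

```python
import math
import numpy as np

def graded_log_mesh(a, T, N, r):
    """Nodes with log(t_j/a) = (j/N)^r * log(T/a) (assumed form of the graded mesh)."""
    j = np.arange(N + 1)
    return a * (T / a) ** ((j / N) ** r)

def quadrature_weights(u, n, alpha):
    """Rectangular weights b_j (j = 0..n) and trapezoidal weights w_j (j = 0..n+1)
    for the node u[n+1], where u = log(t)."""
    A = u[n + 1] - u[:n + 1]
    B = u[n + 1] - u[1:n + 2]
    h = u[1:n + 2] - u[:n + 1]
    I0 = (A**alpha - B**alpha) / alpha
    I1 = (A**(alpha + 1) - B**(alpha + 1)) / (alpha + 1)
    b = I0 / math.gamma(alpha)
    w = np.zeros(n + 2)
    w[:n + 1] += (I1 - B * I0) / h      # contribution to the left node of each cell
    w[1:] += (A * I0 - I1) / h          # contribution to the right node of each cell
    return b, w / math.gamma(alpha)

alpha, N = 0.5, 32
u = np.log(graded_log_mesh(1.0, 2.0, N, 2.0))
for n in range(N):
    b, w = quadrature_weights(u, n, alpha)
    bound = (u[n + 1] - u[0]) ** alpha / math.gamma(alpha + 1)
    print(n, b.min() > 0, w.min() > 0, b.sum() - bound, w.sum() - bound)
```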

We will now use the above lemmas to prove the error estimates of Theorem 3.

Proof of Theorem 3. Subtracting (13) from (3), we have

The term I is estimated by Lemma 1. For II, we have, by Lemma 2 and the Lipschitz condition for f,

For

, we have, by Lemma 2 and the Lipschitz condition for f,

The term

is estimated by Lemma 4. For

, we have, by Lemma 2,

The rest of the proof is exactly the same as the proof of [18] (Theorem 1.4). The proof of Theorem 3 is complete. □

4. Numerical Examples

In this section, we will consider some numerical examples to confirm the theoretical results obtained in the previous sections. For simplicity, all the examples below will take . All the following results may be adapted for all .

Example 1. Consider the following nonlinear fractional differential equation, with and ,where The exact solution of this equation is

. We will be solving Example 1 over the interval

. Let N be a positive integer and let

be the graded mesh on the interval

. This mesh is defined as

for

with

. Therefore, we have by Theorem 3,

In Table 1 we report the maximum absolute error and experimental order of convergence (EOC) for the predictor-corrector method at varying

and

N values. For our different

, we have chosen N values as

. For this example we have taken

. The maximum absolute errors

were obtained with respect to N, and we calculate the experimental order of convergence (EOC) as

As we can see, the EOCs for this example are almost

as predicted by Theorem 3. Since the solution of the fractional ordinary differential equation (FODE) is sufficiently smooth, any value of r gives the optimal convergence order above. As we are using

, the mesh is uniform, so these results can be compared with those of the methods introduced by Gohar et al. [13]. We obtain similar results.
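The experimental order of convergence can be computed from a table of errors as in the following sketch. It assumes the usual definition, EOC = log(e_{N1}/e_{N2}) / log(N2/N1), which reduces to log2(e_N/e_{2N}) when N is doubled. The function name `eoc` and the sample numbers are purely illustrative and are not taken from the tables of this paper.

```python
import math

def eoc(errors, Ns):
    """Experimental orders of convergence between consecutive mesh sizes.

    errors[i] is the maximum absolute error obtained with Ns[i] subintervals.
    """
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(Ns[i + 1] / Ns[i])
        for i in range(len(errors) - 1)
    ]

# Synthetic errors behaving like C * N^(-2): every EOC is close to 2.
Ns = [10, 20, 40, 80]
errors = [3.0 * N ** -2.0 for N in Ns]
print(eoc(errors, Ns))
```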

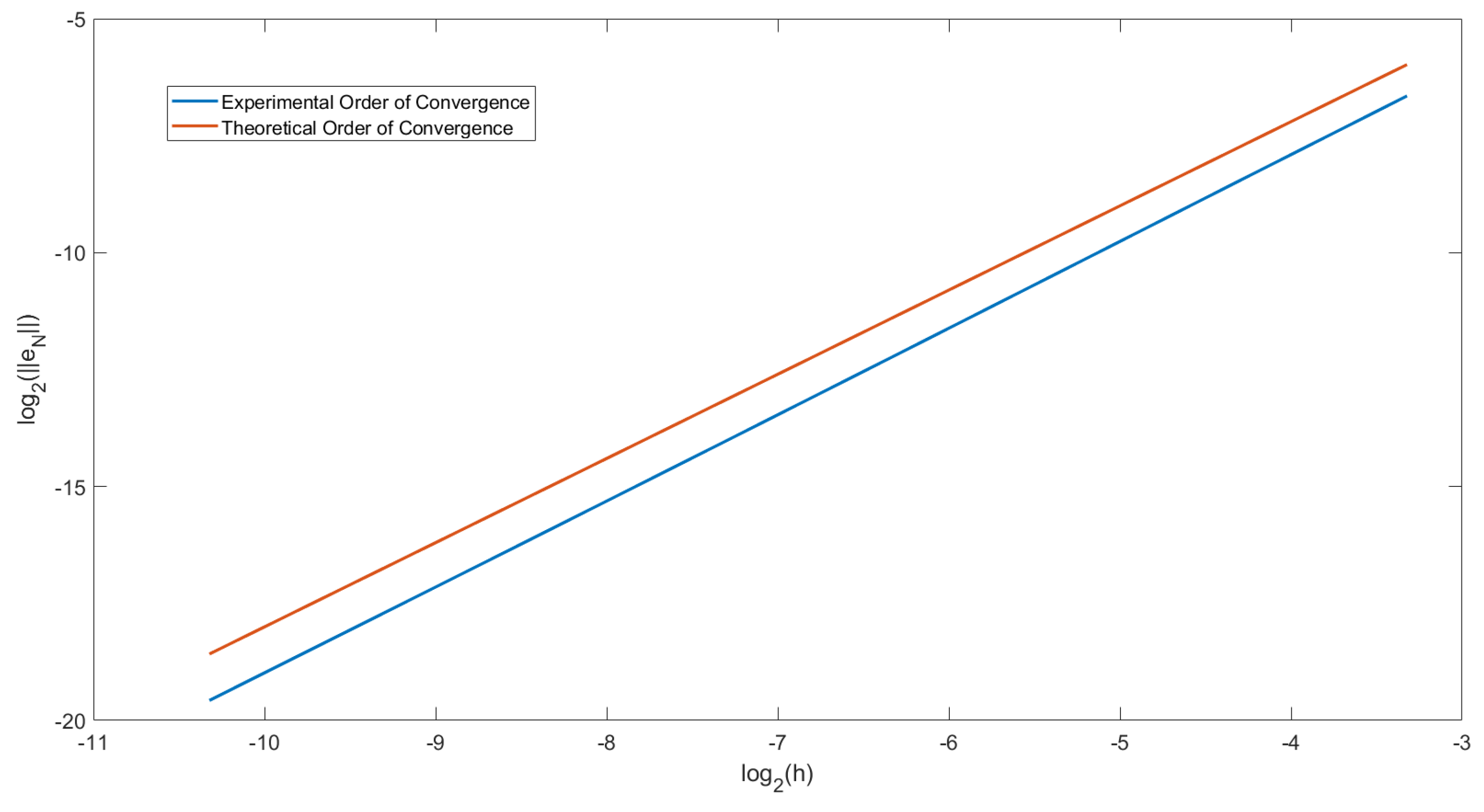

In Figure 1, we have plotted the order of convergence for Example 1. From Equation (24) we have, with

,

Let

and let

. We then plot y against x for

. The gradient of this graph equals the EOC. To compare it with the theoretical order of convergence, we have also plotted the straight line

. For Figure 1 we choose

. We can observe that the two lines drawn are parallel. Therefore we can conclude that the order of convergence of this predictor-corrector method is

.
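The gradient of such a log-log plot can be estimated by a least-squares fit, as in the sketch below. It assumes x is the logarithm of N and y is the logarithm of the maximum error. With that convention the fitted slope is the negative of the convergence order, and the base of the logarithm does not affect the slope. The function name `loglog_slope` and the synthetic data are illustrative assumptions.

```python
import numpy as np

def loglog_slope(Ns, errors):
    """Least-squares slope of log10(error) against log10(N)."""
    x = np.log10(np.asarray(Ns, dtype=float))
    y = np.log10(np.asarray(errors, dtype=float))
    slope, _intercept = np.polyfit(x, y, 1)
    return slope

# Synthetic errors behaving like C * N^(-1.5): the fitted slope is close to -1.5,
# so the error line is parallel to the reference line y = -1.5 * x.
Ns = [20, 40, 80, 160]
errors = [3.0 * N ** -1.5 for N in Ns]
print(loglog_slope(Ns, errors))
```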

Example 2. Consider the following nonlinear fractional differential equation, with and ,where We will be solving Example 2 over the interval

. The exact solution of this equation is

and

. This implies that the regularity of

behaves as

. This means that

satisfies Assumption 1. We will be using the same graded mesh as in Example 1. Therefore, we have by Theorem 3, with

,

In Table 2, Table 3 and Table 4 we report the EOC for the predictor-corrector method with varying values of

and with

r values at

and

. With a fixed

we obtain the EOC and maximum absolute error for increasing values of N. We can see that the EOCs are almost

when

and the EOC are almost

when

.

When

, the mesh is uniform and the EOC obtained agrees with that obtained by Gohar et al. [13]. Comparing these with the results for the graded mesh when

we can see that a higher EOC is obtained and the optimal order of convergence is recovered.

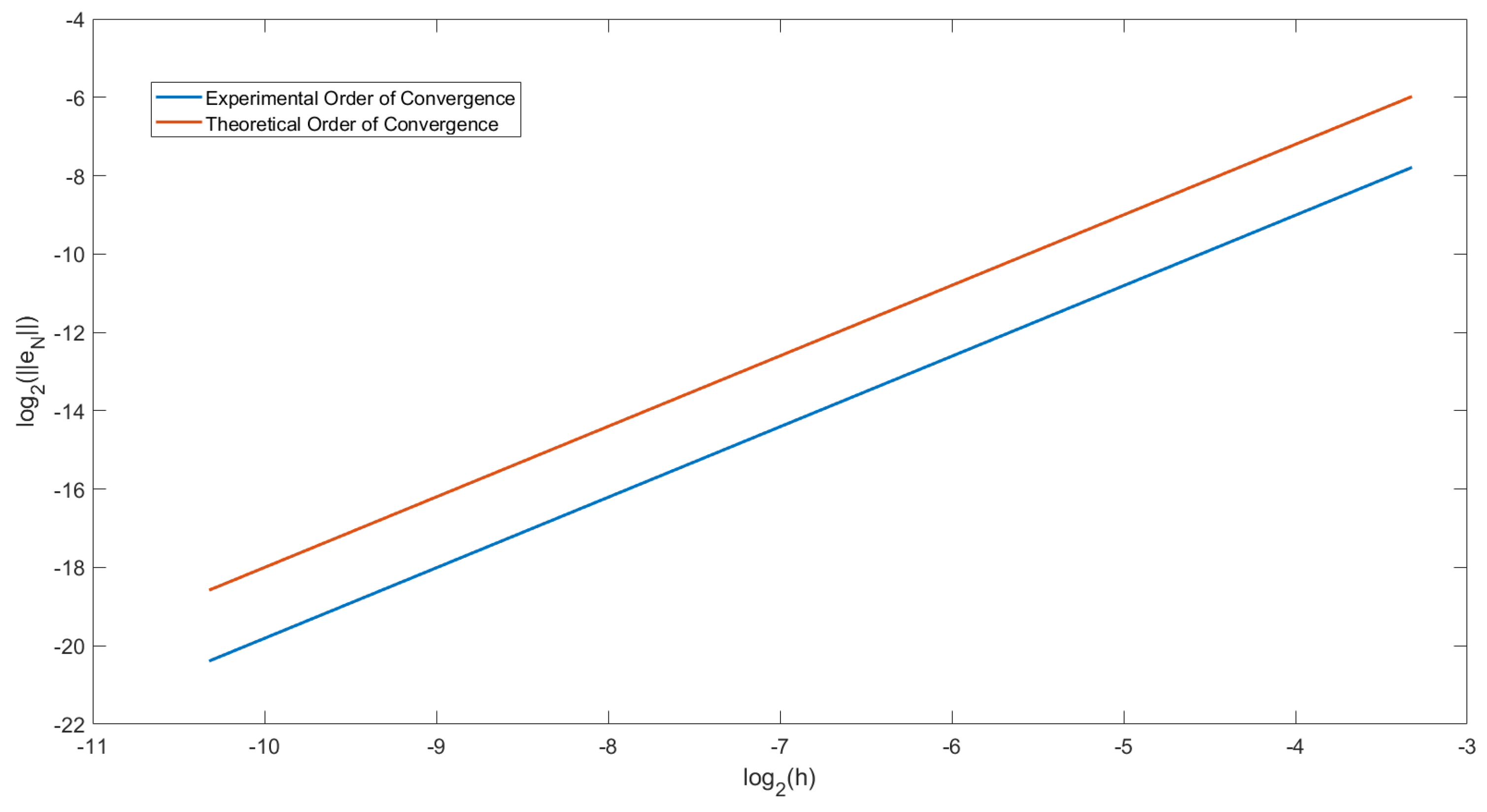

In Figure 2, we have plotted the order of convergence for Example 2 when

and

. This plot is constructed in the same way as Figure 1. We have also plotted the straight line

. We can observe that the two lines drawn are parallel. Therefore we can conclude that the order of convergence of this predictor-corrector method is

.

Example 3. Consider the following nonlinear fractional differential equation, with and , The exact solution of this FODE is

. Therefore

, where

is defined as the Mittag–Leffler function

This shows that

behaves as

This means that

satisfies Assumption 1. Therefore, with

, we have by Theorem 3,

We solve this equation on the same graded mesh as in Example 1 with varying r values. In Table 5, Table 6 and Table 7, we report the EOC and maximum absolute error for increasing N values and with

r values at

and

. The experimental orders of convergence are shown to be almost

if we choose

and almost

if we choose

. Once again, using a graded mesh with the optimal r value gives a higher order of convergence than that obtained with the uniform mesh at

.
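For readers who wish to evaluate the Mittag–Leffler function appearing in this example, the following minimal sketch sums the defining power series of the two-parameter function, E_{alpha,beta}(z) = sum over k of z^k / Gamma(alpha k + beta). The two-parameter form, the function name `mittag_leffler` and the truncation rule are assumptions made for illustration. A truncated series is only suitable for arguments of moderate size, and dedicated algorithms are preferable for large |z|.

```python
import math

def mittag_leffler(alpha, beta, z, max_terms=200):
    """Truncated power series for the two-parameter Mittag-Leffler function."""
    total = 0.0
    for k in range(max_terms):
        arg = alpha * k + beta
        if arg > 170.0:          # math.gamma overflows a double beyond about 171
            break
        total += z ** k / math.gamma(arg)
    return total

# Sanity checks against classical special cases:
# E_{1,1}(z) = exp(z) and E_{2,1}(-z^2) = cos(z).
print(mittag_leffler(1.0, 1.0, 1.0) - math.e)
print(mittag_leffler(2.0, 1.0, -1.0) - math.cos(1.0))
```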

In Figure 3, we have plotted the order of convergence for Example 3 when

and

. This plot is constructed in the same way as Figure 1. We have also plotted the straight line

. We can observe that the two lines drawn are parallel. Therefore we can conclude that the order of convergence of this predictor-corrector method is

for a suitable graded mesh ratio r.

Example 4. In this example we will be applying the rectangular and trapezoidal methods for solving (27). Let N be a positive integer and let be the graded mesh on the interval for . We will be using and . In

Table 8, we have calculated the EOC and maximum absolute error with respect to increasing N values and with

for the rectangular method. By once again using the fact that

and applying Theorem 4 we can say

The experimental orders of convergence are shown to be almost

if we choose

and almost

if we choose

. This confirms the theoretical error estimates obtained in Section 3. In Table 9, we have used the same method to solve (27) but with the uniform mesh. This shows that a larger EOC is achieved with a non-uniform mesh than with a uniform mesh.
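A rectangular-type computation of this kind can be sketched as follows for an order between 0 and 1. The sketch assumes the explicit form of the fractional rectangular method, in which f is frozen at the left endpoint of each subinterval, and the graded mesh log(t_j/a) = (j/N)^r log(T/a). Both are stated here as assumptions, and the names `graded_log_mesh` and `fractional_rectangular` are hypothetical. The right-hand side used below is a toy problem, not Equation (27).

```python
import math
import numpy as np

def graded_log_mesh(a, T, N, r):
    """Nodes with log(t_j/a) = (j/N)^r * log(T/a) (assumed form of the graded mesh)."""
    j = np.arange(N + 1)
    return a * (T / a) ** ((j / N) ** r)

def fractional_rectangular(f, y_a, alpha, t):
    """Explicit fractional rectangular method sketch for 0 < alpha < 1."""
    u = np.log(t)
    N = len(t) - 1
    y = np.empty(N + 1)
    y[0] = y_a
    fv = np.empty(N)
    for n in range(N):
        fv[n] = f(t[n], y[n])
        A = u[n + 1] - u[:n + 1]
        B = u[n + 1] - u[1:n + 2]
        # b_j = [(log(t_{n+1}/t_j))^alpha - (log(t_{n+1}/t_{j+1}))^alpha] / Gamma(alpha+1)
        b = (A**alpha - B**alpha) / math.gamma(alpha + 1)
        y[n + 1] = y_a + np.dot(b, fv[:n + 1])
    return y

# Toy problem: f = 1 with y(a) = 0 has exact solution (log(t/a))^alpha / Gamma(alpha+1),
# which this rule reproduces up to rounding on any mesh.
alpha = 0.6
t = graded_log_mesh(1.0, 2.0, 20, 2.0)
y = fractional_rectangular(lambda s, v: 1.0, 0.0, alpha, t)
print(np.max(np.abs(y - np.log(t) ** alpha / math.gamma(alpha + 1))))
```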

In

Table 10, we have calculated the EOC and maximum absolute error with respect to increasing N values and with

for the trapezoidal method. By once again using the fact that

and applying Theorem 4 we can say

The experimental orders of convergence are shown to be almost

if we choose

and almost

if we choose

. This confirms the theoretical error estimates obtained in Section 3. In Table 11, we have used the same method to solve (27) but with the uniform mesh. This shows that a larger EOC is achieved with a graded mesh than with a uniform mesh.