Convergence Analysis and Dynamical Nature of an Efficient Iterative Method in Banach Spaces

Abstract

1. Introduction

2. Local Convergence

3. Generalized Method

3.1. Order of Convergence

3.2. Local Convergence

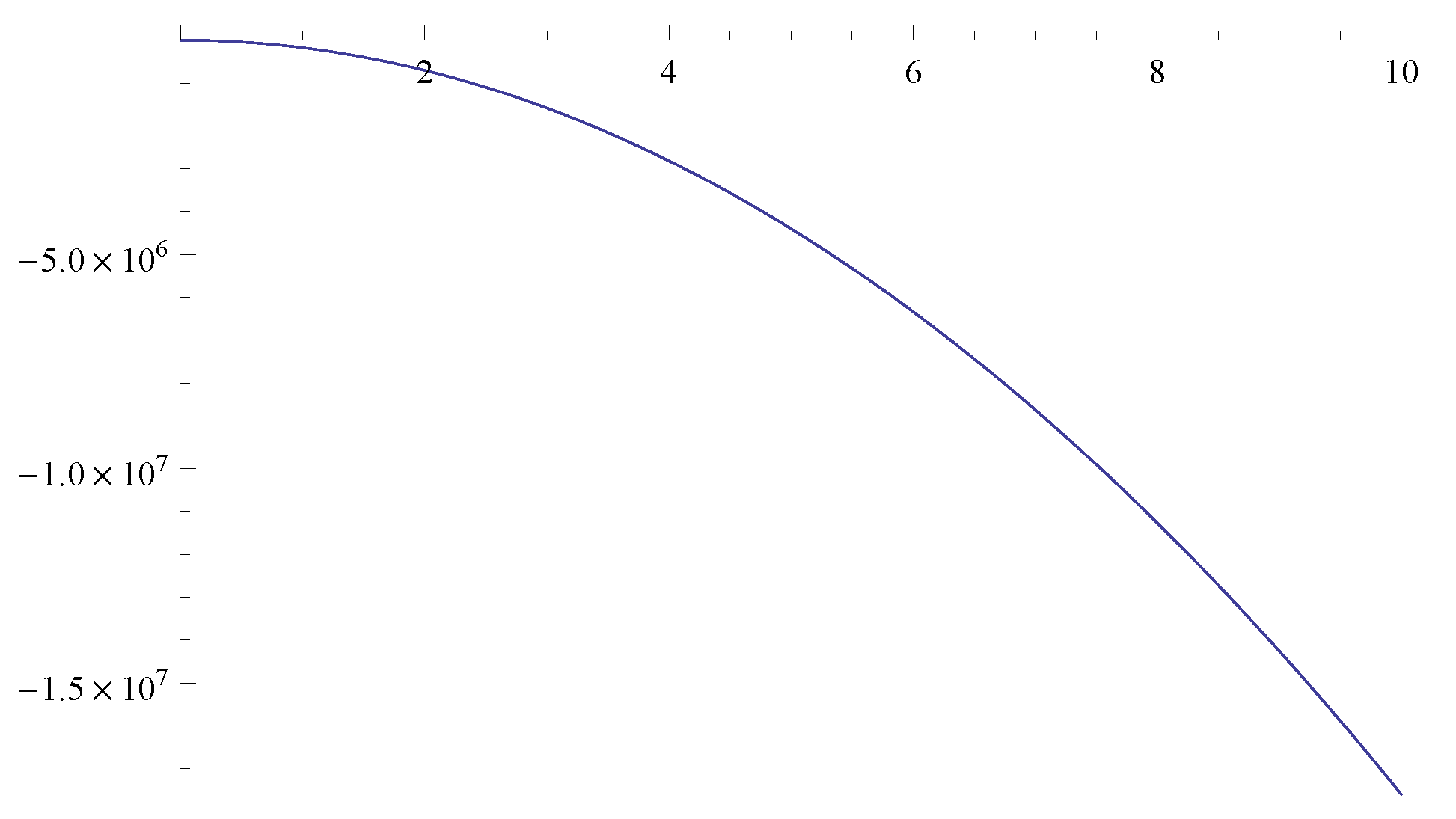

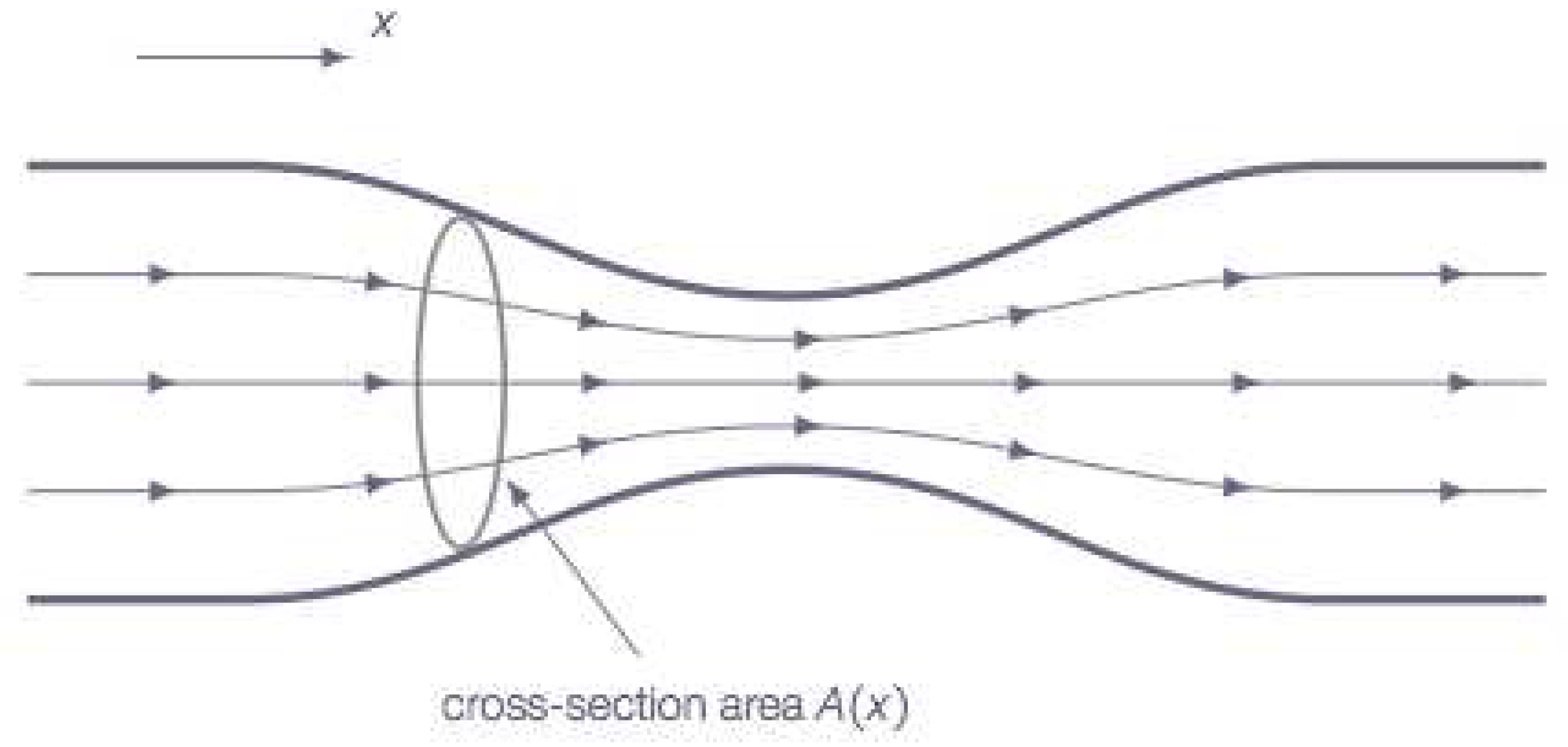

4. Numerical Examples

5. Study of Complex Dynamics of the Method

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| S. No. | Test Problems | Roots | Color of Fractal | Best Performer | Poor Performer |
|---|---|---|---|---|---|
| 1 | | | red | | |
| | | 2 | green | | |
| 2 | | | red | | |
| | | 0 | green | | |
| | | 1 | blue | | |
| 3 | | | cyan | | |
| | | i | yellow | | |
| | | | purple | | |
| | | i | blue | | |
| | | i | green | | |
| | | i | red | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kumar, D.; Kumar, S.; Sharma, J.R.; Jantschi, L. Convergence Analysis and Dynamical Nature of an Efficient Iterative Method in Banach Spaces. Mathematics 2021, 9, 2510. https://doi.org/10.3390/math9192510