1. Introduction

Neural networks (NNs) have been successfully applied in classification and recognition problems, but their performance in time-series forecasting has not been so well established [1]. Despite their two most appealing features, namely non-parametric specification and universal approximation, their main drawback is overfitting [2,3]. This means that neural networks produce almost perfect fitted values but insufficiently precise forecasts. To overcome this problem, a user should understand the structure and mechanism of neural networks. Therefore, the purpose of this paper is to explain in detail a neural network modeling strategy, which consists of specification, estimation and determination of forecasting accuracy. Recommending an appropriate modeling strategy is of great interest to users applying neural networks in time-series forecasting. The correct specification of neural network type and structure was the underlying motive of this research.

Expected inflation is considered an appropriate forecasting subject for several reasons. The implementation of fundamental economic relationships such as the Phillips curve, Fisher’s equation and the Taylor rule strongly depends on expected inflation. Future inflation expectations are embedded in current activities, e.g., households will increase their present consumption if they expect prices to rise, and vice versa. If consumers share the same expectations, they will push aggregate demand up and create inflationary pressures. Besides, a change in expected inflation shifts aggregate supply through the setting of nominal wages in negotiations between employees and employers. Additionally, the nonlinear behavior of inflation itself, as well as its nonlinear dependence on other relevant variables, are the main reasons for applying neural networks as a forecasting tool [4]. It should be noted that expected inflation can be measured as the respondents’ perception of future price movements obtained from survey studies, as market-based expected inflation obtained as the “break-even” rate derived from Treasury inflation-protected securities (TIPS), or as a prediction computed from forecasting models. Therefore, what we forecast is what we expect. Expected inflation, although unobservable, matters because actual inflation significantly depends on it. A survey-based measure of expected inflation obtained from professional forecasters will serve as a benchmark for an “out-of-sample” comparison between neural networks and other model-based competitors. This makes it possible to determine whether a specific neural network model captures expected inflation within a given forecasting horizon better than other commonly used models. The survey-based and the most appropriate model-based measures of expected inflation are also compared with ex-post actual inflation. Thus, the following research question was posed: “Can recurrent neural networks predict inflation in the euro zone as well as professional forecasters?” If the results answer this question affirmatively, then neural networks can be a helpful tool for managing monetary policy in countries with inflation targeting, as their central banks’ decisions strongly depend on expected inflation.

Although various macroeconomic models have been used to obtain analytical support for measuring inflation expectations, they differ in size, purpose, degree of reliance on fundamentals, and treatment of expectations (adaptive or rational). A comprehensive review of the models employed by central banks to predict inflation in the euro zone is given in [5]. Regardless of whether model-based or survey-based measures are used, or whether a single equation or a system of equations is applied, these models rely on the assumptions of linear dependence and normality, which are unlikely to be met in practical applications, especially in periods of uncertain events and frequent changes.

Nonlinear models, particularly non-parametric ones, are less common in empirical studies. Parametric nonlinear models allow parameters to vary across multiple sub-periods to capture nonlinearity, see, e.g., [6,7]. However, the functional form of nonlinearity, as well as the regime states with respect to the threshold, must be determined ad hoc. When the functional form of nonlinearity is unknown or the dependent variable does not have the required properties, a more suitable approach should be considered, in particular, neural networks. Feedforward neural networks (FNNs) are the most widespread among users [8] despite the overfitting problem, which can be reduced and more easily controlled by adding recurrent connections from the output layer to the input layer. This type of recurrent neural network is known in the literature as the Jordan neural network [9]. In this paper, a single hidden layer Jordan neural network (JNN) is considered as a competing alternative that may predict inflation as well as professional forecasters. The main advantage of the JNN over other neural networks is that, owing to its recurrent connections, it requires fewer neurons in the hidden layer [10], and the same recurrent structure makes it suitable for forecasting nonstationary time-series with long memory [11]. In specific cases where a JNN provides satisfactory results, the corresponding FNN requires more hidden neurons to achieve at least a similar performance. JNNs are of special interest to practitioners as well as academics because they can achieve the same fit with fewer trained weights associated with hidden neurons. By the parsimony principle, this also means that a JNN is easier to train while additionally having better forecasting performance. Nevertheless, specifying the JNN structure is not straightforward, and it should not be arbitrary.

This paper makes a considerable contribution to the existing studies in several ways. Firstly, the Jordan neural network is employed as a competing alternative for forecasting inflation (measuring expected inflation), because its recurrent structure makes it possible to control the overfitting problem. In particular, a modeling strategy is proposed that is convenient when dealing with nonstationary time-series exhibiting the long memory property and nonlinear dependence with respect to lagged inputs or other exogenous inputs. Secondly, a set of additional exogenous inputs was found that helps to reduce the overfitting problem even further. Thus, this paper contributes not only from the modeling perspective, but also from the economic perspective, as it considers the first and the second pillars of the European Central Bank (ECB) monetary policy, i.e., economic and monetary analysis, assuming rational expectations that incorporate demand-pull and cost-push factors. Thirdly, forecasts from the JNN are compared against forecasts obtained from commonly used linear and nonlinear models to demonstrate their superiority. In that context, the robustness of JNN forecasts with respect to the number of hidden neurons, the number of inputs and the context unit settings is established. JNN forecasts are also compared with those of the Survey of Professional Forecasters (SPF) in order to find out whose predictions are more accurate.

The rest of the paper is structured as follows. Section 2 summarizes and critically reviews previous studies. Section 3 describes the modeling strategy and network setup and presents the output and input data. Section 4 provides the empirical findings and a discussion. Finally, conclusions and future research directions are presented in Section 5.

2. Previous Studies

Most previous studies have focused on inflation forecasting using ARIMA, STAR and VAR models, see, e.g., [7,12,13,14]. Some of the studies have compared traditional econometric models against neural networks when forecasting inflation, see, e.g., [15,16,17,18], but only a few of them have considered the recurrent neural network as a competitor among other neural network structures, see, e.g., [10,19,20].

The outperformance of neural networks against univariate autoregressions as well as smooth transition autoregressions is well documented in [16,17,21]. Ref. [15] compared FNNs with the multivariate autoregressions VAR and BVAR. They concluded that FNNs predict inflation with similar precision at 3- and 12-month horizons, but outperform traditional models at the shorter horizon of one month. Ref. [20] demonstrated that an FNN produces better results than ARIMA and VAR models in both data sets, i.e., “in-the-sample” and “out-of-sample”. Ref. [16] investigated the predictive accuracy of AR, STAR, NNAR and FNN models in predicting 47 monthly macroeconomic variables, including inflation, for the G7 countries. In general, none of the methods prevailed over the others, and their appropriateness varied by country, variable and prediction horizon. Ref. [17] used Hybrid and Elman NNs to forecast inflation in 28 OECD countries, and a combination of the two NNs to increase predictive ability. The predictions of the NNs were significantly better than those of the AR(1) models in 45% of the countries, while the AR(1) models were significantly better in 21% of the countries. However, Choudhary and Haider’s research lacks detailed explanations of the NN architecture and does not discuss training and validation issues; the argumentation for the parameter settings is also missing. Ref. [22] used 10 exogenous inputs and concluded that the accuracy of the FNN is satisfactory when compared with forecasts by some prominent institutions, e.g., the OECD and IMF. Ref. [23] concluded that NNs can be useful in forecasting inflation, but that the linear AR model is a serious competitor. Ref. [10] showed that the JNN has a significantly better forecasting performance one and six months ahead compared to the FNN. On the other hand, [18] compared machine learning models, including FNNs, with standard time-series models and concluded that multivariate models produce the most precise results at all horizons and that there is no single best model for forecasting inflation. Namely, NN-type models may be applied to CPI inflation forecasting, while the ARDL model is a better option for other inflation measures. Ref. [24] compared only JNNs with different exogenous inputs and concluded that in most cases the simplest JNNs, i.e., JNNs with a lagged dependent variable and one exogenous regressor, are ranked highest by performance. Ref. [25] concluded that a neural network outperforms some of the benchmark models at longer horizons, and that forecasting accuracy increases in periods of low inflation. Ref. [26] demonstrated that a long short-term memory recurrent network outperforms, among others, a simple fully connected neural network in forecasting the monthly consumer price index. Moreover, [27] concluded that incorporating both the linear and the nonlinear aspects of the time-series, using a hybrid of a seasonal ARIMA model and a long short-term memory recurrent neural network, provides higher accuracy in inflation forecasts.

The major findings of the aforementioned studies support the idea that neural networks can successfully replace various time-series models, that is, they perform as well as, if not better than, both traditional univariate and multivariate models. This is not surprising given their vast flexibility and universal approximation property. However, the neural networks in all these studies differ in the selection of lagged and exogenous inputs, the number of neurons in the hidden layer, the forecasting horizon, the training algorithm, and whether recurrent connections are enabled or not. More importantly, these studies neglect the overfitting issue and do not provide a clear suggestion on how to reduce this problem.

In contrast to model-based studies, several papers have shown that survey-based measures provide better inflation forecasts than any other alternative; e.g., [28] found that survey forecasts outperform model-based ones. Moreover, professional forecasters form expectations that are in line with conventional theories [29,30]. Surveys of consumers (SCs) are not considered in this paper, as consumers’ expectations are higher than those of the SPF, although they incorporate changes in the inflation process relatively quickly. Due to respondents’ heterogeneity, some consumers tend to be more biased and less rational [7,31]. In the short run, the SPF can predict almost all of the variation in the time-series due to the trend and the business cycle, but its forecasts contain little or no significant information about the variation in the irregular component [32], while [33] argues that the SPF is sensitive to other aspects of the environment as well, though this may not always lead to improved forecast accuracy. Finally, [33] concluded that neither households nor professionals were able to perceive the changes in the inflationary process that have occurred in recent years. Therefore, survey-based expectations can be considered a source of information on future inflation that is complementary to inflation forecasts based on econometric models. Market-based measures are also not considered, because the data have only been available since 2006, and not for all countries and markets.

3. Modeling Strategy and Data

The modeling strategy explained in this paper provides better insight into the specification of neural networks, with particular attention paid to reducing the overfitting problem. It leads to the NN structure with the highest predictive ability, which produces the most accurate inflation forecasts. Given the limitations and drawbacks of previous model-based approaches, the need for recurrent neural networks becomes apparent.

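The generalized structure of a dynamic univariate single hidden layer neural network is as follows:

$$\pi_t=\beta_0+\sum_{j=1}^{q}\beta_j\,g\left(\gamma_{j0}+\sum_{i=1}^{p}\gamma_{ji}\,\pi_{t-i}+\boldsymbol{\delta}_j'\mathbf{x}_t+\lambda\,\hat{\pi}_{t-1}\right)+\varepsilon_t \tag{1}$$

The neural network in Equation (1) is univariate, as it includes only one output ($\pi_t$). The notation $\pi_t$ for the inflation time-series is taken as commonly used in the literature. The NN is dynamic, as it includes lagged output values as inputs ($\pi_{t-1},\dots,\pi_{t-p}$) in addition to exogenous inputs (the $m$-dimensional vector $\mathbf{x}_t$, with neuron weights $\boldsymbol{\delta}_j$) and one recurrent connection with respect to the output from the previous stage ($\hat{\pi}_{t-1}$, weighted by $\lambda$). This recurrent term is usually considered a correction term obtained from the previous errors. The weights $\beta_0,\beta_j$ connect the hidden layer to the output, while $\gamma_{j0},\gamma_{ji}$ are the weights of the $j$-th hidden neuron.

The function $g(\cdot)$ is the activation function, which can be understood as a smoothing function, as in any smooth transition model, that is, a link function between the dependent variable (output) and the independent variables (inputs). Smoothed values from the input layer are transmitted to the output layer over only one hidden layer, but with multiple hidden neurons ($j=1,\dots,q$).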

The structure of the NN in Equation (1) fits the Jordan specification of a neural network, that is, JNN$(p, m, q, \lambda)$, where $p$ is the number of lagged inputs and $m$ the number of exogenous inputs. The total number of inputs is $p + m + 1$, the number of hidden neurons is $q$, while $\lambda$ is the context unit, i.e., the weight of the recurrent connection that keeps the content of the output from the previous training stage [9]. It represents the long-term memory of the network [34]. This type of structure is convenient for deriving some special cases. In particular, if $\lambda = 0$, the JNN reduces to the feedforward structure, i.e., FNN$(p, m, q)$. Moreover, if $\lambda$ and $m$ are both zero, it reduces to the neural network autoregression, i.e., NNAR$(p, q)$ according to [3]. Assuming that the link (activation) function is the identity and there is one hidden neuron, NNAR$(p, 1)$ reduces to the most restrictive model, the linear autoregression AR$(p)$. The same restrictions can be employed to perform Teräsvirta’s and White’s nonlinearity tests. Fitted outputs from the JNN are compared to the observed (target) values, and their differences represent the error terms. These terms are then used to update the network weights in the back-propagation stage, that is, the recursive back-propagation (BP) learning algorithm is used to adjust the weights during training until a minimum error is achieved. A recursive algorithm provides consistent and asymptotically normal estimators [35].
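To make the nesting described above concrete, the following Python sketch computes one-step fitted values of a single hidden layer Jordan-type network. It is a minimal sketch under stated assumptions, not the authors' implementation: the context unit is assumed to enter every hidden neuron as the context unit weight (written $\lambda$, `lam`) times the network's previous output, it is assumed to start at zero, and the weight names (`beta0`, `beta`, `gamma0`, `Gamma`, `Delta`) are hypothetical. Setting `lam=0.0` recovers the feedforward case, and an identity activation with one hidden neuron and no exogenous inputs recovers a linear AR(p).

```python
import numpy as np


def logistic(z):
    """Logistic activation, the usual choice for the smoothing function."""
    return 1.0 / (1.0 + np.exp(-z))


def jnn_fitted(pi, X, beta0, beta, gamma0, Gamma, Delta, lam, g=logistic):
    """One-step fitted values of a single hidden layer Jordan-type network (sketch).

    pi: (T,) series; X: (T, m) exogenous inputs (m may be 0);
    beta0: output bias; beta: (q,) output weights;
    gamma0: (q,) hidden biases; Gamma: (q, p) weights on lagged values;
    Delta: (q, m) weights on exogenous inputs;
    lam: context unit weight on the previous network output (assumed placement).
    """
    q, p = Gamma.shape
    T = len(pi)
    fitted = np.full(T, np.nan)
    context = 0.0  # context unit holds the previous output; assumed to start at zero
    for t in range(p, T):
        lags = pi[t - p:t][::-1]  # (pi_{t-1}, ..., pi_{t-p})
        z = gamma0 + Gamma @ lags + Delta @ X[t] + lam * context
        fitted[t] = beta0 + beta @ g(z)
        context = fitted[t]  # copy the output into the context unit for the next step
    return fitted


# Usage with random (illustrative) weights: lam = 0 gives the feedforward special case.
rng = np.random.default_rng(0)
T, p, m, q = 60, 2, 1, 3
pi = rng.normal(size=T).cumsum()
X = rng.normal(size=(T, m))
params = dict(beta0=0.1, beta=rng.normal(size=q), gamma0=rng.normal(size=q),
              Gamma=rng.normal(size=(q, p)), Delta=rng.normal(size=(q, m)))
fnn_like = jnn_fitted(pi, X, lam=0.0, **params)
jnn_like = jnn_fitted(pi, X, lam=0.7, **params)
```

Because the recurrent term only adds a shifted input to each hidden neuron, switching it off leaves an ordinary feedforward network, which is exactly the restriction used to define the nested FNN and NNAR models.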

To be more precise, consider the NNAR$(3, 2)$ model with a logistic smooth function:

$$\pi_t=\beta_0+\frac{\beta_1}{1+\exp\left[-\left(\gamma_{10}+\boldsymbol{\gamma}_1'\mathbf{z}_t\right)\right]}+\frac{\beta_2}{1+\exp\left[-\left(\gamma_{20}+\boldsymbol{\gamma}_2'\mathbf{z}_t\right)\right]}+\varepsilon_t,\qquad \mathbf{z}_t=\left(\pi_{t-1},\pi_{t-2},\pi_{t-3}\right)' \tag{2}$$

According to Equation (2), the neural network is univariate, dynamic and feedforward (no recurrent connection), with three lagged inputs ($p = 3$) and two hidden neurons ($q = 2$). The activation function is set to be logistic, as the most frequent choice in practice [36], while the number of hidden neurons is set by the rule of thumb, i.e., $q = (p+1)/2$ rounded to the nearest integer, according to [3]. Model NNAR$(3, 2)$ is a linear combination of two logistic functions with the same inputs but different neuron weights $\boldsymbol{\gamma}_1$ and $\boldsymbol{\gamma}_2$. These weights, along with the other weights $\beta_0$, $\beta_1$, $\beta_2$, $\gamma_{10}$ and $\gamma_{20}$, are estimated (or trained, in the jargon of artificial intelligence) by the BP algorithm, i.e., a gradient descent method is used to minimize the loss function with respect to the weights, which are updated iteratively by the chain rule [37]. From the econometric perspective, Equation (2) can be considered fully parametric, and the nonlinear least squares (NLS) method can be applied. However, NLS is computationally demanding for a large number of parameters, and there is a high risk of getting stuck in a local minimum when a quasi-Newton algorithm is used. On the other hand, NNs are non-parametric and more flexible, but the number of hidden neurons evidently depends strongly on the number of lagged inputs. The number of hidden neurons increases almost linearly with the number of lagged inputs, which is also the case when a neural network is expanded with additional exogenous inputs. The number of inputs, as well as the number of hidden neurons, should be properly handled to overcome the overfitting problem.
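The rule of thumb, the weight count and the back-propagation (gradient descent) training described above can be sketched in Python with NumPy. This is an illustrative sketch, not the authors' implementation: full-batch gradient descent on the mean squared error is used, and the learning rate, number of epochs, initialisation and helper names (`rule_of_thumb_q`, `n_weights`, `lagged_design`, `train_nnar`) are hypothetical. For three lagged inputs the rule gives two hidden neurons, and the network then has $q(p+1)+q+1 = 11$ weights, i.e., six neuron weights on the lagged inputs plus five bias and output weights.

```python
import numpy as np


def rule_of_thumb_q(n_inputs):
    # (n_inputs + 1) / 2 rounded to the nearest integer (ties rounded up)
    return (n_inputs + 2) // 2


def n_weights(n_inputs, q):
    # hidden layer: q neurons x (inputs + bias); output layer: q weights + bias
    return q * (n_inputs + 1) + q + 1


def lagged_design(y, p):
    # rows (y_{t-1}, ..., y_{t-p}) paired with the target y_t
    Z = np.column_stack([y[p - i:len(y) - i] for i in range(1, p + 1)])
    return Z, y[p:]


def train_nnar(y, p, q, lr=0.05, epochs=3000, seed=0):
    Z, target = lagged_design(np.asarray(y, dtype=float), p)
    n = len(target)
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.5, size=(q, p))  # neuron weights on lagged inputs
    c = np.zeros(q)                         # hidden-neuron biases
    b = rng.normal(scale=0.5, size=q)       # output weights
    b0 = 0.0                                # output bias
    losses = []
    for _ in range(epochs):
        H = 1.0 / (1.0 + np.exp(-(Z @ W.T + c)))  # logistic hidden activations, (n, q)
        err = b0 + H @ b - target
        losses.append(float(np.mean(err ** 2)))
        # back-propagation: chain rule applied to the mean squared error
        d_out = 2.0 * err / n
        d_hid = np.outer(d_out, b) * H * (1.0 - H)
        b0 -= lr * d_out.sum()
        b -= lr * (H.T @ d_out)
        W -= lr * (d_hid.T @ Z)
        c -= lr * d_hid.sum(axis=0)
    return (b0, b, c, W), losses


# Usage on a toy series (not inflation data).
y = np.sin(0.3 * np.arange(200))
q = rule_of_thumb_q(3)  # 2 hidden neurons for three lagged inputs
weights, losses = train_nnar(y, p=3, q=q)
```

The weight count makes the growth problem visible: each extra input adds one weight per hidden neuron, and the rule of thumb also adds hidden neurons as inputs grow, so the number of trained weights rises quickly.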

Thus, this paper proposes using a recurrent neural network, such as the one in Equation (1), in which a recurrent connection from the output layer to the input layer is added, so that the user can control the overfitting problem more easily. In particular, when using the JNN, the upper bound of hidden neurons should be set by the rule of thumb, and afterwards the network should be pruned until no further improvement in forecasting accuracy is achieved on the testing data set (observations “out-of-sample”). Our modeling strategy follows a top-down approach, starting with $q$ hidden neurons and removing one hidden neuron in each step, while ensuring that the context unit weight is higher than 0.5 to preserve the long memory of the network. The proposed modeling strategy is employed in forecasting inflation, for the reasons discussed in the previous sections, to provide empirical evidence. The performance of the JNN is compared against the aforementioned nested models. Moreover, predicted values are compared with the survey-based measure of expected inflation obtained from the ECB’s Survey of Professional Forecasters, as well as with ex-post actual inflation. Although the SPF’s expectations are used here as point forecasts, the probability distributions embedded in the data provide a quantitative assessment of risk and uncertainty.

The remaining issue is the selection of appropriate exogenous inputs, which can also help to reduce the overfitting problem and increase forecasting accuracy. In order to include all aspects of inflation expectations, the characteristics of demand-pull and cost-push inflation are considered, similar to [14,38]. Namely, the vector $\mathbf{x}_t$ in Equation (1) is four-dimensional ($m = 4$), that is, $\mathbf{x}_t=(x_{1t},x_{2t},x_{3t},x_{4t})'$, where $x_{1t}$ represents the monetary expansion rate, $x_{2t}$ is the change in unit labour costs, $x_{3t}$ is the exchange rate depreciation, and $x_{4t}$ is the rate of change of industrial production. Monthly data from January 2000 to December 2019 at the aggregate level of the euro zone countries, obtained from the EUROSTAT, ECB and FRED public sources, are employed (Table 1).

As the financial factor, the rate of change in the monetary aggregate M3 is used because of its stability and information content for medium-term price movements. The rate of change in unit labour costs is used as an indicator of labour-market movements. The nominal effective exchange rate (NEER) is used as an external factor. The rate of change of the industrial production index is also used to represent aggregate demand and supply shocks. A positive influence of wages, money and industrial production is expected, while the effect of the exchange rate should be negative. Wages can affect inflation on both the supply and the demand side, as they are an essential cost of production and at the same time affect purchasing power [39]. The number of exogenous variables is deliberately limited in this paper, because a larger set of exogenous inputs can aggravate the overfitting problem, which the proposed strategy primarily aims to solve.
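The paper does not spell out how the rates of change are computed. As a hypothetical example only, the Python sketch below computes year-on-year percentage changes from monthly index levels and stacks four such series into a $T \times 4$ input matrix; the 12-month lag and the helper names (`yoy_rate`, `exogenous_matrix`) are assumptions, and month-on-month or log-difference rates would be equally valid choices.

```python
import numpy as np


def yoy_rate(levels, lag=12):
    """Year-on-year percentage change of a monthly level series (NaN for the first `lag` months)."""
    levels = np.asarray(levels, dtype=float)
    rate = np.full(levels.shape, np.nan)
    rate[lag:] = 100.0 * (levels[lag:] / levels[:-lag] - 1.0)
    return rate


def exogenous_matrix(m3, ulc, neer, ip, lag=12):
    """Stack the four transformed inputs column-wise and drop the first `lag` rows."""
    X = np.column_stack([yoy_rate(s, lag) for s in (m3, ulc, neer, ip)])
    return X[lag:]


# Usage with a synthetic index growing by 2% per year.
levels = 100.0 * 1.02 ** (np.arange(36) / 12)
rates = yoy_rate(levels)
X = exogenous_matrix(levels, levels, levels, levels)
```

For the exchange rate, whether a rise in the NEER index counts as appreciation or depreciation depends on the data source's convention, so the sign of that column should be checked before interpreting its expected negative effect.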

4. Empirical Results and Discussion

Descriptive statistics, along with the Jarque–Bera (JB) normality test, the augmented Dickey–Fuller (ADF) and Phillips–Perron (PP) unit root tests, and the Brock, Dechert and Scheinkman (BDS) independence test, are presented for each variable in Table 2. The results indicate that inflation ($\pi_t$) is a nonstationary time-series (the ADF and PP statistics lead to the same conclusion) and that it is not independently and identically distributed according to the BDS statistic. Moreover, the null hypothesis of the JB test is also rejected. The same results are obtained for the other variables, indicating the presence of nonstationarity, nonlinearity and deviation from normality. This calls for a non-parametric approach that successfully captures the nonlinearity, i.e., the recurrent neural network suggested in this paper.
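As a reminder of what the JB test measures, the statistic combines the sample skewness $S$ and kurtosis $K$ as $JB = \frac{n}{6}\left(S^2 + \frac{(K-3)^2}{4}\right)$, which is asymptotically $\chi^2(2)$ under normality (5% critical value about 5.99). A minimal pure-Python sketch, using moment estimates with divisor $n$ and a toy sample rather than the paper's data:

```python
def jarque_bera(x):
    """Jarque-Bera statistic from moment estimates with divisor n."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2
    return n / 6.0 * (skewness ** 2 + (kurtosis - 3.0) ** 2 / 4.0)


CRITICAL_5PCT = 5.991  # chi-square(2) critical value at the 5% level

jb = jarque_bera([1, 2, 3, 4, 5])
print(jb, jb > CRITICAL_5PCT)  # about 0.352, so normality is not rejected here
```

A statistic above the critical value, as reported for the euro zone series, means that skewness and/or excess kurtosis are too large to be attributed to sampling variation under normality.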

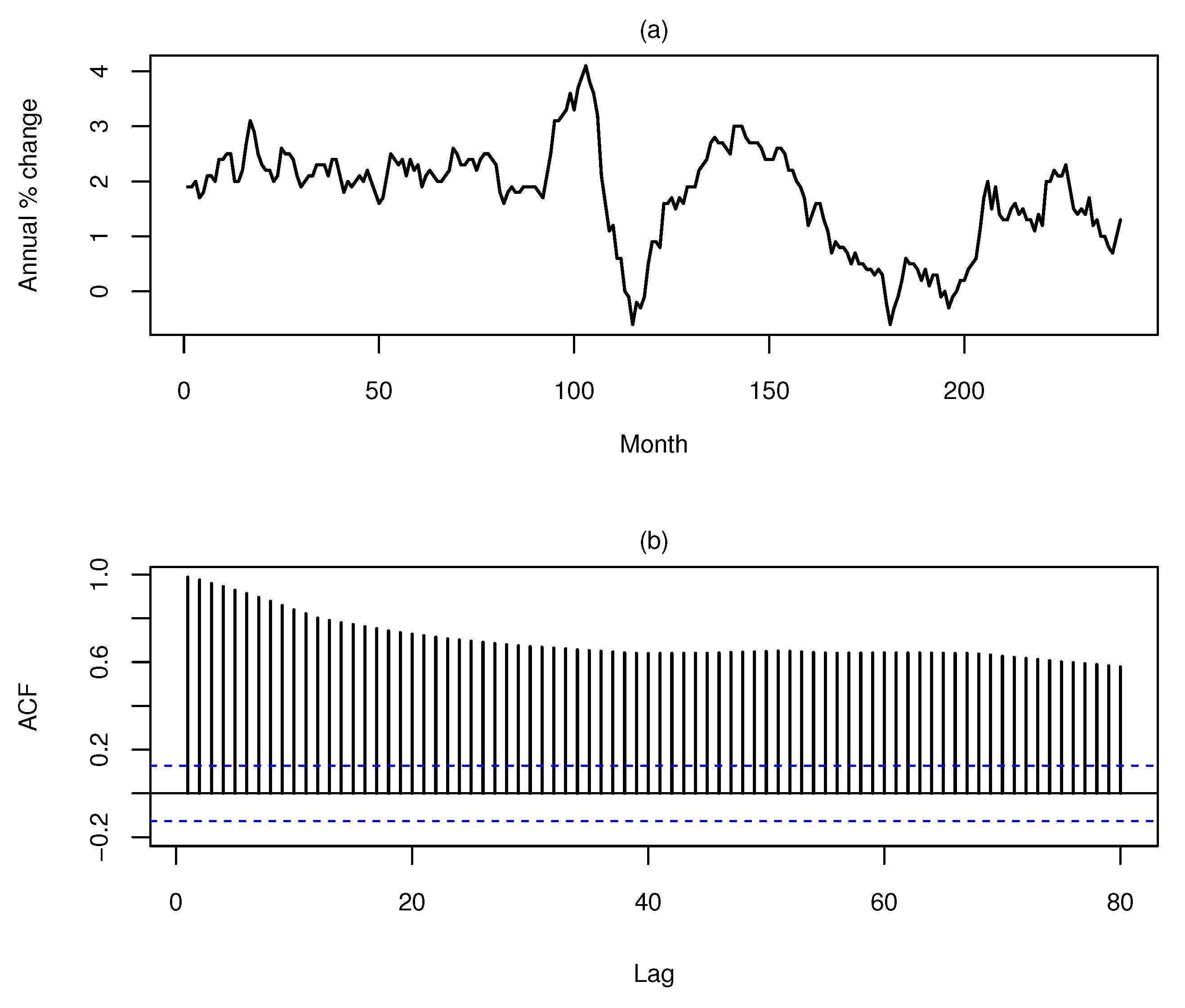

Figure 1 presents euro zone inflation along with its correlogram up to 80 lags. A visual inspection of Figure 1 strongly indicates high persistence of inflation and, consequently, the long memory property. This is supported by the extremely slow decay of the autocorrelation function, with significant coefficients at very distant lags.
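The correlogram is based on the sample autocorrelation function. A minimal NumPy sketch of that estimator, together with the usual approximate 95% band $\pm 1.96/\sqrt{n}$ under a white-noise null, is given below; the helper names are hypothetical, and a linear trend stands in for a highly persistent series.

```python
import numpy as np


def sample_acf(x, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag (r_0 = 1)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    denom = d @ d
    return np.array([(d[:len(d) - k] @ d[k:]) / denom for k in range(max_lag + 1)])


def significant_lags(x, max_lag):
    """Lags whose autocorrelation lies outside the approximate 95% white-noise band."""
    r = sample_acf(x, max_lag)
    band = 1.96 / np.sqrt(len(x))
    return [k for k in range(1, max_lag + 1) if abs(r[k]) > band]


# Usage: a persistent series keeps large autocorrelations over many lags.
trend = np.arange(1, 101)
r = sample_acf(trend, 80)
sig = significant_lags(trend, 80)
```

For a long-memory series such as euro zone inflation, the coefficients decay slowly and remain outside the band for many lags, which is the pattern described for Figure 1.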

Not only the nonlinearity of a single time-series itself, but also the nonlinear dependence between multiple time-series should be checked. For that purpose, the Teräsvirta neural network test [40] and the White neural network test [41] were applied to test the null hypothesis that all weights from the hidden neurons are zero. If the null hypothesis is rejected, it can be concluded that there is evidence of a nonlinear dependence between the output and the inputs. Since the null hypothesis of linearity is rejected in both cases according to the test statistics in Table 3, the nonlinear dependence is confirmed in favour of hidden neurons.
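A common way to compute the Teräsvirta test is as a Lagrange multiplier statistic from an auxiliary regression: the residuals from a linear regression of the output on the inputs are regressed on the inputs plus their second- and third-order products, and $nR^2$ is compared with a $\chi^2$ critical value whose degrees of freedom equal the number of added terms. The NumPy sketch below implements that $nR^2$ form to illustrate the test's logic; it is not the exact implementation behind Table 3 (for instance, it omits the F-version of the statistic), and the function name is hypothetical.

```python
import itertools

import numpy as np


def terasvirta_lm(y, X):
    """n*R^2 linearity test statistic with quadratic and cubic auxiliary terms.

    Returns (statistic, degrees_of_freedom); compare with a chi-square critical value.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    A = np.column_stack([np.ones(n), X])
    e = y - A @ np.linalg.lstsq(A, y, rcond=None)[0]  # residuals of the linear model
    cols = range(k)
    extra = [X[:, i] * X[:, j] for i, j in itertools.combinations_with_replacement(cols, 2)]
    extra += [X[:, i] * X[:, j] * X[:, l]
              for i, j, l in itertools.combinations_with_replacement(cols, 3)]
    B = np.column_stack([A] + extra)
    u = e - B @ np.linalg.lstsq(B, e, rcond=None)[0]  # residuals of the auxiliary model
    r2 = 1.0 - (u @ u) / (e @ e)
    return n * r2, len(extra)


# Usage: a quadratic dependence on a single input should give a large statistic.
rng = np.random.default_rng(1)
x = rng.normal(size=200)
y = 0.5 * x + x ** 2 + 0.1 * rng.normal(size=200)
stat, df = terasvirta_lm(y, x[:, None])  # df = 2 for one input
```

The polynomial terms come from a Taylor expansion of the hidden-neuron activation around the linear null, which is why a large $nR^2$ is read as evidence in favour of hidden neurons.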

Prior to the specification, estimation and determination of forecasting accuracy of the neural networks, the entire sample was divided into two parts: training data from January 2000 to December 2017 (216 observations “in-the-sample”) used for estimation, and testing data from January 2018 to December 2019 (24 observations “out-of-sample”) used for forecasting purposes. A forecasting horizon of 2 years is reasonable, as it is twice the length of the seasonal cycle of monthly data (12 months). Likewise, SPF predictions are also limited to a two-year horizon.
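The split is chronological rather than random, so that all testing months follow the training months. A small pure-Python sketch that reproduces the 216/24 split, with a placeholder series standing in for the inflation data (the helper names are hypothetical):

```python
def monthly_index(start, end):
    """List of (year, month) pairs from start to end inclusive."""
    year, month = start
    out = []
    while (year, month) <= end:
        out.append((year, month))
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return out


def train_test_split(values, index, first_test):
    """Split a series at the first testing month, keeping time order."""
    cut = index.index(first_test)
    return values[:cut], values[cut:]


idx = monthly_index((2000, 1), (2019, 12))
train, test = train_test_split(list(range(len(idx))), idx, (2018, 1))
print(len(idx), len(train), len(test))  # 240 216 24
```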

Several conclusions emerge from the results in Table 4. Both types of neural networks, i.e., JNN and NNAR, fit the training data almost perfectly, but they notably differ in forecasting performance on the testing data. Namely, almost all Jordan neural networks have a smaller “out-of-sample” RMSE than the autoregressive neural networks within the forecasting horizon of 2 years. JNNs with only one lagged input and no exogenous inputs require six hidden neurons to reduce the overfitting problem (further pruning below six hidden neurons does not improve forecasting accuracy).

When exogenous inputs are also included, the overfitting problem is reduced further, as the RMSE decreases with even fewer hidden neurons (3 hidden neurons are required).

Between the two remaining JNNs, the one with a context unit of 0.7 performs better (RMSE 0.581). The weight of the recurrent connection (context unit) makes no difference in the selection of hidden neurons, as long as it is higher than 0.5 to preserve the long memory of the network. However, the recurrent connection makes a huge difference relative to NNARs, i.e., it makes the JNN competitive and superior to them.
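RMSE, the accuracy measure used in these comparisons, squares the forecast errors, so a single large miss can dominate the ranking. A minimal Python sketch with made-up numbers (not the paper's forecasts) illustrates this; the helper names are hypothetical:

```python
import math


def rmse(actual, forecast):
    """Root mean squared error over the testing period."""
    if len(actual) != len(forecast) or not actual:
        raise ValueError("series must be non-empty and of equal length")
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def rank_by_rmse(actual, forecasts):
    """Sort competing models from the lowest to the highest RMSE."""
    return sorted(((name, rmse(actual, f)) for name, f in forecasts.items()),
                  key=lambda item: item[1])


# Made-up numbers: model A is exact in three months but misses one peak badly.
actual = [0.0, 0.0, 0.0, 0.0]
ranking = rank_by_rmse(actual, {"A": [0.0, 0.0, 0.0, 2.0], "B": [0.5, 0.5, 0.5, 0.5]})
print(ranking)  # [('B', 0.5), ('A', 1.0)]
```

This is one reason why RMSE comparisons are usually read together with a visual inspection of the forecast paths.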

Moreover, additional exogenous inputs increase the RMSE of NNARs regardless of the number of hidden neurons. This means that the overfitting problem can be reduced when JNNs are applied with additional exogenous inputs, which is not possible to achieve with NNARs. These findings support our modeling strategy when neural networks are considered in practical applications, i.e., for forecasting purposes. This is in line with [

18,

38] who found that multivariate models and more variables can improve inflation forecasts, while it is opposite to findings of [

24,

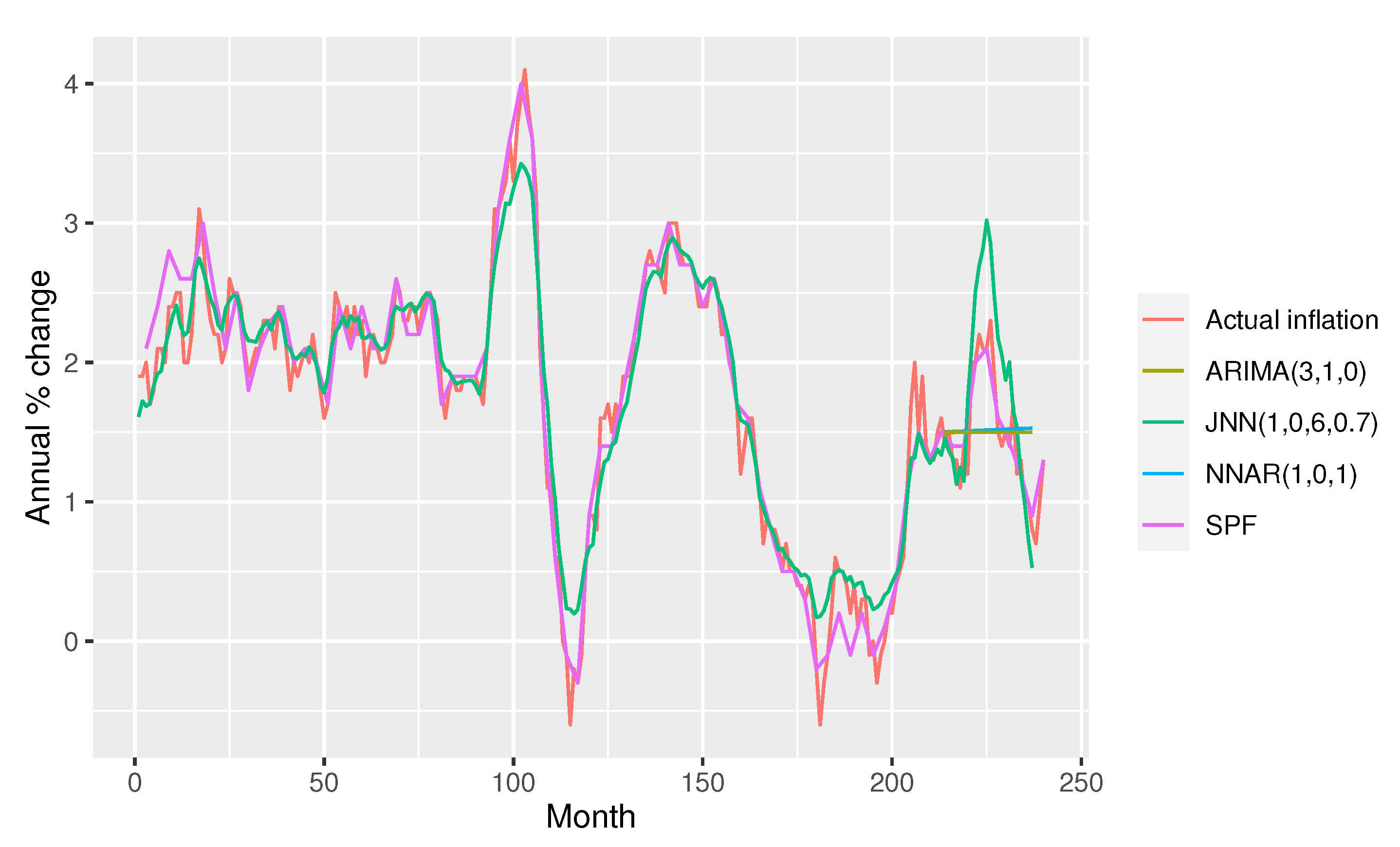

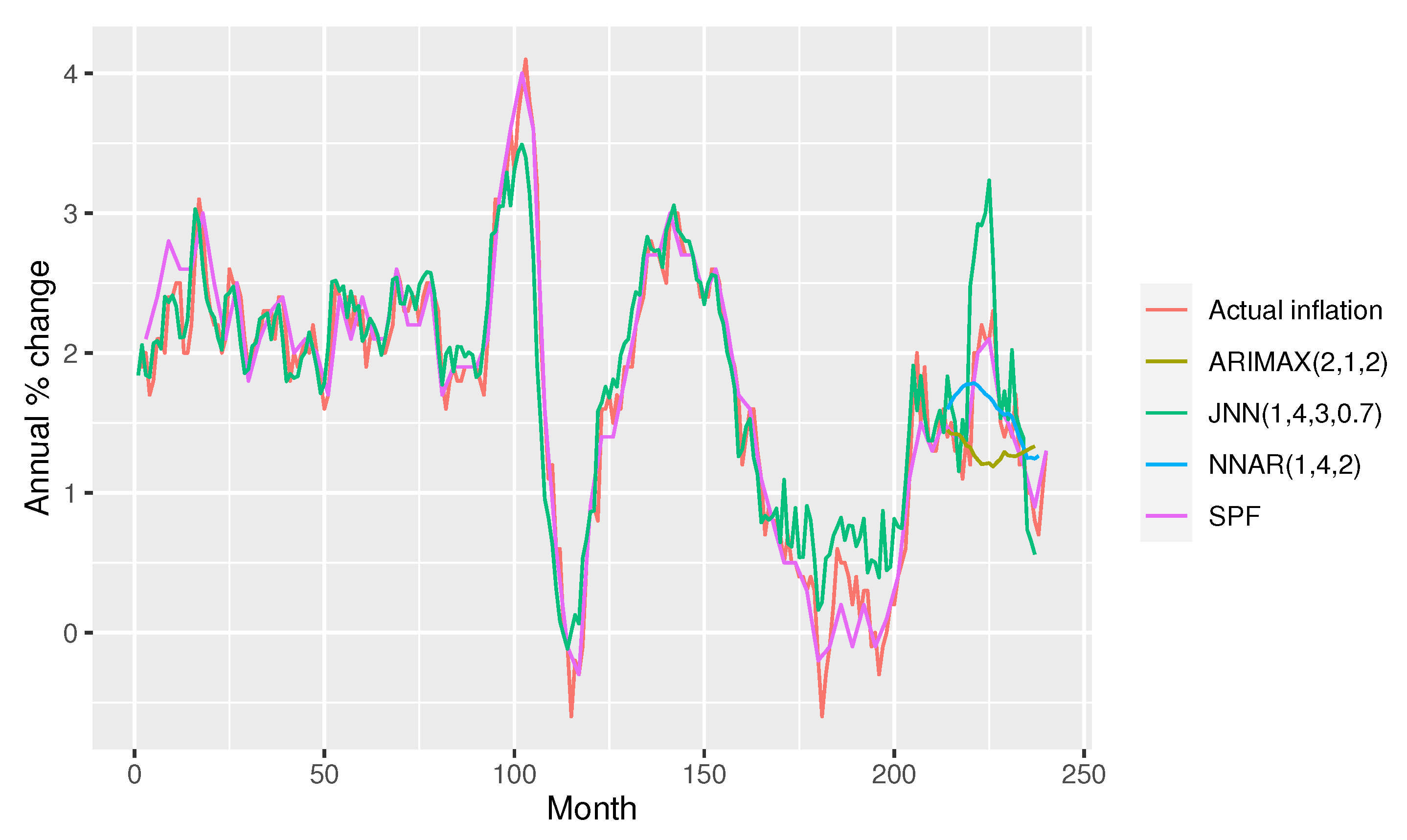

28] favoring simpler models. The same conclusion emerges when JNNs are compared with appropriate ARIMA models. ARIMA(3,1,0) and ARIMAX(2,1,2) both exhibit very poor predictions according to

Figure 2 and

Figure 3, although having rather low RMSE error “out-of-sample” for models without and with the external regressors (0.452 and 0.519, respectively). Appropriate lags of ARIMA models

p,

d and

q are selected according to information criteria AIC and BIC and whether additional regressors are considered as exogenous inputs or not. Unlike ARIMA and NNAR models, the JNN provides stable and more precise inflation forecasts. The JNN’s RMSE is somewhat higher because of the peak in the middle of the year 2018, when JNN forecasted much higher inflation than other models. In other periods the inflation forecasts were in line with the actual inflation. These findings of superiority of JNN against FNN in inflation forecasting is found in [

10], while the superiority of NNs in general compared to ARIMA models are found in [

17,

20,

26].

From Figure 2 and Figure 3, it can also be concluded that the JNN predicts inflation as well as professional forecasters. While actual inflation continued to decline in 2015, expected inflation nonetheless increased, which can be explained by the economic recovery in the euro zone in the period that followed. In such circumstances, the main task in the euro zone was to strengthen confidence in order to recover and support the return of inflation to the target level of below, but close to, 2%. At the beginning of 2016, there was uncertainty about a new global downturn, which resulted in significantly volatile financial markets.

There was a risk of a delayed return to target inflation, as actual inflation was already very low. Deflationary pressures emerged and inflation expectations were revised downwards. Thus, an overestimation of expected inflation during the crisis was not surprising. In 2017 and 2018, inflation recovered from its past lows, although neither the JNN nor the SPF could predict the two spikes: the first one, in 2017, was underestimated by both forecasts, and the second one, in 2018, was overestimated by the JNN and underestimated by the SPF. The subsequent decline in inflation in 2019 was equally well perceived by both the SPF and the JNN. Despite these minor exceptions, there is strong evidence that the JNN fits inflation expectations as well as the SPF, similar to what [22] concluded for other agencies.

Accurate inflation forecasts are essential for conducting an effective monetary policy. If the predictions are not correct, central banks may implement a policy that is tighter or looser than necessary. This in turn can lower the central banks’ credibility and consequently lead to large welfare costs. Therefore, JNNs can be used by central banks as a very good alternative for forecasting inflation and can help them adjust their policies to achieve the specified economic goals.

5. Conclusions

Expected inflation has an important role in managing monetary policy. As it is an unobserved phenomenon, different forecasting approaches have emerged in the literature, e.g., model-based, market-based and survey-based approaches. After an overview of the advantages and drawbacks of the existing model-based approaches, a specific type of recurrent neural network, the Jordan neural network (JNN), was proposed. The specification of the JNN structure was not straightforward, as is unfortunately often the case in previous studies, because neural networks are usually trained using default settings and users frequently neglect the overfitting problem. Therefore, a modeling strategy was set up that reduces the overfitting problem, i.e., the network was pruned until no further improvement in forecasting accuracy was achieved. In particular, the JNN(1, 4, 3, 0.7) with the characteristics of demand-pull and cost-push inflation outperforms NNARs in terms of the “out-of-sample” RMSE accuracy measure and provides more stable forecasts than ARIMA(X) models. The demand-pull and cost-push inflation characteristics made it possible to reduce the overfitting problem even further, because fewer hidden neurons are required in the single hidden layer. In total, 72 neural networks were trained using the BP algorithm. The vast majority of JNNs have a smaller RMSE than NNARs at the forecasting horizon of 2 years. This result supports the finding that recurrent neural networks should be considered as competing alternatives for forecasting time-series [10,42]. The superiority of neural networks over the traditionally used ARIMA models is also confirmed in this paper, which is in line with [6,17].

The main contribution of this study in comparison to existing similar studies is the suggested modeling strategy in terms of correct specification of the neural network type and structure for forecasting purposes. It follows a top-down approach, starting with the number of hidden neurons determined by the rule of thumb and proceeding with one hidden neuron fewer in each step, with a context unit weight higher than 0.5. The proposed modeling strategy is the most convenient strategy when dealing with nonstationary time-series that exhibit the long memory property and nonlinear dependence with respect to lagged inputs or other exogenous inputs. This was empirically demonstrated using aggregated euro zone monthly observations from January 2000 to December 2019. Teräsvirta’s and White’s neural network tests, along with the BDS test, have provided empirical evidence that supports the use of neural networks in inflation forecasting. Additionally, the results of the present research indicate that JNN forecasts fit expected inflation almost as well as the SPF. When compared ex-post with actual inflation, the JNN was found to anticipate future inflation quite accurately. Therefore, the JNN can be used as a complementary tool for inflation forecasting due to its advantages, but also because of the shortcomings of other measures of inflation expectations.

A limitation of this study is the variable selection, which had to be narrowed so that the overfitting problem could be controlled more easily. A direction for further research is to investigate, using simulation techniques, whether the JNN generally predicts better than other network types. Moreover, it would be valuable to test the proposed methodology during the COVID-19 crisis, as it stands out as a challenging forecasting period in many fields, including the important and current issue of rising inflation across countries.