A ResNet50-Based Method for Classifying Surface Defects in Hot-Rolled Strip Steel

Abstract

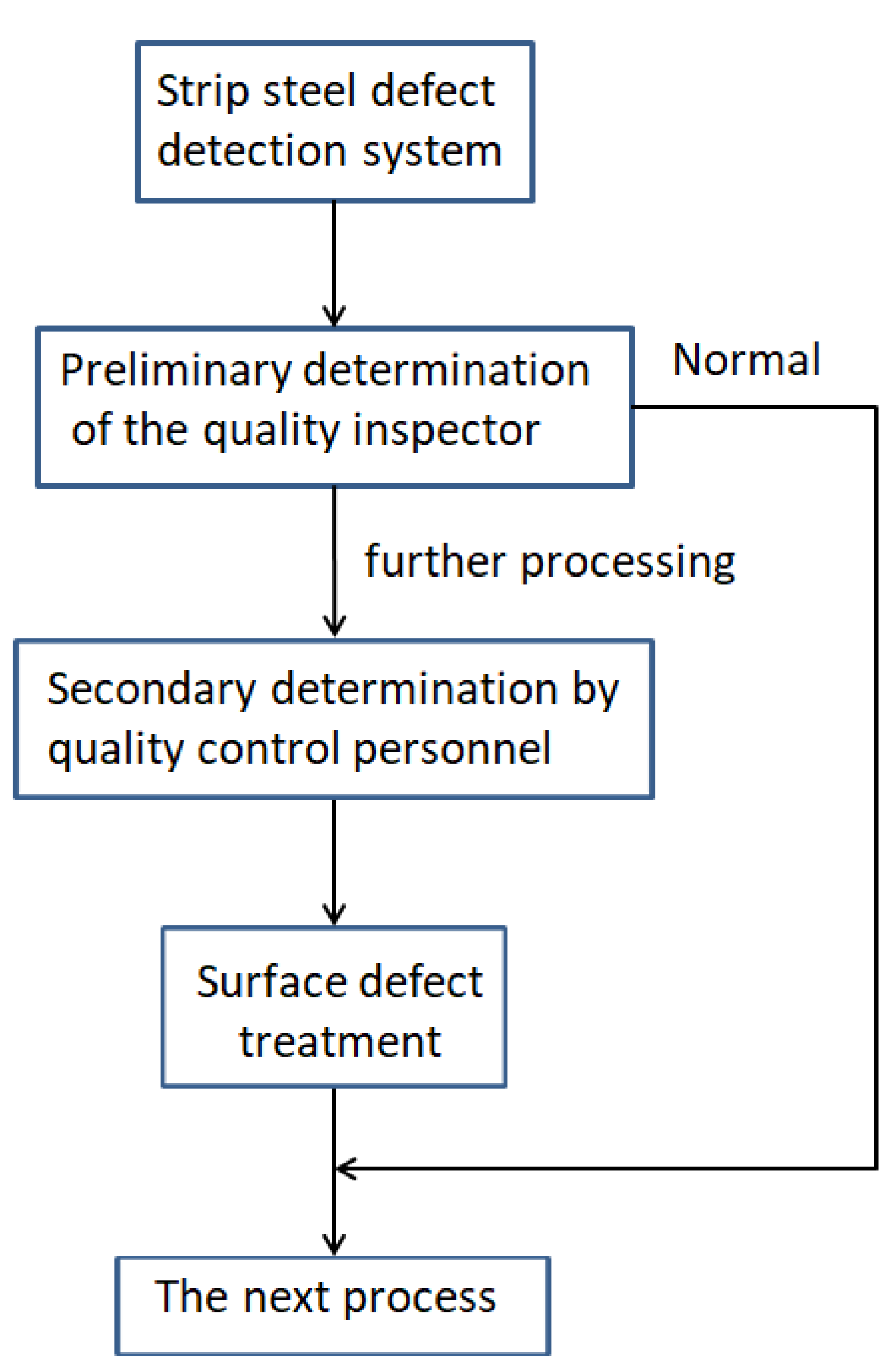

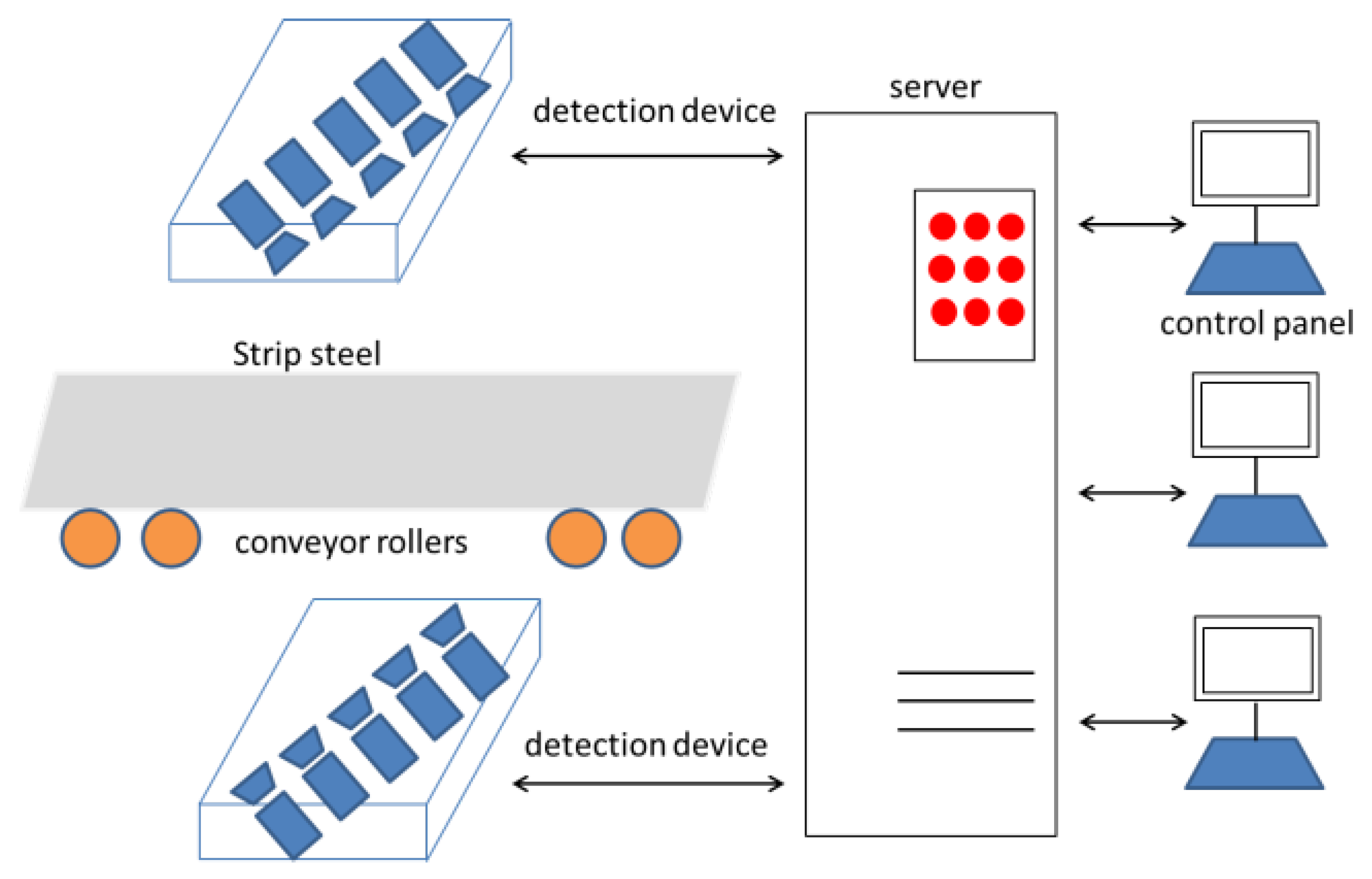

1. Introduction

- We combine ResNet50 with FcaNet and CBAM into a fused network for classifying surface defects in hot-rolled steel strips.

- We validate the proposed algorithm on the X-SDD dataset [7], compare it with several deep learning models, and design ablation experiments to verify the effectiveness of the algorithm.

2. Related Work

2.1. Machine Learning Based Methods

2.2. Deep Learning Based Methods

3. Method

3.1. Introduction to ResNet

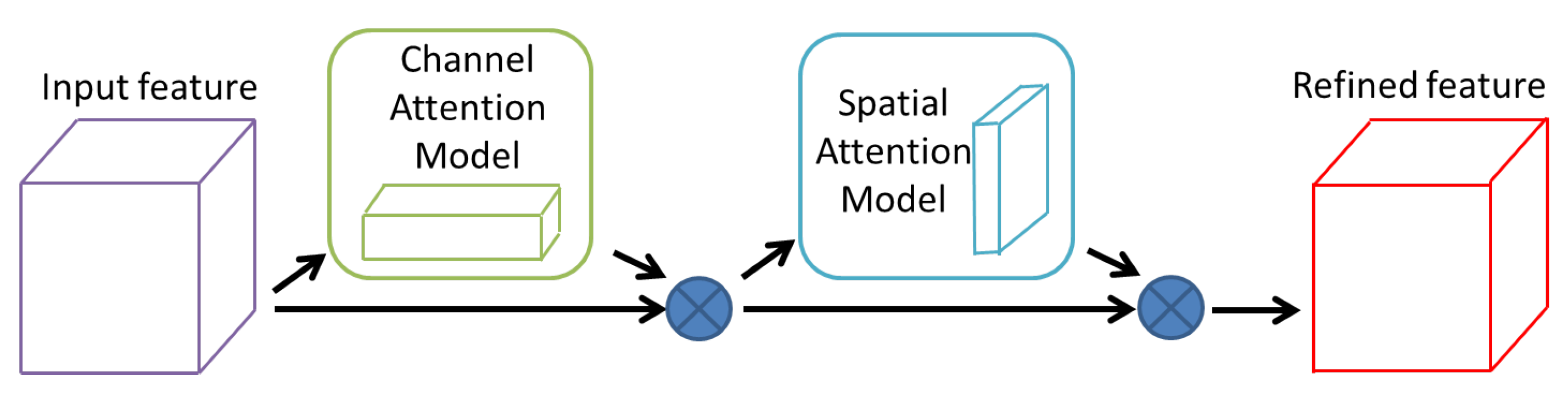

3.2. Introduction to CBAM
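CBAM (Woo et al.) refines a feature map in two steps. First, a channel attention map is computed by passing average-pooled and max-pooled channel descriptors through a shared two-layer MLP. Second, a spatial attention map is computed by a 7 × 7 convolution over the channel-wise mean and maximum. The pure-Python sketch below illustrates those two steps on a nested-list feature map of shape [C][H][W]. It is not the authors' implementation: all weights are random placeholders, and biases, as well as the batch normalization that some implementations place in the spatial branch, are omitted for brevity.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def shared_mlp(v, w1, w2):
    """Bias-free MLP C -> C/r (ReLU) -> C, shared by both pooled descriptors."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(x, w1, w2):
    """Scale each channel by sigmoid(MLP(avg_pool) + MLP(max_pool))."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(map(max, ch)) for ch in x]
    logits = [a + m for a, m in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]
    return [[[sigmoid(z) * val for val in row] for row in ch] for z, ch in zip(logits, x)]


def spatial_attention(x, kernel):
    """Scale each position by sigmoid(conv([mean_c; max_c])); kernel has shape [2][k][k]."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    maps = (
        [[sum(x[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)],
        [[max(x[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)],
    )
    size = len(kernel[0])
    pad = size // 2  # zero "same" padding keeps the H x W resolution
    att = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for m in range(2):
                for di in range(size):
                    for dj in range(size):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:
                            acc += kernel[m][di][dj] * maps[m][ii][jj]
            att[i][j] = sigmoid(acc)
    return [[[att[i][j] * ch[i][j] for j in range(w)] for i in range(h)] for ch in x]


def cbam(x, w1, w2, kernel):
    """Channel attention first, then spatial attention, as in the CBAM paper."""
    return spatial_attention(channel_attention(x, w1, w2), kernel)


# Usage with random placeholder weights: C=4 channels, reduction r=2, 7x7 kernel.
rng = random.Random(0)
C, H, W, r, k = 4, 5, 5, 2, 7
x = [[[rng.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1 = [[rng.gauss(0, 0.5) for _ in range(C)] for _ in range(C // r)]
w2 = [[rng.gauss(0, 0.5) for _ in range(C // r)] for _ in range(C)]
kernel = [[[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(k)] for _ in range(2)]
y = cbam(x, w1, w2, kernel)
```

Because both attention maps pass through a sigmoid, each output element equals the input element multiplied by two factors in (0, 1). CBAM therefore re-weights features without changing the tensor shape, which is what allows it to be inserted into an existing backbone such as ResNet50.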

3.3. Introduction to FcaNet
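FcaNet (Qin et al.) starts from the observation that global average pooling (GAP), the squeeze step of SE-style channel attention, is proportional to the lowest-frequency (0, 0) component of the 2D discrete cosine transform. It therefore splits the channels into groups and compresses each group with a different DCT frequency before the excitation MLP. The pure-Python sketch below shows only this multi-spectral pooling step. It uses an unnormalised DCT-II basis, and the frequency list in the usage example is an arbitrary illustration, not the selection used in the paper.

```python
import math


def dct_basis(u, v, h, w):
    """Unnormalised 2D DCT-II basis for frequency (u, v) on an h x w grid."""
    return [
        [math.cos(math.pi * u * (i + 0.5) / h) * math.cos(math.pi * v * (j + 0.5) / w) for j in range(w)]
        for i in range(h)
    ]


def freq_component(channel, u, v):
    """Project one H x W channel onto the (u, v) DCT basis."""
    h, w = len(channel), len(channel[0])
    basis = dct_basis(u, v, h, w)
    return sum(channel[i][j] * basis[i][j] for i in range(h) for j in range(w))


def multispectral_pool(x, freqs):
    """Compress a [C][H][W] map to C scalars; channel group g uses frequency freqs[g]."""
    c = len(x)
    if c % len(freqs):
        raise ValueError("channel count must be divisible by the number of frequencies")
    group = c // len(freqs)
    return [freq_component(x[k], *freqs[k // group]) for k in range(c)]


# Usage: 4 channels of a 4x4 map; first half pooled at (0, 0), second half at (0, 1).
x = [[[float(4 * i + j + k) for j in range(4)] for i in range(4)] for k in range(4)]
gap = [sum(map(sum, ch)) / 16 for ch in x]
pooled = multispectral_pool(x, [(0, 0), (0, 1)])
```

For (u, v) = (0, 0) every basis value is 1, so the component equals H × W times the channel mean, i.e. GAP up to a constant factor. The (0, 1) component instead responds to left-to-right intensity changes, information that GAP discards.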

3.4. Our Method

4. Experiments

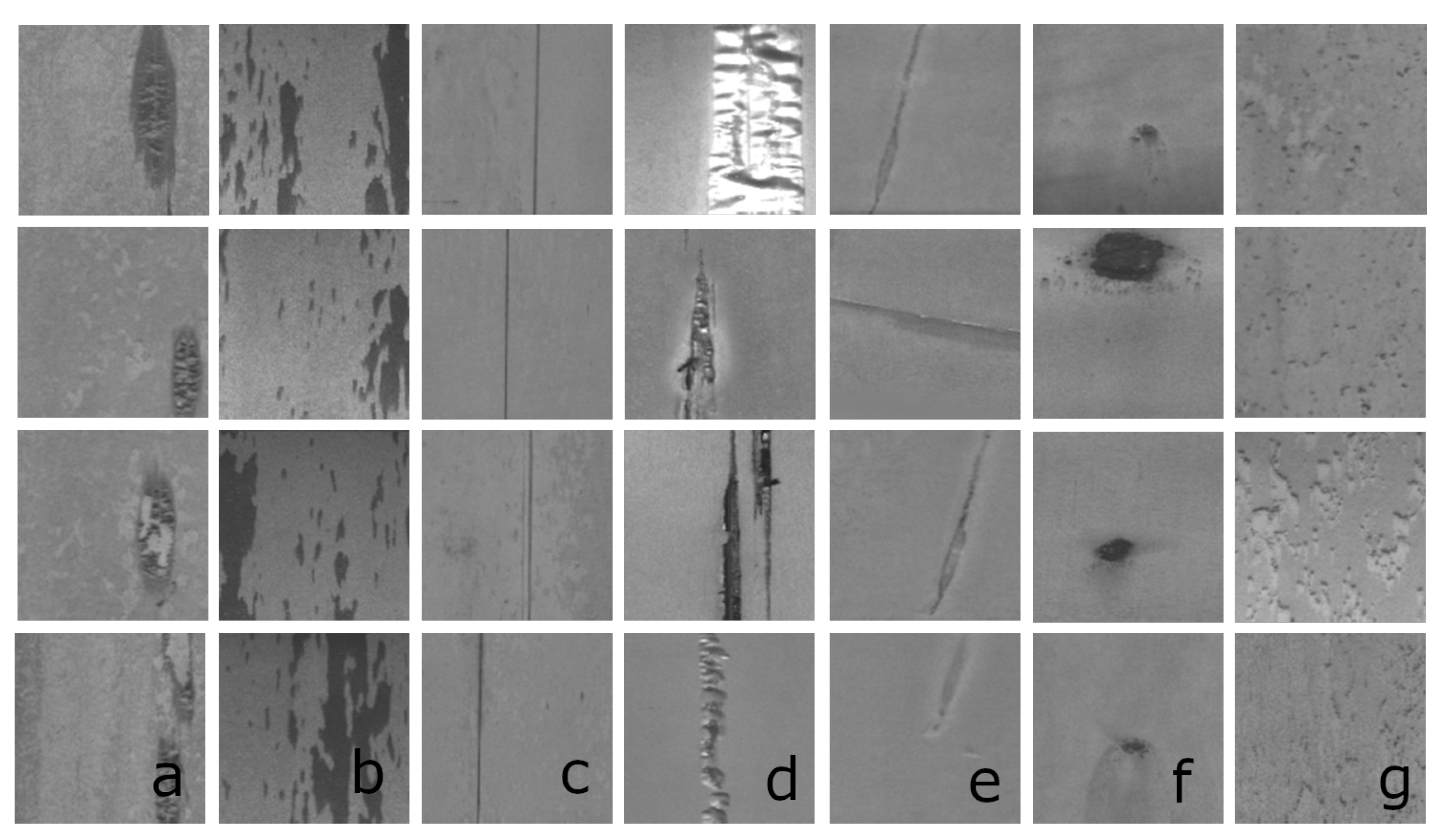

4.1. Introduction to the Dataset

4.2. Experimental Settings

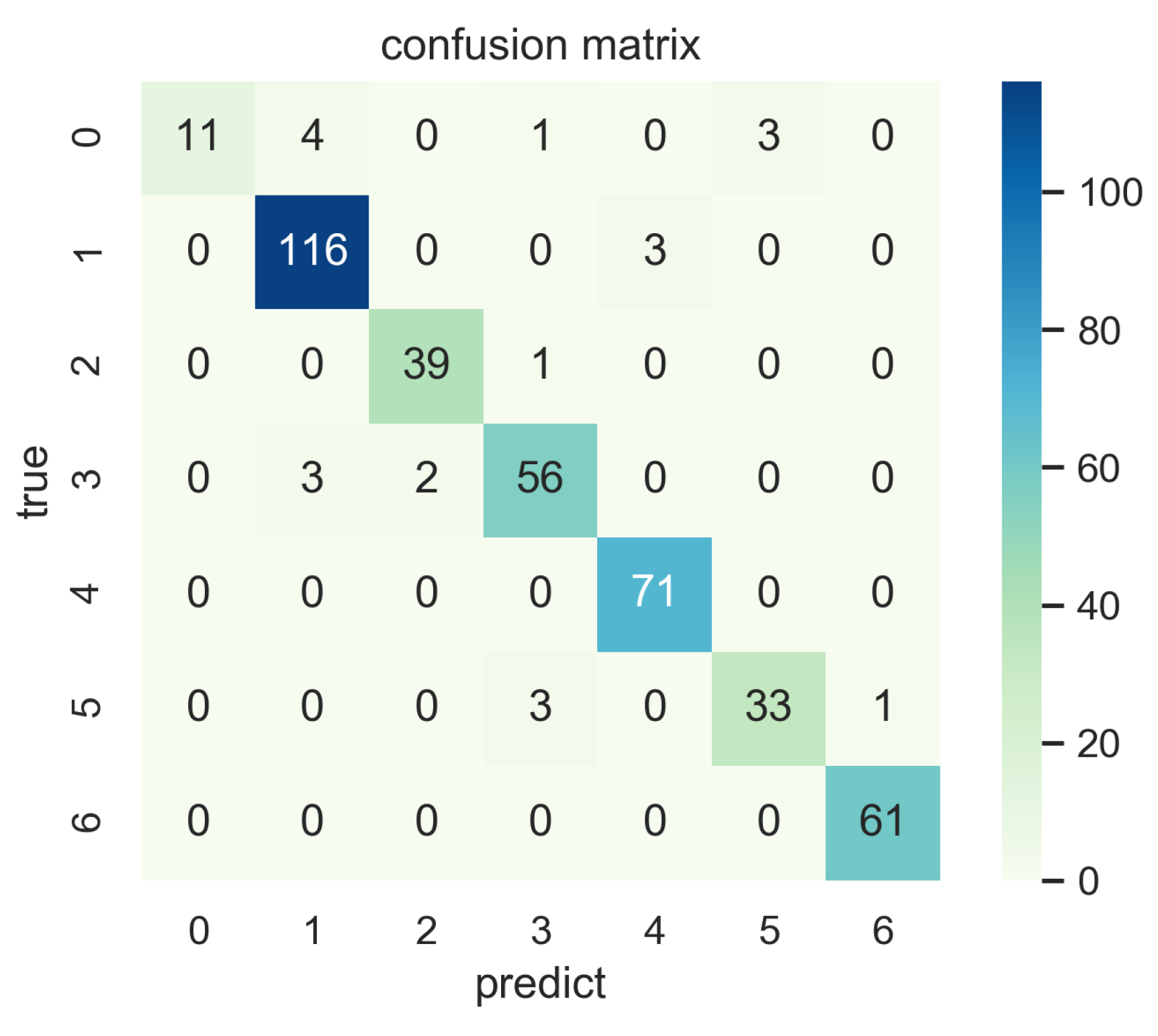

4.3. Experimental Results

4.4. Ablation Experiments

4.5. Comparison of Model Complexity

4.6. The Ensemble Model

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kumar, A.; Das, A.K. Evolution of microstructure and mechanical properties of Co-SiC tungsten inert gas cladded coating on 304 stainless steel. Eng. Sci. Technol. Int. J. 2020, 24, 591–604.

- Afanasieva, L.E.; Ratkevich, G.V.; Ivanova, A.I.; Novoselova, M.V.; Zorenko, D.A. On the Surface Micromorphology and Structure of Stainless Steel Obtained via Selective Laser Melting. J. Surf. Investig. X-ray Synchrotron Neutron Tech. 2018, 12, 1082–1087.

- Gromov, V.E.; Gorbunov, S.V.; Ivanov, Y.F.; Vorobiev, S.V.; Konovalov, S.V. Formation of surface gradient structural-phase states under electron-beam treatment of stainless steel. J. Surf. Investig. X-ray Synchrotron Neutron Tech. 2011, 5, 974–978.

- Youkachen, S.; Ruchanurucks, M.; Phatrapomnant, T.; Kaneko, H. Defect Segmentation of Hot-rolled Steel Strip Surface by using Convolutional Auto-Encoder and Conventional Image processing. In Proceedings of the 2019 10th International Conference of Information and Communication Technology for Embedded Systems (IC-ICTES), Bangkok, Thailand, 25–27 March 2019; pp. 1–5.

- Ashour, M.W.; Khalid, F.; Halin, A.A.; Abdullah, L.N.; Darwish, S.H. Surface defects classification of hot-rolled steel strips using multi-directional shearlet features. Arab. J. Sci. Eng. 2019, 44, 2925–2932.

- Luo, Q.; Fang, X.; Sun, Y.; Liu, L.; Ai, J.; Yang, C.; Simpson, O. Surface Defect Classification for Hot-Rolled Steel Strips by Selectively Dominant Local Binary Patterns. IEEE Access 2019, 7, 23488–23499.

- Feng, X.; Gao, X.; Luo, L. X-SDD: A New Benchmark for Hot Rolled Steel Strip Surface Defects Detection. Symmetry 2021, 13, 706.

- Xiao, M.; Jiang, M.; Li, G.; Xie, L.; Yi, L. An evolutionary classifier for steel surface defects with small sample set. EURASIP J. Image Video Process. 2017, 2017, 1–13.

- Gong, R.; Chu, M.; Yang, Y.; Feng, Y. A multi-class classifier based on support vector hyper-spheres for steel plate surface defects. Chemom. Intell. Lab. Syst. 2019, 188, 70–78.

- Chu, M.; Liu, X.; Gong, R.; Zhao, J. Multi-class classification method for strip steel surface defects based on support vector machine with adjustable hyper-sphere. J. Iron Steel Res. Int. 2018, 25, 706–716.

- Luo, Q.; Sun, Y.; Li, P.; Simpson, O.; Tian, L.; He, Y. Generalized completed local binary patterns for time-efficient steel surface defect classification. IEEE Trans. Instrum. Meas. 2018, 68, 667–679.

- Hu, H.; Li, Y.; Liu, M.; Liang, W. Classification of defects in steel strip surface based on multiclass support vector machine. Multimed. Tools Appl. 2014, 69, 199–216.

- Zhang, Z.F.; Liu, W.; Ostrosi, E.; Tian, Y.; Yi, J. Steel strip surface inspection through the combination of feature selection and multiclass classifiers. Eng. Comput. 2020, 38, 1831–1850.

- Fu, G.; Sun, P.; Zhu, W.; Yang, J.; Cao, Y.; Yang, M.Y.; Cao, Y. A deep-learning-based approach for fast and robust steel surface defects classification. Opt. Lasers Eng. 2019, 121, 397–405.

- Liu, Y.; Geng, J.; Su, Z.; Yin, Y. Real-time classification of steel strip surface defects based on deep CNNs. In Proceedings of 2018 Chinese Intelligent Systems Conference; Springer: Singapore, 2019; pp. 257–266.

- Zhou, S.; Chen, Y.; Zhang, D.; Xie, J.; Zhou, Y. Classification of surface defects on steel sheet using convolutional neural networks. Mater. Technol. 2017, 51, 123–131.

- Konovalenko, I.; Maruschak, P.; Brezinová, J.; Viňáš, J.; Brezina, J. Steel Surface Defect Classification Using Deep Residual Neural Network. Metals 2020, 10, 846.

- Yi, L.; Li, G.; Jiang, M. An end to end steel strip surface defects recognition system based on convolutional neural networks. Steel Res. Int. 2017, 88, 1600068.

- Song, K.; Yan, Y. Micro Surface defect detection method for silicon steel strip based on saliency convex active contour model. Math. Probl. Eng. 2013, 2013, 429094.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6450–6458.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning (PMLR), Lille, France, 7–9 July 2015; pp. 2048–2057.

- Gregor, K.; Danihelka, I.; Graves, A.; Rezende, D.; Wierstra, D. DRAW: A recurrent neural network for image generation. In Proceedings of the International Conference on Machine Learning (PMLR), Lille, France, 7–9 July 2015; pp. 1462–1471.

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. arXiv 2015, arXiv:1506.02025.

- Qin, Z.; Zhang, P.; Wu, F.; Li, X. FcaNet: Frequency Channel Attention Networks. arXiv 2020, arXiv:2012.11879.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. arXiv 2020, arXiv:1910.03151.

- Ahmed, N.; Natarajan, T.; Rao, K.R. Discrete cosine transform. IEEE Trans. Comput. 1974, C-23, 90–93.

- Jeon, M.; Jeong, Y.S. Compact and accurate scene text detector. Appl. Sci. 2020, 10, 2096.

- Vu, T.; Van Nguyen, C.; Pham, T.X.; Luu, T.M.; Yoo, C.D. Fast and efficient image quality enhancement via desubpixel convolutional neural networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 243–259.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 1314–1324.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Mehta, S.; Rastegari, M.; Shapiro, L.; Hajishirzi, H. ESPNetv2: A light-weight, power efficient, and general purpose convolutional neural network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9190–9200.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Tolstikhin, I.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J.; et al. MLP-Mixer: An all-MLP architecture for vision. arXiv 2021, arXiv:2105.01601.

| Model | Accuracy | Macro-Recall | Macro-Precision | Macro-F1 |
|---|---|---|---|---|
| AlexNet | 90.69% | 82.79% | 88.95% | 84.21% |
| MobileNet v3 | 91.67% | 87.95% | 91.83% | 88.59% |
| Xception | 91.18% | 84.30% | 90.28% | 85.37% |
| ShuffleNet | 89.71% | 84.76% | 89.44% | 84.87% |
| EspNet v2 | 86.52% | 82.46% | 84.10% | 81.88% |
| GhostNet | 89.22% | 82.99% | 87.16% | 83.91% |
| ResNet101 | 92.40% | 86.29% | 93.30% | 88.02% |
| ResNet152 | 89.22% | 89.10% | 87.26% | 87.54% |
| VGG16 | 89.71% | 86.64% | 88.68% | 87.47% |
| VGG19 | 86.52% | 86.06% | 88.98% | 86.86% |
| RepVGG B1g2 | 88.48% | 80.33% | 92.54% | 81.34% |
| Our Method | 93.87% | 87.33% | 94.35% | 88.71% |
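These tables report accuracy together with macro-averaged recall, precision and F1, which weight every defect class equally regardless of how many test images it contains. A minimal pure-Python sketch of these metrics computed from a confusion matrix follows; the function name `macro_metrics` and the 2 × 2 matrix are hypothetical illustrations. For the "Our Method" row, the harmonic mean of Macro-Precision (94.35%) and Macro-Recall (87.33%) is about 90.7%, above the reported Macro-F1 of 88.71%. This appears consistent with Macro-F1 being the mean of per-class F1 scores, as in the sketch, although that is an inference from the numbers rather than something the text states.

```python
def macro_metrics(cm):
    """Accuracy and macro-averaged metrics from cm[i][j] = count of true class i predicted as j."""
    n = len(cm)
    total = sum(map(sum, cm))
    accuracy = sum(cm[k][k] for k in range(n)) / total
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted = sum(cm[i][k] for i in range(n))  # column sum: samples predicted as k
        actual = sum(cm[k])                          # row sum: samples truly in class k
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": accuracy,
        "macro_recall": sum(recalls) / n,
        "macro_precision": sum(precisions) / n,
        "macro_f1": sum(f1s) / n,  # mean of per-class F1, not F1 of the macro means
    }


# Hypothetical toy example: two classes, one class-1 sample misclassified as class 0.
m = macro_metrics([[2, 0], [1, 1]])
print(m)
```

On this toy matrix the macro-F1 is 11/15 ≈ 0.733, whereas the harmonic mean of macro-precision (5/6) and macro-recall (3/4) would be 15/19 ≈ 0.789, so the two definitions are not interchangeable when comparing rows across papers.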

| Model | Accuracy | Macro-Recall | Macro-Precision | Macro-F1 |
|---|---|---|---|---|
| ResNet50 | 92.40% | 86.45% | 94.08% | 88.32% |
| ResNet50+CBAM | 92.65% | 88.62% | 91.40% | 89.71% |
| ResNet50+CBAM+FcaNet | 93.87% | 87.33% | 94.35% | 88.71% |

| Model | FLOPs (G) | Params (M) |
|---|---|---|
| AlexNet | 0.309 | 14.596 |
| MobileNet v3 | 0.300 | 4.317 |
| Xception | 4.617 | 20.822 |
| ShuffleNet | 0.132 | 0.860 |
| EspNet v2 | 0.092 | 0.638 |
| GhostNet | 0.213 | 3.127 |
| ResNet50 | 4.109 | 23.522 |
| ResNet101 | 7.832 | 42.515 |
| ResNet152 | 11.557 | 58.158 |
| VGG16 | 15.484 | 138.358 |
| VGG19 | 19.647 | 143.667 |
| RepVGG B1g2 | 9.815 | 43.748 |
| ResNet50+CBAM | 4.111 | 23.523 |
| Our Method | 4.114 | 26.038 |
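The Params column can be cross-checked by counting weights. The sketch below rests on several assumptions: a torchvision-style ResNet50 (bias-free convolutions, two trainable BatchNorm parameters per channel, running statistics excluded), a seven-way classifier for the seven X-SDD defect classes, and an FcaNet-style addition modelled as a bias-free C → C/16 → C MLP after each of the 16 bottleneck blocks, which we assume mirrors the public FcaNet design. The fixed DCT filters are assumed to be non-trainable buffers. Under these assumptions the counts come to 23.522 M, matching the ResNet50 row, and 26.037 M with channel attention. The remaining ~0.001 M of the reported 26.038 M is in line with the small CBAM increment between the ResNet50 and ResNet50+CBAM rows. The helper names are hypothetical.

```python
def conv(cin, cout, k=1):
    """Weights of a bias-free k x k convolution."""
    return cin * cout * k * k


def bn(c):
    """Trainable BatchNorm parameters (gamma, beta); running statistics are buffers."""
    return 2 * c


def bottleneck(cin, mid, downsample):
    """1x1 -> 3x3 -> 1x1 bottleneck with expansion 4, plus an optional projection shortcut."""
    out = 4 * mid
    n = conv(cin, mid) + bn(mid) + conv(mid, mid, 3) + bn(mid) + conv(mid, out) + bn(out)
    if downsample:
        n += conv(cin, out) + bn(out)
    return n


# (bottleneck width, number of blocks) for the four ResNet50 stages
STAGES = [(64, 3), (128, 4), (256, 6), (512, 3)]


def resnet50_params(num_classes):
    n = conv(3, 64, 7) + bn(64)  # 7x7 stem convolution (max pooling has no parameters)
    cin = 64
    for mid, blocks in STAGES:
        for b in range(blocks):
            # the first block of each stage uses a projection shortcut
            n += bottleneck(cin, mid, downsample=(b == 0))
            cin = 4 * mid
    return n + cin * num_classes + num_classes  # fully connected classifier with bias


def channel_attention_params(reduction=16):
    """Hypothetical FcaNet-style overhead: bias-free C -> C/r -> C MLP in every bottleneck."""
    return sum(blocks * 2 * (4 * mid) * (4 * mid // reduction) for mid, blocks in STAGES)


base = resnet50_params(num_classes=7)
extra = channel_attention_params()
print(f"ResNet50: {base / 1e6:.3f} M")  # ResNet50: 23.522 M
print(f"+ channel attention: {(base + extra) / 1e6:.3f} M")  # + channel attention: 26.037 M
```

As a sanity check of the counting rules, the same function gives 25,557,032 parameters for `num_classes=1000`, the figure commonly reported for torchvision's ResNet50.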

| Model | Accuracy | Macro-Recall | Macro-Precision | Macro-F1 |
|---|---|---|---|---|
| The ensemble model | 94.85% | 90.71% | 95.04% | 92.06% |
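The text here does not state how the ensemble model combines its members. One common choice is soft voting, which averages the class probabilities of several trained networks and takes the arg-max. Hard (majority) voting instead counts each model's top-1 label. The pure-Python sketch below implements both under that assumption; the function names and the three probability tables are invented for illustration.

```python
def soft_vote(prob_lists, weights=None):
    """prob_lists[m][n][c]: probability that model m assigns class c to sample n."""
    if weights is None:
        weights = [1.0 / len(prob_lists)] * len(prob_lists)
    labels = []
    for n in range(len(prob_lists[0])):
        n_classes = len(prob_lists[0][n])
        avg = [sum(w * probs[n][c] for w, probs in zip(weights, prob_lists)) for c in range(n_classes)]
        labels.append(max(range(n_classes), key=avg.__getitem__))
    return labels


def hard_vote(prob_lists):
    """Majority vote over each model's top-1 label; ties go to the lowest class index."""
    labels = []
    for n in range(len(prob_lists[0])):
        n_classes = len(prob_lists[0][n])
        votes = [0] * n_classes
        for probs in prob_lists:
            votes[max(range(n_classes), key=probs[n].__getitem__)] += 1
        labels.append(max(range(n_classes), key=votes.__getitem__))
    return labels


# Three hypothetical models, two samples, two classes.
model_a = [[0.60, 0.40], [0.20, 0.80]]
model_b = [[0.30, 0.70], [0.45, 0.55]]
model_c = [[0.55, 0.45], [0.40, 0.60]]
models = [model_a, model_b, model_c]
print(soft_vote(models))  # [1, 1]
print(hard_vote(models))  # [0, 1]
```

On the first sample two of the three models narrowly prefer class 0 while the third strongly prefers class 1, so the two rules disagree: soft voting lets confident members outweigh hesitant ones.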

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feng, X.; Gao, X.; Luo, L. A ResNet50-Based Method for Classifying Surface Defects in Hot-Rolled Strip Steel. Mathematics 2021, 9, 2359. https://doi.org/10.3390/math9192359

Feng X, Gao X, Luo L. A ResNet50-Based Method for Classifying Surface Defects in Hot-Rolled Strip Steel. Mathematics. 2021; 9(19):2359. https://doi.org/10.3390/math9192359

Chicago/Turabian Style: Feng, Xinglong, Xianwen Gao, and Ling Luo. 2021. "A ResNet50-Based Method for Classifying Surface Defects in Hot-Rolled Strip Steel" Mathematics 9, no. 19: 2359. https://doi.org/10.3390/math9192359

APA Style: Feng, X., Gao, X., & Luo, L. (2021). A ResNet50-Based Method for Classifying Surface Defects in Hot-Rolled Strip Steel. Mathematics, 9(19), 2359. https://doi.org/10.3390/math9192359