1. Introduction

Reactive transport in porous media is important for many industrial and environmental applications such as water treatment, soil contamination and remediation, catalytic filters, etc. Most of the experimental and theoretical studies of porous media transport and reactive transport have been carried out on the macroscopic Darcy scale [

1,

2]. In many instances, the weak point in the computational modeling of reactive transport is the lack of data on the rate of adsorption and desorption at the pore scale (or, more generally, the parameters of heterogeneous reactions). Despite the progress in the development of devices for making experimental observations at the pore scale, the experimental determination of these rates is still quite difficult.

In the case of surface (heterogeneous) reactions at the pore scale, the species’ transport is coupled to surface reaction via Robin boundary conditions. When the reaction rates are not known, their identification belongs to the class of inverse boundary value problems [

3,

4,

5,

6]. The additional information that is needed to identify these parameters is often provided in the form of dynamic data related to the concentration at the outlet (e.g., so called breakthrough curves). Different algorithms can be applied for solving parameter identification problems, see, e.g., [

7,

8]. The main idea behind parameter identification is to find these parameters for which the model predictions best match our measurements or observations in a real physical system. Many algorithms use deterministic methods based on Tikhonov’s regularization method [

9,

10]. There are algorithms using a stochastic approach such as Pure Random Search [

11]. However, any approach that gives only one best value for the parameter vector does not take into account the fact that our measurements contain measurement noise. In other words, deterministic inversion methods do not account for the uncertainty in parameter estimates.

Uncertainty quantification (UQ) is an important area of research in many applications. All experimental data have regular errors arising from instruments or human operators. This leads to a shift in the parameters of the fitted empirical model [

12,

13]. In our applications, besides measurement errors, we deal with model errors. The measured data show an integrated response, which averages the information related to pore-scale geometries. In our simulations, we use a specific pore-scale geometry which can differ from the real geometry. Thus, including errors in the measurement is important.

The commonly used method for UQ is a Bayesian inversion, which formulates the posterior distribution via likelihood and prior information. In the paper, uninformative prior distribution for the parameters is used. For the likelihood, we use the error in the average concentration at the outlet. In particular, we use the

difference between the average concentration at the outlet and the observed data. The likelihood function involves solving forward PDE model, which can be expensive due to complex pore-scale geometries. Consequently, the evaluation of the likelihood is expensive. For sampling from the posterior distribution, the Markov chain Monte-Carlo methods (MCMC) is used [

14,

15,

16,

17]. Sampling the posterior distribution is the major computational difficulty associated with Bayesian inversion. MCMC—an increasingly popular method of obtaining information on distribution, especially for the evaluation of posterior distributions in Bayesian inference [

18]. Areas in which this approach works well include dynamic systems models in ecology, geophysics, chemical kinetics, signal detection theory and decision theory [

19,

20,

21].

In our previous works on reactive flows [

22,

23] a deterministic approach was considered, where the parameter space was divided into a uniform grid, where for each point the direct problem was solved to construct the residual functional. This approach was one of the simplest, but depending on the functionality, a denser mesh partition may be required, which could lead to a large amount of computation. Furthermore, research was done on stochastic approaches, where points were taken at random in the parameter space. The good thing about this approach was that it was quite cheap compared to the deterministic approach to evaluate the functionality of the residual, but this approach was more useful as a preliminary exercise to reduce the search space. In connection with these results, it was decided to continue the work and consider other approaches for identifying the parameters of the rates of surface reactions.

In this paper, we will discuss the determination of reaction rates, namely adsorption and desorption parameters for pore scale transport in porous media using MCMC sampling within the framework of Bayesian inference. This method provides the ability not only to identify parameters, but also to quantify uncertainty. The reactive transport is governed by incompressible Stokes equations in pore-scale geometry, coupled with a convection–diffusion equation for species transport. The surface reactions are accounted via Robin boundary conditions. The Henry isotherm is considered for correct description of the reaction dynamics at the solid–fluid interface. The main feature of this problem is a nonlinear boundary condition for surface concentration of adsorbed solute on obstacles surface. Finite element method (FEM) is exploited. The family of MCMC methods, namely Metropolis–Hastings and Adaptive Metropolis algorithms was implemented. Sample analysis using autocorrelation function and acceptance rate is performed to estimate mixing of the Markov chain. Credible intervals have been plotted from sampled posterior distributions for both algorithms. The impact of the noise in the measurements and influence of several measurements for Bayesian inversion procedure is studied.

The remainder of the paper is organized as follows.

Section 2 formulates a mathematical model of reactive flow in porous media. In

Section 3, we consider the Bayesian statement of the problem. In

Section 4, we observe MCMC sampling techniques. In

Section 5, we provide an estimate of the parameters based on sample data. Finally,

Section 6 summarizes the results presented in this paper.

2. Forward Problem

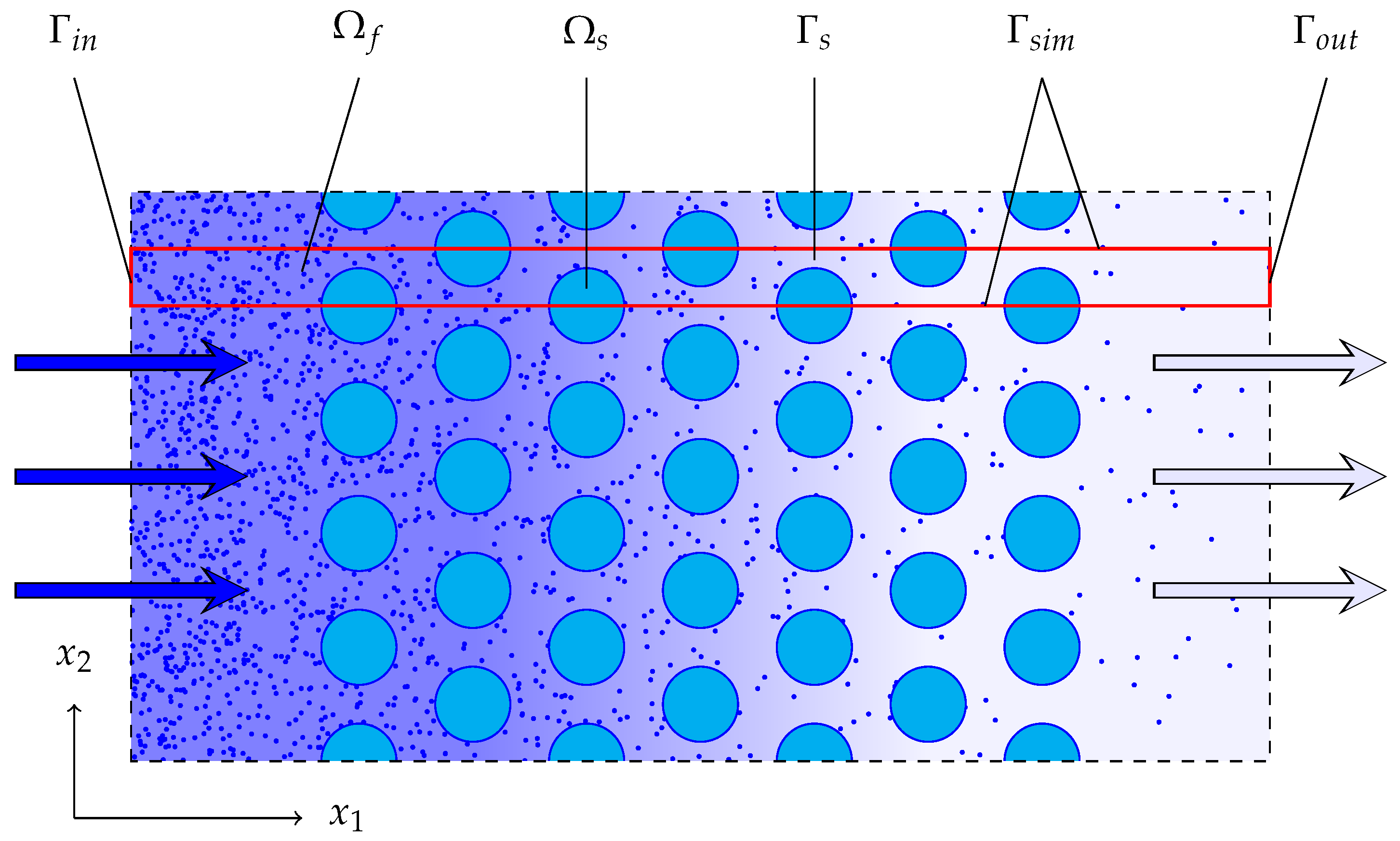

Two dimensional transport of a dissolved substance is considered at pore scale in the presence of heterogeneous reactions. Periodic porous media geometry is considered here, see

Figure 1. The computational domain

is occupied by a fluid. The reaction occurs at the surface of the obstacles, the latter is denoted by

. Symmetry boundary conditions are assigned at the top and the bottom boundary

. The inlet boundary is denoted by

, and the outlet is denoted by

. Coupled incompressible Stokes equations and convection–diffusion equations for species transport are governing the reactive transport. Adsorption and desorption are accounted via Robin boundary conditions. The dimensionless form of the governing equations is used in this paper.

This problem that has already been investigated by us in our previous works [

22,

23] in conjunction with deterministic methods for parameter identification, but for the completeness of the presentation we describe here it again.

The IBVP is discretized in space by Finite Element Method (FEM), and implicit discretization in time is used. The computations are performed using the FEniCS PDE computing platform [

24].

2.1. System of Equations

Steady state incompressible Stokes equations are governing the flow:

where

and

are the fluid velocity and pressure, respectively, and

is the viscosity, which we consider constant [

1,

25].

The notation

stands for the concentration of the solute in the fluid. The unsteady transfer of solute in the absence of homogeneous reactions is described by the convection–diffusion equation

Here, stands for the diffusion coefficient of a solute, which is assumed to be constant and scalar.

Mass conservation is used to specify the interface conditions at the reactive surface

. Namely, the change in the adsorbed surface concentration is equal to the flux from the fluid to the surface. Thus

where

m is the concentration of adsorbed solute on the surface [

26]. A mixed kinetic–diffusion description of adsorption is used:

The choice of

f and its dependence on

c and

m is depend on the particular reaction dynamics. There are a number of different isotherms (i.e., different functions

) to describe these dynamics. The Henry isotherm, which assumes a linear relationship between the near surface concentration and the surface concentration of the adsorbed particles, is the simplest one. It is specified as

where

is the rate of adsorption, measured in unit length per unit time, and

is the rate of desorption, measured per unit time.

2.2. Boundary and Initial Conditions

Let us denote by

the outer normal vector to the boundary. Boundary conditions on

are specified as follows. The velocity of the fluid

is prescribed at the inlet

Standard conditions are prescribed at the outlet, namely, pressure and absence of tangential force

Standard no-slip and no-penetration conditions are prescribed on the obstacles surface:

Symmetry conditions are assigned on the symmetry boundary:

The concentration of the solute at the inlet is specified as follows:

where

is assumed to be constant. On the external boundaries and at the outlet zero diffusive flux is prescribed:

Convective flux through the outlet is implicitly allowed by the above equations.

Initial conditions need to be specified to complete the formulation of the IBVP, in addition to the governing Equations (3) and (5), boundary conditions (7)–(12), and specified isotherm (6),

2.3. Dimensionless Form of the Equations

The same notations are used for the dimensionless variables (velocity, pressure, concentration). The height of the computational domain , namely l, is used for scaling spatial sizes, the scaling of the concentration is done by the inlet concentration and the scaling of the velocity is done by the inlet velocity .

Assuming the dimensionless viscosity to be equal to unity, the Stokes Equations (1) and (2) and its boundary conditions in dimensionless remain are written as

In dimensionless form (3) reads

where

is the Peclet number.

Next, (11) turns into

where the boundary condition (12) takes the form

The dimensionless form of (4) is given by

In dimensionless form the interface reaction is described as

here the Damköler numbers for adsorption and desorption are given by following:

2.4. Variational Formulation

Note that the fluid flow influences the species transport, however the species concentration does not influence the fluid flow. Due to this, the flow can be computed only once in advance. The FEM approximation of the steady state flow problem [

27] is based on variational formulation of the considered boundary value problem (1)–(10). The following functional space

is defined for the velocity

(

):

Test function

, where

For the pressure

p and the related test functions

q, it is required that

, where

Multiplying (1) by

, (2) by

q, integrating over the computational domain and taking into account the boundary conditions (7)–(10), the following system of equations is obtained with respect to

,

The following finite dimensional subspaces are used for the basis and test functions in the FEM approximation of the velocity and the pressure: , and .

The unsteady species transport problem (15)–(19) is solved numerically using finite elements. Here we define

The approximate solution

is sought from

where the following notations are used

For determining

(see (5)) we use

Symmetric Crank–Nicolson discretization method is used for time discretization (see, e.g., [

28,

29]). Let

be a step-size of a uniform grid in time such that

,

.

Equation (22) is approximated in time as follows

In a similar way for (23) we get

Zero initial conditions are posed in the considered here case,

2.5. Numerical Solution of the Forward Problem

Small pure fluid regions are added in front of the inlet and behind the outlet, as it is usual for pore scale simulations. The computational domain

is triangulated using the mesh generator software Gmsh [

30]. In order to control the accuracy of results, computations on consecutively refined grids were performed on our previous work [

23].

Taylor–Hood

elements [

31] are selected in solving Stokes equations. These are continuous

and

Lagrange elements for the velocity components and for the pressure field, respectively. The computations are performed using the PDE framework FEniCS [

24,

32].

The unsteady species transport problem (15), (18) and (19) is solved numerically using standard Lagrangian

finite elements. For details on the FE discretization, see [

33].

Sensitivity studies are performed first to evaluate the influence of the grid parameters on the accuracy. The basic set of parameters reads as follows:

The non-stationary problem is solved on dimensionless time interval

,

, with a time step

. Breakthrough curve (i.e., time dependent average outlet concentration) is used to characterize the reactive transport:

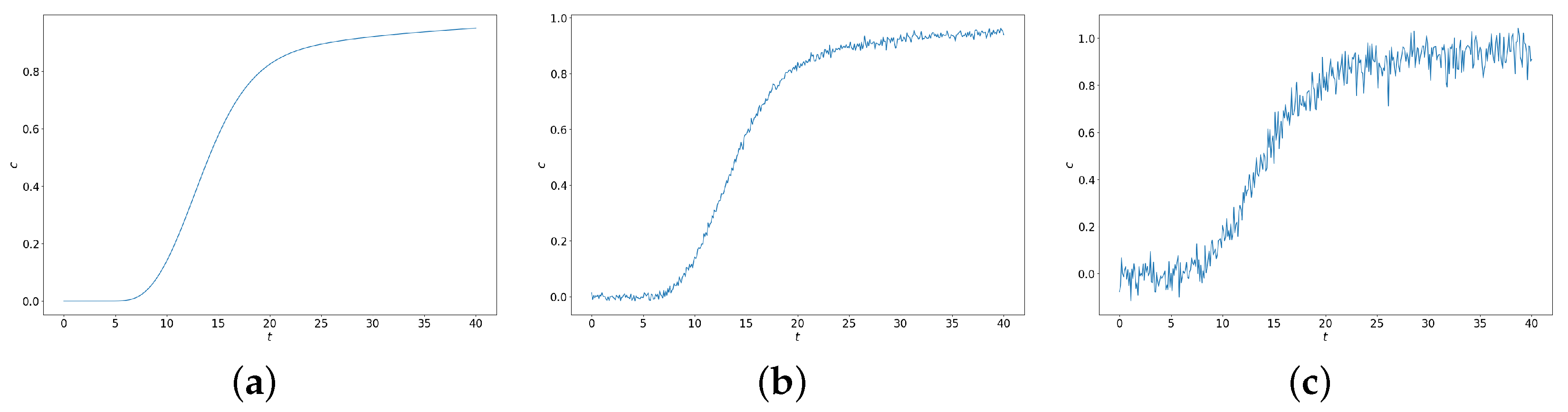

The breakthrough curve for these parameters is shown in

Figure 2a.

Detailed results of solving the direct problem can be found in our previous work [

23].

4. Markov Chain Monte-Carlo

MCMC is a computer-driven method that allows to represent a distribution by randomly sampling values from the distribution, without knowing all mathematical properties [

38,

39]. The MCMC method has a fairly simple concept and in order to apply this method, it is enough to know how to calculate the density for different samples. The name MCMC fuses two properties: Monte-Carlo and Markov chain. Monte-Carlo is the practice of evaluating the properties of a distribution by extracting random samples from the distribution. As an example, instead of finding the mean of the normal distribution by calculating it directly from the distribution’s equations, the Monte Carlo approach would be to extract a large number of random samples from the normal distribution and calculate their mean. The advantage of the Monte Carlo method is clear: calculating the mean over a large sample of numbers can be much easier than calculating the mean directly from the normal distribution’s equations. This advantage is most evident when random samples are easy to draw and distribution’s equations are difficult to work with in other ways. The property of a Markov chain is that random samples are generated using a special rule: the creation of a new random sample depends only on the current sample, hence the name—chain. A special property of the chain is that, although each new sample depends on the previous one, the new samples do not depend on any samples before the previous one, this is the Markov property. MCMC is especially useful in Bayesian inference because of its emphasis on posterior distributions, which are often difficult to work with analytically. MCMC allows the user to approximate aspects of the posterior distribution that cannot be calculated directly [

18]. The Markov chain constructed by this method in its stationary distribution will approximate the desired distribution.

4.1. Metropolis–Hastings Algorithm

The most popular MCMC method is probably the Metropolis–Hasting (MH) algorithm. MH enables us to obtain samples from any probability distribution, , given that we can compute at least a value proportional to it, hence ignoring the normalization factor. This is very useful because in a lot of problems, the hard part is to compute the normalization factor, especially in large dimensions.

The MH algorithm reads as follows:

Choose an initial value for a parameter, . This can be done randomly or by making an educated guess.

Choose a new parameter value, , by sampling from an easy-to-sample distribution, such as a Gaussian or uniform distribution, . We can think of this step as perturbing the state somehow.

Compute the probability of accepting a new parameter value by using the Metropolis–Hastings criterion:

If the probability computed on step 3 is larger than the value taken from a uniform distribution on the [0, 1] interval, we accept the new state; otherwise, we stay in the old state.

We iterate from step 2 until we have enough samples.

4.2. Adaptive Metropolis Algorithm

The weak point of the MCMC in general and MH in particular is that they require a proposal that fits the target distribution, so the sampling was efficient. Finding such proposal can be very time consuming and therefore expensive process because, requires adjustment by trial and error. Naturally, there were developed various algorithms to adjust the proposals in the run-time adaptively [

40]. One of the simplest adaptive algorithms, which was chosen in this work as an alternative to the MH algorithm, is the Adaptive Metropolis (AM) algorithm [

41], which calculates the covariance matrix of the resulting chain and uses it as a proposal. In the AM algorithm, a new point does not depend only on the previous point, it depends on all previously selected points, thus, it loses the Markov property, but nevertheless, if the adaptation is based on an increasing part of the chain, it can be proved that the algorithm gives the correct ergodic result.

Adaptive Metropolis is a random walk algorithm that uses Gaussian proposal with a covariance that depend on the chain generated so far,

where

is a scaling parameter which taken as

and depends only on the dimension

d of the sampling space,

is a constant that we can choose very small,

is the

d—dimensional identity matrix, and

, defines the length of the initial non-adaptation period.

The choice of the length of the initial non-adaptive part of the simulation,

, is free. It is not necessary to update the covariance every step, this can be done every

th step only using the entire history. This adaptation method improves the mixing properties of the algorithm, thereby increasing the simulation efficiency, which is commonly good for large dimensions. In this work,

was chosen equal to 30. The role of the parameter

is to ensure that

will not become singular. In practical computations

can be safely set to zero. The initial covariance

should be strictly positive definite matrix and must move chain sufficiently [

41]. The algorithm is exactly the same as in the MH algorithm, with the exception of proposal, which is given as a Gaussian distribution with mean

and covariance (

30). Additionally, the item is added, that the covariance matrix must be updated every

th step using the entire history.

Roberts et al. [

42] proved that for

, the asymptotically optimal acceptance rate is 0.234. This is a significant refinement of the general principle, where the acceptance rate must necessarily be far from 0 and far from 1.

5. Results

Bayesian estimation using MCMC sampling for identification of adsorption and desorption rates are performed in combination with reactive flow considered at the pore-scale. The reactive transport is governed by incompressible Stokes equations, coupled with convection–diffusion equation for species’ transport. Adsorption and desorption are accounted via Robin boundary conditions. The Henry isotherm is considered for describing the reaction terms. Synthetic experimental measurements of concentration at the outlet of the domain is provided to carry out the identification procedure. Metropolis–Hastings and Adaptive Metropolis algorithms are implemented.

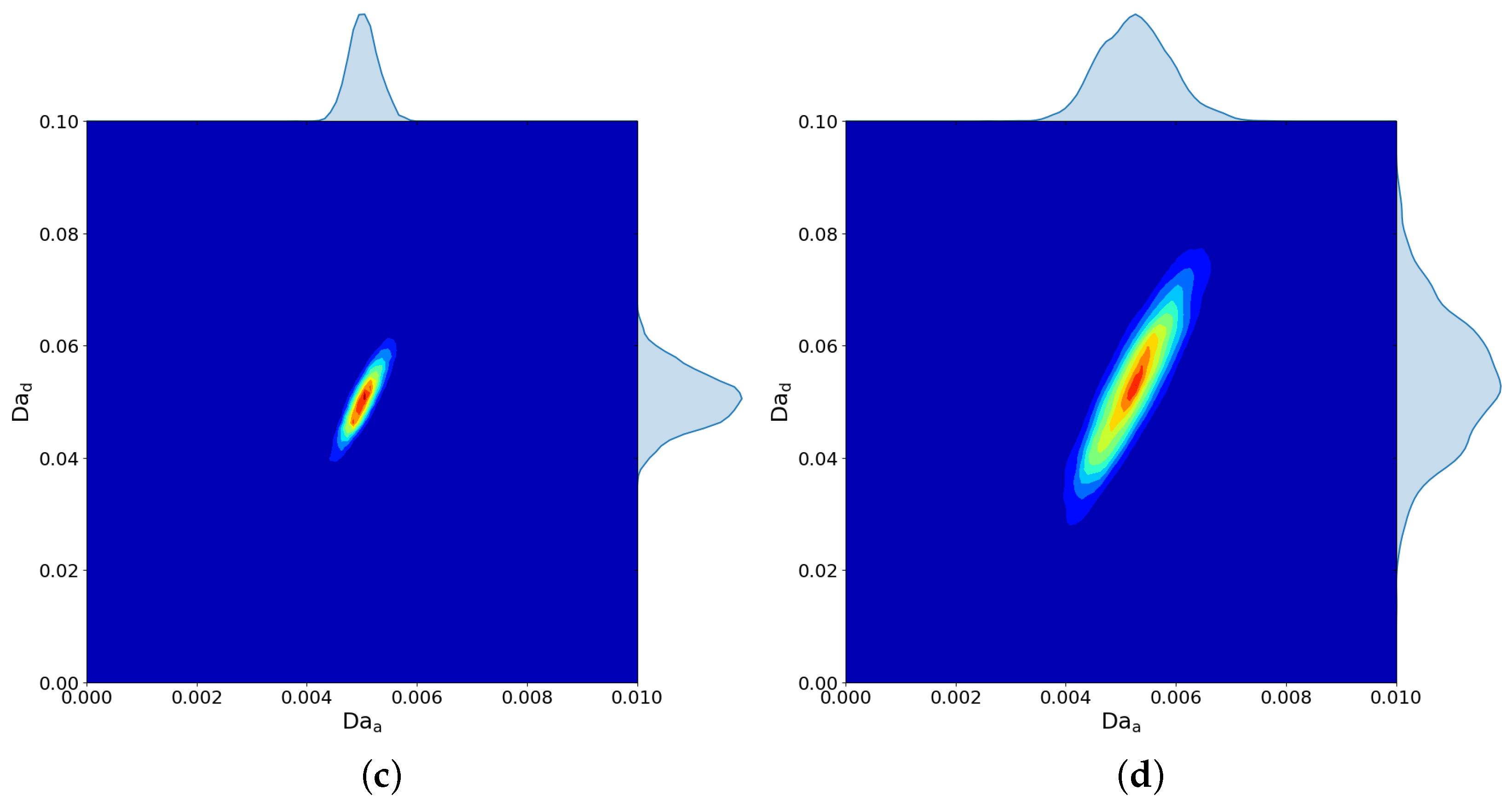

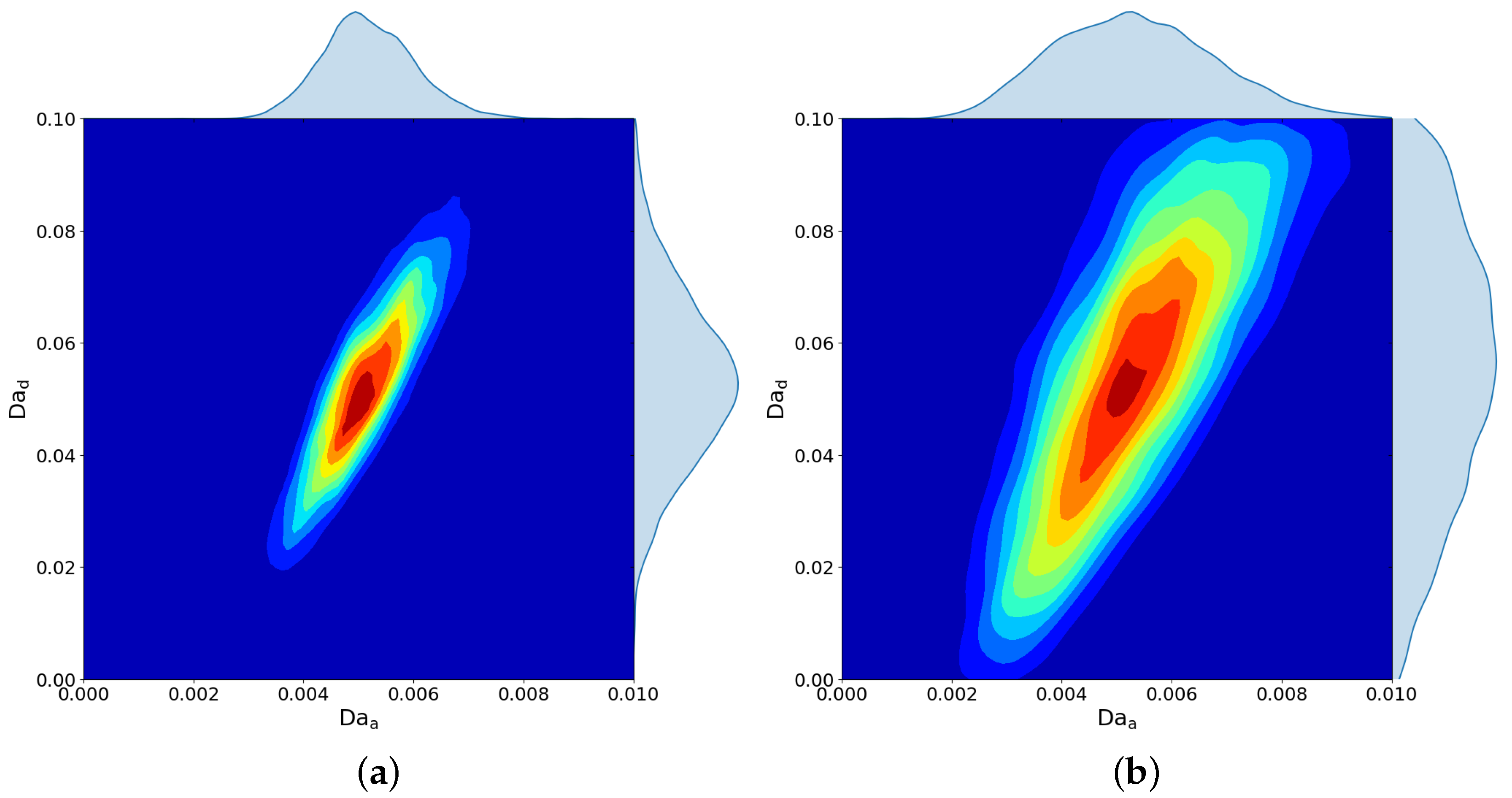

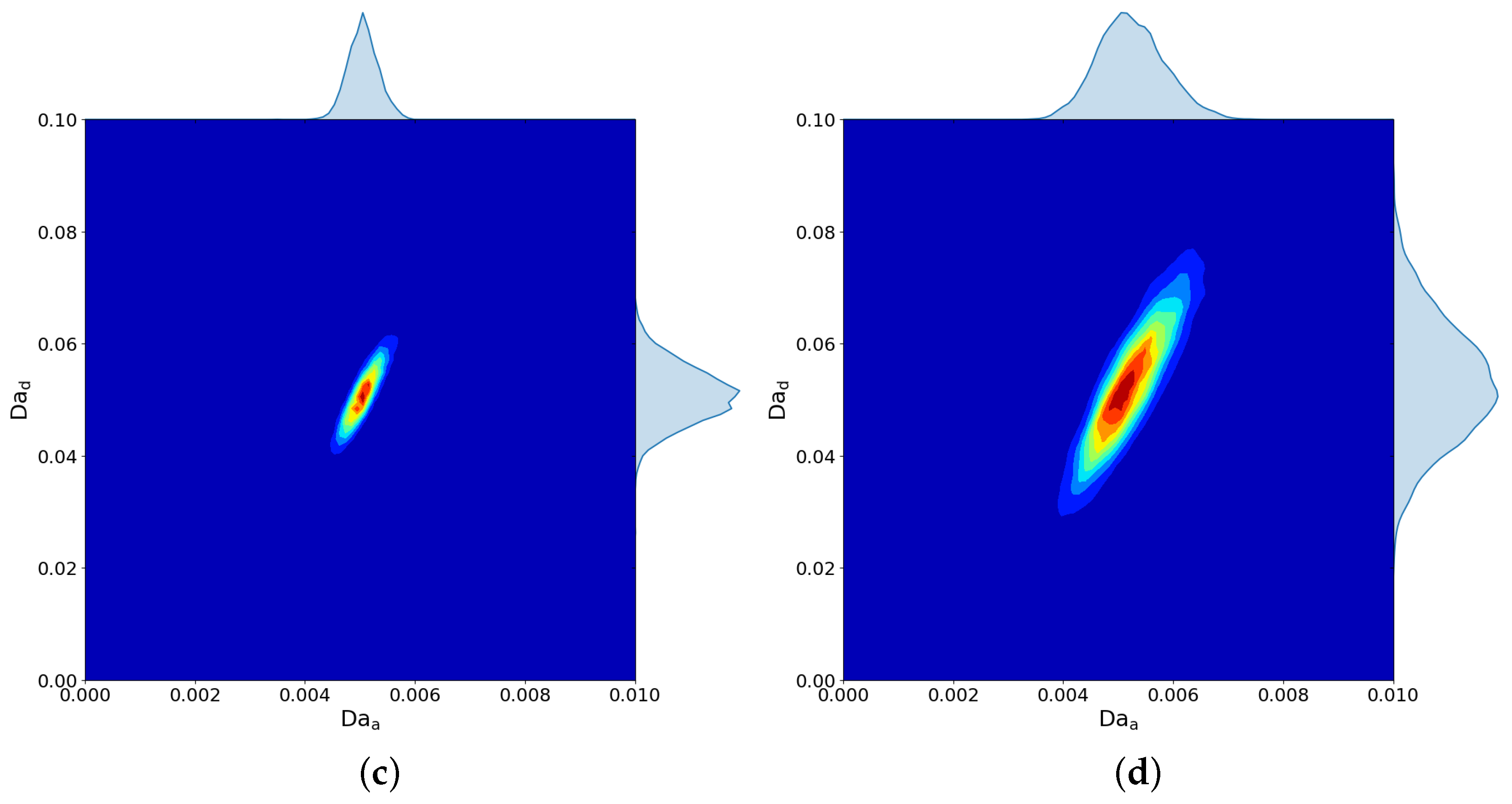

Figure 3 and

Figure 4 clearly show that the higher noise amplitude leads to higher uncertainty and the larger count of observation data leads to more accurate parameter estimate. Results of MH is presented in

Figure 3 and AM is presented in

Figure 4. As we can see, the results quite similar and AM results are obtained without the time-consuming trial–error proposal tuning procedure as in the MH algorithm.

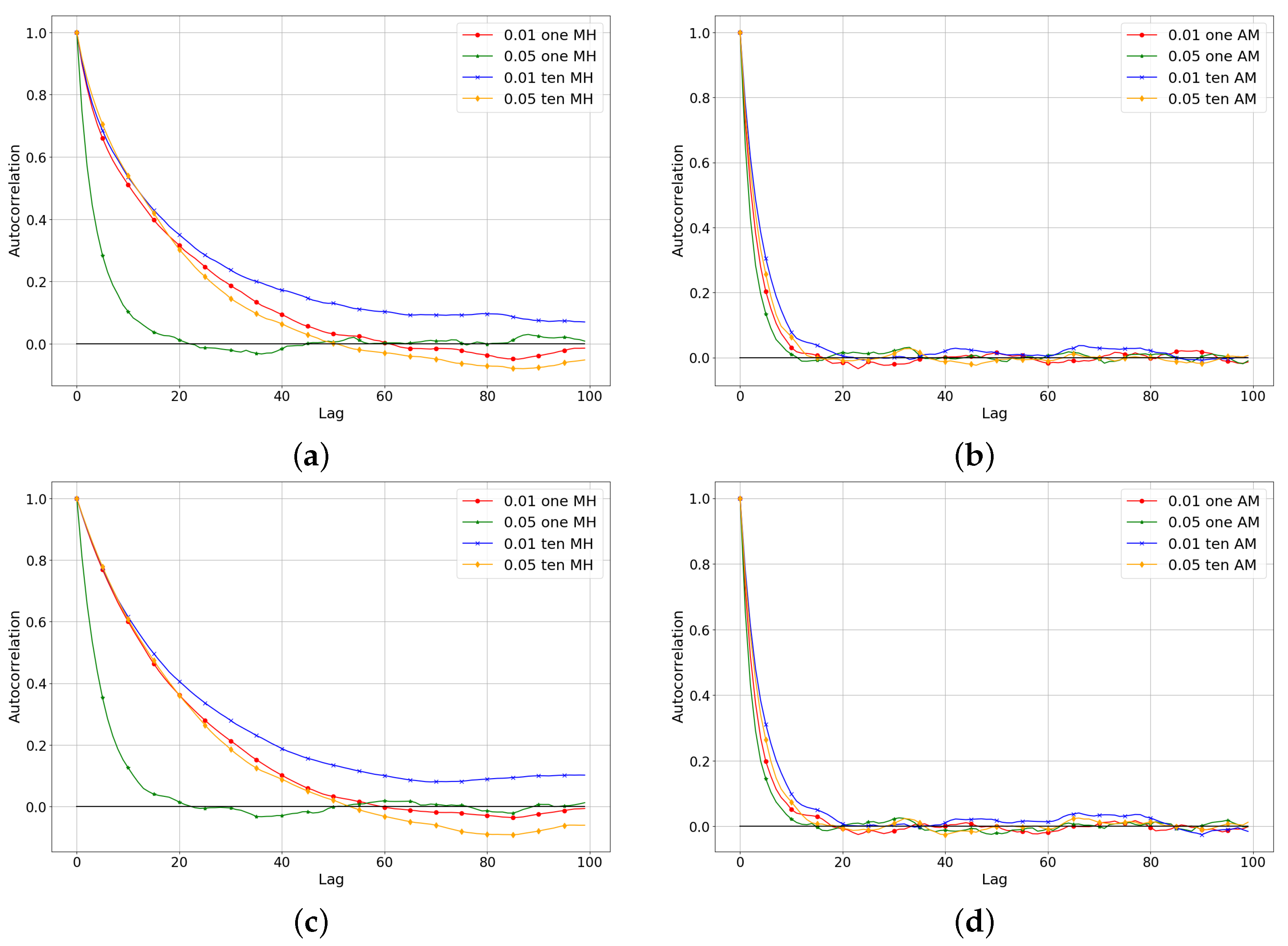

Table 1,

Figure 5 and

Figure 6 uses the following rule to describe: “

N Alg”, where

stands for noise variance,

N—number of measurements, and then the name of the MCMC algorithm. For example, “0.01 ten MH” means a noise variance of 0.01 for ten observations sampled using the Metropolis–Hastings algorithm.

In order to obtain a more quantitative measure of the quality of a sample, it is necessary to look at the correlation structure. The autocorrelation of a signal is a useful tool to analyze the independency of realizations. Autocorrelation calculating as follows: from the generated sample {

} of size

N = 15,000, calculate the mean,

and the lagged autocorrelations of the centered components,

Autocorrelations of all cases for MH and AM are presented in

Figure 5a and

Figure 5b, respectively. For the AM algorithm, the autocorrelation falls off quickly, which says that the chain is well mixed. It is common for the MH algorithm to have high autocorrelation, since it has the Markov property (each subsequent sample is drawn by using the current sample).

When tuning the MH algorithm, necessary to look at the acceptance ratio. The acceptance rate depends on the problem but typically for 1-D problems, the acceptance rate should be around 0.44 for one parameter and around 0.234 for more than five parameters as mentioned earlier. Look at

Figure 6, where the plotted acceptance rate exceeds the iteration for all cases that we consider here. For almost all cases, the acceptance rate lies around 0.4 except for two cases: AM with one measurement with noise variance 0.05 and AM with ten measurements with noise variance 0.01. For the first case, this happened due to the fact that the target functional proportional to the posterior distribution is very large and thus has a large acceptance ratio. This is also the reason that in

Figure 5a,c the case with 0.05 noise variance for one measurement has small autocorrelation: the MH algorithm has a proposal with a wide step, so the samples get further from each other and the correlation between them reduces. For the second case, on the contrary, this happened due to the fact that the target functional proportional to the posterior distribution has very small sizes and thus has a small acceptance ratio.

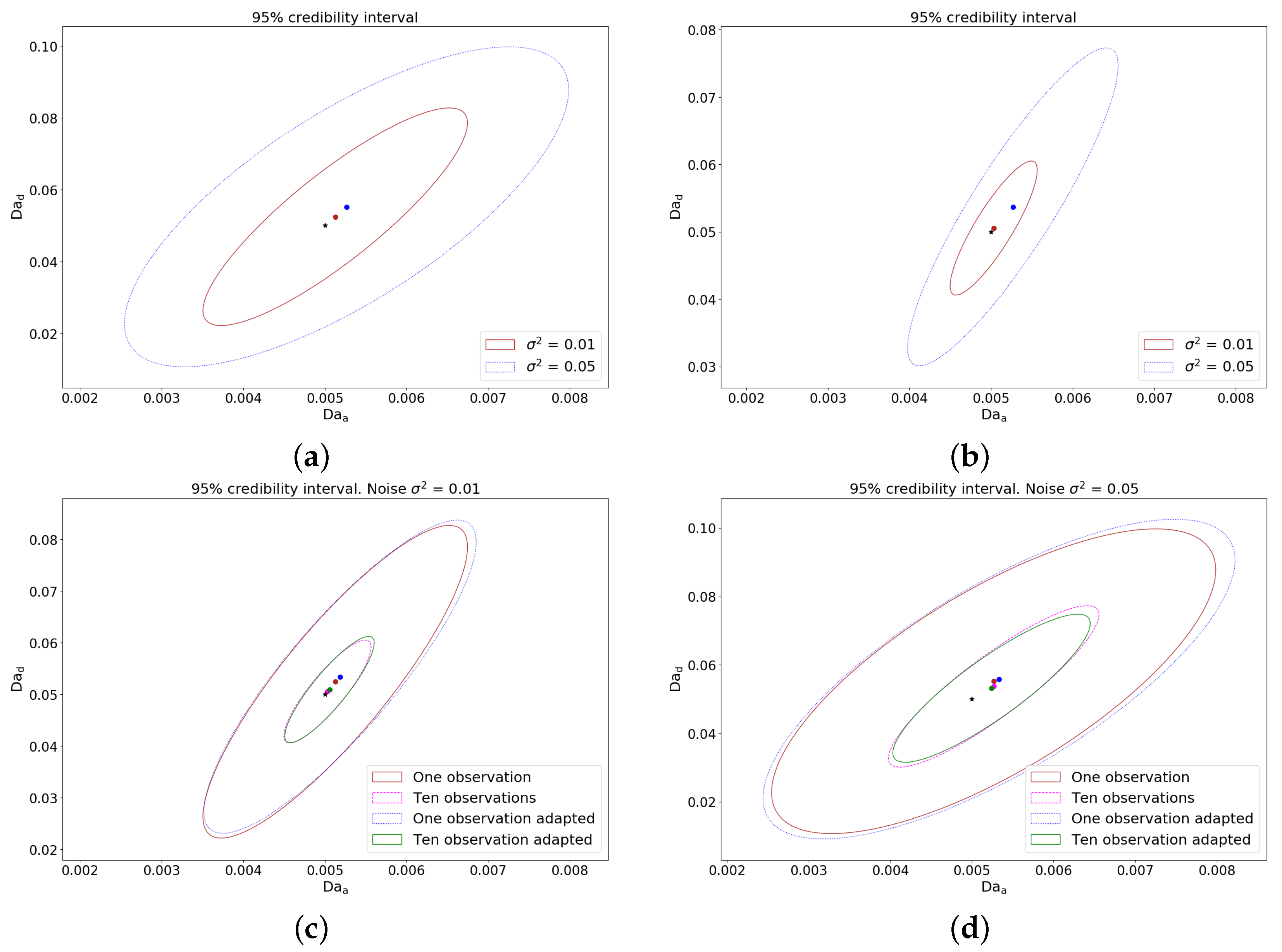

From the posterior PDF, the Bayesian credible interval can be computed, which is the shortest interval containing

probability. This means that there is a

probability for the observational data, that the true value

falls within credible interval. It is easy to see that in

Figure 7c,d MH and AM has just a little differences: AM is slightly smaller. In fact, this is completely normal, as it cannot be better or worse than a well-tuned MH algorithm. In these figures, it can also be seen how credible intervals are reduced with the addition of observations. The obtained results even for noise variance

are comparable with work we have done in [

22,

23] using deterministic and stochastic approaches. The ★ symbol in these figures shows the exact parameters and the • symbol shows the mean values.

It can be seen from the simulation results in

Table 1 that the estimated mean and maximum PDF values have a relative error of less than 1%. The last two columns of the table show the error in the

norm and have already been converted to percentages.

6. Conclusions

The determination of reaction rates, namely adsorption and desorption parameters for pore scale transport in porous media using MCMC sampling within the framework of Bayesian inference, is discussed. The parameter identification procedure is carried out via the measured concentration of the species at the outlet of the domain. Metropolis–Hastings and Adaptive Metropolis algorithms were implemented and compared. Credible intervals have been plotted from sampled posterior distributions for both algorithms. The impact of the noise in the measurements for the Bayesian estimation procedure is studied. The count of observations was increased up to ten for both algorithms to decrease credible interval. The sample analysis using the autocorrelation function and acceptance rate is performed to estimate mixing of the Markov chain.

The Bayesian approach is a fairly powerful tool, capable not only of identifying the range of acceptable values using a credible interval, not to mention the individual parameters found through the mean or maximum of the posterior density, but also to quantify the associated uncertainty. However, sampling for the Bayesian approach can be quite costly, especially in pore-scale problems, so it may be useful to apply order reduction techniques to the model before applying this approach.

Previously, we conducted research with different approaches on the same mathematical model in the same geometry of the region (for more details, see [

23]). The following were implemented: a deterministic approach, where the residual functional was built on a uniform grid; a stochastic approach, when uniformly distributed random points were generated via Sobol sequences [

43,

44,

45] and the functional was constructed from them; the Monte Carlo method, when the functional was built for 10 different realizations of noise, thereby looking at the intersections of the regions of admissible sets. In those approaches, the region of admissible sets was built through a given threshold, which depended on the noise in the observations. Compared to those approaches, all the results obtained in this work are comparable with the previous results. In the Bayesian approach, the region of admissible sets can be determined through the credible interval, where in

Figure 7 you can estimate how its size changes depending on the variance of the noise and the number of observations. Compared to previous approaches, this approach is more elegant, but more computationally expensive. The AM algorithm is preferred as it does not need time-consuming tuning like the MH algorithm.

As this article is part of our systematic study and takes into account the results obtained, it is necessary to work on the reduced order model, which we will do in future work.