3.1. Modeling of Ionospheric Parameter Time Series

The ionosphere is the upper region of the Earth’s atmosphere. It is located at heights from 70 to 1000 km and higher, and it affects radio wave propagation [41]. Ionospheric anomalies occur during extreme solar events (solar flares and particle ejections) and magnetic storms. They can cause serious malfunctions in modern ground-based and space-based technical equipment [42]. An important parameter characterizing the state of the ionosphere is the critical frequency of the ionospheric F2 layer (foF2). The foF2 time series have a complex structure: they contain seasonal and diurnal components, as well as local features of various shapes and durations that occur during ionospheric anomalies. Because intense ionospheric anomalies can cause failures of technical systems, their timely detection is an important applied problem.

In the experiments, we used hourly (1969–2019) and 15-min (2015–2019) foF2 data obtained by vertical radio sounding of the ionosphere at Paratunka station (53.0° N, 158.7° E, Kamchatka, Russia, IKIR FEB RAS). The proposed HMTS was identified separately for the hourly and the 15-min foF2 data.

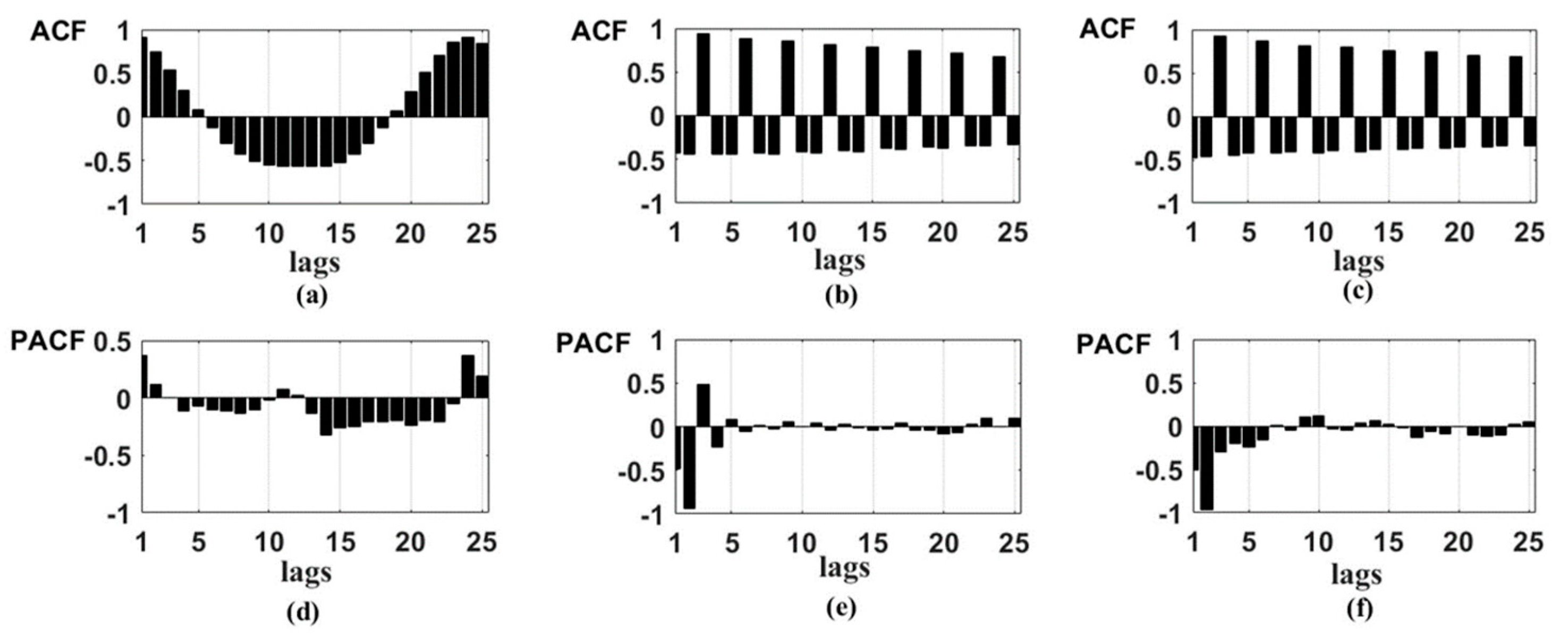

To identify HMTS regular components, we used the foF2 data recorded during the periods of absence of ionospheric anomalies. The application of Algorithm 1 showed that the components

and

are stationary (their ACFs are damped), so ARIMA models can be identified for them.

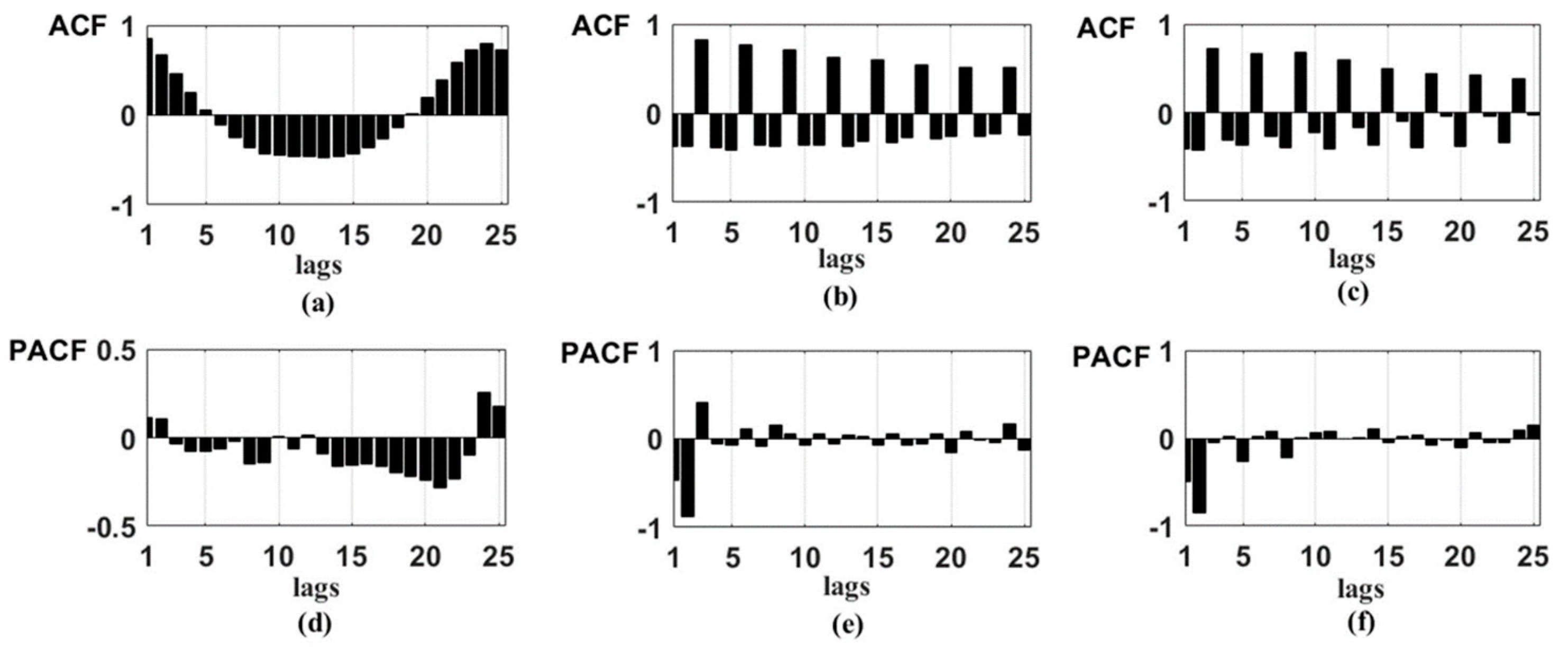

Figure 2 and Figure 3 show the ACF and PACF of the initial foF2 time series, as well as of the components

and

. The results confirm stationarity of the components

and

. An analysis of the PACF shows that AR models of orders 2 and 3 can be identified for the first differences of these components. The results in Figure 2 and Figure 3 also show that the initial foF2 time series are non-stationary and, therefore, cannot be approximated by an ARIMA model without a prior wavelet decomposition.
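The ACF/PACF inspection described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names (`acf`, `pacf`, `significant_pacf_lags`), the approximate significance band of 2/√n, and the synthetic AR(2) series standing in for the first differences of a component are all assumptions made for the example.

```python
import math
import random


def acf(x, nlags):
    """Sample autocorrelations r_0..r_nlags of the sequence x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
            for k in range(nlags + 1)]


def pacf(x, nlags):
    """Sample partial autocorrelations via the Durbin-Levinson recursion."""
    r = acf(x, nlags)
    phi_prev = []          # coefficients of the AR(k-1) fit
    out = [1.0]
    for k in range(1, nlags + 1):
        num = r[k] - sum(phi_prev[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi_prev[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        phi_new = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j]
                   for j in range(k - 1)]
        phi_new.append(phi_kk)
        phi_prev = phi_new
        out.append(phi_kk)
    return out


def significant_pacf_lags(x, nlags):
    """Lags whose PACF lies outside the approximate band of 2/sqrt(n)."""
    band = 2.0 / math.sqrt(len(x))
    p = pacf(x, nlags)
    return [k for k in range(1, nlags + 1) if abs(p[k]) > band]


# Synthetic stand-in for the first differences of a component (assumption):
# an AR(2) process, whose PACF should be clearly non-zero at lags 1 and 2.
rng = random.Random(0)
z = [0.0, 0.0]
for _ in range(2000):
    z.append(0.6 * z[-1] - 0.3 * z[-2] + rng.gauss(0.0, 1.0))

print(significant_pacf_lags(z, 10))
```

A PACF that is significant at the first few lags and stays inside the band afterwards suggests an AR model of that order. A slowly decaying ACF, as for the initial foF2 series, points to non-stationarity.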

According to ratio (10) and based on the PACF of the first differences of the components

and

(Figure 3e,f), we obtain the HMTS regular component

where

,

,

,

,

,

. Estimated parameters for

and

are presented in Table 1. The parameters were estimated separately for different seasons and different levels of solar activity.

Based on the data from Table 1, we obtain

- (1) for wintertime:

- (2) for summertime and high solar activity:

- (3) for summertime and low solar activity:

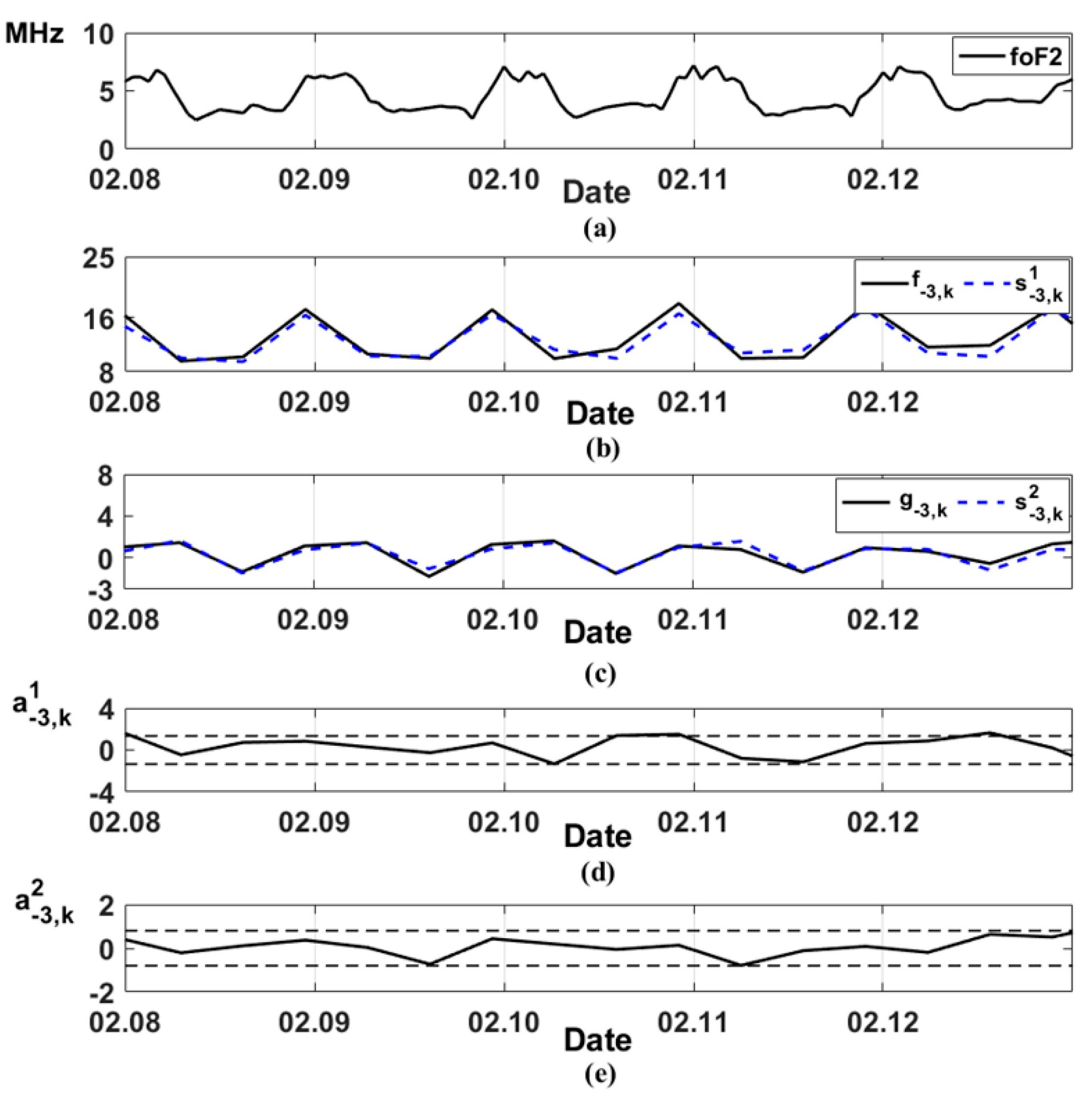

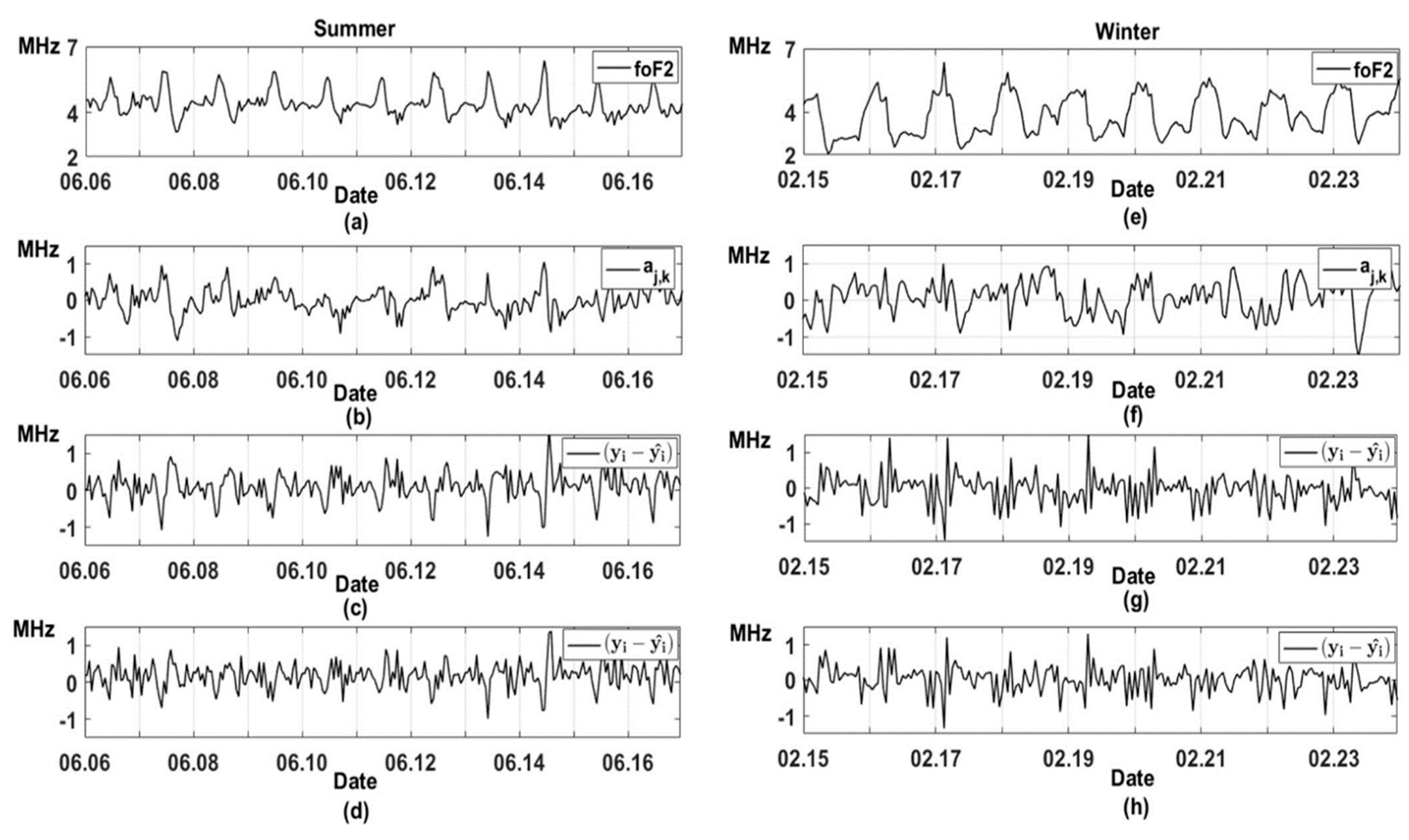

Figure 4 shows the modeling results for HMTS regular components (

and

) during periods without ionospheric anomalies. The model errors remain within the confidence interval, which indicates that the models are adequate.

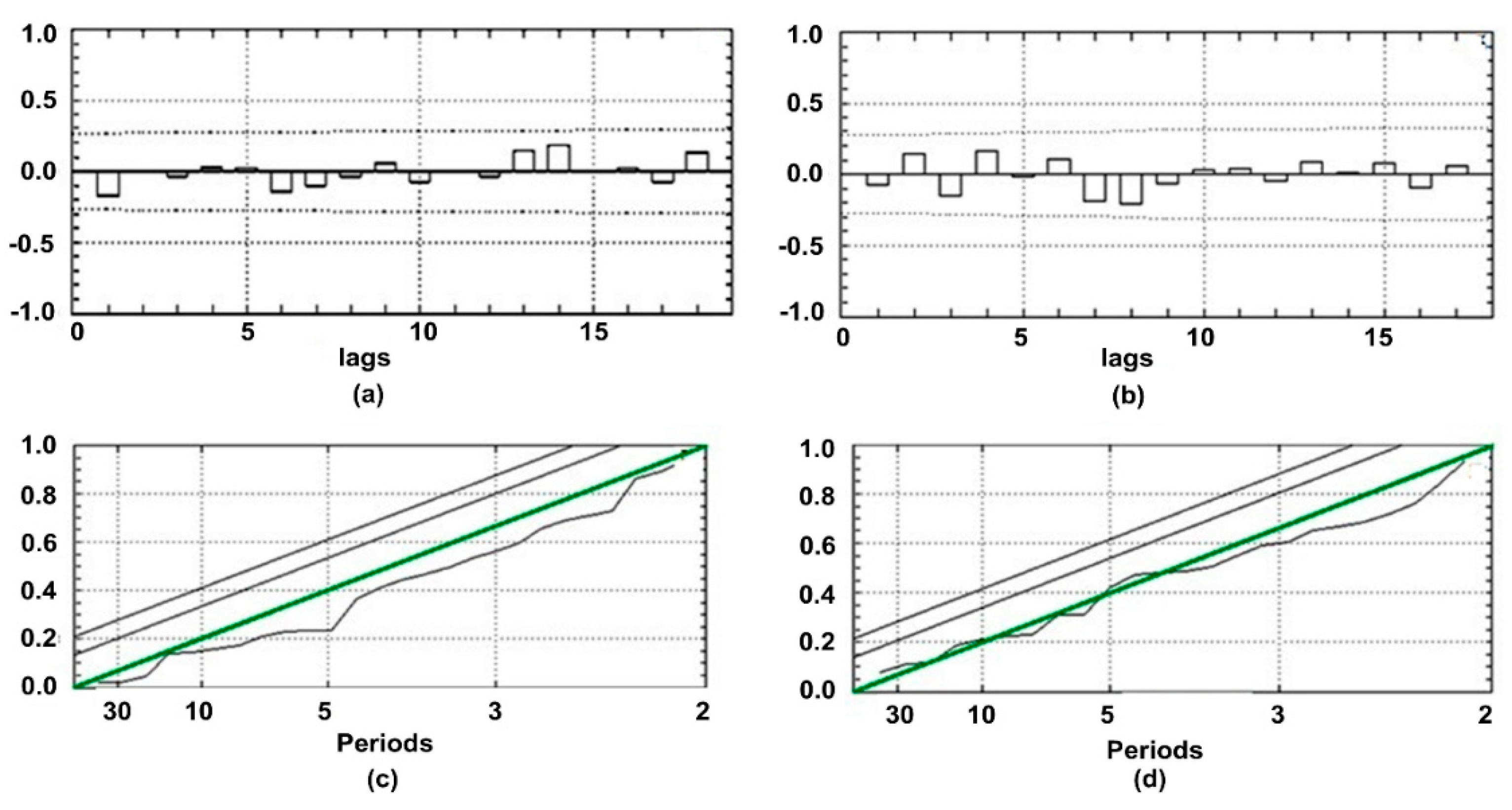

Table 2, Table 3, and Figure 5 show the results of validation tests for the obtained models. The tests were carried out on foF2 data that were not used at the model identification stage. To verify the models, we used the cumulative fitting criterion (Table 2 and Table 3), analysis of the ACF of the model residual errors (Figure 5a,b), and the normalized cumulative periodogram (Figure 5c,d).

Based on the cumulative fitting criterion [20], the fitted model is satisfactory if

$$Q = n \sum_{k=1}^{K} r_k^2(a)$$

is distributed approximately as $\chi^2(K - p - q)$, where $K$ is the number of first autocorrelations of the model errors under consideration, $p$ is the AR model order, $q$ is the MA model order, $r_k(a)$ are the autocorrelations of the model error series, $n = N - d$, $N$ is the series length, and $d$ is the model difference order.

According to the criterion, if the model is inadequate, the average value of $Q$ grows. Consequently, the model adequacy can be verified by comparing $Q$ with the table of the $\chi^2$ distribution. The results in Table 2 and Table 3 show that the $Q$ values of the estimated models, at the adopted significance level, do not exceed the table $\chi^2$ values. The model adequacy is also confirmed by the analysis of the residual error ACF (Figure 5a,b) and the normalized cumulative periodogram (Figure 5c,d).
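The cumulative fitting check can be sketched as follows. The sketch assumes the criterion is the portmanteau statistic Q = n Σ r_k² over the first K residual autocorrelations, compared with a χ² table value for K − p − q degrees of freedom. The function names, the choice K = 20, and the 0.05 significance level used to pick the table value are illustrative assumptions, not values taken from the paper.

```python
import random


def residual_acf(a, k_max):
    """Autocorrelations r_1..r_k_max of the model error series a."""
    n = len(a)
    mean = sum(a) / n
    dev = [v - mean for v in a]
    c0 = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
            for k in range(1, k_max + 1)]


def cumulative_fit_statistic(residuals, k_max):
    """Q = n * sum of squared residual autocorrelations.

    residuals is the error series of the fitted model, so its length
    already equals n = N - d (series length minus difference order).
    """
    r = residual_acf(residuals, k_max)
    return len(residuals) * sum(v * v for v in r)


def model_is_adequate(residuals, k_max, p, q, chi2_critical):
    """Compare Q with a chi-square table value for k_max - p - q d.o.f.

    chi2_critical must be looked up by the caller for the chosen
    significance level; p and q only document which row was used.
    """
    assert k_max - p - q > 0, "need positive degrees of freedom"
    return cumulative_fit_statistic(residuals, k_max) <= chi2_critical


# Illustrative use with synthetic residuals (assumption): K = 20 and an
# AR(2) model give 18 degrees of freedom; 28.869 is the standard table
# value of the chi-square distribution for 18 d.o.f. at the 0.05 level.
rng = random.Random(1)
white = [rng.gauss(0.0, 1.0) for _ in range(500)]
print(cumulative_fit_statistic(white, 20))
print(model_is_adequate(white, 20, p=2, q=0, chi2_critical=28.869))
```

Residuals that still carry structure, such as a trend or an unmodeled periodicity, have large autocorrelations at low lags and drive Q far above the table value.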

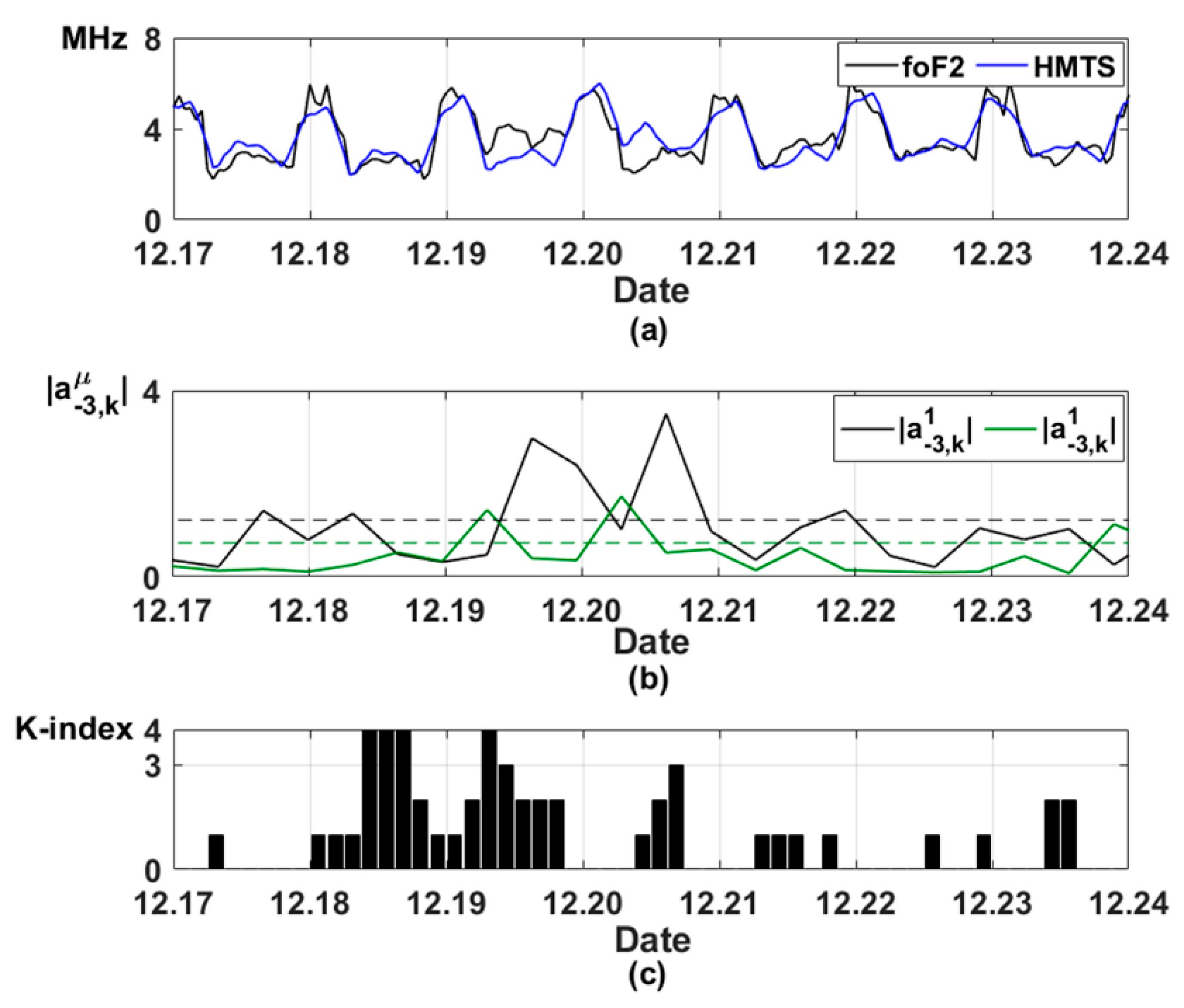

Figure 6a,b shows the results of modeling the hourly foF2 data during the magnetic storm on 18 and 19 December 2019. Figure 6c shows the geomagnetic activity index K (K-index), which characterizes geomagnetic disturbance intensity. The K-index takes values from 0 to 9 and is estimated for each three-hour interval. It is known that during increased geomagnetic activity (K > 3), anomalous changes are observed in ionospheric parameters [43]. The results in Figure 6 show that the model errors increase as the K-index rises and the magnetic storm develops (Figure 6b), which indicates the occurrence of ionospheric anomalies. The results show that the HMTS detects ionospheric anomalies successfully.

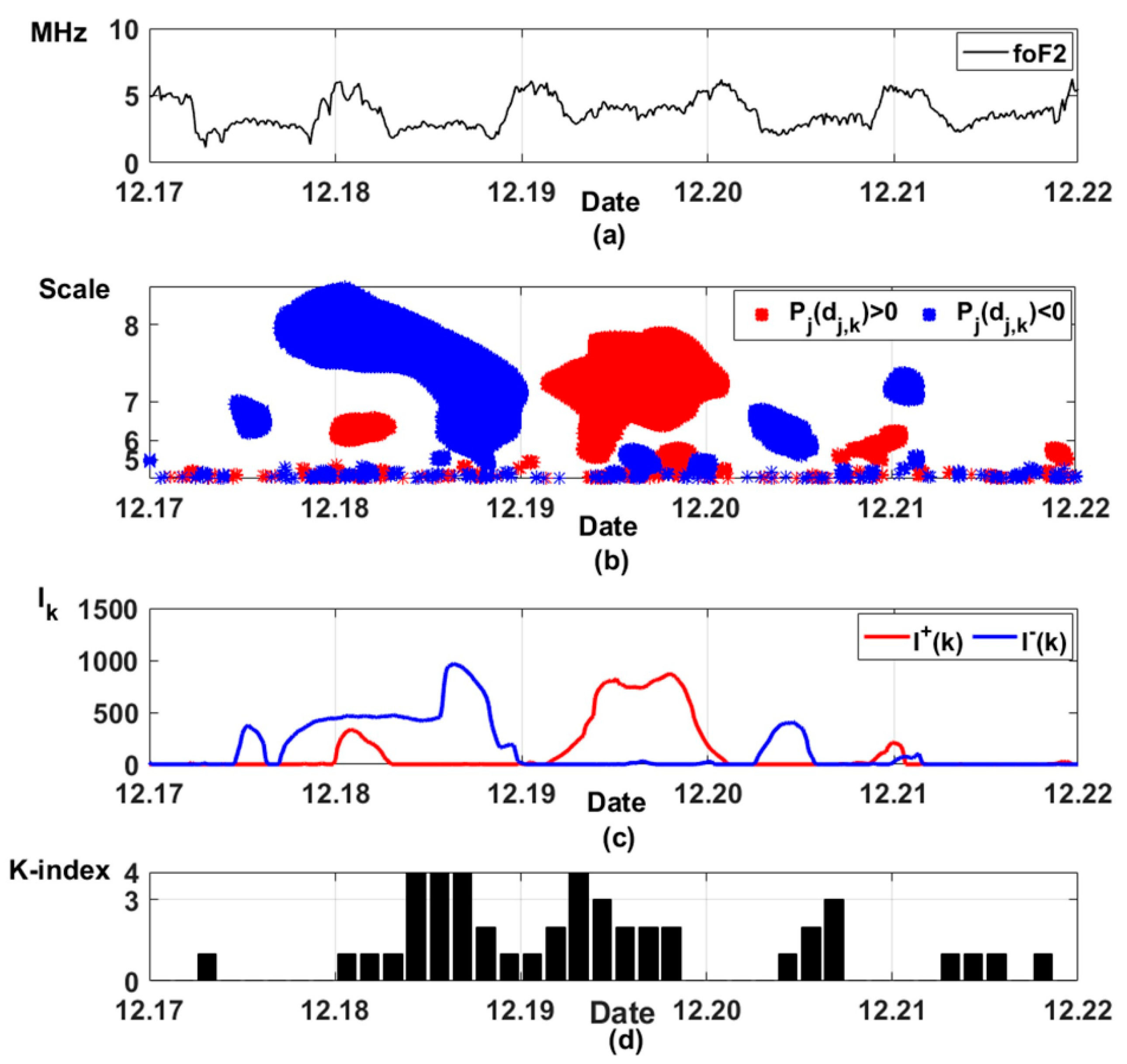

Figure 7 shows the results of the application of operation (11) to 15-min foF2 data during the same magnetic storm. Based on operation (11), ionospheric anomaly occurrences are determined by the threshold function

with the thresholds

.

In this paper, we used the thresholds

where the coefficient

was estimated by minimizing a posteriori risk (ratio (15)),

is the average value calculated in a moving time window with the length

(it corresponds to the interval of 5 days).
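A thresholding step of this kind can be sketched as below. The exact form of operation (11) and of the thresholds is not reproduced here. The sketch assumes a threshold equal to a coefficient `u` times the standard deviation around the mean in a trailing window, and all names (`moving_threshold_anomalies`, `window`, `u`) are hypothetical. For 15-min data, a 5-day window corresponds to 5 × 24 × 4 = 480 samples.

```python
import math


def moving_threshold_anomalies(d, window, u):
    """Flag each sample of d as +1 (positive anomaly), -1 (negative) or 0.

    Assumed rule (not taken from the paper): a sample is anomalous when it
    deviates from the mean of the preceding `window` samples by more than
    u times their sample standard deviation.
    """
    flags = [0] * len(d)
    for t in range(window, len(d)):
        ref = d[t - window:t]                  # trailing reference window
        mean = sum(ref) / window
        var = sum((v - mean) ** 2 for v in ref) / (window - 1)
        threshold = u * math.sqrt(var)
        if d[t] - mean > threshold:
            flags[t] = 1
        elif d[t] - mean < -threshold:
            flags[t] = -1
    return flags


# Toy series (assumption): a quiet background followed by one strong
# increase and one strong decrease.
series = [0.0, 1.0] * 10 + [10.0, -10.0]
print(moving_threshold_anomalies(series, window=20, u=3.0))
```

In practice the coefficient would come from the risk minimization mentioned above rather than being fixed by hand. The first `window` samples are never flagged because no full reference window exists for them.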

Positive

and negative

anomalies were considered separately. Positive anomalies (shown in red in Figure 7b) correspond to an anomalous increase in foF2 values. Negative anomalies (shown in blue in Figure 7b) correspond to an anomalous decrease in foF2 values. To evaluate the intensity of ionospheric anomalies, we used the value

Assessment of the intensity of positive

and negative

ionospheric anomalies is shown in Figure 7c, where positive anomalies are shown in red and negative ones in blue. Figure 7d shows the K-index values. The results show the occurrence of a negative ionospheric anomaly during the initial and the main phases of the magnetic storm (18 December 2019), and a positive ionospheric anomaly during the recovery phase of the storm (19 December 2019). The observed dynamics of the ionospheric parameters are characteristic of periods of magnetic storms [43]. The results show that the HMTS is effective for detecting ionospheric anomalies of different intensities.

3.2. Comparison of HMTS with NARX Neural Network

To evaluate the HMTS efficiency, we compared it with the NARX neural network [44]. The NARX network is a nonlinear autoregressive neural network with exogenous inputs, and it is often used to forecast time series [44,45,46,47]. The architecture of recurrent neural networks can take different forms. There are NARX with a Series-Parallel Architecture (NARX SPA) and NARX with a Parallel Architecture (NARX PA) [44,45].

The dynamics of the NARX SPA model is described by the equation

where

is the mapping function implemented by the neural network,

is the neural network output,

are neural network inputs,

are past values of the time series.

In NARX PA, the network outputs are fed back to the network input instead of the past values of the time series.
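The difference between the two architectures can be illustrated with a small sketch. It is not a neural network: `toy_model` is a hypothetical stand-in for the trained mapping function, exogenous inputs are omitted for brevity, and the function names are assumptions made for the example. The series-parallel loop always predicts from observed past values, while the parallel loop feeds its own outputs back.

```python
def predict_series_parallel(f, y, n_lags):
    """One-step predictions; each uses the observed values y[t-n_lags:t]."""
    return [f(y[t - n_lags:t]) for t in range(n_lags, len(y))]


def predict_parallel(f, y_init, n_steps):
    """Closed-loop predictions; each output is fed back as a future input."""
    n_lags = len(y_init)
    history = list(y_init)
    outputs = []
    for _ in range(n_steps):
        y_hat = f(history[-n_lags:])
        outputs.append(y_hat)
        history.append(y_hat)      # feedback replaces the true observation
    return outputs


def toy_model(past):
    """Hypothetical stand-in for the trained network (two lags)."""
    return 0.5 * past[-1] + 0.25 * past[-2]


observed = [1.0, 2.0, 1.5, 1.8, 1.6, 1.7]
print(predict_series_parallel(toy_model, observed, n_lags=2))
print(predict_parallel(toy_model, observed[:2], n_steps=4))
```

Because the parallel form never sees the true observations after initialization, any error in one prediction propagates into all later inputs.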

The neural networks were trained separately for different seasons and different levels of solar activity. During the training, we used the data for the periods without ionospheric anomalies. We obtained the networks with delays

and

for each season. The results of the networks are shown in

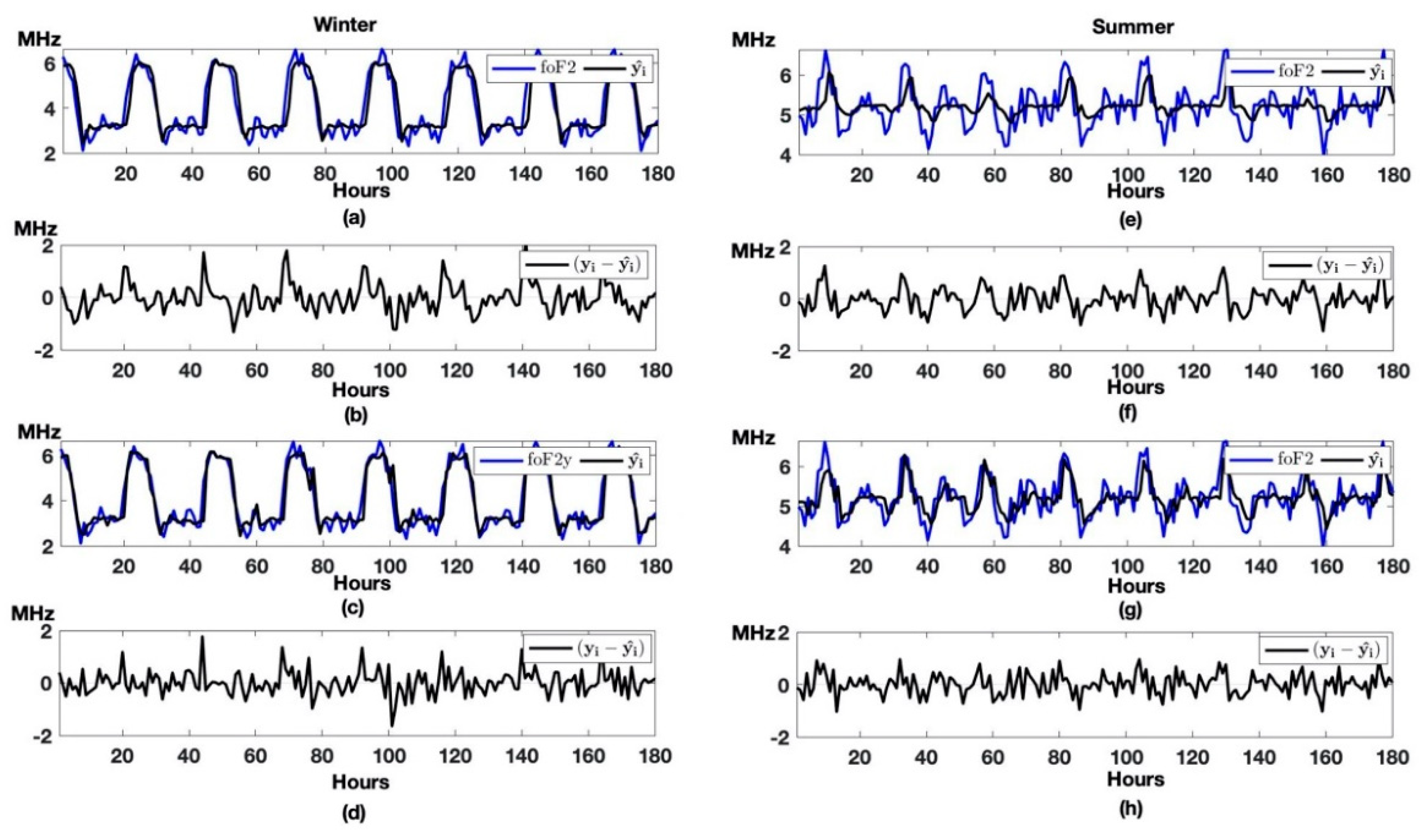

Figure 8.

Table 4 shows the standard deviations of errors (SD) of networks, which were determined as

The analysis of the results (Figure 8, Table 4) shows that the NARX SPA predicts the data with smaller errors than the NARX PA. Feeding the past time series values (rather than the network outputs) to the NARX SPA network input made it possible to obtain a more accurate data prediction. The comparison of the NARX SPA with the HMTS is presented below.

Figure 9 shows the results of ionospheric data modeling based on HMTS and NARX SPA during periods without ionospheric anomalies. The model errors have similar values for the winter and summer seasons and vary within the interval [−1, 1] for both HMTS and NARX SPA.

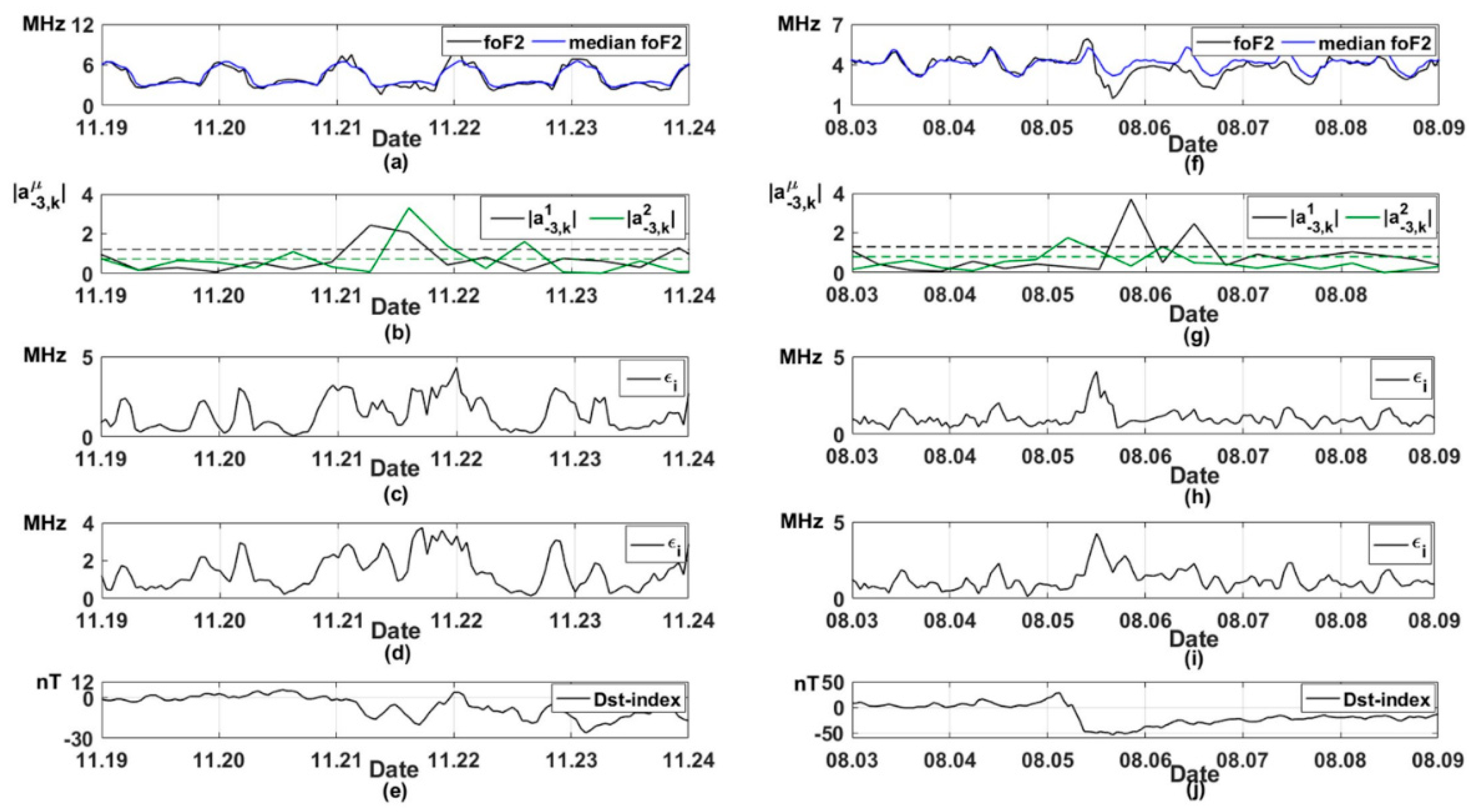

Figure 10 shows the results of the application of HMTS and NARX SPA for hourly foF2 data during magnetic storms that occurred on 21–22 November 2017 and 5–6 August 2019. NARX SPA errors were calculated in a 3-h moving time window:

.

Figure 10e,j shows the geomagnetic activity Dst-index, which characterizes geomagnetic disturbance intensity during magnetic storms. The Dst-index takes negative values during magnetic storms. The increases in HMTS and NARX SPA errors during the analyzed magnetic storms (Figure 10b–d,g–i) indicate the occurrence of ionospheric anomalies. The results show that both HMTS and NARX SPA detect ionospheric anomalies successfully. However, in wintertime an increase in NARX SPA errors is also observed before and after the magnetic storm (Figure 10c,d), which indicates the presence of false alarms.

The results of detecting ionospheric anomalies based on HMTS and NARX SPA are shown in Table 5, Table 6, Table 7 and Table 8. The estimates were based on statistical modeling. The HMTS results are shown for the 90% confidence interval. The analysis of the results shows that the efficiency of NARX SPA exceeds that of HMTS during high solar activity. However, the frequency of false alarms for HMTS is significantly lower than that for NARX SPA.