1. Introduction

This paper concerns the analysis of (one-dimensional) inhomogeneous continuous-time Markov chains (CTMC) with discrete state space. Inhomogeneity means that some or all transition intensities are non-random functions of time and may or may not depend on the state of the chain. Many operations research applications of such mathematical models are known (see, for example, [1, 2, 3, 4] and [Section 5] in [5]), but the motivation of this paper is queueing, so all the examples considered here are devoted to time-varying queues. A substantial literature on the problem exists in which various aspects (existence of processes, numerical algorithms, asymptotics, approximations and others) are analyzed. An attempt at a systematic classification of the available approaches (based on the papers published up to 2016) is made in [5]; an up-to-date point of view is given in [Sections 1 and 1.2] of [4] (see also [6]).

The specific question addressed in this paper is the computation of the long-run (see, for example, the [Introduction] of [7]), i.e., limiting, time-dependent performance characteristics of a CTMC with time-varying intensities. This question can be considered from different points of view: computation time, accuracy, complexity, storage use, etc. As a result, various solution techniques have been developed, but none of them is a universal tool. One way to improve the efficiency of a solution technique is to supply it with a method for detecting the limiting regime (in other words, a method providing ergodicity bounds): once the limiting regime is reached, there is no need to continue the computation. The main contribution of this paper is the review of one such method (see Section 2) and the presentation of its applicability in two new use-cases not considered before in the literature (see Section 4 and Section 5). It is worth noting that methods which provide ergodicity bounds can also be helpful whenever a truncation of the countable state space of the chain is required. The method presented in Section 2, whenever applicable, is helpful in this respect as well (see also [8, 9]).

The end of this section is devoted to a review (by no means exhaustive) of the popular solution techniques for the analysis of Markov chains in time-varying queueing models. Attention is drawn to the ability of a technique to yield limiting time-dependent performance characteristics of a Markov chain with time-varying intensities. For each technique mentioned (computer simulation methods and numerical transform inversion algorithms are not discussed here), it is highlighted whether any benefit can be gained when the technique is used along with a method providing ergodicity bounds.

In many applied settings the performance analysis is based on the procedure known as point-wise stationary approximation [10] and its ramifications. According to it, the time-dependent probability vector

at time

t is approximated by the steady-state probability vector

by solving

and

, where

is the time-dependent intensity matrix (throughout the paper the vectors denoted by bold letters are regarded as column vectors,

denotes the

kth unit basis vector,

—row vector of 1’s with

denoting the matrix transpose). In its initial version, the approximation breaks down if the instantaneous system load is allowed to exceed 1. In general its quality depends on the values of the transition rates, and for some models (like time-dependent birth-and-death processes) the approach is proved to be asymptotically correct in the limit as the transition intensities increase. Another fruitful set of techniques, which help one understand the performance of complex queueing systems, is the (conventional and many-server) heavy-traffic approximations. (Another approximation technique worth mentioning here, especially because of its applicability to non-Markov time-varying queues, is robust optimization; see [4], Section 2.) Since scaling is important in heavy-traffic limits, the technique is usually more justified whenever the state space of a chain is, in some intuitive sense, close to continuous (see e.g., [11, 12] and others), and less (or even not at all) justified if the state space is essentially discrete (for example, when formed by the number of customers in the system

(for fixed

N) at time

t). Due to the nature of both classes of techniques mentioned above, they do not benefit from methods providing ergodicity bounds.
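As a rough illustration of the point-wise stationary approximation described above, the following sketch freezes the rates of a birth-and-death chain at a time instant t and returns the corresponding stationary vector. The finite truncation, the function names and the particular rate functions are assumptions made for this example only; they are not taken from [10].

```python
import math

def psa_birth_death(t, birth, death, n_states):
    """Stationary vector of a birth-and-death chain with rates frozen at time t.

    birth(t, k) is the rate of the jump k -> k+1 and death(t, k) the rate of
    the jump k -> k-1. The chain is truncated to the states 0..n_states-1
    (an assumption of this sketch).
    """
    weights = [1.0]
    for k in range(1, n_states):
        # Detailed balance: pi_k * death(t, k) = pi_{k-1} * birth(t, k-1).
        weights.append(weights[-1] * birth(t, k - 1) / death(t, k))
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical single-server rates: periodic arrivals, constant service.
def arrival_rate(t, k):
    return 1.0 + 0.5 * math.sin(2.0 * math.pi * t)

def service_rate(t, k):
    return 2.0

pi_at_0 = psa_birth_death(0.0, arrival_rate, service_rate, n_states=4)
pi_at_quarter = psa_birth_death(0.25, arrival_rate, service_rate, n_states=4)
```

Each evaluation uses only the rates at the instant t, so the approximation carries no memory of the past; this is consistent with the remark that it gains nothing from ergodicity bounds.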

A very popular set of techniques for calculating performance measures, which stands apart from the two mentioned above, comprises numerical methods for systems of ordinary differential equations (ODEs), namely the Kolmogorov forward equations (for an illustration the reader can refer to, for example, [13]). Due to increasing computer power such methods keep gaining popularity. By introducing approximations these methods can be made more efficient. For example, when only moments of the Markov chain are of interest, one can use closure approximations (see e.g., [14, 15, 16]); since the moment dynamics are (when available) close to the true dynamics of the original process, the benefits of using methods providing ergodicity bounds alongside are clear. Another method for the computation of transient distributions of Markov chains is uniformization (see [17]). It is numerically stable and, as reported, usually outperforms known differential equation solvers (see [Section 6] in [18]).
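To make the idea of uniformization concrete, here is a minimal sketch for the simplest setting, a finite chain with constant rates; the time-inhomogeneous case needs additional care that is not shown here. The function name, the stopping rule and the two-state example are assumptions of this sketch, not the algorithm of [17].

```python
import math

def uniformization(p0, A, t, tol=1e-12, max_terms=100000):
    """Approximate p(t) = exp(A t) p0 for a constant transposed generator A.

    A[i][j] is the rate of the jump j -> i (columns sum to zero), so that
    dp/dt = A p for the column vector p of state probabilities.
    """
    n = len(p0)
    q = max(-A[j][j] for j in range(n))      # uniformization rate
    if q == 0.0:
        return list(p0)                      # no transitions at all
    # Transposed transition matrix of the uniformized discrete-time chain.
    P = [[(1.0 if i == j else 0.0) + A[i][j] / q for j in range(n)]
         for i in range(n)]
    weight = math.exp(-q * t)                # Poisson(q t) probability of 0 jumps
    covered = weight                         # Poisson mass accumulated so far
    v = list(p0)                             # v holds P^k p0
    result = [weight * x for x in v]
    k = 0
    while 1.0 - covered > tol and k < max_terms:
        k += 1
        v = [sum(P[i][j] * v[j] for j in range(n)) for i in range(n)]
        weight *= q * t / k
        covered += weight
        result = [r + weight * x for r, x in zip(result, v)]
    return result

# Two-state chain: rate 1 for the jump 0 -> 1 and rate 2 for the jump 1 -> 0.
A = [[-1.0, 2.0],
     [1.0, -2.0]]
p_at_1 = uniformization([1.0, 0.0], A, t=1.0)
```

For this two-state chain the exact answer is known in closed form, namely 2/3 + exp(-3t)/3 for the probability of state 0, which gives a simple check of the sketch. Each term of the series costs one matrix-vector product, which is where the sensitivity to slow convergence comes from.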

The methods based on uniformization suffer from slow convergence of a Markov chain: whenever it is slow, computations involve a large number of matrix-vector products. An ODE technique yields the numerical values of performance measures, but it is complicated by a number of facts, among which we highlight only those related to the topic of this paper. Firstly, there can be infinitely many ODEs in the system of equations. Traditionally this is circumvented by truncating the system, i.e., making the number of equations finite. But there is no general “rule of thumb” for choosing the truncation threshold. Secondly, the (time-dependent) limiting characteristics of a CTMC are usually considered to be identical to the solution of the system on some distant time interval (see, for example, [17, 18, 19, 20, 21, 22, 23]). This procedure yields limiting characteristics with any desired accuracy whenever the CTMC is ergodic. Yet, in general, it is not suitable for Markov chains with countable (or finite but large) state space. Moreover, it is not clear how to choose the position and the length of the “distant time interval” on which the solution of the system must be found (convergence tests are usually required, which result in additional computations). Thus in practice, without an a priori understanding of when the limiting regime is reached, significant computational effort is required to make sure that the obtained solution is the one required and that, for example, the steady state is not detected prematurely (see [24]). The authors of [20] propose a solution technique equipped with steady-state detection. As is shown there, it allows significant computational savings and simultaneously ensures strict error bounding. Yet the technique is only applicable when the stationary solution of a Markov chain can be efficiently calculated in advance.

The approaches mentioned in the previous paragraph benefit directly from methods providing an a priori determination of the point of convergence. Although in general this task is not feasible, certain techniques exist which provide ergodicity bounds for some classes of Markov chains. In the next section we review one such technique, developed by the authors, which is based on the logarithmic norm of linear operators and special transformations of the intensity matrix governing the behaviour of a CTMC. In Section 3, Section 4 and Section 5 it is applied to three use-cases. Section 6 concludes the paper.

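In what follows, $\|\cdot\|$ denotes the $l_1$-norm, i.e., if $\mathbf{x}$ is a column vector with entries $x_k$, then $\|\mathbf{x}\| = \sum_k |x_k|$. If $\mathbf{x}$ is a probability vector, then $\|\mathbf{x}\| = 1$. The operator norm is the one induced by the $l_1$-norm on column vectors, i.e., $\|A\| = \sup_j \sum_i |a_{ij}|$ for a linear operator (matrix) $A = (a_{ij})$.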

2. Logarithmic Norm Method

Ergodic properties of Markov chains have been the subject of many research papers (see e.g., [25, 26]). Yet obtaining practically useful general ergodicity bounds is difficult and remains, to a large extent, an open problem. Below we describe one method, called the “logarithmic norm” method, which is applicable in situations when the discrete state space of the Markov chain cannot be replaced by a continuous one and the transition intensities are such that the chain is either null or weakly ergodic. The method is based on the notion of the logarithmic norm (see e.g., [27, 28]) and utilizes the properties of linear systems of differential equations.

Consider an ODE system

where the entries of the matrix

are locally integrable on

and

is bounded in the sense that

is finite for any fixed

t. Then

where

is the logarithmic norm of

i.e.

Thus the following upper bound holds:

If

has nonnegative off-diagonal elements and arbitrary elements on the diagonal (such a matrix is sometimes called essentially nonnegative in the literature), and all of its column sums are identical, then there exist

such that equality holds in (4).
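For the operator norm induced by the l1-norm, the logarithmic norm of a finite matrix is the largest value, over the columns, of the diagonal entry plus the sum of the absolute values of the off-diagonal entries in that column. The sketch below computes this quantity and compares the resulting exponential bound with a numerically integrated solution. The matrix, the initial vector and the helper names are assumptions chosen for illustration, and the matrix is constant in time to keep the example short.

```python
import math

def log_norm_l1(H):
    """Logarithmic norm of a square matrix H for the l1-induced operator norm."""
    n = len(H)
    return max(H[j][j] + sum(abs(H[i][j]) for i in range(n) if i != j)
               for j in range(n))

def solve_linear_ode(H, x0, t_end, steps=2000):
    """Classical Runge-Kutta integration of dx/dt = H x with constant H."""
    def f(x):
        return [sum(H[i][j] * x[j] for j in range(len(x))) for i in range(len(x))]
    h = t_end / steps
    x = list(x0)
    for _ in range(steps):
        k1 = f(x)
        k2 = f([a + 0.5 * h * b for a, b in zip(x, k1)])
        k3 = f([a + 0.5 * h * b for a, b in zip(x, k2)])
        k4 = f([a + h * b for a, b in zip(x, k3)])
        x = [a + h / 6.0 * (b + 2.0 * c + 2.0 * d + e)
             for a, b, c, d, e in zip(x, k1, k2, k3, k4)]
    return x

H = [[-3.0, 1.0],
     [2.0, -4.0]]
gamma = log_norm_l1(H)            # column values are -3 + 2 = -1 and -4 + 1 = -3
x0 = [1.0, 0.0]
x1 = solve_linear_ode(H, x0, t_end=1.0)
norm_x1 = sum(abs(v) for v in x1)
bound = math.exp(gamma * 1.0) * sum(abs(v) for v in x0)
```

Here the two column sums differ, so the bound is valid but not attained; equality requires the additional structure described above.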

The logarithmic norm method is applied in four consecutive steps. Firstly, one has to determine whether the given Markov chain (henceforth always denoted by

) is null ergodic or weakly ergodic (a Markov chain is called null ergodic if for all its state probabilities

as

for any initial condition; a Markov chain is called weakly ergodic if

as

for any initial condition

, where the vector

contains state probabilities). Secondly, one excludes one “border state” from the Kolmogorov forward equations and thus obtains a new system with a matrix which, in general, may have negative off-diagonal terms. The third step is to perform (if possible) a similarity transformation (see (11) and (24)), i.e., to transform the new matrix in such a way that its off-diagonal terms are nonnegative and the column sums differ as little as possible. At the final, fourth step one uses the logarithmic norm to estimate the convergence rate. The key step is the third one. The transformation is made using a sequence of positive numbers (see the sequences

below), which usually has to be guessed, does not have any probabilistic meaning and can be considered as an analogue of Lyapunov functions.
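Steps two to four can be sketched numerically on a small example. The code below builds the transposed generator of a truncated two-server queue with constant rates, excludes the state 0 by means of the normalization condition, applies an upper triangular matrix of ones followed by a diagonal scaling, and evaluates the logarithmic norm of the result. The truncation level, the constant rates, the geometric weights d^(k-1) and all function names are assumptions of this sketch.

```python
def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transposed_generator(lam, mu, servers, n):
    """Transposed generator of a queue truncated to the states 0..n."""
    A = [[0.0] * (n + 1) for _ in range(n + 1)]
    for k in range(n + 1):                 # column k: jumps out of state k
        up = lam if k < n else 0.0
        down = mu * min(k, servers)
        A[k][k] = -(up + down)
        if k < n:
            A[k + 1][k] = up
        if k > 0:
            A[k - 1][k] = down
    return A

def transformed_matrix(A, d):
    n = len(A) - 1
    # Step 2: exclude state 0 using p_0 = 1 - (sum of the other probabilities).
    B = [[A[i][j] - A[i][0] for j in range(1, n + 1)] for i in range(1, n + 1)]
    # Step 3: similarity transformation with T (ones on and above the diagonal).
    T = [[1.0 if j >= i else 0.0 for j in range(n)] for i in range(n)]
    T_inv = [[1.0 if j == i else (-1.0 if j == i + 1 else 0.0)
              for j in range(n)] for i in range(n)]
    B_star = matmul(matmul(T, B), T_inv)
    weights = [d ** k for k in range(n)]   # hypothetical sequence 1, d, d^2, ...
    return [[weights[i] * B_star[i][j] / weights[j] for j in range(n)]
            for i in range(n)]

def log_norm_l1(H):
    n = len(H)
    return max(H[j][j] + sum(abs(H[i][j]) for i in range(n) if i != j)
               for j in range(n))

A = transposed_generator(lam=1.0, mu=1.0, servers=2, n=6)
B2 = transformed_matrix(A, d=1.5)
min_off_diagonal = min(B2[i][j] for i in range(6) for j in range(6) if i != j)
gamma = log_norm_l1(B2)                    # Step 4: decay rate is -gamma
```

With these numbers the transformed matrix has nonnegative off-diagonal entries and a negative logarithmic norm, so the bound of the previous section decays exponentially. Trying other values of d shows how the guessed sequence changes the rate.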

3. Time-Varying System

We start with the well-known time-varying

system with two servers and the infinite-capacity queue in which customers arrive one by one with the intensity

. The service intensity of each server does not depend on the total number of customers in the queue and is equal to

. The functions

and

are assumed to be nonrandom, nonnegative and locally integrable on

continuous functions. Let the integer-valued time-dependent random variable

denote the total number of customers in the system at time

. Then

is the CTMC with the state space

. Its transposed time-dependent intensity matrix (generator)

has the form

For all

we represent the distribution of

as a probability vector

, where

(as above,

denotes the

kth unit basis vector). Given any proper initial condition

, the Kolmogorov forward equations for the distribution of

can be written as

Assume that

is null ergodic. The condition on the intensities

and

, which guarantees null ergodicity, will be derived shortly below (clearly, if the intensities are constants, i.e.,

and

, then the condition is simply

. If both are periodic and the least common multiple of the periods is

T, then the condition is

). Fix a positive number

and define the sequence

by

. It is a decreasing sequence of positive numbers. By multiplying (

5) from the right with

, we get

where

and

. Denote by

the sum of all elements in the

kth column of

. By direct inspection it can be checked that

Since

and

, the upper bound follows from (

4) applied to (

6):

If

d is chosen such that

and

, then from (

7) it follows that

as

for each

and thus

is null ergodic. In such a case it is possible to extract more information from (

7). Note that for any fixed

it holds that

Thus, if

, i.e.,

then for any

the following upper bound for the conditional probability

,

, holds:

Now assume that

is weakly ergodic (the corresponding condition on the intensities

and

will be derived shortly below). Using the normalization condition

it can be checked that the system (

5) can be rewritten as follows:

where the matrix

with the elements

has no probabilistic meaning and the vectors

and

are

Let

and

be the two solutions of (

9) corresponding to two different initial conditions

and

. Then for the vector

, with arbitrary elements we have the system

The matrix

in (10) may have negative off-diagonal elements. But it is straightforward to see that the similarity transformation

, where

T is the upper triangular matrix of the form

gives the matrix

:

whose off-diagonal elements are always nonnegative. Let

. Then by multiplying both parts of (

10) from the left by

T, we get

Fix a positive number

and define the increasing sequence of positive numbers

by

. Let

. By putting

in (

12), we obtain the system of equations

where the matrix

has nonnegative off-diagonal elements. Denote by

the sum of all elements in the

kth column of

i.e.

Note that if

then

and

. Now, remembering that

, the upper bound for

in the weighted norm due to (

4) is (from (

14) the purpose of the similarity transformation

can be recognized: it is to make

in the exponent as large as possible).

The upper bound for

is obtained from (

14). Firstly notice that

since

is the solution of (

10)—the system with the excluded state

. Secondly, it can be proved (this is shown, for example, in [Equation (18)] of [29]) that

for any vector

. Hence

If

d is chosen such that

and

, then from (

15) it follows that

as

for any initial conditions

and

, i.e.,

is weakly ergodic. Note that it is sufficient to choose

: if the integral diverges for

it also diverges for

and this is sufficient for (

14) to hold.
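Weak ergodicity can also be observed numerically: solutions of the forward equations started from different initial states approach each other in the l1-norm. The sketch below integrates a truncated two-server system with the classical Runge-Kutta scheme. The rate functions, the truncation level, the step size and the two initial states are hypothetical choices made for this illustration and are not the values of the numerical example in this section.

```python
import math

def forward_rhs(t, p, lam, mu, servers):
    """Right-hand side of the truncated Kolmogorov forward equations."""
    n = len(p) - 1
    dp = [0.0] * (n + 1)
    for k in range(n + 1):
        up = lam(t) if k < n else 0.0      # arrivals are blocked in the last state
        down = mu(t) * min(k, servers)
        dp[k] -= (up + down) * p[k]
        if k < n:
            dp[k + 1] += up * p[k]
        if k > 0:
            dp[k - 1] += down * p[k]
    return dp

def rk4_step(f, t, p, h):
    k1 = f(t, p)
    k2 = f(t + h / 2, [x + h / 2 * y for x, y in zip(p, k1)])
    k3 = f(t + h / 2, [x + h / 2 * y for x, y in zip(p, k2)])
    k4 = f(t + h, [x + h * y for x, y in zip(p, k3)])
    return [x + h / 6 * (a + 2 * b + 2 * c + d)
            for x, a, b, c, d in zip(p, k1, k2, k3, k4)]

def l1_distance_path(lam, mu, start_a, start_b, t_end, h=0.01, n=30, servers=2):
    """l1 distance between two solutions started in states start_a and start_b."""
    f = lambda t, p: forward_rhs(t, p, lam, mu, servers)
    p_a = [1.0 if k == start_a else 0.0 for k in range(n + 1)]
    p_b = [1.0 if k == start_b else 0.0 for k in range(n + 1)]
    distances = [sum(abs(x - y) for x, y in zip(p_a, p_b))]
    for i in range(int(round(t_end / h))):
        p_a = rk4_step(f, i * h, p_a, h)
        p_b = rk4_step(f, i * h, p_b, h)
        distances.append(sum(abs(x - y) for x, y in zip(p_a, p_b)))
    return distances, p_a, p_b

# Hypothetical 1-periodic intensities.
lam = lambda t: 1.0 + 0.5 * math.sin(2.0 * math.pi * t)
mu = lambda t: 2.0 + math.cos(2.0 * math.pi * t)
distances, p_a, p_b = l1_distance_path(lam, mu, start_a=0, start_b=5, t_end=20.0)
```

The list `distances` starts at 2 (two different unit vectors) and decays towards zero; an ergodicity bound tells in advance how long one has to integrate before this distance falls below a prescribed tolerance.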

Sometimes it is also possible to obtain bounds similar to (

15) for other characteristics of

. For example, denote by

the conditional mean number of customers in the system at time

t, given that initially there were

k customers in the system, i.e.,

. Then, using [Equation (22)] of [29], it can be shown that

The results obtained above for both the null ergodic and the weakly ergodic cases can be combined in a single theorem.

Theorem 1. Let there exist a positive such that . Then is null (weakly) ergodic if () and the ergodicity bounds (7) and (15) hold.

Whenever the intensities and are constants or periodic functions, stronger results can be obtained.

Corollary 1. If in Theorem 1 the intensities and are constants or 1-periodic (i.e., and are periodic functions and the length of their periods is equal to one), then is exponentially null (weakly) ergodic if () and there exist and such that for .

We now consider a numerical example. Let

and

. It is straightforward to check from the

Theorem 1 that if

then

is weakly ergodic. Then the ergodicity bounds follow from (

15) and (

16):

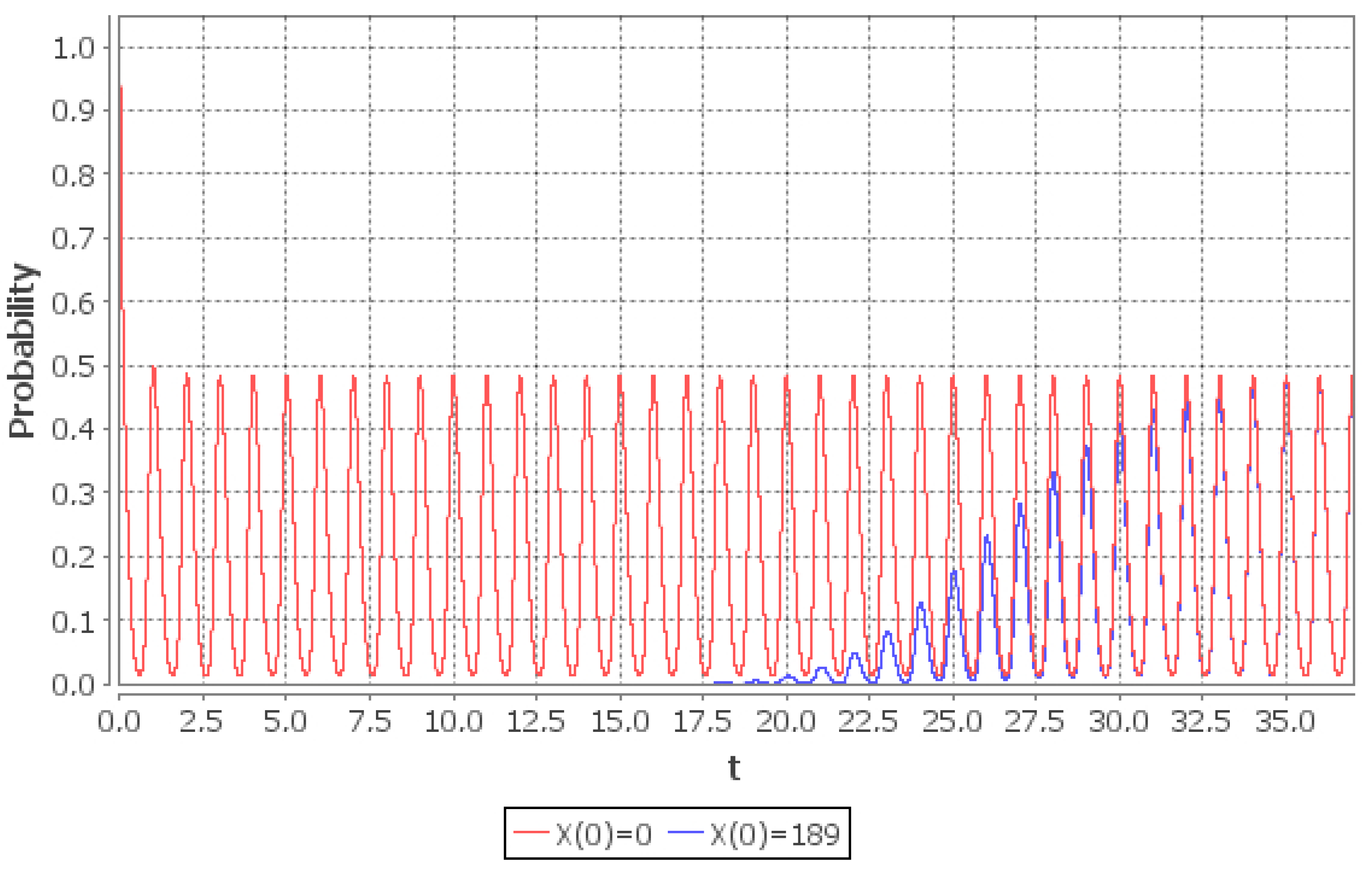

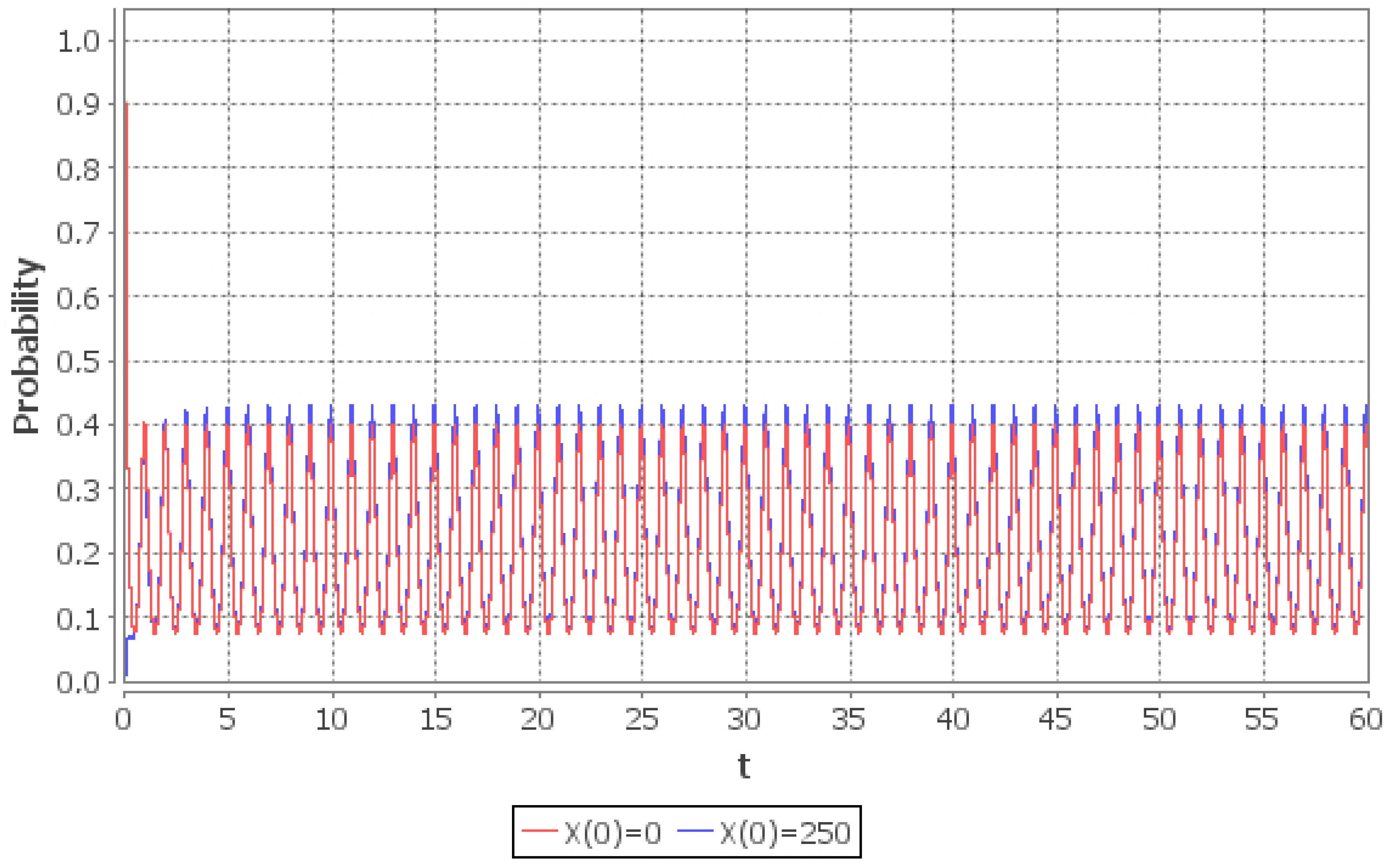

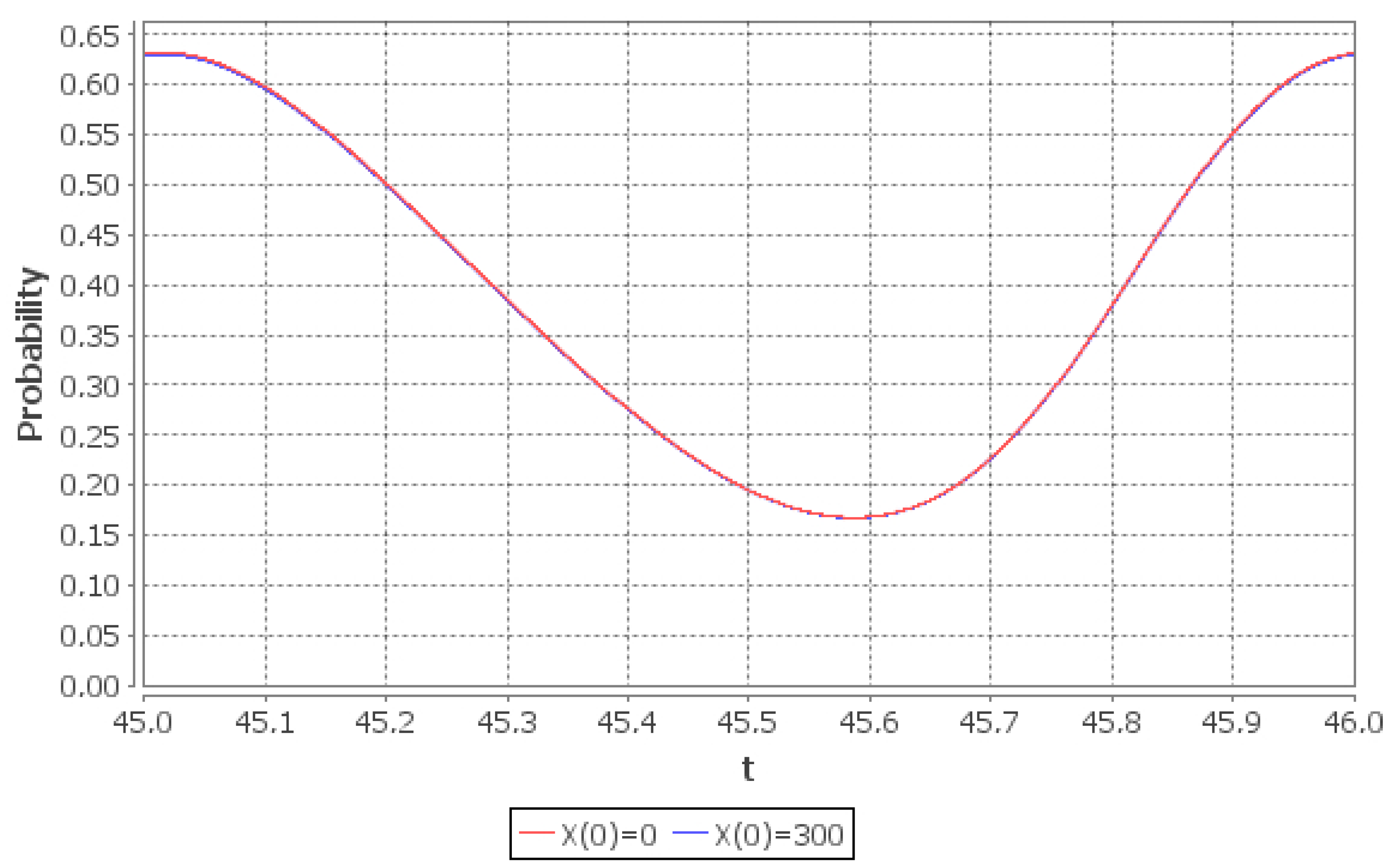

Figure 1 shows the graph of the probability

as

t increases. It can be seen that for any initial condition

there exists one periodic function of

t, say

(i.e.,

, where

is the smallest common multiple of the periods of

and

), such that

.

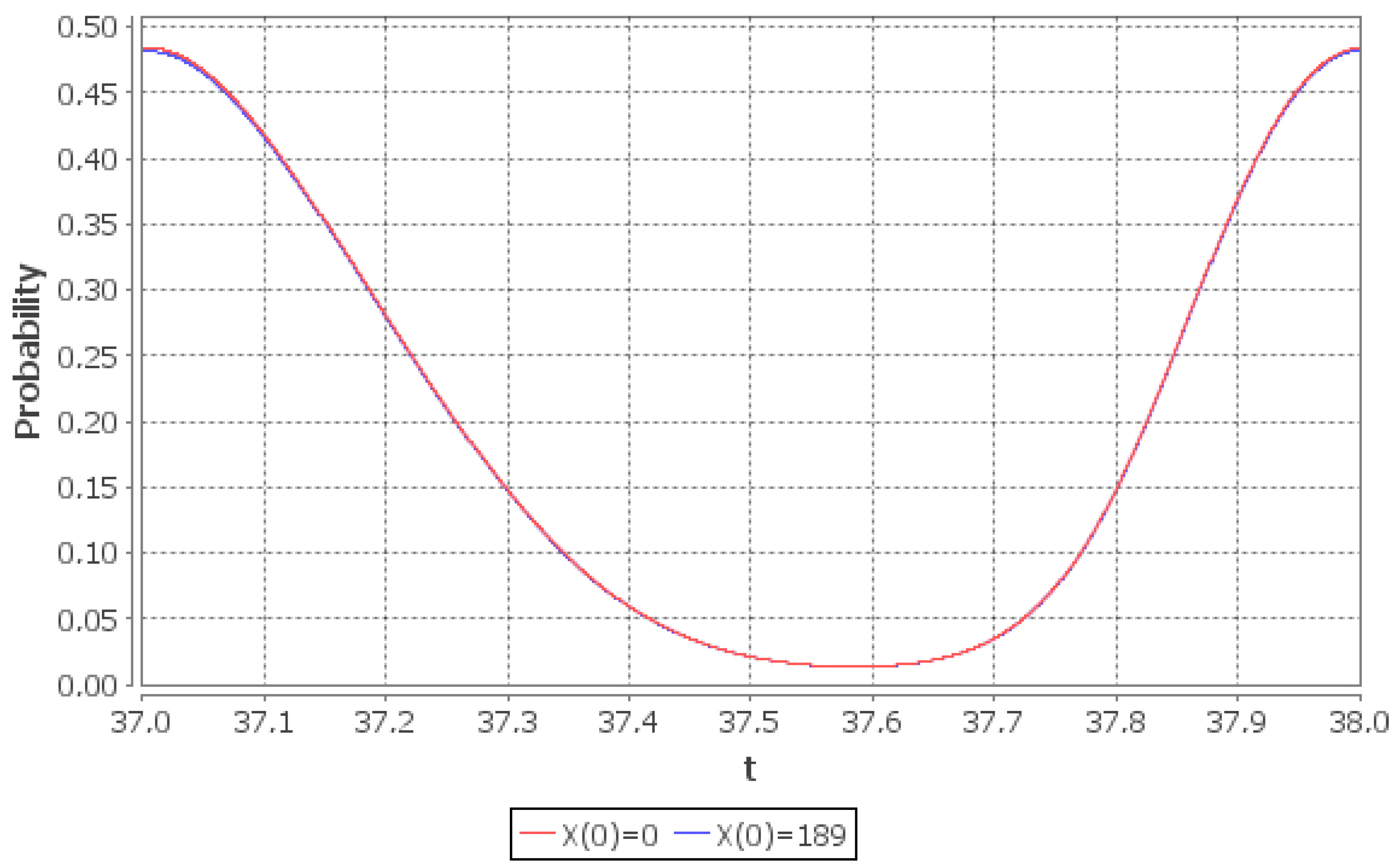

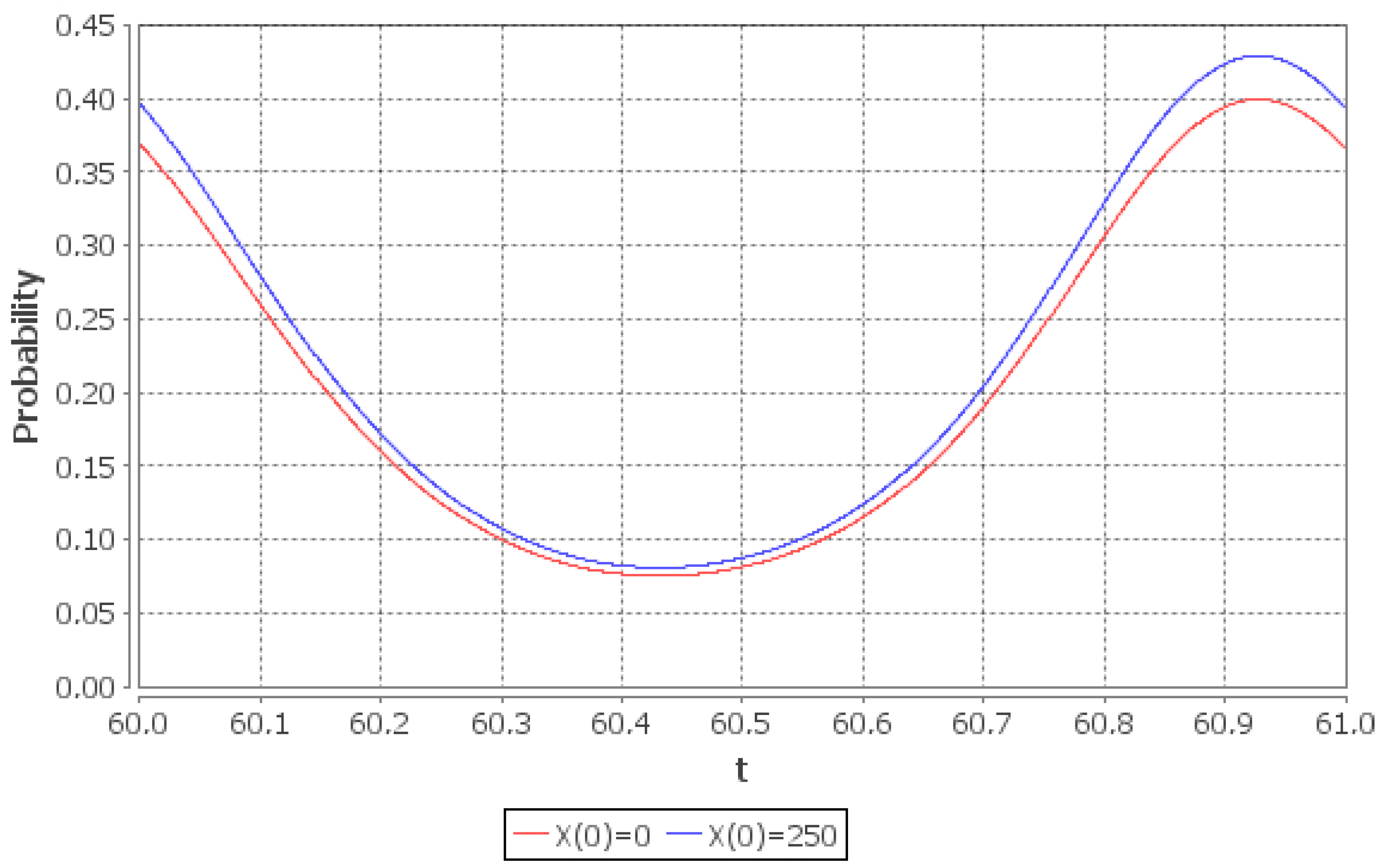

Figure 2 shows the detailed behaviour of

. Now consider (

17). If

then the right part of (

17) does not exceed

i.e., starting from the instant

the system “forgets” its initial state and the distribution of

for

can be regarded as limiting. The error (in

-norm), which is thus made, is not greater than

. Moreover, since the limiting distribution of

is periodic, it is sufficient to solve numerically the system of ODEs only in the interval

. The distribution of

in the interval

is the limiting probability distribution of

(with error not greater than

in

-norm). Note that the system of ODEs contains infinite number of equations. Thus in order to solve it numerically one has to truncate it; this truncation was performed according to the method in [

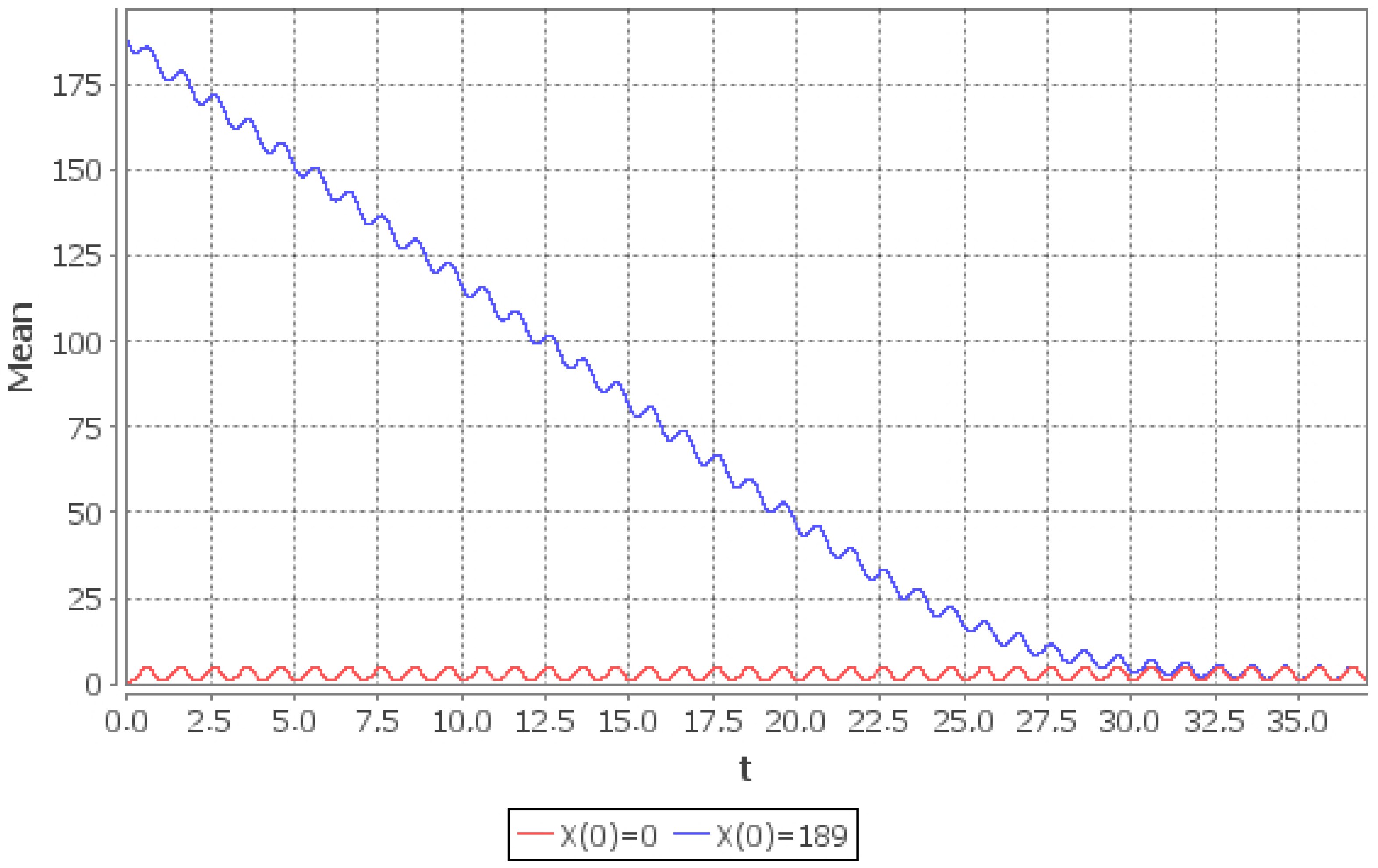

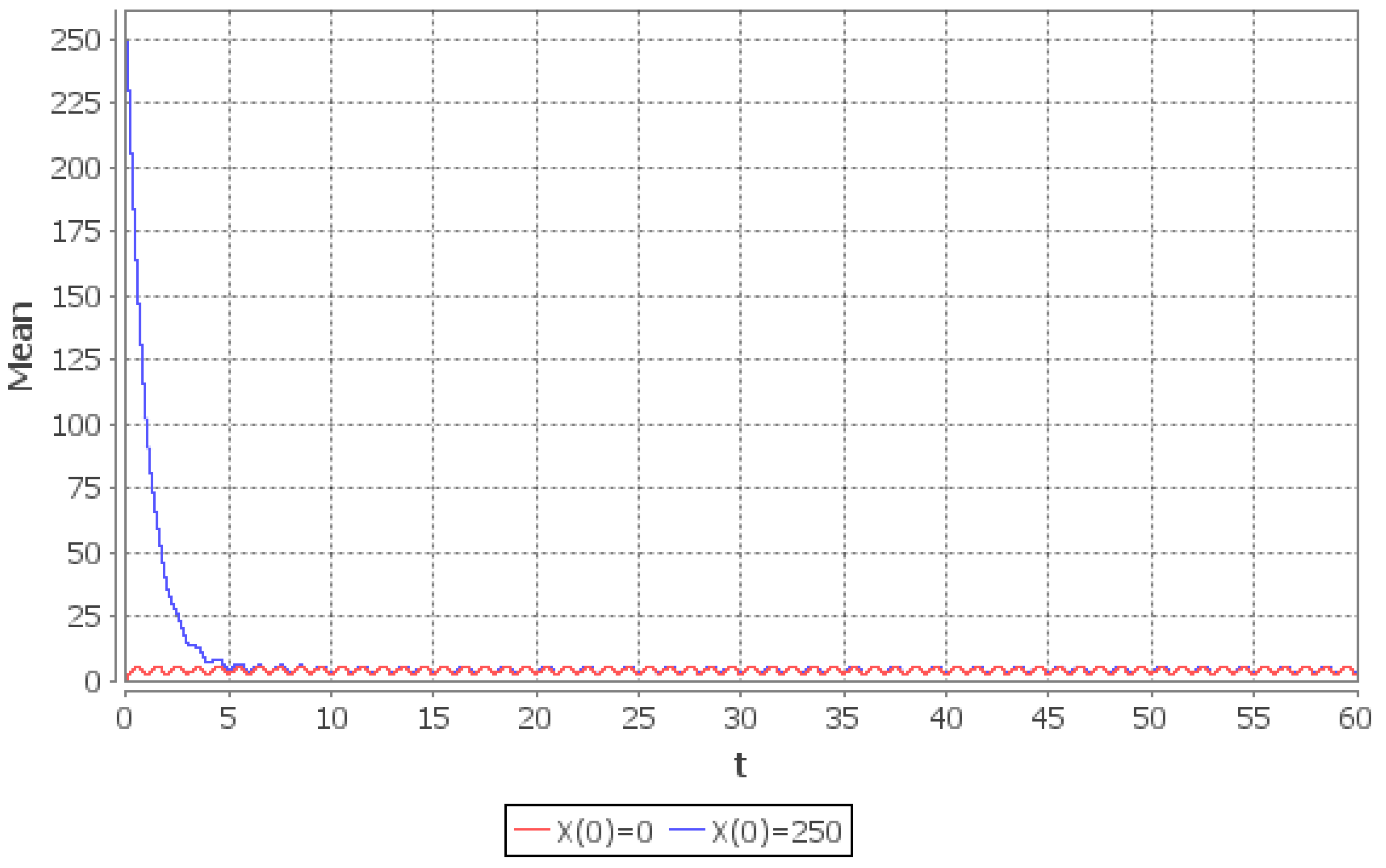

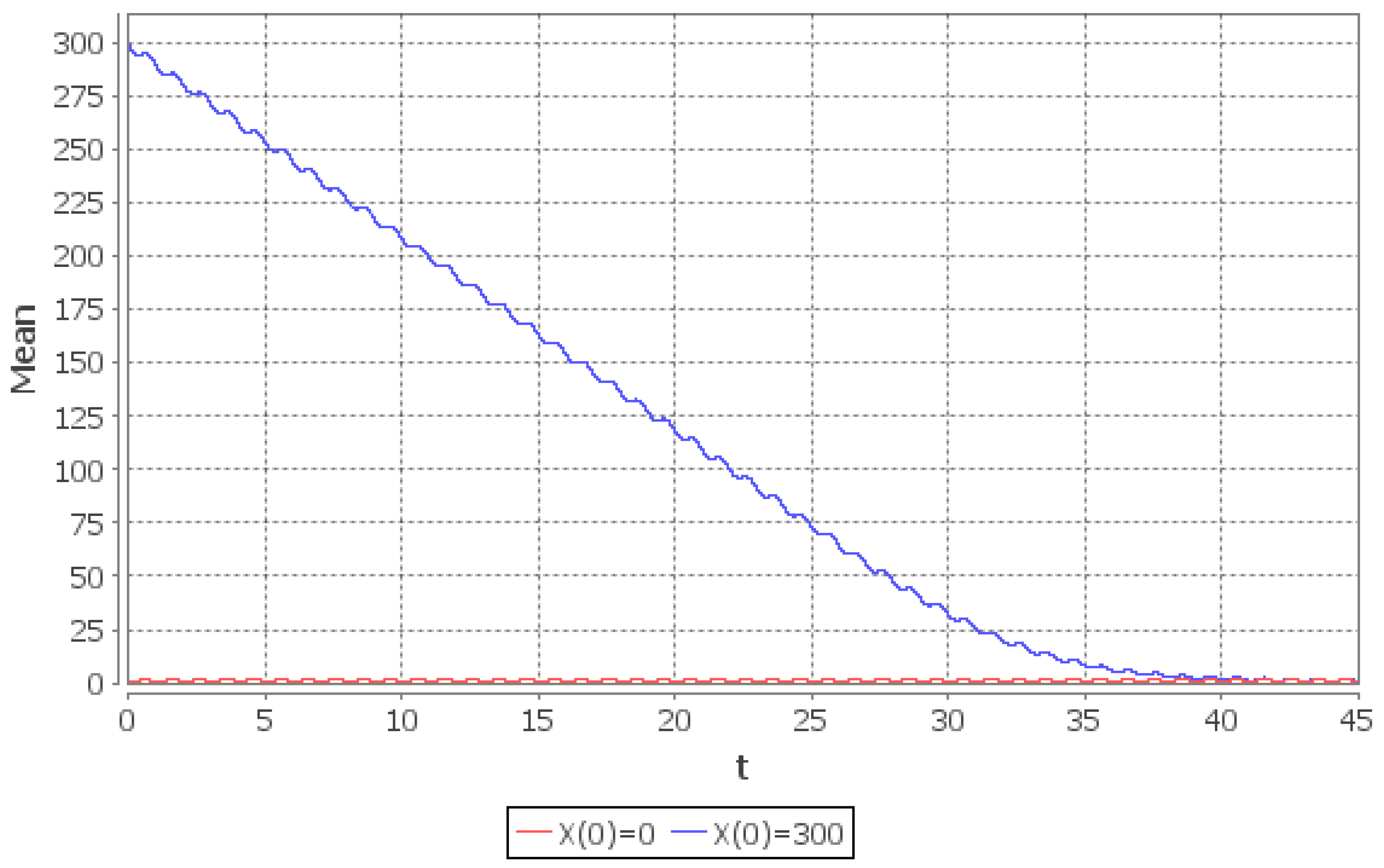

30]. The upper bound on the rate of convergence of the conditional mean

is given in (

18). If

then the right part does not exceed

i.e., starting from

the system “forgets” its initial state and the value of

can be regarded as the limiting value of the conditional mean number of customers with the error not greater than

. The rate of convergence of

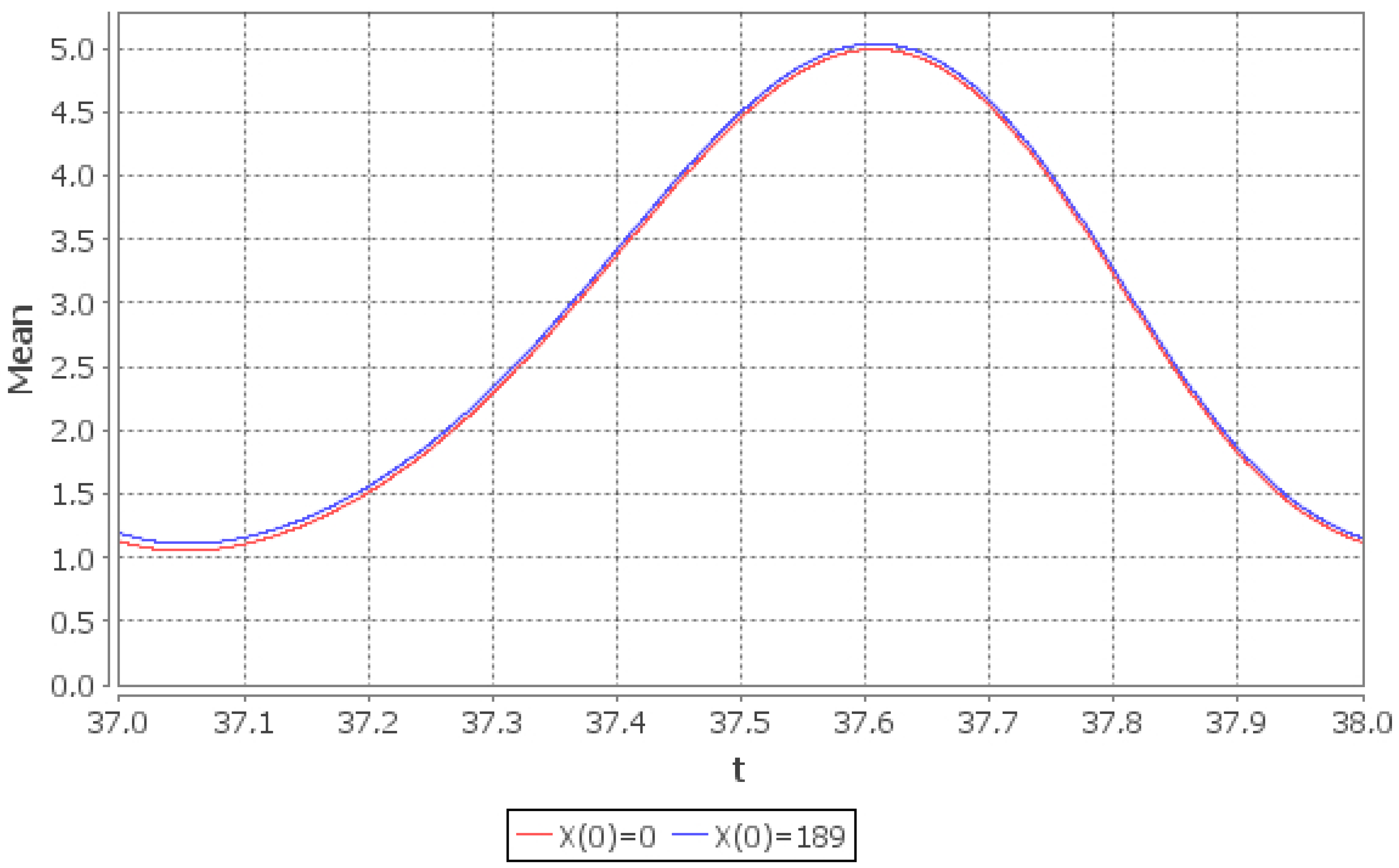

and the behaviour of its limiting value is shown in the

Figure 3 and

Figure 4. Note that the obtained upper bounds are not tight: the system enters the periodic limiting regime before the instant

.
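The way an ergodicity bound is turned into a detection instant can be sketched as follows, assuming a bound of the form C exp(-∫ α(u) du), with the integral taken from 0 to t. The constant C, the rate function α, the tolerance and the function name are hypothetical; they stand in for the quantities of (17) and (18) and are not the values used in this example.

```python
import math

def detection_time(alpha, c, eps, h=1e-3, t_max=1e3):
    """Smallest grid time t with c * exp(-integral_0^t alpha(u) du) <= eps."""
    t = 0.0
    integral = 0.0
    while c * math.exp(-integral) > eps:
        if t >= t_max:
            raise ValueError("bound did not reach eps before t_max")
        integral += 0.5 * h * (alpha(t) + alpha(t + h))   # trapezoidal rule
        t += h
    return t

# Hypothetical 1-periodic decay rate with mean value 1.
alpha = lambda t: 1.0 + 0.5 * math.cos(2.0 * math.pi * t)
t_star = detection_time(alpha, c=4.0, eps=1e-3)

# Constant rate: the answer is ln(c / eps) up to the grid step.
t_const = detection_time(lambda t: 1.0, c=4.0, eps=1e-3)
```

After the returned instant the ODE system only needs to be solved over one further period to obtain the limiting regime within the chosen tolerance. Since the bound is an upper estimate, the true convergence may occur earlier.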

4. Time-Varying Single-Server Markovian System with Bulk Arrivals, Queue Skipping Policy and Catastrophes

Consider the time-varying

system with the intensities being periodic functions of time and the queue skipping policy as in [31] (see also [32]). Customers arrive at the system in batches according to an inhomogeneous Poisson process with the intensity

. The size of an arriving batch becomes known upon its arrival at the system and is a random variable with a given probability distribution

, having finite mean

,

. The implemented queue skipping policy implies that whenever a batch arrives to the system its size, say

, is compared with the remaining total number of customers in the system, say

. If

, then all customers that are currently in the system are instantly removed from it, the whole batch

is placed in the queue and one customer from it enters the server. If

the new batch leaves the system without having any effect on it. Whenever the server becomes free, the first customer from the queue (if there is any) enters the server and gets served according to the exponential distribution with the intensity

. Finally, an additional inhomogeneous Poisson flow of negative customers with the intensity

arrives at the system. Each negative arrival results in the removal of all customers present in the system at the time of arrival. The negative customer itself leaves the system. Since

depends on

t it can happen that the effect of negative arrivals fades away too fast as

(for example, if

,

). Such cases are excluded from the consideration.

Let be the total number of customers in the system at time t. From the system description it follows that is the CTMC with state space , where is the maximum possible batch size i.e., . Thus if the batch size distribution has infinite support then the state space is countable, otherwise it is finite.

It is straightforward to see that the transposed time-dependent generator

for

has the form

We represent the distribution of

as a probability vector

, where

for all

. Given a proper

, the probabilistic dynamics of

is described by the Kolmogorov forward equations

, which can be rewritten in the form

where

and

is the matrix with the terms

equal to

Due to the restrictions imposed on , we have that . Thus cannot be null ergodic irrespective of the values of and .

Theorem 2. Assume that the catastrophe intensity is such that . Then the Markov chain is weakly ergodic and for any two initial conditions and it holds that

Proof. It is straightforward to check that the logarithmic norm (see (

3)) of the operator

is equal to

. Denote now by

the Cauchy operator of the Equation (

19). Then the statement of the theorem follows from the inequalities

and

□
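The statement about the logarithmic norm can be checked numerically for a batch size distribution with finite support. The sketch below builds the transposed generator from the verbal description of the system at a fixed time instant, removes the catastrophe intensity from the row of state 0 (one way of using the normalization condition, assumed here to correspond to the form (19)), and evaluates the logarithmic norm. The rates, the batch size distribution and all names are hypothetical.

```python
def transposed_generator(lam, mu, gam, batch):
    """Transposed generator for the queue skipping policy at a fixed instant.

    batch[n-1] is the probability of a batch of size n, n = 1..m, so the
    states are 0..m. lam, mu and gam are the arrival, service and catastrophe
    intensities frozen at that instant (assumed values).
    """
    m = len(batch)
    A = [[0.0] * (m + 1) for _ in range(m + 1)]
    for j in range(m + 1):                 # column j: jumps out of state j
        for i in range(j + 1, m + 1):
            A[i][j] += lam * batch[i - 1]  # a larger batch replaces everything
        if j >= 1:
            A[j - 1][j] += mu              # service completion
            A[0][j] += gam                 # catastrophe empties the system
        A[j][j] = -sum(A[i][j] for i in range(m + 1) if i != j)
    return A

def log_norm_l1(H):
    n = len(H)
    return max(H[j][j] + sum(abs(H[i][j]) for i in range(n) if i != j)
               for j in range(n))

lam, mu, gam = 2.0, 1.0, 0.4
A = transposed_generator(lam, mu, gam, batch=[0.5, 0.3, 0.2])
# Subtract gam from every entry of the row of state 0; the removed part becomes
# a free term (gam, 0, ..., 0) because the probabilities sum to one.
A_star = [[A[i][j] - (gam if i == 0 else 0.0) for j in range(len(A))]
          for i in range(len(A))]
gamma = log_norm_l1(A_star)
```

In this sketch every column of the modified matrix sums to minus the catastrophe intensity and its off-diagonal entries are nonnegative, so the logarithmic norm equals -0.4 regardless of the arrival and service rates.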

Even though (

21) is the valid ergodicity bound for

, it is of little help whenever the state space of

is countable and one needs to perform the numerical solution of (

5). This is due to the fact that the bound (

21) is in the uniform operator topology, which does not allow one to use analytic frameworks (for example, [

29]) for finding proper truncations of an infinite ODE system. For the latter task ergodicity bounds for

in stronger (than

), weighted norms are required. With such bounds a weight is assigned to each initial state, and thus a truncation procedure becomes sensitive to the number of states. Below (in Theorem 3) we obtain such a bound under an additional assumption on the batch size distribution (for the definition used see [33]; an appropriate test for monotone functions can be found in [Proposition 1] of [34]). Although Theorem 2 holds for any batch size distribution, this assumption is essential for Theorem 3. For distributions with tails heavier than the geometric distribution we were unable to find conditions which guarantee the existence of the limiting regime of the queue-size process, even for periodic intensities. The assumption is that the batch size distribution

is harmonic new better than used in expectation i.e.,

for all

.

Using the normalization condition

the forward Kolmogorov system

can be rewritten as

where

and

Fix

and define the increasing sequence of positive numbers

by

. Then instead of the matrix

in (

13) we have the matrix

with the following structure:

Since the logarithmic norm (see (

3)) of

is equal to

then from (

4) we get:

Arguments similar to those used to establish the

Theorem 1 lead to the following ergodicity bounds for

and the conditional mean

:

These results can be put together in the single theorem.

Theorem 3. Assume that the distribution with finite mean is harmonic new better than used in expectation. Then if for some , then the Markov chain is weakly ergodic and the ergodicity bound (26) holds.

We close this section with an example showing the dependence on

t of the same two quantities —

and

—considered in the

Section 3. Assume here that

,

,

and

, i.e., the catastrophe intensity is constant and the mean size

of an arriving batch is equal to 3. It can be checked that

satisfies the conditions of the

Theorem 3. Then from (

26) and (

27) we get the upper bounds

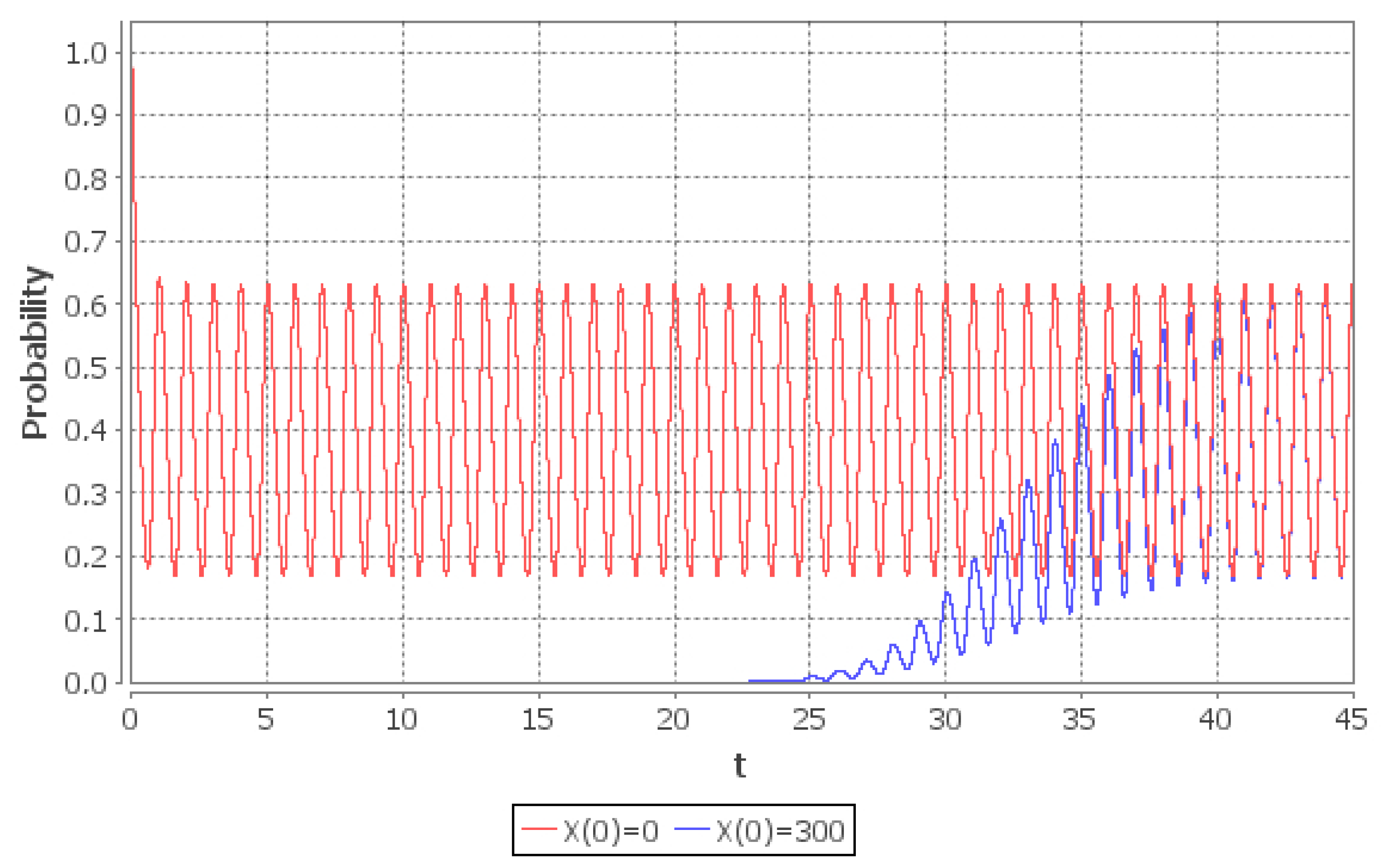

In

Figure 5 it is depicted how

behaves as

t increases and

Figure 6 shows its limiting value. If

then the right part of (

28) does not exceed

, i.e., starting from the instant

the system “forgets” its initial state and the distribution of

for

can be regarded as limiting. Moreover, since the limiting distribution of

is periodic, it is sufficient to solve (numerically, (it must be noticed that since

for all

k, the system of ODEs contains an infinite number of equations. Thus in order to solve it numerically one has to truncate it. We perform this truncation according to the method in [30])) the system of ODEs only in the interval

, where

T is the least common multiple of the periods of

and

i.e.,

. The probability distribution of

in the interval

is the estimate (with error not greater than

in

-norm) of the limiting probability distribution of

. The upper bound on the rate of convergence of the conditional mean number of customers in the system

is given in (

29). If

then the right part does not exceed

, i.e., starting from the instant

the system “forgets” its initial state and the value of

can be regarded as the limiting value of the mean number of customers with the error not greater than

. The rate of convergence of

and the behaviour of its limiting value can be seen in

Figure 7 and

Figure 8. As in the previous numerical example, the obtained upper bounds are not tight: the system enters the periodic limiting regime before the instant

.

5. Time-Varying Markovian Bulk-Arrival and Bulk-Service System with State-Dependent Control

In the recent paper [35] the authors considered the Markovian bulk-arrival and bulk-service system with general state-dependent control (see also [35, 36, 37, 38, 39]). The total number

of customers at time

t in that system constitutes a CTMC with state space

. Its generator

has quite a specific structure:

where

is the fixed integer. For further explanations and the motivation behind such structure of

we refer the reader to [Section 1] in [

35]. The purpose of this section is to show that for at least one particular case of this system, even when the intensities are time-dependent, one can obtain upper bounds for the rate of convergence using the method based on the logarithmic norm. Specifically, we take the example (in the example of [Section 7] in [

35] the entries of the intensity matrix

are:

,

,

,

,

,

and

) from Section 7 of [

35], with the exception that all the transition intensities are time-dependent i.e.,

and

and are both nonnegative locally integrable on

. Then the transposed generator

of

has the form

Denote the distribution of by i.e.,

(as above, denotes the kth unit basis vector). The ergodicity bound for in the null ergodic case is given below in the Theorem 4.

Theorem 4. If for some , then the Markov chain is null ergodic, and for any and the following inequality holds:

Proof. Fix

and define the decreasing sequence of positive numbers

by

. Put

, where

. Then we have (

6). Denote by

the sum of all elements in the

kth column of

i.e.

If

then

,

and

, and thus (

32) and (

33) follow from (

4) and (

8) respectively. □

The ergodicity bound in the weakly ergodic case, stated below in Theorem 5, is obtained by analogy with Theorem 1. Define an increasing sequence of positive numbers

. Then the matrix

built from the matrix

, in the same way as it is done in the

Section 3, has the form:

Denote by

the sum of all elements in the

kth column of

i.e.,

Since the logarithmic norm of

is equal to

, we can apply (

4) to (

13) and (

15) with

,

.

Theorem 5. If for some , then the Markov chain is weakly ergodic and the ergodicity bound (15) holds.

As a numerical example we again consider the periodic case:

and

. By direct inspection it can be checked that the sequence

, defined by

, leads to

. Thus the conditions of the

Theorem 5 are fulfilled with

. The pre-limiting and the limiting values of the same quantities as in the two previous examples—

and

—are shown in

Figure 9,

Figure 10,

Figure 11 and

Figure 12.