Electrostatic Capacity of a Metallic Cylinder: Effect of the Moment Method Discretization Process on the Performances of the Krylov Subspace Techniques

Abstract

1. Introduction

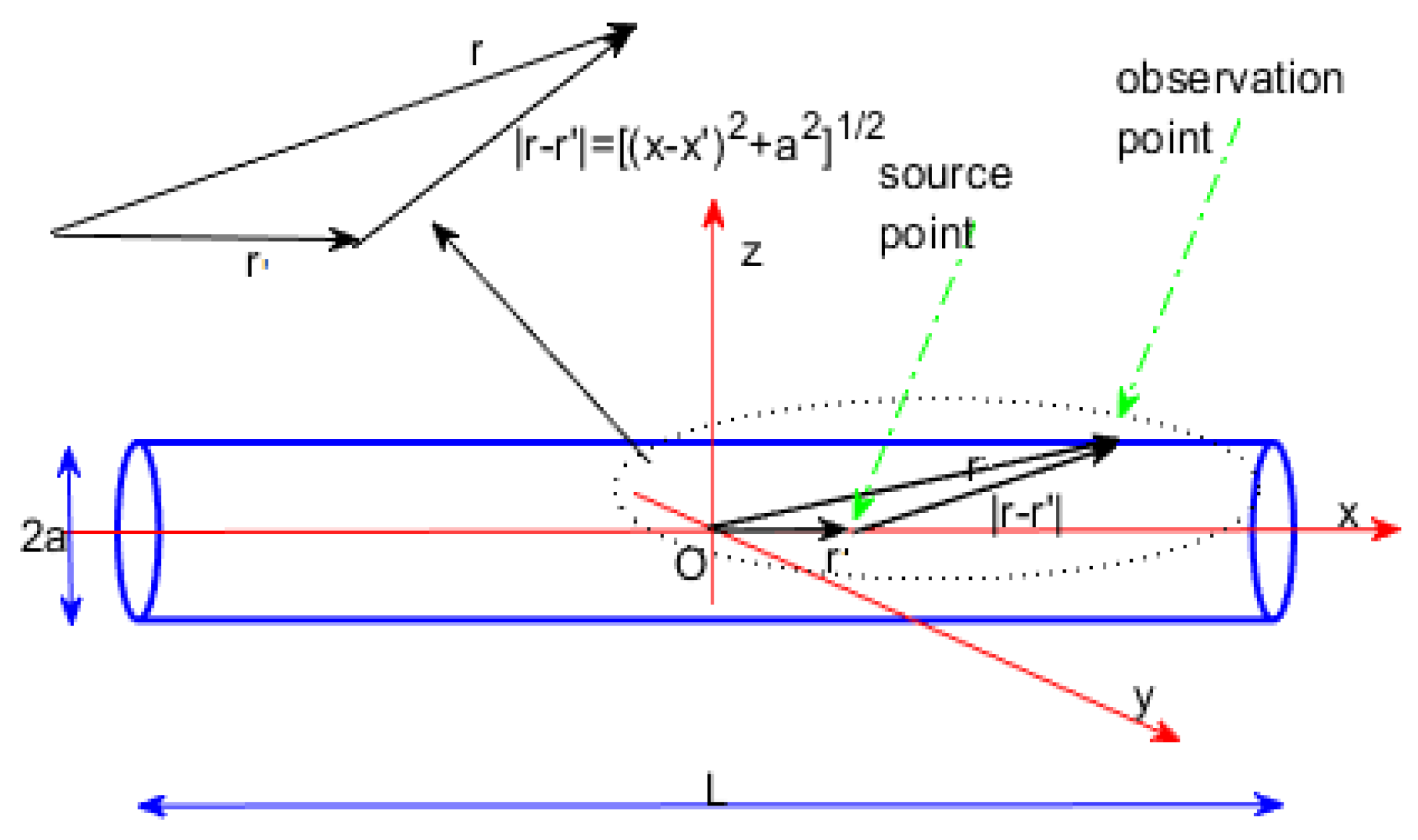

2. The Electrostatic Problem

2.1. Geometry

2.2. Formulation

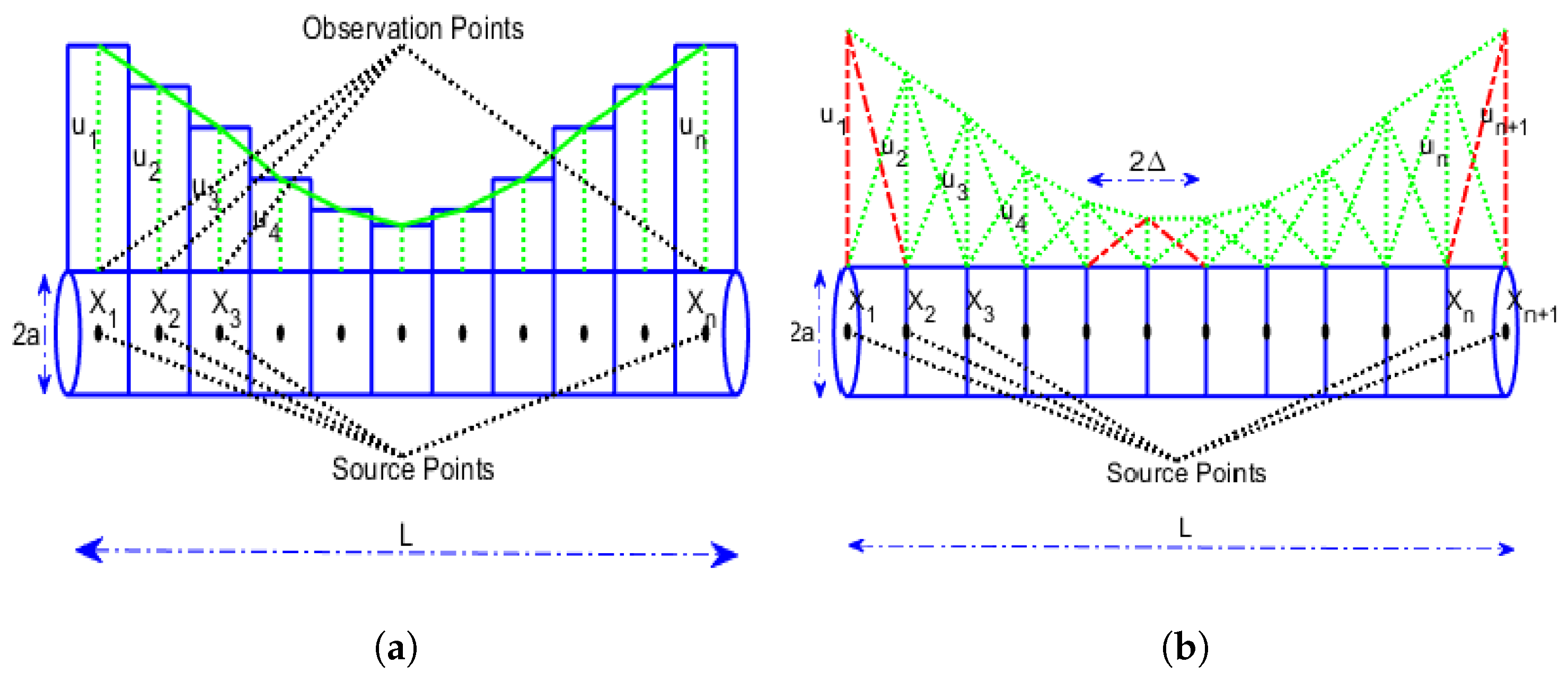

3. The Method of Moments: A Brief Overview

3.1. Backgrounds

- the first step discretizes (1) into a matrix equation by means of basis (or expansion) functions and weighting (or testing) functions;

- the second step evaluates the matrix elements;

- the third step solves the matrix equation to obtain the parameters of interest.
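
The three steps above can be sketched for the simplest pairing, pulse basis functions with delta testing functions (point matching). This is a minimal illustration, not the authors' implementation: it assumes a thin straight conductor held at a constant potential, the standard reduced thin-wire kernel (charge placed on the axis, potential observed at distance `radius`), and closed-form integration over each section. The function name `mom_point_matching` and all parameter names are hypothetical.

```python
import numpy as np

EPS0 = 8.8541878128e-12  # permittivity of free space, F/m


def mom_point_matching(length=1.0, radius=1e-3, n=20, voltage=1.0):
    """Pulse basis + delta testing for a thin straight conductor (illustrative)."""
    delta = length / n
    # Step 1: discretize. The conductor is split into n equal sections and the
    # match points are taken at the section midpoints.
    x = (np.arange(n) + 0.5) * delta
    # Step 2: matrix elements. Entry (m, k) is the potential at match point m
    # due to a unit line charge density on section k, using the closed form
    # of the integral of 1 / sqrt(u**2 + radius**2) over the section.
    d = x[:, None] - x[None, :]
    L = (np.arcsinh((d + delta / 2) / radius)
         - np.arcsinh((d - delta / 2) / radius)) / (4 * np.pi * EPS0)
    # Step 3: solve L @ alpha = V for the charge densities, then post-process.
    alpha = np.linalg.solve(L, np.full(n, voltage))
    capacitance = alpha.sum() * delta / voltage  # C = Q / V
    return alpha, capacitance


if __name__ == "__main__":
    alpha, c = mom_point_matching()
    print(f"C = {c:.3e} F")
```

A direct solver is used here only to keep the sketch short; for large `n` the same matrix could be handed to an iterative Krylov solver instead.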

3.2. Point Matching

3.3. Galerkin Procedure

3.4. The Set of Functions

3.4.1. Pulse Functions

3.4.2. Delta Function

3.4.3. Piecewise Triangular Functions

4. The Formulations

- In the first case, the pulse function is the basis function and the delta function is the testing function, as required by the point-matching approach;

- In the second case, following the pseudo-Galerkin procedure, the pulse function is both the basis function and the testing function;

- In the third case, the triangular function is the basis function and the delta function is the testing function (point-matching approach);

- In the fourth case, the triangular function is the basis function and the pulse function is the testing function;

- Finally, in the fifth case, the triangular function is both the basis function and the testing function, according to the pseudo-Galerkin approach.

4.1. First Case: Pulse Function as Basis Function and Delta Function as Testing Function (Point Matching)

4.2. Second Case: Pulse Function as Basis Function as well as Testing Function

4.3. Third Case: Triangular Function as Basis Function and Delta Function as Testing Function (Point Matching)

4.4. Fourth Case: Triangular Function as Basis Function and Pulse Function as Testing Function

4.5. Fifth Case: Triangular Function as a Basis Function with the Galerkin Procedure

5. High-Dimension Linear Systems Resolution: A Krylov Subspaces Approach

5.1. Iterations in Krylov Subspaces

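- (1) Ritz–Galerkin approach: construct the vector $x_k$ such that the corresponding residual is orthogonal to the Krylov subspace with index $k$, i.e., $b - A x_k \perp \mathcal{K}_k(A; r_0)$;

- (2) The minimum residual norm approach: identify the $x_k$ for which the Euclidean residual norm $\| b - A x_k \|_2$ is minimum over $\mathcal{K}_k(A; r_0)$;

- (3) Petrov–Galerkin approach: find $x_k$ such that the residual $b - A x_k$ is orthogonal to some other suitable subspace of dimension $k$;

- (4) The minimum norm error approach: determine $x_k \in A^T \mathcal{K}_k(A^T; r_0)$ (where $\mathcal{K}_k(A^T; r_0)$ is the Krylov subspace with index $k$ generated by $A^T$ and $r_0$) so that the Euclidean norm of the error $\| x_k - x \|_2$ is minimal.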
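
The first two approaches can be compared directly on a small dense system. The sketch below is illustrative only: it builds the Krylov basis from explicit matrix powers followed by a QR factorization, which is adequate for small `k` but numerically poor for large `k` (practical solvers use Arnoldi or Lanczos recurrences instead). The function names are hypothetical.

```python
import numpy as np


def krylov_basis(A, r0, k):
    """Orthonormal basis of span{r0, A r0, ..., A^(k-1) r0} (small k only)."""
    cols = [r0]
    for _ in range(k - 1):
        cols.append(A @ cols[-1])
    Q, _ = np.linalg.qr(np.column_stack(cols))
    return Q


def ritz_galerkin(A, b, x0, k):
    """Iterate whose residual is orthogonal to the Krylov subspace."""
    r0 = b - A @ x0
    V = krylov_basis(A, r0, k)
    # Enforce V^T (r0 - A V y) = 0.
    y = np.linalg.solve(V.T @ A @ V, V.T @ r0)
    return x0 + V @ y


def minimum_residual(A, b, x0, k):
    """Iterate minimizing the Euclidean residual norm over the subspace."""
    r0 = b - A @ x0
    V = krylov_basis(A, r0, k)
    # Least-squares problem: min over y of ||r0 - A V y||_2.
    y, *_ = np.linalg.lstsq(A @ V, r0, rcond=None)
    return x0 + V @ y
```

The Ritz–Galerkin condition is the one underlying CG for symmetric positive definite matrices, while the minimum residual condition is the one underlying GMRES.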

5.2. Conjugate Gradient (CG) as Krylov Method

5.3. The Arnoldi Method

The Arnoldi Iteration

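- The algorithm starts by means of an arbitrary vector $q_1$ whose norm is 1;

- For $k = 2, 3, \dots$, $q_k = A q_{k-1}$; in addition, for $j = 1, \dots, k-1$, $h_{j,k-1} = q_j^{*} q_k$ and $q_k = q_k - h_{j,k-1} q_j$;

- Then, $h_{k,k-1} = \| q_k \|$ and $q_k = q_k / h_{k,k-1}$;

- The algorithm breaks down when $q_k$ is a null vector or, alternatively, each of its components is smaller in magnitude than a fixed tolerance. This occurs when the minimal polynomial of $A$ is of degree $k$.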
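
The steps above can be sketched as follows. This is a minimal version for real matrices, using modified Gram–Schmidt and zero-based indices, so `H[j, k]` here plays the role of the entry built at step `k + 2` of the list. The function name `arnoldi` and the tolerance value are assumptions.

```python
import numpy as np


def arnoldi(A, q1, m, tol=1e-12):
    """Run m Arnoldi steps on a real matrix A; return Q and Hessenberg H."""
    n = A.shape[0]
    Q = np.zeros((n, m + 1))
    H = np.zeros((m + 1, m))
    Q[:, 0] = q1 / np.linalg.norm(q1)      # starting vector with unit norm
    for k in range(m):
        v = A @ Q[:, k]                    # new candidate direction
        for j in range(k + 1):             # orthogonalize against previous q_j
            H[j, k] = Q[:, j] @ v
            v = v - H[j, k] * Q[:, j]
        H[k + 1, k] = np.linalg.norm(v)
        if H[k + 1, k] < tol:              # breakdown: subspace is invariant
            return Q[:, :k + 1], H[:k + 1, :k + 1]
        Q[:, k + 1] = v / H[k + 1, k]      # normalize
    return Q, H
```

Without breakdown the outputs satisfy `A @ Q[:, :m] == Q @ H` up to rounding, which is the relation GMRES exploits to reduce the residual minimization to a small least-squares problem.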

5.4. GMRES Method

5.5. The Biconjugate Gradient Method (BiCG)

5.6. The CGS Method

5.7. The BicGStab Method

- (1) Firstly, we compute $r_0 = b - A x_0$;

- (2) Then, we set $p_0 = r_0$;

- (3) For $j = 0, 1, \dots$ until convergence, we compute the following quantities
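
A compact sketch of these three steps is given below. The update formulas inside the loop are the standard unpreconditioned BiCGStab recurrences and are not taken from the source; the shadow residual is assumed to be `r_hat = r0`, the stopping test is a relative residual norm, and breakdown cases (for example a vanishing `omega` or `rho`) are not handled. The function name and its parameters are hypothetical.

```python
import numpy as np


def bicgstab(A, b, x0=None, tol=1e-10, max_iter=1000):
    """Unpreconditioned BiCGStab for a real square system (illustrative)."""
    x = np.zeros_like(b, dtype=float) if x0 is None else x0.astype(float)
    r = b - A @ x                  # (1) initial residual
    r_hat = r.copy()               # shadow residual, assumed equal to r0
    p = r.copy()                   # (2) initial search direction
    rho = r_hat @ r
    b_norm = np.linalg.norm(b) or 1.0
    for j in range(max_iter):      # (3) iterate until convergence
        if np.linalg.norm(r) <= tol * b_norm:
            return x, j
        Ap = A @ p
        alpha = rho / (r_hat @ Ap)
        s = r - alpha * Ap         # intermediate residual
        if np.linalg.norm(s) <= tol * b_norm:
            return x + alpha * p, j + 1
        As = A @ s
        omega = (As @ s) / (As @ As)   # local residual-minimizing step
        x = x + alpha * p + omega * s
        r = s - omega * As
        rho_new = r_hat @ r
        beta = (rho_new / rho) * (alpha / omega)
        p = r + beta * (p - omega * Ap)
        rho = rho_new
    return x, max_iter
```

Each iteration costs two matrix-vector products and keeps only a handful of vectors, in contrast to GMRES, whose storage grows with the iteration count unless it is restarted.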

5.8. Comparison between GMRES and BicGStab

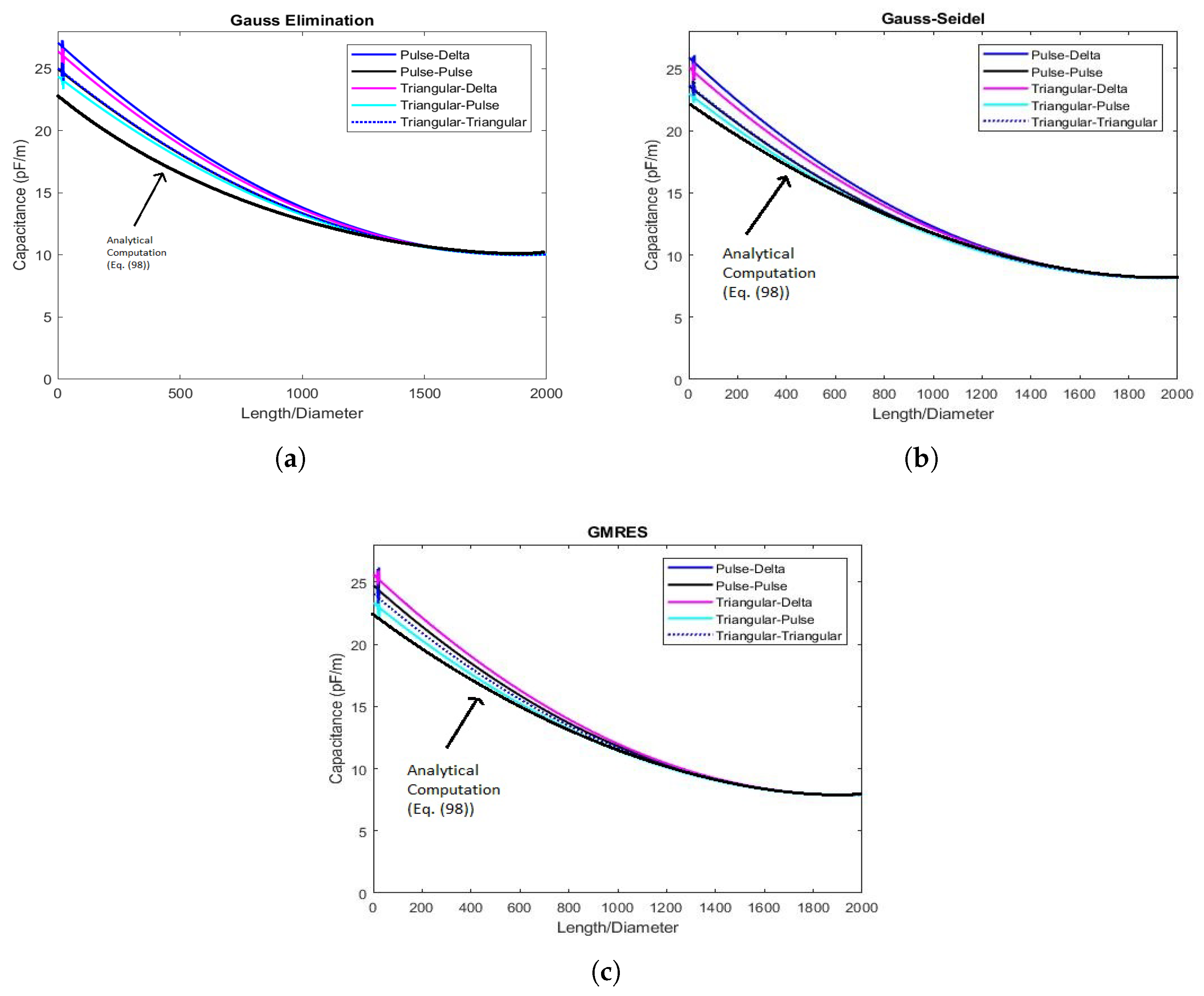

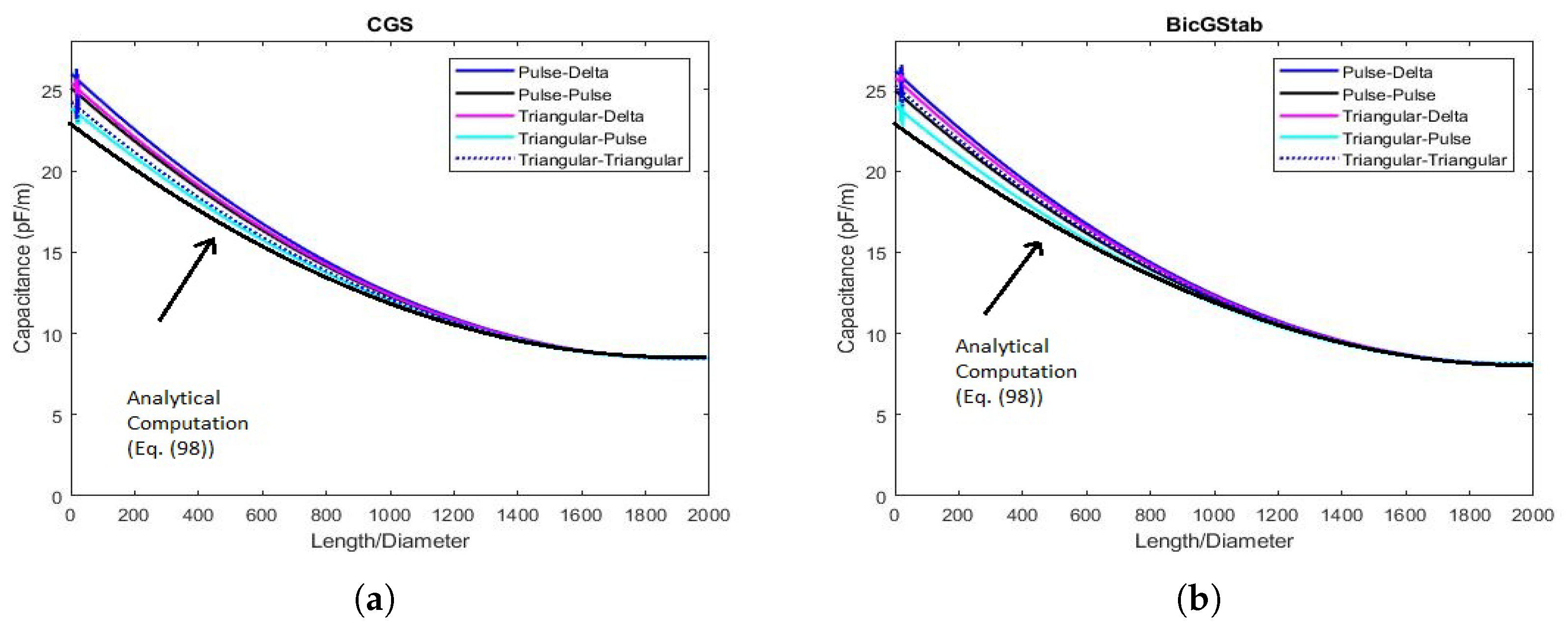

6. Numerical Results

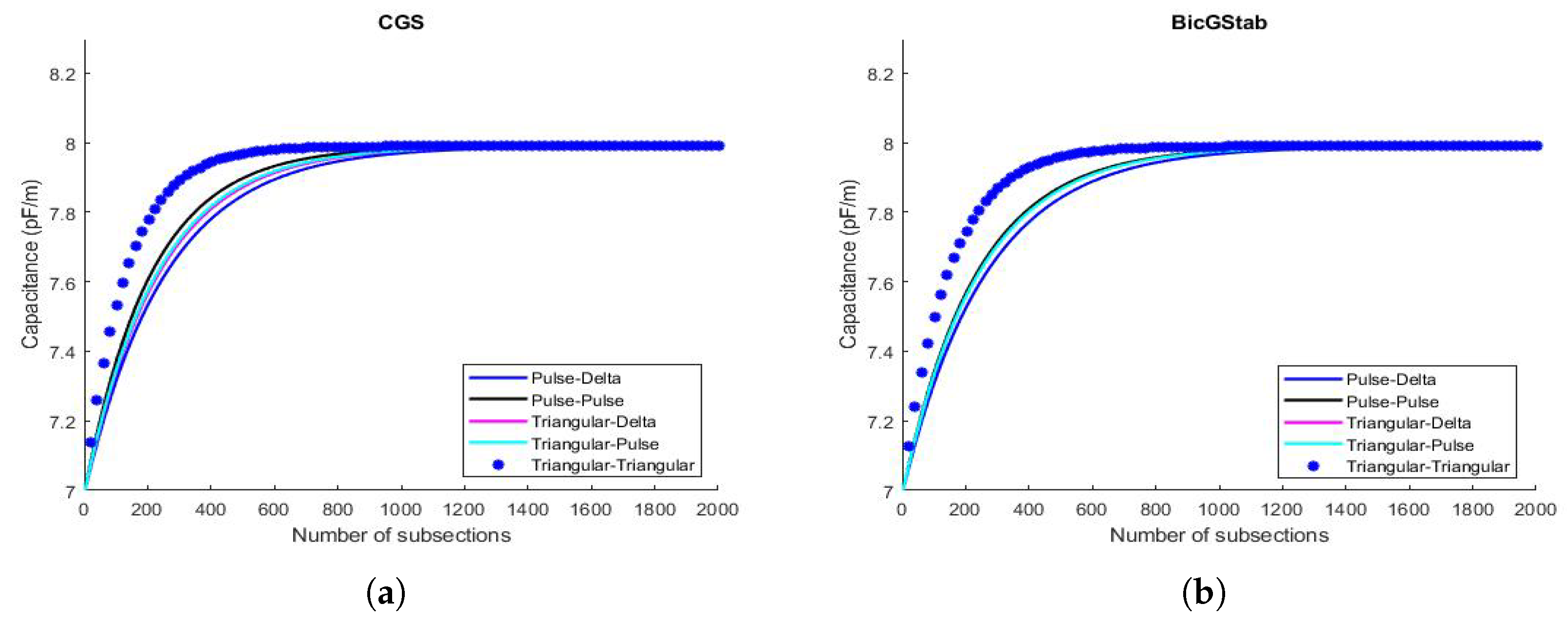

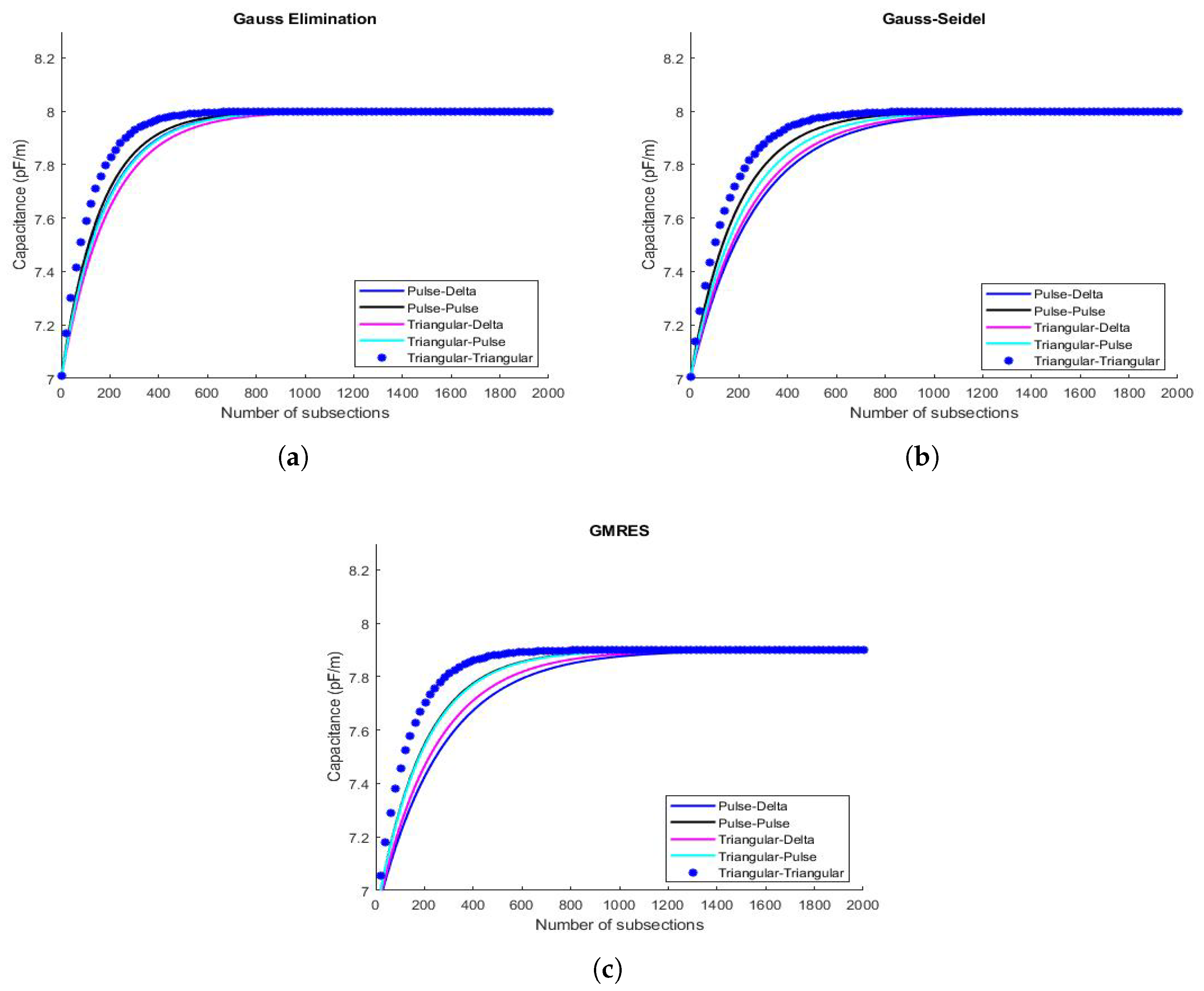

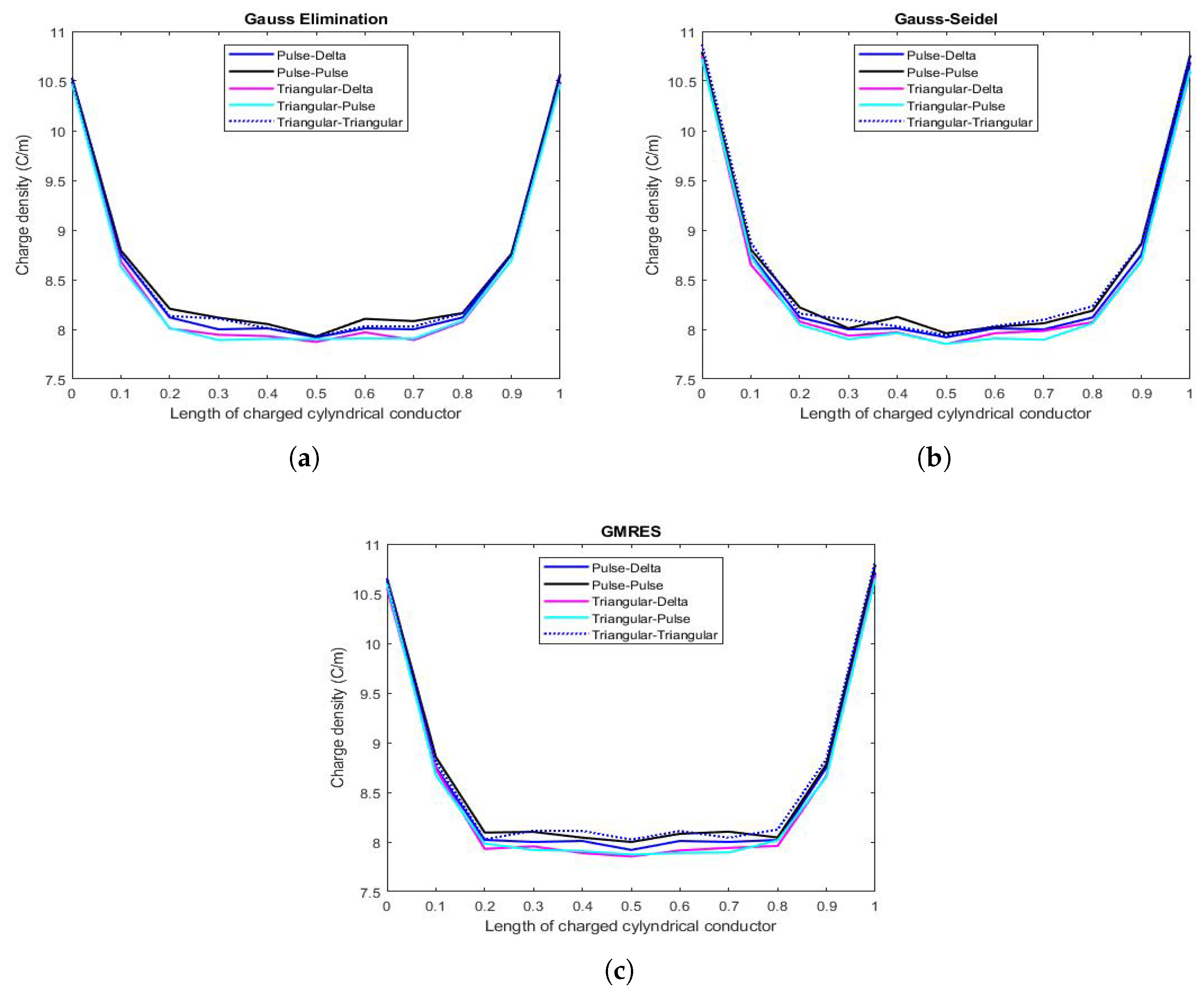

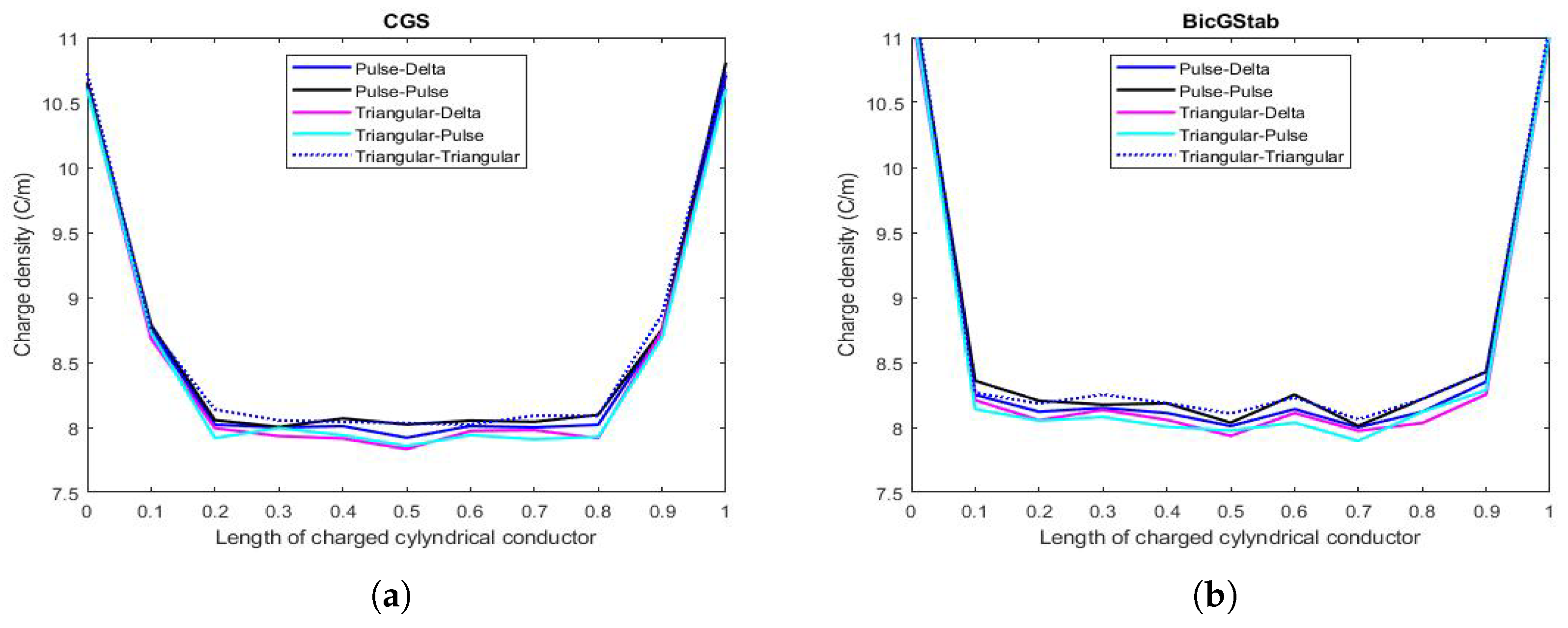

6.1. Results Concerning the Values of Capacitance C & Instability Phenomena

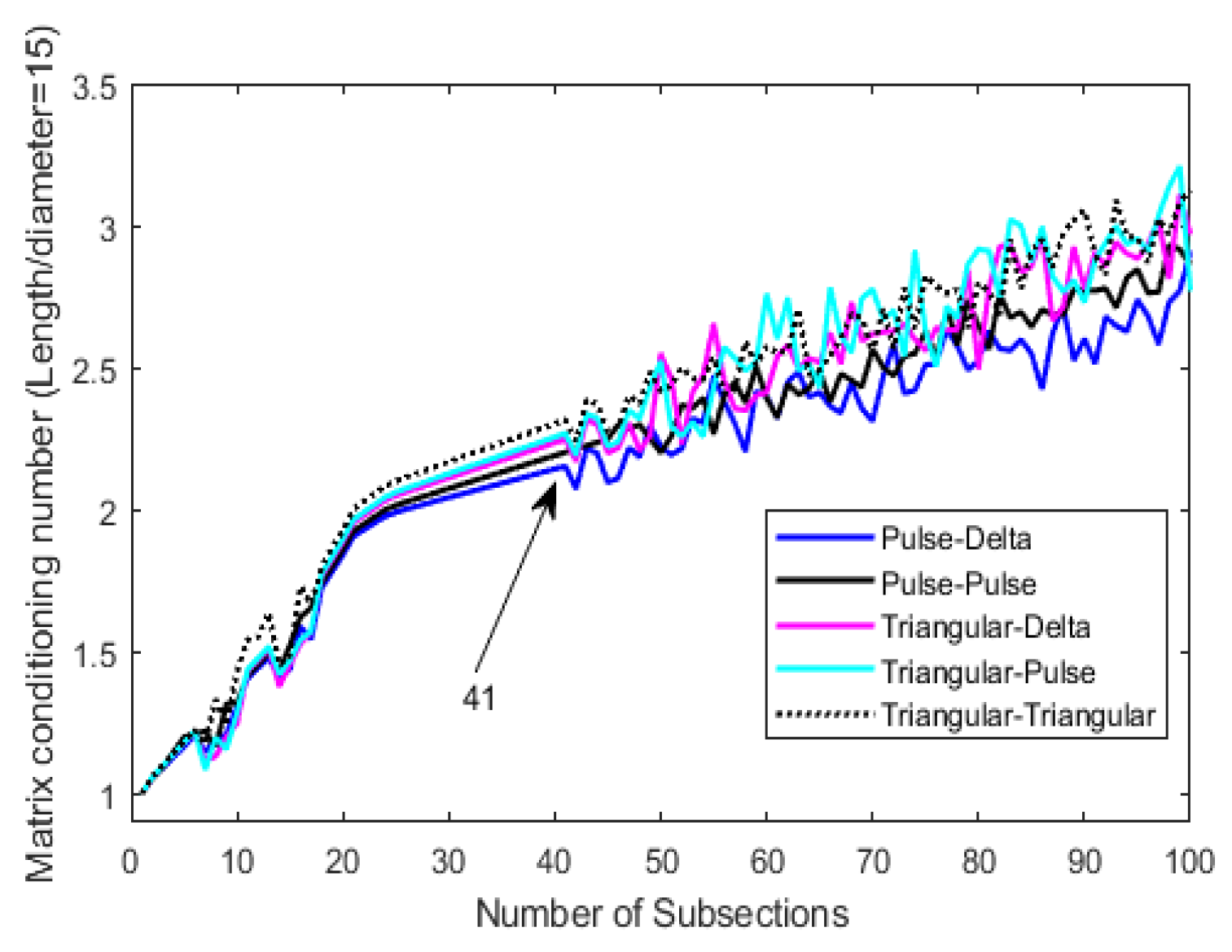

6.2. Condition Number $K$ & Performance of the Procedures

6.3. Selection Criteria for N

- 1.

- , ;

- 2.

- if and only if ;

- 3.

- , , ;

- 4.

- , .

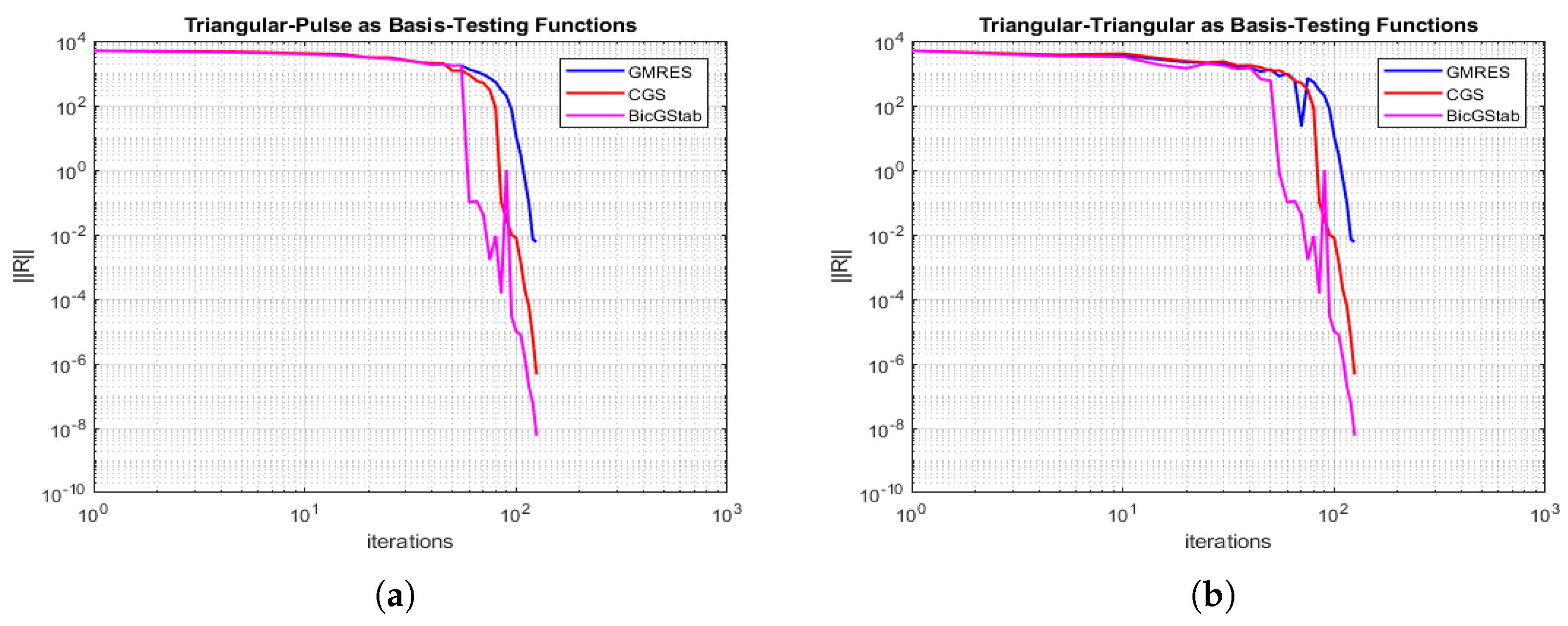

6.4. Convergence Speed & CPU-Time

7. Conclusions and Perspectives

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Symbol | Meaning |
|---|---|
| EM | electromagnetic problems |
| | differential, integral, or integro-differential operator |
| h | excitation source |
| f | unknown function to be determined |
| L | algebraic sparse matrix |
| MoM | method of moments |
| | electrostatic charge distribution |
| C | electrostatic capacitance |
| GMRES | generalized minimal residual method |
| CGS | conjugate gradient squared |
| BicGStab | biconjugate gradient stabilized |
| | system of Cartesian axes |
| x | longitudinal symmetry axis of the cylindrical conductor |
| | source region |
| | length of the conductor |
| a | radius of the conductor |
| | vector pointing to the observation points |
| | vector pointing to the source points |
| | electrostatic potential |
| | permittivity of the free space |
| | constants to be determined |
| | basis functions or expansion functions |
| R | residual |
| N | number of sections of the conductor |
| | weighting or testing functions |
| | Dirac delta |
| V | electrical voltage |
| | individual elements of the matrix L |
| | Krylov subspace with index r |
| | Krylov subspace with index k generated by $A^T$ and $r_0$ |
| K | condition number |


GMRES, Pulse–Delta

| N | Iterations | CPU-Time | Iterations (Restarting) | CPU-Time (Restarting) |
|---|---|---|---|---|
| 6 | 2 | 2.0140 | 3 | 2.0090 |
| 10 | 2 | 2.1400 | 3 | 2.0190 |
| 15 | 2 | 2.2813 | 3 | 2.2217 |
| 20 | 2 | 2.2501 | 3 | 2.2307 |
| 25 | 3 | 2.1875 | 5 | 2.1755 |
| 30 | 3 | 2.3281 | 5 | 2.1875 |
| 60 | 3 | 2.6719 | 5 | 2.5313 |
| 100 | 5 | 2.5469 | 7 | 2.3906 |
| 250 | 6 | 2.7969 | 8 | 2.6875 |
| 500 | 6 | 4.0938 | 8 | 3.9063 |
| 1000 | 7 | 11.0000 | 8 | 10.2656 |
| 2000 | 8 | 48.6719 | 9 | 32.2188 |

GMRES, Pulse–Pulse

| N | Iterations | CPU-Time | Iterations (Restarting) | CPU-Time (Restarting) |
|---|---|---|---|---|
| 6 | 2 | 2.7969 | 3 | 2.6063 |
| 10 | 2 | 2.8438 | 3 | 2.6094 |
| 15 | 2 | 3.0156 | 3 | 3.0125 |
| 20 | 2 | 2.6875 | 3 | 2.6399 |
| 25 | 3 | 2.3906 | 3 | 2.2501 |
| 30 | 3 | 2.0910 | 3 | 2.0154 |
| 60 | 3 | 2.6719 | 4 | 2.5750 |
| 100 | 4 | 2.6999 | 4 | 2.8910 |
| 250 | 4 | 3.1406 | 4 | 3.0694 |
| 500 | 4 | 4.1875 | 5 | 3.9219 |
| 1000 | 5 | 9.3438 | 6 | 7.2344 |
| 2000 | 6 | 34.3281 | 7 | 32.7344 |

GMRES, Triangular–Delta

| N | Iterations | CPU-Time | Iterations (Restarting) | CPU-Time (Restarting) |
|---|---|---|---|---|
| 6 | 2 | 2.7344 | 3 | 2.5781 |
| 10 | 2 | 2.7656 | 3 | 3.0156 |
| 15 | 2 | 2.5625 | 3 | 2.4844 |
| 20 | 3 | 2.7188 | 3 | 2.2500 |
| 25 | 3 | 2.7969 | 4 | 2.3281 |
| 30 | 3 | 2.7813 | 4 | 2.2344 |
| 60 | 3 | 2.6406 | 4 | 2.1875 |
| 100 | 3 | 2.6719 | 4 | 2.6250 |
| 250 | 4 | 3.4625 | 4 | 3.3906 |
| 500 | 4 | 4.3281 | 5 | 3.4375 |
| 1000 | 5 | 8.6250 | 6 | 8.5938 |
| 2000 | 6 | 42.7813 | 7 | 37.3750 |

GMRES, Triangular–Pulse

| N | Iterations | CPU-Time | Iterations (Restarting) | CPU-Time (Restarting) |
|---|---|---|---|---|
| 6 | 2 | 2.8281 | 3 | 3.0625 |
| 10 | 2 | 3.1250 | 3 | 2.9844 |
| 15 | 2 | 2.5313 | 3 | 2.2344 |
| 20 | 3 | 2.5938 | 3 | 2.2031 |
| 25 | 3 | 2.2384 | 4 | 2.1875 |
| 30 | 3 | 3.0000 | 4 | 2.1563 |
| 60 | 3 | 2.4375 | 4 | 2.3594 |
| 100 | 4 | 3.2344 | 5 | 2.9688 |
| 250 | 4 | 2.7344 | 5 | 2.7188 |
| 500 | 5 | 3.5156 | 6 | 3.4250 |
| 1000 | 6 | 9.7969 | 7 | 9.1250 |
| 2000 | 6 | 34.2500 | 7 | 31.0587 |

GMRES, Triangular–Triangular

| N | Iterations | CPU-Time | Iterations (Restarting) | CPU-Time (Restarting) |
|---|---|---|---|---|
| 6 | 2 | 2.0589 | 3 | 2.0358 |
| 10 | 2 | 2.0825 | 3 | 1.9875 |
| 15 | 2 | 2.1875 | 3 | 2.0987 |
| 20 | 3 | 2.5156 | 3 | 2.1719 |
| 25 | 3 | 2.6250 | 4 | 2.2656 |
| 30 | 3 | 2.1406 | 4 | 2.3594 |
| 60 | 3 | 2.4063 | 4 | 2.4001 |
| 100 | 4 | 2.7656 | 5 | 2.4844 |
| 250 | 4 | 2.4219 | 5 | 2.4089 |
| 500 | 5 | 4.3125 | 6 | 3.8281 |
| 1000 | 6 | 8.5156 | 7 | 7.7188 |
| 2000 | 6 | 35.9219 | 7 | 33.4688 |

Gauss Elimination

| N | Pulse–Delta CPU-Time | Pulse–Pulse CPU-Time | Triangular–Delta CPU-Time | Triangular–Pulse CPU-Time | Triangular–Triangular CPU-Time |
|---|---|---|---|---|---|
| 6 | 0.0313 | 0.0156 | 0.0156 | 0.0156 | 0.0156 |
| 10 | 0.0156 | 0.0163 | 0.0169 | 0.0173 | 0.0174 |
| 15 | 0.0434 | 0.0436 | 0.0433 | 0.0469 | 0.0521 |
| 20 | 0.0625 | 0.0691 | 0.0729 | 0.0785 | 0.0873 |
| 25 | 0.0794 | 0.0749 | 0.0757 | 0.0789 | 0.0798 |
| 30 | 0.0813 | 0.0836 | 0.0849 | 0.0875 | 0.0899 |
| 60 | 0.2313 | 0.2156 | 0.2515 | 0.2790 | 0.2873 |
| 100 | 0.3290 | 0.3334 | 0.3512 | 0.3586 | 0.3599 |
| 250 | 0.6250 | 0.6094 | 0.6406 | 0.6563 | 0.7344 |
| 500 | 2.0781 | 2.0625 | 2.4375 | 2.3125 | 2.4219 |
| 1000 | 15.7188 | 17.0625 | 17.9063 | 17.6563 | 18.2031 |
| 2000 | 115.6875 | 117.3281 | 117.8125 | 122.5781 | 143.3438 |

Gauss–Seidel

| N | Pulse–Delta CPU-Time | Pulse–Pulse CPU-Time | Triangular–Delta CPU-Time | Triangular–Pulse CPU-Time | Triangular–Triangular CPU-Time |
|---|---|---|---|---|---|
| 6 | 0.0078 | 0.0093 | 0.0099 | 0.0089 | 0.0080 |
| 10 | 0.0145 | 0.0130 | 0.0096 | 0.0117 | 0.0159 |
| 15 | 0.0192 | 0.0195 | 0.0148 | 0.0171 | 0.0216 |
| 20 | 0.0245 | 0.0279 | 0.0314 | 0.0301 | 0.0317 |
| 25 | 0.0305 | 0.0377 | 0.0288 | 0.0313 | 0.0399 |
| 30 | 0.0443 | 0.0437 | 0.0534 | 0.0829 | 0.0827 |
| 60 | 0.1476 | 0.2309 | 0.2284 | 0.2378 | 0.1849 |
| 100 | 0.4046 | 0.5806 | 0.4640 | 0.5668 | 0.5505 |
| 250 | 3.0992 | 4.4617 | 4.9277 | 5.4185 | 6.4172 |
| 500 | 16.0497 | 18.5411 | 21.9452 | 20.6219 | 20.8443 |
| 1000 | 63.5523 | 74.6598 | 71.0213 | 80.4083 | 72.5813 |
| 2000 | 326.7238 | 311.5719 | 321.5325 | 352.0632 | 377.7439 |

CGS

| N | Pulse–Delta Iterations | Pulse–Delta CPU-Time | Pulse–Pulse Iterations | Pulse–Pulse CPU-Time | Triangular–Delta Iterations | Triangular–Delta CPU-Time |
|---|---|---|---|---|---|---|
| 6 | 2 | 2.0151 | 2 | 2.7841 | 2 | 2.6999 |
| 10 | 2 | 2.1235 | 2 | 2.8891 | 2 | 2.7391 |
| 15 | 2 | 2.2789 | 2 | 3.0045 | 2 | 2.5701 |
| 20 | 2 | 2.2124 | 2 | 2.6587 | 2 | 2.7089 |
| 25 | 3 | 2.1822 | 3 | 2.3924 | 3 | 2.7899 |
| 30 | 3 | 2.3214 | 3 | 2.0892 | 3 | 2.7796 |
| 60 | 3 | 2.6745 | 3 | 2.6752 | 3 | 2.6498 |
| 100 | 5 | 2.5402 | 5 | 2.6905 | 5 | 2.6746 |
| 250 | 6 | 2.7912 | 6 | 3.1382 | 6 | 3.4601 |
| 500 | 6 | 4.1598 | 6 | 4.1926 | 6 | 4.3301 |
| 1000 | 7 | 11.0548 | 7 | 9.3875 | 7 | 8.6254 |
| 2000 | 8 | 48.0254 | 8 | 34.0369 | 8 | 42.9816 |

CGS (continued)

| N | Triangular–Pulse Iterations | Triangular–Pulse CPU-Time | Triangular–Triangular Iterations | Triangular–Triangular CPU-Time |
|---|---|---|---|---|
| 6 | 2 | 2.8101 | 2 | 2.5147 |
| 10 | 2 | 3.1341 | 2 | 2.0799 |
| 15 | 2 | 2.5401 | 2 | 2.1798 |
| 20 | 2 | 2.5899 | 2 | 2.5124 |
| 25 | 3 | 2.2322 | 3 | 2.6212 |
| 30 | 3 | 2.9971 | 3 | 2.1427 |
| 60 | 3 | 2.4301 | 3 | 2.3983 |
| 100 | 5 | 3.2314 | 5 | 2.7691 |
| 250 | 6 | 2.7391 | 6 | 2.4290 |
| 500 | 6 | 3.5149 | 6 | 4.3259 |
| 1000 | 7 | 9.9782 | 7 | 8.5203 |
| 2000 | 8 | 34.9311 | 8 | 35.9315 |

BicGStab

| N | Pulse–Delta Iterations | Pulse–Delta CPU-Time | Pulse–Pulse Iterations | Pulse–Pulse CPU-Time | Triangular–Delta Iterations | Triangular–Delta CPU-Time |
|---|---|---|---|---|---|---|
| 6 | 5 | 0.2188 | 5 | 0.3438 | 5 | 0.4531 |
| 10 | 12 | 0.2656 | 11 | 0.5156 | 14 | 0.3125 |
| 15 | 28 | 0.2969 | 20 | 0.4844 | 21 | 0.2656 |
| 20 | 48 | 0.1563 | 48 | 0.1496 | 38 | 0.1094 |
| 25 | 59 | 0.2500 | 64 | 0.2500 | 93 | 0.2188 |
| 30 | 134 | 0.1250 | 50 | 0.1406 | 181 | 0.4219 |
| 60 | 130 | 0.5000 | 868 | 0.6094 | 286 | 0.3125 |
| 100 | 189 | 0.3438 | 307 | 0.3906 | 204 | 0.3281 |
| 250 | 98 | 0.5313 | 851 | 0.7969 | 95 | 0.4219 |
| 500 | 317 | 0.8906 | 251 | 0.5156 | 325 | 0.6875 |
| 1000 | 304 | 1.3125 | 320 | 1.3906 | 143 | 0.8906 |
| 2000 | 468 | 24.8281 | 799 | 25.8439 | 924 | 24.4219 |

BicGStab (continued)

| N | Triangular–Pulse Iterations | Triangular–Pulse CPU-Time | Triangular–Triangular Iterations | Triangular–Triangular CPU-Time |
|---|---|---|---|---|
| 6 | 5 | 0.3987 | 5 | 0.4193 |
| 10 | 16 | 0.2188 | 16 | 0.2982 |
| 15 | 26 | 0.2656 | 27 | 0.0938 |
| 20 | 67 | 0.2656 | 63 | 0.2188 |
| 25 | 65 | 0.2969 | 90 | 0.1875 |
| 30 | 197 | 0.2656 | 141 | 0.1406 |
| 60 | 135 | 0.3750 | 344 | 0.3281 |
| 100 | 191 | 0.4063 | 179 | 0.5625 |
| 250 | 449 | 0.7969 | 115 | 0.3750 |
| 500 | 416 | 0.7656 | 235 | 0.5625 |
| 1000 | 395 | 1.5938 | 113 | 0.5781 |
| 2000 | 1013 | 25.5000 | 1023 | 25.9243 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Versaci, M.; Angiulli, G. Electrostatic Capacity of a Metallic Cylinder: Effect of the Moment Method Discretization Process on the Performances of the Krylov Subspace Techniques. Mathematics 2020, 8, 1431. https://doi.org/10.3390/math8091431