1. Introduction

Classification approaches that rely on a single model have various limitations. Data preprocessing plays a significant role in the quality of the entire dataset. In data preprocessing, detecting outliers, that is, observations that appear not to belong in the data, is one of the important tasks. Outliers can be caused by human error, such as mislabeling or transposing numerals, and by programming bugs. If they are not removed from the raw dataset, outliers corrupt the results to a small or large degree, depending on the circumstances. This study develops a fuzzy selection strategy that uses fuzzy membership to select the data to be eliminated from datasets. New algorithms should treat noise and provide high-quality, clean data (smart data) in Big Data analysis problems [1]. Therefore, this study attempts to handle noisy data by proposing a new algorithm for unstructured datasets. Several studies have developed outlier detection methods. For example, van der Gaag [2] used FDSTools noise profiles to obtain training datasets and a test set in order to analyze the impact of FDSTools noise correction for different analysis thresholds. This method obtained a higher-quality training dataset, leading to improved performance. Niu and Wang [3] proposed a combined model to achieve accurate prediction results. The combined model included complete ensemble empirical mode decomposition, four neural network models, and a linear model. Cai et al. [4] adopted a Kalman filter to handle noisy datasets. Their Kalman filter is insensitive to non-Gaussian noise because it uses the maximum correntropy criterion. Numerical experiments on four benchmark datasets for traffic flow forecasting showed that this model outperformed the compared methods. Liu and Chen [5] conducted a comprehensive review of data processing strategies in wind energy forecasting models. Their review noted that existing data-driven forecasting models attach great significance to the proper application of data processing methods. Ma et al. [6] developed an unscented Kalman filter (UKF) with the generalized correntropy loss (GCL), termed GCL-UKF. GCL-UKF has been used to estimate and forecast the power system state. Numerical simulation results validated the efficacy of the proposed method for state estimation using various types of measurement. Wang et al. [7] developed a hybrid model that combines wavelet de-noising (WD) and Rank-Set Pair Analysis (RSPA). The hybrid model takes full advantage of the combination of the two approaches to improve forecasts of hydro-meteorological time series. Florez-Lozano et al. [8] developed an intelligent system that combined classic aggregation operators with neural and fuzzy systems. These studies proposed pre-processing methods to effectively handle datasets for various forecasting methods. Therefore, this study develops a fuzzy selection strategy that addresses fuzzy membership. The new fuzzy selection operator, based on the fuzzy clustering algorithm, is formulated to ensure that better members of the dataset are retained in the proposed classification system.

This study develops a support vector machine (SVM) classification model with a new fuzzy selection method to improve classification performance. Supervised classification is an essential technique for extracting quantitative information from a database, and the SVM is one of the most popular classifiers. Tang et al. [9] developed a joint segmentation and classification framework for sentence-level sentiment classification. Their method simultaneously generates useful segmentations and predicts sentence-level polarity based on the segmentation results. The effectiveness of the approach was verified by applying it to sentiment classification. Jiang et al. [10] proposed a method for the effective classification and localization of mixed sources. The advantage of this method is that it makes good use of known information to distinguish the distances of sources within mixed sources and to estimate the range parameters of near-field sources. Kasabov et al. [11] developed a new and efficient neuromorphic approach to complex and rich spatiotemporal brain data (STBD) and functional magnetic resonance imaging (fMRI) data. Shao et al. [12] developed SyncStream, a prototype-based classification model for evolving data streams. Building upon the techniques of error-driven representativeness learning, P-Tree based data maintenance, and concept drift handling, SyncStream allows dynamic modeling of the evolving concepts and supports good prediction performance. Wang et al. [13] developed Noise-resistant Statistical Traffic Classification (NSTC) to solve the traffic classification problem. NSTC could reduce noise and improve reliability, thereby improving the classification performance. Phan et al. [14] developed a joint classification-and-prediction framework based on convolutional neural networks (CNNs) for automatic sleep staging. The dataset was divided into nontransition and transition sets, and the study explored how different frameworks perform on them. Basha et al. [15] proposed interval principal component analysis to detect faults in the Tennessee Eastman (TE) Process with a higher degree of accuracy than other methods. The interval principal component analysis method maintained a high performance rate even at low GLR window sample sizes and low interval aggregation window sizes. The main focus of these studies was to solve actual problems using new classification methods. Moreover, pre-processing methods, such as those presented in [12,13], could improve performance. Therefore, this study develops a fuzzy selection strategy in which fuzzy membership and the SVM method together provide an effective way to perform supervised classification. The recent SVM literature is summarized in Table 1. It can be observed that the UCI datasets [16] have been examined in many studies, and that many studies have developed hybrid SVMs to improve classification performance.

Furthermore, several studies have combined fuzzy c-means (FCM) with SVM to improve the performance of the classifier [33,34,35,36,37,38]. These studies used the FCM clustering labels as a preprocessing mechanism for improving the SVM classifier. In contrast, this study adopts roulette wheel selection with the FCM membership function to select appropriate data for the training set. In genetic algorithms, traditional roulette wheel selection chooses candidates for elimination according to a probability. This study instead feeds the FCM membership values into roulette wheel selection to choose the candidates for elimination from the training dataset.
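
As a rough illustration of how membership values could drive roulette wheel selection, the following Python sketch computes standard FCM memberships for fixed cluster centers and then draws samples for elimination. It is only a sketch under stated assumptions, not the operator proposed in this study: the weighting rule (one minus the largest membership of a sample, so that ambiguous samples receive a larger slice of the wheel) and the function names `fcm_memberships`, `elimination_probs`, and `roulette_eliminate` are hypothetical choices made for the example.

```python
import numpy as np


def fcm_memberships(X, centers, m=2.0):
    """Standard FCM membership u[i, j] of sample i in cluster j for fixed centers."""
    # Pairwise Euclidean distances, shape (n_samples, n_clusters).
    d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
    d = np.maximum(d, 1e-12)  # avoid division by zero when a sample sits on a center
    inv = d ** (-2.0 / (m - 1.0))
    return inv / inv.sum(axis=1, keepdims=True)  # each row sums to 1


def elimination_probs(u):
    """Roulette wheel slice sizes, one per sample.

    Assumption for this sketch: a sample whose strongest membership is weak
    (it belongs clearly to no cluster) is a more likely outlier, so it gets
    a larger slice. The small constant keeps every slice strictly positive.
    """
    weights = 1.0 - u.max(axis=1) + 1e-12
    return weights / weights.sum()


def roulette_eliminate(u, n_remove, rng):
    """Draw n_remove distinct sample indices with probability proportional to slice size."""
    probs = elimination_probs(u)
    return rng.choice(len(u), size=n_remove, replace=False, p=probs)


# Toy data: two tight groups plus one point halfway between them.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
              [5.0, 5.0], [5.1, 5.0], [5.0, 5.1],
              [2.5, 2.5]])
centers = np.array([[0.0, 0.0], [5.0, 5.0]])  # assumed to come from a prior FCM run

u = fcm_memberships(X, centers)
rng = np.random.default_rng(0)
removed = roulette_eliminate(u, n_remove=2, rng=rng)
keep = np.setdiff1d(np.arange(len(X)), removed)  # indices left for training
```

Because the draw is probabilistic, a well-clustered sample can occasionally be removed and an ambiguous one kept; this is the usual trade-off of roulette wheel selection compared with a hard membership threshold.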

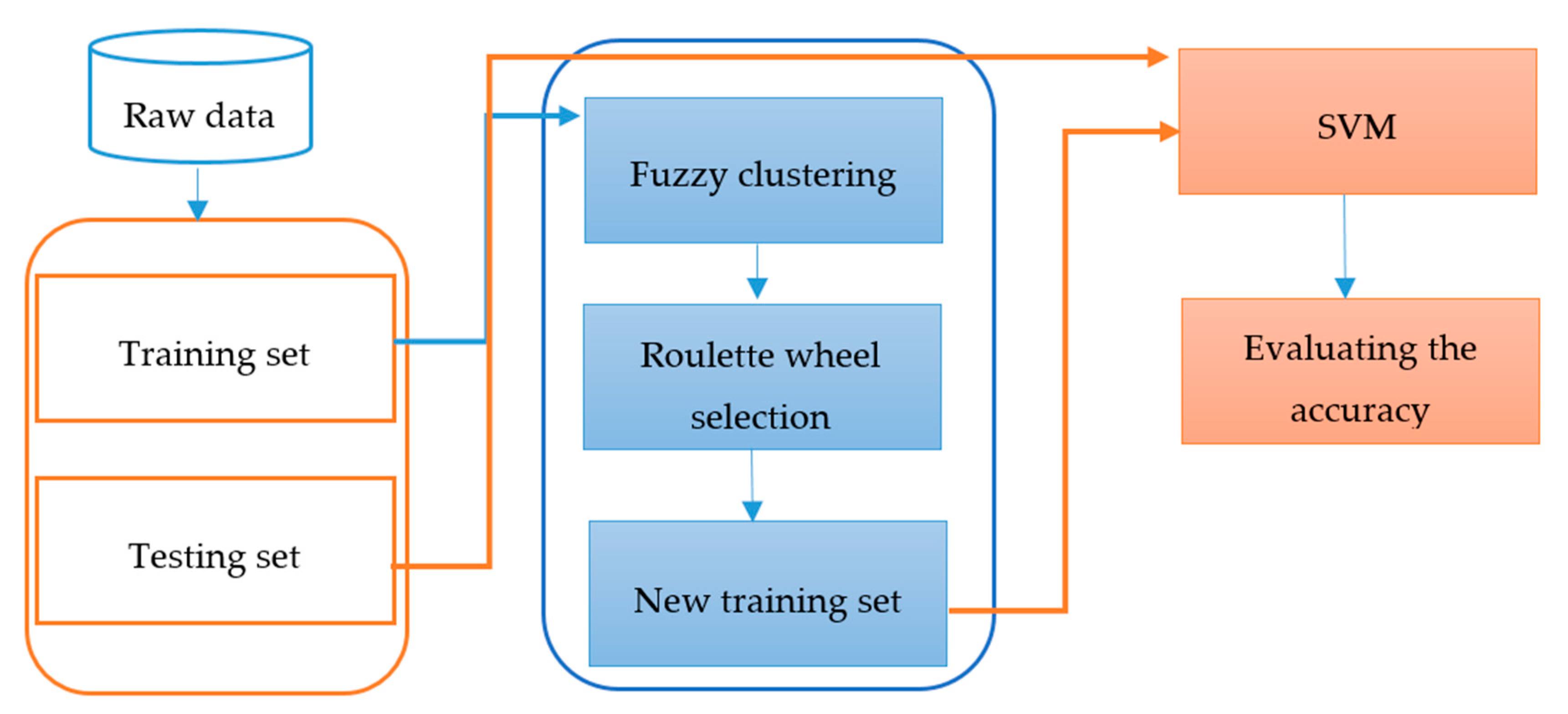

The purpose of this study is to develop a new classification method that combines SVM with a new fuzzy selection method. A core component of this study is the development of this fuzzy selection method, roulette wheel selection with a membership function, which selects appropriate data for the training set. The proposed methodology draws on the advantages of fuzzy clustering, roulette wheel selection, and SVM to effectively handle the dataset, reduce outlier data, and improve classification performance. The remainder of this paper is organized as follows: Section 2 presents the proposed support vector machine with new fuzzy selection (SVMFS) and introduces the new fuzzy selection method; Section 3 provides the research design of the SVMFS for an actual patent infringement problem; and Section 4 offers conclusions and suggestions for further research.