Abstract

The aim of this paper is to study the local dynamical behaviour of a broad class of purely iterative algorithms for Newton’s maps. In particular, we describe the nature and stability of fixed points and provide a type of scaling theorem. Based on those results, we apply a rigidity theorem in order to study the parameter space of cubic polynomials, for a large class of new root finding algorithms. Finally, we study the relations between critical points and the parameter space.

1. Introduction

The computation of solutions for equations of the form

is a classic problem that arises in different areas of mathematics, in particular in numerical analysis. Here is a complex function, and usually it is assumed that . Because the problem depends on the space where the equation is defined and where its possible solutions lie, it would be overly ambitious to expect a unified theory that provides exact, or even approximate, solutions for this whole class of equations. Moreover, depending on the goal being pursued, solving such an equation can differ greatly in nature, as can the techniques used to solve it. Compare, for instance, Picard–Lindelöf’s theorem (see, for example, [1]) on the existence and uniqueness of solutions of ordinary differential equations with the fundamental theorem of algebra in complex analysis. If, on the other hand, we turn our attention to explicit solutions, the problem becomes even more difficult.

Consider a complex polynomial f and

$$N_f(z) = z - \frac{f(z)}{f'(z)},$$

the classical Newton’s method. Besides Newton’s method, higher–order methods have been extensively used and studied in order to approximate the solutions of the equation $f(z) = 0$. The iteration function $N_f$ defines a rational map on the extended complex plane (Riemann sphere) $\hat{\mathbb{C}}$. The simple roots of the equation $f(z) = 0$, in other words, the roots of $f$ that are not roots of the derivative $f'$, are superattracting fixed points of $N_f$: if $\alpha$ is a simple root of $f$, then $N_f(\alpha) = \alpha$ and $N_f'(\alpha) = 0$. For a review of the dynamics of Newton’s method, see for instance [2,3].
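As a small numerical illustration of the last statement, the sketch below evaluates Newton’s map and its derivative $N_f'(z) = f(z)f''(z)/f'(z)^2$ at a simple root. The test polynomial $f(z) = z^3 - 1$ and all function names are assumptions made for illustration; they are not taken from the paper.

```python
def f(z):
    """Illustrative test polynomial f(z) = z**3 - 1 (an assumption)."""
    return z**3 - 1


def df(z):
    return 3 * z**2


def d2f(z):
    return 6 * z


def newton(z):
    """Newton's map N_f(z) = z - f(z)/f'(z)."""
    return z - f(z) / df(z)


def newton_derivative(z):
    """N_f'(z) = f(z) f''(z) / f'(z)**2, valid wherever f'(z) != 0."""
    return f(z) * d2f(z) / df(z) ** 2


alpha = 1 + 0j                         # a simple root of f
print(newton(alpha))                   # alpha is a fixed point of N_f
print(abs(newton_derivative(alpha)))   # 0.0: the multiplier vanishes

# Starting nearby, the error is roughly squared at each step.
z = 1.3 + 0.2j
for _ in range(6):
    z = newton(z)
    print(abs(z - alpha))
```

The vanishing multiplier is what makes the convergence quadratic near a simple root.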

More generally, consider the space of polynomials of degree d and the space of rational functions of degree k. By a root–finding algorithm or root–finding method we mean a rational map $f \mapsto T_f$ from the former space to the latter such that the roots of the polynomial f are attracting fixed points of $T_f$. A root–finding algorithm has order $p$ if the local degree of $T_f$ at every simple root of f is $p$.

In this paper we study the dynamical aspects of

where , , , and are real numbers. Depending on these parameters, this family has order 2, 3, 4 or 5. It can also be viewed as a generalization of the c–iterative functions (for a definition, see item 7 of Example 1 below).

In [4] C. McMullen proved a rigidity theorem implying that every purely iterative root–finding algorithm that is generally convergent for cubic polynomials is conformally conjugate to a generating map. Applying this result, J. Hawkins [5] obtained explicit expressions for the rational maps that are generating maps, and so it is natural to ask which members of a given family of root–finding algorithms arise from them. We use this rigidity result to show that, over the space of cubic polynomials, the maps of our family that generate a generally convergent algorithm are restricted to Halley’s method applied to the cubic polynomial.

The paper is organized as follows. Section 2 contains some basic notions of the classical theory of complex dynamics. In addition to establishing the notation and main examples, Section 3 contains the definition of purely iterative algorithms for Newton’s maps that will be used throughout the article. Section 4 is devoted to the study of the nature of fixed points. In Section 5 we study the order of convergence of the family (1), and in Section 6 we provide the results about scaling theorems. Section 7 contains the result concerning the maps that generate generally convergent root–finding algorithms for cubic polynomials. In Section 8 we study a fourth-order subfamily, its fixed points, and the relation between its critical points and the parameter space. Finally, Section 9 summarizes the conclusions.

2. Basic Notions in Complex Dynamics

We refer the reader to [6] or [7,8,9,10,11,12,13,14,15,16] for the basic notions of the classical Fatou–Julia theory of complex dynamics used in this paper (as general references on Fatou–Julia theory, see for instance P. Blanchard [17] and J. Milnor [18]). Here we give a brief summary. Let

$$R(z) = \frac{P(z)}{Q(z)}$$

be a rational map of the extended complex plane into itself, where P and Q are polynomials with no common factors.

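- A point $z_0 \in \hat{\mathbb{C}}$ is called a fixed point of R if $R(z_0) = z_0$, and the multiplier of R at a fixed point $z_0 \in \mathbb{C}$ is the complex number $\lambda = R'(z_0)$ (at $z_0 = \infty$, the multiplier is computed in the local coordinate $w = 1/z$).

- Depending on the value of the multiplier, a fixed point $z_0$ is superattracting ($\lambda = 0$), attracting ($|\lambda| < 1$), repelling ($|\lambda| > 1$) or indifferent ($|\lambda| = 1$).

- Let $z_0$ be a fixed point of $R^n$ which is not a fixed point of $R^j$ for any $j$ with $1 \le j < n$, and write $z_j = R^j(z_0)$. We say that $\{z_0, z_1, \ldots, z_{n-1}\}$ is a cycle of length $n$, or simply an $n$–cycle. Note that $R^n(z_j) = z_j$ for every $j$, and R acts as a permutation on the cycle.

- The multiplier of an $n$–cycle is the complex number $\lambda = (R^n)'(z_0) = \prod_{j=0}^{n-1} R'(z_j)$.

- The derivative $(R^n)'$ takes the same value at each point of the cycle.

- An $n$–cycle is said to be superattracting, attracting, repelling or indifferent depending on the value of its multiplier, under the same conditions as for fixed points.

- The Julia set of a rational map R, denoted $\mathcal{J}(R)$, is the closure of the set of repelling periodic points. Its complement is the Fatou set $\mathcal{F}(R)$. If $z_0$ is an attracting fixed point of R, then its basin of attraction $\mathcal{A}(z_0)$, the set of points whose orbits converge to $z_0$, is contained in the Fatou set and $\mathcal{J}(R) = \partial \mathcal{A}(z_0)$, where ∂ denotes the topological boundary.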
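The multiplier-based classification above can be sketched in a few lines of Python. The map $R(z) = z^2 - 1$ is an illustrative choice (it has the 2-cycle $\{0, -1\}$), and the helper names and the tolerance are assumptions of this sketch, not part of the paper.

```python
def classify(multiplier, tol=1e-12):
    """Classify a fixed point or cycle from its multiplier, as in the list above."""
    modulus = abs(multiplier)
    if modulus < tol:
        return "superattracting"
    if abs(modulus - 1) < tol:
        return "indifferent"
    return "attracting" if modulus < 1 else "repelling"


def cycle_multiplier(dR, cycle):
    """Multiplier of an n-cycle: (R^n)'(z_0) = R'(z_0) R'(z_1) ... R'(z_{n-1})."""
    product = 1
    for z in cycle:
        product *= dR(z)
    return product


def R(z):
    return z * z - 1             # illustrative map, not taken from the paper


def dR(z):
    return 2 * z


cycle = [0, -1]                  # R(0) = -1 and R(-1) = 0, so this is a 2-cycle
assert R(cycle[0]) == cycle[1] and R(cycle[1]) == cycle[0]
print(classify(cycle_multiplier(dR, cycle)))   # superattracting (multiplier 0)

phi = (1 + 5 ** 0.5) / 2         # fixed point of R, since phi**2 - 1 = phi
print(classify(dR(phi)))         # repelling: |R'(phi)| = 1 + sqrt(5) > 1
```

Because the product is taken over the whole cycle, the result does not depend on which point of the cycle is listed first.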

3. Definitions and Notations

Now we recall the definition of purely iterative algorithms due to S. Smale in [19]. Let be the space of all polynomials of degree less than or equal to . For every , define the space and the map

given by

where denotes the kth derivative of f. Let be the rational map defined as

where and are polynomials in variables , with no common factors. A purely iterative algorithm is a rational endomorphism that depends on and takes the form

for a rational map as in (2).

Consider a modification of the preceding definition. Let be the space consisting of the rational maps of degree less than or equal to . Define a subset as

Since Newton’s method applied to is a rational map that satisfies the conditions in , we conclude that , for every .

As above, define

by

Let be the rational map defined as

where and are polynomials in variables with no common factors.

We define a rational endomorphism , depending on , by

where .

The following result is proved in [6].

Theorem 1.

For everydefined as before, there exists a complex polynomial f of degree d such that for every,, whereis the Newton method. Also, there exists a linear space H of dimensionsuch thatis contained in.

Theorem 1 motivates the following definition.

Definition 1.

Letbe the rational endomorphism depending on, given by

where $N_f$ is Newton’s map applied to f, and G is defined by Formula (4). A rational endomorphism of this form will be called a purely iterative algorithm for Newton’s maps.

Remark 1.

In this paper we consider the family of purely iterative algorithms for Newton’s maps given by Formula (5), with

and

where , , , and are real numbers. Then the family is given by

which is exactly the Formula (1) above.

Example 1.

The family (1) of purely iterative algorithms for Newton’s maps includes several important families of root–finding algorithms.

- 1.

- Newton’s method is obtained by taking,. Indeed, in this caseHence. This method has been widely studied over the last decades [20].

- 2.

- Halley’s method is obtained by considering,,,and. Indeed,Therefore,For a study of dynamical and numerical properties of Halley’s method, see for instance [21,22].

- 3.

- Whittaker’s iterative method, also known as the convex acceleration of Whittaker’s method (see [23,24]), is an iteration map with order of convergence two, given by

- 4.

- Newton’s method for multiple roots is obtained by considering,and. Indeed,Note thatThis method has been studied by several authors. See for example [25,26] and more recently [27,28].

- 5.

- The following method, which may be new and is denoted by, is a modification of the super–Halley method (for a study of the super–Halley method, see for instance [29]). It is given by the formulaConsider the polynomials

- 6.

- More generally, considering,,,andwe obtain the following third-order family studied in [30,31].In this case

- 7.

- The following family of iterative functions represents Newton’s method, Chebyshev’s iterative function, Halley’s method, Super-Halley, c–iterative function (consideringbelow) and Chebyshev-Halley family, among others. See for instance [22,29,30,31,32,33,34,35,36,37,38]. The family of iterative methods given bywhere θ and c are complex parameters conveniently chosen, form a family of purely iterative algorithms for Newton’s maps. Indeed, this follows by considering the polynomialsandin (4) and (5).It is clear that,,,and.
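For experimentation, the sketch below writes several of the classical methods above in terms of the Newton correction $u = f/f'$ and $L_f = f f''/f'^2$. The parameter values of family (1) that produce each method are not reproduced here; instead we use the standard textbook forms, and the Chebyshev–Halley form uses a single parameter $\theta$ rather than the two-parameter $(\theta, c)$ family of item 7. The test polynomial and all function names are assumptions.

```python
def quantities(f, df, d2f, z):
    """Newton correction u = f/f' and degree of logarithmic convexity L = f f''/f'^2."""
    fz, d1, d2 = f(z), df(z), d2f(z)
    return fz / d1, fz * d2 / d1**2


def newton(f, df, d2f, z):
    u, _ = quantities(f, df, d2f, z)
    return z - u


def halley(f, df, d2f, z):
    u, L = quantities(f, df, d2f, z)
    return z - 2 / (2 - L) * u


def whittaker_convex(f, df, d2f, z):
    """Convex acceleration of Whittaker's method (second order)."""
    u, L = quantities(f, df, d2f, z)
    return z - (2 - L) / 2 * u


def newton_multiple(f, df, d2f, z, m):
    """Newton's method for a root of known multiplicity m."""
    u, _ = quantities(f, df, d2f, z)
    return z - m * u


def chebyshev_halley(f, df, d2f, z, theta):
    """Standard one-parameter form: theta = 0 Chebyshev, 1/2 Halley, 1 super-Halley."""
    u, L = quantities(f, df, d2f, z)
    return z - (1 + L / (2 * (1 - theta * L))) * u


# Illustrative test polynomial (an assumption): F(z) = z**3 - 1.
def F(z):
    return z**3 - 1


def dF(z):
    return 3 * z**2


def d2F(z):
    return 6 * z


def iterate(method, z, steps=8, **params):
    for _ in range(steps):
        z = method(F, dF, d2F, z, **params)
    return z


z0 = 1.4 + 0.3j
for name, method, params in [
    ("Newton", newton, {}),
    ("Halley", halley, {}),
    ("Whittaker (convex)", whittaker_convex, {}),
    ("Chebyshev", chebyshev_halley, {"theta": 0.0}),
    ("super-Halley", chebyshev_halley, {"theta": 1.0}),
]:
    print(f"{name:20s} {iterate(method, z0, **params):.12f}")
```

Writing every method as a function of the same derivative values mirrors the idea of a purely iterative algorithm: each step depends only on $z$ and on $f$ and its derivatives at $z$.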

Remark 2.

Note that a purely iterative algorithm for Newton’s maps may not be a root–finding algorithm. For instance, by considering the polynomial, and the purely iterative Newton’s map defined by

and

where, it follows that the root 1 is repelling for the associated rational map. In fact, in this case

Note that. Sincethen. Therefore

As a consequence the root 1 is a repelling fixed point.
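The phenomenon in Remark 2 is easy to reproduce numerically. The sketch below does not use the map of Remark 2 (its formula is not shown here); as an illustrative stand-in it uses the hypothetical map $z - \lambda\,(z - N_f(z))$ built from Newton’s map, whose multiplier at a simple root is $1 - \lambda$, and estimates that multiplier by a central difference. The value $\lambda = 3$ and the polynomial $z^2 - 1$ are assumptions.

```python
def derivative(R, z, h=1e-6):
    """Central-difference estimate of R'(z) for a holomorphic map R."""
    return (R(z + h) - R(z - h)) / (2 * h)


def f(z):
    return z**2 - 1          # illustrative polynomial with simple roots 1 and -1


def df(z):
    return 2 * z


def newton(z):
    return z - f(z) / df(z)


def relaxed(z, lam=3.0):
    """Hypothetical map z - lam * (z - N_f(z)), built only from Newton's map."""
    return z - lam * (z - newton(z))


root = 1.0
print(relaxed(root))                 # 1.0: the root is still a fixed point
print(derivative(relaxed, root))     # about 1 - lam = -2: the root is repelling
print(derivative(lambda z: relaxed(z, lam=1.0), root))  # about 0: Newton's method
```

Being built from Newton’s map keeps the root fixed, but it does not by itself make the root attracting.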

4. The Nature of Fixed Points

In order to ensure that is a root–finding algorithm (see Remark 2), some restrictions on the choice of the real parameters , , , and are required. Let be an integer and define

where,

and and .

Theorem 2.

Suppose that

for every. Letbe a complex polynomial of degree. Denote byits zeros and bytheir multiplicities. Thendefined in (1) is a root finding algorithm. Moreover,

- (a)

- Each rootof multiplicityis an attracting fixed point forwith multiplier. Assuming that, we have that every simple root is a superattracting fixed point for.

- (b)

- has a repelling fixed point at ∞ with multiplier.

- (c)

- If,andthen the extraneous fixed points ofare the zeros ofwhich are not zeros of f. More precisely, if β is a zero of orderof, then it is a repelling fixed point ofwith multiplier

Remark 3.

If, then by Formula (6) we have that, that is, the simple roots of a complex polynomial are parabolic fixed points for. In this casecannot be a root–finding algorithm. So, from now on,.

Proof.

(a) First note that the factor in (1) implies that for every i. If has a zero of multiplicity , then is a (super)attracting fixed point of Newton’s method with multiplier . Thus

It follows that

Consequently,

Hence, is an attracting fixed point with multiplier . Assuming that , we have , which implies that is a superattracting fixed point.

Note that the degree d polynomial f can be written as

for some . Indeed, if , then

Therefore, when tends to , it follows that , and we may write Newton’s method applied to the polynomial f as

By the construction of Formula (1), this implies that

and so,

Thus,

Suppose that is a zero of order of which is not a zero of f. Then is a pole of order for the map , that is,

This implies that

and consequently,

provided . Since and , the quantity is greater than one, and the proof is complete. □
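Parts (a) and (b) can be checked numerically in the special case of Newton’s method, where the multiplier at a root of multiplicity $m$ is $(m-1)/m$ and the multiplier at ∞ is $d/(d-1)$ for a polynomial of degree $d$. The sketch below assumes the illustrative cubic $f(z) = (z-1)^2(z+2)$ and studies ∞ in the coordinate $w = 1/z$; the finite-difference helper is an assumption of this sketch.

```python
def derivative(R, z, h=1e-5):
    """Central-difference estimate of R'(z); R itself is never evaluated at z."""
    return (R(z + h) - R(z - h)) / (2 * h)


def f(z):
    # Illustrative cubic (d = 3): double root at 1, simple root at -2.
    return (z - 1) ** 2 * (z + 2)


def df(z):
    return 2 * (z - 1) * (z + 2) + (z - 1) ** 2


def newton(z):
    return z - f(z) / df(z)


def at_infinity(R):
    """Conjugate R by w = 1/z, so that the point at infinity becomes w = 0."""
    return lambda w: 1 / R(1 / w)


d, m = 3, 2
print(derivative(newton, 1.0), (m - 1) / m)                # both about 0.5
print(derivative(newton, -2.0))                            # about 0: simple root
print(derivative(at_infinity(newton), 0.0), d / (d - 1))   # both about 1.5
```

The central difference avoids evaluating Newton’s map exactly at the double root, where $f/f'$ is the indeterminate form $0/0$.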

Example 2.

We now give some examples illustrating Theorem 2:

- 1.

- Since Newton’s method is given by considering,, thenand. Henceand. Thus the condition (7) is satisfied for every integer.

- 2.

- Halley’s method is obtained with,,,and. Then,and. Thus, the condition (7) is satisfied for every integer. In this case, the repelling fixed point has a multiplier of the form, provided.

- 3.

- 4.

- The root finding algorithmhas order of convergence 3 and does not satisfy. In this case.

The following table summarizes the examples (1)

5. Order of Convergence

This section describes the order of convergence of defined in (1). Throughout this section, denotes the nth derivative of Newton’s method.

Lemma 1.

Consideras a root finding algorithm applied to a degree d polynomial f. Then:

- 1.

- If, thenis at least of order 2.

- 2.

- Ifand, thenis at least of order 3.

- 3.

- If,,andfor every simple root α of f, thenis at least of order 4.

- 4.

- If condition inis satisfied and additionallyandfor every simple root α of f, thenhas order 5.

Proof.

Recall that . Let be a simple root of a polynomial f. Since Newton’s method is an order 2 root–finding algorithm, it follows that

for some . Hence,

Consequently,

Now, to prove part , write Formula (1) as

Thus, if we have that , and so is a root–finding algorithm of order at least 2, which proves part .

In order to prove part consider that . Thus

where . Hence, if , then and we conclude that , , , and so is a root–finding algorithm of order at least 3, which proves part .

Now we will prove part by similar computations as above. For this, consider the Taylor expansion of Newton’s method around the simple root of the polynomial f,

where . Hence, and combining those computations with the fact that , , and so

Since , it follows that . Thus, if , we conclude that , , , , and so is a root–finding algorithm of order at least 4. This concludes part .

Finally to prove part , consider the Taylor expansion of Newton’s method around the simple root of the polynomial f,

where . Hence, , and combining these computations with the hypotheses , and , we obtain

Since , we have that . Additionally, assuming that , it follows that , , , , , and so is a root–finding algorithm of order at least 5. This concludes part , and the proof of the lemma.

□
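The orders in Lemma 1 can be observed experimentally through the computational order of convergence $\rho \approx \ln(e_{k+1}/e_k) / \ln(e_k/e_{k-1})$, where $e_k$ is the error of the $k$-th iterate. Since the parameter values of (1) are not reproduced here, the sketch below, an illustration under stated assumptions, uses Newton’s method (order 2) and Halley’s method (order 3) on the real equation $x^3 - 2 = 0$, with Python’s `decimal` module providing enough precision that the errors do not round to zero.

```python
from decimal import Decimal, getcontext

getcontext().prec = 300          # enough digits that the errors never round to zero


def f(x):
    return x**3 - 2               # illustrative equation with root 2**(1/3)


def df(x):
    return 3 * x**2


def d2f(x):
    return 6 * x


def newton(x):
    return x - f(x) / df(x)


def halley(x):
    fx, d1, d2 = f(x), df(x), d2f(x)
    return x - 2 * fx * d1 / (2 * d1**2 - fx * d2)


def coc(step, x0, root, iterations=4):
    """Computational order of convergence from the last three errors."""
    xs = [x0]
    for _ in range(iterations):
        xs.append(step(xs[-1]))
    e0, e1, e2 = (abs(x - root) for x in xs[-3:])
    return (e2 / e1).ln() / (e1 / e0).ln()


root = Decimal(2) ** (Decimal(1) / Decimal(3))
x0 = Decimal("1.5")
print(float(coc(newton, x0, root)))   # close to 2
print(float(coc(halley, x0, root)))   # close to 3
```

In double precision the errors of a third-order method reach the rounding level after two or three steps, which is why high-precision arithmetic is used here.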

Remark 4.

Note that in the lemma above, while the conditions in parts 1 and 2 are easy to check, those in parts 3 and 4, which must hold at every simple root α of f, are harder to verify.

The following corollary characterizes Newton’s method for multiple roots (see item 4 in Example 1).

Corollary 1.

Let f be a complex polynomial and denote by its roots. Suppose that is a root–finding algorithm with order of convergence two, and that this order does not depend on the multiplicity of the roots. Then is Newton’s method for multiple roots.

Proof.

By part (a) of Theorem 2 we have that

Since the order of convergence of the root–finding algorithm is 2, for every root we have and for all . This implies that

for every , if and only if

Therefore

This concludes the proof. □
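The difference that Corollary 1 captures can be seen numerically: at a double root, Newton’s method converges only linearly (with error ratio tending to $1/2$), while $z - m f(z)/f'(z)$ with $m = 2$ recovers quadratic convergence. The polynomial $(z-1)^2(z+1)$, the starting point and the helper names are assumptions of this sketch.

```python
def f(z):
    return (z - 1) ** 2 * (z + 1)      # double root at 1 (illustrative choice)


def df(z):
    return (z - 1) * (3 * z + 1)       # f'(z), factored by hand


def run(step, z, n):
    """Apply `step` up to n times, stopping early if an iterate is an exact root."""
    errors = [abs(z - 1)]
    for _ in range(n):
        if f(z) == 0:
            break
        z = step(z)
        errors.append(abs(z - 1))
    return errors


def newton(z):
    return z - f(z) / df(z)


def newton_multiple(z, m=2):
    return z - m * f(z) / df(z)


plain = run(newton, 2.0, 30)
modified = run(newton_multiple, 2.0, 8)
print(plain[-1] / plain[-2])   # ratio close to 1/2: linear convergence
print(modified[-1])            # tiny: quadratic convergence restored
```

The early exit avoids the $0/0$ that would occur if an iterate landed exactly on the double root.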

Example 3.

The following example shows three ways of approximating the roots of

which areandNote that for simple roots and order higher than two, we have thatSuppose the first two parts of Lemma 1, that is,

Thus, (1) takes the form

Suppose, in addition thatand. Therefore

After some calculations, we obtain

Now suppose thatand. Then for

and so,

which implies

Finally consider

Note that this set of parameters gives convergence of order 3. Then

The following table shows the iterations of the third-order method, where

and.

6. Conjugacy Classes of the Schemes

We next prove an extension of the Scaling Theorem to purely iterative algorithms for Newton’s maps. Let $R_1$ and $R_2$ be two rational maps. Then $R_1$ and $R_2$ are conjugate if there exists a Möbius transformation $\varphi$ such that $\varphi(R_1(z)) = R_2(\varphi(z))$ for all z.

Conjugacy plays a central role in understanding the behavior of classes of maps under iteration, in the following sense. Suppose that we wish to describe both the quantitative and the qualitative behavior of the map , where is an iteration function resulting from an iterative method .

Let be an arbitrary analytic function. Since conjugacy preserves fixed points, cycles and their character (superattracting, attracting, repelling or indifferent), as well as their basins of attraction, it is worthwhile to construct one or more parameterized families of polynomials , as simple as possible, so that for a suitable choice of the complex parameter there exists a conjugacy between and .

In order to describe the conjugacy classes of , we recall the following useful result (see [39], Section 5, Theorem 1).

Theorem 3

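(The Scaling Theorem for Newton’s method). Let $f$ be an analytic function on the Riemann sphere, let $N_f$ denote Newton’s map applied to $f$, and let $A(z) = \alpha z + \beta$, with $\alpha \neq 0$, be an affine map. If $g(z) = \lambda (f \circ A)(z)$ with $\lambda \neq 0$, then $(A \circ N_g \circ A^{-1})(z) = N_f(z)$, that is, $N_g$ is analytically conjugate to $N_f$ by A.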

Theorem 4

Let f andbe polynomials, and let A be the affine mapwithConsider the purely iterative algorithms

If, then, that is,is analytically conjugated toby A.

Proof.

Assume that there exists a constant such that . According to Theorem 3, we have . Hence

This yields

which completes the proof. □
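Theorem 3 (and, for Halley’s method, its analogue) can be sanity-checked by comparing $A(N_g(z))$ with $N_f(A(z))$ at random points. The polynomial $f$, the affine map $A$ and the constant $\lambda$ below are arbitrary illustrative choices, and the check uses Newton’s and Halley’s methods rather than the general family (1), whose formula is not reproduced here.

```python
import random


def newton(f, df, z):
    return z - f(z) / df(z)


def halley(f, df, d2f, z):
    fz, d1, d2 = f(z), df(z), d2f(z)
    return z - 2 * fz * d1 / (2 * d1**2 - fz * d2)


# Illustrative data (assumptions, not from the paper).
alpha, beta, lam = 2 - 1j, 0.5 + 3j, -4 + 2j


def f(z):
    return z**3 - 2 * z + 2


def df(z):
    return 3 * z**2 - 2


def d2f(z):
    return 6 * z


def A(z):
    return alpha * z + beta            # affine map with alpha != 0


def g(z):
    return lam * f(A(z))               # g = lam * (f o A)


def dg(z):
    return lam * alpha * df(A(z))      # chain rule


def d2g(z):
    return lam * alpha**2 * d2f(A(z))


def rel_err(u, v):
    return abs(u - v) / (1 + abs(v))


random.seed(0)
points = [complex(random.uniform(-3, 3), random.uniform(-3, 3)) for _ in range(200)]

# Scaling theorem: A(N_g(z)) = N_f(A(z)); the analogous identity holds for Halley.
err_newton = max(rel_err(A(newton(g, dg, z)), newton(f, df, A(z))) for z in points)
err_halley = max(rel_err(A(halley(g, dg, d2g, z)), halley(f, df, d2f, A(z))) for z in points)
print(err_newton, err_halley)          # both at the level of rounding errors
```

A relative error is used so that points that happen to lie near a pole of either map do not dominate the comparison.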

7. Methods Generally Convergent for Cubic Polynomials

A purely iterative rational root–finding algorithm is generally convergent if its iterates converge to a root of the polynomial f for almost all complex polynomials f of degree $d$ and almost all initial conditions. C. McMullen proved that if $d \geq 4$, then there is no generally convergent root–finding algorithm. Moreover, he proved the following result:

Theorem 5

([4]). Every generally convergent algorithm for cubic polynomials is obtained by specifying a rational map R in such a way that

- 1.

- R is convergent for $z^3 - 1$.

- 2.

- $\mathrm{Aut}(R)$ contains the Möbius maps that permute the cube roots of unity.

Moreover the generated algorithm has the form

where is a Möbius transformation that sends the roots of the cubic polynomial to the cube roots of unity 1, and .

Thus the following definition is natural: a rational map R generates a generally convergent algorithm if it is convergent for the cubic polynomial whose roots are the cube roots of unity and its automorphism group contains the Möbius transformations that permute the cube roots of unity.

As an example, consider Halley’s method applied to the family of cubic polynomials . Hawkins’s theorem (Theorem 6 below) implies that if a rational map R generates a generally convergent algorithm, then zero is a fixed point of R. Hence, the condition implies that or . Also, since the group of automorphisms must contain the Möbius transformations that permute the cube roots of unity, cannot be 0. Thus, and we obtain Halley’s method applied to .
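For concreteness, Halley’s method applied to $z^3 - 1$ simplifies to $R(z) = z(z^3+2)/(2z^3+1)$. The sketch below checks numerically that this $R$ fixes 0, commutes with the Möbius maps $z \mapsto \omega z$ and $z \mapsto 1/z$ (which generate the permutations of the cube roots of unity), and that orbits from a grid of starting points reach a cube root of unity. The grid, the iteration limit and the tolerance are assumptions; a finite grid only illustrates, and does not prove, general convergence.

```python
import cmath

OMEGA = cmath.exp(2j * cmath.pi / 3)        # primitive cube root of unity


def R(z):
    """Halley's method for p(z) = z**3 - 1, simplified to z(z^3 + 2)/(2z^3 + 1)."""
    return z * (z**3 + 2) / (2 * z**3 + 1)


def halley(z):
    """Unsimplified Halley step for p(z) = z**3 - 1, used to cross-check R."""
    p, dp, d2p = z**3 - 1, 3 * z**2, 6 * z
    return z - 2 * p * dp / (2 * dp**2 - p * d2p)


for z in [0.3 + 0.7j, -1.2 + 0.4j, 2.0 - 1.5j]:
    assert abs(R(z) - halley(z)) < 1e-12
    assert abs(R(OMEGA * z) - OMEGA * R(z)) < 1e-12   # commutes with z -> omega z
    assert abs(R(1 / z) - 1 / R(z)) < 1e-12           # commutes with z -> 1/z
print(R(0))                                           # 0 is a fixed point

# Crude illustration of general convergence on a grid of starting points.
roots = [OMEGA**k for k in range(3)]
n, converged = 40, 0
for i in range(n):
    for j in range(n):
        z = complex(-2 + 4 * i / (n - 1), -2 + 4 * j / (n - 1))
        for _ in range(100):
            z = R(z)
            if min(abs(z - r) for r in roots) < 1e-8:
                converged += 1
                break
print(converged / n**2)                               # fraction that reached a root
```

The grid has an even number of points per axis, so it avoids the repelling fixed point 0 and the real axis exactly.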

McMullen’s theorem tells us how to generate a generally convergent iterative algorithm by finding the map R. The following question is natural: when does contain rational maps that generate generally convergent algorithms?

The following theorem is due to J. Hawkins ([5], Theorem 1) and explicitly describes, according to their degree, the rational maps that generate generally convergent algorithms for cubic polynomials.

Theorem 6.

If R generates a generally convergent root–finding algorithm for cubic polynomials, then there exist constants, such that R has the following form:

Using this theorem, we prove that, over the space of cubic polynomials, the maps of the family (1) that generate a generally convergent algorithm are restricted to Halley’s method applied to the cubic polynomial.

Theorem 7.

Let f be a cubic polynomial. Suppose that

where,,,andare real numbers, generates a generally convergent root–finding algorithm for cubic polynomials. Thenis the Halley method applied to.

Proof.

Since satisfies the Scaling theorem, in the case of cubic polynomials we can restrict to . From Theorem 6 we see that 0 is always a fixed point of the generating map. This imposes restrictions on the values of , , , and .

If , then, since and , we have that which implies two cases: or . Also the case must be treated separately. Remark 3 shows that cannot be 0. Consequently the cases are:

Case (1). If , and generates a generally convergent root–finding algorithm for cubic polynomials then and , otherwise 0 would be a pole of . In fact, in this case , and in a neighborhood of zero we have , with . Thus

where and are non–zero constants. This implies that 0 is a pole of order 5 for . Moreover,

Hence, if and , then we have

and then 0 is a pole of order 2. Therefore, if and , we can remove the poles of and, consequently, has a fixed point at 0. Thus the map must have the form

According to Theorem 6, Formula (12) must also satisfy the equation

in order to generate a generally convergent root–finding algorithm for cubic polynomials.

Since the roots of unity are superattracting fixed points, we have

which imposes the relation . Together with Formula (8), it follows that . As a consequence, we have

which is exactly Halley’s method applied to .

Case (2). If , it follows that 0 is a fixed point of , but it cannot generate a generally convergent method. Indeed, note that

Hence, for 1 to be a superattracting fixed point we must have . Furthermore, this implies that 0 is a superattracting fixed point, so there is an open subset of the plane contained in the basin of attraction of 0. Since 0 is not a root of the cubic polynomial, the orbits of these points do not converge to a root. Therefore the rational map cannot generate a generally convergent root–finding algorithm for cubic polynomials, and the proof is complete. □

8. Dynamical Study of the Fourth-Order Family

In this section we study the complex dynamics of the family (1) when , and . In this case, according to Lemma 1, is of order 4. The fixed-point operator associated with this family of methods on a nonlinear function is

where . Note that in this case depends only on and .

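By applying this operator to a generic polynomial $p(z) = (z - a)(z - b)$ and using the Möbius map $h(z) = \frac{z - a}{z - b}$, whose properties are

$$h(\infty) = 1, \qquad h(a) = 0, \qquad h(b) = \infty,$$

the rational operator associated with the family of iterative schemes is finally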

It is easy to see that this family of methods has order of convergence at least 4. We have seen that, for the special case , the family has fifth order of convergence and takes the form

8.1. Study of the Fixed Points and Their Stability

It is clear that $z = 0$ and $z = \infty$ are fixed points of , related to the roots a and b, respectively. We now focus on the extraneous fixed points (those points that are fixed points of but are not solutions of the equation ). First, we notice that $z = 1$ is an extraneous fixed point, associated with the original convergence to infinity. Moreover, there are two other extraneous (strange) fixed points, which correspond to the roots of the polynomial

whose analytical expressions, depending on and , are:

The relations between the extraneous fixed points are described in the following result.

Lemma 2.

The number of simple extraneous fixed points ofis three, except in the following cases:

- (i)

- If, thenthat is not a fixed point, so there is only one extraneous fixed point.

- (ii)

- If, thenthat is not a fixed point, so there is only one extraneous fixed point.

- (iii)

- If, thenthat is a fixed point related to the root a, so there is only one extraneous fixed point.

- (iv)

- If, thenthat is an extraneous fixed point, so there is only one extraneous fixed point.

To study the stability of these extraneous fixed points, we compute the first derivative of

Taking into account the form of the derivative, it is immediate that the origin and ∞ are superattracting fixed points for every value of and .
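Because the formula of the fourth-order operator is not reproduced above, the following sketch illustrates only the conjugation step, using Newton’s and Halley’s methods as stand-ins. With the Möbius map $h(z) = (z-a)/(z-b)$, which sends a to 0, b to ∞ and ∞ to 1, the conjugated operators of these two methods on $p(z) = (z-a)(z-b)$ are $z^2$ and $z^3$, so 0 and ∞ are superattracting fixed points for them as well. The roots a and b below are arbitrary assumptions.

```python
def h(z, a, b):
    """Moebius map h(z) = (z - a)/(z - b): a -> 0, b -> infinity, infinity -> 1."""
    return (z - a) / (z - b)


def h_inv(w, a, b):
    """Inverse of h, obtained by solving w = (z - a)/(z - b) for z."""
    return (a - b * w) / (1 - w)


def newton(p, dp, d2p, z):
    return z - p(z) / dp(z)


def halley(p, dp, d2p, z):
    pz, d1, d2 = p(z), dp(z), d2p(z)
    return z - 2 * pz * d1 / (2 * d1**2 - pz * d2)


def conjugated_operator(method, a, b):
    """Return O = h o T_p o h^{-1} for p(z) = (z - a)(z - b)."""
    def p(z):
        return (z - a) * (z - b)

    def dp(z):
        return 2 * z - a - b

    def d2p(z):
        return 2

    def O(w):
        return h(method(p, dp, d2p, h_inv(w, a, b)), a, b)

    return O


a, b = 1.5 - 2j, -0.7 + 0.4j            # arbitrary distinct roots (illustrative)
O_newton = conjugated_operator(newton, a, b)
O_halley = conjugated_operator(halley, a, b)
for w in [0.3 + 0.2j, -1.1 + 0.5j, 2.0 - 0.7j]:
    # Newton conjugates to w**2 and Halley to w**3, independently of a and b.
    print(abs(O_newton(w) - w**2), abs(O_halley(w) - w**3))
```

The independence from a and b is what allows the dynamics on all quadratic polynomials to be studied through a single rational operator.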

The stability of the other fixed points is more complicated and is studied separately. We first focus on the extraneous fixed point $z = 1$, which is related to the original convergence to ∞; its stability is described in the following result.

Lemma 3.

The behavior ofis the following:

- (i)

- If, thenis a superattracting fixed point.

- (ii)

- Ifandor. Then,is attracting.

- (iii)

- Ifandor. Then,is attracting.

- (iv)

- Ifand, thenis an indifferent fixed point.

- (v)

- Ifand, thenis an indifferent fixed point.

In the remaining cases, this fixed point is repelling.

Due to the complexity of the stability functions of the other extraneous fixed points

and

characterizing their stability regions analytically is not feasible. We therefore use the graphical tools of Mathematica to obtain the stability region of each of them as a function of the two parameters.

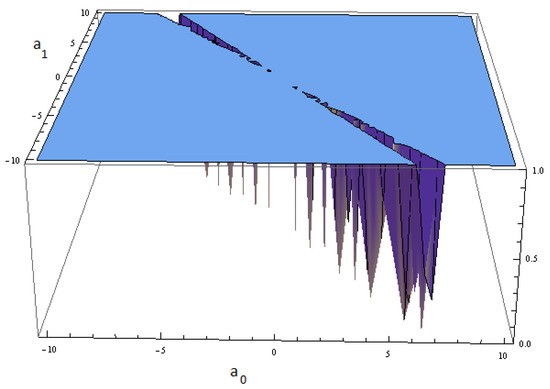

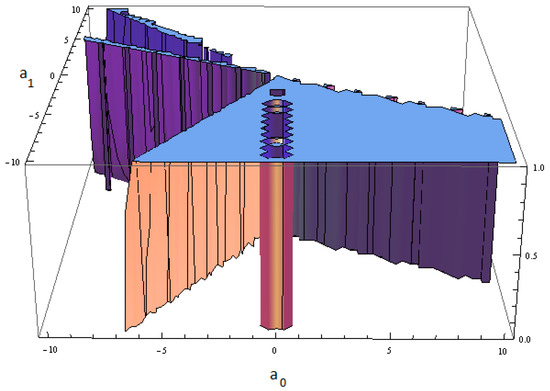

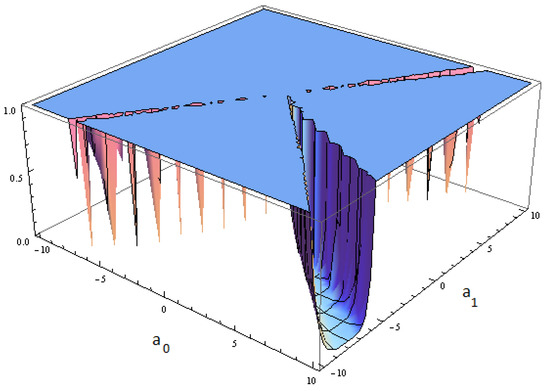

Figure 1 shows the stability region of , and Figures 2 and 3 show the stability regions of and . These regions are drawn in 3D because the derivative depends on two parameters, so three axes are needed to display it. Taking these regions into account, we can summarize the behavior of the extraneous fixed points. As in [40,41,42], these figures are important because they shed light on the stability of the extraneous fixed points: if there is no region where an extraneous fixed point is attracting, that point cannot cause problematic behavior.

Figure 1.

Stability region of .

Figure 2.

Stability region of .

Figure 3.

Stability region of .

In conclusion, the number and the stability of the fixed points depend on the parameters and .

8.2. Study of the Critical Points and Parameter Spaces

In this section, we compute the critical points and show the parameter spaces associated with the free critical points. It is well known that the immediate basin of attraction of each attracting cycle contains at least one critical point. The critical points of the family are the solutions of , where

By solving this equation, it is clear that $z = 0$ and $z = \infty$ are critical points; they are related to the roots of the polynomial and each has its own Fatou component. Moreover, there are critical points not related to the roots, which are called free critical points. Their expressions are:

and

The relations between the free critical points are described in the following result.

Lemma 4.

- (a)

- Ifor(i) .

- (b)

- Ifor(i) .

- (c)

- For other values ofand(i) The family has 3 free critical points.

Moreover, it is clear that for every value ofand,

It is easy to see that is a pre-periodic point, as it is a pre-image of the fixed point related to the convergence to infinity, , and that the other free critical points are conjugate, . Hence there are only two independent free critical points, and only one of them is not pre-periodic. Without loss of generality, in this paper we consider the free critical point . In order to find the best members of the family in terms of stability, we show the parameter space corresponding to this independent free critical point.

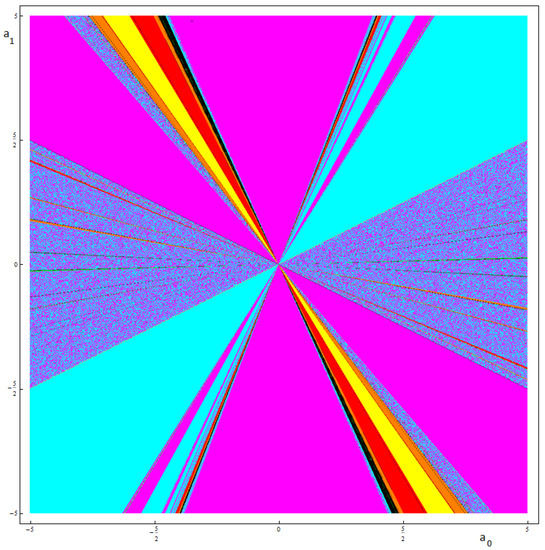

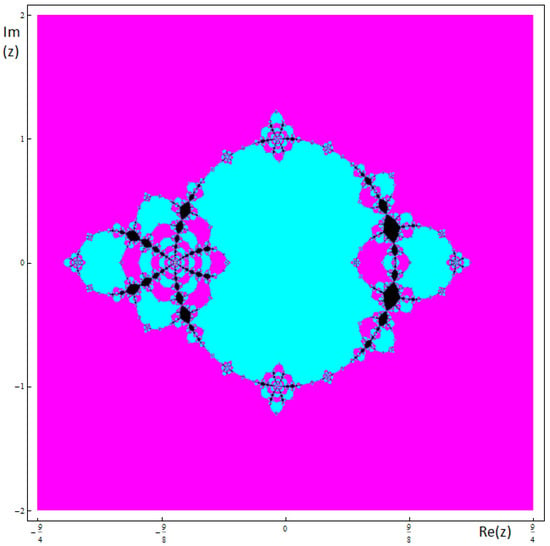

The study of the orbits of the critical points gives information about the dynamical behavior of an iterative method. More precisely, to determine whether there exists any attracting extraneous fixed point or periodic orbit, the following question must be answered: for which values of the parameters are the orbits of the free critical points attracting periodic orbits? To answer this question we draw the parameter space; the main difficulty is that there are two free parameters, and . To overcome it, we use a variant of the algorithm that appears in [43] and similar works, in which the horizontal axis represents the real values of and the vertical axis the values of . When the critical point is used as the initial estimate, the color of the point corresponding to each parameter value tells us where the orbit has converged: to a fixed point, to an attracting periodic orbit, or even to infinity.

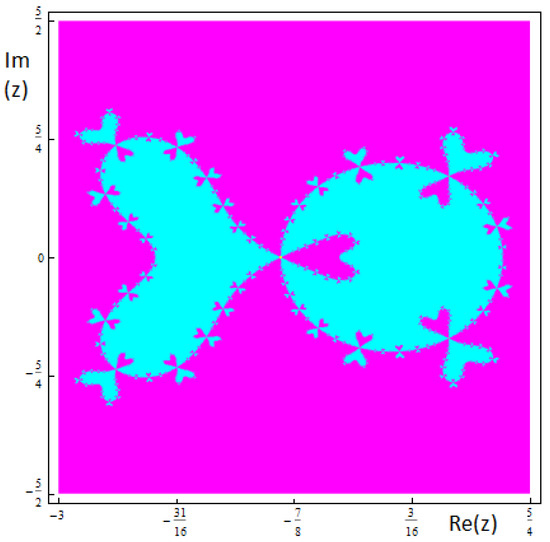

In Figure 4, the parameter space associated with is shown. The algorithm used to draw this parameter space is similar to the one used in [44]: taking the free critical point as the initial estimate, a point is painted cyan if the iteration converges to 0 (which is related to one root), magenta if it converges to ∞ (which is related to the other root), and yellow if it converges to 1 (which is related to ∞). Red indicates convergence to an extraneous fixed point, and other colors, including black, indicate convergence to cycles.
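The procedure above can be sketched as a loop over a parameter grid that follows the orbit of a free critical point and colors each parameter by where that orbit ends. The rational operator of this section is not reproduced here, so the sketch uses, as a stand-in, the relaxed Newton step $x - \lambda\, p(x)/p'(x)$ for $p(x) = x^2 - 1$, which has one complex parameter (plotted as Re λ against Im λ) and free critical points $\pm\sqrt{\lambda/(2-\lambda)}$. Because this step is an odd function, the two free critical points have symmetric orbits, so following one of them suffices. The colors mimic those described above; the grid, tolerances and iteration limit are assumptions.

```python
import cmath


def relaxed_newton(x, lam):
    """Relaxed Newton step for p(x) = x**2 - 1 (roots 1 and -1)."""
    return x - lam * (x * x - 1) / (2 * x)


def free_critical_point(lam):
    """Nonzero solution of T'(x) = 0 for the step above: x**2 = lam / (2 - lam)."""
    return cmath.sqrt(lam / (2 - lam))


def colour(lam, max_iter=200, tol=1e-6, big=1e8):
    """Colour of one parameter value, following the orbit of the free critical point."""
    try:
        x = free_critical_point(lam)
        for _ in range(max_iter):
            if abs(x - 1) < tol:
                return "cyan"       # orbit converges to the root 1
            if abs(x + 1) < tol:
                return "magenta"    # orbit converges to the root -1
            if abs(x) > big:
                return "yellow"     # orbit escapes to infinity
            x = relaxed_newton(x, lam)
    except ZeroDivisionError:
        pass                        # lam = 0 or lam = 2: degenerate members
    return "black"                  # no convergence detected (cycles, chaos, ...)


# Coarse text rendering: Re(lam) on the horizontal axis, Im(lam) on the vertical one.
symbols = {"cyan": "+", "magenta": "-", "yellow": "o", "black": "#"}
for k in range(13):
    im = 1.2 - 0.2 * k
    print("".join(symbols[colour(complex(0.1 * j, im))] for j in range(1, 41)))
```

In a real plot each character would be a pixel; refining the grid and raising the iteration limit trades run time for resolution.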

Figure 4.

Parameter space associated to the free critical point .

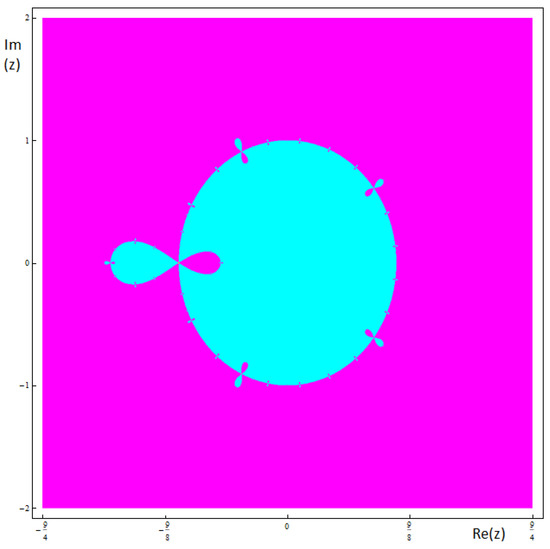

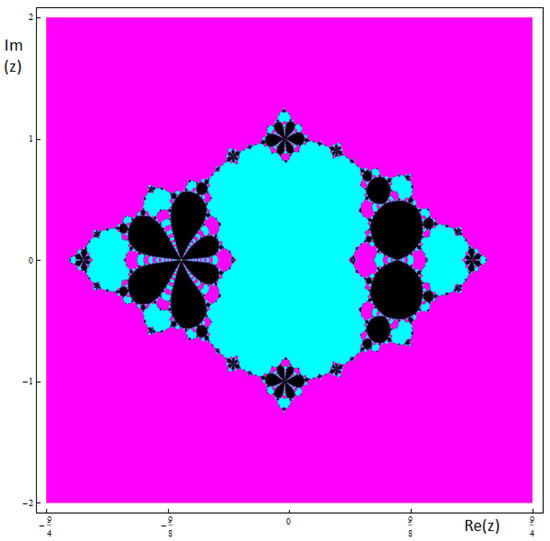

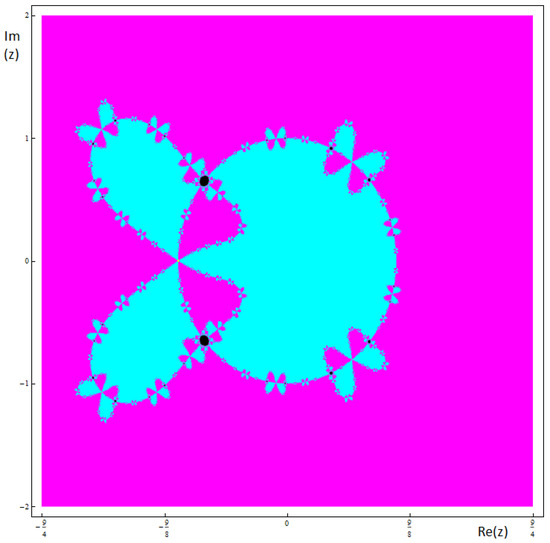

We now illustrate these anomalies using dynamical planes, in which convergence to 0 (after a maximum of 2000 iterations and with a tolerance of ) appears in magenta, convergence to ∞ (after a maximum of 2000 iterations and with a tolerance of ) appears in cyan, and the zones with no convergence to the roots appear in black. First, Figure 5 and Figure 6 show the dynamical planes associated with values of for which there are no convergence problems. These pairs of values are therefore a good choice, since all points converge to the roots of the original equation.
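A dynamical plane is drawn in the same way, except that the parameters are fixed and the starting point varies. The sketch below uses the standard Chebyshev–Halley step on $p(x) = x^2 - 1$ as a stand-in for the fourth-order family (whose operator is not reproduced here), conjugated by $h(x) = (x-1)/(x+1)$ so that the roots go to 0 and ∞. It uses the same coloring and 2000-iteration limit and, as an assumption, a tolerance of $10^{-6}$. With $\theta = 1/2$ (Halley’s method) the conjugated operator is $w^3$, so the unit circle separates the two basins.

```python
def chebyshev_halley(x, theta):
    """One Chebyshev-Halley step for p(x) = x**2 - 1, whose roots are a = 1, b = -1."""
    p, dp, d2p = x * x - 1, 2 * x, 2
    L = p * d2p / dp**2
    return x - (1 + L / (2 * (1 - theta * L))) * p / dp


def operator(w, theta):
    """O = h o T o h^{-1} with h(x) = (x - 1)/(x + 1): root 1 -> 0, root -1 -> infinity."""
    x = (1 + w) / (1 - w)                  # h^{-1}(w)
    y = chebyshev_halley(x, theta)
    return (y - 1) / (y + 1)


def colour(w, theta, max_iter=2000, tol=1e-6):
    """Colour of one starting point w of the dynamical plane."""
    try:
        for _ in range(max_iter):
            if abs(w) < tol:
                return "magenta"           # converges to 0, i.e. to the root a
            if abs(w) > 1 / tol:
                return "cyan"              # converges to infinity, i.e. to the root b
            w = operator(w, theta)
    except (ZeroDivisionError, OverflowError):
        pass
    return "black"                         # no convergence to either root


theta = 0.5                                # Halley's method, for which O(w) = w**3
symbols = {"magenta": ".", "cyan": "o", "black": "#"}
for k in range(11):
    im = 2 - 0.4 * k
    print("".join(symbols[colour(complex(-2 + 0.2 * j, im), theta)] for j in range(21)))
```

Changing `theta` changes the member of the stand-in family; members with attracting extraneous fixed points or cycles show up as black regions.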

Figure 5.

Basins of attraction associated to the member of the family and .

Figure 6.

Basins of attraction associated to the member of the family and . This member has fifth order of convergence.

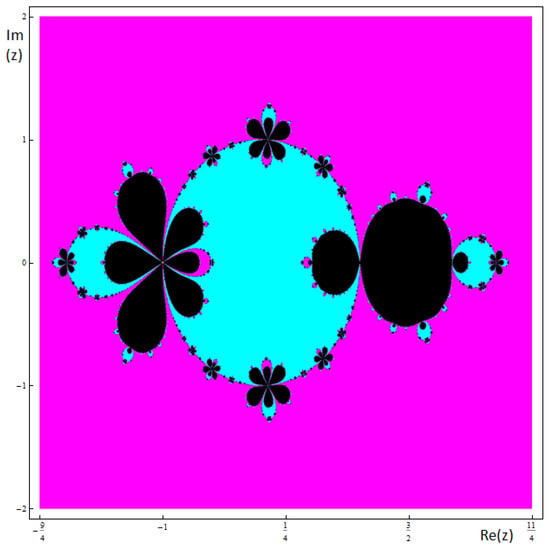

Focusing on the region shown in Figure 4, it is evident that there exist members of the family with complicated behavior. Figure 7 shows the dynamical plane of a member of the family with regions of convergence to one of the extraneous fixed points. In this case, there are regions of points whose iterates do not converge to any of the roots of the original equation, so these parameter values are not a good choice.

Figure 7.

Basins of attraction associated to the member of the family and .

On the other hand, Figure 8 shows the dynamical plane of a member of the family with regions of convergence to $z = 1$, which is related to ∞. Again, there are regions of points whose iterates do not converge to any of the roots of the original equation, so these parameter values are not a good choice.

Figure 8.

Basins of attraction associated to the member of the family and .

Finally, Figures 9 and 10 show dynamical planes of members of the family whose orbits converge to different attracting cycles.

Figure 9.

Basins of attraction associated to the member of the family and . The black zones are related to the convergence of a 2-cycle.

Figure 10.

Basins of attraction associated to the member of the family and . The black zones in this case correspond to zones of convergence to a 3-cycle.

If we choose as a particular value of and we can observe the existence of a periodic orbit of period 3

moreover, this orbit is attracting, since its multiplier satisfies

Sharkovsky’s theorem [31] states that, for a continuous map of a real interval, the existence of an orbit of period 3 guarantees the existence of orbits of every period.
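Checking that a periodic orbit is attracting amounts to multiplying the derivative along the cycle. The operator and parameter values of the 3-cycle above are not reproduced here, so the sketch below applies the same procedure to the real quadratic map $x^2 + c$ with the illustrative value $c = -1.7549$, which lies in its well-known period-3 window; this is also the interval-map setting in which Sharkovsky’s theorem applies. The transient length and tolerance are assumptions.

```python
def R(x, c=-1.7549):
    """Real quadratic map x**2 + c (illustrative c inside the period-3 window)."""
    return x * x + c


def dR(x):
    return 2 * x


def find_cycle(R, x0, transient=1000, max_period=10, tol=1e-9):
    """Iterate past a transient, then return the first cycle the orbit closes up on."""
    x = x0
    for _ in range(transient):
        x = R(x)
    orbit = [x]
    for _ in range(max_period):
        orbit.append(R(orbit[-1]))
        if abs(orbit[-1] - x) < tol:
            return orbit[:-1]      # drop the repeated starting point
    return None                    # no cycle of period <= max_period detected


cycle = find_cycle(R, 0.0)         # start from the critical point x = 0
multiplier = 1.0
for x in cycle:
    multiplier *= dR(x)            # (R^n)'(x_0) = product of R'(x_j) along the cycle
print(len(cycle), multiplier)      # period 3 and |multiplier| < 1, so the cycle attracts
```

Starting from the critical point is deliberate: by the result recalled in Section 8.2, an attracting cycle attracts at least one critical point.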

9. Conclusions

This article discusses the purely iterative algorithms for Newton’s maps , given by Formula (1), which were proposed in [6]. This family represents a large class of root–finding algorithms, including the best-known ones as well as methods of high order of convergence. Depending on the parameters , , , and , the family may in general fail to define a root–finding algorithm. To avoid this difficulty, we characterized, in terms of those parameters, when it is indeed a root–finding algorithm. The scaling theorem has the advantage of reducing the dimension of the parameter space, which is useful, among other things, for plotting the parameter space in low dimension. We give a classification of the extraneous fixed points and indifferent fixed points of , in terms of the parameters , , , and . We then use those results and Hawkins’s theorem to conclude that, over the family , the only rational map that generates a generally convergent root–finding algorithm for cubic polynomials is Halley’s method applied to the cubic polynomial. This shows that rigidity is even stronger, and is not obtained only in terms of conjugation.

Author Contributions

All authors have contributed equally. All authors have read and agreed to the published version of the manuscript.

Funding

S.A. was partially supported by Séneca 20928/PI/18 and by MINECO PID2019-108336GB-I00. G.H. was partially supported by REDES 190071, 180151 and 180018 from ANID Chile. Á.A.M. was partially supported by Séneca 20928/PI/18 and by MINECO PGC2018-095896-B-C21.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hartman, P. Ordinary Differential Equations; John Wiley and Sons, Inc.: New York, NY, USA; London, UK; Sydney, Australia, 1964; p. xiv+612. [Google Scholar]

- Blanchard, P. The dynamics of Newton’s method, Complex dynamical systems. Proc. Symp. Appl. Math. Am. Math. Soc. 1994, 49, 139–154.

- Haeseler, F.V.; Peitgen, H.-O. Newton’s Method and Dynamical Systems; Peitgen, H.-O., Ed.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1989; pp. 3–58.

- McMullen, C. Families of rational maps and iterative root-finding algorithms. Ann. Math. 1987, 125, 467–493.

- Hawkins, J. McMullen’s root-finding algorithm for cubic polynomials. Proc. Am. Math. Soc. 2002, 130, 2583–2592.

- Honorato, G.; Plaza, S. Dynamical aspects of some convex acceleration methods as purely iterative algorithm for Newton’s maps. Appl. Math. Comput. 2015, 251, 507–520.

- Arney, D.C.; Robinson, B.T. Exhibiting chaos and fractals with a microcomputer. Comput. Math. Appl. 1990, 19, 1–11.

- Buff, X.; Henriksen, C. König’s root-finding algorithms. Nonlinearity 2003, 16, 989–1015.

- Crane, E. Mean value conjectures for rational maps. Complex Var. Elliptic Equ. 2006, 51, 41–550.

- Curry, J.H.; Garnett, L.; Sullivan, D. On the iteration of a rational function: Computer experiment with Newton’s method. Commun. Math. Phys. 1983, 91, 267–277.

- Drakopoulos, V. On the additional fixed points of Schröder iteration functions associated with a one–parameter family of cubic polynomials. Comput. Graph. 1998, 22, 629–634.

- Honorato, G.; Plaza, S.; Romero, N. Dynamics of a higher-order family of iterative methods. J. Complex. 2011, 27, 221–229.

- Kneisl, K. Julia sets for the super-Newton method, Cauchy’s method, and Halley’s method. Chaos 2001, 11, 359–370.

- Rückert, J.; Schleicher, D. On Newton’s method for entire functions. J. Lond. Math. Soc. 2007, 75, 659–676.

- Sarría, Í.; González, R.; González-Castaño, A.; Magreñán, Á.A.; Orcos, L. A pedagogical tool based on the development of a computer application to improve learning in advanced mathematics. Rev. Esp. Pedagog. 2019, 77, 457–485.

- Vrscay, E. Julia sets and Mandelbrot–like sets associated with higher order Schröder rational iteration functions: A computer assisted study. Math. Comput. 1986, 46, 151–169.

- Blanchard, P. Complex Analytic Dynamics on the Riemann Sphere. Bull. AMS 1984, 11, 85–141.

- Milnor, J. Dynamics in One Complex Variable: Introductory Lectures, 3rd ed.; Princeton U. Press: Princeton, NJ, USA, 2006.

- Smale, S. On the efficiency of algorithms of analysis. Bull. AMS 1985, 13, 87–121.

- Amorós, C.; Argyros, I.K.; González, D.; Magreñán, A.A.; Regmi, S.; Sarría, Í. New improvement of the domain of parameters for Newton’s method. Mathematics 2020, 8, 103.

- Vrscay, E.; Gilbert, W. Extraneous fixed points, basin boundary and chaotic dynamics for Schröder and König rational iteration functions. Numer. Math. 1988, 52, 1–16.

- Varona, J.L. Graphic and numerical comparison between iterative methods. Math. Intell. 2002, 24, 37–46.

- Whittaker, E.T. A formula for the solution of algebraic and transcendental equations. Proc. Edinburgh Math. Soc. 1918, 36, 103–106.

- Ezquerro, J.A.; Hernández, M.A. On a convex acceleration of Newton’s method. J. Optim. Theory Appl. 1999, 100, 311–326.

- Schröder, E. On infinitely many algorithms for solving equations. Math. Ann. 1870, 2, 317–365.

- Schröder, E. Ueber iterirte Functionen. Math. Ann. 1870, 3, 296–322. (In German)

- Pomentale, T. A class of iterative methods for holomorphic functions. Numer. Math. 1971, 18, 193–203.

- Gilbert, W.J. Newton’s method for multiple roots. Comput. Graph. 1994, 18, 227–229.

- Gutiérrez, J.M.; Hernández, M.A. An acceleration of Newton’s method: Super-Halley method. Appl. Math. Comput. 2001, 117, 223–239.

- Gutiérrez, J.M.; Hernández, M.A. A family of Chebyshev-Halley type methods in Banach spaces. Bull. Aust. Math. Soc. 1997, 55, 113–130.

- Cordero, A.; Torregrosa, J.R.; Vindel, P. Dynamics of a family of Chebyshev Halley type methods. Appl. Math. Comput. 2013, 219, 8568–8583.

- Amat, S.; Busquier, S.; Plaza, S. Review of some iterative root-finding methods from a dynamical point of view. Sci. Ser. A Math. Sci. 2004, 10, 3–35.

- Amat, S.; Busquier, S.; Gutiérrez, J.M. Geometric constructions of iterative functions to solve nonlinear equations. J. Comput. Appl. Math. 2003, 157, 197–205.

- Amorós, C.; Argyros, I.K.; Magreñán, Á.A.; Regmi, S.; González, R.; Sicilia, J.A. Extending the applicability of Stirling’s method. Mathematics 2020, 8, 35.

- Argyros, I.K.; Chen, D. Results on the Chebyshev method in Banach spaces. Proyecciones 1993, 12, 119–128.

- Drakopoulos, V.; Argyropoulos, N.; Böhm, A. Generalized computation of Schröder iteration functions to motivated families of Julia and Mandelbrot–like sets. SIAM J. Numer. Anal. 1999, 36, 417–435.

- Hernández, M.A.; Salanova, M.A. A family of Chebyshev-Halley type methods. Int. J. Comput. Math. 1993, 47, 59–63.

- Moysi, A.; Argyros, I.K.; Regmi, S.; González, D.; Magreñán, Á.A.; Sicilia, J.A. Convergence and dynamics of a higher-order method. Symmetry 2020, 12, 420.

- Plaza, S. Conjugacy classes of some numerical methods. Proyecciones 2001, 20, 1–17.

- Argyros, I.K.; Magreñán, Á.A. Iterative Methods and Their Dynamics with Applications: A Contemporary Study; CRC Press: Boca Raton, FL, USA; Taylor & Francis Group: Boca Raton, FL, USA, 2017.

- Magreñán, Á.A.; Argyros, I.K. A Contemporary Study of Iterative Methods: Convergence, Dynamics and Applications; Elsevier: Amsterdam, The Netherlands, 2017.

- Magreñán, Á.A. Different anomalies in a Jarratt family of iterative root-finding methods. Appl. Math. Comput. 2014, 233, 29–38.

- Magreñán, Á.A. A new tool to study real and complex dynamics: The convergence plane. Appl. Math. Comput. 2014, 248, 215–224.

- Amat, S.; Argyros, I.K.; Busquier, S.; Magreñán, Á.A. Local convergence and the dynamics of a two-point four parameter Jarratt-like method under weak conditions. Numer. Algorithms 2017, 74, 371–391.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).