Abstract

This paper extends a previous contribution dealing with the random autonomous-homogeneous linear differential equation with discrete delay $\tau > 0$, by adding a random forcing term $f(t)$ that varies with time: $x'(t) = a x(t) + b x(t-\tau) + f(t)$, $t \ge 0$, with initial condition $x(t) = g(t)$, $-\tau \le t \le 0$. The coefficients a and b are assumed to be random variables, while the forcing term $f(t)$ and the initial condition $g(t)$ are stochastic processes on their respective time domains. The equation is regarded in the Lebesgue space $L^p$ of random variables with finite p-th moment. The deterministic solution constructed with the method of steps and the method of variation of constants, which involves the delayed exponential function, is proved to be an $L^p$-solution, under certain assumptions on the random data. This proof requires the extension of the deterministic Leibniz’s integral rule for differentiation to the random scenario. Finally, we also prove that, when the delay $\tau$ tends to 0, the random delay equation tends in $L^p$ to a random equation with no delay. Numerical experiments illustrate how our methodology permits determining the main statistics of the solution process, thereby allowing for uncertainty quantification.

1. Introduction

In this paper, we are concerned with random delay differential equations, defined as classical delay differential equations whose inputs (coefficients, forcing term, initial condition, …) are considered as random variables or regular stochastic processes on an underlying complete probability space $(\Omega, \mathcal{F}, \mathbb{P})$, which may take a wide variety of probability distributions, such as Binomial, Poisson, Gamma, Gaussian, etc.

Equations of this kind should not be confused with stochastic differential equations of Itô type, which are forced by an irregular error term called the white noise process (the formal derivative of Brownian motion). In contrast to random differential equations, the solutions to stochastic differential equations exhibit nondifferentiable sample-paths. See [1] (pp. 96–98) for a detailed explanation of the difference between random and stochastic differential equations. See [1,2,3,4,5,6], for instance, for applications of random differential equations in engineering, physics, biology, etc. Thus, random differential equations require their own treatment and study: they model smooth random phenomena, with any type of input probability distributions.

From a theoretical viewpoint, random differential equations may be studied in two senses: the sample-path sense or the $L^p$-sense. The former case considers the trajectories of the stochastic processes involved, so that the realizations of the random system correspond to deterministic versions of the problem. The latter case works with the topology of the Lebesgue space $L^p$ of random variables with finite absolute p-th moment, where the norm is defined as $\|U\|_p = (\mathbb{E}[|U|^p])^{1/p}$ for $1 \le p < \infty$ ($\mathbb{E}$ denotes the expectation operator), and $\|U\|_\infty = \inf\{C \ge 0 : |U| \le C \text{ almost surely}\}$ (essential supremum), $U$ being any random variable. The Lebesgue space $(L^p, \|\cdot\|_p)$ has the structure of a Banach space. Continuity, differentiability, Riemann integrability, etc., can be considered in the aforementioned space $L^p$, which gives rise to the random $L^p$-calculus.
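As a rough illustration of the norms just described, the following sketch estimates $\|U\|_p$ from simulated draws of a random variable. The helper name `lp_norm` and the standard Gaussian test variable are assumptions made only for this example. For $p = \infty$, the sample maximum is merely a lower estimate of the essential supremum, and it is not meaningful for unbounded variables such as the Gaussian used here.

```python
import math
import random

def lp_norm(samples, p):
    """Sample estimate of ||U||_p = (E|U|^p)^(1/p).

    For p = math.inf the sample maximum of |U| is returned, which is only
    a lower estimate of the essential supremum.
    """
    if math.isinf(p):
        return max(abs(u) for u in samples)
    return (sum(abs(u) ** p for u in samples) / len(samples)) ** (1.0 / p)

# Hypothetical test variable: U ~ N(0, 1), for which ||U||_2 = 1.
rng = random.Random(0)
draws = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
for p in (1, 2, 4, math.inf):
    print(p, lp_norm(draws, p))
```

The estimates are nondecreasing in p, in line with the inclusion of $L^q$ in $L^p$ for $p \le q$ on a probability space.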

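In order to fix concepts, given a stochastic process $x(t) \equiv x(t,\omega)$ on $I \times \Omega$, where $I \subseteq \mathbb{R}$ is an interval (notice that as usual the $\omega$-sample notation might be hidden), we say that x is $L^p$-continuous at $t_0 \in I$ if $\lim_{h \to 0} \|x(t_0+h) - x(t_0)\|_p = 0$. We say that x is $L^p$-differentiable at $t_0 \in I$ if $\lim_{h \to 0} \left\| \frac{x(t_0+h) - x(t_0)}{h} - x'(t_0) \right\|_p = 0$, for certain random variable $x'(t_0)$ (called the derivative of x at $t_0$). Finally, if $I = [a,b]$, we say that x is $L^p$-Riemann integrable on $[a,b]$ if there exists a sequence of partitions $\{P_n\}_{n=1}^{\infty}$ with mesh tending to 0, $P_n = \{a = t_0^n < t_1^n < \cdots < t_{r_n}^n = b\}$, such that, for any choice of points $s_i^n \in [t_{i-1}^n, t_i^n]$, $i = 1, \ldots, r_n$, the limit $\lim_{n \to \infty} \sum_{i=1}^{r_n} x(s_i^n)(t_i^n - t_{i-1}^n)$ exists in $L^p$. In this case, these Riemann sums have the same limit, which is a random variable and is denoted by $\int_a^b x(t)\,\mathrm{d}t$.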
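A short worked example may help fix these definitions. It is an illustration added here under the assumption that $A$ is any random variable with $\|A\|_p < \infty$; the process $x(t) = A t^2$ is hypothetical and does not appear elsewhere in the paper.

```latex
% Hypothetical process: x(t) = A t^2 with \|A\|_p < \infty.
% Candidate derivative: x'(t_0) = 2 A t_0.
\begin{align*}
\left\| \frac{x(t_0+h) - x(t_0)}{h} - 2 A t_0 \right\|_p
  &= \left\| \frac{A\,(2 t_0 h + h^2)}{h} - 2 A t_0 \right\|_p \\
  &= \| A h \|_p = |h| \, \|A\|_p \xrightarrow[h \to 0]{} 0 .
\end{align*}
```

Hence $x$ is $L^p$-differentiable at every $t_0$, with $x'(t_0) = 2 A t_0$, and the only probabilistic requirement is that $A$ has a finite p-th absolute moment.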

This $L^p$-approach has been widely used in the context of random differential equations with no delay, especially the case $p = 2$, which corresponds to the Hilbert space $L^2$ and yields the so-called mean square calculus; see [5,7,8,9,10,11,12,13,14,15]. Only recently, a theoretical probabilistic analysis of random differential equations with discrete constant delay has been addressed in [16,17,18]. In [16], general random delay differential equations in $L^p$ were analyzed, with the goal of extending some of the existing results on random differential equations with no delay from the celebrated book [5]. In [17], we started our study on random delay differential equations with the basic autonomous-homogeneous linear equation, by proving the existence and uniqueness of $L^p$-solution under certain conditions. In [18], the authors studied the same autonomous-homogeneous random linear differential equation with discrete delay as [17], but considered the solution in the sample-path sense and computed its probability density function via the random variable transformation technique, for certain forms of the initial condition process. Other recent contributions for random delay differential equations, but focusing on numerical methods instead, are [19,20,21].

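There is still a lack of theoretical analysis for important random delay differential equations. Motivated by this issue, the aim of this contribution is to advance further in the theoretical analysis of relevant random differential equations with discrete delay. In particular, in this paper we extend the recent study performed in [17] for the basic linear equation by adding a stochastic forcing term:

$$
\begin{cases} x'(t) = a\,x(t) + b\,x(t-\tau) + f(t), & t \ge 0, \\ x(t) = g(t), & -\tau \le t \le 0. \end{cases} \tag{1}
$$

The delay $\tau > 0$ is constant. The coefficients a and b are random variables. The forcing term $f(t)$ and the initial condition $g(t)$ are stochastic processes on $[0,\infty)$ and $[-\tau,0]$ respectively, which depend on the outcome $\omega \in \Omega$ of a random experiment, which might sometimes be omitted in notation. The term $x(t)$ represents the differentiable solution stochastic process in a certain probabilistic sense. Formally, according to the deterministic theory [22], we may express the solution process as

$$
x(t) = e^{a(t+\tau)}\, e_\tau^{b_1,t}\, g(-\tau) + \int_{-\tau}^{0} e^{a(t-s)}\, e_\tau^{b_1,t-\tau-s}\, \big(g'(s) - a\,g(s)\big)\,\mathrm{d}s + \int_{0}^{t} e^{a(t-s)}\, e_\tau^{b_1,t-\tau-s}\, f(s)\,\mathrm{d}s, \tag{2}
$$

where $b_1 = e^{-a\tau} b$ and

$$
e_\tau^{c,t} = \begin{cases} 0, & -\infty < t < -\tau, \\ 1, & -\tau \le t < 0, \\ \displaystyle\sum_{k=0}^{n} c^k\, \frac{(t-(k-1)\tau)^k}{k!}, & (n-1)\tau \le t < n\tau, \end{cases}
$$

is the delayed exponential function [22] (Definition 1), $c, t \in \mathbb{R}$, and $n = \lfloor t/\tau \rfloor + 1$ (here $\lfloor \cdot \rfloor$ denotes the integer part defined by the so-called floor function). This formal solution is obtained with the method of steps and the method of variation of constants.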
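The delayed exponential function can be evaluated directly from its piecewise polynomial definition in [22]: it vanishes for $t < -\tau$, equals 1 on $[-\tau, 0)$, and equals $\sum_{k=0}^{n} c^k (t-(k-1)\tau)^k / k!$ on $[(n-1)\tau, n\tau)$ with $n = \lfloor t/\tau \rfloor + 1$. The following is a minimal sketch for one fixed realization of the coefficient $c$; the function name `delayed_exp` and the test values are assumptions made for this example. The last lines check numerically, at a single point, the defining property that the derivative at $t$ equals $c$ times the value at $t - \tau$.

```python
import math

def delayed_exp(c, tau, t):
    """Delayed exponential e_tau^{c,t} for a fixed value c and a delay tau > 0."""
    if t < -tau:
        return 0.0
    if t < 0.0:
        return 1.0
    n = math.floor(t / tau) + 1          # t lies in [(n-1)*tau, n*tau)
    total = 0.0
    for k in range(n + 1):
        # t - (k-1)*tau is nonnegative for k <= n; the clamp only guards round-off.
        base = c * max(t - (k - 1) * tau, 0.0)
        term = 1.0
        for j in range(1, k + 1):        # builds base**k / k! without large factorials
            term *= base / j
        total += term
    return total

# Central finite-difference check of x'(t) = c * x(t - tau) at one point.
c, tau, t, h = 2.0, 1.0, 1.5, 1e-6
lhs = (delayed_exp(c, tau, t + h) - delayed_exp(c, tau, t - h)) / (2 * h)
rhs = c * delayed_exp(c, tau, t - tau)
print(lhs, rhs)
```

In a random setting, the same function would be applied realization by realization to sampled values of the coefficient.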

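The primary objective of this paper is to set probabilistic conditions under which $x(t)$ is an $L^p$-solution to (1). We decompose the original problem (1) as

$$
\begin{cases} y'(t) = a\,y(t) + b\,y(t-\tau), & t \ge 0, \\ y(t) = g(t), & -\tau \le t \le 0, \end{cases} \tag{3}
$$

and

$$
\begin{cases} z'(t) = a\,z(t) + b\,z(t-\tau) + f(t), & t \ge 0, \\ z(t) = 0, & -\tau \le t \le 0. \end{cases} \tag{4}
$$

System (3) does not possess a stochastic forcing term, and it was studied in depth in the recent contribution [17]. Under certain assumptions, its $L^p$-solution is expressed as

$$
y(t) = e^{a(t+\tau)}\, e_\tau^{b_1,t}\, g(-\tau) + \int_{-\tau}^{0} e^{a(t-s)}\, e_\tau^{b_1,t-\tau-s}\, \big(g'(s) - a\,g(s)\big)\,\mathrm{d}s, \tag{5}
$$

with $b_1 = e^{-a\tau} b$ as in (2), as a generalization of the deterministic solution to (3) obtained via the method of steps [22] (Theorem 1). Problem (4) is new and requires an analysis in the $L^p$-sense, in order to solve the initial problem (1). Its formal solution is given by

$$
z(t) = \int_{0}^{t} e^{a(t-s)}\, e_\tau^{b_1,t-\tau-s}\, f(s)\,\mathrm{d}s; \tag{6}
$$

see [22] (Theorem 2). In order to differentiate (6) in the $L^p$-sense, one requires the extension of the deterministic Leibniz’s integral rule for differentiation to the random scenario. This extension is an important piece of this paper.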

In Section 2, we show preliminary results on $L^p$-calculus that are used throughout the exposition, which correspond to those preliminary results from [17] and the new random Leibniz’s rule for $L^p$-Riemann integration. Auxiliary but novel results to demonstrate the random Leibniz’s integral rule are Fubini’s theorem and a chain rule theorem. In Section 3, we prove in detail that $x(t)$ defined by (2) is the unique $L^p$-solution to (1), by analyzing problem (4). We also find closed-form expressions for some statistics (expectation and variance) of $x(t)$ related to its moments. Section 4 deals with the $L^p$-convergence of $x(t)$ as the delay $\tau$ tends to 0. We then show a numerical example that illustrates the theoretical findings. Finally, Section 5 draws the main conclusions.

In order to complete a fair overview of the existing literature, it must be pointed out that, apart from random delay differential equations (which is the context of this paper), other complementary approaches are stochastic delay differential equations and fuzzy delay differential equations. Stochastic delay differential equations are those in which uncertainty appears due to stochastic processes with irregular sample-paths: the Brownian motion process, Wiener process, Poisson process, etc. A new tool is required to tackle equations of this type, called Itô calculus [23]. Studies on stochastic delay differential equations can be read in [24,25,26,27,28], for example. On the other hand, in fuzzy delay differential equations, uncertainty is driven by fuzzy processes; see [29] for instance. In any of these approaches, the delay might even be considered random; see [30,31].

2. Results on $L^p$-Calculus

In this section, we state the preliminary results on $L^p$-calculus needed for the following sections. Proposition 1 is the chain rule theorem in $L^p$-calculus, which was first proved in [8] (Theorem 3.19) in the setting of mean square calculus ($p = 2$). Both Lemma 1 and Lemma 2 provide conditions under which the product of three stochastic processes is $L^p$-continuous or $L^p$-differentiable. Proposition 2 is a result concerning $L^p$-differentiation under the $L^p$-Riemann integral sign, when the interval of integration is fixed. These four results have already been used and stated in the recent contribution [17], and will be required throughout our forthcoming exposition.

For the sake of completeness, we demonstrate Proposition 2 with an alternative proof to [17], based on Fubini’s theorem for $L^p$-Riemann integration. In the random framework, Fubini’s theorem has not been tackled yet in the recent literature. It states that, if a stochastic process depending on two variables is $L^p$-continuous, then the two iterated $L^p$-Riemann integrals can be interchanged.

We present a new result, Proposition 3, in which we give conditions under which one can $L^p$-differentiate an $L^p$-Riemann integral whose interval of integration depends on t. This proposition constitutes the extension of the so-called Leibniz’s rule for integration to the random scenario. The proof relies on a new chain rule theorem.

Proposition 1

(Chain rule theorem ([17] Proposition 2.1)). Let be a stochastic process, where is any interval in . Let f be a deterministic function on an open set that contains . Fix . Let be any point such that:

- (i) X is -differentiable at t;

- (ii) X is path continuous on ;

- (iii) there exist and such that .

Then is -differentiable at t and .

Lemma 1

([17] Lemma 2.1). Let , and be three stochastic processes and fix . If and are -continuous for all , and is -continuous for certain , then the product process is -continuous.

On the other hand, if and are -continuous, and is -continuous, then the product process is -continuous.

Lemma 2

([17] Lemma 2.2). Let , and be three stochastic processes, and . If and are -differentiable for all , and is -differentiable for certain , then the product process is -differentiable and .

Additionally, if and are assumed to be -differentiable, and is -differentiable, then is -differentiable, with .

Lemma 3

(Fubini’s theorem for iterated -Riemann integrals). Let be a process on . If H is -continuous, then , where the integrals are regarded as -Riemann integrals.

Proof.

The proof is a variation of Fubini’s theorem for Itô stochastic integration with respect to the standard Brownian motion ([32] Theorem 2.10.1). The stochastic processes , and are -continuous, so the iterated integrals exist. Let be a sequence of partitions of with mesh tending to 0. Write , and let , , . Consider the processes (here denotes the characteristic function of a set) and . Notice that, by definition of -Riemann integral, in .

By definition of -Riemann integral,

in . On the other hand,

where the last inequality is due to a property of -integration ([5] p. 102). As and are -bounded on and , respectively (uniformly on ), the dominated convergence theorem allows concluding that in . □

Proposition 2

(-differentiation under the -Riemann integral sign). Let be a stochastic process on . Fix . Suppose that is -continuous on , for each , and that there exists the -partial derivative for all , which is -continuous on . Let (the integral is understood as an -Riemann integral). Then G is -differentiable on and .

Proof.

We present an alternative and simpler proof to ([17] Proposition 2.2), based on Fubini’s theorem (Lemma 3). Since is -continuous, by Barrow’s rule ([5] p. 104) we can write . The stochastic process is -continuous; therefore, in , as a consequence of the fundamental theorem for -calculus; see ([5] p. 103). □

Lemma 4

(Version of the chain rule theorem). Let be a stochastic process on . Let be a differentiable deterministic function. Suppose that has -partial derivatives, with being -continuous on , and being -continuous on , for each . Then in .

Proof.

For , by the triangular inequality,

By Barrow’s rule ([5] p. 104) and an inequality from ([5] p. 102),

The process is -uniform continuous on ; therefore,

On the other hand, let , for fixed. To conclude that , we need . We have that Y is - and that u is differentiable on , so the following existing version of the chain rule theorem applies: ([33] Theorem 2.1). □

Remark 1.

Although not needed in the subsequent development, Lemma 4 gives in fact a general multidimensional chain rule theorem for -calculus, for the composition of a stochastic process and two deterministic functions . This is the generalization of ([33] Theorem 2.1) to several variables. Indeed, let be a stochastic process on an open set , with -partial derivatives, and , being -continuous on Λ. Let be two deterministic functions with . Then . For the proof, just define . By ([33] Theorem 2.1), , which is -continuous on . Additionally, is -continuous. Then can be -differentiated at each r, by our Lemma 4: .

Proposition 3

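(Random Leibniz’s rule for $L^p$-calculus). Let $F(t,s)$ be a stochastic process on $[t_1,t_2] \times [s_1,s_2]$. Let $u, v: [t_1,t_2] \to [s_1,s_2]$ be two differentiable deterministic functions. Suppose that $F(t,\cdot)$ is $L^p$-continuous on $[s_1,s_2]$, for each $t \in [t_1,t_2]$, and that $\frac{\partial F}{\partial t}(t,s)$ exists in the $L^p$-sense and is $L^p$-continuous on $[t_1,t_2] \times [s_1,s_2]$. Then $G(t) = \int_{u(t)}^{v(t)} F(t,s)\,\mathrm{d}s$ is $L^p$-differentiable and

$$
G'(t) = v'(t)\,F(t,v(t)) - u'(t)\,F(t,u(t)) + \int_{u(t)}^{v(t)} \frac{\partial F}{\partial t}(t,s)\,\mathrm{d}s
$$

(the integral is considered as an $L^p$-Riemann integral).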

Proof.

First, notice that is well-defined, since is -continuous. Decompose as . Let , , . We have .

Let us check the conditions of Lemma 4. By Lemma 2, , which is -continuous on as a consequence of the -continuity of . On the other hand, , by the fundamental theorem of -calculus ([5] p. 103), with being -continuous. Thus, by Lemma 4,

□
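To see the random Leibniz’s rule at work on a case that can be checked by hand, consider the following worked example. It is an illustration added here, not taken from the paper: the process $F(t,s) = A\,t\,s$, with $A$ any random variable such that $\|A\|_p < \infty$, and the limits $u(t) = 0$, $v(t) = t^2$ are hypothetical choices.

```latex
% Hypothetical data: F(t,s) = A t s, u(t) = 0, v(t) = t^2, with \|A\|_p < \infty.
\begin{align*}
G(t) &= \int_{0}^{t^2} A\, t\, s \,\mathrm{d}s = \frac{A\, t^5}{2}, \\
v'(t)\,F(t,v(t)) - u'(t)\,F(t,u(t))
     &= 2t \cdot A\, t \cdot t^2 - 0 = 2 A\, t^4, \\
\int_{0}^{t^2} \frac{\partial F}{\partial t}(t,s)\,\mathrm{d}s
     &= \int_{0}^{t^2} A\, s \,\mathrm{d}s = \frac{A\, t^4}{2}, \\
G'(t) &= 2 A\, t^4 + \frac{A\, t^4}{2} = \frac{5 A\, t^4}{2}.
\end{align*}
% L^p-convergence of the difference quotient:
\[
\left\| \frac{G(t+h) - G(t)}{h} - \frac{5 A\, t^4}{2} \right\|_p
  = \|A\|_p \left| \frac{(t+h)^5 - t^5}{2h} - \frac{5 t^4}{2} \right|
  \xrightarrow[h \to 0]{} 0 .
\]
```

The value given by the rule agrees with the direct differentiation of $G(t) = A t^5 / 2$, and the convergence holds in $L^p$ because the random factor $A$ separates from the deterministic difference quotient.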

Remark 2

(Proposition 3 against another proof of the random Leibniz’s rule). In [10] Proposition 6, a result pointing towards the conclusion of Proposition 3 was stated (in the mean square case , with , and ). However, the proof presented therein is not correct. In the notation therein, the authors proved an inequality of the form

The authors justified correctly that and as , for each . However, this fact does not imply

as they stated at the end of their proof. For , one has to utilize the dominated convergence theorem. For , one should use uniform continuity.

Remark 3

(Random Leibniz’s rule cannot be proved with a mean value theorem). In the deterministic setting, both Proposition 2 and Proposition 3 can be proven with the mean value theorem. However, such proofs do not work in the random scenario, as there is no version of the stochastic mean value theorem. In previous contributions (see [15] Lemma 2.4, Corollary 2.5; [34] Lemma 3.1, Theorem 3.2), there is an incorrect version of it. For instance, if and , , then Y is mean square continuous on (notice that ). Suppose that there exists such that almost surely. Then almost surely. But this is not possible, since and . Thus, Y does not satisfy any mean square mean value theorem.

3. $L^p$-Solution to the Random Linear Delay Differential Equation with a Stochastic Forcing Term

In this section we solve (1) in the $L^p$-sense. To do so, we will demonstrate that $x(t)$ defined by (2) is the unique $L^p$-solution to (1). We will take advantage of the decomposition of problem (1) into its homogeneous part, (3), and its complete part, (4). The formal solution to (3) is given by $y(t)$ defined as (5), while the formal solution to (4) is given by $z(t)$ expressed as (6). The previous contribution [17] provides conditions under which $y(t)$ defined by (5) solves (3) in the $L^p$-sense. Thus, our primary goal will be to find conditions under which $z(t)$ given by (6) is a true solution to (4) in the $L^p$-sense.

Again, recall that the integrals that appear in the expressions (2), (5) and (6) are $L^p$-Riemann integrals.

The uniqueness of the $L^p$-solution to (1) (existence is addressed later) is proved analogously to ([17] Theorem 3.1), by invoking results from [7] that connect $L^p$-solutions with sample-path solutions, which satisfy analogous properties to deterministic solutions. The precise uniqueness statement is as follows.

Theorem 1

(Uniqueness). The random differential equation problem with delay (1) has at most one -solution, for .

Proof.

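Assume that (1) has an $L^p$-solution. We will prove it is unique. Let $x_1(t)$ and $x_2(t)$ be two $L^p$-solutions to (1). Let $w(t) = x_1(t) - x_2(t)$, which satisfies the random differential equation problem with delay

$$
\begin{cases} w'(t) = a\,w(t) + b\,w(t-\tau), & t \ge 0, \\ w(t) = 0, & -\tau \le t \le 0. \end{cases}
$$

If $t \in [0,\tau]$, then $t - \tau \in [-\tau,0]$; therefore, $w(t-\tau) = 0$. Thus, $w(t)$ satisfies a random differential equation problem with no delay on $[0,\tau]$:

$$
\begin{cases} w'(t) = a\,w(t), & t \in [0,\tau], \\ w(0) = 0. \end{cases} \tag{7}
$$

In [7], it was proved that any $L^p$-solution to a random initial value problem has a product measurable representative which is an absolutely continuous solution in the sample-path sense. Since the sample-path solution to (7) must be 0 (from the deterministic theory), we conclude that $x_1(t) = x_2(t)$ on $[0,\tau]$, as desired. For the subsequent intervals $[\tau,2\tau]$, $[2\tau,3\tau]$, etc., the same reasoning applies. □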

Now we move on to existence results. First, recall that the random delayed exponential function $e_\tau^{c,t}$ is the solution to the random linear homogeneous differential equation with pure delay that satisfies the unit initial condition.

Proposition 4

($L^p$-derivative of the random delayed exponential function ([17] Prop 3.1)). Consider the random system with discrete delay

$$
\begin{cases} x'(t) = c\,x(t-\tau), & t \ge 0, \\ x(t) = 1, & -\tau \le t \le 0, \end{cases} \tag{8}
$$

where $c$ is a random variable.

If c has absolute moments of any order, then $e_\tau^{c,t}$ is the unique $L^p$-solution to (8), for all $1 \le p < \infty$.

On the other hand, if c is bounded, then $e_\tau^{c,t}$ is the unique $L^\infty$-solution to (8).

In [17], two results on the existence of solution to (3) were stated and proved. In terms of notation, the moment-generating function of a random variable a is denoted as $\phi_a(\zeta) = \mathbb{E}[e^{a\zeta}]$, $\zeta \in \mathbb{R}$.

Theorem 2

Theorem 3

In what follows, we establish two theorems on the existence of a solution to (4); see Theorem 4 and Theorem 5. As a corollary, we will derive the solution to (1); see Theorem 6 and Theorem 7.

Theorem 4

Proof.

At the beginning of the proof of ([17] Theorem 3.2), it was proved that has absolute moments of any order, as a consequence of Cauchy-Schwarz inequality, therefore Proposition 4 tells us that the process is -differentiable, for each , and . It was also proved that, by the chain rule theorem (Proposition 1), the process is -differentiable, for each , and . To justify these two assertions on and , the hypotheses and b having absolute moments of any order are required.

Fix . Let , and , according to the notation of Lemma 1. Set the product of the three processes , so that our candidate solution process becomes . We check the conditions of the random Leibniz’s rule, see Proposition 3, to differentiate . By the first paragraph of this proof, in which we stated that both and are -differentiable, for each , we derive that and are -continuous on both variables, for all . Since is -continuous, for certain by assumption, we deduce that F is -continuous on both variables, as a consequence of Lemma 1.

Fixed s, let , and . We have that and are -differentiable, for each . The random variable belongs to . By Lemma 2, is -differentiable at each t, with

Let us see that is -continuous at . Since a has absolute moments of any order (by finiteness of its moment-generating function) and is -continuous at , for each , we derive that is -continuous at each , for every , by Hölder’s inequality. Thus, we have that and are -continuous at , for each , while is -continuous. By Lemma 1, is -continuous at each . Analogously, is -continuous at . Therefore, is -continuous at . By Proposition 3, the process is -differentiable and (by definition of in the proof, , where by definition of delayed exponential function), and we are done.

Once the existence of -solution has been proved, uniqueness follows from Theorem 1. □

Theorem 5

Proof.

As was shown in ([17] Theorem 3.4), the process is -differentiable and , because is bounded. Additionally, the process is -differentiable and , as a consequence of the deterministic mean value theorem and the boundedness of a.

The rest of the proof is completely analogous to the previous Theorem 4, by applying the second part of both Lemma 1 and Lemma 2. □

Theorem 6

Proof.

This is a consequence of Theorem 2 and Theorem 4, with . Uniqueness follows from Theorem 1. □

Theorem 7

Proof.

This is a consequence of Theorem 3 and Theorem 5, with . Uniqueness follows from Theorem 1. □

Remark 4.

As emphasized in ([17] Remark 3.6), the condition of boundedness for a and b in Theorem 7 is necessary if we only assume that in the -sense. See ([7] Example p. 541), where it is proved that, in order for a random autonomous and homogeneous linear differential equation of first-order to have an -solution for every initial condition in , one needs the random coefficient to be bounded.

Assume the conditions from Theorem 6 or Theorem 7. From expression (2), it is possible to approximate the statistical moments of $x(t)$. We focus on its expectation, $\mathbb{E}[x(t)]$, and on its variance, $\mathbb{V}[x(t)]$. These statistics provide information on the average and the dispersion of $x(t)$, and they are very useful for uncertainty quantification for $x(t)$. For ease of notation, denote the stochastic processes

Due to the linearity of the expectation and its interchangeability with the -Riemann integral ([5] p. 104), if ,

To compute when , we start by

Each of these integrals has to be considered in ; see ([35] Remark 2). This is due to the loss of integrability of the product, by Hölder’s inequality. By applying expectations,

As a consequence, one derives an expression for $\mathbb{V}[x(t)]$, by utilizing the relation $\mathbb{V}[x(t)] = \mathbb{E}[x(t)^2] - (\mathbb{E}[x(t)])^2$. Other statistics related to moments could be derived in a similar fashion.

In Example 1, we will show how useful these expressions are to determine $\mathbb{E}[x(t)]$ and $\mathbb{V}[x(t)]$ in practice. Our procedure is an alternative to the usual techniques for uncertainty quantification: Monte Carlo simulation, generalized polynomial chaos (gPC) expansions, etc. [1,2].
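For comparison with the moment expressions, a plain Monte Carlo estimate of the mean and variance can be sketched as follows. This is not the procedure proposed in this paper. It samples the inputs, integrates each realization of the delay equation $x'(t) = a x(t) + b x(t-\tau) + f(t)$, $x = g$ on $[-\tau, 0]$, with an explicit Euler scheme whose step divides the delay, and then averages. The function names, the Euler discretization and every input distribution below are placeholder assumptions, not the data of the examples in this paper.

```python
import math
import random
import statistics

def euler_delay_path(a, b, g, f, tau, t_end, steps_per_delay=100):
    """One path of x'(t) = a*x(t) + b*x(t - tau) + f(t), with x = g on [-tau, 0].

    Returns the Euler approximations on the grid 0, h, 2h, ..., with h = tau/steps_per_delay.
    """
    m = steps_per_delay
    h = tau / m
    n_steps = int(round(t_end / h))
    x = [g(-tau + i * h) for i in range(m + 1)]   # history on [-tau, 0]
    for i in range(m, m + n_steps):
        t = (i - m) * h                           # current time; x[i - m] is x(t - tau)
        x.append(x[i] + h * (a * x[i] + b * x[i - m] + f(t)))
    return x[m:]

def monte_carlo_moments(n_samples, tau, t_end, seed=0):
    """Sample mean and sample variance of x(t_end) under assumed input distributions."""
    rng = random.Random(seed)
    finals = []
    for _ in range(n_samples):
        a = rng.uniform(-1.0, 0.0)   # assumed distribution of a
        b = rng.uniform(0.0, 0.5)    # assumed distribution of b
        d = rng.gauss(1.0, 0.1)      # assumed random amplitude of the initial condition
        path = euler_delay_path(a, b, lambda t: d * math.cos(t), math.sin, tau, t_end)
        finals.append(path[-1])
    return statistics.fmean(finals), statistics.variance(finals)

mean, var = monte_carlo_moments(2000, tau=0.5, t_end=2.0)
print(mean, var)
```

Such estimates carry both a sampling error and a discretization error, whereas expressions derived from the explicit solution avoid the time discretization altogether.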

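4. $L^p$-Convergence to a Random Complete Linear Differential Equation When the Delay Tends to 0

Given a discrete delay $\tau > 0$, we denote the $L^p$-solution (2) to (1) by $x_\tau(t)$. We denote the $L^p$-solutions (5) and (6) to (3) and (4) by $y_\tau(t)$ and $z_\tau(t)$, respectively, so that $x_\tau(t) = y_\tau(t) + z_\tau(t)$. Thus, we are making the dependence on the delay $\tau$ explicit. If we put $\tau = 0$ into (1), (3) and (4), we obtain random linear differential equations with no delay:

$$
\begin{cases} x_0'(t) = (a+b)\,x_0(t) + f(t), & t \ge 0, \\ x_0(0) = g(0), \end{cases} \tag{11}
$$

$$
\begin{cases} y_0'(t) = (a+b)\,y_0(t), & t \ge 0, \\ y_0(0) = g(0), \end{cases} \tag{12}
$$

$$
\begin{cases} z_0'(t) = (a+b)\,z_0(t) + f(t), & t \ge 0, \\ z_0(0) = 0, \end{cases} \tag{13}
$$

respectively. The following results establish conditions under which (11), (12) and (13) have $L^p$-solutions.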

Theorem 8

([17] Corollary 4.1). Fix . If and for all , and for certain , then the stochastic process is the unique -solution to (12).

On the other hand, if a and b are bounded random variables and , then the stochastic process is the unique -solution to (12).

Theorem 9.

Fix . If and for all , and f is continuous on in the -sense for certain , then the stochastic process is the unique -solution to (13).

On the other hand, if a and b are bounded random variables and f is continuous on in the -sense, then the stochastic process is the unique -solution to (13).

Proof.

Take the first set of assumptions. Let be the process inside the integral sign. Since and , the chain rule theorem (Proposition 1) allows differentiating in , for each . In particular, is -continuous at , for . As f is continuous on in the -sense, we derive that F is -continuous at . It also exists in . Since has absolute moments of any order, is -continuous at , for . Then is -continuous at . By Proposition 3, is -differentiable and , and we are done.

Suppose that a and b are bounded random variables and f is continuous on in the -sense. If a and b are bounded, then is -differentiable (this is because of an application of the deterministic mean value theorem; see ([17] Theorem 3.4)). Then an analogous proof to the previous paragraph works in this case, by only assuming that f is continuous on in the -sense. □

Theorem 10.

Fix . If and for all , , and f is continuous on in the -sense for certain , then the stochastic process is the unique -solution to (11).

On the other hand, if a and b are bounded random variables, , and f is continuous on in the -sense, then the stochastic process is the unique -solution to (11).

Proof.

It is a consequence of Theorem 8 and Theorem 9 with . □

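Our goal is to establish conditions under which $\lim_{\tau \to 0} x_\tau(t) = x_0(t)$ in $L^p$, for each $t \ge 0$, where $x_0$, $y_0$ and $z_0$ denote the solutions to the non-delayed problems (11), (12) and (13). To do so, we will utilize $\lim_{\tau \to 0} y_\tau(t) = y_0(t)$ and $\lim_{\tau \to 0} z_\tau(t) = z_0(t)$.

The first limit, $\lim_{\tau \to 0} y_\tau(t) = y_0(t)$, was demonstrated in ([17] Theorem 4.5), by using inequalities for the deterministic and random delayed exponential function ([36] Theorem A.3), ([17] Lemma 4.2, Lemma 4.3, Lemma 4.4).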

Theorem 11

([17] Theorem 4.5). Fix . Let a and b be bounded random variables and let g be a stochastic process that belongs to in the -sense. Then, in , uniformly on , for each .

Next we prove the convergence $\lim_{\tau \to 0} z_\tau(t) = z_0(t)$. As a corollary, we will be able to derive $\lim_{\tau \to 0} x_\tau(t) = x_0(t)$.

Theorem 12.

Fix . Let a and b be bounded random variables and let f be a continuous stochastic process on in the -sense. Then, in , uniformly on , for each .

Proof.

Notice that defined by (6) (see the first paragraph of this section) exists by Theorem 5, which used the boundedness of a and b and the -continuity of f on . Analogously, exists by Theorem 9.

Fix . We bound

We have and . These bounds yield

Let k be a number such that , for all . By ([17] Lemma 4.3),

for , and . On the other hand, by the deterministic mean value theorem (applied for each outcome ),

where . In particular, . We apply again the deterministic mean value theorem to :

where . In particular,

As a consequence,

Substituting this inequality into (14),

uniformly on . □

Theorem 13.

Fix . Let a and b be bounded random variables, let g be a stochastic process that belongs to in the -sense, and let f be a continuous stochastic process on in the -sense. Then, in , uniformly on , for each .

Proof.

This is a consequence of Theorem 11 and Theorem 12, with and . □
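The mechanism behind these limits can be observed numerically on the delayed exponential function itself, which approaches the ordinary exponential as the delay shrinks. The sketch below evaluates, for a fixed real value c (one realization of a bounded random coefficient), the gap between the delayed exponential with delay $\tau$ at time t and $e^{ct}$, using the piecewise polynomial definition from [22]. The function name and the values of c, t and $\tau$ are assumptions made for this illustration. It is a pointwise check for one realization, not a verification of the uniform $L^p$-convergence stated in the theorems.

```python
import math

def delayed_exp(c, tau, t):
    """Delayed exponential e_tau^{c,t} for a fixed value c and a delay tau > 0."""
    if t < -tau:
        return 0.0
    if t < 0.0:
        return 1.0
    n = math.floor(t / tau) + 1          # t lies in [(n-1)*tau, n*tau)
    total = 0.0
    for k in range(n + 1):
        base = c * max(t - (k - 1) * tau, 0.0)   # clamp guards round-off only
        term = 1.0
        for j in range(1, k + 1):        # base**k / k!, built factor by factor
            term *= base / j
        total += term
    return total

c, t = 1.0, 1.0
taus = [0.5, 0.1, 0.02, 0.004]
errors = [abs(delayed_exp(c, tau, t) - math.exp(c * t)) for tau in taus]
for tau, err in zip(taus, errors):
    print(tau, err)
```

The printed gaps shrink as $\tau$ decreases, which is the deterministic counterpart of the convergence proved above.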

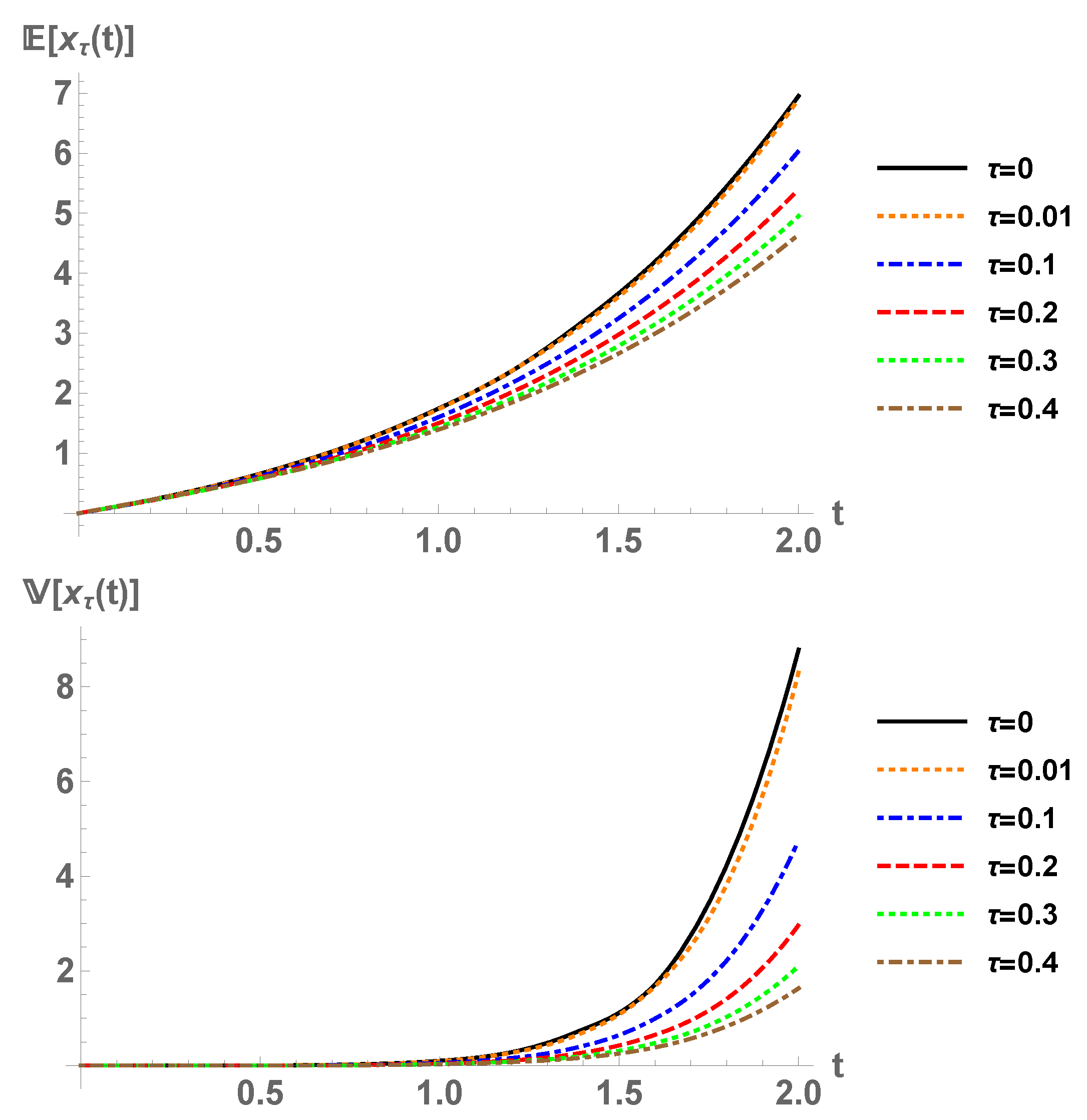

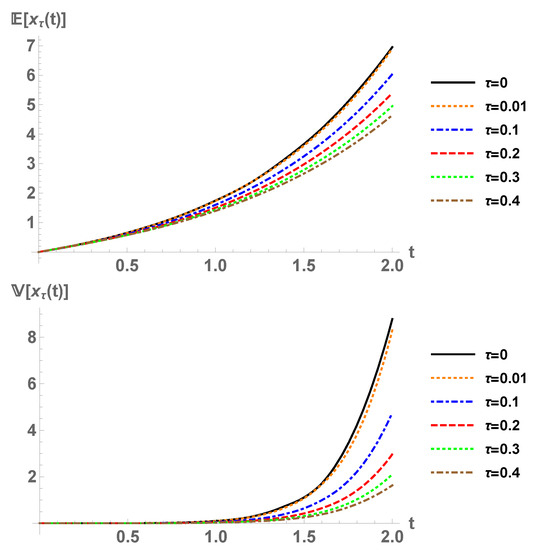

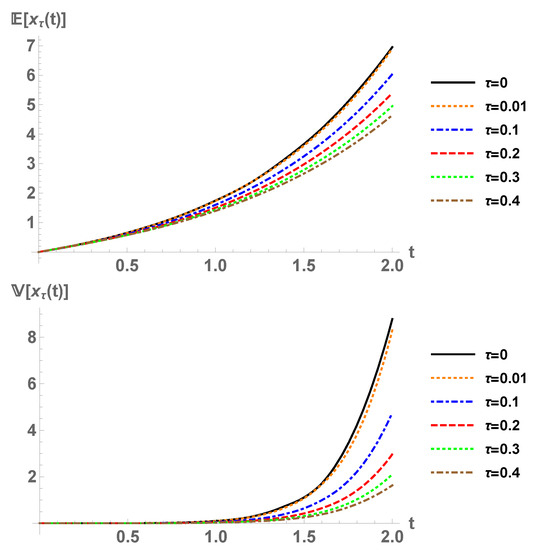

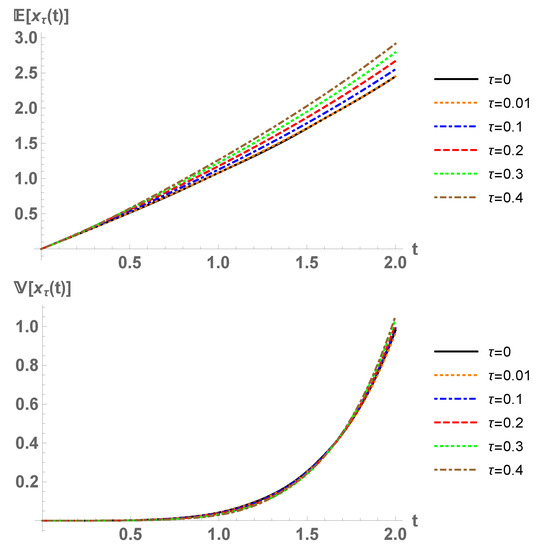

Example 1.

This is a test example, with arbitrary distributions, to show how (9) and (10) may be employed to compute the expectation and the variance of the stochastic solution. Theoretical results are also illustrated. Let and . Define and , where d and e are random variables with and . By using the chain rule theorem, Proposition 1, it is easy to prove that both g and f are in the -sense, . The random variables a, b, d and e are assumed to be independent. Consider the solution stochastic process defined by (2). It is an -solution for all , by Theorem 7. With expressions (9) and (10), we can compute and ; see Figure 1. The results agree with Monte Carlo simulation on (1). Observe that, as approaches 0, the solution stochastic process tends to the solution with no delay defined in Theorem 10, as predicted by Theorem 13.

Figure 1. Expectation (top) and variance (bottom) of the solution process $x(t)$, Example 1.

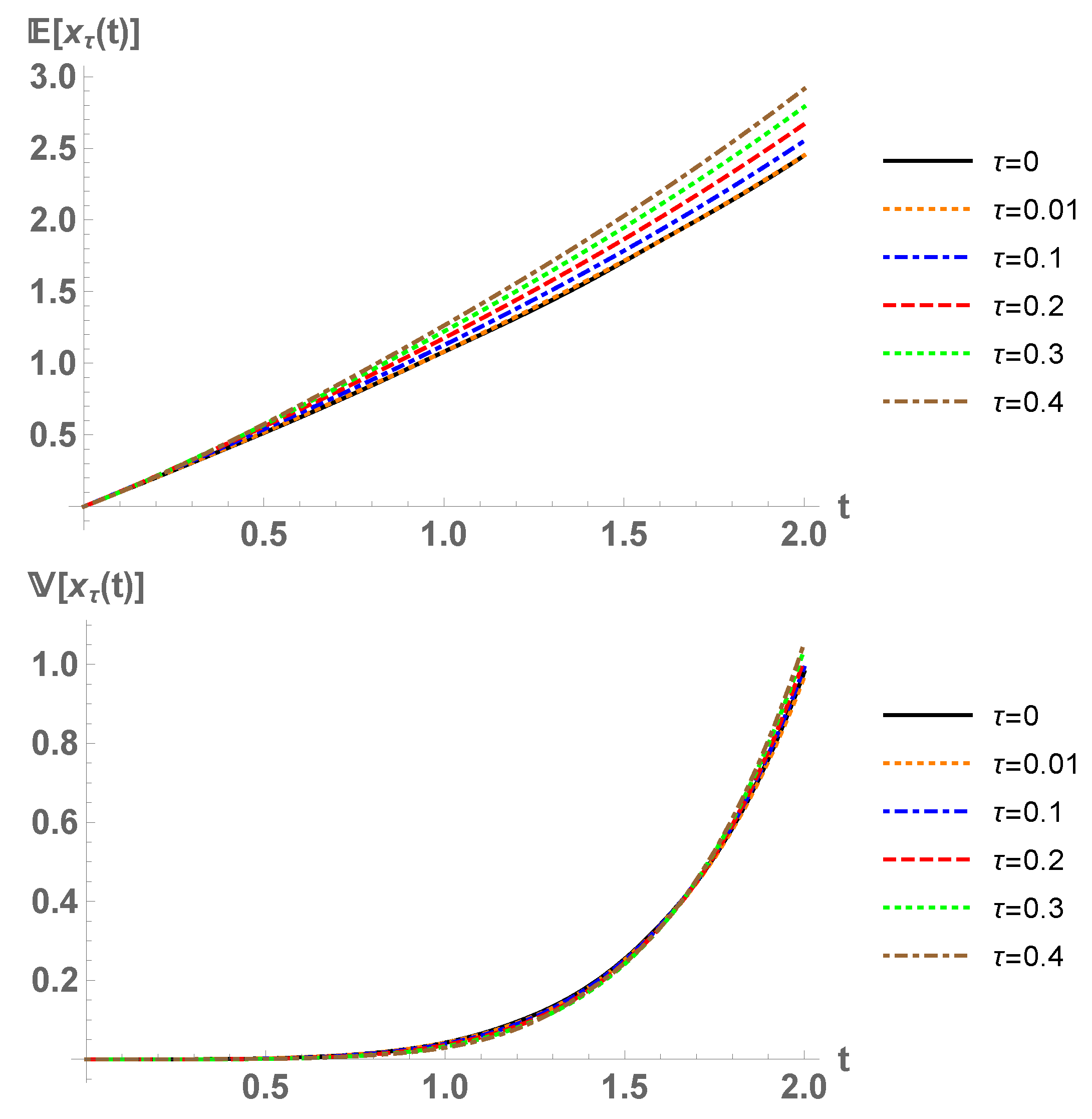

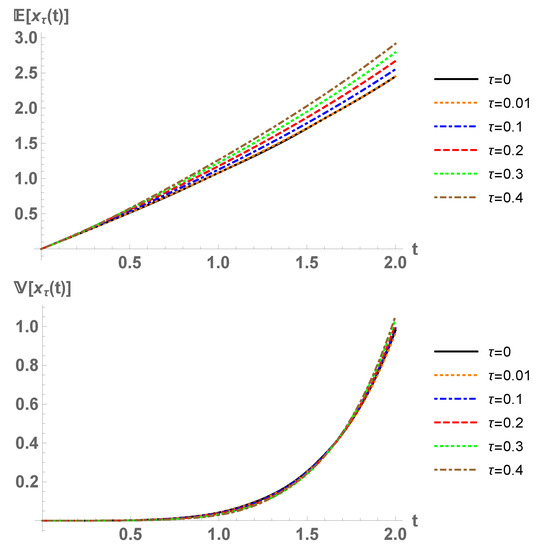

Example 2.

In this example, we specify new probability distributions for the input coefficients. Let , , and , all of them independent. The stochastic process given by (2) is an -solution for all , by Theorem 7. We compute and with (9) and (10), see Figure 2. Observe that the convergence when agrees with Theorem 13.

Figure 2. Expectation (top) and variance (bottom) of the solution process $x(t)$, Example 2.

We now comment on some computational aspects. We have used the software Mathematica®, version 11.2 [37]. The integrals and expectations from (9) and (10) have been computed as multidimensional integrals with the built-in function NIntegrate (recall that the expectation is an integral with respect to the corresponding probability density function). Expression (9) does not pose serious numerical challenges, and one can use a standard NIntegrate routine with no specified options. However, for expression (10), we have set the option quasi-Monte Carlo with sampling points (otherwise the computational time would increase dramatically). We have checked numerically that the following factors increase the computational time: large ratio ; probability distributions with unbounded support for the input data; and moderate or large dimensions of the random space.
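To illustrate the remark that an expectation is an integral with respect to the probability density function, the following sketch computes the mean of the non-delayed solution $g(0)\,e^{(a+b)t}$ of $y'(t) = (a+b)\,y(t)$, $y(0) = g(0)$, by numerical quadrature. It is written in Python with a hand-coded composite Simpson rule and is not a reproduction of the Mathematica computations described above. The uniform distributions of a and b, their independence, and the value $\mathbb{E}[g(0)] = 1$ are assumptions chosen only so that a closed-form value is available for comparison.

```python
import math

def simpson(func, lo, hi, n=200):
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    h = (hi - lo) / n
    total = func(lo) + func(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * func(lo + i * h)
    return total * h / 3

def expectation_uniform(func, lo, hi):
    """E[func(U)] for U ~ Uniform(lo, hi): integral of func against the density 1/(hi - lo)."""
    return simpson(lambda u: func(u) / (hi - lo), lo, hi)

t = 1.5
# Assumed inputs: a ~ Uniform(-1, 0), b ~ Uniform(0, 0.5), E[g(0)] = 1, all independent,
# so that E[g(0) e^{(a+b)t}] = E[g(0)] * E[e^{a t}] * E[e^{b t}].
mean_a = expectation_uniform(lambda a: math.exp(a * t), -1.0, 0.0)
mean_b = expectation_uniform(lambda b: math.exp(b * t), 0.0, 0.5)
mean_y0 = 1.0 * mean_a * mean_b

# Closed-form value from the moment-generating functions of the two uniform laws.
exact = ((1.0 - math.exp(-t)) / t) * ((math.exp(0.5 * t) - 1.0) / (0.5 * t))
print(mean_y0, exact)
```

Independence turns the multidimensional integral into a product of one-dimensional ones here. Without it, or for the second-order moments in (10), the dimension of the integral grows, which is consistent with the rise in computational cost reported above.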

5. Conclusions

In this paper, we have performed a comprehensive stochastic analysis of the random linear delay differential equation with stochastic forcing term. The equation considered has one discrete delay $\tau > 0$, two random coefficients a and b (corresponding to the non-delay and the delay term, respectively) and two stochastic processes $f(t)$ and $g(t)$ (corresponding to the forcing term on $[0,\infty)$ and the initial condition on $[-\tau,0]$, respectively). Our setting goes a step further than the previous contribution [17], in which no forcing term was considered (i.e., $f(t) = 0$). We have rigorously addressed the problem of extending the deterministic theory to the random scenario, by proving that the deterministic solution constructed via the method of steps and the method of variation of constants is an $L^p$-solution, under certain assumptions on the random data. A new result, the random Leibniz’s rule for $L^p$-Riemann integration, has been necessary to derive our conclusions. We have also studied the behavior in $L^p$ of the random delay equation when the delay tends to zero.

Our approach has been shown to be useful to approximate the statistical moments of the solution stochastic process, in particular its expectation and its variance. Thus, it is possible to perform uncertainty quantification. Our procedure is an alternative to the usual techniques for uncertainty quantification: Monte Carlo simulation, generalized polynomial chaos (gPC) expansions, etc.

Our approach could be extended to other random differential equations with or without delay. As usual, one could prove that the deterministic solution also works in the random framework. To do so, a rigorous and careful analysis of the probabilistic properties of the solution based on $L^p$-calculus should be conducted.

Finally, we humbly think that advancing in theoretical aspects of random differential equations with delay will permit rigorously applying this class of equations to modeling phenomena involving memory and aftereffects together with uncertainties. In particular, they may be crucial to capture uncertainties inherent to some complex modeling problems, since input parameters of this type of equations may belong to a wider range of probability distributions than the ones considered in Itô differential equations.

Author Contributions

Investigation, M.J.; methodology, M.J.; software, M.J.; supervision, J.C.C.; validation, J.C.C.; visualization, J.C.C. and M.J.; writing—original draft, M.J.; writing—review and editing, J.C.C. and M.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported by the Spanish Ministerio de Economía, Industria y Competitividad (MINECO), the Agencia Estatal de Investigación (AEI) and Fondo Europeo de Desarrollo Regional (FEDER UE) grant MTM2017–89664–P.

Conflicts of Interest

The authors declare that there is no conflict of interests regarding the publication of this article.

References

- Smith, R.C. Uncertainty Quantification: Theory, Implementation, and Applications; SIAM: Philadelphia, PA, USA, 2013; Volume 12.

- Xiu, D. Numerical Methods for Stochastic Computations: A Spectral Method Approach; Princeton University Press: Princeton, NJ, USA, 2010.

- Le Maître, O.P.; Knio, O.M. Spectral Methods for Uncertainty Quantification: With Applications to Computational Fluid Dynamics; Springer Science & Business Media: Berlin, Germany, 2010.

- Xiu, D.; Karniadakis, G.E. Supersensitivity due to uncertain boundary conditions. Int. J. Numer. Methods Eng. 2004, 61, 2114–2138.

- Soong, T.T. Random Differential Equations in Science and Engineering; Academic Press: New York, NY, USA, 1973.

- Casabán, M.C.; Cortés, J.C.; Navarro-Quiles, A.; Romero, J.V.; Roselló, M.D.; Villanueva, R.J. A comprehensive probabilistic solution of random SIS-type epidemiological models using the random variable transformation technique. Commun. Nonlinear Sci. Numer. Simul. 2016, 32, 199–210.

- Strand, J.L. Random ordinary differential equations. J. Differ. Equ. 1970, 7, 538–553.

- Villafuerte, L.; Braumann, C.A.; Cortés, J.C.; Jódar, L. Random differential operational calculus: Theory and applications. Comput. Math. Appl. 2010, 59, 115–125.

- Saaty, T.L. Modern Nonlinear Equations; Dover Publications: New York, NY, USA, 1981.

- Cortés, J.C.; Jódar, L.; Roselló, M.D.; Villafuerte, L. Solving initial and two-point boundary value linear random differential equations: A mean square approach. Appl. Math. Comput. 2012, 219, 2204–2211.

- Calatayud, J.; Cortés, J.C.; Jornet, M.; Villafuerte, L. Random non-autonomous second order linear differential equations: Mean square analytic solutions and their statistical properties. Adv. Differ. Equ. 2018, 2018, 392.

- Calatayud, J.; Cortés, J.C.; Jornet, M. Improving the approximation of the first- and second-order statistics of the response stochastic process to the random Legendre differential equation. Mediterr. J. Math. 2019, 16, 68.

- Licea, J.A.; Villafuerte, L.; Chen-Charpentier, B.M. Analytic and numerical solutions of a Riccati differential equation with random coefficients. J. Comput. Appl. Math. 2013, 239, 208–219.

- Burgos, C.; Calatayud, J.; Cortés, J.C.; Villafuerte, L. Solving a class of random non-autonomous linear fractional differential equations by means of a generalized mean square convergent power series. Appl. Math. Lett. 2018, 78, 95–104.

- Nouri, K.; Ranjbar, H. Mean square convergence of the numerical solution of random differential equations. Mediterr. J. Math. 2015, 12, 1123–1140.

- Calatayud, J.; Cortés, J.C.; Jornet, M. Random differential equations with discrete delay. Stoch. Anal. Appl. 2019, 37, 699–707.

- Calatayud, J.; Cortés, J.C.; Jornet, M. Lp-calculus approach to the random autonomous linear differential equation with discrete delay. Mediterr. J. Math. 2019, 16, 85.

- Caraballo, T.; Cortés, J.C.; Navarro-Quiles, A. Applying the Random Variable Transformation method to solve a class of random linear differential equation with discrete delay. Appl. Math. Comput. 2019, 356, 198–218.

- Zhou, T. A stochastic collocation method for delay differential equations with random input. Adv. Appl. Math. Mech. 2014, 6, 403–418.

- Shi, W.; Zhang, C. Generalized polynomial chaos for nonlinear random delay differential equations. Appl. Numer. Math. 2017, 115, 16–31.

- Licea-Salazar, J.A. The Polynomial Chaos Method With Applications To Random Differential Equations. Ph.D. Thesis, University of Texas at Arlington, Arlington, TX, USA, 2013.

- Khusainov, D.Y.; Ivanov, A.; Kovarzh, I.V. Solution of one heat equation with delay. Nonlinear Oscil. 2009, 12, 260–282.

- Øksendal, B. Stochastic Differential Equations: An Introduction with Applications; Springer Science & Business Media: New York, NY, USA, 1998.

- Shaikhet, L. Lyapunov Functionals and Stability of Stochastic Functional Differential Equations; Springer Science & Business Media: New York, NY, USA, 2013.

- Shaikhet, L. Stability of equilibrium states of a nonlinear delay differential equation with stochastic perturbations. Int. J. Robust Nonlinear Control 2017, 27, 915–924.

- Benhadri, M.; Zeghdoudi, H. Mean square asymptotic stability in nonlinear stochastic neutral Volterra-Levin equations with Poisson jumps and variable delays. Funct. Approx. Comment. Math. 2018, 58, 157–176.

- Santonja, F.J.; Shaikhet, L. Analysing social epidemics by delayed stochastic models. Discret. Dyn. Nat. Soc. 2012, 2012.

- Liu, L.; Caraballo, T. Analysis of a stochastic 2D-Navier–Stokes model with infinite delay. J. Dyn. Differ. Equ. 2019, 31, 2249–2274.

- Lupulescu, V.; Abbas, U. Fuzzy delay differential equations. Fuzzy Optim. Decis. Mak. 2012, 11, 99–111.

- Krapivsky, P.L.; Luck, J.M.; Mallick, K. On stochastic differential equations with random delay. J. Stat. Mech. Theory Exp. 2011, 2011, P10008.

- Garrido-Atienza, M.J.; Ogrowsky, A.; Schmalfuß, B. Random differential equations with random delays. Stochastics Dyn. 2011, 11, 369–388.

- Calatayud, J. A Theoretical Study of a Short Rate Model. Master’s Thesis, Universitat de Barcelona, Barcelona, Spain, 2016.

- Cortés, J.C.; Villafuerte, L.; Burgos, C. A mean square chain rule and its application in solving the random Chebyshev differential equation. Mediterr. J. Math. 2017, 14, 35.

- Cortés, J.C.; Jódar, L.; Villafuerte, L. Numerical solution of random differential equations: A mean square approach. Math. Comput. Model. 2007, 45, 757–765.

- Braumann, C.A.; Cortés, J.C.; Jódar, L.; Villafuerte, L. On the random gamma function: Theory and computing. J. Comput. Appl. Math. 2018, 335, 142–155.

- Khusainov, D.Y.; Pokojovy, M. Solving the linear 1D thermoelasticity equations with pure delay. Int. J. Math. Math. Sci. 2015, 2015.

- Wolfram Mathematica, V.11.2; Wolfram Research Inc.: Champaign, IL, USA, 2017.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).