Abstract

We introduce three new estimators of the drift parameter of a fractional Ornstein–Uhlenbeck process. These estimators are based on modifications of the least-squares procedure utilizing the explicit formula for the process and the covariance structure of a fractional Brownian motion. We demonstrate their advantageous properties in the setting of discrete-time observations with a fixed mesh size, where they outperform the existing estimators. Monte Carlo simulations are conducted to confirm and illustrate the theoretical findings. The new estimation techniques can improve the calibration of models in the form of linear stochastic differential equations driven by a fractional Brownian motion, which are used in diverse fields such as biology, neuroscience, finance and many others.

MSC:

60G22; 62M09

1. Introduction

Stochastic models with fractional Brownian motion (fBm) as the noise source have recently gained increasing popularity. This is because fBm is a continuous Gaussian process whose increments are positively or negatively correlated if the Hurst parameter $H > 1/2$ or $H < 1/2$, respectively. If $H = 1/2$, fBm coincides with the classical Brownian motion and its increments are independent. The ability of fBm to include memory in the noise process makes it possible to build more realistic models in such diverse fields as biology, neuroscience, hydrology, climatology, finance and many others. The interested reader may consult the monographs [1,2], or the more recent paper [3] and the references therein, for more information.

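Let $B^H = \{B^H_t, t \ge 0\}$ be a fractional Brownian motion with Hurst parameter H defined on an appropriate probability space $(\Omega, \mathcal{F}, P)$. The fractional Ornstein–Uhlenbeck process (fOU) is the unique solution to the following linear stochastic differential equation

$$dX_t = -\lambda X_t\,dt + \sigma\,dB^H_t, \qquad X_0 = x_0, \tag{1}$$

where $\lambda > 0$ is a drift parameter (we consider the ergodic case only) and $\sigma > 0$ is a noise intensity (or volatility). Recall that the solution to Equation (1) can be expressed by the exact analytical formula

$$X_t = x_0\,e^{-\lambda t} + \sigma\int_0^t e^{-\lambda(t-u)}\,dB^H_u. \tag{2}$$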

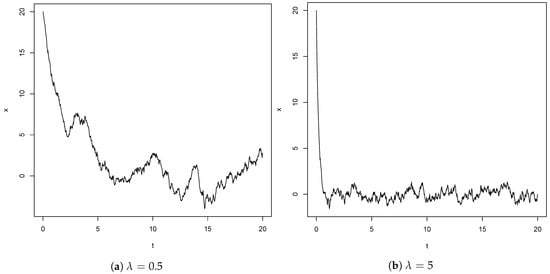

A single realization of the random process X for a particular $\omega \in \Omega$ is the model for the single real-valued trajectory, part of which is observed. Two examples of such trajectories are given in Figure 1. Throughout this paper, we assume $H \in (1/2, 1)$, so that the fOU exhibits long-range dependence. For an example of an application, see the neuronal model based on fOU described in the recent work [4].

Figure 1.

Two single trajectories of fOU with different values of λ, where σ = 2, x0 = 20, T = 20, H = 0.6.

The aim of this paper is to study the problem of estimating the drift parameter λ based on an observation of a single trajectory of a fOU at discrete time instants with a fixed mesh size and an increasing time horizon (long-span asymptotics). Estimating the drift parameter of a fOU observed in continuous time has been considered in [5,6], where the least-squares estimator (LSE) and an ergodic-type estimator are studied. These have advantageous asymptotic properties: they are strongly consistent and, if $H < 3/4$, also asymptotically normal. The ergodic-type estimator is easy to implement, but it has a greater asymptotic variance than the LSE, requires a priori knowledge of H and σ, and does not provide acceptable results for non-stationary processes with a limited time horizon.

A straightforward discretization of the least-squares estimator for a fOU has been introduced and studied in [7] and, for $H \in (0, 1/2)$, in [8]. For the precise formula, see (8). This estimator is consistent provided that both the time horizon $T = nh \to \infty$ and the mesh size $h \to 0$ (mixed in-fill and long-span asymptotics). However, it is not consistent when h is fixed and $n \to \infty$. This has led us to construct and study LSE-type estimators that converge in this long-span setting.

A simple modification of the ergodic-type estimator to the discrete-time setting with a fixed time step was given in [9] (see (10) for the precise formula), where its strong consistency and asymptotic normality as $n \to \infty$ (each under a restriction on H) were proved, though possibly with an incorrect technique (as pointed out in [10]). Correct proofs of the asymptotic normality and strong consistency of this estimator (for suitable ranges of H) were provided, in a more general setup, in [10]. Note that the use of this discrete ergodic estimator requires knowledge of the parameter σ (in contrast to the estimators of least-squares type introduced below). Other works related to estimating the drift parameter of a discretely observed fOU include [11,12,13], but this list is by no means complete.

This work contributes to the problem of estimating the drift parameter of fOU by introducing three new LSE-type estimators: the least-squares estimator from exact solution, the asymptotic least-squares estimator and the conditional least-squares estimator. These estimators are tailored to discrete-time observations with a fixed time step. We provide proofs of their asymptotic properties and identify situations in which these new estimators perform better than the already known ones. In particular, we eliminate the discretization error (the LSE from exact solution), construct strongly consistent estimators in the long-span regime without assuming the in-fill condition (the asymptotic LSE and the conditional LSE), and eliminate the bias in the least-squares procedure caused by the autocorrelation of the noise term (the conditional LSE). The conditional LSE stands out, performing well in all studied scenarios. This suggests that the concept of conditioning in the least-squares procedure applied to models with fractional noise, which is new to the best of our knowledge, provides a powerful framework for parameter estimation in this type of models. The proof of its strong consistency presented in this paper is non-trivial and may serve as a starting point for the investigation of similar estimators in different settings. A certain disadvantage of the conditional LSE is its complicated implementation (involving an optimization procedure), in contrast to the other studied estimators.

Let us explain the strength of the conditional least-squares estimator in more detail. Comparing the two trajectories in Figure 1 demonstrates the effect of different values of λ on trajectories of fOU. In particular, λ affects the speed of the exponential decay in the initial non-stationary phase and the variability in the stationary phase. As we illustrate below, the discretized least-squares estimator, cf. (8), uses the information about λ contained in the exponential decay in the initial phase, but cannot make use of the information contained in the variability in the stationary phase. As a consequence, it is not consistent (in the long-span setting). In contrast, the ergodic-type estimator, cf. (10), is derived from the variance of the stationary distribution of the process. It works well for stationary processes (and is consistent), but leaves idle (and, even worse, is corrupted by) the observation of the process in its initial non-stationary phase. As a result, neither of these estimators can efficiently estimate the drift from long trajectories with far-from-stationary initial values. This gap is best filled by the conditional least-squares estimator, cf. (25), which effectively utilizes the information stored both in the non-stationary phase and in the stationary phase of the observed process. This property is demonstrated in Section 6 (Results and Discussion), where the conditional LSE dominates the other estimators in scenarios with a far-from-stationary initial condition and a long time horizon.

For the three newly introduced estimators, the value of the Hurst parameter H is assumed to be known a priori, whereas knowledge of the volatility parameter σ is not required, which is an advantage of these methods. If H is not known, it can be estimated in advance by one of many methods, such as methods based on quadratic variations (cf. [14]), sample quantiles or trimmed means (cf. [15]), or a wavelet transform (cf. [16]), to name just a few. Other useful works in this direction include the simultaneous estimation of σ and H using the powers of the second-order variations (see [17], Chapter 3.3). The estimates of H (obtained independently of λ) can subsequently be used in the LSE-type estimators of λ introduced below, in a way similar to [18].

In Section 2, some elements of stochastic calculus with respect to fBm are recalled, the stationary fOU is introduced and precise formulas for two existing drift estimators, the discretized LSE and the discrete ergodic estimator, are provided. Section 3 is devoted to the construction of a new LSE-type estimator (the LSE from exact solution) based on the exact formula for fOU. A modification of this estimator (the asymptotic LSE), which ensures long-span consistency, is introduced in Section 4. In Section 5, we rewrite the linear model using conditional expectations to overcome the bias in the LSE caused by the autocorrelation of the noise. The least-squares method, applied to the conditional model with explicit formulas for the conditional expectations, results in the conditional least-squares estimator (conditional LSE). We prove the strong consistency of this estimator. The actual performance of the three newly introduced estimators, as well as their comparison with the already known discretized LSE and discrete ergodic estimator, is studied by Monte Carlo simulations in various scenarios and reported in Section 6. The simulated trajectories have been obtained in the software R with the YUIMA package (see [19]). Section 7 summarizes the key points of the article and outlines possible future extensions.

2. Preliminaries

For the reader’s convenience, we briefly review in this section the basic concepts of the theory of stochastic models with fractional noise, including the definition of fBm, the Wiener integral of deterministic functions w.r.t. fBm and the stationary fOU. This exposition follows [2,20]. For further reading, see also the monograph [1]. At the end of this section, we also recall the formulas for the discretized LSE and the discrete ergodic estimator.

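Fractional Brownian motion with Hurst parameter $H \in (0,1)$ is a centered (zero-mean) continuous Gaussian process $B^H = \{B^H_t, t \ge 0\}$ starting from zero ($B^H_0 = 0$) and having the following covariance structure:

$$E\left[B^H_t B^H_s\right] = \frac{1}{2}\left(t^{2H} + s^{2H} - |t-s|^{2H}\right), \qquad s, t \ge 0.$$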

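Note that for the purpose of constructing the stationary fOU, we need a two-sided fBm with t ranging over the whole real line $\mathbb{R}$. In this case we have

$$E\left[B^H_t B^H_s\right] = \frac{1}{2}\left(|t|^{2H} + |s|^{2H} - |t-s|^{2H}\right), \qquad s, t \in \mathbb{R}.$$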

As a consequence, the increments of fBm are negatively correlated for $H < 1/2$, independent for $H = 1/2$ and positively correlated for $H > 1/2$.
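The sign pattern can be read off directly from the two-sided covariance: the unit increments $B^H_1 - B^H_0$ and $B^H_2 - B^H_1$ both have unit variance, and their correlation equals $2^{2H-1} - 1$. The short Python sketch below (function names are ours, chosen for illustration) evaluates this correlation from the covariance formula.

```python
def fbm_cov(s, t, H):
    """Covariance E[B^H_s B^H_t] of a two-sided fBm."""
    return 0.5 * (abs(s) ** (2 * H) + abs(t) ** (2 * H) - abs(t - s) ** (2 * H))


def consecutive_increment_corr(H):
    """Correlation of B_1 - B_0 and B_2 - B_1 (both increments have unit variance)."""
    # E[(B_1 - B_0)(B_2 - B_1)] = R(1, 2) - R(1, 1) - R(0, 2) + R(0, 1)
    return fbm_cov(1, 2, H) - fbm_cov(1, 1, H) - fbm_cov(0, 2, H) + fbm_cov(0, 1, H)


if __name__ == "__main__":
    for H in (0.3, 0.5, 0.7):
        # second column: value from the covariance, third column: closed form 2^(2H-1) - 1
        print(H, consecutive_increment_corr(H), 2 ** (2 * H - 1) - 1)
```

The printed correlations are negative for H = 0.3, zero for H = 0.5 and positive for H = 0.7, in line with the statement above.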

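Consider a two-sided fBm with $H \in (1/2, 1)$ and define the Wiener integral of a deterministic step function $f = \sum_{j=1}^{N} a_j \mathbf{1}_{[t_{j-1}, t_j)}$ with respect to the fBm by the formula

$$\int_{\mathbb{R}} f(u)\,dB^H_u = \sum_{j=1}^{N} a_j\left(B^H_{t_j} - B^H_{t_{j-1}}\right)$$

for any positive integer N, real-valued coefficients $a_1, \dots, a_N$ and a partition $t_0 < t_1 < \dots < t_N$. This definition yields the following isometry for any pair of deterministic step functions f and g:

$$E\left[\int_{\mathbb{R}} f(u)\,dB^H_u \int_{\mathbb{R}} g(v)\,dB^H_v\right] = \alpha_H \int_{\mathbb{R}}\int_{\mathbb{R}} f(u)\,g(v)\,|u-v|^{2H-2}\,du\,dv, \tag{3}$$

where $\alpha_H = H(2H-1)$. Using this isometry, we can extend the definition of the Wiener integral w.r.t. fBm to all elements of the space $\mathcal{H}$, defined as the completion of the space of deterministic step functions w.r.t. the scalar product defined above. As a result, formula (3) holds for any $f, g \in \mathcal{H}$, see also [21]. We will frequently use this formula in what follows, mainly to calculate the covariances of Wiener integrals.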
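For indicator functions of two intervals, the left-hand side of (3) is simply the covariance of two fBm increments, so the isometry can be checked numerically. The Python sketch below is our own illustration (not code from the paper) for $H \in (1/2, 1)$; it assumes the two intervals do not overlap, so that the kernel $|u-v|^{2H-2}$ stays bounded and plain two-dimensional Simpson quadrature is adequate.

```python
def fbm_cov(s, t, H):
    """Covariance E[B^H_s B^H_t] of a two-sided fBm."""
    return 0.5 * (abs(s) ** (2 * H) + abs(t) ** (2 * H) - abs(t - s) ** (2 * H))


def increment_cov(s, t, u, v, H):
    """Left-hand side of (3) for f = 1_[s,t), g = 1_[u,v): E[(B_t - B_s)(B_v - B_u)]."""
    return fbm_cov(t, v, H) - fbm_cov(t, u, H) - fbm_cov(s, v, H) + fbm_cov(s, u, H)


def isometry_rhs(s, t, u, v, H, n=100):
    """Right-hand side of (3): alpha_H * int_s^t int_u^v |x - y|^(2H-2) dy dx by 2-D Simpson.

    Assumes [s, t] and [u, v] do not overlap, so that the kernel stays bounded.
    """
    alpha = H * (2 * H - 1)
    w = [1 if k in (0, n) else (4 if k % 2 else 2) for k in range(n + 1)]  # Simpson weights
    dx, dy = (t - s) / n, (v - u) / n
    total = 0.0
    for i in range(n + 1):
        for j in range(n + 1):
            total += w[i] * w[j] * abs((s + i * dx) - (u + j * dy)) ** (2 * H - 2)
    return alpha * total * dx * dy / 9


if __name__ == "__main__":
    print(increment_cov(0, 1, 2, 3, 0.7), isometry_rhs(0, 1, 2, 3, 0.7))
```

For the intervals [0, 1] and [2, 3] with H = 0.7, the two printed numbers agree up to the quadrature error; overlapping intervals would require a quadrature that handles the integrable singularity on the diagonal.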

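Let $B^H$ again be a two-sided fBm with $H \in (1/2, 1)$. Define

$$\xi = \sigma\int_{-\infty}^{0} e^{\lambda u}\,dB^H_u$$

and denote by $Y = \{Y_t, t \ge 0\}$ the solution to (1) with initial condition $Y_0 = \xi$, in the sense that it satisfies

$$Y_t = \xi - \lambda\int_0^t Y_s\,ds + \sigma B^H_t, \qquad t \ge 0.$$

This process is referred to as the stationary fOU, and it can be expressed as

$$Y_t = \sigma\int_{-\infty}^{t} e^{-\lambda(t-u)}\,dB^H_u.$$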

Note that the stationary fOU is an ergodic stationary Gaussian process (its autocorrelation function vanishes at infinity).

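Consider now the stationary fOU observed at the discrete time instants $ih$, $i = 1, \dots, n$. The ergodicity and the formula for the second moment of the stationary fOU (see e.g., [20]) imply

$$\frac{1}{n}\sum_{i=1}^{n} Y_{ih}^2 \longrightarrow E\left[Y_0^2\right] = \sigma^2\lambda^{-2H} H\,\Gamma(2H) \qquad \text{a.s. as } n \to \infty. \tag{4}$$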

The rest of this section is devoted to the two popular estimators of the drift parameter of fOU observed at discrete time instants described in the Introduction: the discretized LSE and the discrete ergodic estimator. We start with the former. Consider a straightforward discrete approximation of Equation (1):

$$X_{ih} - X_{(i-1)h} \approx -\lambda X_{(i-1)h}\,h + \sigma\left(B^H_{ih} - B^H_{(i-1)h}\right). \tag{7}$$

Application of the standard least-squares procedure to the linear approximation above provides the discretized LSE studied in [7,8], which takes the form

$$\frac{1 - \hat\beta_n}{h}, \tag{8}$$

where h is the mesh size (time step) and

$$\hat\beta_n = \frac{\sum_{i=1}^{n} X_{(i-1)h}\,X_{ih}}{\sum_{i=1}^{n} X_{(i-1)h}^2}, \tag{9}$$

with $X_{(i-1)h}$ and $X_{ih}$ being the observations at the adjacent time instants $(i-1)h$ and $ih$, respectively, of the process X defined by (1) or (2). Note that expressing the discretized LSE in terms of $\hat\beta_n$ simplifies its comparison with the estimators newly constructed in this paper. Recall that the consistency of the discretized LSE requires mixed in-fill and long-span asymptotics, due to the approximation error in (7).
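Formulas (8) and (9) translate directly into code. Below is a minimal Python sketch (hypothetical function names), assuming the equally spaced observations are passed as a list $[X_0, X_h, \dots, X_{nh}]$.

```python
import math


def beta_hat(xs):
    """Slope (9) of the regression of X_ih on X_(i-1)h without intercept; xs = [X_0, ..., X_nh]."""
    num = sum(p * q for p, q in zip(xs[:-1], xs[1:]))
    den = sum(p * p for p in xs[:-1])
    return num / den


def discretized_lse(xs, h):
    """Discretized LSE (8): (1 - beta_hat) / h, derived from the Euler-type approximation (7)."""
    return (1.0 - beta_hat(xs)) / h


if __name__ == "__main__":
    lam, h = 1.0, 0.5
    path = [20.0 * math.exp(-lam * i * h) for i in range(40)]  # noise-free decay with drift lam
    print(discretized_lse(path, h))  # equals (1 - e^{-0.5}) / 0.5, noticeably below lam
```

On this noise-free decay, $\hat\beta_n = e^{-0.5}$ and the discretized LSE returns $(1 - e^{-0.5})/0.5 \approx 0.787$ instead of 1, a simple illustration of the discretization error of (7) at a fixed mesh size.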

The discrete ergodic estimator is derived from the asymptotic behavior of the (stationary) fOU. Recall the convergence in (4). Rearranging the terms provides an asymptotic formula for the drift parameter expressed in terms of the limit of the second sample moment of the stationary fOU. Substituting the stationary fOU by the observed fOU in the asymptotic formula results in the discrete ergodic estimator:

$$\left(\frac{1}{n\,\sigma^2 H\,\Gamma(2H)}\sum_{i=1}^{n} X_{ih}^2\right)^{-\frac{1}{2H}}, \tag{10}$$

which was studied in [9,10]. Recall that this estimator is strongly consistent in the long-span regime (no in-fill condition is needed); however, it heavily relies on the asymptotic (stationary) behavior of the process and fails for processes with a non-stationary initial phase (as illustrated by the numerical experiments below).
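A minimal Python sketch of (10), assuming σ and H are known and the observations $X_h, \dots, X_{nh}$ are passed as a list; `math.gamma` from the standard library supplies Γ(2H). The function name is hypothetical.

```python
import math


def discrete_ergodic_estimator(xs, sigma, H):
    """Discrete ergodic estimator (10); xs = [X_h, ..., X_nh], with sigma and H assumed known."""
    second_moment = sum(x * x for x in xs) / len(xs)
    # invert the stationary second moment sigma^2 * lam^(-2H) * H * Gamma(2H) from (4)
    return (second_moment / (sigma ** 2 * H * math.gamma(2 * H))) ** (-1.0 / (2 * H))
```

Since (10) depends on the data only through the average of $X_{ih}^2$, a trajectory started far from zero inflates this average and pushes the estimate downwards, which is the failure mode in the non-stationary initial phase mentioned above.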

3. Least-Squares Estimator from Exact Solution

Since the estimator obtained from the naive discretization of (1) provides reasonable approximations only for non-stationary solutions with a short time horizon and a small time step (as seen from the numerical simulations below), we eliminate the discretization error by considering the exact analytical formula for X, see (2), and the corresponding exact discrete formula for $X_{ih}$,

$$X_{ih} = e^{-\lambda h} X_{(i-1)h} + \varepsilon_i, \tag{11}$$

where

$$\varepsilon_i = \sigma\int_{(i-1)h}^{ih} e^{-\lambda(ih-u)}\,dB^H_u.$$

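The least-squares estimator of the coefficient $e^{-\lambda h}$ in the linear model (11) is given by $\hat\beta_n$, cf. (9), and the estimator of λ, called the LSE from exact solution, can be defined as

$$-\frac{1}{h}\ln\hat\beta_n, \tag{12}$$

which is well defined whenever $\hat\beta_n > 0$.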

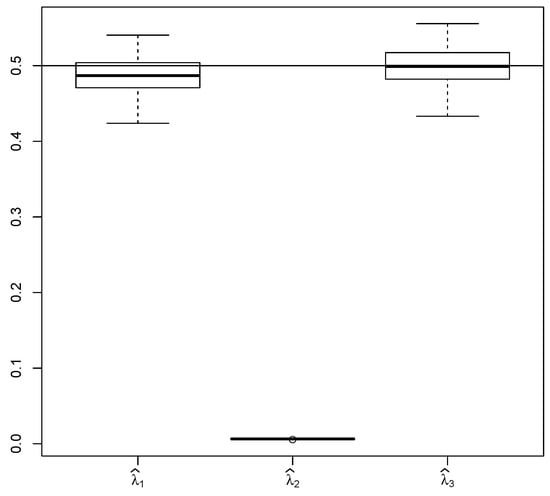

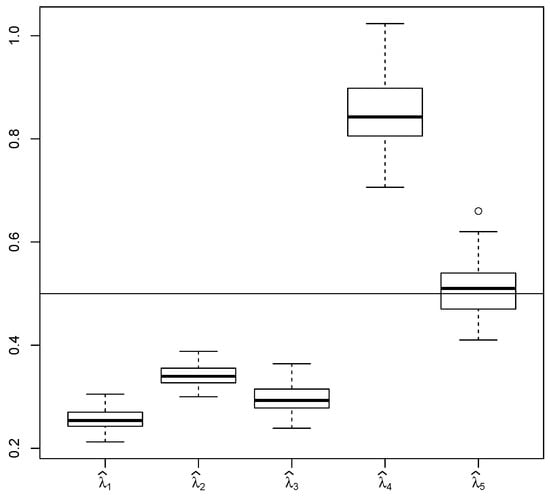
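Because the coefficient in (11) is $e^{-\lambda h}$, the LSE from exact solution replaces the linear correction $(1 - \hat\beta_n)/h$ of the discretized LSE by a logarithm. A minimal Python sketch (hypothetical function name); raising an error for $\hat\beta_n \le 0$ is our own choice, since the logarithm is then undefined.

```python
import math


def lse_exact(xs, h):
    """LSE from exact solution, -ln(beta_hat) / h; xs = [X_0, X_h, ..., X_nh]."""
    num = sum(p * q for p, q in zip(xs[:-1], xs[1:]))
    den = sum(p * p for p in xs[:-1])
    beta = num / den  # least-squares estimate (9) of the coefficient e^{-lam h} in (11)
    if beta <= 0.0:
        raise ValueError("beta_hat <= 0, so -ln(beta_hat)/h is undefined")
    return -math.log(beta) / h


if __name__ == "__main__":
    path = [20.0 * math.exp(-1.3 * 0.25 * i) for i in range(40)]  # noise-free decay, drift 1.3
    print(lse_exact(path, 0.25))  # recovers 1.3 up to rounding
```

On a noise-free exponential decay it recovers the drift exactly, so the discretization error is gone. With fractional noise, however, $\hat\beta_n$ converges to $f(\lambda)$ (Theorem 1), so the output tends to $-\ln f(\lambda)/h$ rather than to λ.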

Numerical simulations show that the LSE from exact solution works well for non-stationary solutions and a short time horizon. Representative results are presented in Figure 2; simulation results for other parameter values are similar.

Figure 2.

Comparison of three drift estimators for 100 trajectories; the horizontal line shows the true value of the estimated parameter λ.

On the other hand, the LSE from exact solution does not provide good results for observations with a long time horizon, since it is not consistent if $n \to \infty$ and h is fixed. The reason is that the regressor $X_{(i-1)h}$ and the noise term in (11) are correlated. In fact, we can calculate the almost sure limit of $\hat\beta_n$, cf. (9), exactly; it is provided in Theorem 1. Its proof uses the following simple lemma (see [22]) to show the diminishing effect of the initial condition on the limiting behaviour of sample averages. The lemma is later used in the proof of Lemma 3 as well.

Lemma 1.

Consider real-valued sequences and such that

Then .

Theorem 1.

Let $h > 0$ be fixed and define the function f on $(0, \infty)$ by

Then

In particular, .

Proof.

Since the effect of the initial condition vanishes at infinity, the limiting behaviour of the non-stationary solution X is the same as that of the stationary solution Y. Indeed,

The convergence of the first summand to zero follows from the facts that

and Lemma 1. A similar argument guarantees the convergence of the second summand to zero as well. The convergence

can be shown correspondingly. As a result, we obtain the almost sure convergence

The claim follows immediately from the definitions (12) and (13). □
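Since the proof identifies $f(\lambda)$ as the limit of the ratio of the sample averages of $X_{(i-1)h}X_{ih}$ and $X_{(i-1)h}^2$, f is the lag-h autocorrelation $E[Y_0Y_h]/E[Y_0^2]$ of the stationary fOU. For numerical purposes, the double integral given by the isometry (3) can be reduced to a single one. The following reduction is our own sketch (substitute $s = -u$, $r = h - v$ and integrate along $w = r - s$), written with $\alpha_H = H(2H-1)$ and $a = 2H - 1 \in (0, 1)$; it is not quoted from (13), although it must agree with it.

$$E[Y_0Y_h] = \sigma^2\alpha_H\int_{-\infty}^{0}\int_{-\infty}^{h} e^{\lambda u}\,e^{-\lambda(h-v)}\,|u-v|^{2H-2}\,dv\,du = \frac{\sigma^2\alpha_H}{2\lambda}\int_{\mathbb{R}} e^{-\lambda|w|}\,|w-h|^{a-1}\,dw,$$

$$\int_{\mathbb{R}} e^{-\lambda|w|}\,|w-h|^{a-1}\,dw = \int_0^\infty e^{-\lambda v}(h+v)^{a-1}\,dv + e^{-\lambda h}\int_0^h e^{\lambda y}\,y^{a-1}\,dy + e^{-\lambda h}\,\Gamma(a)\,\lambda^{-a}.$$

For $h = 0$ the right-hand side equals $2\Gamma(a)\lambda^{-a}$, which gives $E[Y_0^2] = \sigma^2 H\,\Gamma(2H)\,\lambda^{-2H}$ in agreement with (4), and hence

$$f(\lambda) = \frac{1}{2\,\Gamma(2H-1)\,\lambda^{1-2H}}\int_{\mathbb{R}} e^{-\lambda|w|}\,|w-h|^{2H-2}\,dw.$$

In this form σ cancels, consistent with the fact that the least-squares-type estimators do not require knowledge of σ.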

4. Asymptotic Least-Squares Estimator

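Our goal in this section is to modify the LSE from exact solution so that it converges to λ when $n \to \infty$ and h is fixed. Combining (12) and (14) shows that the statistic $\hat\beta_n$ in (12) converges almost surely to $f(\lambda)$ rather than to $e^{-\lambda h}$. Thus, we can define the asymptotic least-squares estimator as the value at which f attains $\hat\beta_n$. Since f is one-to-one (see below), the explicit formula for the asymptotic LSE reads $f^{-1}(\hat\beta_n)$.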

The following lemma justifies invertibility of f in the definition of .

Lemma 2.

In our setting, the function f defined by (13) is strictly decreasing on $(0, \infty)$.

Proof.

Calculate the derivative

Continue with

Plug this estimate into the formula for to see

□

Remark 2.

Note that f is monotone also for , but it is not monotone if , which rules out the possibility of using the asymptotic LSE in this singular case.

Theorem 2.

The asymptotic least-squares estimator is strongly consistent, i.e.,

Proof.

Further recall that f is differentiable with a strictly negative derivative (see (17)). Thus, $f^{-1}$ is also differentiable with a strictly negative derivative, and this implies

□

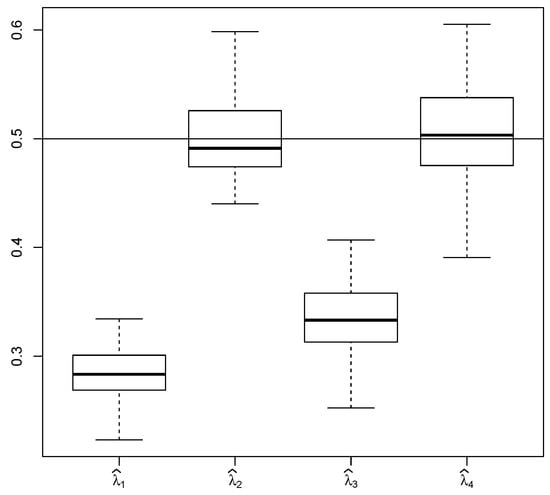

The strongly consistent asymptotic LSE works well for stationary solutions or observations with a long time horizon (see Figure 3). Moreover, it does not require explicit knowledge of σ (in contrast to the discrete ergodic estimator, which is also strongly consistent). On the other hand, it does not provide adequate results for non-stationary solutions with a short time horizon, since the correction function f reflects the stationary behavior of the process (see Figure 4).
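Numerically, the asymptotic LSE requires evaluating f and inverting it. The Python sketch below (standard library only; all function names are hypothetical) assumes $H \in (1/2, 1)$ and evaluates $f(\lambda) = E[Y_0Y_h]/E[Y_0^2]$ through the one-dimensional representation $f(\lambda) = \int_{\mathbb{R}} e^{-\lambda|w|}|w-h|^{2H-2}\,dw \,/\, \big(2\Gamma(2H-1)\lambda^{1-2H}\big)$, which we derived from (3) rather than taking it from (13). It then solves $f(\lambda) = \hat\beta_n$ by bisection, relying on the strict monotonicity of f (Lemma 2). The quadrature (Simpson's rule on dyadic pieces plus a power series) is tuned for moderate values of λh only, and returning an endpoint when $\hat\beta_n$ falls outside the range of f is our own choice, not part of the estimator's definition.

```python
import math


def _simpson(fun, lo, hi, n=64):
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    step = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * step)
    return total * step / 3


def f_corr(lam, h, H):
    """Lag-h autocorrelation E[Y_0 Y_h] / E[Y_0^2] of the stationary fOU, H in (1/2, 1)."""
    a = 2 * H - 1
    tail = math.gamma(a) * lam ** (-a)        # int_0^inf e^{-lam y} y^(a-1) dy
    g = lambda v: math.exp(-lam * v) * (h + v) ** (a - 1)
    left, lo = _simpson(g, 0.0, h), h         # int_0^inf e^{-lam v} (h+v)^(a-1) dv, split into
    while lo < 50.0 / lam:                    # [0, h] and dyadic pieces [lo, 2 lo]
        left += _simpson(g, lo, 2 * lo)
        lo *= 2
    x, c, k, series = lam * h, 1.0, 0, 0.0    # series sum_k x^k / (k! (a + k)), c = x^k / k!
    while True:
        term = c / (a + k)
        series += term
        if k > x and term < 1e-17 * series:
            break
        k += 1
        c *= x / k
    middle = math.exp(-x) * h ** a * series   # e^{-lam h} int_0^h e^{lam y} y^(a-1) dy
    return (left + middle + math.exp(-x) * tail) / (2 * tail)


def asymptotic_lse(xs, h, H, lam_lo=1e-3, lam_hi=50.0, tol=1e-10):
    """Solve f(lam) = beta_hat by bisection on [lam_lo, lam_hi]; f is strictly decreasing."""
    num = sum(p * q for p, q in zip(xs[:-1], xs[1:]))
    den = sum(p * p for p in xs[:-1])
    beta = num / den                          # slope (9) of X_ih on X_(i-1)h
    lo, hi = lam_lo, lam_hi
    if beta >= f_corr(lo, h, H):              # beta_hat outside the range of f on the interval:
        return lo                             # return the nearest endpoint (our choice)
    if beta <= f_corr(hi, h, H):
        return hi
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if f_corr(mid, h, H) > beta:          # f decreasing, so the root lies to the right
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For example, a sequence whose $\hat\beta_n$ equals $f(0.8)$ (with h = 0.5 and H = 0.7) yields a value close to 0.8, whereas the LSE from exact solution applied to the same sequence would return $-\ln f(0.8)/0.5$, the biased limit described in Theorem 1.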

Figure 3.

Comparison of four drift estimators for 100 trajectories; the horizontal line shows the true value of the estimated parameter λ.

Figure 4.

Comparison of four drift estimators for 100 trajectories; the horizontal line shows the true value of the estimated parameter λ.

5. Conditional Least-Squares Estimator

Non-stationary trajectories with a long time horizon contain a lot of information about λ, which is encoded mainly in two aspects: the speed of decay in the initial non-stationary phase and the variance in the stationary phase (see Figure 1). However, none of the four estimators considered so far can utilize all this information effectively. This motivates us to introduce another estimator. Recall that the LSE from exact solution fails to be consistent because of the bias in the LSE caused by the correlation between the regressor $X_{(i-1)h}$ and the noise term in Equation (11). To eliminate the correlation between the explanatory variable and the noise term in the linear model, we switch to conditional expectations. We start from the following equation, which defines the new noise term:

where λ is the true value of the unknown drift parameter and $E_\vartheta$ denotes the (conditional) expectation with respect to the measure generated by the fOU with drift value ϑ and initial condition $x_0$ (ϑ stands for a generic value of the unknown drift throughout this section). In other words, $E_\vartheta[X_{ih} \mid X_{(i-1)h}]$ means the conditional expectation of $X_{ih}$ given $X_{(i-1)h}$, where the process X is given by (2) with drift ϑ. Hence, λ has the same meaning as in previous sections.

Obviously, the new noise term has zero conditional mean given $X_{(i-1)h}$ and, consequently, $X_{(i-1)h}$ and the new noise term are uncorrelated. Indeed,

As a result, we apply the least-squares technique to Equation (18), where the drift value ϑ in the conditional expectation is to be estimated, i.e., we would like to minimize

To calculate the conditional expectation $E_\vartheta[X_{ih} \mid X_{(i-1)h}]$ explicitly, we use (11) and obtain

Note that the corresponding random vector has a two-dimensional normal distribution (dependent on the parameter ϑ)

and we can use the explicit expression for its conditional expectation to write

Using the exact formula for X given by (2) and the isometry (3), we get

where we used the change-of-variable formula in the last step. Analogously,

Using the expressions obtained above in (20), we obtain

We can thus reformulate Equation (18) for the observed process X as the following model (linear in $X_{(i-1)h}$, but non-linear in ϑ):

Now we aim to apply the least-squares method to the reformulated model to obtain the conditional least-squares estimator. To ensure the existence of a global minimum, we choose a closed interval $I \subset (0, \infty)$ and define the conditional LSE as the minimizer of the sum-of-squares function on this interval:

with the criterion function defined as

where we used (24) with .

Note that the criterion function is continuous in ϑ, and therefore a minimum on the compact interval exists. Although model (24) is linear in $X_{(i-1)h}$, the coefficients A and B depend on t and ϑ, which complicates the numerical minimization of the criterion function.
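One possible numerical treatment of (25) and (26) is sketched below in Python (standard library only). It is our own construction rather than the authors' implementation, and all names are hypothetical. The coefficients are obtained from bivariate Gaussian conditioning, $E_\vartheta[X_t \mid X_{t-h}] = A X_{t-h} + B$ with $A = \mathrm{Cov}_\vartheta(X_{t-h}, X_t)/\mathrm{Var}_\vartheta(X_{t-h})$ and $B = x_0\big(e^{-\vartheta t} - A e^{-\vartheta(t-h)}\big)$, so that σ and $H(2H-1)$ cancel. The covariances follow from (2) and (3) and are reduced to one-dimensional integrals evaluated by Simpson's rule, and the minimization over the interval uses a coarse grid followed by golden-section search, which assumes the criterion is unimodal near the best grid point.

```python
import math


def _simpson(fun, lo, hi, n=100):
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    step = (hi - lo) / n
    total = fun(lo) + fun(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * fun(lo + k * step)
    return total * step / 3


def cov_kernel(t1, t2, theta, H):
    """K = int_0^t1 int_0^t2 e^{-theta(t1-u)} e^{-theta(t2-v)} |u-v|^(2H-2) dv du, 0 <= t1 <= t2.

    By (2) and (3), Cov(X_t1, X_t2) = sigma^2 H (2H-1) K under drift theta. The double
    integral is reduced to one dimension via s = t1 - u, r = t2 - v, w = r - s.
    """
    a, d = 2 * H - 1, t2 - t1

    def phi(w):  # closed-form inner integral over s in [max(0, -w), min(t1, t2 - w)]
        lo, hi = max(0.0, -w), min(t1, t2 - w)
        return math.exp(-theta * w) * (math.exp(-2 * theta * lo) - math.exp(-2 * theta * hi)) / (2 * theta)

    def piece(p, q):  # int_p^q phi(w) |w - d|^(a-1) dw, with d outside (p, q)
        sign = 1.0 if p >= d else -1.0  # the substitution z = |w - d|^a removes the singularity
        za, zb = sorted((abs(p - d) ** a, abs(q - d) ** a))
        return _simpson(lambda z: phi(d + sign * z ** (1 / a)), za, zb) / a

    cuts = sorted({-t1, 0.0, d, t2})  # kinks of phi and the singular point w = d
    return sum(piece(p, q) for p, q in zip(cuts, cuts[1:]))


def cond_coeffs(theta, t, h, H, x0):
    """A, B with E_theta[X_t | X_{t-h}] = A * X_{t-h} + B (bivariate Gaussian conditioning)."""
    if t - h <= 0.0:  # X_0 = x0 is deterministic, so only the mean x0 e^{-theta t} remains
        return 0.0, x0 * math.exp(-theta * t)
    A = cov_kernel(t - h, t, theta, H) / cov_kernel(t - h, t - h, theta, H)
    B = x0 * (math.exp(-theta * t) - A * math.exp(-theta * (t - h)))
    return A, B


def criterion(theta, xs, h, H):
    """Sum of squared residuals X_ih - E_theta[X_ih | X_(i-1)h]; xs = [x0, X_h, ..., X_nh]."""
    total = 0.0
    for i in range(1, len(xs)):
        A, B = cond_coeffs(theta, i * h, h, H, xs[0])
        total += (xs[i] - A * xs[i - 1] - B) ** 2
    return total


def conditional_lse(xs, h, H, lo, hi, grid=21, tol=1e-7):
    """Minimize the criterion on [lo, hi]: coarse grid search, then golden-section refinement."""
    crit = lambda th: criterion(th, xs, h, H)
    thetas = [lo + (hi - lo) * j / (grid - 1) for j in range(grid)]
    k = min(range(grid), key=lambda j: crit(thetas[j]))
    a, b = thetas[max(k - 1, 0)], thetas[min(k + 1, grid - 1)]
    g = (math.sqrt(5) - 1) / 2
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = crit(c), crit(d)
    while b - a > tol:
        if fc < fd:  # the minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = crit(c)
        else:  # the minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = crit(d)
    return 0.5 * (a + b)


if __name__ == "__main__":
    path = [5.0 * math.exp(-0.8 * 0.5 * i) for i in range(8)]  # noise-free mean path, drift 0.8
    print(conditional_lse(path, h=0.5, H=0.7, lo=0.1, hi=3.0))
```

For a noise-free mean path $x_0e^{-\lambda ih}$ every residual vanishes at ϑ = λ, which makes a convenient sanity check (the main block should print a value close to 0.8). With real data, the bounds `lo` and `hi` play the role of the compact interval in (25), and each criterion evaluation costs time linear in the number of observations.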

Remark 3.

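Let Y be the stationary solution to (1) with drift value ϑ. Then

$$E_\vartheta\left[Y_{ih} \mid Y_{(i-1)h}\right] = f(\vartheta)\,Y_{(i-1)h},$$

where f is defined in (13) and $i \in \mathbb{N}$ is arbitrary. Since the coefficient $f(\vartheta)$ does not depend on t, it is possible to calculate the LSE of $f(\vartheta)$ explicitly and to construct the estimator of λ by applying $f^{-1}$. Such an estimator coincides with the asymptotic LSE introduced in the previous section. Thus, the asymptotic LSE can be understood as a special case of the conditional LSE for the stationary solution.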

In order to prove the strong consistency of the conditional LSE, we need to verify the uniform convergence of the criterion function (suitably normalized) to a function specified below. Let us start with the following proposition on uniform convergence. This proposition will help us in the sequel to investigate the limiting behaviour of the two terms in the sum-of-squares function.

Proposition 1.

and

Choose any and recall that is fixed. The uniform convergences in (27) and (28) imply the following convergences uniformly in :

and

respectively. Indeed, set and and fix any . There is such that for any ,

If , then for any . Consequently

which proves (29). The convergence in (30) can be shown analogously. These uniform convergences will be helpful in the proof of the following lemma, which provides the uniform convergence of the (normalized) criterion function to a limiting function. This uniform convergence is the key ingredient for the convergence of the minimizers.

Lemma 3.

Let f be defined by (13) and let the criterion function be defined by (26), where X is the observed process with drift value λ. Denote

Then

Proof.

First consider the stationary solution to (1) corresponding to drift value . Comparison of (6) with (13) yields

It enables us to write

for any , and, consequently

Recall that the stationary fOU is ergodic and its autocorrelation function vanishes at infinity. Using Lemma 1 in the same way as in the proof of Theorem 1 implies

For the second term, write

Lemma 1, the convergence in (29) and the continuity of f ensure that both summands converge to zero with probability one as $n \to \infty$.

The uniform convergence of the third term can be shown analogously:

where we use

which follows directly from (29) and the continuity of f.

Lemma 1 concludes the proof:

□

The previous considerations lead to the convergence of the conditional LSE, being the minimizers of the criterion function, to the minimizer of the limiting function. The next lemma ensures that this minimizer coincides with the true drift value λ.

Lemma 4.

Proof.

By definition

The claim follows immediately, because f is one-to-one (it is strictly decreasing).

Continuity of is a direct consequence of the continuity of f. □

Now we are in a position to prove the strong consistency of the conditional LSE.

Theorem 3.

Proof.

The proof follows standard argumentation from nonlinear regression and utilizes Lemma 3 and Lemma 4. Choose sufficiently small so that and set

Consider a set of full measure on which the uniform convergence (32) holds and take such that

Fix any . Then for arbitrary we get

As minimizes , for all we have

Since was arbitrary (if small enough), we obtain the convergence

on a set of full measure. □

6. Results and Discussion

In Table 1, we present a comparison of the root mean square errors (RMSE) of all considered estimators for a fixed value of λ and several combinations of the initial condition, T and H. The discretized LSE and the LSE from exact solution demonstrate good performance in scenarios with a far-from-zero initial condition and a short time horizon. This illustrates the fact that these estimators mainly reflect the speed of convergence to zero of the observed process in its initial phase. Increasing the time horizon adds a stationary phase to the observed trajectories, which distorts these two estimators.

Table 1.

Root mean square errors of the studied estimators calculated from 100 numerical simulations.

The discrete ergodic estimator and the asymptotic LSE perform well in settings with a stationary-like initial condition and a long time horizon. This is because they are constructed from the stationary behavior of the process. Taking a far-from-zero initial condition ruins these estimators, unless the trajectory is very long.

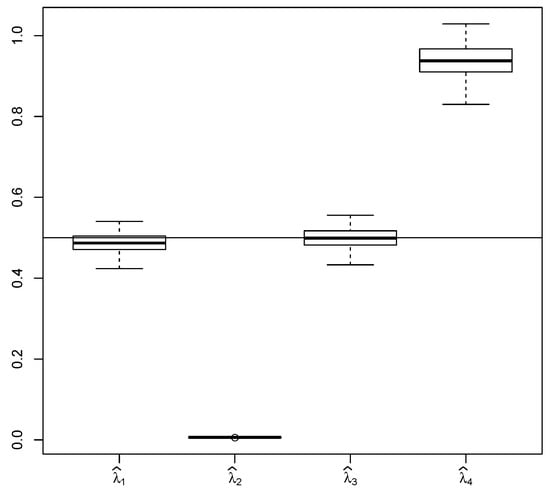

The conditional LSE shows reasonable performance in all studied scenarios, and it significantly outperforms the other estimators in the scenario with a far-from-stationary initial condition and a long time horizon. This results from the unique ability of this estimator to reflect and utilize information about the drift from both the non-stationary (decreasing) phase and the stationary (oscillating) phase. This is also illustrated in Figure 5. On the other hand, the evaluation of the conditional LSE is the most numerically demanding of all the studied estimators.

Figure 5.

Comparison of all five studied estimators for 100 trajectories; the horizontal line shows the true value of the estimated parameter λ.

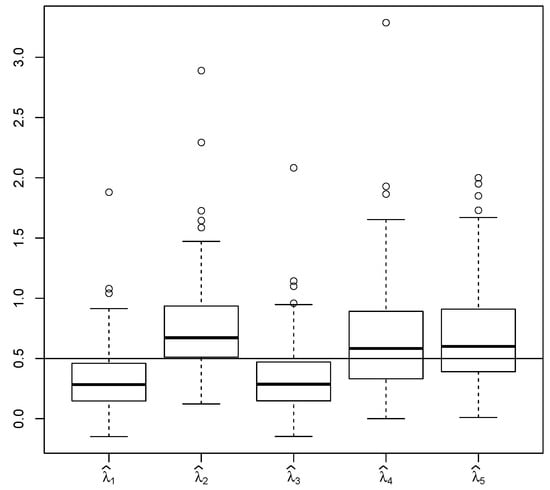

In one of the scenarios in Table 1, the conditional LSE shows a greater RMSE than two of the other estimators, due to the true value of λ being relatively close to zero. As a consequence, these two estimators have a smaller variance (although a greater bias) than the conditional LSE (see Figure 6). To illustrate this effect, we have calculated the RMSE for simulations in the same scenario but with a different value of λ (see Table 2). The conditional LSE provides a smaller RMSE than the other estimators in this setting.

Figure 6.

Comparison of all five studied estimators for 100 trajectories; the horizontal line shows the true value of the estimated parameter λ.

Table 2.

Root mean square errors of the studied estimators calculated from 100 numerical simulations.

7. Conclusions

Three new estimators were defined and studied:

- The least-squares estimator from exact solution, which improves the popular discretized LSE by eliminating the discretization error. It is easy to implement, since it can be calculated by a closed formula. However, it fails to be consistent in the long-span regime.

- The asymptotic least-squares estimator, which is a modification of the LSE from exact solution with respect to its asymptotic behavior. As a result, it is strongly consistent in the long-span regime and behaves similarly to the well-established discrete ergodic estimator. Its advantage is that it does not require a priori knowledge of the volatility σ. On the other hand, its implementation includes a numerical root-finding procedure.

- The conditional least-squares estimator, which eliminates the bias in the least-squares procedure by considering the conditional expectation of the response as the explanatory variable. The possibility of expressing the conditional expectation explicitly makes this approach feasible. This conditioning idea (which is, to the best of our knowledge, new in the context of models with fractional noise) provides an exceptionally reliable estimator, which outperformed the other studied estimators in most of the simulated scenarios. We proved the strong consistency of this estimator in the long-span regime. Its implementation requires solving an optimization problem.

These new estimating procedures can help practitioners or scientists from various fields to improve the calibration of their models based on available data with autocorrelated noise (these are typically observed/measured in discrete time instants) and, consequently, obtain more reliable conclusions from the calibrated models.

An interesting future extension would be to explore the potential of the conditioning idea within the least-squares procedure in more general models and settings (including the d-dimensional fOU, fOU with $H < 1/2$, non-linear drift, multiplicative noise, etc.).

Author Contributions

Conceptualization, P.K.; methodology, P.K.; software, L.S.; writing—original draft, P.K. and L.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the grant LTAIN19007 Development of Advanced Computational Algorithms for Evaluating Post-surgery Rehabilitation.

Acknowledgments

We are grateful to four anonymous reviewers for their valuable comments, which helped improve this paper significantly.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mishura, Y. Stochastic Calculus for Fractional Brownian Motion and Related Processes; Springer: Berlin/Heidelberg, Germany, 2008.

- Biagini, F.; Hu, Y.; Øksendal, B.; Zhang, T. Stochastic Calculus for Fractional Brownian Motion and Applications; Springer: London, UK, 2008.

- Abundo, M.; Pirozzi, E. On the Integral of the Fractional Brownian Motion and Some Pseudo-Fractional Gaussian Processes. Mathematics 2019, 7, 991.

- Ascione, G.; Mishura, Y.; Pirozzi, E. Fractional Ornstein–Uhlenbeck Process with Stochastic Forcing, and its Applications. Methodol. Comput. Appl. Probab. 2019.

- Hu, Y.; Nualart, D. Parameter estimation for fractional Ornstein–Uhlenbeck processes. Stat. Probab. Lett. 2010, 80, 1030–1038.

- Hu, Y.; Nualart, D.; Zhou, H. Parameter estimation for fractional Ornstein–Uhlenbeck processes of general Hurst parameter. Stat. Inference Stoch. Process. 2019, 22, 111–142.

- Es-Sebaiy, K. Berry-Esseen bounds for the least squares estimator for discretely observed fractional Ornstein–Uhlenbeck processes. Stat. Probab. Lett. 2013, 83, 2372–2385.

- Kubilius, K.; Mishura, Y.; Ralchenko, K.; Seleznjev, O. Consistency of the drift parameter estimator for the discretized fractional Ornstein–Uhlenbeck process with Hurst index H ∈ (0,1/2). Electron. J. Stat. 2015, 9, 1799–1825.

- Hu, Y.; Song, J. Parameter estimation for fractional Ornstein–Uhlenbeck processes with discrete observations. In Malliavin Calculus and Stochastic Analysis; Springer: Boston, MA, USA, 2013; Volume 34, pp. 427–442.

- Es-Sebaiy, K.; Viens, F. Optimal rates for parameter estimation of stationary Gaussian processes. Stoch. Process. Their Appl. 2019, 129, 3018–3054.

- Azmoodeh, E.; Viitasaari, L. Parameter estimation based on discrete observations of fractional Ornstein–Uhlenbeck process of the second kind. Stat. Inference Stoch. Process. 2015, 18, 205–227.

- Neuenkirch, A.; Tindel, S. A least square-type procedure for parameter estimation in stochastic differential equations with additive fractional noise. Stat. Inference Stoch. Process. 2014, 17, 99–120.

- Xiao, W.; Zhang, W.; Xu, W. Parameter estimation for fractional Ornstein–Uhlenbeck processes at discrete observation. Appl. Math. Model. 2011, 35, 4196–4207.

- Istas, J.; Lang, G. Quadratic variations and estimation of the local Hölder index of a Gaussian process. Annales de l’I.H.P. Probabilités et Statistiques 1997, 33, 407–436.

- Coeurjolly, J. Hurst exponent estimation of locally self-similar Gaussian processes using sample quantiles. Ann. Stat. 2008, 36, 1404–1434.

- Rosenbaum, M. Estimation of the volatility persistence in a discretely observed diffusion model. Stoch. Process. Their Appl. 2008, 118, 1434–1462.

- Berzin, C.; Latour, A.; León, J. Inference on the Hurst Parameter and Variance of Diffusions Driven by Fractional Brownian Motion; Springer International Publishing: Cham, Switzerland, 2014.

- Brouste, A.; Iacus, S. Parameter estimation for the discretely observed fractional Ornstein–Uhlenbeck process and the Yuima R package. Comput. Stat. 2013, 28, 1529–1547.

- Brouste, A.; Fukasawa, M.; Hino, H.; Iacus, S.; Kamatani, K.; Koike, Y.; Masuda, H.; Nomura, R.; Ogihara, T.; Shimizu, Y.; et al. The YUIMA Project: A Computational Framework for Simulation and Inference of Stochastic Differential Equations. J. Stat. Softw. 2014, 4, 1–51.

- Kubilius, K.; Mishura, Y.; Ralchenko, K. Parameter Estimation in Fractional Diffusion Models; Springer International Publishing AG: Cham, Switzerland, 2017.

- Pipiras, V.; Taqqu, M. Integration questions related to fractional Brownian motion. Probab. Theory Relat. Fields 2000, 118, 251–291.

- Kříž, P.; Maslowski, B. Central limit theorems and minimum-contrast estimators for linear stochastic evolution equations. Stochastics 2019, 91, 1109–1140.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).