2. Maximum Likelihood Estimation

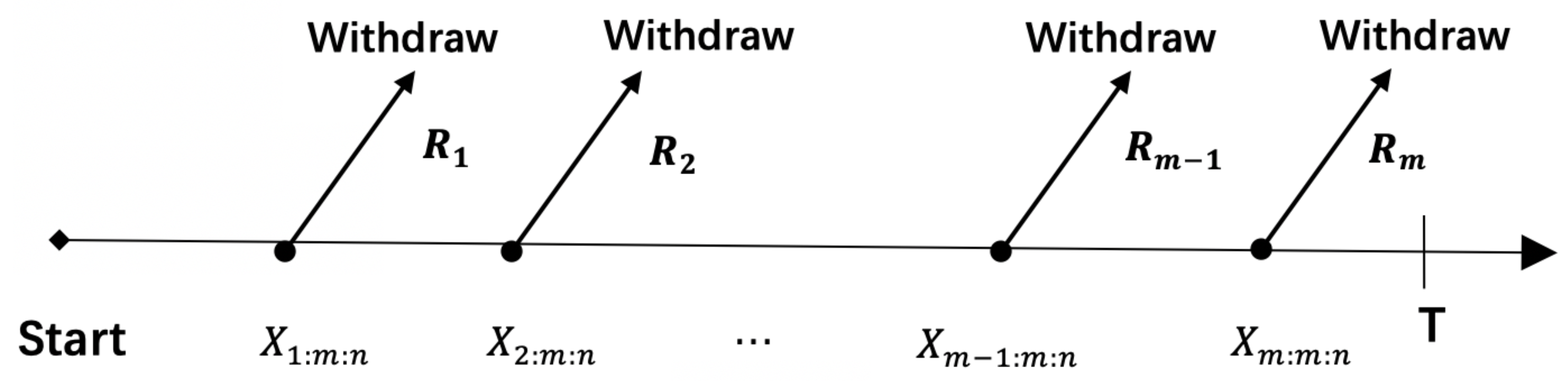

Suppose that

is an adaptive progressive type-II censored sample of size

m from a sample of size

n with censoring scheme

taken from distribution having

as the pdf and

as the cdf, and

is the last observed failure before

T, which is the prefixed ideal total test time. The notation

(simplified as

in later equations) is used to represent the observed values of such an adaptive type-II progressively censored sample. On this basis, the corresponding likelihood function is given by

where

and

.

Therefore, the likelihood function for

taken from the Chen

is correspondingly written as

Further, the log-likelihood function can then be written as

Taking the partial derivatives of the log-likelihood function with respect to the two parameters gives the likelihood equations:

From (

8), the maximum likelihood estimate of

can be expressed as a function of

Thus, given

, the corresponding

with (

9) can be obtained. However, (7) and (9) have no closed-form solutions. Therefore, numerical methods such as Newton’s method are used to compute the estimates.
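The profile idea described above can be sketched numerically. The block below is a minimal illustration, not the authors' implementation: it assumes the usual Chen parametrization with cdf F(x) = 1 - exp{λ(1 - e^{x^β})}, the standard adaptive progressive type-II likelihood, and the hypothetical names `removal_weights`, `chen_loglik`, `lam_given_beta` and `fit_chen_mle`. Instead of Newton's method it uses a golden-section search on the profile log-likelihood in β, which needs no derivatives; the search bounds are assumptions and should be adapted to the data.

```python
import math


def removal_weights(R, J, n, m):
    """Weight of each observed failure in the survival part of the likelihood.

    Failure i (0-based) carries 1 + R[i] when units were withdrawn at it (i < J);
    the last failure also absorbs all units still on test at the end.
    """
    w = [1.0 + (R[i] if i < J else 0.0) for i in range(m)]
    w[-1] += n - m - sum(R[:J])
    return w


def chen_loglik(beta, lam, x, w):
    """Log-likelihood (constant term dropped) under the assumed Chen cdf."""
    m = len(x)
    xb = [xi ** beta for xi in x]
    return (m * math.log(lam * beta)
            + (beta - 1.0) * sum(math.log(xi) for xi in x)
            + sum(xb)
            - lam * sum(wi * math.expm1(v) for wi, v in zip(w, xb)))


def lam_given_beta(beta, x, w):
    """Closed-form maximizer of the log-likelihood in lambda for fixed beta."""
    return len(x) / sum(wi * math.expm1(xi ** beta) for wi, xi in zip(w, x))


def fit_chen_mle(x, R, J, n, lo=0.05, hi=3.0, tol=1e-8):
    """Golden-section search on the profile log-likelihood of beta over [lo, hi]."""
    w = removal_weights(R, J, n, len(x))

    def profile(b):
        return chen_loglik(b, lam_given_beta(b, x, w), x, w)

    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = profile(c), profile(d)
    while b - a > tol:
        if fc > fd:  # the maximum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = profile(c)
        else:        # the maximum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = profile(d)
    beta_hat = 0.5 * (a + b)
    return beta_hat, lam_given_beta(beta_hat, x, w)
```

Here `x` holds the m ordered failure times, `R` the planned censoring scheme, `J` the number of failures observed before T, and `n` the total number of units. The search assumes the profile log-likelihood has a single maximum inside the chosen bounds.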

Next, asymptotic confidence intervals are discussed. When the sample size n is large enough, the Fisher information matrix can be used to obtain approximate confidence intervals for the two unknown parameters. The Fisher information matrix is derived from the log-likelihood function. Pivotal quantities involving the unknown parameters, which are asymptotically normally distributed, are then constructed, and the asymptotic confidence intervals follow from them. Their performance is assessed through Monte Carlo simulations in Section 4.

The Fisher information matrix

is written as

and from the log-likelihood function (

6), we have the second partial of

with respect to

and

which are given in Appendix A.

The exact expression of the above expectation is difficult to obtain. Therefore, the observed Fisher information matrix, which omits the expectation, is used instead. Let

represent the maximum likelihood estimate of

; then the observed Fisher information matrix

is given as follows:

Correspondingly, an approximated covariance matrix of

is given as follows:

The asymptotic normality of the maximum likelihood estimator (MLE) implies that

follows a bivariate normal distribution with mean vector

and covariance matrix

(

12) approximately. Thus,

approximate confidence intervals for the two parameters

and

can be written respectively, as

where

is the percentile of the standard normal distribution

with right-tail probability

.
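The construction above (observed information, its inverse as covariance matrix, then normal-quantile intervals) can be sketched as follows. This is an illustration under stated assumptions: the second derivatives are approximated by central finite differences rather than the analytic expressions of Appendix A, and the names `observed_information` and `wald_intervals`, the step size `h`, and the argument order are hypothetical.

```python
from statistics import NormalDist


def observed_information(loglik, a, b, h=1e-4):
    """Negative Hessian of loglik(a, b), approximated by central differences."""
    f = loglik
    d_aa = (f(a + h, b) - 2.0 * f(a, b) + f(a - h, b)) / h ** 2
    d_bb = (f(a, b + h) - 2.0 * f(a, b) + f(a, b - h)) / h ** 2
    d_ab = (f(a + h, b + h) - f(a + h, b - h)
            - f(a - h, b + h) + f(a - h, b - h)) / (4.0 * h ** 2)
    return [[-d_aa, -d_ab], [-d_ab, -d_bb]]


def wald_intervals(loglik, a_hat, b_hat, level=0.95, h=1e-4):
    """Approximate two-sided intervals for both parameters at the MLE."""
    (i11, i12), (_, i22) = observed_information(loglik, a_hat, b_hat, h)
    det = i11 * i22 - i12 * i12
    if det <= 0.0 or i11 <= 0.0:
        raise ValueError("observed information is not positive definite")
    # Diagonal of the inverse of a symmetric 2x2 matrix.
    var_a, var_b = i22 / det, i11 / det
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    half_a, half_b = z * var_a ** 0.5, z * var_b ** 0.5
    return (a_hat - half_a, a_hat + half_a), (b_hat - half_b, b_hat + half_b)
```

In practice `loglik` would be the log-likelihood of the censored sample evaluated at the two parameters, and `a_hat`, `b_hat` the maximum likelihood estimates. Because the interval is symmetric around the estimate, its lower bound can fall below zero for a positive parameter when the sample is small.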

3. Bayes Estimation

Unlike maximum likelihood estimation, Bayes estimation combines prior information about the unknown parameters with the information carried by the sample through the likelihood. It therefore uses both the observed data and the prior distribution, which can make inference on the parameters of interest more reasonable. When no prior information is available, non-informative priors are considered.

First, it is supposed here that the unknown parameters

and

are independent and follow the gamma prior distributions

Then, the joint prior distribution for

and

becomes

By Bayes' theorem, we then obtain the joint posterior probability distribution

as:

and

where

,

.

Thus, the marginal posterior probability distributions of

and

can be expressed, respectively, as

In general, the squared error loss function

of the unknown parameter vector

is as follows:

where

represents the estimator of

.

Then we consider the Bayes estimators of

and

under the squared error loss function and their corresponding minimum Bayes risks. It can be shown that these are the expectations and variances of the marginal posterior distributions (17) and (18), respectively. We can therefore obtain the Bayes estimates of the unknown parameters

and

and the corresponding minimum Bayes risks by using the marginal posterior distribution function to generate the first and second moments of

and

. Using

and

(

) to represent the

r-th central moments of

and

, we have the following equations

Thus, the Bayes point estimators and the corresponding minimum Bayes risks (MBR) of

and

are

and

Furthermore, since the marginal posterior probability distributions of the two parameters have been obtained, the Bayesian two-sided credible intervals can also be derived.

By solving the following integral equations for

and

, the

Bayesian two-sided credible interval of

, say

, can be obtained.

Similarly for

, we can construct a credible interval, say

, using integral equations below:

The Bayes estimation formulas above do not have closed forms in general, so we use approximation techniques and numerical methods to compute the Bayes estimates. The Lindley approximation and Gibbs sampling are described in the following sections.

3.1. Lindley Approximation

Lindley [

11] introduced a method to approximate the ratio of integrals in the following forms:

where

represents a vector of unknown parameters,

is the log-likelihood function and

,

are two arbitrary functions of

. Here, set

.

is a function of

whose expectation is of interest and

is the prior probability distribution of

.

Therefore, the posterior expectation of

is

where

.

Then the ratio of integrals (27) has the following linear approximation:

where

,

,

,

and

is the

-th element of the Fisher information matrix.

In this case,

and

is the

-th element of the matrix (

10). The Lindley approximation to Bayes estimators of

and

(i.e., (

20), (

21)) under the squared error loss function are

where

and

are maximum likelihood estimates of

and

. From (

6), other expressions in (

29) and (

30) can be calculated; the details are given in Appendix B.

3.2. Gibbs Sampling

In statistics, Gibbs sampling is a Markov chain Monte Carlo (MCMC) algorithm for obtaining a sequence of approximate draws from a multivariate probability distribution when direct sampling is difficult. In this section, Gibbs sampling is used to compute the expectations (20) and (21) and the two-sided credible intervals (22)–(25). Although it is difficult to generate samples directly from the posterior distribution, combining the Metropolis-Hastings algorithm [12] with Gibbs sampling makes sampling straightforward, and two-sided credible intervals can be obtained from the same draws. Several Bayesian estimation methods for the two parameters of the Chen distribution have been discussed in the literature, for example in [6], but the Gibbs (M-H) sampling method has not yet been applied. Therefore, in this section, we compute Bayes estimates through Gibbs sampling.

We can simplify the posterior distribution of parameters

to

as the Gibbs sampling density function, because the asymptotic estimate of

through the Gibbs (M-H) sampling method can be obtained without calculating the constants.

and

represent the conditional density functions of the parameters, respectively, as follows:

Then we draw a sequence of values

and finally obtain the Bayesian point estimates and

two-sided credible intervals from the resulting draws. The main idea of Gibbs sampling is that, instead of sampling

jointly, we update one parameter at a time from its conditional distribution, which significantly simplifies the process. The conditional density functions of

and

given in (32) and (33) cannot be reduced analytically to standard distributions that can be sampled directly. Therefore, the Metropolis-Hastings algorithm with a normal proposal distribution is used to generate

and

. The Gibbs (M-H) sampling procedure is summarized in Algorithm 1.

Thus, a series of Gibbs (M-H) samplings of

,

is obtained through the above process. The posterior expectation of

can then be approximated as

where

is the burn-in length of the Gibbs sampling process, a constant fixed in advance, after which the draws can be assumed to come from the posterior distribution (16).

Under the squared error loss function, the Bayesian point estimates of the parameters are obtained with the procedure above.

The two-sided credible intervals of the parameters

are generated in the following steps. First, sort the sampling sequence

. Denote

=

-

. Then we have two groups of ordered samples:

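Therefore, the Bayesian two-sided credible interval of one parameter is formed by the lower and upper order statistics of its ordered sample that correspond to the chosen credibility level. The Bayesian two-sided credible interval of the other parameter is constructed in the same way from its own ordered sample.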
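The point estimate and the order-statistic interval described above can be sketched for a single parameter as follows. The function name `posterior_summary` and the rounding rule for the ranks are assumptions made for illustration; other rank conventions differ by at most one position.

```python
def posterior_summary(draws, burn_in, level=0.95):
    """Posterior mean and equal-tailed credible interval from MCMC draws.

    The first `burn_in` draws are discarded; the interval endpoints are the
    order statistics whose ranks match the lower and upper tail probabilities.
    """
    kept = sorted(draws[burn_in:])
    k = len(kept)
    if k == 0:
        raise ValueError("no draws left after burn-in")
    tail = (1.0 - level) / 2.0
    lo_rank = max(1, round(k * tail))           # 1-based rank of the lower bound
    hi_rank = min(k, round(k * (1.0 - tail)))   # 1-based rank of the upper bound
    mean = sum(kept) / k
    return mean, (kept[lo_rank - 1], kept[hi_rank - 1])
```

Calling the function once per parameter chain gives the Bayes estimate under squared error loss (the posterior mean) together with its two-sided credible interval.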

4. Simulation and Comparisons

In this section, a simulation study is carried out to assess the feasibility of the proposed estimation methods and to compare their performance.

Using the algorithm given in [10,13], adaptive progressive type-II censored data from Chen

are generated in R. Maximum likelihood estimates are computed by solving (7) and (8) in R. The informative hyper-parameters

in (

15) are set to be

and Bayes estimates under Gibbs sampling are obtained using (34) and (35). Bayes point estimates under the Lindley approximation are obtained from (29) and (30). The simulation experiment is repeated

times, and the mean values are taken as the expected values (EV) of the unknown parameters, as given below.

In order to compare the performances of maximum likelihood estimators for different settings of parameters, mean square error (MSE) of the estimated values

is also calculated as,

The asymptotic 95% confidence intervals based on the observed Fisher information matrix are obtained from (13) and (14). The Bayes two-sided 95% credible intervals using Gibbs sampling are generated with Algorithm 1. The simulation experiment is repeated

times, and the corresponding interval coverages and average interval lengths are calculated using R. We further present the average 95% intervals of the parameter setting

to discuss the reason for the difference in interval coverage and average interval length of interval estimation results obtained by the two methods.

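Algorithm 1 Gibbs sampling with the Metropolis-Hastings algorithm.

(1) Based on the observed sample, initialize both parameters with starting values and set the iteration counter to 1.

(2) Set the current values of both parameters to those of the previous iteration.

(3) Generate a candidate for the first parameter from a normal proposal distribution centered at its current value, whose variance is the variance of that parameter.

(4) Calculate the acceptance probability as the minimum of 1 and the ratio of the conditional density at the candidate to the conditional density at the current value. Accept the candidate with this probability; otherwise keep the current value.

(5) Generate another candidate for the second parameter from its normal proposal distribution, calculate its acceptance probability in the same way, and accept the candidate with this probability; otherwise keep the current value.

(6) Record the updated pair of values and increase the iteration counter by 1.

(7) Repeat steps (2) to (6) until the prescribed number of iterations is reached.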
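The steps of Algorithm 1 can be sketched in a few lines. The block is a minimal illustration under assumptions, not the authors' R code: the Chen cdf is taken as F(x) = 1 - exp{λ(1 - e^{x^β})}, the gamma priors are written with hypothetical shape and rate hyper-parameters `a`, `b` (for β) and `c`, `d` (for λ), and `w` denotes the per-failure weights of the censored likelihood (1 plus the number of units withdrawn at that failure). The names `chen_log_posterior` and `metropolis_within_gibbs` are also hypothetical.

```python
import math
import random


def chen_log_posterior(beta, lam, x, w, a, b, c, d):
    """Unnormalized log posterior under the assumed Chen model and gamma priors."""
    if beta <= 0.0 or lam <= 0.0:
        return -math.inf
    try:
        xb = [xi ** beta for xi in x]
        loglik = (len(x) * math.log(lam * beta)
                  + (beta - 1.0) * sum(math.log(xi) for xi in x)
                  + sum(xb)
                  - lam * sum(wi * math.expm1(v) for wi, v in zip(w, xb)))
    except OverflowError:
        return -math.inf  # extreme candidates get zero posterior weight
    logprior = ((a - 1.0) * math.log(beta) - b * beta
                + (c - 1.0) * math.log(lam) - d * lam)
    return loglik + logprior


def metropolis_within_gibbs(log_post, init, prop_sd, n_iter, seed=0):
    """Update two positive parameters in turn with normal random-walk proposals."""
    rng = random.Random(seed)
    beta, lam = init
    chain = []
    for _ in range(n_iter):
        # Update the first parameter given the current second parameter.
        cand = rng.gauss(beta, prop_sd[0])
        if cand > 0.0:  # candidates outside the support are rejected
            log_ratio = log_post(cand, lam) - log_post(beta, lam)
            if rng.random() < math.exp(min(0.0, log_ratio)):
                beta = cand
        # Update the second parameter given the new first parameter.
        cand = rng.gauss(lam, prop_sd[1])
        if cand > 0.0:
            log_ratio = log_post(beta, cand) - log_post(beta, lam)
            if rng.random() < math.exp(min(0.0, log_ratio)):
                lam = cand
        chain.append((beta, lam))
    return chain
```

A typical call passes `lambda be, la: chen_log_posterior(be, la, x, w, a, b, c, d)` as `log_post`. Because only differences of log posterior values enter the acceptance step, the normalizing constant of the posterior is never needed.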

In our study, the two values of the expected total time on test

T are

. We consider three different values of

within which three progressive censoring schemes

R are considered (shown in detail in Table 1). For brevity, we abbreviate the representation of

R. For example, the censoring scheme (0,0,0,0,0,0,0,0,0,0,0,0,0,0,15) is denoted by (0*14,15). Under each censoring scheme, four studied settings of

are

which represent the Chen distribution in different situations.
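For readers who want to reproduce such data, the censoring mechanism can be simulated directly. The sketch below does not follow the algorithm of [10,13]; it is a plain simulation of the life test under assumptions: the Chen cdf is taken as F(x) = 1 - exp{λ(1 - e^{x^β})}, units withdrawn at a failure are chosen at random among the survivors, and the names `chen_quantile` and `simulate_adaptive_pc2` are hypothetical.

```python
import math
import random


def chen_quantile(u, beta, lam):
    """Inverse of the assumed Chen cdf, for u in [0, 1)."""
    return math.log(1.0 - math.log(1.0 - u) / lam) ** (1.0 / beta)


def simulate_adaptive_pc2(n, R, T, beta, lam, seed=None):
    """Simulate one adaptive progressive type-II censored sample.

    Returns the m ordered failure times, the numbers of units actually
    withdrawn at each failure, and J, the number of failures before T.
    """
    m = len(R)
    if sum(R) != n - m:
        raise ValueError("censoring scheme must satisfy sum(R) == n - m")
    rng = random.Random(seed)
    alive = [chen_quantile(rng.random(), beta, lam) for _ in range(n)]
    x, removed = [], []
    for i in range(m):
        t = min(alive)          # next failure among the units still on test
        alive.remove(t)
        x.append(t)
        if i == m - 1:
            k = len(alive)      # all remaining units leave at the m-th failure
        elif t < T:
            k = R[i]            # planned withdrawal while the test is before T
        else:
            k = 0               # no withdrawals once T has been exceeded
        for _ in range(k):
            alive.pop(rng.randrange(len(alive)))
        removed.append(k)
    J = sum(1 for t in x if t < T)
    return x, removed, J
```

With T set to infinity the function reduces to ordinary progressive type-II censoring, and with T equal to 0 all withdrawals are postponed to the last failure.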

From the simulation study, the following conclusions are drawn.

(1) Table 2 and Table 3 compare the maximum likelihood method and the Bayes (Gibbs-MH) method in terms of point estimation. For both methods, with the true parameter values held fixed, the expected values move closer to the true values and the mean square errors decrease as the sample size n increases. The simulation results show a better fit when the expected total time on test is set to

under most censoring schemes. Although the results for the other parameter settings are satisfactory, the estimation for

is poor. When the sample size n and the expected finishing time T are fixed, the first censoring scheme

is more efficient than the others. In most cases, Bayes estimates are slightly better than maximum likelihood estimates, with expected values closer to the true values and smaller mean square errors.

(2) Table 4 and Table 5 present the coverage probability and average length of the two-sided 95% intervals constructed by the maximum likelihood method (using the observed Fisher information matrix) and the Bayes method (using Gibbs (M-H) sampling). For the same parameter setting, both the asymptotic confidence interval based on the observed Fisher information matrix and the two-sided credible interval constructed with Gibbs sampling become more accurate as the sample size n increases. That is, the coverage probability of the 95% asymptotic confidence interval of

and

moves closer to 95%, and the average interval length becomes shorter. Meanwhile, the other factors in the simulation, namely the experiment end time T and the censoring scheme R, have no significant effect on the interval estimation results. For the asymptotic confidence intervals based on the observed Fisher information matrix, under almost all parameter settings, the intervals of

perform better than those of

; that is, the coverage of the 95% confidence intervals of

is closer to 95%, while the coverage probability for

is always low. In this respect, the two-sided credible intervals from Gibbs sampling perform comparatively better.

(3) The coverage probability and average length of the intervals obtained by the two methods differ significantly under the parameter setting

. For the estimation of

, the coverage probability of the asymptotic confidence interval based on the observed Fisher information matrix is slightly higher than that obtained by Gibbs sampling, while the average interval length of the latter is slightly shorter. For the estimation of

, the coverage probability of the asymptotic confidence interval is noticeably higher than that obtained by Gibbs sampling, but the average interval length of the latter is only half that of the former. Therefore, the average interval results of

are shown in Table 6 for further illustration. The two methods give clearly different upper bounds for the interval of

. For the asymptotic confidence interval based on the observed Fisher information matrix (whose average length is longer), the true value of

lies in the middle of the interval and is well covered. For the interval obtained by Gibbs sampling, the true value of

is very close to the upper bound of the credible interval, so the randomness of the simulation may leave the true value outside the credible interval.

(4) The Lindley approximation, a linear approximation method in Bayesian estimation, does not perform well in terms of estimated value and mean square error when the lifetime distribution has special properties and the sample structure is complex. Therefore, we only show the Lindley approximation results of

when

in Table 7 for illustration. In addition, the Lindley approximation yields only point estimates and not interval estimates, which are more widely used in practice, so maximum likelihood estimation and Bayes estimation by Gibbs-MH sampling are preferable.

5. Real Data Analysis

In this section, we apply our estimation methods to a real data set to verify their feasibility. The data set from Michele D. Nichols and W. J. Padgett [14] is used for illustration. The complete sample consists of the observed fracture stress of 100 carbon fibers (shown in Table 8).

The data were previously assumed to come from the exponentiated Weibull distribution with probability density function

(

distribution) in [15]. We used the Kolmogorov-Smirnov (K-S) test and compared the K-S distances (the maximum vertical deviation between the empirical distribution function of the data set and the fitted cumulative distribution function) and the p-values of four multi-parameter distributions, including the Chen distribution and the

distribution. The results in Table 9 show that the K-S distance of the Chen distribution is smaller than that of the

distribution, indicating a better fit to the data set, so it is reasonable to assume that the data set comes from the Chen distribution.
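The K-S distance used in this comparison can be computed as sketched below. The block assumes the Chen cdf F(x) = 1 - exp{λ(1 - e^{x^β})}; the names `chen_cdf` and `ks_distance` are hypothetical, and the p-value of the test is not computed here.

```python
import math


def chen_cdf(x, beta, lam):
    """Assumed Chen cdf, written with expm1 for numerical stability."""
    return -math.expm1(-lam * math.expm1(x ** beta))


def ks_distance(data, cdf):
    """Largest gap between the empirical distribution function and `cdf`.

    At each ordered observation the empirical distribution function jumps
    from i/n to (i + 1)/n, so both sides of the jump are checked.
    """
    xs = sorted(data)
    n = len(xs)
    return max(max((i + 1) / n - cdf(x), cdf(x) - i / n)
               for i, x in enumerate(xs))
```

For a fitted model one would call `ks_distance(data, lambda x: chen_cdf(x, beta_hat, lam_hat))`, where `beta_hat` and `lam_hat` are the estimates from the complete sample. A smaller distance indicates a closer fit.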

We then consider the estimation problem under adaptive type-II progressively censored data. We draw samples of size

from the complete sample of size

. Censoring schemes are set to be

and

, while the two values of the expected finish time T are 1.6 and 3.2. Table 10, Table 11, Table 12 and Table 13 present our adaptive type-II progressively censored data in detail.

We consider gamma priors for Bayes estimation on both

and

where the hyper-parameters are all set to 0 (i.e.,

). Using the formulas from the simulation study, we obtain the maximum likelihood point estimates, the confidence intervals based on the observed Fisher information matrix, the Bayes point estimates, and the Bayes credible intervals of the unknown parameters

and

of the real data set. The estimation results are shown in Table 14, from which we draw the following conclusions.

For a given estimation method (MLE, Gibbs-MH, or Lindley), the results differ little across censoring schemes R and ideal finishing times T.

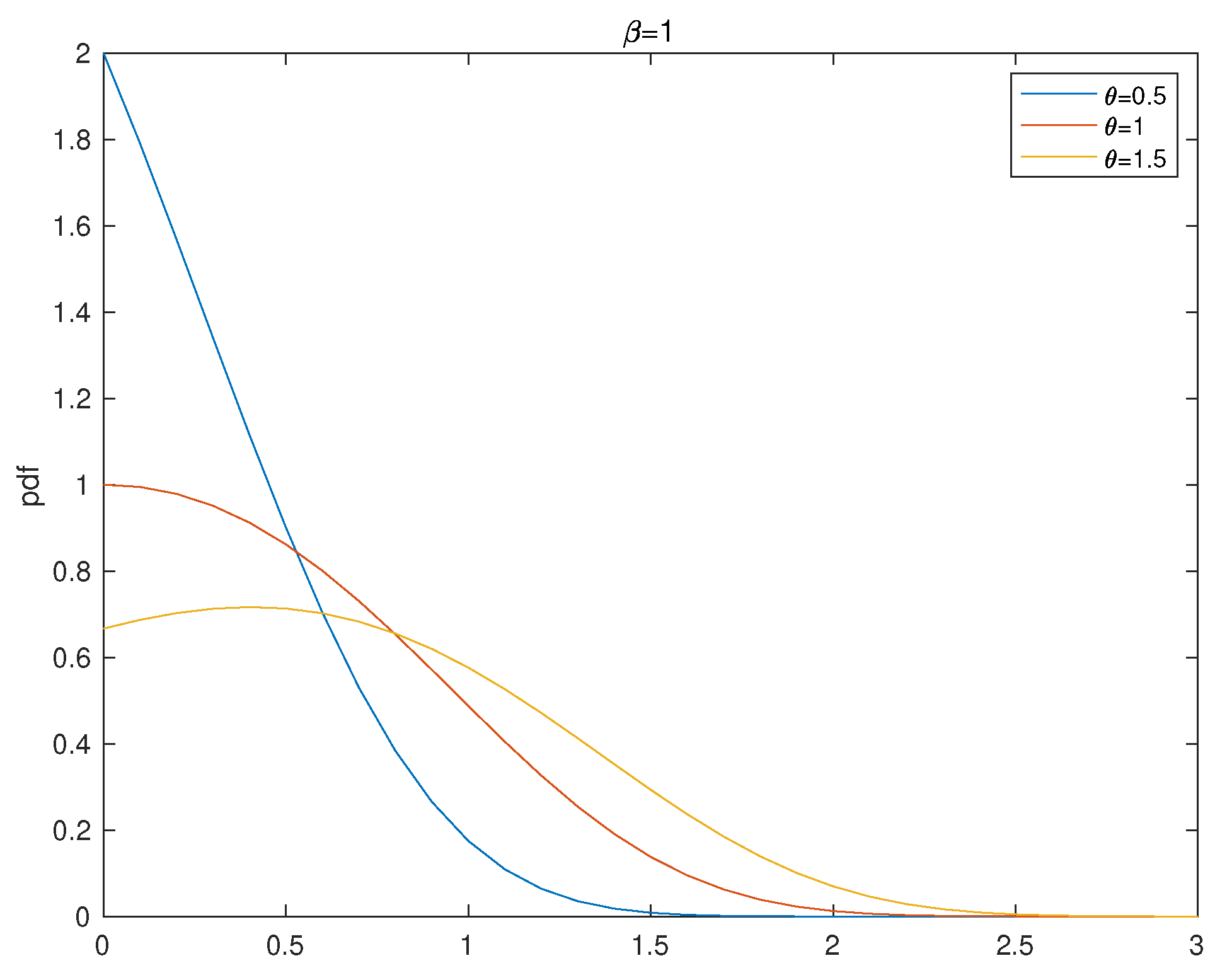

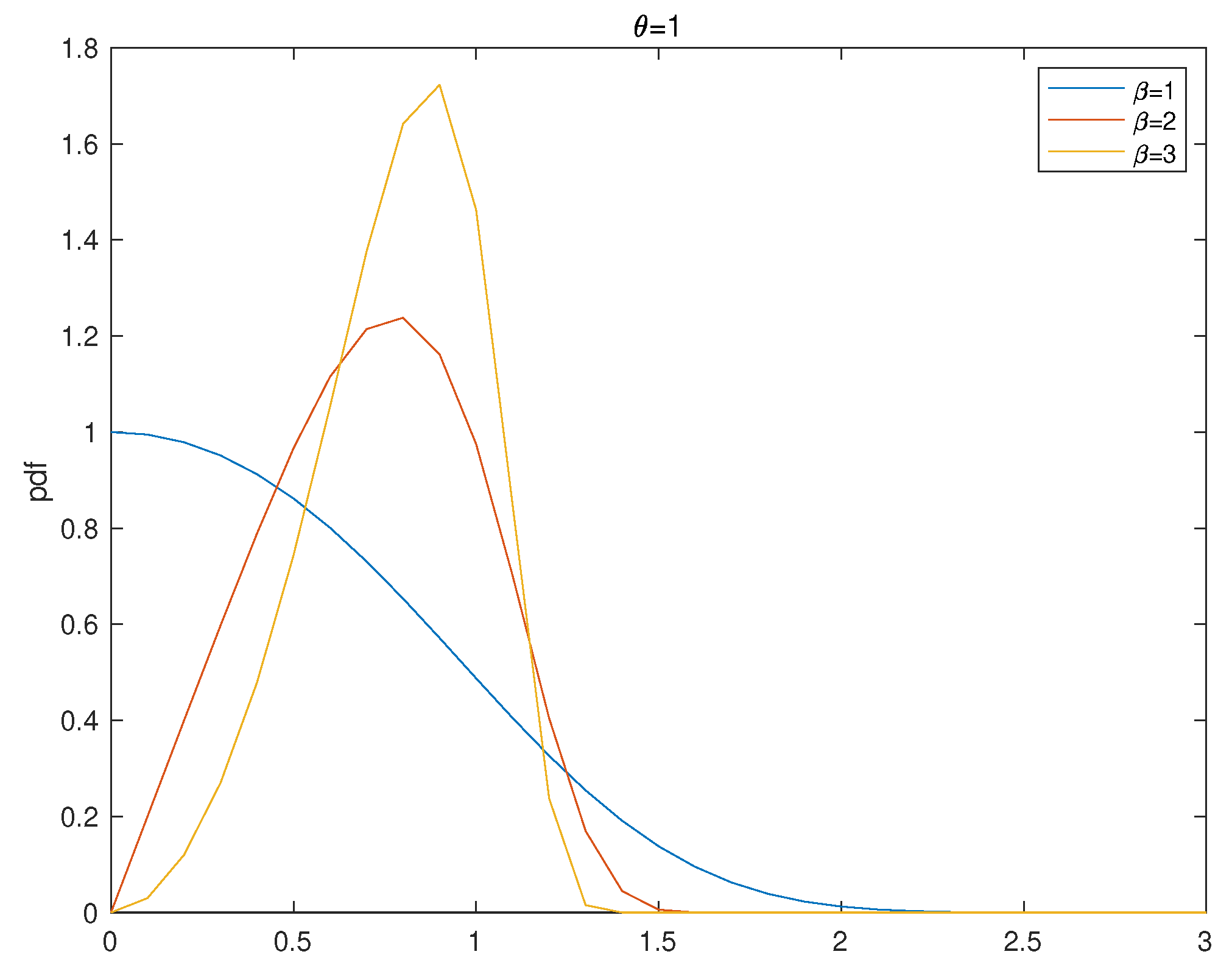

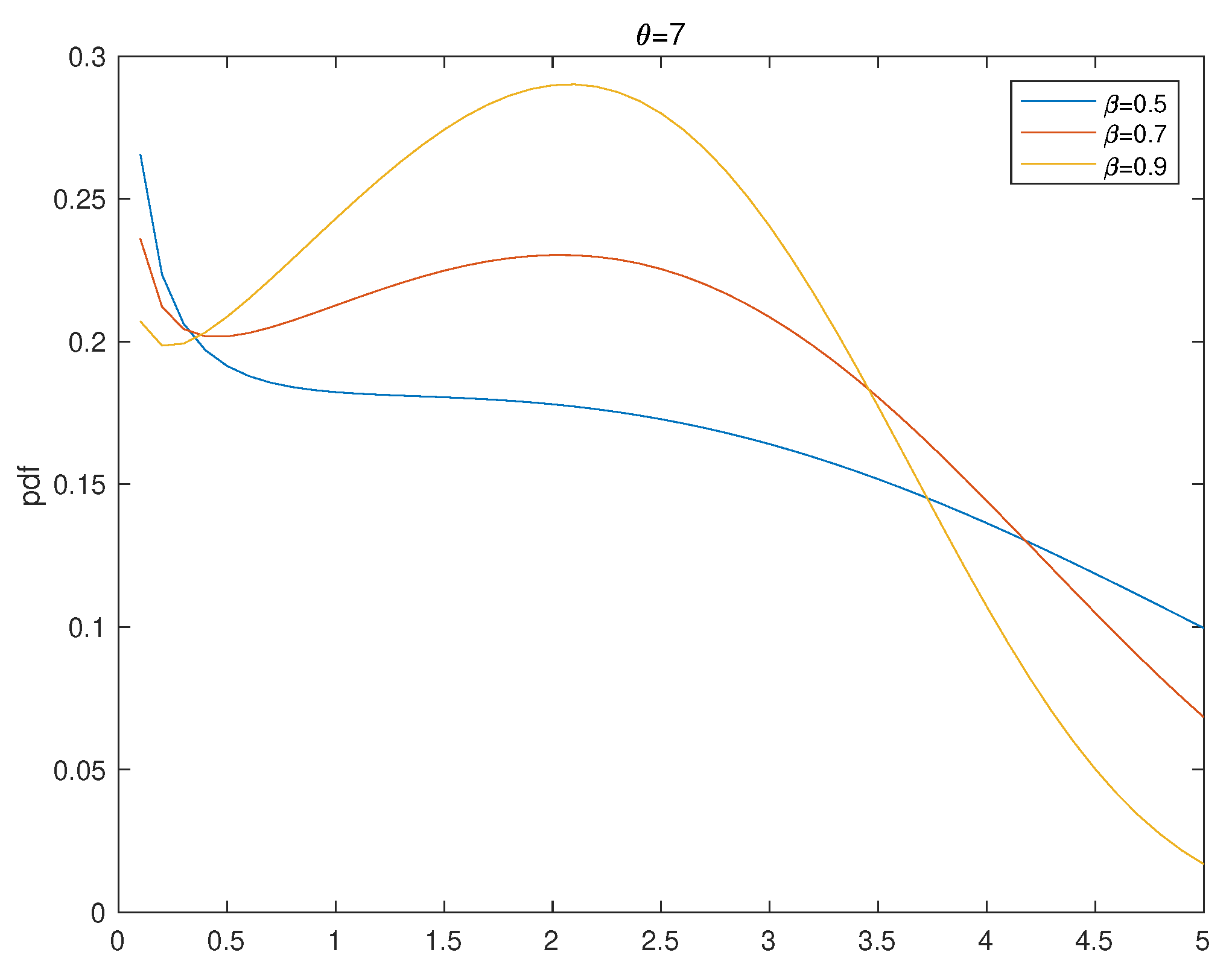

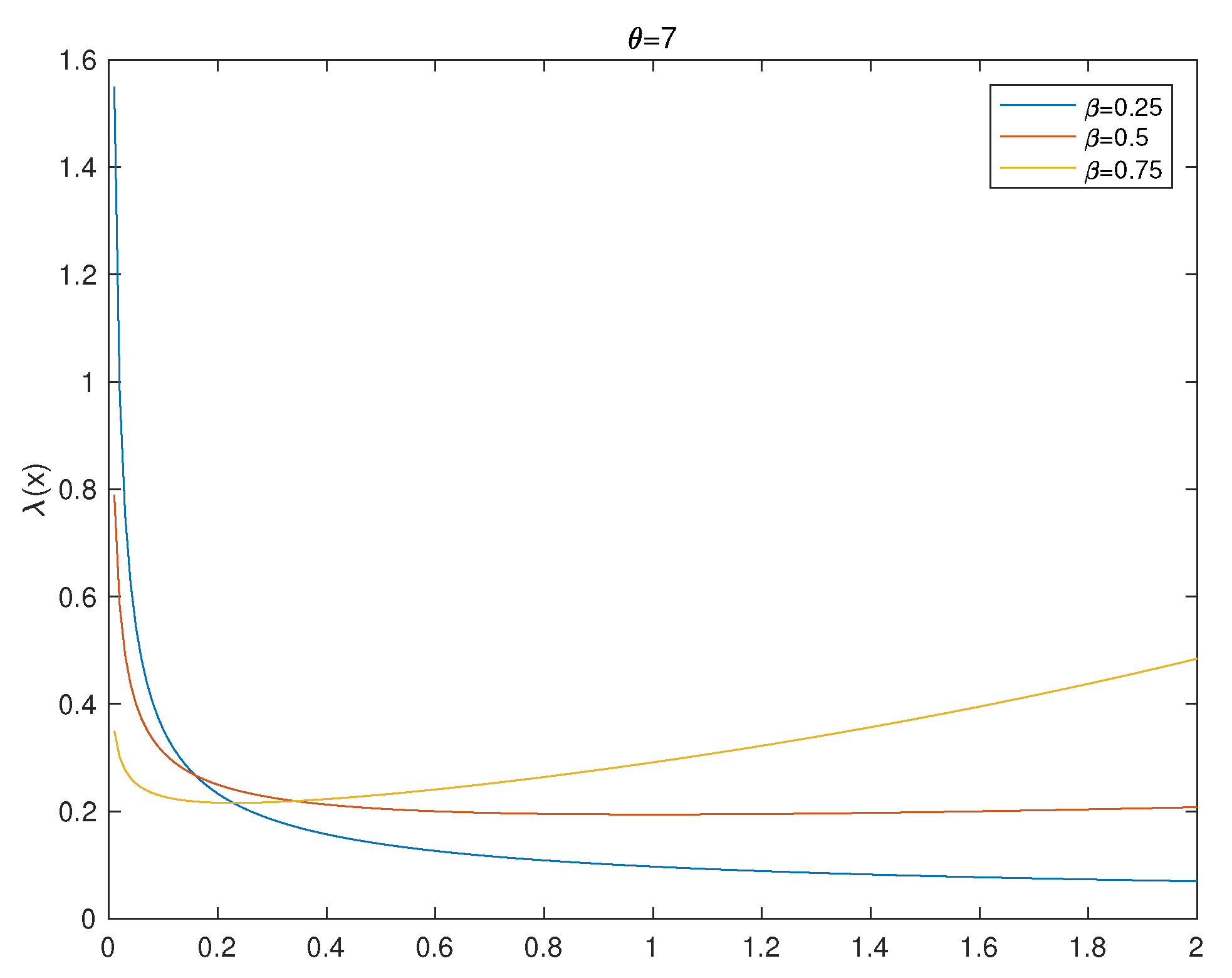

The estimated parameter values indicate that the Chen distribution fitted to the data has a bathtub-shaped failure rate function. There is no significant difference between the point and interval estimates obtained by MLE and Gibbs sampling. The point estimates obtained by the Lindley approximation differ from those of the other two methods. A possible reason is that, under this parameter setting, the failure rate function of the Chen distribution is bathtub-shaped, which makes its parameters harder to estimate. This difficulty also appears in our simulation study: under the corresponding parameter setting, the MSE of the point estimates and the interval coverage probability of the confidence interval are noticeably larger than under the other parameter settings.

6. Conclusions

In this paper, the estimation of the two unknown parameters of the Chen distribution is discussed. This distribution has a bathtub-shaped failure rate function, which makes it suitable for fitting many real-world data sets. Its statistical properties are studied based on adaptive type-II progressively censored data, which makes our results easier to apply in practical industrial settings. This censoring scheme gives experimenters better control over the time and cost of an experiment, because the process can be shortened or lengthened.

We discuss the maximum likelihood estimators and the asymptotic confidence intervals based on the observed Fisher information matrix for the unknown parameters. Assuming gamma prior distributions, we also give the theoretical results for Bayes point estimation and Bayes two-sided credible intervals under the squared error loss function. Since these estimates have no closed form, we use approximation techniques, namely the Lindley approximation and Gibbs sampling, to compute them.

In the simulation and real data studies, the maximum likelihood estimates and the Bayes estimates under Gibbs sampling differ only slightly, though the latter perform slightly better. In terms of estimated value and mean square error, the point estimator obtained by the Lindley approximation is not as good as the other two. When the parameters are set so that the Chen distribution has a bathtub-shaped failure rate function, the accuracy of the estimates decreases. In future work, we may explore optimization algorithms for this remaining problem and propose a more accurate estimation method.

Moreover, we will also study change-point analysis for lifetime distributions with a bathtub-shaped failure rate function, which has a wide range of applications. For example, it can help to analyze product performance and predict the total lifetime of products in actual production.