Abstract

A parameter-free optimization technique is applied in a quasi-Newton method for solving unconstrained multiobjective optimization problems. The Hessian approximations are constructed using the q-derivative and are positive definite at every iteration. The step length is computed by an Armijo-like rule which, owing to the q-derivative, helps the iterates escape from local minima toward a global minimum. Further, the rate of convergence is proved to be superlinear in a local neighborhood of a minimum point, based on the q-derivative. Finally, numerical experiments show better performance than an existing quasi-Newton method for multiobjective optimization.

Keywords:

multiobjective programming; methods of quasi-Newton type; Pareto optimality; q-calculus; rate of convergence

MSC:

90C29; 90C53; 58E17; 05A30; 41A25

1. Introduction

Multiobjective optimization is the problem of optimizing two or more real-valued objective functions simultaneously. In general, no single point minimizes all objective functions at once, so the concept of optimality is replaced by that of Pareto optimality (efficiency). A point is called Pareto optimal, or efficient, if there is no other point whose objective function values are all equal or smaller, with at least one strictly smaller. Multiobjective optimization techniques support decision making in many applications, such as engineering [1,2], economic theory [3], management science [4], machine learning [5,6], and space exploration [7]. One of the basic approaches is the weighting method [8], where a single-objective optimization problem is created by weighting the objective functions. Another approach is the ε-constraint method [9], where only one chosen objective function is minimized and the other objectives are kept as constraints. Some multiobjective algorithms use a lexicographic method, where the objective functions are optimized in their order of priority [10,11]: first, the most preferred function is optimized; then that objective function is transformed into a constraint and the second-priority objective function is optimized. This is repeated until the last objective function has been optimized. The user needs to choose the order of the objectives, and two lexicographic optimizations with different orders of the objective functions generally do not produce the same solution. The disadvantage of these approaches is that the weights, the constraints, and the priority order of the functions, respectively, are not known in advance and have to be specified from the beginning. Other techniques [12,13,14] that do not need any prior information have been developed for solving unconstrained multiobjective optimization problems (UMOP), with at most a linear convergence rate. Heuristic and evolutionary approaches [15] provide an approximate Pareto front but do not guarantee convergence.

Newton’s method [16] for single-objective optimization has been extended to (UMOP) as an a priori parameter-free optimization method [17]. In this approach, the objective functions are twice continuously differentiable, no other parameter or ordering of the functions is needed, and each objective function is replaced by a quadratic model. The rate of convergence is superlinear, and it is quadratic if the second-order derivatives are Lipschitz continuous. Newton’s method has also been studied in Banach and Hilbert spaces for finding the efficient solutions of (UMOP) [18]. A quasi-Newton algorithm has been developed for nonsmooth multiobjective optimization problems in which the directional derivative of every objective function exists [19].

A necessary condition for Pareto optimality, stated in terms of critical points of (UMOP), is introduced in the steepest descent algorithm [12], where neither weighting factors nor ordering information for the objective functions is assumed to be known. The relationship between critical points and efficient points is discussed in [17]: if the domain of (UMOP) is convex and the objective functions are component-wise convex, then every critical point is a weakly efficient point, and if the objective functions are component-wise strictly convex, then every critical point is an efficient point. New classes of vector invex and pseudoinvex functions for (UMOP) have also been characterized in terms of critical points and (weakly) efficient points [20] using the Fritz John (FJ) and Karush–Kuhn–Tucker (KKT) optimality conditions. Our focus is on the Newton direction for a standard scalar optimization problem that is implicitly induced by weighting the objective functions. The weights are non-negative KKT multipliers that are not known a priori, so they do not have to be fixed in advance. Every new point generated by the Newton algorithm [17] determines such weights in the form of KKT multipliers.

Quantum calculus, or q-calculus, is also called calculus without limits. The classical mathematical objects are recovered from their q-analogues as $q \to 1$. The history of quantum calculus can be traced back to Euler (1707–1783), who first introduced the parameter q into Newton’s infinite series. In recent years, many researchers have shown considerable interest in quantum calculus, and it has emerged as an interdisciplinary subject. Quantum analysis is useful in numerous fields such as signal processing [21], operator theory [22], fractional integrals and derivatives [23], integral inequalities [24], variational calculus [25], transform calculus [26], and sampling theory [27]. Quantum calculus is seen as a bridge between mathematics and physics. For recent developments in quantum calculus, interested readers may refer to [28,29,30,31].

The q-calculus was first applied to optimization in [32], where the q-gradient is used in the steepest descent method. Further, the global optimum was sought using the q-steepest descent and q-conjugate gradient methods, in which a descent scheme based on q-calculus is combined with a stochastic approach; the order of convergence of that scheme is not studied [33]. The q-calculus has been applied in Newton’s method for unconstrained single-objective optimization [34], and this idea has been extended to (UMOP) [35].

In this paper, we apply q-calculus to the quasi-Newton method for solving (UMOP). We approximate the second-order q-derivative matrices instead of evaluating them and, using q-calculus, prove that the rate of convergence is superlinear.

The rest of this paper is organized as follows. Section 2 recalls the problem, notation, and preliminaries. Section 3 derives the q-Quasi-Newton direction from the KKT conditions of a direction-finding subproblem. Section 4 presents the algorithms and their convergence analysis. Numerical results are given in Section 5, and conclusions are drawn in the last section.

2. Preliminaries

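Denote by $\mathbb{R}$ the set of real numbers, by $\mathbb{N}$ the set of positive integers, and by $\mathbb{R}_{+}$ (or $\mathbb{R}_{-}$) the set of strictly positive (or strictly negative) real numbers. If a function is continuous on any interval excluding zero, then the function is called continuous q-differentiable. For a function $f:\mathbb{R}\to\mathbb{R}$, the q-derivative of $f$ [36], denoted by $D_q f$, is given as

$$D_q f(x) = \begin{cases} \dfrac{f(x) - f(qx)}{(1-q)\,x}, & x \neq 0,\ q \neq 1,\\[6pt] f'(0), & x = 0. \end{cases}$$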

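Suppose $f:\mathbb{R}^n\to\mathbb{R}$ is a function whose partial derivatives exist. For $x \in \mathbb{R}^n$ and $i = 1, \dots, n$, consider the operator $\varepsilon_{q,i}$ acting on $f$ as

$$(\varepsilon_{q,i} f)(x) = f(x_1, \dots, x_{i-1}, q x_i, x_{i+1}, \dots, x_n).$$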

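The q-partial derivative of $f$ at $x$ with respect to $x_i$, denoted by $D_{q,x_i} f(x)$, is [23]:

$$D_{q,x_i} f(x) = \begin{cases} \dfrac{f(x) - f(x_1, \dots, q x_i, \dots, x_n)}{(1-q)\,x_i}, & x_i \neq 0,\ q \neq 1,\\[6pt] \dfrac{\partial f}{\partial x_i}(x), & x_i = 0. \end{cases}$$

The q-gradient of $f$ at $x$ is $\nabla_q f(x) = \big(D_{q,x_1} f(x), \dots, D_{q,x_n} f(x)\big)^T$.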

We are interested in solving the following (UMOP):

$$\min_{x \in X} \ F(x) = \big(f_1(x), \dots, f_m(x)\big)^T,$$

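where $X \subseteq \mathbb{R}^n$ is a feasible region and $F: X \to \mathbb{R}^m$. Note that $F$ is a vector function whose components are real-valued functions $f_j: X \to \mathbb{R}$, $j = 1, \dots, m$. In general, $n$ and $m$ are independent. For $u, v \in \mathbb{R}^m$, we define the vector inequalities as:

$$u \leqq v \iff u_j \le v_j \ \ \forall j, \qquad u \le v \iff u \leqq v \ \text{and}\ u \neq v, \qquad u < v \iff u_j < v_j \ \ \forall j.$$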

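A point $x^* \in X$ is called a Pareto optimal point if there is no $x \in X$ such that $F(x) \leqq F(x^*)$ and $F(x) \neq F(x^*)$. A point $x^* \in X$ is called a weakly Pareto optimal point if there is no $x \in X$ such that $f_j(x) < f_j(x^*)$ for all $j$. Similarly, $x^*$ is a local Pareto optimal point if there exists a neighborhood $Y \subseteq X$ of $x^*$ such that $x^*$ is Pareto optimal for $F$ restricted to $Y$, and $x^*$ is a local weakly Pareto optimal point if there exists a neighborhood $Y \subseteq X$ of $x^*$ such that $x^*$ is weakly Pareto optimal for $F$ restricted to $Y$. Every Pareto optimal point is a weakly Pareto optimal point [37]. The matrix $JF(x) \in \mathbb{R}^{m \times n}$ is the Jacobian matrix of $F$ at $x$, i.e., the $j$-th row of $JF(x)$ is the q-gradient $\nabla_q f_j(x)^T$ for all $j = 1, \dots, m$. Let $\nabla_q^2 f_j(x)$ be the Hessian matrix of $f_j$ at $x$ for all $j = 1, \dots, m$. The directional derivative of $f_j$ at $x$ in a direction $d \in \mathbb{R}^n$ is given as:

$$f_j'(x; d) = \lim_{t \to 0^{+}} \frac{f_j(x + t d) - f_j(x)}{t}.$$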

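A necessary condition for Pareto optimality, expressed in terms of critical points of multiobjective optimization problems, is given in [17]. For any $x \in \mathbb{R}^n$, $\|x\|$ denotes the Euclidean norm in $\mathbb{R}^n$. Let $N(x, r) = \{y \in \mathbb{R}^n : \|y - x\| < r\}$ be the open ball with center $x$ and radius $r > 0$. The norm of a matrix $A \in \mathbb{R}^{n \times n}$ is $\|A\| = \max_{\|y\| = 1} \|Ay\|$. The following proposition shows that when $f$ is a linear function, the q-gradient coincides with the classical gradient.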

Proposition 1

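([33]). If $f(x) = a^T x + b$, where $a \in \mathbb{R}^n$ and $b \in \mathbb{R}$, then for any $x \in \mathbb{R}^n$ and $q \neq 1$, we have $\nabla_q f(x) = \nabla f(x) = a$.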
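The computation behind Proposition 1 takes one line per coordinate. The following is a short check, assuming the Jackson-type q-partial derivative (numerator $f(x) - f(x_1, \dots, qx_i, \dots, x_n)$, denominator $(1-q)x_i$ for $x_i \neq 0$, classical partial derivative at $x_i = 0$). The second line is an added contrast: for a quadratic term, the q-derivative differs from the classical one unless $q \to 1$.

```latex
\begin{aligned}
D_{q,x_i}\big(a^T x + b\big)
  &= \frac{\big(a_i x_i + \sum_{k \neq i} a_k x_k + b\big) - \big(a_i q x_i + \sum_{k \neq i} a_k x_k + b\big)}{(1-q)\,x_i}
   = \frac{a_i (1-q)\, x_i}{(1-q)\, x_i} = a_i, \qquad x_i \neq 0,\\[4pt]
D_{q,x_i}\big(x_i^2\big)
  &= \frac{x_i^2 - q^2 x_i^2}{(1-q)\,x_i} = (1+q)\,x_i \;\longrightarrow\; 2x_i \quad \text{as } q \to 1.
\end{aligned}
```

At $x_i = 0$ the q-partial derivative of $a^T x + b$ is the classical $\partial f/\partial x_i = a_i$, so $\nabla_q f(x) = a = \nabla f(x)$ at every $x$.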

Quasi-Newton methods approximate the Hessian $\nabla^2 f(x^k)$ of a function $f$ by a matrix $B^k$ and update this approximation at every iteration from the previous one [38]. Line search methods are important methods for (UMOP): a search direction is computed first, and then a step length is chosen along this direction. The entire process is iterative.
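The following is a minimal sketch of how a quasi-Newton method refreshes its Hessian approximation from the previous one, using the standard BFGS formula $B^{+} = B - \frac{B s s^T B}{s^T B s} + \frac{y y^T}{y^T s}$. Here $s$ is the step and $y$ is the change in gradient; in the q-setting, $y$ would be a difference of q-gradients. The name `bfgs_update` is hypothetical. So is the safeguard of skipping the update when the curvature $y^T s$ is not sufficiently positive. That safeguard is chosen here only because a skipped update keeps $B$ positive definite; the text instead points to a symmetric indefinite factorization [39].

```python
import numpy as np


def bfgs_update(B, s, y, curvature_tol=1e-10):
    """Return the BFGS update of the Hessian approximation B.

    s: step x_{k+1} - x_k; y: change in (q-)gradient between the two points.
    If y^T s is not sufficiently positive, the update is skipped so that B
    stays positive definite (a safeguard assumed for this sketch).
    """
    B = np.asarray(B, dtype=float)
    s = np.asarray(s, dtype=float)
    y = np.asarray(y, dtype=float)
    ys = float(y @ s)
    if ys <= curvature_tol * np.linalg.norm(s) * np.linalg.norm(y):
        return B.copy()
    Bs = B @ s
    # Remove the old curvature along s and insert the observed curvature y.
    return B - np.outer(Bs, Bs) / float(s @ Bs) + np.outer(y, y) / ys
```

By construction the updated matrix satisfies the secant equation $B^{+} s = y$, so it reproduces the observed change in gradient along the last step.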

3. The q-Quasi-Newton Direction for Multiobjective Optimization

The best-known quasi-Newton method for a single objective function is the BFGS (Broyden, Fletcher, Goldfarb, and Shanno) method. It is a line search method along a descent direction, which, within the context of the q-derivative, is given as:

$$d^k = -\big(B^k\big)^{-1} \nabla_q f(x^k),$$

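where $f$ is a continuously q-differentiable function and $B^k \in \mathbb{R}^{n \times n}$ is a positive definite matrix that is updated at every iteration. The new point is:

$$x^{k+1} = x^k + \alpha_k d^k,$$

where $\alpha_k > 0$ is the step length.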

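For the steepest descent method and Newton’s method, $B^k$ is taken to be the identity matrix and the exact Hessian of $f$, respectively. The BFGS quasi-Newton scheme generates the next matrix $B^{k+1}$ as

$$B^{k+1} = B^k - \frac{B^k s^k (s^k)^T B^k}{(s^k)^T B^k s^k} + \frac{y^k (y^k)^T}{(y^k)^T s^k}, \tag{8}$$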

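where $s^k = x^{k+1} - x^k$ and $y^k = \nabla_q f(x^{k+1}) - \nabla_q f(x^k)$. Newton’s method requires second-order differentiability of the function; here, $B^k$ is computed with the q-derivative and plays the role of the Hessian matrix of $f$. $B^k$ may fail to be positive definite, in which case it can be modified to be positive definite through the symmetric indefinite factorization [39]. For (UMOP), the q-Quasi-Newton direction at $x$ is an optimal solution of the following modified problem [40]:

$$\min_{d \in \mathbb{R}^n} \ \max_{j = 1, \dots, m} \ \Big\{ \nabla_q f_j(x)^T d + \frac{1}{2}\, d^T B_j(x)\, d \Big\}, \tag{9}$$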

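where $B_j(x)$ is computed as in (8). The solution and the optimal value of (9) are:

$$d(x) = \arg\min_{d \in \mathbb{R}^n} \ \max_{j = 1, \dots, m} \ \Big\{ \nabla_q f_j(x)^T d + \frac{1}{2}\, d^T B_j(x)\, d \Big\} \tag{10}$$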

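and

$$\psi(x) = \min_{d \in \mathbb{R}^n} \ \max_{j = 1, \dots, m} \ \Big\{ \nabla_q f_j(x)^T d + \frac{1}{2}\, d^T B_j(x)\, d \Big\}. \tag{11}$$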

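Problem (9) can be rewritten as the following convex quadratic optimization problem (CQOP):

$$\begin{aligned} \min_{(t,d) \in \mathbb{R} \times \mathbb{R}^n} \quad & t \\ \text{s.t.} \quad & \nabla_q f_j(x)^T d + \tfrac{1}{2}\, d^T B_j(x)\, d - t \le 0, \quad j = 1, \dots, m. \end{aligned} \tag{12}$$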

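The Lagrangian function of (CQOP) is:

$$L\big((t, d), \lambda\big) = t + \sum_{j=1}^{m} \lambda_j \Big( \nabla_q f_j(x)^T d + \frac{1}{2}\, d^T B_j(x)\, d - t \Big). \tag{13}$$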

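For $\lambda = (\lambda_1, \dots, \lambda_m)^T \in \mathbb{R}^m$, we obtain the following KKT conditions [40]:

$$\sum_{j=1}^{m} \lambda_j \big( \nabla_q f_j(x) + B_j(x)\, d \big) = 0, \tag{14}$$

$$\sum_{j=1}^{m} \lambda_j = 1, \tag{15}$$

$$\nabla_q f_j(x)^T d + \frac{1}{2}\, d^T B_j(x)\, d \le t, \quad j = 1, \dots, m, \tag{16}$$

$$\lambda_j \ge 0, \quad j = 1, \dots, m, \tag{17}$$

$$\lambda_j \Big( \nabla_q f_j(x)^T d + \frac{1}{2}\, d^T B_j(x)\, d - t \Big) = 0, \quad j = 1, \dots, m. \tag{18}$$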

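The solution $(t, d) = (\psi(x), d(x))$ of (CQOP) is unique. Let $\lambda_j = \lambda_j(x)$, $j = 1, \dots, m$, be multipliers such that $d = d(x)$, $t = \psi(x)$, and $\lambda$ satisfy (14)–(18). From (14), we obtain

$$d(x) = -\Big( \sum_{j=1}^{m} \lambda_j(x)\, B_j(x) \Big)^{-1} \sum_{j=1}^{m} \lambda_j(x)\, \nabla_q f_j(x).$$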

This is the so-called q-Quasi-Newton direction for solving (UMOP). We now present a basic result relating the stationarity of a given point $x$ to its q-Quasi-Newton direction $d(x)$ and the function $\psi$.
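To make the subproblem (9) concrete, the following sketch approximates $d(x)$ and $\psi(x)$ without a QP solver. It works on the dual over the unit simplex. For weights $\lambda$, the inner minimizer is $d(\lambda) = -(\sum_j \lambda_j B_j)^{-1} \sum_j \lambda_j \nabla_q f_j(x)$, which has the same form as the direction obtained from (14). By Danskin's theorem, the dual gradient has components $\nabla_q f_j(x)^T d(\lambda) + \frac{1}{2} d(\lambda)^T B_j d(\lambda)$. The solver uses Frank–Wolfe steps with a golden-section line search. That choice is illustrative and is not claimed to be the solver used in the paper or in [40]; `qqn_direction` and its parameters are hypothetical. The returned value is the primal objective at $d(\lambda)$, an upper bound on $\psi(x)$ that is accurate to within `tol`.

```python
import numpy as np


def qqn_direction(G, B, tol=1e-9, max_iter=500):
    """Approximate d(x) and psi(x) for min_d max_j G_j^T d + 0.5 d^T B_j d.

    G: (m, n) array whose rows are the q-gradients; B: (m, n, n) array of
    positive definite matrices B_j. Returns (d, psi_estimate, lam).
    """
    G = np.asarray(G, dtype=float)
    B = np.asarray(B, dtype=float)
    m = G.shape[0]

    def primal(lam):
        # Minimizer of sum_j lam_j (G_j^T d + 0.5 d^T B_j d) and the m model values.
        d = -np.linalg.solve(np.tensordot(lam, B, axes=1), lam @ G)
        return d, G @ d + 0.5 * np.einsum("i,jik,k->j", d, B, d)

    def dual(lam):
        # Dual function value, concave in lam.
        return float(lam @ primal(lam)[1])

    lam = np.full(m, 1.0 / m)              # start at the simplex barycenter
    r = (np.sqrt(5.0) - 1.0) / 2.0         # golden-ratio conjugate
    for _ in range(max_iter):
        d, qv = primal(lam)
        if qv.max() - lam @ qv <= tol:     # primal-dual gap is small enough
            break
        v = np.zeros(m)
        v[int(np.argmax(qv))] = 1.0        # Frank-Wolfe vertex of the simplex
        lo, hi = 0.0, 1.0
        for _ in range(60):                # golden-section search on [lam, v]
            a, b = hi - r * (hi - lo), lo + r * (hi - lo)
            if dual((1 - a) * lam + a * v) < dual((1 - b) * lam + b * v):
                lo = a
            else:
                hi = b
        gam = 0.5 * (lo + hi)
        lam = (1 - gam) * lam + gam * v
    d, qv = primal(lam)
    return d, float(qv.max()), lam
```

Consider the pair $f_1 = (x-1)^2$, $f_2 = (x+1)^2$, with q-effects ignored so that the gradients at $x = 2$ are $2$ and $6$ and $B_1 = B_2 = 2$. Here the sketch returns $d \approx -1$ and $\psi \approx -1$. At $x = 0$, which lies between the two minimizers, it returns $d \approx 0$ and $\psi \approx 0$, in line with the stationarity characterization that follows.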

Proposition 2.

1. $\psi(x) \le 0$ for all $x \in X$.
2. The following conditions are equivalent:
   (a) The point $x$ is non-stationary.
   (b) $d(x) \neq 0$.
   (c) $\psi(x) < 0$.
   (d) $d(x)$ is a descent direction.
3. The function $\psi$ is continuous.

Proof.

Since , it follows from (10) that

thus . This means that . Hence, the given point $x$ is non-stationary. Since $B_j(x)$ is positive definite for all $j$, from (10) and (11) we have

Since $\psi(x)$ is the optimal value of (CQOP) and it is negative, the solution of (CQOP) can never be $d(x) = 0$. To prove item 3, it is sufficient to show the continuity [41] of $\psi$ on a compact set $Y$. Since , we have

for all $y \in Y$ and $j = 1, \dots, m$, where the matrices are positive definite for all $y \in Y$. Thus, the eigenvalues of the Hessian matrices are uniformly bounded away from zero on $Y$, so there exist constants such that

and

From (20) and the Cauchy–Schwarz inequality, we get

that is,

for all $y \in Y$; that is, the q-Quasi-Newton direction is uniformly bounded on $Y$. We now consider the family of functions , where

and

We shall prove that this family of functions is uniformly equicontinuous. For a small value $\varepsilon > 0$, there exists $\delta > 0$ such that, for all $y, z \in Y$ with $\|y - z\| < \delta$, we have

and

for all . The second inequality holds because of the q-continuity of the Hessian matrices. Since $Y$ is compact, there exists a finite subcover.

that is

To show the q-continuity of the last term, set such that for small ; then

is uniformly continuous [40] for all and for all . There exists such that, for all , implies for all . Thus,

Thus, . Interchanging $y$ and $z$, we obtain . This proves the continuity of $\psi$. □

The following lemma is a modification of results in [17,42].

Lemma 1.

Let $F: \mathbb{R}^n \to \mathbb{R}^m$ be continuously q-differentiable, and suppose that $x \in \mathbb{R}^n$ is not a critical point of $F$, where , , and . Then,

for any and

Proof.

Since $x$ is not a critical point, we have . Let be such that and . Therefore,

Since , for , we have

The last term on the right-hand side of the above expression is non-positive because for . □
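Lemma 1 is the kind of result that guarantees a backtracking (Armijo) search terminates when $x$ is not critical. The following is a minimal sketch of componentwise Armijo backtracking. It assumes the acceptance test $f_j(x + t d) \le f_j(x) + \sigma t\, \nabla_q f_j(x)^T d$ for every $j$, with $t \in \{1, \beta, \beta^2, \dots\}$. The exact rule of the paper's algorithm is not reproduced here. The parameters $\sigma$ and $\beta$ and the name `armijo_step` are illustrative assumptions.

```python
import numpy as np


def armijo_step(fs, grads, x, d, sigma=1e-4, beta=0.5, max_halvings=60):
    """Largest t in {1, beta, beta^2, ...} with
    f_j(x + t d) <= f_j(x) + sigma * t * grads_j^T d for every objective j."""
    x = np.asarray(x, dtype=float)
    d = np.asarray(d, dtype=float)
    f0 = np.array([f(x) for f in fs])
    slopes = np.asarray(grads, dtype=float) @ d   # must be negative for descent
    if np.any(slopes >= 0):
        raise ValueError("d is not a descent direction for every objective")
    t = 1.0
    for _ in range(max_halvings):
        ft = np.array([f(x + t * d) for f in fs])
        if np.all(ft <= f0 + sigma * t * slopes):
            return t
        t *= beta
    raise RuntimeError("no Armijo step found; check the direction and gradients")
```

For $f_1 = (x-1)^2$, $f_2 = (x+1)^2$ at $x = 2$ with gradients $2$ and $6$, the full step $t = 1$ is accepted along $d = -1$, while the overly long direction $d = -10$ is cut back to $t = 0.125$.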

4. Algorithm and Convergence Analysis

We first present Algorithm 1 [43] for computing the q-gradient of a function. Higher-order q-derivatives of $f$ can be found in [44].

Algorithm 1. q-Gradient Algorithm
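The listing of Algorithm 1 did not survive extraction, and the following is not claimed to reproduce the algorithm of [43]. It is a minimal sketch that evaluates the q-gradient componentwise from the Jackson-type q-partial derivative. It makes three assumptions: a single q shared by all coordinates, a central finite difference with step `h` standing in for the classical partial derivative when $x_i = 0$, and the hypothetical name `q_gradient`.

```python
import numpy as np


def q_gradient(f, x, q=0.9, h=1e-6):
    """q-gradient of f at x built from Jackson-type q-partial derivatives.

    Component i is (f(x) - f(x with x_i replaced by q*x_i)) / ((1 - q) * x_i)
    when x_i != 0 and q != 1; otherwise a central finite difference with step h
    approximates the classical partial derivative (an assumption of this sketch).
    """
    x = np.asarray(x, dtype=float)
    fx = f(x)
    grad = np.empty_like(x)
    for i in range(x.size):
        if x[i] != 0.0 and q != 1.0:
            xq = x.copy()
            xq[i] *= q                      # dilate only coordinate i
            grad[i] = (fx - f(xq)) / ((1.0 - q) * x[i])
        else:
            e = np.zeros_like(x)
            e[i] = h
            grad[i] = (f(x + e) - f(x - e)) / (2.0 * h)
    return grad
```

For $f(x) = x_1^2 + x_2^2$ and $q = 0.5$, the sketch returns $(1+q)x = (3, -1.5)$ at $x = (2, -1)$. For a linear function, it returns the coefficient vector, as Proposition 1 states.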

Example 1.

Given that defined by Then .

We now state the q-Quasi-Newton algorithm for solving (UMOP) as Algorithm 2. At each step, we solve (CQOP) to find the q-Quasi-Newton direction, and then we obtain the step length using the Armijo line search. In every iteration, the new point and the Hessian approximations are generated from the current and previous iterates.

Algorithm 2. q-Quasi-Newton’s Algorithm for Unconstrained Multiobjective Optimization (q-QNUM)
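The listing of Algorithm 2 did not survive extraction. The following is a compact sketch of a loop built from the ingredients described in the text: q-gradients, the subproblem (9) solved for the direction, an Armijo step, and a per-objective BFGS update (8). It is not the authors' MATLAB implementation. Everything below is an assumption of the sketch: the initial $B_j = I$, the schedule driving $q \to 1$, the tolerances, the componentwise Armijo test, the Frank–Wolfe dual solver for (9), and the names `q_grad`, `direction`, and `q_qnum`.

```python
import numpy as np


def q_grad(f, x, q, h=1e-6):
    # Jackson-type q-partial derivatives; central difference if x_i == 0 or q == 1.
    g = np.empty_like(x)
    for i in range(x.size):
        if x[i] != 0.0 and q != 1.0:
            xq = x.copy()
            xq[i] *= q
            g[i] = (f(x) - f(xq)) / ((1.0 - q) * x[i])
        else:
            e = np.zeros_like(x)
            e[i] = h
            g[i] = (f(x + e) - f(x - e)) / (2.0 * h)
    return g


def direction(G, B, tol=1e-9, max_iter=500):
    # Dual of subproblem (9) over the simplex: Frank-Wolfe + golden-section search.
    def primal(lam):
        d = -np.linalg.solve(np.tensordot(lam, B, axes=1), lam @ G)
        return d, G @ d + 0.5 * np.einsum("i,jik,k->j", d, B, d)

    def dual(lam):
        return float(lam @ primal(lam)[1])

    m = G.shape[0]
    lam = np.full(m, 1.0 / m)
    r = (np.sqrt(5.0) - 1.0) / 2.0
    for _ in range(max_iter):
        d, qv = primal(lam)
        if qv.max() - lam @ qv <= tol:               # duality gap small enough
            break
        v = np.zeros(m)
        v[int(np.argmax(qv))] = 1.0
        lo, hi = 0.0, 1.0
        for _ in range(60):
            a, b = hi - r * (hi - lo), lo + r * (hi - lo)
            if dual((1 - a) * lam + a * v) < dual((1 - b) * lam + b * v):
                lo = a
            else:
                hi = b
        gam = 0.5 * (lo + hi)
        lam = (1 - gam) * lam + gam * v
    d, qv = primal(lam)
    return d, float(qv.max())


def q_qnum(fs, x0, q0=0.9, sigma=1e-4, eps=1e-10, max_iter=200):
    x = np.asarray(x0, dtype=float)
    m, n = len(fs), x.size
    B = np.stack([np.eye(n) for _ in range(m)])      # B_j^0 = I (assumption)
    q = q0
    G = np.array([q_grad(f, x, q) for f in fs])
    for k in range(max_iter):
        d, psi = direction(G, B)
        if psi > -eps:                               # psi(x) ~ 0: approximately critical
            break
        f0 = np.array([f(x) for f in fs])
        slopes = G @ d
        t = 1.0
        while t > 1e-12 and np.any(
            np.array([f(x + t * d) for f in fs]) > f0 + sigma * t * slopes
        ):
            t *= 0.5                                 # componentwise Armijo backtracking
        x_new = x + t * d
        s = x_new - x
        for j, f in enumerate(fs):                   # BFGS update (8), y_j at the same q
            y = q_grad(f, x_new, q) - G[j]
            ys = float(y @ s)
            if ys > 1e-12 * np.linalg.norm(s) * np.linalg.norm(y):
                Bs = B[j] @ s
                B[j] = B[j] - np.outer(Bs, Bs) / float(s @ Bs) + np.outer(y, y) / ys
        q = 1.0 - max((1.0 - q0) * 0.5 ** (k + 1), 1e-6)   # hypothetical q -> 1 schedule
        x = x_new
        G = np.array([q_grad(f, x, q) for f in fs])
    return x, k
```

Take the bi-objective problem $f_1 = (x_1 - 1)^2 + x_2^2$, $f_2 = (x_1 + 1)^2 + x_2^2$, whose Pareto set is the segment $x_2 = 0$, $-1 \le x_1 \le 1$. On this problem, `q_qnum(fs, [3.0, 2.0])` is expected to stop close to that segment. The $y_j$ in the BFGS step is evaluated with the same q at both points so that the secant pair stays consistent while q changes.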

We now show that every sequence produced by the proposed method converges to a weakly efficient point, no matter how poorly the initial point is chosen. We assume that the method does not stop and produces an infinite sequence of iterates. We now present modified sufficient conditions for superlinear convergence [17,40] within the context of q-calculus.

Theorem 1.

Let $\{x^k\}$ be a sequence generated by (q-QNUM), and let be a convex set. Also, let , , and

- (a)

- for all

- (b)

- for all with

- (c)

- for all ,

- (d)

- (e)

- (f)

Then, for all , we have that

- 1.

- 2.

- ,

- 3.

- 4.

Then the sequence $\{x^k\}$ converges to a local Pareto point $x^*$, and the rate of convergence is superlinear.

Proof.

From parts 1 and 3 of this theorem and the triangle inequality,

From (d) and (f), it follows that and . We also have

that is,

Since and we get

for all . The Armijo condition holds for . Hence, part 1 of this theorem holds. We now set and . Thus, we get . We now define . Therefore,

We now estimate . For , we define

and

where , for all , are KKT multipliers. We obtain the following:

and

Then, . We get

From assumptions (b) and (c) of this theorem,

hold for all with and . We have

Since , then

and

We have

Thus,

Thus, part 4 is proved. Finally, we prove the superlinear convergence of . First, we define

and

From the triangle inequality, assumptions (e) and (f), and part 1, we have . Choose any and define

The inequalities

for all with and

for all and hold for both . Assumptions (a)–(f) are satisfied with and in place of and , respectively. We have

Letting , we get . Using the last inequality and part 4, we have

From the above and the triangle inequality, we have

that is,

Since and , we get

where is chosen arbitrarily. Thus, the sequence converges superlinearly to $x^*$. □

5. Numerical Results

The proposed algorithm (q-QNUM), i.e., Algorithm 2 presented in Section 4, is implemented in MATLAB (R2017a) and tested on several test problems from the literature. All tests were run under the same conditions. Box constraints of the form $lb \le x \le ub$ are used for each test problem. These constraints are included in the direction search problem (CQOP) so that the newly generated point always lies in the same box, that is, $lb \le x + d \le ub$ holds. The algorithm stops at $x^k$ when , where . All test problems given in Table 1 are solved 100 times, with starting points chosen randomly from a uniform distribution between $lb$ and $ub$. The first column of the table gives the name of the test problem, formed from the abbreviation of the authors' names and the number of the problem in the corresponding paper. The second column indicates the source of the problem, and the third column gives the lower and upper bounds. We compare the results of (q-QNUM) with those of (QNMO) from [40] in terms of the number of iterations, the number of objective function evaluations, and the number of gradient evaluations. From Table 1, we conclude that our algorithm shows better performance.

Table 1.

Numerical Results of Test Problems.

Example 2.

Find the approximate Pareto front using (q-QNUM) and (QNMO) for the given (UMOP) [45]:

where .

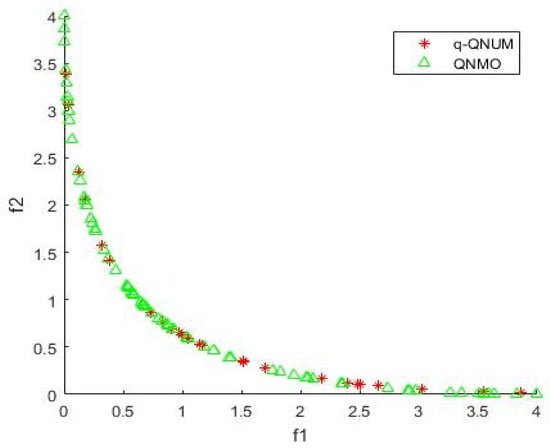

The Pareto points generated by (q-QNUM) with Algorithm 1 and by (QNMO) are shown in Figure 1. One can also observe the number of iterations each method needed to generate the approximate Pareto front of the above (UMOP).

Figure 1.

Approximate Pareto Front of Example 2.
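Figure 1 compares approximate Pareto fronts. When such a front is assembled from many computed points, for example from runs started at random points as in Table 1, points with dominated objective vectors can be discarded. The following is a minimal sketch of the dominance relations defined in Section 2 and of a brute-force (quadratic-time) filter. The function names are hypothetical. A point that survives the filter is nondominated only within the sample, not necessarily Pareto optimal over the whole feasible region.

```python
import numpy as np


def dominates(u, v):
    """u Pareto-dominates v (minimization): u <= v componentwise and u != v."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    return bool(np.all(u <= v) and np.any(u < v))


def strictly_dominates(u, v):
    """u < v in every component (the relation behind weak Pareto optimality)."""
    return bool(np.all(np.asarray(u, dtype=float) < np.asarray(v, dtype=float)))


def nondominated(values):
    """Indices of objective vectors not dominated by any other vector in the sample."""
    vals = np.asarray(values, dtype=float)
    return [
        i
        for i in range(len(vals))
        if not any(dominates(vals[k], vals[i]) for k in range(len(vals)) if k != i)
    ]
```

For the objective vectors (1, 4), (2, 2), (3, 3), (4, 1), (2, 5), the filter keeps indices 0, 1, and 3. In that sample, (3, 3) is dominated by (2, 2), and (2, 5) is dominated by (1, 4).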

6. Conclusions

The q-Quasi-Newton method converges superlinearly to a solution of (UMOP) if all objective functions are strongly convex within the context of the q-derivative. In a neighborhood of this solution, the algorithm uses the full Armijo step length. In the numerical experiments, the proposed algorithm performed better than (QNMO) [40] on the test problems considered.

Author Contributions

K.K.L. gave reasonable suggestions for this manuscript; S.K.M. gave the research direction of this paper; B.R. revised and completed this manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Science and Engineering Research Board (Grant No. DST-SERB- MTR-2018/000121) and the University Grants Commission (IN) (Grant No. UGC-2015-UTT–59235).

Acknowledgments

The authors are grateful to the anonymous reviewers and the editor for the valuable comments and suggestions to improve the presentation of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Eschenauer, H.; Koski, J.; Osyczka, A. Multicriteria Design Optimization: Procedures and Applications; Springer: Berlin, Germany, 1990.

2. Haimes, Y.Y.; Hall, W.A.; Friedmann, H.T. Multiobjective Optimization in Water Resource Systems; Elsevier Scientific: Amsterdam, The Netherlands, 1975.

3. Nwulu, N.I.; Xia, X. Multi-objective dynamic economic emission dispatch of electric power generation integrated with game theory based demand response programs. Energy Convers. Manag. 2015, 89, 963–974.

4. Badri, M.A.; Davis, D.L.; Davis, D.F.; Hollingsworth, J. A multi-objective course scheduling model: Combining faculty preferences for courses and times. Comput. Oper. Res. 1998, 25, 303–316.

5. Ishibuchi, H.; Nakashima, Y.; Nojima, Y. Performance evaluation of evolutionary multiobjective optimization algorithms for multiobjective fuzzy genetics-based machine learning. Soft Comput. 2011, 15, 2415–2434.

6. Liu, S.; Vicente, L.N. The stochastic multi-gradient algorithm for multi-objective optimization and its application to supervised machine learning. arXiv 2019, arXiv:1907.04472.

7. Tavana, M. A subjective assessment of alternative mission architectures for the human exploration of Mars at NASA using multicriteria decision making. Comput. Oper. Res. 2004, 31, 1147–1164.

8. Gass, S.; Saaty, T. The computational algorithm for the parametric objective function. Nav. Res. Logist. Q. 1955, 2, 39–45.

9. Miettinen, K. Nonlinear Multiobjective Optimization; Kluwer Academic: Boston, MA, USA, 1999.

10. Fishburn, P.C. Lexicographic orders, utilities and decision rules: A survey. Manag. Sci. 1974, 20, 1442–1471.

11. Coello, C.A. An updated survey of GA-based multiobjective optimization techniques. ACM Comput. Surv. (CSUR) 2000, 32, 109–143.

12. Fliege, J.; Svaiter, B.F. Steepest descent method for multicriteria optimization. Math. Method. Oper. Res. 2000, 51, 479–494.

13. Drummond, L.M.G.; Iusem, A.N. A projected gradient method for vector optimization problems. Comput. Optim. Appl. 2004, 28, 5–29.

14. Drummond, L.M.G.; Svaiter, B.F. A steepest descent method for vector optimization. J. Comput. Appl. Math. 2005, 175, 395–414.

15. Branke, J.; Deb, K.; Miettinen, K.; Słowiński, R. (Eds.) Multiobjective Optimization: Interactive and Evolutionary Approaches; Springer: Berlin, Germany, 2008.

16. Mishra, S.K.; Ram, B. Introduction to Unconstrained Optimization with R; Springer Nature: Singapore, 2019; pp. 175–209.

17. Fliege, J.; Drummond, L.M.G.; Svaiter, B.F. Newton’s method for multiobjective optimization. SIAM J. Optim. 2009, 20, 602–626.

18. Chuong, T.D. Newton-like methods for efficient solutions in vector optimization. Comput. Optim. Appl. 2013, 54, 495–516.

19. Qu, S.; Liu, C.; Goh, M.; Li, Y.; Ji, Y. Nonsmooth Multiobjective Programming with Quasi-Newton Methods. Eur. J. Oper. Res. 2014, 235, 503–510.

20. Jiménez, M.A.; Garzón, G.R.; Lizana, A.R. (Eds.) Optimality Conditions in Vector Optimization; Bentham Science Publishers: Sharjah, UAE, 2010.

21. Al-Saggaf, U.M.; Moinuddin, M.; Arif, M.; Zerguine, A. The q-least mean squares algorithm. Signal Process. 2015, 111, 50–60.

22. Aral, A.; Gupta, V.; Agarwal, R.P. Applications of q-Calculus in Operator Theory; Springer: New York, NY, USA, 2013.

23. Rajković, P.M.; Marinković, S.D.; Stanković, M.S. Fractional integrals and derivatives in q-calculus. Appl. Anal. Discret. Math. 2007, 1, 311–323.

24. Gauchman, H. Integral inequalities in q-calculus. Comput. Math. Appl. 2004, 47, 281–300.

25. Bangerezako, G. Variational q-calculus. J. Math. Anal. Appl. 2004, 289, 650–665.

26. Abreu, L. A q-sampling theorem related to the q-Hankel transform. Proc. Am. Math. Soc. 2005, 133, 1197–1203.

27. Koornwinder, T.H.; Swarttouw, R.F. On q-analogues of the Fourier and Hankel transforms. Trans. Am. Math. Soc. 1992, 333, 445–461.

28. Ernst, T. A Comprehensive Treatment of q-Calculus; Springer: Basel, Switzerland; Heidelberg, Germany; New York, NY, USA; Dordrecht, The Netherlands; London, UK, 2012.

29. Noor, M.A.; Awan, M.U.; Noor, K.I. Some quantum estimates for Hermite-Hadamard inequalities. Appl. Math. Comput. 2015, 251, 675–679.

30. Pearce, C.E.M.; Pečarić, J. Inequalities for differentiable mappings with application to special means and quadrature formulae. Appl. Math. Lett. 2000, 13, 51–55.

31. Ernst, T. A Method for q-Calculus. J. Nonl. Math. Phys. 2003, 10, 487–525.

32. Soterroni, A.C.; Galski, R.L.; Ramos, F.M. The q-gradient vector for unconstrained continuous optimization problems. In Operations Research Proceedings; Hu, B., Morasch, K., Pickl, S., Siegle, M., Eds.; Springer: Heidelberg, Germany, 2010; pp. 365–370.

33. Gouvêa, E.J.C.; Regis, R.G.; Soterroni, A.C.; Scarabello, M.C.; Ramos, F.M. Global optimization using q-gradients. Eur. J. Oper. Res. 2016, 251, 727–738.

34. Chakraborty, S.K.; Panda, G. Newton like line search method using q-calculus. In International Conference on Mathematics and Computing. Communications in Computer and Information Science; Giri, D., Mohapatra, R.N., Begehr, H., Obaidat, M., Eds.; Springer: Singapore, 2017; Volume 655, pp. 196–208.

35. Mishra, S.K.; Panda, G.; Ansary, M.A.T.; Ram, B. On q-Newton’s method for unconstrained multiobjective optimization problems. J. Appl. Math. Comput. 2020.

36. Jackson, F.H. On q-functions and a certain difference operator. Earth Environ. Sci. Trans. R. Soc. Edinb. 1908, 46, 253–281.

37. Bento, G.C.; Neto, J.C. A subgradient method for multiobjective optimization on Riemannian manifolds. J. Optimiz. Theory App. 2013, 159, 125–137.

38. Andrei, N. A diagonal quasi-Newton updating method for unconstrained optimization. Numer. Algorithms 2019, 81, 575–590.

39. Nocedal, J.; Wright, S.J. Numerical Optimization, 2nd ed.; Springer Series in Operations Research and Financial Engineering; Springer: New York, NY, USA, 2006.

40. Povalej, Z. Quasi-Newton’s method for multiobjective optimization. J. Comput. Appl. Math. 2014, 255, 765–777.

41. Ye, Y.L. D-invexity and optimality conditions. J. Math. Anal. Appl. 1991, 162, 242–249.

42. Morovati, V.; Basirzadeh, H.; Pourkarimi, L. Quasi-Newton methods for multiobjective optimization problems. 4OR-Q. J. Oper. Res. 2018, 16, 261–294.

43. Samei, M.E.; Ranjbar, G.K.; Hedayati, V. Existence of solutions for equations and inclusions of multiterm fractional q-integro-differential with nonseparated and initial boundary conditions. J. Inequal. Appl. 2019, 273.

44. Adams, C.R. The general theory of a class of linear partial difference equations. Trans. Am. Math. Soc. 1924, 26, 183–312.

45. Sefrioui, M.; Périaux, J. Nash genetic algorithms: Examples and applications. In Proceedings of the 2000 Congress on Evolutionary Computation, La Jolla, CA, USA, 16–19 July 2000; Volume 1, pp. 509–516.

46. Huband, S.; Hingston, P.; Barone, L.; While, L. A review of multiobjective test problems and a scalable test problem toolkit. IEEE T. Evolut. Comput. 2006, 10, 477–506.

47. Ikeda, K.; Kita, H.; Kobayashi, S. Failure of Pareto-based MOEAs: Does non-dominated really mean near to optimal? In Proceedings of the 2001 Congress on Evolutionary Computation, Seoul, Korea, 27–30 May 2001; Volume 2, pp. 957–962.

48. Shim, M.B.; Suh, M.W.; Furukawa, T.; Yagawa, G.; Yoshimura, S. Pareto-based continuous evolutionary algorithms for multiobjective optimization. Eng. Comput. 2002, 19, 22–48.

49. Valenzuela-Rendón, M.; Uresti-Charre, E.; Monterrey, I. A non-generational genetic algorithm for multiobjective optimization. In Proceedings of the Seventh International Conference on Genetic Algorithms, East Lansing, MI, USA, 19–23 July 1997; pp. 658–665.

50. Viennet, R.; Fonteix, C.; Marc, I. Multicriteria optimization using a genetic algorithm for determining a Pareto set. Int. J. Syst. Sci. 1996, 27, 255–260.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).