1. Introduction

In exploratory data analysis, the statistician often uses clustering and visualization in order to improve his knowledge on the data. In visualization he looks for some principal components explaining some major characteristics of the data. For example in principal component analysis (PCA) the goal is to find a linear combination of the variables explaining the major variability of the data. In cluster analysis, the goal is to find some clusters explaining the major heterogeneity of the data. In this article we suppose that the data can contain several clustering latent variables, that is, we are in the multiple partition setting, and we are simultaneously looking for clustering subspaces, that is, linear projections of the data each one related to some clustering latent variable thus the developed model is later called multi-partitions subspace clustering. A solution to perform multi-partition subspace clustering is to use a probabilistic model on the data such as a mixture model [

1], it allows to perform the parameters estimation, and model selection such as the choice of the number of subspaces and the number of clusters per subspace using standard model choice criteria such as BIC [

2]. Thus the main fields related to our work are model based subspace clustering and multi-partitions clustering.

In the model based subspace clustering framework, let first notice that PCA can be re-interpreted in a probabilistic way by considering a parsimonious version of a multivariate Gaussian distribution [

3] and that the

k-means algorithm can be re-interpreted as a particular parsimonious Gaussian mixture model estimated using a classification EM algorithm [

4]. A re-interpretation of the probabilistic PCA has also been used in clustering by Bouveyron et al. [

5] in order to cluster high-dimensional data. Although the proposed high dimensional mixture does not performs dimension reduction, it rather operates a class per class dimension reduction which does not allow to have a global model-based data visualization. Thus Bouveyron and Brunet [

6] proposed the so called Fisher-EM algorithm which simultaneously performs clustering and dimension reduction. This is performed through a modified version of the EM algorithm [

7] by including a Fisher step between the E and the M step. This approach allows the same projection to be applied to all data, but does not guarantee the increasing of the likelihood at each iteration of the algorithm.

In the context of multi-partitions clustering, Galimberti and Soffritti [

8] assumed that the variables can be partitioned into several independent blocks, each one following a full-covariance Gaussian mixture model. The model selection was done by maximizing the BIC criterion by a forward/backward approach. Then, Galimberti et al. [

9] generalized thier previous work by relaxing the assumption of block independence. The proposed extension takes into account three types of variables, classifying variables, redundant variables and non-classifying variables. In this context, the choice of the model is difficult because several roles have to be taken into account for each variable, which requires a lot of calculations, even for the reallocation of only one variable. Poon et al. [

10] also took into account the multi-partition setting, called as facet determination in their article. The model considered is similar to that of Galimberti and Soffritti [

8], but it also allows tree dependency between latent class variables, resulting in the Pouch Latent Tree Models (PLTM). Model selection is performed by a greed search to maximize the BIC criterion. The resulting model allows a broad understanding of the data, but the tree structure search makes estimation even more difficult as the number of variables increases. More recently, Marbac and Vandewalle [

11] proposed a tractable muti-partition clustering algorithm not limited to continuous data; in the Gaussian setting it can be seen as particular case of Galimberti and Soffritti [

8] where they assume a diagonal covariance matrix allowing a particularly efficient search of the partition of the variables in sub-vectors.

In this article we suppose that the data can contain

several clustering latent variables, that is we are in the multiple partition setting. But contrary to Marbac and Vandewalle [

11] where it is assumed that variables are divided into blocks each one related to some clustering of the data, we are looking for

clustering subspaces, i.e., linear projections of the data each one related to some particular clustering latent variable thus replacing the combinatorial question of finding the partition of the variables in independent sub-vectors by the question of finding the coefficients of the linear combinations. The proposed approach can be related to the independent factor analysis [

12] where the author deals with source separation, in our framework a source can be interpreted as some specific clustering subspace; however, their approach becomes intractable as the numbers of sources increases and does not allow to consider multivariate subspaces. Moreover, it is not invariant up to a rotation and rescaling of the data, where our proposed methodology is.

The organisation of the paper is the following, in

Section 2 we present a reinterpretation of the factorial discriminant analysis as a search of discriminant components and of independent non-discriminant components. In

Section 3, the multi-partitions subspace clustering model and the EM algorithm to estimate the parameters of the model will be presented. In

Section 4, results on simulated and real data will show the interest of the method in practice. In

Section 5, a conclusion and discussion of future extension of the paper will be made.

2. Probabilistic Interpretation of the Factorial Discriminant Analysis

2.1. Linear Discriminant Analysis (LDA)

It is supposed that n quantitative data in dimension d are available, the data number i will be denoted by , where is the value of variable j of data i. The whole dataset will be denoted by . Let assume that the data is clustered in K clusters, the class label of data i will be denoted by , with equals to 1 if data i belongs to cluster k and 0 otherwise. Let also denote by the partition of . In this section is supposed to be known. For sake of simplicity the random variables and their realisations will be denoted in lower case, and p will be used as a generic notation to denote a probability distribution function (p.d.f.) which will be interpreted according to its arguments.

In the context of linear discriminant analysis [

13], it is supposed that the distribution

given the cluster follows a

d-variate Gaussian distribution with common covariance matrices:

with

the vector of means in cluster

k and

the common class conditional covariance matrix. Let also denote by

the prior weights of each cluster.

The posterior cluster membership probabilities can be computed using the Bayes formula:

where

stands for the p.d.f. of the

d-variable Gaussian distribution with expectation

and covariance matrix

.

Let

denote the class conditional mean in cluster

k:

with

the number of data in cluster

k, and by

the unconditional mean. Let also denote by

the empirical intra-class covariance matrix:

and by

the empirical between class covariance matrix:

If the data are supposed to be independent, the likelihood can simply be written as:

The maximum likelihood estimators of the parameters of

,

and

are

,

and

. A new data point can be then classified by plugin the estimated values of the parameters in Equation (

1):

the resulting classification boundary being linear in this case.

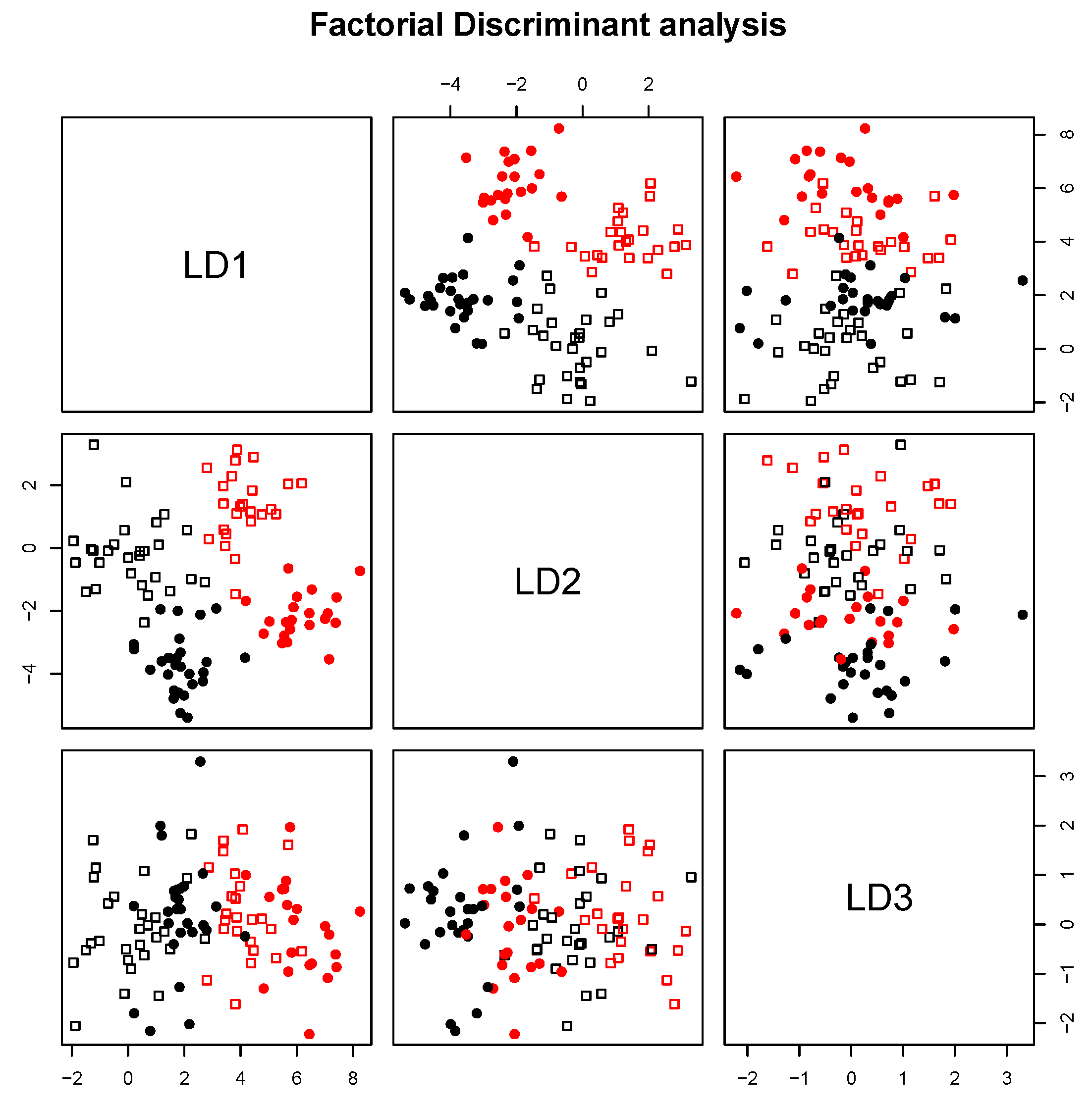

2.2. Factorial Discriminant Analysis (FDA)

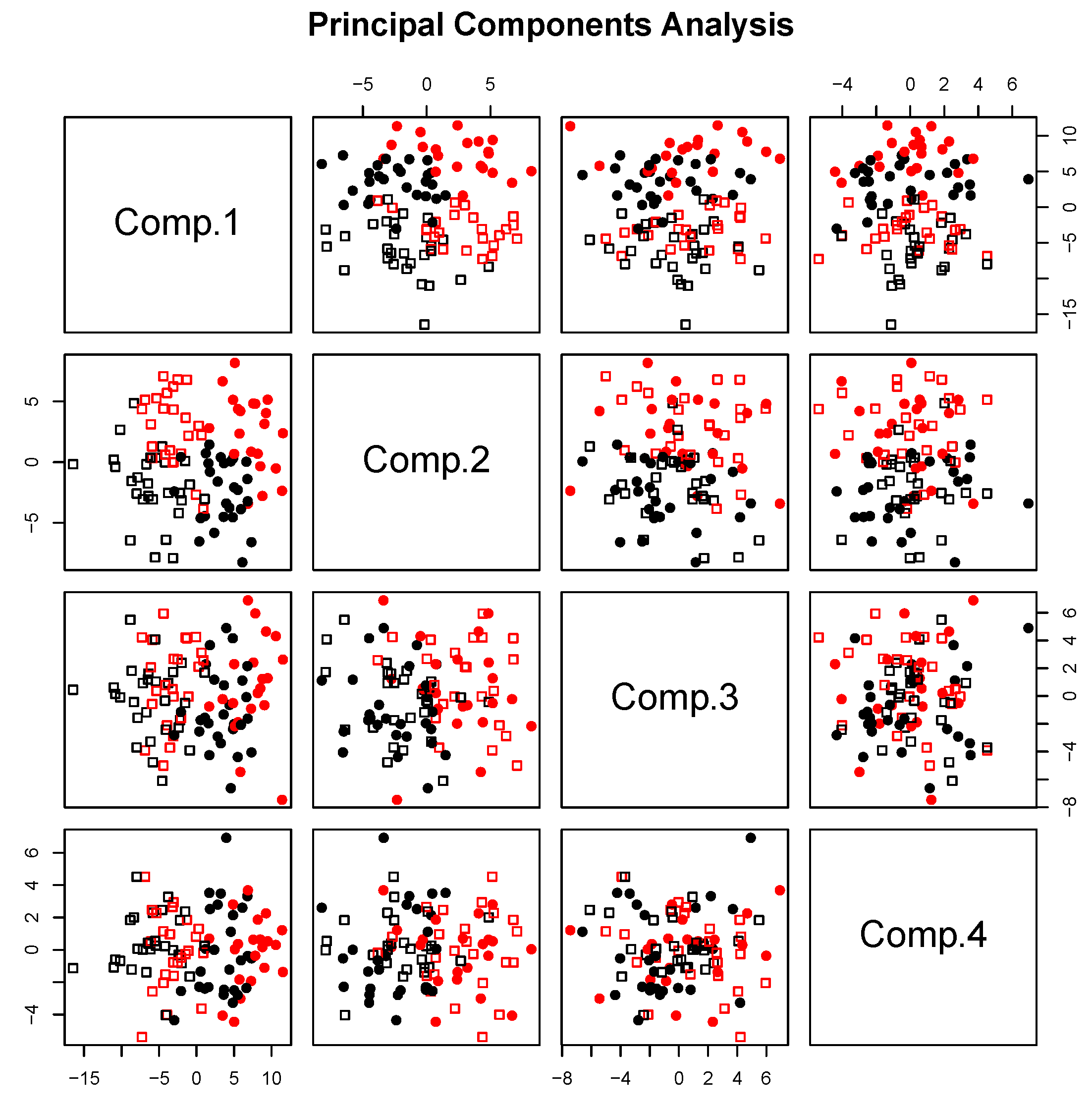

Let us note that, from a descriptive viewpoint, one can be interested in dimension reduction in order to visualize the data. This could be done by using PCA, but from a classification perspective the component explaining the largest variability in the data are often not the same that the components providing the best separation between the clusters.

The goal of factorial discriminant analysis (FDA) is to find the component maximizing the variance explained by the cluster above the intra-class variance. The coefficients of the first discriminant component

is defined by

It is well known that

is the eigen vector associated with the highest eigen value

of

[

14]. The remaining discriminant components are obtained through the remaining eigen vectors of

. Let denote by

the eigen values of

sorted in decreasing order and by

the associated eigen vectors. Moreover, if each component is constrained to have an intra-class variance equal to one (i.e.,

,

), the classification obtained using the Mahalonobis distance can simply be obtained by using the Euclidean distance on the data projected on the discriminant components.

2.3. Equivalence between LDA and FDA

As proved in Campbell [

15] and detailed in Trevor Hastie [

16], the FDA can be interpreted in a probabilistic way as an LDA where the rank of

is constrained to be equal to

p with

under the common class covariance matrix assumption. This allows us to reparametrize the probabilistic model in the following way:

where

,

and

. Let notice that this new parametrization at this step is not unique but the model can easily be made identifiable by imposing some constraints on the parameters.

Let and two random variables, the new parametrization can be reinterpreted in the following generative framework:

Draw : where stands for the multinomial distribution

Draw :

Draw :

Compute based on and : .

Thus the p.d.f. of

can be factorized in the following way:

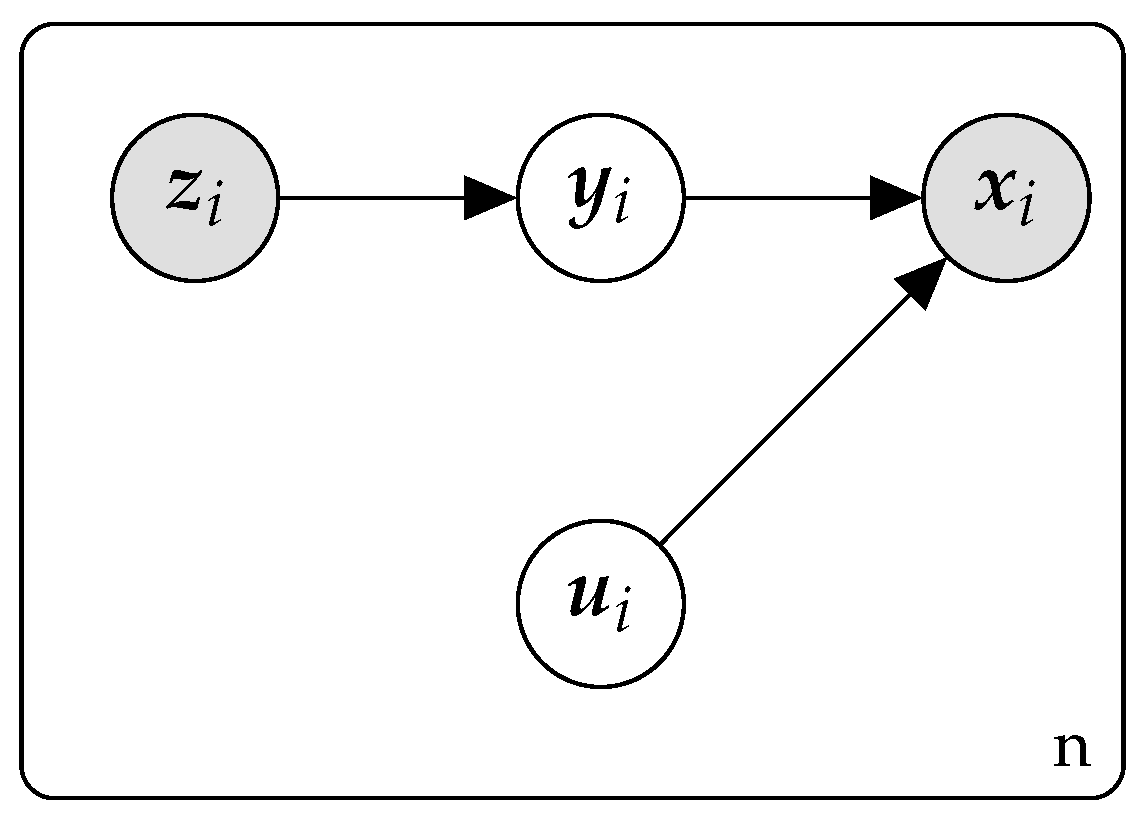

From a graphical angle the model can be reinterpreted as in

Figure 1, where

and

are latent random variables.

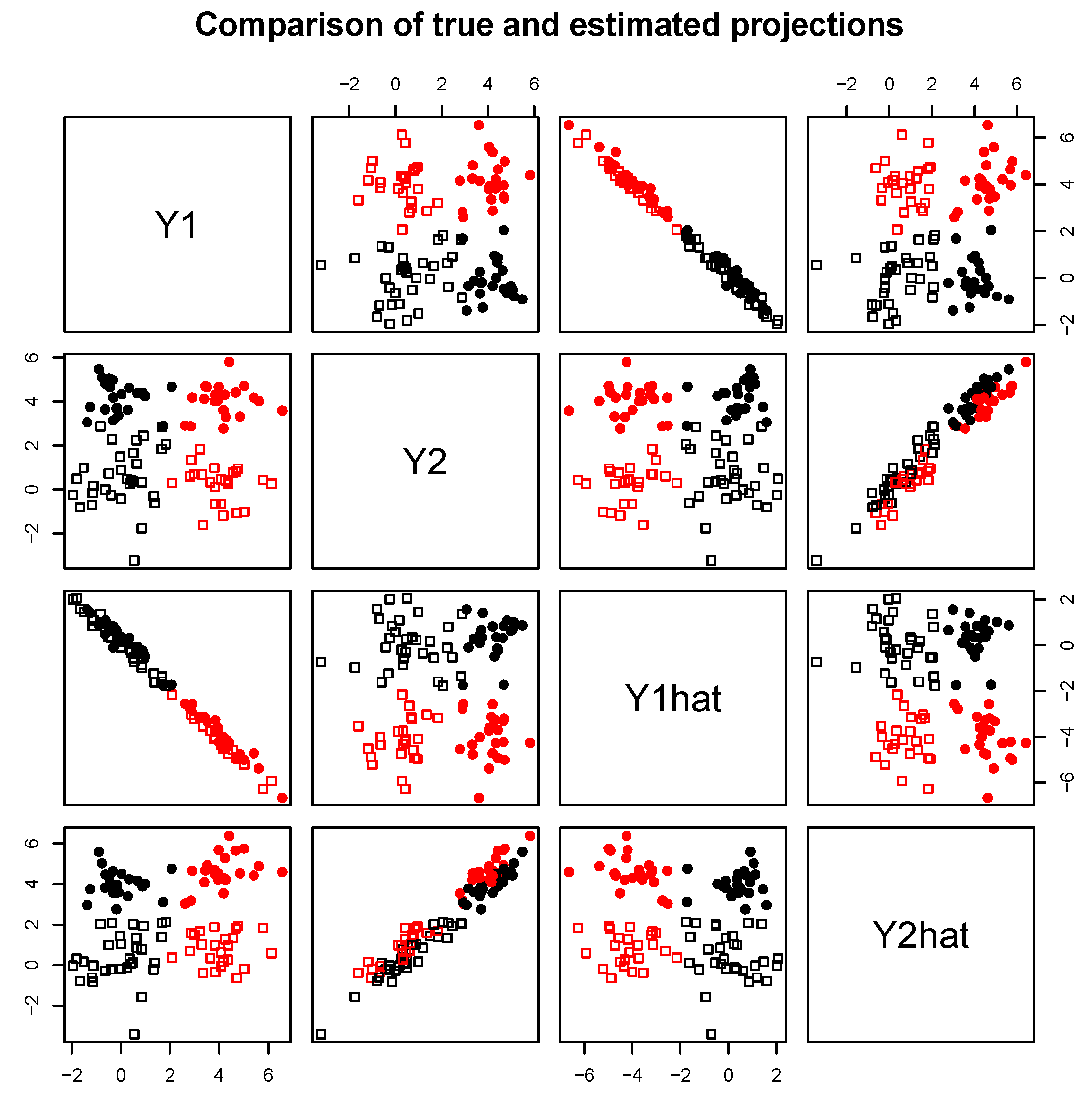

In practice we are interested in finding and from . We will denote by and the matrices which allow this computation: , . It is obvious that , for the rest of the article only the parametrization in terms of and will be used.

The main interest of this parametrization is that

It means that only

is required to compute the posterior class membership probabilities:

Parameters are estimated by maximum likelihood, using the variable change formulae the likelihood can be written:

The first term is related to the variable change, the second term is related to the discriminant components and the third term is related to prior class membership probabilities and the fourth term is related to the non-discriminant components. In practice, this decomposition is the corner stone of the proposed approach since it separates the clustering part from the non-clustering part.

As stated in Campbell [

15] the maximum likelihood estimator of

are the first

p eigen vectors

of

in rows:

renormalized such that:

The maximum likelihood estimator of

is obtained such that:

and that

with

the diagonal matrix of the

last eigen-values of

(with the

last eigen-values which are null).

can simply be obtained by multiplying the last

egien-vectors of

by

then renormalize them such that Equation (

3) is satisfied. Such renormalization makes the parameters

V et

R identifiable up to a sign.

Moreover

and

are estimated by

As stated in Trevor Hastie [

16] the link between the two parametrizations is as follows:

and

From this formula we can clearly see that the reduced rank constraint operates some regularization on the parameters estimation. Moreover, from a practical perspective the reparametrization Equation (

2) is more efficient for computing the posterior class membership probabilities.

2.4. Application in the Clustering Setting

As in Trevor Hastie [

16], the model can easily be used in the clustering setting using the EM algorithm to maximise the likelihood. Thus

,

,

are recomputed at each iteration by using their version weighted by their posterior membership probabilities.

The EM algorithm is now presented. The algorithm is first initialized with some starting value of the parameters or of the partition. Then the E step and the M step are iterated until convergence. Let denote the value of the parameters at iteration r, the E and the M steps are the followings:

E step: compute the posterior class membership probabilities.

with

.

Then deduce , , and as in the previous section using the eigenvalue decomposition of .

As noticed in Trevor Hastie [

16], this approach is not equivalent to performing a standard EM algorithm and then performing FDA at the end of the EM algorithm. FDA must be computed at each iteration of the EM algorithm since the posterior membership probabilities are only computed based on the

p first clustering projections.

Let us also notice that the M step can be interpreted in a Fisher-M step since FDA is required. In this sense it can be interpreted as a particular version of the Fisher-EM algorithm of Bouveyron and Brunet [

6]. Although the homoscedasticity could be seen as particularly constraining, it is the best framework for introducing our model in the next section, since it is easily interpretable and allows for efficient computation owing to the closed form of FDA. This limitation could be easily overcome by using rigorous extensions of the FDA in the heteroscedastic setting as in Kumar and Andreou [

17]; however, the computation would be much more intensive, since in this case no closed form formula is available and an iterative algorithm would be required even for finding the best projection.

3. Multi-Partition Subspace Mixture Model

3.1. Presentation of the Model

Let us now suppose that instead of having only one class variable

for data

i, we now have

H class variables

with

modalities. It is assumed that

are independent, with

denoted by

. Let also denote by

the variables related to the clustering variable

such that:

and that we will denote by

.

Let us still denote by

the non clustering variables

Let us also define

by:

The

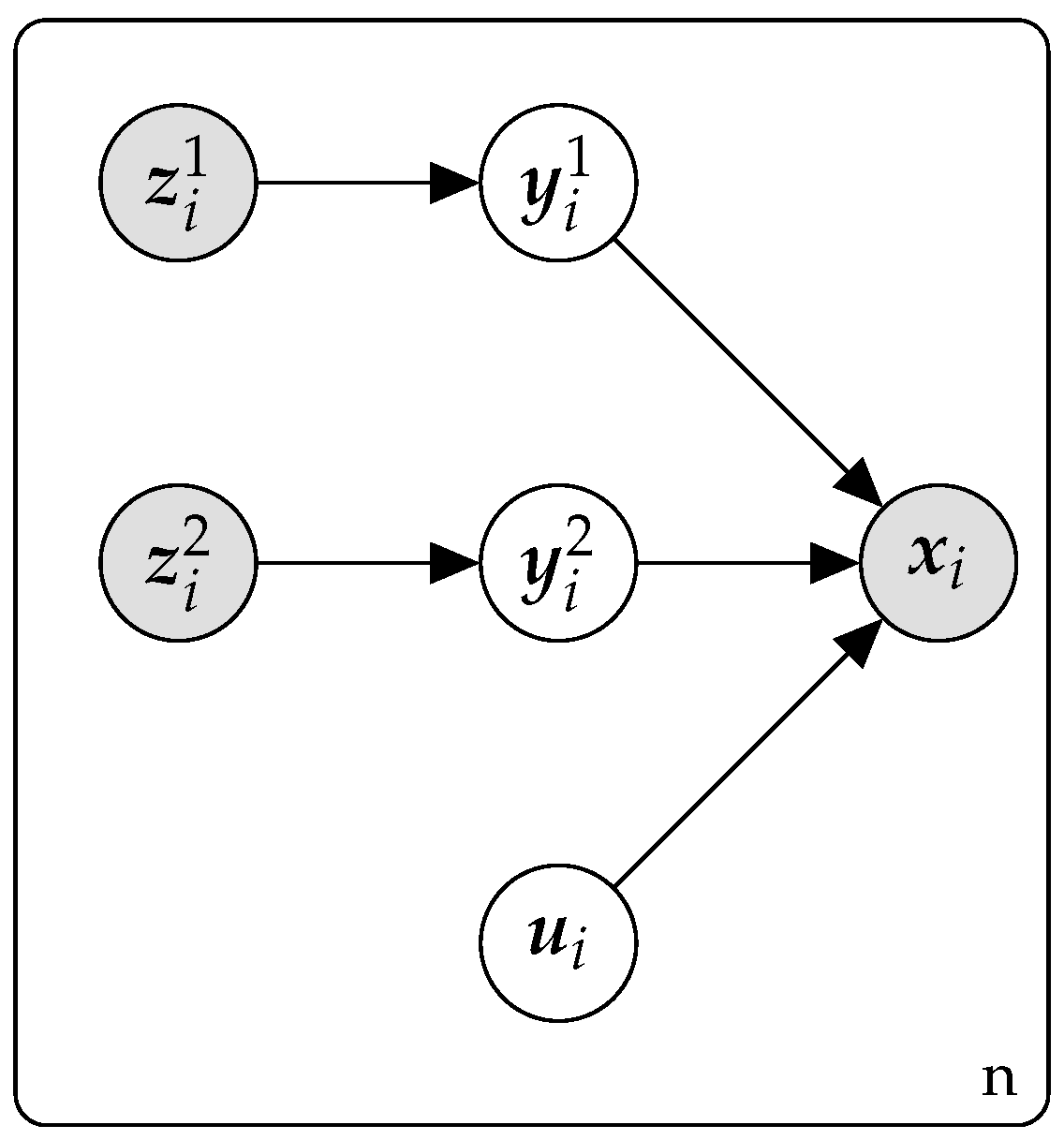

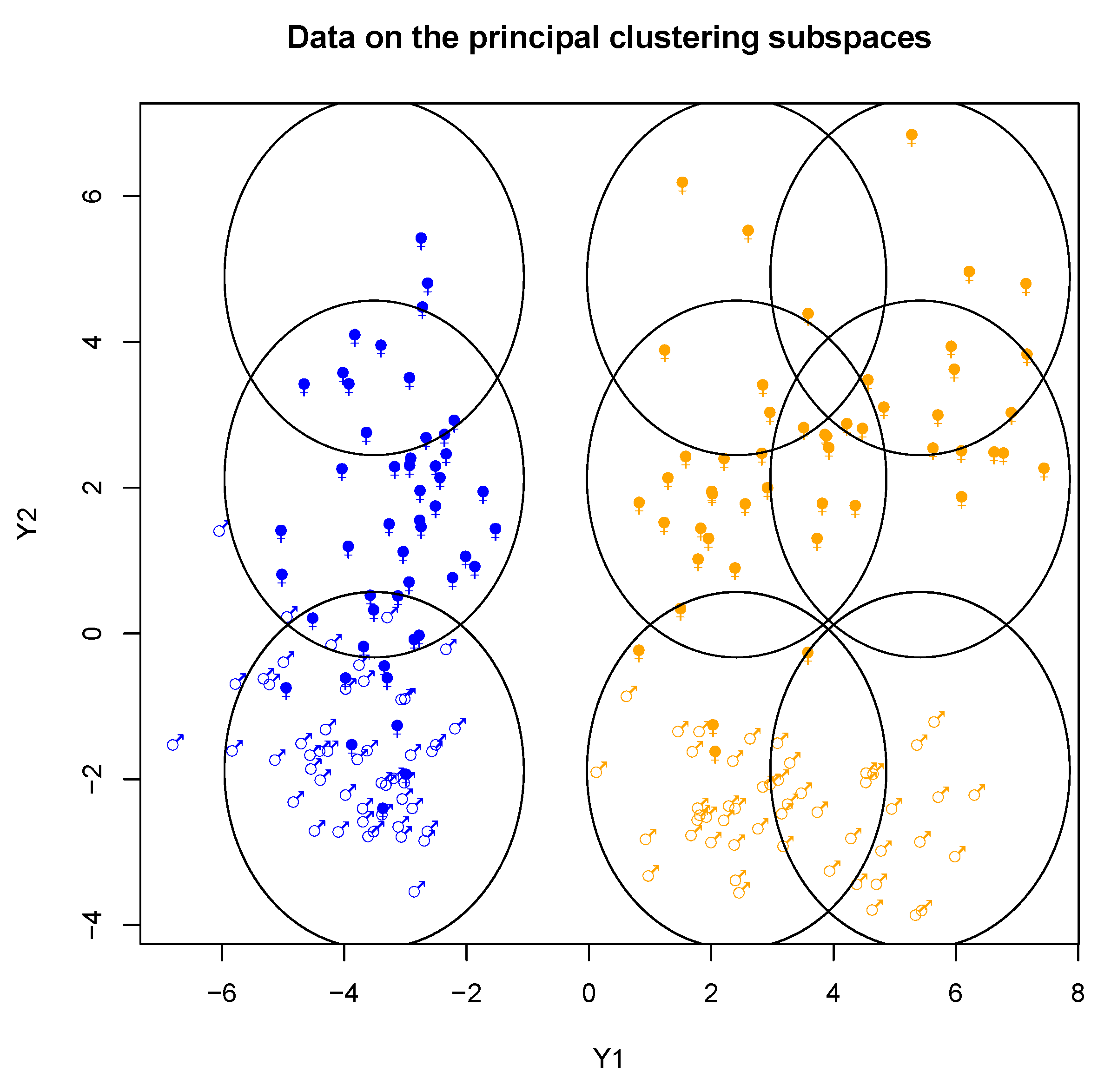

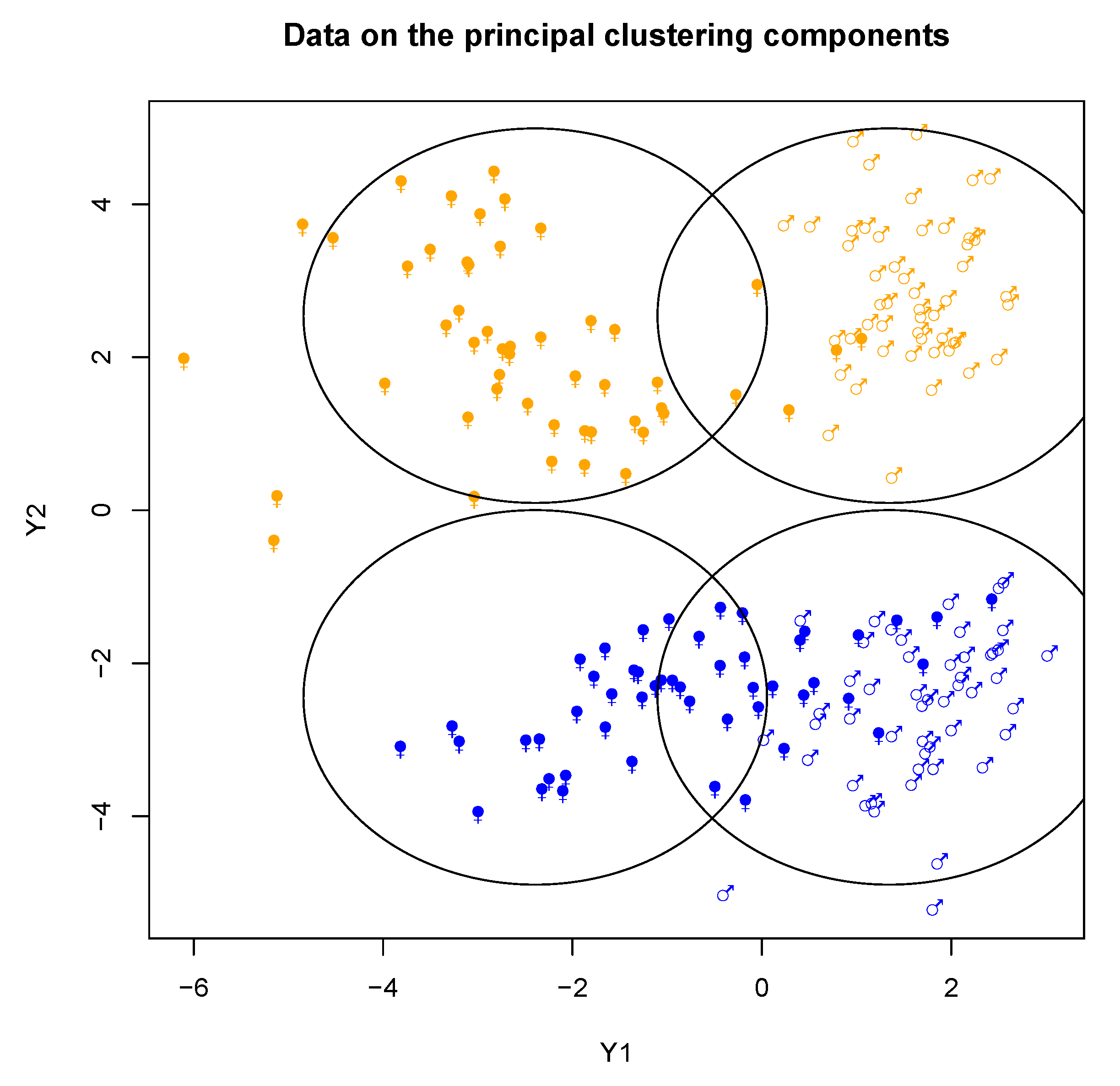

Figure 2 illustrates the model in the case of

.

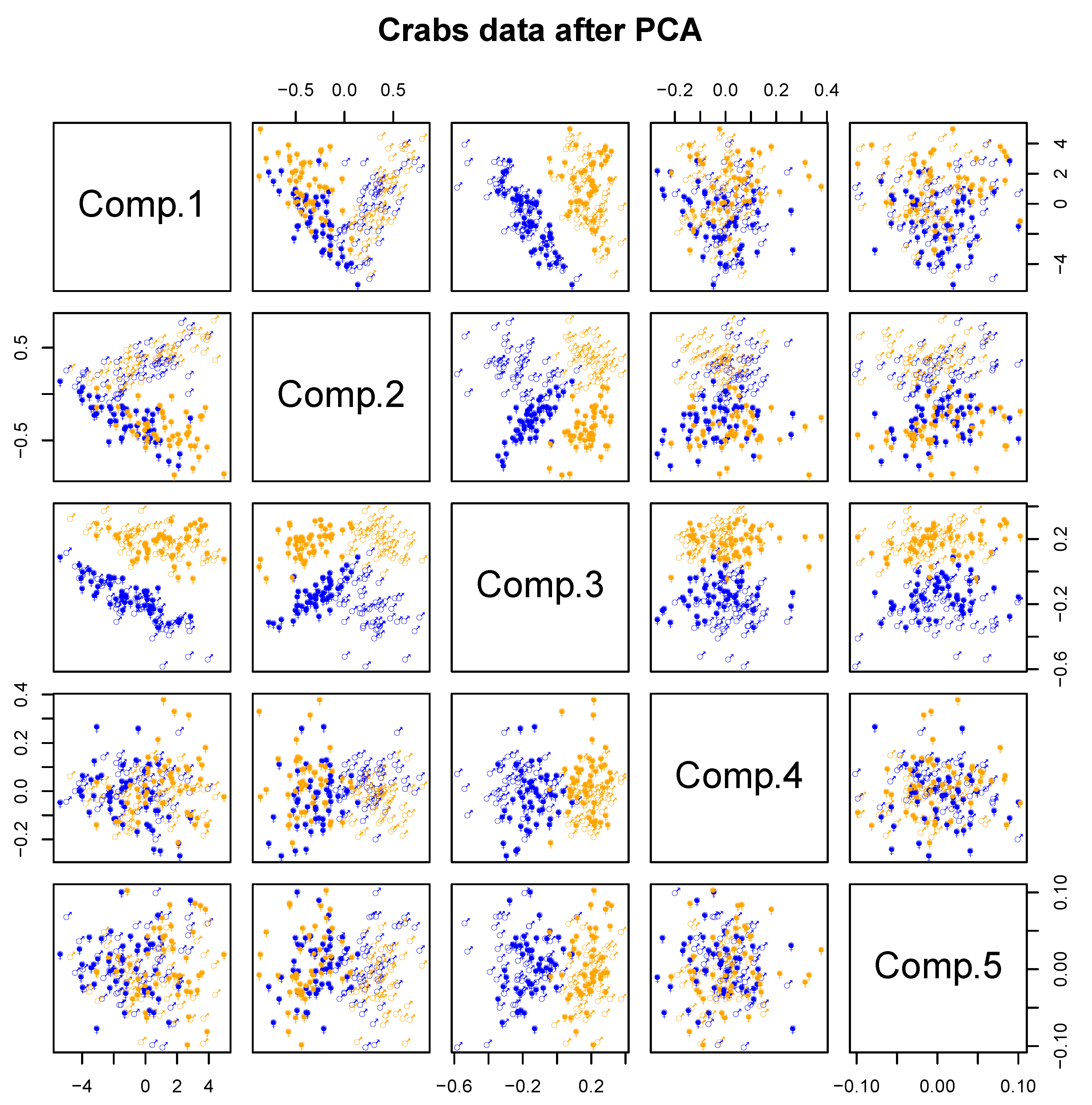

Let us notice that this model allows us to visualize many clustering viewpoints in a low dimensional space since can be summarized by , …, . For instance, one can suppose that . In this case each clustering variable can be visualized on one component. We will denote by the parameters of the model to be estimated.

3.2. Discussion about the Model

The Cartesian product of cluster spaces results in

clusters, which can be very large without needing many parameters. Thus the proposed model can be interpreted as being a very sparse Gaussian mixture model allowing the possibility to deal with a very large number of clusters, the resulting conditional means and covariances matrices are given in the following formulas:

and

Thus, the expectation of given in all the clusters is a linear combination of the cluster specific means which can be referred to as a multiple-way MANOVA setting. On the one hand, as a particular homoscedastic Gaussian mixture, our model is more prone to model bias than free homoscedastic Gaussian mixture, and in the case when our model would be well-specified the homoscedastic Gaussian mixture would give a similar clustering for a large sample size (i.e., the same partitions with respect to the partition resulting from the product space of our multi-partitions model). On the other hand, our approach produces a factorised version of the partition space as well as the related clustering subspaces which is not a standard output of clustering methods, and it can deal with a large number of clusters in a sparse way which can be particularly useful for a moderated sample size. In practice, the choice between our model and an other mixture model can simply be performed through the BIC criterion.

In some sense our model can be linked with the mixture of factor analyzers [

18]. In mixture of factor analyzers the model is of the type:

where

is a low rank matrix. But here we have chosen a model of the type

which allows us to deal with the noise in a different way. Actually, our model is invariant up to a bijective linear transformation of the data which is not the case for the mixtures of factor analyzers. On the other hand, our model can only deal with data with moderated dimension with respect to the number of statistical units; it assumes that the sources

can be recovered from the observed data

.

3.3. Estimation of the Parameters of the Model in the Supervised Setting

The likelihood of the model can be written:

The likelihood cannot be maximized directly. However, in the case of

, it reduces to the problem of

Section 2. Let notice that if all the parameters are fixed except

and

,

and

, the optimisation can be easily performed by constraining

and

to be linear combinations of

and

. Thus the likelihood will be optimized by using an alternate optimization algorithm. Let

the matrix which allow to compute

and

based on

and

:

where

is the sub-matrix containing the

first rows of

and

the matrix containing the last

rows of

.

Thus, the increase of the likelihood when all the parameters are fixed except

,

,

and

becomes:

By denoting

we have to maximize:

Consequently, and the others parameters can be obtained by applying a simple FDA on the data . In order to optimise over all the parameters, we can loop over all the clustering dimensions.

Thus, in the case of mixed continuous and categorical data, this model can be used to visualize the clustering behavior of the categorical variables with respect to the quantitative ones.

3.4. Estimation of the Parameters of the Model in the Clustering Setting

Here our main goal is to consider the clustering setting, that is, when are unknown. Consequently we will use an EM algorithm to “reconstitute the missing label” in order to maximize the likelihood. Thus the algorithm stays the same as in the supervised setting, except that the data at each iteration are now weighted by instead of .

The algorithm is the following:

3.5. Parsimonious Models and Model Choice

The proposed model needs the user to define the number of clustering subspaces H, the number of cluster in each clustering subspace , …, , and the dimensionality , …, of each subspace. The constraints are that , that and . It is clear that the number of possible models can become very high. To limit the combinatorial aspect, one can impose and/or . In practice the choice of enforces to find clustering which could be visualized in one dimension, which can help the practitioner. Moreover, choosing is the minimal requirement in order to investigate a clustering structure. However, if possible we recommend to explore the largest possible number of models and choosing the best one with the BIC. Let us define the following parsimonious models and their related number of parameters:

the general form of the proposed model, the index

h will be removed if values are the same for each clustering subspace.

where the number of clusters is the same for each subspace

where the dimensionalities are the same for each subspaces

where the dimensionalities are equals to one for each subspaces

where the number of clusters is the same for each subspace and the dimensionalities are the same for each subspace

where the number of clusters is the same for each subspace and the dimensionalities are equals to one for each subspaces

For a given model

m the BIC is computed as:

where

is the number of parameters of the model detailed above. Thus the model choice consists of choosing the model maximising the BIC. BIC enjoys good theoretical consistency properties, thus providing a guarantee to select the true model as the number of data increases. The ICL criterion [

19] could also be used to enforce the choice of well separated clusters, since from a classification perspective BIC is known to over-estimate the number of clusters if model assumption are violated. Let us however notice that in practice the user could be mainly interested by a low value of

H, since even

can provide him with new insights about his data, focusing on finding several clustering view points.

5. Conclusions and Perspectives

We have proposed a model which allows us to combine visualization and clustering with many clustering viewpoints. Moreover, we have shown the possibility of performing model choice by using the BIC criterion. The proposed model can provide new information on the structure present in the data by trying to reinterpret the cluster as a result of the Cartesian product of several clustering variables.

The proposed model is limited to the homoscedatic setting, which could be seen as a limitation; however, from our point of view this is more robust than the heteroscedastic setting, which is known to be jeopardized by the degeneracy issue [

21]. However, the extension of our work on the heteroscedastic setting can easily be performed from the modeling point of view; the main issue in this case would be the parameters estimation where an extension of the FDA to the heteroscedastic setting would be needed, as presented in Kumar and Andreou [

17]. Another difficult issue is the choice of

H,

and

, which is very combinatorial. Here we have proposed an estimation strategy for all these tuning parameters being fixed, and then performed a selection of the best tuning according to BIC. However, in future work, a model selection strategy to perform the model selection through a modified version of the EM algorithm will also be investigated as in Green [

22]; it would thus limit the combinatorial aspect of the global model search through EM-wise local model searches.