1. Introduction

In image deblurring we are concerned with reconstructing an approximation of an image from blurred and noisy measurements. This process can be modeled by an integral equation of the form

where

is the original image and

is the observed image, which is obtained from the combination of a (Hilbert-Schmidt) integral operator, represented by

, and the addition of some (unavoidable) noise

coming from, e.g., perturbations on the observed data, measurement errors, and approximation errors. By assuming the kernel

to be compactly supported and considering the ideal case

Equation (

1) becomes

where

K is a compact linear operator. In this context,

is generally called the

point spread function (PSF).

Considering a uniform grid, images are represented by their color intensities measured on the grid (pixels). In this paper, for the sake of simplicity, we will deal only with square, gray-scale images, even though all the techniques presented here carry over to images of different sizes and to color images as well.

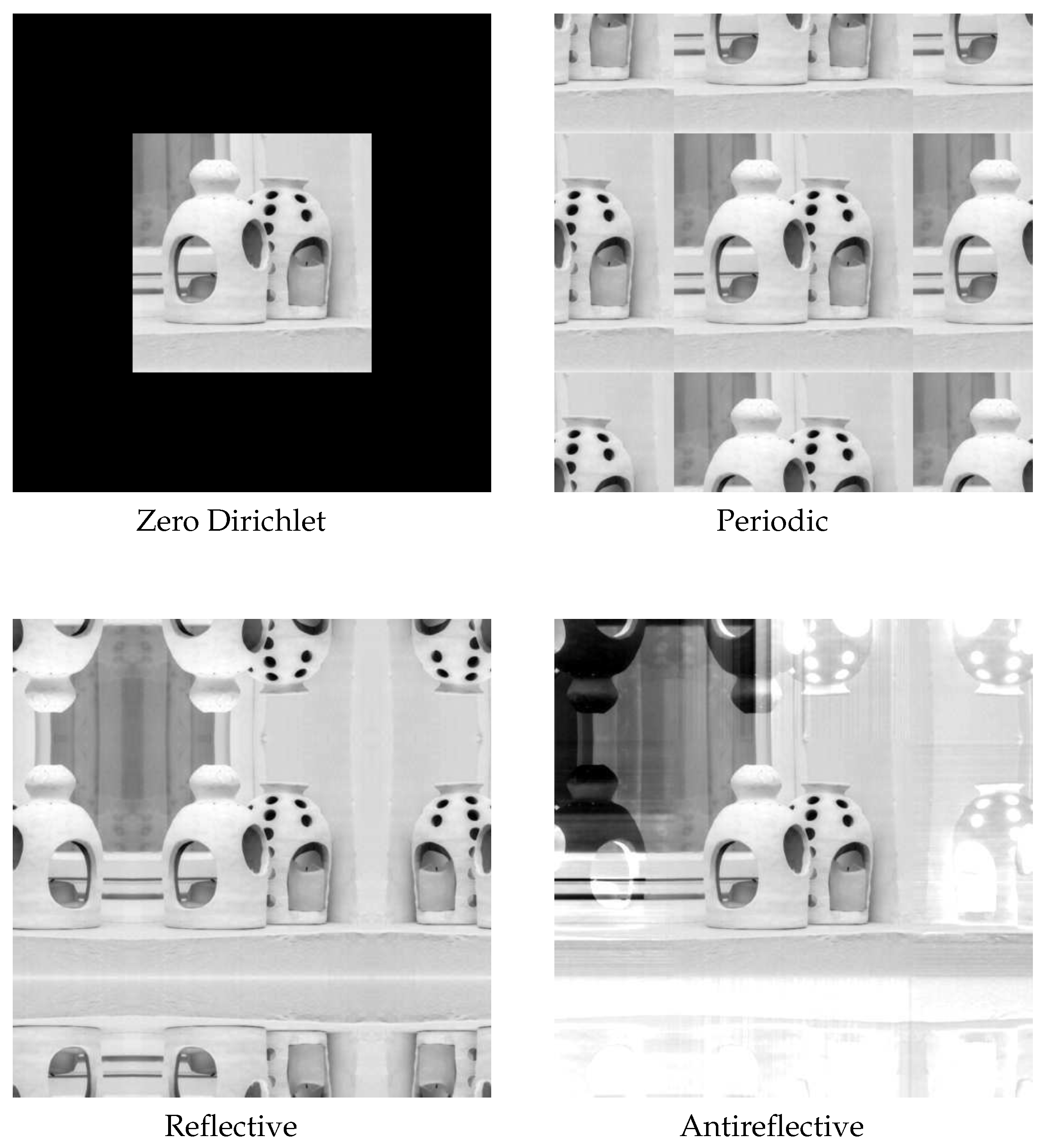

Collected images are available only in a finite region, the field of view (FOV), and the measured intensities near the boundary are affected by data which lie outside the FOV; see

Figure 1 for an illustration.

Denoting by

and

the stack ordered vectors corresponding to the observed image and the true image, respectively, the discretization of (

1) becomes

where the matrix

K is of size

, where

m and

k are the dimensions (in pixels) of the original picture. The matrix

K is often called the

blurring matrix. When imposing proper Boundary Conditions (BCs), the matrix

K becomes square

and in some cases, depending on the BCs and the symmetry of the PSF, it can be diagonalized by discrete trigonometric transforms. Indeed, specific BCs induce specific matrix structures that can be exploited to lessen the computational costs using fast algorithms. Of course, since BCs are artificially introduced, their advantages could come with drawbacks in terms of reconstruction accuracy, depending on the type of problem. The BCs approach forces a functional dependency between the elements of

external to the FOV and those internal to this area. If the BC model is not a good approximation of the real world outside the FOV, the reconstructed image can be severely affected by some unwanted artifacts near the boundary, called ringing effects; see, e.g., [

1].

The choice among the different BCs can be driven by additional knowledge of the true image and/or by the availability of fast transforms to diagonalize the matrix

K within

arithmetic operations. Indeed, the matrix-vector product can always be computed by the 2D FFT, after a proper padding of the image to convolve (see, e.g., [

2]), while the availability of fast transforms to diagonalize the matrix

K depends on the BCs. Among the BCs present in the literature, we consider: Zero (Dirichlet), Periodic, Reflective and Anti-Reflective, but our approach can be extended to other BCs like, e.g., synthetic BCs [

3] or high order BCs [

4,

5]. See

Figure 2 for an illustration of the described BCs.
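As a minimal sketch of how the four BCs act in practice, the following Python/NumPy snippet extends the image outside the FOV according to the chosen BC and then evaluates the blur through 2D FFTs on the padded array. The names `PAD_MODES` and `blur_with_bc`, as well as the assumption of an odd-sized square PSF centered in its middle pixel, are ours and serve only as an illustration.

```python
import numpy as np

# Hypothetical mapping from BC names to np.pad options (illustrative only).
PAD_MODES = {
    "zero": {"mode": "constant", "constant_values": 0.0},
    "periodic": {"mode": "wrap"},
    "reflective": {"mode": "symmetric"},
    "antireflective": {"mode": "reflect", "reflect_type": "odd"},
}

def blur_with_bc(image, psf, bc="reflective"):
    """Blur `image` with `psf`, extending the image outside the FOV by `bc`."""
    r = psf.shape[0] // 2  # PSF radius; assumes an odd, square PSF of size >= 3
    padded = np.pad(np.asarray(image, dtype=float), r, **PAD_MODES[bc])
    # Embed the PSF in an array of the padded size, with its center at index (0, 0).
    kernel = np.zeros_like(padded)
    kernel[:psf.shape[0], :psf.shape[1]] = psf
    kernel = np.roll(kernel, (-r, -r), axis=(0, 1))
    # Circular convolution of the padded image; wrap-around only touches the padding.
    blurred = np.real(np.fft.ifft2(np.fft.fft2(padded) * np.fft.fft2(kernel)))
    return blurred[r:-r, r:-r]  # crop back to the FOV
```

Padding by the PSF radius is enough for the cropped result to be free of wrap-around effects, so the same routine covers all four BCs at the cost of slightly larger FFTs.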

On the other hand, since Equation (

2) is the product of the discretization of a compact operator,

K is severely ill-conditioned and may be singular. Such linear systems are commonly referred to as linear discrete ill-posed problems; see, e.g., [

6] for a discussion. Therefore a good approximation of

cannot be obtained from the algebraic solution (e.g., the least-squares solution) of (

2), but regularization methods are required. The basic idea of regularization is to replace the original ill-conditioned problem with a nearby well-conditioned problem, whose solution approximates the true solution. One popular regularization technique is Tikhonov regularization, which amounts to solving

where

denotes the vector 2-norm and

is a regularization parameter to be chosen. The first term in (

3) is usually referred to as the fidelity term and the second as the regularization term. This translates into solving a linear problem, for which many efficient methods have been developed both for computing its solution and for estimating the regularization parameter

, see [

6]. This approach unfortunately comes with a drawback: the edges of restored images are usually over-smoothed. Therefore, nonlinear strategies have been employed to overcome this drawback, such as total variation (TV) [

7] and thresholding iterative methods [

8,

9]. That said, many nonlinear regularization methods have an inner step that applies a least-squares regularization and can therefore benefit from strategies previously developed for this simpler model.
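For illustration, when periodic BCs are imposed the blurring matrix is diagonalized by the 2D FFT, so a Tikhonov problem can be solved with a handful of FFTs. The sketch below assumes the standard form min ‖Kx − b‖² + μ‖x‖² (the precise form used in the paper may differ, e.g., through a regularization operator) and a PSF centered in its middle pixel; the function names are hypothetical.

```python
import numpy as np

def bccb_eigenvalues(psf, shape):
    """Eigenvalues of the periodic-BC blurring matrix generated by `psf`."""
    kernel = np.zeros(shape)
    kernel[:psf.shape[0], :psf.shape[1]] = psf
    # Move the PSF center (assumed to be its middle pixel) to index (0, 0).
    shift = (-(psf.shape[0] // 2), -(psf.shape[1] // 2))
    return np.fft.fft2(np.roll(kernel, shift, axis=(0, 1)))

def tikhonov_periodic(b, eigs, mu):
    """Solve the normal equations (K^T K + mu I) x = K^T b in the Fourier domain."""
    x_hat = np.conj(eigs) * np.fft.fft2(b) / (np.abs(eigs) ** 2 + mu)
    return np.real(np.fft.ifft2(x_hat))
```

The filter factor `conj(eigs) / (abs(eigs)**2 + mu)` damps the components associated with small eigenvalues, which is also the mechanism behind the over-smoothing of edges mentioned above.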

In the present paper, the two regularization strategies that we propose share two ingredients: wavelet decomposition and

-norm minimization on the regularization term. This is motivated by the fact that the wavelet coefficients (under some basis) of most real images are usually very sparse. In particular, here we consider the tight frame systems used in [

10,

11,

12], but the result can be easily extended to any framelet/wavelet system. Let

be a wavelet or tight-frame synthesis operator (

), the wavelet or tight-frame coefficients of the original image

are

such that

Within this frame set, the model Equation (

2) translates into

Recently, in [

13], a new technique was proposed which directly applies a single preconditioning operator

P to Equation (

4). The new preconditioned system becomes

Combining this approach with a soft-thresholding technique such as the modified linearized Bregman splitting algorithm [

14], in order to mimic the

-norm minimization, leads to the following preconditioned iterative scheme

where

is a positive relaxation parameter and

is the soft-thresholding function as defined in

6. Hereafter we will put

: this is justified by applying an implicit rescaling of the preconditioned system matrix

.
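To make the role of the soft-thresholding step concrete, the following sketch implements the componentwise soft-thresholding function and a generic preconditioned thresholded iteration with unit relaxation parameter. The update rule written in the docstring is our simplified assumption of how such a scheme can be organized, not necessarily the exact iteration of [13,14]; the operators `A`, `P`, `W`, and `Wt` are passed as callables, and all names are hypothetical.

```python
import numpy as np

def soft_threshold(z, mu):
    """Componentwise soft-thresholding: shrink every entry toward zero by mu."""
    return np.sign(z) * np.maximum(np.abs(z) - mu, 0.0)

def thresholded_iteration(A, P, W, Wt, b, mu, n_iter=50):
    """Assumed update: x <- W^T S_mu( W (x + P (b - A x)) ), relaxation = 1.

    A  : system operator, P : preconditioner applied to the residual,
    W  : analysis operator, Wt: synthesis operator (adjoint of W).
    Assumes a square system, so that x and b have the same shape.
    """
    x = np.zeros_like(b, dtype=float)
    for _ in range(n_iter):
        residual = b - A(x)
        x = Wt(soft_threshold(W(x + P(residual)), mu))
    return x
```

With `P` equal to the adjoint of `A` this reduces to a frame-based ISTA-type step, while a regularizing preconditioner `P` is what distinguishes the preconditioned schemes discussed in this paper.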

The paper is organized as follows: in

Section 2 we propose a generalization of an approximated iterative Tikhonov scheme that was first introduced in [

15] and then developed and adapted into different settings in [

16,

17]. Here the preconditioner

P takes the form

where

B is an approximation of

A, in the sense that

with

C the discretization of the same problem (

1) as the original blurring matrix

K but imposing Periodic BCs. The operator

can be a function of

or the discretization of a differential operator. The method is nonstationary and the parameter

is computed by solving a nonlinear problem with a computational cost of

. Related work on this kind of preconditioner can be found in [

18,

19,

20]. In

Section 3 we define a class of preconditioners

P endowed with the same structure as the system matrix

A, as initially proposed in [

21] and then further developed in [

22]. It is called the structure-preserving reblurring preconditioning strategy, and we combine it with the generalized regularization filtering approach of the preceding

Section 2. The idea is to preserve both the information carried by the spectrum of the operator

A and the structure itself of the operator induced by the best fitting BCs.

Section 4 contains a selection of significant numerical examples which confirm the robustness and quality of the proposed regularization schemes.

Section 5 provides a summary of the techniques presented in this work and draws some conclusions. Finally, in

Appendix A we provide proofs of convergence and regularization properties of the proposed algorithms.

3. Structured PISTA with General Regularizing Operator

The structured case is a generalization of what was developed in [

21,

22], merging these ideas with the general approach described in

Section 2. We skip some details since they can be easily recovered from the aforementioned papers.

The blurring matrix K is determined by two ingredients: the PSF and the BCs imposed in the discretization. The latter choice gives rise to different types of structured matrices. Without loss of generality and for the sake of simplicity, we consider a square PSF and we suppose that the center of the PSF is known.

Considering the pixels

of the PSF, we can define the following generating function

where

and we assumed that

if the entry

does not belong to

[

5]. Observe that

can be seen as the Fourier coefficients of

, so that the same information is contained in the generating function

and in

.

Summarizing the notation that we set in the Introduction about the BCs, we have

We notice that, since the continuous operator is shift-invariant, in all these four cases K has a Toeplitz structure which depends on plus a correction term which depends on the chosen BCs.

In conclusion, we employ the unified notation , where can be any of the classes of matrices just introduced (i.e., , , , ). With this notation we wish to highlight the two crucial elements that determine K: the blurring phenomenon associated with the PSF described by and the chosen BCs represented by .

Given the generating function

(

13) associated to the PSF

, let us compute the eigenvalues

of the corresponding BCCB matrix

by means of the 2D-FFT, where

. Fix a regularizing (differential) operator

as in

Section 2, and suppose that Assumptions 1 and 2 hold. The differential operator can also be of the form

, as in Algorithm 1. Let now

be the new eigenvalues after the application of the Tikhonov filter to

, where

are the eigenvalues (singular values) of

and

is computed as in Algorithms 1 and 2. Let us compute now the coefficients

of

by means of the 2D-iFFT and, finally, let us define

where

corresponds to the most fitting BCs for the model problem (

1).
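The construction just described (FFT of the PSF, Tikhonov filtering of the eigenvalues, inverse FFT to recover a coefficient mask) can be sketched as follows. We assume a filter of the form conj(λ)/(|λ|² + α|ℓ|²), where ℓ denotes the eigenvalues of the regularizing operator, and we take α as given, whereas in the paper it is computed as in Algorithms 1 and 2. The function names are hypothetical, and the final step of building the structured matrix from the mask and the BCs is not shown.

```python
import numpy as np

def bccb_eigenvalues(psf, shape):
    """Eigenvalues of the BCCB matrix generated by `psf` (center = middle pixel)."""
    kernel = np.zeros(shape)
    kernel[:psf.shape[0], :psf.shape[1]] = psf
    shift = (-(psf.shape[0] // 2), -(psf.shape[1] // 2))
    return np.fft.fft2(np.roll(kernel, shift, axis=(0, 1)))

def reblurring_mask(psf, shape, alpha, reg_eigs=None):
    """Coefficient mask of the filtered symbol, centered like a PSF.

    reg_eigs: eigenvalues of the regularizing operator (assumed to be the 2D FFT
    of a real stencil); None means the identity operator.
    """
    lam = bccb_eigenvalues(psf, shape)
    ell2 = 1.0 if reg_eigs is None else np.abs(reg_eigs) ** 2
    filtered = np.conj(lam) / (np.abs(lam) ** 2 + alpha * ell2)
    mask = np.real(np.fft.ifft2(filtered))  # real because the symbol is Hermitian
    return np.fft.fftshift(mask)            # move the central coefficient to the middle
```

The returned mask plays the role of a new PSF: combining it with the BCs that best fit the problem yields a preconditioner with the same structure as the blurring matrix.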

We are now ready to formulate the last method, whose computations are reported in Algorithm 3.

| Algorithm 3 |

Fix , BCs, . Set . Get by computing an FFT of . Fix , and set , . Set and . Compute and . while

do Compute . Compute . Compute . Get the mask of the coefficients of of ( 14) by computing an IFFT of . Generate the matrix from the coefficient mask and BCs.

|

In the case that

, then the algorithm is modified in the following way:

where

,

. We will denote this version by

. We will not provide a direct proof of convergence for this last algorithm. Let us just observe that the difference between (

15) and (

12)–(

8) is just a correction of small rank and small norm.

4. Numerical Experiments

We now compare the proposed algorithms with some methods from the literature. In particular, we consider the AIT-GP algorithm described in [

16] and the ISTA algorithm described in [

8]. The AIT-GP method can be seen as Algorithm 2 with

, while the ISTA algorithm is equivalent to iterations of Algorithm 2 without the preconditioner. These comparisons allow us to show how the quality of the reconstructed solution is improved by the presence of both the soft-thresholding and the preconditioner.

The ISTA method and our proposals require the selection of a regularization parameter. For all these methods we select the parameter that minimizes the relative restoration error (RRE) defined by

For the comparison of the algorithms we consider the Peak Signal to Noise Ratio (PSNR) defined by

where

is the number of elements of

and

M denotes the maximum value of

. Moreover, we consider the Structural Similarity index (SSIM). Since the definition of the SSIM is involved, here we only recall that this index measures how accurately the computed approximation reconstructs the overall structure of the image. The higher the value of the SSIM, the better the reconstruction, and the maximum achievable value is 1; see [

27] for a precise definition of the SSIM.
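For reference, the two error measures can be computed as in the following sketch. We assume the usual definitions, namely RRE = ‖x − x_true‖₂/‖x_true‖₂ and PSNR = 20 log₁₀(√N · M/‖x − x_true‖₂), with N the number of pixels and M the maximum value of the true image, which agree with the quantities named in the text; the function names are ours.

```python
import numpy as np

def rre(x, x_true):
    """Relative restoration error in the 2-norm (Frobenius norm for 2D arrays)."""
    return np.linalg.norm(x - x_true) / np.linalg.norm(x_true)

def psnr(x, x_true):
    """Peak signal-to-noise ratio in decibels, with the peak taken from x_true."""
    n = x_true.size
    peak = x_true.max()
    return 20.0 * np.log10(np.sqrt(n) * peak / np.linalg.norm(x - x_true))
```

Lower RRE and higher PSNR indicate a better reconstruction; both depend only on the pixelwise error, which is why the SSIM is reported alongside them.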

We now describe how we construct the operator

W. We use the tight frames determined by linear B-splines; see, e.g., [

28]. For one-dimensional problems they are composed of a low-pass filter

and two high-pass filters

and

. These filters are determined by the masks

Imposing reflexive boundary conditions we determine the analysis operator

W so that

. Define the matrices

and

Then the operator

W is defined by

To construct the two-dimensional framelet analysis operator we use the tensor products

The matrix

is a low-pass filter; all the other matrices

contain at least one high-pass filter. The analysis operator is given by

In

, following [

26], we set

All the computations were performed on MATLAB R2018b running on a laptop with an Intel i7-8750H @ 2.20 GHz CPU and 16 GB of RAM.
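As an illustration of the framelet construction described above, the following sketch builds the one-dimensional filter matrices and the two-dimensional analysis operator by tensor products. We assume the standard linear B-spline masks (1, 2, 1)/4, √2(−1, 0, 1)/4, and (−1, 2, −1)/4 with reflexive boundary corrections; these explicit values and the function names are our assumptions, and dense matrices are used only for readability on small sizes.

```python
import numpy as np

def framelet_1d(n):
    """Low-pass W0 and high-pass W1, W2 matrices (n x n) with reflexive BCs."""
    s = np.sqrt(2.0) / 4.0
    up, low, eye = np.eye(n, k=1), np.eye(n, k=-1), np.eye(n)
    W0 = (low + 2.0 * eye + up) / 4.0
    W0[0, 0] = W0[-1, -1] = 0.75           # boundary correction of the low-pass filter
    W1 = s * (up - low)
    W1[0, 0], W1[-1, -1] = -s, s           # boundary correction of the first high-pass
    W2 = (-low + 2.0 * eye - up) / 4.0
    W2[0, 0] = W2[-1, -1] = 0.25           # boundary correction of the second high-pass
    return W0, W1, W2

def framelet_2d(n):
    """Stack the nine tensor products W_i (x) W_j into the 2D analysis operator."""
    blocks = framelet_1d(n)
    return np.vstack([np.kron(Wi, Wj) for Wi in blocks for Wj in blocks])
```

Under these assumptions the stacked operator satisfies the tight-frame identity, i.e., its transpose times itself is the identity, which can be checked numerically for small `n`.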

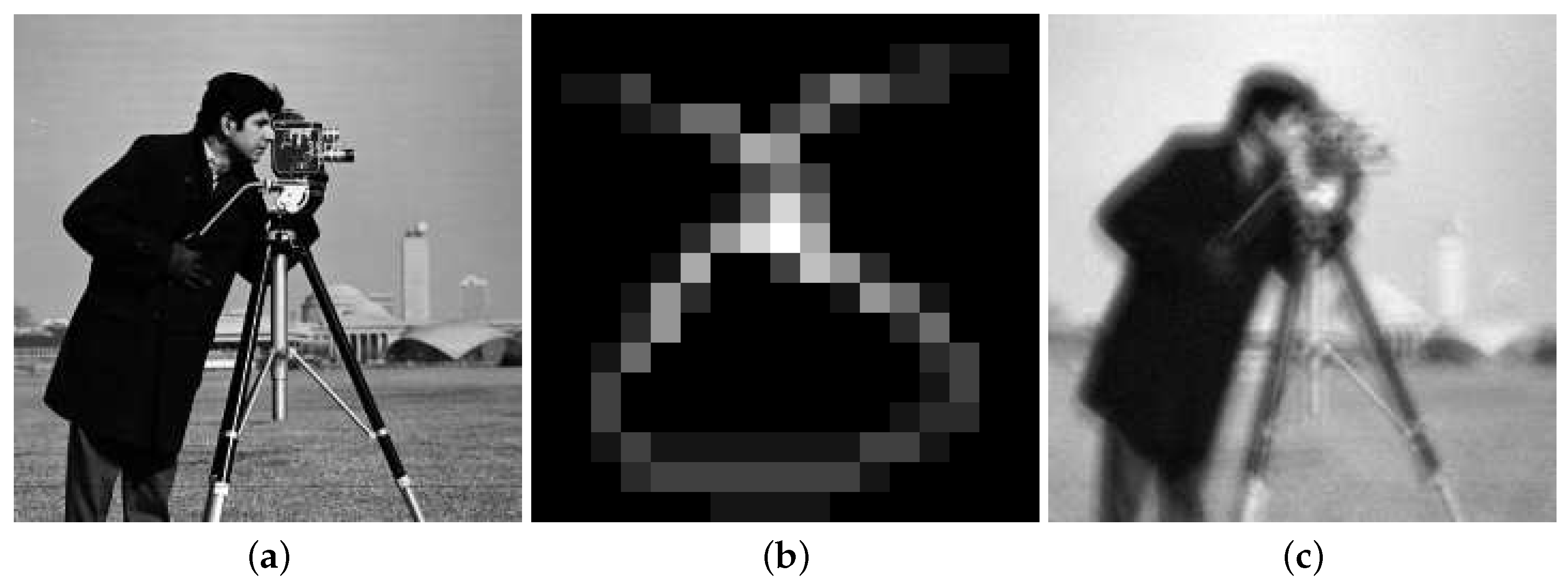

4.1. Cameraman

We first considered the cameraman image in

Figure 3a and we blurred it with the non-symmetric PSF in

Figure 3b. We then added

white Gaussian noise obtaining the blurred and noisy image in

Figure 3c. Note that we cropped the boundaries of the image to simulate real data; see [

1] for more details. Since the image was generic we imposed reflexive BCs.
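A minimal sketch of how white Gaussian noise at a prescribed level can be added to a blurred image is given below. We assume that the noise level is defined as the ratio ‖e‖₂/‖b‖₂ between the norm of the noise and the norm of the noise-free blurred image; this convention, the function name, and the `level` parameter are our assumptions.

```python
import numpy as np

def add_white_noise(b, level, rng=None):
    """Return b + e, with e white Gaussian noise scaled so that ||e|| = level * ||b||."""
    rng = np.random.default_rng() if rng is None else rng
    e = rng.standard_normal(b.shape)
    e *= level * np.linalg.norm(b) / np.linalg.norm(e)
    return b + e
```

Passing a seeded generator as `rng` makes the experiment reproducible.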

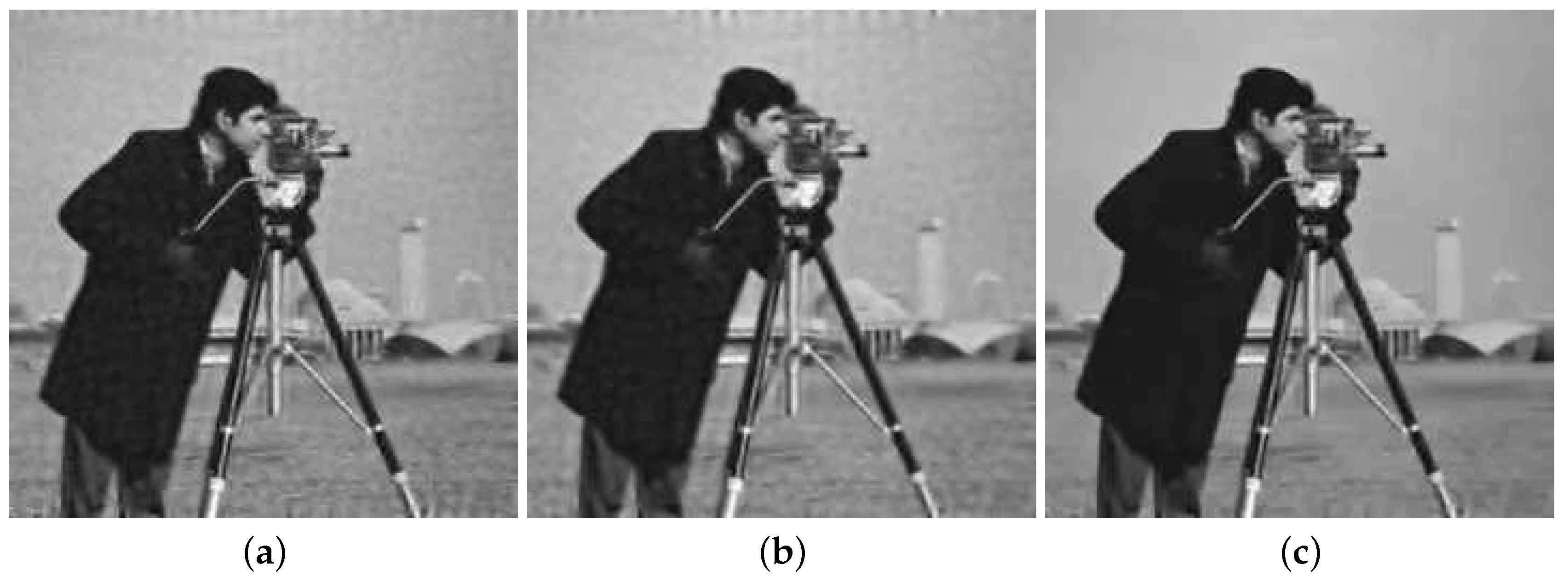

In

Table 1 we report the results obtained with the different methods. We can observe that

provided the best reconstruction of all considered algorithms. Moreover, we can observe that, in general, the introduction of the structured preconditioner improved the quality of the reconstructed solutions, especially in terms of SSIM. From the visual inspection of the reconstructions in

Figure 4 we can observe that the introduction of the structured preconditioner clearly reduced the boundary artifacts and avoided the amplification of the noise.

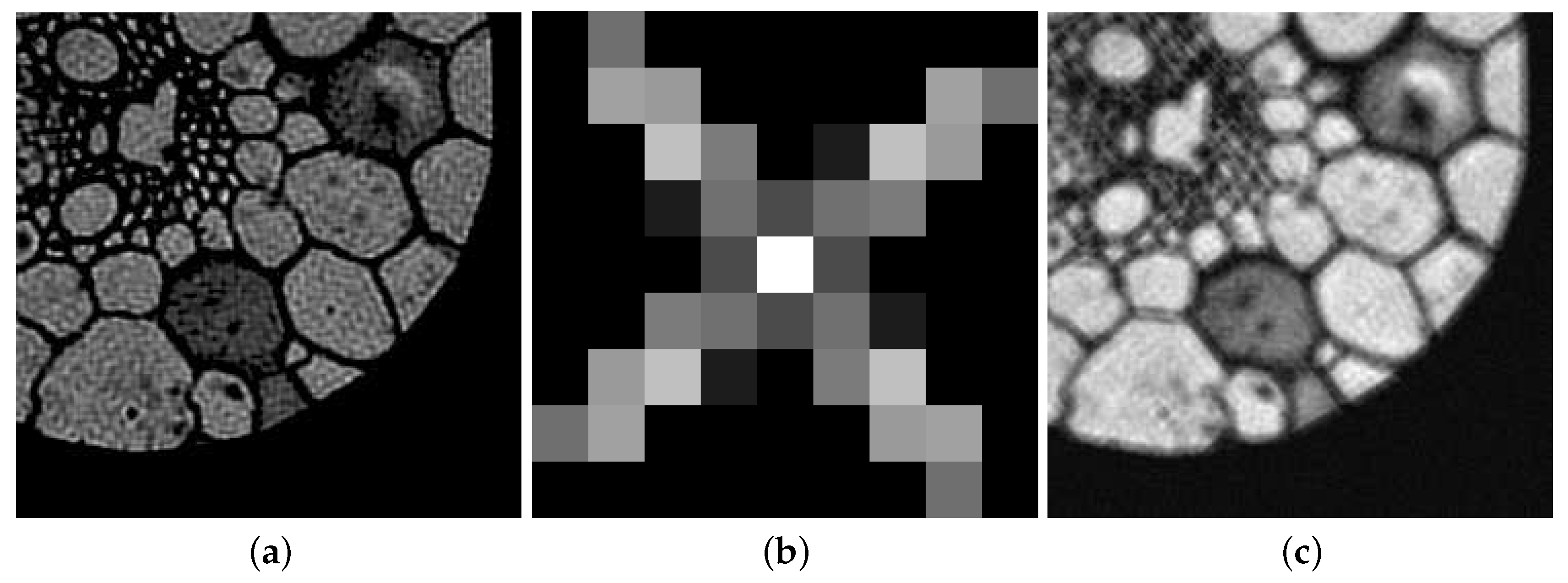

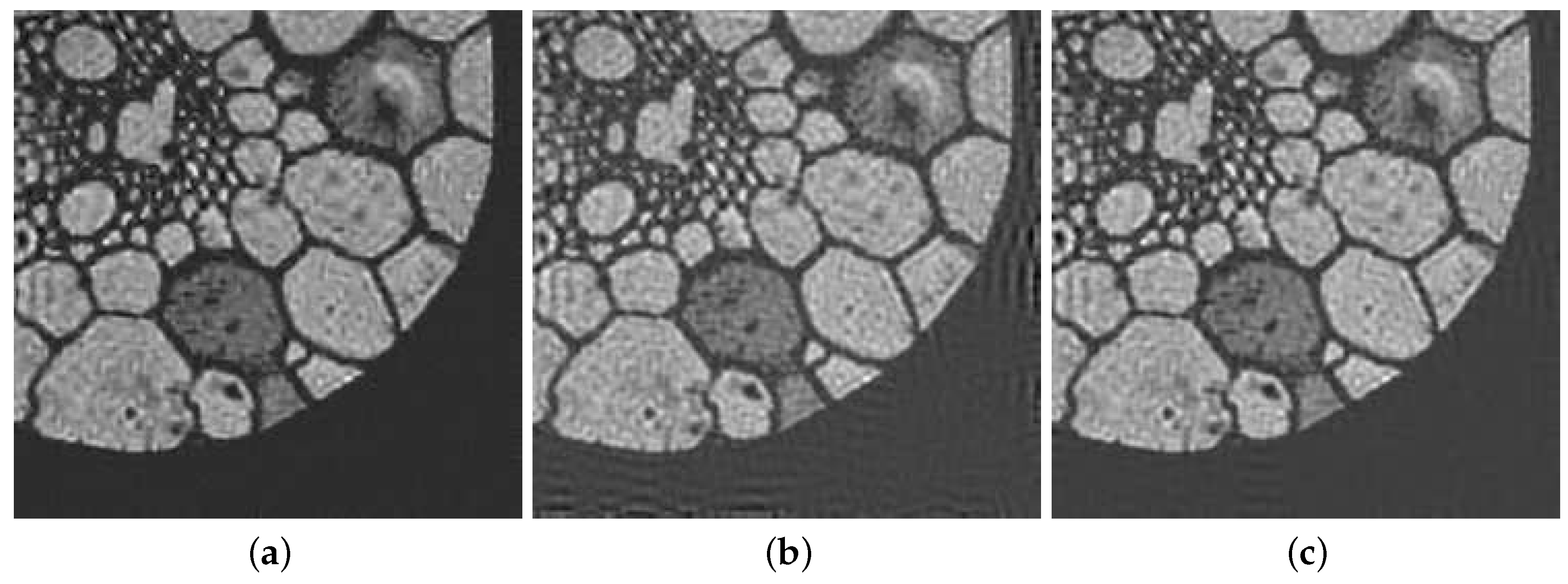

4.2. Grain

We next considered the grain image in

Figure 5a and blurred it with the PSF, obtained by superposing two motion PSFs, in

Figure 5b. After adding

of white Gaussian noise and cropping the boundaries we obtained the blurred and noisy image in

Figure 5c. According to the nature of the image we used reflexive BCs.

Again in

Table 1 we report all the results obtained with the considered methods. In this case ISTA provided the best reconstruction in terms of RRE and PSNR. However,

provided the best reconstruction in terms of SSIM and very similar results in terms of PSNR and RRE. In

Figure 6 we report some of the reconstructed solutions. From the visual inspection of these reconstructions we can see that the introduction of the structured preconditioner reduced the ringing and boundary effects in the computed solutions.

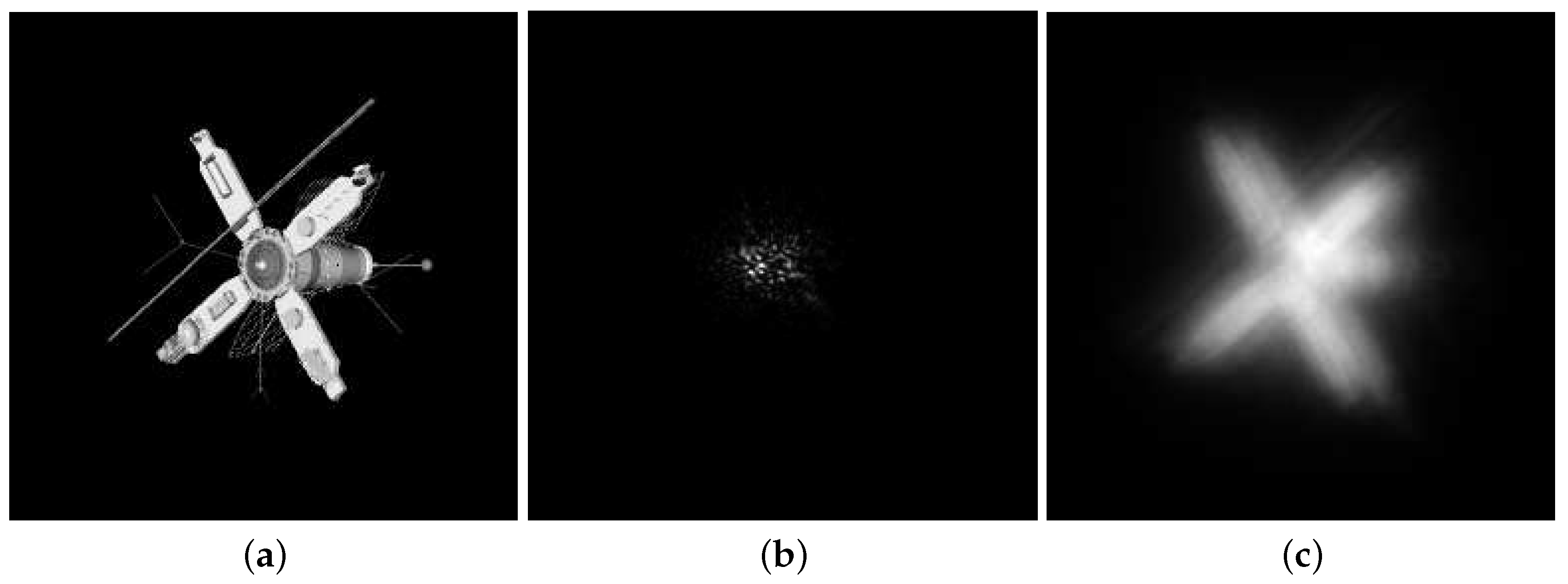

4.3. Satellite

Our final example is the

atmosphericBlur30 from the MATLAB toolbox RestoreTools [

2]. The true image, PSF, and blurred and noisy image are reported in

Figure 7a–c, respectively. Since we knew the true image we could estimate the noise level in the image, which was approximately

. Since this was an astronomical image we imposed zero BCs.

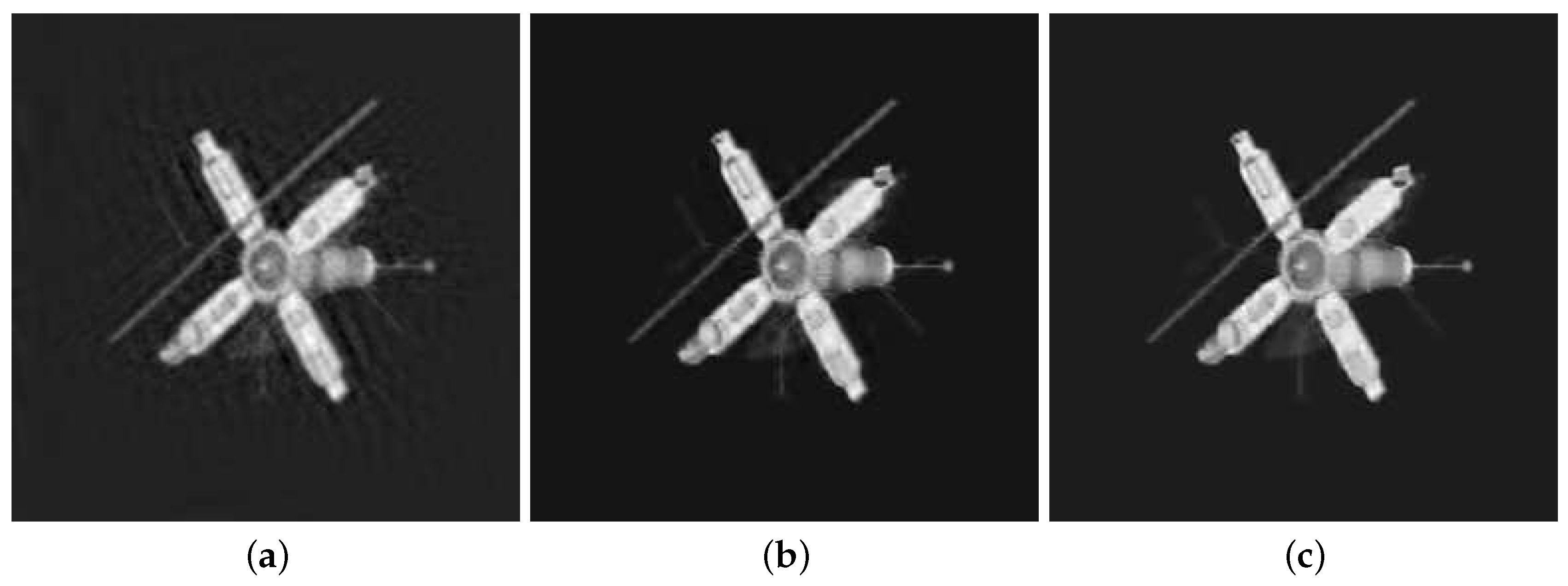

From the comparison of the computed results in

Table 1 we can see that the

method provided the best reconstruction among all considered methods. We can observe that, in this particular example, ISTA provided a very low quality reconstruction both in terms of RRE and SSIM. We report in

Figure 8 some reconstructions. From the visual inspection of the computed solutions we can observe that both the approximations obtained with

and

did not present heavy ringing effects, while the reconstruction obtained by AIT-GP presented very heavy ringing around the “arms” of the satellite. This allowed us to show the benefits of introducing the soft-thresholding into the AIT-GP method.