Abstract

We are interested in fast and stable iterative regularization methods for image deblurring problems with space-invariant blur. The associated coefficient matrix has a Block Toeplitz with Toeplitz Blocks (BTTB)-like structure plus a small-rank correction depending on the boundary conditions imposed on the imaging model. In the literature, several strategies have been proposed in the attempt to define proper preconditioners for iterative regularization methods that involve such linear systems. Usually, the preconditioner is chosen to be a Block Circulant with Circulant Blocks (BCCB) matrix, because it can efficiently exploit the Fast Fourier Transform (FFT) for any computation, including the (pseudo-)inversion. Nevertheless, for ill-conditioned problems, it is well known that BCCB preconditioners cannot provide a strong clustering of the eigenvalues. Moreover, in order to get an effective preconditioner, it is crucial to preserve the structure of the coefficient matrix. On the other hand, thresholding iterative methods have recently been applied successfully to image deblurring problems, exploiting the sparsity of the image in a proper wavelet domain. Motivated by the results of recent papers, the main novelty of this work is combining nonstationary structure-preserving preconditioners with general regularizing operators which hold in their kernel the key features of the true solution that we wish to preserve. Several numerical experiments show the performance of our methods in terms of the quality of the restorations.

1. Introduction

In image deblurring we are concerned with reconstructing an approximation of an image from blurred and noisy measurements. This process can be modeled by an integral equation of the form

where is the original image and is the observed image, which is obtained from the combination of a (Hilbert-Schmidt) integral operator, represented by , and the addition of some (unavoidable) noise coming from, e.g., perturbations on the observed data, measurement errors, and approximation errors. By assuming the kernel to be compactly supported and considering the ideal case Equation (1) becomes

where K is a compact linear operator. In this context, is generally called the point spread function (PSF).

Considering a uniform grid, images are represented by their color intensities measured on the grid (pixels). In this paper, for the sake of simplicity, we deal only with square gray-scale images, although all the techniques presented here carry over to images of different sizes and colors as well.

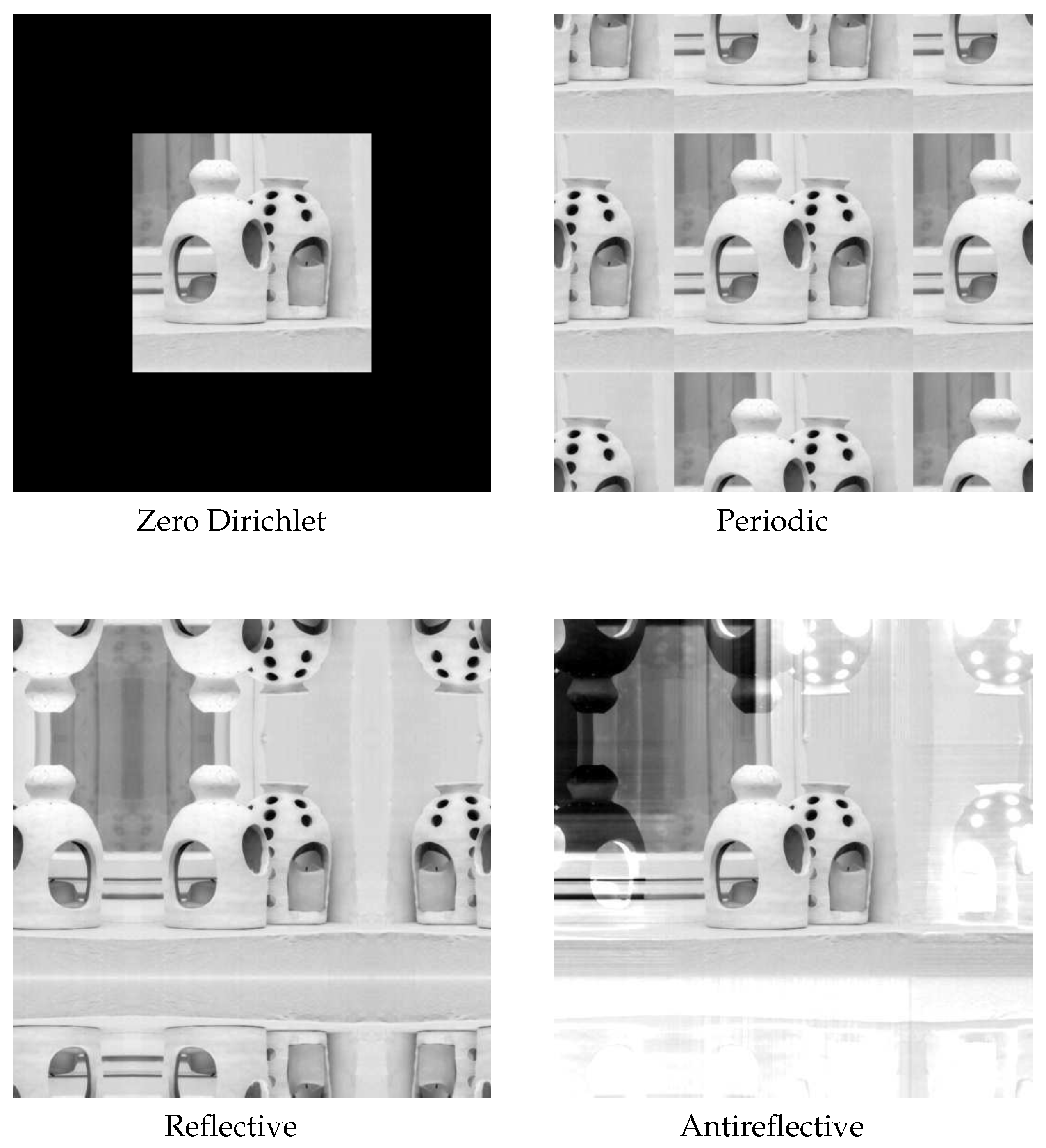

Collected images are available only in a finite region, the field of view (FOV), and the measured intensities near the boundary are affected by data which lie outside the FOV; see Figure 1 for an illustration.

Figure 1.

Field of view. We see what is inside the square box.

Denoting by and the stack ordered vectors corresponding to the observed image and the true image, respectively, the discretization of (1) becomes

where the matrix K is of size , m and k being the dimensions (in pixels) of the original picture. The matrix K is often called the blurring matrix. When proper Boundary Conditions (BCs) are imposed, the matrix K becomes square and in some cases, depending on the BCs and the symmetry of the PSF, it can be diagonalized by discrete trigonometric transforms. Indeed, specific BCs induce specific matrix structures that can be exploited to lessen the computational cost using fast algorithms. Of course, since BCs are artificially introduced, their advantages may come with drawbacks in terms of reconstruction accuracy, depending on the type of problem. The BC approach forces a functional dependency between the elements of outside the FOV and those inside this area. If the BC model is not a good approximation of the real world outside the FOV, the reconstructed image can be severely affected by unwanted artifacts near the boundary, called ringing effects; see, e.g., [1].

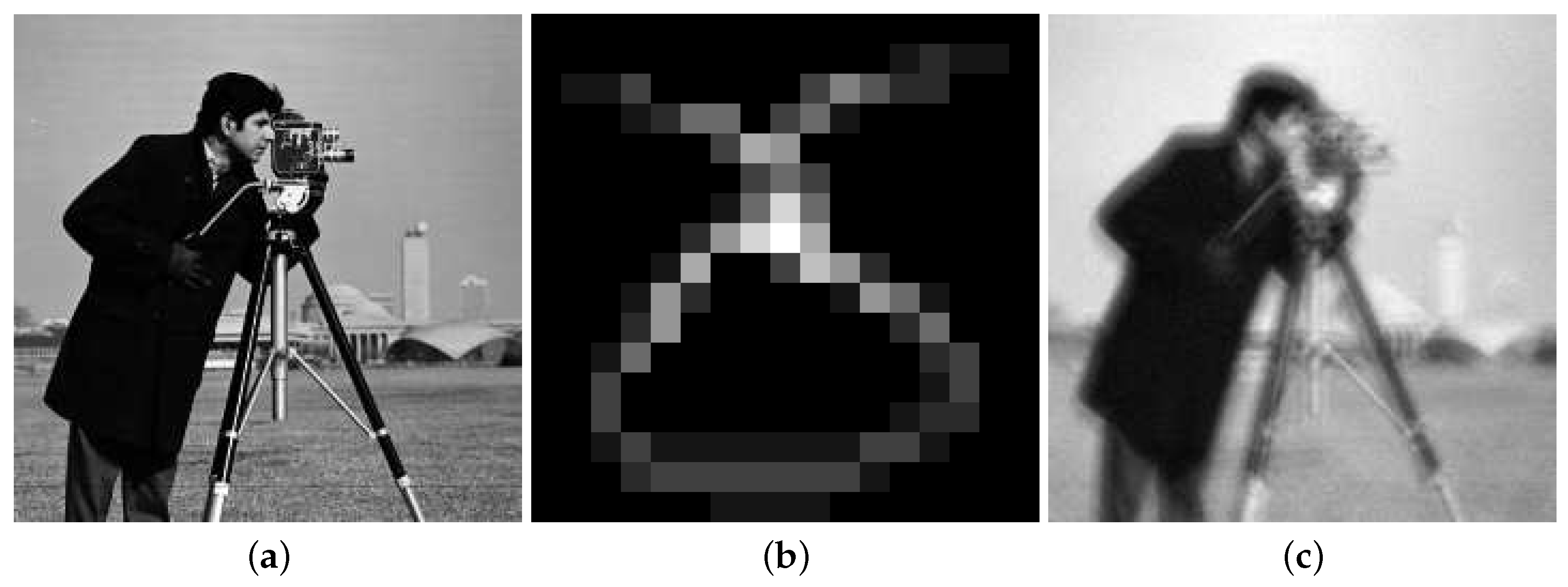

The choice of the BCs can be driven by some additional knowledge on the true image and/or by the availability of fast transforms to diagonalize the matrix K within arithmetic operations. Indeed, the matrix-vector product can always be computed by the 2D FFT, after a proper padding of the image to convolve (see, e.g., [2]), while the availability of fast transforms to diagonalize the matrix K depends on the BCs. Among the BCs present in the literature, we consider Zero (Dirichlet), Periodic, Reflective, and Anti-Reflective, but our approach can be extended to other BCs, e.g., synthetic BCs [3] or high-order BCs [4,5]. See Figure 2 for an illustration of the described BCs.
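To make the role of the BCs concrete, the following is a minimal NumPy sketch of the matrix-vector product with K: the image is padded outside the FOV according to the chosen BCs and then convolved with the PSF by the 2D FFT. The helper name `blur_with_bc` and the mapping of each BC onto an `np.pad` mode are our own illustrative choices; we assume a square image and a square PSF of odd size whose center is its central pixel.

```python
import numpy as np

# Illustrative mapping from each BC to the np.pad mode that extends the image
# outside the field of view (FOV) in the corresponding way.
PAD_MODES = {
    "zero": {"mode": "constant"},          # Dirichlet: zeros outside the FOV
    "periodic": {"mode": "wrap"},          # the image repeats itself
    "reflective": {"mode": "symmetric"},   # mirror image, edge pixel repeated
    "antireflective": {"mode": "reflect", "reflect_type": "odd"},  # 2*f(edge) - f(mirror)
}


def blur_with_bc(x, psf, bc="reflective"):
    """Blur the square image x with a square, odd-sized, centred PSF under the given BC.

    The image is padded by m = psf.shape[0] // 2 pixels, convolved with the PSF
    by the 2D FFT, and cropped back to the FOV.
    """
    m = psf.shape[0] // 2
    xp = np.pad(x, m, **PAD_MODES[bc])
    # Embed the PSF in an array of the padded size and move its centre to (0, 0).
    kernel = np.zeros(xp.shape)
    kernel[: psf.shape[0], : psf.shape[1]] = psf
    kernel = np.roll(kernel, (-m, -m), axis=(0, 1))
    y = np.real(np.fft.ifft2(np.fft.fft2(kernel) * np.fft.fft2(xp)))
    # The circular wrap-around only affects the padding band, so the crop is exact.
    return y[m : m + x.shape[0], m : m + x.shape[1]]
```

With `bc="periodic"` the result coincides with the BCCB product computed entirely in the Fourier domain; the other choices change the output only in a band of width m along the boundary, which is where ringing originates when the BC model is inaccurate.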

Figure 2.

Examples of boundary conditions.

On the other hand, since Equation (2) results from the discretization of a compact operator, K is severely ill-conditioned and may be singular. Such linear systems are commonly referred to as linear discrete ill-posed problems; see, e.g., [6] for a discussion. Therefore, a good approximation of cannot be obtained from the algebraic solution (e.g., the least-squares solution) of (2), and regularization methods are required. The basic idea of regularization is to replace the original ill-conditioned problem with a nearby well-conditioned problem whose solution approximates the true solution. One of the most popular regularization techniques is Tikhonov regularization, which amounts to solving

where denotes the vector 2-norm and is a regularization parameter to be chosen. The first term in (3) is usually referred to as the fidelity term and the second as the regularization term. This translates into solving a linear problem, for which many efficient methods have been developed, both for computing its solution and for estimating the regularization parameter ; see [6]. Unfortunately, this approach comes with a drawback: the edges of restored images are usually over-smoothed. Therefore, nonlinear strategies such as total variation (TV) [7] and thresholding iterative methods [8,9] have been employed to overcome this unpleasant property. That said, many nonlinear regularization methods typically have an inner step that applies a least-squares regularization, and therefore can benefit from strategies previously developed for such simpler models.
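For illustration, when Periodic BCs are imposed K is BCCB and is diagonalized by the 2D FFT, so the normal equations of (3) decouple frequency by frequency: each Fourier coefficient of the Tikhonov solution equals conj(λ)ĝ/(|λ|² + α), where λ are the eigenvalues of K, ĝ is the 2D FFT of the data, and α denotes the regularization parameter. The sketch below assumes Periodic BCs and a centered, odd-sized PSF; the function names are hypothetical.

```python
import numpy as np


def bccb_eigenvalues(psf, n):
    """Eigenvalues of the n^2-by-n^2 BCCB matrix generated by a centred, odd-sized PSF."""
    m = psf.shape[0] // 2
    big = np.zeros((n, n))
    big[: psf.shape[0], : psf.shape[1]] = psf
    # Move the PSF centre to index (0, 0) before taking the 2D FFT.
    return np.fft.fft2(np.roll(big, (-m, -m), axis=(0, 1)))


def tikhonov_periodic(g, psf, alpha):
    """Minimizer of ||K x - g||^2 + alpha ||x||^2 when K is BCCB (Periodic BCs)."""
    lam = bccb_eigenvalues(psf, g.shape[0])
    # (K^* K + alpha I) x = K^* g becomes a pointwise division in the Fourier domain.
    x_hat = np.conj(lam) * np.fft.fft2(g) / (np.abs(lam) ** 2 + alpha)
    return np.real(np.fft.ifft2(x_hat))
```

The cost is a few 2D FFTs, i.e. O(n² log n) for an n × n image; with other BCs the same formula is no longer exact, which is one motivation for using such a BCCB solve only as a preconditioner.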

In the present paper, both the regularization strategies that we propose share two common ingredients: wavelet decomposition and -norm minimization on the regularization term. This is motivated by the fact that the wavelet coefficients (under some basis) of most real images are usually very sparse. In particular, here we consider the tight frame systems used in [10,11,12], but the results can be easily extended to any framelet/wavelet system. Let be a wavelet or tight-frame synthesis operator (); the wavelet or tight-frame coefficients of the original image are such that

Within this frame set, the model Equation (2) translates into

Recently, in [13], a new technique was proposed which directly applies a single preconditioning operator P to Equation (4). The new preconditioned system becomes

Combining this approach with a soft-thresholding technique such as the modified linearized Bregman splitting algorithm [14], in order to mimic the -norm minimization, leads to the following preconditioned iterative scheme

where is a positive relaxation parameter and is the soft-thresholding function defined in (6). Hereafter we set : this is justified by applying an implicit rescaling of the preconditioned system matrix .
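As an illustration only, the sketch below assumes that the preconditioned scheme has the linearized-Bregman form z ← z + P(g − A u), u ← τ S_μ(z), started from z = u = 0, with an optional discrepancy-principle stop ‖g − A u‖ ≤ ρδ. This form, the names, and the dense-matrix setting are our assumptions; `A` and `P` stand in for the operators of the paper, and in the check below P = Aᵀ(AAᵀ + αI)⁻¹ is a Tikhonov-type preconditioner built from A itself, a simplification of the choice discussed in Section 2.

```python
import numpy as np


def soft_threshold(x, mu):
    """Componentwise soft-thresholding S_mu(x) = sign(x) * max(|x| - mu, 0)."""
    return np.sign(x) * np.maximum(np.abs(x) - mu, 0.0)


def preconditioned_thresholding(A, P, g, mu, tau=1.0, maxit=500, delta=None, rho=1.01):
    """Assumed scheme: z <- z + P (g - A u),  u <- tau * S_mu(z).

    If a noise level delta is given, stop by the discrepancy principle
    ||g - A u|| <= rho * delta.
    """
    u = np.zeros(A.shape[1])
    z = np.zeros(A.shape[1])
    for _ in range(maxit):
        r = g - A @ u
        if delta is not None and np.linalg.norm(r) <= rho * delta:
            break
        z = z + P @ r                      # preconditioned residual step
        u = tau * soft_threshold(z, mu)    # thresholding promotes sparse coefficients
    return u
```

With mu = 0 the thresholding is the identity and the loop reduces to a stationary iterated Tikhonov method, whose error is multiplied at each step by α(AᵀA + αI)⁻¹; this gives an easy sanity check of the implementation.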

The paper is organized as follows: in Section 2 we propose a generalization of an approximated iterative Tikhonov scheme that was first introduced in [15] and then developed and adapted to different settings in [16,17]. Here the preconditioner P takes the form

where B is an approximation of A, in the sense that with C the discretization of the same problem (1) as the original blurring matrix K but imposing Periodic BCs. The operator can be a function of or the discretization of a differential operator. The method is nonstationary and the parameter is computed by solving a nonlinear problem with a computational cost of . Related work on this kind of preconditioner can be found in [18,19,20]. In Section 3 we define a class of preconditioners P endowed with the same structure as the system matrix A, as initially proposed in [21] and further developed in [22]. This is called the structure-preserving reblurring preconditioning strategy, and we combine it with the generalized regularization filtering approach of Section 2. The idea is to preserve both the information carried by the spectrum of the operator A and the structure of the operator induced by the best-fitting BCs. Section 4 contains a selection of significant numerical examples which confirm the robustness and quality of the proposed regularization schemes. Section 5 summarizes the techniques presented in this work and draws some conclusions. Finally, Appendix A provides proofs of the convergence and regularization properties of the proposed algorithms.

2. Preconditioned Iterated Soft-Thresholding Tikhonov with General Regularizing Operator

2.1. Preliminary Definitions

Before proceeding further, let us introduce here some definitions and notations that will be used in the forthcoming sections. We consider

to be the discretization of a compact linear operator

where the Euclidean 2-norm is induced by the standard Euclidean inner product

Hereafter, we will specify the vector space where the inner product acts only when it is necessary for disambiguation. The analysis in the next sections will generally be performed on perturbed data , namely

with , and where is a noise vector such that , is called the noise level.
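In simulations, perturbed data with a prescribed noise level are commonly generated by rescaling a white Gaussian vector so that its norm is a given fraction ν of the norm of the exact data. A minimal sketch, where the name `add_white_noise` and the relative scaling by ν are our own illustrative choices:

```python
import numpy as np


def add_white_noise(g, nu, rng):
    """Return (g_delta, delta) with g_delta = g + eta and ||eta|| = delta = nu * ||g||."""
    e = rng.standard_normal(g.shape)                  # white Gaussian direction
    eta = nu * np.linalg.norm(g) * e / np.linalg.norm(e)
    return g + eta, np.linalg.norm(eta)
```

Returning δ together with the data is convenient because the discrepancy-principle stopping rule of the algorithms requires the noise level.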

Let

be the discretization of a compact linear operator that approximates K, in a sense that will be specified later. Let

be such that

where indicates the adjoint operator of W, i.e., for each pair . We define

Let us introduce the following matrix norm. Given a generic linear operator

where is the sup norm, let us define the matrix norm as

Finally, let and let be such that

with the soft-thresholding function
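The soft-thresholding function acts componentwise; in its standard form S_μ(x) = sign(x) max(|x| − μ, 0), which we assume matches the definition above, it is the minimizer of ½(t − x)² + μ|t| for each entry, i.e. the proximal map of μ‖·‖₁. A one-line NumPy version:

```python
import numpy as np


def soft_threshold(x, mu):
    """Componentwise soft-thresholding S_mu(x) = sign(x) * max(|x| - mu, 0).

    Entries with |x| <= mu are set to zero; the others are shrunk towards zero by mu.
    """
    return np.sign(x) * np.maximum(np.abs(x) - mu, 0.0)
```

Setting small coefficients exactly to zero is what makes the iteration promote sparsity of the framelet coefficients.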

2.2. General Regularization Operator as

Let be a continuous function such that

where are positive constants, and define . By the continuity of h and by well-known facts from functional analysis [23] we can write as the operator defined by

where is the spectral decomposition of a (generic) self-adjoint operator and is the singular value expansion of C. We summarize the computations in Algorithm 1.
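When C is BCCB, as happens with Periodic BCs, the spectral calculus above becomes explicit: CC* = F* diag(|λ|²) F, with F the 2D DFT and λ the eigenvalues of C, hence h(CC*) = F* diag(h(|λ|²)) F. The sketch below applies h(CC*) to an image; `h` is any vectorized Python function and the helper names are hypothetical.

```python
import numpy as np


def bccb_eigenvalues(psf, n):
    """Eigenvalues of the BCCB matrix generated by a centred, odd-sized PSF."""
    m = psf.shape[0] // 2
    big = np.zeros((n, n))
    big[: psf.shape[0], : psf.shape[1]] = psf
    return np.fft.fft2(np.roll(big, (-m, -m), axis=(0, 1)))


def apply_h_of_CCt(x, psf, h):
    """Compute h(C C^*) x for the BCCB matrix C generated by psf."""
    lam = bccb_eigenvalues(psf, x.shape[0])
    # C C^* = F^* diag(|lam|^2) F, so h acts entrywise on |lam|^2.
    return np.real(np.fft.ifft2(h(np.abs(lam) ** 2) * np.fft.fft2(x)))
```

For instance, `h = lambda t: 1.0 / (t + alpha)` applies (CC* + αI)⁻¹, the kernel of the Tikhonov-type preconditioner, at the cost of two FFTs.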

Algorithm 1.

A rigorous and fully detailed analysis of the preceding algorithm is carried out in Appendix A. In order to prove all the desired properties we need a couple of assumptions on the operators K, C, and on the parameter , which we present below.

Assumption 1.

Let us observe that Equation (9a) translates into

Let us shed some light on the preceding conditions. Assumption (9a), or equivalently (10), is a strong assumption. It may be hard to satisfy for every specific problem, as it implies

or equivalently

that is, K and C are spectrally equivalent. Nevertheless, in image deblurring the boundary conditions have a very local effect, i.e., the approximation error can be decomposed as

where E is a matrix of small norm (the zero matrix if the PSF is compactly supported), and R is a matrix of small rank compared to the dimension of the problem. This suggests that Assumption (9a) needs to be satisfied only in a relatively small subspace, presumably of measure zero. In particular, only for every , with and N fixed, such that Proposition A1 in Appendix A holds. All the numerical experiments are consistent with this observation, but for a deeper understanding and a full treatment of this aspect we refer the reader to ([15], Section 4).
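The decomposition above can be checked numerically in one dimension. In this sketch (our own illustration, with a hypothetical helper name), K uses Reflective BCs and C uses Periodic BCs for the same compactly supported PSF of half-width m; the two matrices differ only in their first and last m rows, so E = 0 and R = K − C has rank at most 2m, whatever the size n.

```python
import numpy as np


def blur_matrix_1d(psf, n, mode):
    """Dense n-by-n matrix of 1D convolution with a centred PSF; BCs given as an np.pad mode."""
    m = len(psf) // 2
    K = np.zeros((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = 1.0
        ep = np.pad(e, m, mode=mode)  # ep[m + k] is the extended value at index k
        # Column j holds y_i = sum_s psf[m + s] * e_ext[i - s].
        K[:, j] = [sum(psf[m + s] * ep[m + i - s] for s in range(-m, m + 1)) for i in range(n)]
    return K


rng = np.random.default_rng(5)
m, n = 3, 64
psf = rng.random(2 * m + 1)
psf /= psf.sum()
K = blur_matrix_1d(psf, n, "symmetric")  # Reflective BCs
C = blur_matrix_1d(psf, n, "wrap")       # Periodic BCs
R = K - C
```

Rows of R away from the boundary vanish because their stencil never leaves the FOV; in 2D the same argument gives a correction supported on a boundary band, whose rank is small compared with the number of pixels.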

On the other hand, Assumption (9b) is quite natural. It is indeed equivalent to requiring that

that is, the soft-thresholding parameter is continuously noise-dependent and it holds that as .

2.3. General Regularization Operator as

In image deblurring, in order to better preserve the edges of the reconstructed solution, a differential operator is usually introduced, where is chosen as a first- or second-order differential operator which holds in its kernel all those functions which possess the key features of the true solution that we wish to preserve. In particular, since we are interested in recovering the edges and curves of discontinuity of the true image, a common choice is to rely on the Laplace operator with Neumann BCs; see [24]. In the recent papers [25,26], observing the spectral distribution of the Laplacian, it was proposed to substitute with

with .
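As a concrete instance of a regularizing operator with a nontrivial kernel, the sketch below assembles the 2D discrete Laplacian with Neumann (reflective) BCs as a Kronecker sum. Its kernel is spanned by the constant image, and its eigenvalues are 4 sin²(πj/(2n)) + 4 sin²(πk/(2n)), since the 1D factor is diagonalized by the DCT-II. Dense matrices are used only for clarity, and this is the plain Laplacian, not the modified operator of [25,26].

```python
import numpy as np


def neumann_laplacian_1d(n):
    """Second-difference matrix with Neumann (reflective) BCs; its kernel is the constants."""
    L = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    L[0, 0] = L[-1, -1] = 1.0  # reflection across the boundary removes one neighbour
    return L


def neumann_laplacian_2d(n):
    """2D Laplacian on an n-by-n image as the Kronecker sum I (x) L1 + L1 (x) I."""
    L1 = neumann_laplacian_1d(n)
    I = np.eye(n)
    return np.kron(I, L1) + np.kron(L1, I)
```

Because constant images lie in the kernel, a penalty on this operator leaves piecewise-smooth intensity levels unpenalized while damping oscillatory components.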

Adding some new assumptions, we propose a modified version of the preceding Algorithm 1 that can take into account directly the operator .

Assumption 2.

We summarize the computations in Algorithm 2.

Algorithm 2.

We skip all the proofs of convergence, since they can easily be recovered by adapting the proofs in Appendix A along the lines of ([16], Section 4).

3. Structured PISTA with General Regularizing Operator

The structured case is a generalization of what was developed in [21,22], merging these ideas with the general approach described in Section 2. We skip some details since they can be easily recovered from the aforementioned papers.

The blurring matrix K is made of two parts: the PSF and the BCs inherited by the discretization. The latter choice gives rise to different types of structured matrices. Without losing generality and for the sake of simplicity, we consider a square PSF and we suppose that the center of the PSF is known.

Considering the pixels of the PSF, we can define the following generating function

where and we assumed that if the entry does not belong to [5]. Observe that can be seen as the Fourier coefficients of , so that the same information is contained in the generating function and in .
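The link between the generating function and the FFT can be checked directly: sampling the generating function on the grid θ = 2πj/n gives the eigenvalues of the associated BCCB matrix, which a 2D FFT computes after moving the PSF center to position (0, 0). The sketch assumes the sign convention φ(θ₁, θ₂) = Σ p_{s,t} e^{−i(sθ₁+tθ₂)} (NumPy's FFT convention); if (13) uses the opposite sign, the grid is simply reflected, θ ↦ −θ. Names are hypothetical.

```python
import numpy as np


def bccb_eigenvalues(psf, n):
    """Eigenvalues of the BCCB matrix generated by a centred, odd-sized PSF (via 2D FFT)."""
    m = psf.shape[0] // 2
    big = np.zeros((n, n))
    big[: psf.shape[0], : psf.shape[1]] = psf
    return np.fft.fft2(np.roll(big, (-m, -m), axis=(0, 1)))


def generating_function(psf, th1, th2):
    """Evaluate phi(th1, th2) = sum_{s,t} p_{s,t} exp(-i (s th1 + t th2)) on a tensor grid."""
    m = psf.shape[0] // 2
    offsets = np.arange(-m, m + 1)           # PSF indices relative to its centre
    E1 = np.exp(-1j * np.outer(th1, offsets))
    E2 = np.exp(-1j * np.outer(th2, offsets))
    return E1 @ psf @ E2.T                   # entry (a, b) is phi(th1[a], th2[b])
```

Evaluating φ directly costs O(n²k²) for a k × k PSF, whereas the FFT route costs O(n² log n), which is why the FFT is used in practice.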

Summarizing the notation that we set in the Introduction about the BCs, we have

We notice that, since the continuous operator is shift-invariant, in all these four cases K has a Toeplitz structure which depends on plus a correction term which depends on the chosen BCs.

In conclusion, we employ the unified notation , where can be any of the classes of matrices just introduced (i.e., , , , ). With this notation we wish to highlight the two crucial elements that determine K: the blurring phenomenon associated with the PSF described by and the chosen BCs represented by .

Given the generating function (13) associated with the PSF , let us compute the eigenvalues of the corresponding BCCB matrix by means of the 2D-FFT, where . Fix a regularizing (differential) operator as in Section 2, and suppose that Assumptions 1 and 2 hold. The differential operator can also be of the form , as in Algorithm 1. Let now

be the new eigenvalues after the application of the Tikhonov filter to , where are the eigenvalues (singular values) of and is computed as in Algorithms 1 and 2. Let us compute now the coefficients of

by means of the 2D-iFFT and, finally, let us define

where corresponds to the most fitting BCs for the model problem (1).
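A minimal sketch of the structure-preserving construction: compute the BCCB eigenvalues λ of the PSF by the 2D FFT, apply a Tikhonov-type filter, return to the pixel domain by the 2D iFFT to obtain a new "PSF", and apply it with the same BCs chosen for K. The filter conj(λ)/(|λ|² + ασ²) (with σ² = 1 when no regularizing operator is used) is our assumption about (14); α is kept fixed rather than computed as in Algorithms 1 and 2, and all names are hypothetical. We take n odd so that the new PSF has a central pixel.

```python
import numpy as np

# np.pad modes realizing each BC (illustrative mapping).
PAD_MODES = {
    "zero": {"mode": "constant"},
    "periodic": {"mode": "wrap"},
    "reflective": {"mode": "symmetric"},
    "antireflective": {"mode": "reflect", "reflect_type": "odd"},
}


def bccb_eigenvalues(psf, n):
    """Eigenvalues of the BCCB matrix generated by a centred, odd-sized PSF."""
    m = psf.shape[0] // 2
    big = np.zeros((n, n))
    big[: psf.shape[0], : psf.shape[1]] = psf
    return np.fft.fft2(np.roll(big, (-m, -m), axis=(0, 1)))


def blur_with_bc(x, psf, bc):
    """Convolve x with a centred PSF after padding x according to the BC."""
    m = psf.shape[0] // 2
    xp = np.pad(x, m, **PAD_MODES[bc])
    kernel = np.zeros(xp.shape)
    kernel[: psf.shape[0], : psf.shape[1]] = psf
    kernel = np.roll(kernel, (-m, -m), axis=(0, 1))
    y = np.real(np.fft.ifft2(np.fft.fft2(kernel) * np.fft.fft2(xp)))
    return y[m : m + x.shape[0], m : m + x.shape[1]]


def structure_preserving_psf(psf, n, alpha, sigma2=None):
    """Pixel-domain coefficients of the filtered spectrum (assumed Tikhonov filter)."""
    lam = bccb_eigenvalues(psf, n)
    if sigma2 is None:
        sigma2 = np.ones((n, n))  # no regularizing operator, i.e. L = I
    lam_f = np.conj(lam) / (np.abs(lam) ** 2 + alpha * sigma2)
    coeffs = np.real(np.fft.ifft2(lam_f))  # real for a real PSF and symmetric sigma2
    return np.fft.fftshift(coeffs)  # centre moved to (n // 2, n // 2); n is odd


def apply_preconditioner(r, psf, alpha, bc="reflective"):
    """Apply the new PSF to r with the same BCs chosen for K."""
    return blur_with_bc(r, structure_preserving_psf(psf, r.shape[0], alpha), bc)
```

With Periodic BCs the preconditioner reduces to the Fourier-domain filter itself, a convenient sanity check; with Reflective or Anti-Reflective BCs the same spectral information is combined with the boundary structure of K, which is the point of the structure-preserving strategy.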

We are now ready to formulate the last method, whose computations are reported in Algorithm 3.

Algorithm 3.

In the case that , the algorithm is modified as follows:

where , . We will denote this version by . We will not provide a direct proof of convergence for this last algorithm. Let us just observe that the difference between (15) and (12)–(8) is just a correction of small rank and small norm.

4. Numerical Experiments

We now compare the proposed algorithms with some methods from the literature. In particular, we consider the AIT-GP algorithm described in [16] and the ISTA algorithm described in [8]. The AIT-GP method can be seen as Algorithm 2 with , while the ISTA algorithm is equivalent to iterations of Algorithm 2 without the preconditioner. These comparisons allow us to show how the quality of the reconstructed solution is improved by the presence of both the soft-thresholding and the preconditioner.

The ISTA method and our proposals require the selection of a regularization parameter. For all these methods we select the parameter that minimizes the relative restoration error (RRE) defined by

For the comparison of the algorithms we consider the Peak Signal to Noise Ratio (PSNR) defined by

where is the number of elements of and M denotes the maximum value of . Moreover, we consider the Structural Similarity index (SSIM); since its definition is involved, here we only recall that this index measures how accurately the computed approximation reconstructs the overall structure of the image. The higher the SSIM, the better the reconstruction, and the maximum achievable value is 1; see [27] for a precise definition of the SSIM.
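For reference, a sketch of the two error measures, assuming the standard definitions RRE(x) = ‖x − x_true‖₂/‖x_true‖₂ and PSNR(x) = 20 log₁₀(M√N/‖x − x_true‖₂), with N and M as above; `x_true` is our name for the true image.

```python
import numpy as np


def rre(x, x_true):
    """Relative restoration error ||x - x_true|| / ||x_true|| (Frobenius norm for images)."""
    return np.linalg.norm(x - x_true) / np.linalg.norm(x_true)


def psnr(x, x_true):
    """PSNR = 20 log10(M sqrt(N) / ||x - x_true||), M = max of x_true, N = number of entries."""
    N = x_true.size
    M = np.max(x_true)
    return 20.0 * np.log10(M * np.sqrt(N) / np.linalg.norm(x - x_true))
```

Since M√N/‖x − x_true‖ = M/√MSE, this is the usual 10 log₁₀(M²/MSE); lower RRE and higher PSNR indicate better reconstructions.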

We now describe how we construct the operator W. We use the tight frames determined by linear B-splines; see, e.g., [28]. For one-dimensional problems they are composed of a low-pass filter $W_0$ and two high-pass filters $W_1$ and $W_2$. These filters are determined by the masks

$$w^{(0)} = \frac{1}{4}[1,\,2,\,1], \qquad w^{(1)} = \frac{\sqrt{2}}{4}[1,\,0,\,-1], \qquad w^{(2)} = \frac{1}{4}[-1,\,2,\,-1].$$

Imposing reflexive boundary conditions, we determine the analysis operator W so that $W^TW = I$. Define the matrices

and

Then the operator W is defined by

To construct the two-dimensional framelet analysis operator we use the tensor products

The matrix is a low-pass filter; all the other matrices contain at least one high-pass filter. The analysis operator is given by
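The construction above can be sketched with dense matrices for a small n: each 1D filter is applied with reflexive BCs by padding with `np.pad(..., mode="symmetric")`, the 2D operator stacks the nine Kronecker products, and the tight-frame property WᵀW = I can be verified numerically. We assume the linear B-spline masks (1/4)[1, 2, 1], (√2/4)[1, 0, −1], (1/4)[−1, 2, −1]; the function names are hypothetical, and in practice W would be applied by convolution rather than stored.

```python
import numpy as np

# Masks of the linear B-spline tight framelet (low-pass first).
MASKS = [
    np.array([1.0, 2.0, 1.0]) / 4.0,
    np.sqrt(2.0) / 4.0 * np.array([1.0, 0.0, -1.0]),
    np.array([-1.0, 2.0, -1.0]) / 4.0,
]


def framelet_1d(n):
    """Return [W0, W1, W2], each n-by-n, with reflexive BCs."""
    mats = []
    for h in MASKS:
        Wk = np.zeros((n, n))
        for j in range(n):
            e = np.zeros(n)
            e[j] = 1.0
            ep = np.pad(e, 1, mode="symmetric")  # reflexive extension by one pixel
            # (Wk e)_i = h[0] e_{i-1} + h[1] e_i + h[2] e_{i+1}
            Wk[:, j] = h[0] * ep[:-2] + h[1] * ep[1:-1] + h[2] * ep[2:]
        mats.append(Wk)
    return mats


def framelet_2d(n):
    """Stack the nine tensor products W_i (x) W_j into the (9 n^2)-by-n^2 analysis operator."""
    W1d = framelet_1d(n)
    return np.vstack([np.kron(Wi, Wj) for Wi in W1d for Wj in W1d])
```

The 2D property follows from the 1D one, since Σᵢⱼ (Wᵢ ⊗ Wⱼ)ᵀ(Wᵢ ⊗ Wⱼ) = (Σᵢ WᵢᵀWᵢ) ⊗ (Σⱼ WⱼᵀWⱼ) = I ⊗ I.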

In , following [26], we set

All the computations were performed on MATLAB R2018b running on a laptop with an Intel i7-8750H @ 2.20 GHz CPU and 16 GB of RAM.

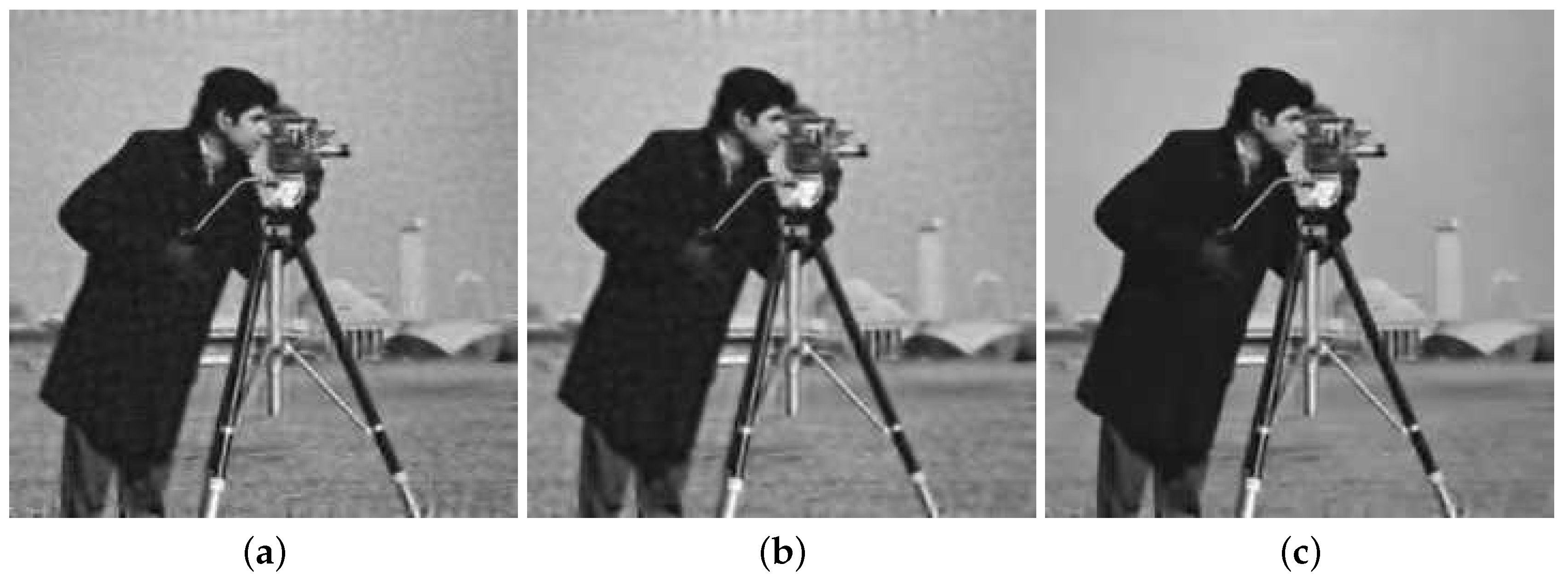

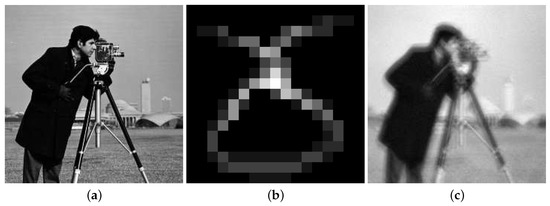

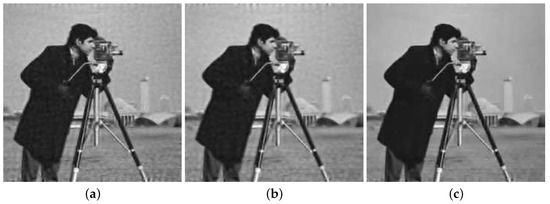

4.1. Cameraman

We first considered the cameraman image in Figure 3a and we blurred it with the non-symmetric PSF in Figure 3b. We then added white Gaussian noise obtaining the blurred and noisy image in Figure 3c. Note that we cropped the boundaries of the image to simulate real data; see [1] for more details. Since the image was generic we imposed reflexive BCs.

Figure 3.

Cameraman test problem: (a) True image ( pixels), (b) point spread function (PSF) ( pixels), (c) Blurred and noisy image with of white Gaussian noise ( pixels).

In Table 1 we report the results obtained with the different methods. We can observe that provided the best reconstruction of all considered algorithms. Moreover, in general, the introduction of the structured preconditioner improved the quality of the reconstructed solutions, especially in terms of SSIM. Visual inspection of the reconstructions in Figure 4 shows that the structured preconditioner visibly reduced the boundary artifacts and avoided the amplification of the noise.

Table 1.

Comparison of the quality of the reconstructions for all considered examples. We highlight in boldface the best result.

Figure 4.

Cameraman test problem reconstructions: (a) ISTA, (b) , (c) .

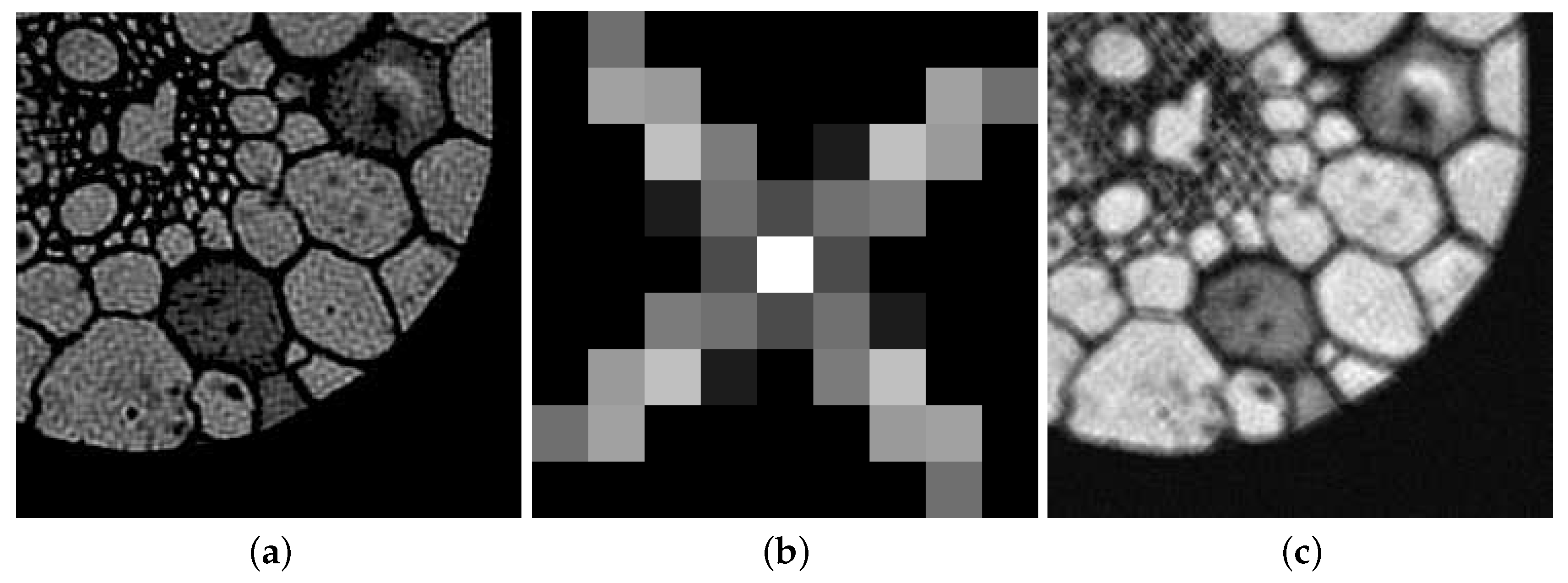

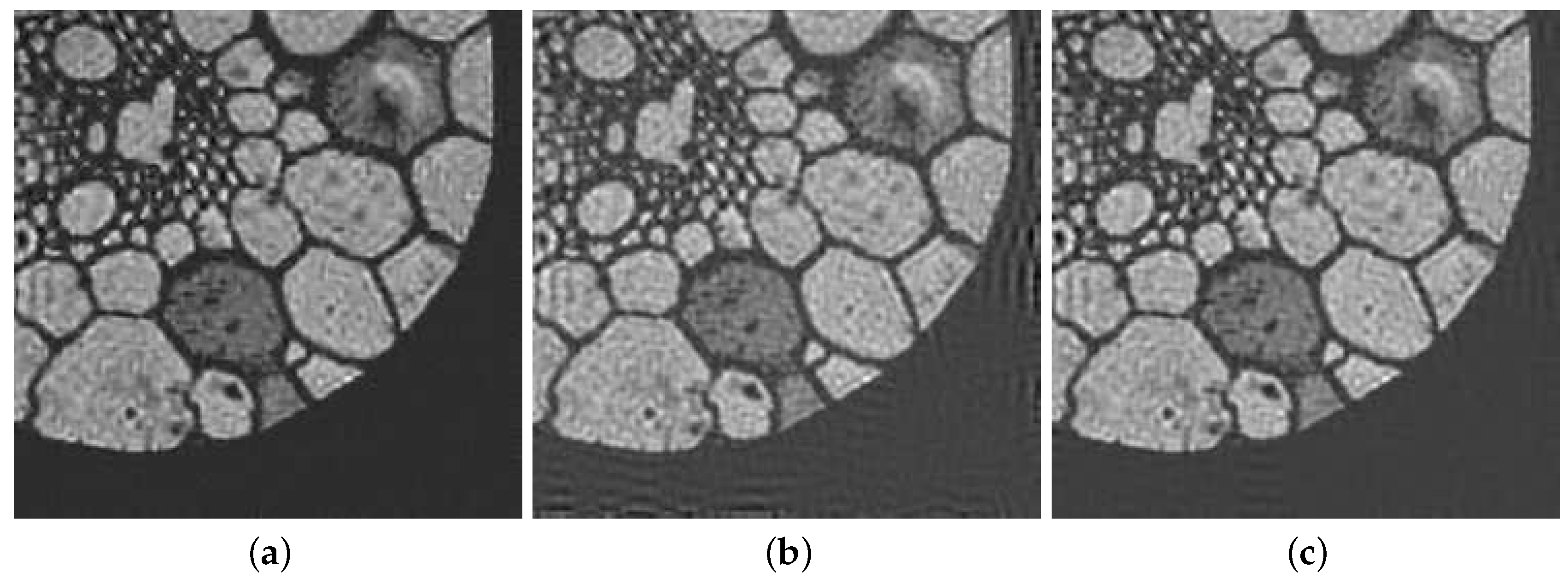

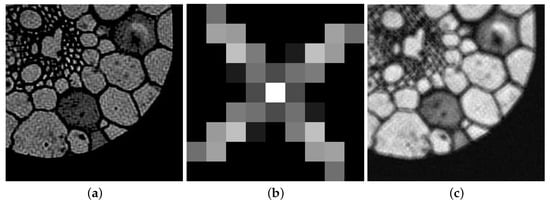

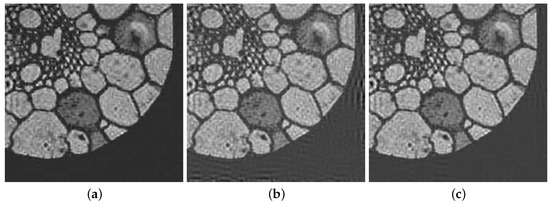

4.2. Grain

We then considered the grain image in Figure 5a and blurred it with the PSF in Figure 5b, obtained by the superposition of two motion PSFs. After adding of white Gaussian noise and cropping the boundaries, we obtained the blurred and noisy image in Figure 5c. According to the nature of the image, we used reflexive BCs.

Figure 5.

Grain test problem: (a) True image ( pixels), (b) PSF ( pixels), (c) Blurred and noisy image with of white Gaussian noise ( pixels).

Again, in Table 1 we report all the results obtained with the considered methods. In this case ISTA provided the best reconstruction in terms of RRE and PSNR. However, provided the best reconstruction in terms of SSIM and very similar results in terms of PSNR and RRE. In Figure 6 we report some of the reconstructed solutions. Visual inspection of these reconstructions shows that the structured preconditioner reduced the ringing and boundary effects in the computed solutions.

Figure 6.

Grain test problem reconstructions: (a) ISTA, (b) , (c) .

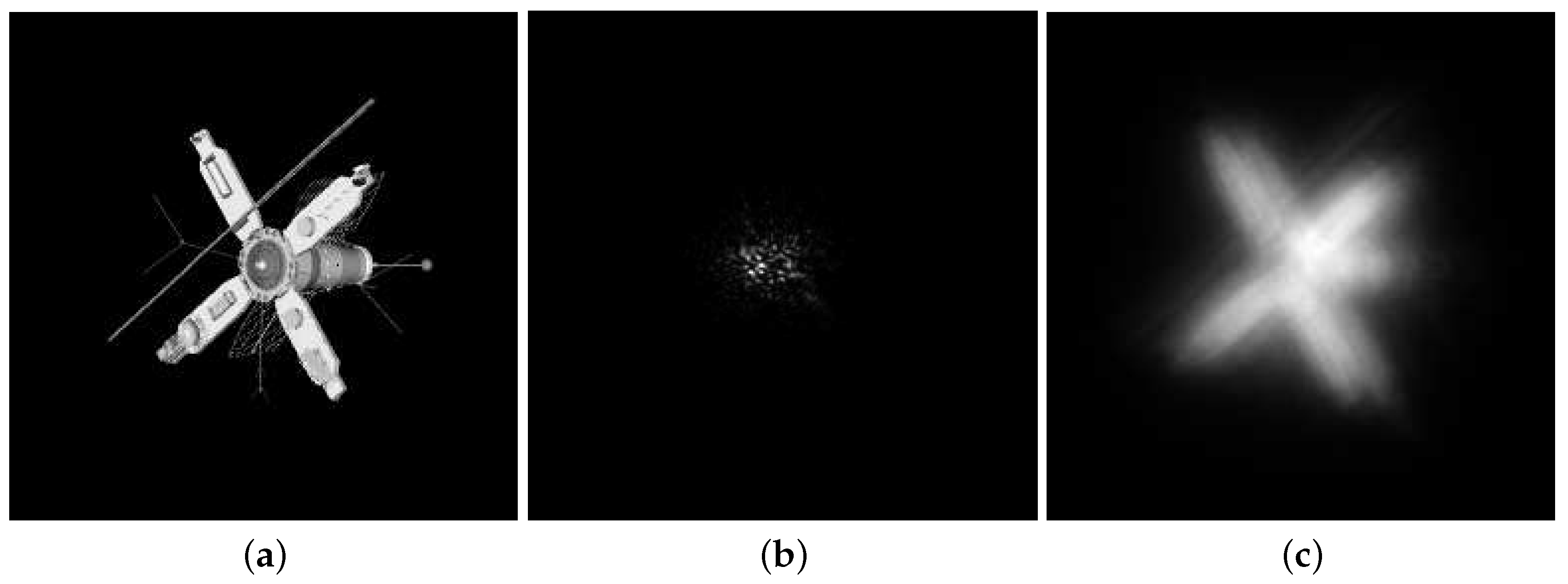

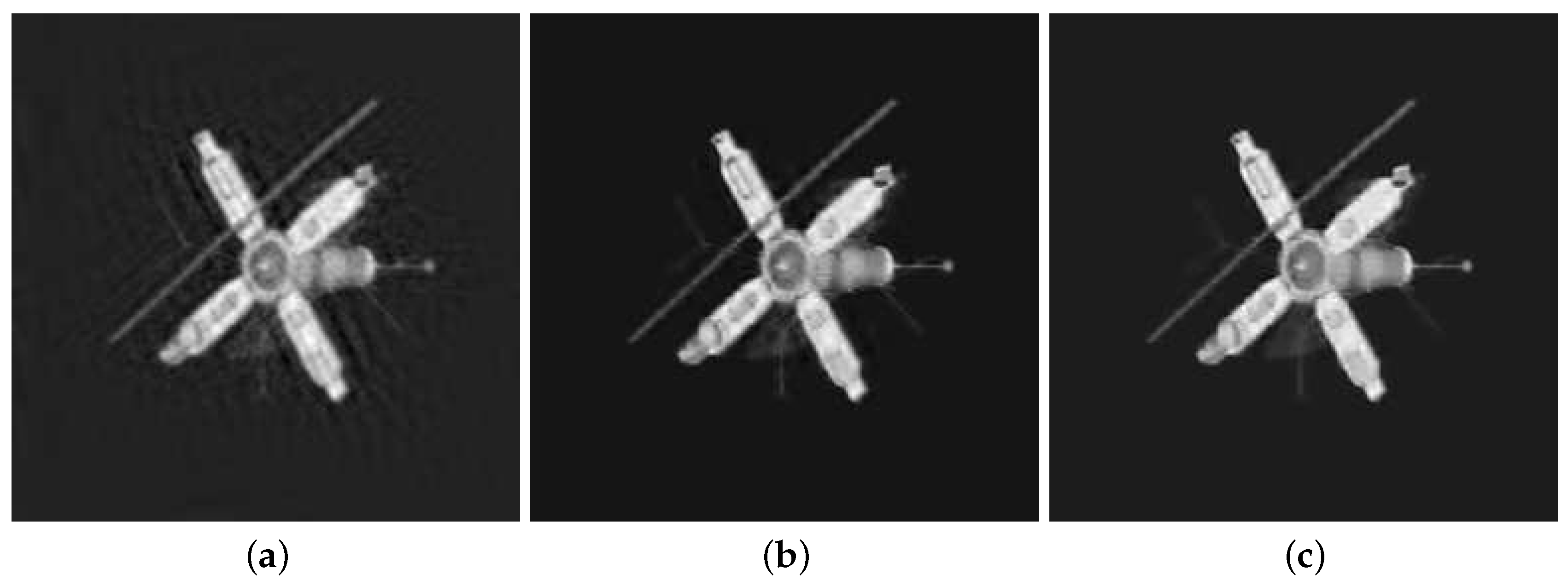

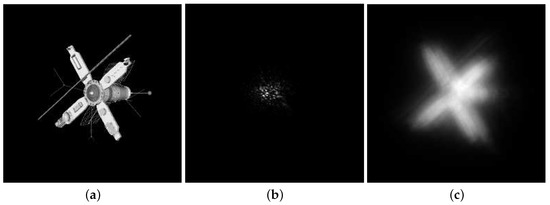

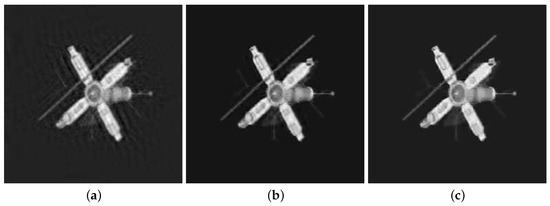

4.3. Satellite

Our final example is the atmosphericBlur30 test problem from the MATLAB toolbox RestoreTools [2]. The true image, PSF, and blurred and noisy image are reported in Figure 7a–c, respectively. Since we knew the true image, we could estimate the noise level in the image, which was approximately . Since this is an astronomical image, we imposed zero BCs.

Figure 7.

Satellite test problem: (a) True image ( pixels), (b) PSF ( pixels), (c) Blurred and noisy image with ≈ of white Gaussian noise ( pixels).

From the comparison of the computed results in Table 1 we can see that the method provided the best reconstruction among all considered methods. In this particular example, ISTA provided a very low quality reconstruction in terms of both RRE and SSIM. We report some reconstructions in Figure 8. Visual inspection of the computed solutions shows that neither the approximation obtained with nor the one obtained with presented heavy ringing effects, while the reconstruction obtained by AIT-GP presented very heavy ringing around the “arms” of the satellite. This shows the benefit of introducing soft-thresholding into the AIT-GP method.

Figure 8.

Satellite test problem reconstructions: (a) AIT-GP, (b) , (c) .

5. Conclusions

This work further develops and brings together the techniques studied in [16,17,21,22,29]. The idea is to combine thresholding iterative methods, an approximate Tikhonov regularization scheme depending on a general (differential) operator, and a structure-preserving approach, with the main goal of reducing the boundary artifacts which appear in the deblurred image when artificial boundary conditions are imposed. The numerical results are promising and show improvements with respect to known state-of-the-art deblurring algorithms. There are still open problems, mainly concerning the theoretical assumptions and convergence proofs, which will be further investigated in future works.

Author Contributions

Writing–original draft, D.B. and A.B. These authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

Both authors are members of INdAM-GNCS Gruppo Nazionale per il Calcolo Scientifico. A.B.'s work is partially funded by the Young Researcher Project “Reconstruction of sparse data” of the GNCS group of INdAM and by the Regione Autonoma della Sardegna research project “Algorithms and Models for Imaging Science [AMIS]” (RASSR57257, intervento finanziato con risorse FSC 2014-2020 - Patto per lo Sviluppo della Regione Sardegna).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proofs

Hereafter we analyze Algorithm 1, aiming to prove its convergence. The techniques used in the proofs of most of the following results can be traced back to the papers [15,16,17], and therefore bring few mathematical novelties other than the results themselves. Nevertheless, since the proofs are very technical and even a slight change can produce nontrivial difficulties, we present a full treatment, leaving out no details, in order to make this paper self-contained and easily readable.

Following up on Section 2.1, we need to introduce some more notation. Let us consider the singular value decomposition (SVD) of C as the triple such that

where is the orthonormal group and is the adjoint of the operator V, i.e., for every pair . We will denote the spectrum of by

Hereafter, without loss of generality we will assume that

The first issue we have to consider is the existence of the sequence .

Lemma A1.

Let . Then for every fixed n there exists that satisfies (7). It can be computed by the following iteration

where

The convergence is locally quadratic. The existence of the regularization parameter and the locally quadratic convergence of the algorithm above are independent and uniform with respect to the dimension .

Proof.

The existence of is an easy consequence of the monotonicity of

with respect to . Indeed, let us rewrite (7) as follows

where is the discrete spectral measure associated to with respect to the SVD of C and are the singular values of the spectrum of C. Since for every , then by monotone convergence it holds that

Indeed, it is not difficult to prove that whenever and , as assumed in the hypothesis. Since for the left-hand side of (A2) is zero, we conclude that there exists a unique such that equality holds in (7). Due to the generality of our proof and the fact that we could pass the limit under the integral sign, the existence of such an is granted uniformly with respect to the dimension .

Since

fixing , let us now define the following function

Since

then there exist two constants independent of such that

and in particular for every n and m. Therefore, if we define

it holds that

Then the Newton iteration applied to yields the iteration

By (A3), is a decreasing convex function in . Since , obviously we have that

If

then necessarily we would have that . Hence, , and consequently

From (A6) we would deduce that , but this is absurd since as already observed above, if . Therefore and by standard properties of the Newton iteration, converges to the minimizer from below and the convergence is locally quadratic. Finally, defining

then we get (A1), converges monotonically from above to and the convergence is locally quadratic. Again, thanks to (A4) and (A5), the rate of convergence is uniform with respect to the dimension of . □
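The Newton iteration of Lemma A1 can be sketched in the spectral basis of C. Here we assume that (7) takes the discrepancy-type form of the approximated iterated Tikhonov method of [15], namely that α is chosen so that the filtered residual α(CC* + αh(CC*))⁻¹h(CC*) r has norm q‖r‖; in the SVD basis this reads Σₖ |r̂ₖ|² (α/(α + wₖ))² = q² Σₖ |r̂ₖ|² with wₖ = σₖ²/h(σₖ²) (for h ≡ 1 this is the AIT choice). This is our reading, not a transcription of (7); it requires 0 < q < 1 and wₖ > 0. As in the proof, Newton's method is applied to the decreasing convex function of β = 1/α, starting from β = 0.

```python
import numpy as np


def alpha_by_newton(r_hat, w, q, tol=1e-12, maxit=100):
    """Find alpha > 0 with ||r_hat / (1 + w / alpha)|| = q * ||r_hat||.

    Newton's method on F(beta) = sum |r_hat|^2 / (1 + beta w)^2 - q^2 ||r_hat||^2,
    beta = 1/alpha. F is decreasing and convex, so from beta = 0 the iterates
    increase monotonically to the root; alpha = 1/beta then decreases to its limit.
    """
    p = np.abs(r_hat) ** 2
    target = q**2 * p.sum()
    beta = 0.0
    for _ in range(maxit):
        d = 1.0 + beta * w
        F = np.sum(p / d**2) - target
        dF = -2.0 * np.sum(w * p / d**3)
        step = F / dF
        beta -= step
        if abs(step) <= tol * beta:  # relative step small enough: stop
            break
    return 1.0 / beta
```

Each Newton step costs O(n²) once the spectral coefficients of the residual are available, which is negligible compared with the FFTs of one outer iteration.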

From now on, instead of working with the error , in order to simplify the following proofs and notations, it is useful to consider the partial error with respect to , namely

This will not affect the generality of our proofs, thanks to the continuity of with respect to the noise level .

Proposition A1.

Proof.

Combining the preceding proposition with (7), we are going to show that the sequence is monotonically decreasing. We have the following result.

Proposition A2.

Let be defined in (A7). If the assumptions (9) are satisfied, then of Algorithm 1 decreases monotonically for . In particular, we deduce

Proof.

Corollary A1.

Proof.

The first inequality follows by taking the sum of the quantities in (A9) from up to .

For the second inequality, note that for every

and every , we have

and hence,

as for . This implies that in (7) satisfies , thus

According to the choice of parameters in Algorithm 1, we deduce

and

Therefore, there exists , depending only on and q such that

and

Now the second inequality follows immediately. □

From (A10) it can be seen that the sum of the squares of the residual norms is bounded, and hence, if , there must be a first integer such that (A10) is fulfilled, i.e., Algorithm 1 terminates after finitely many iterations.

Finally, we are ready to prove a convergence and regularity result.

Theorem A1.

Proof.

We are going to show convergence for the sequence and then the thesis will follow easily from the continuity of , i.e.,

The proof of the convergence for the sequence can be divided into two steps: in the first step, we show the convergence in the noise-free case . In particular, the sequence converges to a solution of (A11) that is the closest to . In the second step, we show that given a sequence of positive real numbers as , then we get a corresponding sequence converging as .

Step 1: Fix . It follows that , and the sequence will not stop, i.e., , since the discrepancy principle will not be satisfied by any n, in particular for . Set , with . It holds that

where the last inequality comes from (11). At the same time, we have that

Combining together (A12) and (A13), we obtain that

This is valid for every . Choosing l such that , it follows that

From Proposition A2, is a converging sequence, and from Corollary A1

since it is the tail of a converging series. Therefore,

and is a Cauchy sequence, and therefore convergent.

Step 2: Let be the converging point of the sequence and let be a sequence of positive real numbers converging to 0. For every , let be the first positive integer such that (A10) is satisfied, whose existence is granted by Corollary A1, and let be the corresponding sequence. For every fixed , there exists such that

and there exists for which

due to the continuity of the operator for every fixed n. Therefore, let us choose large enough such that and such that for every . Such does exist since and for . Hence, for every , we have

where the first inequality comes from Proposition A2 and the last one from (A14) and (A15). □

References

- Hansen, P.C.; Nagy, J.G.; O’Leary, D.P. Deblurring Images: Matrices, Spectra, and Filtering; SIAM: Philadelphia, PA, USA, 2006; Volume 3.

- Nagy, J.G.; Palmer, K.; Perrone, L. Iterative methods for image deblurring: A Matlab object-oriented approach. Numer. Algorithms 2004, 36, 73–93.

- Almeida, M.S.; Figueiredo, M. Deconvolving images with unknown boundaries using the alternating direction method of multipliers. IEEE Trans. Image Process. 2013, 22, 3074–3086.

- Dell’Acqua, P. A note on Taylor boundary conditions for accurate image restoration. Adv. Comput. Math. 2017, 43, 1283–1304.

- Donatelli, M. Fast transforms for high order boundary conditions in deconvolution problems. BIT Numer. Math. 2010, 50, 559–576.

- Hanke, M.; Hansen, P.C. Regularization methods for large-scale problems. Surv. Math. Ind. 1993, 3, 253–315.

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Physica D 1992, 60, 259–268.

- Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

- Figueiredo, M.A.; Nowak, R.D. An EM algorithm for wavelet-based image restoration. IEEE Trans. Image Process. 2003, 12, 906–916.

- Chan, R.H.; Chan, T.F.; Shen, L.; Shen, Z. Wavelet algorithms for high-resolution image reconstruction. SIAM J. Sci. Comput. 2003, 24, 1408–1432.

- Cai, J.F.; Osher, S.; Shen, Z. Linearized Bregman iterations for frame-based image deblurring. SIAM J. Imag. Sci. 2009, 2, 226–252.

- Cai, J.F.; Osher, S.; Shen, Z. Split Bregman methods and frame based image restoration. Multiscale Model. Simul. 2010, 8, 337–369.

- Dell’Acqua, P.; Donatelli, M.; Estatico, C. Preconditioners for image restoration by reblurring techniques. J. Comput. Appl. Math. 2014, 272, 313–333.

- Yin, W.; Osher, S.; Goldfarb, D.; Darbon, J. Bregman iterative algorithms for ℓ1-minimization with applications to compressed sensing. SIAM J. Imag. Sci. 2008, 1, 143–168.

- Donatelli, M.; Hanke, M. Fast nonstationary preconditioned iterative methods for ill-posed problems, with application to image deblurring. Inverse Probl. 2013, 29, 095008.

- Buccini, A. Regularizing preconditioners by non-stationary iterated Tikhonov with general penalty term. Appl. Numer. Math. 2017, 116, 64–81.

- Cai, Y.; Donatelli, M.; Bianchi, D.; Huang, T.Z. Regularization preconditioners for frame-based image deblurring with reduced boundary artifacts. SIAM J. Sci. Comput. 2016, 38, B164–B189.

- Buccini, A.; Donatelli, M.; Reichel, L. Iterated Tikhonov regularization with a general penalty term. Numer. Linear Algebra Appl. 2017, 24, e2089.

- Buccini, A.; Pasha, M.; Reichel, L. Generalized singular value decomposition with iterated Tikhonov regularization. J. Comput. Appl. Math. 2020, 373, 112276.

- Huang, G.; Reichel, L.; Yin, F. Projected nonstationary iterated Tikhonov regularization. BIT Numer. Math. 2016, 56, 467–487.

- Dell’Acqua, P.; Donatelli, M.; Estatico, C.; Mazza, M. Structure preserving preconditioners for image deblurring. J. Sci. Comput. 2017, 72, 147–171.

- Bianchi, D.; Buccini, A.; Donatelli, M. Structure Preserving Preconditioning for Frame-Based Image Deblurring. In Computational Methods for Inverse Problems in Imaging; Springer: Berlin/Heidelberg, Germany, 2019; pp. 33–49.

- Rudin, W. Functional Analysis; International Series in Pure and Applied Mathematics; McGraw-Hill Education: New York, NY, USA, 1991.

- Engl, H.W.; Hanke, M.; Neubauer, A. Regularization of Inverse Problems; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1996; Volume 375.

- Bianchi, D.; Donatelli, M. On generalized iterated Tikhonov regularization with operator-dependent seminorms. Electron. Trans. Numer. Anal. 2017, 47, 73–99.

- Huckle, T.K.; Sedlacek, M. Tikhonov–Phillips regularization with operator dependent seminorms. Numer. Algorithms 2012, 60, 339–353.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Buccini, A.; Reichel, L. An ℓ2–ℓq Regularization Method for Large Discrete Ill-Posed Problems. J. Sci. Comput. 2019, 78, 1526–1549.

- Huang, J.; Donatelli, M.; Chan, R.H. Nonstationary iterated thresholding algorithms for image deblurring. Inverse Probl. Imaging 2013, 7, 717–736.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).