Abstract

This paper is concerned with orthogonal polynomials. Upper and lower bounds for Legendre polynomials are obtained. Entropies associated with discrete probability distributions are also considered, and bounds for these entropies, which improve some previously known results, are obtained in terms of inequalities. Graphs are provided to illustrate the results and to compare them with other results from the literature.

1. Introduction

The classical orthogonal polynomials play an important role in applications of mathematical analysis, in spectral methods with applications in fluid dynamics, and in other areas of interest. In recent years, many theoretical and numerical studies of Jacobi polynomials have appeared. Inequalities for Jacobi polynomials using entropic and information inequalities were obtained in [1]. Bernstein-type inequalities for Jacobi polynomials and their applications in many research topics in mathematics were considered in [2]. A very recent conjecture (see [3]), which asserts that the sum of the squared Bernstein polynomials is a convex function on $[0,1]$, was validated using properties of Jacobi polynomials. This conjecture aroused great interest, and new proofs of it were given (see [4,5]).

The main objective of this paper is to obtain upper and lower bounds for Legendre polynomials and to apply these results in order to give lower and upper bounds for entropies. Entropies are usually described by complicated expressions, so it is useful to establish bounds for them. Entropy is a measure of the uncertainty of a random variable and was introduced by C.E. Shannon in [6]. Later, A. Rényi [7] introduced a parametric family of information measures that includes the Shannon entropy as a special case. The classical Shannon entropy was defined for discrete probability distributions. The concept was later extended to continuous probability distributions. For more details about this topic, the reader is referred to the recent papers [8,9,10,11,12]. This paper is devoted to the entropy associated with discrete probability distributions.

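The Jacobi polynomials $P_n^{(\alpha,\beta)}$ are orthogonal polynomials with respect to the weight $(1-x)^{\alpha}(1+x)^{\beta}$, $\alpha,\beta>-1$, on the interval $[-1,1]$. Their representation by the hypergeometric function is given as follows (see [13,14]):

$$P_n^{(\alpha,\beta)}(x)=\frac{(\alpha+1)_n}{n!}\,{}_2F_1\!\left(-n,\,n+\alpha+\beta+1;\,\alpha+1;\,\frac{1-x}{2}\right),$$

where

$${}_2F_1(a,b;c;z)=\sum_{k=0}^{\infty}\frac{(a)_k(b)_k}{(c)_k\,k!}\,z^k$$

and $(a)_k=a(a+1)\cdots(a+k-1)$, $(a)_0=1$, is the shifted factorial.

The normalization of $P_n^{(\alpha,\beta)}$ is effected by

$$P_n^{(\alpha,\beta)}(1)=\binom{n+\alpha}{n}.$$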

Denote by the polynomials:

If $\alpha=\beta$, the Jacobi polynomials are called Gegenbauer (ultraspherical) polynomials. The usual notation and normalization for the ultraspherical polynomials are the following (see [15])

Note that

Some important special cases are the Chebyshev polynomial of the first kind

the Chebyshev polynomial of the second kind

and the Legendre polynomial
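The special cases above can be checked numerically. The following is a minimal sketch, assuming the standard hypergeometric representation $P_n^{(\alpha,\beta)}(x)=\frac{(\alpha+1)_n}{n!}\,{}_2F_1(-n,n+\alpha+\beta+1;\alpha+1;\frac{1-x}{2})$ and the standard relations $T_n(x)=P_n^{(-1/2,-1/2)}(x)/P_n^{(-1/2,-1/2)}(1)$, $U_n(x)=(n+1)\,P_n^{(1/2,1/2)}(x)/P_n^{(1/2,1/2)}(1)$ and $P_n(x)=P_n^{(0,0)}(x)$. The function names are illustrative and the normalizations may be written differently in the displayed formulas of the paper.

```python
import math

def pochhammer(a, k):
    """Shifted factorial (a)_k = a (a+1) ... (a+k-1), with (a)_0 = 1."""
    result = 1.0
    for j in range(k):
        result *= a + j
    return result

def jacobi(n, alpha, beta, x):
    """P_n^{(alpha,beta)}(x) from the terminating 2F1 series in (1-x)/2.

    Assumes alpha, beta > -1, so that (alpha+1)_k never vanishes.
    """
    z = (1.0 - x) / 2.0
    series = sum(
        pochhammer(-n, k) * pochhammer(n + alpha + beta + 1, k)
        / (pochhammer(alpha + 1, k) * math.factorial(k)) * z**k
        for k in range(n + 1)
    )
    return pochhammer(alpha + 1, n) / math.factorial(n) * series

def legendre(n, x):
    """Legendre polynomial as the Jacobi case alpha = beta = 0."""
    return jacobi(n, 0.0, 0.0, x)

def chebyshev_t(n, x):
    """Chebyshev polynomial of the first kind, normalized so T_n(1) = 1."""
    return jacobi(n, -0.5, -0.5, x) / jacobi(n, -0.5, -0.5, 1.0)

def chebyshev_u(n, x):
    """Chebyshev polynomial of the second kind, normalized so U_n(1) = n + 1."""
    return (n + 1) * jacobi(n, 0.5, 0.5, x) / jacobi(n, 0.5, 0.5, 1.0)
```

With $x=\cos\theta$, the values `chebyshev_t(n, x)` and `chebyshev_u(n, x)` should agree with $\cos n\theta$ and $\sin((n+1)\theta)/\sin\theta$ up to rounding, which gives a quick sanity check of the normalizations.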

2. Preliminary Results

Theorem 1.

Let . Then, the inequalities

hold for all , where

Proof.

From (8), it follows that

Let , and consider a function defined by

where .

Next, we determine the extreme points of the function f.

We consider . From the system

we get

Solving the system, we obtain the following stationary points:

where

We have,

Since , it follows that A is a maximum point for f. For , we have

Then f attains its minimum value at the points B and C.

We compute

We observe that

It follows

For and , we obtain (7). □

Remark 1.

The bounds of for and were obtained in [16] as follows

3. Bounds for Legendre Polynomials

Lemma 1.

Let

where is the integer part of the positive real number x. The following relation holds:

Proof.

From [14] (eq. 4.7.30, p. 83) we have

Therefore,

Using the formula below (see [17] ((15.3.3), p. 559))

we obtain

Then,

The link between the Chebyshev polynomials of the first and second kind is given by the following two relations (see [14] (Theorem 4.1, p. 59))

Using the equalities

we obtain, after a straightforward computation:

Therefore

□
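Relations between the Chebyshev polynomials of the first and second kind are easy to check numerically. The sketch below verifies two standard identities, $T_n(x)=U_n(x)-x\,U_{n-1}(x)$ and $(1-x^2)\,U_{n-1}(x)=x\,T_n(x)-T_{n+1}(x)$ for $n\ge 1$. It is an assumption that these are the two relations used in the proof of Lemma 1; they are shown only as an illustration of how such links can be tested. The function names are hypothetical.

```python
def chebyshev_t(n, x):
    """T_n(x) by the recurrence T_{k+1} = 2x T_k - T_{k-1}, T_0 = 1, T_1 = x."""
    prev, curr = 1.0, x
    if n == 0:
        return prev
    for _ in range(n - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr

def chebyshev_u(n, x):
    """U_n(x) by the same recurrence with U_0 = 1, U_1 = 2x."""
    prev, curr = 1.0, 2.0 * x
    if n == 0:
        return prev
    for _ in range(n - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr

def relation_residuals(n, x):
    """Residuals of the two identities for n >= 1 (zero up to rounding)."""
    r1 = chebyshev_t(n, x) - (chebyshev_u(n, x) - x * chebyshev_u(n - 1, x))
    r2 = (1.0 - x * x) * chebyshev_u(n - 1, x) - (
        x * chebyshev_t(n, x) - chebyshev_t(n + 1, x)
    )
    return r1, r2
```

Calling `relation_residuals(n, x)` for several degrees and points in $[-1,1]$ should return pairs that are zero up to floating-point error.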

Theorem 2.

Let be the Legendre polynomial of degree . Then, for

where is the Chebyshev polynomial of the second kind.

Proof.

Using the relation (see [18] (p. 713))

we get

The Jacobi polynomials satisfy the following equality (see [14] ((4.5.4), p. 71)):

Using relation (11), we get

Therefore,

Consequently,

and the Theorem is proved. □

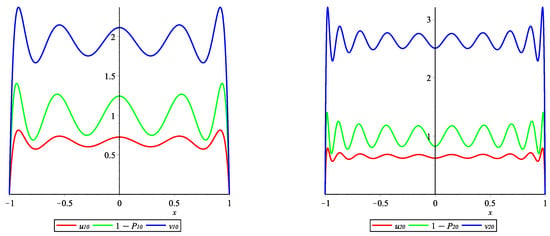

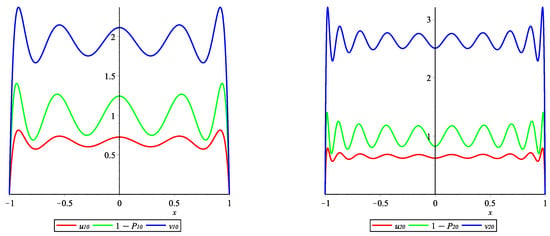

Example 1.

Let , . Figure 1 shows the graphs of the polynomial and of its lower and upper bounds obtained in Theorem 2.

Figure 1.

Graphs of , and .

4. Bounds for Information Potentials

Let be a parameterized discrete probability distribution. The associated information potential is defined by

The Rényi entropy of order 2 and the Tsallis entropy of order 2 associated with can be expressed in terms of the information potential as follows (see [18] (pp. 20–21)):

In the following, we consider the binomial distribution , the Poisson distribution and the negative binomial distribution :

The associated information potentials (indices of coincidence) of these distributions are defined as follows:

From [10] (56) and [12] (21) we know that

The following lower and upper bounds for the information potential associated with the binomial distribution were obtained in [11]:

Using Remark 1, new bounds for the information potentials and can be obtained as follows:

Theorem 3.

The following inequalities are satisfied

Remark 2.

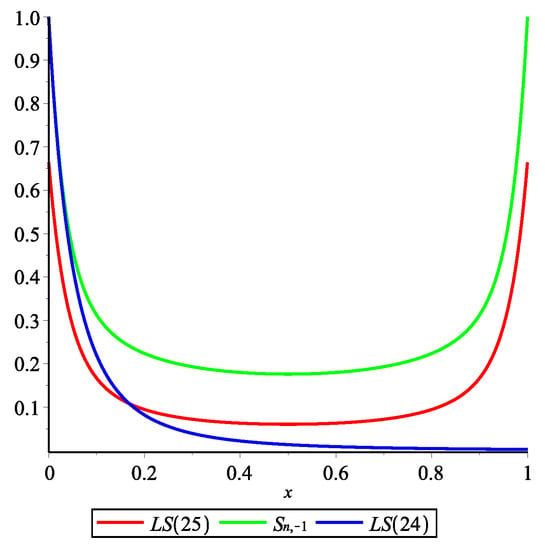

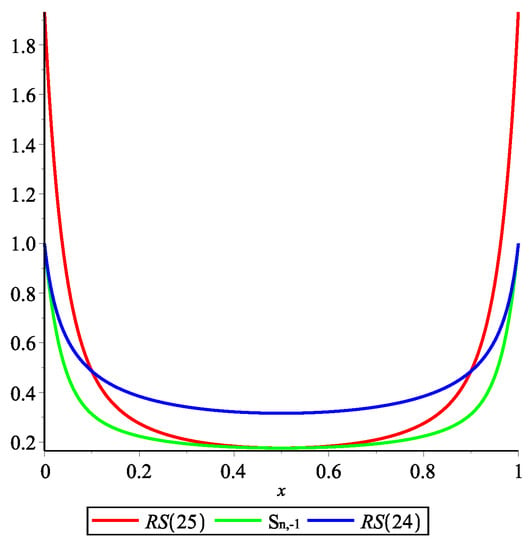

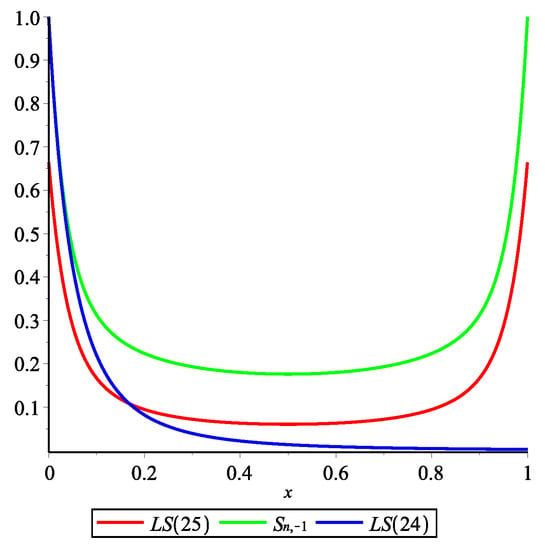

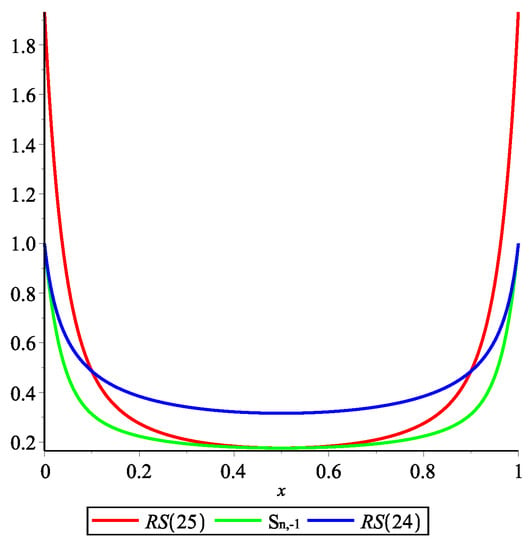

Let . In Figure 2 we give a graphical representation of the lower bounds of from (24) and (25), respectively. Denote these bounds by LS(24) and LS(25), respectively. In Figure 3 we give a graphical representation of the upper bounds of from (24) and (25), respectively. Denote these bounds by RS(24) and RS(25), respectively. Note that on certain intervals the results obtained in Theorem 3 improve the result from [11].

Figure 2.

Graphs of lower bounds of .

Figure 3.

Graphs of upper bounds of .
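The quantities discussed in this section can be computed directly for the binomial distribution. The following is a minimal sketch, assuming the usual definitions: the information potential is the sum of squared probabilities $S=\sum_k p_k^2$, the Rényi entropy of order 2 is $-\log S$, and the Tsallis entropy of order 2 is $1-S$. It also checks the classical Legendre representation $S_n(x)=(1-2x)^n P_n\!\left(\frac{1-2x+2x^2}{1-2x}\right)$ for $x\neq 1/2$, which follows from $\sum_k \binom{n}{k}^2 t^k=(1-t)^n P_n\!\left(\frac{1+t}{1-t}\right)$. Whether this is exactly the form quoted from [10] and [12] is an assumption, and the function names are illustrative.

```python
import math

def binomial_pmf(n, x):
    """Probabilities C(n,k) x^k (1-x)^(n-k), k = 0..n, for 0 <= x <= 1."""
    return [math.comb(n, k) * x**k * (1.0 - x) ** (n - k) for k in range(n + 1)]

def information_potential(probs):
    """Index of coincidence: sum of squared probabilities."""
    return sum(p * p for p in probs)

def renyi2(probs):
    """Renyi entropy of order 2 (natural logarithm)."""
    return -math.log(information_potential(probs))

def tsallis2(probs):
    """Tsallis entropy of order 2."""
    return 1.0 - information_potential(probs)

def legendre(n, y):
    """Legendre P_n(y) by Bonnet's recurrence; valid for any real y."""
    prev, curr = 1.0, y
    if n == 0:
        return prev
    for k in range(1, n):
        prev, curr = curr, ((2 * k + 1) * y * curr - k * prev) / (k + 1)
    return curr

def binomial_potential_via_legendre(n, x):
    """(1-2x)^n P_n((1-2x+2x^2)/(1-2x)); requires x != 1/2."""
    s = 1.0 - 2.0 * x
    return s**n * legendre(n, (1.0 - 2.0 * x + 2.0 * x * x) / s)
```

Comparing `information_potential(binomial_pmf(n, x))` with `binomial_potential_via_legendre(n, x)` at a few points gives a quick consistency check. The argument of $P_n$ lies outside $[-1,1]$ here, which is why the recurrence is used rather than a trigonometric formula.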

5. Conclusions

This paper is devoted to orthogonal polynomials. Bounds for Legendre polynomials are obtained in terms of inequalities. A more general result concerning the estimate of the coefficients is obtained and used to give bounds for the information potentials associated with the binomial distribution and the negative binomial distribution. Bounds for the entropies are especially useful when the entropies are described by complicated expressions. The results obtained in this paper improve some results from the literature. Motivated by the many applications of entropies in secure data transmission, speech coding, cryptography, and algorithmic complexity theory, finding such bounds for the entropies will be a topic for future work.

Author Contributions

The authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

Project financed by Lucian Blaga University of Sibiu & Hasso Plattner Foundation research grants LBUS-IRG-2019-05.

Acknowledgments

The authors are very grateful to the reviewers for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Manko, V.I.; Markovich, L.A. Entropic Inequalities and Properties of Some Special Functions. J. Russ. Laser Res. 2014, 35, 200–210.

2. Koornwinder, T.; Kostenko, A.; Teschl, G. Jacobi polynomials, Bernstein-type inequalities and dispersion estimates for the discrete Laguerre operator. Adv. Math. 2018, 333, 796–821.

3. Gonska, H.; Rasa, I.; Rusu, M.D. Chebyshev–Grüss-type inequalities via discrete oscillations. Buletinul Academiei de Stiinte a Republicii Moldova. Matematica 2014, 1, 63–89.

4. Gavrea, I.; Ivan, M. On a conjecture concerning the sum of the squared Bernstein polynomials. Appl. Math. Comput. 2014, 241, 70–74.

5. Nikolov, G. Inequalities for ultraspherical polynomials. Proof of a conjecture of I. Raşa. J. Math. Anal. Appl. 2014, 418, 852–860.

6. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

7. Rényi, A. On measures of information and entropy. In Proceedings of the Fourth Berkeley Symposium on Mathematics, Statistics and Probability 1960, Berkeley, CA, USA, 20 June–30 July 1960; University of California Press: Berkeley, CA, USA, 1961; pp. 547–561.

8. Acu, A.M.; Başcanbaz-Tunca, G.; Raşa, I. Information potential for some probability density functions. Appl. Math. Comput. 2021, 389, 125578.

9. Raşa, I. Convexity Properties of Some Entropies (II). Results Math. 2019, 74, 154.

10. Raşa, I. Entropies and Heun functions associated with positive linear operators. Appl. Math. Comput. 2015, 268, 422–431.

11. Bărar, A.; Mocanu, G.R.; Raşa, I. Bounds for some entropies and special functions. Carpathian J. Math. 2018, 34, 9–15.

12. Barar, A.; Mocanu, G.; Rasa, I. Heun functions related to entropies. RACSAM 2019, 113, 819–830.

13. Askey, R.; Gasper, G. Positive Jacobi polynomial sums. Am. J. Math. 1976, 98, 709–737.

14. Szegö, G. Orthogonal Polynomials; Amer. Math. Soc. Colloq. Publ.; American Mathematical Society: Providence, RI, USA, 1967; Volume 23.

15. NIST Digital Library of Mathematical Functions, Chapter 18. Available online: https://dlmf.nist.gov/18 (accessed on 12 November 2020).

16. Toth, L. On the Wallis formula. Mat. Lapok (Cluj) 1993, 4, 128–130. (In Hungarian)

17. Abramowitz, M.; Stegun, I.A. Spravochnik po Spetsial’nym Funktsiyam s Formulami, Grafikami i Matematicheskimi Tablitsami (Handbook of Mathematical Functions with Formulas, Graphs and Mathematical Tables); Nauka: Moscow, Russia, 1979.

18. Principe, J.C. Information Theoretic Learning. Renyi’s Entropy and Kernel Perspectives; Springer: New York, NY, USA, 2010.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).