Abstract

High dimensional embeddings of graph data into hyperbolic space have recently been shown to have great value in encoding hierarchical structures, especially in natural language processing, named entity recognition, and the machine generation of ontologies. Given the striking success of these approaches, we extend the well-known hyperbolic geometric random graph models of Krioukov et al. to arbitrary dimension, providing a detailed analysis of the degree distribution behavior of the model in an expanded portion of the parameter space, including several regimes which have not previously been considered. Our analysis includes a study of the asymptotic correlations of degree in the network, revealing a non-trivial dependence on the dimension and the power law exponent. These results pave the way for using hyperbolic geometric random graph models in high dimensional contexts, which may provide a new window into the internal states of network nodes, manifested only by their external interconnectivity.

1. Introduction

Many complex networks, which arise from extremely diverse areas of study, surprisingly share a number of common properties. They are sparse, in the sense that the number of edges scales only linearly with the number of nodes, they have small radius, connected nodes tend to have many of their neighbors in common (beyond what would be expected from, for example, random graphs drawn from a distribution with a fixed degree sequence), and their degree sequence has a power law tail, generally with an exponent less than 3 [1,2,3,4]. One of the major efforts of network science is to devise models which are able to simultaneously capture these common properties.

Many models of complex networks have been proposed which are able to capture some of the common properties above. Perhaps the most well known is the Barabási–Albert model of preferential attachment [5]. This model is described in terms of a growing network, in which nodes of high degree are more likely to attract connections from newly born nodes. It is effective in capturing the power law degree sequence, but fails to generate graphs in which connected nodes tend to have a large fraction of their neighbors in common (often called ‘strong clustering’). There are growing indications that strong clustering, the tendency for affiliation of neighbors of connected nodes, is a manifestation of the network having a geometric structure [6], based upon the notion of a geometric random graph. However, geometric random graphs based upon a flat, Euclidean geometry do not give rise to power law degree distributions. Curved space, on the other hand, in particular the negatively curved space of hyperbolic geometry, does give rise to such a heterogeneous distribution of degrees, as shown by Krioukov et al. [7]. Random geometric graphs in hyperbolic geometry therefore provide extremely promising models for the structure of complex networks. Besides curvature, an arguably even more fundamental aspect of a geometric space is its dimension. After all, many more possibilities open up when one navigates a space of higher dimension. Can random geometric graph models based on higher dimensional geometry account for structures in graphs which have so far eluded description within lower dimensional models?

Recent developments in machine learning for natural language processing suggest that this is likely the case, as higher dimensional hyperbolic geometries prove more effective at link prediction in author collaboration networks than do embeddings into lower dimensional hyperbolic space [8]. High dimensional hyperbolic embeddings are also proving fruitful in representing taxonomic information within machine generated knowledge bases [9,10]. It may be worth noting that, in these machine learning contexts, the hyperbolic geometry generally plays a slightly different role. There one often seeks an isometric embedding of some structured data, such as word proximity graphs in natural language texts, into hyperbolic space of some dimension. In our approach based upon random geometric graphs, we are taking the geometric description more fundamentally, as we seek an embedding which covers the entire space uniformly, rather than covering merely an isometric subset of the space. The idea, ultimately, is to associate data with a dynamical description of geometry, for example, as is done in gravitational physics [11].

We consider statistical mechanical models of complex networks as introduced in [12], and, in particular, their application to random geometric graphs in hyperbolic space [7]. Fountoulakis has computed the asymptotic distribution of the degree of an arbitrary vertex in the hyperbolic model, and shown that it gives rise to a degree sequence which follows a power law [13]. More generally, it is known that the hyperbolic models are effective in capturing all of the above properties, including the strong clustering behavior which typically evades non-geometric models. Fountoulakis also proves that the degrees of any finite collection of vertices are asymptotically independent, so that in this sense the correlations that one might expect from spatial proximity vanish in the asymptotic limit. Furthermore, it is possible to assign an effective meaning to the dimensions of the hyperbolic embedding space: the radial dimension can be regarded as a measure of popularity of a network node, while the angular dimension acts as a space in which the similarity of nodes can be represented, such that nearby nodes are similar to each other, while distant nodes are not [14].

As far as we are aware, all attention on this model to date has focused on two-dimensional hyperbolic space. Generalization to higher dimension does appear in an as yet unpublished manuscript [15], which extends the hyperbolic geometric models to arbitrary dimension; however, explicit proofs regarding the degree distribution are not provided there. High dimensional hyperbolic embeddings have also been explored from the machine learning perspective, but not as an explicit model of random geometric graphs as considered here [8]. It is reasonable to expect that, in order to capture the behavior of real world complex networks, it will be necessary to allow for similarity spaces of more than one dimension. We therefore construct a model of random geometric graphs in a ball of $\mathbb{H}^{d+1}$, in direct analogy to the construction of [7]. In this model, the radial coordinate retains its effective meaning as a measure of the popularity of a node, while the similarity space becomes a full d-sphere, allowing for a much richer characterization of the interests of a person in a social network, or the role of an entity in some complex organizational structure. We perform a careful asymptotic analysis, yielding precise results which help clarify various aspects of the model.

In particular, we compute the asymptotic degree distribution in five regions of the parameter space, for arbitrary dimension, only one region of which ( and ) was considered in Fountoulakis’ treatment [13]. For $d = 1$, the angular probability distribution is simply a constant, while for $d > 1$ it is proportional to $\sin^{d-1}$ of the angular separation between the two nodes, so we cannot expect to generalize the prior results for $d = 1$ straightforwardly to higher dimension. In fact, the angular integrals are tedious and not easy to compute. We use a series expansion of the integrand to obtain a sharp asymptotic estimate of the angular integral, which is the key step in performing the high dimensional analysis.

For $d = 1$, Fountoulakis also computes the correlation of node degrees in the model, and shows that it goes to zero in the asymptotic limit [13]. We generalize this result to arbitrary dimension, finding that asymptotic independence of degrees requires a steeper fall-off of the degree distribution (governed by the parameter $\alpha$) at larger dimension d. This dimensional dependence is reasonable, in the sense that, in higher dimensions, there are more directions in which nodes can interact. When computing clustering for the high dimensional model, the non-trivial angular dependence will pose an even greater challenge, since for three nodes there is much less angular symmetry. Our present analysis thus lays the groundwork for such future research in high dimensional hyperbolic space.

Our model employs a random mapping of graph nodes to points in a hyperbolic ball, giving these nodes hyperbolic coordinates, and preferentially connecting pairs of nodes that are nearby in the hyperbolic geometry. Given this close relationship with hyperbolic geometry, one might expect the resulting graphs to be intrinsically hyperbolic, in the sense of Gromov’s notion of δ-hyperbolicity. This is an important question, in that it would connect to a number of important results on hyperbolicity of graphs [16], and may shed light on the relevance of these hyperbolic geometric models to real world networks [17]. We do not expect our model to generate δ-hyperbolic networks for the entire range of its parameter space because, for example, one can effectively send the curvature to zero by choosing (with ). However, we would expect it to be the case when the effect of curvature is large, such as when R grows rapidly with respect to N. We do not know for certain whether the particular ranges of parameters that we explore in this paper generate δ-hyperbolic graphs for , almost surely, but we consider it likely.

1.1. Model Introduction

We consider a class of exponential random graph models, which are Gibbs (or Boltzmann) distributions on random labeled graphs [12,18]. These models define a probability distribution on graphs in terms of a Hamiltonian ‘energy functional’ $H(G)$, for which the probability of a graph G is $P(G) = e^{-H(G)/T}/Z$. Here T is a ‘temperature’, which controls the relevance of the Hamiltonian H, and Z is a normalizing factor, often called the ‘partition function’. Note that the probability distribution becomes uniform in the limit $T \to \infty$. The Hamiltonian, which encodes the ‘energy’ of the graph, consists of a sum of ‘observables’, each multiplied by a corresponding Lagrange multiplier, which controls the relevance of that observable’s value in the probability of a given graph. We begin with the most general model for which the probability of each edge is independent, wherein each probability is governed by its own Lagrange multiplier $\omega_{uv}$. Thus, our Hamiltonian is $H(G) = \sum_{u<v} \omega_{uv}\, a_{uv}(G)$, where $a_{uv}(G) = 1$ if u and v are connected by an edge in G, and 0 otherwise. The partition function then factorizes as $Z = \prod_{u<v}\left(1 + e^{-\omega_{uv}/T}\right)$, and therefore the probability of occurrence of an edge between vertices u and v is

$$p_{uv} = \frac{1}{e^{\omega_{uv}/T} + 1}. \tag{1}$$

Following [7], we embed each of N nodes into a hyperbolic ball of finite radius R in $\mathbb{H}^{d+1}$, and set the ‘link energies’ to be based on the distances between the embedded locations of the vertices u and v, though here we allow all integer values of $d \geq 1$. In particular, we set

where $\mu$ is a connectivity distance threshold. Note that each pair of vertices closer together than $\mu$ contributes negatively to the ‘total energy’ whenever a link between them is present, so that the ‘ground state’ (minimum energy, highest probability) graph will have links between every pair of vertices closer than $\mu$, and no links between pairs farther apart than $\mu$. Note that in the limit as $T \to 0$, the probability of the ground state graph goes to 1, and that of every other graph vanishes. Thus

In a real world network, we may expect the largest node degree to be comparable with N itself, and the lowest degree nodes to have degree of order 1. To maintain this behavior, following [7], we restrict attention to the submanifold of models for which (If then , the expected degree of the origin node, satisfies if then from (10); if then .) Thus

Additionally, for ease of comparison with numerous results in the literature, and to keep track of ‘distance units’, we follow [7] in retaining the parameter $\zeta$, which governs the curvature $K = -\zeta^2$ of the hyperbolic geometry, even though modifying it adds nothing to the space of models which cannot be achieved through a tuning of R. (Changing $\zeta$ has the sole effect of scaling all radial coordinates, which can equivalently be achieved by scaling R.)
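To make the edge probability of the independent-edge Gibbs model of Section 1.1 concrete, the following sketch enumerates every labeled graph on three vertices. It weights each graph by $e^{-H(G)/T}$ with $H(G) = \sum_{u<v}\omega_{uv} a_{uv}$ and compares the resulting marginal edge probabilities with the Fermi-Dirac form $1/(e^{\omega_{uv}/T}+1)$. The name `omega` for the per-pair Lagrange multipliers and the numerical link energies are illustrative choices for this sketch only, not values from the paper. The last line also checks the limits discussed above: as $T \to 0$ the probability becomes a step function of the sign of the link energy, and as $T \to \infty$ it tends to 1/2, which is the uniform distribution on graphs.

```python
import itertools
import math


def fermi_dirac(omega: float, T: float) -> float:
    """Edge probability 1 / (exp(omega / T) + 1) for a single independent edge."""
    return 1.0 / (math.exp(omega / T) + 1.0)


def gibbs_edge_marginals(omegas: dict, T: float) -> dict:
    """Brute-force P(a_uv = 1) under P(G) = exp(-H(G) / T) / Z,
    where H(G) = sum over vertex pairs of omega_uv * a_uv(G)."""
    pairs = list(omegas)
    Z = 0.0
    weight_with_edge = dict.fromkeys(pairs, 0.0)
    # Every labeled graph on these pairs is a 0/1 vector of edge indicators.
    for bits in itertools.product((0, 1), repeat=len(pairs)):
        H = sum(omegas[pair] * a for pair, a in zip(pairs, bits))
        w = math.exp(-H / T)
        Z += w
        for pair, a in zip(pairs, bits):
            if a:
                weight_with_edge[pair] += w
    return {pair: weight_with_edge[pair] / Z for pair in pairs}


# Hypothetical link energies for the three vertex pairs of a 3-vertex graph.
omegas = {(0, 1): -0.7, (0, 2): 0.3, (1, 2): 1.5}
T = 0.8
marginals = gibbs_edge_marginals(omegas, T)
for pair, omega in omegas.items():
    print(pair, f"{marginals[pair]:.6f}", f"{fermi_dirac(omega, T):.6f}")

# Low temperature: negative-energy links (almost) always present, positive-energy
# links (almost) never; very high temperature gives probability close to 1/2.
print(fermi_dirac(-1.0, 0.01), fermi_dirac(1.0, 0.01), fermi_dirac(1.0, 1e6))
```

Brute-force enumeration is exponential in the number of vertex pairs. It is used here only because it checks the factorization of Z directly, without assuming it.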

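We will use spherical coordinates on $\mathbb{H}^{d+1}$, so that each point x has coordinates $(r, \theta_1, \ldots, \theta_d)$, with $(\theta_1, \ldots, \theta_d)$ the usual coordinates on $S^d$, with $\theta_i \in [0, \pi]$ for $1 \le i \le d-1$, and $\theta_d \in [0, 2\pi)$. In these coordinates the distance $d_{\mathbb{H}}(u,v)$ between two points $u$ and $v$, with radial coordinates $r_u$ and $r_v$, is given by the hyperbolic law of cosines

$$\cosh\big(\zeta\, d_{\mathbb{H}}(u,v)\big) = \cosh(\zeta r_u)\cosh(\zeta r_v) - \sinh(\zeta r_u)\sinh(\zeta r_v)\cos\theta_{uv},$$

where $\theta_{uv}$ is the angular distance between $u$ and $v$ on $S^d$. In these coordinates the uniform probability density function, from the volume element in $\mathbb{H}^{d+1}$, factorizes into a product of functions of the separate radial and spherical coordinates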

With the usual spherical coordinates on , the uniform distribution arises from , with for and . In place of a uniform distribution of points, we use a spherically symmetric distribution whose radial coordinates are sampled from the probability density

where $\alpha$ is a real parameter which controls the radial density profile, and the constant prefactor normalizes the density. Note that $\alpha = \zeta$ corresponds to a uniform density with respect to the hyperbolic geometry. Thus, when $\alpha > \zeta$, the nodes are more likely to lie at large radius than they would with a uniform embedding in hyperbolic space, and when $\alpha < \zeta$ they are more likely to lie nearer the origin. We will see later that the degree of a node diminishes exponentially with its distance from the origin, and that with larger values of $\alpha$ the model tends to produce networks with a degree distribution which falls off more rapidly at large degree, while with smaller $\alpha$ it produces networks whose degree distribution possesses a ‘fatter tail’ at large degree.
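The following sketch shows one way to sprinkle nodes according to such a spherically symmetric distribution. It assumes the radial density is proportional to $\sinh^d(\alpha r)$ on $[0, R]$. That is the natural generalization of the $d = 1$ density of [7], and it reduces to the hyperbolic volume element $\sinh^d(\zeta r)$ when $\alpha = \zeta$. Radii are drawn by numerically inverting the cumulative distribution on a grid, with $\log\sinh$ evaluated stably so that large $\alpha R$ does not overflow. Directions are drawn uniformly on $S^d$ by normalizing standard Gaussian vectors in $\mathbb{R}^{d+1}$. Function names and parameter values are illustrative.

```python
import numpy as np


def sample_radii(n, R, alpha, d, rng, grid_size=20001):
    """Draw n radii on [0, R] from a density proportional to sinh(alpha r)**d
    (an assumed form), by numerically inverting the CDF on a uniform grid."""
    r = np.linspace(0.0, R, grid_size)
    # Stable log sinh(x) = x + log(1 - exp(-2x)) - log 2; equals -inf at x = 0.
    with np.errstate(divide="ignore"):
        log_sinh = alpha * r + np.log1p(-np.exp(-2.0 * alpha * r)) - np.log(2.0)
    log_w = d * log_sinh
    w = np.exp(log_w - log_w.max())  # density up to a constant, maximum value 1
    # Cumulative trapezoid rule, normalized to a CDF on [0, R].
    cdf = np.concatenate(([0.0], np.cumsum(0.5 * (w[1:] + w[:-1]) * np.diff(r))))
    cdf /= cdf[-1]
    return np.interp(rng.random(n), cdf, r)


def sample_sphere(n, d, rng):
    """Uniform points on the unit sphere S^d in R^(d+1) (normalized Gaussians)."""
    x = rng.standard_normal((n, d + 1))
    return x / np.linalg.norm(x, axis=1, keepdims=True)


rng = np.random.default_rng(0)
R, alpha, d = 20.0, 1.0, 2
radii = sample_radii(200_000, R, alpha, d, rng)
points = sample_sphere(1_000, d, rng)
# For large R the density is roughly proportional to exp(d * alpha * r), so R - r
# is approximately exponential with mean 1 / (d * alpha): nodes crowd the boundary.
print(R - radii.mean(), 1.0 / (d * alpha))
```

The concentration near $r = R$ seen here is the same effect that Lemma 1 and Corollary 1 quantify asymptotically.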

We would like to compute the expected degree sequence of the model, in a limit with and as . The model is label independent, meaning that, before the vertices are embedded into $\mathbb{H}^{d+1}$, all vertices are equivalent. To determine the expected degree sequence, it is therefore sufficient to compute the degree distribution of a single vertex, as a function of its embedding coordinates, and then integrate over the hyperbolic ball.
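Because the reduction above rests on the expected degree of a single vertex as a function of its radius, a Monte Carlo estimate can serve as a numerical cross-check. The sketch places u at radius `r_u` on the north pole, samples the other vertex from the assumed radial density $\propto \sinh^d(\alpha r)$ and a uniform direction on $S^d$, and averages the connection probability. Only the polar angle of v enters the distance. The connection probability is an assumption: we use the Fermi-Dirac form $1/(e^{\zeta(x-\mu)/(2T)}+1)$ in the hyperbolic distance x, modeled on the $d = 1$ model of [7] with a threshold `mu`. The paper’s own link energy is not reproduced here, and all parameter values are hypothetical.

```python
import numpy as np


def sample_radii(n, R, alpha, d, rng, grid_size=20001):
    """Radii on [0, R] with density proportional to sinh(alpha r)**d (assumed form)."""
    r = np.linspace(0.0, R, grid_size)
    with np.errstate(divide="ignore"):
        log_sinh = alpha * r + np.log1p(-np.exp(-2.0 * alpha * r)) - np.log(2.0)
    w = np.exp(d * log_sinh - (d * log_sinh).max())
    cdf = np.concatenate(([0.0], np.cumsum(0.5 * (w[1:] + w[:-1]) * np.diff(r))))
    return np.interp(rng.random(n), cdf / cdf[-1], r)


def sample_sphere(n, d, rng):
    """Uniform points on S^d in R^(d+1)."""
    x = rng.standard_normal((n, d + 1))
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def hyperbolic_distance(r_u, r_v, cos_theta, zeta=1.0):
    """Hyperbolic law of cosines; the same formula holds in every dimension.
    Suitable for moderate radii only (cosh overflows beyond zeta * r ~ 700)."""
    arg = (np.cosh(zeta * r_u) * np.cosh(zeta * r_v)
           - np.sinh(zeta * r_u) * np.sinh(zeta * r_v) * cos_theta)
    return np.arccosh(np.maximum(arg, 1.0)) / zeta


def connection_probability(x, mu, T, zeta=1.0):
    """Hypothetical Fermi-Dirac form 1 / (exp(zeta (x - mu) / (2T)) + 1),
    written via tanh so that large |x - mu| / T cannot overflow."""
    return 0.5 * (1.0 - np.tanh(zeta * (x - mu) / (4.0 * T)))


def expected_degree(r_u, N, R, alpha, d, mu, T, zeta=1.0, samples=200_000, seed=0):
    """Monte Carlo estimate of (N - 1) * E_v[p(distance(u, v))], with u at
    radius r_u on the north pole, so cos(theta_uv) is v's first coordinate."""
    rng = np.random.default_rng(seed)
    r_v = sample_radii(samples, R, alpha, d, rng)
    cos_theta = sample_sphere(samples, d, rng)[:, 0]
    x = hyperbolic_distance(r_u, r_v, cos_theta, zeta)
    return (N - 1) * connection_probability(x, mu, T, zeta).mean()


# Hypothetical parameters chosen only to give moderate degrees.
N, R, alpha, d, mu, T = 2000, 10.0, 1.0, 2, 16.0, 0.5
for r_u in (2.0, 6.0, 10.0):
    print(r_u, expected_degree(r_u, N, R, alpha, d, mu, T))
```

The estimate falls off steeply as `r_u` grows, in line with the exponential decay of degree with radius described above. Reusing the same seed for every `r_u` keeps the comparison between radii free of independent sampling noise.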

Given that each of the other vertices is randomly distributed in the ball according to the probability density (3), the expected degree of u equals the connection probability, integrated over the ball, summed over each of the other vertices. Thus,

Since the model is invariant under rotations about the origin, we can assume without loss of generality that our vertex u is embedded at the ‘north pole’ of $S^d$, so the relative angle between u and any other vertex v is just the polar angle of v. (The full hyperbolic geometry is of course completely homogeneous, and thus independent of the radial coordinate as well; however, the presence of the boundary at $r = R$ breaks the radial homogeneity.) Thus,

where are the radial coordinates of the vertices ; is the angle between them in the direction, and , with

To simplify the notation below, we use in place of .

In the case of , we have and

1.2. Main Results

Let be the vertex set of a random graph G with N vertices, whose elements are randomly distributed with a radially dependent density into a ball of radius R in $\mathbb{H}^{d+1}$, $d \geq 1$. Let denote the degree of the vertex u. Throughout as , unless otherwise indicated.

The main results of this paper are as follows:

Theorem 1.

Theorem 2.

Let and , and k be a non-negative integer, then

where , , and are defined by (A5). Here, as .

Theorem 3.

Let , and , for dimension d, for any integer and for any collection of m pairwise distinct vertices , , their degrees are asymptotically independent in the sense that, for any non-negative integers ,

The paper is organized as follows. In Section 1.1 above, we introduce the model. Specifically, we explain the exponential random graph model in hyperbolic space, explain the roles of the parameters, and provide some basic formulas for the mean degree. In Section 1.2 above, we state three main theorems. Theorems 1 and 2 state that, in the limit of large N, the probability that a node has degree k takes the form of a power law. Theorem 3 states that the degrees of any fixed number of distinct nodes are asymptotically independent as the network size N grows. In Section 2, we provide some useful preliminary results; in particular, we give an explicit approximation of the hyperbolic distance between two points under certain conditions, which improves upon previous such expressions. In Section 3, we compute the angular integral, which is the key obstacle that we need to overcome to extend the model to high dimensional hyperbolic space. We use an analytic expansion to obtain a sharp asymptotic estimate of the angular integral, which we were unable to carry out by computer; these computations appear in Appendix A. Our main proofs lie in Section 4, Section 5, Section 6 and Section 7. We compute the expected degree of a given vertex as a function of its radial coordinate, the mean degree of the network, and the asymptotic distribution of degree for the cases , , and , respectively, in Section 4, Section 5 and Section 6. The proof of Theorem 1 lies in Section 4.1 and the proof of Theorem 2 lies in Section 6.1. In Section 7, we analyze the asymptotic correlations of degree for and finally prove Theorem 4, which restates Theorem 3.

1.3. Simulations

The Cactus High Performance Computing Framework [19] defines a new paradigm in scientific computing by defining software in terms of abstract APIs, which allows scientists to construct interoperable modules without having to know the details of function argument specifications from these modules at the outset. One can write toolkits within the framework, which are collections of modules that perform computations in some scientific domain. For the simulation results shown in this paper, we have used a ComplexNetworks toolkit within Cactus, which we hope to make available soon under an open source license.

The ComplexNetworks toolkit is an extension of the CausalSets toolkit [20] which allows many computations involving complex networks to be easily run on distributed memory supercomputers, though the simulations we perform here are small enough to run on a single 12-core Xeon workstation. The model is implemented by a module which allows direct control of a number of parameters, including , , and T, of (1), and N.
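The ComplexNetworks toolkit and its Cactus interface are not reproduced here. For readers without access to it, the following NumPy sketch can stand in and generate one small network. It rests on three assumptions: a radial density proportional to $\sinh^d(\alpha r)$, uniform directions on $S^d$, and a hypothetical Fermi-Dirac connection probability $1/(e^{\zeta(x-\mu)/(2T)}+1)$ in the hyperbolic distance x, with a threshold `mu`. It is not the authors’ implementation, and it builds the full $N \times N$ distance matrix, so it only suits small N.

```python
import numpy as np


def sample_radii(n, R, alpha, d, rng, grid_size=20001):
    """Radii on [0, R], density proportional to sinh(alpha r)**d (assumed form)."""
    r = np.linspace(0.0, R, grid_size)
    with np.errstate(divide="ignore"):
        log_w = d * (alpha * r + np.log1p(-np.exp(-2.0 * alpha * r)) - np.log(2.0))
    w = np.exp(log_w - log_w.max())
    cdf = np.concatenate(([0.0], np.cumsum(0.5 * (w[1:] + w[:-1]) * np.diff(r))))
    return np.interp(rng.random(n), cdf / cdf[-1], r)


def sample_sphere(n, d, rng):
    """Uniform unit vectors on S^d in R^(d+1)."""
    x = rng.standard_normal((n, d + 1))
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def generate_graph(N, R, alpha, d, mu, T, zeta=1.0, seed=0):
    """Sprinkle N nodes into the ball and connect each pair independently."""
    rng = np.random.default_rng(seed)
    radii = sample_radii(N, R, alpha, d, rng)
    directions = sample_sphere(N, d, rng)
    cos_theta = np.clip(directions @ directions.T, -1.0, 1.0)
    ch, sh = np.cosh(zeta * radii), np.sinh(zeta * radii)
    # Pairwise hyperbolic law of cosines.
    arg = np.outer(ch, ch) - np.outer(sh, sh) * cos_theta
    dist = np.arccosh(np.maximum(arg, 1.0)) / zeta
    # Hypothetical Fermi-Dirac connection probability, via tanh for stability.
    p = 0.5 * (1.0 - np.tanh(zeta * (dist - mu) / (4.0 * T)))
    upper = np.triu(rng.random((N, N)) < p, k=1)  # each pair u < v drawn once
    return radii, upper | upper.T


radii, adjacency = generate_graph(N=500, R=10.0, alpha=1.0, d=2, mu=16.0, T=0.5)
degrees = adjacency.sum(axis=1)
print(degrees.mean(), degrees.max())
```

Plotting `degrees` against `radii` with a logarithmic vertical axis gives a picture of the same kind as Figure 1, though for these hypothetical parameters rather than the ones used there.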

2. Preliminaries

We begin with some basic calculations, before attempting to directly evaluate (4).

2.1. Radial Density

Let with , , , integer , and . Below are some basic calculations which will be very useful in the sequel.

Let . Then,

($f(x) = O(g(x))$, for some function $g$, means control of growth, i.e., $|f(x)| \le C\,|g(x)|$ for some constant $C > 0$, as the variable x grows.) When , we have

i.e.,

where

Now, letting ,

For , we have

If we set , for some , then

and

Note that provides some control over the effective density of nodes with respect to the hyperbolic volume measure, though subject to the asymptotic constraint that . Furthermore, we have

uniformly for all r with , where the function as . The following Lemma follows immediately from the above.

Lemma 1.

Let be the radial coordinate of the vertex . We have

If we choose , then

uniformly for all

Furthermore, letting with , then

Corollary 1.

If , a.a.s. all vertices have when .

(Throughout, a.a.s. stands for asymptotically almost surely, i.e., with a probability that tends to 1 as .)

Proof.

. Thus, . □

2.2. Distance

As mentioned in Section 1.1, the distance between two vertices u and v is given by the hyperbolic law of cosines

This hyperbolic distance can be approximated according to the following lemma.

Lemma 2.

Let such that as . Let be two distinct points in with denoting their relative angle. Let also If we assume with , then as

we have

here uniformly for all satisfying the above condition. Note that we can take , for example.

Proof.

For , we get , so . Thus, we may choose to make ; hence the condition is well defined. Obviously, we can take so that .

Next, we prove (13). The right-hand side of (12)

Here, , and we also make use of Young’s inequality to get

Thus,

so from (12), we get

Furthermore, from (12) and (14), we have

and, from (15), we have

where . Given that

(13) holds uniformly for all with . Notice that should grow more slowly than R as □
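Lemma 2 can be sanity checked numerically. The law of cosines is the same in every dimension, because the origin and any two points always lie in a totally geodesic copy of $\mathbb{H}^2$. The sketch below does not reproduce the precise error term in (13). It compares the exact law-of-cosines distance with the familiar leading-order approximation $r_u + r_v + \frac{2}{\zeta}\ln\sin(\theta/2)$, valid when both radii are large and the angle is not too small. It also shows how that approximation fails when $\theta$ falls far below $e^{-\zeta r}$, which is why the lemma bounds the relative angle from below. The radii and angles are illustrative.

```python
import math


def hyperbolic_distance(r_u, r_v, theta, zeta=1.0):
    """Exact distance from the hyperbolic law of cosines."""
    arg = (math.cosh(zeta * r_u) * math.cosh(zeta * r_v)
           - math.sinh(zeta * r_u) * math.sinh(zeta * r_v) * math.cos(theta))
    return math.acosh(max(arg, 1.0)) / zeta


def leading_order_distance(r_u, r_v, theta, zeta=1.0):
    """Approximation r_u + r_v + (2 / zeta) * log(sin(theta / 2))."""
    return r_u + r_v + (2.0 / zeta) * math.log(math.sin(theta / 2.0))


# Large radii, moderate angle: the approximation is very accurate.
print(hyperbolic_distance(10, 10, 0.5) - leading_order_distance(10, 10, 0.5))
# Angle far below exp(-zeta * r): the approximation fails badly (it even goes negative).
print(hyperbolic_distance(5, 5, 1e-6), leading_order_distance(5, 5, 1e-6))
```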

3. Angular Integral

The conditional probability that a node u at fixed radial coordinate connects to a node v at fixed radius is the connection probability (2) integrated over their possible relative angular separations :

We call this the angular integral, and, to simplify the notation, we will rescale variables as follows:

and set

so from Lemma 2, if and , then

with

so

Claim 1.

If , then .

Proof.

For , so we get □

Now, we set

which means as , where

Thus, for , we divide the integral (16) as follows:

We begin with the first part of the integral

The second part of the integral

- for , since ,

- for ,

- and for ,

Finally, we get the angular integral estimation:

Lemma 3.

assuming .
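A direct quadrature of the angular integral gives a numerical reference against which the asymptotic estimate of Lemma 3 can be compared for particular radii. The polar angle between two uniformly placed points on $S^d$ has density $\sin^{d-1}\theta / \int_0^\pi \sin^{d-1}\theta\, d\theta$, with normalization $\sqrt{\pi}\,\Gamma(d/2)/\Gamma((d+1)/2)$. For $d = 1$ this is the constant density $1/\pi$. The sketch integrates the connection probability against this density with a plain trapezoid rule. As before, the Fermi-Dirac form $1/(e^{\zeta(x-\mu)/(2T)}+1)$ with threshold `mu` is an assumption, not the paper’s exact link energy, and the parameter values are hypothetical.

```python
import math

import numpy as np


def angular_normalization(d):
    """Closed form of the integral of sin(theta)**(d-1) over [0, pi]."""
    return math.sqrt(math.pi) * math.gamma(d / 2) / math.gamma((d + 1) / 2)


def trapezoid(y, x):
    """Composite trapezoid rule (avoids version-specific NumPy helpers)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def angular_integral(r_u, r_v, d, mu, T, zeta=1.0, grid_size=400_001):
    """Connection probability of nodes at radii r_u and r_v, averaged over the
    polar angle theta with density sin(theta)**(d-1) / normalization.
    The grid must resolve angles of order exp(-zeta (r_u + r_v - mu) / 2)."""
    theta = np.linspace(0.0, math.pi, grid_size)
    arg = (np.cosh(zeta * r_u) * np.cosh(zeta * r_v)
           - np.sinh(zeta * r_u) * np.sinh(zeta * r_v) * np.cos(theta))
    x = np.arccosh(np.maximum(arg, 1.0)) / zeta
    # Hypothetical Fermi-Dirac connection probability, written via tanh.
    p = 0.5 * (1.0 - np.tanh(zeta * (x - mu) / (4.0 * T)))
    weight = np.sin(theta) ** (d - 1)
    return trapezoid(p * weight, theta) / angular_normalization(d)


for r_v in (6.0, 8.0, 10.0):
    print(r_v, angular_integral(8.0, r_v, d=3, mu=12.0, T=0.5))
```

For very large radii the relevant angles become exponentially small, and a grid that is logarithmically spaced near $\theta = 0$ would be a better choice than the uniform one used here.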

4. Expected Degree Distribution for

We are now in a position to compute the expected degree of node u. Let be an indicator random variable which is 1 when there is an edge between nodes u and v in the graph, and 0 otherwise. Recall that the coordinates of u are . Below, we assume . Now,

Notice that depends only on the radius (or rescaled radius coordinate ), as one would expect from spherical symmetry. We therefore omit the in and write instead of . From (4),

We estimate the first part of the integral (20). From (7), (8) and as , we have

Next, we estimate the second part of the integral (20). From Lemma 3 and (11), we have

where .

4.1. and

4.1.1. Mean Degree

4.1.2. Asymptotic Degree Distribution

Now, we compute the asymptotic degree distribution. When , we have

from (21) and from (22). Thus,

where When , we have as uniformly. Furthermore, we have

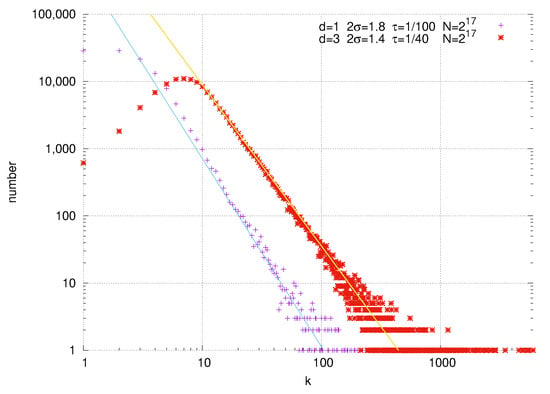

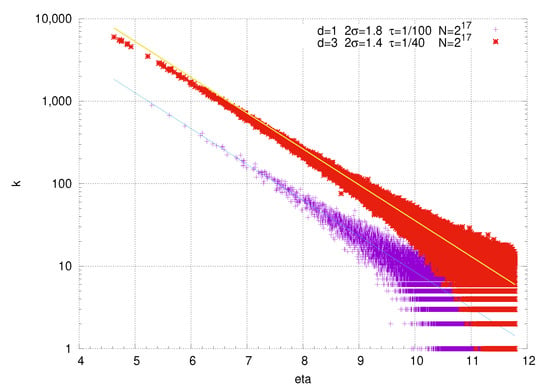

Equation (29) is compared with simulation in Figure 1.

Figure 1.

Comparison of (the asymptotic approximation to) Equation (29) (the two straight lines) with two sample node networks generated from the model. The degree of each node u is plotted as a function of its (rescaled) radial coordinate , for a network sprinkled into . The lower data points, in red, come from a network with parameters , and , while the upper data points, in black, arise from a sprinkling with , and . In each case, we sprinkle into a ball of radius (i.e., ). Note that the vertical axis is on a logarithmic scale, so that the exponential decay of node degree with distance appears as straight lines. The simulation results appear to be consistent with Equation (29).

, where , is the number of connections to the vertex u, i.e., its degree. In general, it depends on the coordinates of u: and ; however, due to the angular symmetry, only the dependence remains. The quantities are a family of independent and identically distributed indicator random variables, whose expectation values are given by . Let be a Poisson random variable with parameter equal to , and from (29). Recall that the total variation distance between two non-negative discrete random variables is defined as .

Claim 2.

If , then

uniformly for all .

Proof.

From Theorem 2.10 in [21]

□
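Claim 2 rests on a Poisson approximation for a sum of independent indicators. The sketch below does not reproduce Theorem 2.10 of [21]. Instead it computes the total variation distance exactly for given indicator probabilities, using the convention $d_{TV}(X,Y) = \frac{1}{2}\sum_k |\Pr(X=k) - \Pr(Y=k)|$, and checks it against Le Cam’s classical bound $\sum_i p_i^2$. For $N-1$ identically distributed indicators with common mean $\lambda/(N-1)$, that bound equals $\lambda^2/(N-1)$, which vanishes whenever the expected degree $\lambda$ is $o(\sqrt{N})$. The probability lists below are arbitrary illustrations, not values from the model.

```python
import math


def poisson_binomial_pmf(ps):
    """Exact pmf of a sum of independent Bernoulli(p_i) indicators."""
    pmf = [1.0]
    for p in ps:
        nxt = [0.0] * (len(pmf) + 1)
        for k, q in enumerate(pmf):
            nxt[k] += q * (1.0 - p)
            nxt[k + 1] += q * p
        pmf = nxt
    return pmf


def poisson_pmf(lam, k_max):
    """Poisson(lam) probabilities for k = 0..k_max, computed recursively."""
    out = [math.exp(-lam)]
    for k in range(1, k_max + 1):
        out.append(out[-1] * lam / k)
    return out


def tv_to_poisson(ps):
    """d_TV = (1/2) sum_k |P(S = k) - P(Po = k)| with Po ~ Poisson(sum(ps))."""
    a = poisson_binomial_pmf(ps)
    b = poisson_pmf(sum(ps), len(a) - 1)
    inside = sum(abs(x - y) for x, y in zip(a, b))
    tail = max(0.0, 1.0 - sum(b))  # Poisson mass above len(ps), where S has none
    return 0.5 * (inside + tail)


ps = [0.01] * 500
print(tv_to_poisson(ps), sum(p * p for p in ps))
```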

Using the above claim, we have, for any , the probability that the vertex u has k connections is

since

from (7) and (8). Substituting the explicit Poisson probability mass function into (30) and rescaling the integral, we have

where from (11). On the other hand,

Thus,

Changing the integration variable to ,

where as , and we used that as . Above, we made use of the mean value theorem to assert that

Theorem 1 follows from (35).

4.2. and

4.2.1. Mean Degree

4.2.2. Asymptotic Degree Distribution

Next, we compute the asymptotic degree distribution. When , we have

from (21) when we choose satisfying , and

from (22). Thus,

uniformly for all . Similarly to Claim 2, we have

if we choose . The degree distribution is

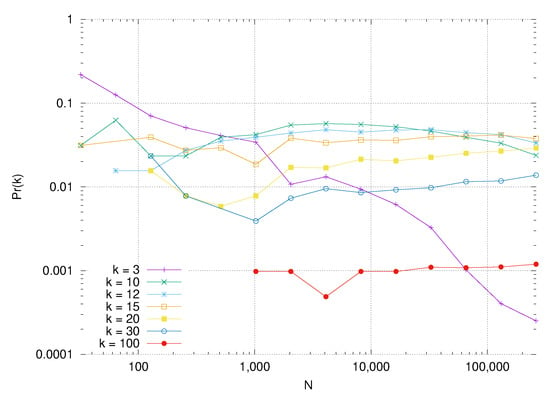

Figure 3 shows how behaves as N increases. If we choose , then

uniformly for all

Since decreases to 0 as , for any , if N is large enough, then

uniformly in . Therefore,

which means that the k-degree probability will be very small when N is large enough, for any fixed . Note that, comparing the k-degree probability with the mean degree, we see that the rates at which the k-degree probabilities go to zero as cannot be uniform. It would be interesting to study the behavior of the limiting degree distribution as .

Figure 3.

Plot of probabilities of node degrees having various values of k, against the network size N, for , , , and . Here, for the measured probabilities, we generate a single network for each N, and simply plot the number of nodes of degree k divided by N. Although we have not directly estimated the accuracy of these numbers, one can nevertheless see how for fixed k as .
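The measured probabilities in Figures 3 and 4 are obtained from a single network as the number of nodes of degree k divided by N. A minimal sketch of that estimator follows, together with a rough binomial standard error. That error treats node degrees as independent, which holds only approximately within one network, so it is meant as a guide rather than a rigorous accuracy estimate. The degree list is a toy example, not simulation output.

```python
import math
from collections import Counter


def empirical_degree_distribution(degrees):
    """P_hat(k) = (number of nodes with degree k) / N."""
    N = len(degrees)
    counts = Counter(degrees)
    return {k: counts[k] / N for k in sorted(counts)}


def rough_standard_error(p_hat, N):
    """Binomial standard error sqrt(p(1 - p) / N); treats node degrees as
    independent, which is only approximately true within one network."""
    return math.sqrt(p_hat * (1.0 - p_hat) / N)


degrees = [0, 1, 1, 2, 2, 2, 3, 0, 1, 5]
dist = empirical_degree_distribution(degrees)
print(dist)  # {0: 0.2, 1: 0.3, 2: 0.3, 3: 0.1, 5: 0.1}
print(rough_standard_error(dist[2], len(degrees)))
```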

5. Expected Degree Distribution for

5.1. and

5.1.1. Asymptotic Degree Distribution

If , when , we have from (21) and

from (38), so

For all , we have as uniformly. From this, the mean degree

As with Claim 2, we compute the asymptotic behavior of and . By (39), we have

Claim 3.

If we choose satisfying , with constant , then

uniformly for all .

Proof.

□

Thus, as with (34), we have

for any . First, we compute

and notice that, for we have for , so

Altogether, we have

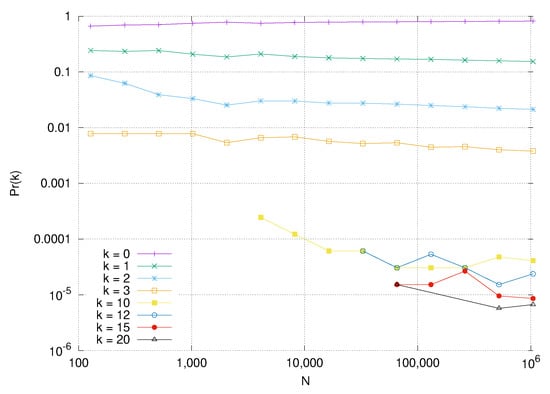

for any . Figure 4 shows that decreases as N increases for .

Figure 4.

Plot of probabilities of node degrees having various values of k, against the network size N, for , , , and . Here, for the measured probabilities, we generate a single network for each N, and simply plot the number of nodes of degree k divided by N. Although we have not directly estimated the accuracy of these numbers, one can nevertheless see how for fixed as .

5.1.2. Mean Degree

6. Expected Degree Distribution for

We again begin the calculation from

Substituting for into the above integral, we have

6.1. and

6.1.1. Asymptotic Degree Distribution

Claim 4.

If we choose ,

uniformly for all .

Proof.

□

6.1.2. Mean Degree

6.2. and

6.2.1. Asymptotic Degree Distribution

If , we consider . We may still achieve from (21) if is small enough, and from (41), so

uniformly for all Then,

when Furthermore, as with (30), we still have

Notice that uniformly for all , and since the function decreases to 0 as , for any , when N is large enough,

uniformly for . Thus,

which means that the k-degree probability will be very small when N is large enough, for any fixed .

6.2.2. Mean Degree

If we choose then, from (25) and (41),

If we choose satisfying , from (24) and (26), we have the mean degree

which will go to infinity as . Similarly, comparing the probability of the degree being equal to k with the mean degree, we see that the rates at which the k-degree probabilities go to zero as cannot be uniform.

7. Asymptotic Correlations of Degree

We extend Theorem 4.1 of [13], governing the independence of degrees of vertices at separate angular positions in a hyperbolic ball in $\mathbb{H}^2$, to arbitrary dimension $d$.

Theorem 4.

If , and for dimension d, and any fixed integer , and for any collection of m pairwise distinct vertices , , their degrees are asymptotically independent in the sense that, for any non-negative integers , ,

It is important to note that m is constant while , so the collection of m vertices of Theorem 4 forms a vanishingly small fraction of all vertices. Furthermore, since each of these m vertices has a finite degree, each of their neighbors will almost surely not be found among the other m vertices.

For the purposes of this section, we choose and .

Definition 1.

For a vertex , define to be the set of points where is the angle between the points v and w in , and . is called the vital region in [13] (Note that might not be a topological neighborhood for the vertex v because v might lie outside of its vital region). ( is defined at the end of Section 2.1, and at the beginning of Section 3.) Similarly, we write to indicate that the embedded location of vertex u lies in the vital region .

We will prove that the vital regions , are mutually disjoint with high probability. Let be this event, i.e., that

Claim 5.

Let be fixed. Then, .

Proof.

Assume . For any point the parameter is maximized when , so let be this maximum, that is

Thus, for and , we have if

The probability for this to occur is bounded as follows, for sufficiently large N. Making use of the angular symmetry, we have

Additionally, for a vertex v, we have from (7) and (8)

Since , and we may choose small enough to satisfy then

so . □

Definition 2.

Define to be the event that , . For a given vertex , define to be the event that w is connected to and . Define to be the event that the vertex w is outside of but is connected to . Furthermore, define as the event that vertices satisfy the event , i.e., are vertices connected to vertex and lying within its vital region, for , whereas all other vertices do not. Lastly, let . Thus, is the event that at least one of the vertices has a neighbor outside of its vital region.

Note that, from Corollary 1, . Assuming , if is not realized, then the event that vertex has degree , for all , is realized if and only if is realized.

Lemma 4.

For any and we have

Proof.

The proof appears in Appendix B. □

Thus, we have

We first calculate the probability of the event that the vertex is located in and is linked to . Notice that does not depend directly on , but only on the angle between and w, and furthermore the volume element in is rotationally invariant, so, when averaging over the position of w, is independent of . Thus, by the angular symmetry, we have the following lemma.

Lemma 5.

For and ,

uniformly for .

Proof.

Set . We have

Firstly, we estimate the second part. Assuming , and making use of (21), we have

For the first part,

where for from (17). The above estimate holds because

and

where

Thus, proceeding as in the calculation of the degree of a vertex with and , we have

assuming . Finally, we get

uniformly for when and . □

Comment: For and , we found . This is important because we can see that, for the vertex w,

- the is asymptotically very close to from (28);

- the radius of the vertex w is likely larger than when the event occurs; and as uniformly in with ;

so we can expect that the vertex w has a very small relative angle with , and lies only in , and not in any other vital regions , , . Thus, we expect that the events are asymptotically independent. For and , the above argument does not apply, so the same result may not hold in that case.

Lemma 6.

Let and . For fixed , are vertices in , and are integers; then,

Proof.

The proof is lengthy so we put it in Appendix C. □

Finally, combining Lemma 6 with (46), we obtain Theorem 4, i.e., for and ,

8. Summary and Conclusions

Models of complex networks based upon hyperbolic geometry are proving to be effective in their ability to capture many important characteristics of real world complex networks, including a power law degree distribution and strong clustering. As explicated in Ref. [14], the radial dimension can be regarded as a measure of popularity of a network node, while the angular dimension provides a space to summarize internal characteristics of a network node. Prior to this work, the hyperbolic random graph models analyzed in the literature were, to our knowledge, two-dimensional, thus allowing only a one-dimensional representation of these internal characteristics. However, it is reasonable to expect that, in order to capture the behavior of real world complex networks, one must allow for similarity spaces of more than one dimension. One place to see this need is in networks embedded in space, such as transportation networks at intracity and intercity scales, or galaxies arranged in clusters and superclusters, which naturally live in two and three dimensions, respectively. However, the greater need may in fact be in information-based networks, such as those which represent how data are organized, for example in natural language processing; see, for example, [8,10,22,23,24,25]. We have thus generalized the hyperbolic model to allow for similarity spaces of arbitrarily large dimension.

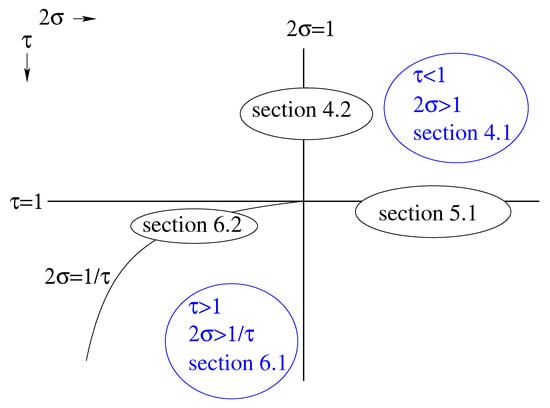

Specifically, we have computed the exact asymptotic degree distribution for a generalization of hyperbolic geometric random graphs to hyperbolic space of arbitrary dimension. We considered five regions of the parameter space, as depicted in Figure 5: one for temperature and , another at and , a third at a critical temperature and , and the last two in the high temperature regime with : one with , and the other with . In two of the regions, we found a power law expected degree distribution whose exponent is governed by the parameter , which controls the radial concentration of nodes in the geometric embedding. When , the degree distribution degenerates: only zero degree nodes have non-vanishing probability, i.e., almost every node is disconnected from the rest of the network. In the remaining two regions, with or , the degree distribution ‘runs off to infinity’, such that the probability of any node having finite degree goes to zero in the asymptotic limit.

Figure 5.

Regions of parameter space explored in this paper, with the respective subsections of the paper indicated for each. The temperature parameter increases in the downward direction (as it generally does on the Earth), and the parameter which often controls the exponent of the power law degree distribution, , increases to the right. Since larger generally means faster fall-off of the degree distribution, we can imagine fat or long tails to the left, and more truncated tails to the right. We can think of increased temperature as promoting noise which shifts the model toward a uniform distribution on graphs. The two ‘generic’ regions in blue both manifest power law degree distributions. The three other ‘measure zero’ regions do not yield non-trivial degree distributions at finite degree, given the growth rate of R with N that we have chosen for them. Only the upper right region, and , has appeared in the previous literature.
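The caption describes temperature as noise that pushes the model toward a uniform distribution on graphs. This can be illustrated with the Fermi-Dirac connection probability of the two-dimensional model [7], which we assume here as a stand-in for the paper's own connection function. We also hold R fixed, whereas the paper lets R grow with N.

* As T → 0, the probability approaches a step function: connect if and only if x < R.
* As T → ∞, it tends to 1/2 for every pair. The result is G(N, 1/2), which is exactly the uniform distribution on labeled graphs with N vertices.

The function name is hypothetical.

```python
import math


def fermi_dirac(x, R, T):
    """Assumed connection probability 1 / (1 + exp((x - R) / (2T))) at distance x."""
    z = (x - R) / (2.0 * T)
    if z > 700.0:  # avoid OverflowError in math.exp; the probability is ~0 here
        return 0.0
    return 1.0 / (1.0 + math.exp(z))


R = 10.0
for T in (0.01, 0.5, 1e6):
    # Compare a pair somewhat closer than R with a pair somewhat farther than R.
    print(T, fermi_dirac(R - 2.0, R, T), fermi_dirac(R + 2.0, R, T))
```

At low T the two probabilities are essentially 1 and 0. At very high T both approach 1/2, and geometry no longer influences which edges form.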

We have also generalized Fountoulakis’s theorem on degree correlations [13] to arbitrary dimension, and found that these correlations depend non-trivially on the dimension and on the exponent of the power law.

It is important to be able to model somewhat denser complex networks with ‘fat tailed’ degree distributions, for example those whose degree sequence is still a power law , but with . We have made a first step in this direction by exploring three parameter regimes with . One of them manifests a power law degree distribution, at , while for the other two the degree distribution ‘runs off to infinity’, which is not unexpected for a fat tailed distribution. It would be instructive to understand this denser regime of complex networks in more detail, and to provide models which can help predict the behavior of these networks.

Another significant step is to explore the clustering behavior of these higher dimensional models. Krioukov has shown that clustering, which is common in real-world complex networks, can be an important herald of the geometric structure of a network [6]. Does the clustering behavior of the two-dimensional hyperbolic models generalize to arbitrary dimension?

One can also study more carefully the effect of the constraints we impose on the growth of R with N, such as the choice . Might some other characterization of the ball radius as a function of network size be more effective in a wider region of the parameter space? We have already seen hints of this in Sections 5 and 6.

Another important step is to extend the model to allow for bipartite networks, by assigning to each node one of two possible ‘types’, and allowing only nodes of different types to connect. This would generalize the approach of [26] to hyperbolic space of arbitrary dimension.

Of course, it is arguably of greatest interest to apply the model to real world networks, by embedding such networks into higher dimensional hyperbolic space. To do so, one would need to estimate the model parameters that best describe a given network. The ideal process for doing so is Bayesian parameter estimation. However, a number of techniques, such as measuring the network’s degree sequence, can serve as efficient proxies in place of a full Bayesian statistical analysis. Some steps in this direction for a somewhat similar () model, along with some initial results for link prediction and soft community detection, are given in [27]. It will be of great interest to see whether these higher dimensional models provide more effective predictions for many real world networks. Candidates include the information-based networks mentioned above, as well as social or biological networks, where we may expect that a one-dimensional similarity space lacks the depth and sophistication necessary to effectively represent the behavior of these complicated entities.
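One concrete form of the degree-sequence proxy mentioned above is sketched below. It computes a degree sequence from an edge list and estimates the tail exponent γ of P(k) ∝ k^{−γ}. For the estimate, it uses the approximate discrete maximum-likelihood estimator of Clauset, Shalizi and Newman, a technique from outside this paper that is named here as such.

Two things are left open:

* the choice of the lower cutoff k_min;
* the mapping from γ back to the model's radial parameter in dimension d, which is not assumed here.

The function names are hypothetical.

```python
import math


def degree_sequence(n, edges):
    """Degrees of vertices 0..n-1 from an undirected edge list of (i, j) pairs."""
    deg = [0] * n
    for i, j in edges:
        deg[i] += 1
        deg[j] += 1
    return deg


def powerlaw_exponent_mle(degrees, k_min):
    """Approximate discrete MLE for gamma in P(k) ~ k**(-gamma), using k >= k_min.

    gamma_hat = 1 + n_tail / sum(ln(k_i / (k_min - 1/2))) over the tail k_i >= k_min.
    """
    if k_min < 1:
        raise ValueError("k_min must be at least 1")
    tail = [k for k in degrees if k >= k_min]
    if not tail:
        raise ValueError("no degrees at or above k_min")
    s = sum(math.log(k / (k_min - 0.5)) for k in tail)
    return 1.0 + len(tail) / s


degrees = degree_sequence(5, [(0, 1), (0, 2), (0, 3), (1, 2), (3, 4)])
print(degrees, powerlaw_exponent_mle(degrees, k_min=1))
```

On real networks, k_min is usually chosen by minimizing a goodness-of-fit distance between the fitted and empirical tails. Such an estimate is only a proxy, and a full Bayesian treatment would account for all model parameters jointly.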

Author Contributions

Funding

The work of W.Y. was funded by the China Scholarship Council and Beijing University of Technology, grant file No. 201606545018. The work of D.R. was supported in part by The Cancer Cell Map Initiative NIH/NCI U54 CA209891, and the Center for Peace and Security Studies (cPASS) at UCSD.

Acknowledgments

D.R. is extremely grateful to Maksim Kitsak for sharing the unpublished manuscript “Lorentz-invariant maximum-entropy network ensembles”. We are grateful to the anonymous referees for many very useful comments and suggestions. We thank the San Diego Supercomputer Center for hosting us while these computations were performed.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Angular Integrals

Consider the integral

where is a small constant. Define by

Split I into two pieces , with

(where, by construction, we keep the second term in the denominator smaller than the first) and

We evaluate the integrals for general dimension d.
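The integrand of the angular integral I was lost in extraction, so it is not reproduced here. What any integral over the relative angle on S^d shares is the angular measure. For two independent, uniformly placed points on S^d, the relative angle θ has density proportional to sin^{d−1}θ on [0, π].

The sketch below is our illustration, not the paper's computation. It evaluates this measure numerically and checks the small-angle behavior ∫_0^{θ0} sin^{d−1}θ dθ ≈ θ0^d/d. This dimension-dependent scaling typically enters small-angle estimates in dimension d. The function names are hypothetical.

```python
import math


def angular_mass(theta0, d, steps=2000):
    """Integral of sin(t)**(d - 1) over [0, theta0] by composite Simpson's rule.

    For two independent uniform points on the sphere S^d, the relative angle t
    has density proportional to sin(t)**(d - 1) on [0, pi].
    """
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of panels

    def weight(t):
        return math.sin(t) ** (d - 1)

    h = theta0 / steps
    total = weight(0.0) + weight(theta0)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * weight(i * h)
    return total * h / 3.0


def angle_cdf(theta0, d):
    """Probability that the relative angle of two uniform points on S^d is <= theta0."""
    return angular_mass(theta0, d) / angular_mass(math.pi, d)


# Small-angle check: the ratio to theta0**d / d should be close to 1.
for d in (1, 2, 3, 5):
    theta0 = 1e-2
    print(d, angular_mass(theta0, d) / (theta0 ** d / d))
```

For d = 1 the measure is uniform in θ. As d grows, mass concentrates near θ = π/2, and small relative angles become rarer, scaling as θ0^d.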

Appendix A.1. τ < 1

We first estimate I for . We will use the expansion formula below

and the fact that for .

Appendix A.1.1. I1 Estimation

Appendix A.1.2. I2 Estimation

To evaluate

note that, for , , and in general , thus

where and are constants depending only on the dimension d. Therefore,

Thus,

Appendix A.1.3. Estimation of I

Appendix A.2. τ = 1

We next estimate I for . The computation is similar to that in Appendix A.1.

Appendix A.2.1. I1 Estimation

Appendix A.2.2. I2 Estimation

Appendix A.2.3. Estimation of I

Appendix A.3. τ > 1

Appendix A.3.1. I1 Estimation

Appendix A.3.2. I2 Estimation

Appendix A.3.3. Estimation of I

Thus,

where

Appendix A.3.4. Alternate Method to Estimate the Angular Integral (16) When τ > 1

Appendix B. The Proof of Lemma 4

Proof.

First, for , assume that . From Corollary 1, if , for any . Now, for a given , the probability of the event , conditional on , can be bounded as follows:

The second integral can be bounded as

uniformly for . For the first integral, we first calculate the inner integral:

thus,

Now, we take the average over

since and . From (7) and (8), we have

if is small enough. Thus, finally

which implies that

□

Appendix C. The Proof of Lemma 6

Proof.

If the positions of have been fixed, then is an independent family of events. Thus, assuming the coordinates of , in have values such that is realized, i.e., and for , then, for the other vertices in , we can write

Since , are independent of the angular positions,

From Lemma 5, we assume , , and first compute the last factor in (A6). Thus,

Note that and

where . Since as does not depend on , ,

Furthermore, we have

uniformly, for all , and for any in . Recall that the effective ‘Poisson parameter’ for vertex is (cf. Section 4.1.2), and here as uniformly for , , so

Furthermore, by (36) in multi-variable form, we have

where , and . From Section 4.1.2, for any m integers , ,

uniformly for Thus, we have

From the preliminary Formulas (7) and (8), we know that the probabilities of , are , so we have

□

References

1. Chung, F.; Lu, L. Complex Graphs and Networks; AMS: Providence, RI, USA, 2006; Available online: http://www.math.ucsd.edu/~fan/complex/ (accessed on 21 October 2020).

2. Dorogovtsev, S. Lectures on Complex Networks; Oxford University Press: Oxford, UK, 2010.

3. Newman, M. Networks, An Introduction; Oxford University Press: Oxford, UK, 2010.

4. Albert, R.; Barabási, A.L. Statistical mechanics of complex networks. Rev. Mod. Phys. 2002, 74, 47–97.

5. Barabási, A.L.; Albert, R. Emergence of Scaling in Random Networks. Science 1999, 286, 509–512.

6. Krioukov, D. Clustering implies geometry in networks. Phys. Rev. Lett. 2016, 116, 208302.

7. Krioukov, D.; Papadopoulos, F.; Kitsak, M.; Vahdat, A.; Boguñá, M. Hyperbolic geometry of complex networks. Phys. Rev. E 2010, 82, 036106.

8. Nickel, M.; Kiela, D. Poincaré Embeddings for Learning Hierarchical Representations. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; pp. 6338–6347.

9. Sarkar, R. Low distortion Delaunay embedding of trees in hyperbolic plane. In International Symposium on Graph Drawing; Springer: Berlin/Heidelberg, Germany, 2011.

10. Ganea, O.E.; Bécigneul, G.; Hofmann, T. Hyperbolic entailment cones for learning hierarchical embeddings. arXiv 2018, arXiv:1804.01882.

11. Krioukov, D.; Kitsak, M.; Sinkovits, R.S.; Rideout, D.; Meyer, D.; Boguñá, M. Network Cosmology. Sci. Rep. 2012, 2, 793.

12. Park, J.; Newman, M.E.J. The statistical mechanics of networks. Phys. Rev. E 2004, 70, 066117.

13. Fountoulakis, N. On a geometrization of the Chung-Lu model for complex networks. J. Complex Netw. 2015, 3, 361–387.

14. Papadopoulos, F.; Kitsak, M.; Serrano, M.A.; Boguñá, M.; Krioukov, D. Popularity versus similarity in growing networks. Nature 2012, 489, 537–540.

15. Kitsak, M.; Aldecoa, R.; Zuev, K.; Krioukov, D. Lorentz-Invariant Maximum-Entropy Network Ensembles. Unpublished manuscript; see also Chapter 3 of the online video. Available online: https://mediaserveur.u-bourgogne.fr/videos/technical-session-s8-economic-networks (accessed on 21 October 2020).

16. DasGupta, B.; Karpinski, M.; Mobasheri, N.; Yahyanejad, F. Effect of Gromov-hyperbolicity Parameter on Cuts and Expansions in Graphs and Some Algorithmic Implications. Algorithmica 2018, 80, 772–800.

17. Alrasheed, H.; Dragan, F.F. Core–periphery models for graphs based on their δ-hyperbolicity: An example using biological networks. J. Algorithms Comput. Technol. 2017, 11, 40–57.

18. Holland, P.W.; Leinhardt, S. An Exponential Family of Probability Distributions for Directed Graphs. J. Am. Stat. Assoc. 1981, 76, 33–50.

19. Goodale, T.; Allen, G.; Lanfermann, G.; Massó, J.; Radke, T.; Seidel, E.; Shalf, J. The Cactus framework and toolkit: Design and applications. In VECPAR’02: Proceedings of the 5th International Conference on High Performance Computing for Computational Science; Springer: Berlin/Heidelberg, Germany, 2002; pp. 197–227.

20. Allen, G.; Goodale, T.; Löffler, F.; Rideout, D.; Schnetter, E.; Seidel, E.L. Component specification in the Cactus Framework: The Cactus Configuration Language. In Proceedings of the 11th IEEE/ACM International Conference on Grid Computing, Brussels, Belgium, 25–28 October 2010.

21. Van der Hofstad, R. Random Graphs and Complex Networks: Volume 1; Cambridge Series in Statistical and Probabilistic Mathematics; Cambridge University Press: Cambridge, UK, 2017; Available online: http://www.win.tue.nl/~rhofstad/ (accessed on 21 October 2020).

22. Nickel, M.; Kiela, D. Learning Continuous Hierarchies in the Lorentz Model of Hyperbolic Geometry. arXiv 2018, arXiv:1806.03417.

23. Tifrea, A.; Bécigneul, G.; Ganea, O. Poincaré GloVe: Hyperbolic Word Embeddings. arXiv 2018, arXiv:1810.06546.

24. Camacho-Collados, J.; Pilehvar, M.T. From Word To Sense Embeddings: A Survey on Vector Representations of Meaning. J. Artif. Intell. Res. 2018, 63, 743–788.

25. Gulcehre, C.; Denil, M.; Malinowski, M.; Razavi, A.; Pascanu, R.; Hermann, K.M.; Battaglia, P.; Bapst, V.; Raposo, D.; Santoro, A.; et al. Hyperbolic Attention Networks. arXiv 2018, arXiv:1805.09786.

26. Kitsak, M.; Papadopoulos, F.; Krioukov, D. Latent Geometry of Bipartite Networks. Phys. Rev. E 2017, 95, 032309.

27. Papadopoulos, F.; Psomas, C.; Krioukov, D. Network Mapping by Replaying Hyperbolic Growth. IEEE/ACM Trans. Netw. 2015, 23, 198–211.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).