Abstract

In this work, we investigate the problem of minimizing the sum of two convex objective functions. This problem includes, in particular, image reconstruction and signal recovery. Using line search procedures, we propose a new modified forward-backward splitting method that does not assume the gradient to be Lipschitz continuous. We show that the sequence generated by the proposed algorithm converges weakly to a minimizer of the sum of the two convex functions. We also apply the proposed method to compressed sensing in the frequency domain. The numerical results show that our method converges faster than the compared methods in terms of the number of iterations and CPU time. Moreover, a comparative analysis of the numerical results is used to guide the choice of parameters in the line search.

1. Introduction

Let H be a real Hilbert space, and let $f, g: H \to \mathbb{R} \cup \{+\infty\}$ be proper, lower-semicontinuous, and convex functions. The convex minimization problem is modeled as follows:

$$\min_{x \in H} \; f(x) + g(x). \qquad (1)$$

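Throughout this paper, we assume that the solution set of Problem (1) is nonempty, and we denote it by $\operatorname{argmin}(f+g)$. It is known that Problem (1) can be described by the fixed point equation

$$x^* \in \operatorname{argmin}(f+g) \iff x^* = \operatorname{prox}_{\alpha g}\big(x^* - \alpha \nabla f(x^*)\big), \qquad (2)$$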

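where $\alpha > 0$ and $\operatorname{prox}_{g}$ is the proximal operator of g defined by $\operatorname{prox}_{g} := (\mathrm{Id} + \partial g)^{-1}$, where Id denotes the identity operator in H and $\partial g$ is the classical convex subdifferential of g. Using this fixed point equation, one can define the following iteration process:

$$x_{n+1} = \operatorname{prox}_{\alpha_n g}\big(x_n - \alpha_n \nabla f(x_n)\big), \qquad (3)$$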

where $\alpha_n > 0$ is a suitable stepsize. This algorithm is known as the forward-backward algorithm, and it includes, as special cases, the gradient method [1,2,3] and the proximal algorithm [4,5,6,7]. The design of such iterative algorithms has become a crucial technique for solving nonlinear and optimization problems (see also [8,9,10,11,12,13,14,15]).
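As a minimal illustration of iteration (3) with a constant stepsize, the Python/NumPy sketch below uses a small instance of the LASSO problem from Section 4, that is, $f(x) = \frac12\|Ax - y\|_2^2$ and $g(x) = \lambda\|x\|_1$, whose proximal operator is componentwise soft-thresholding. The random data, the choice $\alpha_n \equiv 1/L$ with $L = \|A\|_2^2$, and all function names are illustrative assumptions, not part of the original method.

```python
import numpy as np

def soft_threshold(v, t):
    """prox of t*||.||_1: shrink each component toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_objective(x, A, y, lam):
    """f(x) + g(x) with f = 0.5*||Ax - y||^2 and g = lam*||x||_1."""
    return 0.5 * np.sum((A @ x - y) ** 2) + lam * np.sum(np.abs(x))

def forward_backward(A, y, lam, alpha, x0, n_iter):
    """Iteration (3) with a constant stepsize alpha_n = alpha."""
    x = x0.copy()
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)                            # forward step: gradient of f
        x = soft_threshold(x - alpha * grad, alpha * lam)   # backward step: prox of alpha*g
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 50))
y = rng.standard_normal(20)
lam = 2.0
L = np.linalg.norm(A, 2) ** 2        # Lipschitz constant of grad f for this f
x_hat = forward_backward(A, y, lam, 1.0 / L, np.zeros(50), n_iter=3000)

# Residual of the fixed point equation (2) with alpha = 1/L (zero exactly at a minimizer).
grad_hat = A.T @ (A @ x_hat - y)
residual = np.linalg.norm(x_hat - soft_threshold(x_hat - grad_hat / L, lam / L))
```

The residual measures how far the final iterate is from satisfying the fixed point equation (2), which is a natural stopping quantity for forward-backward methods.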

In 2005, Combettes and Wajs [16] proposed the following algorithm based on iteration (3):

Algorithm 1 ([16]). Given and letting for :

It was proven that the sequence generated by (4) converges weakly to a minimizer of $f + g$. However, the convergence of Algorithm 1 depends on the Lipschitz continuity of $\nabla f$, and the Lipschitz constant is not easy to compute in general.
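To make the Lipschitz requirement concrete: for the least-squares term used in Section 4, $\nabla f(x) = A^\top(Ax - y)$ is Lipschitz continuous with constant $L = \|A\|_2^2$, the squared largest singular value of A. The NumPy sketch below, an illustration under these assumptions with hypothetical function names, computes L through an SVD-based matrix norm and through power iteration on $A^\top A$, and checks the Lipschitz inequality on random pairs. For large A, or for a general f without such a formula, obtaining L is exactly the step that a line search approach avoids.

```python
import numpy as np

def lipschitz_exact(A):
    """L = ||A||_2^2: squared largest singular value (np.linalg.norm with ord=2 uses an SVD)."""
    return np.linalg.norm(A, 2) ** 2

def lipschitz_power_iteration(A, n_iter=500, seed=0):
    """Estimate the largest eigenvalue of A^T A by power iteration (never exceeds the true value)."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(A.shape[1])
    v /= np.linalg.norm(v)
    for _ in range(n_iter):
        w = A.T @ (A @ v)
        v = w / np.linalg.norm(w)
    return float(v @ (A.T @ (A @ v)))   # Rayleigh quotient at the final unit vector

rng = np.random.default_rng(1)
A = rng.standard_normal((30, 80))
L = lipschitz_exact(A)
L_est = lipschitz_power_iteration(A)

# Empirical check of ||grad f(u) - grad f(v)|| <= L ||u - v||; y cancels in the difference.
ratios = []
for _ in range(100):
    u, v = rng.standard_normal(80), rng.standard_normal(80)
    ratios.append(np.linalg.norm(A.T @ (A @ (u - v))) / np.linalg.norm(u - v))
```

Power iteration only needs matrix-vector products, which is why it is often preferred over a full SVD when A is large.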

The Douglas–Rachford algorithm is another method for solving Problem (1). It is defined as follows:

Algorithm 2 ([16]). Fix and let For , calculate

It was shown that the sequence defined by (5) converges weakly to a minimizer of $f + g$. The main drawback of Algorithm 2 is that it requires evaluating the proximity operators of both f and g in each iteration, which increases the cost per iteration and can make methods based on Algorithm 2 slow in practice. Its numerical performance is reported in Section 4.
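A minimal sketch of a Douglas–Rachford iteration for the LASSO problem of Section 4 is given below. It assumes the common form $x_n = \operatorname{prox}_{\gamma g}(z_n)$, $z_{n+1} = z_n + \rho_n\big(\operatorname{prox}_{\gamma f}(2x_n - z_n) - x_n\big)$ with $\rho_n \equiv 1$; the symbols $z_n$, $\gamma$, $\rho_n$ and the function names are our own and may differ from those in (5). For $f(x) = \frac12\|Ax - y\|_2^2$, evaluating $\operatorname{prox}_{\gamma f}(v)$ amounts to solving the linear system $(I + \gamma A^\top A)p = v + \gamma A^\top y$, which illustrates why each iteration is more expensive than a gradient step.

```python
import numpy as np

def soft_threshold(v, t):
    """prox of t*||.||_1 (componentwise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_objective(x, A, y, lam):
    return 0.5 * np.sum((A @ x - y) ** 2) + lam * np.sum(np.abs(x))

def douglas_rachford_lasso(A, y, lam, gamma=1.0, relax=1.0, n_iter=3000):
    """Douglas-Rachford sketch for min 0.5*||Ax - y||^2 + lam*||x||_1.
    Every iteration evaluates two proximity operators: prox_{gamma g} and prox_{gamma f}."""
    n = A.shape[1]
    M = np.eye(n) + gamma * (A.T @ A)   # prox_{gamma f}(v) solves M p = v + gamma * A^T y
    Aty = A.T @ y
    z = np.zeros(n)                     # governing sequence z_n
    for _ in range(n_iter):
        x = soft_threshold(z, gamma * lam)                # x_n = prox_{gamma g}(z_n)
        p = np.linalg.solve(M, 2 * x - z + gamma * Aty)   # prox_{gamma f}(2 x_n - z_n)
        z = z + relax * (p - x)                           # relaxed update of z_n
    return soft_threshold(z, gamma * lam)                 # shadow point approximates a minimizer

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 50))
y = rng.standard_normal(20)
lam = 2.0
x_dr = douglas_rachford_lasso(A, y, lam)

# Residual of the fixed point equation (2) with alpha = 1/L, as a method-independent check.
L = np.linalg.norm(A, 2) ** 2
residual = np.linalg.norm(x_dr - soft_threshold(x_dr - A.T @ (A @ x_dr - y) / L, lam / L))
```

In practice one would factor $I + \gamma A^\top A$ once (for example by a Cholesky factorization) instead of calling `np.linalg.solve` in every iteration; the repeated solve is kept here for readability.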

More recently, Cruz and Nghia [17] introduced a forward-backward algorithm with a line search technique in the framework of Hilbert spaces. They assumed that the following conditions are satisfied:

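(A1) $f, g: H \to \mathbb{R} \cup \{+\infty\}$ are two proper, lower-semicontinuous, convex functions, where $\operatorname{dom} g \subseteq \operatorname{dom} f$ and $\operatorname{dom} g$ is nonempty, closed, and convex.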

(A2) f is Fréchet differentiable on an open set that contains $\operatorname{dom} g$. The gradient $\nabla f$ is uniformly continuous on bounded subsets of $\operatorname{dom} g$. Moreover, $\nabla f$ maps any bounded subset of $\operatorname{dom} g$ to a bounded set in H.

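Linesearch 1.

Let $\sigma > 0$, $\theta \in (0,1)$, and $\delta \in (0, \tfrac{1}{2})$.

Input. Given $\alpha = \sigma$ and $J(x, \alpha) := \operatorname{prox}_{\alpha g}\big(x - \alpha \nabla f(x)\big)$ with $x \in \operatorname{dom} g$.

While

$$\alpha \, \big\|\nabla f(J(x,\alpha)) - \nabla f(x)\big\| > \delta \, \big\|J(x,\alpha) - x\big\|$$

do $\alpha = \theta \alpha$.

End While

Output. $\alpha$.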
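Linesearch 1 translates directly into a backtracking loop. The sketch below assumes that $\nabla f$ and $\operatorname{prox}_{\alpha g}$ are supplied as Python callables (`grad_f`, `prox_g`, and `linesearch1` are hypothetical names) and adds a `max_trials` safeguard that is not part of the original procedure. When $\nabla f$ happens to be L-Lipschitz, the stopping test holds as soon as $\alpha \le \delta/L$, so the accepted stepsize is at least $\min\{\sigma, \theta\delta/L\}$; the usage below checks this on a LASSO instance.

```python
import numpy as np

def linesearch1(x, grad_f, prox_g, sigma, theta, delta, max_trials=200):
    """Start from alpha = sigma and shrink alpha by theta while
    alpha*||grad f(J) - grad f(x)|| > delta*||J - x||, where J = prox_{alpha g}(x - alpha*grad f(x)).
    Returns the accepted alpha and J(x, alpha)."""
    gx = grad_f(x)
    alpha = sigma
    for _ in range(max_trials):            # safeguard added for this sketch
        J = prox_g(x - alpha * gx, alpha)
        if alpha * np.linalg.norm(grad_f(J) - gx) <= delta * np.linalg.norm(J - x):
            return alpha, J
        alpha *= theta
    raise RuntimeError("no acceptable stepsize found within max_trials")

# Usage on a LASSO instance: f = 0.5*||Ax - y||^2, g = lam*||x||_1.
rng = np.random.default_rng(5)
A = rng.standard_normal((30, 60))
y = rng.standard_normal(30)
lam = 1.0

def grad_f(x):
    return A.T @ (A @ x - y)

def prox_g(v, a):
    return np.sign(v) * np.maximum(np.abs(v) - a * lam, 0.0)

x = rng.standard_normal(60)
alpha, J = linesearch1(x, grad_f, prox_g, sigma=1.0, theta=0.5, delta=0.4)
L = np.linalg.norm(A, 2) ** 2   # used only to check the lower bound on alpha
```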

It was proven that Linesearch 1 is well defined, that is, it stops after finitely many steps. Based on this fact, Cruz and Nghia [17] also considered the following algorithm:

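Algorithm 3. Fix $\sigma > 0$, $\theta \in (0,1)$, and $\delta \in (0, \tfrac{1}{2})$, and let $x_0 \in \operatorname{dom} g$. For $n \geq 0$, calculate:

$$x_{n+1} = \operatorname{prox}_{\alpha_n g}\big(x_n - \alpha_n \nabla f(x_n)\big), \quad \text{where } \alpha_n := \text{Linesearch 1}(x_n, \sigma, \theta, \delta).$$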

They showed that the sequence generated by Algorithm 3 converges weakly to a minimizer of $f + g$; the line search removes the need for $\nabla f$ to be Lipschitz continuous. However, a stepsize must be found by the line search in every iteration, and each trial stepsize requires an additional evaluation of the proximal operator of g and of $\nabla f$, which can be costly and time consuming in computation.

Recently, there have been many works on modifying the forward-backward method for solving convex optimization problems (see, for example, [18,19,20,21,22,23]).

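In variational inequality theory, Tseng [24] introduced the following method for solving the variational inequality problem (VIP) of finding $x^* \in C$ such that $\langle F(x^*), x - x^* \rangle \geq 0$ for all $x \in C$:

$$y_n = P_C\big(x_n - \lambda F(x_n)\big), \qquad x_{n+1} = y_n - \lambda\big(F(y_n) - F(x_n)\big), \qquad (6)$$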

where $P_C$ is the metric projection from H onto a nonempty, closed, and convex set C, F is a monotone and L-Lipschitz continuous mapping, and $\lambda \in (0, 1/L)$. Then, the sequence generated by (6) converges weakly to a solution of the VIP. This method is often called Tseng's extragradient method and has received great attention from researchers due to its convergence speed (see, for example, [25,26,27,28]). Following this research direction, the main challenge is to design novel algorithms that converge faster than Algorithms 1–3.
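The toy example below is an illustrative sketch (not taken from [24]) that applies (6) to the monotone, 1-Lipschitz rotation $F(x_1, x_2) = (x_2, -x_1)$ on the box $C = [-1, 1]^2$, whose unique VIP solution is the origin. With $\lambda = 0.5 < 1/L$, Tseng's correction step drives the iterates to zero, whereas the plain projected step $x_{n+1} = P_C(x_n - \lambda F(x_n))$ does not converge to the solution on this problem. The function names are hypothetical.

```python
import numpy as np

def F(x):
    """Rotation operator: monotone (skew-symmetric) and 1-Lipschitz, but not cocoercive."""
    return np.array([x[1], -x[0]])

def project_box(x, lo=-1.0, hi=1.0):
    """Metric projection P_C onto the box C = [lo, hi]^2."""
    return np.clip(x, lo, hi)

def tseng(x0, lam, n_iter):
    x = x0.copy()
    for _ in range(n_iter):
        Fx = F(x)
        y = project_box(x - lam * Fx)   # forward step followed by projection
        x = y - lam * (F(y) - Fx)       # Tseng's correction step in (6)
    return x

def projected_step(x0, lam, n_iter):
    x = x0.copy()
    for _ in range(n_iter):
        x = project_box(x - lam * F(x))
    return x

x0 = np.array([0.5, 0.5])
x_tseng = tseng(x0, lam=0.5, n_iter=200)        # lam in (0, 1/L) with L = 1
x_pg = projected_step(x0, lam=0.5, n_iter=200)
print(np.linalg.norm(x_tseng), np.linalg.norm(x_pg))
```

Here each Tseng step multiplies $\|x_n\|^2$ by $1 - \lambda^2 + \lambda^4 = 0.8125$, while the plain projected step never decreases the norm, which is why the extra evaluation of F pays off on such operators.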

In this paper, inspired by Cruz and Nghia [17], we suggest a new forward-backward algorithm for solving the convex minimization problem. We then prove a weak convergence theorem for the proposed algorithm. Finally, we present numerical experiments in signal recovery, which show that our algorithm converges faster than the compared methods. The main advantage of this work is that our scheme does not require the Lipschitz constant of $\nabla f$, which Algorithm 1 requires.

The content is organized as follows: In Section 2, we recall the concepts that will be used in the sequel. In Section 3, we present our algorithm and establish its main convergence theorem. In Section 4, we give numerical experiments to validate the convergence theorem, and finally, in Section 5, we give the conclusions of this paper.

2. Preliminaries

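In this section, we give some definitions and lemmas that play an essential role in our analysis. The strong and weak convergence of $\{x_n\}$ to x will be denoted by $x_n \to x$ and $x_n \rightharpoonup x$, respectively. The subdifferential of a proper convex function $h: H \to \mathbb{R} \cup \{+\infty\}$ at $z \in H$ is defined by:

$$\partial h(z) = \{u \in H : \langle u, x - z \rangle \leq h(x) - h(z), \ \forall x \in H\}.$$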

It is known that $\partial h$ is maximal monotone when h is proper, lower-semicontinuous, and convex [29].

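The proximal operator of g is defined by $\operatorname{prox}_{\alpha g}(z) = \operatorname{argmin}_{y \in H} \big\{ g(y) + \tfrac{1}{2\alpha}\|y - z\|^2 \big\}$ for $z \in H$, with $\alpha > 0$. It is known that $\operatorname{prox}_{\alpha g}$ is single valued with full domain. Moreover, we have:

$$\frac{z - \operatorname{prox}_{\alpha g}(z)}{\alpha} \in \partial g\big(\operatorname{prox}_{\alpha g}(z)\big) \quad \text{for all } z \in H \text{ and } \alpha > 0.$$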
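For $g = \lambda\|\cdot\|_1$ (the regularizer used in Section 4), $\operatorname{prox}_{\alpha g}$ is componentwise soft-thresholding at level $\alpha\lambda$. The NumPy sketch below, with hypothetical function names, numerically checks the characterization $(z - \operatorname{prox}_{\alpha g}(z))/\alpha \in \partial g(\operatorname{prox}_{\alpha g}(z))$, using $\partial|t| = \{\operatorname{sign}(t)\}$ for $t \neq 0$ and $\partial|t| = [-1, 1]$ for $t = 0$.

```python
import numpy as np

def prox_l1(v, alpha, lam):
    """prox_{alpha g}(v) for g = lam*||.||_1: soft-thresholding at level alpha*lam."""
    return np.sign(v) * np.maximum(np.abs(v) - alpha * lam, 0.0)

def prox_objective(z, v, alpha, lam):
    """g(z) + ||z - v||^2 / (2*alpha), the function minimized by prox_{alpha g}(v)."""
    return lam * np.sum(np.abs(z)) + np.sum((z - v) ** 2) / (2.0 * alpha)

def in_subdiff_l1(u, p, lam, tol=1e-10):
    """True if u lies in the subdifferential of lam*||.||_1 at p (componentwise test)."""
    nz = p != 0
    on_support = np.all(np.abs(u[nz] - lam * np.sign(p[nz])) <= tol)
    off_support = np.all(np.abs(u[~nz]) <= lam + tol)
    return bool(on_support and off_support)

rng = np.random.default_rng(2)
v = 3.0 * rng.standard_normal(1000)
alpha, lam = 0.7, 1.3
p = prox_l1(v, alpha, lam)
print(in_subdiff_l1((v - p) / alpha, p, lam))  # True
```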

The following lemma is crucial in convergence analysis.

Lemma 1

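([29]). Let H be a Hilbert space. Let S be a nonempty, closed, and convex set of H, and let $\{x_n\}$ be a sequence in H that satisfies:

(i) $\lim_{n \to \infty} \|x_n - x\|$ exists for each $x \in S$;

(ii) every weak sequential cluster point of $\{x_n\}$ belongs to S.

Then, $\{x_n\}$ converges weakly to an element of S.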

3. Main Results

In this section, we suggest a new forward-backward algorithm and prove its weak convergence. Throughout, we assume that Conditions (A1)–(A2) hold.

Algorithm 4. Step 0. Given , and Let . Step 1. Calculate: Step 2. Calculate:

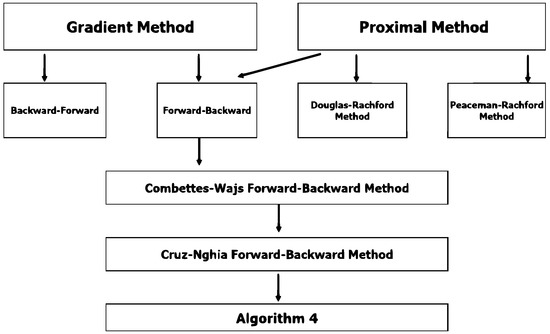

We next summarize the methods for solving the convex minimization problem (CMP) in Figure 1.

Figure 1.

The flowchart of the method for the convex minimization problem (CMP).

Theorem 1.

Let and be generated by Algorithm 4. If there is such that for all , then weakly converges to an element of .

Proof.

Let be a solution in . Then, we obtain:

Using the definition of and the line search (8), we have:

Since , it follows that . Moreover, since is maximal monotone, there is such that:

This shows that:

On the other hand, and . Therefore, we see that:

This gives:

Since , we have . This shows that:

Therefore, from (9) and the above, we have:

Since , it follows that . This implies:

Thus, exists, and hence, is bounded. This yields We note, by (18), that:

By the monotonicity of , we see that:

equivalently:

Therefore, we have:

On the other hand, we have:

Therefore, it follows that:

Since as , as . By the boundedness of , we know that the set of its weak accumulation points is nonempty. Let be a weak accumulation point of . Therefore, there is a subsequence of . Next, we show that Let , that is . Since we obtain:

which yields:

Since is maximal, we have:

This shows that

Since and by (A2), we have . Hence, we obtain:

Hence, we obtain , and consequently, . By Lemma 1, converges weakly to an element of . This completes the proof. □

4. Numerical Experiments

Next, we apply our result to signal recovery in compressed sensing. We compare the performance of our proposed Algorithm 4 with Algorithm 1 of Combettes and Wajs [16], the Douglas–Rachford Algorithm 2 [22], and Algorithm 3 of Cruz and Nghia [17]. This problem can be modeled as:

$$y = Ax + \varepsilon, \qquad (23)$$

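where $y \in \mathbb{R}^M$ is the observed data, $\varepsilon$ is the noise, $A: \mathbb{R}^N \to \mathbb{R}^M$ is a bounded linear operator, and $x \in \mathbb{R}^N$ is a recovered vector containing m nonzero components. It is known that (23) can be modeled as the LASSO problem:

$$\min_{x \in \mathbb{R}^N} \; \frac{1}{2}\|y - Ax\|_2^2 + \lambda \|x\|_1,$$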

where $\lambda > 0$. Therefore, we can apply the proposed method to solve (1) with $f(x) = \frac{1}{2}\|y - Ax\|_2^2$ and $g(x) = \lambda \|x\|_1$.
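A useful sanity check when choosing $\lambda$: the origin solves the LASSO problem if and only if $\lambda \ge \|A^\top y\|_\infty$, since $0 \in -A^\top y + \lambda\,\partial\|\cdot\|_1(0)$ exactly in that case. The sketch below (random data and function names are illustrative assumptions) verifies this through the residual of the fixed point equation $x = \operatorname{prox}_{\alpha g}(x - \alpha\nabla f(x))$ at $x = 0$.

```python
import numpy as np

def soft_threshold(v, t):
    """prox of t*||.||_1 (componentwise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def fixed_point_residual(x, A, y, lam, alpha):
    """||x - prox_{alpha g}(x - alpha*grad f(x))|| for f = 0.5*||y - Ax||^2, g = lam*||x||_1."""
    grad = A.T @ (A @ x - y)
    return float(np.linalg.norm(x - soft_threshold(x - alpha * grad, alpha * lam)))

rng = np.random.default_rng(4)
A = rng.standard_normal((40, 100))
y = rng.standard_normal(40)
lam_max = np.max(np.abs(A.T @ y))      # x = 0 solves the LASSO iff lam >= lam_max
alpha = 1.0 / np.linalg.norm(A, 2) ** 2
zero = np.zeros(100)
print(fixed_point_residual(zero, A, y, 1.01 * lam_max, alpha))  # 0.0
print(fixed_point_residual(zero, A, y, 0.5 * lam_max, alpha))   # > 0
```

Values of $\lambda$ at or above $\|A^\top y\|_\infty$ therefore return the trivial signal, so meaningful recovery requires a smaller regularization parameter.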

In the experiments, y is generated by adding Gaussian noise with SNR = 40, the entries of A are generated by the normal distribution with mean zero and variance one, and the nonzero components of x are generated by a uniform distribution in [−2, 2]. We use the following stopping criterion:

where is an estimated signal of .
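The exact form and threshold of the stopping criterion are not reproduced here, so the following sketch assumes the common choice $\mathrm{MSE}_n = \frac{1}{N}\|x_n - x\|_2^2$ with a user-chosen tolerance, and interprets "SNR = 40" as 40 dB. It generates a test instance of (23) as described above (Gaussian A, m nonzero components drawn from U[−2, 2]) and times an arbitrary iteration map with `time.process_time`, mirroring the CPU and iter quantities of Table 1. All function names and default sizes are hypothetical.

```python
import time
import numpy as np

def generate_cs_data(N=512, M=256, m=20, snr_db=40.0, seed=0):
    """Hypothetical test-problem generator for model (23): y = A x + eps."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((M, N))                  # entries ~ N(0, 1)
    x_true = np.zeros(N)
    support = rng.choice(N, size=m, replace=False)   # m nonzero components
    x_true[support] = rng.uniform(-2.0, 2.0, size=m)
    clean = A @ x_true
    noise = rng.standard_normal(M)
    # Rescale the noise so that 10*log10(||A x||^2 / ||eps||^2) == snr_db.
    noise *= np.linalg.norm(clean) / (np.linalg.norm(noise) * 10.0 ** (snr_db / 20.0))
    return A, x_true, clean + noise

def mse(x_est, x_true):
    """Assumed form of the criterion: (1/N) * ||x_est - x_true||^2."""
    return float(np.mean((x_est - x_true) ** 2))

def run_until_mse(step, x0, x_true, tol, max_iter=10_000):
    """Apply x_{n+1} = step(x_n) until mse(x_n, x_true) < tol.
    Returns the last iterate, the iteration count (iter), and CPU seconds (CPU)."""
    x = x0.copy()
    start = time.process_time()
    n = 0
    while n < max_iter:
        x = step(x)
        n += 1
        if mse(x, x_true) < tol:
            break
    return x, n, time.process_time() - start

# Exercise the driver with a toy contraction toward x_true (not one of Algorithms 1-4).
A, x_true, y = generate_cs_data(N=128, M=64, m=8)
x0 = x_true + 1.0
x_last, iters, cpu = run_until_mse(lambda x: x_true + 0.5 * (x - x_true), x0, x_true, tol=1e-4)
# iters is 7 here: the MSE after n steps is 0.25**n
```

Any of the algorithms above can be plugged in as `step`, for example one forward-backward update on the generated `A` and `y`.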

In the following, the initial point is chosen randomly, and in Algorithm 1 is and . In Algorithm 2, and . Let in Algorithm 3 and Algorithm 4, and let in Algorithm 4. We denote by CPU the CPU time and by iter the number of iterations. For Table 1, each experiment was run five times, and the averages of CPU and iter are reported. All numerical experiments were performed in MATLAB R2010a on the same laptop computer. The numerical results are as follows:

Table 1.

Computational results for compressed sensing.

From Table 1, we see that Algorithm 4 required less CPU time and fewer iterations than Algorithms 1 and 2 in every case.

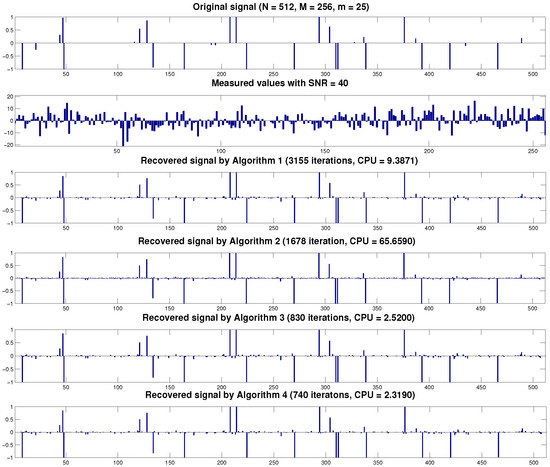

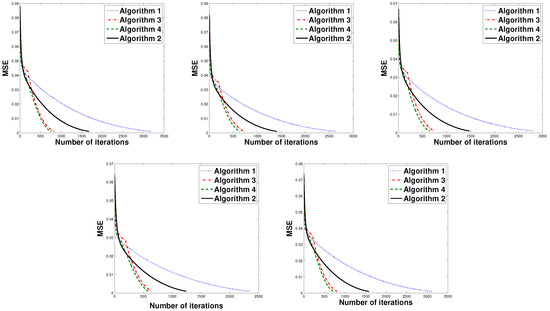

Next, Figure 2 shows the signal recovery in compressed sensing for one example, and Figure 3 shows the convergence of each algorithm for all cases by plotting the MSE value against the number of iterations when , , and .

Figure 2.

Original signal, observed data, and recovered signal by Algorithm 1, Algorithm 2, Algorithm 3, and Algorithm 4 when and .

Figure 3.

The MSE value and number of iterations for all cases when and .

Figures 2 and 3 show that Algorithm 4 converged faster than the other algorithms. More precisely, Algorithm 2 had the highest CPU time, since it requires the proximity operators of both f and g in each iteration, while Algorithm 1, whose stepsize is bounded above in terms of the Lipschitz constant, required the largest number of iterations. We also observed that the initial guess had no significant effect on the convergence behavior.

Next, we analyze the convergence of Algorithm 4 and the effects of its stepsizes, which depend on the parameters , and in Algorithm 4.

We next study the effect of the parameter in the proposed algorithm for each value of .

From Table 2, we observe that the CPU time and the number of iterations of Algorithm 4 increased as the parameter approached when and . Figure 4 shows the numerical results for each .

Table 2.

The convergence of Algorithm 4 with each .

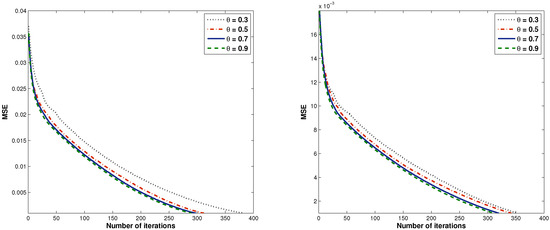

Figure 4.

Graph of number of iterations versus MSE when and , respectively.

From Figure 4, we see that our algorithm worked most efficiently when the value of was taken close to zero.

Next, we investigate the effect of the parameter in the proposed algorithm by varying it and observing the convergence behavior. The numerical results are shown in Table 3.

Table 3.

The convergence of Algorithm 4 with each .

From Table 3, we observe that the CPU time of Algorithm 4 increased and the number of iterations decreased slightly as the parameter approached one when and . Figure 5 shows the numerical results for each .

Figure 5.

Graph of the number of iterations versus MSE where and , respectively.

Figure 5 shows that Algorithm 4 worked efficiently when the value of was chosen close to one.

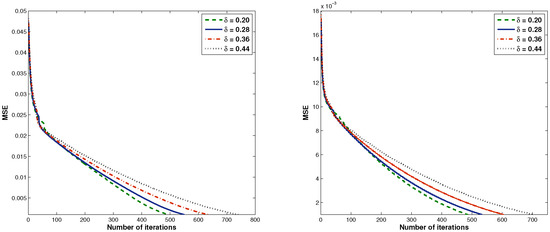

Next, we study the effect of the parameter in the proposed algorithm. The numerical results are shown in Table 4.

Table 4.

The convergence of Algorithm 4 with each .

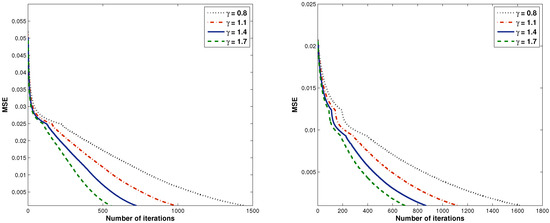

From Table 4, we see that the CPU time and the number of iterations of Algorithm 4 decreased as the parameter approached two when and . We show the numerical results for each case of in Figure 6.

Figure 6.

Graph of the number of iterations versus MSE where and , respectively.

Figure 6 shows that Algorithm 4 worked efficiently when the value of was chosen close to two.

Next, we study the effect of the parameter in the proposed algorithm. The numerical results are given in Table 5.

Table 5.

The convergence of Algorithm 4 with each .

From Table 5, we see that the parameter had no noticeable effect on the number of iterations or the CPU time when and .

5. Conclusions

In this work, we studied a modified forward-backward splitting method using line searches to solve convex minimization problems. We proved a weak convergence theorem under weakened assumptions on the stepsize; in particular, our algorithm does not require the gradient to be Lipschitz continuous, which makes it convenient in practice for solving optimization problems. We also presented numerical experiments in signal recovery, which showed that the proposed algorithm converged faster than the compared algorithms, and we reported the effects of all parameters in Section 4.

Author Contributions

Supervision, S.S.; formal analysis and writing, K.K.; editing and software, P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Chiang Mai University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dunn, J.C. Convexity, monotonicity, and gradient processes in Hilbert space. J. Math. Anal. Appl. 1976, 53, 145–158.

- Wang, C.; Xiu, N. Convergence of the gradient projection method for generalized convex minimization. Comput. Optim. Appl. 2000, 16, 111–120.

- Xu, H.K. Averaged mappings and the gradient-projection algorithm. J. Optim. Theory Appl. 2011, 150, 360–378.

- Guler, O. On the convergence of the proximal point algorithm for convex minimization. SIAM J. Control Optim. 1991, 29, 403–419.

- Martinet, B. Brève communication. Régularisation d'inéquations variationnelles par approximations successives. Revue française d'informatique et de recherche opérationnelle. Série rouge. 1970, 4, 154–158.

- Parikh, N.; Boyd, S. Proximal algorithms. Found. Trends Optim. 2014, 1, 127–239.

- Rockafellar, R.T. Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 1976, 14, 877–898.

- Zhang, Z.; Hong, W.C. Electric load forecasting by complete ensemble empirical mode decomposition adaptive noise and support vector regression with quantum-based dragonfly algorithm. Nonlinear Dyn. 2019, 98, 1107–1136.

- Kundra, H.; Sadawarti, H. Hybrid algorithm of cuckoo search and particle swarm optimization for natural terrain feature extraction. Res. J. Inf. Technol. 2015, 7, 58–69.

- Cholamjiak, W.; Cholamjiak, P.; Suantai, S. Convergence of iterative schemes for solving fixed point problems for multi-valued nonself mappings and equilibrium problems. J. Nonlinear Sci. Appl. 2015, 8, 1245–1256.

- Cholamjiak, P.; Suantai, S. Viscosity approximation methods for a nonexpansive semigroup in Banach spaces with gauge functions. Global Optim. 2012, 54, 185–197.

- Cholamjiak, P. The modified proximal point algorithm in CAT (0) spaces. Optim. Lett. 2015, 9, 1401–1410.

- Cholamjiak, P.; Suantai, S. Weak convergence theorems for a countable family of strict pseudocontractions in Banach spaces. Fixed Point Theory Appl. 2010, 2010, 632137.

- Shehu, Y.; Cholamjiak, P. Another look at the split common fixed point problem for demicontractive operators. RACSAM Rev. R. Acad. Cienc. Exactas Fis. Nat. Ser. A Mat. 2016, 110, 201–218.

- Suparatulatorn, R.; Cholamjiak, P.; Suantai, S. On solving the minimization problem and the fixed-point problem for nonexpansive mappings in CAT (0) spaces. Optim. Methods Softw. 2017, 32, 182–192.

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Bello Cruz, J.Y.; Nghia, T.T. On the convergence of the forward-backward splitting method with line searches. Optim. Methods Softw. 2016, 31, 1209–1238.

- Bauschke, H.H.; Borwein, J.M. Dykstra's alternating projection algorithm for two sets. J. Approx. Theory 1994, 79, 418–443.

- Chen, G.H.G.; Rockafellar, R.T. Convergence rates in forward-backward splitting. SIAM J. Optim. 1997, 7, 421–444.

- Cholamjiak, W.; Cholamjiak, P.; Suantai, S. An inertial forward–backward splitting method for solving inclusion problems in Hilbert spaces. J. Fixed Point Theory Appl. 2018, 20, 42.

- Dong, Q.; Jiang, D.; Cholamjiak, P.; Shehu, Y. A strong convergence result involving an inertial forward–backward algorithm for monotone inclusions. J. Fixed Point Theory Appl. 2017, 19, 3097–3118.

- Combettes, P.L.; Pesquet, J.C. Proximal Splitting Methods in Signal Processing. In Fixed-Point Algorithms for Inverse Problems in Science and Engineering; Springer: New York, NY, USA, 2011; pp. 185–212.

- Cholamjiak, P. A generalized forward-backward splitting method for solving quasi inclusion problems in Banach spaces. Numer. Algorithms 2016, 71, 915–932.

- Tseng, P. A modified forward–backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

- Cholamjiak, P.; Thong, D.V.; Cho, Y.J. A novel inertial projection and contraction method for solving pseudomonotone variational inequality problems. Acta Appl. Math. 2019, 1–29.

- Gibali, A.; Thong, D.V. Tseng type methods for solving inclusion problems and its applications. Calcolo 2018, 55, 49.

- Thong, D.V.; Cholamjiak, P. Strong convergence of a forward–backward splitting method with a new step size for solving monotone inclusions. Comput. Appl. Math. 2019, 38, 94.

- Suparatulatorn, R.; Khemphet, A. Tseng type methods for inclusion and fixed point problems with applications. Mathematics 2019, 7, 1175.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: Berlin/Heidelberg, Germany, 2011; Volume 408.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).