An Interactive Data-Driven (Dynamic) Multiple Attribute Decision Making Model via Interval Type-2 Fuzzy Functions

Abstract

1. Introduction

- Decision makers are expected to fill out tedious questionnaires to articulate their preferences over alternatives at each period. This is especially time consuming and demanding when the number of criteria and alternatives is high and decisions are made frequently, e.g., in performance evaluation or risk assessment.

- Existing models do not provide any mechanism to help decision makers make use of past decision matrices when articulating their preferences at the current period. An interactive mechanism is needed to facilitate preference elicitation in light of the historical performance of alternatives.

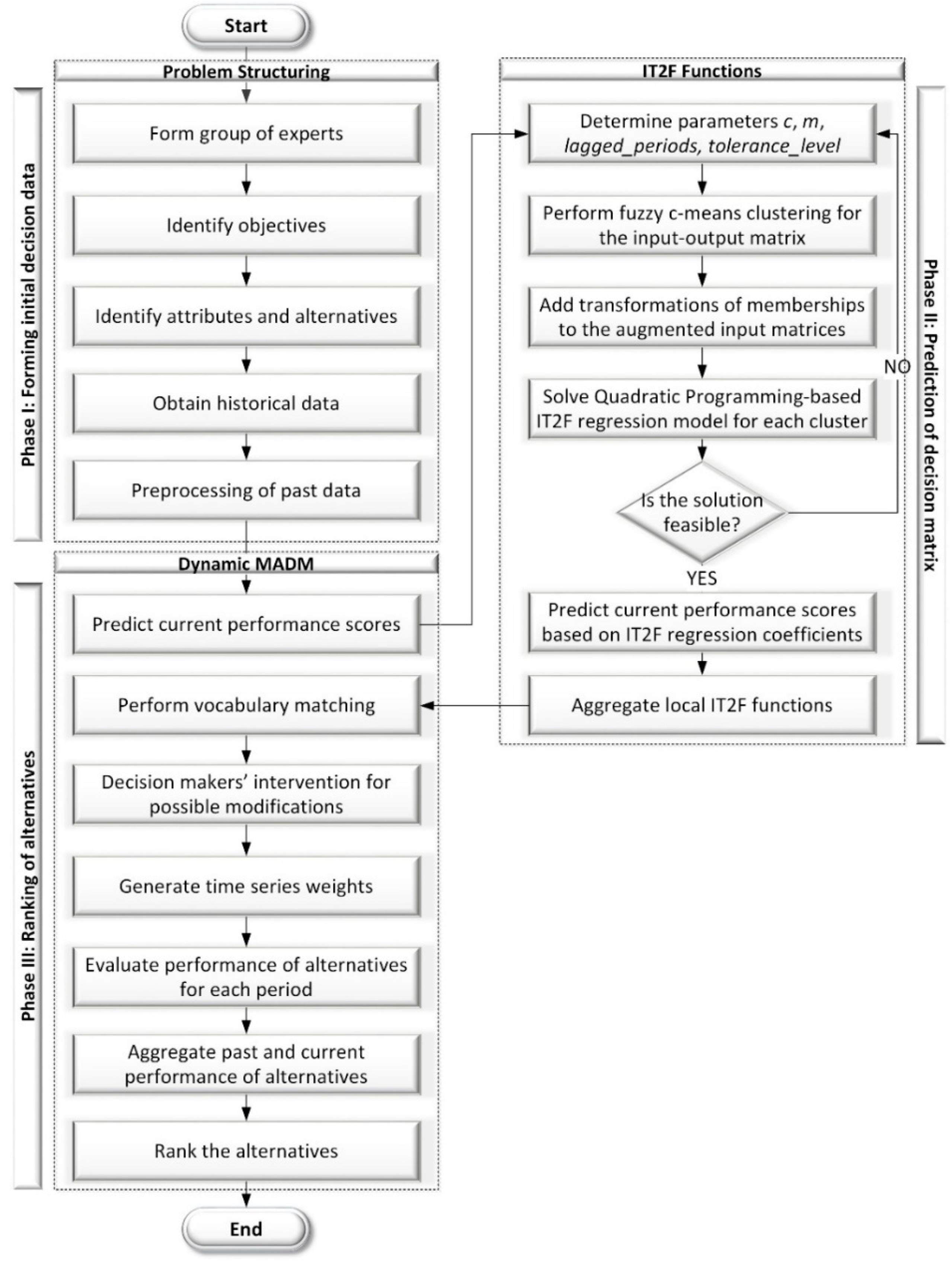

- A dynamic multiple attribute decision making (MADM) model is proposed based on a new interval type-2 fuzzy (IT2F) functions approach.

- An interactive procedure is provided in which the current decision matrix is predicted in the form of IT2F sets. Moreover, a vocabulary matching procedure is developed so that the predicted performance scores of alternatives are recommended to the decision makers through linguistic terms such as low, medium, and high.

- The proposed model interacts with decision makers, whose subjective judgments are combined with the notion of “let the data speak for itself”. Providing decision makers with data-driven suggestions regarding the performance of alternatives considerably reduces the preference elicitation effort at each period.

- The proposed model does not require technical knowledge of fuzzy sets, t-norms, t-conorms, implication functions, etc. It can be easily integrated into the legacy systems of firms, since crisp values are processed when producing IT2F outputs.

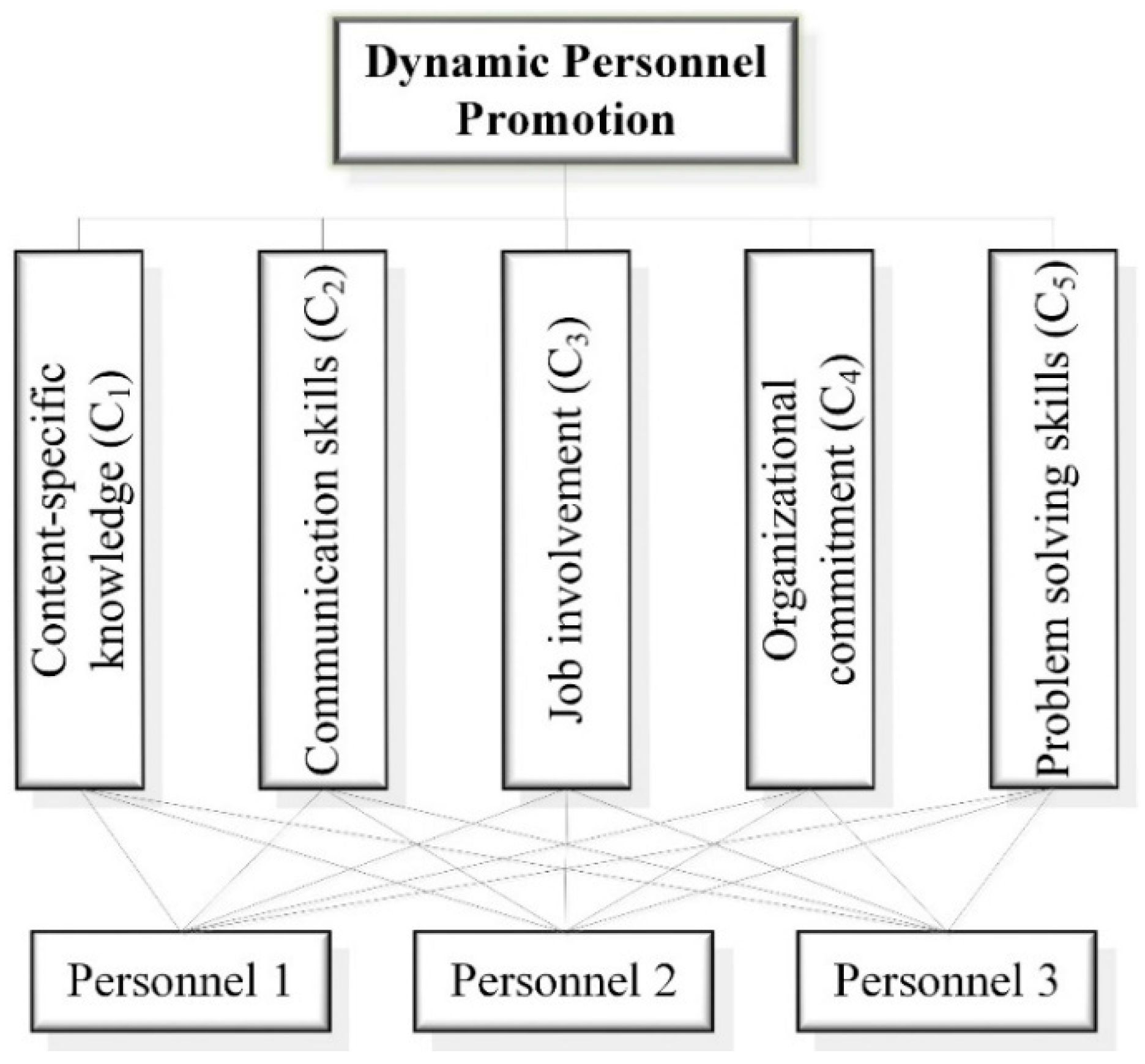

- A real-life personnel promotion problem is used to demonstrate the applicability of the proposed model. Rankings of employees are calculated based on past and current performance matrices with appropriate time series weights.

2. Theoretical Background

2.1. Traditional Dynamic Multiple Attribute Decision Making

2.2. Possibilistic Fuzzy Regression

2.3. Turksen’s Fuzzy Functions Approach

- Membership functions pertaining to antecedent and consequent parts of the fuzzy rules should be identified.

- Aggregation of antecedents requires selection of suitable conjunction and disjunction operators (t-norms, t-conorms).

- Proper implication operators should be identified for representation of the rules, which can be a challenging issue.

- A suitable defuzzification method should be identified.

3. Developed IT2F Model

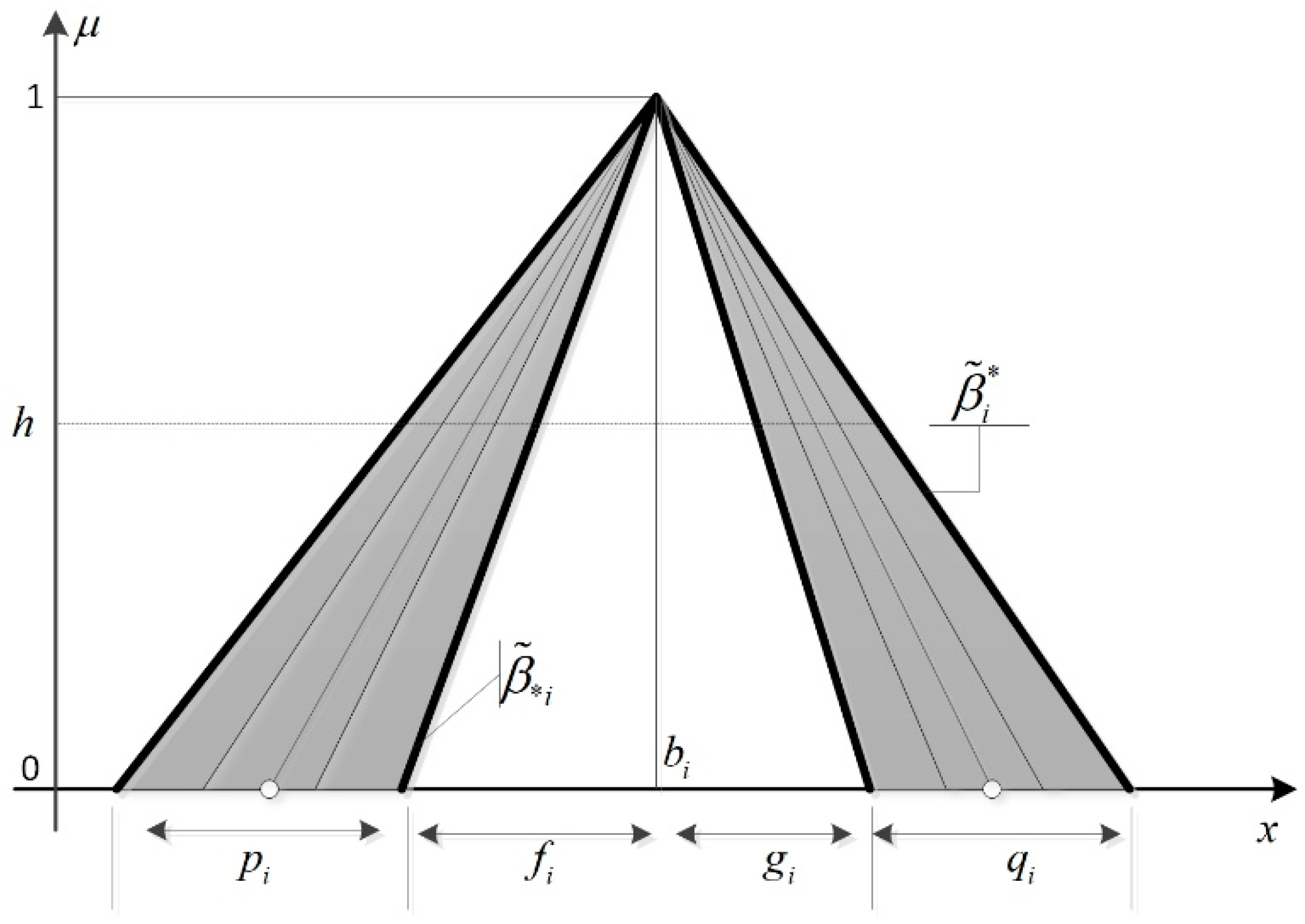

3.1. Interval Type-2 Fuzzy Sets

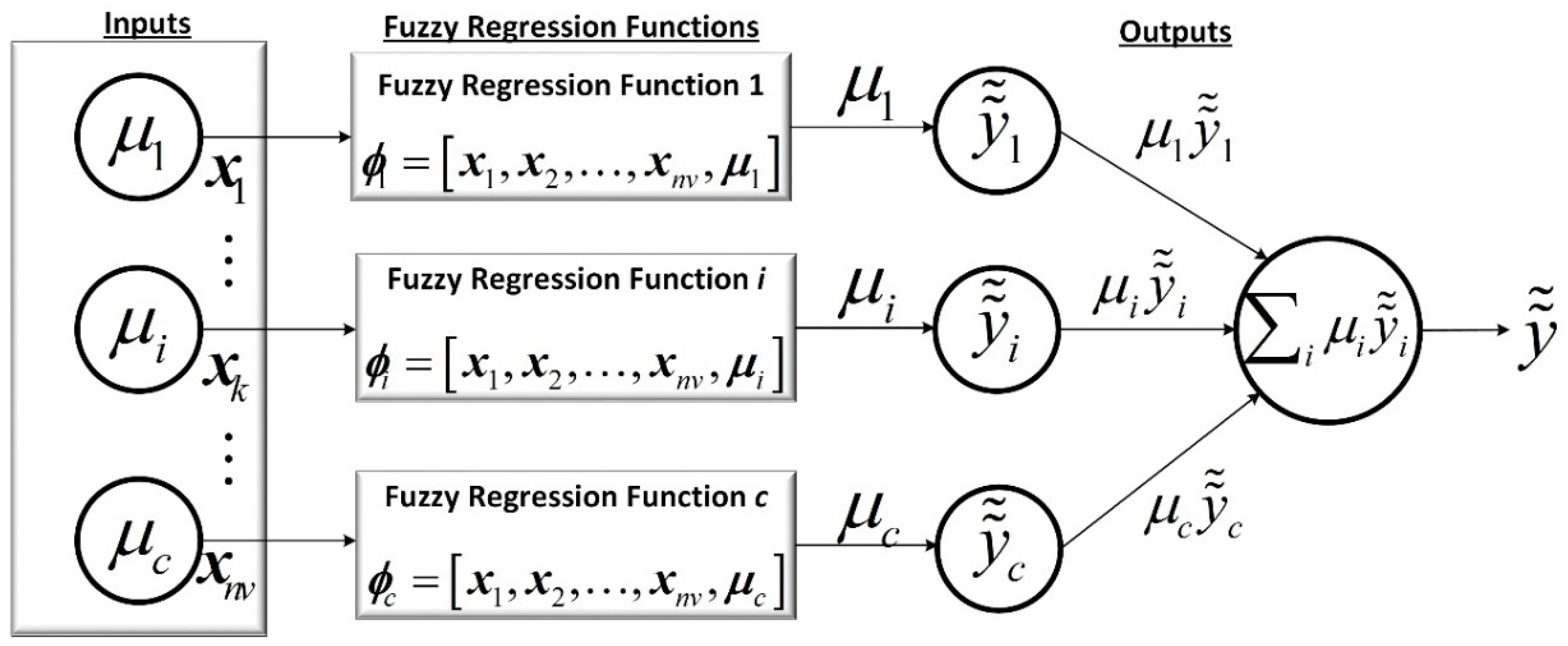

3.2. IT2F Regression Model

3.3. Dynamic MADM Model via Proposed IT2F Functions

3.3.1. Phase-I: Problem Structuring

3.3.2. Phase-II: Training of Fuzzy Functions Approach

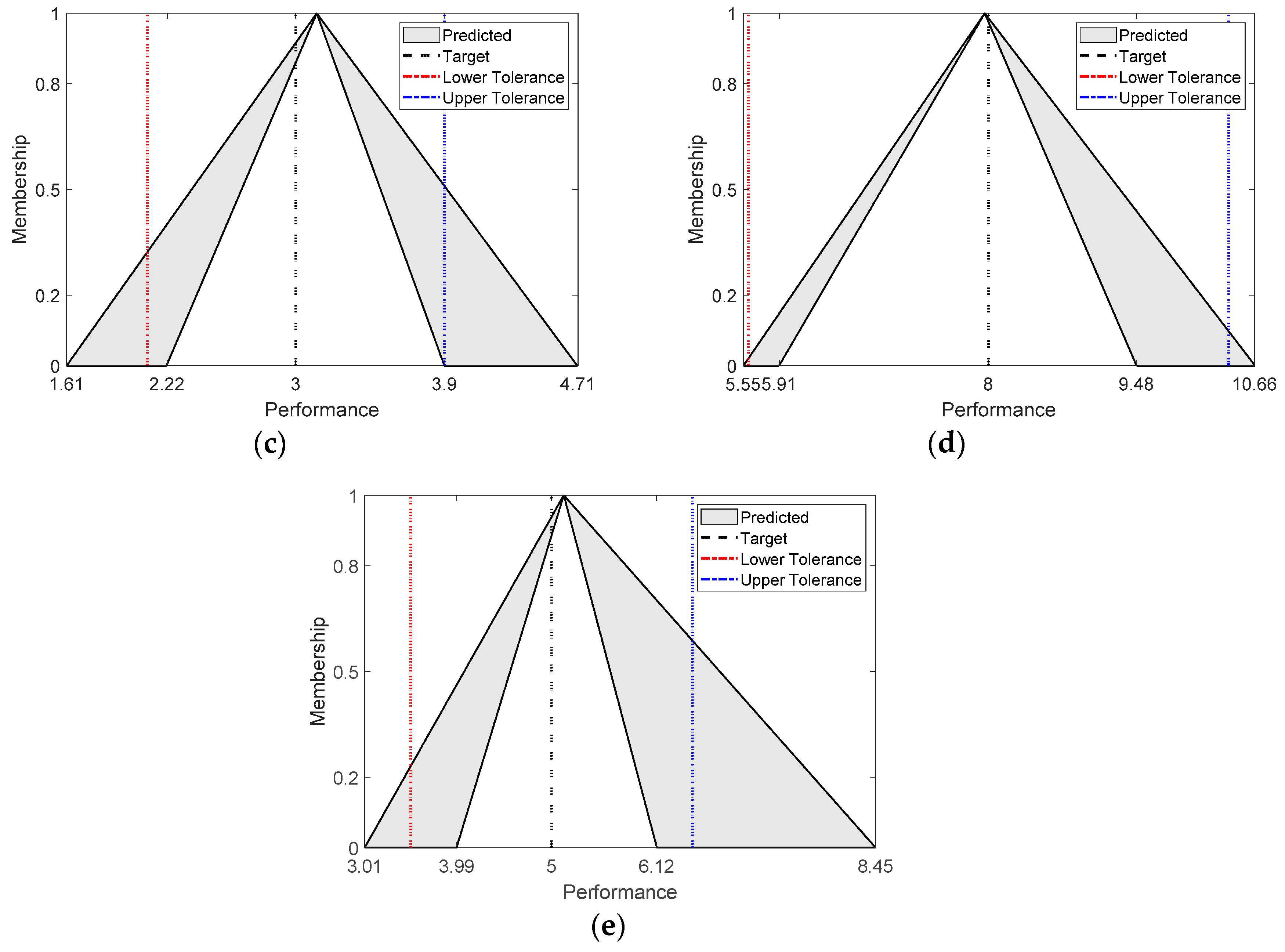

3.3.3. Phase-III: Ranking of Alternatives

- $Q(0) = 0$,

- $Q(1) = 1$,

- $Q(x) \geq Q(y)$ if $x > y$, where $Q$ is a monotonically non-decreasing function defined in the unit interval $[0, 1]$.
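A function of this kind (non-decreasing on the unit interval, written Q here, with Q(0) = 0 and Q(1) = 1) is commonly used in the dynamic MADM literature to generate time series weights, in the style of Yager's basic unit-interval monotonic (BUM) functions. The sketch below assumes the weight of period k out of p periods is Q(k/p) − Q((k−1)/p). The power function and the exponent 2 are hypothetical choices for illustration, not values taken from this paper.

```python
from typing import Callable, List


def bum_time_weights(p: int, q: Callable[[float], float]) -> List[float]:
    """Return weights w_k = Q(k/p) - Q((k-1)/p) for periods k = 1..p."""
    if p < 1:
        raise ValueError("p must be a positive integer")
    return [q(k / p) - q((k - 1) / p) for k in range(1, p + 1)]


def power_q(x: float, a: float = 2.0) -> float:
    # Hypothetical BUM function: Q(0) = 0, Q(1) = 1, non-decreasing for a > 0.
    return x ** a


# Four periods; later periods receive larger weights because a > 1.
weights = bum_time_weights(4, power_q)
```

Because the differences telescope, the weights always sum to Q(1) − Q(0) = 1, and an exponent above 1 places more weight on recent periods.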

4. Case Study

4.1. Structuring Personnel Promotion Problem

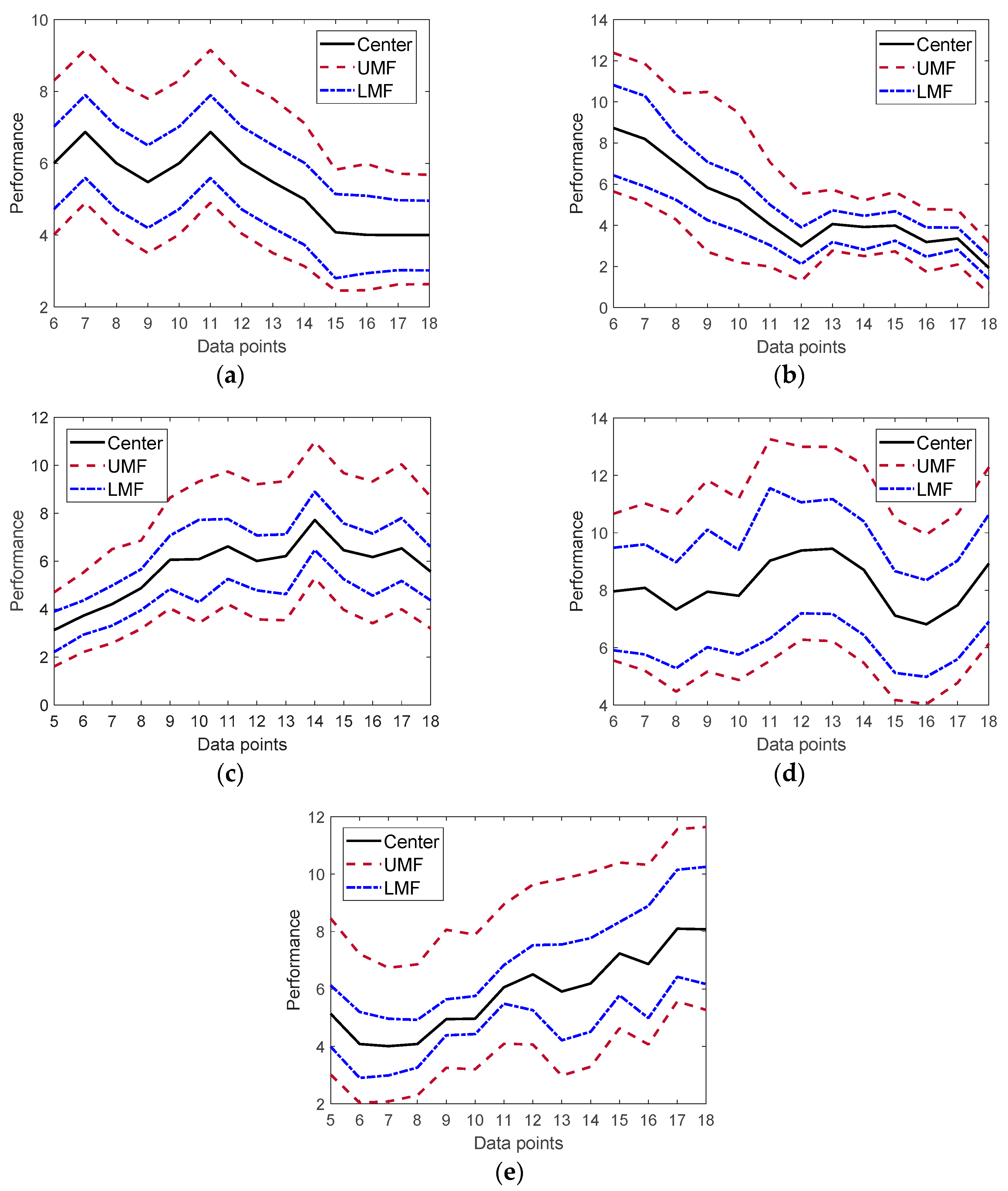

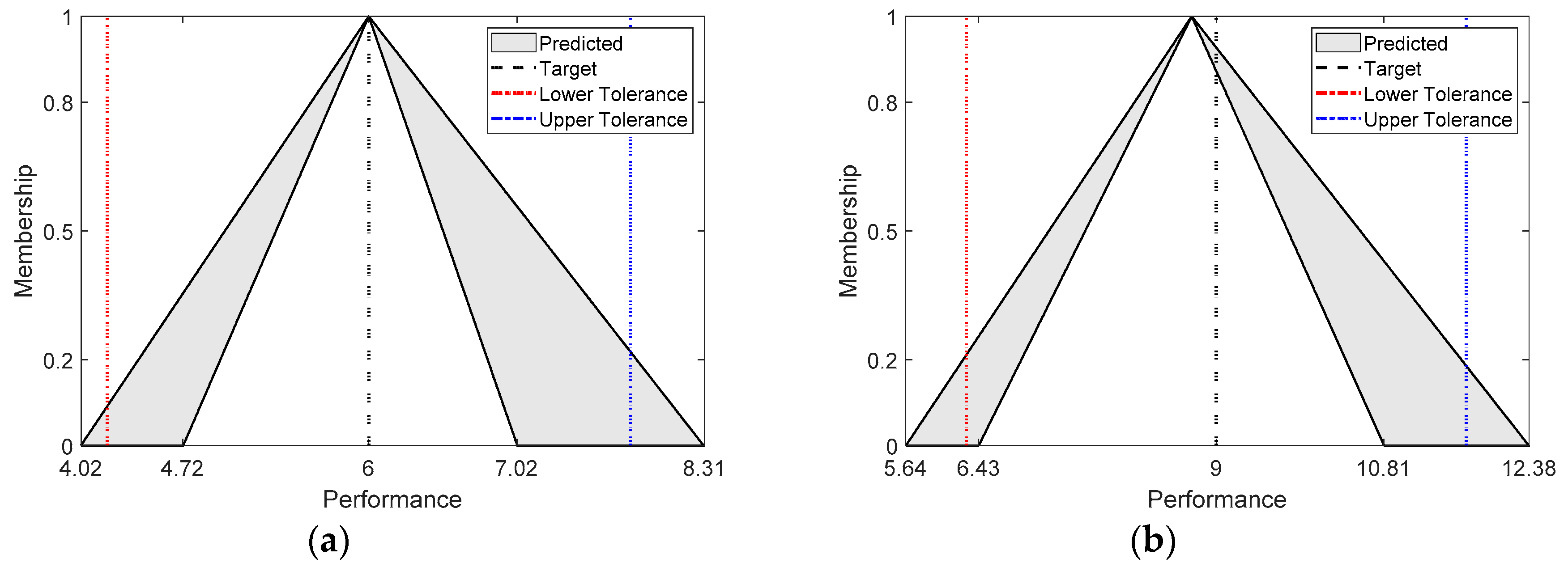

4.2. Estimating the Current Decision Matrix

4.3. Ranking of Employees

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Period | Past Decision Matrices | Corresponding Time Series | Input Matrix | Output Matrix |
|---|---|---|---|---|
| t1 | | | | |
| t2 | | | | |
| t3 | | | | |
| t4 | | | | |

| Period | C1 | C2 | C3 | C4 | C5 |
|---|---|---|---|---|---|
| 1 | 6 | 7 | 3 | 3 | 7 |
| 2 | 6 | 7 | 2 | 2 | 6 |
| 3 | 7 | 8 | 3 | 3 | 5 |
| 4 | 6 | 9 | 4 | 5 | 5 |
| 5 | 6 | 8 | 3 | 6 | 5 |
| 6 | 6 | 9 | 4 | 8 | 4 |
| 7 | 7 | 8 | 4 | 8 | 4 |
| 8 | 6 | 7 | 5 | 7 | 4 |
| 9 | 6 | 6 | 6 | 8 | 5 |
| 10 | 6 | 5 | 6 | 8 | 5 |
| 11 | 7 | 4 | 7 | 9 | 6 |
| 12 | 6 | 3 | 6 | 10 | 6 |
| 13 | 5 | 4 | 6 | 10 | 6 |
| 14 | 5 | 4 | 8 | 8 | 6 |
| 15 | 4 | 4 | 6 | 7 | 8 |
| 16 | 4 | 3 | 6 | 7 | 7 |
| 17 | 4 | 3 | 6 | 7 | 8 |
| 18 | 4 | 2 | 6 | 9 | 8 |
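A time series like each column above can be turned into input and output matrices for training by sliding a window of lagged periods over it. The sketch below is one plausible construction and is not confirmed as the authors' exact procedure. The function name `lagged_matrices` and the choice of `lag=5` for criterion C1 are assumptions made for illustration.

```python
import numpy as np


def lagged_matrices(series, lag):
    """Return (X, y): each row of X holds `lag` consecutive past scores,
    and y holds the score of the period that immediately follows them."""
    s = np.asarray(series, dtype=float)
    if lag < 1 or lag >= s.size:
        raise ValueError("lag must satisfy 1 <= lag < len(series)")
    X = np.array([s[i:i + lag] for i in range(s.size - lag)])
    y = s[lag:]
    return X, y


# C1 column of the table above (periods 1..18).
c1 = [6, 6, 7, 6, 6, 6, 7, 6, 6, 6, 7, 6, 5, 5, 4, 4, 4, 4]

# lag=5 is an illustrative choice, giving 13 training rows.
X, y = lagged_matrices(c1, lag=5)
```

With 18 periods and five lags, the first row uses periods 1 to 5 to predict period 6, and the last row uses periods 13 to 17 to predict period 18.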

| Alternative | Criteria | Parameter c | Parameter m | Parameter Lagged_Periods |
|---|---|---|---|---|
| 1 | 1 | 2 | 1.6 | 5 |
| 1 | 2 | 5 | 1.6 | 5 |
| 1 | 3 | 4 | 2.1 | 4 |
| 1 | 4 | 5 | 2.1 | 5 |
| 1 | 5 | 4 | 1.6 | 4 |
| 2 | 1 | 4 | 1.6 | 5 |
| 2 | 2 | 5 | 2.1 | 5 |
| 2 | 3 | 2 | 1.1 | 5 |
| 2 | 4 | 5 | 2.1 | 5 |
| 2 | 5 | 5 | 1.6 | 5 |
| 3 | 1 | 2 | 2.1 | 5 |
| 3 | 2 | 2 | 1.6 | 5 |
| 3 | 3 | 3 | 1.6 | 5 |
| 3 | 4 | 5 | 2.1 | 5 |
| 3 | 5 | 5 | 1.6 | 5 |

IT2F regression coefficients (b, f, g, p, q) and the resulting IT2F coefficients:

| Variable | b | f | g | p | q | IT2F Coefficients |
|---|---|---|---|---|---|---|
| 1 | 10.572 | 8.65 × 10⁻⁹ | 0.031 | 9.30 × 10⁻¹¹ | 9.13 × 10⁻¹¹ | ((10.572, 10.572, 10.603;1), (10.572, 10.572, 10.603;1)) |
| | −8.524 | 3.39 × 10⁻⁹ | 0.070 | 6.45 × 10⁻¹¹ | 6.44 × 10⁻¹¹ | ((−8.524, −8.524, −8.454;1), (−8.524, −8.524, −8.454;1)) |
| | −4.550 | 2.04 × 10⁻⁹ | 0.016 | 2.79 × 10⁻¹¹ | 2.78 × 10⁻¹¹ | ((−4.55, −4.55, −4.534;1), (−4.55, −4.55, −4.534;1)) |
| | 13.466 | 7.27 × 10⁻⁹ | 0.085 | 7.34 × 10⁻¹¹ | 7.34 × 10⁻¹¹ | ((13.466, 13.466, 13.551;1), (13.466, 13.466, 13.551;1)) |
| | 0.017 | 0.146043 | 0.010 | 2.67 × 10⁻¹¹ | 2.59 × 10⁻¹¹ | ((−0.129, 0.017, 0.027;1), (−0.129, 0.017, 0.027;1)) |
| | −0.613 | 1.24 × 10⁻⁹ | 0.005 | 0.1 | 0.185714 | ((−0.713, −0.613, −0.423;1), (−0.613, −0.613, −0.609;1)) |
| | −0.211 | 1.44 × 10⁻⁹ | 0.004 | 1.67 × 10⁻¹¹ | 1.63 × 10⁻¹¹ | ((−0.211, −0.211, −0.207;1), (−0.211, −0.211, −0.207;1)) |
| | 0.822 | 0.078111 | 0.126 | 1.48 × 10⁻¹¹ | 1.42 × 10⁻¹¹ | ((0.744, 0.822, 0.948;1), (0.744, 0.822, 0.948;1)) |
| | 0.038 | 1.44 × 10⁻⁹ | 0.006 | 1.36 × 10⁻¹¹ | 1.34 × 10⁻¹¹ | ((0.038, 0.038, 0.044;1), (0.038, 0.038, 0.044;1)) |
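The tabulated values suggest how each IT2F coefficient is assembled from its regression coefficients. The sketch below encodes a reading inferred from the numbers, not a definition quoted from the paper: b is the center, f and g are the left and right spreads of the lower membership function, and p and q widen those spreads for the upper membership function. The function name `it2f_coefficient` is hypothetical.

```python
from typing import Tuple

Triangle = Tuple[float, float, float]


def it2f_coefficient(b: float, f: float, g: float,
                     p: float, q: float) -> Tuple[Triangle, Triangle]:
    """Build (UMF, LMF) triangles from a center b and non-negative spreads.

    Assumed reading of the table: f and g are the left/right spreads of the
    lower membership function; p and q extend them for the upper one.
    """
    lmf = (b - f, b, b + g)
    umf = (b - f - p, b, b + g + q)
    return umf, lmf


# Row with b = -0.613 from the table above.
umf, lmf = it2f_coefficient(-0.613, 1.24e-9, 0.005, 0.1, 0.185714)
```

For this row the sketch gives an upper triangle of roughly (−0.713, −0.613, −0.422) and a lower triangle of roughly (−0.613, −0.613, −0.608), which agrees with the tabulated IT2F coefficient up to rounding of the displayed spreads.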

IT2F regression coefficients (b, f, g, p, q) and the resulting IT2F coefficients:

| Variable | b | f | g | p | q | IT2F Coefficients |
|---|---|---|---|---|---|---|
| 1 | −10.907 | 1.28 | 1.018 | 4.25 × 10⁻⁹ | 4.59 × 10⁻⁹ | ((−12.184, −10.907, −9.889;1), (−12.184, −10.907, −9.889;1)) |
| | −18.964 | 3.03 × 10⁻⁹ | 0.000 | 1.44 × 10⁻⁸ | 1.44 × 10⁻⁸ | ((−18.964, −18.964, −18.964;1), (−18.964, −18.964, −18.964;1)) |
| | 15.077 | 1.79 × 10⁻⁹ | 0.000 | 3.39 × 10⁻⁹ | 3.61 × 10⁻⁹ | ((15.077, 15.077, 15.077;1), (15.077, 15.077, 15.077;1)) |
| | −3.447 | 3.12 × 10⁻⁹ | 0.000 | 7.20 × 10⁻¹ | 1.16 | ((−4.167, −3.447, −2.289;1), (−3.447, −3.447, −3.447;1)) |
| | −0.107 | 1.50 × 10⁻⁹ | 0.000 | 8.08 × 10⁻¹⁰ | 8.56 × 10⁻¹⁰ | ((−0.107, −0.107, −0.107;1), (−0.107, −0.107, −0.107;1)) |
| | −0.725 | 9.06 × 10⁻¹⁰ | 0.000 | 3.64 × 10⁻⁷ | 0.024974 | ((−0.725, −0.725, −0.7;1), (−0.725, −0.725, −0.725;1)) |
| | −0.262 | 3.18 × 10⁻⁹ | 0.000 | 6.04 × 10⁻¹⁰ | 6.37 × 10⁻¹⁰ | ((−0.262, −0.262, −0.262;1), (−0.262, −0.262, −0.262;1)) |
| | 0.735 | 3.18 × 10⁻⁹ | 0.000 | 5.26 × 10⁻¹⁰ | 5.27 × 10⁻¹⁰ | ((0.735, 0.735, 0.735;1), (0.735, 0.735, 0.735;1)) |
| | 0.150 | 1.16 × 10⁻⁵ | 0.001 | 5.74 × 10⁻¹⁰ | 6.10 × 10⁻¹⁰ | ((0.15, 0.15, 0.151;1), (0.15, 0.15, 0.151;1)) |

| Alternative | Criteria | Proposed IT2F Functions RMSE | Proposed IT2F Functions MAPE | IT2F Regression RMSE | IT2F Regression MAPE |
|---|---|---|---|---|---|
| 1 | 1 | 0.2307 | 2.3883 | 0.6974 | 11.4445 |
| 1 | 2 | 0.1948 | 3.3149 | 0.7844 | 15.1122 |
| 1 | 3 | 0.2519 | 4.0679 | 0.9828 | 14.0217 |
| 1 | 4 | 0.375 | 3.4459 | 0.6952 | 7.2826 |
| 1 | 5 | 0.3606 | 5.6463 | 0.8976 | 16.7376 |
| 2 | 1 | 0.2894 | 6.0687 | 0.4449 | 13.6456 |
| 2 | 2 | 0.2392 | 2.4306 | 0.6793 | 7.5079 |
| 2 | 3 | 0.3733 | 4.2811 | 0.9343 | 11.083 |
| 2 | 4 | 0.4283 | 5.1677 | 0.5934 | 7.8132 |
| 2 | 5 | 0.3035 | 4.0027 | 0.5657 | 7.9788 |
| 3 | 1 | 0.1457 | 1.722 | 0.7285 | 8.0463 |
| 3 | 2 | 0.1686 | 3.9912 | 0.5833 | 17.4631 |
| 3 | 3 | 0.2417 | 2.6302 | 0.6196 | 6.6876 |
| 3 | 4 | 0.2142 | 2.135 | 0.3221 | 3.4379 |
| 3 | 5 | 0.2205 | 2.7494 | 0.5567 | 7.9517 |
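For reference, the two error measures reported above can be computed as follows. This is a minimal sketch using the standard definitions of root mean squared error and mean absolute percentage error, assuming MAPE is expressed in percent. The sample scores are hypothetical and are not taken from the case study.

```python
import math
from typing import Sequence


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error between two equally long sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; actual values must be nonzero."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    ratios = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * ratios / len(actual)


# Hypothetical performance scores and predictions, for illustration only.
actual = [6.0, 7.0, 3.0]
predicted = [6.0, 8.0, 3.0]
error_rmse = rmse(actual, predicted)
error_mape = mape(actual, predicted)
```

Lower values of both measures indicate a closer fit, which is the sense in which the two approaches are compared in the table.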

| Criteria | A1 | A2 | A3 |
|---|---|---|---|
| C1 | ((1.694, 2.99, 4.515;1), (2.064, 2.99, 3.822;1)) | ((2.163, 3.73, 5.516;1), (2.864, 3.73, 4.204;1)) | ((5.223, 7.902, 11.27;1), (5.601, 7.902, 10.396;1)) |
| C2 | ((1.354, 2.255, 3.33;1), (1.891, 2.255, 2.623;1)) | ((4.992, 8.419, 12.522;1), (5.964, 8.419, 10.802;1)) | ((2.662, 3.919, 5.449;1), (3.204, 3.919, 4.596;1)) |
| C3 | ((2.986, 5.358, 8.46;1), (4.13, 5.358, 6.362;1)) | ((2.194, 4.143, 5.991;1), (3.144, 4.143, 5.238;1)) | ((6.016, 9.229, 12.788;1), (6.68, 9.229, 11.589;1)) |
| C4 | ((7.187, 10.353, 14.08;1), (8.084, 10.353, 12.123;1)) | ((0.469, 1.617, 3.242;1), (0.933, 1.617, 2.178;1)) | ((5.678, 8.415, 11.417;1), (6.071, 8.415, 10.508;1)) |
| C5 | ((2.409, 5.252, 9.04;1), (3.372, 5.252, 7.208;1)) | ((4.427, 6.446, 9.052;1), (5.168, 6.446, 7.487;1)) | ((3.715, 6.246, 8.94;1), (4.566, 6.246, 7.472;1)) |

| Symbol | Linguistic Term | IT2F Number |
|---|---|---|
| VL | Very Low | ((0, 0, 1;1), (0, 0, 0.5;1)) |
| L | Low | ((0, 1, 3;1), (0.5, 1, 2;1)) |
| ML | Medium Low | ((1, 3, 5;1), (2, 3, 4;1)) |
| M | Medium | ((3, 5, 7;1), (4, 5, 6;1)) |
| MH | Medium High | ((5, 7, 9;1), (6, 7, 8;1)) |
| H | High | ((7, 9, 10;1), (8, 9, 9.5;1)) |
| VH | Very High | ((9, 10, 10;1), (9.5, 10, 10;1)) |
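Vocabulary matching maps a predicted IT2F number to the closest term in this vocabulary. The sketch below uses the Euclidean distance between the six triangle vertices as a deliberately simple stand-in. It is not the similarity measure used by the authors, and it may not reproduce every recommended term in the case study. The names `VOCABULARY` and `match_term` are hypothetical.

```python
import math

# (UMF triangle, LMF triangle) for each linguistic term in the table above.
VOCABULARY = {
    "VL": ((0, 0, 1), (0, 0, 0.5)),
    "L": ((0, 1, 3), (0.5, 1, 2)),
    "ML": ((1, 3, 5), (2, 3, 4)),
    "M": ((3, 5, 7), (4, 5, 6)),
    "MH": ((5, 7, 9), (6, 7, 8)),
    "H": ((7, 9, 10), (8, 9, 9.5)),
    "VH": ((9, 10, 10), (9.5, 10, 10)),
}


def match_term(umf, lmf):
    """Return the term whose six vertices are nearest to the predicted number.

    Stand-in measure: Euclidean distance over the concatenated vertices.
    """
    predicted = tuple(umf) + tuple(lmf)

    def distance(term):
        term_umf, term_lmf = VOCABULARY[term]
        return math.dist(predicted, tuple(term_umf) + tuple(term_lmf))

    return min(VOCABULARY, key=distance)


# Predicted score of alternative A1 on criterion C1 from the case study.
term = match_term((1.694, 2.99, 4.515), (2.064, 2.99, 3.822))
```

A similarity measure defined on the full membership functions would weigh overlap rather than vertex positions, so it can rank neighbouring terms differently when a predicted number falls between two of them.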

| Criteria | A1 | A2 | A3 |
|---|---|---|---|
| C1 | ML | ML | MH |
| C2 | ML | H | ML |
| C3 | M | M | H |
| C4 | H | L | H |
| C5 | M | MH | MH |

| Criteria | A1 | A2 | A3 |
|---|---|---|---|
| C1 | ML | MH | MH |
| C2 | ML | H | ML |
| C3 | M | M | H |
| C4 | H | L | MH |
| C5 | M | H | MH |

Distance to Positive Ideal Solution:

| Periods | Employee 1 | Employee 2 | Employee 3 |
|---|---|---|---|
| t1 | 0.1458 | 0.1101 | 0.1111 |
| t2 | 0.2259 | 0.1569 | 0.1084 |
| t3 | 0.2155 | 0.1546 | 0.1161 |
| t4 | 0.1604 | 0.1203 | 0.0850 |
| t5 | 0.1730 | 0.1313 | 0.0938 |
| t6 | 0.1700 | 0.0919 | 0.0833 |
| t7 | 0.1700 | 0.0977 | 0.0750 |
| t8 | 0.1714 | 0.0709 | 0.0643 |
| t9 | 0.1256 | 0.0872 | 0.0750 |
| t10 | 0.1303 | 0.0874 | 0.0750 |
| t11 | 0.0960 | 0.0797 | 0.1086 |
| t12 | 0.1146 | 0.0901 | 0.1397 |
| t13 | 0.1204 | 0.0961 | 0.1393 |
| t14 | 0.1004 | 0.0914 | 0.1050 |
| t15 | 0.1226 | 0.1401 | 0.0797 |
| t16 | 0.1264 | 0.1330 | 0.0972 |
| t17 | 0.1422 | 0.1873 | 0.1001 |
| t18 | 0.1404 | 0.2335 | 0.0981 |
| tC | 0.1725 | 0.1955 | 0.1143 |

Distance to Negative Ideal Solution:

| Periods | Employee 1 | Employee 2 | Employee 3 |
|---|---|---|---|
| t1 | 0.1409 | 0.1556 | 0.1541 |
| t2 | 0.1169 | 0.1524 | 0.2462 |
| t3 | 0.1161 | 0.1334 | 0.2155 |
| t4 | 0.0898 | 0.0698 | 0.1613 |
| t5 | 0.0995 | 0.0805 | 0.1762 |
| t6 | 0.1011 | 0.1636 | 0.1836 |
| t7 | 0.0983 | 0.1278 | 0.1857 |
| t8 | 0.0744 | 0.1697 | 0.1807 |
| t9 | 0.0684 | 0.1259 | 0.1385 |
| t10 | 0.0563 | 0.0950 | 0.1336 |
| t11 | 0.0811 | 0.1102 | 0.0717 |
| t12 | 0.0997 | 0.1397 | 0.0750 |
| t13 | 0.0817 | 0.1311 | 0.0750 |
| t14 | 0.0680 | 0.1050 | 0.0914 |
| t15 | 0.0927 | 0.0850 | 0.1247 |
| t16 | 0.0810 | 0.1000 | 0.1244 |
| t17 | 0.1218 | 0.1050 | 0.1719 |
| t18 | 0.2143 | 0.1173 | 0.1966 |
| tC | 0.1873 | 0.1631 | 0.1751 |

Closeness Coefficients:

| Periods | Employee 1 | Employee 2 | Employee 3 |
|---|---|---|---|
| t1 | 0.4915 | 0.5856 | 0.5811 |
| t2 | 0.3411 | 0.4928 | 0.6942 |
| t3 | 0.3501 | 0.4633 | 0.6499 |
| t4 | 0.3588 | 0.3671 | 0.6549 |
| t5 | 0.3652 | 0.3801 | 0.6527 |
| t6 | 0.3729 | 0.6405 | 0.6878 |
| t7 | 0.3664 | 0.5666 | 0.7123 |
| t8 | 0.3028 | 0.7053 | 0.7376 |
| t9 | 0.3525 | 0.5907 | 0.6487 |
| t10 | 0.3016 | 0.5208 | 0.6404 |
| t11 | 0.4580 | 0.5802 | 0.3978 |
| t12 | 0.4652 | 0.6078 | 0.3493 |
| t13 | 0.4043 | 0.5769 | 0.3500 |
| t14 | 0.4039 | 0.5347 | 0.4653 |
| t15 | 0.4304 | 0.3776 | 0.6099 |
| t16 | 0.3906 | 0.4291 | 0.5614 |
| t17 | 0.4613 | 0.3593 | 0.6321 |
| t18 | 0.6041 | 0.3345 | 0.6671 |
| tC | 0.5206 | 0.4547 | 0.6051 |
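The closeness coefficients above are consistent with the standard TOPSIS relation CC = d⁻ / (d⁺ + d⁻), where d⁺ and d⁻ are the distances to the positive and negative ideal solutions. The sketch below recomputes the current-period (tC) row from the two distance tables. The helper name `closeness` and the dictionary layout are illustrative assumptions.

```python
def closeness(d_pos: float, d_neg: float) -> float:
    """TOPSIS closeness coefficient: 1 means at the positive ideal solution."""
    total = d_pos + d_neg
    if total == 0:
        raise ValueError("both distances are zero; coefficient is undefined")
    return d_neg / total


# Current-period (tC) distances copied from the two distance tables.
d_pos = {"Employee 1": 0.1725, "Employee 2": 0.1955, "Employee 3": 0.1143}
d_neg = {"Employee 1": 0.1873, "Employee 2": 0.1631, "Employee 3": 0.1751}

cc = {name: closeness(d_pos[name], d_neg[name]) for name in d_pos}

# Higher closeness is better, so sort in descending order.
ranking = sorted(cc, key=cc.get, reverse=True)
```

The recomputed values match the tC row to about three decimal places; the small differences come from the distances being rounded to four decimals in the tables.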

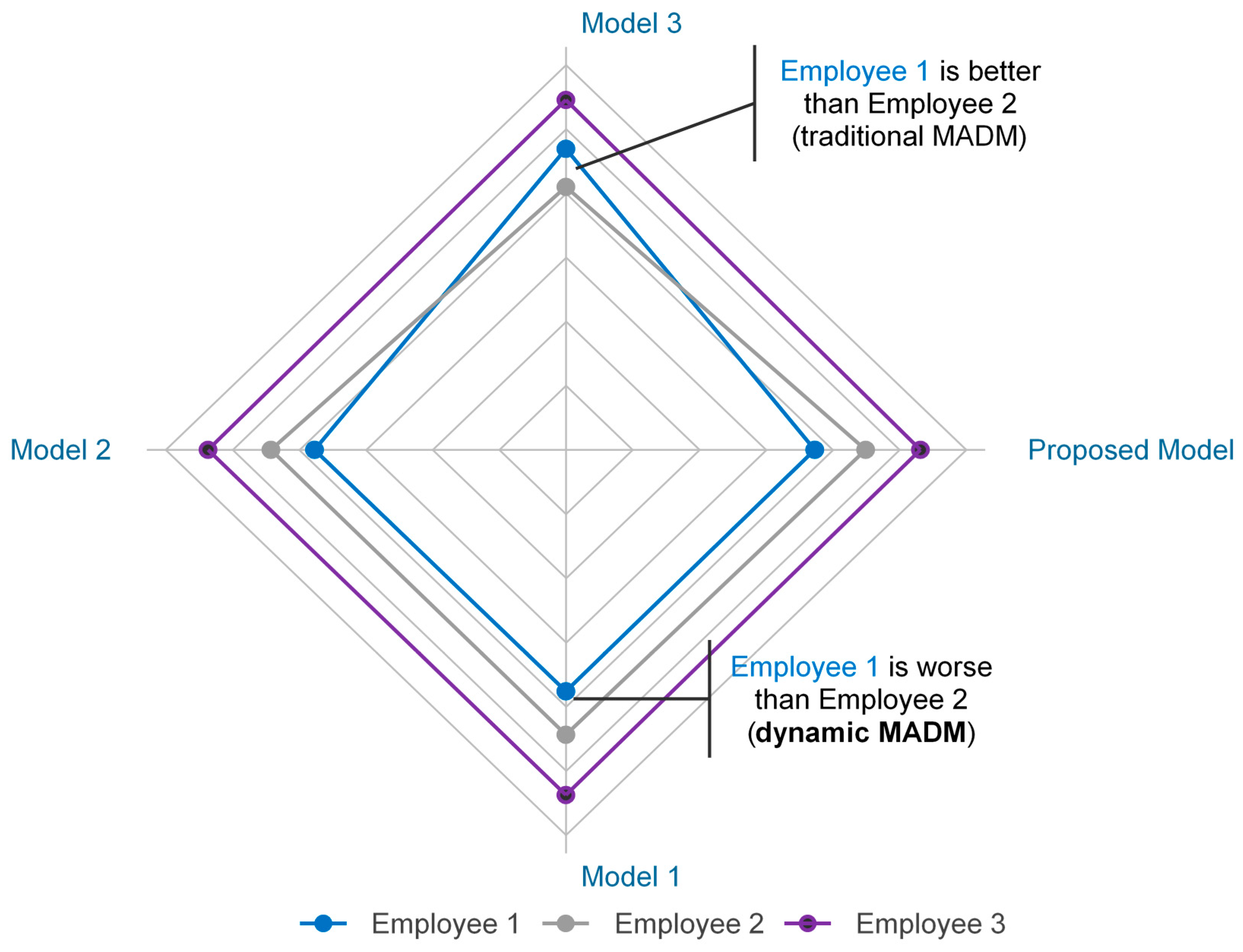

| Model Components | Proposed Model | Model 1 | Model 2 | Model 3 |
|---|---|---|---|---|
| IT2F Functions | Yes | Yes | Yes | Yes |
| Vocabulary Matching | Yes | Yes | No | Yes |
| Modified preferences (interactive) | Yes | No | No | Yes |
| DWA Operator | Yes | Yes | Yes | No |

Overall Assessment:

| Model | Employee 1 CC | Employee 1 Rank | Employee 2 CC | Employee 2 Rank | Employee 3 CC | Employee 3 Rank |
|---|---|---|---|---|---|---|
| Proposed Model | 0.4138 | 3 | 0.4984 | 2 | 0.5895 | 1 |
| Model 1 | 0.4176 | 3 | 0.4926 | 2 | 0.5967 | 1 |
| Model 2 | 0.4185 | 3 | 0.4905 | 2 | 0.5951 | 1 |
| Model 3 | 0.5206 | 2 | 0.4547 | 3 | 0.6051 | 1 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Baykasoğlu, A.; Gölcük, İ. An Interactive Data-Driven (Dynamic) Multiple Attribute Decision Making Model via Interval Type-2 Fuzzy Functions. Mathematics 2019, 7, 584. https://doi.org/10.3390/math7070584