Recursive Algorithms for Multivariable Output-Error-Like ARMA Systems

Abstract

1. Introduction

- Based on the hierarchical identification principle, this paper decomposes the original system into m subsystems.

- Based on the coupled relationships between subsystems, a partially-coupled recursive generalized extended least squares (PC-RGELS) algorithm is proposed to identify the parameters of M-OEARMA-like systems.

- The derived PC-RGELS algorithm has higher computational efficiency and higher estimation accuracy than the recursive generalized extended least squares (RGELS) algorithm.

2. The System Description

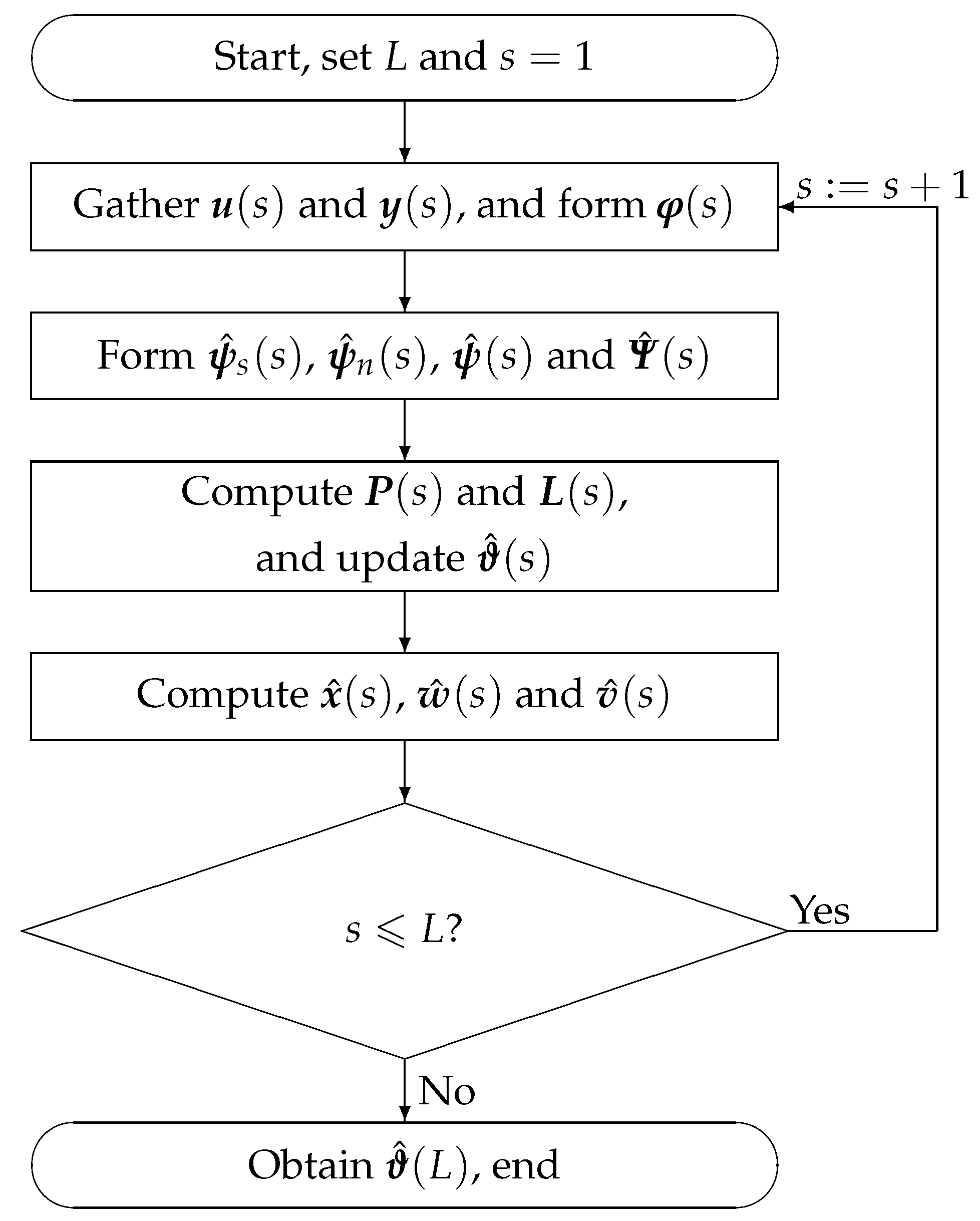

3. The RGELS Algorithm

- For , all variables are set to zero. Set the data length L. Let , set the initial values , , , , , .

- Collect the input-output data and , and construct using (33).

- Form , and using (35)–(36) and (34), and form using (32).

- Calculate the covariance matrix and the gain matrix using (31) and (30), and update the estimate using (29).

- Compute the estimates , and using (37)–(39).

- Compare s with L: if s < L, increase s by 1 and go to Step 2; otherwise, obtain the final parameter estimate and terminate the procedure.
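As a rough illustration of the covariance, gain and estimate update pattern used in the steps above, the following Python sketch implements a textbook recursive least squares (RLS) recursion for a scalar linear regression. It is not the RGELS algorithm of (29)–(39): the additional quantities formed in the other steps are omitted, and the names `rls_step` and `run_rls`, the initial value `p0` and the toy parameter vector are all assumptions made for the example.

```python
import random

def rls_step(theta, P, phi, y):
    """One textbook RLS update for the scalar model y = phi^T theta + noise."""
    n = len(theta)
    # P_phi = P(s-1) * phi(s)
    P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    denom = 1.0 + sum(phi[i] * P_phi[i] for i in range(n))
    gain = [v / denom for v in P_phi]  # gain vector
    innovation = y - sum(phi[i] * theta[i] for i in range(n))
    theta_new = [theta[i] + gain[i] * innovation for i in range(n)]
    # P(s) = P(s-1) - gain * phi^T * P(s-1); P is symmetric, so phi^T P = P_phi^T
    P_new = [[P[i][j] - gain[i] * P_phi[j] for j in range(n)] for i in range(n)]
    return theta_new, P_new

def run_rls(data, n, p0=1e6):
    """Run RLS over (phi, y) pairs, starting from theta = 0 and P = p0 * I."""
    theta = [0.0] * n
    P = [[p0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for phi, y in data:
        theta, P = rls_step(theta, P, phi, y)
    return theta

# Hypothetical toy system, not the example system of this paper.
random.seed(0)
toy_theta = [0.5, -0.3]
data = []
for s in range(500):
    phi = [random.gauss(0, 1), random.gauss(0, 1)]
    y = sum(a * b for a, b in zip(phi, toy_theta)) + 0.01 * random.gauss(0, 1)
    data.append((phi, y))

theta_hat = run_rls(data, len(toy_theta))
print(theta_hat)
```

The loop in `run_rls` plays the role of the "increase s by 1 and go to Step 2" rule: each new data pair triggers one gain, estimate and covariance update.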

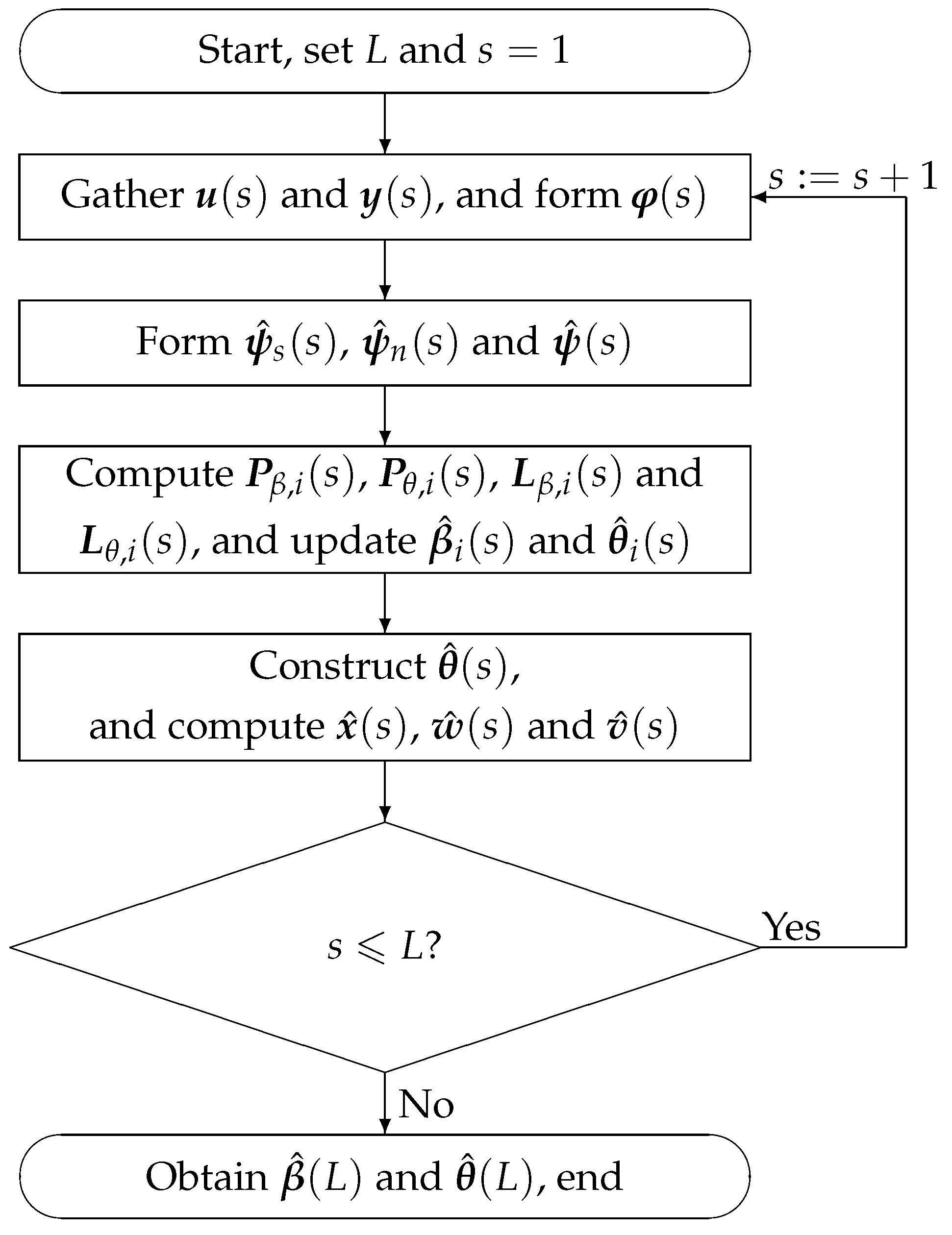

4. The PC-RGELS Algorithm

- For , all variables are set to zero. Set the data length L. Let , set the initial values , , , , , , , .

- Collect the input-output data and , and construct using (113).

- Form and using (116)–(117) and construct using (114), and read from in (115), .

- Compute and using (103) and (106), and compute and using (102) and (105), and update the estimates and using (101) and (104).

- For , calculate and using (109) and (112), and compute and using (108) and (111), and refresh the estimates and using (107) and (110).

- Construct by (112), calculate the estimates , and using (118)–(120).

- Compare s with L: if s < L, increase s by 1 and go to Step 2; otherwise, obtain the final parameter estimates and terminate the procedure.
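The sketch below illustrates, under strong simplifying assumptions, one common way to exploit coupling between subsystems: the estimate and covariance produced by one subsystem at time s are passed to the next subsystem as its starting point, and the last subsystem hands them over to the first subsystem at time s + 1. Each of the m subsystems is reduced here to a scalar regression sharing a single parameter vector, whereas the steps above update two estimates per subsystem using (101)–(112), which are not reproduced. The names `rls_step` and `coupled_rls`, the initial value `p0` and the toy data are hypothetical.

```python
import random

def rls_step(theta, P, phi, y):
    """One textbook RLS update for the scalar model y = phi^T theta + noise."""
    n = len(theta)
    P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    denom = 1.0 + sum(phi[i] * P_phi[i] for i in range(n))
    gain = [v / denom for v in P_phi]
    innovation = y - sum(phi[i] * theta[i] for i in range(n))
    theta = [theta[i] + gain[i] * innovation for i in range(n)]
    P = [[P[i][j] - gain[i] * P_phi[j] for j in range(n)] for i in range(n)]
    return theta, P

def coupled_rls(samples, n, p0=1e6):
    """samples[s] holds one (phi_i, y_i) pair per subsystem i = 1, ..., m."""
    theta = [0.0] * n
    P = [[p0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for rows in samples:          # time index s
        for phi_i, y_i in rows:   # subsystem index i
            # Subsystem i starts from the estimate left by subsystem i-1;
            # subsystem 1 starts from the estimate of subsystem m at time s-1.
            theta, P = rls_step(theta, P, phi_i, y_i)
    return theta

# Hypothetical toy data: m = 2 subsystems sharing one parameter vector.
random.seed(1)
shared_theta = [0.8, -0.4, 0.2]
m, data_length = 2, 300
samples = []
for s in range(data_length):
    rows = []
    for i in range(m):
        phi = [random.gauss(0, 1) for _ in shared_theta]
        y = sum(a * b for a, b in zip(phi, shared_theta)) + 0.05 * random.gauss(0, 1)
        rows.append((phi, y))
    samples.append(rows)

coupled_estimate = coupled_rls(samples, len(shared_theta))
print(coupled_estimate)
```

Because every subsystem works with a single shared covariance matrix and scalar innovations, no matrix inversion is needed in this simplified setting.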

5. Example

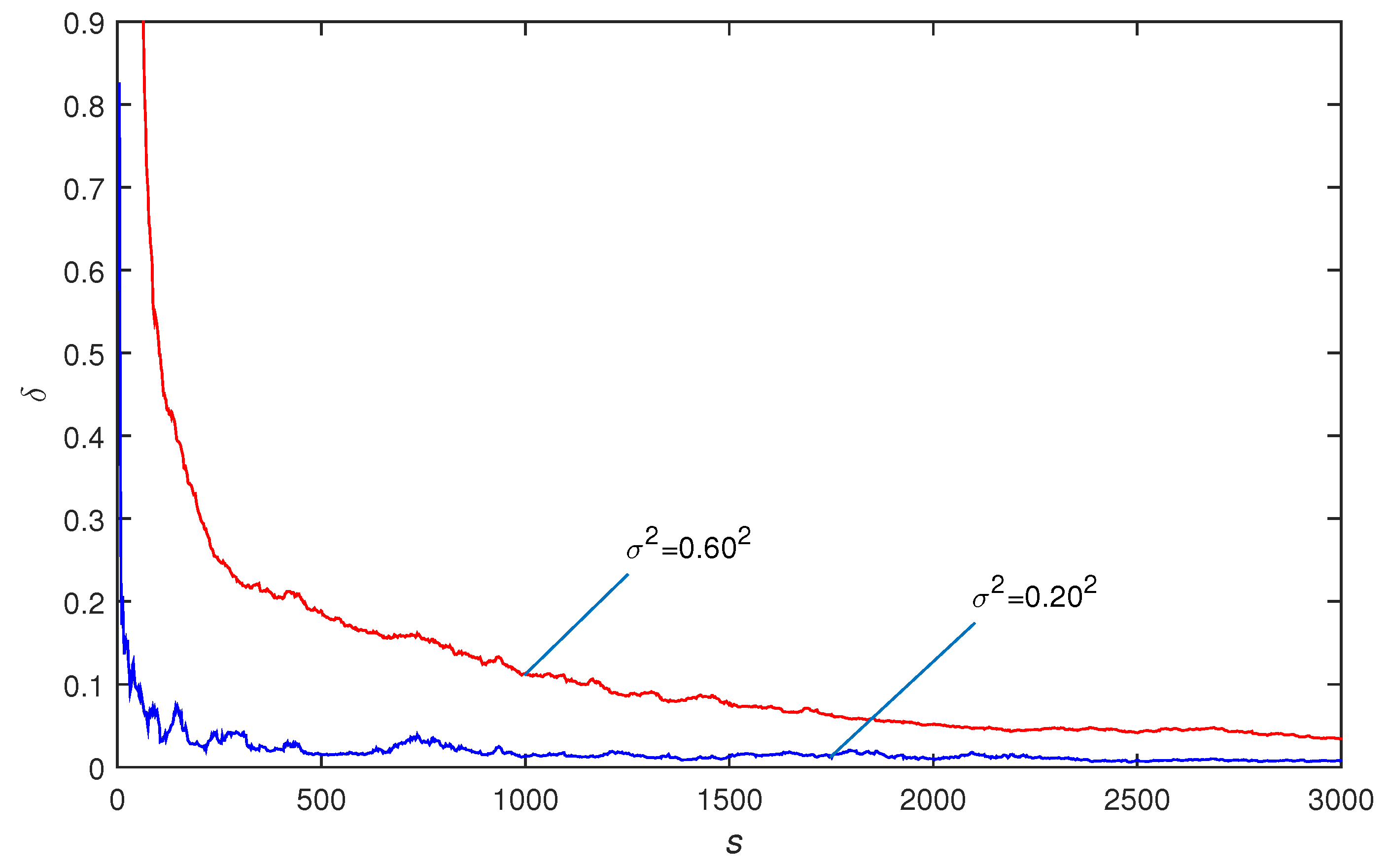

- The parameter estimation errors given by the RGELS, PC-S-RGELS and PC-RGELS algorithms become smaller as s increases. Thus, the proposed algorithms for multivariable OEARMA-like systems are effective.

- Under the same simulation conditions, the PC-S-RGELS and PC-RGELS algorithms give more accurate parameter estimates than the RGELS algorithm.

- For the same data length, a lower noise level yields higher parameter estimation accuracy for the PC-RGELS algorithm.
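The error values in the last column of the tabulated results are consistent with a relative estimation error, i.e. the Euclidean norm of the difference between the estimate and the true parameter vector, divided by the norm of the true vector and expressed in percent. The short Python check below recomputes this quantity for one tabulated row; the function name `relative_error_percent` is an assumption, and the formula is inferred from the tabulated numbers rather than quoted from the text.

```python
import math

def relative_error_percent(estimate, true_values):
    """Relative error ||estimate - true|| / ||true|| in percent."""
    num = math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, true_values)))
    den = math.sqrt(sum(t ** 2 for t in true_values))
    return 100.0 * num / den

# "True values" row and the s = 100 row of the first table.
true_values = [0.26, -0.57, 0.93, 0.87, 0.65, -0.56, 0.32]
row_s100 = [0.21713, -0.55471, 0.90627, 0.84332, 0.71040, -0.48095, 0.18013]

error = relative_error_percent(row_s100, true_values)
print(error)  # close to the tabulated 10.72413; small differences come from rounding
```

The same function can be applied to any other row to compare algorithms or noise levels at a given data length.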

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| s | | | | | | | | Relative error (%) |
|---|---|---|---|---|---|---|---|---|
| 100 | 0.21713 | −0.55471 | 0.90627 | 0.84332 | 0.71040 | −0.48095 | 0.18013 | 10.72413 |
| 200 | 0.27110 | −0.56221 | 0.94490 | 0.86938 | 0.64018 | −0.47391 | 0.23325 | 7.35692 |
| 500 | 0.24836 | −0.54410 | 0.93994 | 0.86873 | 0.66404 | −0.55063 | 0.06758 | 15.08382 |
| 1000 | 0.26144 | −0.55626 | 0.94061 | 0.86873 | 0.64676 | −0.59024 | 0.03508 | 16.99606 |
| 2000 | 0.25755 | −0.54862 | 0.94853 | 0.86843 | 0.65790 | −0.57538 | 0.03620 | 16.91802 |
| 3000 | 0.25925 | −0.55582 | 0.94269 | 0.86686 | 0.66788 | −0.56765 | 0.05916 | 15.52793 |
| True values | 0.26000 | −0.57000 | 0.93000 | 0.87000 | 0.65000 | −0.56000 | 0.32000 | |

| s | | | | | | | | Relative error (%) |
|---|---|---|---|---|---|---|---|---|
| 100 | 0.20140 | −0.56387 | 0.92631 | 0.70446 | 0.79768 | −0.35762 | 0.68920 | 28.39191 |
| 200 | 0.24432 | −0.56531 | 0.93373 | 0.79687 | 0.69082 | −0.38330 | 0.64577 | 22.51734 |
| 500 | 0.25446 | −0.56183 | 0.94070 | 0.83956 | 0.67391 | −0.47448 | 0.47151 | 10.58749 |
| 1000 | 0.26117 | −0.56373 | 0.93438 | 0.85347 | 0.65752 | −0.53154 | 0.40254 | 5.29978 |
| 2000 | 0.25977 | −0.55982 | 0.93912 | 0.86161 | 0.65870 | −0.54046 | 0.37127 | 3.42342 |
| 3000 | 0.26036 | −0.56350 | 0.93576 | 0.86339 | 0.66214 | −0.54830 | 0.37027 | 3.20492 |
| True values | 0.26000 | −0.57000 | 0.93000 | 0.87000 | 0.65000 | −0.56000 | 0.32000 | |

| s | | | | | | | | Relative error (%) |
|---|---|---|---|---|---|---|---|---|
| 100 | 0.24978 | −0.60667 | 0.87031 | 0.78115 | 0.56724 | −0.39507 | 0.30167 | 12.87537 |
| 200 | 0.27882 | −0.58559 | 0.91259 | 0.82014 | 0.61386 | −0.42910 | 0.41254 | 10.32160 |
| 500 | 0.25835 | −0.56532 | 0.93012 | 0.85414 | 0.64496 | −0.58010 | 0.25299 | 4.26796 |
| 1000 | 0.26982 | −0.56619 | 0.93045 | 0.85993 | 0.64143 | −0.60694 | 0.26914 | 4.21835 |
| 2000 | 0.26104 | −0.56177 | 0.93780 | 0.86480 | 0.65036 | −0.57656 | 0.30440 | 1.53744 |
| 3000 | 0.26119 | −0.56487 | 0.93488 | 0.86510 | 0.65647 | −0.56786 | 0.34146 | 1.49741 |
| True values | 0.26000 | −0.57000 | 0.93000 | 0.87000 | 0.65000 | −0.56000 | 0.32000 | |

| s | | | | | | | | Relative error (%) |
|---|---|---|---|---|---|---|---|---|
| 100 | 0.24988 | −0.56806 | 0.90461 | 0.89323 | 0.60879 | −0.59192 | 0.23442 | 6.30236 |
| 200 | 0.26570 | −0.55866 | 0.90290 | 0.89140 | 0.62628 | −0.56227 | 0.30809 | 2.68948 |
| 500 | 0.26807 | −0.57330 | 0.92228 | 0.86927 | 0.62782 | −0.55656 | 0.31763 | 1.50427 |
| 1000 | 0.26418 | −0.57144 | 0.92234 | 0.87348 | 0.63193 | −0.56993 | 0.32145 | 1.34724 |
| 2000 | 0.26205 | −0.57095 | 0.92456 | 0.87546 | 0.64561 | −0.54789 | 0.32853 | 1.03059 |
| 3000 | 0.26050 | −0.56927 | 0.92491 | 0.87164 | 0.64979 | −0.54752 | 0.31805 | 0.81408 |
| True values | 0.26000 | −0.57000 | 0.93000 | 0.87000 | 0.65000 | −0.56000 | 0.32000 | |

| s | | | | | | | | Relative error (%) |
|---|---|---|---|---|---|---|---|---|
| 100 | −0.07666 | −1.10323 | 1.30348 | 0.68217 | 0.72605 | −0.49016 | −0.09463 | 51.44481 |
| 200 | 0.02363 | −0.82959 | 1.02696 | 0.84406 | 0.70880 | −0.49353 | −0.03312 | 30.52969 |
| 500 | 0.16820 | −0.71220 | 1.00389 | 0.83283 | 0.64901 | −0.50435 | 0.06928 | 18.85790 |
| 1000 | 0.20791 | −0.63623 | 0.95860 | 0.86602 | 0.63011 | −0.54443 | 0.15480 | 11.21196 |
| 2000 | 0.23053 | −0.60174 | 0.94024 | 0.87889 | 0.65490 | −0.53307 | 0.25093 | 5.15448 |
| 3000 | 0.24053 | −0.58686 | 0.93174 | 0.87014 | 0.66124 | −0.53546 | 0.27576 | 3.42763 |
| True values | 0.26000 | −0.57000 | 0.93000 | 0.87000 | 0.65000 | −0.56000 | 0.32000 | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, H.; Pan, J.; Lv, L.; Xu, G.; Ding, F.; Alsaedi, A.; Hayat, T. Recursive Algorithms for Multivariable Output-Error-Like ARMA Systems. Mathematics 2019, 7, 558. https://doi.org/10.3390/math7060558
