Unified Local Convergence for Newton’s Method and Uniqueness of the Solution of Equations under Generalized Conditions in a Banach Space

Abstract

1. Introduction

- (a1)

- At least as large a radius of convergence, and hence at least as many choices of initial points;

- (a2)

- At least as small a ratio of convergence, so no more iterates must be computed to obtain a desired error tolerance; and

- (a3)

- The information on the location of the solution is at least as precise.
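Claim (a2) can be made concrete with a toy error model. The sketch below is hypothetical and not taken from the paper. It assumes the errors obey e_{n+1} = c e_n^2, so the constant c stands in for the ratio of convergence, and it counts the steps needed to reach a tolerance. With the same starting error, a smaller c never needs more steps. The function name `iterations_needed` is illustrative only.

```python
def iterations_needed(e0: float, c: float, tol: float, max_iter: int = 100) -> int:
    """Count steps until the modelled error drops to tol or below.

    Hypothetical model (an assumption, not the paper's estimate):
    e_{n+1} = c * e_n**2. It requires c * e0 < 1 so that the errors decrease.
    """
    if c * e0 >= 1.0:
        raise ValueError("model errors do not decrease: need c * e0 < 1")
    e, n = e0, 0
    while e > tol:
        if n >= max_iter:
            raise RuntimeError("tolerance not reached within max_iter")
        e = c * e * e  # one step of the assumed quadratic error recursion
        n += 1
    return n


if __name__ == "__main__":
    # Same starting error and tolerance, two hypothetical ratio constants.
    for c in (1.5, 0.75):
        print(c, iterations_needed(e0=0.5, c=c, tol=1e-12))
```

With e0 = 0.5 and tol = 1e-12, this model needs 7 steps for c = 1.5 and 5 steps for c = 0.75.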

2. Local Convergence Analysis

- (a)

- There exists such that and for all .

- (b)

- There exists a function with such that is continuous, where , and is non-decreasing for some .

- (c)

- For all

- (d)

- There exists a minimal root of the equation , such that

- (e)

- (a)

- There exists an and a function which is continuous and nondecreasing, with , such that and, for all , The equation has a minimal positive root, denoted by . Set .

- (b)

- There exists a function which is continuous and non-decreasing with such that, for all ,

- (c)

- The equation has a smallest root, .

- (d)

- The function is continuous and nondecreasing on the interval .

- (e)

- .
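Several of the hypotheses above ask for the minimal positive root of a scalar equation built from the majorant functions, for instance the point where a center function w0 first reaches 1. Such roots are normally computed numerically.

The following is a minimal sketch. It assumes the function whose zero is sought is continuous and negative at 0. The helper name `smallest_positive_root` and the example w0(t) = e^t - 1 are illustrative only. A uniform grid scan locates the first sign change, which matters because the hypotheses need the *smallest* root and not just any root. Bisection then refines the bracket.

```python
import math
from typing import Callable


def smallest_positive_root(phi: Callable[[float], float], upper: float,
                           samples: int = 10_000, tol: float = 1e-14) -> float:
    """Approximate the smallest root of phi on (0, upper].

    Assumes phi is continuous with phi(0) < 0, as for phi(t) = w0(t) - 1 when
    w0(0) = 0. A uniform grid is scanned for the first sign change, which is
    then refined by bisection. A root at which phi touches zero without
    changing sign between grid points can be missed.
    """
    if phi(0.0) >= 0.0:
        raise ValueError("expected phi(0) < 0")
    h = upper / samples
    a = 0.0
    for k in range(1, samples + 1):
        b = k * h
        fb = phi(b)
        if fb == 0.0:
            return b
        if fb > 0.0:
            # phi(a) < 0 < phi(b): bisect the bracket [a, b].
            for _ in range(200):
                if b - a <= tol:
                    break
                m = 0.5 * (a + b)
                if phi(m) < 0.0:
                    a = m
                else:
                    b = m
            return 0.5 * (a + b)
        a = b
    raise ValueError("no sign change of phi on (0, upper]")


if __name__ == "__main__":
    # Hypothetical center function w0(t) = e^t - 1, so w0(t) = 1 at t = ln 2.
    rho0 = smallest_positive_root(lambda t: math.expm1(t) - 1.0, upper=2.0)
    print(rho0, math.log(2.0))
```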

- (a)

- If , then, by Theorem 2, we conclude that the root is unique in .

- (b)

- (c)

- where is a positive integrable function and . Moreover, in light of (18), there exists a positive integrable function, , such that Notice that and is the minimal positive root of the equation The radius of convergence, , is obtained in [26] under (18) and is given as the root of the equation The radius of convergence found by us, if is the positive root of the equation, is In view of (21)–(23), we have that Indeed, let the functions and be defined as and Then, in light of (21), we get and, for , by the definition of , leading to (24). We can do even better if . In this case, the function w (i.e., ) depends on (i.e., ), and we have that where L is a positive integrable function. Then, we have that since . In general, we do not know which of the functions or L is smaller than the other (see, however, the numerical examples). Then, the radius of convergence is the positive solution of the equation and we have, by (26), that using a proof similar to the one given below (24). Hence, we have that

- (d)

- where is a convex, strictly increasing function, with and . Notice that the following functions are convex, strictly increasing functions with , and :

- with ;

- with ; and

- with .

In view of (30), there exists a function with the same properties as , such that Thus, we can choose In our case, we have that solves the equation Furthermore, by (33), We can do better if strictly, by replacing (30) by for all . Then, choose Then, the radius is given as the root of the equation Once more, we have shown that the new results improve the old ones, since (29) holds.

- (e)

- We can obtain the radii in explicit form. Indeed, specialize the functions , , and in , and , , and in . Then, we have that ; see the third numerical example. The corresponding error bounds for the radii , , and are given, respectively, by and

- (f)

- The same advantages are obtained if we use Smale-type [25] conditions or those of Ferreira [5] or Wang [26]. Then, we choose and to be the solution of the equation with and .
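The remarks above compare radii obtained as roots of scalar equations and then specialize the functions to constants to get explicit radii. As a hedged sketch, the code below assumes a pattern that is common in this line of work, but it is not copied from the paper:

- A center condition with a function w0 and a general condition with a function w.
- The radius r is the smallest positive root of g(t) = 1, where g(t) = (integral over theta in [0, 1] of w((1 - theta) t)) / (1 - w0(t)).

In the linear case w0(t) = L0 t and w(t) = L t, this reduces to the well-known closed form r = 2/(2 L0 + L). Taking w0 = w recovers the classical radius 2/(3L) of Traub and Rheinboldt. Since L0 <= L, the first radius is never the smaller one.

The names `averaged_w`, `g` and `radius` and the constants used are hypothetical.

```python
from typing import Callable

Majorant = Callable[[float], float]


def averaged_w(w: Majorant, t: float, nodes: int = 200) -> float:
    """Midpoint-rule value of the integral of w((1 - theta) * t) for theta in [0, 1]."""
    h = 1.0 / nodes
    return h * sum(w((1.0 - (k + 0.5) * h) * t) for k in range(nodes))


def g(w0: Majorant, w: Majorant, t: float) -> float:
    """Assumed error-ratio function; only meaningful while w0(t) < 1."""
    return averaged_w(w, t) / (1.0 - w0(t))


def radius(w0: Majorant, w: Majorant, upper: float,
           samples: int = 1000, tol: float = 1e-13) -> float:
    """Smallest t in (0, upper) with g(t) = 1: grid scan for a sign change, then bisection.

    upper should be the smallest positive root of w0(t) = 1, so that the
    denominator 1 - w0(t) stays positive at every point evaluated.
    """
    def phi(t: float) -> float:
        return g(w0, w, t) - 1.0

    h = upper / samples
    a = 0.0  # assumed phi(a) < 0; true when w(0) = w0(0) = 0
    for k in range(1, samples):  # b stays strictly below upper
        b = k * h
        if phi(b) >= 0.0:
            for _ in range(200):  # bisection on [a, b] with phi(a) < 0 <= phi(b)
                if b - a <= tol:
                    break
                m = 0.5 * (a + b)
                if phi(m) < 0.0:
                    a = m
                else:
                    b = m
            return 0.5 * (a + b)
        a = b
    raise ValueError("g(t) = 1 has no root on the scanned grid")


if __name__ == "__main__":
    L0, L = 1.0, 1.5  # hypothetical Lipschitz constants with L0 <= L
    r_new = radius(lambda t: L0 * t, lambda t: L * t, upper=1.0 / L0)
    r_old = radius(lambda t: L * t, lambda t: L * t, upper=1.0 / L)
    print(r_new, 2.0 / (2.0 * L0 + L))  # both about 0.5714
    print(r_old, 2.0 / (3.0 * L))       # both about 0.4444
```

The midpoint rule is exact for linear w, so the Lipschitz case checks the numerical radius directly against the closed forms. For nonlinear majorants, more nodes may be needed.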

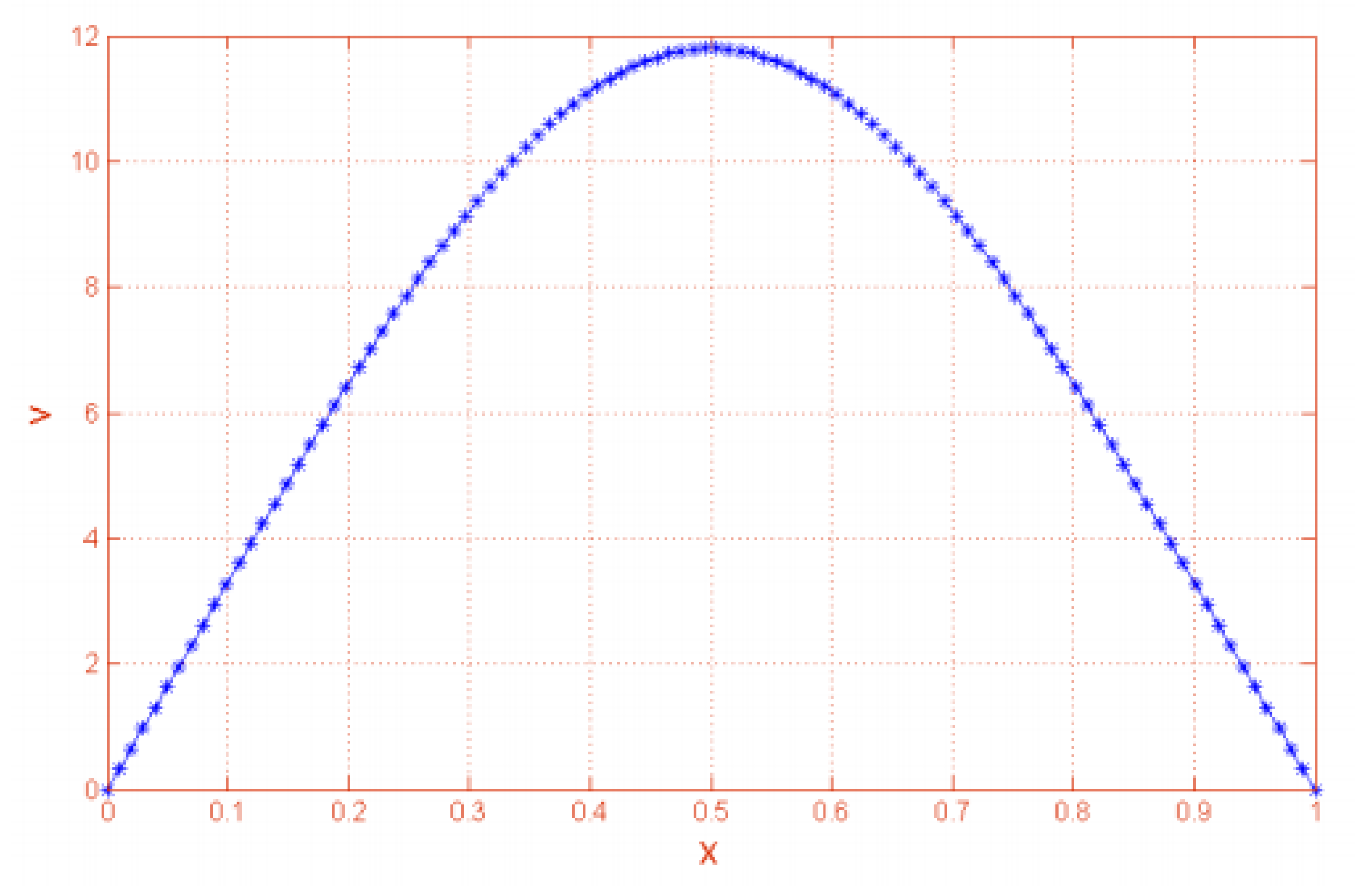

3. Numerical Examples

- (a)

- We obtain: and Moreover, the equation has a minimal root . On the other hand, the function is continuous and non-decreasing on the interval . Finally, it is clear that . Hence, by Theorem 2, method (2) converges.

- (b)

- We obtain: and Moreover, the equation has a minimal root . On the other hand, the function is continuous and non-decreasing on the interval . Finally, it is clear that . Hence, by Theorem 2, method (2) converges.
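The examples above conclude convergence from Theorem 2 without showing iterates. The sketch below assumes method (2) is the Newton iteration x_{n+1} = x_n - F'(x_n)^{-1} F(x_n). It runs that iteration on a hypothetical scalar problem, which is not one of the paper's examples: F(x) = e^x - 1 on D = [-1, 1], with x* = 0.

On D, a center constant L0 = e - 1 and a Lipschitz constant L = e hold, as noted in the code comments. The run starts inside the radius r = 2/(2 L0 + L) from the Lipschitz special case. At each step it checks the classical estimate |x_{n+1} - x*| <= L |x_n - x*|^2 / (2 (1 - L0 |x_n - x*|)). The function name `newton` is illustrative.

```python
import math
from typing import Callable, List


def newton(f: Callable[[float], float], df: Callable[[float], float],
           x0: float, tol: float = 1e-15, max_iter: int = 50) -> List[float]:
    """Scalar Newton iteration x_{n+1} = x_n - f(x_n) / f'(x_n); returns all iterates."""
    xs = [x0]
    for _ in range(max_iter):
        x = xs[-1]
        step = f(x) / df(x)
        xs.append(x - step)
        if abs(step) <= tol:
            break
    return xs


# Hypothetical test problem: F(x) = e^x - 1 on D = [-1, 1], with x* = 0 and F'(0) = 1.
# |e^x - 1| <= (e - 1)|x| on D (convexity), so L0 = e - 1.
# |e^x - e^y| <= e|x - y| on D (mean value theorem), so L = e.
L0 = math.e - 1.0
L = math.e
r = 2.0 / (2.0 * L0 + L)  # radius in the Lipschitz special case

if __name__ == "__main__":
    # expm1 avoids cancellation in F(x) = e^x - 1 near the root.
    xs = newton(math.expm1, math.exp, x0=0.9 * r)
    for n, (x, x_next) in enumerate(zip(xs, xs[1:])):
        bound = L * x * x / (2.0 * (1.0 - L0 * abs(x)))
        print(n, abs(x_next), bound, abs(x_next) <= bound)
```

Here r = 2/(3e - 2), about 0.325, which is larger than the classical 2/(3e), about 0.245. The starting point 0.9 r therefore lies outside the classical ball but still converges quadratically.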

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Argyros, I.K.; Hilout, S. Weaker conditions for the convergence of Newton’s method. J. Complex. 2012, 28, 364–387.

- Argyros, I.K.; Magreñán, Á.A. On the convergence of an optimal fourth-order family of methods and its dynamics. Appl. Math. Comput. 2015, 252, 336–346.

- Argyros, I.K.; González, D. Local convergence for an improved Jarratt-type method in Banach space. Int. J. Artif. Intell. Interact. Multimed. 2015, 3, 20–25.

- Deuflhard, P. Newton Methods for Nonlinear Problems: Affine Invariance and Adaptive Algorithms; Springer: Berlin/Heidelberg, Germany, 2011.

- Ferreira, O.P. Local convergence of Newton’s method in Banach space from the viewpoint of the majorant principle. IMA J. Numer. Anal. 2009, 29, 746–759.

- Hernández, M.A.; Salanova, M.A. Modification of the Kantorovich assumptions for semilocal convergence of the Chebyshev method. J. Comput. Appl. Math. 2000, 126, 131–143.

- Kantorovich, L.V.; Akilov, G.P. Functional Analysis; Pergamon Press: Oxford, UK, 1982.

- Kou, J.S.; Li, Y.T.; Wang, X.H. A modification of Newton method with third-order convergence. Appl. Math. Comput. 2006, 181, 1106–1111.

- Proinov, P.D. General local convergence theory for a class of iterative processes and its applications to Newton’s method. J. Complex. 2009, 25, 38–62.

- Amat, S.; Busquier, S. Third order methods under Kantorovich conditions. J. Math. Anal. Appl. 2007, 336, 243–261.

- Amat, S.; Bermúdez, C.; Busquier, S.; Plaza, S. On a third-order Newton-type method free of bilinear operators. Numer. Linear Algebra Appl. 2010, 17, 639–653.

- Argyros, I.K. Computational Theory of Iterative Methods; Chui, C.K., Wuytack, L., Eds.; Studies in Computational Mathematics; Elsevier Publ. Co.: New York, NY, USA, 2007; Volume 15.

- Argyros, I.K. A semilocal convergence analysis for directional Newton methods. Math. Comput. 2011, 80, 327–343.

- Argyros, I.K.; Hilout, S. Computational Methods in Nonlinear Analysis: Efficient Algorithms, Fixed Point Theory and Applications; World Scientific: Singapore, 2013.

- Argyros, I.K.; George, S. Ball Convergence for Steffensen-type Fourth-order Methods. Int. J. Artif. Intell. Interact. Multimed. 2015, 3, 37–42.

- Ezquerro, J.A.; Hernández, M.A. Recurrence relations for Chebyshev-type methods. Appl. Math. Optim. 2000, 41, 227–236.

- Ezquerro, J.A.; Hernández, M.A. Third-order iterative methods for operators with bounded second derivative. J. Comput. Math. Appl. 1997, 82, 171–183.

- Ezquerro, J.A.; Hernández, M.A. On the R-order of the Halley method. J. Math. Anal. Appl. 2005, 303, 591–601.

- Gopalan, V.B.; Seader, J.D. Application of interval Newton’s method to chemical engineering problems. Reliab. Comput. 1995, 1, 215–223.

- Gutiérrez, J.A.; Magreñán, Á.A.; Romero, N. On the semilocal convergence of Newton Kantorovich method under center-Lipschitz conditions. Appl. Math. Comput. 2013, 221, 79–88.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

- Potra, F.A.; Pták, V. Nondiscrete Induction and Iterative Processes; Research Notes in Mathematics; Pitman: Boston, MA, USA, 1984; Volume 103.

- Rheinboldt, W.C. An adaptive continuation process for solving systems of nonlinear equations. Banach Cent. Publ. 1977, 3, 129–142.

- Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall Series in Automatic Computation; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

- Smale, S. Newton’s method estimates from data at one point. In The Merging of Disciplines: New Directions in Pure, Applied, and Computational Mathematics; Springer: New York, NY, USA, 1986; pp. 185–196.

- Wang, X. Convergence of Newton’s method and uniqueness of the solution of equations in Banach space. IMA J. Numer. Anal. 2000, 20, 123–134.

- Shacham, M. An improved memory method for the solution of a nonlinear equation. Chem. Eng. Sci. 1989, 44, 1495–1501.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Argyros, I.K.; Magreñán, Á.A.; Orcos, L.; Sarría, Í. Unified Local Convergence for Newton’s Method and Uniqueness of the Solution of Equations under Generalized Conditions in a Banach Space. Mathematics 2019, 7, 463. https://doi.org/10.3390/math7050463
