Abstract

In this paper, the split variational inclusion problem (SVIP) and the system of equilibrium problems (EP) are considered in Hilbert spaces. Inspired by the works of Byrne et al., López et al., Moudafi and Thakur, Sombut and Plubtieng, Sitthithakerngkiet et al., and Eslamian and Fakhri, a new self-adaptive step size algorithm is proposed to find a common element of the solution sets of the SVIP and the EP. Convergence theorems are established for the algorithm under suitable conditions, and applications to the common solution of the fixed point problem and the split convex optimization problem are considered. Finally, computational experiments are presented and a comparison with related algorithms is provided to illustrate the efficiency and applicability of our new algorithms.

Keywords: equilibrium problem; split variational inclusion problem; convex minimization problem; self-adaptive step size

MSC: 49J53; 65K10; 65K05; 49M37; 90C25

1. Introduction

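Let $\phi: C \times C \to \mathbb{R}$ be a bifunction, where C is a nonempty closed convex subset of a real Hilbert space H and $\mathbb{R}$ is the set of real numbers. The equilibrium problem (EP) for $\phi$ is as follows:

$$\text{find } x^* \in C \text{ such that } \phi(x^*, y) \ge 0 \text{ for all } y \in C. \tag{1}$$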

The problem EP (1) provides a suitable and common format for the investigation and solution of various applied problems and includes many other general problems in nonlinear analysis, such as complementarity, fixed point, and variational inequality problems. A wide range of problems in finance, physics, network analysis, economics, and optimization can be reduced to finding a solution of the problem (1) (see, for instance, Blum and Oettli [1], Flam and Antipin [2], Moudafi [3], and Bnouhachem et al. [4]). Moreover, many authors have studied methods and algorithms to approximate a solution of the problem (1), for instance, descent algorithms in Konnov and Ali [5], Konnov and Pinyagina [6], Charitha [7], and Lorenzo et al. [8]. For more details, refer to Ceng [9], Yao et al. [10,11,12], Qin et al. [13,14], Hung and Muu [15], Quoc et al. [16], Santos et al. [17], Thuy et al. [18], Rockafellar [19], Moudafi [20,21], Muu and Oettli [22], and Dong et al. [23].

On the other hand, many real-world inverse problems can be cast into the framework of the split inverse problem (SIP), which is formulated as follows:

$$\text{find a point } x^* \in X \text{ that solves IP1}$$

and such that the point

$$y^* = Ax^* \in Y \text{ solves IP2},$$

where IP1 and IP2 are two inverse problems, X and Y are two vector spaces and $A: X \to Y$ is a linear operator.

Realistic problems can be represented by making different choices of the spaces X and Y (including the case $X = Y$) and by choosing appropriate inverse problems for IP1 and IP2. In particular, the well-known split convex feasibility problem (SCFP) (Censor and Elfving [24]) is as follows:

$$\text{find } x^* \in C \text{ such that } Ax^* \in Q, \tag{2}$$

where C and Q are nonempty closed and convex subsets of real Hilbert spaces $H_1$ and $H_2$, respectively, and A is a bounded linear operator from $H_1$ to $H_2$. The SCFP (2) has received considerable attention due to its applications in signal processing and image reconstruction, with particular progress in intensity modulated radiation therapy, compressed sensing, approximation theory, and control theory (see, for example, [25,26,27,28,29,30,31,32] and the references therein).
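As a concrete illustration of the SCFP (2), the following minimal Python sketch runs the classical CQ iteration of Byrne, $x_{k+1} = P_C\big(x_k - \gamma A^{T}(Ax_k - P_Q(Ax_k))\big)$ with a fixed step $\gamma \in (0, 2/\|A\|^2)$, on a small instance in $\mathbb{R}^2$. The sets (a box C and a ball Q), the matrix A, the step size, and all function names are assumptions chosen only for illustration. Note that this fixed step requires knowledge of $\|A\|$, which is exactly what a self-adaptive rule is meant to avoid.

```python
import math

def matvec(A, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def project_box(x, lo=0.0, hi=1.0):
    """Metric projection onto the box C = [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]

def project_ball(y, center, radius):
    """Metric projection onto the closed ball Q with the given center and radius."""
    d = [yi - ci for yi, ci in zip(y, center)]
    norm = math.sqrt(sum(di * di for di in d))
    if norm <= radius:
        return list(y)
    return [ci + radius * di / norm for ci, di in zip(center, d)]

def cq_algorithm(A, x0, proj_C, proj_Q, gamma, iters=500):
    """CQ iteration: x <- P_C(x - gamma * A^T (A x - P_Q(A x)))."""
    At = [list(col) for col in zip(*A)]
    x = list(x0)
    for _ in range(iters):
        Ax = matvec(A, x)
        residual = [a - p for a, p in zip(Ax, proj_Q(Ax))]
        grad = matvec(At, residual)
        x = proj_C([xi - gamma * gi for xi, gi in zip(x, grad)])
    return x

# Illustrative data (assumed): ||A||^2 = 4, so gamma must lie in (0, 0.5).
A = [[2.0, 0.0], [0.0, 1.0]]
center, radius = [1.0, 1.0], 0.5
x_star = cq_algorithm(A, [0.0, 0.0], project_box,
                      lambda y: project_ball(y, center, radius), gamma=0.25)
Ax_star = matvec(A, x_star)
dist_to_center = math.sqrt(sum((a - c) ** 2 for a, c in zip(Ax_star, center)))
print(x_star, dist_to_center)
```

The returned point lies in the box C and its image under A lies (up to rounding) in the ball Q, so it is an approximate solution of this toy feasibility problem.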

Initiated by the SCFP, several split type problems have been investigated, for example, split variational inequality problems, split common fixed point problems, and split null point problems. In particular, Moudafi [33] introduced the split monotone variational inclusion problem (SMVIP) for two operators $f_1: H_1 \to H_1$ and $f_2: H_2 \to H_2$ and multi-valued maximal monotone mappings $B_1: H_1 \to 2^{H_1}$, $B_2: H_2 \to 2^{H_2}$ as follows:

$$\text{find } x^* \in H_1 \text{ such that } 0 \in f_1(x^*) + B_1(x^*) \tag{3}$$

and

$$y^* = Ax^* \in H_2 \text{ such that } 0 \in f_2(y^*) + B_2(y^*). \tag{4}$$

If $f_1 = f_2 = 0$ in (3) and (4), the SMVIP reduces to the following split variational inclusion problem (SVIP):

$$\text{find } x^* \in H_1 \text{ such that } 0 \in B_1(x^*) \tag{5}$$

and

$$y^* = Ax^* \in H_2 \text{ such that } 0 \in B_2(y^*). \tag{6}$$

The SVIP (5) and (6) constitutes a pair of variational inclusion problems which have to be solved so that the image, under a given bounded linear operator A, of the solution of (5) in $H_1$ is a solution of (6) in the other Hilbert space $H_2$. Indeed, one can see that $x^* \in B_1^{-1}(0)$ and $y^* = Ax^* \in B_2^{-1}(0)$. The SVIP (5) and (6) is at the core of modeling many inverse problems arising from phase retrieval and other real-world problems, for instance, in sensor networks, in computerized tomography, and in data compression (see, for example, [34,35,36]).

In the study of equilibrium problems and split inverse problems, not only have techniques and methods for solving the respective problems been proposed (see, for example, the CQ-algorithm in Byrne [37,38], the relaxed CQ-algorithm in Yang [39] and Gibali et al. [40], and the self-adaptive algorithms in López et al. [41], Moudafi and Thakur [42], and Gibali [43]), but common solutions of equilibrium problems, split inverse problems, and other problems have also been considered in many works. For example, Plubtieng and Sombut [44] considered the common solution of equilibrium problems and nonspreading mappings; Sombut and Plubtieng [45] studied a common solution of equilibrium problems and split feasibility problems in Hilbert spaces; Sitthithakerngkiet et al. [46] investigated a common solution of split monotone variational inclusion problems and fixed point problems of nonlinear operators; Eslamian and Fakhri [47] considered split equality monotone variational inclusion problems and fixed point problems of set-valued operators; and Censor and Segal [48] and Plubtieng and Sriprad [49] explored split common fixed point problems for directed operators. In particular, applications to mathematical models for a common solution of convex optimization and compressed sensing problems, whose constraints can be presented as equilibrium problems and split variational inclusion problems, stimulated our research on this kind of problem.

Motivated by the above works, we consider the following split variational inclusion problem and equilibrium problem:

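Let $H_1$ and $H_2$ be real Hilbert spaces and $A: H_1 \to H_2$ be a bounded linear operator. Let $B_1: H_1 \to 2^{H_1}$ and $B_2: H_2 \to 2^{H_2}$ be two set-valued mappings with nonempty values and $\phi: C \times C \to \mathbb{R}$ be a bifunction, where C is a nonempty closed convex subset of $H_1$.

The split monotone variational inclusion and equilibrium problem (SMVIEP) is as follows:

$$\text{find } x^* \in EP(\phi) \text{ with } 0 \in B_1(x^*) \tag{7}$$

such that

$$y^* = Ax^* \in H_2 \text{ solves } 0 \in B_2(y^*), \tag{8}$$

where $EP(\phi)$ denotes the solution set of the problem EP.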

Combining the techniques of Byrne et al., López et al., Moudafi and Thakur, Sitthithakerngkiet et al., Sombut and Plubtieng, and Eslamian and Fakhri, the purpose of this paper is to introduce a new iterative method, called a new self-adaptive step size algorithm, for solving the SMVIEP (7) and (8) in Hilbert spaces.

The outline of the paper is as follows. In Section 2, we collect definitions and results which are needed for our further analysis. In Section 3, our new self-adaptive step size algorithms are introduced and analyzed, and weak and strong convergence theorems for the proposed algorithms are obtained under suitable conditions. Moreover, as applications, the existence of a fixed point of a pseudo-contractive mapping and a solution of the split convex optimization problem is considered in Section 4. Finally, in Section 5, numerical examples and a comparison with some related algorithms are presented to illustrate the performance of our new algorithms.

2. Preliminaries

Now, we recall some concepts and results which are needed in the sequel.

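Let H be a Hilbert space with the inner product $\langle \cdot, \cdot \rangle$ and the induced norm $\|\cdot\|$, and let I be the identity operator on H. Let $Fix(T)$ denote the fixed point set of an operator T if T has a fixed point. The symbols $\to$ and $\rightharpoonup$ represent strong and weak convergence, respectively. For any sequence $\{x_n\} \subset H$, $\omega_w(x_n)$ denotes the weak ω-limit set of $\{x_n\}$, that is,

$$\omega_w(x_n) = \{x \in H : x_{n_j} \rightharpoonup x \text{ for some subsequence } \{x_{n_j}\} \text{ of } \{x_n\}\}.$$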

The following properties of the norm in a Hilbert space H are well known:

for all and . Moreover, the following inequality holds: for all ,

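Let C be a closed convex subset of H. For all $x \in H$, there exists a unique nearest point in C, denoted by $P_C x$, such that

$$\|x - P_C x\| \le \|x - y\| \quad \text{for all } y \in C.$$

The operator $P_C$ is called the metric projection of H onto C. Some properties of the operator $P_C$ are as follows: for all $x, y \in H$,

$$\langle x - y, P_C x - P_C y \rangle \ge \|P_C x - P_C y\|^2$$

and, for all $x \in H$ and $y \in C$,

$$\langle x - P_C x, y - P_C x \rangle \le 0.$$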
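The variational characterization of the metric projection, $\langle x - P_C x, y - P_C x \rangle \le 0$ for all $y \in C$, can be checked numerically. The sketch below does this for the closed unit ball in $\mathbb{R}^2$; the choice of set, the test point, the random sampling, and the helper names are assumptions made only for illustration.

```python
import math
import random

def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball of the given radius centered at 0."""
    norm = math.sqrt(sum(xi * xi for xi in x))
    if norm <= radius:
        return list(x)
    return [radius * xi / norm for xi in x]

def inner(u, v):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(u, v))

rng = random.Random(0)
x = [3.0, -4.0]
px = project_ball(x)  # nearest point of the unit ball to x

# Sample points y in C and evaluate <x - P_C x, y - P_C x>, which should be <= 0.
samples = [project_ball([rng.uniform(-2, 2), rng.uniform(-2, 2)]) for _ in range(1000)]
x_minus_px = [a - b for a, b in zip(x, px)]
worst = max(inner(x_minus_px, [a - b for a, b in zip(y, px)]) for y in samples)
print(px, worst)
```

Up to rounding error, the largest sampled value of the inner product is nonpositive, as the characterization predicts.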

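Definition 1.

Let H be a real Hilbert space and D be a subset of H. An operator $h: D \to H$ is said to be:

- (1) firmly nonexpansive on D if $\|h(x) - h(y)\|^2 \le \langle x - y, h(x) - h(y) \rangle$ for all $x, y \in D$;

- (2) Lipschitz continuous with constant $L > 0$ on D if $\|h(x) - h(y)\| \le L \|x - y\|$ for all $x, y \in D$;

- (3) nonexpansive on D if $\|h(x) - h(y)\| \le \|x - y\|$ for all $x, y \in D$;

- (4) hemicontinuous if it is continuous along each line segment in D;

- (5) averaged if there exist a nonexpansive operator $T: D \to H$ and a number $c \in (0, 1)$ such that $h = (1 - c) I + c T$.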

Remark 1.

The following can be easily obtained:

- (1) An operator h is firmly nonexpansive if and only if $I - h$ is firmly nonexpansive (see [50], Lemma 2.3); in particular, a firmly nonexpansive operator h is nonexpansive.

- (2) If $h_1$ and $h_2$ are averaged, then their composition $h_1 h_2$ is averaged (see [50], Lemma 2.2).

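Definition 2.

Let H be a real Hilbert space and $\lambda > 0$. The operator $B: H \to 2^H$ is said to be:

- (1) monotone if, for all $x, y \in H$ and $u \in Bx$, $v \in By$, $\langle x - y, u - v \rangle \ge 0$;

- (2) maximal monotone if the graph of B, $G(B) = \{(x, u) \in H \times H : u \in Bx\}$, is not properly contained in the graph of any other monotone mapping.

- (3) The resolvent of B with parameter $\lambda$ is denoted by $J_\lambda^B = (I + \lambda B)^{-1}$, where I is the identity operator.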
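To make the resolvent $(I + \lambda B)^{-1}$ concrete, the following sketch evaluates it for the single-valued monotone operator $B(x) = a x$ with $a \ge 0$ on the real line, where it has the closed form $x / (1 + \lambda a)$. The operator, the parameter values, and the function name are illustrative assumptions. The code checks the defining relation $J(x) + \lambda B(J(x)) = x$ and the firm nonexpansiveness inequality $|J(x) - J(y)|^2 \le (x - y)(J(x) - J(y))$.

```python
def resolvent_linear(x, lam, a):
    """Resolvent (I + lam*B)^{-1}(x) for B(x) = a*x with a >= 0 on the real line."""
    return x / (1.0 + lam * a)

lam, a = 0.5, 4.0
x, y = 3.0, -1.5
jx = resolvent_linear(x, lam, a)
jy = resolvent_linear(y, lam, a)

# Defining relation of the resolvent: jx + lam * B(jx) should reproduce x.
residual = jx + lam * a * jx - x

# Firm nonexpansiveness: |jx - jy|^2 <= (x - y) * (jx - jy).
lhs = (jx - jy) ** 2
rhs = (x - y) * (jx - jy)
print(jx, jy, residual, lhs, rhs)
```

The only fixed point of this resolvent is 0, which is also the unique zero of B, in line with the general fact that the fixed points of a resolvent are the zeros of the operator.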

Remark 2.

For any , the following hold:

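Assume that an equilibrium bifunction $\phi: C \times C \to \mathbb{R}$ satisfies the following conditions:

- (A1) $\phi(x, x) = 0$ for all $x \in C$;

- (A2) $\phi(x, y) + \phi(y, x) \le 0$ for all $x, y \in C$;

- (A3) For all $x, y, z \in C$, $\limsup_{t \downarrow 0} \phi(tz + (1 - t)x, y) \le \phi(x, y)$;

- (A4) For all $x \in C$, $\phi(x, \cdot)$ is convex and lower semi-continuous.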

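Lemma 1.

(see [2]) Let C be a nonempty closed convex subset of a Hilbert space H and suppose that $\phi: C \times C \to \mathbb{R}$ satisfies the conditions (A1)–(A4). For all $r > 0$ and $x \in H$, define a mapping $T_r: H \to C$ by

$$T_r(x) = \left\{ z \in C : \phi(z, y) + \frac{1}{r} \langle y - z, z - x \rangle \ge 0 \text{ for all } y \in C \right\}.$$

Then the following hold:

- (1) $T_r$ is nonempty and single-valued.

- (2) $T_r$ is firmly nonexpansive, that is, for all $x, y \in H$, $\|T_r x - T_r y\|^2 \le \langle T_r x - T_r y, x - y \rangle$ and, further, $T_r$ is nonexpansive.

- (3) $Fix(T_r) = EP(\phi)$ is closed and convex.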
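The resolvent of a bifunction can be computed in closed form for simple examples. The sketch below assumes the standard definition $T_r(x) = \{z \in C : \phi(z, y) + \frac{1}{r}\langle y - z, z - x \rangle \ge 0 \ \forall y \in C\}$ and the hypothetical bifunction $\phi(z, y) = y^2 - z^2$ on $C = \mathbb{R}$ (chosen for illustration, not taken from the examples of this paper). For this choice $T_r(x) = x / (1 + 2r)$, and the defining inequality reduces to $(y - z)^2 \ge 0$, which the code verifies on a grid.

```python
def phi(z, y):
    """Hypothetical bifunction phi(z, y) = y^2 - z^2 on the real line."""
    return y * y - z * z

def resolvent_ep(x, r):
    """Closed-form resolvent T_r(x) = x / (1 + 2r) for the bifunction phi above."""
    return x / (1.0 + 2.0 * r)

x, r = 3.0, 0.5
z = resolvent_ep(x, r)

# Check phi(z, y) + (1/r) * (y - z) * (z - x) >= 0 on a grid of test points y.
grid = [-5.0 + 0.01 * k for k in range(1001)]
min_val = min(phi(z, y) + (y - z) * (z - x) / r for y in grid)
print(z, min_val)
```

Here the only fixed point of $T_r$ is 0, which is also the unique solution of the equilibrium problem for this bifunction.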

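Lemma 2.

(see [26,52]) Assume that $\{a_n\}$ is a sequence of nonnegative real numbers such that, for each $n \ge 0$,

$$a_{n+1} \le (1 - \gamma_n) a_n + \gamma_n \delta_n,$$

where $\{\gamma_n\}$ is a sequence in $(0, 1)$ and $\{\delta_n\}$ is a sequence such that

- (a) $\lim_{n \to \infty} \gamma_n = 0$ and $\sum_{n=0}^{\infty} \gamma_n = \infty$;

- (b) $\limsup_{n \to \infty} \delta_n \le 0$ or $\sum_{n=0}^{\infty} |\gamma_n \delta_n| < \infty$.

Then the limit of the sequence $\{a_n\}$ exists and $\lim_{n \to \infty} a_n = 0$.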

Lemma 3.

(see [53]) Assume that and are the sequences of nonnegative numbers such that, for each ,

If and has a subsequence converging to zero, then .

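Lemma 4.

(see [52]) Let $\{\Gamma_n\}$ be a sequence of real numbers that does not decrease at infinity, in the sense that there exists a subsequence $\{\Gamma_{n_j}\}$ of $\{\Gamma_n\}$ such that $\Gamma_{n_j} < \Gamma_{n_j + 1}$ for each $j \ge 0$. Also, consider the sequence of integers $\{\tau(n)\}_{n \ge n_0}$ defined by

$$\tau(n) = \max\{k \le n : \Gamma_k < \Gamma_{k+1}\}.$$

Then $\{\tau(n)\}_{n \ge n_0}$ is a nondecreasing sequence satisfying $\lim_{n \to \infty} \tau(n) = \infty$ and, for each $n \ge n_0$,

$$\max\{\Gamma_{\tau(n)}, \Gamma_n\} \le \Gamma_{\tau(n)+1}.$$

Lemma 5.

(see [54]) Let C be a nonempty closed convex subset of a real Hilbert space H. If $T: C \to C$ is nonexpansive and $Fix(T) \ne \emptyset$, then the mapping $I - T$ is demiclosed at 0, that is, if $\{x_n\}$ is a sequence in C that converges weakly to $x$ and $(I - T)x_n \to 0$, then $x \in Fix(T)$.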

3. The Main Results

In this section, we introduce our algorithms and state our main results.

Throughout this paper, we always assume that $H_1$ and $H_2$ are Hilbert spaces, C is a nonempty closed convex subset of $H_1$, the bifunction $\phi: C \times C \to \mathbb{R}$ satisfies the conditions (A1)–(A4), $A: H_1 \to H_2$ is a bounded linear operator, and $A^*$ denotes the adjoint of A (in finite dimensional spaces, $A^* = A^T$). Let $B_1: H_1 \to 2^{H_1}$ and $B_2: H_2 \to 2^{H_2}$ be two maximal monotone operators.

Now, we define the functions by

and

where for any .

From Aubin [55], one can see that f and g are weakly lower semi-continuous, convex, and differentiable. Moreover, it is known that the functions F and G are Lipschitz continuous according to Byrne et al. [36]. Denote the solution set of the SVIP (5) and (6) by

3.1. Iterative Algorithms

Now, we introduce the following three algorithms for our main results:

Algorithm 1. Choose a positive sequence satisfying (for some small enough). Select an arbitrary starting point , set and let , .

Iterative Step: For any iterate for each , compute

Stop Criterion: If , then stop. Otherwise, set and return to Iterative Step.

Algorithm 2. Choose a positive sequence satisfying (for some small enough). Select an arbitrary starting point , set and let , .

Iterative Step: For any iterate for each , compute

Stop Criterion: If , then stop. Otherwise, set and return to Iterative Step.

Algorithm 3. Choose a positive sequence satisfying (for some small enough). Select an arbitrary starting point , set and let , .

Iterative Step: For any iterate for each , compute

Stop Criterion: If , then stop. Otherwise, set and return to Iterative Step.
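To convey the idea of a self-adaptive step size, the following Python sketch runs a simplified iteration on a one-dimensional toy problem. It is not a transcription of Algorithms 1–3; it only assumes, in the spirit of López et al. and Moudafi and Thakur, the quantities $f(x) = \frac{1}{2}\|(I - J_\lambda^{B_2})Ax\|^2$, $g(x) = \frac{1}{2}\|(I - J_\lambda^{B_1})x\|^2$, $F(x) = A^*(I - J_\lambda^{B_2})Ax$, $G(x) = (I - J_\lambda^{B_1})x$, and the step $\tau_n = \rho\,(f(x_n) + g(x_n)) / (\|F(x_n)\|^2 + \|G(x_n)\|^2)$, followed by the equilibrium resolvent $T_r$. The operators $B_1(x) = 2x$, $B_2(y) = 3y$, $Ax = 2x$, the bifunction $\phi(z, y) = y^2 - z^2$, and all parameter values are hypothetical choices whose common solution is 0.

```python
def J1(x, lam):
    """Resolvent of the assumed operator B1(x) = 2x."""
    return x / (1.0 + 2.0 * lam)

def J2(y, lam):
    """Resolvent of the assumed operator B2(y) = 3y."""
    return y / (1.0 + 3.0 * lam)

def T_r(x, r):
    """Equilibrium resolvent for the assumed bifunction phi(z, y) = y^2 - z^2."""
    return x / (1.0 + 2.0 * r)

def self_adaptive_sketch(x0, a=2.0, lam=1.0, r=1.0, rho=1.0, tol=1e-12, max_iter=200):
    """Simplified self-adaptive iteration; the step size never uses the norm of A."""
    x = x0
    for n in range(max_iter):
        res2 = a * x - J2(a * x, lam)      # (I - J2) applied to A x
        res1 = x - J1(x, lam)              # (I - J1) applied to x
        f, g = 0.5 * res2 ** 2, 0.5 * res1 ** 2
        F, G = a * res2, res1              # here A* is multiplication by a
        denom = F * F + G * G
        if denom <= tol:                   # stop criterion: both residuals vanish
            return x, n
        tau = rho * (f + g) / denom        # self-adaptive step size
        y = x - tau * (F + G)
        x = T_r(y, r)
    return x, max_iter

x_final, n_iter = self_adaptive_sketch(10.0)
print(x_final, n_iter)
```

On this toy problem the iterates contract geometrically toward the common solution 0. The point of the sketch is that $\tau_n$ is computed from the current residuals alone, so no estimate of $\|A\|$ is needed.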

3.2. Weak Convergence Analysis for Algorithm 1

First, we give one lemma for our main result.

Lemma 6.

Suppose that . If in Algorithm 1, then .

Proof.

Denote . Then we can see .

If , then it follows from the construction of that and hence , that is, from Lemma 1.

On the other hand, the operators and are averaged by Remark 1. Since , it follows from Lemma 2.1 of [51], with the averaged operators and , that

If , then we can see from the recursion (31) and hence and , that is, and

It can be written as , where . Without loss of generality, if we take , then and and so

which means that and so , that is, . Hence and, furthermore, . This completes the proof. □

Theorem 1.

Let , be two real Hilbert spaces and be a bounded linear operator. Let ϕ be a bifunction satisfying the conditions –. Assume that and are maximal monotone mappings with . Then the sequence generated by Algorithm 1 converges weakly to an element of , where the parameters , are in and satisfy the following conditions:

Proof.

Denote . Since is firmly nonexpansive according to Remark 2, is averaged from (1), (2) of Remark 1 and is averaged and nonexpansive.

First, we show that the sequences and are bounded. Since , we take and then , and . At the same time, it follows that and is nonexpansive according to Lemma 1 and so

and

which means that the sequence is bounded and so are , and , where and .

Next, we show Since , we have and, for all ,

and

Furthermore, we have . According to the definition of the iterative sequence , we have

and, since , we have

On the other hand, we have

Since is nonexpansive, we have

For any small enough, the sequence is Fejér monotone with respect to , which ensures the existence of the limit of and so we can denote . Thus it follows from (48) that

since F and G are Lipschitz continuous by Byrne et al. [36] and so and are bounded.

In addition, it also follows from (48) that

which means that

and hence it follows that and if and only if as .

Thus it follows from the above inequalities that

where and . Combining the conditions on and with the formula (51), the limit of exists by Lemma 3. Since the limit of exists, there exists a subsequence of the sequence that converges to a point and . Thus it follows that from Lemma 3. Further, because of and .

Next, we show , where is a weak cluster point of the sequence . Note that is bounded and so there exists a point such that and so and . Also, since and , we can see that from Lemma 5, which implies that according to Lemma 1.

On the other hand, by the lower semi-continuity of f and g, it follows from the formula (48) that

and

that is,

and so we can have and from Remark 2. Therefore, . This completes the proof. □

Remark 3.

If the operators and are set-valued, odd and maximal monotone mappings, then the operator is asymptotically regular (see Theorem 4.1 in [56] and Theorem 5 in [57]) and odd. Consequently, the strong convergence of Algorithm 1 is obtained (for the similar proof, see Theorem 1.1 in [58], Theorem 4.3 in [36]).

Remark 4.

If we take , where is a constant which depends on the norm of the operator A, then the conclusion of Theorem 1 also holds.

3.3. Strong Convergence Analysis for Algorithms 2 and 3

Theorem 2.

Let , be two real Hilbert spaces and be a bounded linear operator. Let ϕ be a bifunction satisfying the conditions –. Assume that and are maximal monotone mappings with . If the sequence in satisfies the following conditions:

then the sequence generated by Algorithm 2 converges strongly to a point .

Proof.

First, we show that the sequences and are bounded. Denote

and take , as in the proof of Theorem 1, we can see and

which implies that the sequence is bounded and so is the sequence .

Next, we show , where . Indeed, there exists a subsequence of such that

Since converges weakly to because is bounded, according to (11), we can see that

For the strong convergence theorem of Algorithm 3 below, we recall the minimum-norm element of , which is a solution of the following problem:

Theorem 3.

Let and be two real Hilbert spaces and be a bounded linear operator. Let ϕ be a bifunction satisfying the conditions –. Assume that and are maximal monotone mappings with . If the sequences , , in with satisfy the following conditions:

then the sequences and generated by Algorithm 3 converge strongly to a point , the minimum-norm element of .

Proof.

We prove the result in several steps.

Step 1. We show that the sequences and are bounded. Since is not empty, take a point . Since the operator is nonexpansive and , we have

which implies that is bounded and so is .

Step 2. We show that and , where , the minimum-norm element of . To this end, we denote

For a point , similarly as in the proof of Theorem 1, we have

which means, for any small enough, that

Setting , we have and so ,

and

Moreover, it follows from (64) that

Now, we consider two possible cases for the convergence of the sequence .

Case I. Assume that is not increasing, that is, there exists such that, for each , . Therefore, the limit of exists and

Since , it follows from (68) that

Note that

and F and G are Lipschitz continuous and, for any small enough, we obtain

and so , and as . Therefore, it follows from (65) that . From (11) and (33), (66), we have

Since is bounded, as in the proof of Theorem 1, the sequence converges weakly to a point and the following inequality holds from the property (12):

Since , applying Lemma 2 to the formula (70), we can deduce that , that is, the sequence converges strongly to z. Furthermore, it follows from the property of the metric projection that, for all ,

which implies that z is the minimum-norm solution of the system of the problem EP (1) and the problem SVIPs (5) and (6).

Case II. If the sequence is increasing, then it is easy to see that from (68) because of and so, from (65), we can get .

Without loss of generality, we assume that there exists a subsequence of the sequence such that for each . In this case, if we define an indicator

then as and and so, from (68), it follows that

Since as , similarly as in the proof in Case I, we get

and

and so

From (77), we can see that

Thus . Therefore, according to Lemma 4, we have

which implies that the sequence converges strongly to z, the minimum-norm element of . This completes the proof. □

Corollary 1.

Let , be two real Hilbert spaces and be a bounded linear operator. Let ϕ be a bifunction satisfying the conditions –. Assume that and are maximal monotone mappings with . If the sequence in satisfies the following conditions:

and, for any , the sequence is generated by the following iterations:

where is defined as the above algorithms, then the sequence converges strongly to a point .

Remark 5.

If the bifunction , then the problem EP (1) is equivalent to the following problem:

where is a nonlinear operator. It is a well-known classical variational inequality problem and, clearly, we can obtain a common solution of the variational inequality problem and the split variational inclusion problem via the above algorithms.

Remark 6.

If the bifunction , , , C and Q are N-dimensional and M-dimensional Euclidean spaces, respectively, then the problem proposed in this paper reduces to the split common fixed point problem of Censor and Segal [48], where U and T are directed operators.

4. Applications to Fixed Points and Split Convex Optimization Problems

In this section, we consider the fixed point problem and the split convex optimization problem.

A mapping $T: C \to C$ is called pseudo-contractive if the following inequality holds: for any $x, y \in C$,

$$\langle Tx - Ty, x - y \rangle \le \|x - y\|^2.$$

It is well known that the class of pseudo-contractive mappings includes the nonexpansive mappings and that $I - T$ is a monotone mapping whenever T is pseudo-contractive. The following lemma is very useful for finding fixed points of pseudo-contractive mappings.

Lemma 7.

(see [2]) Let C be a nonempty closed convex subset of a real Hilbert space H. Let be a continuous pseudo-contractive mapping and define a mapping as follows: for any and ,

Then the following hold:

- (1)

- is a single-valued mapping.

- (2)

- is a nonexpansive mapping.

- (3)

- is closed and convex.

If h and l are two proper, convex and lower semi-continuous functions, define (: the subdifferential mapping of h) as follows:

From Rockafellar [59], is a maximal monotone mapping. The split convex optimization problem is illustrated as follows:

Denote the solution set of the split convex optimization problem by
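In this setting the resolvent of a subdifferential $\partial h$ is the proximal mapping of h, which is sometimes available in closed form. As a hedged example, for $h(x) = |x|$ on the real line the resolvent $(I + \lambda \partial h)^{-1}$ is the soft-thresholding function. The sketch below checks the defining inclusion $(x - p)/\lambda \in \partial |p|$ for $p = (I + \lambda \partial h)^{-1}(x)$; the function names and test values are illustrative assumptions.

```python
def soft_threshold(x, lam):
    """Resolvent (I + lam * d|.|)^{-1}(x), i.e., the proximal mapping of lam*|.|."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0

def in_subdifferential_abs(p, v, tol=1e-12):
    """Check v in d|.|(p): v = sign(p) if p != 0, and v in [-1, 1] if p == 0."""
    if p > 0:
        return abs(v - 1.0) <= tol
    if p < 0:
        return abs(v + 1.0) <= tol
    return -1.0 - tol <= v <= 1.0 + tol

lam = 0.5
points = [2.5, -1.2, 0.3, 0.0]
checks = []
for x in points:
    p = soft_threshold(x, lam)
    # x in p + lam * d|.|(p) is equivalent to (x - p) / lam in d|.|(p).
    checks.append(in_subdifferential_abs(p, (x - p) / lam))
print(checks)
```

The same function applied componentwise gives the resolvent of the subdifferential of the $\ell_1$ norm, which is the building block commonly used for sparse recovery problems.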

Now, we show the existence of a common element of the fixed point set of a pseudo-contractive mapping and the solution set of the split convex minimization problem as follows:

Theorem 4.

Let , be two real Hilbert spaces and be a bounded linear operator. Let T be a pseudo-contractive mapping. Assume that and are two proper, convex and lower semi-continuous functions such that and are maximal monotone mappings with . If the sequences , in satisfy the following conditions:

and, for any and , the sequence is generated by the following iterations:

where is defined as in Algorithm 1, then the sequence converges weakly to an element of .

Proof.

Take , then is equivalent to . Thus, according to Theorem 1, the conclusion follows. □

Theorem 5.

Let , be two real Hilbert spaces and be a bounded linear operator. Let T be a pseudo-contractive mapping. Assume that and are two proper, convex and lower semi-continuous functions such that and are maximal monotone mappings with . If the sequence in satisfies the following conditions:

and, for any , the sequence is generated by the following iterations:

where is defined as in Algorithm 2, then the sequence converges strongly to a point .

Theorem 6.

Let , be two real Hilbert spaces and be a bounded linear operator. Let T be a pseudo-contractive mapping. Assume that and are two proper, convex and lower semi-continuous functions such that and are maximal monotone mappings with . If the sequence in satisfies the following conditions:

and, for any and , the sequence is generated by the following iterations:

where is defined as in Algorithm 3, then the sequence converges strongly to a point .

5. Numerical Examples

In this section, we present some examples to illustrate the applicability, efficiency and stability of our self-adaptive step size iterative algorithms. All codes were written in Matlab R2016b (MathWorks, Natick, MA, USA) and run on an LG dual-core personal computer (LG Display, Seoul, Korea).

5.1. Numerical Behavior of Algorithm 1

Example 1.

Let and define the operators , and on real line by for all , the bifunction ϕ by and set the parameters on Algorithm 1 by , , for each . It is clear that

From the definition of ϕ, we have

and then

For the quadratic function of y, if it has at most one solution in , then the discriminant of this function , that is, . According to Algorithm 1, if , then we compute the new iteration and the iterative progress is written as

In this way, the step size is self-adaptive and not given beforehand.

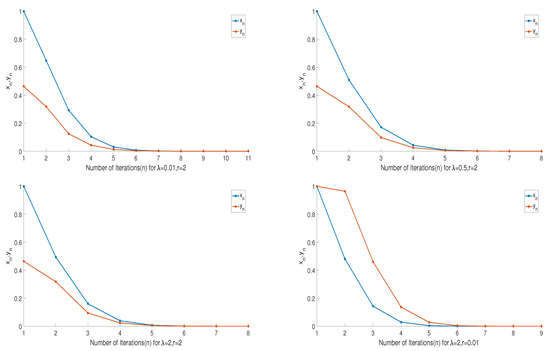

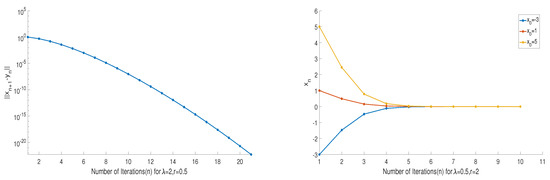

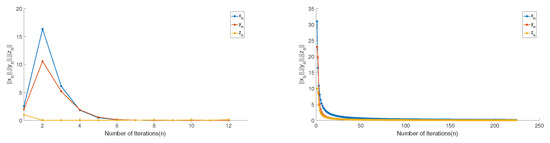

First, we test three cases of and for initial point , and then test three initial points randomly generated by Matlab for λ and r. The values of and are reported in Figure 1, Figure 2, and Table 1. In these figures, the x-axes represent the number of iterations while the y-axes represent the values of and ; the stopping criterion of Figure 1 is . These figures show that, for Algorithm 1, converges to the same solution, i.e., is a solution of this example.

Figure 1.

Values of and for different and r.

Figure 2.

Values of and .

Table 1.

The convergence of Algorithm 1.

- (1)

- (2)

- Algorithm 1 converges quickly, is stable, and is simple to implement. The number of iterations remains almost the same irrespective of the initial point and parameters .

- (3)

- The error of can be made approximately equal to , or even smaller, within 20 iterations.

5.2. Numerical Behavior of Algorithms 2 and 3

Example 2.

Let and define the operators , and as follows:

the bifunction ϕ by . In this example, we set the parameters in Algorithm 2 and Algorithm 3 by , , , and for each .

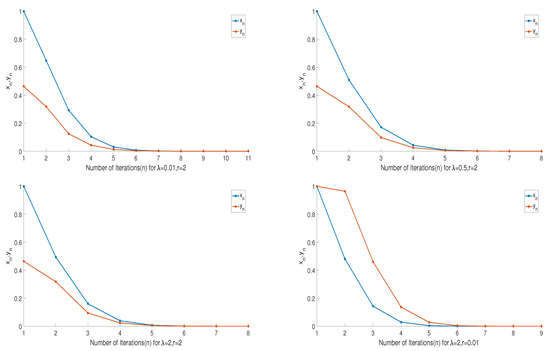

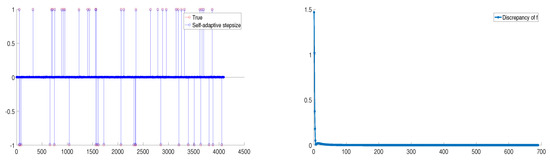

First, we take an initial point , then the test results are reported in Figure 3.

Figure 3.

Values of for Algorithms 2 and 3.

Next, we present the test results for initial point in Table 2.

Table 2.

The convergence of Algorithm 3.

5.3. Comparisons with Other Algorithms

In this part, we present several experiments comparing our Algorithms 1 and 3 with other algorithms. The two methods used for comparison are the algorithm in Byrne et al. [36] and the algorithm in Sitthithakerngkiet et al. [46]. The step size for the algorithm in Byrne et al. [36] and the algorithm in Sitthithakerngkiet et al. [46] depends on the norm of the operator A. In addition, let the mapping be an infinite family of nonexpansive mappings and a nonnegative real sequence . Then the W-mapping is generated by and and , the bounded linear operator in Sitthithakerngkiet et al. [46]. The stopping criterion for our algorithm is , for Byrne et al. [36] it is and for Sitthithakerngkiet et al. [46] it is . For the three algorithms, the operators are defined as in Example 2, with the parameters and , in Sitthithakerngkiet et al. [46], , and in our Algorithm 1, , and in our Algorithm 3. We take , for all these algorithms and compare the numbers of iterations and the computation times. The experimental results are reported in Table 3.

Table 3.

Comparison Algorithms 1 and 3 with other algorithms.

From all the above figures and tables, one can see the behavior of the sequences and , from which we conclude that and converge to a solution and that our algorithms are fast, efficient, stable, and simple to implement (they take an average of 10 iterations to converge). In particular, our Algorithms 1 and 3 seem to have a competitive advantage. However, as mentioned in the previous sections, the main advantage of our algorithms is that the step size is self-adaptive and requires no prior knowledge of the operator norms.

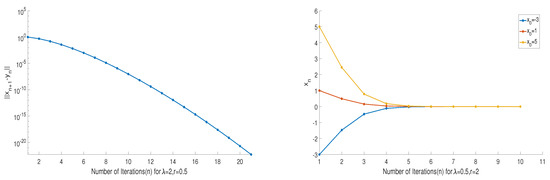

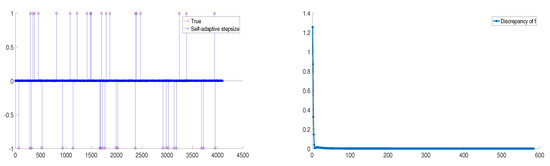

5.4. Compressed Sensing

In the last example, following the review comments, we choose a problem from the field of compressed sensing, namely the recovery of a sparse and noisy signal from a limited number of samples. Let be a K-sparse signal, . The sampling matrix is generated from the standard Gaussian distribution and the vector , where is additive noise. When , there is no noise in the observed data. Our task is to recover the signal from the data b. For further details, one can consult Nguyen and Shin [34].

To solve the problem, we recall the LASSO (Least Absolute Shrinkage and Selection Operator) problem of Tibshirani [60] as follows:

where is a given constant. So, in the relation with the problem SVIPs (5) and (6), we consider , and define

and define

In this example, we take , , then the problem EP (1) is equivalent to the following problem:

For the experiment setting, we choose the following parameters in our Algorithm 3: , , , , is generated randomly with , , is K-spikes with amplitude distributed in whole domain randomly. In addition, for simplicity, we take and the stopping criterion with . All the numerical results are presented in Figure 4 and Figure 5.
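A test instance of this kind can be generated as in the following Python sketch, which builds a K-sparse signal, a sampling matrix with standard Gaussian entries, and the (optionally noisy) data vector b. The dimensions, the spike amplitudes of ±1, the seed, and the function name are assumptions made for illustration and are not the settings used for Figures 4 and 5.

```python
import random

def make_sparse_problem(n, m, k, noise_std=0.0, seed=0):
    """Return (A, x_true, b) with a k-sparse x_true and b = A x_true + noise."""
    rng = random.Random(seed)
    x_true = [0.0] * n
    # Place k spikes of amplitude +1 or -1 (assumed) at random positions.
    for idx in rng.sample(range(n), k):
        x_true[idx] = rng.choice([-1.0, 1.0])
    # Sampling matrix with independent standard Gaussian entries.
    A = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
    b = []
    for row in A:
        clean = sum(a * x for a, x in zip(row, x_true))
        noise = rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0
        b.append(clean + noise)
    return A, x_true, b

A, x_true, b = make_sparse_problem(n=64, m=32, k=5)
num_spikes = sum(1 for v in x_true if v != 0.0)
print(num_spikes, len(A), len(A[0]), len(b))
```

With `noise_std=0.0` the data are exact measurements of the sparse signal, which corresponds to the noise-free case described above.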

Figure 4.

Numerical result for .

Figure 5.

Numerical result for .

6. Conclusions

Many problems in finance, physics, network analysis, signal processing, image reconstruction, economics, and optimization can be reduced to finding a common solution of split inverse and equilibrium problems, which implies numerous possible applications to mathematical models whose constraints can be presented as the problem EP (1) and the split inverse problem.

This motivated the study of a common solution set of split variational inclusion problems and equilibrium problems.

The main contribution of this paper is a new self-adaptive step size algorithm, requiring no prior knowledge of the operator norms, for solving the split variational inclusion and equilibrium problems in Hilbert spaces. Convergence theorems are obtained under suitable assumptions, and numerical examples and comparisons are presented to illustrate the efficiency and reliability of the algorithms. In this sense, the algorithms and theorems in this paper complement, extend, and unify some related results on split variational inclusion and equilibrium problems.

Author Contributions

The authors contributed equally to this work. The authors read and approved the final manuscript.

Funding

The first author was funded by the National Science Foundation of China (11471059) and Science and Technology Research Project of Chongqing Municipal Education Commission (KJ 1706154) and the Research Project of Chongqing Technology and Business University (KFJJ2017069).

Acknowledgments

The authors express their deep gratitude to the referees and the editor for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Blum, E.; Oettli, W. From optimization and variational inequalities to equilibrium problems. Math. Stud. 1994, 63, 123–145. [Google Scholar]

- Flam, D.S.; Antipin, A.S. Equilibrium programming using proximal-like algorithms. Math. Program. 1997, 78, 29–41. [Google Scholar] [CrossRef]

- Moudafi, A. Second-order differential proximal methods for equilibrium problems. J. Inequal. Pure Appl. Math. 2003, 4, 18. [Google Scholar]

- Bnouhachem, A.; Suliman, A.H.; Ansari, Q.H. An iterative method for common solution of equilibrium problems and hierarchical fixed point problems. Fixed Point Theory Appl. 2014, 2014, 194. [Google Scholar] [CrossRef]

- Konnov, I.V.; Ali, M.S.S. Descent methods for monotone equilibrium problems inBanach spaces. J. Comput. Appl. Math. 2006, 188, 165–179. [Google Scholar] [CrossRef]

- Konnov, I.V.; Pinyagina, O.V. D-gap functions and descent methods for a class of monotone equilibrium problems. Lobachevskii J. Math. 2003, 13, 57–65. [Google Scholar]

- Charitha, C. A Note on D-gap functions for equilibrium problems. Optimization 2013, 62, 211–226. [Google Scholar] [CrossRef]

- Lorenzo, D.D.; Passacantando, M.; Sciandrone, M. A convergent inexact solution method for equilibrium problems. Optim. Methods Softw. 2014, 29, 979–991. [Google Scholar] [CrossRef]

- Ceng, L.C.; Ansari, Q.H.; Yao, J.C. Some iterative methods for finding fixed point and for solving constrained convex minimization problems. Nonlinear Anal. 2011, 74, 5286–5302. [Google Scholar] [CrossRef]

- Yao, Y.; Cho, Y.J.; Liou, Y.C. Iterative algorithm for hierarchical fixed points problems and variational inequalities. Math. Comput. Model. 2010, 52, 1697–1705. [Google Scholar] [CrossRef]

- Yao, Y.; Cho, Y.J.; Liou, Y.C. Algorithms of common solutions for variational inclusions, mixed equilibrium problems and fixed point problems. Eur. J. Oper. Res. 2011, 212, 242–250. [Google Scholar] [CrossRef]

- Yao, Y.H.; Liou, Y.C.; Yao, J.C. New relaxed hybrid-extragradient method for fixed point problems, a general system of variational inequality problems and generalized mixed equilibrium problems. Optimization 2011, 60, 395–412. [Google Scholar] [CrossRef]

- Qin, X.; Chang, S.S.; Cho, Y.J. Iterative methods for generalized equilibrium problems and fixed point problems with applications. Nonlinear Anal. Real World Appl. 2010, 11, 2963–2972.

- Qin, X.; Cho, Y.J.; Kang, S.M. Viscosity approximation methods for generalized equilibrium problems and fixed point problems with applications. Nonlinear Anal. 2010, 72, 99–112.

- Hung, P.G.; Muu, L.D. For an inexact proximal point algorithm to equilibrium problem. Vietnam J. Math. 2012, 40, 255–274.

- Quoc, T.D.; Muu, L.D.; Nguyen, V.H. Extragradient algorithms extended to equilibrium problems. Optimization 2008, 57, 749–776.

- Santos, P.; Scheimberg, S. An inexact subgradient algorithm for equilibrium problems. Comput. Appl. Math. 2011, 30, 91–107.

- Thuy, L.Q.; Anh, P.K.; Muu, L.D.; Hai, T.N. Novel hybrid methods for pseudomonotone equilibrium problems and common fixed point problems. Numer. Funct. Anal. Optim. 2017, 38, 443–465.

- Rockafellar, R.T. Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 1976, 14, 877–898.

- Moudafi, A. From alternating minimization algorithms and systems of variational inequalities to equilibrium problems. Commun. Appl. Nonlinear Anal. 2009, 16, 31–35.

- Moudafi, A. Proximal point algorithm extended to equilibrium problem. J. Nat. Geom. 1999, 15, 91–100.

- Muu, L.D.; Oettli, W. Convergence of an adaptive penalty scheme for finding constrained equilibria. Nonlinear Anal. 1992, 18, 1159–1166.

- Dong, Q.L.; Tang, Y.C.; Cho, Y.J.; Rassias, T.M. “Optimal” choice of the step length of the projection and contraction methods for solving the split feasibility problem. J. Glob. Optim. 2018, 71, 341–360.

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

- Ansari, Q.H.; Rehan, A. Split feasibility and fixed point problems. In Nonlinear Analysis, Approximation Theory, Optimization and Applications; Springer: Berlin/Heidelberg, Germany, 2014; pp. 281–322.

- Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256.

- Qin, X.; Shang, M.; Su, Y. A general iterative method for equilibrium problems and fixed point problems in Hilbert spaces. Nonlinear Anal. 2008, 69, 3897–3909.

- Ceng, L.C.; Ansari, Q.H.; Yao, J.C. An extragradient method for solving split feasibility and fixed point problems. Comput. Math. Appl. 2012, 64, 633–642.

- Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365.

- Kazmi, K.R.; Rizvi, S.H. An iterative method for split variational inclusion problem and fixed point problem for a nonexpansive mapping. Optim. Lett. 2014, 8, 1113–1124.

- Censor, Y.; Gibali, A.; Reich, S. Algorithms for the split variational inequality problem. Numer. Algorithms 2012, 59, 301–323.

- Moudafi, A. The split common fixed point problem for demicontractive mappings. Inverse Probl. 2010, 26, 055007.

- Moudafi, A. Split monotone variational inclusions. J. Optim. Theory Appl. 2011, 150, 275–283.

- Nguyen, T.L.N.; Shin, Y. Deterministic sensing matrices in compressive sensing: A survey. Sci. World J. 2013, 2013, 1–6.

- Dang, Y.; Gao, Y. The strong convergence of a KM-CQ-like algorithm for a split feasibility problem. Inverse Probl. 2011, 27, 015007.

- Byrne, C.; Censor, Y.; Gibali, A.; Reich, S. Weak and strong convergence of algorithms for the split common null point problem. J. Nonlinear Convex Anal. 2012, 13, 759–775.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20, 103–120.

- Yang, Q. The relaxed CQ algorithm for solving the split feasibility problem. Inverse Probl. 2004, 20, 1261–1266.

- Gibali, A.; Mai, D.T.; Nguyen, T.V. A new relaxed CQ algorithm for solving split feasibility problems in Hilbert spaces and its applications. J. Ind. Manag. Optim. 2018, 2018, 1–25.

- López, G.; Martin-Marquez, V.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004.

- Moudafi, A.; Thakur, B.S. Solving proximal split feasibility problem without prior knowledge of matrix norms. Optim. Lett. 2013, 8.

- Gibali, A. A new split inverse problem and application to least intensity feasible solutions. Pure Appl. Funct. Anal. 2017, 2, 243–258.

- Plubtieng, S.; Sombut, K. Weak convergence theorems for a system of mixed equilibrium problems and nonspreading mappings in a Hilbert space. J. Inequal. Appl. 2010, 2010, 246237.

- Sombut, K.; Plubtieng, S. Weak convergence theorem for finding fixed points and solution of split feasibility and systems of equilibrium problems. Abstr. Appl. Anal. 2013, 2013, 430409.

- Sitthithakerngkiet, K.; Deepho, J.; Martinez-Moreno, J.; Kumam, P. Convergence analysis of a general iterative algorithm for finding a common solution of split variational inclusion and optimization problems. Numer. Algorithms 2018, 79, 801–824.

- Eslamian, M.; Fakhri, A. Split equality monotone variational inclusions and fixed point problem of set-valued operator. Acta Univ. Sapientiae Math. 2017, 9, 94–121.

- Censor, Y.; Segal, A. The split common fixed point problem for directed operators. J. Convex Anal. 2009, 16, 587–600.

- Plubtieng, S.; Sriprad, W. A viscosity approximation method for finding common solutions of variational inclusions, equilibrium problems, and fixed point problems in Hilbert spaces. Fixed Point Theory Appl. 2009, 2009, 567147.

- Mainge, P.E. Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 2008, 16, 899–912.

- Bruck, R.E.; Reich, S. Nonexpansive projections and resolvents of accretive operators in Banach spaces. Houst. J. Math. 1977, 3, 459–470.

- Mainge, P.E. Approximation methods for common fixed points of nonexpansive mappings in Hilbert spaces. J. Math. Anal. Appl. 2007, 325, 469–479.

- Osilike, M.O.; Aniagbosor, S.C.; Akuchu, G.B. Fixed point of asymptotically demicontractive mappings in arbitrary Banach spaces. PanAm. Math. J. 2002, 12, 77–88.

- Goebel, K.; Kirk, W.A. Topics in Metric Fixed Point Theory; Cambridge University Press: Cambridge, UK, 1990.

- Aubin, J.P. Optima and Equilibria: An Introduction to Nonlinear Analysis; Springer: Berlin/Heidelberg, Germany, 1993.

- Ishikawa, S. Fixed points and iteration of a nonexpansive mapping in Banach space. Proc. Am. Math. Soc. 1976, 59, 65–71.

- Browder, F.E.; Petryshyn, W.V. The solution by iteration of nonlinear functional equations in Banach spaces. Bull. Am. Math. Soc. 1966, 72, 571–575.

- Baillon, J.B.; Bruck, R.E.; Reich, S. On the asymptotic behavior of nonexpansive mappings and semigroups in Banach spaces. Houst. J. Math. 1978, 4, 1–9.

- Rockafellar, R.T. On the maximal monotonicity of subdifferential mappings. Pac. J. Math. 1970, 33, 209–216.

- Tibshirani, R. Regression shrinkage and selection via the Lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).