Abstract

Rhythmic neural firing is thought to underlie the operation of neural function. This motivates the construction of dynamical network models to investigate how rhythms interact with each other. Recently, an approach called neural path pruning has been proposed for dynamical network systems, in which critical neuronal connections are identified and adjusted according to pruning maps, enabling neurons to produce rhythmic, oscillatory activity in simulation. Here, we construct a class of homomorphic functions based on different rhythms of neural firing in network dynamics. With these homomorphic functions, the pruning maps can be expressed simply in terms of interacting rhythms of neural firing, which allows a concrete analysis of coupling operators that control network dynamics. This formulation of pruning maps is applied to probe the consolidation of rhythmic patterns between layers of neurons in feedforward neural networks.

1. Introduction

Rhythms are ubiquitous and have been shown to play an important role in living organisms [1,2]. Their roles have been studied in many systems, including the synchronous flashing of fireflies [3], pacemaker cells of the heart [4], synchronization of pulse-coupled oscillators [5,6], and synchronous neural activity propagating in scale-free networks, random networks, and cortical neural networks [7,8]. The central questions in this research concern the origin and the control of rhythms, that is, what causes rhythms and how rhythms interact with each other. In neural network dynamics in particular, an important issue is to probe the activity-dependent plasticity of neural connections entwined with rhythms [9,10,11]. Such plasticity is claimed to be at the heart of the organization of behavior [12,13,14,15].

Classic models of neural networks have achieved state-of-the-art performance in classification [16,17,18], object detection [19,20,21], and image segmentation [22,23,24]. One of the key structures in these network architectures is convolution. Many operators used for edge detection or pattern recognition are convolved with input images to obtain different types of derivative measurements in the feature-extraction process. Input images usually carry structure in the form of geometric features. Thus, convolution can be applied to extract features from input images, which are then processed by other parts of the network architecture to determine a maximal score for outcome prediction. Here, instead of geometric feature extraction, our focus is on neural network models that receive rhythmic inputs and respond with rhythms. Rhythms can be features of the inputs sent to neural networks and also features of the outputs produced by assemblies of neurons in neural networks. We search for a rule to derive operators from the rhythmic firing of neurons. Such operators act on the adjustment of neural connections and hence can be convolved with the input rhythm to extract features and to propagate them for rhythm formation.

Our point of departure is the decirculation process in neural network dynamics [25,26]. The decirculation process describes the adaptation of network structure that is crucial for evolutionary neural networks to proceed from one circulating state to another. Two sorts of plasticity operators have been derived to modify network structure for the decirculation process [27]. One measures synchronous neural activity in a circulating state, whereas the other measures self-sustaining neural activity in a circulating state. Both are consistent with the neurobiological concept of Hebbian synaptic plasticity [12,13,14]. Such plasticity operations reflect an internal control in neural network dynamics, enabling neurons to produce rhythmic, oscillatory activity [27]. Recently, the concept of the decirculation process has been extended to that of neural path pruning [28]. There, by setting the beginning and the end of a desired flow of neural firing states, one can construct a pruning map that indicates critical neuronal connections with significant controllability in eliminating the flow of neural firing states. On this basis, simulations show that a mobile robot guided by neural network dynamics can change the neuronal connections identified as critical by neural path pruning, using rewards and punishment. The mobile robot may then achieve good fault-tolerant tracking behavior (adaptive matching of sensory inputs to motor outputs) [28].

Neural path pruning offers an alternative way to derive operators that are convolved with signals and control neural network dynamics. This motivates us to formulate pruning maps in terms of rhythmic neural firing, which may yield a framework of feedforward neural networks for rhythm formation.

2. Theoretical Framework

The model description follows. Let $\{0,1\}^n$ be the n-dimensional state space consisting of all vectors $x = (x_1, \ldots, x_n)$, with each component $x_i$ being 0 or 1. Consider a dynamical system of n coupled neurons, which is modeled by the equation [25,26]:

$$x(t+1) = T_{A,b}^{(s(t))}\big(x(t)\big), \qquad (1)$$

where $x(t) = (x_1(t), \ldots, x_n(t))$ is a vector of neural firing states at time t, with each component $x_i(t)$ representing the firing state of neuron i at time t; $A = [a_{ij}]_{n \times n}$ is a coupling weight matrix of the n coupled neurons (be they agents in a recurrent or feedforward neural network), with each entry $a_{ij}$ representing the coupling weight underlying the connection from j to i; $s(t) \subseteq \{1, \ldots, n\}$ denotes an ensemble of neurons which adjust their states at time t; and $T_{A,b}^{(s(t))}$ is a transition function whose ith component is defined by

$$\big[T_{A,b}^{(s(t))}(x)\big]_i = \mathbb{1}\Big(\sum_{j=1}^{n} a_{ij} x_j - b_i\Big) \quad \text{if } i \in s(t), \qquad (2)$$

otherwise $\big[T_{A,b}^{(s(t))}(x)\big]_i = x_i$, where $b_i$ is a firing threshold of neuron i for $i = 1, \ldots, n$, $b = (b_1, \ldots, b_n)$, and the function 𝟙 is the Heaviside function. Consider a flow of neural firing states in $\{0,1\}^n$, where , , and for some . Specifically, if within , then is said to be a loop of neural firing states in . For each , an integer, denoted , is assigned according to the rule:

The matrix is called the pruning map of . Thus,

The pruning map induces a linear functional defined on the Hilbert space $\mathbb{R}^{n \times n}$ of all $n \times n$ real matrices endowed with the Hilbert–Schmidt inner product $\langle A, B \rangle = \sum_{i,j=1}^{n} a_{ij} b_{ij}$, where $A = [a_{ij}]$ and $B = [b_{ij}]$ for each $A, B \in \mathbb{R}^{n \times n}$. Let $\langle x, y \rangle$ be the usual inner product of x and y in $\mathbb{R}^n$. With these notions, a basic theorem determines whether the neural connections identified by the pruning map are crucial for eliminating the flow of neural firing states in the dynamics of the neural network. It was stated in [27,28] as follows.

Theorem 1.

Let be a flow of neural firing states in . If and satisfy

then for any initial neural firing state and any updating , the flow encoded by Equation (1) cannot behave in

for each

Theorem 1 is based on the concept of neural path pruning. It suggests a regulatory mechanism for network formation. To see this, consider a flow and a coupling weight matrix . If we choose a coupling operator such that (respectively, ), then

Since the quantity is determined only by the flow and the threshold b, Theorem 1 coupled with the inequality in Equation (7) (respectively, the inequality in Equation (8)) suggests that, given the dynamical system

the change of the coupling weight matrix from A to may enhance (respectively, inhibit) the effect of flow elimination on .
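A minimal Python sketch of the pieces used so far may help fix ideas. It makes the following assumptions:

- It follows the update rule described with Equations (1) and (2): neurons in the updating ensemble apply the Heaviside threshold rule, and the others keep their state.
- It takes the Heaviside convention 𝟙(u) = 1 for u ≥ 0.
- It treats the linear functional induced by the pruning map as the Hilbert–Schmidt pairing with a stand-in matrix `C`.

The names `heaviside`, `step`, and `hs_inner` are hypothetical, and the numerical values are arbitrary.

```python
import numpy as np

def heaviside(u):
    # Assumed convention: 1(u) = 1 if u >= 0, otherwise 0.
    return (np.asarray(u) >= 0).astype(int)

def step(x, A, b, s):
    """One update x(t) -> x(t+1): neurons in the ensemble s(t) apply the
    threshold rule 1(sum_j a_ij x_j - b_i); all other neurons keep their state."""
    x_next = np.array(x, dtype=int)
    idx = np.array(sorted(s), dtype=int)
    if idx.size:
        x_next[idx] = heaviside(A[idx] @ x - b[idx])
    return x_next

def hs_inner(C, B):
    # Hilbert-Schmidt inner product <C, B> = sum_{i,j} c_ij b_ij = trace(C^T B).
    return float(np.sum(np.asarray(C) * np.asarray(B)))

# Usage with hypothetical values: C stands in for a pruning map, B for a coupling operator.
A = np.array([[0.0, 1.0], [1.0, 0.0]])
b = np.array([0.5, 0.5])
x = np.array([1, 0])
print(step(x, A, b, {1}))  # neuron 1 receives input 1 >= 0.5 and fires: [1 1]

C = np.array([[0, 1], [-1, 0]])
B = np.array([[0.2, 0.3], [0.1, 0.0]])
# Linearity: <C, A + B> = <C, A> + <C, B> (up to floating-point rounding).
print(hs_inner(C, A + B), hs_inner(C, A) + hs_inner(C, B))
```

Because the pairing is linear, replacing A by A + B shifts the functional by exactly ⟨C, B⟩. Under these assumptions, this additivity is what makes the effect of a coupling operator depend only on its own pairing with the pruning map.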

We now probe the pruning map with a desired flow of rhythmic neural firing. Each entry in the pruning map may be represented in terms of a quantity related to the firing rhythms of individual neurons. In so doing, we can extract information directly from the pruning map about how the rhythms interact with each other. In particular, for feedforward neural networks, the pruning map provides a recipe to probe the interaction of rhythms between layers, which may reveal a consolidation rule that keeps neurons firing consistently in their specific rhythms.

3. Main Results

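Assume that neuron i fires in rhythm $r_i$ for $i = 1, \ldots, n$. Let $L = \operatorname{lcm}(r_1, \ldots, r_n)$, where $\operatorname{lcm}$ denotes the least common multiple of $r_1, \ldots, r_n$. For each $i$, let $\theta_i$ be a nonnegative integer less than $r_i$, denoting the initial phase of rhythmic neural firing. Thus, the flow with neurons firing in rhythm $r_i$ and in phase $\theta_i$ can be described as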

where if for and ; otherwise, . Here, the notation $\lfloor u \rfloor$ denotes the largest integer less than or equal to $u$. Theorem 2 gives a matrix decomposition formula for the pruning map underlying rhythmic neural firing.

Theorem 2.

Let be a flow of rhythmic neural firing states given in Equation (10). For each denote by the least common multiple of and , and the greatest common divisor of and . Let denote the nonnegative integer less than such that

Then, the pruning map can be formulated by where is a symmetric matrix whose non-zero entries are given by

and is a non-symmetric matrix whose non-zero entries are given by

Proof.

To prove Theorem 2, note that if , then and

Thus, we have

Denote by for . Since if and only if for , the -entry of the pruning map reduces to

To compute Equation (18) for each pair of , we count the elements in the intersections

and

Denote by . Consider the spanning class (respectively, ) consisting of all (respectively, ), where k runs through all the integers. Let

and

Define the mapping by

Then, is a homomorphism of onto . We claim that the kernel of , denoted by , is

It is readily seen that for each , so , which implies that

On the other hand, suppose . Then, for some . Since is the greatest common divisor of and , there exist such that

This shows that

and hence , proving Equation (24). Thus, is isomorphic to . Denote by . Since

and the order of the kernel of is , we may rewrite explicitly as

On the other hand, since

there exists a bijective mapping on with such that

for each and . Recall that for some . By Equation (30), we have

and hence by Equation (31), we have

which implies that there exists such that

for each and . Since and for each and , it follows from Equation (34) that

Since , we may rewrite Equation (35) as

With the inequalities in Equations (34) and (36) established by the construction of , we now proceed to compute Equations (19) and (20). Let . For the case of , since

we have

Furthermore, since

we have

Combining Equations (38) and (40) shows that

Consider a partition of given by

Fix . Since

for each , we have

Furthermore, since

for each , we have

By Equations (42), (44) and (46), we may rewrite the intersection in Equation (41) as

Denote by the number of elements in the set E. Since and for each , it follows from Equations (34) and (36) that

for each . It is readily seen that if

then . Conversely, if , then for some . When , it follows that

When , it follows that

Since , we conclude that there exists only one such that . Hence,

Thus, the equality in Equation (48) can be rewritten as

for each . Combining Equations (47) and (53) gives

for the case of . Now, we turn to the case of . Since and , we have

Since Equations (40) and (44) hold also for the case , they imply that

The partition in Equation (42) and the inclusions in Equations (55) and (56) together imply that

Fix . Since and for each , it follows from Equations (34) and (36) that

It is readily seen that if

then . Conversely, if , then for some . When , it follows that

When , it follows that

This implies that . Hence,

Thus, the equality in Equation (58) can be rewritten as

Combining Equations (57) and (63) gives

for the case of . Now, we split the argument into three cases.

Case 1.. Then

By Equations (18), (19), (20), (54), and (64), we have

Case 2.. Then, exactly one of the following situations holds:

Suppose that . Then,

By Equations (18), (19), (20), (54), and (64), we have

Suppose that . Then,

By Equations (18), (19), (20), (54), and (64), we have

Case 3.. Suppose that . Then,

By Equations (18), (19), (20), (54), and (64), we have

Suppose that . Then,

By Equations (18), (19), (20), (54), and (64), we have

Suppose that and . Then, by Equations (18), (19), (20), (54), and (64), we have

This completes the proof of Theorem 2. □
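The counting in the proof concerns the time steps at which two rhythmic neurons fire together, or one step apart. A standard number-theoretic fact behind counts of this kind is the generalized Chinese remainder theorem. The congruences t ≡ a (mod r_i) and t ≡ c (mod r_j) have a common solution if and only if a ≡ c (mod gcd(r_i, r_j)). The solutions then form a single residue class modulo lcm(r_i, r_j).

The Python sketch below checks this fact numerically against brute-force counting. It assumes that neuron i fires exactly at the times t ≡ θ_i (mod r_i). It does not reproduce the exact matrix entries of Theorem 2, and the function names are hypothetical.

```python
from math import gcd

def lcm(a, b):
    return a * b // gcd(a, b)

def rhythmic_flow(rhythms, phases, T):
    # Assumed convention: neuron i fires at time t iff t ≡ phase_i (mod rhythm_i).
    return [[1 if (t - th) % r == 0 else 0 for r, th in zip(rhythms, phases)]
            for t in range(T)]

def count_brute(ri, thi, rj, thj, shift, T):
    # Count t in [0, T) with neuron j firing at t and neuron i firing at t + shift.
    return sum(1 for t in range(T)
               if (t - thj) % rj == 0 and (t + shift - thi) % ri == 0)

def count_formula(ri, thi, rj, thj, shift, T):
    # Generalized CRT: t ≡ thj (mod rj) and t ≡ thi - shift (mod ri) have a common
    # solution iff thj ≡ thi - shift (mod gcd(ri, rj)); the solutions then form a
    # single residue class mod lcm(ri, rj), met T / lcm(ri, rj) times in [0, T).
    d, m = gcd(ri, rj), lcm(ri, rj)
    if T % m:
        raise ValueError("T must be a multiple of lcm(ri, rj)")
    return T // m if (thj - thi + shift) % d == 0 else 0

# Rhythms 4 and 6 (gcd 2, lcm 12), phases 1 and 3, simultaneous firing:
print(count_formula(4, 1, 6, 3, 0, 12), count_brute(4, 1, 6, 3, 0, 12))  # 1 1
```

Setting `shift = 0` counts simultaneous firing, and `shift = 1` counts firing of neuron j followed one step later by neuron i. The gcd condition on the phase difference decides whether such coincidences occur at all.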

As an implication, we consider a feedforward neural network consisting of many layers of neurons firing in rhythm. Suppose that there are neurons in layer k for each , where layer 1 denotes the input layer and layer denotes the output layer of the feedforward neural network. Then the coupling weight underlying the connection from the ℓth neuron in layer to the th neuron in layer corresponds to the entry , where and , in the coupling weight matrix A. Accordingly, the coupling weight matrix A can be rewritten as

Specifically, we may equip the input layer with a two-layer substructure, given by

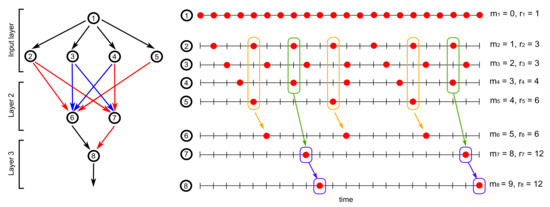

with the firing threshold satisfying for each neuron and the updating satisfying if ; otherwise, for each and . Let and . Then, neuron i in the input layer can fire in rhythm and in phase for each . Neurons in the posterior layer receive signals from neurons in the prior layer and fire accordingly if the incoming signals are greater than the firing thresholds. Note that, since neurons in the prior layer fire in rhythm, the firing thresholds can be adjusted to generate certain rhythmic firing patterns, or combinations of rhythmic firing patterns, in the posterior layer. Figure 1 depicts three layers of such a feedforward neural network, in which the coupling weight matrix A is defined by

the firing threshold is defined by , the updating is defined initially by , and

for each

Figure 1.

Rhythmic firing in a feedforward neural network.

Given an initial neural firing state , the dynamical Equation (1) ensures that neurons in the input layer fire in rhythm and in phase , respectively. In addition, since

neurons in layer 2 fire in rhythm and in phase , respectively. This is feasible when the sum of incoming signals from neurons to 6 (respectively, from neurons to 7) is largest, and the firing threshold of neuron 6 (respectively, neuron 7) can then be adjusted to sieve out such a rhythmic firing pattern. Similar results hold for neuron 8 in layer 3, which fires in rhythm and in phase according to

Denote by the resulting flow of rhythmic neural firing states from time to . According to the formula of the pruning map proved in Theorem 2, we see that

and hence

Then, by neural path pruning described in Equations (7) and (8), the coupling operator can be given by

which maintains the rhythmic firing pattern of neuron 6 (respectively, neuron 7 and neuron 8) by keeping the sum of incoming signals from neurons to 6 (respectively, from neurons to 7 and from neuron 7 to 8) the largest. As illustrated by the orange, green, and purple boxes in Figure 1, the coupling operator in Equation (95) fits well with the neurobiological concept of Hebbian synaptic plasticity: when neurons (respectively, neurons and neuron 7) repeatedly or persistently take part in firing neuron 6 (respectively, neuron 7 and neuron 8), the coupling weights between them are strengthened. Such activity-dependent plasticity between layers of neurons tends to keep neurons in the posterior layer firing in rhythm.
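The Hebbian reading of the coupling operator can be illustrated with a generic co-activation count. The Python sketch below is a textbook Hebbian rule swapped in for illustration. It is not the operator of Equation (95), whose entries follow from Theorem 2.

For each feedforward connection from neuron j to neuron i, the sketch counts how often j fires at time t and i fires at t + 1. It then adds a scaled copy of these counts to the coupling weights. The function name, the learning intensity `eta`, and the toy firing record are hypothetical.

```python
import numpy as np

def hebbian_operator(X, pre, post):
    """Hypothetical co-activation count for connections pre -> post.
    X: (T, n) binary array with X[t, i] = firing state of neuron i at time t.
    Entry [i, j] = #{t : x_j(t) = 1 and x_i(t + 1) = 1} for i in post, j in pre."""
    X = np.asarray(X, dtype=float)
    counts = X[1:].T @ X[:-1]            # counts[i, j] = sum_t x_i(t+1) * x_j(t)
    mask = np.zeros(counts.shape, dtype=bool)
    mask[np.ix_(post, pre)] = True       # keep feedforward connections only
    return np.where(mask, counts, 0.0)

# Usage: neurons 0 and 1 form the prior layer, neuron 2 the posterior layer.
X = np.array([[1, 0, 0],
              [0, 0, 1],
              [1, 0, 0],
              [0, 0, 1]])
B = hebbian_operator(X, pre=[0, 1], post=[2])
eta = 0.1                                # hypothetical learning intensity
A = np.zeros((3, 3)) + eta * B           # strengthens 0 -> 2 only; neuron 1 never fires
```

Neuron 0 repeatedly takes part in firing neuron 2, so only that weight grows. This mirrors, in a simplified form, the strengthening described above.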

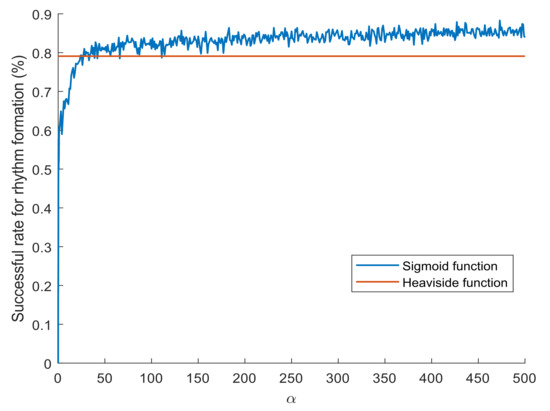

The following experimental setting is used to evaluate rhythm formation in feedforward neural networks. The input rhythm and the layer architecture are specified as in Figure 1. Initially, the coupling weights from prior layers to posterior layers and the firing thresholds of neurons are selected randomly from the interval . When a neuron in layer 2 or 3 fires during the dynamics, its firing threshold is adjusted by a positive value 1; otherwise, it is adjusted by a negative value . In addition, the coupling weights are adjusted according to the coupling operators (with intensity 1 or ) derived from neural path pruning. The transition function of neuron i is chosen to be either the Heaviside function defined by Equation (2) or the sigmoid function defined by

where is a positive real number. For each round of simulation, we say that rhythm formation occurs in the feedforward neural network if the neuron in layer 3 persistently fires in rhythm for a certain number of time steps. Specifically, the criterion is k firings (with the firing state ) in rhythm r with during time steps to 2000. Figure 2 shows the success rates of rhythm formation in feedforward neural networks, with neurons activated via the Heaviside functions (red line, rounds of simulation) or the sigmoid functions with a selected (blue line, 1000 rounds of simulation per ). The results indicate that rhythm formation occurs robustly in feedforward neural networks, even when the transition functions are changed from Heaviside functions to sigmoid functions.
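The evaluation protocol can be sketched as follows. The exact sigmoid and the window bounds did not survive in this text, so the Python sketch makes two assumptions:

- The sigmoid is the logistic function 1/(1 + e^(-βu)) with steepness β > 0.
- The criterion means at least k consecutive firings spaced exactly r steps apart inside a window [t_start, t_end].

All names and parameter values are hypothetical, and how the logistic output is turned into a binary firing state is left open here.

```python
import math

def logistic(u, beta):
    """Assumed sigmoid transition 1 / (1 + exp(-beta * u)), beta > 0,
    written in a numerically stable way for large |beta * u|."""
    z = beta * u
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fires_in_rhythm(spikes, r, k, t_start, t_end):
    """Hypothetical criterion: True if, within [t_start, t_end], the neuron fires
    at least k times in a row with exactly r time steps between firings."""
    times = [t for t in range(t_start, t_end + 1) if spikes[t] == 1]
    best = run = 1 if times else 0
    for prev, cur in zip(times, times[1:]):
        run = run + 1 if cur - prev == r else 1
        best = max(best, run)
    return best >= k

def success_rate(outcomes):
    # Fraction of simulation rounds in which the criterion was met.
    return sum(outcomes) / len(outcomes)

spikes = [0] * 30
for t in range(2, 30, 3):  # fires at t = 2, 5, 8, ..., 29 (rhythm 3, phase 2)
    spikes[t] = 1
print(fires_in_rhythm(spikes, r=3, k=5, t_start=10, t_end=29))  # True
```

Counting only consecutive firings at the exact spacing r makes the criterion reject trains that fire often but irregularly. That is one reasonable reading of "persistently fire in rhythm".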

Figure 2.

Success rates for generating rhythmic firing in feedforward neural networks.

4. Conclusions

For neurons firing in rhythm, the pruning map can be formulated in terms of quantities related to rhythmic neural firing. This formulation can provide crucial control of coupling weights to support the consolidation of rhythms in feedforward neural networks. It reveals a learning rule for feedforward neural networks that fits well with Hebbian synaptic plasticity for rhythmic pattern formation. Such neural network models can be used to relate different kinds of rhythms from input signals to output signals, and hence may be applied to problems in robot control or pattern recognition involving rhythm. We hope that the analysis of pruning maps will stimulate further studies of the underlying principles of neural networks concerning how rhythms interact with each other and organize themselves, providing a theoretical framework that mimics the roles of rhythms in the information-processing systems of living organisms.

Author Contributions

Conceptualization, F.-S.T. and S.-Y.H.; Formal analysis, F.-S.T. and S.-Y.H.; and Writing—review and editing, F.-S.T., Y.-L.S., C.-T.P., and S.-Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Ministry of Science and Technology, Taiwan and in part by the China Medical University under Grant CMU106-N-21.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Strogatz, S.H.; Stewart, I. Coupled oscillators and biological synchronization. Sci. Am. 1993, 269, 68–75.

- Glass, L. Synchronization and rhythmic processes in physiology. Nature 2001, 410, 277–284.

- Smith, H.M. Synchronous flashing of fireflies. Science 1935, 82, 151–152.

- Peskin, C.S. Mathematical Aspects of Heart Physiology; Courant Institute of Mathematical Sciences, New York University: New York, NY, USA, 1975.

- Mirollo, R.E.; Strogatz, S.H. Synchronization of pulse-coupled biological oscillators. SIAM J. Appl. Math. 1990, 50, 1645–1662.

- Golubitsky, M.; Stewart, I.; Török, A. Patterns of synchrony in coupled cell networks with multiple arrows. SIAM J. Appl. Dyn. Syst. 2005, 4, 78–100.

- Diesmann, M.; Gewaltig, M.-O.; Aertsen, A. Stable propagation of synchronous spiking in cortical neural networks. Nature 1999, 402, 529–533.

- Grinstein, G.; Linsker, R. Synchronous neural activity in scale-free network models versus random network models. Proc. Natl. Acad. Sci. USA 2005, 102, 9948–9953.

- Schechter, B. How the brain gets rhythm. Science 1996, 274, 339.

- Soto-Treviño, C.; Thoroughman, K.A.; Marder, E.; Abbott, L.F. Activity-dependent modification of inhibitory synapses in models of rhythmic neural networks. Nat. Neurosci. 2001, 4, 297–303.

- Buzsáki, G. Rhythms of the Brain; Oxford University Press: New York, NY, USA, 2006; ISBN 0-19-530106-4.

- Hebb, D.O. The Organization of Behavior; Wiley: New York, NY, USA, 1949; ISBN 978-0805843002.

- Adams, P. Hebb and Darwin. J. Theoret. Biol. 1998, 195, 419–438.

- Sejnowski, T.J. The book of Hebb. Neuron 1999, 24, 773–776.

- Sumbre, G.; Muto, A.; Baier, H.; Poo, M. Entrained rhythmic activities of neuronal ensembles as perceptual memory of time interval. Nature 2008, 456, 102–106.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Zhang, W.; Li, R.; Deng, H.; Wang, L.; Lin, W.; Ji, S.; Shen, D. Deep convolutional neural networks for multi-modality isointense infant brain image segmentation. Neuroimage 2015, 108, 214–224.

- Moeskops, P.; Viergever, M.A.; Mendrik, A.M.; de Vries, L.S.; Benders, M.J.; Išgum, I. Automatic segmentation of MR brain images with a convolutional neural network. IEEE Trans. Med. Imaging 2016, 35, 1252–1261.

- Dolz, J.; Desrosiers, C.; Ayed, I.B. 3D fully convolutional networks for subcortical segmentation in MRI: A large-scale study. Neuroimage 2018, 170, 456–470.

- Shih, M.-H.; Tsai, F.-S. Growth dynamics of cell assemblies. SIAM J. Appl. Math. 2009, 69, 1110–1161.

- Shih, M.-H.; Tsai, F.-S. Decirculation process in neural network dynamics. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1677–1689.

- Shih, M.-H.; Tsai, F.-S. Operator control of interneural computing machines. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 1986–1998.

- Tsai, F.-S.; Hsu, S.-Y.; Shih, M.-H. Adaptive tracking control for robots with an interneural computing scheme. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 832–844.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).