1. Introduction

We provide local criteria for finding a unique solution $\delta$ of the nonlinear equation

$$H(x) = 0 \tag{1}$$

for Banach space valued mappings $H\colon D \subseteq X \to Y$, where $X$ and $Y$ are Banach spaces and $H$ is differentiable in the sense of Fréchet [1,2]. Many authors have studied local and semilocal convergence criteria for iterative methods (see, for example, [3,4,5,6,7,8,9,10,11,12,13,14]).

The best-known iterative method for approximating a solution $\delta$ of Equation (1) is Newton's method,

$$x_{n+1} = x_n - H'(x_n)^{-1} H(x_n), \qquad n = 0, 1, 2, \ldots,$$

which converges quadratically. To achieve a higher order of convergence, a number of modified, multistep Newton or Newton-type iterations have been developed; see [3,4,6,7,9,10,11,12,15,16,17,18,19] and the references cited therein.
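The Newton iteration above can be illustrated in the scalar case $X = Y = \mathbb{R}$. The following is a minimal Python sketch. The function name `newton`, the stopping tolerance and the test equation $x^2 - 2 = 0$ are our own illustrative choices and are not taken from the paper.

```python
from typing import Callable


def newton(h: Callable[[float], float],
           dh: Callable[[float], float],
           x0: float,
           tol: float = 1e-12,
           max_iter: int = 50) -> float:
    """Scalar Newton iteration x_{n+1} = x_n - h(x_n) / h'(x_n)."""
    x = x0
    for _ in range(max_iter):
        d = dh(x)
        if d == 0.0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        step = h(x) / d
        x -= step
        # Stop once the Newton correction is below the tolerance.
        if abs(step) < tol:
            return x
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical test equation h(x) = x^2 - 2, whose positive root is sqrt(2).
root = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.5)
print(root)
```

The number of correct digits roughly doubles per step, which is the quadratic order mentioned above.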

There is another important class of multistep methods based on Jarratt methods or Jarratt-type methods [20,21,22]. Such methods have been studied extensively; see [23,24,25,26,27,28] and the references therein. In particular, Alzahrani et al. [23] recently proposed a class of sixth-order methods for approximating a solution of Equation (1) using a Jarratt-like composite scheme. These methods are attractive, and their local convergence analysis is worth studying. The authors presented some important special cases of the class, which are defined for each $n = 0, 1, 2, \ldots$ by

The sixth order of convergence of these methods was established in [23] using Taylor expansions and hypotheses on derivatives up to the sixth order, although only first-order derivatives appear in the methods. Such hypotheses on higher derivatives restrict the applicability of the methods. As a motivational example, consider a function $Q$ defined on $D$ by

Then, a higher-order derivative of $Q$ is unbounded on $D$. Notice also that the proofs of convergence in [23] use Taylor expansions up to the term containing the sixth Fréchet derivative. In this study, we discuss the local convergence of the methods defined above using hypotheses only on the first Fréchet derivative, taking advantage of its Lipschitz continuity. In addition, we present results in the more general setting of a Banach space.

The rest of the paper is organized as follows. In Section 2, we present the local convergence of Methods I, II and III. The theoretical results are validated through numerical examples in Section 3. Section 4 is devoted to checking the stability of the methods by means of a complex dynamical tool, namely, the basin of attraction. Concluding remarks are given in Section 5.

2. Local Convergence Analysis

Here, we discuss the local convergence of Method-I, Method-II and Method-III. In the analysis, we find a radius of convergence and computable error bounds on the distances from the iterates to the solution δ, and then establish the uniqueness of the solution in a certain ball, based on some Lipschitz constants.

2.1. Convergence for Method-I

Let be an increasing and continuous function with . Assume that equation has at least one positive solution. Denote by the smallest such solution.

Let and also be increasing and continuous functions with . Moreover, define scalar functions on the interval by

and

We have by (5) that and as . It follows by the intermediate value theorem that equation has at least one solution in the interval . Denote by the smallest such solution.

Suppose that equation has at least one positive solution, where . Denote by the smallest such solution.

Set:

Define functions , , , on the interval by

and

We get that , as , and as . Denote by and the smallest solutions of equations and in , respectively.

Define a radius of convergence $r$ by

Then, we have that, for each ,
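Each radius above is the smallest positive solution of a scalar equation, located through the intermediate value theorem. The following is a minimal numerical sketch of that step. It assumes only that $\varphi(t) = g(t) - 1$ is continuous with $\varphi(0) < 0$, scans a grid for the first sign change and then bisects. The functions of Method-I are not legible in this text, so as a hypothetical test we take linear functions $w_0(t) = L_0 t$ and $w(t) = L t$ together with the Newton-type function $g(t) = L t / (2(1 - L_0 t))$. Solving $g(t) = 1$ gives $t = 2/(2L_0 + L)$. The names $L_0$, $L$, $w_0$, $w$ and `smallest_root` are our own.

```python
from typing import Callable


def smallest_root(phi: Callable[[float], float], a: float, b: float,
                  n_grid: int = 10_000, tol: float = 1e-14) -> float:
    """Approximate the smallest zero of phi in (a, b].

    Assumes phi is continuous with phi(a) < 0. A grid scan finds the first
    cell where phi becomes nonnegative (intermediate value theorem), then
    bisection refines it. Sign changes inside a single cell can be missed,
    so n_grid must resolve the function.
    """
    if phi(a) >= 0:
        raise ValueError("expected phi(a) < 0")
    width = (b - a) / n_grid
    left = a
    for i in range(1, n_grid + 1):
        right = a + i * width
        if phi(right) >= 0:
            while right - left > tol:      # invariant: phi(left) < 0 <= phi(right)
                mid = 0.5 * (left + right)
                if phi(mid) < 0:
                    left = mid
                else:
                    right = mid
            return right
        left = right
    raise ValueError("no sign change found on the grid")


# Hypothetical data: w0(t) = L0*t and w(t) = L*t, so w0(t) = 1 at r0 = 1/L0.
L0, L = 1.0, 2.0
r0 = 1.0 / L0


def g(t: float) -> float:
    """Newton-type test function, defined on [0, r0)."""
    return L * t / (2.0 * (1.0 - L0 * t))


# Stay strictly inside [0, r0), where g is defined.
r1 = smallest_root(lambda t: g(t) - 1.0, 0.0, r0 * (1.0 - 1e-9))
print(r1, 2.0 / (2.0 * L0 + L))   # numerical root vs closed-form value
```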

In order to study Method-I, we need to rewrite it in a more convenient form.

Lemma 1. Suppose that the iterates , and are well defined for each . Then, Method-I can be rewritten as

where .

Proof. By the second sub-step of Method-I, we have in turn that

Then, using the estimates

and

in the preceding estimate, we obtain the equivalent form of the second sub-step of Method-I.

To show the equivalent form of the third sub-step of Method-I, notice that

Then, from the third sub-step of Method-I and the preceding estimate, we obtain in turn that

leading to the equivalent form of the third sub-step of Method-I. □

Let and denote, respectively, the open and closed balls in X with center and radius . Next, we study the local convergence of Method-I.

Theorem 1. Let be a continuously Fréchet-differentiable operator. Suppose that there exist and functions , ξ, and as defined previously, such that for each

Set: .

and (4)–(6) hold, where and r are defined previously. Then, the sequence generated by Method-I for is well defined, remains in for each , and converges to δ. Moreover, the following estimates hold:

and

where the functions involved were defined previously. Furthermore, if there exists such that , then the limit point δ is the only solution of equation (1) in .

Proof. We shall show the estimates (14)–(16) using mathematical induction. By hypothesis , (4), (9), and (10), we get that

It follows from (17) and the Banach lemma on invertible operators [3,16] that

and

and is well defined by the first sub-step of Method-I for . In view of (7) and (12), we get that

so,

Notice that for each . That is, .

Using the first sub-step of Method-I for and (7), we can write

Then, by Equations (7), (9), (11), (18), (19), and (20), we have that

which shows (14) for and .

Next, we shall show that is invertible. Using Equations (10) and (21), we obtain that

So, is well defined and, by the second sub-step of Method-I in Lemma 1,

which proves (15) for and .

Hence, is well defined by the last sub-step of Method-I for . Then, using the third sub-step of Method-I in Lemma 1, we get that

which proves (16) for and . By simply replacing the iterates of the first step with their counterparts at an arbitrary step in the preceding estimates, we arrive at (14)–(16). Then, from the estimate , where , we deduce that and .

Finally, we show the uniqueness part. Let for some with . Using (8), we get that

It follows from (26) that $P$ is invertible. Then, from the identity , we conclude that

□
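Two standard facts carry the proof above: the Banach lemma on invertible operators, in the form usually cited from [3,16], and the mean-value identity behind the uniqueness part. The following sketch states both. The symbols $\delta^*$, $q$ and the integral form of $P$ are our reconstruction of the standard argument, since the paper's own displays are not legible here.

```latex
\textbf{Banach lemma.} If $A \in L(X, X)$ and $\|I - A\| < 1$, then $A$ is invertible and
\[
  \|A^{-1}\| \le \frac{1}{1 - \|I - A\|}.
\]
Applied with $A = H'(\delta)^{-1}H'(x)$, where $H'(\delta)^{-1} \in L(Y, X)$: if
$\|H'(\delta)^{-1}(H'(x) - H'(\delta))\| \le q < 1$, then $H'(x)^{-1} \in L(Y, X)$ and
\[
  \|H'(x)^{-1}H'(\delta)\| \le \frac{1}{1 - q}.
\]
\textbf{Uniqueness.} If $H(\delta) = H(\delta^*) = 0$ and
$P = \int_0^1 H'(\delta + \theta(\delta^* - \delta))\,d\theta$ is invertible, then
\[
  0 = H(\delta^*) - H(\delta) = P(\delta^* - \delta)
  \quad\Longrightarrow\quad \delta^* = \delta.
\]
```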

2.2. Convergence for Method-II

Set and . Define functions , , , and on by

and

We also get that , as , and as .

Denote by and the smallest such solutions of Equations and , respectively.

Then, we have that for each , .

Lemma 2. Suppose that the iterates , , and are well defined for each . Then, Method-II can be rewritten as

where .

Proof. By Lemma 1, we only need to show the second sub-step of Method-II. We can write

where

Substituting into the preceding estimate completes the proof. □

Next, we present the local convergence analysis of Method-II, analogously to Method-I, using the preceding notation.

Theorem 2. Suppose that the hypotheses of Theorem 1 are satisfied, but r is defined by (27). Then, the conclusions of Theorem 1 hold with Method-II replacing Method-I and the function replacing the function.

2.3. Convergence for Method-III

Set and . Suppose that equation has at least one positive solution. Denote by the smallest such solution.

Set: . We define functions , , , and on by

and

We also get that , as , and as . Denote by and the smallest such solutions of equations and , respectively.

Then, we have that for each , .

As for the previous two methods, we need an auxiliary result.

Lemma 3. Suppose that the iterates , , and are well defined for each . Then, Method-III can be rewritten as

where .

Proof. Note that

so, substituting this estimate into the preceding one completes the proof. □

Next, we present the local convergence analysis of Method-III, analogously to Method-I, using the preceding notation.

Theorem 3. Suppose that the hypotheses of Theorem 1 are satisfied, but r is defined by (28) and the function replaces the function. Then, the conclusions of Theorem 1 hold with Method-III replacing Method-I.

Proof. The proof is analogous to that of Theorem 2. □

Remark 1.

- (a)

In view of (10) and the estimate

condition (12) can be dropped and replaced by

- (b)

The results obtained here can be used for operators H satisfying an autonomous differential equation [2] of the form

where T is a known continuous operator. Since , we can apply the results without actually knowing the solution δ. Let, as an example, . Then, we can choose .

- (c)

It is worth noticing that Methods I, II, and III do not change when we use the conditions of Theorems 1, 2, and 3 instead of the stronger conditions used in [23]. Moreover, we can verify the theoretical order of convergence numerically using the computational order of convergence (COC) [29] or the approximate computational order of convergence (ACOC) [2], given by
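The COC and ACOC formulas are missing from this version of Remark 1(c). We assume, but cannot confirm from the text, that the intended definitions are the usual ones:

$\rho \approx \ln(\|x_{n+1}-\delta\|/\|x_n-\delta\|)\,/\,\ln(\|x_n-\delta\|/\|x_{n-1}-\delta\|)$ (COC),

$\rho \approx \ln(\|x_{n+1}-x_n\|/\|x_n-x_{n-1}\|)\,/\,\ln(\|x_n-x_{n-1}\|/\|x_{n-1}-x_{n-2}\|)$ (ACOC).

The sketch evaluates both in the scalar case on Newton iterates, which stand in for Methods I–III because those methods are not reproduced here. It uses Python's `decimal` module so that the tiny errors of late iterates are not lost to rounding.

```python
from decimal import Decimal, getcontext
from typing import List

# High precision so the tiny errors of late iterates are not lost to rounding.
getcontext().prec = 80


def coc(xs: List[Decimal], root: Decimal) -> List[Decimal]:
    """COC estimates ln(e_{n+1}/e_n) / ln(e_n/e_{n-1}) with e_n = |x_n - root|."""
    e = [abs(x - root) for x in xs]
    return [(e[n + 1] / e[n]).ln() / (e[n] / e[n - 1]).ln()
            for n in range(1, len(e) - 1)]


def acoc(xs: List[Decimal]) -> List[Decimal]:
    """ACOC estimates from corrections d_n = |x_{n+1} - x_n|; no root needed."""
    d = [abs(xs[n + 1] - xs[n]) for n in range(len(xs) - 1)]
    return [(d[n + 1] / d[n]).ln() / (d[n] / d[n - 1]).ln()
            for n in range(1, len(d) - 1)]


# Hypothetical test: Newton iterates for h(x) = x^2 - 2 from x0 = 1.5.
xs = [Decimal("1.5")]
for _ in range(5):
    x = xs[-1]
    xs.append(x - (x * x - 2) / (2 * x))

coc_vals = coc(xs, Decimal(2).sqrt())
acoc_vals = acoc(xs)
print([round(float(p), 4) for p in coc_vals])
print([round(float(p), 4) for p in acoc_vals])
```

For Newton's method, both sequences approach 2. Applied to iterates of a sixth-order method, they would be expected to approach 6.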

3. Numerical Examples

To validate the results of the convergence theorems, we present a few numerical examples.

Example 1. Suppose that , where stands for the space of continuous functions defined on . We shall use the maximum norm. Let . Define the operator H on D by

From the above equation, we have that

Then, for , , and , we have , , and . The values of the parameters , , and , computed from their definitions, are given in Table 1.

Thus, the convergence of the considered methods to is guaranteed, provided that .

Example 2. We consider the law of population growth. Let $N(t)$, $\lambda$, and $\nu$ be, respectively, the population at time $t$, the constant birth rate of the population, and the immigration rate. Then, the equation governing population growth is (see [30])

$$\frac{dN(t)}{dt} = \lambda N(t) + \nu.$$

The solution of this differential equation is given by

$$N(t) = N_0 e^{\lambda t} + \frac{\nu}{\lambda}\left(e^{\lambda t} - 1\right),$$

where $N_0$ is the initial population.

For a particular case study, the problem is as follows. Suppose that a certain population initially contains 1,000,000 individuals, that 435,000 individuals immigrate to the community in the first year, and that 1,564,000 individuals are present at the end of one year. The problem is to find the birth rate (λ) of this population.

To determine the birth rate, we solve the equation

wherein . The solution of this equation is . Then, we have that , , and , where

and

Then, for the above set of values, the parameters are given in Table 2.

Thus, the results of the theorems ensure the convergence of Methods I, II, and III to the solution .
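The birth rate can also be computed directly from the closed-form solution. We assume the standard model $dN/dt = \lambda N + \nu$ with solution $N(t) = N_0 e^{\lambda t} + (\nu/\lambda)(e^{\lambda t} - 1)$; the labels $N$ and $N_0$ are ours. With this model, the data give the scalar equation $1{,}564{,}000 = 1{,}000{,}000\,e^{\lambda} + (435{,}000/\lambda)(e^{\lambda} - 1)$. The sketch below solves it with plain Newton iteration, not with Methods I–III, and uses `math.expm1` to evaluate $e^\lambda - 1$ accurately.

```python
import math


def newton(h, dh, x0, tol=1e-14, max_iter=50):
    """Plain scalar Newton iteration (a stand-in for Methods I-III)."""
    x = x0
    for _ in range(max_iter):
        step = h(x) / dh(x)
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("no convergence within max_iter iterations")


# Data of the case study (assumed model dN/dt = lam*N + nu, one year elapsed).
N0 = 1_000_000.0    # initial population
NU = 435_000.0      # immigration during the first year
N1 = 1_564_000.0    # population at the end of the year


def h(lam: float) -> float:
    """Residual N0*e^lam + (NU/lam)*(e^lam - 1) - N1; lam must be nonzero."""
    return N0 * math.exp(lam) + NU * math.expm1(lam) / lam - N1


def dh(lam: float) -> float:
    """Derivative of h; d/dlam[(e^lam - 1)/lam] = (lam*e^lam - (e^lam - 1)) / lam^2."""
    e = math.exp(lam)
    return N0 * e + NU * (lam * e - math.expm1(lam)) / lam ** 2


lam = newton(h, dh, x0=0.5)
print(f"birth rate lambda = {lam:.10f}")
```

Because $h$ is increasing and convex for $\lambda > 0$, Newton's method started to the right of the root decreases monotonically toward it. The iteration settles at $\lambda \approx 0.101$, a birth rate of roughly 10.1% per year.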

Example 3. Let us consider the function defined by

where . The Fréchet derivative is given by

Using the initial approximation , we obtain the root of the function (33) as

Then, we get that and . The values of the parameters , , and that we calculated are displayed in Table 3.

Example 4. Consider the motivational example given in the introduction. We have that . It follows that , , and , where . The parameters are given in Table 4. Thus, the convergence of the methods to is guaranteed, provided that .

4. Basins of Attraction

In this section, we present the complex geometry of Methods I, II, and III based on the basins of attraction when the methods are applied to the complex polynomial . The basin of attraction is a useful geometrical tool for comparing the convergence domains of iterative methods [3].

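Let $R\colon \hat{\mathbb{C}} \to \hat{\mathbb{C}}$ be a rational map on the Riemann sphere. The orbit of a point $z_0 \in \hat{\mathbb{C}}$ is defined as the set $\{z_0, R(z_0), R^2(z_0), \ldots, R^n(z_0), \ldots\}$. A point $z_0 \in \hat{\mathbb{C}}$ is a fixed point of the rational function $R$ if it satisfies $R(z_0) = z_0$. A periodic point $z_0$ of period $m > 1$ is a point such that $R^m(z_0) = z_0$, where $m$ is the smallest such integer. A fixed point $z_0$ is called attracting if $|R'(z_0)| < 1$, repelling if $|R'(z_0)| > 1$, and neutral if $|R'(z_0)| = 1$. Moreover, if $R'(z_0) = 0$, the fixed point is superattracting. Let $z^*$ be an attracting fixed point of the rational function $R$. The basin of attraction of the fixed point $z^*$ is defined as

$$\mathcal{A}(z^*) = \{z_0 \in \hat{\mathbb{C}} : R^n(z_0) \to z^* \text{ as } n \to \infty\}.$$

The set of points whose orbits approach an attracting fixed point is called the Fatou set. The complementary set, called the Julia set, is the closure of the set of repelling periodic points, and it forms the boundary between the basins of attraction.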

In our experiments, we took a square region of the complex plane with points and applied the iterative methods starting from each point in the square. Starting from a point in the square, a method can either converge to a zero of the polynomial or eventually diverge. The stopping criterion for convergence, with a maximum of 25 iterations, was taken to be . If the desired tolerance was not achieved within 25 iterations, we stopped and declared that the iteration starting from that point did not converge to any root. The strategy was as follows: a color was assigned to each zero, so that the basins of attraction are distinguished by their colors. If the iteration starting from an initial point converged, the point was painted with the color of the corresponding zero. If it did not converge within 25 iterations, the point was painted black.
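The coloring procedure above can be expressed directly as code. The following is a minimal sketch that rests on several assumptions, because the paper's exact region, grid size and stopping criterion are not legible here:

- The iteration map is a Newton step for the hypothetical polynomial $z^3 - 1$. The iteration function of Method-I, II or III would be substituted for it.
- The square is $[-2, 2] \times [-2, 2]$.
- Convergence is declared when an iterate lies within `tol = 1e-8` of a known zero.

Each grid point receives the index of the zero it reaches within 25 iterations, or −1 (black) otherwise.

```python
import cmath
from typing import Callable, List, Sequence

Step = Callable[[complex], complex]


def newton_step(z: complex) -> complex:
    """Newton map for the hypothetical test polynomial p(z) = z^3 - 1."""
    return z - (z ** 3 - 1) / (3 * z ** 2)


def classify(z0: complex, step: Step, roots: Sequence[complex],
             tol: float = 1e-8, max_iter: int = 25) -> int:
    """Index of the zero reached from z0 within max_iter steps, or -1 (black)."""
    z = z0
    for _ in range(max_iter):
        try:
            z = step(z)
        except (ZeroDivisionError, OverflowError):
            return -1                      # iteration breaks down: treat as divergent
        for k, root in enumerate(roots):
            if abs(z - root) < tol:
                return k                   # converged: color of zero k
    return -1                              # tolerance not met within max_iter


def basin_grid(step: Step, roots: Sequence[complex], lo: float = -2.0,
               hi: float = 2.0, n: int = 200, tol: float = 1e-8,
               max_iter: int = 25) -> List[List[int]]:
    """Label each point of an n-by-n grid on the square [lo, hi] x [lo, hi]."""
    h = (hi - lo) / (n - 1)
    return [[classify(complex(lo + j * h, lo + i * h), step, roots, tol, max_iter)
             for j in range(n)]
            for i in range(n)]


# The three cube roots of unity, i.e. the zeros of z^3 - 1.
roots = [cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]
labels = basin_grid(newton_step, roots, n=41)
counts = {k: sum(row.count(k) for row in labels) for k in (-1, 0, 1, 2)}
print(counts)
```

Rendering `labels` as an image, with one color per zero index and black for −1, gives a basin picture of the kind shown in the figures.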

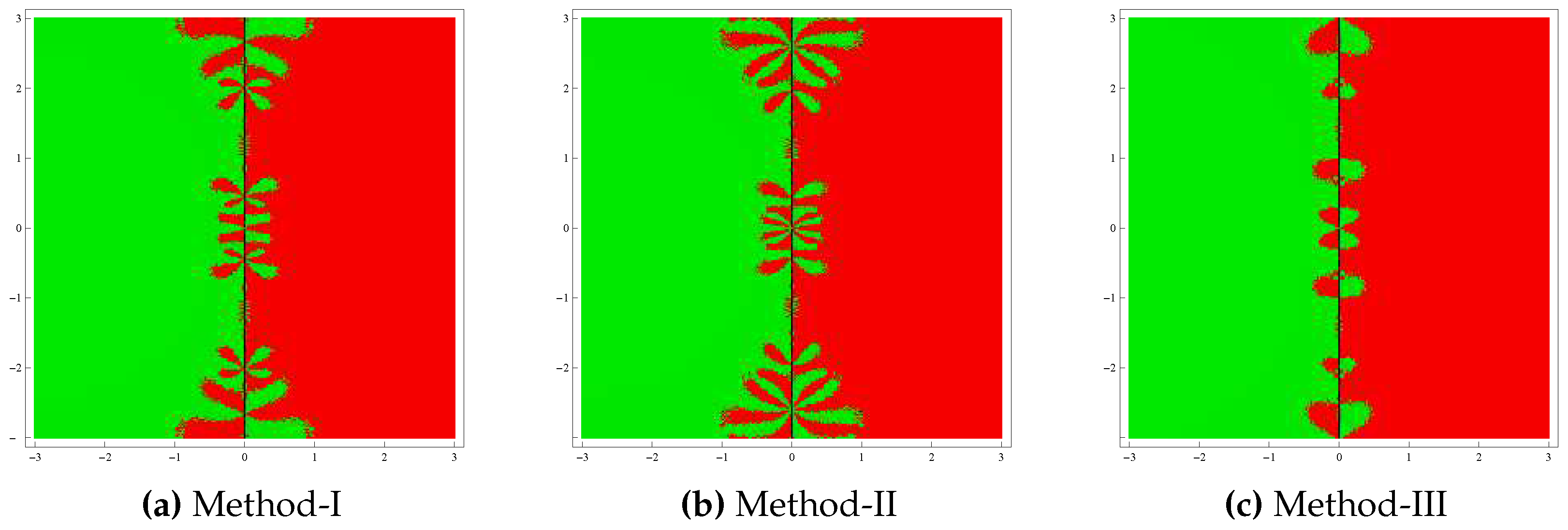

Test problem 1. Let having the zeros . The basins of attraction generated by the methods for this polynomial are shown in Figure 1. From this figure, it can be observed that Method-III behaves more stably than Methods I and II. In addition, Method-III exhibits very little chaotic behavior on the boundary points compared with the other methods.

Test problem 2. Consider the polynomial having the zeros . The basins produced by the methods are shown in Figure 2. In this case also, Method-III has the largest basins of attraction compared with Methods I and II. On the other hand, the fractal picture of Method-II has a large number of diverging points, shown as black zones.

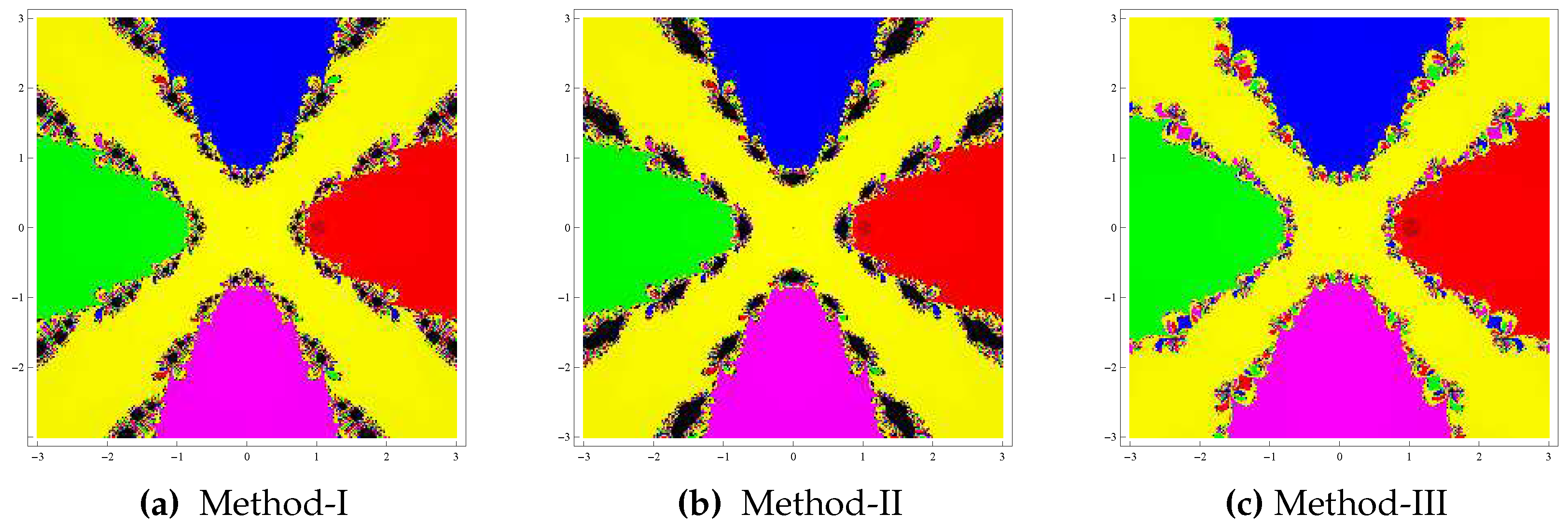

Test problem 3. Consider the biquadratic polynomial having simple zeros . The basins for this polynomial are exhibited in Figure 3. We observed that Method-III showed good convergence, with wider basins of attraction of the zeros than the other methods. We also noticed that Method-II had poor stability properties.

Test problem 4. Let having simple zeros . As in the previous problems, Method-III showed good convergence to the solutions compared with the other methods (see Figure 4). Moreover, it was the best method in terms of the least chaotic behavior on the boundary points. In contrast, Method-II had the largest number of divergent points, followed by Method-I.

5. Conclusions

In the present study, we discussed the convergence of existing sixth-order Jarratt-like methods. In the earlier convergence study, the conditions used were based on Taylor expansions requiring derivatives of the function up to the sixth or higher order, although the iterative procedures use only first-order derivatives. It is well understood that such hypotheses restrict the applicability of the schemes. The present study extended the applicability of the methods by using assumptions on the first-order derivative only. Moreover, this approach provides the radius of convergence, error bounds, and uniqueness results for the solution. These important elements of convergence are not provided by approaches based on Taylor expansions with higher-order derivatives, which may not exist, may be costly, or may be difficult to compute. Consequently, such approaches give no indication of how close the initial guess must be to the solution for the method to converge; the choice of the initial guess is a shot in the dark. The theoretical convergence results obtained here were verified by numerical tests. Finally, the convergence regions of the methods were also assessed with a graphical tool, namely, basins of attraction.