Higher-Order Derivative-Free Iterative Methods for Solving Nonlinear Equations and Their Basins of Attraction

Abstract

1. Introduction

2. Development of Derivative-Free Scheme

2.1. Optimal Fourth-Order Method

2.2. Optimal Eighth-Order Method

2.3. Optimal Sixteenth-Order Method

3. Convergence Analysis

4. Some Known Derivative-Free Methods

5. Test Problems

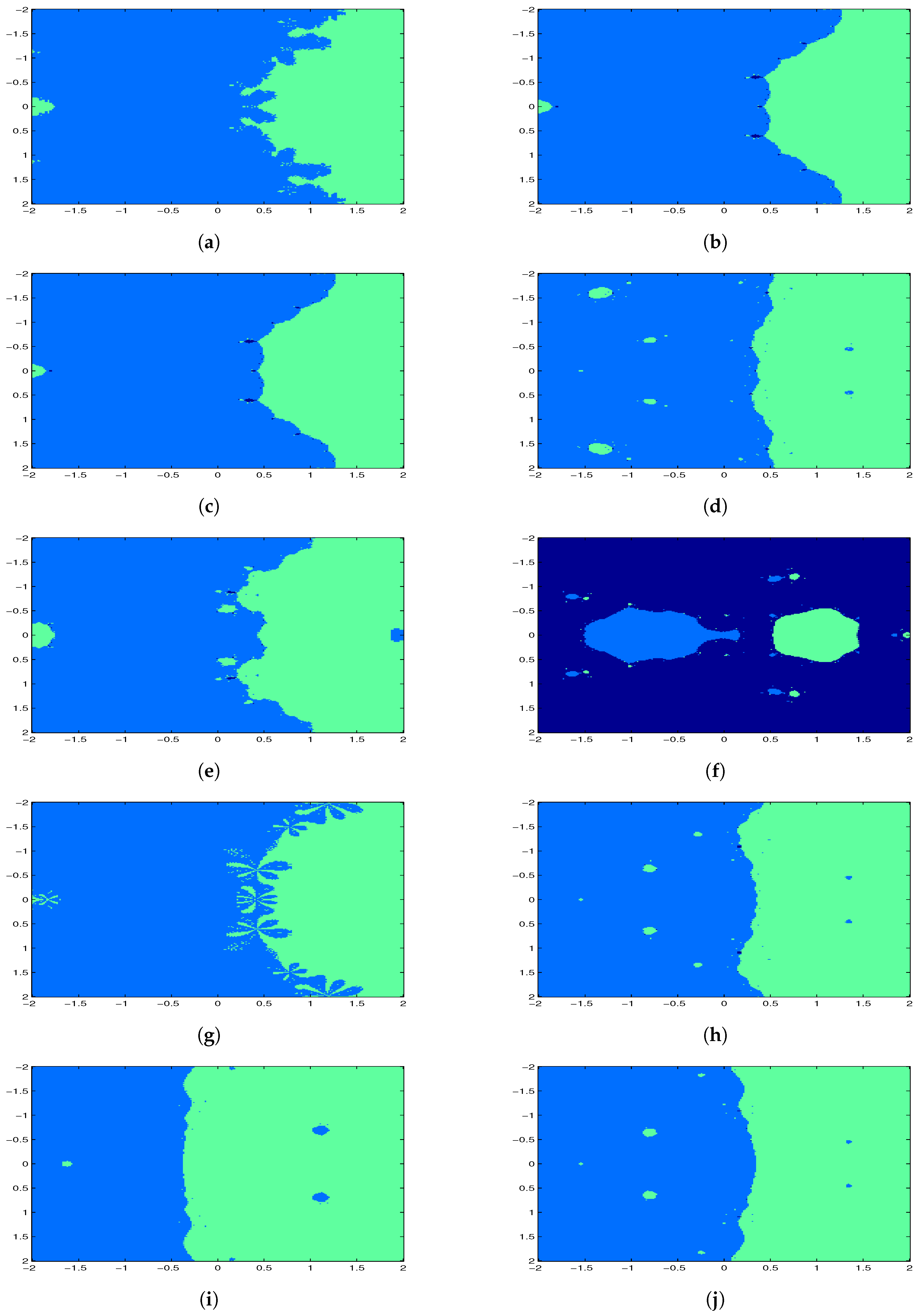

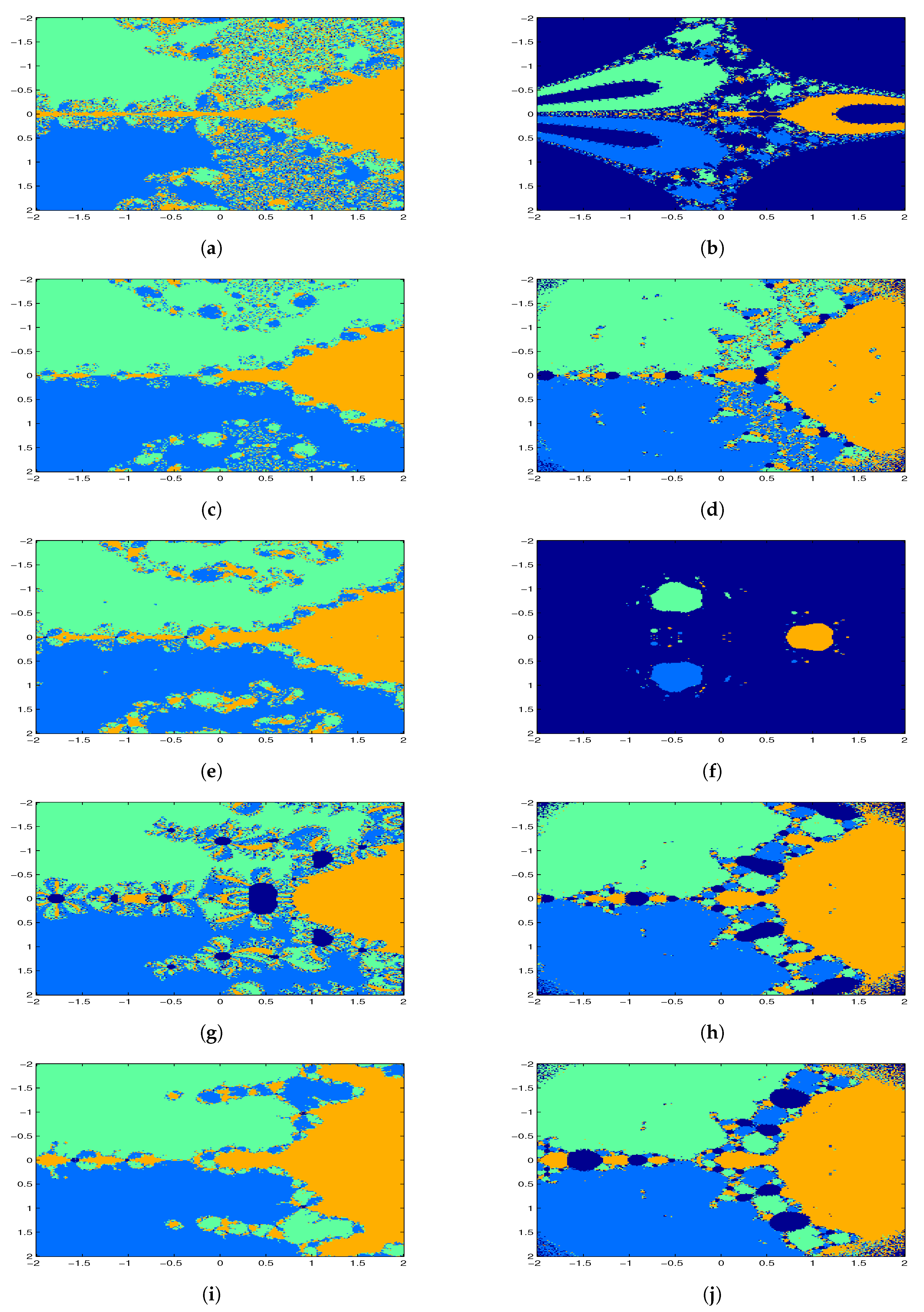

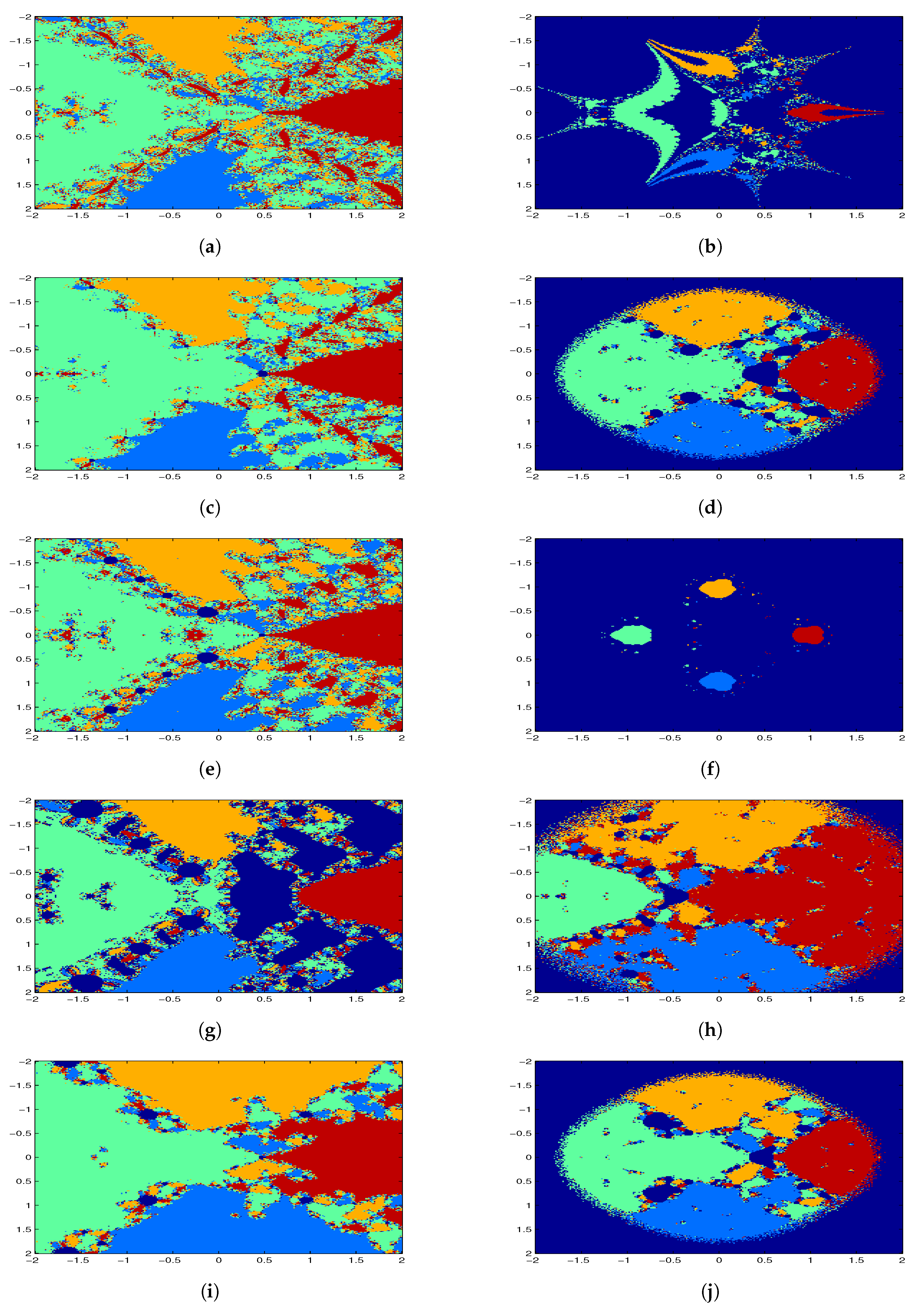

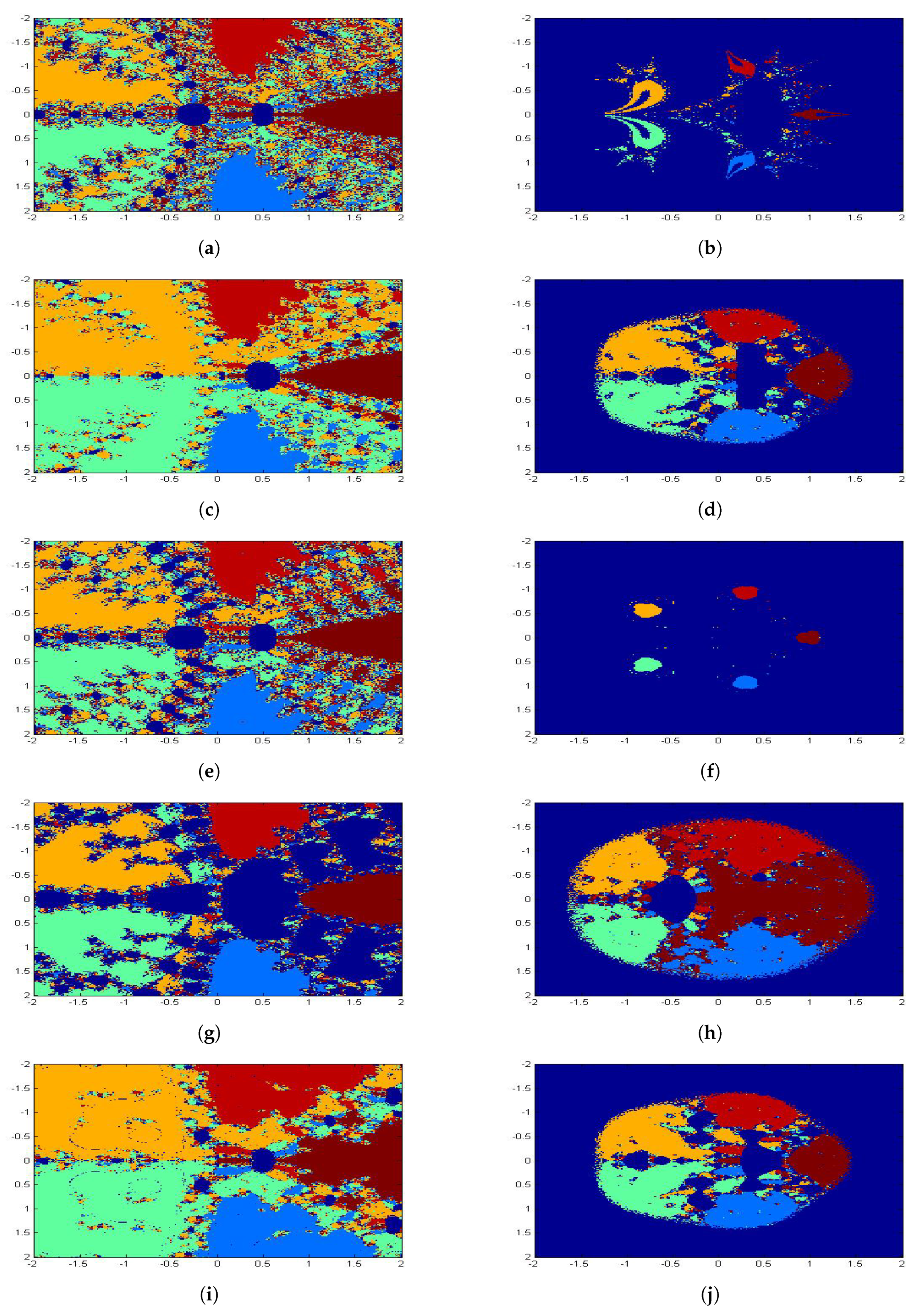

6. Basins of Attraction

7. Concluding Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. Generating optimal derivative free iterative methods for nonlinear equations by using polynomial interpolation. Math. Comput. Model. 2013, 57, 1950–1956.

- Soleymani, F. Efficient optimal eighth-order derivative-free methods for nonlinear equations. Jpn. J. Ind. Appl. Math. 2013, 30, 287–306.

- Steffensen, J.F. Remarks on iteration. Scand. Aktuarietidskr. 1933, 16, 64–72.

- Kung, H.; Traub, J. Optimal order of one-point and multi-point iteration. J. Assoc. Comput. Mach. 1974, 21, 643–651.

- Behl, R.; Salimi, M.; Ferrara, M.; Sharifi, S.; Alharbi, S.K. Some Real-Life Applications of a Newly Constructed Derivative Free Iterative Scheme. Symmetry 2019, 11, 239.

- Salimi, M.; Long, N.M.A.N.; Sharifi, S.; Pansera, B.A. A multi-point iterative method for solving nonlinear equations with optimal order of convergence. Jpn. J. Ind. Appl. Math. 2018, 35, 497–509.

- Salimi, M.; Lotfi, T.; Sharifi, S.; Siegmund, S. Optimal Newton-Secant like methods without memory for solving nonlinear equations with its dynamics. Int. J. Comput. Math. 2017, 94, 1759–1777.

- Matthies, G.; Salimi, M.; Sharifi, S.; Varona, J.L. An optimal three-point eighth-order iterative method without memory for solving nonlinear equations with its dynamics. Jpn. J. Ind. Appl. Math. 2016, 33, 751–766.

- Sharifi, S.; Siegmund, S.; Salimi, M. Solving nonlinear equations by a derivative-free form of the King’s family with memory. Calcolo 2016, 53, 201–215.

- Khdhr, F.W.; Saeed, R.K.; Soleymani, F. Improving the Computational Efficiency of a Variant of Steffensen’s Method for Nonlinear Equations. Mathematics 2019, 7, 306.

- Soleymani, F.; Babajee, D.K.R.; Shateyi, S.; Motsa, S.S. Construction of Optimal Derivative-Free Techniques without Memory. J. Appl. Math. 2012, 2012, 24.

- Soleimani, F.; Soleymani, F.; Shateyi, S. Some Iterative Methods Free from Derivatives and Their Basins of Attraction for Nonlinear Equations. Discret. Dyn. Nat. Soc. 2013, 2013, 10.

- Soleymani, F.; Sharifi, M. On a General Efficient Class of Four-Step Root-Finding Methods. Int. J. Math. Comp. Simul. 2011, 5, 181–189.

- Soleymani, F.; Vanani, S.K.; Paghaleh, M.J. A Class of Three-Step Derivative-Free Root Solvers with Optimal Convergence Order. J. Appl. Math. 2012, 2012, 15.

- Kanwar, V.; Bala, R.; Kansal, M. Some new weighted eighth-order variants of Steffensen-King’s type family for solving nonlinear equations and its dynamics. SeMA J. 2016.

- Amat, S.; Busquier, S.; Plaza, S. Dynamics of a family of third-order iterative methods that do not require using second derivatives. Appl. Math. Comput. 2004, 154, 735–746.

- Amat, S.; Busquier, S.; Plaza, S. Review of some iterative root-finding methods from a dynamical point of view. SCIENTIA Ser. A Math. Sci. 2004, 10, 3–35.

- Babajee, D.K.R.; Madhu, K. Comparing two techniques for developing higher order two-point iterative methods for solving quadratic equations. SeMA J. 2019, 76, 227–248.

- Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. A family of iterative methods with sixth and seventh order convergence for nonlinear equations. Math. Comput. Model. 2010, 52, 1490–1496.

- Curry, J.H.; Garnett, L.; Sullivan, D. On the iteration of a rational function: computer experiments with Newton’s method. Commun. Math. Phys. 1983, 91, 267–277.

- Soleymani, F.; Babajee, D.K.R.; Sharifi, M. Modified Jarratt Method without Memory with Twelfth-Order Convergence. Ann. Univ. Craiova Math. Comput. Sci. Ser. 2012, 39, 21–34.

- Tao, Y.; Madhu, K. Optimal Fourth, Eighth and Sixteenth Order Methods by Using Divided Difference Techniques and Their Basins of Attraction and Its Application. Mathematics 2019, 7, 322.

- Vrscay, E.R. Julia sets and Mandelbrot-like sets associated with higher order Schröder rational iteration functions: a computer assisted study. Math. Comput. 1986, 46, 151–169.

- Vrscay, E.R.; Gilbert, W.J. Extraneous fixed points, basin boundaries and chaotic dynamics for Schröder and König rational iteration functions. Numer. Math. 1987, 52, 1–16.

- Argyros, I.K.; Kansal, M.; Kanwar, V.; Bajaj, S. Higher-order derivative-free families of Chebyshev-Halley type methods with or without memory for solving nonlinear equations. Appl. Math. Comput. 2017, 315, 224–245.

- Zheng, Q.; Li, J.; Huang, F. An optimal Steffensen-type family for solving nonlinear equations. Appl. Math. Comput. 2011, 217, 9592–9597.

- Madhu, K. Some New Higher Order Multi-Point Iterative Methods and Their Applications to Differential and Integral Equation and Global Positioning System. Ph.D. Thesis, Pondicherry University, Pondicherry, India, June 2016.

- Scott, M.; Neta, B.; Chun, C. Basin attractors for various methods. Appl. Math. Comput. 2011, 218, 2584–2599.

| Methods | N | $\lvert x_1 - x_0\rvert$ | $\lvert x_2 - x_1\rvert$ | $\lvert x_3 - x_2\rvert$ | $\lvert x_N - x_{N-1}\rvert$ | $\rho$ |
|---|---|---|---|---|---|---|
| (1) | 8 | 0.0996 | 0.0149 | 6.1109 | 1.0372 | 1.99 |
| (22) | 5 | 0.1144 | 6.7948 | 3.4668 | 5.1591 | 4.00 |
| (23) | 4 | 0.1147 | 3.6299 | 9.5806 | 4.6824 | 3.99 |
| (24) | 5 | 0.1145 | 6.1744 | 1.5392 | 1.3561 | 4.00 |
| (5) | 4 | 0.1150 | 1.3758 | 2.6164 | 3.4237 | 3.99 |
| (25) | 3 | 0.1151 | 1.2852 | 3.7394 | 3.7394 | 7.70 |
| (26) | 3 | 0.1151 | 8.1491 | 1.5121 | 1.5121 | 7.92 |
| (27) | 4 | 0.1151 | 1.8511 | 1.0266 | 0 | 7.99 |
| (9) | 3 | 0.1151 | 7.1154 | 9.3865 | 9.3865 | 8.02 |
| (28) | 3 | 0.1151 | 5.6508 | 1.4548 | 1.4548 | 15.82 |
| (13) | 3 | 0.1151 | 5.3284 | 1.2610 | 1.2610 | 16.01 |

| Methods | N | $\lvert x_1 - x_0\rvert$ | $\lvert x_2 - x_1\rvert$ | $\lvert x_3 - x_2\rvert$ | $\lvert x_N - x_{N-1}\rvert$ | $\rho$ |
|---|---|---|---|---|---|---|
| (1) | 12 | 0.0560 | 0.0558 | 0.0520 | 1.7507 | 1.99 |
| (22) | 5 | 0.2184 | 0.0163 | 3.4822 | 4.7027 | 3.99 |
| (23) | 33 | 0.0336 | 0.0268 | 0.0171 | 2.4368 | 0.99 |
| (24) | 5 | 0.2230 | 0.0117 | 4.4907 | 3.9499 | 3.99 |
| (5) | 5 | 0.2123 | 0.0224 | 2.3433 | 4.3969 | 4.00 |
| (25) | 4 | 0.2175 | 0.0173 | 1.2720 | 1.0905 | 8.00 |
| (26) | D | D | D | D | D | D |
| (27) | 4 | 0.2344 | 4.1548 | 9.5789 | 7.7650 | 7.89 |
| (9) | 4 | 0.2345 | 2.4307 | 4.6428 | 8.2233 | 8.00 |
| (28) | 3 | 0.2348 | 2.2048 | 1.9633 | 1.9633 | 15.57 |
| (13) | 3 | 0.2348 | 2.8960 | 1.7409 | 1.7409 | 17.11 |

| Methods | N | $\lvert x_1 - x_0\rvert$ | $\lvert x_2 - x_1\rvert$ | $\lvert x_3 - x_2\rvert$ | $\lvert x_N - x_{N-1}\rvert$ | $\rho$ |
|---|---|---|---|---|---|---|
| (1) | 7 | 0.3861 | 0.0180 | 4.6738 | 1.0220 | 1.99 |
| (22) | 4 | 0.3683 | 2.8791 | 1.0873 | 2.2112 | 3.99 |
| (23) | 4 | 0.3683 | 2.5241 | 5.2544 | 9.8687 | 3.99 |
| (24) | 4 | 0.3683 | 3.1466 | 1.7488 | 1.6686 | 4.00 |
| (5) | 4 | 0.3683 | 2.2816 | 2.3732 | 2.7789 | 3.99 |
| (25) | 3 | 0.3680 | 1.7343 | 3.8447 | 3.8447 | 8.00 |
| (26) | 4 | 0.3680 | 4.2864 | 1.8700 | 2.4555 | 7.99 |
| (27) | 3 | 0.3680 | 7.8469 | 2.9581 | 2.9581 | 8.00 |
| (9) | 3 | 0.3680 | 9.7434 | 1.0977 | 1.0977 | 8.04 |
| (28) | 3 | 0.3680 | 1.4143 | 6.3422 | 6.3422 | 16.03 |
| (13) | 3 | 0.3680 | 3.6568 | 7.4439 | 7.4439 | 16.04 |

| Methods | N | $\lvert x_1 - x_0\rvert$ | $\lvert x_2 - x_1\rvert$ | $\lvert x_3 - x_2\rvert$ | $\lvert x_N - x_{N-1}\rvert$ | $\rho$ |
|---|---|---|---|---|---|---|
| (1) | 7 | 0.4975 | 0.0500 | 2.5378 | 1.9405 | 2.00 |
| (22) | 4 | 0.2522 | 1.7586 | 1.5651 | 9.8198 | 3.99 |
| (23) | 4 | 0.5489 | 0.0011 | 3.8305 | 5.5011 | 3.99 |
| (24) | 4 | 0.5487 | 9.0366 | 1.4751 | 1.0504 | 3.99 |
| (5) | 4 | 0.5481 | 3.0864 | 8.0745 | 3.7852 | 3.99 |
| (25) | 3 | 0.5477 | 5.4938 | 4.9628 | 4.9628 | 8.17 |
| (26) | 3 | 0.5477 | 4.1748 | 5.8518 | 5.8518 | 8.47 |
| (27) | 3 | 0.5477 | 5.4298 | 4.1081 | 4.1081 | 8.18 |
| (9) | 3 | 0.5477 | 5.8222 | 1.1144 | 1.1144 | 8.13 |
| (28) | 3 | 0.5477 | 2.7363 | 7.2982 | 7.2982 | 16.13 |
| (13) | 3 | 0.5477 | 5.6240 | 1.9216 | 1.9216 | 16.11 |

| Methods | N | $\lvert x_1 - x_0\rvert$ | $\lvert x_2 - x_1\rvert$ | $\lvert x_3 - x_2\rvert$ | $\lvert x_N - x_{N-1}\rvert$ | $\rho$ |
|---|---|---|---|---|---|---|
| (1) | 7 | 0.3072 | 0.0499 | 6.4255 | 4.1197 | 2.00 |
| (22) | 5 | 0.2585 | 0.0019 | 1.5538 | 3.4601 | 4.00 |
| (23) | 4 | 0.2571 | 4.4142 | 3.4097 | 1.2154 | 3.99 |
| (24) | 4 | 0.2580 | 0.0013 | 3.5840 | 1.8839 | 3.99 |
| (5) | 4 | 0.2569 | 2.8004 | 6.2960 | 1.6097 | 3.99 |
| (25) | 3 | 0.2566 | 4.1915 | 6.3444 | 6.3444 | 8.37 |
| (26) | 4 | 0.2566 | 4.0069 | 5.1459 | 0 | 7.99 |
| (27) | 4 | 0.2566 | 2.9339 | 1.0924 | 0 | 7.99 |
| (9) | 3 | 0.2566 | 3.7923 | 9.0207 | 9.0207 | 7.99 |
| (28) | 3 | 0.2566 | 5.3695 | 7.0920 | 7.0920 | 16.06 |
| (13) | 3 | 0.2566 | 1.1732 | 1.2394 | 1.2394 | 16.08 |
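Each row of the tables above appears to report an iteration count N, successive step sizes between iterates, and a final value that tracks the theoretical orders 2, 4, 8 and 16 of the methods being compared. The Python sketch below shows how such quantities can be produced for Steffensen's second-order derivative-free method. It assumes that the last column is the usual approximated computational order of convergence computed from the last four iterates. The test function `x*x - 2`, the starting point, the stopping tolerance and the 100-digit `decimal` precision are illustrative assumptions, not the paper's test problems or settings, and the helper names `steffensen`, `step_sizes` and `coc` are hypothetical.

```python
from decimal import Decimal, getcontext

# Assumption: 100 significant digits, so the last step sizes are not swamped by rounding.
getcontext().prec = 100


def steffensen(f, x0, tol=Decimal("1e-40"), max_iter=50):
    """Steffensen's method: x_{n+1} = x_n - f(x_n)^2 / (f(x_n + f(x_n)) - f(x_n)).

    Returns all iterates [x_0, x_1, ..., x_N]; stops once |x_N - x_{N-1}| < tol.
    """
    xs = [Decimal(x0)]
    for _ in range(max_iter):
        x = xs[-1]
        fx = f(x)
        if fx == 0:
            break  # landed exactly on a root
        # f(w) - f(x) with w = x + f(x) equals f[x, w] * f(x), so the update
        # below is x - f(x) / f[x, w]: Newton's step with f'(x) replaced by
        # a first-order divided difference.
        denom = f(x + fx) - fx
        if denom == 0:
            break  # divided difference vanished; cannot continue
        xs.append(x - fx * fx / denom)
        if abs(xs[-1] - xs[-2]) < tol:
            break
    return xs


def step_sizes(xs):
    """Return |x_k - x_{k-1}| for k = 1, ..., N."""
    return [abs(b - a) for a, b in zip(xs, xs[1:])]


def coc(xs):
    """Approximated computational order of convergence from the last four iterates:

    rho ~ ln(|x_N - x_{N-1}| / |x_{N-1} - x_{N-2}|)
          / ln(|x_{N-1} - x_{N-2}| / |x_{N-2} - x_{N-3}|)
    """
    if len(xs) < 4:
        raise ValueError("need at least four iterates")
    d = step_sizes(xs[-4:])  # the three most recent step sizes, oldest first
    return float((d[2] / d[1]).ln() / (d[1] / d[0]).ln())


if __name__ == "__main__":
    f = lambda x: x * x - 2  # hypothetical test function with root sqrt(2)
    xs = steffensen(f, "1.5")
    print("N =", len(xs) - 1)
    print("first steps:", [f"{s:.4e}" for s in step_sizes(xs)[:3]])
    print("rho ~", round(coc(xs), 2))
```

With these settings the iteration stops once a step falls below 1e-40, and `coc` returns a value close to 2, matching the second-order behaviour of Steffensen's method. Running the loop in high precision matters: in double precision the final step sizes would be dominated by rounding error and the order estimate would be unreliable.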

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, J.; Wang, X.; Madhu, K. Higher-Order Derivative-Free Iterative Methods for Solving Nonlinear Equations and Their Basins of Attraction. Mathematics 2019, 7, 1052. https://doi.org/10.3390/math7111052
