Abstract

We present a new method, called the quasi-inverse matrix diagonalization method, to efficiently solve multi-dimensional linear Partial Differential Equations (PDEs). In the proposed method, the Chebyshev-Galerkin method is used to solve multi-dimensional PDEs spectrally. Efficiency is achieved by converting dense systems of equations into sparse ones using the quasi-inverse technique and by separating coupled spectral modes using the matrix diagonalization method. When applied to 2-D and 3-D Poisson equations, coupled Helmholtz equations in 2-D, and a Stokes problem in 3-D, the proposed method was more efficient in all cases than current methods such as the quasi-inverse method and the matrix diagonalization method. Because of this efficiency, we believe it can be applied in various fields where multi-dimensional PDEs must be solved.

1. Introduction

The spectral method has been used in many fields to solve linear partial differential equations (PDEs) such as the Poisson equation, the Helmholtz equation, and the diffusion equation [1,2]. The advantage of the spectral method over other numerical methods in solving linear PDEs is its high accuracy; when solutions of PDEs are smooth enough, errors of numerical solutions decrease exponentially as the number of discretization nodes increases [3].

Different spectral basis functions are used to discretize in physical space depending on the type of boundary conditions. Many boundary conditions arise in mathematical and engineering linear PDEs, but they can be classified as periodic or non-periodic. For periodic boundary conditions, the domain is usually discretized using the Fourier series, while Legendre polynomials and Chebyshev polynomials are most frequently used as basis functions for non-periodic boundary conditions. To investigate an efficient way to solve PDEs spectrally, we restrict the scope of this paper to linear PDEs with non-periodic boundary conditions. Of the spectral basis functions that can be used with non-periodic boundary conditions, we use Chebyshev polynomials to discretize the equations in space, exploiting their minimum error properties to achieve spectral accuracy across the whole domain [4].

There are several methods for solving linear PDEs using Chebyshev polynomials. In the collocation method, linear PDEs are directly solved in physical space so that implementation of the method is relatively easy compared to other methods. However, the differentiation matrix derived from the collocation method is full, making computation slow, and ill-conditioning of the differentiation matrix in the collocation method can produce numerical solutions with high errors, especially when a high number of collocation points is used [5].

Another method for solving linear PDEs spectrally with Chebyshev polynomials is the Chebyshev-tau method, which involves solving linear PDEs in spectral space. In the Chebyshev-tau method, the boundary conditions of PDEs are imposed directly on the system of equations [5,6,7]. This enforcement of boundary conditions produces tau lines, which slow numerical calculation because they increase interactions between Chebyshev modes [8,9]. As the dimension of PDEs rises, the calculation time caused by tau lines increases rapidly, significantly slowing the solution of PDEs [10,11].

Unlike the Chebyshev-tau method, the Chebyshev-Galerkin method avoids imposing boundary conditions directly on the system of equations by discretizing in space using carefully chosen basis functions, called Galerkin basis functions, that automatically obey the given boundary conditions [8]. As a result, when the Chebyshev-Galerkin method is used, tau lines are not created in the system of equations, and therefore the interaction of tau lines in numerical calculations is not a concern. Because of this advantage, the Chebyshev-Galerkin method is popular for spectral calculation of PDEs. For example, Shen [12] studied proper choices of Galerkin basis functions satisfying standard boundary conditions such as Dirichlet and Neumann boundary conditions and solved linear PDEs with them, and Julien and Watson [13] and Liu et al. [11] used the Galerkin basis functions to solve multi-dimensional linear PDEs with high efficiency.

In 1-D problems, it is straightforward to write linear PDEs in matrix form in spectral space using a differentiation matrix (see Section 2 for more details). However, because the differentiation matrix is an upper triangular matrix, solving linear PDEs by inverting the differentiation matrix (or by applying iterative methods to it) is computationally expensive. Because computational cost increases rapidly as the dimension of the problem increases, efficiently computing solutions of linear PDEs becomes even more important in multi-dimensional problems. In 1-D problems, computational time can be easily saved by converting the dense system into a sparse one using the three-term recurrence relation [14]. However, due to the coupling of Chebyshev modes in multi-dimensional problems, finding an efficient way to solve linear PDEs is not as straightforward as in 1-D problems.

To facilitate computation in multi-dimensional linear PDE problems, Julien and Watson [13] presented a method called the quasi-inverse technique. Based on the three-term recurrence relation in 1-D, they constructed a quasi-inverse matrix and applied it to multi-dimensional linear PDE problems. With the quasi-inverse technique, dense differential operators in multi-dimensional linear PDEs were reduced to sparse operators, so multi-dimensional linear PDEs could be solved efficiently from the sparse systems with approximately operations for k-dimensional problems. Haidvogel and Zang [15] and Shen [12] presented another way to efficiently solve multi-dimensional linear PDEs, called the matrix diagonalization method. The key idea of the matrix diagonalization method is to decouple the coupled Chebyshev modes using eigenvalue and eigenvector decomposition [16], lowering the dimensionality of the problem so that solving a high-dimensional problem is converted into solving 1-D linear PDE problems multiple times as a function of the eigenvalues of the system [17].

In this paper, we propose a new method to solve multi-dimensional linear PDEs using Chebyshev polynomials, called the quasi-inverse matrix diagonalization method. Our method was created by adapting the quasi-inverse technique to the matrix diagonalization method. In our method, the systems of equations are first derived using the quasi-inverse technique, then the matrix diagonalization method is used to efficiently solve the derived systems using eigenvalue and eigenvector decomposition. Because our method combines the merits of both the quasi-inverse technique and the matrix diagonalization method, it computes numerical solutions of multi-dimensional linear PDE problems faster than either of these two methods. We describe our quasi-inverse matrix diagonalization method in this paper by applying it to four test examples.

The rest of our paper is organized as follows. In Section 2, we review the Chebyshev-tau method, the Chebyshev-Galerkin method, and the quasi-inverse technique with a 1-D Poisson equation. Then, the quasi-inverse matrix diagonalization method is explained in Section 3 by applying it to solve 2-D and 3-D Poisson equations based on the Chebyshev-Galerkin method. In Section 4, we apply our quasi-inverse matrix diagonalization method to several multi-dimensional linear PDEs and compare the results to those from the quasi-inverse method and the matrix diagonalization method in terms of accuracy of numerical solutions and CPU time to solve the problems. Our conclusions are described in Section 5.

2. 1-D Poisson Equation

In this section, we will explain the Chebyshev-tau method, the Chebyshev-Galerkin method, and the quasi-inverse technique by applying them to solve a 1-D Poisson equation.

2.1. Chebyshev-Tau Method

We start with the 1-D Poisson equation with the Dirichlet boundary conditions as follows:

Definition 1.

The and can be approximated in space with the M-th highest mode, respectively defined as

and

where is the m-th Chebyshev polynomial in x, and and are the m-th Chebyshev coefficients of and , respectively.

Definition 2.

The second derivative of u with respect to x (defined as ) can be approximated by a sum of the coefficients of the second derivatives multiplied by Chebyshev polynomials

Definition 3.

The coefficients of the second derivatives can be written as

where and for [18].

As a matrix form, Equation (6) can be rewritten as

where is usually called the 1-D Laplacian matrix discretized in x, whose matrix components are defined by the left-hand side of Equation (6), and and are the arrays that are defined as and , respectively. Note that is a square matrix of order , but the rank of the matrix is because all elements in the two bottom-most rows are zero. To make Equation (7) solvable, two additional equations that are provided from the boundary conditions of the Poisson equation as follows

are required to be enforced to the matrix in Equation (7). If the boundary conditions incorporated 1-D Laplacian matrix and the boundary conditions incorporated right-hand side array are defined as and , respectively, solutions of the 1-D Poisson equation can be obtained from

by solving Equation (9) for . However, solving the 1-D Poisson equation from Equation (9) is an inefficient way to obtain numerical solutions because the left-hand side matrix of Equation (9) is an upper triangular matrix with the tau lines at the bottom. The operation complexity of solving this system is , which is not computationally cheap.
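The Chebyshev-tau procedure of this subsection can be sketched in a few lines. The following is a minimal illustration, not the implementation used for the results in this paper. It assumes the domain is [-1, 1] with homogeneous Dirichlet conditions, uses the standard formula for the second-derivative coefficients (with c_0 = 2 and c_m = 1 for m ≥ 1), and places the two boundary rows at the bottom of the matrix. The function names and the test problem u = sin(πx) are hypothetical choices made for illustration.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def cheb_d2_matrix(M):
    """Second-derivative matrix acting on Chebyshev coefficients a_0..a_M."""
    D2 = np.zeros((M + 1, M + 1))
    for m in range(M + 1):
        c_m = 2.0 if m == 0 else 1.0
        for p in range(m + 2, M + 1, 2):       # p >= m + 2 with p + m even
            D2[m, p] = p * (p * p - m * m) / c_m
    return D2

def solve_poisson_tau(f_hat):
    """Solve u'' = f on [-1, 1] with u(-1) = u(1) = 0 (assumed setup)."""
    M = len(f_hat) - 1
    A = cheb_d2_matrix(M)
    rhs = np.array(f_hat, dtype=float)
    # The two bottom rows of D2 are zero; overwrite them with the tau lines.
    A[M - 1, :] = 1.0                          # u(+1) = sum_m a_m
    A[M, :] = (-1.0) ** np.arange(M + 1)       # u(-1) = sum_m (-1)^m a_m
    rhs[M - 1] = 0.0
    rhs[M] = 0.0
    return np.linalg.solve(A, rhs)             # dense solve of the tau system

# Hypothetical test problem: u = sin(pi x), so f = -pi^2 sin(pi x).
M = 24
f_hat = cheb.chebinterpolate(lambda x: -np.pi**2 * np.sin(np.pi * x), M)
u_hat = solve_poisson_tau(f_hat)
u_ref = cheb.chebinterpolate(lambda x: np.sin(np.pi * x), M)
max_err = np.max(np.abs(u_hat - u_ref))
print("max coefficient error:", max_err)
```

The dense solve is used here only for brevity; it is exactly the cost that the quasi-inverse approach of the next subsection is designed to avoid.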

2.2. Quasi-Inverse Approach

A better way of computing the 1-D Poisson equation is using recursion relations in computing derivatives

where is the m-th Chebyshev coefficients of q-th derivative of u with respect to x defined as

If we use Equation (10) with and , it respectively gives

where is used instead of . Without loss of generality, to satisfy Equation (1) in the Chebyshev space for all m, the condition must hold. Then, substituting and in Equation (12) to and , respectively, and rearranging the terms give

Remark 1.

If the matrix is defined as the identity matrix except for the two top-most diagonal elements whose values zero out as , Equation (15) can be written in a matrix form as

where the matrix is the tridiagonal matrix, where the non-zero elements of defined by are given as

for .

Note that the subscript x in matrix symbols (e.g., and ) is to indicate that those matrices are associated with x. Using this subscript symbol identifying which direction the matrix comes from is useful in distinguishing the influence of matrices in multi-dimensional problems where subscripts can be x and y in 2-D, and , and z in 3-D. When the matrix is multiplied on the left in Equation (7), it is possible to claim

The matrix is called a quasi-inverse matrix in x for the Laplace operator because it acts like an inverse matrix of the Laplace operator, producing the quasi-identity matrix (not identity matrix) when multiplied with the Laplace operator.

Similar to the Chebyshev-tau method, boundary conditions need to be incorporated to Equation (16). To do it, we rewrite two equations in Equation (8) as a matrix. By defining as the matrix whose elements are zeros, except for the top two rows where all elements’ values in the top row are one and the values in the second row from the top are alternatively 1 and from the first to last columns, the boundary conditions can be written in a matrix form as

where the is the zero array. Adding Equation (18) to Equation (16) gives

Note that is the zero array only under the given boundary conditions in Equation (1). The can be omitted in Equation (19) because is the zero array, but we keep this term to clearly show how the boundary conditions are enforced in the equation. The left-hand side matrix of Equation (19) is sparse, so it can be solved efficiently. Non-zero components of are diagonal elements from and the top two rows’ entries from forming tau lines. This matrix can be solved with operations for using simple Gaussian elimination. Compared to the method described in Section 2.1, solving a Poisson equation with the quasi-inverse approach is more efficient because the quasi-inverse approach reduces the operation complexity by one order of the number of unknowns.
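The quasi-inverse construction can be checked numerically. The sketch below builds a quasi-inverse matrix from the standard three-term recurrence for Chebyshev derivative coefficients and verifies that its product with the second-derivative matrix is the quasi-identity. The entries and the truncation of the last rows follow the common convention and are an assumption here; they may differ in indexing from the expressions given after Equation (16). The function names are hypothetical, and a dense solver stands in for the sparse elimination described above.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def cheb_d2_matrix(M):
    """Second-derivative matrix on Chebyshev coefficients (as in Section 2.1)."""
    D2 = np.zeros((M + 1, M + 1))
    for m in range(M + 1):
        c_m = 2.0 if m == 0 else 1.0
        for p in range(m + 2, M + 1, 2):
            D2[m, p] = p * (p * p - m * m) / c_m
    return D2

def quasi_inverse(M):
    """Quasi-inverse of the second derivative; rows 0 and 1 are zero."""
    B = np.zeros((M + 1, M + 1))
    for m in range(2, M + 1):
        c = 2.0 if m == 2 else 1.0             # c_{m-2}
        B[m, m - 2] = c / (4.0 * m * (m - 1))
        if m + 2 <= M:                         # assumed truncation convention
            B[m, m] = -1.0 / (2.0 * (m * m - 1))
        if m + 4 <= M:
            B[m, m + 2] = 1.0 / (4.0 * m * (m + 1))
    return B

def solve_poisson_quasi_inverse_tau(f_hat):
    """Solve u'' = f on [-1, 1], u(-1) = u(1) = 0, from the sparse system."""
    M = len(f_hat) - 1
    A = np.eye(M + 1)                          # quasi-identity plus tau lines
    A[0, :] = 1.0                              # u(+1) = 0
    A[1, :] = (-1.0) ** np.arange(M + 1)       # u(-1) = 0
    rhs = quasi_inverse(M) @ f_hat             # top two entries are zero
    return np.linalg.solve(A, rhs)             # dense stand-in for sparse solve

M = 24
quasi_identity = np.eye(M + 1)
quasi_identity[0, 0] = quasi_identity[1, 1] = 0.0
identity_ok = np.allclose(quasi_inverse(M) @ cheb_d2_matrix(M), quasi_identity)

f_hat = cheb.chebinterpolate(lambda x: -np.pi**2 * np.sin(np.pi * x), M)
u_hat = solve_poisson_quasi_inverse_tau(f_hat)
u_ref = cheb.chebinterpolate(lambda x: np.sin(np.pi * x), M)
max_err = np.max(np.abs(u_hat - u_ref))
print(identity_ok, max_err)
```

Apart from the two tau rows, the system matrix is diagonal, which is what makes the elimination cheap.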

The key point of the quasi-inverse approach for a Poisson equation is the constitution of the quasi-inverse matrix for the Laplace operator. Multiplying to the both sides of the Poisson equation simplifies the system of the equation based on the property of described in Equation (17). This property of the quasi-inverse matrix can also be used in solving multi-dimensional PDEs efficiently, which will be described in Section 3.

2.3. Chebyshev-Galerkin Method

One disadvantage of the Chebyshev-tau method is the direct enforcement of boundary conditions to the system, which creates tau lines in the equation. For example, when the Poisson equation is solved with the quasi-inverse technique using the Chebyshev-tau method so that the final equation is expressed as Equation (19), the enforcement of boundary conditions generates tau lines in the top two rows. These tau lines increase interaction between Chebyshev modes, hindering fast calculations of the equation. To overcome this drawback of the Chebyshev-tau method and enable efficient computation, researchers have used the Chebyshev-Galerkin method to solve PDEs.

While the boundary conditions of PDEs are directly enforced to the equations of systems in the Chebyshev-tau method, when the Chebyshev-Galerkin method is used, boundary conditions are automatically satisfied without direct boundary condition enforcement to the equations. This is done by discretizing PDEs with new basis functions called Galerkin basis functions that satisfy the given boundary conditions automatically. There are plenty of ways to define Galerkin basis functions satisfying specific boundary conditions, but one commonly used way is representing Galerkin basis functions with linear combinations of Chebyshev polynomials to allow simple but fast transformations between Chebyshev space and Galerkin space. In solving PDEs with Dirichlet boundary conditions using the Chebyshev-Galerkin method, Shen [12] showed the efficiency of the following Galerkin basis functions

where is the m-th Galerkin basis function. In this paper, we used the same Galerkin basis functions as Shen used to transform efficiently from Chebyshev space to Galerkin space and vice versa.

Definition 4.

When a 1-D Poisson equation is solved with the Chebyshev-Galerkin method using the Galerkin basis functions defined in Equation (20), can be approximated by a sum of the Galerkin basis functions multiplied by their coefficients , which can be written as

Remark 2.

Note that the matrix is not a square matrix because is discretized with -th modes in Chebyshev space but discretized with -th modes in Galerkin space, where the difference comes from the absence of boundary conditions enforcement at the Chebyshev-Galerkin method. Substituting Equation (22) into Equation (16) gives

where and . Note that is the matrix and is the array. The top two rows’ elements of the matrix and array are zeros because these rows are discarded for boundary conditions enforcement. However, in the Chebyshev-Galerkin method, since the boundary conditions are automatically obeyed by the proper choice of Galerkin basis functions, the top two rows of and are no longer necessary. Therefore, those modes can be deleted from the equation so that only the non-discarded modes in Equation (23) are considered.

Definition 5.

Let us define the symbol tilde to represent the top two rows of a certain matrix that are thrown away. Based on this notation, and are respectively the matrix and array, which are the subsets of and , where the top two rows of and are omitted, respectively. Then, we can take into account only non-discarded modes from Equation (23) by using and

Because the matrix is the bi-diagonal matrix whose main diagonal elements are all 1 and the second super-diagonal elements are all , Equation (24) can be solved for with the backward substitution efficiently in operations. After is computed, the solution of the 1-D Poisson equation can be easily obtained from Equation (22).
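The back substitution described above can be sketched as follows. This is a minimal illustration that assumes Shen's Dirichlet basis in the form φ_m = T_m − T_{m+2}; with this sign convention the reduced matrix has −1 on the main diagonal and +1 on the second super-diagonal, which may differ by an overall sign from the convention of Equation (20). The quasi-inverse entries are the standard recurrence coefficients, and all function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def quasi_inverse(M):
    """Quasi-inverse of the second derivative (assumed standard form)."""
    B = np.zeros((M + 1, M + 1))
    for m in range(2, M + 1):
        B[m, m - 2] = (2.0 if m == 2 else 1.0) / (4.0 * m * (m - 1))
        if m + 2 <= M:
            B[m, m] = -1.0 / (2.0 * (m * m - 1))
        if m + 4 <= M:
            B[m, m + 2] = 1.0 / (4.0 * m * (m + 1))
    return B

def solve_poisson_galerkin(f_hat):
    """Solve u'' = f on [-1, 1] with u(-1) = u(1) = 0 by back substitution."""
    M = len(f_hat) - 1
    g = (quasi_inverse(M) @ f_hat)[2:]         # drop the two discarded rows
    v = np.zeros(M - 1)                        # Galerkin coefficients
    # Reduced bi-diagonal system: -v[i] + v[i + 2] = g[i], i = 0..M-2.
    for i in range(M - 2, -1, -1):
        upper = v[i + 2] if i + 2 <= M - 2 else 0.0
        v[i] = upper - g[i]
    # Back to Chebyshev space: a_m = v_m - v_{m-2}.
    a = np.zeros(M + 1)
    a[:M - 1] += v
    a[2:] -= v
    return a

M = 24
f_hat = cheb.chebinterpolate(lambda x: -np.pi**2 * np.sin(np.pi * x), M)
u_hat = solve_poisson_galerkin(f_hat)
u_ref = cheb.chebinterpolate(lambda x: np.sin(np.pi * x), M)
max_err = np.max(np.abs(u_hat - u_ref))
bc_plus = np.sum(u_hat)                                  # u(+1)
bc_minus = np.sum(u_hat * (-1.0) ** np.arange(M + 1))    # u(-1)
print(max_err, bc_plus, bc_minus)
```

No boundary rows appear in the system: the boundary values vanish because every basis function does, and the loop touches each unknown once.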

3. Quasi-Inverse Matrix Diagonalization Method

The quasi-inverse technique can be used with both the Chebyshev-tau method and the Chebyshev-Galerkin method. In this paper, we will use the quasi-inverse technique with the Chebyshev-Galerkin method to solve multi-dimensional problems for two reasons: (1) using the Galerkin basis function is much more convenient to apply the matrix diagonalization method because the Chebyshev-tau method needs to impose boundary conditions to the equation, which makes the entire calculation complicated in multi-dimensional problems and sometimes makes equations non-separable, and (2) the Chebyshev-Galerkin method is faster than the Chebyshev-tau method to obtain numerical solutions of PDEs. Due to these advantages, our quasi-inverse matrix diagonalization method is invented based on the Chebyshev-Galerkin method. In this section, we will introduce the quasi-inverse matrix diagonalization method by applying it to solve 2-D and 3-D Poisson equations.

3.1. 2-D Poisson Equation

Solving PDEs (including 2-D and 3-D Poisson equations) using the quasi-inverse matrix diagonalization method is divided into two steps: (1) derive sparse systems of equations using the quasi-inverse technique, and (2) solve the derived equations efficiently using the matrix diagonalization method.

3.1.1. Quasi-Inverse Technique

The 2-D Poisson equation with Dirichlet boundary conditions can be expressed as follows:

Definition 6.

When the 2-D Poisson equation is solved spectrally with Chebyshev polynomials, and are respectively discretized in space as

and

where L and M are the highest Chebyshev modes in x and y, respectively, and and are Chebyshev coefficients of and , respectively.

Multi-dimensional PDEs can be solved with the combination of a 1-D differential matrix using the Kronecker product [19]. Here, the Kronecker product of matrices and is defined as

where is the element of matrix . Because the second derivative of u with respect to x and y in 2-D are respectively equal to and where and are respectively the 1-D Laplacian matrices differentiated in x and y, Equation (25) can be rewritten in a matrix form as

Here, and are the arrays where the i row elements and equal and , respectively, where for and . To make use of the advantage of the quasi-inverse technique, we multiply on the left by to Equation (28). Then, based on the property of the Kronecker product such that , we can obtain

Definition 7.

In the Chebyshev-Galerkin method, is approximated by the coefficients of the Galerkin basis functions multiplied by the Galerkin basis functions in x and y, defined by

Remark 3.

If we define as the array in which the j row element is equal to where for and , equating Equations (30) and (26) allows us to transform Chebyshev space to Galerkin space from

Here, the sizes of matrices and are and , respectively.

Then, by defining and where and y, it is possible to obtain

from Equation (32). The top two rows of all matrices in Equation (33) are zero because these rows are discarded for boundary conditions enforcement. As studied in the 1-D Poisson equation, discarded modes should be omitted from the equation when a PDE is solved with the Chebyshev-Galerkin method. Using the tilde notation described in Section 2.3, we can only consider non-discarded modes at Equation (33), which can be written as

The array can be computed by inverting the sparse matrix in Equation (34), but we will further use the matrix diagonalization method to obtain the solutions more efficiently.
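Two Kronecker-product facts carry the derivation in this subsection: the mixed-product property used when multiplying by the quasi-inverse matrices, and the equivalence between a Kronecker-product system acting on a flattened array and a two-sided matrix equation. The short check below illustrates both with random matrices. The row-major flattening is an assumption of this sketch, and the ordering of the array elements in Equation (28) may follow a different convention.

```python
import numpy as np

rng = np.random.default_rng(0)
A1, A2 = rng.standard_normal((3, 3)), rng.standard_normal((3, 3))
B1, B2 = rng.standard_normal((4, 4)), rng.standard_normal((4, 4))

# Mixed-product property: (A1 x B1)(A2 x B2) = (A1 A2) x (B1 B2).
lhs = np.kron(A1, B1) @ np.kron(A2, B2)
rhs = np.kron(A1 @ A2, B1 @ B2)
mixed_product_ok = np.allclose(lhs, rhs)

# With row-major flattening, (A1 x B1) vec(X) = vec(A1 X B1^T).
X = rng.standard_normal((3, 4))
vec_ok = np.allclose(np.kron(A1, B1) @ X.ravel(), (A1 @ X @ B1.T).ravel())

print(mixed_product_ok, vec_ok)
```

The second identity is what allows the array equation to be reshaped into a matrix equation before the diagonalization step.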

3.1.2. Matrix Diagonalization Method

To separate Equation (34) using the eigenvalue and eigenvector decomposition technique, we first reshape the array to the matrix and define the matrix as . Similarly, let’s reshape the array to the matrix and define the matrix as . Then, we can rewrite Equation (34) as

Multiplying to Equation (35) on the right by gives

We want to emphasize that an n-by-n matrix is diagonalizable if and only if the matrix has n independent eigenvectors [20]. From this argument, we can easily show that the matrix is diagonalizable because matrices and (and their inverse and transpose matrices) are linearly independent, and multiplication of two linearly independent matrices is also linearly independent. As a result, using eigenvalue decomposition, the matrix can be decomposed as

where and are the eigenvector and diagonal eigenvalue matrices of , respectively.

After substituting Equation (37) to Equation (36), multiplying on the right by allows us to obtain

where and . The matrix in Equation (38) can be solved efficiently column by column due to the fact that is the diagonal matrix. If we set and and define the j-th diagonal element of as , the j-th column of Equation (38) can be written as

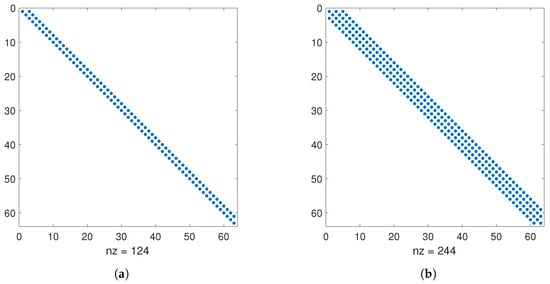

for . The matrices and are bi-diagonal and quad-diagonal matrices, respectively, whose non-zero elements are presented in Figure 1. Due to the sparsity, solving Equation (39) for can be performed efficiently with operations for all j. Once it is performed so that all elements of the matrix are obtained, the matrix can be computed from

Figure 1.

Non-zero elements of the matrices and are presented in (a) and (b), respectively. Here, both L and M are set to 64.
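The two steps of Section 3.1 can be combined into a compact solver sketch. The code below is an illustration under stated assumptions rather than the implementation used for the results in Section 4: it assumes the domain [-1, 1]² with homogeneous Dirichlet conditions, Shen's basis in the form φ_m = T_m − T_{m+2}, the standard recurrence form of the quasi-inverse, and dense solves in place of banded ones. The names `reduced_ops` and `solve_poisson_2d` and the test problem are hypothetical. Here `E` and `G` play the roles of the bi-diagonal and quad-diagonal matrices.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def reduced_ops(M):
    """Return (B~, S, E, G) for one direction with Chebyshev modes 0..M."""
    B = np.zeros((M + 1, M + 1))               # quasi-inverse (assumed form)
    for m in range(2, M + 1):
        B[m, m - 2] = (2.0 if m == 2 else 1.0) / (4.0 * m * (m - 1))
        if m + 2 <= M:
            B[m, m] = -1.0 / (2.0 * (m * m - 1))
        if m + 4 <= M:
            B[m, m + 2] = 1.0 / (4.0 * m * (m + 1))
    S = np.zeros((M + 1, M - 1))               # Galerkin -> Chebyshev
    k = np.arange(M - 1)
    S[k, k] = 1.0
    S[k + 2, k] = -1.0
    E = S[2:, :]                               # bi-diagonal (rows 2..M kept)
    G = (B @ S)[2:, :]                         # quad-diagonal
    return B[2:, :], S, E, G

def solve_poisson_2d(F):
    """F[l, m]: Chebyshev coefficients of f. Returns coefficients of u."""
    L, M = F.shape[0] - 1, F.shape[1] - 1
    Bx, Sx, Ex, Gx = reduced_ops(L)
    By, Sy, Ey, Gy = reduced_ops(M)
    rhs = Bx @ F @ By.T                        # Ex V Gy^T + Gx V Ey^T = rhs
    EyT_inv = np.linalg.inv(Ey.T)
    lam, P = np.linalg.eig(Gy.T @ EyT_inv)     # decouple the y modes
    F_hat = rhs @ EyT_inv @ P
    V_hat = np.empty_like(F_hat)
    for j in range(M - 1):                     # one 1-D problem per eigenvalue
        V_hat[:, j] = np.linalg.solve(lam[j] * Ex + Gx, F_hat[:, j])
    V = (V_hat @ np.linalg.inv(P)).real        # back from eigenvector space
    return Sx @ V @ Sy.T                       # Galerkin -> Chebyshev

# Hypothetical test: u = sin(pi x) sin(pi y), so f = -2 pi^2 u.
N = 24
s = cheb.chebinterpolate(lambda x: np.sin(np.pi * x), N)
U = solve_poisson_2d(-2.0 * np.pi**2 * np.outer(s, s))
err = np.max(np.abs(U - np.outer(s, s)))
print("max coefficient error:", err)
```

In a production code each 1-D system would be solved with a banded solver so that the cost per column stays linear in the number of modes; the dense `np.linalg.solve` call is used here only to keep the sketch short.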

3.2. 3-D Poisson Equation

By following the same steps used in the 2-D problem, we can apply the quasi-inverse matrix diagonalization method to 3-D PDEs. Here, we explain how to use the quasi-inverse matrix diagonalization method for 3-D problems by applying it to a 3-D Poisson equation.

3.2.1. Quasi-Inverse Technique

We want to solve the 3-D Poisson equation with the following Dirichlet boundary conditions:

Definition 8.

In employing Chebyshev polynomials to solve PDEs, and are represented by the sums of Chebyshev coefficients multiplied by Chebyshev polynomials, defined as

and

where L, M and N are the highest Chebyshev modes in x, y, and z, respectively, and and are the -th Chebyshev coefficients of and , respectively.

In a matrix form, Equation (41) can be rewritten using the Kronecker product as

where and are the arrays in which the j row components of those arrays are respectively equal to and where for and . Multiplying to the left of Equation (44) by gives

Definition 9.

When using the Chebyshev-Galerkin method, we approximate with the Galerkin basis functions, with their coefficients as

where is the mode coefficient of the Galerkin basis functions.

Remark 4.

By defining as the array in which the j row component is set to where for and , the arrays and are associated by

Substituting Equation (47) to Equation (45) and distributing the transformation matrix in each direction produce

As we defined in the solution of the 2-D Poisson equation, use notations of and for and z. Then, Equation (49) can be rewritten as

At each matrix in Equation (49), all elements in the top two rows are zeros because those places are discarded to impose boundary conditions. Erasing those rows and using the symbol tilde to represent the deleted top two rows of given matrices give

For simplicity, define , and , then Equation (50) can be simplified as

3.2.2. Matrix Diagonalization Method

Decomposition of Equation (51) can be performed by using the same matrix diagonalization steps used in the 2-D Poisson equation. However, here we want to briefly describe the procedure again to clearly show how the equation is diagonalized and solved efficiently in 3-D problems.

We reshape the array and to the matrix and matrix and define them as and , respectively. With these matrices, we can rewrite Equation (51) as

Let us multiply on the right by to Equation (52)

and decompose as

where and are the eigenvector matrix and diagonal eigenvalue matrix of , respectively. Then, after substituting Equation (54) to Equation (53) and multiplying on the right by , if we define and , it is possible to obtain

for where the is the k-th diagonal element of , and and are set as and , respectively.

Equation (55) is decoupled in z direction but still coupled in x and y directions. To decouple it completely in all directions, based on the definition of and , Equation (55) can be expanded as

Again, let’s reshape arrays and to matrices and refer those matrices as and . Note that the index k in the parenthesis here is used to clearly show that those matrices are a function of k so that the matrices’ entries are changed when k is changed. Defining = allows Equation (56) to be rewritten as

At each k, Equation (57) is the 2-D coupled equation. This is the same type of equation as Equation (35), and therefore the can be efficiently solved by applying the matrix diagonalization method once again to separate coupled modes in x and y directions. To do this, after multiplying on the right by to Equation (57), decompose where and are the eigenvector matrix and diagonal eigenvalue matrix of , respectively. After that, if we multiply on the right by and set and , then we can get

Here, the is the j-th diagonal element of , and and are set to and . For each k and j, Equation (58) can be solved for in operations.
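A 3-D version can be sketched along the same lines. The variant below diagonalizes the y and z operators once each and then solves one banded 1-D system in x for every pair (j, k); it is meant to illustrate the structure of the method and may differ in detail from the sequence of Equations (52) to (58). It assumes the domain [-1, 1]³ with homogeneous Dirichlet conditions, Shen's basis in the form φ_m = T_m − T_{m+2}, and the standard recurrence form of the quasi-inverse. All names are hypothetical, and dense solves replace banded ones for brevity.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def reduced_ops(M):
    """Return (B~, S, E, G) for one direction with Chebyshev modes 0..M."""
    B = np.zeros((M + 1, M + 1))               # quasi-inverse (assumed form)
    for m in range(2, M + 1):
        B[m, m - 2] = (2.0 if m == 2 else 1.0) / (4.0 * m * (m - 1))
        if m + 2 <= M:
            B[m, m] = -1.0 / (2.0 * (m * m - 1))
        if m + 4 <= M:
            B[m, m + 2] = 1.0 / (4.0 * m * (m + 1))
    S = np.zeros((M + 1, M - 1))               # Galerkin -> Chebyshev
    k = np.arange(M - 1)
    S[k, k] = 1.0
    S[k + 2, k] = -1.0
    return B[2:, :], S, S[2:, :], (B @ S)[2:, :]

def solve_poisson_3d(F):
    """F[l, m, n]: Chebyshev coefficients of f. Returns coefficients of u."""
    L, M, N = (n - 1 for n in F.shape)
    Bx, Sx, Ex, Gx = reduced_ops(L)
    By, Sy, Ey, Gy = reduced_ops(M)
    Bz, Sz, Ez, Gz = reduced_ops(N)
    R = np.einsum('il,jm,kn,lmn->ijk', Bx, By, Bz, F, optimize=True)
    # Diagonalize E^{-1} G in y and in z (one decomposition per direction).
    ly, Py = np.linalg.eig(np.linalg.solve(Ey, Gy))
    lz, Pz = np.linalg.eig(np.linalg.solve(Ez, Gz))
    Ty = np.linalg.solve(Py, np.linalg.inv(Ey))    # Py^{-1} Ey^{-1}
    Tz = np.linalg.solve(Pz, np.linalg.inv(Ez))    # Pz^{-1} Ez^{-1}
    R_hat = np.einsum('jm,kn,imn->ijk', Ty, Tz, R, optimize=True)
    V_hat = np.empty_like(R_hat)
    for j in range(M - 1):
        for k in range(N - 1):                 # fully decoupled 1-D problems
            A = ly[j] * lz[k] * Ex + (ly[j] + lz[k]) * Gx
            V_hat[:, j, k] = np.linalg.solve(A, R_hat[:, j, k])
    V = np.einsum('jm,kn,imn->ijk', Py, Pz, V_hat, optimize=True).real
    return np.einsum('il,jm,kn,lmn->ijk', Sx, Sy, Sz, V, optimize=True)

# Hypothetical test: u = sin(pi x) sin(pi y) sin(pi z), so f = -3 pi^2 u.
N = 20
s = cheb.chebinterpolate(lambda x: np.sin(np.pi * x), N)
U_ref = np.einsum('i,j,k->ijk', s, s, s)
U = solve_poisson_3d(-3.0 * np.pi**2 * U_ref)
err = np.max(np.abs(U - U_ref))
print("max coefficient error:", err)
```

Each matrix `A` in the inner loop is a combination of the bi-diagonal and quad-diagonal matrices, so with a banded solver the inner solve is linear in the number of modes.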

4. Numerical Examples

In this section, our quasi-inverse matrix diagonalization method is applied to several 2-D and 3-D PDEs, and the numerical results are presented. The numbers of Chebyshev modes used to discretize solutions in each spatial direction do not need to be identical. Nonetheless, we use the same number of Chebyshev modes N in each spatial direction in all test problems for simplicity. Numerical codes are implemented in MATLAB and run on a 16 GB-RAM Macbook Pro with a 2.3 GHz quad-core Intel Core i7 processor. For matrix diagonalization, MATLAB’s built-in function “eig” is used to compute the matrix’s eigenvalues and eigenvectors. For inverse matrix calculation, we use MATLAB’s backslash operator.

4.1. Multi-Dimensional Poisson Equation

The n-dimensional Poisson equations described in Section 3 are solved using the quasi-inverse matrix diagonalization method for and 3

where is the boundary of the rectangular domain. As a test example, we define as

Note that we use coordinates and in the 2-D problem and , , and in the 3-D problem. Then, the exact solution of Equation (59) becomes

This test problem is solved using the quasi-inverse technique, matrix diagonalization method, and quasi-inverse matrix diagonalization method. The accuracy of numerical solutions obtained with these three methods is presented in Table 1 as a function of N where the accuracy is computed by the relative -norm error between the exact and numerical solutions, defined as

where indicates the -norm, and u and are the numerical and exact solutions, respectively. In both 2-D and 3-D problems, the three methods show a similar order of accuracy, which is identical until . At , all numerical solutions reach machine accuracy (∼10⁻¹⁶) in double precision.
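The relative error measure can be written as a small helper. In the sketch below the norm order is left as a parameter because it is an assumption of this illustration; the default of 2 is not taken from the text. The sample fields are hypothetical and only exercise the function.

```python
import numpy as np

def relative_error(u_num, u_exact, order=2):
    """Relative norm error ||u_num - u_exact|| / ||u_exact|| over all nodes."""
    u_num = np.asarray(u_num, dtype=float).ravel()
    u_exact = np.asarray(u_exact, dtype=float).ravel()
    return np.linalg.norm(u_num - u_exact, order) / np.linalg.norm(u_exact, order)

# Hypothetical data: an "exact" field and a copy with a tiny perturbation.
x = np.linspace(-1.0, 1.0, 33)
X, Y = np.meshgrid(x, x, indexing="ij")
u_exact = np.sin(np.pi * X) * np.sin(np.pi * Y)
u_num = u_exact + 1e-12 * np.cos(X)
err = relative_error(u_num, u_exact)
print("relative error:", err)
```

Passing `order=np.inf` gives the maximum-norm variant of the same measure.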

Table 1.

Errors of numerical solutions of 2-D and 3-D Poisson equations as a function of unknowns in 1-D. The numerical solutions are computed using QI, MD, and QIMD methods where QI, MD, and QIMD stand for Quasi-Inverse, Matrix Diagonalization, and Quasi-Inverse Matrix Diagonalization, respectively. All methods show a similar order of accuracy and reach spectral accuracy at .

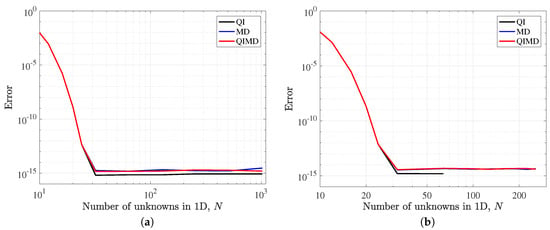

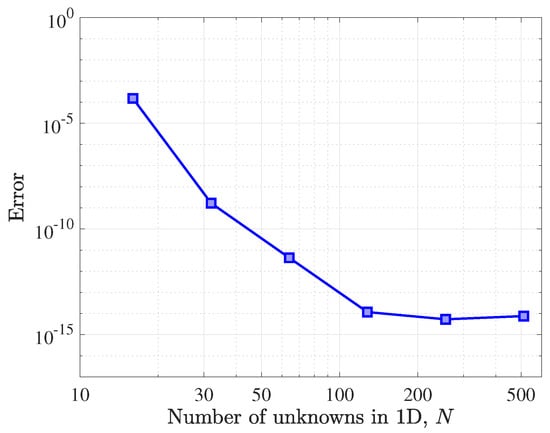

At high N, errors of numerical solutions obtained from the spectral method using Chebyshev polynomials can increase because the system of equations tends to be ill-conditioned as N increases. For a spectral method to be robust, the error of numerical solutions at high N should remain near the machine accuracy and should not rise as N increases. To further check the error and robustness of the proposed method in this respect, we solve the Poisson problem with high N up to in 2-D and in 3-D and measure the errors of numerical solutions of the proposed method as a function of N. We present the results of this experiment in Figure 2. As shown in the figure, even at high N, the errors of numerical solutions of the proposed method retain the order of , showing high accuracy and robustness of the proposed method.

Figure 2.

Errors of numerical solutions of 2-D (plotted in (a)) and 3-D (plotted in (b)) Poisson equations at high N. In both 2-D and 3-D problems, the errors of the proposed method (QIMD) do not rise as N increases. The proposed method shows the same order of accuracy as the quasi-inverse method and the matrix diagonalization method.

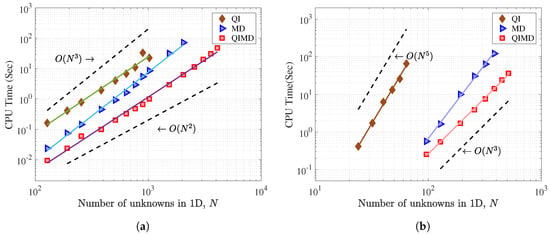

Figure 3 shows the CPU time of the quasi-inverse, matrix diagonalization, and quasi-inverse matrix diagonalization methods in solving 2-D and 3-D Poisson equations as a function of N on a log-log scale. On this scale, the slope of each method’s data in the figure indicates the operation complexity of the corresponding method. To calculate the slopes, the data are fitted using the best linear regression by log t = m log N + b, where t is CPU time, and m and b are the coefficients of the best linear regression. The results of the regression are presented in Table 2, where R² is the r-squared value of the best linear regression.
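The slope estimation can be reproduced with an ordinary least-squares fit in log-log space. The sketch below is illustrative only: the timing values are synthetic (generated from an exact quadratic law), not measurements from this paper, and the use of base-10 logarithms is an assumption that affects the intercept but not the slope.

```python
import numpy as np

def fit_complexity(N, t):
    """Fit log10(t) = m * log10(N) + b and return (m, b, r_squared)."""
    x = np.log10(np.asarray(N, dtype=float))
    y = np.log10(np.asarray(t, dtype=float))
    m, b = np.polyfit(x, y, 1)                 # least-squares straight line
    residual = y - (m * x + b)
    r_squared = 1.0 - residual @ residual / np.sum((y - y.mean()) ** 2)
    return m, b, r_squared

# Synthetic timings following t = 3e-6 * N^2 (hypothetical, for illustration).
N = np.array([32, 64, 128, 256, 512])
t = 3e-6 * N.astype(float) ** 2
m, b, r2 = fit_complexity(N, t)
print(m, b, r2)
```

A fitted slope m close to 2 would correspond to quadratic growth of CPU time in N, and an r-squared value close to 1 indicates that a single power law describes the data well.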

Figure 3.

Comparison of CPU times among the Quasi-Inverse (QI), Matrix Diagonalization (MD), and Quasi-Inverse Matrix Diagonalization (QIMD) methods as a function of the one-dimensional number of unknowns in solving (a) a 2-D Poisson equation and (b) a 3-D Poisson equation. The results of the best linear regression associated with the lines in the figure are represented in Table 2. Here, and operation lines in (a), and and operation lines in (b) are plotted for comparison.

Table 2.

CPU time (t) and the number of unknowns in 1-D (N) are related using the best linear regression on the log-log scale by log t = m log N + b. Values of m, b, and R² of the Quasi-Inverse (QI), Matrix Diagonalization (MD), and Quasi-Inverse Matrix Diagonalization (QIMD) methods in solving 2-D and 3-D Poisson equations are presented, where R² is the r-squared value of the best linear regression.

In solving the 2-D Poisson equation, we observe that the operation complexity of the matrix diagonalization method is the highest among the three methods, while the other two methods show similar operation complexity. However, the actual CPU time of the quasi-inverse matrix diagonalization method is more than 10 times shorter than that of the quasi-inverse method. These observations indicate that the reduction of operation complexity comes from the quasi-inverse technique’s ability to make the systems of equations sparse, rather than from the matrix diagonalization technique. However, the matrix diagonalization technique still helps reduce CPU time by decreasing the actual number of calculations, even though the two methods have the same order of operation complexity.

In solving the 3-D Poisson equation, as Julien and Watson claimed in [13], the operation complexity of the quasi-inverse technique is approximately . In the matrix diagonalization method, the operation complexity approximately increases from to as dimensionality of the Poisson equation rises from 2-D to 3-D. This is because the bottleneck of the matrix diagonalization method in solving the Poisson equation is computing from the dense matrix, which requires about operations per calculation, and repetition of this calculation increases from N to times. When using the quasi-inverse matrix diagonalization method to solve the 3-D problem, the most time-consuming step is also computing , but the left-hand side matrix in Equation (58) is sparse with a bandwidth of four, and therefore can be solved in operations. Because this calculation is repeated times in the 3-D problem, the total operation complexity of the quasi-inverse matrix diagonalization method becomes as shown in Table 2.

4.2. Two-Dimensional Poisson Equation with No Analytic Solution

The next numerical example is a 2-D Poisson equation with a complicated forcing term. That is, we solve the same 2-D problem described in Section 3 but with the forcing term

Note that analytic solutions cannot be found for this Poisson equation. The problem is solved using the quasi-inverse matrix diagonalization method by changing the number of unknowns so , and 1024.

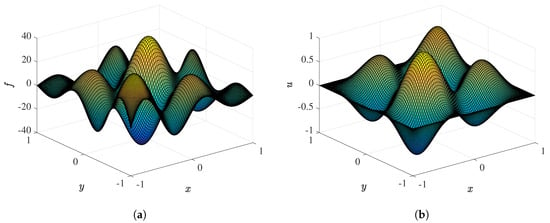

Figure 4 shows the values of the forcing term in Figure 4a and the numerical solution of the problem at in Figure 4b. To check the accuracy of the quasi-inverse matrix diagonalization method in solving this problem, we compute the numerical errors. Because no exact solution of the problem is available, we treat the numerical solution at as the most accurate solution and compare the other numerical solutions with it. Therefore, the error here indicates the relative difference between the numerical solutions obtained at and the numerical solution obtained at in the sense of the norm. We present the errors as a function of N on a log-log scale in Figure 5. As N increases, the error decreases exponentially, which shows the spectral accuracy of our method, as expected.
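The self-convergence measurement described above, in which the finest-resolution solution stands in for the unavailable exact solution, can be sketched on a 1-D surrogate. Everything specific in this sketch is an assumption for illustration: the test function `f`, the use of Chebyshev interpolation in place of the actual PDE solver, the reference resolution `n_ref`, and the choice of the maximum norm for the relative difference.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def f(x):
    """Smooth surrogate function (assumption; not the paper's forcing term)."""
    return np.exp(np.sin(3.0 * x))

def relative_self_error(coarse_coeffs, ref_coeffs, x_eval):
    """Relative max-norm difference between a coarse and a reference expansion."""
    u = C.chebval(x_eval, coarse_coeffs)
    u_ref = C.chebval(x_eval, ref_coeffs)
    return np.max(np.abs(u - u_ref)) / np.max(np.abs(u_ref))

# Common evaluation grid so expansions of different sizes can be compared.
x_eval = np.linspace(-1.0, 1.0, 2001)

n_ref = 256                               # finest resolution, used as reference
ref_coeffs = C.chebinterpolate(f, n_ref)

errors = {}
for n in (8, 16, 32, 64):
    coeffs = C.chebinterpolate(f, n)      # degree-n Chebyshev interpolant
    errors[n] = relative_self_error(coeffs, ref_coeffs, x_eval)

for n, err in errors.items():
    print(f"N = {n:3d}, relative error = {err:.3e}")
```

Evaluating every expansion on the same grid avoids having to interpolate between the node sets of different N, which is one simple way to make solutions at different resolutions comparable.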

Figure 4.

Values of the forcing term and the numerical solution of a 2-D Poisson equation with no exact solution are presented in (a) and (b), respectively. The numerical solution presented in (b) is at .

Figure 5.

Error of numerical solutions of a 2-D Poisson equation with no exact solution. The numerical solutions here are obtained from the quasi-inverse matrix diagonalization method. The values of the errors are computed by comparing the numerical solution at each N with the one at .

4.3. Coupled Two-Dimensional Helmholtz Equation

We apply the quasi-inverse matrix diagonalization method to 2-D coupled Helmholtz equations and compare the numerical results with those obtained from the quasi-inverse technique. We consider the following coupled equations with Dirichlet boundary conditions

where and are constant numbers. As a test problem, the functions and are assumed as

Then, the exact solutions of Equation (64) are

The numerical solutions and are defined by using Chebyshev polynomials and Galerkin basis functions as

and and are defined using Chebyshev polynomials as

For and 2, define two arrays as and in which the j-th entries are respectively equal to and where for and . This allows us to express Equation (64) in a matrix form as

To make Equation (69) simple, rewrite and as and , respectively, then

Erasing the top two rows of the matrices in Equation (70) as we did in solving the multi-dimensional Poisson equations gives

where the tilde symbol is defined as before. We want to combine the two equations in Equation (71). To do this, define and as

where if , otherwise 0. Then, two equations in Equation (71) can be combined as

By rearranging the terms, Equation (72) can be rewritten as

where , and as defined in Section 3.2. Because Equations (73) and (51) are the same types of equations, Equation (73) can be solved for by following the same steps as solving 3-D PDEs described in Section 3.2.

We tested coupled 2-D Helmholtz equations at and . For N = 32, 64, 128, 256, and 512, the $L^\infty$ errors between the numerical and exact solutions and the CPU times are computed using the quasi-inverse and quasi-inverse matrix diagonalization methods, and the results are presented in Table 3. In both cases, the same order of high accuracy is obtained for all N. In terms of CPU time, similar to the multi-dimensional Poisson equation problems, the latter method is much faster than the former, especially when N is large, showing the efficiency of our quasi-inverse matrix diagonalization method.

Table 3.

Results of solving the coupled Helmholtz equations using the Quasi-Inverse (QI) and Quasi-Inverse Matrix Diagonalization (QIMD) methods with respect to accuracy and CPU times. Although both methods show a similar order of accuracy as a function of N, the CPU times of the QIMD method are much shorter than those of the QI method, showing the high efficiency of the QIMD method.

4.4. Stokes Problem

As a last test problem, a 3-D Stokes problem is solved using our quasi-inverse matrix diagonalization method. The Stokes problem we solve is

with the boundary conditions where is the boundary of the rectangular domain. In the problem, we set the body force terms and in Equation (74) as

where and . Then, the exact solutions of this Stokes problem are given by

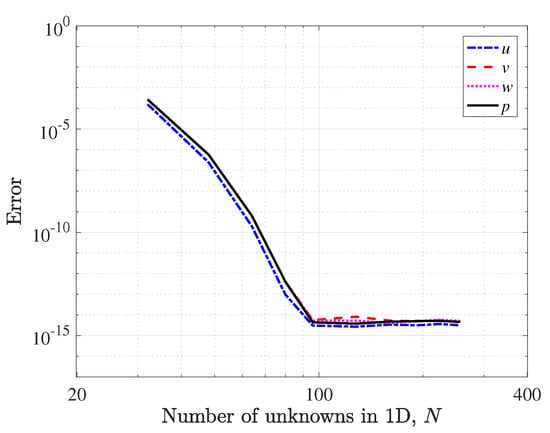

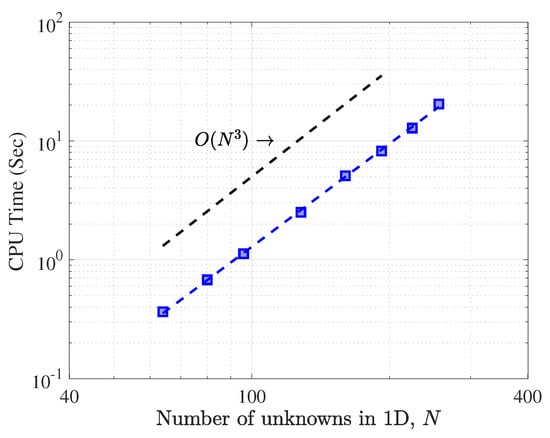

The accuracy of numerical solutions of , and p obtained using the quasi-inverse matrix diagonalization method is presented in Figure 6. The errors decay more slowly than those in the previous examples, but all of them show spectral convergence that reaches machine accuracy around . Figure 7 shows the CPU time of the Stokes problem on a log-log scale as a function of N. The best linear regression of the data gives a slope of 2.884, indicating an operation complexity of approximately $O(N^3)$ for the 3-D Stokes problem, as expected.

Figure 6.

Numerical errors of , and p as a function of N in solving the Stokes problem.

Figure 7.

Blue markers show CPU times in solving the 3-D Stokes problem. The blue dashed line is plotted from the best linear regression of the blue markers’ data, where the slope is 2.884. The black dashed line shows for comparison.

5. Conclusions

A new efficient way of solving multi-dimensional linear PDEs spectrally using Chebyshev polynomials, called the quasi-inverse matrix diagonalization method, is presented in this paper. In this method, we discretize numerical solutions in space using the Chebyshev-Galerkin basis functions, which automatically satisfy the boundary conditions of the PDEs. Then, the quasi-inverse technique is used to construct sparse differential operators for the Chebyshev-Galerkin basis functions, and the matrix diagonalization technique is applied to further facilitate computations by decoupling the modes in spatial directions. The numerical tests show that the proposed method is highly efficient compared to other current methods on the test problems. The proposed method is especially fast in computing 3-D linear PDEs. We show that the operation complexity of the proposed method in solving 3-D linear PDEs is approximately $O(N^3)$ when tested with the numerical examples in this paper (tested up to ), suggesting the possibility of handling 3-D linear PDE problems with high numbers of unknowns. Although the test problems in the paper are restricted to second order linear PDEs, the quasi-inverse matrix diagonalization method can be used to solve separable high order linear PDEs such as fourth order linear PDEs which do not have cross terms (e.g., ) and biharmonic equations with , types of boundary conditions where the derivative with respect to n is the normal derivative on the boundary of the domain.

Funding

This work was supported by the 2018 Korea Aerospace University faculty research grant.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Buzbee, B.L.; Golub, G.H.; Nielson, C.W. On direct methods for solving Poisson’s equations. SIAM J. Numer. Anal. 1970, 7, 627–656.

2. Bueno-Orovio, A.; Pérez-García, V.M.; Fenton, F.H. Spectral methods for partial differential equations in irregular domains: The spectral smoothed boundary method. SIAM J. Sci. Comput. 2006, 28, 886–900.

3. Mai-Duy, N.; Tanner, R.I. A spectral collocation method based on integrated Chebyshev polynomials for two-dimensional biharmonic boundary-value problems. J. Comput. Appl. Math. 2007, 201, 30–47.

4. Boyd, J.P. Chebyshev and Fourier Spectral Methods; Courier Corporation: North Chelmsford, MA, USA, 2001.

5. Peyret, R. Spectral Methods for Incompressible Viscous Flow; Springer: Berlin/Heidelberg, Germany, 2013.

6. Saadatmandi, A.; Dehghan, M. Numerical solution of hyperbolic telegraph equation using the Chebyshev tau method. Numer. Methods Part. Differ. Equ. Int. J. 2010, 26, 239–252.

7. Ren, R.; Li, H.; Jiang, W.; Song, M. An efficient Chebyshev-tau method for solving the space fractional diffusion equations. Appl. Math. Comput. 2013, 224, 259–267.

8. Canuto, C.; Hussaini, M.Y.; Quarteroni, A.; Zang, T.A. Spectral Methods: Evolution to Complex Geometries and Applications to Fluid Dynamics; Springer: Berlin/Heidelberg, Germany, 2007.

9. Johnson, D. Chebyshev Polynomials in the Spectral Tau Method and Applications to Eigenvalue Problems; NASA: Washington, DC, USA, 1996.

10. Marti, P.; Calkins, M.; Julien, K. A computationally efficient spectral method for modeling core dynamics. Geochem. Geophys. Geosyst. 2016, 17, 3031–3053.

11. Liu, F.; Ye, X.; Wang, X. Efficient Chebyshev spectral method for solving linear elliptic PDEs using quasi-inverse technique. Numer. Math. Theory Methods Appl. 2011, 4, 197–215.

12. Shen, J. Efficient spectral-Galerkin method II. Direct solvers of second-and fourth-order equations using Chebyshev polynomials. SIAM J. Sci. Comput. 1995, 16, 74–87.

13. Julien, K.; Watson, M. Efficient multi-dimensional solution of PDEs using Chebyshev spectral methods. J. Comput. Phys. 2009, 228, 1480–1503.

14. Dang-Vu, H.; Delcarte, C. An accurate solution of the Poisson equation by the Chebyshev collocation method. J. Comput. Phys. 1993, 104, 211–220.

15. Haidvogel, D.B.; Zang, T. The accurate solution of Poisson’s equation by expansion in Chebyshev polynomials. J. Comput. Phys. 1979, 30, 167–180.

16. Abdi, H. The eigen-decomposition: Eigenvalues and eigenvectors. Encycl. Meas. Stat. 2007, 2007, 304–308.

17. Nam, S.; Patil, A.K.; Patil, S.; Chintalapalli, H.R.; Park, K.; Chai, Y. Hybrid interface of a two-dimensional cubic Hermite curve oversketch and a three-dimensional spatial oversketch for the conceptual body design of a car. Proc. Inst. Mech. Eng. D-J. Automob. Eng. 2013, 227, 1687–1697.

18. Canuto, C.G.; Hussaini, M.Y.; Quarteroni, A.; Zang, T.A. Spectral Methods; Springer: Berlin/Heidelberg, Germany, 2006.

19. Trefethen, L.N. Spectral Methods in MATLAB; SIAM: Philadelphia, PA, USA, 2000.

20. Meyer, C.D. Matrix Analysis and Applied Linear Algebra; SIAM: Philadelphia, PA, USA, 2000.

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).