1. Introduction

Recently, the security of process control systems has become crucially important since control systems are vulnerable to cyber-attacks, which are a series of computer actions to compromise the security of control systems (e.g., integrity, stability and safety) [1,2]. Since cyber-physical systems (CPS) or supervisory control and data acquisition (SCADA) systems are usually large-scale, geographically dispersed and life-critical systems where embedded sensors and actuators are connected into a network to sense and control the physical devices [3], the failure of cybersecurity can lead to unsafe process operation, and potentially to catastrophic consequences in the chemical process industries, causing environmental damage, capital loss and human injuries. Among cyber-attacks, targeted attacks are severe threats for control systems because of their specific designs with the aim of modifying the control actions applied to a chemical process (for example, the Stuxnet worm aims to modify the data sent to a Programmable Logic Controller [4]). Additionally, targeted attacks are usually stealthy and difficult to detect using classical detection methods since they are designed based on some known information of control systems (e.g., the process state measurement). Therefore, designing an advanced detection system (e.g., machine learning-based detection methods [5,6]) and a suitable optimal control scheme for nonlinear processes in the presence of targeted cyber-attacks is an important open issue.

Due to the rapid development of computer networks of CPS in the past two to three decades, the components (e.g., sensors, actuators, and controllers) in a large-scale process control system are now connected through wired/wireless networks, which makes these systems more vulnerable to cyber-attacks that can damage the operation of physical layers besides cyber layers. Additionally, since the development of most of the existing detection methods still depends partly on human analysis, the increased use of data and the designs of stealthy cyber-attacks pose challenges to the development of timely detection methods with high detection accuracy. In this direction, the design of cyber-attacks, the anomaly detection methods focusing on physical layers, and the corresponding resilient control methods have received a lot of attention. A typical detection method [4] uses a model of the process and compares the model output predictions with the actual measured outputs. In [7], a dynamic watermarking method was proposed to detect cyber-attacks via a technique of injecting private excitation into the system. Moreover, four representative detection methods were summarized in [3] as Bayesian detection with binary hypothesis, weighted least squares, $\chi^2$-detector based on Kalman filters and quasi-fault detection and isolation methods.

Besides the detection of cyber-attacks, the design of resilient control schemes also plays an important role in operating a chemical process reliably under cyber-attacks. To guarantee the process performance (e.g., robustness, stability, safety, etc.) and mitigate the impact of cyber-attacks, resilient state estimation and resilient control strategies have attracted considerable research interest. In [2,8], resilient estimators were designed to reconstruct the system states accurately. An event-triggered control system was proposed in [9] to tolerate Denial-of-service (DoS) attacks without jeopardizing the stability of the closed-loop system.

On the other hand, as a widely-used advanced control methodology in industrial chemical plants, model predictive control (MPC) achieves optimal performance of multiple-input multiple-output processes while accounting for state and input constraints [10]. Based on Lyapunov methods (e.g., a Lyapunov-based control law), the Lyapunov-based model predictive control (LMPC) method was developed to ensure stability and feasibility in an explicitly-defined subset of the region of attraction of the closed-loop system [11,12]. Additionally, process operational safety can also be guaranteed via control Lyapunov-barrier function-based constraints in the framework of LMPC [13]. At this stage, however, the potential safety/stability problem in MPC caused by cyber-attacks has not been studied with the exception of a recent work that provides a quantitative framework for the evaluation of resilience of control systems with respect to various types of cyber-attacks [14].

Motivated by this, we develop an integrated data-based cyber-attack detection and model predictive control method for nonlinear systems subject to cyber-attacks. Specifically, a cyber-attack (e.g., a min-max cyber-attack) that aims to destabilize the closed-loop system via sensor tampering is considered and applied to the closed-loop process. Under such a cyber-attack, an MPC that does not account for the cyber-attack cannot ensure closed-loop stability. To detect potential cyber-attacks, we take advantage of machine learning methods, which are widely used in clustering, regression, and other applications such as model order reduction [15,16,17], to build a neural network (NN)-based detection system. First, the NN training dataset is obtained for three conditions: (1) the system without disturbances and cyber-attacks (i.e., nominal system); (2) the system with only process disturbances considered; (3) the system with only cyber-attacks considered. Then, an NN detection method is trained off-line to derive a model that can be used on-line to predict cyber-attacks. In addition, considering the classification accuracy of the NN, a sliding detection window is employed to reduce false cyber-attack alarms. Finally, a Lyapunov-based model predictive control (LMPC) method that utilizes the state measurement from secure, redundant sensors is developed to reduce the impact of cyber-attacks and re-stabilize the closed-loop system in finite time.

The rest of the paper is organized as follows: in Section 2, the class of nonlinear systems considered and the stabilizability assumptions are given. In Section 3, we introduce the min-max cyber-attack, develop a NN-based detection system and a Lyapunov-based model predictive controller (LMPC) that guarantees recursive feasibility and closed-loop stability under sample-and-hold implementation within an explicitly characterized set of initial conditions. In Section 4, a nonlinear chemical process example is used to demonstrate the applicability of the proposed cyber-attack detection and control method.

4. Lyapunov-Based MPC (LMPC)

To cope with the threats of the above sensor cyber-attacks, a feedback control method that accounts for the corruption of some sensor measurements should be designed by defenders to mitigate the impact of cyber-attacks and still stabilize the system of Equation (1) at its steady-state. Based on the assumption of the existence of a Lyapunov function and a controller that satisfy Equation (2), the LMPC that utilizes the accurate measurement from redundant, secure sensors is proposed as the following optimization problem:

where $\tilde{x}$ is the predicted state trajectory, $S(\Delta)$ is the set of piecewise constant functions with period $\Delta$, and $N$ is the number of sampling periods in the prediction horizon. $\dot{V}(x,u)$ represents the time derivative of $V(x)$ along the trajectories of the system. We assume that the states of the closed-loop system are measured at each sampling time instance, and will be used as the initial condition in the optimization problem of LMPC in the next sampling step. Specifically, based on the measured state $x(t_k)$ at $t = t_k$, the above optimization problem is solved to obtain the optimal solution $u^*(t)$ over the prediction horizon $t \in [t_k, t_{k+N})$. The first control action of $u^*(t)$, i.e., $u^*(t_k)$, is sent to the control actuators to be applied over the next sampling period. Then, at the next sampling time $t_{k+1}$, the optimization problem is solved again, and the horizon will be rolled one sampling time.
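The receding-horizon procedure described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the paper: a toy scalar system dx/dt = x + u, the Lyapunov function V(x) = x^2, the reference law u = -2x, a horizon of one sampling period, and a grid search in place of a nonlinear solver. The names `lmpc_step` and `simulate` are hypothetical.

```python
def f(x, u):
    """Toy open-loop-unstable scalar dynamics dx/dt = x + u (assumed)."""
    return x + u


def v_dot(x, u):
    """Time derivative of V(x) = x**2 along the toy dynamics."""
    return 2.0 * x * f(x, u)


def phi(x, u_max):
    """Assumed stabilizing reference law u = -2x, saturated at the input bounds."""
    return max(-u_max, min(u_max, -2.0 * x))


def lmpc_step(x_meas, u_max=1.0, rho_s=1e-4, delta=0.01, n_grid=201):
    """Choose the constant input for one sampling period by grid search."""
    bound = v_dot(x_meas, phi(x_meas, u_max))
    # Fall back to the reference law if no grid point is feasible.
    best_u, best_cost = phi(x_meas, u_max), float("inf")
    for i in range(n_grid):
        u = -u_max + 2.0 * u_max * i / (n_grid - 1)
        x_next = x_meas + delta * f(x_meas, u)  # explicit Euler prediction
        if x_meas ** 2 > rho_s:
            # Contraction mode: V must decrease at least as fast as under phi.
            feasible = v_dot(x_meas, u) <= bound + 1e-12
        else:
            # Near the origin: only require the state to stay in the small level set.
            feasible = x_next ** 2 <= rho_s
        cost = delta * (x_next ** 2 + 0.1 * u ** 2)  # quadratic stage cost
        if feasible and cost < best_cost:
            best_u, best_cost = u, cost
    return best_u


def simulate(x0, n_steps, delta=0.01):
    """Closed loop: measure, optimize, apply the first input, roll the horizon."""
    xs = [x0]
    for _ in range(n_steps):
        u = lmpc_step(xs[-1], delta=delta)
        xs.append(xs[-1] + delta * f(xs[-1], u))
    return xs
```

Calling `simulate(0.4, 1000)` returns the closed-loop state sequence of the toy system. A practical implementation would use a multi-step horizon and a nonlinear programming solver instead of the grid search.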

In the optimization problem of Equation (14), the objective function of Equation (14a) that is minimized is the integral of the stage cost over the prediction horizon, where the stage cost is usually a quadratic function of the state and the input, weighted by positive definite matrices R and Q. The constraint of Equation (14b) is the nominal system of Equation (1) (i.e., the system without disturbances), which is used to predict the evolution of the closed-loop state. Equation (14c) defines the initial condition of the nominal process system of Equation (14b). Equation (14d) defines the input constraints over the prediction horizon. The constraint of Equation (14e) requires that the Lyapunov function of the system decreases at least at the rate achieved under the stabilizing controller at $t_k$ when the measured state lies in the stability region but outside a small neighborhood around the origin. However, if the state enters this small neighborhood, in which the time derivative of the Lyapunov function is not required to be negative due to the sample-and-hold implementation of the LMPC, the constraint of Equation (14f) is activated to maintain the state inside the neighborhood afterwards.
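For reference, an LMPC formulation that is consistent with the term-by-term description above can be written as follows. The notation is assumed here rather than taken from the source: $\tilde{x}$ for the predicted state, $S(\Delta)$ for piecewise constant inputs with period $\Delta$, $F$ for the right-hand side of Equation (1), $U$ for the input constraint set, $\Phi$ for the stabilizing controller, and $\Omega_{\rho}$ and $\Omega_{\rho_s}$ for the level sets of $V$ that define the stability region and the small neighborhood around the origin.

```latex
\begin{align}
\mathcal{J} = \min_{u \in S(\Delta)} \; & \int_{t_k}^{t_{k+N}} L(\tilde{x}(t), u(t)) \, dt \tag{14a} \\
\text{s.t.} \quad & \dot{\tilde{x}}(t) = F(\tilde{x}(t), u(t), 0) \tag{14b} \\
& \tilde{x}(t_k) = x(t_k) \tag{14c} \\
& u(t) \in U, \quad \forall\, t \in [t_k, t_{k+N}) \tag{14d} \\
& \dot{V}(x(t_k), u) \le \dot{V}(x(t_k), \Phi(x(t_k))), \quad \text{if } x(t_k) \in \Omega_{\rho} \setminus \Omega_{\rho_s} \tag{14e} \\
& V(\tilde{x}(t)) \le \rho_s, \quad \forall\, t \in [t_k, t_{k+N}), \quad \text{if } x(t_k) \in \Omega_{\rho_s} \tag{14f}
\end{align}
```

This is the standard structure of Lyapunov-based MPC; the exact symbols and any additional terms in the original Equation (14) may differ.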

When a cyber-attack is detected but not yet confirmed, the LMPC of Equation (14) uses the state measurement from redundant, secure sensors instead of the original sensors as the initial condition of the optimization problem until the next instance of detection. However, if the cyber-attack is finally confirmed, the misbehaving sensor is isolated, and the LMPC of Equation (14) uses the state measurement from secure sensors instead of the compromised state measurement as the initial condition for the remaining time of process operation. The structure of the entire cyber-attack-detection-control system is shown in Figure 3.

If the cyber-attack is detected and confirmed before the closed-loop state is driven out of the stability region, it follows that the closed-loop state is always bounded in the stability region

thereafter and ultimately converges to a small neighborhood

around the origin for any

under the LMPC of Equation (14). The detailed proof can be found in [

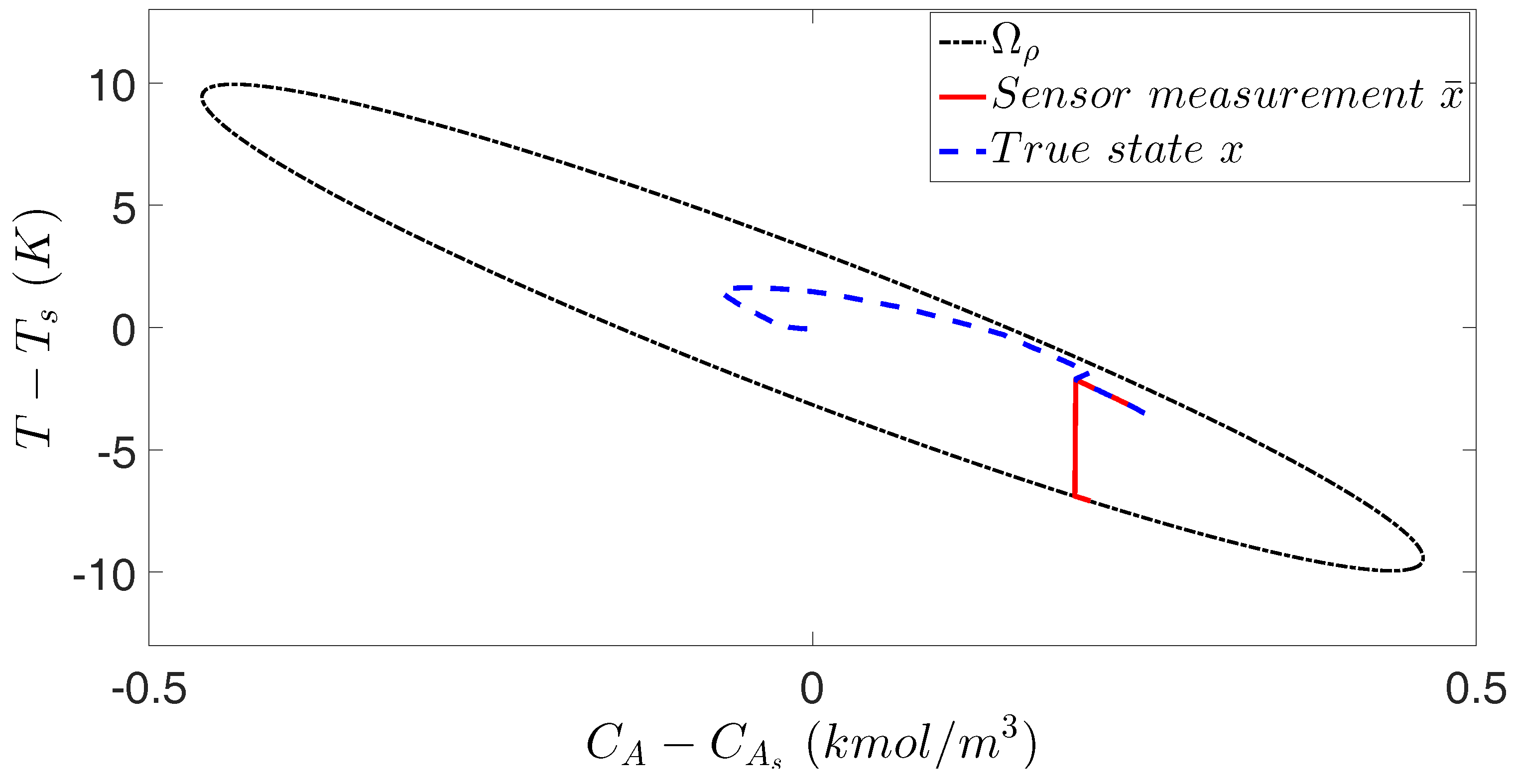

11]. An example trajectory is shown in

Figure 4.

Remark 6. It is noted that the speed of detection (which depends heavily on the size of the input data to the NN, the number of hidden layers and the type of activation functions) plays an important role in stabilizing the closed-loop system of Equation (1) since the operation of the closed-loop system under the LMPC of Equation (14) becomes unreliable after cyber-attacks occur. In other words, if we can detect cyber-attacks in a short time, the LMPC can switch to redundant, secure sensors and still be able to stabilize the system at the origin before it leaves the stability region. Additionally, the probability of closed-loop stability can be derived based on the classification accuracy of the NN-based detection method and its activation frequency. Specifically, given the classification accuracy $p$, if the NN-based detection system is activated every sampling step, the probability of the cyber-attack being detected at each sampling step is equal to $p$, which implies that the probability of closed-loop stability is no less than $p$. Moreover, for safety reasons, the region of initial conditions can be chosen as a conservative sub-region inside the stability region to avoid the rapid divergence of states under cyber-attacks and improve closed-loop stability. For example, let the sub-region be chosen such that any state starting in it still stays in the stability region one sampling period later despite a miss of detection of cyber-attacks. Therefore, the probability of closed-loop stability under the LMPC of Equation (14) reaches $1-(1-p)^2$ (i.e., the probability of cyber-attacks being detected within two sampling periods).

Remark 7. It is demonstrated in [11] that in the presence of sufficiently small bounded disturbances, closed-loop stability is still guaranteed for the system of Equation (1) under the sample-and-hold implementation of the LMPC of Equation (14) with a sufficiently small sampling period Δ. In this case, it is undesirable to treat the disturbance as a cyber-attack and trigger a false alarm. Therefore, the detection system should account for the disturbance case and have the capability to distinguish cyber-attacks from disturbances (i.e., the system with disturbances should be classified as a distinct class or treated as the nominal system).

5. Application to a Chemical Process Example

In this section, we utilize a chemical process example to illustrate the application of the proposed detection and control methods for potential cyber-attacks. Consider a well-mixed, non-isothermal continuous stirred tank reactor (CSTR) where an irreversible first-order exothermic reaction takes place. The reaction converts the reactant A to the product B via the chemical reaction $A \rightarrow B$. A heating jacket that supplies or removes heat from the reactor is used. The CSTR dynamic model derived from material and energy balances is given below:

where $C_A$ is the concentration of reactant A in the reactor, $T$ is the temperature of the reactor, $Q$ denotes the heat supply/removal rate, and $V$ is the volume of the reacting liquid in the reactor. The feed to the reactor contains the reactant A at a concentration $C_{A0}$, temperature $T_0$, and volumetric flow rate $F$. The liquid has a constant density of $\rho_L$ and a heat capacity of $C_p$. $k_0$, $E$ and $\Delta H$ are the reaction pre-exponential factor, activation energy and the enthalpy of the reaction, respectively. Process parameter values are listed in

Table 1. The control objective is to operate the CSTR at the equilibrium point

kmol/m

, 395.3 K) by manipulating the heat input rate

, and the inlet concentration of species

A,

. The input constraints for

and

are

kJ/min and

kmol/m

, respectively.

To place Equation (15) in the form of the class of nonlinear systems of Equation (1), deviation variables are used in this example, such that the equilibrium point of the system is at the origin of the state-space. Both the state vector and the manipulated input vector are expressed in deviation variable form.

The explicit Euler method with an integration time step of

min is applied to numerically simulate the dynamic model of Equation (15). The nonlinear optimization problem of the LMPC of Equation (14) is solved using the IPOPT software package [

26] with the sampling period

min.
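As an illustration of the explicit Euler simulation mentioned above, the following sketch integrates a generic first-order, non-isothermal CSTR model built from the material and energy balances described in the text. The model form is an assumption based on that description, and all parameter values and the step size are hypothetical placeholders, not the values of Table 1 or of the paper.

```python
import math

# Hypothetical parameter values for illustration only (not the paper's Table 1).
PARAMS = {
    "F": 5.0,        # volumetric flow rate
    "V": 1.0,        # reactor liquid volume
    "k0": 8.46e6,    # pre-exponential factor
    "E": 5.0e4,      # activation energy
    "R": 8.314,      # ideal gas constant
    "T0": 300.0,     # feed temperature
    "dH": -1.15e4,   # enthalpy of reaction (negative: exothermic)
    "rho_L": 1000.0, # liquid density
    "Cp": 0.231,     # heat capacity
}


def cstr_rhs(ca, temp, ca0, q, p=PARAMS):
    """Right-hand side of the assumed first-order CSTR balances."""
    rate = p["k0"] * math.exp(-p["E"] / (p["R"] * temp)) * ca
    dca = p["F"] / p["V"] * (ca0 - ca) - rate
    dtemp = (p["F"] / p["V"] * (p["T0"] - temp)
             - p["dH"] / (p["rho_L"] * p["Cp"]) * rate
             + q / (p["rho_L"] * p["Cp"] * p["V"]))
    return dca, dtemp


def euler_simulate(ca, temp, ca0, q, h, n_steps, p=PARAMS):
    """Explicit Euler integration with step size h and constant inputs (ca0, q)."""
    for _ in range(n_steps):
        dca, dtemp = cstr_rhs(ca, temp, ca0, q, p)
        ca, temp = ca + h * dca, temp + h * dtemp
    return ca, temp
```

In a sample-and-hold closed loop, `euler_simulate` would be called once per sampling period with the inputs held constant at the values computed by the controller.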

We construct a control Lyapunov function using the standard quadratic form $V(x) = x^T P x$, with the following positive definite matrix $P$:

Under the LMPC of Equation (14) without cyber-attacks, closed-loop stability is achieved for the nominal system of Equation (15) in the sense that the closed-loop state is always bounded in the stability region

with

and ultimately converges to

with

around the origin. However, if a min-max cyber-attack is introduced to tamper with the temperature sensor measurement of the system of Equation (15), closed-loop stability is no longer guaranteed. Specifically, the min-max cyber-attack is designed to be of the following form:

where

,

, and

are the corresponding state measurements under min-max cyber-attacks. In this example, the min-max cyber-attack of Equation (17) is designed such that the measurement of concentration remains unchanged, and the measurement of temperature is tampered to be the minimum value that keeps the state at the boundary of the stability region

.
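The construction described above (concentration measurement unchanged, temperature measurement set to the minimum value that places the reported state on the boundary of the stability region) can be sketched for a quadratic Lyapunov function. The matrix `P`, the level `rho`, and the function names below are hypothetical and chosen for illustration; they are not the values used in the paper.

```python
import math


def lyapunov(x1, x2, P):
    """Quadratic Lyapunov function V(x) = x^T P x for a symmetric 2x2 matrix P."""
    return P[0][0] * x1 * x1 + 2.0 * P[0][1] * x1 * x2 + P[1][1] * x2 * x2


def min_max_temperature(x1, P, rho):
    """Smallest x2 such that V(x1, x2) = rho, or None if no real solution exists.

    Solves P22*x2^2 + 2*P12*x1*x2 + (P11*x1^2 - rho) = 0 and returns the
    smaller root (P22 > 0 because P is positive definite).
    """
    a = P[1][1]
    b = 2.0 * P[0][1] * x1
    c = P[0][0] * x1 * x1 - rho
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None
    return (-b - math.sqrt(disc)) / (2.0 * a)


def attacked_measurement(x1, x2, P, rho):
    """Return the falsified (x1, x2) pair; x2 is left unchanged if no root exists."""
    x2_fake = min_max_temperature(x1, P, rho)
    return (x1, x2 if x2_fake is None else x2_fake)
```

Here `x1` and `x2` stand for the concentration and temperature in deviation form. The falsified pair returned by `attacked_measurement` satisfies V = rho whenever a real root exists.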

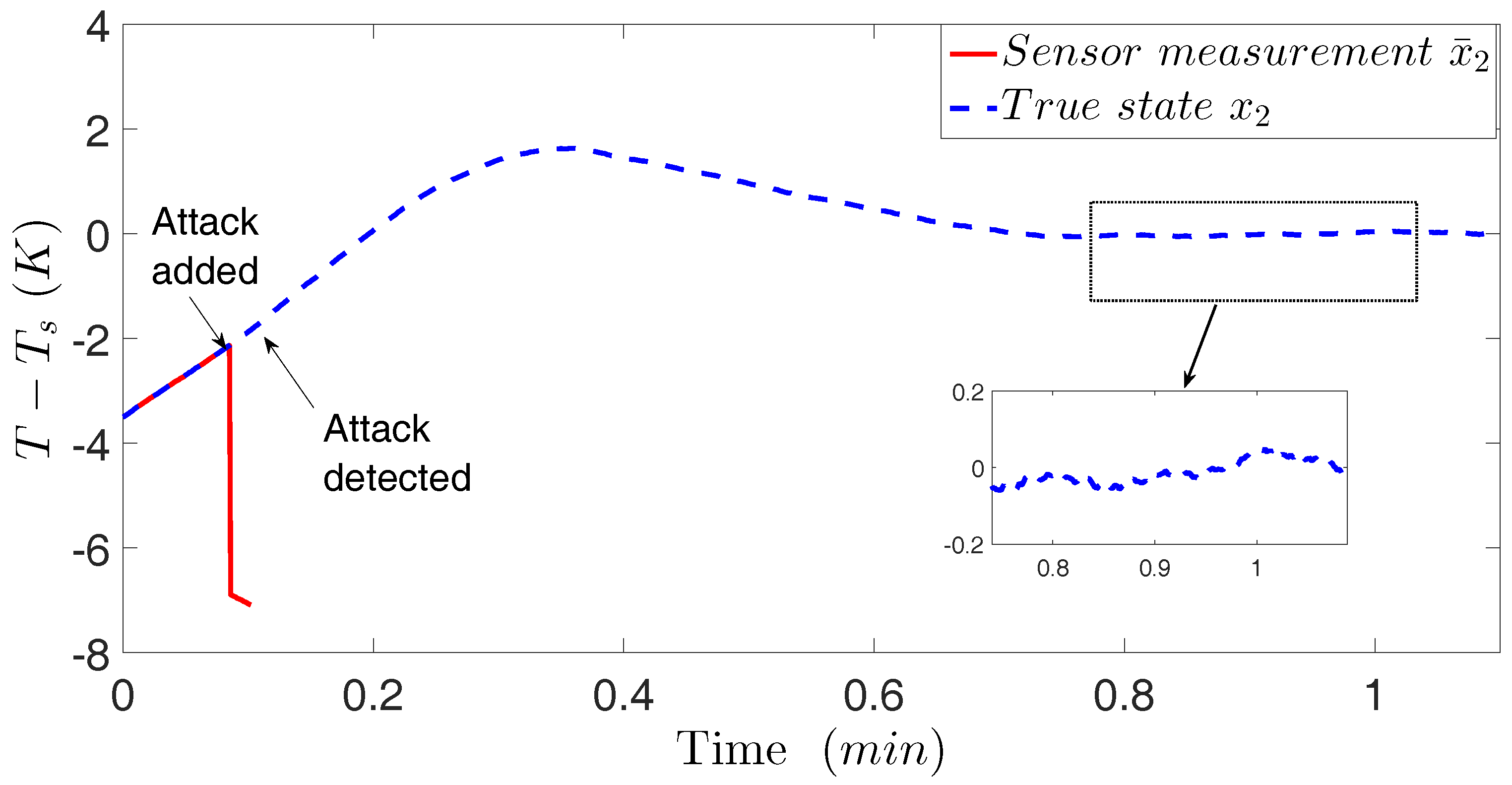

In

Figure 5 and

Figure 6, the temperature sensor measurement is intruded by a min-max cyber-attack at time

min. Without any cyber-attack detection system, it is shown in

Figure 5 that the LMPC of Equation (14) keeps operating the system of Equation (15) using false sensor measurements blindly and finally drives the closed-loop state out of the stability region

.

To handle the min-max cyber-attack, the model-based detection system of Equation (5) and the NN-based detection method are applied to the system of Equation (15). The simulation results are shown in Figures 7 to 13. Subsequently, the application of the NN-based detection method to the system under other cyber-attacks and in the presence of disturbances is demonstrated in Figures 14 to 16. Specifically, we first demonstrate the application of the model-based detection system of Equation (5) and of the LMPC of Equation (14), where

and

are chosen through closed-loop simulations. In

Figure 7, the min-max cyber-attack of Equation (17) is added at

min and is detected at

min before the closed-loop state comes out of

. The variation of the CUSUM statistic

is shown in

Figure 8, in which

remains at

b when there is no cyber-attack and exceeds

at

min. After the min-max cyber-attack is detected, the true states are obtained from redundant, secure sensors and the LMPC of Equation (14) drives the closed-loop state into

.

Next, the NN-based detection system and the LMPC of Equation (14) are implemented to mitigate the impact of cyber-attacks. The feed-forward NN model with two hidden layers is built in Python using the Keras library. Specifically, 3000 time-series balanced data samples of the closed-loop states of the nominal system, the system with disturbances, and the system under min-max cyber-attacks from

to

min are used to train the neural network to classify the data into three classes, where classes 0, 1, and 2 stand for the system under min-max cyber-attacks, the nominal system and the system with disturbances, respectively. Our calculations indicate that 3000 time-series samples are sufficient to build the NN for the CSTR example: a smaller dataset leads to lower classification accuracy, while a larger dataset does not significantly improve the classification accuracy but increases the computation time. The 3000 data samples are split into 2000 training samples, 500 validation samples and 500 test samples.

is utilized as the input vector to the NN model. The structure of the NN model is listed in

Table 2. Additionally, batch normalization is applied after each hidden layer to improve the performance of the NN model.
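The data partition described above (3000 balanced samples split into 2000 training, 500 validation and 500 test samples) can be sketched as follows. The placeholder feature vectors, the random seed and the function names are assumptions for illustration; the paper's actual features are the closed-loop state time series.

```python
import random


def make_placeholder_dataset(n_per_class=1000):
    """Balanced placeholder data: (feature_vector, label) with labels 0, 1, 2."""
    return [([0.0, 0.0], label) for label in (0, 1, 2) for _ in range(n_per_class)]


def split_dataset(samples, n_train=2000, n_val=500, n_test=500, seed=0):
    """Shuffle once, then cut into training, validation and test subsets."""
    if len(samples) != n_train + n_val + n_test:
        raise ValueError("split sizes must add up to the dataset size")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test
```

Shuffling before splitting keeps the three classes mixed in every subset, so that the validation accuracy used for early stopping is not dominated by a single class.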

To apply the NN-based detection method, we first investigate the relationship of the classification accuracy of the NN with respect to the size of the dataset. Specifically, assuming that the min-max cyber-attack occurs at a random sampling step before

min, the first NN model

is trained at

min using the data of states from

to

min. As shown in

Figure 9, early-stopping is activated at the 8th iteration (epoch) of training when validation accuracy ceases to increase. The averaged classification accuracy at

min is obtained by training the same model

for 10 times independently. The above process is repeated by increasing the size of the dataset by

min every time to derive the models for different time instances (i.e.,

,

, …). The minimum, the maximum and the averaged classification accuracy at each detection time instance are shown in

Figure 10.

Figure 10 shows that the averaged test accuracy increases as more state measurements are collected after the cyber-attack occurs, and reaches up to 95% when state measurements over a long period of time are available. This suggests that detection based on recent models is more reliable and deserves higher weights in the sliding window. The confusion matrix of the above NN for the three classes (the system under min-max cyber-attack, the nominal system, and the system with disturbances) is given in Table 3. Additionally, besides the NN method, other supervised learning-based classification methods including k-NN, SVM and random forests are also applied to the same dataset, yielding the averaged test accuracies, sensitivities and specificities within

min as listed in

Table 4.
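For readers who want to reproduce the kind of metrics reported in Tables 3 and 4, the sketch below computes overall accuracy and per-class sensitivity and specificity from a multi-class confusion matrix. The matrix `CM_EXAMPLE` is a made-up example, not data from the paper, and the row/column convention is an assumption stated in the docstring.

```python
def per_class_metrics(cm):
    """Return (accuracy, sensitivities, specificities) for a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    sensitivities, specificities = [], []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                         # class k predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp    # other classes predicted as k
        tn = total - tp - fn - fp
        sensitivities.append(tp / (tp + fn))
        specificities.append(tn / (tn + fp))
    return accuracy, sensitivities, specificities


# Made-up 3-class example (attack, nominal, disturbance), for illustration only.
CM_EXAMPLE = [[90, 5, 5], [4, 92, 4], [6, 3, 91]]
```

For the attack class, sensitivity measures how many attacked runs are flagged, while specificity measures how many non-attacked runs are correctly left unflagged, which relates directly to the false alarm rate.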

When the detection of cyber-attacks is incorporated into the closed-loop system of Equation (15) under the LMPC of Equation (14), the detection system is called every

sampling periods. The sliding window length is

sampling periods and the threshold for the detection indicator is

. The detection system is activated from

min such that a desired test accuracy is achieved with enough data. The closed-loop state-space profiles under the NN-based detection system with the stability region

check and the detection system without the

check are shown in

Figure 11 and

Figure 12.
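One plausible way to realize a sliding detection window with a threshold on a detection indicator is sketched below. The weighting scheme (newer detections weighted more heavily), the window length, the threshold value and the class name are all assumptions for illustration; the paper's exact indicator definition is not reproduced here.

```python
from collections import deque


class SlidingWindowDetector:
    """Weighted vote over the most recent NN decisions (illustrative sketch)."""

    def __init__(self, window_len=3, threshold=0.6, weights=None):
        self.window = deque(maxlen=window_len)
        # Assumed weights: the newest decision gets the largest weight.
        self.weights = weights or [i + 1 for i in range(window_len)]
        self.threshold = threshold

    def update(self, attack_flag):
        """Add the latest NN decision and return (detected, confirmed).

        detected  : the current decision alone indicates an attack
        confirmed : the weighted indicator over the window reaches the threshold
        """
        self.window.append(1.0 if attack_flag else 0.0)
        recent_weights = self.weights[-len(self.window):]
        indicator = sum(w * d for w, d in zip(recent_weights, self.window))
        indicator /= sum(self.weights)  # normalize by the full window weight
        return bool(attack_flag), indicator >= self.threshold
```

In this sketch a single positive decision only raises `detected`, which would trigger the temporary switch to redundant sensors, while `confirmed` requires agreement across the window, which would trigger sensor isolation. Normalizing by the full window weight keeps an isolated early detection from being confirmed on its own.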

Specifically, in

Figure 11, it is demonstrated that without the stability region check, the closed-loop state leaves

before the cyber-attack is confirmed. However, under the detection system with the boundedness check of

, the closed-loop state is always bounded in

by switching to redundant sensors at the first detection of min-max cyber-attacks. In

Figure 12, it is shown that after the min-max cyber-attack is confirmed at

min, the misbehaving sensor is isolated and the LMPC of Equation (14) starts using the measurement of temperature from redundant sensors and re-stabilizes the system at the origin. The simulations demonstrate that it takes around 0.8 min for the closed-loop state trajectory to enter and remain in

under the LMPC of Equation (14) once the min-max cyber-attack is detected. The corresponding input profiles for the closed-loop system of Equation (

1) under the NN-based detection system with the

check are shown in

Figure 13, where it is observed that a sharp change of

occurs from

min to

min due to the min-max cyber-attack.

Additionally, when both disturbances and min-max cyber-attacks are present, it is demonstrated that the NN-based detection system is still able to detect the min-max cyber-attack and re-stabilize the closed-loop system of Equation (15) in the presence of disturbances by following the same steps as in the pure-cyber-attack case. The bounded disturbances

and

are added in Equation (

15a,

15b) as standard Gaussian white noise with zero mean and variances

kmol/(m

min) and

K/min, respectively. Also, the disturbance terms are bounded as follows:

kmol/(m

min), and

K/min, respectively. The closed-loop state and input profiles are shown in

Figure 14,

Figure 15 and

Figure 16. Specifically, in

Figure 15, it is demonstrated that the min-max cyber-attack occurs at

min and is confirmed at

min before the closed-loop state leaves

. In the presence of disturbances, the misbehaving sensor is isolated and the closed-loop states are driven to a neighborhood around the origin under the LMPC of Equation (14). In

Figure 16, it is demonstrated that the manipulated inputs show variation around the steady-state values (0, 0) when the closed-loop system reaches a neighborhood of the steady-state due to the bounded disturbances.
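Bounded disturbances of the kind used in this case can be generated by clipping zero-mean Gaussian samples. The sketch below is illustrative only: the standard deviation, bound and seed in the usage are hypothetical, since the paper's values are not reproduced here, and note that `random.gauss` takes a standard deviation rather than a variance.

```python
import random


def bounded_gaussian(sigma, bound, rng):
    """Draw a zero-mean Gaussian sample with std sigma, clipped to [-bound, bound]."""
    return max(-bound, min(bound, rng.gauss(0.0, sigma)))


def disturbance_sequence(n_steps, sigma, bound, seed=0):
    """One disturbance value per sampling period, reproducible via the seed."""
    rng = random.Random(seed)
    return [bounded_gaussian(sigma, bound, rng) for _ in range(n_steps)]
```

For example, `disturbance_sequence(1000, sigma=1.0, bound=2.0)` yields a sequence that can be added to the right-hand side of a simulated model at each sampling period.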

Lastly, since the surge cyber-attack of Equation (6) is undetectable by the model-based detection method, we also test the performance of the NN-based detection on the surge cyber-attack due to the similarity between surge cyber-attacks and min-max cyber-attacks (i.e., the surge cyber-attack works as a min-max attack for the first few sampling steps). It is demonstrated in simulations that

of surge cyber-attacks can be detected by the NN-based detection system that is trained for min-max cyber-attacks only, which implies that the NN-based detection method can be applied to many other cyber-attacks with similar properties.

Moreover, when cyber-attacks with different properties are taken into account, for example, the replay attack (i.e., , where X is the set of past measurements of states), the NN-based detection system can still efficiently distinguish the type of cyber-attacks and disturbances by re-training the NN model. The new NN model is built with labeled training data for the case of min-max, replay, nominal and with disturbances, for which the classification accuracy within min is up to 85%. As a result, the NN-based detection model can be readily updated with the data of new cyber-attacks without changing the entire structure of detection or control systems.