1. Introduction

The problems of reconstruction of specially structured matrices from a prescribed set of eigen data are collectively known as inverse eigenvalue problems (IEPs). The level of difficulty of an IEP depends on the structure of the matrices which are to be reconstructed and on the type of eigen information available. M.T. Chu in [

1] gave a detailed characterization of inverse eigenvalue problems. A few special types of inverse eigenvalue problems have been studied in [

2,

3,

4,

5,

6,

7,

8]. Inverse problems for matrices with prescribed graphs have been studied in [

9,

10,

11,

12,

13,

14]. Inverse eigenvalue problems arise in a number of applications such as control theory, pole assignment problems, system identification, structural analysis, mass spring vibrations, circuit theory, mechanical system simulation and graph theory [

1,

12,

15,

16].

In this paper, we study two IEPs, namely IEPP (inverse eigenvalue problem for matrices whose graph is a path) and IEPB (inverse eigenvalue problem for matrices whose graph is a broom). Similar problems were studied in [

5], for arrow matrices. The usual process of solving such problems involves the use of recurrence relations among the leading principal minors of

where

A is the required matrix. However, we have included graphs in our analysis by bringing in the requirement of constructing matrices which are described by graphs. In particular, we have considered paths and brooms. Thus, in addition to recurrence relations among leading principal minors, we have used spectral properties of acyclic matrices to solve the problems. Particularly, the strict interlacing of the eigenvalues in IEPB could be proved because of the fact that the minimal and maximal eigenvalues of an acyclic matrix are simple.

The paper is organized as follows : In

Section 2, we discuss some preliminary concepts and clarify the notations used in the paper. In

Section 3, we define the inverse problems to be studied, namely IEPP and IEPB.

Section 4 deals with the analysis of IEPP, the main result being presented as Theorem 4.

Section 5 deals with the analysis of IEPB, the main result being presented as Theorem 9. In

Section 6, we present some numerical examples to illustrate the solutions of IEPP and IEPB.

2. Preliminary Concepts

Let V be a finite set and let P be the set of all subsets of V which have two distinct elements. Let . Then is said to be a graph with vertex set V and edge set E. To avoid confusion, the vertex set of a graph G is denoted by and the edge set is denoted by . Our choice of P implies that the graphs under consideration are free of multiple edges or loops and are undirected. If and , then we say that is an edge and u and v are then called adjacent vertices. The degree of a vertex u is the number of edges which are incident on u. A vertex of degree one is called a pendant vertex. A path P of G is a sequence of distinct vertices such that consecutive vertices are adjacent. The path on n vertices is denoted by . A graph is said to be connected if there exists a path between every pair of its vertices. A cycle is a connected graph in which each vertex is adjacent to exactly two other vertices. A connected graph without any cycles is called a tree.

Given an

symmetric matrix

A, the graph of A, denoted by

, has vertex set

and edge set

. For a graph

G with

n vertices,

denotes the set of all

symmetric matrices which have

G as their graph. A matrix whose graph is a tree is called an

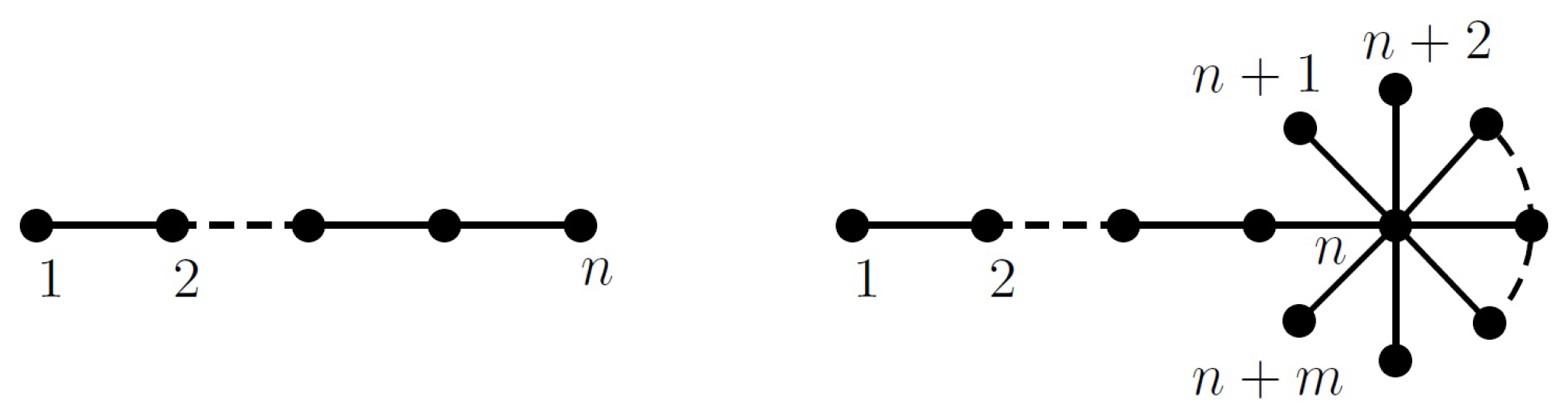

acyclic matrix. Some simple examples of acyclic matrices are the matrices whose graphs are paths or brooms (

Figure 1).

Throughout this paper, we shall use the following notation:

Matrix of a path

will be a tridiagonal matrix with non zero off-diagonal entries :

where the

s are non-zero.

Matrix of a broom

will be of the following form:

where the

s are non zero.

will denote the ith leading principal submatrix of the required matrix ( or ).

, (respectively ) i.e., the ith leading principal minor of (respectively ), being the identity matrix of order i. For the sake of writing the recurrence relations with ease, we define .

3. IEPs to be Studied

In this paper we shall study the following two inverse eigenvalue problems :

IEPP Given n real numbers and a real vector find an matrix such that is an eigenvalue of , and is an eigenpair of .

IEPB Given real numbers and , find an matrix such that and are respectively the minimal and maximal eigenvalues of , .

4. Solution of IEPP

The following Lemma gives the relation between successive leading principal minors of :

Lemma 1. The sequence of characteristic polynomials of satisfies the following recurrence relations: Here denotes the jth leading principal submatrix of , the matrix corresponding to the path on n vertices.

Lemma 2. For any and , and cannot be simultaneously zero.

Proof. If , then , which implies , but this contradicts the restriction on that . Once again, for , if , then the recurrence relation (ii) from Lemma 1, , which gives . This will in turn imply that . Thus, we will end up with , implying that which is a contradiction. □

Lemma 3. If is an eigenvector of corresponding to an eigenvalue λ, then and the components of this eigenvector are given by Proof. Since

is an eigenpair of

, we have

. Comparing the first

rows of this matrix equation on both sides, we have

By the second recurrence relation from Lemma 1,

We define the quantities

as

Multiplying Equation (

2) by

, we get

which gives

Now, from Equation (

1), we have

. Again from Equation (

4),

Proceeding this way, we see that

which can also be written as

. This further implies that

Since

X is an eigenvector,

. If

, then from Equation (

5), we see that all the other components of

X become zero. Thus,

. □

Theorem 4. The IEPP has a unique solution if and only if for all .

The unique solution is given by Proof. Let

for all

. As per the conditions of IEPP,

is an eigenvalue of

for each

. Thus,

.

which gives the expression for calculating

. The expression is valid as

, because by Lemma 2,

and

cannot be simultaneously zero.

Now, since

is an eigenpair of

, so by Equation (

5),

which implies that

Since

,

. Hence the above expression for

is valid and

for all

. Successive use of Equations (

6) and (

7) will give us the values of

and

for

.

Conversely, if there exists a unique solution for IEPP, then since X is an eigenvector of , so by Lemma 3, . The existence of a solution implies that for . It then follows from the expressions in Equation (7) that for . □

5. Solution of IEPB

Lemma 5. Let be a monic polynomial of degree n with all real zeros and and be the minimal and maximal zero of P respectively.If , then .

If , then .

The proof immediately follows after expressing the polynomial as a product of its linear factors.

Lemma 6. If T is a tree, then the minimal and maximal eigenvalues of any matrix are simple i.e., of multiplicity one. [Corollary 6 of Theorem 2 in [17]] In other words, this Lemma says that the minimal and maximal eigenvalues of an acyclic matrix are simple. Again, since for each j, the leading principal submatrix corresponds to a tree so by Lemma 6 the minimal and maximal eigenvalues of must be simple i.e., in particular .

Lemma 7. The sequence of characteristic polynomials of satisfies the following recurrence relations:.

, .

.

Lemma 8. For any and , and cannot be simultaneously zero.

Proof. Same as Lemma 2. □

By Cauchy’s interlacing theorem ([

14,

18]), the eigenvalues of a symmetric matrix and those of any of its principal submatrix interlace each other. Thus,

’s and

’s must satisfy:

Each diagonal element

is also a

principal submatrix of

A. Hence

. Since

and

are the minimal and maximal eigenvalues of

, so

and

. We need to solve these equations successively using the recurrence relations in Lemma 1. For

,

. For

,

which imply that

will always exist as the denominator in the above expression for can never be zero. We have and so if , then by Rolle’s theorem ∃ such that , which is not possible. Thus, . Also, by Lemma 5, and so the expression for is non-negative and so we can get real values of .

Now for

, we have

which gives

Let

denote the determinant of the coefficient matrix of the above system of linear equations in

and

. Then

. If

, then the system will have a unique solution, given by

We claim that the expression for

in RHS is non negative. This follows from Lemma 5. Since

and

, so by Lemma 5,

In addition, by Lemma 2,

and

cannot be simultaneously zero. Thus,

. Similarly,

. This implies that

and

. Thus, we can get non-zero real values of

from Equation (

8) if and only if

and

for all

.

Now, if , then i.e., . Since both the terms in this sum are non negative, we must have and . However, from Lemma 2, and cannot be simultaneously zero. In addition, and cannot be simultaneously zero. Thus, the only possibility is that . However, this will then imply that , which is not possible as by Lemma 6 . Thus, for all .

Again,

and

are the eigenvalues of

and so

and

. Hence,

so we get a system of equations linear in

and

We first investigate the conditions under which the coefficient matrix of the above system is singular. By Cauchy’s interlacing property, we have

Thus,

and

. Let

be the determinant of the coefficient matrix of Equation (

10). Then,

As a consequence of Lemma 5, both the products in the LHS are non-negative and so

for all

. Thus,

will vanish if and only if

will vanish

i.e., if and only if

and

If

, then since

and

is the minimum possible zero of

, we get

. Consequently,

but this contradicts Lemma 8, according to which

and

cannot be simultaneously zero. Hence,

. Similarly, it can be shown that

. Thus there are the following possibilities :

- i.

and .

- ii.

and .

- iii.

and .

- iv.

and .

If (i) happens, then, since

, so from the equations in Equation (

10),

and

. This implies that

for some

and

for some

. However, as per the inequality Equation (

11), it then follows that

and

. Since

and

, so the above equalities imply that

and

. Hence from the recurrence relation (3) of Lemma 7, we get

which implies that

. Similarly, it will follow that

. However,

and so

, but this is not possible as

and

are the minimal and maximal eigenvalues of the acyclic matrix

and by Lemma 6, the minimal and maximal eigenvalues of an acyclic matrix are simple. Hence (i) cannot hold. From the above arguments, it also follows that (iv) cannot hold.

If (ii) holds, then the augmented matrix of the system of Equation (

10) will be of rank one and so the system will have infinite number of solutions. Similarly, if (iii) holds, then the system will have infinite number of solutions. However, if we put the additional constraint that

and

for all

then

and

, so that (ii) and (iii) will not hold.

Thus, we see that

if and only if

Under this constraint, the unique solution of the system Equation (

10) is given by

where

and

.

The above analysis of the IEP can be framed as the following theorem :

Theorem 9. The IEPB has a solution if and only ifand the solution is given by The solution is unique except for the signs of the non-zero off-diagonal entries.

6. Numerical Examples

We apply the results obtained in the previous section to solve the following :

Example 1. Given 7 real numbers and a real vector , find a matrix such that is an eigenvalue of for each and is an eigenpair of .

Solution Using Theorem 1, we obtain the following matrix as the solution :

The eigenvalues of the all the leading principal submatrices are :

Example 2. Given 13 real numbers , rearrange and label them as and and find a matrix such that and are the minimal and maximal eigenvalues of , the jth leading principal sub matrix of .

Solution Using Theorem 9, we rearrange the numbers in the following way

i.e.,

Then, using the expressions for

and

we get

Here we have taken all the s as positive. We can take some of the s as negative also. In fact, we can construct such matrices for the above problem, the only difference being in the signs of the non-zero off-diagonal entries.

We compute the spectra of all the leading principal submatrices of to verify the the conditions of the IEPB are satisfied. The minimal and maximal eigenvalues of each principal submatrix are shown in italics.

7. Conclusions

The inverse eigenvalue problems discussed in this paper require the construction of specially structured matrices from mixed eigendata. The results obtained here provide an efficient way to construct such matrices from given set of some of the eigenvalues of leading principal submatrices of the required matrix.

The problems IEPP and IEPB are significant in the sense that they are partially described inverse eigenvalue problems i.e., they require the construction of matrices from partial information of eigenvalues and eigenvectors. Such partially described problems may occur in computations involving a complicated physical system where it is often difficult to obtain the entire spectrum. Many times, only the minimal and maximal eigenvalues are known in advance. Thus, the study of inverse problems having such prescribed eigen structure are significant. It would be interesting to consider such IEPs for other acyclic matrices as well.