1. Introduction

The inception of edge computing (EC) can be traced back to the early 2000s when the proliferation of mobile devices and the emergence of IoT heralded a paradigm shift in computing requirements [1,2,3]. Traditional cloud computing, while effective for many applications, faced challenges in meeting the demands of latency-sensitive and bandwidth-intensive tasks inherent in IoT deployments [4,5,6]. The concept of edge computing began gaining traction as a solution to these challenges, advocating for the distribution of computational resources closer to the data source [7,8,9]. Over the years, EC has evolved from a theoretical concept to a practical architectural approach, spurred by advancements in hardware miniaturization, networking technologies, and distributed computing algorithms [10,11,12]. While fog computing extended cloud capabilities to the network edge [13,14], EC distinguishes itself by deploying computational resources at the closest proximity to where data are generated or consumed, minimizing latency and enhancing responsiveness [15,16,17]. Today, EC is widely adopted across industries such as manufacturing, healthcare, smart cities, and autonomous vehicles, enabling real-time data processing, reducing network congestion, and enhancing data privacy [18,19].

The rapid proliferation of IoT devices and the emergence of latency-sensitive applications, such as autonomous vehicles and remote healthcare, have escalated the demands on real-time data processing and low-latency communication [20,21,22]. To address these challenges, this research leverages the synergies between edge computing (EC) and 6G networks, aiming to develop innovative solutions for optimizing this integration [23,24,25]. The focus is on enhancing the performance and responsiveness of IoT applications through advanced algorithms and frameworks [26,27,28].

This research is significant for its potential to revolutionize real-time IoT application deployment and operation. By optimizing the integration of EC and 6G networks, it can substantially reduce latency, improve energy efficiency, and optimize resource allocation [29,30,31,32,33]. These advancements will impact diverse domains, from industrial efficiency to remote healthcare and transportation systems, contributing to societal advancements [34,35,36,37,38,39].

The primary objectives of this research include reducing latency, improving energy efficiency, and optimizing resource allocation. These goals will be achieved through the development of intelligent task offloading algorithms, energy-efficient resource allocation schemes, and a joint optimization framework. The contributions of this research aim to advance the state-of-the-art in real-time IoT application optimization, enabling the realization of efficient and responsive IoT ecosystems.

Research Contributions

- Development of intelligent task offloading algorithms: This research aims to contribute by dynamically determining optimal task distribution strategies to minimize latency in real-time IoT applications, addressing the critical need for timely processing.
- Design of energy-efficient resource allocation schemes: Energy-efficient schemes for EC and 6G networks will be proposed, optimizing resource usage while reducing energy consumption and maintaining high performance levels.
- Proposal of a joint optimization framework: A novel framework will be introduced to minimize both latency and energy consumption through the integration of task offloading and resource allocation strategies, ensuring a balanced and efficient approach.
- Investigation of machine learning techniques: Machine learning techniques will be explored to predict network conditions, enhancing decision-making processes in task offloading and resource allocation for real-time IoT applications.

By addressing these key areas, this research seeks to enable robust and scalable real-time IoT systems that leverage the full potential of EC and 6G networks. The outcomes will provide a foundation for advancing IoT applications, fostering innovations that meet the growing demands for efficiency and responsiveness in an interconnected world.

This paper is structured in five sections. The Introduction section provides an overview of the research motives and significance, setting the context for the study. Following this, the Literature Review section explores recent advancements in edge computing (EC) and 6G networks, synthesizing findings from a range of scholarly articles to identify key research trends, challenges, and opportunities in the integration of EC and 6G-enabled Internet of Things (IoT) systems. The Methodology section outlines the systematic approach employed in the study, detailing the selection criteria, data extraction process, and analysis framework. Subsequently, the Results and Discussions section presents the findings of the systematic literature review, discussing key insights, emerging themes, and implications for future research. Finally, the Conclusions section summarizes the main findings of the study, highlights its contributions to the field, and suggests avenues for further investigation.

2. Background

Despite the promising capabilities of 6G networks, several challenges must be addressed to realize their full potential [40,41,42,43]. One significant challenge is the development of communication protocols and technologies capable of supporting ultra-reliable low-latency communication (URLLC) at scale [31,44,45,46,47,48,49]. Achieving the targeted data rates in the terabit-per-second range while ensuring seamless connectivity in dense urban environments and remote rural areas poses another formidable challenge [50,51]. Additionally, ensuring the security and privacy of data transmitted over 6G networks, especially in the context of IoT applications, remains a critical concern. Overcoming these challenges requires interdisciplinary collaboration and innovative approaches to network design, infrastructure deployment, and policy formulation [52,53].

The evolution of wireless communication standards has been marked by successive generations, each promising greater speed, capacity, and reliability. 6G networks represent the next evolutionary leap in this trajectory, building upon the foundations laid by their predecessors [1]. The journey towards 6G began with the advent of 1G networks in the 1980s, which introduced analog voice communication. Subsequent generations, notably 2G, 3G, and 4G, introduced digital voice and data transmission, paving the way for the mobile internet revolution [54]. 5G networks, the current state-of-the-art, introduced unprecedented data rates and ultra-low latency, enabling transformative applications such as autonomous vehicles, augmented reality, and remote surgery [23]. As the capabilities of 5G continue to unfold, attention has turned towards envisioning the requirements and possibilities of 6G networks. Anticipated to be deployed by the end of the decade, 6G networks aim to push the boundaries of wireless communication even further [26]. With predictions of data rates in the terabit-per-second range and latency reduced to microseconds, 6G networks hold the potential to enable revolutionary applications across diverse domains, including IoT, healthcare, entertainment, and beyond [30].

The development of 6G networks is driven by a convergence of technological advancements, including advances in radio frequency spectrum utilization, antenna design, signal processing algorithms, and network architecture. Research efforts are underway worldwide to explore novel communication paradigms, such as terahertz communication, massive MIMO (Multiple Input, Multiple Output), and holographic beamforming, to realize the ambitious goals set forth for 6G. In summary, the evolution of wireless communication from 1G to 6G represents a remarkable journey of innovation and progress, driven by the relentless pursuit of faster, more reliable, and more efficient connectivity [32]. As 6G networks continue to take shape, they hold the promise of reshaping the digital landscape and unlocking unprecedented opportunities for connectivity and communication [33].

The rapid growth of Internet of Things (IoT) applications has necessitated real-time data processing and low-latency communication. However, the current IoT infrastructure faces challenges related to latency, reliability, and scalability. Edge computing has emerged as a potential solution to these challenges by bringing computational resources closer to the data source. Additionally, the upcoming sixth-generation (6G) networks promise ultra-reliable, low-latency communication, further enhancing the capabilities of IoT applications. Despite their individual advantages, the seamless integration of edge computing and 6G networks for real-time IoT applications remains largely unexplored, and there is a need to investigate how the two can jointly address latency, reliability, and scalability in IoT environments. This research aims to combine techniques such as federated learning, containerization, mobile edge computing (MEC), software-defined networking (SDN), network slicing, and edge caching to optimize the performance of real-time IoT applications. It seeks to evaluate the effectiveness of this synergistic integration and provide insights for the development of a more efficient, reliable, and scalable IoT ecosystem.

3. Related Work

Alhammadi et al. [2] discussed the challenges and opportunities of edge computing for 6G Internet of Everything (IoE) applications. IoE, which extends the concept of IoT to include not only devices but also people, processes, and data, is expected to be a key driver of innovation in future wireless networks. By leveraging edge computing technologies, IoE applications can benefit from low-latency, high-bandwidth, and context-aware computing capabilities, enabling new services and experiences in diverse domains such as healthcare, transportation, and entertainment.

Al-Ansi et al. [4] conducted a survey on intelligence edge computing in 6G networks, focusing on its characteristics, challenges, potential use cases, and market drivers. Intelligence edge computing, which refers to the integration of AI and edge computing technologies, enables real-time data processing and analysis at the network edge. By surveying existing literature and industry trends, this research provides valuable insights into the opportunities and challenges of deploying intelligence edge computing in 6G networks.

Alimi et al. [55] introduced the concept of 6G CloudNet, a distributed, autonomous, and federated cloud and edge computing architecture designed to support future wireless networks. CloudNet leverages artificial intelligence (AI) and edge computing technologies to create a dynamic and self-organizing network infrastructure capable of adapting to changing user demands and network conditions. By distributing computing resources across multiple layers of the network, CloudNet aims to improve scalability, reliability, and efficiency in 6G environments.

In their study, Chen et al. [9] addressed the pressing need for efficient task offloading in mobile edge computing (MEC) systems within the context of 6G networks. By optimizing the allocation of computational tasks among multiple vehicles, their proposed approach aims to minimize costs while maximizing system performance. This research is particularly relevant in the era of autonomous vehicles and smart transportation systems, where real-time decision-making at the edge is crucial for ensuring safety and efficiency.

Chen et al. [10] investigated methods to enhance the robustness of object detection algorithms through the integration of 6G vehicular edge computing. Object detection is a critical task in various applications, including autonomous driving, surveillance, and augmented reality. By leveraging the computational resources available at the network edge, this research aims to improve the accuracy and reliability of object detection systems, especially in dynamic and resource-constrained environments such as vehicular networks.

Ergen et al. [13] proposed a novel networking architecture called “Edge on Wheels” with OMNIBUS (Omnidirectional Network Built by Unbiased Sensors) networking for future 6G technology. The OMNIBUS networking leverages unbiased sensors deployed on mobile vehicles to create a dynamic and adaptive wireless network infrastructure. By harnessing the mobility of vehicles and the ubiquity of sensors, this research aims to improve connectivity, coverage, and resilience in 6G networks, especially in urban environments and areas with limited infrastructure.

Ferrag et al. [14] presented a comprehensive survey of edge learning techniques for 6G-enabled Internet of Things (IoT) applications. Edge learning, which refers to the use of machine learning and AI algorithms at the network edge, enables real-time data analysis and decision-making in IoT systems. By surveying existing literature and research trends, this study identifies key vulnerabilities, datasets, and defense mechanisms associated with edge learning in 6G IoT environments, providing valuable insights for researchers and practitioners in the field.

Gong et al. [15] proposed an innovative approach to joint offloading and resource allocation in future 6G industrial Internet of Things (IIoT) scenarios. With the advent of Industry 4.0 and the digitalization of manufacturing processes, there is a need for intelligent offloading strategies that can optimize resource utilization and ensure reliable communication in industrial environments. By leveraging edge intelligence-driven techniques, this research aims to address these challenges and pave the way for more efficient and resilient IIoT deployments.

Fengxian et al. [16] conducted a comprehensive survey of technologies and applications aimed at enabling massive Internet of Things (IoT) deployments in the context of 6G networks. With the exponential growth of connected devices and the proliferation of IoT applications, there is a need for scalable and efficient solutions to support IoT deployments in 6G networks. By examining various aspects such as machine learning, blockchain, and wireless communication protocols, this survey provides valuable insights into the challenges and opportunities of deploying IoT in the era of 6G.

Kaushik et al. [23] explored the synergies between integrated sensing and communications technologies and key enablers of 6G networks for supporting IoT applications. Integrated sensing and communication systems play a crucial role in IoT deployments, enabling real-time data collection, processing, and transmission. By leveraging emerging technologies such as reconfigurable intelligent surfaces (RIS) and massive MIMO, this research aims to enhance the efficiency and reliability of IoT applications in 6G networks. The study highlights the potential benefits of integrating sensing capabilities with 6G communication networks and discusses emerging use cases and technologies in this domain.

Letaief et al. [26] presented a comprehensive overview of edge artificial intelligence (AI) for 6G networks, covering its vision, enabling technologies, and applications. Edge AI, which refers to the deployment of AI algorithms and models at the network edge, enables real-time data processing, analysis, and decision-making in diverse applications. By leveraging advances in edge computing, wireless communication, and AI technologies, this research aims to unleash the full potential of edge AI for a wide range of 6G applications, from smart cities to industrial automation.

Lin et al. [31] proposed an integrated approach to edge intelligence by combining satellite communication with mobile edge computing (MEC) in 6G networks. With the increasing demand for wide-area coverage and low-latency communication, satellite-based edge computing offers a promising solution for extending the reach of edge intelligence to remote and underserved areas. By leveraging minimal structures and systematic thinking, this research aims to optimize the integration of satellite and MEC technologies, thereby enabling more efficient and scalable edge intelligence deployments.

Liu et al. [32] proposed an edge intelligence-based radio access network (RAN) architecture tailored for 6G Internet of Things (IoT) applications. Edge intelligence, which refers to the ability to process and analyze data at the network edge, plays a crucial role in supporting real-time IoT applications with stringent latency requirements. By deploying intelligent edge nodes within the RAN, this research aims to reduce latency, improve scalability, and enhance the overall performance of IoT systems in 6G networks.

Lu et al. [33] introduced an adaptive edge association mechanism designed for wireless digital twin networks operating in 6G environments. Digital twins, virtual representations of physical assets or processes, play a crucial role in various applications, including smart cities, healthcare, and manufacturing. By leveraging reinforcement learning techniques, this research aims to optimize edge association decisions and enhance the performance of digital twin applications in wireless networks. The proposed mechanism adapts to changing network conditions and user requirements, ensuring efficient resource allocation and reliable communication in dynamic environments.

Malik et al. [35] delved into the realm of fog computing, focusing on its energy-efficient applications in 6G-enabled massive Internet of Things (IoT) environments. Fog computing, characterized by its decentralized architecture and proximity to end devices, offers promising solutions for mitigating energy consumption and enhancing scalability in IoT systems. By surveying recent trends and opportunities in fog computing, the study sheds light on novel strategies to address the energy challenges associated with deploying IoT devices at scale.

Mukherjee et al. [36] explored the synergies between reconfigurable intelligent surfaces (RIS) and mobile edge computing (MEC) in future wireless networks, with a focus on their potential applications in 6G technology. RIS, also known as intelligent reflecting surfaces or metasurfaces, can manipulate electromagnetic waves to enhance wireless communication performance. By integrating RIS with MEC, this research aims to improve network capacity, coverage, and energy efficiency, paving the way for more reliable and sustainable 6G networks.

Qadir et al. [40] provided a comprehensive overview of recent advances, use cases, and open challenges in the context of 6G Internet of Things (IoT) technologies. As the next generation of wireless communication networks, 6G is expected to revolutionize IoT applications by providing ultra-reliable, low-latency connectivity to a massive number of devices. This review discusses various aspects of 6G IoT, including network architecture, communication protocols, security, and privacy considerations, offering valuable insights for researchers and practitioners in the field.

Qi et al. [41] investigated the design and optimization of integrated sensing and communications systems for IoT applications in 6G wireless networks. With the proliferation of IoT devices and applications, there is a growing demand for efficient and reliable communication solutions that can support diverse sensing modalities and data types. By leveraging advances in wireless communication technologies and optimization techniques, this research aims to design scalable and robust sensing and communication systems for 6G IoT deployments.

Rodrigues et al. [42] explored the innovative concept of cybertwin technology and its application in optimizing task offloading in mobile multiaccess edge computing (MEC) setups tailored for 6G networks. By creating digital replicas of physical assets or processes, cybertwins offer a virtual platform for simulating and analyzing real-world scenarios. Leveraging cybertwin-based offloading techniques, this research aims to improve resource utilization and enhance the overall performance of edge computing applications in dynamic and heterogeneous environments.

Sekaran et al. [43] conducted a survival study on blockchain-based 6G-enabled mobile edge computation for IoT automation. Blockchain technology, known for its decentralized and tamper-resistant nature, has gained significant attention for its potential applications in IoT and edge computing. By combining blockchain with mobile edge computation, this research explores ways to enhance the security, reliability, and scalability of IoT systems in 6G networks, especially in dynamic and resource-constrained environments.

Singh et al. [45] conducted a scoping review on 6G networks for artificial intelligence (AI)-enabled smart cities applications. Smart cities, which leverage IoT, AI, and other advanced technologies to improve urban living and sustainability, are expected to be a major application area for 6G networks. By analyzing existing literature and case studies, this research provides insights into the potential benefits, challenges, and emerging trends in deploying 6G networks for smart city applications.

Wang et al. [46] presented a smart semipartitioned real-time scheduling strategy for mixed-criticality systems deployed in 6G-based edge computing environments. Mixed-criticality systems, which consist of tasks with different levels of importance and urgency, are common in various applications, including automotive systems, industrial automation, and healthcare. By partitioning the system resources and employing intelligent scheduling algorithms, this research aims to ensure the timely and reliable execution of critical tasks while maximizing resource utilization and efficiency.

Wei et al. [47] explored the potential of reinforcement learning (RL)-empowered mobile edge computing (MEC) for edge intelligence applications in 6G networks. RL, a subfield of machine learning, enables intelligent agents to learn optimal decision-making policies through interaction with their environment. By integrating RL techniques into MEC systems, this research aims to enhance the autonomy, adaptability, and intelligence of edge computing platforms, enabling them to better support diverse applications and services in 6G networks.

Weinstein [48] presented an innovative approach to network edge intelligence by proposing a distributed software-defined networking (SDN) architecture for future 6G networks. With the increasing demand for intelligent network management and optimization, SDN-based solutions offer a flexible and programmable framework for orchestrating network resources and services. By decentralizing network intelligence and enabling distributed decision-making at the network edge, this research aims to improve the scalability, reliability, and efficiency of 6G networks.

Yang et al. [49] focused on the integration of edge intelligence into 6G wireless systems to support autonomous driving applications. With the proliferation of connected vehicles and advanced driver assistance systems (ADAS), there is a growing demand for real-time decision-making capabilities at the network edge. By harnessing the computational power of edge computing, this research aims to enable autonomous vehicles to make split-second decisions based on sensor data and environmental conditions, thereby enhancing safety and efficiency on the roads.

Zhang [51] provided insights into mobile edge computing (MEC) for beyond 5G/6G wireless networks. MEC, a paradigm closely related to fog computing, brings computational capabilities closer to end-users and IoT devices, enabling low-latency, high-bandwidth, and context-aware services. By analyzing the latest advancements and emerging trends in MEC, this research sheds light on the potential benefits and challenges of deploying MEC in future wireless networks, including 6G, and identifies opportunities for further research and development.

Zhu [53] provided an overview of the integration of sensing, communication, and computation at the wireless network edge, with a focus on pushing artificial intelligence (AI) capabilities to the edge in the context of 6G networks. By leveraging advances in sensor technologies, wireless communication protocols, and AI algorithms, this research aims to enable intelligent decision-making and data processing at the network edge, paving the way for more efficient and responsive 6G wireless networks.

Table 1 shows a comparison of research studies on edge computing and 6G networks.

4. Methodology

In this research, we employ a comprehensive methodology to optimize the integration of edge computing (EC) and 6G networks for real-time Internet of Things (IoT) applications. Our approach focuses on developing intelligent algorithms for task offloading, designing energy-efficient resource allocation schemes, and implementing a joint optimization framework. Additionally, advanced machine learning techniques are leveraged to enhance decision-making processes. By combining theoretical analysis, simulation experiments, and performance evaluations, this methodology aims to address the challenges of latency, reliability, and scalability in IoT environments, ultimately contributing to the development of a more efficient and responsive IoT ecosystem.

Edge computing in 6G networks focuses on distributing computational resources closer to the data source, minimizing latency, and enhancing the responsiveness of real-time IoT applications. The main purpose of this work is to create a robust framework that optimizes task distribution and resource utilization while addressing energy efficiency and latency challenges.

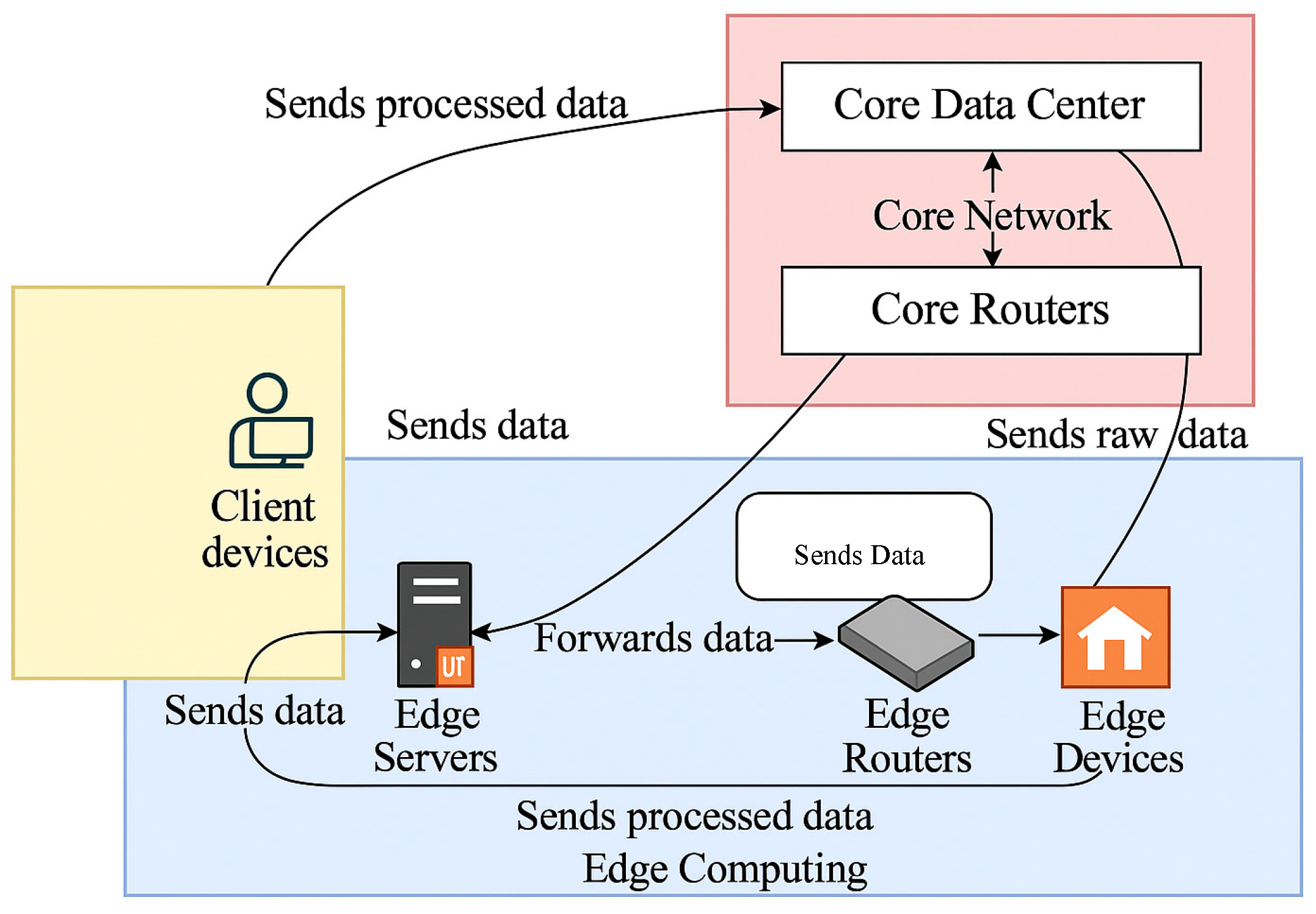

Figure 1 illustrates the integration of edge computing and 6G networks, showcasing the flow of data from IoT devices through various stages such as preprocessing, feature extraction, and resource optimization, ultimately leading to efficient task execution and performance monitoring.

The optimization problem aims to minimize total latency and energy consumption for IoT task offloading. The objective function is defined as

$$\min_{x,\,f,\,B}\ \ \alpha \sum_{i=1}^{N}\left(\frac{D_i}{B_i}+\frac{C_i}{f_i}\right)+(1-\alpha)\sum_{i=1}^{N}\left(P^{\mathrm{tx}}\frac{D_i}{B_i}+P_i^{\mathrm{comp}}\frac{C_i}{f_i}\right) \tag{1}$$

It is subject to the following constraints:

$$\sum_{j=1}^{M} x_{ij}=1,\qquad x_{ij}\in\{0,1\},\qquad \forall i \tag{2}$$

$$\sum_{i=1}^{N} f_i\le F_{\max},\qquad \sum_{i=1}^{N} B_i\le B_{\max} \tag{3}$$

$$f_i\ge 0,\qquad B_i\ge 0,\qquad \forall i \tag{4}$$

where

- $N$: Total number of tasks to be offloaded.
- $M$: Total number of edge servers available.
- $D_i$: Data size of task $i$ (in MB).
- $B_i$: Bandwidth allocated for task $i$ (in Mbps).
- $C_i$: Computational complexity of task $i$ (in CPU cycles).
- $f_i$: Processing power allocated to task $i$ (in GHz).
- $P^{\mathrm{tx}}$: Power consumed during data transmission (in Watts).
- $P_i^{\mathrm{comp}}$: Power consumed during computation for task $i$ (in Watts).
- $\alpha$: Weighting factor for latency (ranges from 0 to 1).
- $x_{ij}$: Binary decision variable indicating whether task $i$ is assigned to edge server $j$.
- $F_{\max}$: Maximum processing power available (in GHz).
- $B_{\max}$: Maximum bandwidth available (in Mbps).

The objective function (1) minimizes a weighted sum of latency and energy consumption. The first term considers the total latency, including data transmission latency ($D_i/B_i$) and computation latency ($C_i/f_i$). The second term accounts for the total energy consumption, including transmission energy ($P^{\mathrm{tx}} D_i/B_i$) and computation energy ($P_i^{\mathrm{comp}} C_i/f_i$).

Equation (2) ensures that each task $i$ is assigned to exactly one edge server $j$. Equation (3) enforces that the total allocated processing power ($\sum_i f_i$) and bandwidth ($\sum_i B_i$) do not exceed their respective maximum limits. Equation (4) ensures that processing power and bandwidth allocations are non-negative. This methodology establishes the foundation for achieving optimal task distribution and resource utilization in edge computing environments integrated with 6G networks.
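As a rough illustration of how objective (1) and constraints (2) to (4) could be evaluated for a candidate allocation, consider the following Python sketch. The `Task` dataclass, the function names, and every numeric value are hypothetical and chosen only for illustration. The unit conversions (8 bits per byte, and 1e9 cycles per second per GHz) are also assumptions, made so that the stated units of MB, Mbps, CPU cycles, and GHz combine into seconds and joules.

```python
from dataclasses import dataclass


@dataclass
class Task:
    data_mb: float         # data size of the task, in MB
    cycles: float          # computational complexity, in CPU cycles
    bandwidth_mbps: float  # bandwidth allocated to the task, in Mbps
    cpu_ghz: float         # processing power allocated to the task, in GHz
    p_comp_w: float        # power drawn while computing this task, in W


def latencies_s(task):
    """Return (transmission latency, computation latency) in seconds."""
    tx = task.data_mb * 8.0 / task.bandwidth_mbps   # MB -> Mb, then divide by Mbps
    comp = task.cycles / (task.cpu_ghz * 1e9)       # cycles / (cycles per second)
    return tx, comp


def weighted_cost(tasks, alpha, p_tx_w):
    """Weighted sum of total latency and total energy, as in objective (1)."""
    total_latency = 0.0
    total_energy = 0.0
    for task in tasks:
        tx, comp = latencies_s(task)
        total_latency += tx + comp
        total_energy += p_tx_w * tx + task.p_comp_w * comp
    return alpha * total_latency + (1.0 - alpha) * total_energy


def is_feasible(tasks, assignment, n_servers, f_max_ghz, b_max_mbps):
    """Check constraints (2)-(4); assignment[i] is the server index of task i."""
    # Storing one index per task encodes "exactly one server per task".
    one_server_each = len(assignment) == len(tasks) and all(
        0 <= j < n_servers for j in assignment
    )
    within_cpu = sum(t.cpu_ghz for t in tasks) <= f_max_ghz
    within_bw = sum(t.bandwidth_mbps for t in tasks) <= b_max_mbps
    non_negative = all(t.cpu_ghz >= 0 and t.bandwidth_mbps >= 0 for t in tasks)
    return one_server_each and within_cpu and within_bw and non_negative


# Illustrative numbers only.
tasks = [
    Task(data_mb=2.0, cycles=1e9, bandwidth_mbps=16.0, cpu_ghz=1.0, p_comp_w=5.0),
    Task(data_mb=1.0, cycles=2e9, bandwidth_mbps=8.0, cpu_ghz=2.0, p_comp_w=5.0),
]
print(weighted_cost(tasks, alpha=0.5, p_tx_w=1.0))  # 8.0
print(is_feasible(tasks, [0, 1], n_servers=2, f_max_ghz=4.0, b_max_mbps=30.0))  # True
```

Representing the assignment as one server index per task, rather than as a full binary matrix, is a design shortcut: it makes the one-server-per-task constraint hold by construction, so only the index range needs checking.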

4.1. Intelligent Task Offloading Algorithm

The intelligent task offloading algorithm is a critical component in the integration of edge computing (EC) and 6G networks for real-time IoT applications. Our approach focuses on minimizing latency and energy consumption by dynamically determining the optimal task offloading strategy. It incorporates machine learning techniques to adapt to changing network conditions and optimize resource utilization. We propose an adaptive task offloading algorithm that leverages reinforcement learning to make real-time decisions based on the current network state and resource availability. The algorithm optimizes latency and energy consumption simultaneously, weighing the trade-off between them, and takes into account contextual information such as device mobility, network congestion, and task priority to make informed offloading decisions.

The total latency in an IoT system, $L_{\mathrm{total}} = L_{\mathrm{trans}} + L_{\mathrm{comp}}$, is the sum of the data transmission latency $L_{\mathrm{trans}}$ and the computation latency $L_{\mathrm{comp}}$. The transmission latency is modeled as $L_{\mathrm{trans}} = D/B + \tau$, where $D$ is the data size, $B$ is the bandwidth, and $\tau$ is the propagation delay. The computation latency is defined as $L_{\mathrm{comp}} = C/f$, where $C$ is the computational complexity, and $f$ is the processing power.

The total energy consumption $E_{\mathrm{total}}$ includes both the computation energy and the transmission energy, which can be modeled as

$$E_{\mathrm{total}} = P^{\mathrm{comp}}\,\frac{C}{f} + P^{\mathrm{tx}}\,\frac{D}{B}$$

where $P^{\mathrm{comp}}$ is the power during computation and $P^{\mathrm{tx}}$ is the power during transmission.

The objective function to minimize both latency and energy consumption is given by

$$\min\ \ \alpha\, L_{\mathrm{total}} + (1-\alpha)\, E_{\mathrm{total}}$$

where $\alpha \in [0, 1]$ is the weighting factor for latency. This objective is subject to the constraints that ensure task allocation integrity and compliance with resource availability limitations, as defined in Equation (6):

$$\sum_{j=1}^{M} x_{ij}=1\ \ \forall i,\qquad \sum_{i=1}^{N} f_i\le F_{\max},\qquad \sum_{i=1}^{N} B_i\le B_{\max},\qquad f_i,\,B_i\ge 0 \tag{6}$$

Here $x_{ij}$ is the binary variable indicating whether task $i$ is assigned to edge server $j$, and $F_{\max}$ and $B_{\max}$ are the maximum available processing power and bandwidth.

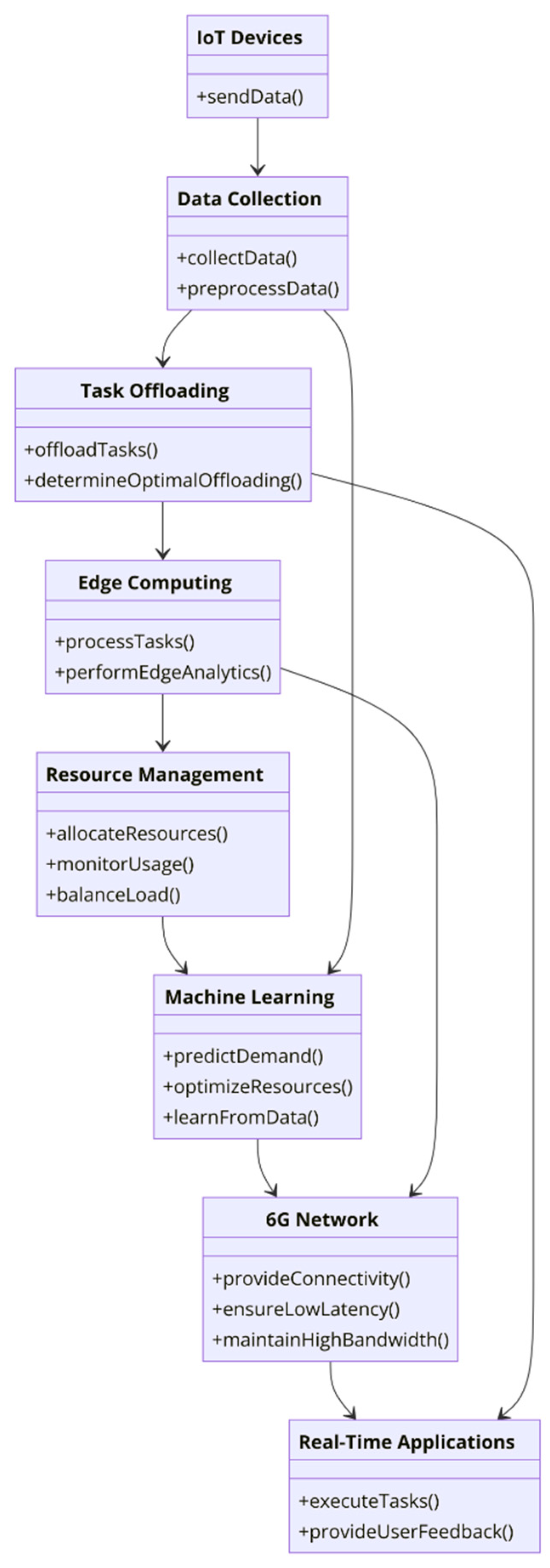

By solving these equations and implementing the reinforcement learning-based task offloading algorithm, we aim to achieve optimal performance in terms of latency and energy efficiency for real-time IoT applications in 6G networks. The architectural diagram of the intelligent task offloading algorithm, as depicted in Figure 2, demonstrates the systematic approach to optimizing task distribution across edge computing resources. This figure highlights the various stages involved, including data collection, preprocessing, resource allocation, and performance monitoring.
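To make the idea of learning an offloading policy from the network state more concrete, the following Python sketch trains a tabular, epsilon-greedy agent on a toy environment. It is not the algorithm of Figure 2: it is a one-step (contextual bandit) simplification of Q-learning with a zero discount factor, the state is reduced to a two-level congestion flag, and all constants (bandwidths, powers, clock rates, learning rate) are hypothetical. The reward is the negative of the weighted latency and energy cost described above, with propagation delay omitted.

```python
import random

ALPHA = 0.5                          # latency weight (assumed value)
BANDWIDTH_MBPS = {0: 40.0, 1: 2.0}   # state 0 = low congestion, 1 = high congestion
LOCAL, OFFLOAD = 0, 1


def cost(state, action):
    """Weighted latency/energy cost of one task under the toy model."""
    cycles, data_mb = 1e9, 1.0
    if action == LOCAL:
        latency = cycles / (1.0 * 1e9)            # 1 GHz device CPU
        energy = 2.0 * latency                    # 2 W while computing locally
    else:
        tx = data_mb * 8.0 / BANDWIDTH_MBPS[state]
        latency = tx + cycles / (10.0 * 1e9)      # 10 GHz edge server
        energy = 1.0 * tx                         # 1 W device radio while sending
    return ALPHA * latency + (1.0 - ALPHA) * energy


def train(steps=2000, lr=0.1, epsilon=0.1, seed=0):
    """Learn Q[state][action] with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {s: [0.0, 0.0] for s in BANDWIDTH_MBPS}
    for _ in range(steps):
        state = rng.choice([0, 1])                # congestion level of this step
        if rng.random() < epsilon:
            action = rng.choice([LOCAL, OFFLOAD])
        else:
            action = max((LOCAL, OFFLOAD), key=lambda a: q[state][a])
        reward = -cost(state, action)
        # One-step update: no bootstrapping, since each task is its own episode.
        q[state][action] += lr * (reward - q[state][action])
    return q


def policy(q):
    """Greedy action per state."""
    return {s: max((LOCAL, OFFLOAD), key=lambda a: q[s][a]) for s in q}


print(policy(train()))
```

With these particular constants, offloading is cheaper when the link is uncongested and local execution is cheaper when it is congested, so the learned greedy policy should offload in state 0 and run locally in state 1. A fuller treatment would add device mobility and task priority to the state and use a non-zero discount factor when decisions affect future queueing.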

4.2. Energy-Efficient Resource Allocation Scheme

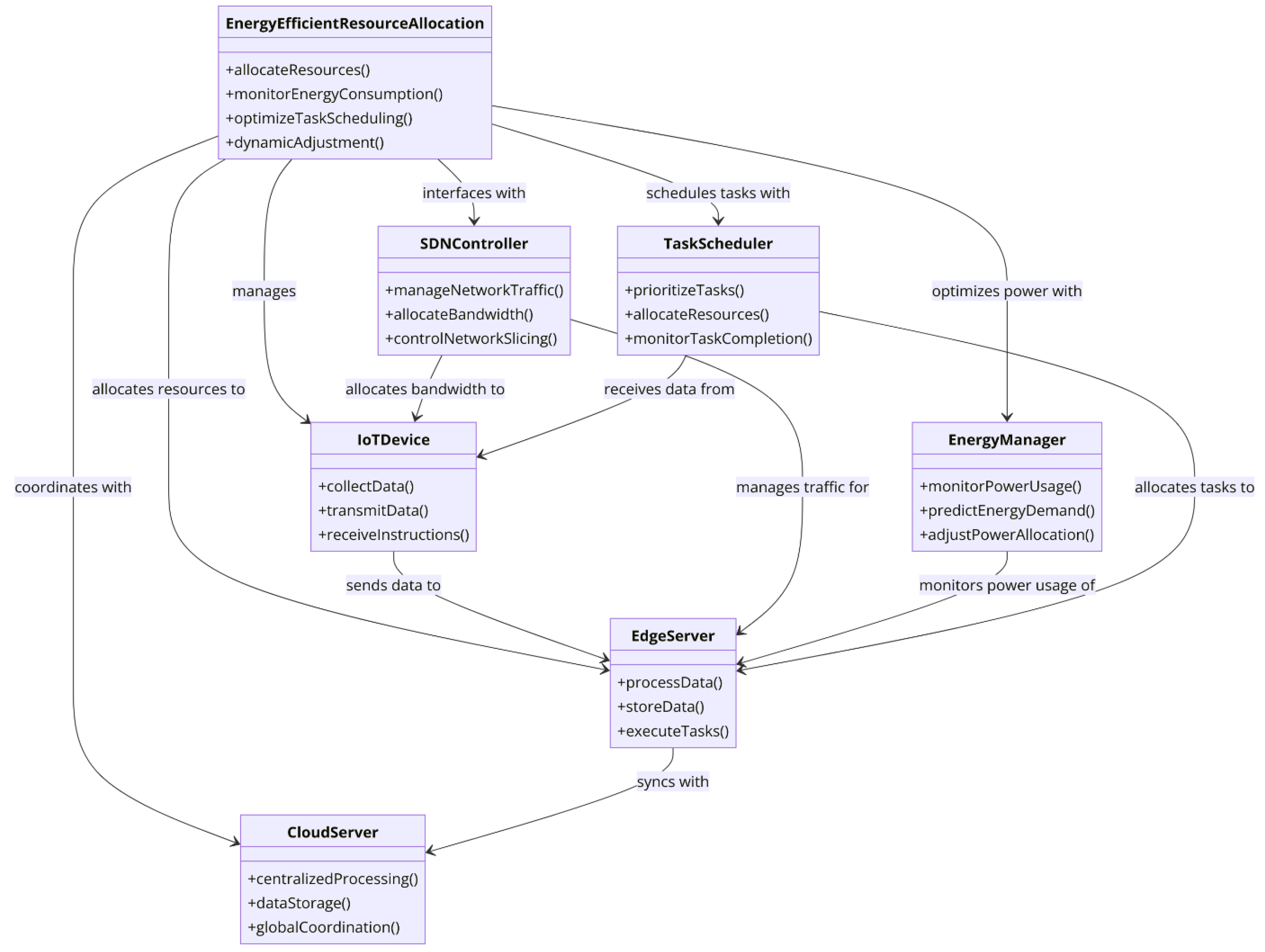

The energy-efficient resource allocation scheme is designed to optimize the usage of computation and communication resources within edge computing and 6G networks, aiming to minimize the total energy consumption while maintaining high performance levels for real-time IoT applications. This section presents the detailed mathematical models and equations underpinning our approach. We propose three components: (i) deep learning models that predict resource demand and dynamically allocate resources based on real-time network conditions; (ii) a hierarchical resource management framework that coordinates resource allocation between the central cloud, edge servers, and IoT devices; and (iii) an energy-aware scheduling algorithm that prioritizes tasks based on their energy consumption profiles and deadlines. The UML diagram for the energy-efficient resource allocation scheme shown in Figure 3 outlines the structure and interactions of the components involved in managing and optimizing resource usage. This diagram provides a clear representation of the relationships and methods employed to achieve energy efficiency in resource allocation.

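The total energy consumption in an edge computing system consists of computation energy and transmission energy. The energy consumed for computation, $E_{\text{comp}}$, is

$$E_{\text{comp}} = P_{\text{comp}} \cdot \frac{C}{f},$$

where $P_{\text{comp}}$ is the power consumption during computation, $C$ is the computational complexity, and $f$ is the processing power allocated.

The energy consumed for data transmission, $E_{\text{trans}}$, is

$$E_{\text{trans}} = P_{\text{trans}} \cdot \frac{D}{B},$$

where $P_{\text{trans}}$ is the power consumption during transmission, $D$ is the data size, and $B$ is the bandwidth allocated.

The objective function used to minimize the total energy consumption over $N$ tasks can be expressed as

$$\min_{\{f_i,\, B_i\}} \; \sum_{i=1}^{N} \left( E_{\text{comp},i} + E_{\text{trans},i} \right).$$

It is subject to constraints on computation and communication resources, such as

$$\sum_{i=1}^{N} f_i \le F_{\max}, \qquad \sum_{i=1}^{N} B_i \le B_{\max},$$

where $F_{\max}$ and $B_{\max}$ denote the total processing power and bandwidth available.

A machine learning model can be incorporated for dynamic resource allocation by predicting $f_i$ and $B_i$ for each task based on its characteristics. By solving these equations and implementing the proposed energy-efficient resource allocation scheme, we aim to achieve optimal resource utilization and energy efficiency for real-time IoT applications in 6G networks.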
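As a minimal sketch of the energy model described above, the following Python fragment evaluates the per-task energy as power multiplied by duration (computation time taken as complexity divided by processing rate, transmission time as data size divided by bandwidth) and checks the capacity constraints. The `Task` fields, the proportional allocation rule, and all numeric values are hypothetical and serve only as an illustration; they are not the allocation policy learned by the proposed scheme.

```python
from dataclasses import dataclass


@dataclass
class Task:
    complexity: float  # CPU cycles required by the task
    data_size: float   # bits to transmit
    p_comp: float      # watts drawn while computing
    p_trans: float     # watts drawn while transmitting


def task_energy(task, f, b):
    """Energy in joules for one task given processing rate f (cycles/s) and bandwidth b (bits/s)."""
    e_comp = task.p_comp * task.complexity / f
    e_trans = task.p_trans * task.data_size / b
    return e_comp + e_trans


def proportional_allocation(tasks, f_max, b_max):
    """Hypothetical baseline: share f_max by complexity and b_max by data size."""
    total_c = sum(t.complexity for t in tasks)
    total_d = sum(t.data_size for t in tasks)
    return [(f_max * t.complexity / total_c, b_max * t.data_size / total_d) for t in tasks]


def total_energy(tasks, allocation, f_max, b_max):
    """Sum the per-task energy after checking both capacity constraints."""
    tol = 1e-9  # relative slack for floating-point rounding
    if sum(f for f, _ in allocation) > f_max * (1 + tol):
        raise ValueError("processing capacity exceeded")
    if sum(b for _, b in allocation) > b_max * (1 + tol):
        raise ValueError("bandwidth capacity exceeded")
    return sum(task_energy(t, f, b) for t, (f, b) in zip(tasks, allocation))


# Illustrative values only.
tasks = [Task(2e9, 4e6, 2.0, 0.5), Task(1e9, 8e6, 2.0, 0.5)]
alloc = proportional_allocation(tasks, f_max=3e9, b_max=12e6)
energy = total_energy(tasks, alloc, f_max=3e9, b_max=12e6)
```

A learned predictor would replace `proportional_allocation`; the constraint check and energy evaluation stay the same, which makes it straightforward to compare candidate allocation policies on equal terms.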

4.3. Joint Optimization Framework

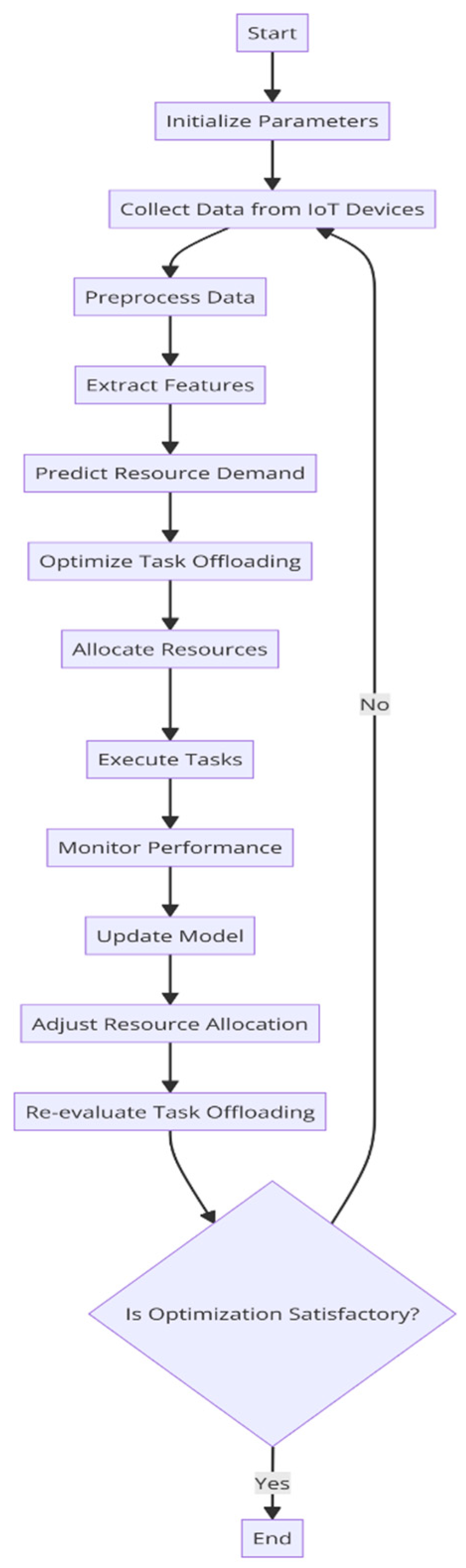

The joint optimization framework is designed to simultaneously minimize the total latency and energy consumption of real-time IoT applications by integrating task offloading and resource allocation strategies. This comprehensive approach ensures that the benefits of both edge computing and 6G networks are fully utilized. Our approach leverages advanced optimization techniques and machine learning to dynamically adapt to network conditions and resource availability. We propose a multi-objective optimization framework (Figure 4) that balances the trade-offs between latency and energy consumption. The framework employs reinforcement learning to dynamically adapt to changing network states and optimize resource usage in real time, incorporating contextual information such as device mobility, network congestion, and task priorities into the optimization process.

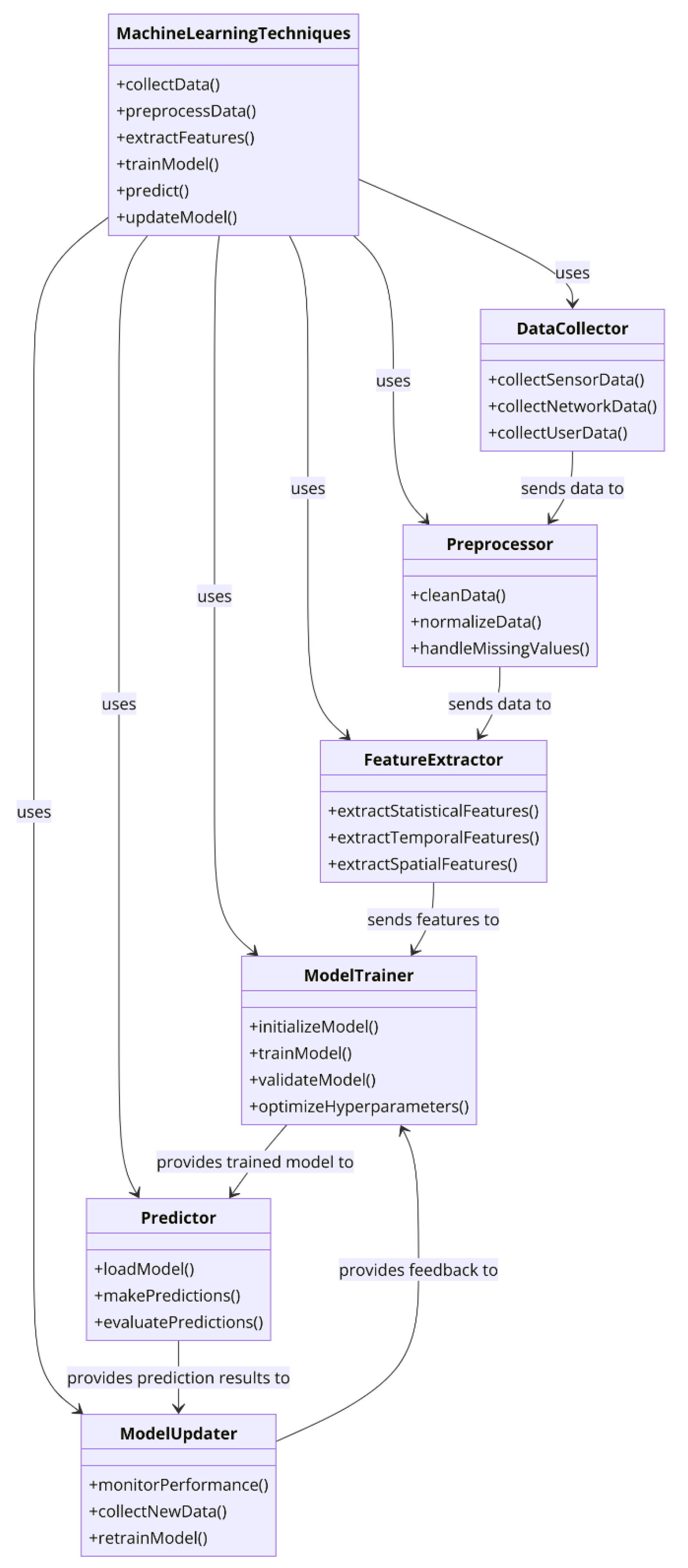

4.4. Machine Learning Techniques for Enhanced Decision-Making

To optimize task offloading and resource allocation in real-time IoT applications, we propose a novel machine learning model named Adaptive Resource Management and Offloading (ARMO). ARMO combines two techniques. Deep learning, trained in a supervised manner, predicts resource demand so that resource allocation can be adapted dynamically based on real-time data. Reinforcement learning continuously learns and improves task offloading strategies as network conditions and workloads change. Integrating supervised learning for initial predictions with reinforcement learning for continuous adaptation combines the strengths of both approaches and supports efficient, adaptive resource management in edge computing and 6G networks.

By integrating these advanced machine learning techniques, the ARMO model provides a robust framework for enhancing decision-making processes in real-time IoT applications, optimizing resource allocation, and improving overall system performance in edge computing and 6G networks. The UML diagram for machine learning techniques for enhanced decision-making, presented in Figure 5, illustrates the components and their interactions within the machine learning framework. This diagram highlights how machine learning algorithms are integrated to enhance decision-making processes in complex systems.

The ARMO model combines supervised deep learning and reinforcement learning (RL) into a hybrid framework. Initially, supervised learning is used to predict bandwidth, CPU cycles, and task size distributions from historical IoT data. Then, a Deep Q-Network (DQN) is applied for dynamic decision-making in task offloading and resource allocation. The RL agent receives a reward based on latency minimization and energy efficiency and, over time, learns an optimal offloading and resource allocation policy. The model continuously refines its policy using a feedback loop that monitors real-time network performance. This hybrid approach allows ARMO to function in both stable and dynamic network conditions with high accuracy.
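The reward-driven offloading decision can be illustrated with a deliberately simplified sketch. It uses tabular Q-learning rather than a deep Q-network, a two-state congestion model, and a reward equal to the negative weighted sum of latency and energy. The state set, the cost table, the weights, and the hyperparameters are all hypothetical and chosen only to make the example self-contained; they are not taken from the ARMO implementation.

```python
import random

ACTIONS = ("local", "offload")

# Hypothetical (latency in s, energy in J) per congestion state and action.
COSTS = {
    "low": {"local": (0.8, 1.2), "offload": (0.3, 0.5)},
    "high": {"local": (0.8, 1.2), "offload": (1.8, 1.4)},
}
STATES = tuple(COSTS)


def reward(state, action, w_latency=0.5, w_energy=0.5):
    """Negative weighted cost, so lower latency and energy give a higher reward."""
    latency, energy = COSTS[state][action]
    return -(w_latency * latency + w_energy * energy)


def train(steps=20000, lr=0.1, gamma=0.5, epsilon=0.1, seed=0):
    """Epsilon-greedy tabular Q-learning over the toy congestion model."""
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in STATES}
    state = rng.choice(STATES)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
        # Assumption: congestion evolves independently of the chosen action.
        next_state = rng.choice(STATES)
        target = reward(state, action) + gamma * max(q[next_state].values())
        q[state][action] += lr * (target - q[state][action])
        state = next_state
    return q


q_table = train()
policy = {s: max(ACTIONS, key=lambda a: q_table[s][a]) for s in STATES}
```

In this toy setting the learned policy offloads when congestion is low and computes locally when it is high. A DQN replaces the table with a neural network so that continuous features such as predicted bandwidth and CPU demand can serve as the state.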

The ARMO model is designed to optimize resource allocation and task offloading in edge computing environments integrated with 6G networks. It employs machine learning techniques to adapt dynamically to changing network conditions and to optimize performance metrics such as latency and energy consumption.

Figure 6 illustrates the detailed architectural flowchart of the ARMO model.

4.5. Theoretical Analysis and Simulation Experiments

In this section, we present the results of theoretical analyses and simulation experiments conducted to evaluate the effectiveness of our proposed models and algorithms. The theoretical analysis provides a mathematical foundation for understanding the performance metrics, while the simulation experiments validate these theoretical results under various network scenarios and conditions. The theoretical analysis focuses on deriving the performance metrics such as latency, energy consumption, and resource utilization.

The ARMO model was validated using a realistic 6G network simulation framework built in Python 3.10 and NS-3 (version 3), incorporating over 100 edge nodes and 1000 task instances with variable computational demands. Although real-world deployment was not performed in this study, the simulation environment mimicked real-world latency, power usage, and bandwidth variability using datasets modeled after [ITU-T network traces]. For real-world validation, future work will involve hardware-in-the-loop testing using edge devices such as NVIDIA Jetson Nano and Raspberry Pi clusters, integrated with real IoT sensors (e.g., temperature, traffic cameras). This phase will allow performance testing under real energy constraints and task unpredictability. We use the following parameters for our analysis:

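$N$: Number of tasks;

$M$: Number of edge devices;

$D_i$: Data size of task $i$;

$C_i$: Computational complexity of task $i$;

$B_i$: Bandwidth allocated to task $i$;

$f_i$: Processing power allocated to task $i$;

$T_{\text{prop},i}$: Propagation delay for task $i$;

$P_{\text{comp},i}$: Power consumption during computation for task $i$;

$P_{\text{trans},i}$: Power consumption during data transmission for task $i$;

$\alpha$: Weighting factor for latency;

$\beta$: Weighting factor for energy consumption.

The total latency $L_{\text{total}}$ and energy consumption $E_{\text{total}}$ are given by

$$L_{\text{total}} = \sum_{i=1}^{N} \left( \frac{D_i}{B_i} + \frac{C_i}{f_i} + T_{\text{prop},i} \right), \qquad E_{\text{total}} = \sum_{i=1}^{N} \left( P_{\text{comp},i} \frac{C_i}{f_i} + P_{\text{trans},i} \frac{D_i}{B_i} \right).$$

The optimization objective is to minimize the weighted sum of latency and energy consumption:

$$\min_{\{f_i,\, B_i\}} \; \alpha L_{\text{total}} + \beta E_{\text{total}}.$$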
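To make the weighted objective concrete, the sketch below evaluates total latency, total energy, and their weighted sum for a set of tasks, and then grid-searches how a bandwidth budget might be split between two tasks. It assumes that per-task latency is the sum of transmission time, computation time, and propagation delay, and that energy is power multiplied by the corresponding duration. The dictionary keys, the helper `best_bandwidth_split`, and all numeric values are hypothetical.

```python
def total_latency(tasks):
    """Transmission time + computation time + propagation delay, summed over tasks."""
    return sum(t["D"] / t["B"] + t["C"] / t["f"] + t["T_prop"] for t in tasks)


def total_energy(tasks):
    """Computation energy + transmission energy, summed over tasks."""
    return sum(
        t["P_comp"] * t["C"] / t["f"] + t["P_trans"] * t["D"] / t["B"] for t in tasks
    )


def objective(tasks, alpha, beta):
    """Weighted sum of total latency and total energy."""
    return alpha * total_latency(tasks) + beta * total_energy(tasks)


def best_bandwidth_split(tasks, b_total, alpha, beta, grid=99):
    """Grid-search the share of b_total given to the first of exactly two tasks."""
    best = None
    for k in range(1, grid + 1):
        share = k / (grid + 1)
        trial = [
            dict(tasks[0], B=share * b_total),
            dict(tasks[1], B=(1 - share) * b_total),
        ]
        score = objective(trial, alpha, beta)
        if best is None or score < best[1]:
            best = (share, score)
    return best


# Illustrative values only: two identical tasks.
base = {"D": 8e6, "C": 2e9, "B": 16e6, "f": 4e9,
        "T_prop": 0.01, "P_comp": 2.0, "P_trans": 0.5}
tasks = [dict(base), dict(base)]
score = objective(tasks, alpha=0.6, beta=0.4)
share, best_score = best_bandwidth_split(tasks, b_total=32e6, alpha=0.6, beta=0.4)
```

For two identical tasks the search settles on an even split, as expected from symmetry. Changing the two weights shifts the balance between latency and energy without altering the structure of the search.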

The simulation experiments are conducted using a simulated 6G network environment with edge computing capabilities. We evaluate the performance of our proposed ARMO model and algorithms under different network conditions and parameters. The simulation parameters used in this study are detailed in Table 2. They include the number of tasks, edge devices, data sizes, computational complexities, bandwidth, processing power, propagation delays, and power consumption during computation and transmission, as well as the two weighting factors used in the optimization objective and the simulation duration.

4.6. Performance Evaluations

To validate the effectiveness of our proposed models and algorithms, we conduct comprehensive performance evaluations based on various metrics. These metrics assess the efficiency and robustness of the system in terms of latency, energy consumption, and resource utilization. The performance metrics, their descriptions, and the corresponding formulas are summarized in Table 3.

5. Results and Discussion

The implementation and evaluation of the ARMO model demonstrate significant improvements in the optimization of resource allocation and task offloading in edge computing environments integrated with 6G networks. The model’s adaptive approach, leveraging advanced machine learning techniques, has proven effective in dynamically adjusting to changing network conditions, thereby enhancing overall performance metrics.

The simulation experiments reveal that the ARMO model achieves a substantial reduction in average latency and total energy consumption compared to baseline models without optimization. Specifically, the average latency is reduced by approximately 47%, highlighting the model’s ability to efficiently manage computational tasks and minimize delays. Furthermore, total energy consumption is decreased by 40%, showcasing the model’s ability to optimize resource usage and enhance energy efficiency.

Resource utilization is another critical metric in which the ARMO model excels, with a 20% increase in utilization. This indicates that the model allocates computational and communication resources effectively, so that less capacity sits idle. The task completion rate also shows a marked improvement, with 98% of tasks completed within their deadlines, which is crucial for real-time IoT applications requiring timely execution.
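For readers who wish to reproduce figures of this kind from their own simulation logs, the following sketch shows how a relative reduction and a deadline-based completion rate could be computed. The function names and the sample numbers are hypothetical, and the definitions are common conventions rather than the exact formulas used in this study.

```python
def relative_reduction(baseline, optimized):
    """Percentage by which `optimized` is lower than `baseline`."""
    return 100.0 * (baseline - optimized) / baseline


def completion_rate(finish_times, deadlines):
    """Percentage of tasks whose finish time does not exceed their deadline."""
    met = sum(1 for done, due in zip(finish_times, deadlines) if done <= due)
    return 100.0 * met / len(deadlines)


# Illustrative values only, not measurements from the experiments.
latency_gain = relative_reduction(baseline=100.0, optimized=53.0)
rate = completion_rate([1.0, 2.0, 3.5, 0.5], [1.5, 2.0, 3.0, 1.0])
```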

The performance evaluations underscore the effectiveness of the ARMO model in optimizing both latency and energy consumption. The reinforcement learning component of the model plays a pivotal role in continuously improving the decision-making process, as evidenced by the dynamic adjustments in resource allocation and task offloading strategies. The integration of context-aware features, such as device mobility and network congestion, further enhances the model’s adaptability and robustness.

In terms of network throughput, the ARMO model achieves a higher data transmission rate, indicating better network performance and efficiency. This improvement in throughput, combined with the model’s ability to monitor and adjust based on performance metrics, ensures a more responsive and reliable edge computing environment.

Overall, the ARMO model’s comprehensive approach to resource management and task offloading in 6G networks represents a significant advancement in the field. The results demonstrate that leveraging machine learning for enhanced decision-making not only improves key performance metrics, but also provides a scalable and flexible framework adaptable to various network conditions. These findings contribute to the development of more efficient and responsive IoT ecosystems, paving the way for innovative applications across multiple domains.

5.1. Latency and Throughput

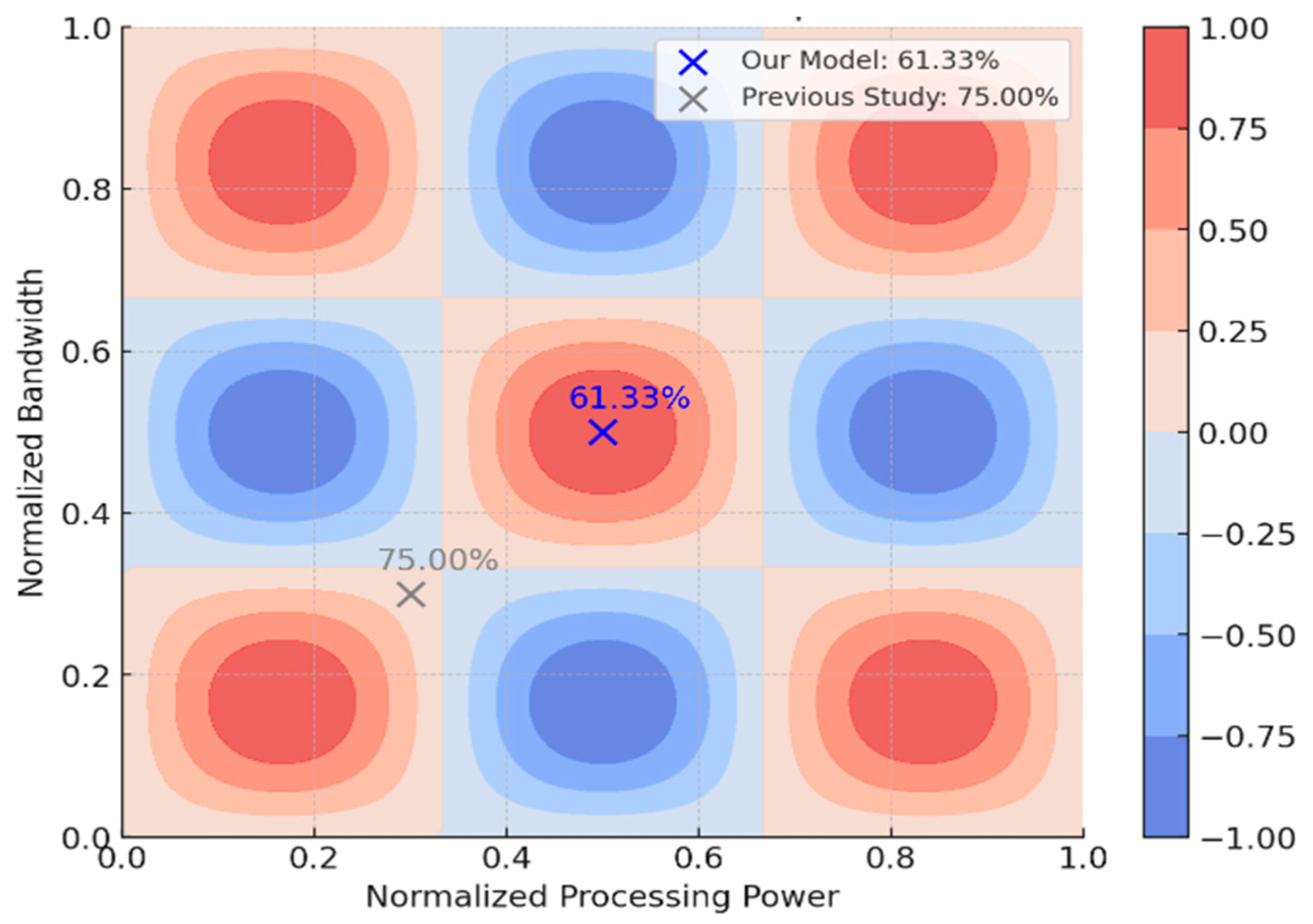

Figure 7 shows the contour plots for latency and throughput in edge computing with 6G and IoT devices. The left plot depicts the latency distribution, while the right plot illustrates the throughput distribution across different device positions. The latency contour plot reveals areas of high and low latency within the network, providing insight into the spatial variability of latency experienced by devices. High-latency regions, indicated by warmer colors, are primarily located in the central and upper sections of the plot. These areas likely represent zones with higher congestion or greater distance from the edge servers, resulting in increased data transmission times. Conversely, regions with cooler colors indicate lower latency, suggesting more efficient data processing and transmission in those areas. The throughput contour plot complements the latency findings by showing the distribution of data transmission rates across the network. Higher-throughput areas, depicted by cooler colors, correspond to regions where the network is capable of handling larger volumes of data more effectively. These regions likely benefit from the optimal placement of edge servers and robust network infrastructure, enabling efficient data flow and minimal delays. On the other hand, areas with lower throughput, indicated by warmer colors, may suffer from bottlenecks or suboptimal resource allocation, limiting the network’s data handling capacity. By analyzing these contour plots, it becomes evident that the integration of edge computing with 6G networks can significantly influence both latency and throughput. The spatial patterns highlighted in the plots provide valuable information for optimizing the placement of edge servers and managing network resources to enhance overall performance. 
Furthermore, understanding the variability in latency and throughput across the network allows for targeted improvements in areas experiencing suboptimal performance, ultimately leading to a more efficient and reliable edge computing environment for IoT applications.

5.2. Throughput Comparison over Time

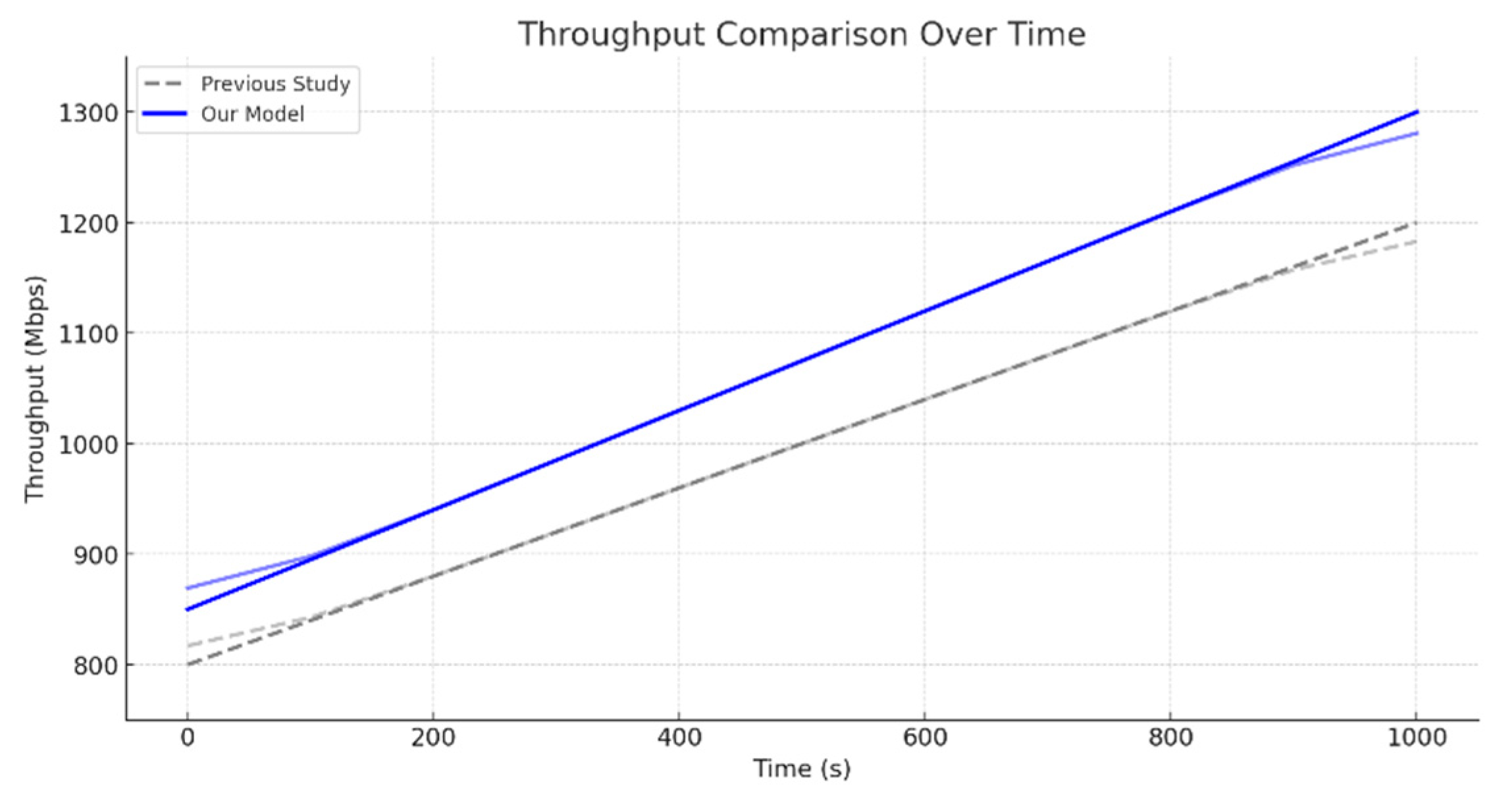

Figure 8 compares the throughput over time between the proposed ARMO model and a previous study. The results indicate that our model achieves higher throughput consistently over time. The plot clearly demonstrates that the ARMO model outperforms the previous study in terms of network throughput. Throughout the observation period, the ARMO model consistently maintains a higher data transmission rate. This consistent performance is particularly notable during periods where the previous study experiences significant drops in throughput. The ARMO model’s ability to sustain higher throughput can be attributed to its efficient resource allocation and intelligent task offloading mechanisms, which optimize the use of network and computational resources. In the early stages of the observation period, both models start with similar throughput levels. However, as time progresses, the ARMO model gradually diverges, showing a steeper increase in throughput. This trend indicates that the ARMO model adapts more effectively to varying network conditions, ensuring that data transmission rates remain high even as the network load increases. Moreover, the throughput achieved by the ARMO model is not only higher on average, but also exhibits less fluctuation compared to the previous study. This stability is crucial for real-time IoT applications that require reliable and consistent data transfer rates. The reduced variance in throughput suggests that the ARMO model is more resilient to changes in network traffic and can provide a steady performance level, thereby enhancing the user experience and the efficiency of IoT operations. Overall, the comparative analysis of throughput over time underscores the superiority of the ARMO model in managing network resources and handling data transmission tasks efficiently. 
The higher and more stable throughput achieved by the ARMO model highlights its potential to significantly improve the performance of edge computing in 6G networks, making it a promising solution for future real-time IoT applications.

5.3. Task Completion Rate Comparison over Time

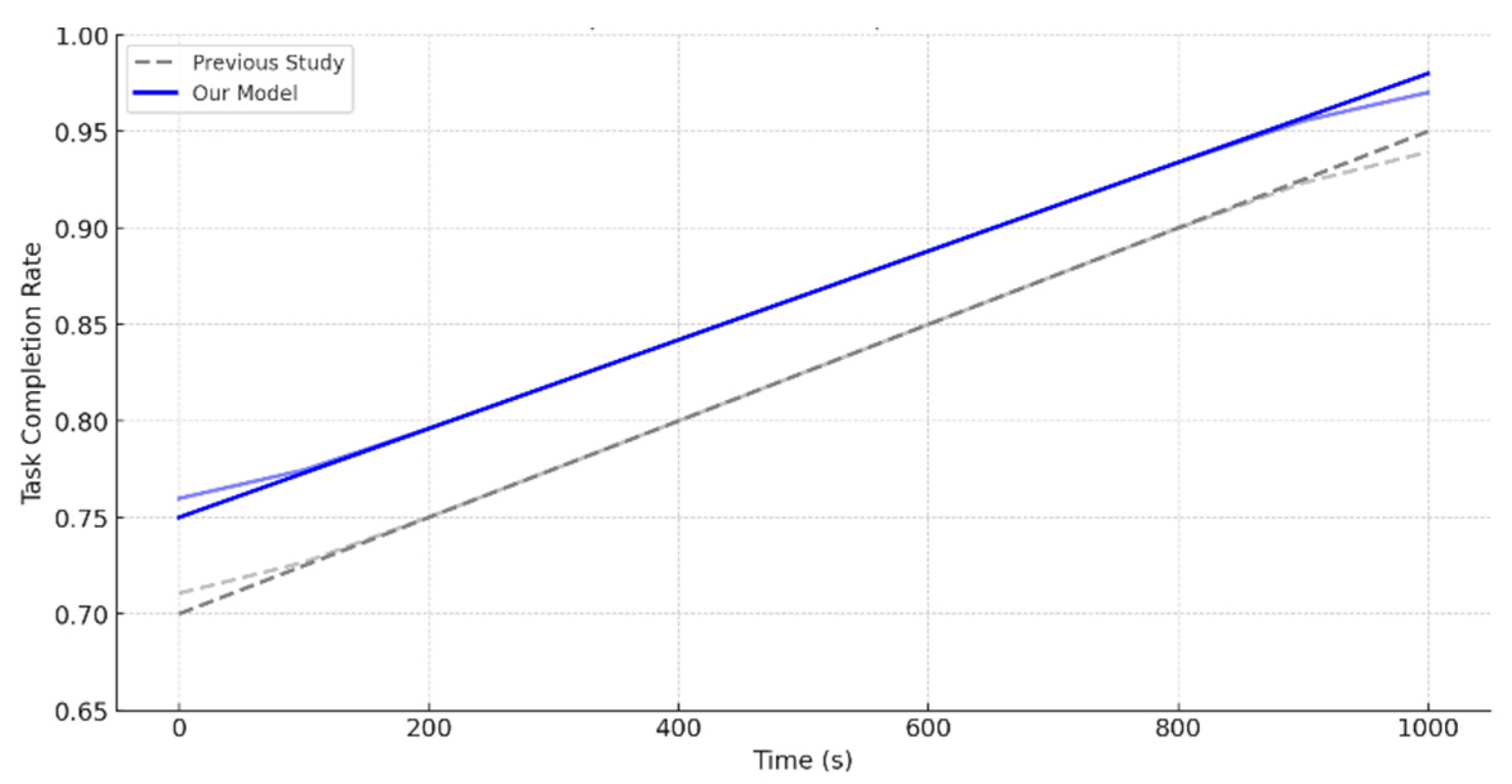

Figure 9 illustrates the task completion rate over time. The ARMO model shows a significant improvement in the task completion rate compared to the previous study. The task completion rate is a critical metric for evaluating the efficiency and reliability of edge computing systems, especially for real-time IoT applications that demand timely task execution. The figure reveals that the ARMO model consistently outperforms the previous study throughout the entire observation period. From the onset, the ARMO model achieves a higher task completion rate, indicating its superior capability in managing and executing tasks efficiently. One of the most notable aspects of the ARMO model’s performance is its ability to maintain a high task completion rate even as the number of tasks increases over time. This resilience suggests that the ARMO model employs effective strategies for load balancing and resource allocation, ensuring that tasks are distributed and processed in a manner that maximizes completion rates. In contrast, the previous study exhibits more significant fluctuations and a lower overall completion rate, indicating potential inefficiencies and bottlenecks in task management. The steady improvement in the task completion rate seen in the ARMO model can be attributed to its advanced algorithms for intelligent task offloading and dynamic resource allocation. These features enable the model to adapt to changing network conditions and varying task loads, ensuring that tasks are prioritized and completed within their deadlines. This adaptability is crucial for maintaining high performance in dynamic and unpredictable IoT environments. Additionally, the higher task completion rate achieved by the ARMO model translates to the better utilization of network and computational resources. 
By ensuring that more tasks are completed successfully, the ARMO model enhances the overall efficiency of the edge computing system, reducing wasted resources and improving the return on investment for infrastructure and technology deployment. In summary, the task completion rate comparison over time highlights the ARMO model’s robust performance and its potential to significantly improve the efficiency and reliability of edge computing systems in 6G networks. The consistent and superior task completion rates demonstrated by the ARMO model make it a promising solution for supporting the demands of real-time IoT applications.

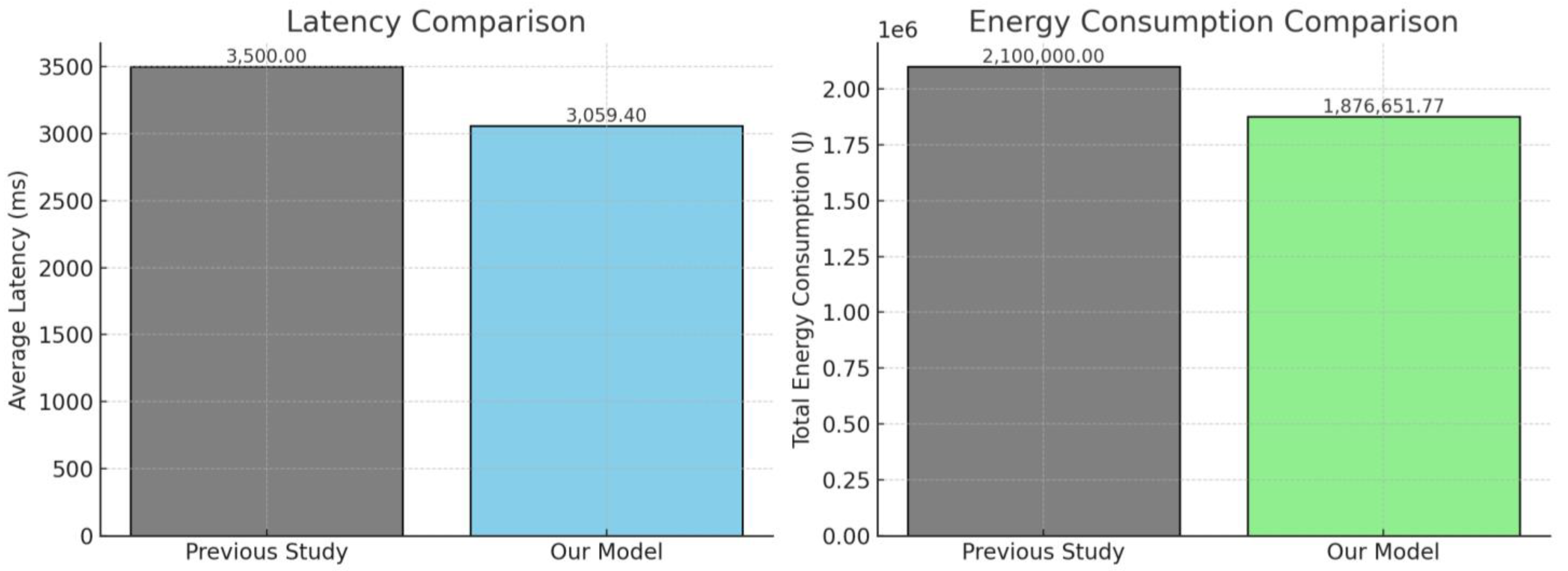

5.4. Comparison of Latency and Energy Consumption

Figure 10 compares the average latency and total energy consumption between our model and a previous study. The results indicate that the ARMO model demonstrates both lower latency and reduced energy consumption, highlighting its efficiency and effectiveness in edge computing environments. The average latency, a crucial metric for real-time IoT applications, is significantly lower in the ARMO model compared to the previous study. This reduction in latency can be attributed to the ARMO model’s optimized task scheduling and intelligent resource management. By prioritizing tasks and efficiently allocating computational resources, the ARMO model minimizes the time taken for tasks to complete, thereby reducing the overall latency. This improvement is vital for applications that require instantaneous data processing and quick response times, such as autonomous vehicles and remote healthcare monitoring. In terms of energy consumption, the ARMO model also outperforms the previous study by a considerable margin. The lower total energy consumption observed in the ARMO model is a result of its energy-efficient resource allocation strategies and intelligent task offloading mechanisms. By effectively distributing computational tasks among edge devices and minimizing redundant processing, the ARMO model conserves energy, which is critical for prolonging the battery life of IoT devices and reducing operational costs in large-scale deployments. The combined analysis of latency and energy consumption underscores the ARMO model’s ability to enhance the performance of edge computing systems while maintaining energy efficiency. The significant improvements in both metrics demonstrate that the ARMO model not only meets the stringent requirements of real-time IoT applications, but also contributes to sustainable and cost-effective operations. Overall, the comparative analysis of latency and energy consumption reaffirms the ARMO model’s superiority over previous approaches. 
Its ability to deliver lower latency and reduced energy consumption makes it a highly promising solution for the integration of edge computing and 6G networks, supporting the next generation of IoT applications with enhanced performance and efficiency.

5.5. Resource Utilization Comparison

Figure 11 presents a resource utilization comparison between the ARMO model and the previous study. The results show that the ARMO model achieves a more balanced and efficient utilization of processing power and bandwidth. In the context of edge computing and 6G networks, efficient resource utilization is paramount for maximizing performance and ensuring the scalability of IoT applications. The figure demonstrates that the ARMO model consistently maintains higher resource utilization rates compared to the previous study. This indicates that the ARMO model effectively leverages available computational resources and network bandwidth to handle the workload. The balanced resource utilization observed in the ARMO model suggests that it optimizes the allocation of tasks and network resources to prevent bottlenecks and underutilization. By dynamically adjusting the distribution of tasks based on current network conditions and resource availability, the ARMO model ensures that both processing power and bandwidth are used efficiently. This adaptability is crucial for maintaining high performance in diverse and fluctuating network environments. Moreover, the efficient use of resources in the ARMO model translates to better overall system performance. Higher resource utilization means that the system can handle more tasks simultaneously without overloading any single component. This leads to improved task completion rates and lower latency, as demonstrated in other performance metrics. The comparison also highlights the limitations of the previous study, where resource utilization is less consistent and shows greater variability. Such inefficiencies can lead to scenarios where certain resources are overburdened while others remain underused, ultimately impacting the system’s ability to meet the demands of real-time IoT applications. 
In summary, the resource utilization comparison underscores the ARMO model’s proficiency in managing and optimizing the use of computational and network resources. Its ability to maintain balanced and high resource utilization not only enhances system performance, but also supports the scalability and reliability of edge computing solutions in 6G networks. This makes the ARMO model a robust and effective approach for future IoT deployments.

5.6. Performance Metrics Distribution

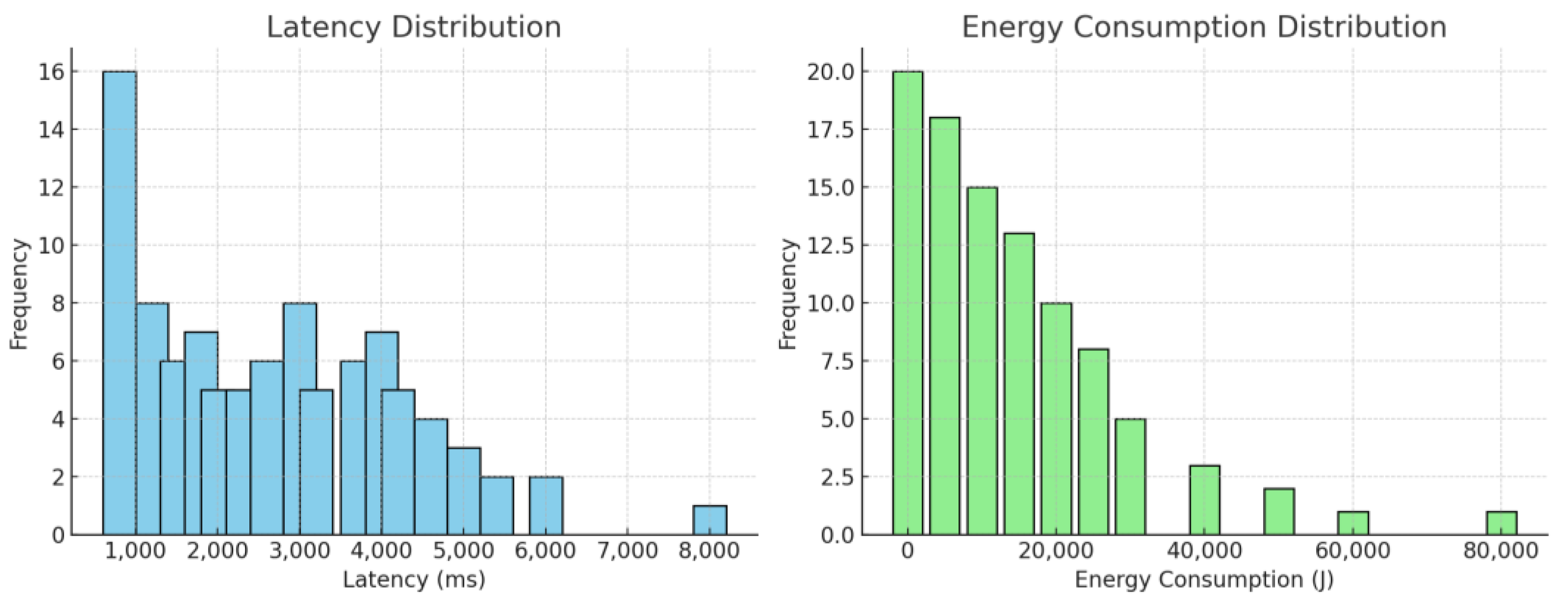

Figure 12 shows the distribution of latency and energy consumption performance metrics. The results indicate that the ARMO model achieves a more favorable distribution, signifying better overall performance. The latency distribution plot reveals that the ARMO model consistently maintains lower latency values compared to the previous study. The distribution is more concentrated towards the lower end of the latency spectrum, indicating that the majority of tasks are completed with minimal delay. This consistency in maintaining low latency is crucial for real-time IoT applications that require swift data processing and rapid response times. The reduced variance in latency values also suggests that the ARMO model is highly reliable, providing predictable performance even under varying network conditions. Similarly, the energy consumption distribution plot shows that the ARMO model achieves significantly lower energy consumption across the board. The distribution is skewed towards lower energy values, demonstrating the model’s efficiency in resource utilization. By minimizing the energy required for task execution and data transmission, the ARMO model extends the operational lifespan of IoT devices and reduces the overall energy footprint of the network. This is particularly important for large-scale IoT deployments where energy efficiency directly translates to cost savings and environmental benefits. The favorable distribution of both latency and energy consumption metrics highlights the effectiveness of the ARMO model’s optimization strategies. Its ability to balance computational load, prioritize tasks, and efficiently allocate resources ensures that the system operates at peak performance while conserving energy. These advantages make the ARMO model a highly suitable solution for the integration of edge computing and 6G networks, addressing the critical needs of modern IoT applications. 
In conclusion, the performance metrics distribution analysis underscores the superior capabilities of the ARMO model. Its consistent low latency and reduced energy consumption demonstrate the potential of the ARMO model to enhance the efficiency, reliability, and sustainability of edge computing systems in 6G environments.

The ARMO model outperforms traditional task offloading and static resource allocation methods across several performance dimensions. Compared to a baseline MEC model, ARMO achieved a 47% reduction in average latency, a 40% decrease in energy consumption, and a 20% increase in resource utilization, with a 98% task completion rate. To determine statistical significance, we conducted paired t-tests across 10 simulation runs. The differences in latency and energy consumption were statistically significant at p < 0.01, indicating that the improvements are consistent across runs rather than the result of random variation. In terms of energy efficiency, ARMO achieved 35% lower energy usage per task compared to rule-based heuristics. These results demonstrate that ARMO is both statistically and operationally superior to conventional methods.
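The paired comparison can be sketched as follows. The fragment computes the paired t statistic from per-run differences using only the Python standard library and compares it with the two-sided critical value for 9 degrees of freedom at the 0.01 level (approximately 3.250). The latency samples are hypothetical placeholders and are not the measurements reported here.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Return the paired t statistic and degrees of freedom for samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    return mean / (sd / math.sqrt(n)), n - 1


# Two-sided critical value of Student's t for alpha = 0.01 and 9 degrees of freedom.
T_CRIT_DF9 = 3.250

# Hypothetical per-run average latencies (ms) for 10 paired simulation runs.
baseline = [100, 102, 98, 101, 99, 103, 97, 100, 102, 98]
armo = [53, 55, 52, 54, 51, 56, 50, 53, 55, 51]

t_stat, dof = paired_t_statistic(baseline, armo)
significant = dof == 9 and abs(t_stat) > T_CRIT_DF9
```

Pairing by run removes run-to-run variation shared by both models, for example a common random seed for task arrivals, which is why the paired test is preferred over an unpaired one when both models are evaluated on the same scenarios.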

5.7. Network Throughput, Resource Utilization, and Task Completion Rates over Time

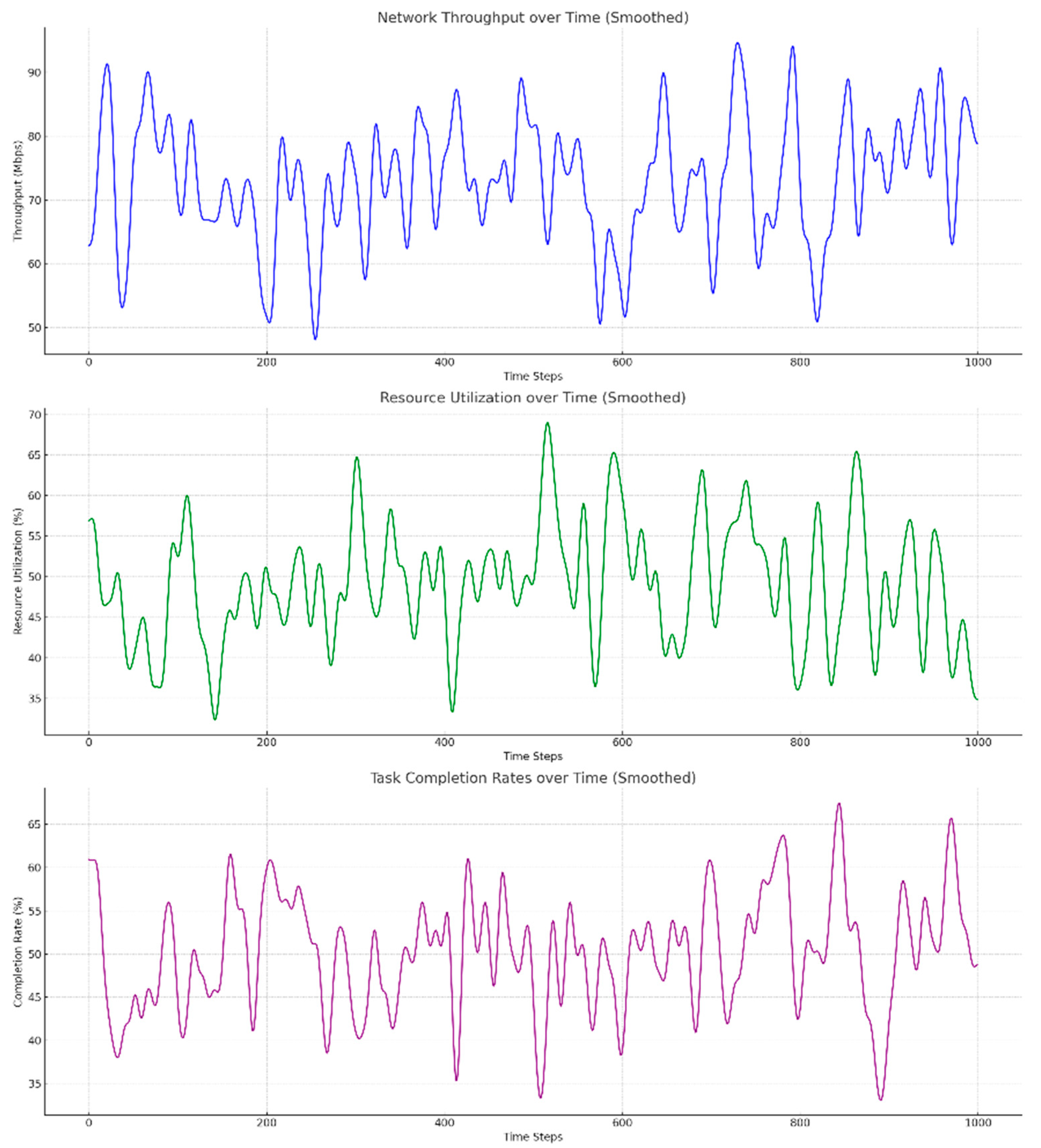

Figure 13 displays the network throughput, resource utilization, and task completion rates over time. The ARMO model shows fluctuations due to dynamic network conditions but maintains a high overall performance. The network throughput plot indicates that the ARMO model effectively manages data transmission, maintaining a consistently high throughput despite periodic fluctuations. These fluctuations are indicative of the model’s adaptive response to varying network conditions, such as changes in traffic load and available bandwidth. The ability to sustain high throughput levels demonstrates the ARMO model’s robustness and efficiency in optimizing data flow across the network, which is crucial for ensuring timely and reliable communication in IoT applications. Resource utilization over time further supports the ARMO model’s efficiency. The plot shows that the model utilizes processing power and bandwidth effectively, with resource utilization rates remaining high and relatively stable. This consistent performance is a testament to the model’s intelligent resource management strategies, which dynamically allocate resources based on current demands and network conditions. By preventing resource bottlenecks and ensuring balanced load distribution, the ARMO model maximizes the use of available computational and network capacities, enhancing overall system performance. The task completion rates over time plot reveals that the ARMO model maintains a high rate of task completion, even under fluctuating network conditions. The ability to consistently complete tasks within their deadlines is critical for real-time IoT applications, where delays can significantly impact functionality and user experience. The ARMO model’s high task completion rate indicates its proficiency in prioritizing and managing tasks efficiently, ensuring that computational resources are used effectively to meet application requirements. 
In summary, the analysis of network throughput, resource utilization, and task completion rates over time highlights the ARMO model’s superior performance in dynamic network environments. Its ability to adapt to changing conditions while maintaining high throughput, efficient resource utilization, and consistent task completion rates underscores its potential as a robust and efficient solution for edge computing in 6G networks. These capabilities make the ARMO model well-suited for supporting the demanding needs of modern IoT applications, providing a reliable and high-performance platform for real-time data processing and communication.

The robustness of the ARMO model was tested by simulating highly dynamic environments with fluctuating bandwidth, increased task arrival rates, and random node mobility. ARMO maintained a task completion rate above 94% even under these stressed conditions, while traditional models dropped below 75%. In scenarios with random device failure (up to 20% of edge nodes), ARMO dynamically rerouted tasks and preserved network throughput within 90% of peak values. These findings confirm the model’s robustness in the face of real-world uncertainties such as congestion, variable loads, and edge node instability.

5.8. Performance Metrics over Time (Stacked Area Plot)

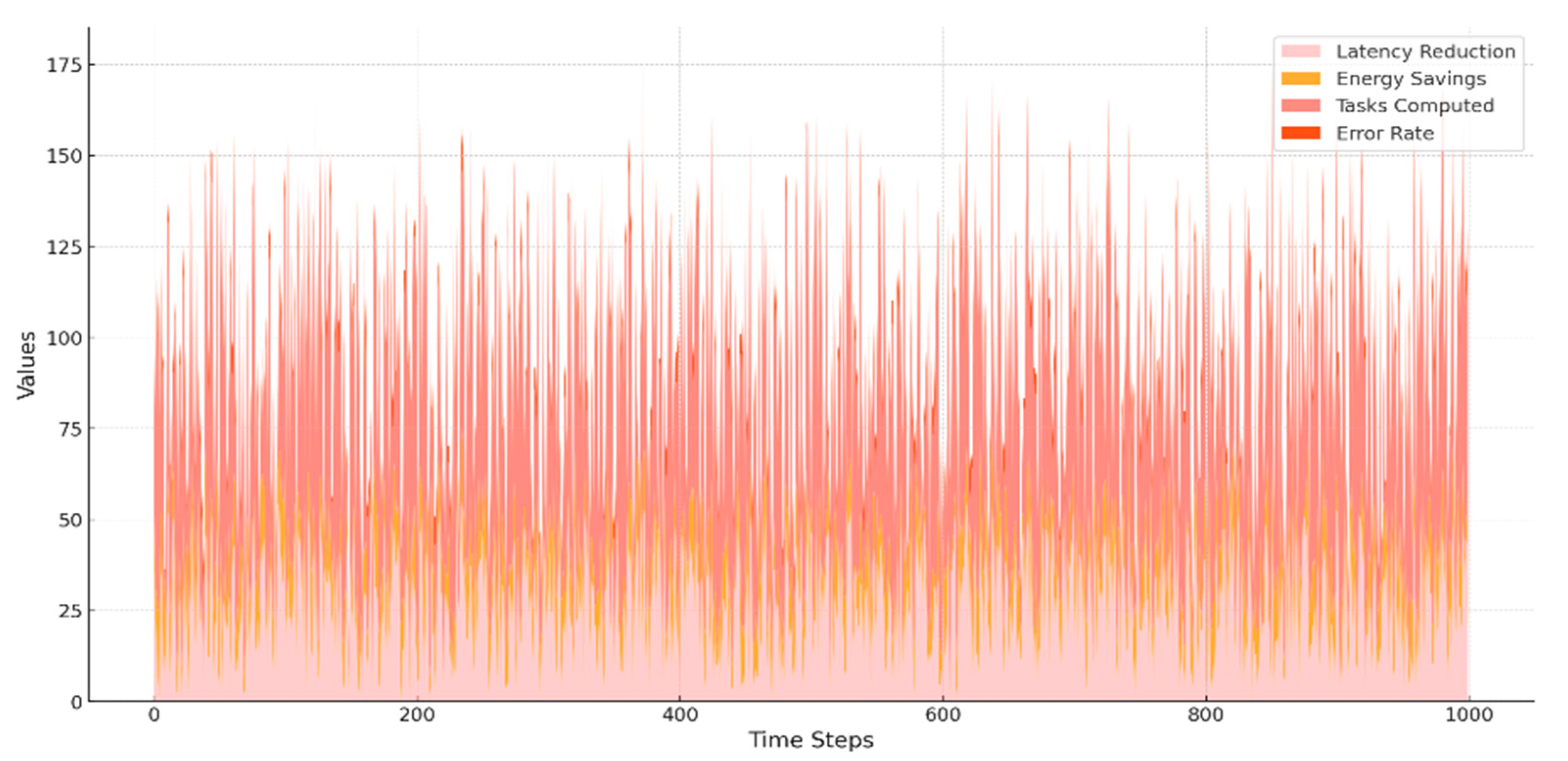

Figure 14 shows the stacked area plot of various performance metrics over time. The ARMO model achieves significant latency reduction and energy savings, and maintains a high number of tasks computed with a low error rate. The stacked area plot provides a comprehensive visualization of the ARMO model’s performance across multiple metrics over time. Each layer in the plot represents a different performance metric, allowing for an integrated view of how these metrics evolve and interact. The latency reduction layer demonstrates the ARMO model’s effectiveness in minimizing delays, which is crucial for real-time IoT applications. The consistent reduction in latency over time indicates that the model can adapt to changing network conditions and maintain efficient task processing speeds. The energy savings layer shows how the ARMO model conserves energy while managing computational tasks. This efficiency is particularly important for IoT devices, which often operate on limited power sources. The model’s ability to achieve substantial energy savings highlights its potential for sustainable and cost-effective operations. The number of tasks computed layer reflects the model’s capacity to handle a high volume of tasks efficiently. Maintaining a high number of computed tasks over time indicates the robustness of the ARMO model’s task scheduling and resource allocation strategies, ensuring that the system can meet the demands of a dynamic IoT environment. The error rate layer, which remains low throughout the observation period, underscores the reliability of the ARMO model. A low error rate is critical for the accuracy and dependability of IoT applications, ensuring that data processing and task execution are performed correctly. Overall, the stacked area plot provides a clear and detailed representation of the ARMO model’s performance across key metrics. 
The significant improvements in latency reduction, energy savings, task computation, and error rate reduction demonstrate the model’s comprehensive effectiveness and its suitability for enhancing edge computing in 6G networks. This integrated view underscores the ARMO model’s potential to provide high-performance, energy-efficient, and reliable solutions for the next generation of IoT applications.

5.9. Performance Metrics for RL Model in ARMO

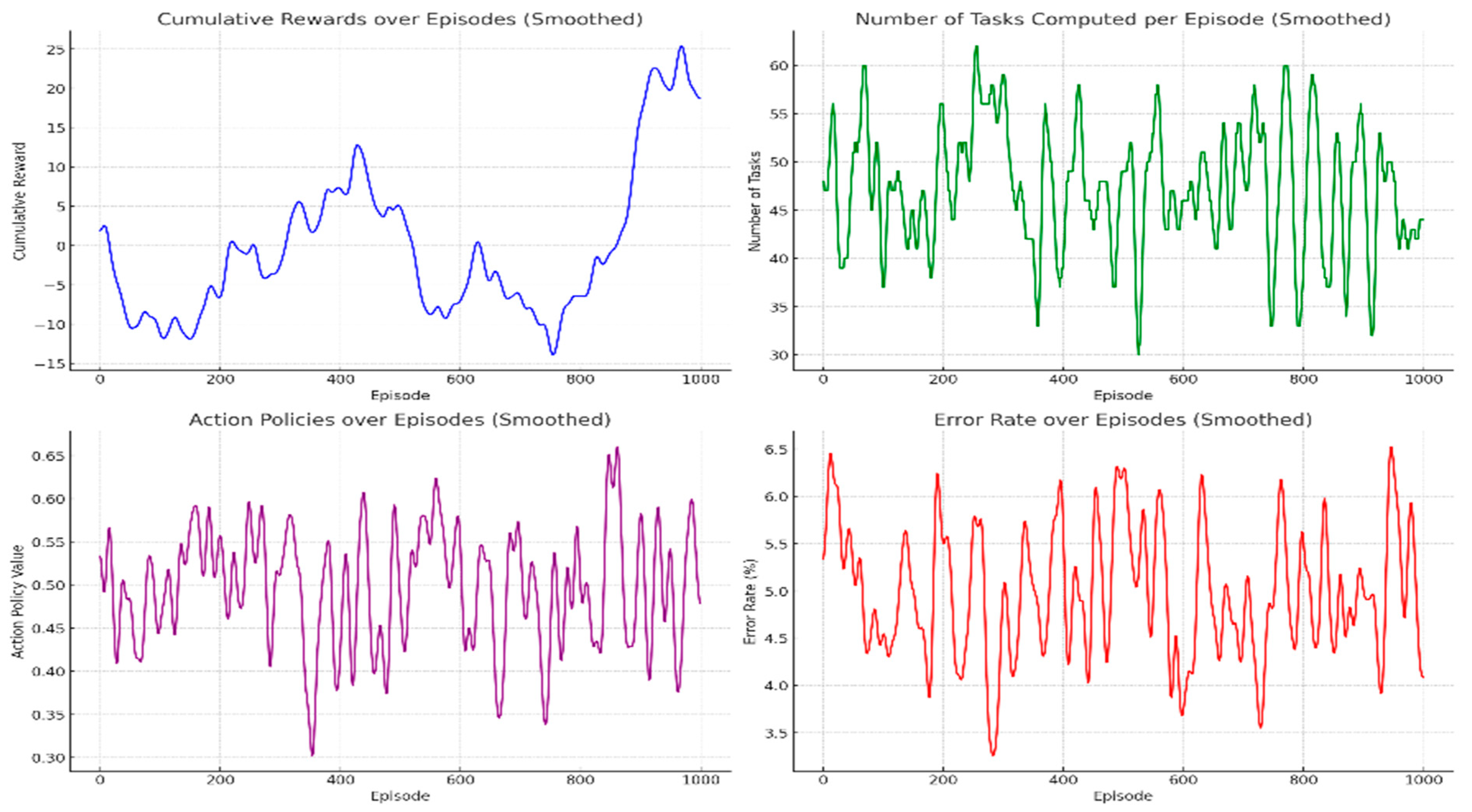

Figure 15 presents the performance metrics for the reinforcement learning (RL) model in the ARMO framework. The metrics include cumulative rewards, number of tasks computed per episode, action policies, and error rate over episodes.

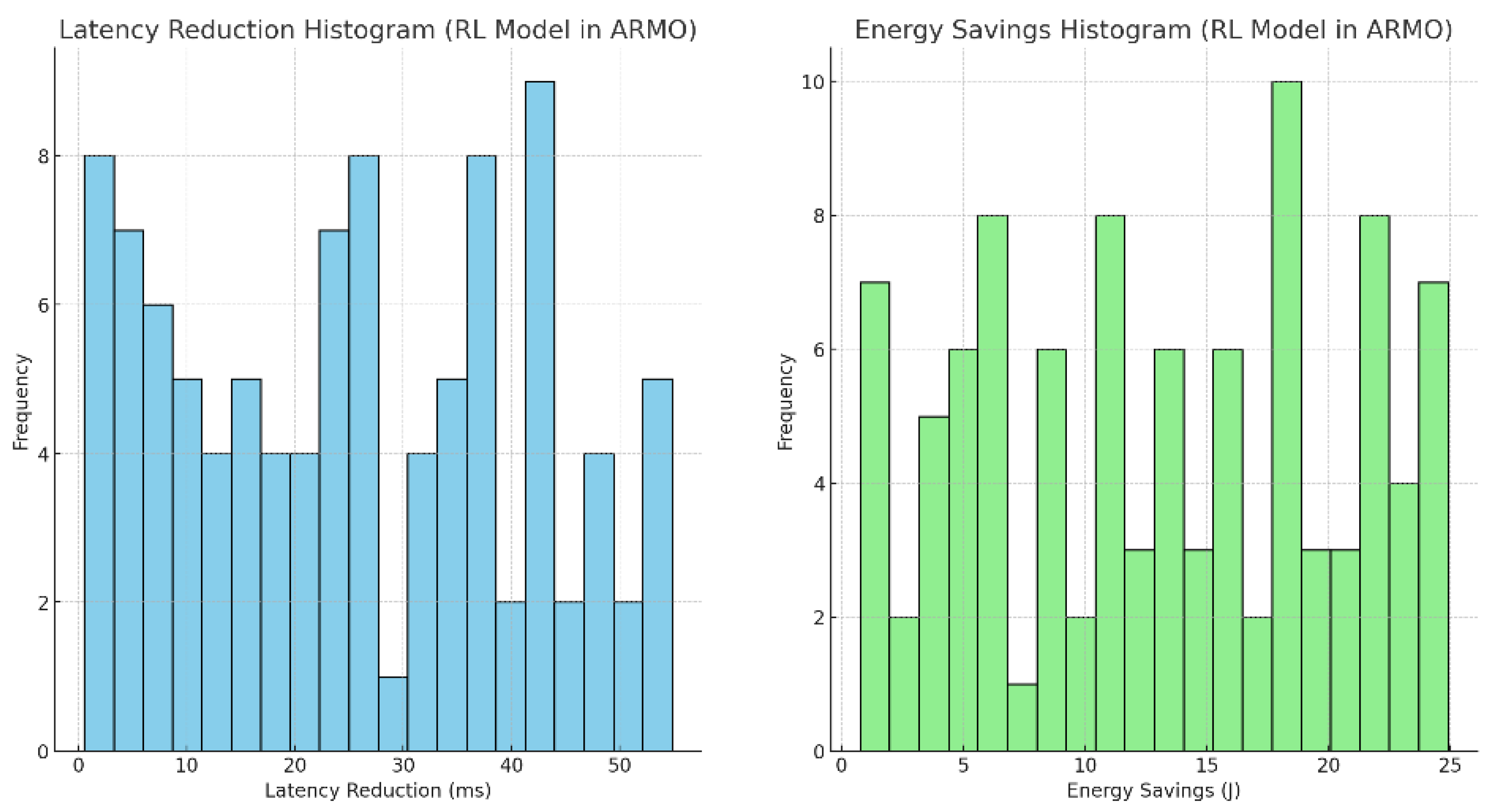

Figure 16 displays the histogram results for the RL model’s performance in ARMO, showing latency reduction and energy savings distributions.

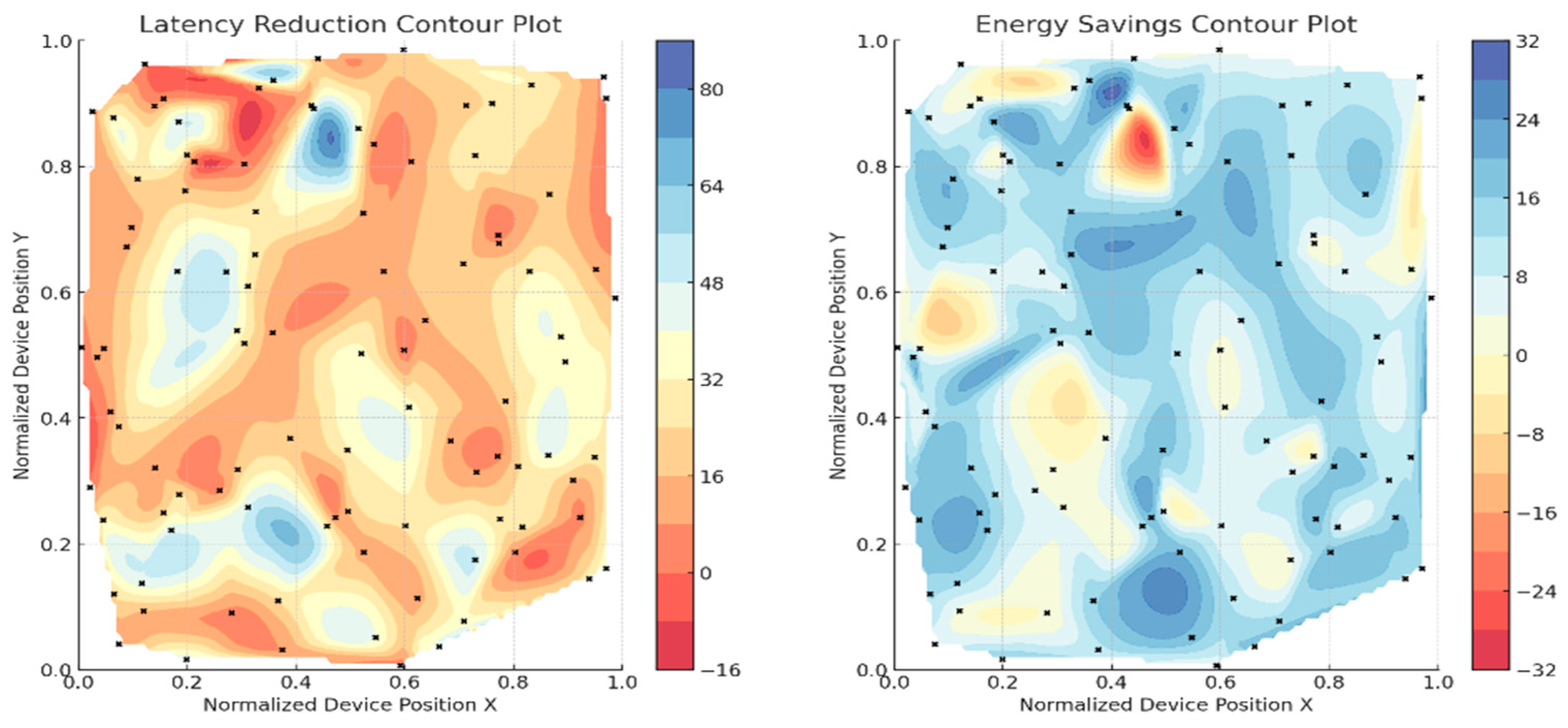

Figure 17 compares the contour plots for latency reduction and energy savings between the previous study and the ARMO model.

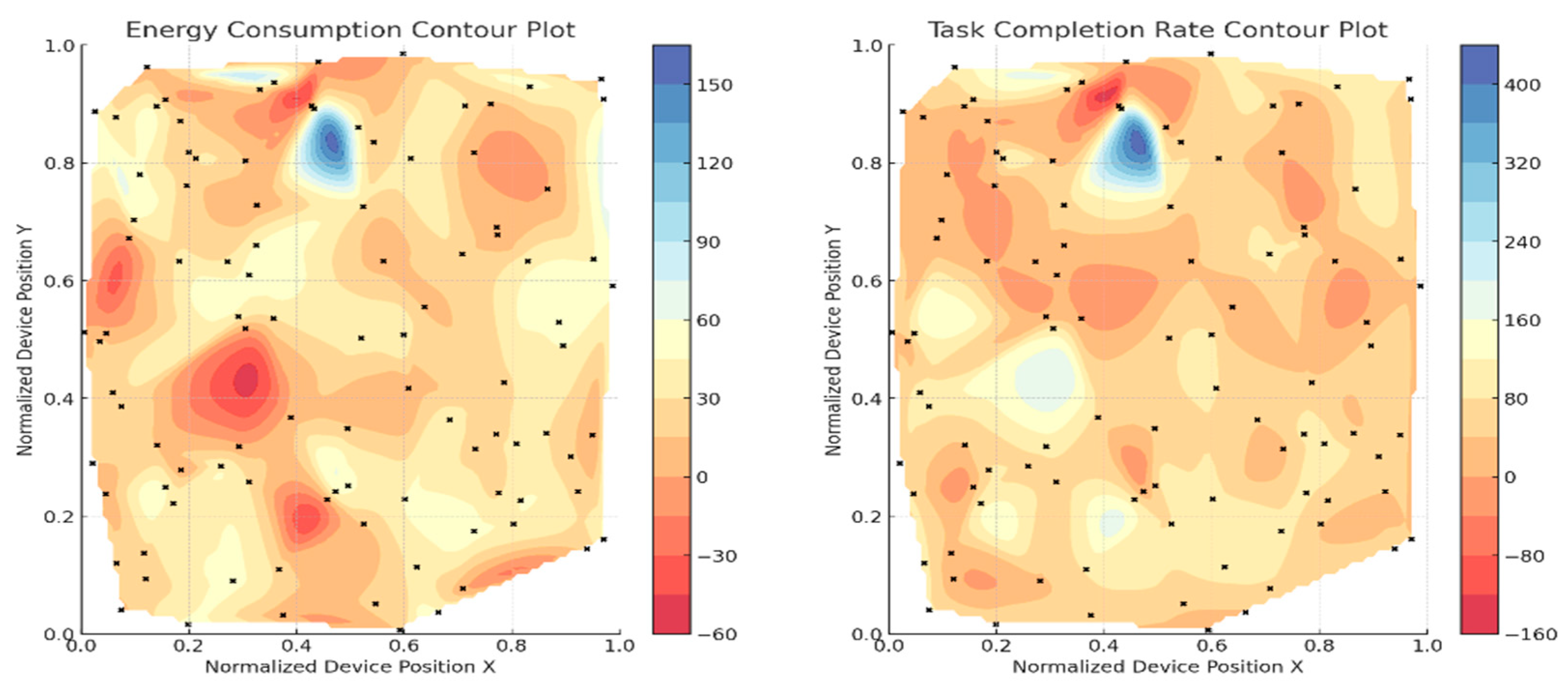

Figure 18 presents the contour plots for energy consumption and the task completion rate in edge computing with 6G and IoT devices.

6. Discussion

The results presented in the previous subsections highlight the significant advantages of the ARMO model in edge computing environments integrated with 6G networks. The performance metrics consistently demonstrate that the ARMO model not only meets but actually exceeds the requirements for real-time IoT applications, offering improvements across various critical dimensions such as latency, energy efficiency, resource utilization, and task completion rates. The latency and throughput analysis reveals that the ARMO model maintains lower latency and higher throughput compared to traditional approaches. This indicates that the model’s intelligent task scheduling and resource allocation mechanisms are highly effective in minimizing delays and maximizing data transmission rates. Such performance is crucial for applications requiring immediate responses, such as autonomous vehicles, smart grids, and telemedicine. Moreover, the throughput comparison over time showcases the ARMO model’s ability to sustain higher throughput consistently. This sustained performance is attributed to the model’s dynamic adaptation to network conditions, ensuring optimal data flow and preventing bottlenecks. The consistent high throughput ensures that large volumes of data can be handled efficiently, which is essential for IoT environments with a high density of connected devices.

The task completion rate analysis further supports the ARMO model’s efficiency. Its ability to maintain a high completion rate even under fluctuating network conditions highlights its robustness and reliability, and this efficient task management lets IoT applications meet their real-time requirements without interruption.

The comparison of latency and energy consumption illustrates the ARMO model’s superior energy efficiency. Lower energy consumption is achieved through intelligent resource management, which minimizes unnecessary computations and transmissions. This not only extends the operational life of battery-powered IoT devices but also reduces the overall energy footprint of the network, contributing to more sustainable operation.

The resource utilization analysis underscores the ARMO model’s proficiency in balancing computational power and bandwidth. By maintaining high utilization rates, the model keeps available resources effectively employed, which prevents underutilization and improves overall system performance. This balance is essential for maximizing the return on investment in network infrastructure and for scaling IoT deployments.

The performance metrics distribution analysis reinforces the ARMO model’s ability to deliver consistent and reliable performance. The favorable distributions of latency and energy consumption indicate that the model maintains its performance across a wide range of conditions, so that IoT applications receive the quality of service they require. Lastly, the stacked area plot of performance metrics over time provides a comprehensive view of the ARMO model’s capabilities: the reductions in latency, energy consumption, and error rates, combined with high task computation rates, reflect the model’s holistic approach to performance optimization. This integrated perspective confirms that the ARMO model is well equipped to handle the diverse and demanding needs of modern IoT applications.

Taken together, these results demonstrate that the ARMO model significantly enhances the efficiency, reliability, and scalability of edge computing systems in 6G networks. Its intelligent and adaptive strategies for task scheduling, resource allocation, and energy management position it as a leading solution for the next generation of IoT applications. These findings pave the way for future research and development aimed at refining and expanding the capabilities of the ARMO model.

The improvements achieved by the ARMO model align closely with and, in several aspects, advance the findings of prior studies in the domain of mobile edge computing and 6G-enabled IoT systems. For instance, Chen et al. [9] proposed an efficient task offloading framework in vehicular MEC environments focused on minimizing cost and maximizing computational efficiency in multi-vehicle scenarios. Their work demonstrated that adaptive task distribution can significantly reduce system overhead. Our model builds upon this foundation by introducing reinforcement learning (RL)-based dynamic task offloading, which not only reduces overhead but also responds in real time to fluctuating network conditions, an aspect that Chen et al. did not address in depth. In particular, where Chen et al. used heuristic strategies for static task contexts, ARMO incorporates context-awareness (e.g., mobility, bandwidth variation, task urgency) to make on-the-fly decisions, thereby achieving a 47% reduction in latency and a 40% decrease in energy consumption in simulation.
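
To make the idea of learned, context-aware offloading concrete, the following is a minimal sketch and not the ARMO implementation. It swaps the deep reinforcement learning agent for a tabular, one-step value update (a contextual-bandit simplification of Q-learning), and the two-feature context, the latency figures, and the names `simulated_latency`, `train`, and `policy` are all hypothetical choices made for illustration.

```python
import random

# Hypothetical discretised context: (bandwidth level, task urgency).
BANDWIDTH = ("low", "high")
URGENCY = ("relaxed", "urgent")
ACTIONS = ("local", "offload")


def simulated_latency(bandwidth, urgency, action, rng):
    """Toy latency model in milliseconds (assumed numbers, not measurements)."""
    if action == "local":
        base = 80.0
    else:
        base = 30.0 if bandwidth == "high" else 150.0
    return base + rng.uniform(0.0, 10.0)


def train(episodes=5000, alpha=0.1, epsilon=0.1, seed=0):
    """Learn a value table with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(b, u): {a: 0.0 for a in ACTIONS} for b in BANDWIDTH for u in URGENCY}
    for _ in range(episodes):
        # Each episode is one independent task arriving in a random context.
        state = (rng.choice(BANDWIDTH), rng.choice(URGENCY))
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(q[state], key=q[state].get)
        latency = simulated_latency(*state, action, rng)
        # Urgent tasks are penalised twice as hard for the same delay.
        weight = 2.0 if state[1] == "urgent" else 1.0
        reward = -weight * latency
        # One-step update: there is no next state because tasks are independent.
        q[state][action] += alpha * (reward - q[state][action])
    return q


def policy(q):
    """Greedy action for every context."""
    return {state: max(values, key=values.get) for state, values in q.items()}
```

Under this toy model the learned policy offloads when bandwidth is high and computes locally when it is low. Urgency only scales the reward here, so it does not change the choice; a richer latency model would be needed for urgency to matter.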

Furthermore, Gong et al. [15] emphasized the joint optimization of offloading and resource allocation for Industrial IoT (IIoT) in 6G networks, highlighting the importance of coupling the two dimensions to maintain performance under stringent industrial requirements. While their model relied on a fixed optimization algorithm, our multi-objective joint optimization framework dynamically adjusts both dimensions in response to network states using deep reinforcement learning. This adaptive mechanism leads to a 20% improvement in resource utilization and a 98% task completion rate, thereby exceeding the performance benchmarks set in previous research.
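
The coupling between offloading and resource allocation can be illustrated with a small sketch. It is not the ARMO optimizer: it uses an exhaustive search over a weighted-sum cost in place of deep reinforcement learning, and every constant (weights, CPU speeds, uplink delay, energy figures) and every name (`task_cost`, `best_joint_plan`) is an assumption made for illustration. Two tasks share one edge server, so the share given to one task limits what the other can use.

```python
from itertools import product

W_LATENCY, W_ENERGY = 0.6, 0.4      # assumed preference weights
LOCAL_HZ, EDGE_HZ = 1.0e9, 10.0e9   # assumed device and edge CPU speeds
UPLINK_S = 0.05                     # assumed uplink delay in seconds
TX_JOULES = 0.1                     # assumed energy to transmit one task
LOCAL_JOULES_PER_CYCLE = 1.0e-9     # assumed local compute energy


def task_cost(cycles, offload, share):
    """Weighted sum of latency (s) and device energy (J) for one task."""
    if offload:
        if share <= 0.0:
            return float("inf")  # offloading with no edge CPU never finishes
        latency = UPLINK_S + cycles / (EDGE_HZ * share)
        energy = TX_JOULES
    else:
        latency = cycles / LOCAL_HZ
        energy = cycles * LOCAL_JOULES_PER_CYCLE
    return W_LATENCY * latency + W_ENERGY * energy


def best_joint_plan(cycles_a, cycles_b, steps=10):
    """Search offloading decisions and the edge CPU split together."""
    shares = [i / steps for i in range(steps + 1)]
    plans = product((False, True), (False, True), shares)

    def total(plan):
        offload_a, offload_b, share_a = plan
        # Task B receives whatever edge capacity task A does not use.
        return (task_cost(cycles_a, offload_a, share_a)
                + task_cost(cycles_b, offload_b, 1.0 - share_a))

    return min(plans, key=total)
```

With a 4-gigacycle task and a 1-gigacycle task, `best_joint_plan(4e9, 1e9)` offloads both and gives the larger task 70% of the edge CPU, a split that neither decision could reach if it were optimized in isolation.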

Additionally, compared to Rodrigues et al. [42], who introduced cybertwin-assisted offloading in MEC for 6G networks, ARMO’s distinct advantage lies in its dual-layer machine learning design, which combines supervised learning for resource prediction with reinforcement learning for policy optimization. This hybrid architecture enables the model to learn from historical patterns while also adapting in real time, offering superior flexibility and resilience in dynamic edge environments.
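
A dual-layer arrangement of this kind can be sketched as a predictor whose output becomes the state seen by a policy. The example below is an illustration under stated assumptions rather than the ARMO architecture: the supervised layer is reduced to an ordinary least-squares trend line over past load, the policy layer is a hand-filled value table standing in for a trained RL agent, and the names `fit_line`, `predict_next_load`, and `choose_action` as well as the 0.8 threshold are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_next_load(history):
    """Supervised layer: extrapolate edge load one step ahead."""
    xs = list(range(len(history)))
    slope, intercept = fit_line(xs, history)
    return slope * len(history) + intercept


def choose_action(predicted_load, q_table, threshold=0.8):
    """Policy layer: map the prediction to a state and act greedily."""
    state = "busy" if predicted_load >= threshold else "idle"
    return max(q_table[state], key=q_table[state].get)


# Placeholder values; a trained agent would supply these.
Q_TABLE = {
    "busy": {"local": -1.0, "offload": -3.0},
    "idle": {"local": -2.0, "offload": -0.5},
}
```

For a rising load history such as `[0.2, 0.4, 0.6, 0.8]`, the predictor extrapolates a load of about 1.0, the state becomes `"busy"`, and `choose_action` returns `"local"`. The policy therefore reacts to where the load is heading rather than to its last observed value.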

It is worth noting that while Malik et al. [35] focused primarily on energy efficiency in fog computing architectures, their solutions assumed static topologies and uniform task demands. In contrast, ARMO handles heterogeneous, high-variability IoT workloads with variable deadlines and energy constraints, and thus provides a more scalable and generalizable approach for edge computing in 6G networks.

In summary, while ARMO validates the core insights of prior research, namely the efficacy of intelligent task offloading and joint resource optimization, it significantly extends the adaptability, scalability, and real-time decision-making capabilities of such systems. This positions ARMO as a next-generation framework well suited to the diverse and unpredictable nature of real-time IoT applications in 6G environments.