Abstract

This paper presents a novel meta-heuristic algorithm inspired by the visual capabilities of the mantis shrimp (Gonodactylus smithii), which can detect linearly and circularly polarized light signals to determine information about the emitter of the polarized light. Inspired by these unique visual characteristics, the Mantis Shrimp Optimization Algorithm (MShOA) mathematically models three visual strategies based on the detected signals: random navigation foraging, strike dynamics in prey engagement, and decision-making for defense or retreat from the burrow. These strategies balance exploitation and exploration procedures for local and global search over the solution space. MShOA’s performance was tested with 20 benchmark functions and compared against 14 other optimization algorithms. Additionally, it was tested on 10 real-world optimization problems taken from the IEEE CEC2020 competition. Moreover, MShOA was applied to solve three case studies related to the optimal power flow problem in an IEEE 30-bus system. Wilcoxon and Friedman’s statistical tests were performed to demonstrate that MShOA offered competitive, efficient solutions in benchmark tests and real-world applications.

Keywords:

mantis shrimp; Gonodactylus smithii; polarized light vision; global optimization; Langevin equation; bio-inspired algorithm

MSC:

90C26

1. Introduction

Metaheuristics are methods for solving optimization problems that aim to obtain an optimal or near-optimal solution. They can handle non-linear problems with non-linear constraints [1]; real-world engineering applications [2,3,4,5], such as optimization problems in power electronics [6]; and even problems that traditional methods cannot solve [7]. However, as described in [8,9], no single method can solve every optimization problem, and some approaches perform better than others on a given problem. Metaheuristics explore the solution space through local and global searches [10]. The local search strategy focuses on a promising area of the space or domain, whereas the global search looks for new areas within the problem domain. A good balance between global and local searches can improve the metaheuristic performance.

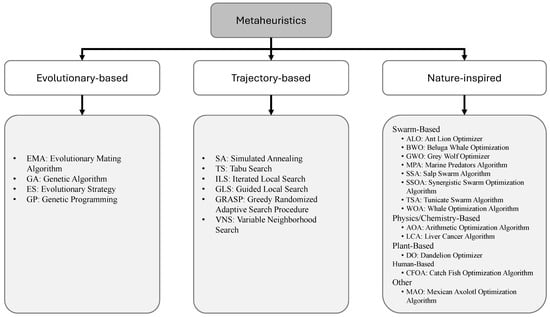

Metaheuristics can be categorized as evolutionary-based, trajectory-based, and nature-inspired; see Figure 1. A short description of these metaheuristic types can be summarized as follows. The process of biological evolution inspires evolutionary algorithms [11,12,13,14,15]. Nature-inspired algorithms include swarm algorithms, which are metaheuristics based on the collective behavior of biological systems [16,17,18,19,20,21,22,23,24,25,26,27,28]. They also include physics-inspired algorithms that use physical principles for optimization [29,30,31,32,33,34], and algorithms inspired by human behavior [35,36,37,38]. Moreover, trajectory-based metaheuristics follow a trajectory through the solution space, aiming to improve the solution found at each step. Unlike population-based algorithms, which work with multiple solutions simultaneously, trajectory-based metaheuristics focus on a single solution that evolves and improves iteratively. A more extensive metaheuristic classification can be found in [39,40].

Figure 1.

Metaheuristic classification.

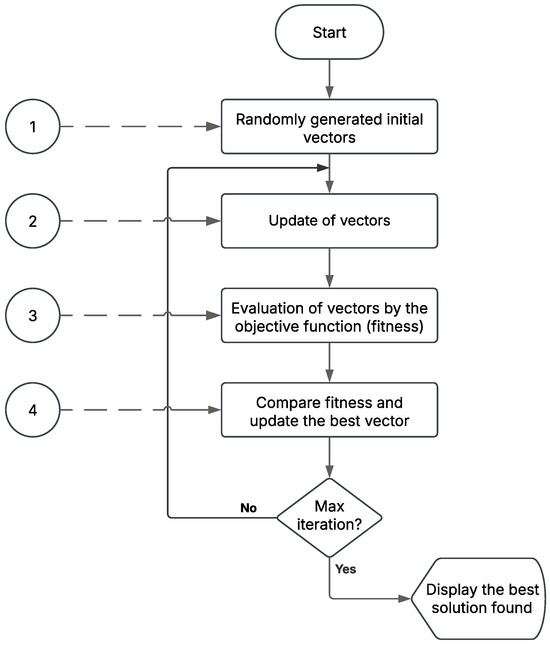

A schematic representation of a metaheuristic algorithm is shown in Figure 2. In the first stage, a set of vectors, also called search agents, is randomly generated. The size of each vector equals the number of variables of the problem, and its values represent a possible solution.

Figure 2.

Schematic representation of a metaheuristic algorithm.

In the second stage, vectors are updated by functions representing living organisms’ behavior or physical and chemical phenomena as bio-inspired models. These vector modifications present an additional opportunity to investigate novel regions within the solution search space.

In the third stage, vectors are evaluated by the objective function, and the calculated value is referred to as the fitness. In minimization, the best vector is the one with the minimum fitness within the population.

In the fourth stage, during each iteration, the fitness of the best vector is compared with the fitness of the previously recombined vectors.

This process is repeated until the stop criterion is reached, that is, until either the maximum number of iterations or the maximum number of objective function evaluations is reached; the best solution found is then returned.
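The four stages described above can be sketched in a few lines of Python. This is only an illustrative skeleton of a generic population-based metaheuristic, not MShOA itself: the update rule in stage 2 (a random pull toward the best vector plus Gaussian noise), the `sphere` objective, and all function and parameter names are assumptions introduced for the example.

```python
import random


def sphere(x):
    """Toy objective (sum of squares); its minimum is 0 at the origin."""
    return sum(v * v for v in x)


def generic_metaheuristic(objective, dim, lower, upper,
                          n_agents=30, max_iter=200, seed=0):
    rng = random.Random(seed)
    # Stage 1: randomly generate the search agents inside the bounds.
    agents = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_agents)]
    best = min(agents, key=objective)[:]
    best_fit = objective(best)
    for _ in range(max_iter):  # stop criterion: number of iterations
        for i, agent in enumerate(agents):
            # Stage 2: update the vector (placeholder rule, an assumption).
            step = rng.random()
            candidate = [a + step * (b - a) + rng.gauss(0.0, 0.1)
                         for a, b in zip(agent, best)]
            candidate = [min(max(c, lower), upper) for c in candidate]
            # Stage 3: evaluate the objective function (fitness).
            fit = objective(candidate)
            agents[i] = candidate
            # Stage 4: compare with the best fitness found so far.
            if fit < best_fit:
                best, best_fit = candidate[:], fit
    return best, best_fit


best, fit = generic_metaheuristic(sphere, dim=3, lower=-10.0, upper=10.0)
```

Because the best vector is only replaced when a candidate improves on it, the returned fitness can never be worse than the best fitness of the initial population.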

The contributions of this work are the following:

- A novel bio-inspired optimization algorithm named MShOA, which is based on the mantis shrimp’s visual ability to detect polarized light, is proposed. Once the polarization state is determined, these detection capabilities allow the mantis shrimp to decide whether to search for food, hit predators, defend itself, or escape from its burrow.

- The performance of MShOA was evaluated with a set of 20 unimodal and multimodal functions reported in the literature. Furthermore, it was applied to 10 real-world constrained optimization problems from CEC2020 and 3 case studies of an electrical engineering optimal power flow problem.

- MShOA was compared against 14 state-of-the-art algorithms using the Wilcoxon and Friedman statistical tests, ranking first on the unimodal functions and second over the full set of 20 functions.

- MShOA’s source code is available for the scientific community.

This paper is structured as follows: Section 2 shows the MShOA bio-inspiration, outlines the mathematical model, provides the pseudocode, and includes the general flowchart of the algorithm. Section 3 shows the performance of MShOA compared with 14 recent bio-inspired algorithms. Section 4 presents the results and discusses the algorithm. Section 5 describes the application of MShOA to real-world optimization problems. Finally, Section 6 summarizes the conclusions and future work.

2. Mantis Shrimp Optimization Algorithm (MShOA)

2.1. Biological Fundamentals

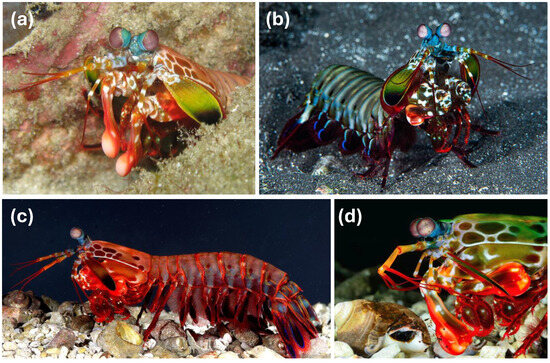

The purple-spotted mantis shrimp (Gonodactylus smithii) is a stomatopod crustacean that primarily inhabits shallow tropical marine waters worldwide. They are sedentary animals that spend most of the day hidden in burrows on the seabed. However, they make forays to feed, exhibiting a remarkable ability to return to their burrows [41]. During these outings, they behave in a cautious and observant manner, using their complex visual systems to detect dangers and opportunities, especially when searching for food [42]. They have the most complex eyes in the animal kingdom, with up to 12 types of photoreceptors that allow them to discriminate linearly and circularly polarized light [43,44,45]. Their eyes are also extremely mobile, capable of performing proactive torsional rotations of up to 90 degrees. These movements can be coordinated or independent during visual scanning, allowing them to individually adjust the orientation of each eye in response to specific stimuli, such as polarized light signals [46]. Mantis shrimps use these specific polarization signals, both linear and circular, in critical social contexts, such as mating and territorial defense [47,48]. This adaptation enables them to accurately orient themselves in their surroundings to detect objects, predators, prey, and communicate visually with other mantis shrimp, optimizing their visual perception and behavior in their environment [49]. Additionally, they are also characterized by their fierce competition for their burrows, which are highly valuable resources due to their multifunctional use. They use their acute vision to determine the size of the opponent and their fighting ability. The opponent’s fighting ability is decisive in deciding whether to fight for the burrow or avoid the confrontation [50]. Figure 3 summarizes most of the aforementioned mantis shrimps’ behaviors. Figure 3a illustrates how shrimp hide in their shelter to increase their chances of staying safe. 
The shrimp’s eyes gaze in multiple directions at once for further decision-making, which may include striking, defending or sheltering, burrowing, or cautiously foraging, as seen in Figure 3b. Figure 3c shows a lateral view of a red mantis shrimp. Additionally, Figure 3d illustrates the impact of a mantis shrimp’s strike on a prey shell.

Figure 3.

(a) The shrimp inside its shelter. Photograph (published under a CC BY license). Author unknown. (b) The shrimp observing in different directions simultaneously. Photograph (published under a CC BY-SA license). Author unknown. (c) The purple-spotted mantis shrimp (Gonodactylus smithii). Photograph by Roy L. Caldwell (published under a CC BY-SA license). (d) Strike of a mantis shrimp. Photograph (published under a CC BY license). Author unknown.

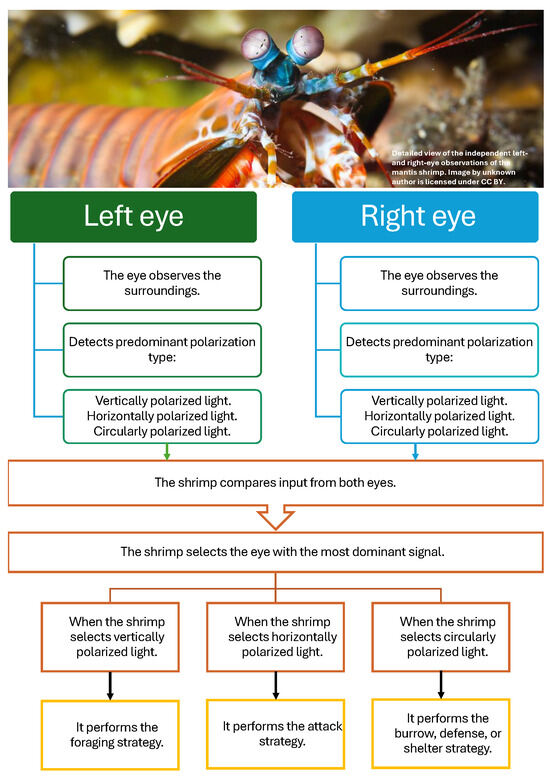

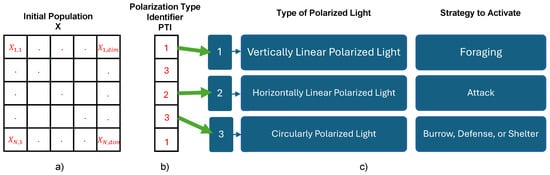

Overall, when observing its surroundings, the mantis shrimp can see independently with both eyes. In this way, each eye detects a predominant type of polarization (vertical, horizontal, or circular) within its field of view, which allows it to identify prey, a threat, or a potential mate. Subsequently, the shrimp compares the information captured by both eyes and selects the detected signal that is the most predominant, which then guides its subsequent behavior. Based on this process, this study considered that the mantis shrimp engages in foraging when vertical polarization dominates; attacks when horizontal polarization is more intense; and burrows, defends, or shelters when circular polarization is detected, as depicted in Figure 4.

Figure 4.

Shrimp strategies based on detected polarized light.

2.2. Initialization

The algorithm’s initialization consists of two phases to obtain the initial values. As seen in Equation (1), a set of multidimensional solutions representing the initial population is randomly generated in the first phase:

where X is the initial population, N is defined as the number of search agents, and dim represents the dimension of the problem (number of variables). Figure 5a presents a graphical representation of the initial population X. Finally, the population is sampled between the lower and upper bounds of the search space. As described in Equation (2), a vector is randomly generated in the second phase to represent the Polarization Type Identifier (PTI) of the detected polarization:

Figure 5.

(a) Schematic representation of the initial population, where dim stands for the vector size; (b) Polarization Type Identifier (PTI) vector representation; (c) strategy activated by the type of polarized light detected.

In both phases, the rand function is assumed to follow a uniform distribution in the range [0, 1]. The round function restricts the PTI value to 1, 2, or 3, as seen in Figure 5b. These values correspond to the reference angles of vertical linearly, horizontal linearly, and circularly polarized light, respectively. Each of these polarization states is based on the visual capabilities of the mantis shrimp. In addition, a value of 1 activates the mantis shrimp’s foraging strategy, while a value of 2 activates the attack strategy. The final strategy, burrow, defense, or shelter, is triggered by a PTI value of 3. Figure 5c illustrates the correlation between the PTI value and the type of polarized light detected, as well as its relationship with the forage; attack; or burrow, defense, or shelter strategies.
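The two initialization phases can be illustrated with a short Python sketch. The exact forms of Equations (1) and (2) are assumed here: the population is drawn as `lower + rand * (upper - lower)`, and the PTI value as `round(1 + 2 * rand)`, which is one simple mapping that yields 1, 2, or 3. The function name `initialize` and the `STRATEGY` table are hypothetical helpers for the example.

```python
import random

# PTI value -> strategy, as described in the text.
STRATEGY = {1: "forage", 2: "attack", 3: "burrow, defense, or shelter"}


def initialize(n_agents, dim, lower, upper, seed=None):
    rng = random.Random(seed)
    # Phase 1 (assumed form): uniform sampling between the bounds.
    population = [[lower + rng.random() * (upper - lower) for _ in range(dim)]
                  for _ in range(n_agents)]
    # Phase 2 (assumed form): round(1 + 2 * rand) gives 1, 2, or 3.
    pti = [round(1 + 2 * rng.random()) for _ in range(n_agents)]
    return population, pti


population, pti = initialize(n_agents=5, dim=3, lower=-1.0, upper=1.0, seed=1)
strategies = [STRATEGY[p] for p in pti]
```

Under this assumed mapping, the value 2 is drawn about twice as often as 1 or 3, because the interval that rounds to 2 is twice as wide as the other two.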

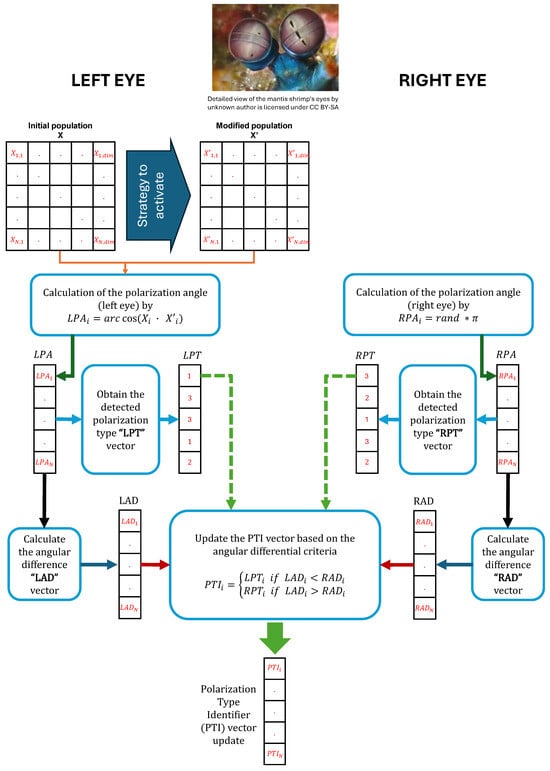

2.3. Polarization Type Identifier (PTI) Vector Update Process

The following steps outline how the PTI vector is updated. The whole process is summarized in Figure 6.

Figure 6.

Schematic diagram of the Polarization Type Identifier (PTI) vector update process.

- The calculation of the polarization angle was inspired by the visual system of the mantis shrimp, where each eye performs independent perception. The left eye employs vectors from both the initial population (X) and the updated population to compute the Left Polarization Angle (LPA), as described in Equation (3). In contrast, the Right Polarization Angle (RPA) is determined through Equation (4). Furthermore, the angular differences are computed for subsequent consideration in the PTI update process.

- Before the PTI value is updated, the detected left- and right-eye polarization types should be calculated. The Left Polarization Type (LPT) vector and the Right Polarization Type (RPT) vector are determined by the criteria described in Equation (5):

- The Left-Eye Angular Difference (LAD) between the Left Polarization Angle (LPA) and the reference angles of the polarized light is calculated using Equation (6). Similarly, the Right-Eye Angular Difference (RAD) is calculated with the Right Polarization Angle (RPA).

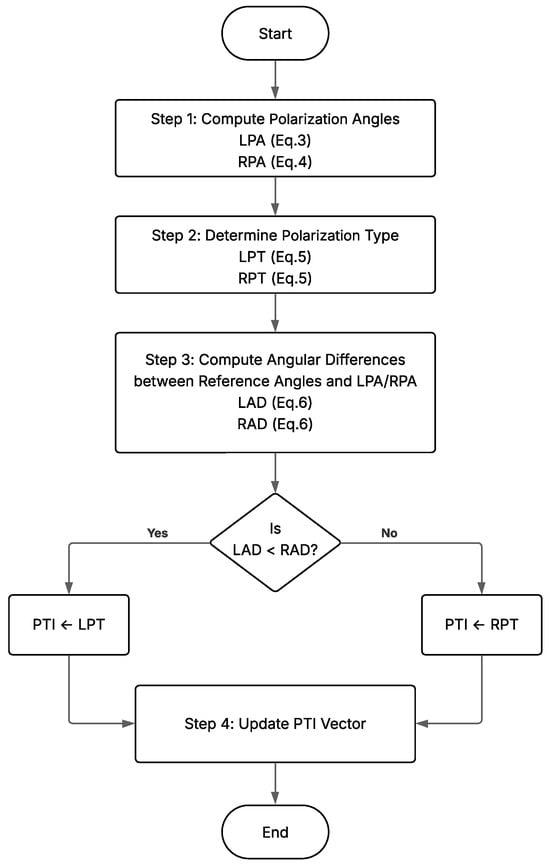

- Finally, the PTI value is calculated using Equation (7). The pseudocode for the PTI vector update process discussed above is described in Algorithm 1, and its flowchart is shown in Figure 7.
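One plausible reading of the steps above is a nearest-reference-angle rule: each eye is assigned the polarization type whose reference angle is closest to its measured angle, and the eye with the smaller angular difference decides the PTI value. The sketch below follows that reading only; the reference angle values, the tie-breaking rule, and the function names `classify_eye` and `update_pti` are assumptions for illustration and are not taken from Equations (3) to (7).

```python
import math

# Assumed reference angles (radians) per polarization type; illustrative only.
REFERENCE_ANGLES = {
    1: (math.pi / 2,),                   # vertical linear  -> forage
    2: (0.0, math.pi),                   # horizontal linear -> attack
    3: (math.pi / 4, 3 * math.pi / 4),   # circular -> burrow, defense, or shelter
}


def classify_eye(angle):
    """Return (polarization_type, angular_difference) for the nearest reference angle."""
    best_type, best_diff = None, math.inf
    for ptype, refs in REFERENCE_ANGLES.items():
        for ref in refs:
            diff = abs(angle - ref)
            if diff < best_diff:
                best_type, best_diff = ptype, diff
    return best_type, best_diff


def update_pti(left_angle, right_angle):
    """Pick the type seen by the eye whose angle is closest to a reference angle."""
    left_type, left_diff = classify_eye(left_angle)
    right_type, right_diff = classify_eye(right_angle)
    # Assumed tie-break: the left eye wins when both differences are equal.
    return left_type if left_diff <= right_diff else right_type


pti_value = update_pti(left_angle=1.2, right_angle=3.1)
```

In this example, the right eye's angle lies much closer to a horizontal reference angle than the left eye's angle lies to the vertical one, so the right eye decides and the attack strategy (value 2) is selected.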

Figure 7. Algorithm 1’s Polarization Type Identifier (PTI) vector update process—flowchart.

Algorithm 1.

Polarization Type Identifier (PTI) vector update process.

2.4. Mathematical Model and Optimization Algorithm

Overall, the mantis shrimp’s behavior is characterized by foraging trips, in which it remains cautious and observant to detect prey or predators. In its burrow, it remains vigilant for any danger or opportunity for food. As previously described, it performs all these activities thanks to the independent movement of its eyes, which makes it capable of perceiving linearly horizontal, linearly vertical, and circularly polarized light. This study used the mantis shrimp’s polarized light detection capabilities to determine the crustacean’s movement strategy: forage; attack; or burrow, defense, or shelter.

A mathematical model that simulates each eye’s rotational and independent movements is introduced to explore the mantis shrimp’s polarized light detection skills. Each eye of the mantis shrimp is considered a polarizing filter. The left eye is set to observe a known environment, while the right eye randomly explores an area, searching for new interactions within the environment. The intensity of the polarized light detected by each eye is meticulously compared, and then, the final decision is based on the signal with the highest intensity, which is directly related to the proximity of the polarization angle. Table 1 summarizes the polarized light detection capabilities of the mantis shrimp and their relationships with their behavioral strategies.

Table 1.

Behavioral strategies of the mantis shrimp based on detected polarized light.

2.5. Strategy 1: Foraging

The characteristic movement of the mantis shrimp when searching for food can be described as Brownian motion. Therefore, we can analyze this behavior starting with the generalized Langevin equation. This equation describes the motion of a particle without external forces, known as a free Brownian particle [51]. The dynamics of this particle are described by Equation (8). In this case, by assuming that no external forces are acting on the particle, the external force term is set to 0, which simplifies Equation (8) to Equation (9). This represents the equilibrium between the friction term and the noise term. A detailed explanation of this simplification process can be found in [51].

Given that only the first and second time derivatives of the position appear in the equation of motion, Equation (9) can be rewritten in terms of the velocity to obtain Equation (10), where the friction quantity in the Langevin equation is called the dynamic friction kernel, while the noise quantity is known as a random force. In Equation (11), a diffusion constant D, which allows the random component of the model to be scaled, is then incorporated:

Moreover, the current position is updated by adding the random movement defined by the Langevin equation. For this work, the value of is proposed to be 1; thus, the equation can be rewritten as

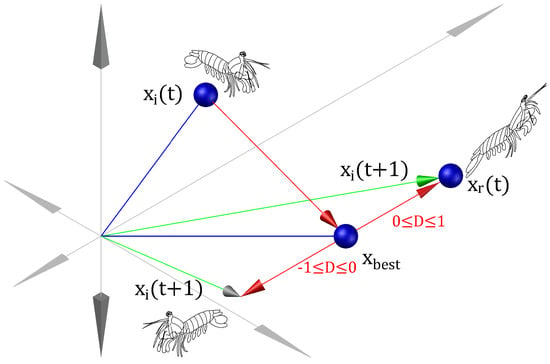

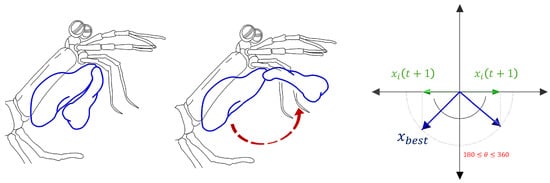

Here, the left-hand side represents the new position of the mantis shrimp in the (t+1)-th iteration, and the equation also involves the best position found for the mantis shrimp. The term v is defined by the difference between the current position and the best position, a second difference term is taken between another vector and the current vector, and D is a random diffusion value. The foraging strategy is summarized in Figure 8.
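The way these terms could combine into a position update is sketched below. This is an assumed discretization, not the paper's equation: the new position is anchored at the best position, the velocity-like term v is subtracted, and the second difference term is scaled by a diffusion value D. The sign convention, the range of D (taken here as [-1, 1]), the choice of the second vector, and the name `forage_step` are all assumptions for illustration.

```python
import random


def forage_step(current, best, other, rng):
    """One assumed foraging move: best - v + D * R, applied per dimension."""
    d = rng.uniform(-1.0, 1.0)  # assumed range for the diffusion value D
    new_position = []
    for x, b, o in zip(current, best, other):
        v = x - b   # difference between current and best position
        r = o - x   # difference between a second vector and the current one
        new_position.append(b - v + d * r)
    return new_position


rng = random.Random(0)
new_position = forage_step([1.0, 2.0], [0.0, 0.0], [5.0, 5.0], rng)
```

When the current position already coincides with the best position, v vanishes and the move reduces to a random step of size D along the direction of the second vector, which is the purely diffusive part of the motion.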

Figure 8.

Foraging strategy.

2.6. Strategy 2: Attack

The mantis shrimp’s strike is renowned in biology for its capacity to fracture hard shells, comparable to the impact of a bullet [52,53,54,55]. Equation (13) shows how this hit can be represented by a circular motion parametric equation in a two-dimensional plane:

where the vector r represents the mantis shrimp’s front appendages and θ is the angular strike motion.
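As a small illustration of a circular-motion parametric equation in a two-dimensional plane, the sketch below evaluates the standard form x = r cos(θ), y = r sin(θ). The symbol names, the function name `strike_point`, and the sampled sweep are assumptions for the example; how the paper maps this motion onto the search agents is given by its own attack equation.

```python
import math


def strike_point(radius, theta):
    """Point on a circle of the given radius at angle theta (radians)."""
    return radius * math.cos(theta), radius * math.sin(theta)


# Sample a half-circle sweep of the appendage in five steps.
sweep = [strike_point(2.0, k * math.pi / 4) for k in range(5)]
```

Every sampled point stays at the same distance from the origin, which is what distinguishes this circular sweep from a straight-line step.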

Finally, the mantis shrimp’s attack can be expressed as follows:

where the left-hand side represents the new position of the mantis shrimp in the (t+1)-th iteration, the equation uses the best position found for the mantis shrimp, and the strike angle is randomly generated. The graphical representation of the attack strategy is shown in Figure 9.

Figure 9.

Mantis shrimp’s attack strike.

2.7. Strategy 3: Burrow, Defense, or Shelter

The mantis shrimp bases its decision to defend, shelter, or burrow on its exceptional visual ability to assess an opponent’s threat. Using its keen vision, it determines whether the opponent is significantly larger and likely stronger, in which case it flees, or similar in size or smaller, in which case it aggressively defends or shelters in its territory [56,57,58,59,60]. These animals hide in their shelters to increase the chance of staying safe [61]. Equation (15) is used to describe the mantis shrimp’s defense or shelter strategy:

where the left-hand side represents the new position of the mantis shrimp in the (t+1)-th iteration, the equation uses the best position found for the mantis shrimp, and k is a scaling factor randomly generated between 0 and an upper limit. The graphic representation of the burrow, defense, or shelter strategy is shown in Figure 10.

Figure 10.

Burrow, defense, or shelter strategy.

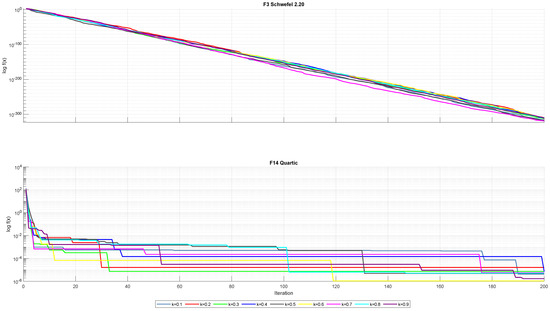

Sensitivity Analysis of k Parameter

The third strategy introduces a scaling parameter k fixed at 0.3. To evaluate the algorithm’s sensitivity to this parameter, nine different values of k running from 0.1 to 0.9 in increments of 0.1 were studied. The analysis included 10 unimodal and 10 multimodal benchmark functions; see Table A1 and Table A2. Each test was executed independently 30 times, with a population size of 30 and 200 iterations. The statistical results of this sensitivity analysis are presented in Table 2, Table 3, Table 4, Table 5 and Table 6. Figure 11 illustrates the behavior of MShOA when using different values of k, as tested on one representative unimodal function and one multimodal function.

Table 2.

Comparative performances of MShOA with different values of k on benchmark functions.

Table 3.

Continuation of comparative performances of MShOA with different values of k on benchmark functions.

Table 4.

Continuation of comparative performances of MShOA with different values of k on benchmark functions.

Table 5.

Continuation of comparative performances of MShOA with different values of k on benchmark functions.

Table 6.

Continuation of comparative performances of MShOA with different values of k on benchmark functions.

Figure 11.

Convergence performances of unimodal function F3 and multimodal function F14.

Subsequently, the nonparametric Wilcoxon signed-rank test was applied to determine whether any alternative value of k led to statistically significant performance differences when compared with k = 0.3. The results of this test, as summarized in Table 7, indicate that no statistically significant differences were observed at the 5% significance level.

Table 7.

Statistical analysis of the Wilcoxon signed-rank test that compared different values of the parameter k in MShOA for 10 unimodal and 10 multimodal functions using a 5% significance level.

2.8. Pseudocode for MShOA

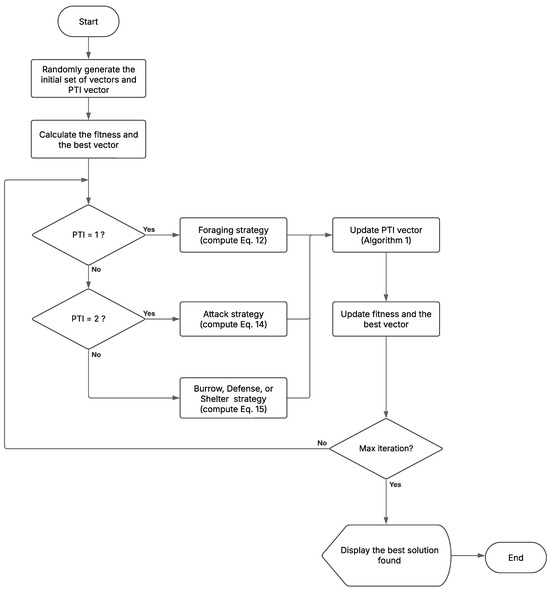

The pseudocode and flowchart of MShOA are described in Algorithm 2 and Figure 12, respectively.

Algorithm 2.

Optimization algorithm of the mantis shrimp.

Figure 12.

MShOA flowchart.

MShOA’s Time Complexity

The computational complexity of an optimization method is characterized by a function that relates the method’s runtime to the size of the problem’s input. Big-O notation is a widely accepted way to express it. The time complexity of MShOA is described as follows:

According to Equation (16), the time complexity of MShOA depends on the number of iterations, the population size of mantis shrimps (nMS), the dimensionality of the problem (dim), and the cost of the objective function (f). Table 8 describes each part of Equation (16).

Table 8.

Description of the MShOA algorithm’s computational complexity.

Therefore, the overall time complexity of MShOA can be computed as follows:

In Big-O notation, when several terms are added, the fastest-growing one dominates. Hence, the time complexity of MShOA can be expressed as follows:

3. Experimental Setup

The efficiency and stability of MShOA were evaluated by solving 20 optimization functions from the literature; see Appendix A. MShOA was compared with the 14 bio-inspired algorithms described below:

- Ant Lion Optimizer (ALO): This algorithm models the predatory behavior of the ant lion in nature through five stages: random movement, building traps, entrapment of ants in traps, catching prey, and rebuilding traps [21].

- Arithmetic Optimization Algorithm (AOA): This algorithm takes advantage of the distribution behavior of fundamental arithmetic operations in mathematics, including multiplication (M), division (D), subtraction (S), and addition (A) [62].

- Beluga Whale Optimization (BWO): This algorithm was inspired by the natural behaviors of beluga whales and mathematically models their behavior in pair swimming, preying, and whale fall [63].

- Dandelion Optimizer (DO): This algorithm simulates the long-distance flight of dandelion seeds relying on the wind and is divided into three stages: the rising stage, the descending stage, and the landing stage [64].

- Evolutionary Mating Algorithm (EMA): This evolutionary algorithm is based on a random mating concept from the Hardy–Weinberg equilibrium [65].

- Grey Wolf Optimizer (GWO): This algorithm is inspired by grey wolves (Canis lupus) and mimics their leadership hierarchy and hunting mechanisms in nature [22].

- Liver Cancer Algorithm (LCA): This algorithm mimics liver tumor’s growth and takeover processes and mathematically models their ability to replicate and spread to other organs [66].

- Mexican Axolotl Optimization Algorithm (MAO): This algorithm was inspired by the way axolotls live in their aquatic environment and is modeled after their processes of regeneration, reproduction, and tissue restoration [67].

- Marine Predators Algorithm (MPA): This algorithm is inspired by the interactions between predators and prey in marine ecosystems and models the widespread foraging strategies and optimal encounter rate policies [68].

- Salp Swarm Algorithm (SSA): This algorithm is inspired by the behavior of salps in nature and primarily models their swarming behavior when navigating and foraging in oceans [17].

- Synergistic Swarm Optimization Algorithm (SSOA): This algorithm combines swarm intelligence with synergistic cooperation to find optimal solutions. It mathematically models a cooperation mechanism, where particles exchange information and learn from one another, enhancing their search behaviors and improving the overall performance [69].

- Tunicate Swarm Algorithm (TSA): This algorithm imitates the behavior of tunicates and models their use of jet propulsion and collective movements while navigating and searching for food [70].

- Whale Optimization Algorithm (WOA): This algorithm imitates the social behavior of humpback whales in nature and mathematically models their bubble-net hunting strategy [18].

- Catch Fish Optimization Algorithm (CFOA): This algorithm is inspired by traditional rural fishing practices and models the strategic process of fish capture through two main phases: an exploration phase combining individual intuition and group collaboration, and an exploitation phase based on coordinated collective action [71].

Each algorithm was executed independently 30 times, with a population size of 30 and 200 iterations. The initial configuration parameters of all the employed algorithms are detailed in Table 9. The Wilcoxon test was applied to compare the performances of the algorithms. The four best-ranked algorithms, as computed using the Friedman test, were selected for further evaluation on 10 optimization problems taken from the CEC2020 benchmark suite detailed in Table 24. Moreover, three engineering study cases based on the optimal power flow optimization problem were also studied.

Table 9.

Initial parameters for each algorithm.

All experiments were conducted on a standard desktop with the following specifications: Intel Core i9-13900K 5.8 GHz processor, 192 GB RAM, Linux Ubuntu 24.04 LTS operating system, and MATLAB R2024a.

4. Results and Discussion

The computational results of MShOA, GWO, BWO, DO, WOA, MPA, LCA, SSA, EMA, ALO, MAO, AOA, SSOA, TSA, and CFOA on 20 benchmark test functions are presented in Table 10, Table 11, Table 12, Table 13, Table 14, Table 15, Table 16 and Table 17, where the average, standard deviation, and best values are provided for comparison measurements.

Table 10.

Comparative performances of MShOA and 14 algorithms on benchmark functions.

Table 11.

Continuation of comparative performances of MShOA and 14 algorithms on benchmark functions.

Table 12.

Continuation of comparative performances of MShOA and 14 algorithms on benchmark functions.

Table 13.

Continuation of comparative performances of MShOA and 14 algorithms on benchmark functions.

Table 14.

Continuation of comparative performances of MShOA and 14 algorithms on benchmark functions.

Table 15.

Continuation of comparative performances of MShOA and 14 algorithms on benchmark functions.

Table 16.

Continuation of comparative performances of MShOA and 14 algorithms on benchmark functions.

Table 17.

Continuation of comparative performances of MShOA and 14 algorithms on benchmark functions.

Three stages of nonparametric Wilcoxon signed-rank tests, at a 5% significance level, and Friedman tests were used to assess the algorithms’ performances. In stage 1, 10 unimodal functions were analyzed, whereas in stage 2, 10 multimodal functions were studied. The unimodal and multimodal functions evaluated the algorithms’ capabilities in exploitation and exploration in the solution space, respectively. In stage 3, the full set of 20 functions, unimodal and multimodal, was analyzed with the Wilcoxon and Friedman statistical tests; see Table A1 and Table A2. The Wilcoxon test assessed whether the performance difference between MShOA and each competing algorithm was statistically significant; a p-value below 0.05 in favor of MShOA was taken to mean that MShOA outperformed the algorithm under comparison. The Friedman test ranked the algorithms according to their average performance across the benchmark functions.
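The ranking idea behind the Friedman test can be illustrated with a short, dependency-free sketch: each algorithm is ranked on every function (1 is best for minimization, ties share the average rank), and the ranks are averaged across functions. The statistic below is the standard Friedman chi-square without tie correction. The sample numbers and the function names are invented for illustration and are not results from this study.

```python
def rank_row(values):
    """Ascending ranks (1 = smallest); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        average_rank = (pos + end) / 2 + 1
        for j in range(pos, end + 1):
            ranks[order[j]] = average_rank
        pos = end + 1
    return ranks


def friedman_mean_ranks(results):
    """results[f][a] is the score of algorithm a on function f (lower is better)."""
    n_algorithms = len(results[0])
    totals = [0.0] * n_algorithms
    for row in results:
        for a, rank in enumerate(rank_row(row)):
            totals[a] += rank
    return [t / len(results) for t in totals]


def friedman_statistic(results):
    """Friedman chi-square statistic (no tie correction)."""
    n, k = len(results), len(results[0])
    mean_ranks = friedman_mean_ranks(results)
    return 12 * n / (k * (k + 1)) * sum((r - (k + 1) / 2) ** 2 for r in mean_ranks)


# Hypothetical scores: 3 functions (rows) x 3 algorithms (columns).
scores = [[0.1, 0.5, 0.3],
          [0.2, 0.4, 0.9],
          [0.0, 0.3, 0.3]]
mean_ranks = friedman_mean_ranks(scores)
```

A lower mean rank indicates a better overall position; the algorithm in the first column is best on every function in this toy data, so its mean rank is 1.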

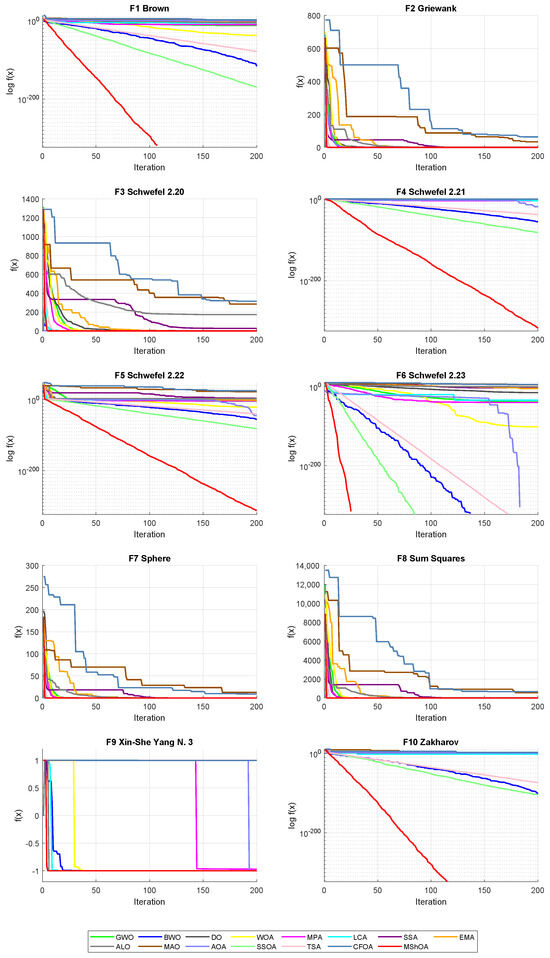

In stage 1, according to the Wilcoxon test (see Table 18), the MShOA algorithm was better than all the others in local searches. The results of the Friedman test (see Table 19) show that MShOA ranked first among the analyzed algorithms. Figure 13 presents the convergence curves for each unimodal function.

Table 18.

Statistical analysis of the Wilcoxon signed-rank test comparing MShOA with other algorithms for 10 unimodal functions using a 5% significance level.

Table 19.

Performance comparison on 10 unimodal functions by Friedman test.

Figure 13.

Convergence curves of 10 unimodal functions.

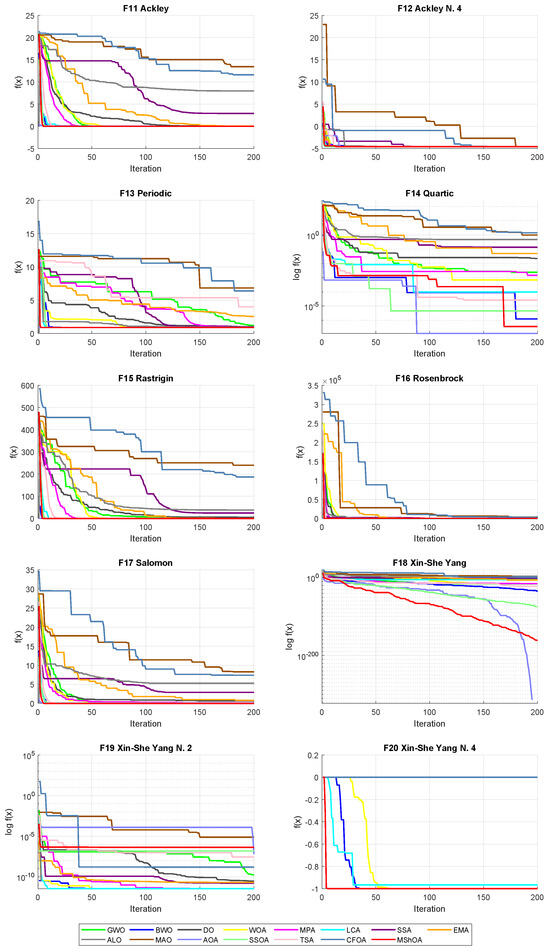

In stage 2, the algorithms’ performances were evaluated with 10 multimodal functions; see Table A1. The Wilcoxon test results (see Table 20) indicate that MShOA outperformed SSA, EMA, ALO, MAO, and CFOA, and that it achieved competitive results compared with GWO, BWO, DO, WOA, MPA, LCA, AOA, SSOA, and TSA. The Friedman test results (see Table 21) indicate that the BWO algorithm ranked first. In the global search, MShOA demonstrated a competitive performance by ranking second. Figure 14 presents the convergence curves for each multimodal function.

Table 20.

Statistical analysis of the Wilcoxon signed-rank test comparing MShOA with other algorithms for 10 multimodal functions using a 5% significance level.

Table 21.

Performance comparison on 10 multimodal functions by Friedman test.

Figure 14.

Convergence curves of 10 multimodal functions.

In stage 3, the set of 20 previously used functions—unimodal and multimodal—was analyzed with the Wilcoxon and Friedman statistical tests; see Table A1. The Wilcoxon test results, shown in Table 22, indicate that MShOA outperformed the following optimization algorithms: ALO, DO, EMA, GWO, LCA, MAO, MPA, SSA, TSA, and WOA. However, no significant differences were observed with BWO, AOA, and SSOA. The Friedman test analysis (see Table 23) shows that BWO ranked first, MShOA ranked second, and SSOA and AOA ranked third and fourth, respectively.

Table 22.

Statistical analysis of the Wilcoxon signed-rank test comparing MShOA with other algorithms for 20 functions using a 5% significance level.

Table 23.

Performance comparison of algorithms on 20 unimodal and multimodal functions by Friedman test.

5. Real-World Applications

The performance of MShOA was evaluated by solving 10 optimization problems from CEC 2020 [72]. These problems are presented in Table 24 and described in Section 5.2, Section 5.3, Section 5.4, Section 5.5, Section 5.6, Section 5.7, Section 5.8, Section 5.9, Section 5.10 and Section 5.11. In addition, MShOA was tested on three different cases of the optimal power flow problem for the IEEE 30-bus system. These cases were fuel cost, active power, and reactive power. The results for each of these engineering problems were obtained with MShOA and the three other top-ranked algorithms according to the Friedman test: BWO, SSOA, and AOA; see Table 23. The results for each real-world optimization problem described in Table 24 are summarized in tables that include the decision variables and the feasible objective function value found, as can be seen in Table 25, Table 26, Table 27, Table 28, Table 29, Table 30, Table 31, Table 32, Table 33 and Table 34. The constraint-handling method used on each of the real-world optimization problems is described in Section 5.1.

Table 24.

Real-world optimization problems from the CEC2020 benchmark, where D is the problem dimension, g is the number of inequality constraints, h is the number of equality constraints, and f() is the best-known feasible objective function value.

Table 25.

Results of the process synthesis problem.

Table 26.

Comparison results of the process synthesis and design problem.

Table 27.

Comparison results of the process flow sheeting problem.

Table 28.

Comparison results of the weight minimization of a speed reducer.

Table 29.

Comparison results of the tension/compression spring design (case 1).

Table 30.

Comparison results of the welded beam design.

Table 31.

Comparison results of the multiple disk clutch brake design problem.

Table 32.

Comparison results of the planetary gear train design optimization problem (transposed).

Table 33.

Comparison results of the tension/compression spring design (case 2).

Table 34.

Comparison results of Himmelblau’s function.

5.1. Constraint Handling

The penalization method taken from [39], which is applied to engineering problems with constraints, is presented in Equation (19):

Equation (19) involves three quantities: the fitness function value of a viable solution (i.e., a solution that satisfies all the constraints), the fitness function value of the worst solution in the population, and the Mean Constraint Violation (MCV) [39], which is defined in Equation (20):

The MCV is the average of the summed inequality-constraint and equality-constraint terms, which are defined in Equations (21) and (22), respectively. Note that each inequality or equality constraint contributes a single value, namely the penalty applied when that constraint is violated.
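The constraint-handling scheme described above can be sketched in code. Equations (19) to (22) are not reproduced in this text, so the following is only a minimal illustration under stated assumptions: a feasible solution keeps its own objective value, an infeasible solution receives the worst fitness in the population plus the MCV, a violated inequality `g(x) <= 0` contributes `g(x)`, and a violated equality contributes `|h(x)|`. The function names and the tolerance `tol` are hypothetical and are not taken from [39].

```python
def mean_constraint_violation(x, ineq, eq, tol=1e-4):
    """Average penalty over all constraints; 0.0 when x is feasible.

    ineq: functions g with g(x) <= 0 meaning "satisfied" (assumed convention).
    eq:   functions h with |h(x)| <= tol treated as "satisfied" (assumed tolerance).
    """
    penalties = []
    for g in ineq:
        value = g(x)
        penalties.append(value if value > 0.0 else 0.0)
    for h in eq:
        value = abs(h(x))
        penalties.append(value if value > tol else 0.0)
    return sum(penalties) / len(penalties) if penalties else 0.0


def penalized_fitness(x, objective, ineq, eq, worst_fitness, tol=1e-4):
    """Assumed penalty rule: f(x) if feasible, else worst fitness + MCV."""
    mcv = mean_constraint_violation(x, ineq, eq, tol)
    if mcv <= 0.0:
        return objective(x)
    return worst_fitness + mcv


# Toy usage: minimize x0**2 subject to x0 >= 1, written as 1 - x0 <= 0.
objective = lambda x: x[0] ** 2
ineq = [lambda x: 1.0 - x[0]]
feasible_value = penalized_fitness([2.0], objective, ineq, [], worst_fitness=10.0)
infeasible_value = penalized_fitness([0.0], objective, ineq, [], worst_fitness=10.0)
```

Under this assumed rule, every infeasible candidate scores worse than the worst member of the current population, so feasible solutions are always preferred during selection.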

5.2. Process Synthesis Problem

The process synthesis problem includes two decision variables and two inequality constraints. The mathematical representation of the problem is shown in Equation (23). The best-known feasible objective value taken from Table 24 is 2.

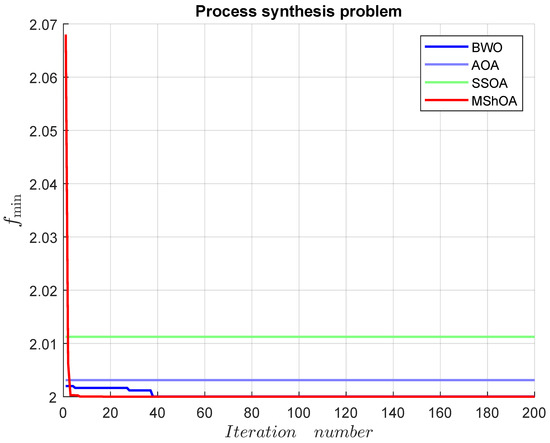

Table 25 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, SSOA and AOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 15.

Figure 15.

Convergence graph of the process synthesis problem.

5.3. Process Synthesis and Design Problem

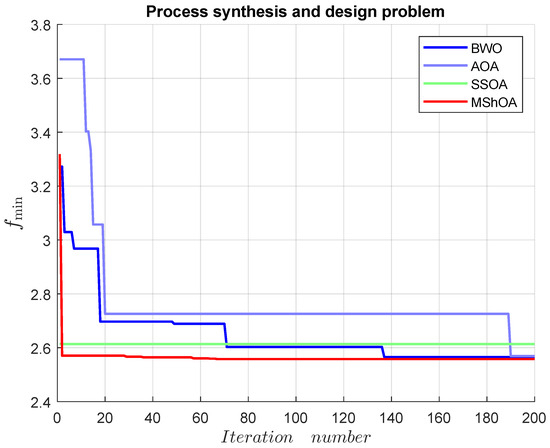

The process synthesis and design problem includes three decision variables, one inequality constraint, and one equality constraint. The mathematical representation of the problem is shown in Equation (24). The best-known feasible objective value taken from Table 24 is .

Table 26 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. However, only the results of MShOA did not violate any problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 16.

Figure 16.

Convergence graph of the process synthesis and design problem.

5.4. Process Flow Sheeting Problem

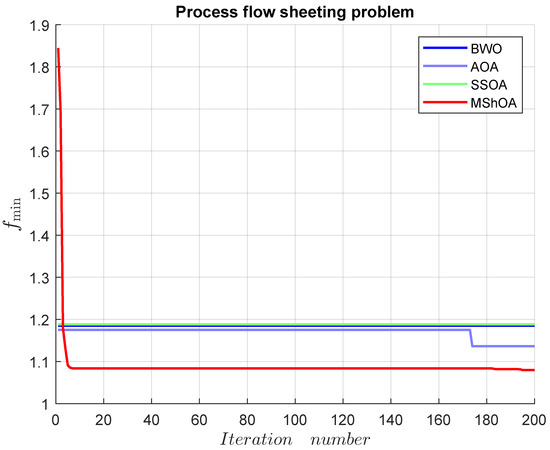

The process flow sheeting problem includes three decision variables and three inequality constraints. The mathematical representation of the problem is shown in Equation (25). The best-known feasible objective value from Table 24 is .

Table 27 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, BWO and AOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 17.

Figure 17.

Convergence graph of the process flow sheeting problem.

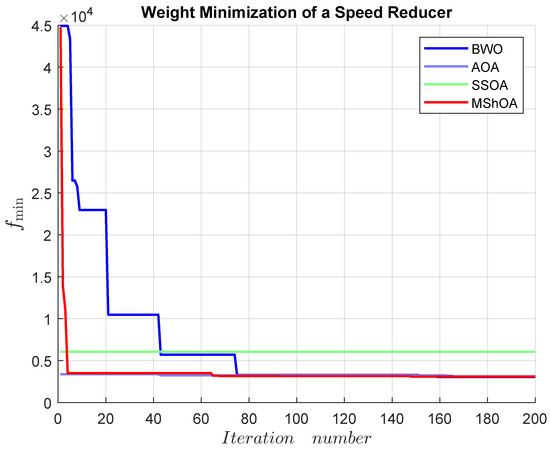

5.5. Weight Minimization of a Speed Reducer

The weight minimization of a speed reducer includes seven decision variables and eleven inequality constraints. The mathematical representation of the problem is shown in Equation (26). The best-known feasible objective value taken from Table 24 is .

Table 28 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, SSOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 18.

Figure 18.

Convergence graph of the weight minimization of a speed reducer.

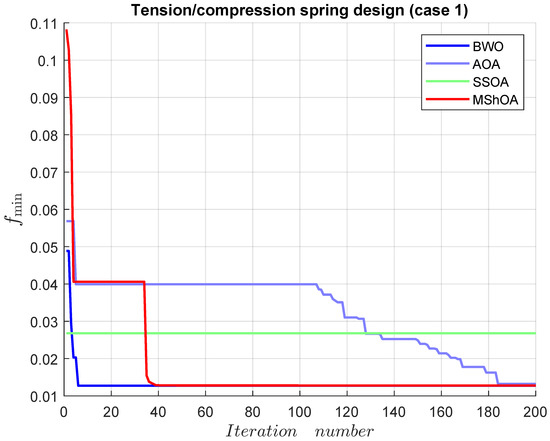

5.6. Tension/Compression Spring Design (Case 1)

The tension/compression spring design (case 1) problem includes three decision variables and four inequality constraints. The mathematical representation of the problem is shown in Equation (27). The best-known feasible objective value taken from Table 24 is .
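As an illustration of how such a constrained design problem is evaluated, the sketch below encodes the formulation of the tension/compression spring benchmark that is commonly used in the literature (three variables, four inequality constraints of the form `g(x) <= 0`). Equation (27) is not reproduced in this text, so the constants, scaling, and variable names (`d` for wire diameter, `D` for mean coil diameter, `N` for the number of active coils) are assumptions and may differ from the authors' statement.

```python
def spring_weight(x):
    """Objective of the commonly cited spring benchmark: (N + 2) * D * d**2."""
    d, D, N = x
    return (N + 2.0) * D * d ** 2


def spring_constraints(x):
    """Four inequality constraints; each value must be <= 0 for feasibility."""
    d, D, N = x
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)            # minimum deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                   # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)                     # surge frequency
    g4 = (d + D) / 1.5 - 1.0                                 # outer diameter limit
    return [g1, g2, g3, g4]


# Evaluate a design point often quoted in the literature for this benchmark.
candidate = (0.051689, 0.356718, 11.288966)
weight = spring_weight(candidate)
violations = spring_constraints(candidate)
```

A list of constraint values in this form can be passed directly to a penalty-based constraint handler such as the one described in Section 5.1.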

Table 29 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, SSOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 19.

Figure 19.

Convergence graph of the tension/compression spring design (case 1).

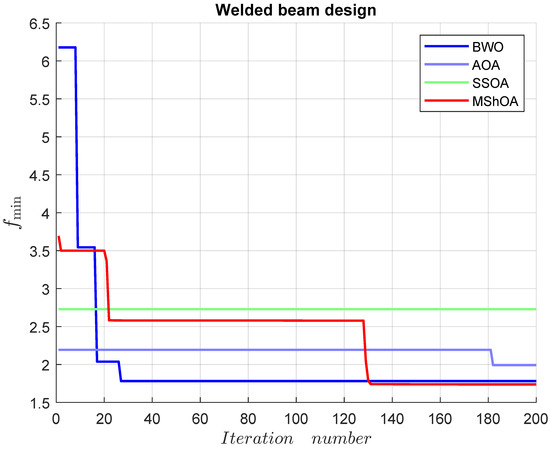

5.7. Welded Beam Design

The welded beam design includes four decision variables and five inequality constraints. The mathematical representation of the problem is shown in Equation (28). The best-known feasible objective value from Table 24 is .

Table 30 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, BWO and SSOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 20.

Figure 20.

Convergence graph of the welded beam design.

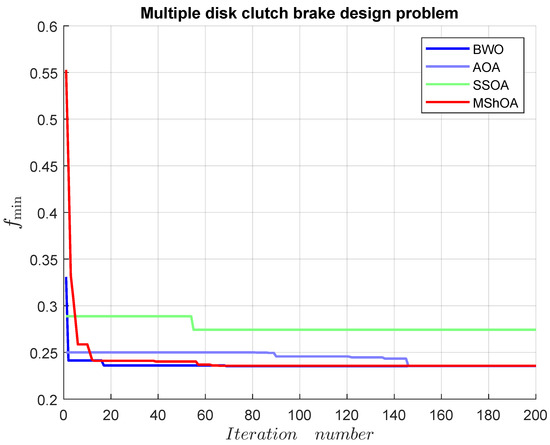

5.8. Multiple Disk Clutch Brake Design Problem

The multiple disk clutch brake design problem includes five decision variables and eight inequality constraints. The mathematical representation of the problem is shown in Equation (29). The best-known feasible objective value taken from Table 24 is .

Table 31 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, BWO, SSOA, and AOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 21.

Figure 21.

Convergence graph of the multiple disk clutch brake design problem.

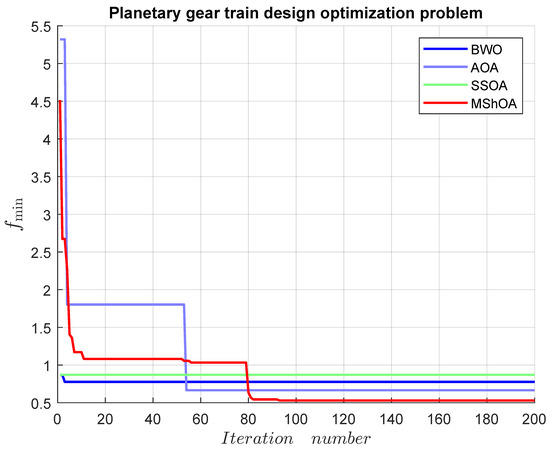

5.9. Planetary Gear Train Design Optimization Problem

The planetary gear train design optimization problem includes six decision variables and eleven inequality constraints. The mathematical representation of the problem is shown in Equation (30). The best-known feasible objective value from Table 24 is .

Table 32 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, SSOA and AOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 22.

Figure 22.

Convergence graph of the planetary gear train design optimization problem.

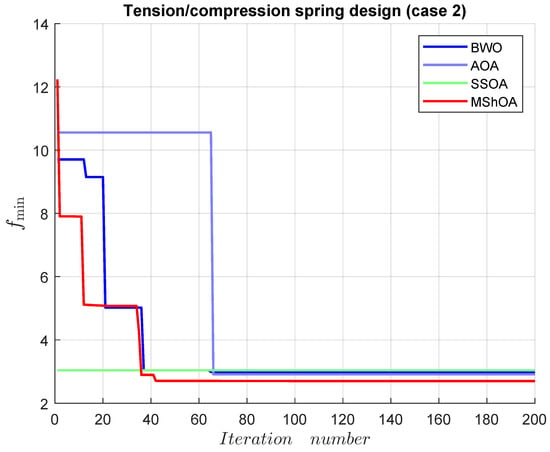

5.10. Tension/Compression Spring Design (Case 2)

The tension/compression spring design (case 2) problem includes three decision variables and eight inequality constraints. The mathematical representation of the problem is shown in Equation (31). The best-known feasible objective value taken from Table 24 is .

Table 33 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 23.

Figure 23.

Convergence graph of the tension/compression spring design (case 2).

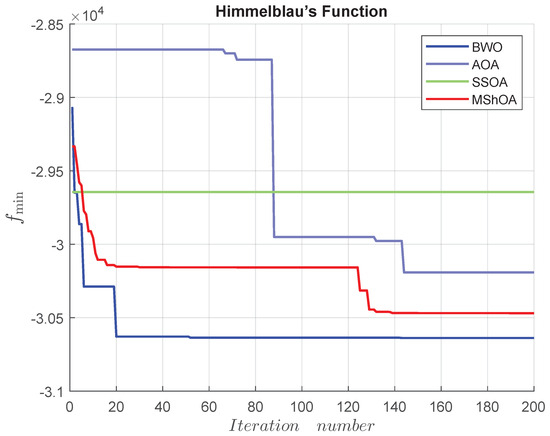

5.11. Himmelblau’s Function

Himmelblau’s function problem includes five decision variables and six inequality constraints. The mathematical representation of the problem is shown in Equation (32). The best-known feasible objective value taken from Table 24 is .

Table 34 compares MShOA, BWO, AOA, and SSOA, all of which provided effective and competitive solutions. Nonetheless, BWO, SSOA, and AOA did not satisfy one or more problem constraints. MShOA’s difference from the best-known feasible objective function value was . The convergence graph is shown in Figure 24.

Figure 24.

Convergence graph of Himmelblau’s function.

5.12. Optimal Power Flow

The optimal power flow (OPF) problem was initially considered an annex to the conventional economic dispatch (ED) problem because both problems were solved simultaneously [73]. The OPF has changed over time into a non-linear optimization problem that tries to find the operating conditions of an electrical system while considering the power balance equations and the constraints of the transmission network [74,75]. The mathematical formulation of the OPF is described in Equation (33); this equation is called the canonical form:

Equation (34) defines the set of control variables u, which comprises the active power generation at PV buses, the voltage magnitudes at PV buses, the VAR compensators, and the transformer tap settings T. Its size is determined by the number of generators, the number of VAR compensators, and the number of regulating transformers.

Equation (35) defines the set of state variables, which comprises the following:

- the active output power (generation) of the reference node;

- the voltage at the load nodes;

- the reactive output power (generation) of all generators;

- the transmission lines.

The active power equality constraints are defined in Equation (36), which states that the active power injected into node i is equal to the active power demanded at node i plus the active power losses in the transmission lines connected to that node:

The reactive power equality constraints are defined in Equation (37), which states that the reactive power injected into node i is equal to the reactive power demanded at node i plus the reactive power associated with the flows in the transmission lines connected to that node:

The real and reactive power equality constraints in Equations (36) and (37) involve the following quantities:

- the active power generation;

- the reactive power generation;

- the active load demand;

- the reactive load demand;

- the number of buses;

- the conductance between buses i and j;

- the susceptance between buses i and j;

- the admittance matrix.
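For orientation, power balance constraints of this kind are commonly written in the polar form below. This is a standard textbook statement rather than a transcription of Equations (36) and (37). All symbols are assumed notation: $P_{G_i}, Q_{G_i}$ for generation, $P_{D_i}, Q_{D_i}$ for demand, $V_i$ for the voltage magnitude at bus $i$, $\theta_{ij} = \theta_i - \theta_j$ for the voltage angle difference, $NB$ for the number of buses, and $Y_{ij} = G_{ij} + jB_{ij}$ for the admittance matrix entries.

```latex
\begin{align}
P_{G_i} - P_{D_i} &= V_i \sum_{j=1}^{NB} V_j \left( G_{ij}\cos\theta_{ij} + B_{ij}\sin\theta_{ij} \right), \\
Q_{G_i} - Q_{D_i} &= V_i \sum_{j=1}^{NB} V_j \left( G_{ij}\sin\theta_{ij} - B_{ij}\cos\theta_{ij} \right),
\qquad i = 1, \dots, NB .
\end{align}
```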

The inequality constraints in an optimal power flow (OPF) problem [76] cover the operational limits of the system’s components. The generator constraints are represented by Equation (38), and these constraints are shown in Table 35:

Table 35.

Generator inequality constraints.

Table 36.

Transformer inequality constraints.

Table 37.

Shunt VAR compensator inequality constraints.

Finally, the security constraints are represented in Equation (41):

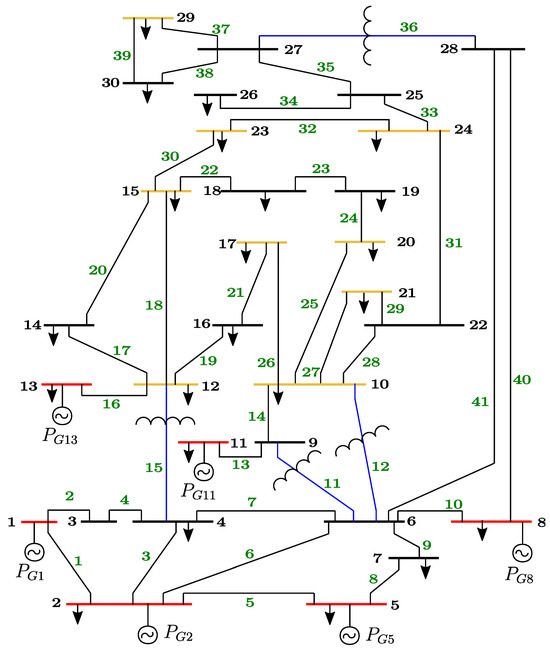

Following the methodology described in Section 5, the Mantis Shrimp Optimization Algorithm (MShOA), Beluga Whale Optimization (BWO), the Arithmetic Optimization Algorithm (AOA), and the Synergistic Swarm Optimization Algorithm (SSOA) were applied to the optimal power flow problem for the IEEE 30-bus test system (see Figure 25). Three case studies were analyzed: optimization of the total fuel cost of generation, optimization of active power losses, and optimization of reactive power losses.

Figure 25.

IEEE 30-bus system.

Case 1 included the total fuel cost of the six generating units connected at buses 1, 2, 5, 8, 11, and 13. The objective function was defined as follows:

where the quadratic function is described in [76].
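A quadratic fuel cost objective of this kind can be sketched as follows. The sketch assumes the usual per-unit cost model `a + b*P + c*P**2` summed over the generating units; the function name and the coefficient values in the usage example are hypothetical placeholders and are not the IEEE 30-bus data used in the paper or in [76].

```python
def total_fuel_cost(p_gen, coeffs):
    """Sum of a + b*P + c*P**2 over all generating units.

    p_gen:  active power output of each unit (e.g., in MW).
    coeffs: one (a, b, c) tuple per unit (assumed quadratic cost model).
    """
    if len(p_gen) != len(coeffs):
        raise ValueError("p_gen and coeffs must have the same length")
    return sum(a + b * p + c * p * p for p, (a, b, c) in zip(p_gen, coeffs))


# Toy usage with two units and made-up coefficients.
example_cost = total_fuel_cost(
    [100.0, 50.0],
    [(0.0, 2.0, 0.01), (10.0, 1.0, 0.02)],
)
```

In an OPF setting, the generator outputs passed to such a function would come from the control variables of a candidate solution, with the reference-bus output obtained from a power flow solution.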

The objective functions for Case 2 and Case 3, which involved optimizing the active power losses and optimizing the reactive power losses, respectively, are described in Equations (43) and (44):
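For the active power loss objective, a widely used expression sums, over every transmission line, the line conductance multiplied by a function of the terminal voltages. The sketch below implements that standard expression. Equation (43) is not reproduced in this text, so this is an assumed form, and the data layout (`lines` as tuples of bus indices and series conductance, angles in radians) is hypothetical. The reactive loss objective of Case 3 is not shown.

```python
import math


def active_power_loss(lines, v_mag, v_ang):
    """Total active power loss over all lines (assumed standard expression).

    lines: iterable of (i, j, g), where g is the series conductance of line i-j.
    v_mag: bus voltage magnitudes (per unit).
    v_ang: bus voltage angles (radians).
    """
    loss = 0.0
    for i, j, g in lines:
        loss += g * (
            v_mag[i] ** 2
            + v_mag[j] ** 2
            - 2.0 * v_mag[i] * v_mag[j] * math.cos(v_ang[i] - v_ang[j])
        )
    return loss


# Toy usage: one line between two buses with a small voltage magnitude difference.
example_loss = active_power_loss([(0, 1, 2.0)], [1.0, 0.9], [0.0, 0.0])
```

With identical voltage magnitudes and angles at both ends of every line, the expression evaluates to zero, which is a quick sanity check for an implementation.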

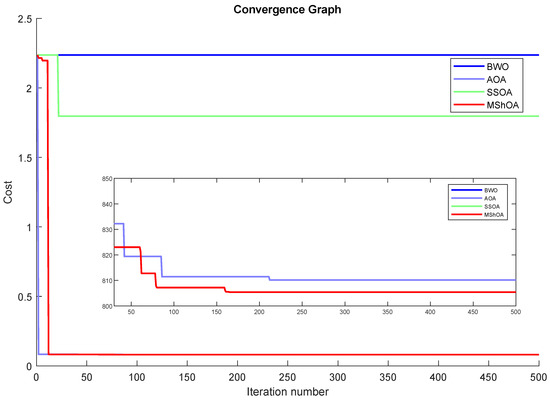

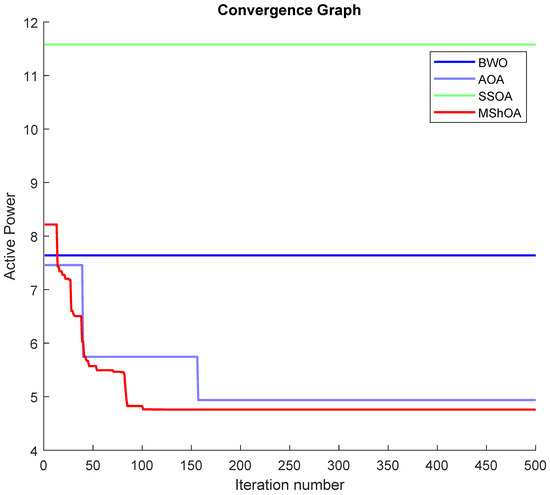

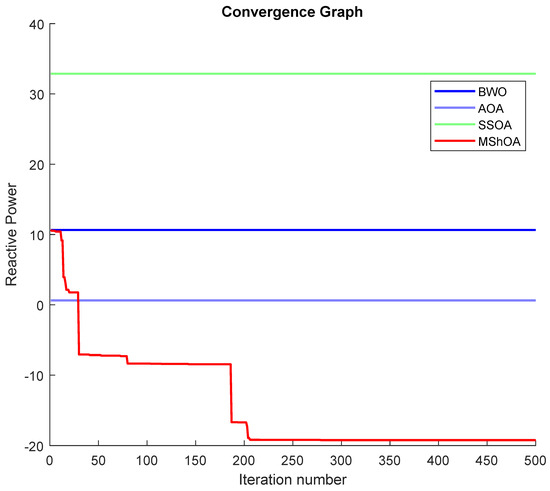

Analysis of the convergence graphs showed that MShOA outperformed Beluga Whale Optimization (BWO), the Arithmetic Optimization Algorithm (AOA), and the Synergistic Swarm Optimization Algorithm (SSOA) in all three cases of this study, as illustrated in Figures 26, 27, and 28, respectively. The tests were conducted with a population size of 30 and 500 iterations. The minimum values obtained by each algorithm for each case are summarized in Table 38.

Figure 26.

Case 1: minimization of fuel cost.

Figure 27.

Case 2: minimization of active power transmission losses.

Figure 28.

Case 3: minimization of reactive power transmission losses.

Table 38.

Obtained results.

6. Conclusions

This paper discusses a novel metaheuristic optimization technique inspired by the behavior of the mantis shrimp, called the Mantis Shrimp Optimization Algorithm (MShOA). The shrimp’s behavior includes three strategies: forage (food search); attack (the mantis shrimp strike); and burrow, defense, and shelter (hole defense and shelter). These strategies are triggered by the type of polarized light detected by the mantis shrimp.

The algorithm’s performance was evaluated on 20 testbench functions, on 10 real-world optimization problems taken from IEEE CEC2020, and on 3 study cases related to the optimal power flow problem: optimization of fuel cost in electric power generation, and optimization of active and reactive power losses. In addition, the algorithm’s performance was compared with fourteen algorithms selected from the scientific literature.

The statistical analysis results indicate that MShOA outperformed CFOA, ALO, DO, EMA, GWO, LCA, MAO, MPA, SSA, TSA, and WOA. In addition, it proved to be competitive with BWO, AOA, and SSOA.

The main results and conclusions of this study are as follows:

- The mantis shrimp’s biological strategies modeled in this study bring principles of optical polarization into the design of optimization methods.

- MShOA has no parameters to configure in the modeling of strategies. The only parameters are those common to all bio-inspired algorithms, e.g., population size and number of iterations.

- The Wilcoxon and Friedman tests were applied separately to the 10 unimodal and the 10 multimodal functions. On the unimodal functions, the Wilcoxon test results indicate that MShOA outperformed all the other algorithms, and MShOA ranked first in the Friedman test. On the multimodal functions, the Wilcoxon test results indicate that MShOA outperformed SSA, EMA, ALO, MAO, and CFOA, and MShOA ranked second in the Friedman test. These results indicate that MShOA achieves a good balance between local and global searches.

- The full set of 20 functions, unimodal and multimodal together, was also analyzed with the Wilcoxon and Friedman statistical tests. The Wilcoxon test results indicate that MShOA outperformed the following optimization algorithms: CFOA, ALO, DO, EMA, GWO, LCA, MAO, MPA, SSA, TSA, and WOA. The Friedman test ranked MShOA second.

- MShOA demonstrated outstanding results in 80% of the real-world IEEE CEC2020 engineering problems: process synthesis problem, process synthesis and design problem, process flow sheeting problem, welded beam design, planetary gear train design optimization problem, and tension/compression spring design.

- In the optimal power flow (OPF) problem study cases, MShOA obtained better solutions than BWO, AOA, and SSOA.

- MShOA was able to effectively solve real-world problems with unknown search spaces.

The proposed algorithm demonstrated competitive performance. However, it has some limitations that open avenues for future work. Currently, it is designed for single-objective optimization and has not yet been extended to handle multi-objective scenarios. Additionally, while it performed well on the tested optimal power flow (OPF) cases, its generalization to more complex or large-scale OPF models remains to be fully explored. Future work will address these limitations by extending the algorithm to multi-objective optimization and evaluating its applicability to broader and more diverse OPF problem instances.

Author Contributions

Conceptualization, J.A.S.C., H.P.V. and A.F.P.D.; Methodology, H.P.V. and A.F.P.D.; Software, J.A.S.C.; Validation, H.P.V. and A.F.P.D.; Formal analysis, J.A.S.C., H.P.V. and A.F.P.D.; Investigation, J.A.S.C.; Resources, H.P.V.; Writing—original draft preparation, J.A.S.C.; Writing—review and editing, H.P.V. and A.F.P.D.; Visualization, J.A.S.C.; Supervision, H.P.V. and A.F.P.D.; Project administration, H.P.V. and A.F.P.D.; Funding acquisition, H.P.V. All authors have read and agreed to the published version of this manuscript.

Funding

This project was supported by the Instituto Politécnico Nacional (IPN) through grant SIP no. 20250569.

Data Availability Statement

The source code used to support the findings of this study has been deposited in the MathWorks repository at https://www.mathworks.com/matlabcentral/fileexchange/180937-mantis-shrimp-optimization-algorithm-mshoa, available since 30 April 2025.

Acknowledgments

The first author acknowledges support from SECIHTI to pursue his Ph.D. in advanced technology at the Instituto Politécnico Nacional (IPN)–CICATA Altamira.

Conflicts of Interest

The authors declared no potential conflicts of interest with respect to the research, authorship, funding, and/or publication of this article.

Appendix A

Table A1.

Classification of testbench functions.

| ID | Function Name | Unimodal | Multimodal | n-Dimensional | Non-Separable | Convex | Differentiable | Continuous | Non-Convex | Non-Differentiable | Separable | Random |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Brown | x | x | x | x | x | ||||||

| F2 | Griewank | x | x | x | x | x | ||||||

| F3 | Schwefel 2.20 | x | x | x | x | x | x | |||||

| F4 | Schwefel 2.21 | x | x | x | x | x | x | |||||

| F5 | Schwefel 2.22 | x | x | x | x | x | x | |||||

| F6 | Schwefel 2.23 | x | x | x | x | x | x | |||||

| F7 | Sphere | x | x | x | x | x | x | |||||

| F8 | Sum Squares | x | x | x | x | x | x | |||||

| F9 | Xin-She Yang N. 3 | x | x | x | x | x | ||||||

| F10 | Zakharov | x | x | x | x | |||||||

| F11 | Ackley | x | x | x | x | x | ||||||

| F12 | Ackley N. 4 | x | x | x | x | x | ||||||

| F13 | Periodic | x | x | x | x | x | x | |||||

| F14 | Quartic | x | x | x | x | x | x | |||||

| F15 | Rastrigin | x | x | x | x | x | x | |||||

| F16 | Rosenbrock | x | x | x | x | x | x | |||||

| F17 | Salomon | x | x | x | x | x | x | |||||

| F18 | Xin-She Yang | x | x | x | x | x | ||||||

| F19 | Xin-She Yang N. 2 | x | x | x | x | |||||||

| F20 | Xin-She Yang N. 4 | x | x | x | x | x |

Table A2.

Descriptions of the testbench functions.

| ID | Function | Dim | Interval | |

|---|---|---|---|---|

| F1 | 30 | 0 | ||

| F2 | 30 | 0 | ||

| F3 | 30 | 0 | ||

| F4 | 30 | 0 | ||

| F5 | 30 | 0 | ||

| F6 | 30 | 0 | ||

| F7 | 30 | 0 | ||

| F8 | 30 | 0 | ||

| F9 | 30 | , | −1 | |

| F10 | 30 | 0 | ||

| F11 | 30 | , | 0 | |

| F12 | 2 | |||

| F13 | 30 | 0.9 | ||

| F14 | 30 | random noise | ||

| F15 | 30 | 0 | ||

| F16 | 30 | 0 | ||

| F17 | 30 | 0 | ||

| F18 | 30 | random | 0 | |

| F19 | 30 | 0 | ||

| F20 | 30 | −1 |

References

1. Sang-To, T.; Le-Minh, H.; Wahab, M.A.; Thanh, C.L. A new metaheuristic algorithm: Shrimp and Goby association search algorithm and its application for damage identification in large-scale and complex structures. Adv. Eng. Softw. 2023, 176, 103363.

2. Lodewijks, G.; Cao, Y.; Zhao, N.; Zhang, H. Reducing CO2 Emissions of an Airport Baggage Handling Transport System Using a Particle Swarm Optimization Algorithm. IEEE Access 2021, 9, 121894–121905.

3. Kumar, A. Chapter 5—Application of nature-inspired computing paradigms in optimal design of structural engineering problems—A review. In Nature-Inspired Computing Paradigms in Systems; Intelligent Data-Centric Systems; Mellal, M.A., Pecht, M.G., Eds.; Academic Press: Cambridge, MA, USA, 2021; pp. 63–74.

4. Sharma, S.; Saha, A.K.; Lohar, G. Optimization of weight and cost of cantilever retaining wall by a hybrid metaheuristic algorithm. Eng. Comput. 2022, 38, 2897–2923.

5. Peraza-Vázquez, H.; Peña-Delgado, A.; Ranjan, P.; Barde, C.; Choubey, A.; Morales-Cepeda, A.B. A bio-inspired method for mathematical optimization inspired by arachnida salticidade. Mathematics 2022, 10, 102.

6. Peña-Delgado, A.F.; Peraza-Vázquez, H.; Almazán-Covarrubias, J.H.; Cruz, N.T.; García-Vite, P.M.; Morales-Cepeda, A.B.; Ramirez-Arredondo, J.M. A Novel Bio-Inspired Algorithm Applied to Selective Harmonic Elimination in a Three-Phase Eleven-Level Inverter. Math. Probl. Eng. 2020, 8856040.

7. Tzanetos, A.; Dounias, G. Nature inspired optimization algorithms or simply variations of metaheuristics? Artif. Intell. Rev. 2021, 54, 1841–1862.

8. Joyce, T.; Herrmann, J.M. A Review of no free lunch theorems, and their implications for metaheuristic optimisation. In Nature-Inspired Algorithms and Applied Optimization; Yang, X.S., Ed.; Springer International Publishing: Cham, Switzerland, 2018; pp. 27–51.

9. Almazán-Covarrubias, J.H.; Peraza-Vázquez, H.; Peña-Delgado, A.F.; García-Vite, P.M. An Improved Dingo Optimization Algorithm Applied to SHE-PWM Modulation Strategy. Appl. Sci. 2022, 12, 992.

10. Abualigah, L.; Hanandeh, E.S.; Zitar, R.A.; Thanh, C.L.; Khatir, S.; Gandomi, A.H. Revolutionizing sustainable supply chain management: A review of metaheuristics. Eng. Appl. Artif. Intell. 2023, 126, 106839.

11. Beyer, H.G.; Schwefel, H.P. Evolution strategies—A comprehensive introduction. Nat. Comput. 2002, 1, 3–52.

12. Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

13. Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

14. Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

15. Koza, J.R. Genetic programming as a means for programming computers by natural selection. Stat. Comput. 1994, 4, 87–112.

16. Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

17. Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

18. Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

19. Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 2016, 169, 1–12.

20. Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 2016, 27, 1053–1073.

21. Mirjalili, S. The ant lion optimizer. Adv. Eng. Softw. 2015, 83, 80–98.

22. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

23. Kaveh, A.; Farhoudi, N. A new optimization method: Dolphin echolocation. Adv. Eng. Softw. 2013, 59, 53–70.

24. Gandomi, A.H.; Yang, X.S.; Alavi, A.H.; Talatahari, S. Bat algorithm for constrained optimization tasks. Neural Comput. Appl. 2013, 22, 1239–1255.

25. Gandomi, A.H.; Alavi, A.H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 4831–4845.

26. Yang, X.S. Firefly algorithms for multimodal optimization. In Stochastic Algorithms: Foundations and Applications; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5792.

27. Dorigo, M.; Caro, G.D. Ant Colony Optimization: A New Meta-Heuristic. In Proceedings of the 1999 Congress on Evolutionary Computation (CEC 1999), Washington, DC, USA, 6–9 July 1999.

28. Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995.

29. Kaveh, A.; Dadras, A. A novel meta-heuristic optimization algorithm: Thermal exchange optimization. Adv. Eng. Softw. 2017, 110, 69–84.

30. Kaveh, A.; Bakhshpoori, T. Water Evaporation Optimization: A novel physically inspired optimization algorithm. Comput. Struct. 2016, 167, 69–85.

31. Kashan, A.H. A new metaheuristic for optimization: Optics inspired optimization (OIO). Comput. Oper. Res. 2015, 55, 99–125.

32. Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

33. Formato, R.A. Central force optimization: A new metaheuristic with applications in applied electromagnetics. Prog. Electromagn. Res. 2007, 77, 425–491.

34. Erol, O.K.; Eksin, I. A new optimization method: Big Bang-Big Crunch. Adv. Eng. Softw. 2006, 37, 106–111.

35. Zhu, A.; Gu, Z.; Hu, C.; Niu, J.; Xu, C.; Li, Z. Political optimizer with interpolation strategy for global optimization. PLoS ONE 2021, 16, e0251204.

36. Fadakar, E.; Ebrahimi, M. A new metaheuristic football game inspired algorithm. In Proceedings of the 1st Conference on Swarm Intelligence and Evolutionary Computation (CSIEC 2016), Bam, Iran, 9–11 March 2016.

37. Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems. CAD—Comput.-Aided Des. 2011, 43, 303–315.

38. Atashpaz-Gargari, E.; Lucas, C. Imperialist Competitive Algorithm: An Algorithm for Optimization Inspired by Imperialistic Competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation (CEC 2007), Singapore, 25–28 September 2007.

39. Peraza-Vázquez, H.; Peña-Delgado, A.; Merino-Treviño, M.; Morales-Cepeda, A.B.; Sinha, N. A novel metaheuristic inspired by horned lizard defense tactics. Artif. Intell. Rev. 2024, 57, 1–65.

40. Harifi, S.; Mohammadzadeh, J.; Khalilian, M.; Ebrahimnejad, S. Giza Pyramids Construction: An ancient-inspired metaheuristic algorithm for optimization. Evol. Intell. 2021, 14, 1743–1761.

41. Patel, R.N.; Khil, V.; Abdurahmonova, L.; Driscoll, H.; Patel, S.; Pettyjohn-Robin, O.; Shah, A.; Goldwasser, T.; Sparklin, B.; Cronin, T.W. Mantis shrimp identify an object by its shape rather than its color during visual recognition. J. Exp. Biol. 2021, 224, 242256.

42. Patel, R.N.; Cronin, T.W. Landmark navigation in a mantis shrimp. Proc. R. Soc. B 2020, 287, 20201898.

43. Streets, A.; England, H.; Marshall, J. Colour vision in stomatopod crustaceans: More questions than answers. J. Exp. Biol. 2022, 225, jeb243699.

44. Thoen, H.H.; How, M.J.; Chiou, T.H.; Marshall, J. A different form of color vision in mantis shrimp. Science 2014, 343, 411–413.

45. Chiou, T.H.; Kleinlogel, S.; Cronin, T.; Caldwell, R.; Loeffler, B.; Siddiqi, A.; Goldizen, A.; Marshall, J. Circular polarization vision in a stomatopod crustacean. Curr. Biol. 2008, 18, 429–434.

46. Zhong, B.; Wang, X.; Gan, X.; Yang, T.; Gao, J. A biomimetic model of adaptive contrast vision enhancement from mantis shrimp. Sensors 2020, 20, 4588.

47. Cronin, T.W.; Chiou, T.H.; Caldwell, R.L.; Roberts, N.; Marshall, J. Polarization signals in mantis shrimps. In Proceedings of the Polarization Science and Remote Sensing IV, San Diego, CA, USA, 3–4 August 2009; Volume 7461.

48. Wang, T.; Wang, S.; Gao, B.; Li, C.; Yu, W. Design of Mantis-Shrimp-Inspired Multifunctional Imaging Sensors with Simultaneous Spectrum and Polarization Detection Capability at a Wide Waveband. Sensors 2024, 24, 1689.

49. Daly, I.M.; How, M.J.; Partridge, J.C.; Temple, S.E.; Marshall, N.J.; Cronin, T.W.; Roberts, N.W. Dynamic polarization vision in mantis shrimps. Nat. Commun. 2016, 7, 1–9.

50. Gagnon, Y.L.; Templin, R.M.; How, M.J.; Marshall, N.J. Circularly polarized light as a communication signal in mantis shrimps. Curr. Biol. 2015, 25, 3074–3078.

51. Alavi, S. Statistical Mechanics: Theory and Molecular Simulation. By Mark E. Tuckerman. Angew. Chem. Int. Ed. 2011, 50, 12138–12139.

52. Patek, S.N.; Caldwell, R.L. Extreme impact and cavitation forces of a biological hammer: Strike forces of the peacock mantis shrimp Odontodactylus scyllarus. J. Exp. Biol. 2005, 208, 3655–3664.

53. Patek, S.N.; Nowroozi, B.N.; Baio, J.E.; Caldwell, R.L.; Summers, A.P. Linkage mechanics and power amplification of the mantis shrimp’s strike. J. Exp. Biol. 2007, 210, 3677–3688.

54. DeVries, M.S.; Murphy, E.A.; Patek, S.N. Research article: Strike mechanics of an ambush predator: The spearing mantis shrimp. J. Exp. Biol. 2012, 215, 4374–4384.

55. Cox, S.M.; Schmidt, D.; Modarres-Sadeghi, Y.; Patek, S.N. A physical model of the extreme mantis shrimp strike: Kinematics and cavitation of Ninjabot. Bioinspiration Biomimetics 2014, 9, 016014.

56. Caldwell, R.L.; Dingle, J. The Influence of Size Differential On Agonistic Encounters in the Mantis Shrimp, Gonodactylus Viridis. Behaviour 2008, 69, 255–264.

57. Caldwell, R.L. Cavity occupation and defensive behaviour in the stomatopod Gonodactylus festai: Evidence for chemically mediated individual recognition. Anim. Behav. 1979, 27, 194–201.

58. Caldwell, R.L.; Dingle, H. Ecology and evolution of agonistic behavior in stomatopods. Die Naturwissenschaften 1975, 62, 214–222.

59. Berzins, I.K.; Caldwell, R.L. The effect of injury on the agonistic behavior of the Stomatopod, Gonodactylus Bredini (manning). Mar. Behav. Physiol. 1983, 10, 83–96.

60. Steger, R.; Caldwell, R.L. Intraspecific deception by bluffing: A defense strategy of newly molted stomatopods (Arthropoda: Crustacea). Science 1983, 221, 558–560.

61. Caldwell, R.L. The Deceptive Use of Reputation by Stomatopods; State University of New York Press: New York, NY, USA, 1986; pp. 129–145.

62. Abualigah, L.; Diabat, A.; Mirjalili, S.; Elaziz, M.A.; Gandomi, A.H. The Arithmetic Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

63. Zhong, C.; Li, G.; Meng, Z. Beluga whale optimization: A novel nature-inspired metaheuristic algorithm. Knowl.-Based Syst. 2022, 251, 109215.

64. Zhao, S.; Zhang, T.; Ma, S.; Chen, M. Dandelion Optimizer: A nature-inspired metaheuristic algorithm for engineering applications. Eng. Appl. Artif. Intell. 2022, 114, 105075.

65. Sulaiman, M.H.; Mustaffa, Z.; Saari, M.M.; Daniyal, H.; Mirjalili, S. Evolutionary mating algorithm. Neural Comput. Appl. 2023, 35, 487–516.

66. Houssein, E.H.; Oliva, D.; Samee, N.A.; Mahmoud, N.F.; Emam, M.M. Liver Cancer Algorithm: A novel bio-inspired optimizer. Comput. Biol. Med. 2023, 165, 107389.

67. Villuendas-Rey, Y.; Velázquez-Rodríguez, J.L.; Alanis-Tamez, M.D.; Moreno-Ibarra, M.A.; Yáñez-Márquez, C. Mexican axolotl optimization: A novel bioinspired heuristic. Mathematics 2021, 9, 781.

68. Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

69. Alzoubi, S.; Abualigah, L.; Sharaf, M.; Daoud, M.S.; Khodadadi, N.; Jia, H. Synergistic Swarm Optimization Algorithm. CMES Comput. Model. Eng. Sci. 2024, 139, 2557–2604.

70. Kaur, S.; Awasthi, L.K.; Sangal, A.L.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

71. Jia, H.; Wen, Q.; Wang, Y.; Mirjalili, S. Catch fish optimization algorithm: A new human behavior algorithm for solving clustering problems. Clust. Comput. 2024, 27, 13295–13332.

72. Kumar, A.; Wu, G.; Ali, M.Z.; Mallipeddi, R.; Suganthan, P.N.; Das, S. A test-suite of non-convex constrained optimization problems from the real-world and some baseline results. Swarm Evol. Comput. 2020, 56, 100693.

73. Lin, J.; Magnago, F.H. Optimal power flow. In Electricity Markets: Theories and Applications; Wiley: Hoboken, NJ, USA, 2017; pp. 147–171.

74. Nucci, C.A.; Borghetti, A.; Napolitano, F.; Tossani, F. Basics of Power systems analysis. In Springer Handbook of Power Systems; Papailiou, K.O., Ed.; Springer: Singapore, 2021; pp. 273–366.

75. Huneault, M.; Galiana, F.D. A Survey Of The Optimal Power Flow Literature. IEEE Trans. Power Syst. 1991, 6, 762–770.

76. Ela, A.A.A.E.; Abido, M.A.; Spea, S.R. Optimal power flow using differential evolution algorithm. Electr. Power Syst. Res. 2010, 80, 878–885.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).