1. Introduction

The problem of maximizing DR-submodular functions, which generalizes set submodular functions to more general domains such as integer lattices and box regions in Euclidean spaces, has emerged as a prominent research topic in optimization. Set submodular functions inherently capture the diminishing returns property, where the marginal gain of adding an element to a set decreases as the set expands. DR-submodular functions extend this fundamental property to continuous and mixed-integer domains, enabling broader applications in machine learning, graph theory, economics, and operations research [

1,

2,

3,

4]. This extension not only addresses practical problems with continuous variables but also provides a unified framework for solving set submodular maximization through continuous relaxation techniques [

5,

6].

The problem of deterministic DR-submodular function maximization considered in this paper can be formally written as follows:

where the feasible set

is compact and convex, and

is a differentiable DR-submodular function. While this problem is generally NP-hard, under certain structural assumptions, approximation algorithms with constant approximation ratios can be developed. For unconstrained scenarios, Niazadeh et al. [

7] established a tight

-approximation algorithm, aligning with classical results for unconstrained submodular maximization. In constrained settings, monotonicity plays a critical role: convex-constrained monotone DR-submodular maximization admits a

-approximation [

1], whereas non-monotone cases under down-closed constraints achieve

-approximation [

1] with recent improvements to

[

8]. For general convex constraints containing the origin, a

-approximation guarantee is attainable [

9,

10,

11].

While deterministic DR-submodular maximization has been extensively studied, practical scenarios often involve uncertainties where the objective function can only be accessed through stochastic evaluations. This motivates the investigation of stochastic DR-submodular maximization problems, which are typically formulated as follows:

where the DR-submodular function

is defined as the expectation of stochastic functions

with

. Building upon the Lyapunov framework established for deterministic problems [

10], recent works like [

12,

13] have developed stochastic variants of continuous greedy algorithms. Specifically, Lian et al. [

14] proposed SPIDER-based methods that reduce gradient evaluation complexity from

in earlier works [

12] to

through variance reduction techniques.

The Lyapunov framework proposed by Du et al. [

9,

10] provides a unified perspective for analyzing DR-submodular maximization algorithms. By modeling algorithms as discretizations of ordinary differential equations (ODEs) in the time domain

, this approach establishes a direct connection between continuous-time dynamics and discrete-time implementations. Specifically, the approximation ratio of discrete algorithms differs from their continuous counterparts by a residual term that diminishes as the stepsize approaches zero. However, the use of constant stepsizes in this framework imposes fundamental limitations: the required number of iterations grows inversely with stepsize magnitude, leading to linear computational complexity both in theory and practical implementations.

To address the limitations of fixed stepsize strategies, recent advances have explored dynamic stepsize adaptation for submodular optimization. For box-constrained DR-submodular maximization, Chen et al. [

15] developed a

-approximation algorithm with

adaptive rounds, where stepsizes are selected through enumeration over a candidate set of size

. Furthermore, Ene et al. [

16] achieved

-approximation for non-monotone cases using

parallel rounds, and

-approximation for monotone cases with

rounds. These works inspire our development of binary search-based dynamic stepsizes that achieve comparable approximation guarantees while reducing computational complexity.

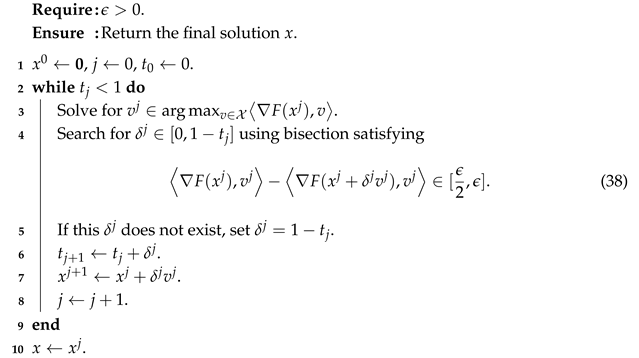

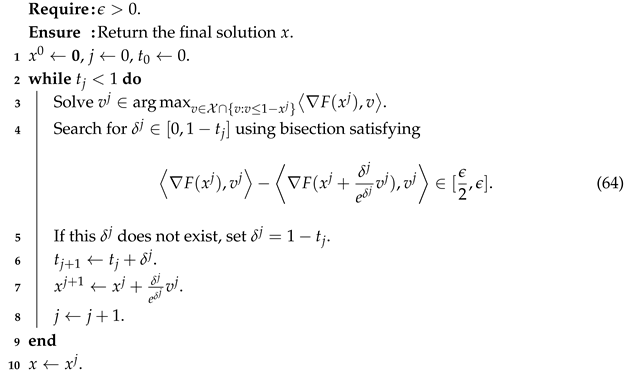

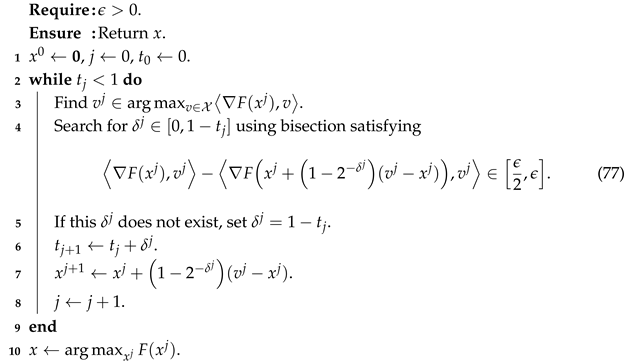

The dynamic stepsize strategy in this paper leverages binary search to approximate solutions to univariate equations by selecting intervals based on midpoint function value signs, ensuring convergence via monotonicity and continuity. While stochastic methods like simulated annealing [

17] address non-convex/stochastic problems via probabilistic criteria, their guarantees depend on cooling schedules and lack deterministic convergence. In contrast, our binary search framework exploits the monotonicity/continuity of the stepsize equation (Equation (38)), achieving sufficiently precise solutions with guaranteed efficiency, avoiding cooling parameter dependencies and focusing on theoretical foundations for DR-submodular structures.

1.1. Contributions

This paper introduces a novel dynamic stepsize strategy for DR-submodular maximization problems, offering significant improvements over traditional fixed stepsize methods. Our approach achieves state-of-the-art approximation guarantees while reducing computational complexity. Notably, the iteration complexity of our algorithms is independent of the smoothness parameter L. In the monotone case, it is also independent of the variable dimension n. Furthermore, both the gradient evaluation complexity and function evaluation complexity exhibit only a logarithmic dependence on the problem dimension n and the smoothness parameter L. Below, we summarize the key contributions:

Deterministic DR-Submodular Maximization: For deterministic settings, our dynamic stepsize strategy achieves the following complexity bounds:

- –

In the monotone case, the iteration complexity is , where reflects the gradient norm at the origin, and denotes the discretization error.

- –

For non-monotone functions, the iteration complexity increases to , accounting for the added challenge posed by non-monotonicity.

To determine the stepsize dynamically, we employ a binary search procedure, introducing an additional factor of to the evaluation complexity.

Stochastic DR-Submodular Maximization: Extending our approach to stochastic settings, we achieve comparable complexity results with high probability:

- –

For monotone objective functions, the iteration complexity remains .

- –

In the non-monotone case, the complexity is .

These results demonstrate that our method maintains efficiency regardless of the smoothness parameter L, making it particularly suitable for large-scale stochastic optimization problems.

Empirical Validation: We validate the effectiveness of our dynamic stepsize strategy through three examples: multilinear extensions of set submodular functions, DR-submodular quadratic functions, and softmax extensions for determinantal point processes (DPPs). The results confirm that our approach outperforms fixed stepsize strategies in terms of both iteration complexity and practical performance.

Table 1 provides a unified overview of our algorithms’ theoretical guarantees and computational complexities (iteration and gradient evaluation bounds) under diverse problem settings, enabling readers to rapidly grasp the efficiency and adaptability of our dynamic stepsize framework.

1.2. Organizations

The organization of the rest of this manuscript is as follows.

Section 2 introduces the fundamental concepts and key results that form the basis of our work. In

Section 3, we outline the design principles of our dynamic stepsize strategy and establish theoretical guarantees for both monotone and non-monotone deterministic objective functions.

Section 4 extends our approach to stochastic settings, presenting algorithms and analyses tailored for monotone and non-monotone DR-submodular functions under uncertainty. In

Section 5, we evaluate the computational efficiency of our strategy through its application to three canonical DR-submodular functions, with comprehensive numerical experiments validating the efficacy of the dynamic stepsize approach.

Section 6 summarizes our key findings while discussing both limitations and promising future research directions.

2. Preliminaries

We begin by introducing the formal definition of a non-negative DR-submodular function defined on the continuous domain , along with some fundamental properties.

Definition 1. A function is said to be DR-submodular if for any two vectors satisfying (coordinate-wise) and any scalar such that , the following inequality holds:for all . Here, represents the i-th standard basis vector in . This property reflects the diminishing returns behavior of F along each coordinate direction. Specifically, the marginal gain of increasing a single coordinate diminishes as the input vector grows larger.

To facilitate further discussions, we introduce additional notation. Throughout this paper, the inequality for two vectors means that holds for all . Additionally, the operation is defined as , and is defined as .

An important result is that, in the differentiable case, DR-submodular functions are equivalent to the monotonic decrease in the gradient. Specifically, when

F is differentiable,

F is DR-submodular if and only if [

2]

Another essential property for differentiable DR-submodular functions that will be used in this paper is derived from the concavity-like behavior in non-negative directions, as stated in the following proposition.

Proposition 1 ([

18]).

When F is differentiable and DR-submodular, then In this paper, we also require the function

F to be

L-smooth, meaning that for any

, there holds

where

denotes the Euclidean norm unless otherwise specified. An important property of

L-smooth functions is that they satisfy the following necessary (but not sufficient) condition:

For the stochastic DR-submodular maximization problem (

2), we introduce additional notations to describe the stochastic approximation of the objective function’s full gradient.

- At each iteration j, let denote a random subset of samples drawn from , with m representing the size of .

- The stochastic gradient at

x is computed as

- We use an unbiased estimator to approximate the true gradient .

All algorithms and theoretical analyses in this paper for problems (

1) and (

2) rely on the following foundational assumption:

Assumption 1. The problems under consideration satisfy these conditions:

- 1.

is DR-submodular and L-smooth.

- 2.

and .

- 3.

A Linear-Objective Optimization (LOO) oracle is available, providing solutions to

The following assumption is essential for the stochastic problem (

2).

Assumption 2. The stochastic gradient is unbiased—i.e., This assumption ensures that the mini-batch gradient estimator

satisfies

where

denotes the random mini-batch sampled at iteration

j. This property is critical for deriving high-probability guarantees in stochastic optimization.

Lyapunov Method for DR-Submodular Maximization

As discussed in [

10], Lyapunov functions play a crucial role in the analysis of algorithms. Depending on the specific problem, the Lyapunov function can take various parametric forms. Taking the monotone DR-submodular maximization problem for example, the ideal algorithm can be designed as follows:

A unified parameterized form of the Lyapunov function is given by:

where

and

are time-dependent parameters.

The monotonicity of the Lyapunov function is closely tied to the approximation ratio of the algorithm, as demonstrated by the following inequality:

where

represents the optimal solution. The specific values of

,

, and

T depend on the problem under consideration and are chosen accordingly to achieve the desired theoretical guarantees. In this problem, letting

can guarantee the monotonicity of

and then the approximation ratio for monotone DR-submodular functions is:

For maximizing non-monotone DR-submodular functions with down-closed constraints, the ideal algorithm can be designed as follows:

In this problem, let

,

, and

. Then the best approximation ratio for monotone DR-submodular functions is:

where

is down-closed.

For maximizing non-monotone DR-submodular functions with general convex constraints, the ideal algorithm can be designed as follows:

In this problem, let

,

, and

. Then the best approximation ratio for monotone DR-submodular functions is:

where

is only convex.

In this paper, we focus on the same algorithmic ODE forms as those discussed above. However, our key improvement lies in the discretization process. Specifically, we aim to enhance the iteration complexity by employing a dynamic stepsize strategy. This approach allows for more efficient approximations while maintaining the desired theoretical guarantees, thereby advancing the state-of-the-art in DR-submodular maximization algorithms.

5. Examples

To explore the potential acceleration offered by a dynamic stepsize strategy, we present three illustrative examples in this section.

Multilinear Relaxation for Submodular Maximization. Let

V be a finite ground set, and let

be a function. The multilinear extension of

f is defined as

where

. It is well known that the function

f is submodular (i.e.,

for any

and

) if and only if

F is DR-submodular. Therefore, maximizing a submodular function

f can be achieved by first solving the maximization of its multilinear extension and then obtaining a feasible solution to the original problem through a rounding method. Such algorithms are known to provide strong approximation guarantees [

5,

19].

Let denote the vector whose i-th entry is 1 and all other entries are 0. The upper bound of can be derived as follows.

Lemma 7. Let F denote of a submodular set function f. Suppose the feasible set satisfies and we have For the multilinear extension of a submodular set function, the Lipschitz constant

L is given by

[

6].

Softmax Relaxation for DPP MAP Problem. Determinantal point processes (DPPs) are probabilistic models that emphasize diversity by capturing repulsive interactions, making them highly valuable in machine learning for tasks requiring varied selections. Let

H denote the positive semi-definite kernel matrix associated with a DPP. The softmax extension of the DPP maximum a posteriori (MAP) problem is expressed as

where

I represents the identity matrix. Based on Corollary 2 in [

4], the gradient of the softmax extension

can be written as follows:

Consequently, the

-norm of the gradient at

is given by

In practical scenarios involving DPPs, the matrix H is often a Gram matrix, where the diagonal elements are universally bounded. This implies that the asymptotic growth of is upper-bounded by .

DR-Submodular Quadratic Functions. Consider a quadratic function

of the form

where

(i.e.,

A is a matrix with non-positive entries). In this case,

is DR-submodular. It is straightforward to verify that

and the gradient Lipschitz constant is given by

The computational complexities of the algorithms designed to address the three constrained DR-submodular function maximization problems outlined earlier are compiled in

Table 2. The results presented in the table reveal that the dynamic stepsize strategy introduced in this work offers a significant advantage in terms of complexity over the constant stepsize approach, both for the MLE and softmax relaxation problems. However, in the quadratic case, a definitive comparison of their complexities cannot be made, because they are determined by the

-norm of the linear term vector and the

-norm of the quadratic term matrix, respectively, and there is no inherent relationship between the magnitudes of these two quantities.

Numerical Experiments

We conduct numerical experiments to evaluate different stepsize selection strategies for solving DR-submodular maximization problems. Our investigation focuses on two fundamental classes of objective functions: quadratic DR-submodular functions and softmax extension functions. The experimental framework builds upon established methodologies from [

9,

14], with necessary adaptations for our specific analysis.

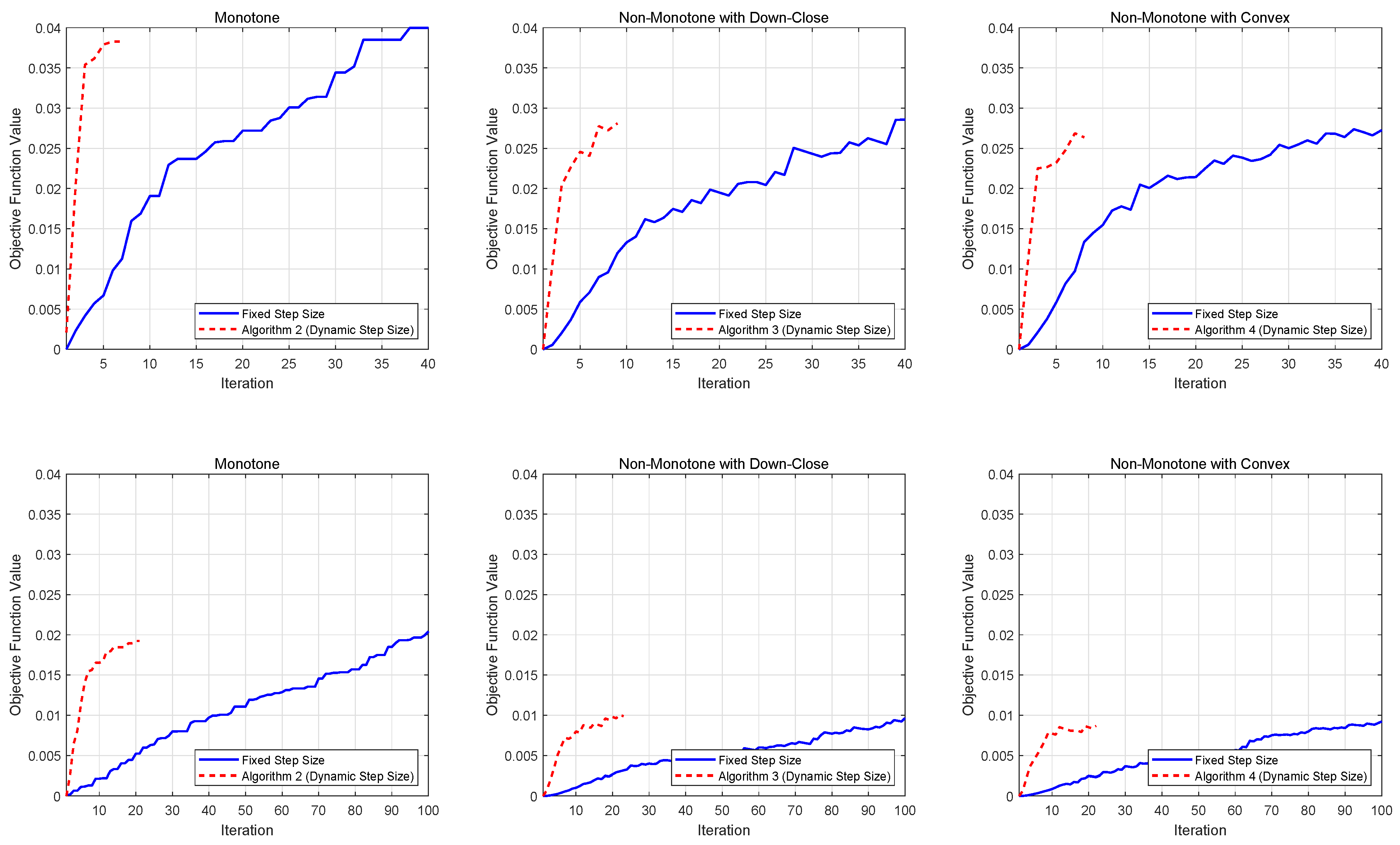

Our experimental evaluation considers two problem classes: softmax extension problems and quadratic DR-submodular problems with linear constraints. Since neither problem class inherently satisfies monotonicity, we augment both functions with an additional term, where b is a positive vector with components in appropriate ranges. This modification enables the verification of Algorithms 2 and 5 by ensuring monotonicity preservation.

We evaluate Algorithms 2–4 on the softmax extension problems, while testing the stochastic algorithms (Algorithms 5–7) on quadratic DR-submodular problems with incorporated random variables. The randomization methodology follows the principled approach outlined in [

14].

For each problem class, we consider decision space dimensions , with the number of constraints m set as for each dimension. The approximation parameter is fixed at 0.1, and the constant stepsize strategy employs 100 iterations. Each configuration is executed with five independent trials, with averaged results reported.

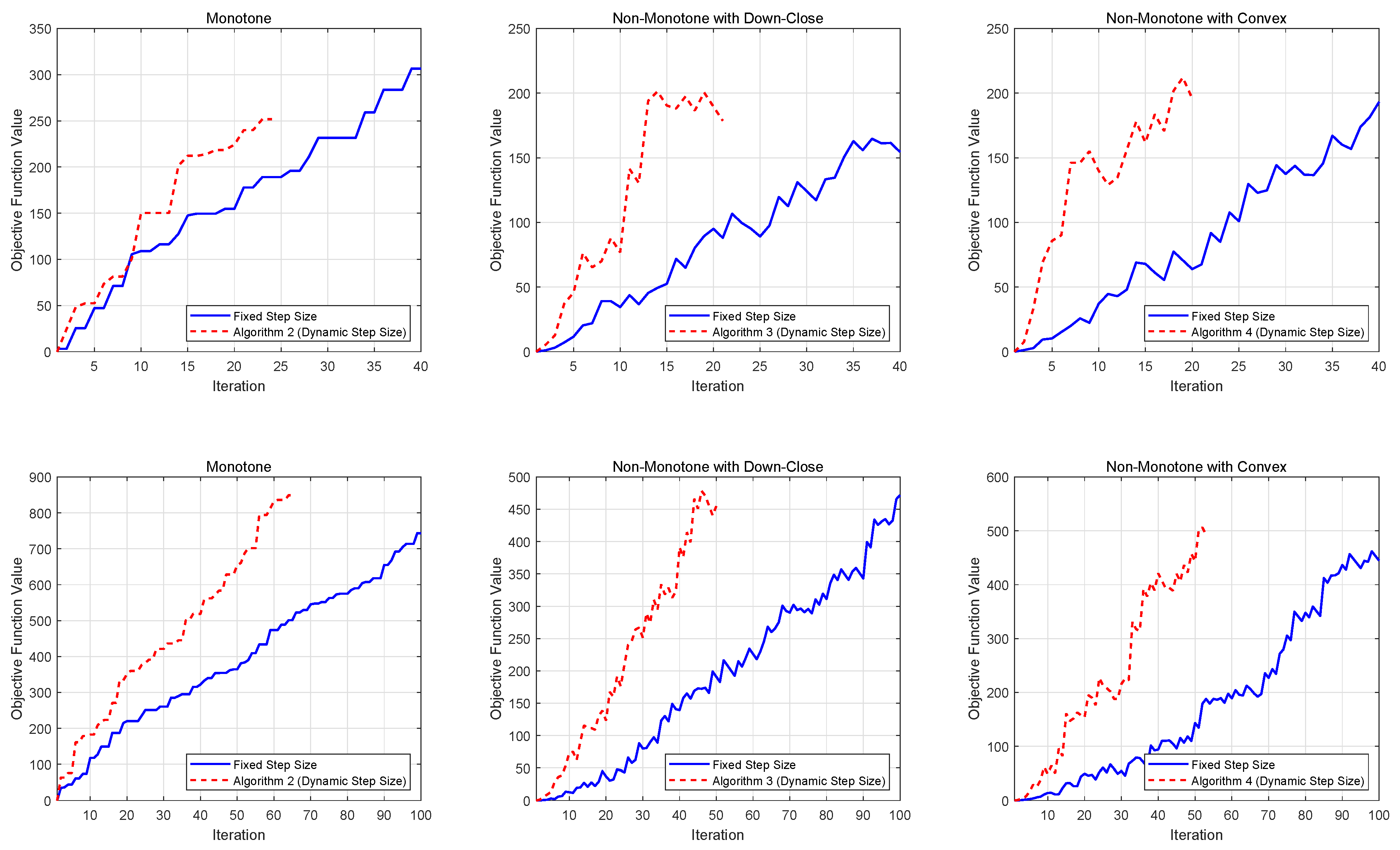

Figure 1 presents the performance comparison for softmax extension problems, while

Figure 2 displays the results for stochastic quadratic DR-submodular problems. Both figures demonstrate the evolution of achieved function values across different stepsize strategies, providing empirical insights into algorithmic efficiency.

From the numerical results, we observe that for both deterministic and stochastic problems, dynamic stepsizes generally lead to lower iteration complexity compared to constant stepsizes, especially for larger problem dimensions. This finding highlights the advantages of using dynamic stepsizes in solving DR-submodular maximization problems.

6. Conclusions

This paper introduces a dynamic stepsize strategy for DR-submodular maximization, achieving iteration complexities independent of the smoothness parameter L. In deterministic settings, monotone cases attain -approximation with iterations, while non-monotone problems under down-closed or general convex constraints achieve and -approximations with iterations. For stochastic optimization, variance reduction techniques (e.g., SPIDER) further reduce gradient evaluation complexities while maintaining high-probability guarantees. Empirical results on multilinear extensions, DPP softmax relaxations, and DR-submodular quadratics validate the practical efficiency of our methods compared to fixed stepsize baselines.

Our work has three key limitations: first, while our dynamic strategy matches the iteration complexity of fixed stepsize methods, it does not guarantee superiority for all L-smooth DR-submodular functions. Second, Algorithm 1 avoids the L-smoothness assumption but requires a univariate equation oracle to solve Equation (22), which lacks practical applications as no real-world examples have been identified to support this assumption. Third, our stepsize mechanism heavily relies on the DR-submodularity property, limiting its applicability to non-DR-submodular functions or mixed-integer domains. These limitations highlight opportunities for future research to extend our framework to broader function classes and practical scenarios.