Abstract

This paper explores articles hosted on the arXiv preprint server with the aim of uncovering valuable insights hidden in this vast collection of research. Using text mining and natural language processing methods, I examine the contents of quantitative finance papers posted on arXiv from 1997 to 2022. I extract and analyze, without relying on ad hoc software or proprietary databases, crucial information from the entire documents, including the references, to understand the topic trends over time and to identify the most cited researchers and journals in this domain. Additionally, I compare numerous algorithms for performing topic modeling, including state-of-the-art approaches.

Keywords:

quantitative finance; text mining; natural language processing; unsupervised clustering; topic modeling; research trends; named entity recognition

MSC:

94A16; 91G99; 68T50

1. Introduction

Quantitative finance is a field of finance that studies mathematical and statistical models and applies them to financial markets and investments, for pricing, risk management, and portfolio allocation. These models are needed to analyze financial data, find the price of financial instruments, and measure their risk (see [1,2]). Readers are referred to [3] for an insightful exploration of the role of models in finance and to [4] for some philosophical remarks on mathematics and finance.

The world of finance is always moving forward, even in times of crisis. Innovations in finance come from the development of new financial services, products, or technologies. Research trends in quantitative finance are driven not only by innovations but also by structural changes in financial markets or by changes in regulation (see [5,6]). When a structural change occurs, some models are no longer able to explain the phenomena observed in the market; consequently, quants and researchers start working on new models. Examples of such changes are the appearance of the implied volatility smile in 1987 (see [7]) and the emergence of the Euribor-OIS spread in 2007 (see [8]). Research activities driven by new products are, for instance, the development of pricing models for interest rate and equity derivatives that started in the 1990s, the structuring of credit products in the early 2000s, or the recent research trend on cryptocurrencies. New technologies applied to finance include the increasing role of big data and the advent of machine learning techniques. Regulation has an impact on the development of new quantitative tools for measuring, managing, and monitoring financial risks (e.g., the Basel Accords).

In this paper, I explore the arXiv preprint server, the dominant open-access preprint repository for scholarly papers in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics. The articles in arXiv are not peer reviewed, but there are advantages in submitting to this repository, mainly to disseminate a paper without waiting for the peer review and publishing process, which can be slow (see [9]). The arXiv collection provides a unique source of data for conducting various studies, including bibliometric, trend, and citation network analyses (see [10]). It is a valuable resource for advancing scientific knowledge and conducting research on research, often referred to as meta-research. For example, trend detection on computer science papers stored in arXiv is performed in [11,12]; the authors of [13] conduct a case study of computer science preprints submitted to arXiv from 2008 to 2017 to quantify how many of these preprints were eventually published in peer-reviewed venues; the authors of [14] explore the images of around 1.5 million papers held in the repository; Okamura [15] investigates the citations of more than 1.5 million preprints on arXiv to study the evolution of collective attention on scientific knowledge; the authors of [16] train a state-of-the-art classification approach; and the authors of [17] design an algorithm to help researchers perform systematic literature reviews.

In this study, I analyze all papers on quantitative finance, a small portion of the entire arXiv, which contains more than two million works at the time of writing. The choice is also motivated by my experience in this domain and scientific curiosity.

The code is run in a standard desktop environment, without using a large cluster. Scaling to a large number of papers may not be trivial. Dealing with a large amount of data requires significant computing resources, including processing power and memory, to manipulate and analyze the data efficiently. It is not simple, and may even be impossible, to explore more than two million papers with a standard desktop environment like mine.

Studies of papers on finance topics are not new in the literature. For example, the authors of [18] review the history of a well-known journal in this field and highlight its growth in terms of productivity and impact. The authors present a bibliometric analysis and identify key contributors, themes, and co-authorship patterns, and suggest future research directions. A systematic literature review and a bibliometric analysis on around 3000 articles on asset pricing sourced from the top 50 finance and economics journals, spanning a 47-year period from 1973 to 2020, is conducted in [19]. As observed by the authors, the exclusion of certain publications may potentially offer an alternative perspective on the landscape of existing asset pricing research. By using bibliometric and network analysis techniques, including the Bibliometrix Tool of [20,21], researchers investigate more than 4000 papers on option pricing that appeared from 1973 to 2019. They follow the procedure suggested by [22]. Their study aims to pinpoint high-quality research publications, discern trends in research, evaluate the contributions of prominent researchers, assess contributions from different geographic regions and institutions, and, ultimately, to examine the interconnectedness among these aspects. The works of [18,19,21] are focused on asset pricing or on a specific journal; their corpus is obtained by searching in the Scopus database using specific keywords, and the bibliometric analysis relies on VOSviewer (see [23]) and Gephi (see [24]). A bibliometric analysis of financial risk has also been performed by [25], who analyze a sample of publications obtained from the Web of Science database using CiteSpace. Finally, the authors of [26] conduct a bibliometric analysis of asset–liability management (ALM) literature, identifying key journals, authors, and articles, while highlighting research trends and gaps in the field.

My study explores all papers on quantitative finance collected in arXiv up to the end of 2022 (around 16,000) and it considers text mining techniques implemented in Python 3.11 to extract information directly from the portable document format (pdf) files containing the full text of the papers, excluding images, without relying on ad hoc software or proprietary databases. As observed by the authors of [27], examining the full text of documents significantly improves text mining compared to studies that only explore information collected from abstracts (as a crosscheck, I conducted the analysis on both abstracts and full texts; the analysis using the full text data showed better results). Their finding highlights the importance of using complete textual content for more comprehensive and accurate text mining and analysis.

The main objectives of my work are twofold. First, I explore the topics of the quantitative finance papers collected in arXiv in order to describe the evolution of topics over time. After having evaluated the performance of various clustering algorithms, I investigate on which themes researchers have focused their attention in the period 1997 to 2022. Second, I try to understand who are the most prominent authors and journals in this field. Both analyses are performed with data mining techniques and without actually reading the papers.

As will be seen in the following, text mining techniques can help to reveal specific topics, such as novel applications of sentiment analysis to stock price prediction, that are closely aligned with the behavioral finance literature.

The remainder of the paper is organized as follows. First, I provide a brief description of the data analyzed in this work (Section 2). Then, in Section 3, the preprocessing phase is discussed by offering further insights on the papers analyzed in my work. In Section 4, I compare various clustering algorithms and, after having selected the best performer, I explore, by splitting my corpus into 30 clusters, the evolution of topics over time. Finally, in Section 5, I describe an entity extraction process to investigate authors and journals with the largest number of occurrences in the corpus considered in this work. Section 6 concludes.

2. Data Description

In this section, I provide a description of the papers analyzed in this work. As observed above, there are various domains in arXiv (i.e., physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics) and each domain has its own categories. The categories within the quantitative finance domain are the following:

- Computational finance (q-fin.CP) includes Monte Carlo, PDE, lattice and other numerical methods with applications to financial modeling;

- Economics (q-fin.EC) is an alias for econ.GN and it analyses micro and macro economics, international economics, theory of the firm, labor economics, and other economic topics outside finance;

- General finance (q-fin.GN) is focused on the development of general quantitative methodologies with applications in finance;

- Mathematical finance (q-fin.MF) examines mathematical and analytical methods of finance, including stochastic, probabilistic and functional analysis, algebraic, geometric, and other methods;

- Portfolio management (q-fin.PM) deals with security selection and optimization, capital allocation, investment strategies, and performance measurement;

- Pricing of securities (q-fin.PR) discusses valuation and hedging of financial securities, their derivatives, and structured products;

- Risk management (q-fin.RM) is about risk measurement and management of financial risks in trading, banking, insurance, corporate, and other applications;

- Statistical finance (q-fin.ST) includes statistical, econometric and econophysics analyses with applications to financial markets and economic data;

- Trading and market microstructure (q-fin.TR) studies market microstructure, liquidity, exchange and auction design, automated trading, agent-based modeling, and market-making.

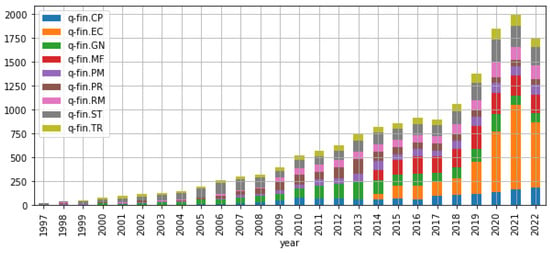

These categories are assigned by the authors when they submit their papers. Even though a paper can be assigned multiple domain-category pairs, possibly belonging to more than one domain, I select as the reference category only the first category within the quantitative finance domain. Figure 1 shows the number of papers on quantitative finance submitted to arXiv between 1997 and 2022. The increase in the last three years is mainly due to the q-fin.EC category.

Figure 1.

Categories by year. The y-axis indicates the total number of papers.

As observed in Section 1, the code is implemented in Python and it is run under Ubuntu 22.04 on a desktop with an AMD Ryzen 5 5600G processor and 32 GB of RAM. As I will describe in the following, numerous packages are used.

As far as the collection process is concerned, I retrieve data from arXiv by selecting all categories within quantitative finance (i.e., q-fin). I collect article metadata and pdf files for all articles from 1997 to 2022 for a total of around 16,000 articles (18 GB of data).

While the metadata are obtained through urllib.request and feedparser, the pdf files are downloaded by means of the arxiv package. The metadata can be collected by following the suggestions provided in the arXiv web pages. They are a fundamental input of the analysis and include the link to the paper's main web page, from which it is possible to extract the paper identification code (id, e.g., 2005.06390). The metadata contain information like author names, paper title, primary category, submission and last update dates, abstract, and publication data when available (e.g., digital object identifier, DOI). Subsequent updates of the papers can be stored in the repository and, for this reason, there is a version number at the end of the paper id.
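As a minimal sketch of how the identification code and the version number can be separated once the link to the paper's main web page is available, the following snippet uses only a regular expression. The function name `split_arxiv_link` and the example links are illustrative assumptions and are not taken from the code used in this study.

```python
import re
from typing import Optional, Tuple

# Matches the part of an arXiv abstract link that follows "/abs/": an
# identifier, optionally followed by a version suffix such as "v2".
_ARXIV_LINK = re.compile(r"arxiv\.org/abs/(?P<id>.+?)(?:v(?P<version>\d+))?$")


def split_arxiv_link(link: str) -> Tuple[str, Optional[int]]:
    """Hypothetical helper: return (paper id, version) from an abstract link."""
    match = _ARXIV_LINK.search(link)
    if match is None:
        raise ValueError(f"not an arXiv abstract link: {link!r}")
    version = match.group("version")
    return match.group("id"), int(version) if version else None


# Example with a new-style identifier carrying a version number.
paper_id, version = split_arxiv_link("http://arxiv.org/abs/2005.06390v2")
```

The same pattern also handles old-style identifiers that contain a slash (for example `cond-mat/9701001v1`), since everything between `/abs/` and the optional version suffix is treated as the id.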

Since a paper could be assigned to multiple categories, a web-scraping tool written in Python allows me to retrieve from the paper's main web page the list of all categories of each paper. I select from this list the subset of categories within the quantitative finance domain. Then, I assign as the reference category of a paper the first category appearing in this subset. Starting from this list, I am able to filter and analyze all papers in the nine categories within q-fin.
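The selection rule described above can be sketched in a few lines. The function name `reference_category` is a hypothetical choice for illustration, and the input is assumed to be the list of category codes in the order in which they appear on the paper's web page.

```python
from typing import List, Optional


def reference_category(categories: List[str], domain: str = "q-fin") -> Optional[str]:
    """Return the first category belonging to `domain`, or None if there is none."""
    prefix = domain + "."
    for category in categories:
        if category.startswith(prefix):
            return category
    return None


# A paper cross-listed in mathematics and in two q-fin categories.
first_qfin = reference_category(["math.PR", "q-fin.MF", "q-fin.PR"])
```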

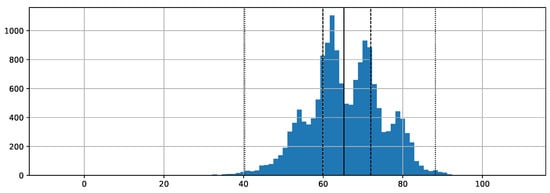

The pdftotext package allows me to extract text from pdf files. Each paper becomes a single (long) string. As discussed in Section 3, the lengths of these strings vary across papers, also because some documents are not papers (e.g., there are also theses and books). As a first assessment of the corpus, for each document, I estimate the readability of the papers through textstat. As shown in Figure 2, the Flesch reading ease score (see [28]) is on average equal to 65.7 (plain English), the lower and upper quartiles are 59.91 (fairly difficult to read, but not far from plain English) and 71.95 (fairly easy to read), respectively, and 99% of the papers are in the range from 40.28 (difficult to read) to 88.20 (easy to read). There is only one paper with a negative value, but this is caused by the text contained in the figures. All other papers are above 17.17, that is, above the extremely difficult to read level.
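For reference, the standard Flesch reading ease formula combines the average sentence length and the average number of syllables per word. The sketch below evaluates the formula from counts that are assumed to be given; how textstat counts sentences and syllables internally is not reproduced here, and the function name is an illustrative choice.

```python
def flesch_reading_ease(n_words: int, n_sentences: int, n_syllables: int) -> float:
    """Flesch reading ease from raw counts (higher values mean easier text)."""
    words_per_sentence = n_words / n_sentences
    syllables_per_word = n_syllables / n_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Illustrative counts: 100 words, 5 sentences, 150 syllables.
score = flesch_reading_ease(100, 5, 150)
```

Longer sentences and longer words both lower the score, which is consistent with formula-heavy documents occasionally producing very low or even negative values.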

Figure 2.

Flesch reading ease score. The vertical line represents the median, the dashed line is the quantile of level 0.25 (0.75), and the dotted line is the quantile of level 0.005 (0.995). The y-axis indicates the total number of papers.

3. Text Preprocessing

This section describes the text processing steps. The preprocessing phase is performed with nltk: (1) I split the text into tokens; (2) I extract the numbers representing years in the text (I assume these numbers have 4 digits; I do not explore these data in the empirical analysis); (3) I identify all strings containing alphabet letters, and I refer to them as words even when they do not belong to the English vocabulary; this step also removes some symbols that are not recognized as letters in text analysis; (4) I remove all stopwords and all words shorter than 3 characters; I also check whether there are words with more than 25 characters (quite uncommon in English); (5) I conduct a lemmatization by means of a part-of-speech tagger considering nouns, verbs, adjectives and adverbs; and (6) I check if the paper is written in English by means of the langdetect package and I discard all non-English papers. Both the extraction phase and the preliminary text analysis are parallelized by means of the multiprocessing package. I refer to the output of this first preprocessing phase as lemmatized data.
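Steps (1) to (4) can be illustrated with a simplified, standard-library-only sketch. It is not the nltk pipeline used in this study: the regular-expression tokenizer and the tiny stopword set are stand-ins chosen for illustration, and lemmatization and language detection (steps (5) and (6)) are omitted.

```python
import re
from typing import List, Tuple

# Tiny illustrative stopword set; a full stopword list would be used in practice.
STOPWORDS = {"the", "and", "for", "with", "that", "this", "are", "was"}


def basic_preprocess(
    text: str, min_len: int = 3, max_len: int = 25
) -> Tuple[List[str], List[str], List[str]]:
    """Return (kept words, 4-digit numbers, suspiciously long words)."""
    # (1) crude tokenization into runs of letters or runs of digits
    tokens = re.findall(r"[A-Za-z]+|\d+", text)
    # (2) numbers with exactly 4 digits are treated as years
    years = [t for t in tokens if t.isdigit() and len(t) == 4]
    # (3) keep alphabetic strings only, lower-cased
    words = [t.lower() for t in tokens if t.isalpha()]
    # (4) drop stopwords and words shorter than min_len characters
    kept = [w for w in words if w not in STOPWORDS and len(w) >= min_len]
    # words longer than max_len characters are only flagged for inspection
    long_words = [w for w in kept if len(w) > max_len]
    return kept, years, long_words


kept, years, long_words = basic_preprocess(
    "The Black-Scholes model of 1973 is used for option pricing in 2022."
)
```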

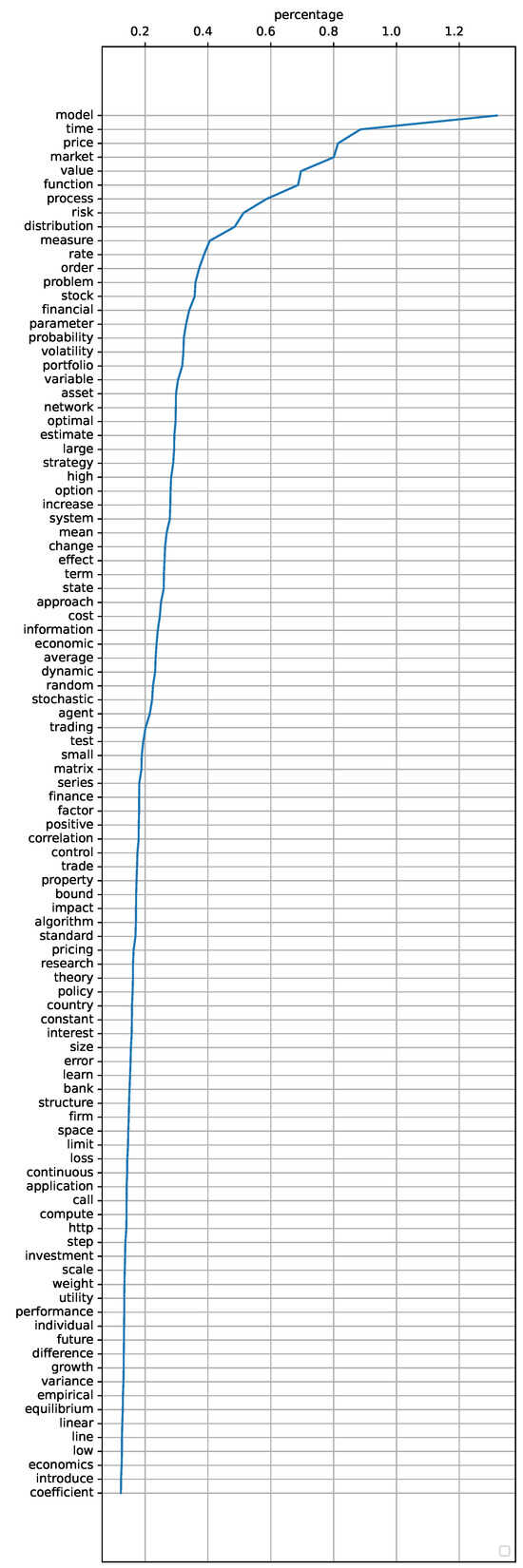

Then, I analyze the frequency of the words across the whole corpus and I remove all words appearing fewer than 25 times. I also discard some words frequently used in writing papers on quantitative finance that do not help in understanding the topic of the paper. The list of these words includes “proof” and “theorem”, verbs commonly used in mathematical sentences (e.g., “assume”, “satisfy”, and “define”), mathematical functions (e.g., “min” and “log”), and adverbs. The complete list is available upon request. In Figure 3, the list of the top 100 most frequent words obtained after this cleaning phase and their percentages of appearance are shown (see also Figure 4). The word “model” is extremely frequent (one in every 100 words). The word “http” is also quite common, indicating that the papers’ full texts contain numerous internet links (this word could be discarded, since it carries no meaning).
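The corpus-level frequency filter can be sketched as follows, assuming each document is already a list of lemmatized words. The function name `filter_corpus` and the toy documents are illustrative; the default threshold of 25 is the one stated above, while the example call lowers it so that the toy corpus is not emptied.

```python
from collections import Counter
from typing import FrozenSet, List


def filter_corpus(
    docs: List[List[str]],
    min_count: int = 25,
    extra_stopwords: FrozenSet[str] = frozenset(),
) -> List[List[str]]:
    """Drop words that are rare in the whole corpus or listed as uninformative."""
    # Total number of occurrences of each word over all documents.
    counts = Counter(word for doc in docs for word in doc)
    return [
        [w for w in doc if counts[w] >= min_count and w not in extra_stopwords]
        for doc in docs
    ]


toy_docs = [["model", "option", "proof"], ["model", "risk", "option"]]
cleaned = filter_corpus(toy_docs, min_count=2, extra_stopwords=frozenset({"proof"}))
```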

Figure 3.

Frequent words of the corpus and their percentages of appearance.

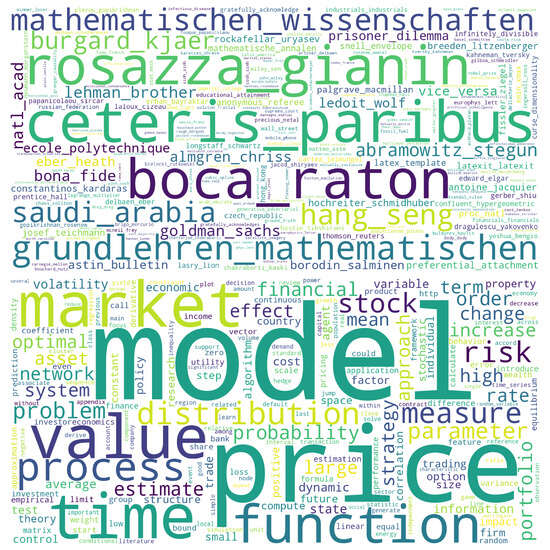

Figure 4.

Frequency-based word clouds of bigrams and of all words including bigrams and tri(four)grams. I consider n-grams with parameters min count equal to 250 and threshold equal to 10.

After a first preprocessing phase, I conduct an n-gram analysis by considering the Phrases model of the gensim package. I ignore all words and bigrams with a total collected count over the entire corpus lower than 250 and set the score threshold equal to 10. I find the bigrams and then, to find trigrams and fourgrams, I re-apply the same model to the transformed corpus including bigrams. This approach allows us to have a better ex post understanding of the corpus, which is full of n-grams (e.g., Monte Carlo simulation, Eisenberg and Noe, or bank balance sheet). The word clouds of frequent bigrams and of frequent words including bigrams and trigrams (fourgrams) are depicted in Figure 4.
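To make the roles of the minimum count and of the score threshold more concrete, the sketch below scores adjacent word pairs with the formula proposed by Mikolov et al. for phrase detection, score(a, b) = (count(a b) - min_count) * N / (count(a) * count(b)). It is a simplified, standard-library-only illustration and not the gensim implementation: in particular, taking N as the number of distinct single words is an assumption made here, and gensim may count the vocabulary differently.

```python
from collections import Counter
from typing import Dict, List, Tuple


def score_bigrams(
    sentences: List[List[str]], min_count: int = 250, threshold: float = 10.0
) -> Dict[Tuple[str, str], float]:
    """Return the adjacent word pairs whose score exceeds the threshold."""
    unigrams = Counter(w for s in sentences for w in s)
    bigrams = Counter(pair for s in sentences for pair in zip(s, s[1:]))
    vocab_size = len(unigrams)  # assumption: N = number of distinct words
    accepted = {}
    for (a, b), n_ab in bigrams.items():
        if n_ab < min_count:
            continue  # pairs that are too rare are ignored
        score = (n_ab - min_count) * vocab_size / (unigrams[a] * unigrams[b])
        if score > threshold:
            accepted[(a, b)] = score
    return accepted


# Toy corpus with much smaller parameters than those used in the paper.
toy = [["monte", "carlo", "simulation"]] * 4 + [["simulation", "study"]] * 4
phrases = score_bigrams(toy, min_count=2, threshold=0.3)
```

In the toy corpus only the pair ("monte", "carlo") is accepted, because both words occur almost exclusively together, whereas "simulation" also appears in other contexts and therefore lowers the score of its pairs. Re-applying the same procedure to a corpus in which accepted pairs have been joined into single tokens yields trigrams and fourgrams.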

It should be noted that some topic modeling algorithms analyzed in Section 4 do not need text preprocessing. In those cases, the input is just a single list containing the whole paper text. While I refer to this latter input as raw data, I define the output of the preprocessing as cleaned data.

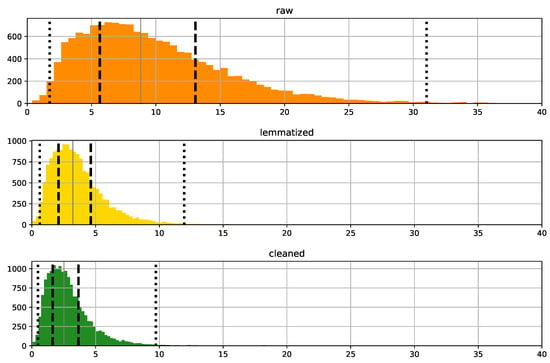

In Figure 5, I show how the length of the papers varies over the three data preprocessing phases. Starting from the raw data, containing all words and symbols, after a preliminary cleaning step I obtain the lemmatized data and then, after the last cleaning steps, the cleaned data. The number of words varies from a median value of 8824 words for the raw data to around 2518 words for the cleaned data.

Figure 5.

Paper length, in terms of number of words, for raw, lemmatized and cleaned data. The x-axis values are in thousands. The vertical line represents the median, the dashed line is the quantile of level 0.25 (0.75), and the dotted line is the quantile of level 0.01 (0.99). The y-axis indicates the total number of papers.

4. Topics Trend

I am now in a position to perform a topics trend analysis. I employ a topic modeling approach to identify the subjects discussed in the documents examined in this study, and then I observe how these topics change over time. Topic modeling refers to a class of statistical methods used to determine which subjects are prevalent in a given corpus. The topic modeling algorithms considered in my study are briefly described in Section 4.1, where some details of my implementation are also provided. Then, in Section 4.2, I select the best-performing model among some selected approaches presented in the related literature. I evaluate these approaches by assessing their ability to accurately match the nine q-fin categories that researchers assign to their work when submitting it to arXiv. Finally, in Section 4.3, after having split the papers into 30 clusters, each one representing a specific topic, I discuss the evolution of research trends over time.

4.1. Topic Modeling Algorithms

Topic modeling algorithms are widely used in natural language processing and text mining to uncover latent thematic structures in a collection of documents. Different algorithms have been developed, each with its own strengths and limitations (see [29]). The choice of a topic modeling algorithm depends on specific factors, such as the desired level of topic granularity and computational constraints. Some algorithms may require substantial computational resources and large amounts of training data. The techniques selected in this study are widely used in the literature, and robust implementations are available. I consider the following models.

- k-means. I perform a clustering analysis by considering the k-means algorithm implemented in scikit-learn. This algorithm groups data points into k clusters by minimizing the distance between data points and their cluster center. The document word matrix is created through the CountVectorizer function, which converts the corpus to a matrix of token counts. I ignore terms that have a document frequency strictly higher than 75%.

- LDA. By considering the same document word matrix analyzed with the k-means algorithm, I perform topic modeling with the latent Dirichlet allocation (LDA). LDA is a well-known unsupervised learning algorithm. As observed in the seminal work of [30], the basic idea is that documents are represented as random mixtures over latent topics, where each topic is characterized by a distribution over words. I study two different implementations of LDA (i.e., scikit-learn and gensim).

- Word2Vec. I train a word embedding model (i.e., Word2Vec) and then I perform a clustering analysis by considering again the k-means approach. An embedding is a low-dimensional space into which high-dimensional vectors are projected. Machine learning on large inputs like sparse vectors representing words is easier if embeddings are considered. Ideally, an embedding captures some of the semantics of the input by placing semantically similar inputs close together in the embedding space. The Word2Vec neural network introduces distributed word representations that capture syntactic and semantic word relationships (see [31]). In more detail, I generate document vectors using the trained Word2Vec model, that is, I obtain numerical vectors for each word in a document, and then the document vector is the weighted average of the vectors. Thus, the k-means algorithm is applied to the matrix representing the corpus. I consider the Word2Vec model implemented in gensim.

- Doc2Vec. I create a vectorized representation of each document through the Doc2Vec model and then I perform a clustering analysis by considering the k-means approach. The Doc2Vec extends Word2Vec and it can learn distributed representations of varying lengths of text, from sentences to documents (see [32]). I consider the Doc2Vec model implemented in gensim.

- Top2Vec. I study the Top2Vec model, an unsupervised learning algorithm that finds topic vectors in a space of jointly embedded document and word vectors (see [33]). This algorithm directly detects topics by performing the following steps. First, embedding vectors for documents and words are generated. Second, a dimensionality reduction on the vectors is implemented. Third, the vectors are clustered and topics are assigned. This algorithm is implemented in an ad hoc library named Top2Vec and it automatically provides information on the number of topics, topic size, and words representing the topics.

- BERTopic. I study a BERTopic model, which is similar to Top2Vec in terms of algorithmic structure and uses BERT as an embedder. As described in the seminal work of [34], from the clusters of documents, topic representations are extracted using a custom class-based variation of term frequency-inverse document frequency (TF-IDF). This is the main difference with respect to Top2Vec. The algorithm is implemented in an ad hoc library named BERTopic. The main downside of working with large documents, as in my case, is that information will be ignored if the documents are too long. The model accepts a limited number of tokens, discarding any additional input. Since I am dealing with large documents, to work around this issue, I first split each document into chunks of 300 tokens and then fit the model on these chunks. BERTopic does not allow one to directly select the number of topics; for this reason, in the first step, I obtain a number of topics much larger than the desired one. Since I obtain for each chunk the corresponding topic, I have for each document a list of possibly different topics and the length of these lists varies across documents (i.e., the length of a single list depends on the length of the corresponding document). To perform clustering on this list of lists of topics, I consider each integer representing a topic as a word. Then, I use the Word2Vec algorithm described above to find similarities between these lists of topics. Each topic label, that is, the number representing the topic, is treated as a string, and Word2Vec transforms it into a numerical vector. I then apply k-means clustering to group these lists based on their similarity in the vector space. The resulting clusters reveal relationships and patterns among these lists and allow me to select the number of clusters I need for my purposes.
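The chunking workaround described for BERTopic can be sketched as follows. The sketch assumes that a document is already available as a list of tokens and that a fitted topic model has returned one integer label per chunk; the function names are hypothetical and the model fitting itself is not shown.

```python
from typing import List


def split_into_chunks(tokens: List[str], chunk_size: int = 300) -> List[List[str]]:
    """Split a tokenized document into consecutive chunks of at most chunk_size tokens."""
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def topics_as_words(chunk_topics: List[int]) -> List[str]:
    """Turn the per-chunk topic labels of one document into a 'sentence' of strings."""
    # BERTopic labels outlier chunks with -1; the label is kept as the string "-1".
    return [str(topic) for topic in chunk_topics]


# A 700-token document yields chunks of 300, 300 and 100 tokens.
chunks = split_into_chunks([f"w{i}" for i in range(700)])
sentence = topics_as_words([12, 12, -1])
```

Each document is thus represented by a variable-length sequence of topic labels, which can be fed to a word embedding model in the same way as a sequence of ordinary words.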

In theory, both BERTopic and Top2Vec should use raw data since these algorithms rely on an embedding approach, and keeping the original structure of the text is of paramount importance (see [35]). However, raw data extracted from quantitative finance papers have a considerable number of formulas, symbols, and numbers that may affect the algorithm’s performance. For this reason, I consider both raw and cleaned data. Additionally, for these state-of-the-art algorithms, the number of extracted topics tends to be large; however, the algorithms offer the possibility to reduce the number of topics and of outliers, which can be larger than expected. The parameters of a BERTopic model have to be carefully chosen to avoid memory issues. Alternatively, it is possible to perform topic modeling online, that is, the model is trained incrementally from mini-batches of instances. This results in a less resource-demanding approach in terms of memory and CPU usage; however, it also generates less rich and less comprehensive outputs and, for these reasons, I do not consider this incremental approach here.

4.2. Algorithm Performance on Full Texts

Since it is not simple to assess the performance of different topic modeling algorithms (see [36]), I start by comparing the clusters assigned by each algorithm on the entire corpus to the nine clusters defined by the q-fin categories described in Section 2, that is, the categories that researchers assign to their work during the submission process to arXiv. By exploiting a Bayesian optimization strategy, the authors of [37] present a framework for training, analyzing, and comparing topic models where the competitor models are trained by searching for their optimal hyperparameter configuration for a given metric and dataset. Here, I consider a simpler approach in which I compare the models by looking at some standard performance measures. The assessment of topic modeling algorithms typically involves the use of such measures, and it is important to note that different algorithms can yield varying results across them.

In Table 1, I report the following similarity measures between true and predicted cluster labels: (1) the rand score (RS) is defined as the ratio between the number of agreeing pairs and the total number of pairs, and it ranges between 0 and 1, where 1 stands for a perfect match; (2) the adjusted rand score (ARS), that is, the rand score adjusted for chance, has a value close to 0 for random labeling, independently of the number of clusters and samples, and exactly 1 when the clusterings are identical (up to a permutation); it is, however, bounded below by -0.5 for especially discordant clusterings; (3) the mutual info score (MI) is independent of the absolute values of the labels (i.e., a permutation of the cluster labels does not change the value of the score); (4) the normalized mutual information (NMI) is a normalization of the MI to scale the results between 0 (no mutual information) and 1 (perfect correlation); (5) cluster accuracy (CA) is based on the Hungarian algorithm to find the optimal matching between true and predicted cluster labels; and (6) to compute the purity score (PS), each cluster is assigned to the class that is most frequent in the cluster, and the similarity measure is obtained by counting the number of correctly assigned papers and dividing by the number of observations. This latter score increases as the number of clusters increases and, for this reason, it cannot be used as a trade-off between the number of clusters and clustering quality, that is, to find the optimal number of clusters.
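Two of these measures, the rand score and the purity score, are simple enough to be written directly from their definitions. The following standard-library sketch is meant only to illustrate the definitions on a toy example; the pairwise loop is quadratic in the number of papers, so a library implementation would be preferable on the full corpus.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Sequence


def rand_score(true_labels: Sequence[int], pred_labels: Sequence[int]) -> float:
    """Fraction of pairs on which the two labelings agree (same/same or different/different)."""
    pairs = list(combinations(range(len(true_labels)), 2))
    agreeing = sum(
        (true_labels[i] == true_labels[j]) == (pred_labels[i] == pred_labels[j])
        for i, j in pairs
    )
    return agreeing / len(pairs)


def purity_score(true_labels: Sequence[int], pred_labels: Sequence[int]) -> float:
    """Assign each cluster to its most frequent class and count the correct assignments."""
    classes_in_cluster: Dict[int, Counter] = {}
    for t, p in zip(true_labels, pred_labels):
        classes_in_cluster.setdefault(p, Counter())[t] += 1
    correct = sum(max(c.values()) for c in classes_in_cluster.values())
    return correct / len(true_labels)


true = [0, 0, 1, 1]
rs = rand_score(true, [0, 0, 1, 2])          # one class split into two clusters
ps_split = purity_score(true, [0, 0, 1, 2])  # purity with three clusters
ps_merged = purity_score(true, [0, 0, 0, 0]) # purity with a single cluster
```

In this toy example the purity is 1 with three clusters and 0.5 with a single cluster, which illustrates why the purity score tends to reward a larger number of clusters.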

Table 1.

Algorithm performance.

The measures presented in Table 1 demonstrate that the Doc2Vec approach, when coupled with k-means clustering on cleaned data, outperforms the other models. This is evident from the higher MI and PS measures that it achieves compared to its competitors. Moreover, Doc2Vec exhibits practical advantages, as it is straightforward to implement and significantly reduces computing time when compared to more advanced techniques like BERTopic. Interpreting the results of the Doc2Vec approach is simple, as it allows the identification of the most representative documents by retrieving the centroid vectors of each cluster. The Word2Vec approach, when coupled with k-means clustering on cleaned data, also shows good performance.

It is worth noting that there are no significant differences in performance measures when applying either the Top2Vec or BERTopic methods to raw or cleaned data. This may be due to the fact that raw data contain mathematical formulas that do not contribute substantial additional information, even if, as shown in Figure 5, raw data have a larger number of words. This finding indicating an equivalence between raw or cleaned data may also be due to the relatively simple structure commonly found in quantitative finance papers. It is important to note that these findings may not generalize to papers or books with more intricate and complex text structures and without formulas.

LDA implemented in scikit-learn has better performance than the LDA implementation in gensim. The plain k-means does not show satisfactory results, even if the algorithm can be implemented without any great effort.

Finally, as an overall assessment, it is important to highlight that the performance metrics reported in Table 1 do not demonstrate particularly impressive results. This could partly stem from the wide-ranging nature of each q-fin category, encompassing numerous subtopics and arguments. Conversely, some papers can be classified under multiple categories, as it is not always obvious how to select a single definitive category for a given work.

4.3. Empirical Study

As shown in Section 4.2, the best performing model is Doc2Vec with k-means clustering applied on cleaned data. This model is considered to have a better understanding of the topics discussed in the quantitative finance papers analyzed in this work. To obtain the desired number of topics, I again perform a k-means clustering analysis. To extract the most representative documents, I retrieve the centroid vectors of each cluster. These centroids represent the average position of all document embeddings assigned to a particular cluster. For each cluster centroid, I find the nearest neighbors among the original Doc2Vec embeddings. These nearest neighbors are the documents that are closest to the centroid in the embedding space and can be considered as the main documents of that cluster. Then, I select for each cluster the 20 most representative documents and I find a label for the topic on the basis of the document titles. Note that the underlying meanings of the topics are subject to human interpretation. Nevertheless, this phase is also automated by asking ChatGPT (GPT-3.5) to name the topic after having provided the list of 20 titles (see [38]).
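The selection of the most representative documents can be sketched with plain Python lists. The sketch assumes that document embeddings and cluster labels are already available, uses the Euclidean distance (the distance actually used is not specified above), and restricts the search to the members of each cluster; these choices, as well as the function names, are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def centroid(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Component-wise average of a non-empty collection of vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def most_representative(
    vectors: Sequence[Sequence[float]], labels: Sequence[int], cluster: int, k: int = 20
) -> List[int]:
    """Indices of the k documents of `cluster` closest to the cluster centroid."""
    members = [i for i, label in enumerate(labels) if label == cluster]
    center = centroid([vectors[i] for i in members])
    ranked = sorted(members, key=lambda i: math.dist(vectors[i], center))
    return ranked[:k]


# Toy embeddings: three documents in cluster 0 and one in cluster 1.
embeddings = [[0.0, 0.0], [2.0, 0.0], [1.0, 0.1], [10.0, 10.0]]
cluster_labels = [0, 0, 0, 1]
top_doc = most_representative(embeddings, cluster_labels, cluster=0, k=1)
```

The titles of the documents returned for each cluster would then form the list used to name the corresponding topic.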

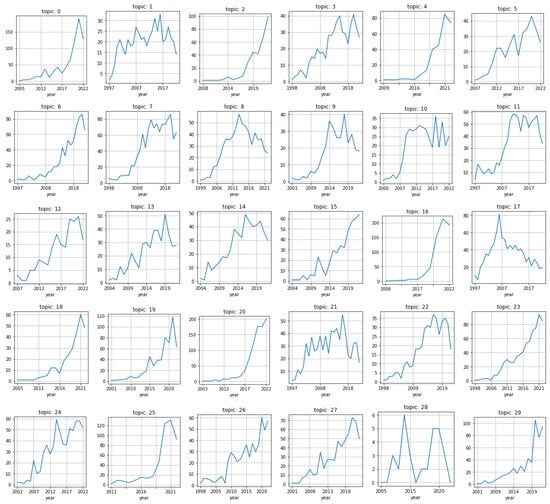

Given the size of my sample, a reduction down to 30 topics can be considered a good compromise for a topic analysis. The selected number of topics strikes a good balance between ensuring a sufficient quantity of documents for each topic and maintaining the desired level of granularity. This approach allows us to extract meaningful insights from the data while avoiding an excessive division of content that could affect the ability to identify overarching trends and patterns. As shown in Figure 6, most of the topics have an increasing trend (topic 28 seems to be the only exception). This is also reflected in the growth in the number of papers shown in Figure 1. For each topic, the list of 20 titles is the input for the question I ask the ChatGPT chatbot. The topics label (i.e., the ChatGPT reply to the question) and the title of the most representative paper are reported in Table 2. ChatGPT was employed due to its ability to generate coherent topic labels. To ensure reliability, I validated the outputs and found a high degree of consistency between the topic labels generated by ChatGPT and the most representative papers.

Figure 6.

Topics trend by year across the sample of around 16,000 papers in the q-fin categories. The y-axis indicates the total number of papers.

Table 2.

The label extracted from ChatGPT and the title of the most representative paper for each topic.

It is interesting to observe that topics related to decentralized finance and blockchain technology (2) and stock price prediction with deep learning and news sentiment analysis (20) show a remarkable increase. It is important to note that the latter topic is connected to behavioral finance. Health, policy, and social impact studies, represented by topic 16, and diverse perspectives in education, innovation, and economic development (0) also show an increase. Both topics are oriented towards economics. These topic trends are also affected by the introduction in 2014 of the q-fin.EC category within the arXiv quantitative finance papers. Classical quantitative finance subjects like portfolio optimization techniques and strategies (6), stochastic volatility modeling and option pricing (7), game theory and strategic decision-making (19), and high-order numerical methods for option pricing in finance (23), as well as new themes that have appeared in the literature in recent years, like deep reinforcement learning in stock trading and portfolio management (4) and environmental and economic impacts of mobility technologies (25), attracted the interest of researchers in the analyzed period. The representativeness of topic 28 is limited, mainly because the number of papers in this cluster is low, and one could merge it with another cluster (i.e., topic 8).

It is clear that some topics are more related to economics than to finance. This also depends on the presence of the q-fin.EC category. For articles in this category, there is not always a flawless alignment with quantitative finance.

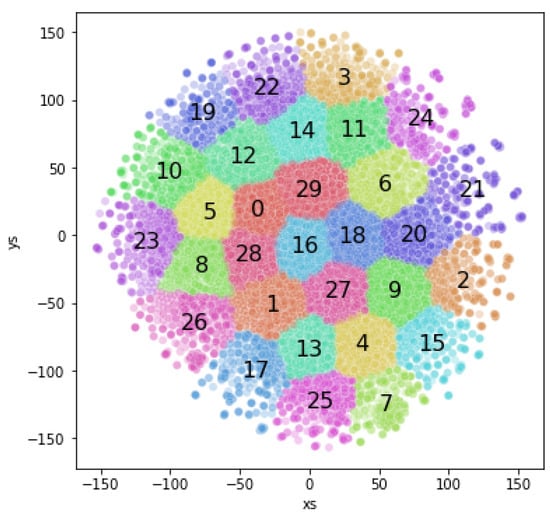

To visualize the clusters, in Figure 7 the document vectors are projected onto a 2-dimensional space through the standard t-distributed stochastic neighbor embedding (t-SNE) algorithm. The larger the distance between topics, the more distinct the papers in those topics are in the original high-dimensional space. The projection also gives a better view of how close topics are to each other (e.g., 8 and 28). It appears that the most specialized or narrowly focused topics tend to occupy peripheral positions, while themes that are more aligned with economics are positioned closer to the center.

Figure 7.

Topic clusters projected onto 2-dimensional space through the standard t-distributed stochastic neighbor embedding (t-SNE) algorithm. The numbers represent the topics reported in Table 2.

5. Extracting Authors and Journals

In this section, I extract the author surnames by means of the spacy package. In more detail, starting from the raw data, I perform named-entity recognition. Since this approach extracts both first names and surnames, I remove all first names by checking whether they are included in a list of about 67,000 first names. It should be noted that the number of occurrences of an author’s surname in a paper strongly depends on the citation style. Surnames are always reported in the references, but they do not necessarily appear in the main text of a paper. Additionally, even if the author–date style is widely used (i.e., the citation in the text consists of the authors’ names and the year of publication), the surname of the first author appears more frequently (e.g., Bianchi is more probable than Tassinari, even if Bianchi and Tassinari are coauthors of the same papers, together with other coauthors).

The algorithm is able to find the names and surnames occurring in the text. These are included in the PERSON entity types. The first 100 authors by number of occurrences in the corpus are selected. In order to have additional information on these authors, I obtain topics, number of citations, h-index, and i10-index from Google Scholar (see Table 3). It should be noted that some authors are not registered in Google Scholar even though they made a significant contribution to the field (e.g., Markowitz), and that some authors share the same surname and belong to the same research field (see also Figure 3 in [21]). This is the case for some researchers I find in my corpus (e.g., Zhou, Bayraktar, and Chakrabarti). For such authors, it is not simple to find a perfect match in Google Scholar even if their number of occurrences is generally high. For the reasons described above, I exclude from Table 3 the following researcher names: Zhou, Markowitz, Peng, Jacod, Merton, Guo, Lo, Follmer, Yor, Almgren, Embrechts, Bayraktar, Artzner, Weber, Jarrow, Feng, Samuelson, Tang, Chakrabarti, Glasserman, Tsallis, Leung, Sato, Zariphopoulou, Kramkov, Karoui, Cizeau, Cao, and Christensen.
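The first-name filtering and the ranking by occurrences described above can be sketched as follows. This is an illustration under stated assumptions, not the code used in this study: the input is assumed to be a list of strings already tagged as PERSON by a named-entity recognizer, the first-name set is a two-element stand-in for the list of about 67,000 names, and the removal of single-letter initials is an added assumption.

```python
from collections import Counter


def count_surnames(person_entities, first_names):
    """Count surname occurrences after dropping tokens found in a first-name list.

    person_entities: strings tagged as PERSON by an NER model.
    first_names: set of lower-cased first names (hypothetical stand-in for
    the full first-name list).
    """
    counts = Counter()
    for entity in person_entities:
        for token in entity.replace(".", " ").split():
            # Skip known first names and single-letter initials (assumption).
            if token.lower() in first_names or len(token) == 1:
                continue
            counts[token] += 1
    return counts


# Toy PERSON entities, as an NER step might return them.
toy_entities = ["Harry Markowitz", "Markowitz", "Robert C. Merton", "Merton", "Markowitz"]
toy_first_names = {"harry", "robert"}
surname_counts = count_surnames(toy_entities, toy_first_names)
top_authors = surname_counts.most_common(100)  # first 100 surnames by occurrences
```

A surname that coincides with a common first name would be dropped by this filter, which is one reason manual checks remain useful.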

Table 3.

Number of author occurrences and Google Scholar metrics as of May 2023. The symbol [] indicates that the information is not available.

The algorithm is also able to find the most cited journals, included in the ORG entity types: Journal of Finance (4490 occurrences)*, Mathematical Finance (3785), Journal of Financial Economics (3325)*, Physica A (3137)*, Quantitative Finance (3044), Econometrica (2473)*, Journal of Econometrics (1971), American Economic Review (1878), Insurance Mathematics and Economics (1667), Review of Financial Studies (2636)*, Journal of Banking and Finance (1542)*, Physical Review (1538), Journal of Economic Dynamics (1289), Energy (1267), Operations Research (1242), The Quarterly Journal of Economics (1238)*, Journal of the American Statistical Association (1204), Management Science (1160), European Journal of Operational Research (1066), Quantum (1043), IEEE Transactions (996), Journal of Political Economy (990), Journal of Economic Theory (977), Energy Economics (946), International Journal of Theoretical and Applied Finance (946), SIAM Journal on Financial Mathematics (888), Science (865), Expert Systems with Applications (845), Applied Mathematical Finance (743), Finance and Stochastics (736), Mathematics of Operations Research (652), PLoS (602), The Annals of Applied Probability (593), Stochastic Processes and their Applications (525), Energy Policy (520), International Journal of Forecasting (520), The European Physical Journal (514), Journal of Empirical Finance (510), and Journal of Risk (509). I consider only journals with more than 500 occurrences and I exclude publishing houses. Journals marked with an asterisk appear in the corpus under more than one name, and their occurrences are counted together. It should be noted that some well-known journals are slightly below 500 occurrences. Furthermore, it is worth noting that papers with a strong mathematical focus tend to receive significantly fewer citations compared to papers that lean more towards economics or finance.
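Counting journals that appear under more than one name requires mapping each variant to a single canonical title before applying the occurrence threshold. The sketch below illustrates this step; the alias table, the function name, and the toy entities are hypothetical and are not taken from the study.

```python
from collections import Counter

# Hypothetical alias table: variant spellings mapped to one canonical name.
JOURNAL_ALIASES = {
    "j. finance": "Journal of Finance",
    "journal of finance": "Journal of Finance",
    "the journal of finance": "Journal of Finance",
    "mathematical finance": "Mathematical Finance",
    "math. finance": "Mathematical Finance",
}


def count_journals(org_entities, aliases, min_occurrences):
    """Merge aliases, count occurrences, and keep journals above the threshold."""
    counts = Counter()
    for entity in org_entities:
        canonical = aliases.get(entity.strip().lower())
        if canonical is not None:  # ignore ORG entities that are not known journals
            counts[canonical] += 1
    return [(name, n) for name, n in counts.most_common() if n > min_occurrences]


# Toy ORG entities; "Elsevier" is a publishing house and is ignored.
toy_orgs = ["J. Finance", "Journal of Finance", "The Journal of Finance",
            "Math. Finance", "Elsevier"]
ranked_journals = count_journals(toy_orgs, JOURNAL_ALIASES, min_occurrences=1)
```

On the full corpus the threshold would be set to 500, in line with the selection rule stated above.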

It is important to acknowledge that while the arXiv repository serves as a valuable resource for scholarly papers, it may not encompass the entirety of the quantitative finance research landscape. While the repository strives to be comprehensive, there may be variations in the representation of scholars from different countries. Some scholars may have a relatively higher presence due to their active participation in submitting their research to arXiv. The platform’s content relies on authors voluntarily submitting their work, which introduces some bias. As a result, some authors and their contributions may not be represented. Therefore, my analysis and conclusions should be interpreted within the context of the available arXiv data, recognizing that there may be additional research and authors in the field of quantitative finance who have chosen alternative avenues for publishing their work. The same observation is true for the findings described in Section 4.3.

It is possible that influential scholars may not be as consistently represented or that, for various reasons, they have not regularly submitted their work to arXiv (see also [39]). In the study [21], focused on option pricing, some well-known authors are cited but they do not appear among the first 100 authors in my analysis. This discrepancy could be influenced by factors such as publication preferences, possible copyright issues, or institutional practices that may vary across different academic communities.

As a final remark, in my view, researchers in quantitative finance should consider submitting their work to arXiv due to the potential benefits it offers (see also [40]). The delay between arXiv posting and journal publication, which can sometimes be more than a year, underscores the importance of submitting preprints to the repository. By doing so, researchers can help the community to understand research trends in their field more promptly, while also accelerating the dissemination of their own findings. This approach aligns with the findings of [41], who show that rapid and open dissemination through preprints helps scholarly and scientific communication, enhancing the reception of findings in the field. In the meantime, as a possible alternative, to gain a comprehensive understanding of the entire landscape, future studies may consider incorporating other reputable academic databases and journals to ensure a more holistic exploration of quantitative finance research and its authors. Network approaches would also help to identify cliques and highly connected groups of authors. Insights from network clusters could further improve the understanding of the textual data investigated in this work.

6. Conclusions

In this study, I explore the field of quantitative finance through an analysis of papers in the arXiv repository. My objectives are twofold: first, I investigate the evolution of topics over time, and second, I identify prominent authors and journals in this domain. By employing data mining techniques, I achieve these goals without reading the papers individually.

The preprocessing phase, when needed, ensures the suitability of the data for subsequent analyses. Topic modeling helps in gaining insights and understanding the main themes and trends within my large dataset. By applying topic modeling algorithms, I identify the best performer and examine the temporal evolution of quantitative finance topics. This analysis reveals the changing research trends and highlights the emergence and decline of various topics over time.

Furthermore, I conduct an entity extraction process to identify influential authors and journals in the field. Through quantifying author and journal occurrences, I shed light on the researchers who have made notable contributions to quantitative finance.

My study demonstrates the power of data mining techniques in uncovering insights from a large-scale preprint repository, and it highlights the continued growth and dynamism of quantitative finance as a discipline. The techniques explored in this work can assist researchers in exploring and identifying novel research topics, discovering connections between different research areas, and staying up-to-date with the latest developments in the field. Furthermore, my methodology may serve as a roadmap for future studies on broader datasets or in other scientific domains utilizing text mining techniques. Although scaling to a larger number of papers may pose challenges, my approach provides valuable insights.

Finally, I believe that quantitative finance researchers should consider sharing their work on arXiv to potentially accelerate the dissemination and impact of their findings and to enhance the community’s understanding of research trends.

Funding

This research received no external funding.

Data Availability Statement

The data used in this study were collected from https://arxiv.org/ (accessed on 21 February 2025).

Acknowledgments

The author thanks arXiv, ChatGPT, and Google Scholar for use of their open-access interoperability, and Sabina Marchetti and two anonymous referees for their comments and suggestions. This publication should not be reported as representing the views of the Bank of Italy. The views expressed are those of the author and do not necessarily reflect those of the Bank of Italy.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Bianchi, M.L.; Tassinari, G.L.; Fabozzi, F.J. Fat and heavy tails in asset management. J. Portf. Manag. 2023, 49, 236–263.

- Vogl, M. Quantitative modelling frontiers: A literature review on the evolution in financial and risk modelling after the financial crisis (2008–2019). SN Bus. Econ. 2022, 2, 183.

- Derman, E. Models Behaving Badly: Why Confusing Illusion with Reality Can Lead to Disaster, on Wall Street and in Life; Wiley: Hoboken, NJ, USA, 2011.

- Ippoliti, E. Mathematics and finance: Some philosophical remarks. Topoi 2021, 40, 771–781.

- Carmona, R. The influence of economic research on financial mathematics: Evidence from the last 25 years. Financ. Stoch. 2022, 26, 85–101.

- Cesa, M. A brief history of quantitative finance. Probab. Uncertain. Quant. Risk 2017, 2, 1–16.

- Derman, E.; Miller, M.B. The Volatility Smile; Wiley: Hoboken, NJ, USA, 2016.

- Bianchetti, M.; Carlicchi, M. Interest Rates After the Credit Crunch: Multiple-Curve Vanilla Derivatives and SABR. Available online: https://arxiv.org/abs/1103.2567 (accessed on 21 February 2025).

- Huisman, J.; Smits, J. Duration and quality of the peer review process: The author’s perspective. Scientometrics 2017, 113, 633–650.

- Clement, C.B.; Bierbaum, M.; O’Keeffe, K.P.; Alemi, A.A. On the use of arXiv as a dataset. Available online: https://arxiv.org/abs/1905.00075 (accessed on 21 February 2025).

- Eger, S.; Li, C.; Netzer, F.; Gurevych, I. Predicting Research Trends from arXiv. Available online: https://arxiv.org/abs/1903.02831 (accessed on 21 February 2025).

- Viet, N.T.; Kravets, A.G. Analyzing recent research trends of computer science from academic open-access digital library. In Proceedings of the 8th International Conference on System Modeling and Advancement in Research Trends (SMART), Moradabad, India, 22–23 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 31–36.

- Lin, J.; Yu, Y.; Zhou, Y.; Zhou, Z.; Shi, X. How many preprints have actually been printed and why: A case study of computer science preprints on arXiv. Scientometrics 2020, 124, 555–574.

- Tan, K.; Munster, A.; Mackenzie, A. Images of the arXiv: Reconfiguring large scientific image datasets. J. Cult. Anal. 2021, 3, 1–41.

- Okamura, K. Scientometric engineering: Exploring citation dynamics via arXiv eprints. Quant. Sci. Stud. 2022, 3, 122–146.

- Bohara, K.; Shakya, A.; Debb Pande, B. Fine-tuning of RoBERTa for document classification of arXiv dataset. In Mobile Computing and Sustainable Informatics; Shakya, G., Papakostas, S., Kamel, K.A., Eds.; Springer Nature: Singapore, 2023; pp. 243–255.

- Fatima, R.; Yasin, A.; Liu, L.; Wang, J.; Afzal, W. Retrieving arXiv, SocArXiv, and SSRN metadata for initial review screening. Inf. Softw. Technol. 2023, 161, 107251.

- Burton, B.; Kumar, S.; Pandey, N. Twenty-five years of The European Journal of Finance (EJF): A retrospective analysis. Eur. J. Financ. 2020, 26, 1817–1841.

- Ali, A.; Bashir, H.A. Bibliometric study on asset pricing. Qual. Res. Financ. Mark. 2022, 14, 433–460.

- Aria, M.; Cuccurullo, C. bibliometrix: An R-tool for comprehensive science mapping analysis. J. Informetr. 2017, 11, 959–975.

- Sharma, P.; Sharma, D.K.; Gupta, P. Review of research on option pricing: A bibliometric analysis. Qual. Res. Financ. Mark. 2024, 16, 159–182.

- Donthu, N.; Kumar, S.; Mukherjee, D.; Pandey, N.; Lim, W.M. How to conduct a bibliometric analysis: An overview and guidelines. J. Bus. Res. 2021, 133, 285–296.

- Van Eck, N.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

- Bastian, M.; Heymann, S.; Jacomy, M. Gephi: An open source software for exploring and manipulating networks. In Proceedings of the International AAAI Conference on Web and Social Media, Palo Alto, CA, USA, 17–20 May 2009; pp. 361–362.

- Liu, J.; Liu, Y.; Ren, L.; Li, X.; Wang, S. Trends and Trajectories: A Bibliometric Analysis of Financial Risk in Corporate Finance and Finance (2020–2024). Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4960436 (accessed on 21 February 2025).

- Joaqui-Barandica, O.; Manotas-Duque, D.F. Assets liability management: A bibliometric analysis and topic modeling. Entramado 2022, 18, 1–23.

- Westergaard, D.; Stærfeldt, H.H.; Tønsberg, C.; Jensen, L.J.; Brunak, S. A comprehensive and quantitative comparison of text-mining in 15 million full-text articles versus their corresponding abstracts. PLoS Comput. Biol. 2018, 14, e1005962.

- DuBay, W.H. The principles of readability. ERIC 2004. Available online: https://eric.ed.gov/?id=ed490073 (accessed on 21 February 2025).

- Sethia, K.; Saxena, M.; Goyal, M.; Yadav, R.K. Framework for topic modeling using BERT, LDA and K-means. In Proceedings of the 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 28–29 April 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2204–2208.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- Le, Q.; Mikolov, T. Distributed representations of sentences and documents. In International Conference on Machine Learning; PMLR: Brookline, MA, USA, 2014; pp. 1188–1196.

- Angelov, D. Top2vec: Distributed Representations of Topics. arXiv 2020, arXiv:2008.09470.

- Grootendorst, M. BERTopic: Neural Topic Modeling with a Class-Based TF-IDF Procedure. arXiv 2022, arXiv:2203.05794.

- Egger, R.; Yu, J. A topic modeling comparison between LDA, NMF, Top2Vec, and BERTopic to demystify Twitter posts. Front. Sociol. 2022, 7, 886498.

- Rüdiger, M.; Antons, D.; Joshi, A.M.; Salge, T.O. Topic modeling revisited: New evidence on algorithm performance and quality metrics. PLoS ONE 2022, 17, e0266325.

- Terragni, S.; Fersini, E.; Galuzzi, B.G.; Tropeano, P.; Candelieri, A. OCTIS: Comparing and optimizing topic models is simple. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations, Kyiv, Ukraine, 19–23 April 2021; pp. 263–270.

- Ebinezer, S. Transform Your Topic Modeling with ChatGPT: Cutting-Edge NLP. Available online: https://medium.com/ (accessed on 21 February 2025).

- Metelko, Z.; Maver, J. Exploring arXiv usage habits among Slovenian scientists. J. Doc. 2023, 79, 72–94.

- Mishkin, D.; Tabb, A.; Matas, J. ArXiving Before Submission Helps Everyone. arXiv 2020, arXiv:2010.05365.

- Wang, Z.; Chen, Y.; Glänzel, W. Preprints as accelerators of scholarly communication: An empirical analysis in mathematics. J. Informetr. 2020, 14, 101097.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).