This section presents a systematic evaluation of the Dynamic Deformable YOLO network’s performance in comparison with state-of-the-art object detection architectures.

4.3.1. Analysis of Detection Performance Metrics

We conducted comparative experiments on the LVPD test set to evaluate detection accuracy, computational efficiency, and model complexity.

Table 6 presents the quantitative results.

RF-DETR-B achieved the highest accuracy, with an mAP@0.5 of 90.5%, but at a relatively low detection speed of 28.2 FPS. In contrast, YOLOv9s was the fastest model at 55.3 FPS, though with a lower accuracy of 84.5%. The proposed DCNYOLO architecture balances detection accuracy (87.4%), processing speed (53.6 FPS), and model complexity (8.5M parameters), which makes it well suited to applications that require both real-time processing and computational efficiency. While D-FINE-N also reached high accuracy (90.3%), its speed of 36.6 FPS is insufficient for large-volume parenteral detection requirements. Each model therefore has distinct advantages, but DCNYOLO offers the best overall balance of accuracy, speed, and model size for this task.
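The accuracy and speed trade-off described above can be checked with a small Pareto-front sketch. It uses only the mAP@0.5 and FPS values quoted in this paragraph. The helper names and the 50 FPS speed floor are illustrative assumptions, not values taken from the experimental protocol.

```python
# (mAP@0.5 in %, FPS) as quoted in the text for the LVPD test set.
results = {
    "RF-DETR-B": (90.5, 28.2),
    "D-FINE-N": (90.3, 36.6),
    "DCNYOLO": (87.4, 53.6),
    "YOLOv9s": (84.5, 55.3),
}


def dominates(a, b):
    """True if a is at least as good as b on every axis and strictly better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points):
    """Names of the models that no other model dominates."""
    return sorted(
        name
        for name, p in points.items()
        if not any(dominates(q, p) for other, q in points.items() if other != name)
    )


def best_under_speed_floor(points, min_fps):
    """Most accurate model among those meeting a minimum FPS (hypothetical requirement)."""
    eligible = {name: p for name, p in points.items() if p[1] >= min_fps}
    if not eligible:
        return None
    return max(eligible, key=lambda name: eligible[name][0])


front = pareto_front(results)
# The 50 FPS floor is an assumed real-time requirement, used for illustration only.
choice = best_under_speed_floor(results, min_fps=50.0)
print(front, choice)
```

On these four models none dominates another, so the choice depends on the deployment constraint. Under the assumed speed floor, the most accurate eligible model is DCNYOLO.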

To thoroughly assess the generalization capability of the proposed method, we conducted comparative experiments on the VisDrone2019 test set and compared its performance with mainstream models such as YOLOv9s, YOLOv7m, TPHYOLO, RF-DETR-B, and D-FINE-N. The results of the experiments are shown in Table 7.

The experimental results show that our proposed method achieves an mAP of 36.4% at 53.1 FPS. Its speed significantly exceeds that of other high-accuracy models such as RF-DETR-B and D-FINE-N, giving a good balance between accuracy and speed. Moreover, our method has only 8.5M parameters, substantially fewer than YOLOv7m and RF-DETR-B, which indicates its advantage as a lightweight model. Although its mAP is slightly lower than that of RF-DETR-B (37.2%), our method is more competitive in real-time performance and model efficiency, making it suitable for practical application scenarios. Overall, the proposed method generalizes well while keeping inference speed high and model complexity low, providing an efficient and practical solution for object detection tasks.

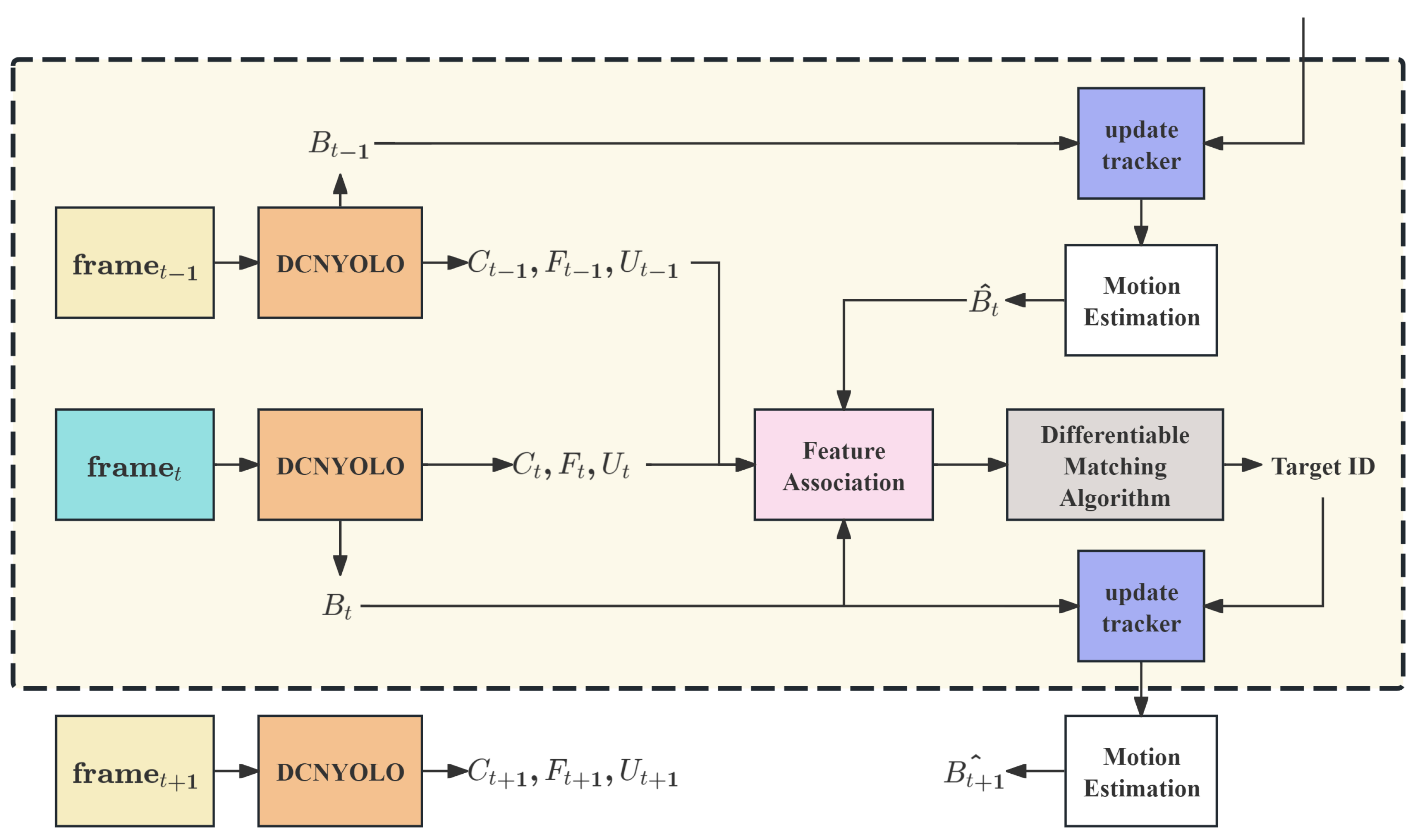

4.3.2. Evaluation of Differentiable Object Association Tracking

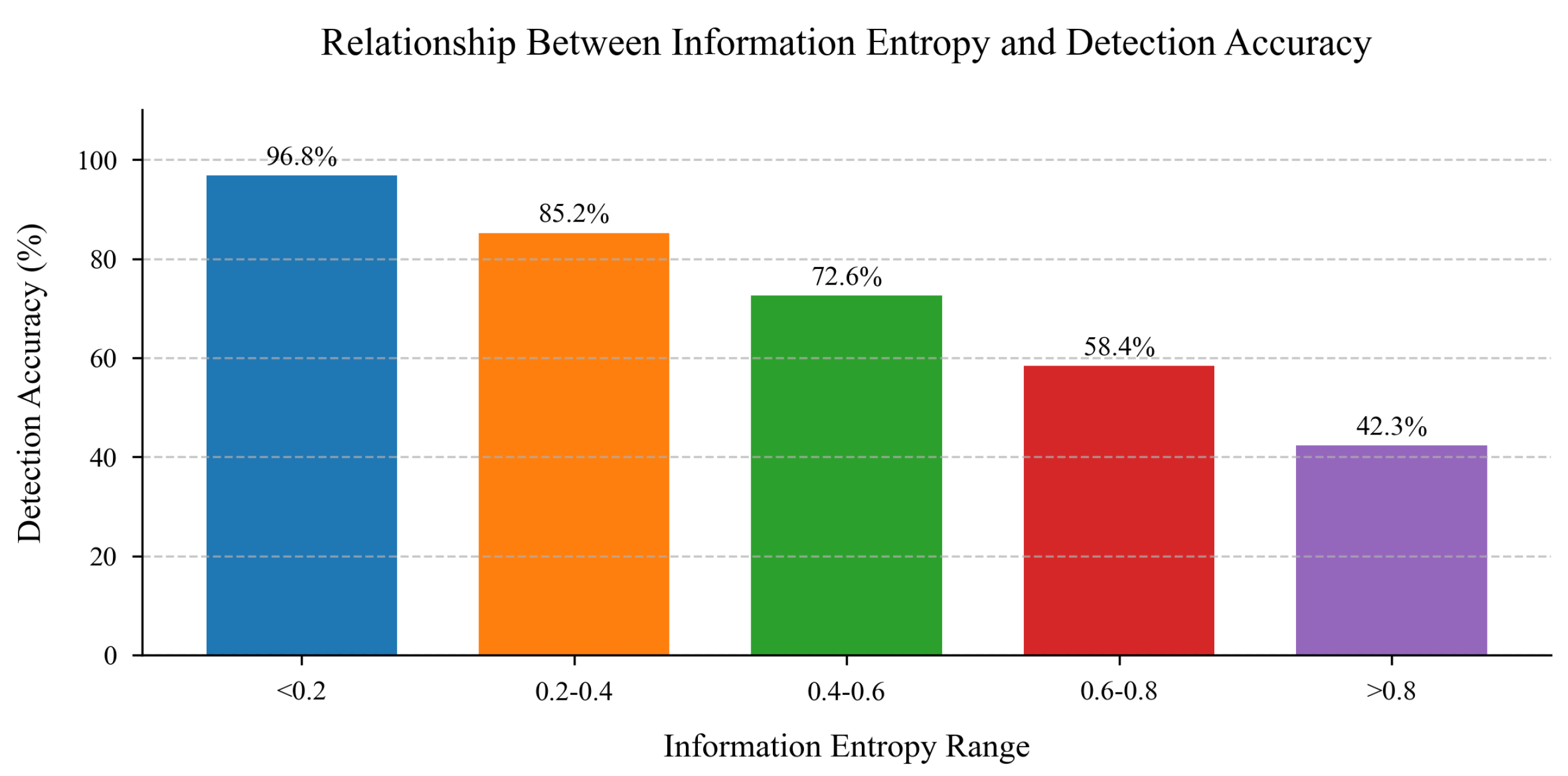

We conducted a systematic analysis of the relationship between information entropy and detection accuracy to validate our uncertainty estimation approach.

Figure 7 illustrates the detection performance across varying entropy thresholds. The empirical results reveal a strong inverse relationship between information entropy and detection accuracy: instances with entropy values below 0.2 achieved 96.8% accuracy, while those exceeding 0.8 exhibited significantly lower accuracy (42.3%). These findings validate information entropy as an effective metric for quantifying detection reliability, providing crucial guidance for subsequent tracking association and re-identification processes.
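As a minimal sketch of how an entropy score of this kind can be computed, the snippet below evaluates the Shannon entropy of a detection's class-probability vector and sorts detections into reliability buckets. It assumes the entropy is normalized by log K so that it lies in [0, 1], which is consistent with the 0.2 and 0.8 thresholds quoted above but is not stated explicitly here. The function names and bucket labels are hypothetical.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of a class distribution, divided by log(K) to lie in [0, 1].

    The log(K) normalization is an assumption made for this sketch.
    """
    k = len(probs)
    if k < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0.0)
    return h / math.log(k)


def reliability_bucket(probs, low=0.2, high=0.8):
    """Bucket a detection using the two entropy thresholds discussed in the text."""
    e = normalized_entropy(probs)
    if e < low:
        return "reliable"
    if e > high:
        return "unreliable"
    return "intermediate"


confident = [0.99, 0.01]   # sharply peaked prediction, low entropy
ambiguous = [0.60, 0.40]   # nearly uniform prediction, high entropy
print(reliability_bucket(confident), reliability_bucket(ambiguous))
```

A score of this form could then be passed to the association stage as a per-detection weight, so that ambiguous detections contribute less to matching.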

We then evaluated our tracking framework against state-of-the-art methods on the LVPD test set, as presented in Table 8.

We compared our proposed method with SORT, DeepSORT, ByteTrack, TransTrack, CMTrack, BoostTrack++, and AdapTrack on the LVPD test set. Our method outperformed the others in terms of MOTA (79.2%) and FPS (50.3), showing clear advantages in tracking accuracy and real-time performance. It also excelled in the IDF1 (77.8%) and ID Sw. (983) metrics, further validating its effectiveness in maintaining target identity and reducing identity switches. In contrast, while SORT and DeepSORT performed well in FPS, they lagged significantly in the MOTA and IDF1 metrics. ByteTrack and TransTrack improved in accuracy but suffered from poor real-time performance. Overall, our method surpassed existing mainstream tracking algorithms in comprehensive performance. This demonstrates the effectiveness of the Gumbel–Sinkhorn soft matching algorithm in establishing target correspondences and the contribution of the uncertainty weighting strategy in enhancing the quality of matches.

We also conducted experiments on the MOT20 dataset, comparing our object tracking method with other state-of-the-art approaches. The results of these experiments are presented in Table 9.

The results show that no single method leads on every metric. On HOTA, BoostTrack++ performed best with a score of 66.4, followed by AdapTrack at 64.7, our proposed method at 61.5, and ByteTrack at 61.3. On MOTA, ByteTrack and BoostTrack++ were nearly tied, with scores of 77.8 and 77.7, respectively, while our method achieved 76.1. On IDF1, BoostTrack++ led with a score of 82.0, StrongSORT scored 77.0, and our method achieved 76.5. Fewer identity switches (IDs) indicate better identity preservation; here StrongSORT and BoostTrack++ excelled, with only 770 and 762 switches, respectively, compared with SORT's much higher count of 4470. In terms of frame rate, SORT was the fastest at 57.3 FPS, while our method reached 23.6 FPS, retaining relatively high accuracy at a practical speed. In contrast, StrongSORT, DeepSORT, and BoostTrack++ had much lower frame rates of 1.4, 3.2, and 2.1 FPS, respectively. Overall, our proposed method achieved a good balance between accuracy and real-time performance.
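For readers less familiar with these tracking metrics, the sketch below gives the standard definitions of MOTA and IDF1 in terms of raw counts. The counts in the usage lines are invented purely for illustration and do not correspond to any row of Table 9.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """Multiple Object Tracking Accuracy: 1 - (FN + FP + IDSW) / GT."""
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt


def idf1(idtp, idfp, idfn):
    """ID F1 score: 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    return 2.0 * idtp / (2.0 * idtp + idfp + idfn)


# Hypothetical counts, not taken from the experiments.
example_mota = mota(false_negatives=100, false_positives=50, id_switches=10, num_gt=1000)
example_idf1 = idf1(idtp=80, idfp=10, idfn=30)
print(round(example_mota, 3), round(example_idf1, 3))
```

Because identity switches enter MOTA only as one additive error term, a tracker can score well on MOTA while still switching identities often, which is why IDF1 and the raw switch count are reported alongside it.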

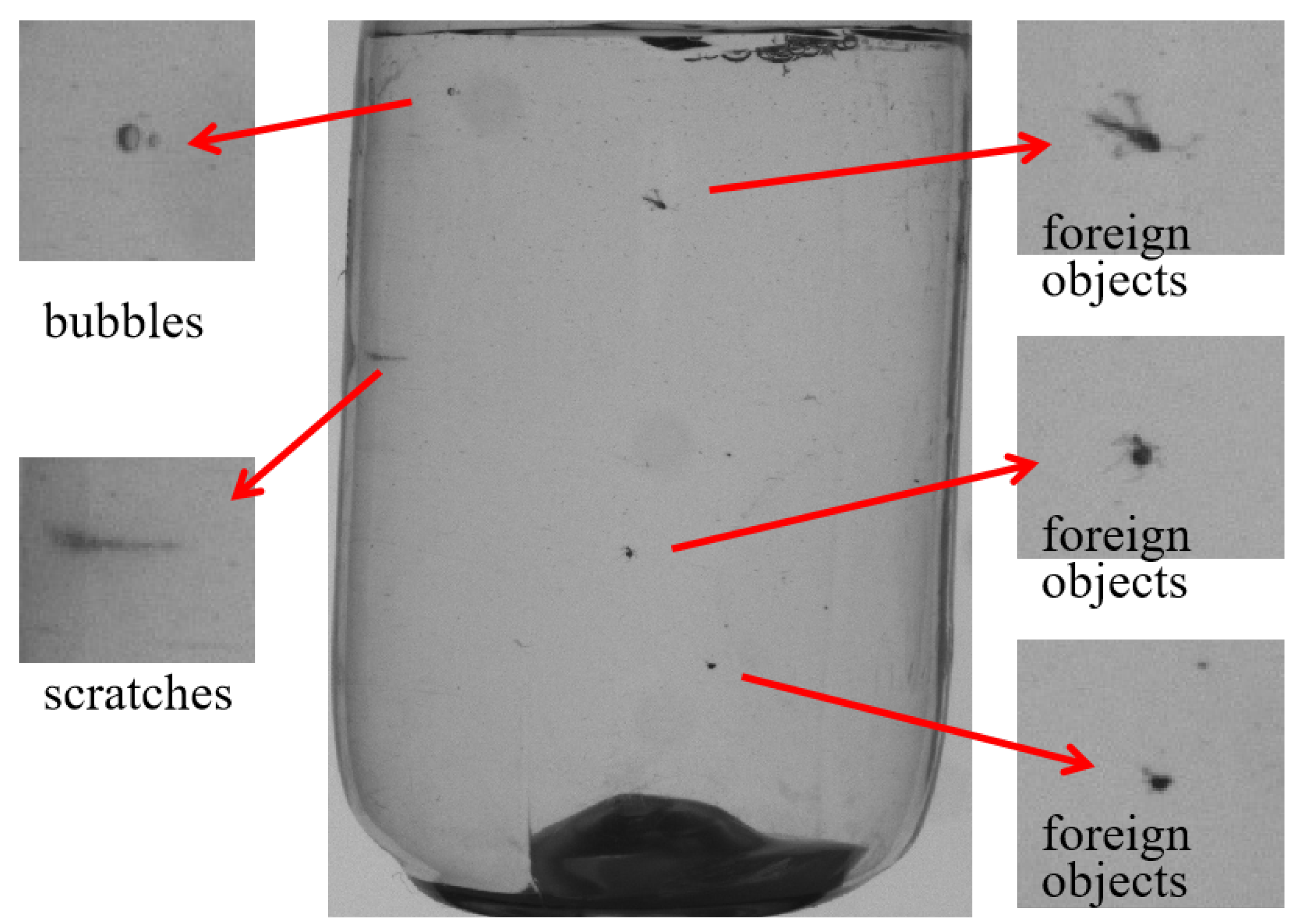

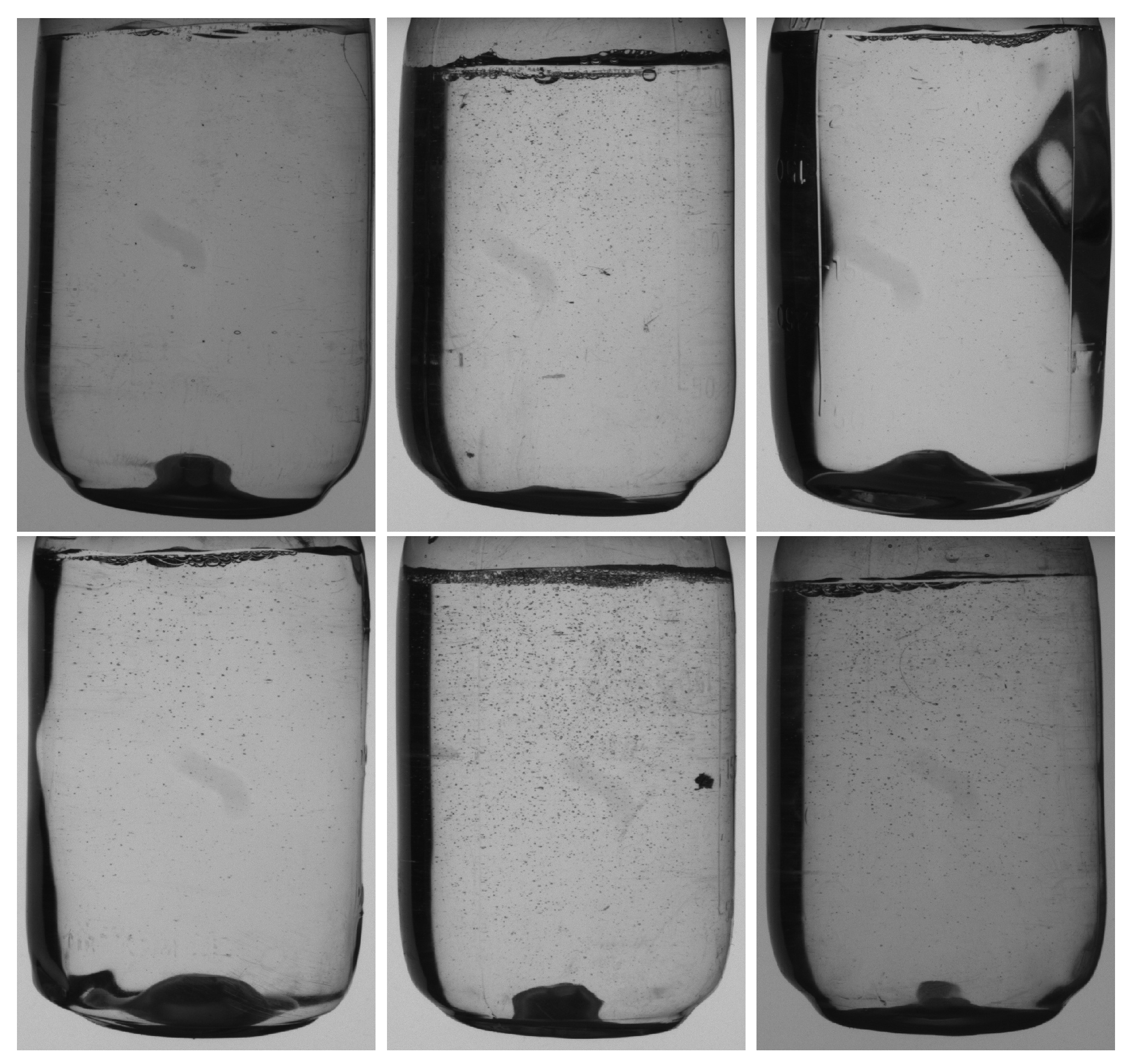

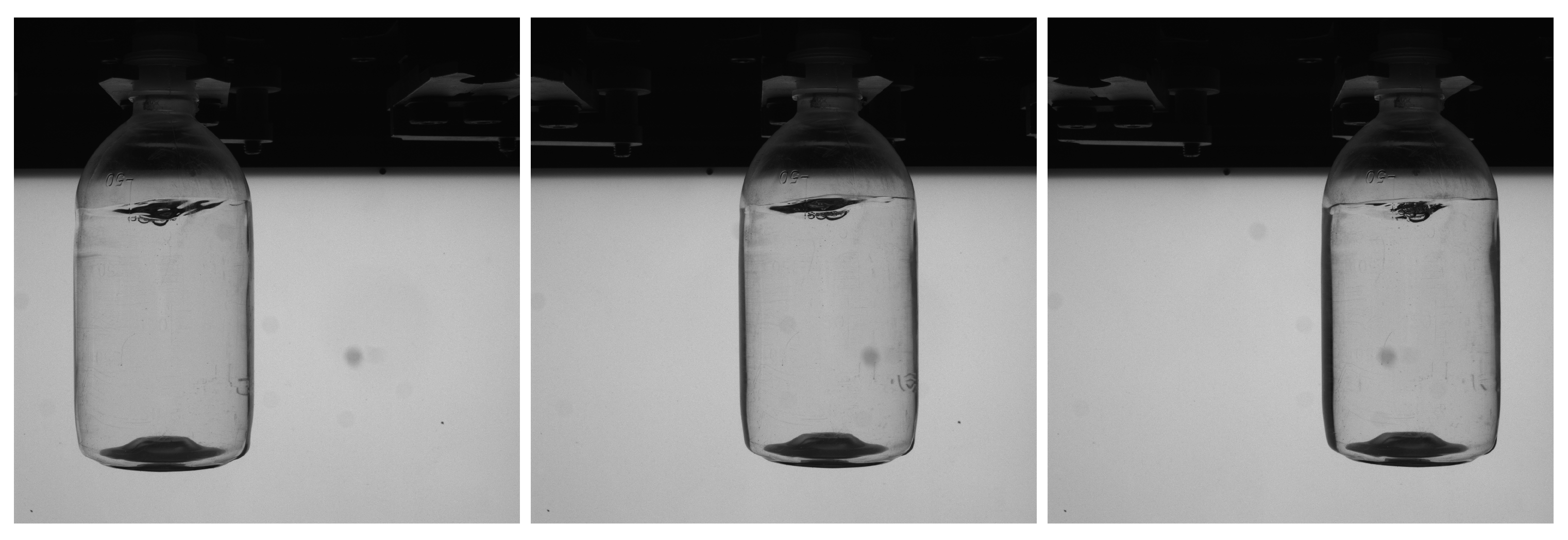

4.3.3. Comprehensive Performance Analysis

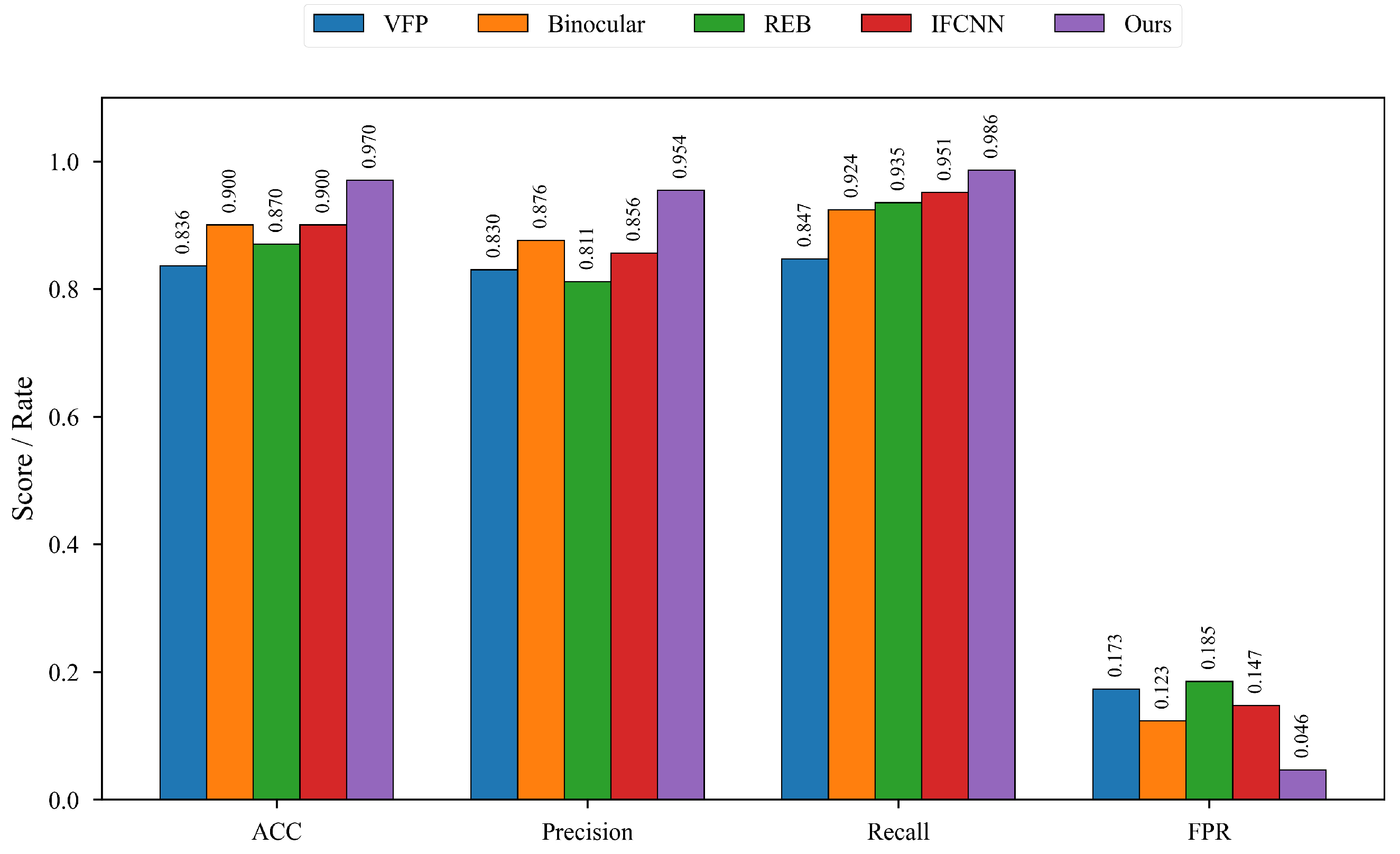

We conducted extensive comparative experiments against state-of-the-art methods for large-volume infusion foreign matter detection. The evaluation protocol involved 300 infusion bottles (150 containing foreign matter and 150 control samples), with 10 images captured per bottle. For single-frame detection methods, a majority voting mechanism was implemented, wherein a detection is classified as foreign matter only if it appears in five consecutive frames of the image sequence.
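The frame-level decision rule described above can be sketched as a check for a run of consecutive detections within the 10-image sequence of a bottle. The function names are hypothetical, and the sketch follows the five-consecutive-frames reading of the rule given in the text.

```python
def longest_run(flags):
    """Length of the longest run of consecutive True values."""
    best = current = 0
    for detected in flags:
        current = current + 1 if detected else 0
        best = max(best, current)
    return best


def is_foreign_object(per_frame_detected, min_consecutive=5):
    """Accept a candidate only if it is detected in enough consecutive frames."""
    return longest_run(per_frame_detected) >= min_consecutive


# Ten frames per bottle, as in the evaluation protocol.
persistent = [False, True, True, True, True, True, False, False, True, False]
flickering = [True, False, True, False, True, True, False, True, True, False]
print(is_foreign_object(persistent), is_foreign_object(flickering))
```

Under this rule a candidate that appears in six of ten frames but never in five in a row, such as `flickering`, is rejected. This suppresses transient reflections or bubbles at the cost of possibly missing particles that are intermittently occluded.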

Table 10 and Figure 8 present the quantitative results.

The experimental results demonstrate significant performance improvements across multiple metrics:

Accuracy (ACC): Our method achieved 0.970, a 7.8% relative improvement over the next-best performers (IFCNN and Binocular: 0.900).

Precision: At 0.954, our approach substantially outperformed existing methods (IFCNN: 0.856), indicating superior false detection suppression.

Recall: Our method demonstrated near-optimal performance (0.986), surpassing the previous state-of-the-art (IFCNN: 0.951), significantly reducing missed detections.

False Positive Rate (FPR): Our method achieved an FPR of 0.046, markedly lower than that of existing methods (for example, about 27% of VFP's 0.173), which supports its robustness in complex scenarios.
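The four metrics above follow from a single bottle-level confusion matrix. As a worked sketch, the counts below (148 true positives, 2 false negatives, 7 false positives, 143 true negatives) are inferred here from the reported rates and the 150/150 split of the protocol. They are an assumption of this example and are not stated in the source.

```python
def screening_metrics(tp, fn, fp, tn):
    """Accuracy, precision, recall, and false positive rate from confusion counts."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "fpr": fp / (fp + tn),
    }


# Inferred counts (assumption): 150 bottles with foreign matter, 150 controls.
metrics = screening_metrics(tp=148, fn=2, fp=7, tn=143)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With these counts each value agrees with the reported figure to within one unit in the third decimal place, which suggests the reported numbers correspond to 2 missed bottles and 7 false alarms out of 300.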

Furthermore, through architectural optimization and efficient design principles, our method achieves a processing speed of 5.1 BPS, representing an 18.6% improvement over conventional approaches (IFCNN: 4.3 BPS). This enhancement in computational efficiency, coupled with maintained accuracy, better addresses real-world deployment requirements.

These results validate the effectiveness of our end-to-end framework, which integrates object detection, tracking association, same-object feature fusion, and re-identification. The framework successfully addresses the limitations of existing methods, particularly regarding false detections and missed detections in complex environments, providing a robust solution for real-time large-volume infusion monitoring.