GSA-KAN: A Hybrid Model for Short-Term Traffic Forecasting

Abstract

1. Introduction

- We rethink model optimization for traffic flow forecasting from a data-driven perspective and instantiate this view with a Kolmogorov–Arnold network improved by the gravitational search algorithm.

- We propose a hybrid learning model to automatically find an optimal model for accurate traffic flow forecasting.

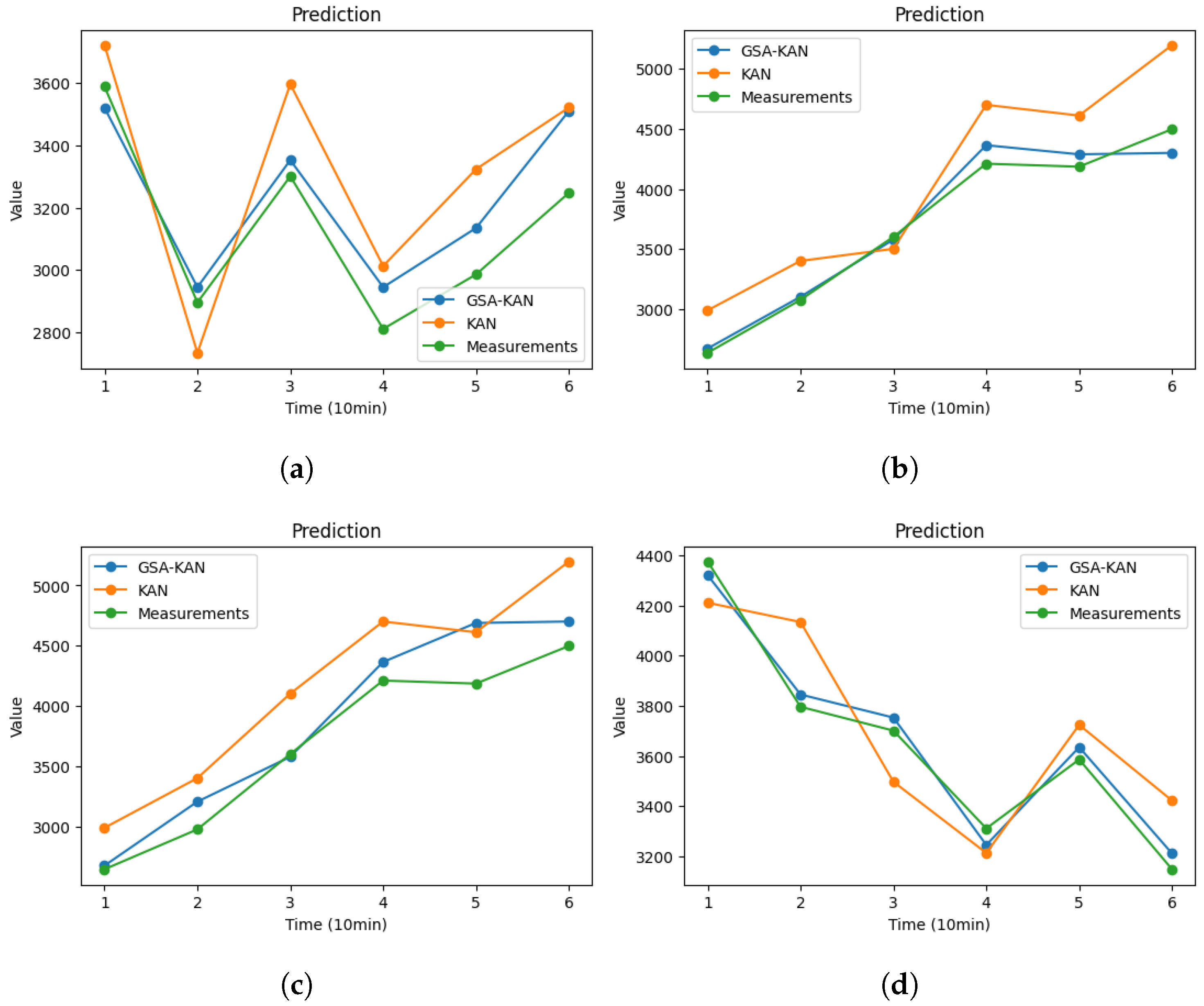

- We conduct extensive experiments on four real-world benchmark datasets to evaluate the effectiveness of our proposed model. The experimental results demonstrate that our GSA-KAN model outperforms existing parametric and nonparametric models in terms of both the root mean square error (RMSE) and the mean absolute percentage error (MAPE), highlighting its potential for practical applications in traffic management.
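As a reminder of what the two reported criteria measure, the following minimal sketch computes RMSE and MAPE for a pair of sequences. The function names and the sample flow values are hypothetical and chosen only for illustration; the MAPE helper assumes that no observed value is zero.

```python
import math

def rmse(actual, predicted):
    """Root mean square error between two equal-length sequences."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)

def mape(actual, predicted):
    """Mean absolute percentage error, in percent (assumes no actual value is 0)."""
    n = len(actual)
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n

# Hypothetical observed and forecast flows, for illustration only.
observed = [100.0, 200.0, 400.0]
forecast = [110.0, 190.0, 400.0]

print(rmse(observed, forecast))
print(mape(observed, forecast))
```

RMSE is expressed in the units of the flow itself and penalizes large deviations more heavily, whereas MAPE is scale-free, which is why the two are usually reported together.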

2. Methodology

2.1. Problem Definition

2.2. Gravitational Search Algorithm

2.3. Kolmogorov–Arnold Networks

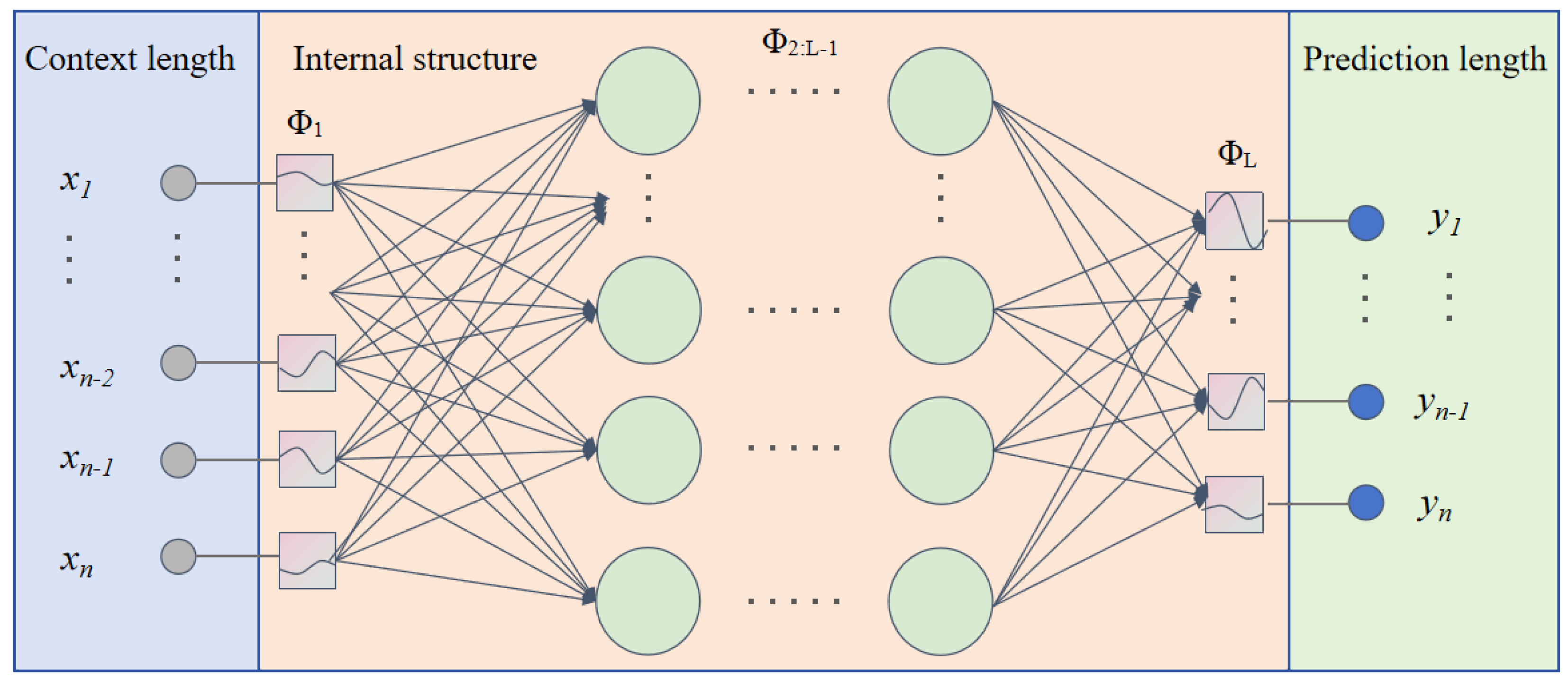

2.4. KAN Traffic Flow Forecasting

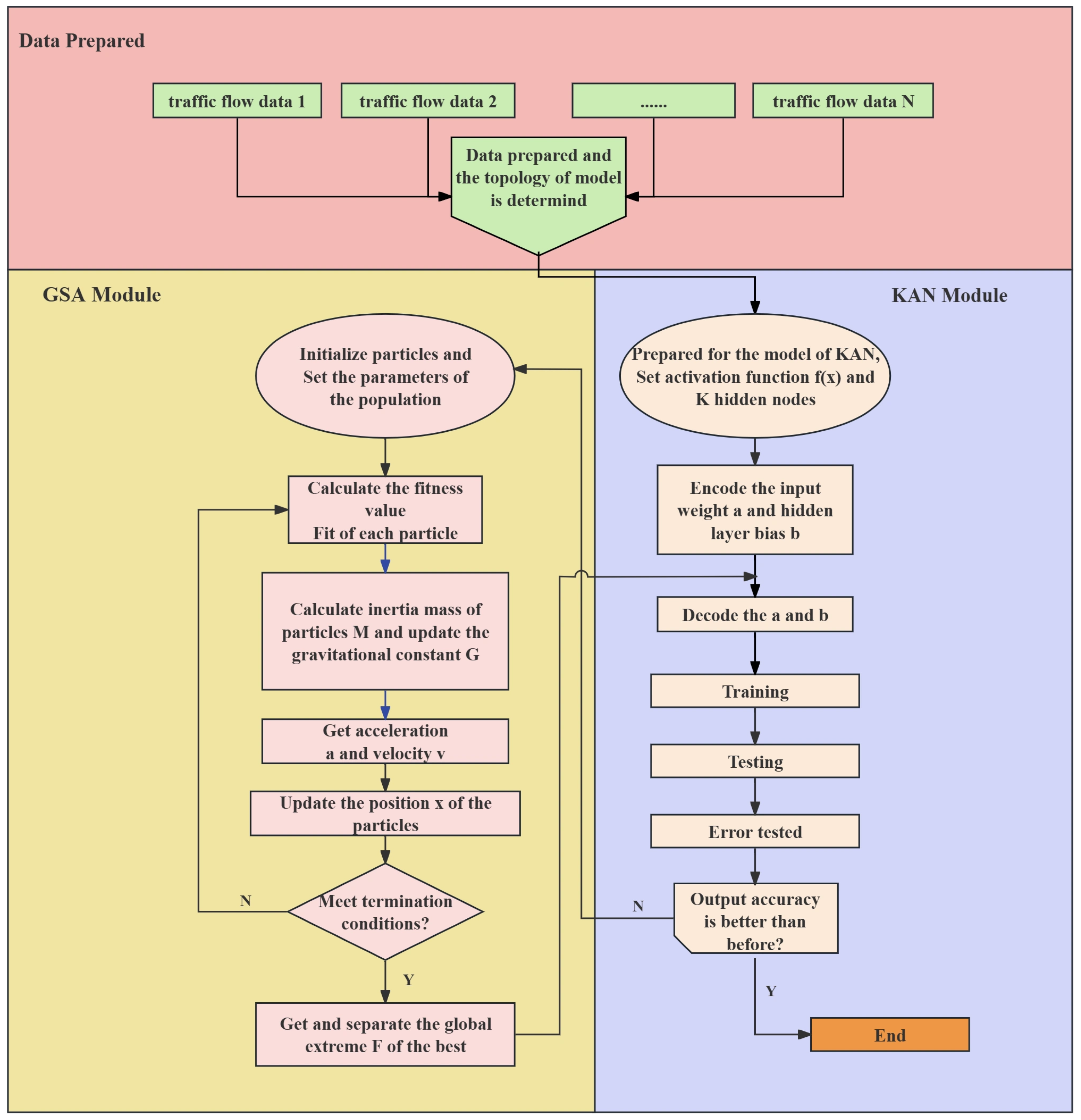

2.5. Hybrid GSA-KAN Algorithm

- Step 1. Set the parameters: the dimension of the search space, d; the number of particles, k; the gravitational constant, G; the gravitational constant decay rate, v; and the maximum number of iterations, T.

- Step 2. The positions, x, and velocities, v, of k groups of particles are randomly generated via

  $$x = x_{\min} + r\,(x_{\max} - x_{\min}), \qquad v = v_{\max}\, r,$$

  where $x_{\min}$ and $x_{\max}$ define the boundaries of the parametric search space, $v_{\max}$ is the maximum value of the velocity, and $r$ is a random number from 0 to 1. Each group of particles represents a set of parameters, namely the weights and biases of the KAN model.

- Step 3. Calculate the fitness value of each particle using the KAN model; the fitness is generally taken as the negative of the prediction error.

- Step 4. Calculate the repulsive and gravitational forces between particles by means of the relevant equations defined in the GSA model.

- Step 5. Update the velocity and position of each particle based on the gravitational force and the current velocity.

- Step 6. Repeat steps 3–5 until the maximum number of iterations is reached or other stopping conditions are met.

- Step 7. Output the optimized KAN model parameters and use them for traffic flow forecasting.
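The steps above can be sketched in a few dozen lines. The code below is a simplified illustration, not the authors' implementation: a two-parameter linear predictor (`toy_error`) stands in for the KAN, every particle attracts every other one (no shrinking elite set), positions are clipped to the search box, and the uniform initialization is an assumption. The names `gsa_minimize`, `toy_error`, `g0`, `decay`, and `v_max`, as well as the default values, are hypothetical.

```python
import math
import random

def gsa_minimize(error_fn, dim, lo, hi, k=20, g0=100.0, decay=20.0,
                 max_iter=100, v_max=1.0, seed=0):
    """Simplified GSA. Returns (best_position, best_error_history)."""
    rng = random.Random(seed)
    # Step 2: random positions inside [lo, hi] and random initial velocities.
    x = [[lo + rng.random() * (hi - lo) for _ in range(dim)] for _ in range(k)]
    v = [[v_max * rng.random() for _ in range(dim)] for _ in range(k)]
    best_x, best_err, history = None, math.inf, []
    for t in range(max_iter):  # Step 6: iterate until the budget is spent.
        # Step 3: fitness is the negative of the prediction error.
        err = [error_fn(p) for p in x]
        fit = [-e for e in err]
        i_best = min(range(k), key=lambda i: err[i])
        if err[i_best] < best_err:
            best_x, best_err = list(x[i_best]), err[i_best]
        history.append(best_err)
        # Step 4: masses from normalized fitness, then pairwise attraction.
        worst, best = min(fit), max(fit)
        span = (best - worst) or 1.0
        m = [(f - worst) / span for f in fit]
        total = sum(m) or 1.0
        mass = [mi / total for mi in m]
        g = g0 * math.exp(-decay * t / max_iter)  # decaying gravitational constant
        acc = [[0.0] * dim for _ in range(k)]
        for i in range(k):
            for j in range(k):
                if i == j:
                    continue
                dist = math.dist(x[i], x[j])
                pull = rng.random() * g * mass[j] / (dist + 1e-12)
                for d in range(dim):
                    acc[i][d] += pull * (x[j][d] - x[i][d])
        # Step 5: update velocity, then position (clipped to the search box).
        for i in range(k):
            for d in range(dim):
                v[i][d] = rng.random() * v[i][d] + acc[i][d]
                x[i][d] = min(hi, max(lo, x[i][d] + v[i][d]))
    return best_x, history  # Step 7: best parameters found.

# Hypothetical stand-in for the KAN: a line y = w*x + b fitted to toy data.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def toy_error(params):
    w, b = params
    return math.sqrt(sum((w * xi + b - yi) ** 2 for xi, yi in zip(xs, ys)) / len(xs))

best_params, history = gsa_minimize(toy_error, dim=2, lo=-5.0, hi=5.0)
print(best_params, history[-1])
```

In the hybrid model, `error_fn` would instead build a KAN from the particle's parameter vector and return its prediction error on held-out traffic data; the search loop itself would not need to change.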

Algorithm 1: Framework of GSA-KAN

- Hybrid architecture of gravitational search algorithm (GSA) and Kolmogorov–Arnold network (KAN): To the best of our knowledge, this is the first work to integrate the GSA with KAN. This hybrid framework automates the optimization of hyperparameters (e.g., B-spline grid points and regularization factors) while preserving the parameter efficiency of KAN, thereby addressing the limitations of manual tuning in traditional models.

- Dynamic resolution adaptation: We propose a novel adaptive grid adjustment strategy for the B-spline functions in KAN. Unlike fixed-resolution approaches, this strategy dynamically adjusts grid density based on traffic flow volatility, such as variations between peak hours and off-peak hours, enabling higher prediction accuracy during abrupt traffic changes.

- Robustness-driven optimization: While conventional GSA focuses solely on minimizing prediction error, our enhanced GSA incorporates a dual-objective function that balances RMSE and model sparsity. This design prevents overfitting and enhances interpretability, as validated in our experiments.
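To make the dual-objective idea concrete, the sketch below combines RMSE with a sparsity term in a single score. The exact form of the objective is not given here, so this is only one possible scalarization: the mean absolute coefficient value (an L1 penalty) is used as a proxy for sparsity, and the weight `lam`, the function name `dual_objective`, and the sample numbers are all assumptions.

```python
import math

def dual_objective(errors, coefficients, lam=0.01):
    """RMSE plus a weighted L1 sparsity penalty (lower is better).

    `errors` are prediction residuals; `coefficients` are the model
    parameters whose magnitude is penalized. Both are hypothetical inputs.
    """
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    l1 = sum(abs(c) for c in coefficients) / len(coefficients)
    return rmse + lam * l1

# Two hypothetical models with identical residuals but different sparsity.
residuals = [3.0, -4.0]
dense = [0.5, -0.5, 0.5, -0.5]
sparse = [1.0, 0.0, 0.0, 0.0]

print(dual_objective(residuals, dense))
print(dual_objective(residuals, sparse))
```

With equal residuals, the sparser parameter vector receives the lower score, so a search driven by this objective would prefer it. A larger `lam` shifts the balance from accuracy toward sparsity.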

3. Experiments

3.1. Dataset Description

3.2. Evaluation Criteria

3.3. Experimental Settings

3.4. Experimental Results and Discussion

3.5. Hyperparameter Sensitivity Study

3.6. Limitations and Future Work

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Layers | RMSE | Per-Iteration Training Time |
|---|---|---|
| One | 301.23 | 13.5 |
| Two | 289.67 | 15.2 |
| Three | 293.45 | 23.4 |

| Parameters | Value |
|---|---|
| Population size | 300 |
| Maximum number of iterations | 100 |
| Gravitational constant, G | 100 |
| Gravitational constant decay rate, v | 20 |

| Models (RMSE) | A1 | A2 | A4 | A8 |
|---|---|---|---|---|
| SVR | 329.09 | 259.74 | 253.66 | 190.33 |
| GM | 347.94 | 261.36 | 275.35 | 189.57 |
| DT | 316.57 | 224.79 | 243.19 | 238.35 |
| ANN | 299.64 | 212.95 | 225.86 | 166.50 |
| LSTM | 294.52 | 211.31 | 224.68 | 168.91 |
| ELM | 294.10 | 201.67 | 222.07 | 169.15 |
| KAN | 289.67 | 208.74 | 221.74 | 163.86 |
| GSA-KAN | 287.80 | 198.12 | 219.73 | 162.69 |

| Models (MAPE, %) | A1 | A2 | A4 | A8 |
|---|---|---|---|---|
| SVR | 14.34 | 12.22 | 12.23 | 12.48 |
| GM | 12.49 | 10.90 | 13.22 | 12.89 |
| DT | 12.08 | 10.86 | 12.34 | 13.62 |
| ANN | 12.61 | 10.89 | 12.49 | 12.53 |
| LSTM | 12.82 | 11.06 | 13.51 | 12.56 |
| ELM | 11.82 | 10.34 | 12.05 | 12.42 |
| KAN | 11.84 | 10.34 | 11.86 | 12.16 |
| GSA-KAN | 11.77 | 10.25 | 11.69 | 11.92 |
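The gain that the GSA step adds on top of a plain KAN can be read directly off the two result tables. The short sketch below computes the relative reduction per dataset, assuming that the first results table reports RMSE and the second reports MAPE (the table captions did not survive, so this reading is inferred from the magnitudes and from the metrics named in the introduction). The variable and function names are hypothetical.

```python
datasets = ["A1", "A2", "A4", "A8"]

# Values copied from the KAN and GSA-KAN rows of the two results tables.
rmse_kan = [289.67, 208.74, 221.74, 163.86]
rmse_gsa_kan = [287.80, 198.12, 219.73, 162.69]
mape_kan = [11.84, 10.34, 11.86, 12.16]
mape_gsa_kan = [11.77, 10.25, 11.69, 11.92]

def relative_reduction(baseline, improved):
    """Percentage reduction of `improved` relative to `baseline`, per dataset."""
    return [100.0 * (b - i) / b for b, i in zip(baseline, improved)]

rmse_gain = relative_reduction(rmse_kan, rmse_gsa_kan)
mape_gain = relative_reduction(mape_kan, mape_gsa_kan)

for name, r, m in zip(datasets, rmse_gain, mape_gain):
    print(f"{name}: RMSE reduced by {r:.2f}%, MAPE reduced by {m:.2f}%")
```

Under that reading, GSA-KAN improves on KAN for both criteria on all four datasets, with the largest RMSE reduction (about 5.1%) on A2 and reductions below 1% on the other three.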

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, Z.; Wang, D.; Cao, C.; Xie, H.; Zhou, T.; Cao, C. GSA-KAN: A Hybrid Model for Short-Term Traffic Forecasting. Mathematics 2025, 13, 1158. https://doi.org/10.3390/math13071158
