1. Introduction

Anomaly detection, also known as novelty or outlier detection, is a technique used to identify data points that significantly deviate from other data points. It is a widely discussed topic with growing applications in various domains, including anti-money laundering, health monitoring, fault detection, and spam filtering.

In the financial sector, anomaly detection is crucial for identifying fraudulent credit card transactions. The rise of cashless transactions, such as mobile payments and online credit card purchases, has led to an increase in fraud cases. Cyberattacks that compromise personal and financial data can result in significant economic losses, which can reach millions of dollars annually [1]. Therefore, accurately and efficiently detecting fraudulent transactions is a critical application of anomaly detection.

Similarly, in the manufacturing industry, anomaly detection is essential to ensure production line quality, particularly with the advent of Industry 4.0 and automation. For example, in the semiconductor industry, automated production systems rely on numerous sensors to monitor operational status and maintain product quality. If system failures go undetected, they can cause substantial financial losses. However, sensor data are often highly complex, with numerous variables and limited anomalous instances, making traditional detection methods ineffective [2]. Machine learning approaches have been explored to address this issue.

Anomaly detection also plays an important role in bioinformatics, where identifying rare, unusual, or unexpected patterns in complex biological data can lead to significant scientific and clinical discoveries. In biomedical research, anomalies often represent critical biological variations, such as genetic mutations linked to diseases, abnormal cellular behaviors, or irregular physiological signals that may indicate early-stage medical conditions. Effective anomaly detection methods are essential for enhancing disease diagnosis, patient monitoring, and personalized medicine, as they allow researchers and clinicians to distinguish between normal and pathological states with greater accuracy.

In genomic data analysis, anomaly detection aids in the identification of rare genetic mutations and structural variations that contribute to hereditary diseases and cancer development. Traditional statistical methods often struggle with the high dimensionality and inherent noise in genomic sequences, making machine learning-based anomaly detection techniques an increasingly popular alternative. By leveraging data-driven models, researchers can detect mutations and gene expression anomalies that would otherwise go unnoticed with conventional approaches.

Similarly, in proteomics and metabolomics, anomaly detection is instrumental in identifying biomarkers associated with specific diseases. The complexity of biological systems, coupled with the variability of experimental conditions, makes distinguishing meaningful patterns from noise a significant challenge. Anomaly detection models enable the discovery of unexpected molecular interactions and metabolic shifts that could provide insights into disease mechanisms and potential therapeutic targets.

Beyond molecular biology, anomaly detection is also widely used in medical imaging and physiological signal analysis. In radiology, detecting abnormal patterns in medical images, such as magnetic resonance imaging (MRI) or computed tomography (CT) scans, is vital for the early detection of cancer and the diagnosis of neurological disorders. Machine learning-based anomaly detection methods improve diagnostic accuracy by identifying subtle abnormalities that may be overlooked in conventional image interpretation. Likewise, in wearable health monitoring devices, real-time anomaly detection algorithms help identify irregular heart rhythms, respiratory anomalies, and other fluctuations in vital signs, facilitating timely medical interventions.

Various anomaly detection techniques have been applied to domain-specific problems, including statistical, classification-based, nearest-neighbor-based, information-theoretic, and spectral methods. These techniques can be broadly classified into supervised, unsupervised, and semi-supervised anomaly detection methods [3].

Despite its importance, anomaly detection presents several challenges, including class imbalance, data heterogeneity, and high-dimensional feature spaces. In addition, defining normal samples is often difficult. Many biological datasets contain a disproportionately small number of anomalous cases, making it difficult for conventional classifiers to effectively learn from limited labeled samples. Furthermore, anomaly definitions vary across domains, labeled data may not fully capture all anomaly characteristics, and distinguishing noise from true anomalies remains complex. To address these challenges, researchers are increasingly adopting advanced machine learning approaches such as deep learning, generative models, and feature mapping techniques to enhance the robustness and generalizability of anomaly detection models.

Recent studies have explored advanced deep learning techniques for anomaly detection across various domains. GRU-MACGANS has been employed to address class imbalance in electricity consumption data, improving detection accuracy and interpretability in the presence of outliers and missing values [4]. In the IoT domain, autoencoder-based transfer learning has been utilized to enhance anomaly detection by learning detailed representations of normal operational data and transferring knowledge across data-rich and data-scarce environments, achieving high precision and recall [5]. Additionally, in maritime applications, trajectory clustering and prediction using ProbSparse Attention-based Transformers have been introduced to detect anomalous ship movements, providing insights for maritime risk management [6]. These studies demonstrate the effectiveness of deep learning approaches in handling data imbalance, high dimensionality, and dynamic patterns in anomaly detection tasks.

However, supervised anomaly detection faces a major challenge in acquiring labeled anomaly data. The cost of labeling all possible anomalies is often prohibitively high, resulting in a severe class imbalance where anomalous instances are significantly underrepresented. Conventional classifiers tend to be biased towards the majority class, reducing detection performance in such imbalanced settings [7]. Addressing this issue is a key focus of supervised anomaly detection research. Ref. [8] noted that no dedicated algorithms have been specifically designed for supervised anomaly detection. Instead, existing classifiers such as Random Forest (RF) [9] and neural networks [10] are commonly used.

Although big data has revolutionized various fields, data collection in domains such as medicine and healthcare remains a significant challenge due to cost constraints and the rarity of certain conditions. Acquiring high-quality medical imaging data or patient records for rare diseases often requires substantial financial investment and raises ethical concerns, making large-scale data collection impractical. As a result, Few-Shot Learning (FSL) has emerged as an essential technique in medical research and other data-scarce environments.

FSL is a subfield of machine learning that aims to train models using a relatively small amount of data. While traditional machine learning models typically require large-scale datasets for effective training, few-shot learning focuses on how to extract meaningful representations and learn useful patterns from limited labeled samples. FSL can be categorized into three main approaches: metric-based learning, model-based learning, and data augmentation-based enhancement techniques [11].

Because FSL targets settings in which data acquisition is expensive or infeasible, traditional machine learning methods, which rely on large labeled datasets, often struggle. Deep learning-based approaches have therefore been introduced as a solution: deep learning models can automatically learn complex feature representations, which can improve classification performance in low-resource settings. However, most deep learning-based anomaly detection approaches are designed for unstructured data, such as images and text, and their effectiveness on structured data remains underexplored.

Among these approaches, the Siamese Neural Network (SNN) [12] is a representative metric-based learning approach and a commonly used deep learning framework for few-shot learning. Originally developed for signature verification, SNNs have been widely applied in computer vision and natural language processing for similarity learning, including anomaly detection, facial recognition, and text matching. To further extend their applicability, researchers have adapted SNNs to different types of data and tasks, optimizing their architecture for specific applications.

As a deep learning-based architecture, SNNs offer several advantages, particularly in handling complex, high-dimensional, and unstructured data. One of their primary strengths is their ability to automatically learn meaningful feature representations, which is crucial when working with intricate datasets such as medical images, genomic sequences, or multi-modal healthcare records. Furthermore, SNNs are highly effective for FSL because they do not require a large number of labeled samples to achieve strong performance. By learning the similarity between sample pairs, where one instance serves as a reference (e.g., a normal case) and the other is an unknown test sample, SNNs can generalize even with limited training data. This capability makes them particularly suitable for medical and biomedical applications, where labeled data are often scarce and high-precision anomaly detection is critical. In recent years, several studies have applied SNNs to imbalanced data [13,14,15], primarily by modifying the network architecture, while other research focuses on balancing the two classes [16,17]. Most research applies SNNs to anomaly detection in domain-specific image data [18,19,20].

SNNs are widely recognized for their pairwise similarity learning capability but have rarely been used as feature extractors. Most existing anomaly detection algorithms are designed for structured datasets [8], yet the effectiveness of SNNs in this context remains underexplored. Feature selection and extraction play a crucial role in anomaly detection, as highlighted in the systematic literature review in [21]. Additionally, Ref. [22] emphasizes the need for data-efficient anomaly detection methods, including few-shot anomaly detection, to address scenarios with limited labeled data. Motivated by these insights, our study proposes a few-shot learning-based anomaly detection framework that leverages an SNN as a feature extractor.

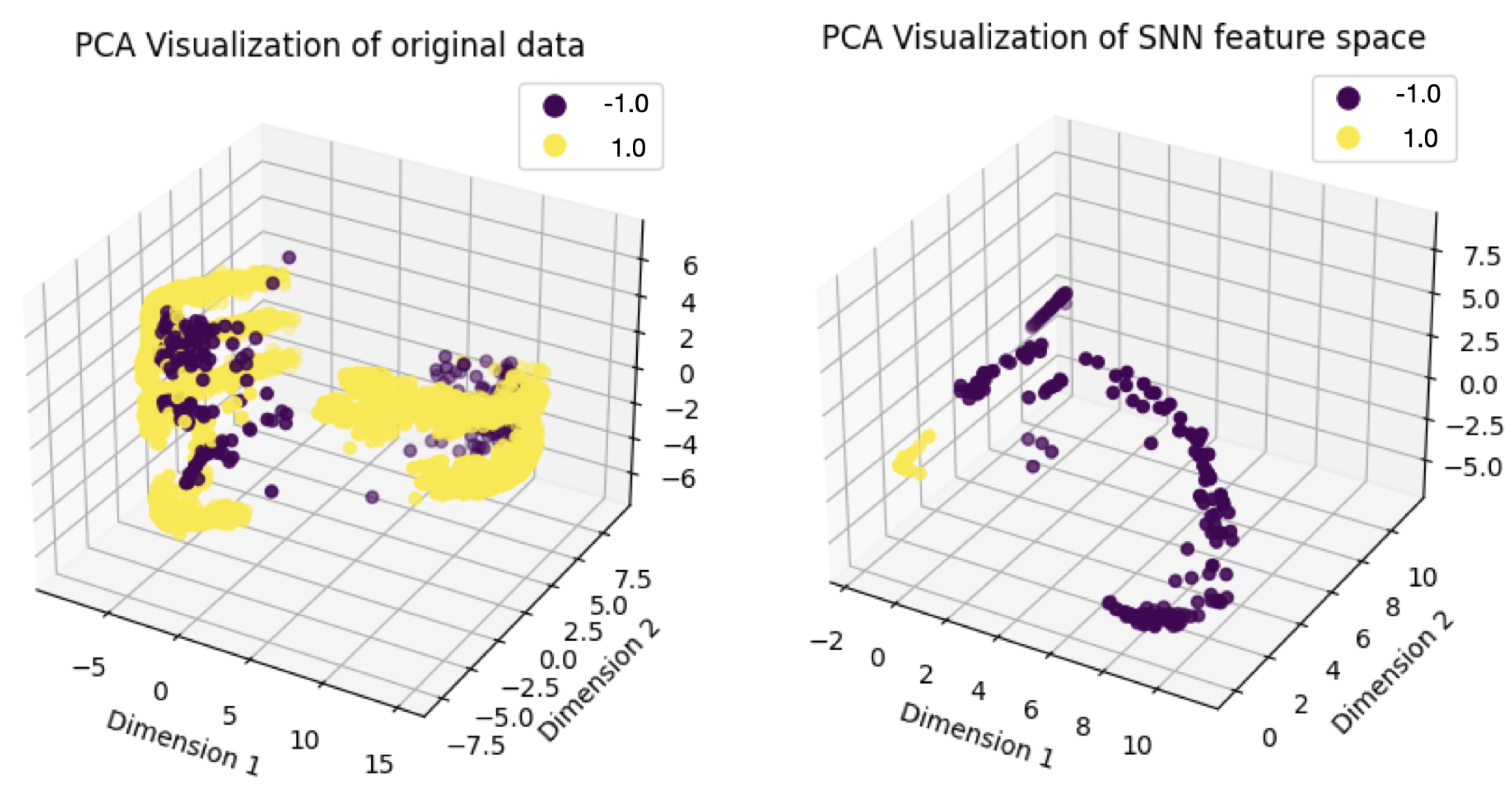

In this study, we introduce a novel approach that repurposes an SNN for feature extraction in structured anomaly detection tasks, leveraging its ability to learn informative representations from limited data. By transforming structured data into a discriminative feature space, the SNN enhances the ability of conventional classifiers to distinguish anomalies from normal instances. By integrating metric-based learning and feature mapping, the proposed framework provides a robust approach to improving anomaly detection in high-dimensional, imbalanced datasets. These findings contribute to the development of more accurate and efficient anomaly detection strategies, with promising applications in genomics, medical diagnostics, and computational biology.

2. Methods

2.1. Siamese Neural Network (SNN)

The original SNN consists of two identical convolutional neural networks (CNNs) that share the same architecture and weights. Because the weights are shared, every parameter update during training applies to both subnetworks simultaneously. Unlike traditional supervised learning models, which classify individual data points, the SNN learns to measure pairwise similarity between input samples. Instead of predicting explicit class labels, the SNN processes paired data samples and outputs a similarity score that indicates whether the inputs belong to the same class. These input pairs can consist of images, word embeddings, or entire text sequences.

The strength of the SNN lies in its ability to learn from limited labeled data by leveraging pairwise comparisons rather than absolute class labels. Once trained, the network can generalize to unseen data by evaluating similarity scores, making it a powerful tool for various machine learning tasks. The model learns a feature representation that enables it to distinguish whether input pairs belong to the same category or not.

The following equations describe the mathematical formulation of the SNN. Given an input pair $(x_1, x_2)$, the SNN processes each sample through a shared subnetwork $f_\theta$, where $\theta$ represents the shared learnable parameters:

$$h_1 = f_\theta(x_1), \qquad h_2 = f_\theta(x_2),$$

where $h_1$ and $h_2$ are the feature embeddings of input samples $x_1$ and $x_2$, and the function $f_\theta$ can be implemented using a convolutional neural network (CNN) or a fully connected neural network (FCN). The two subnetworks share the same architecture and weights, ensuring identical feature transformation.
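To make the weight sharing concrete, the following is a minimal NumPy sketch, assuming a one-hidden-layer fully connected subnetwork written as `f_theta`; the layer sizes and the parameter dictionary `theta` are hypothetical and do not reproduce the architecture in Table 1. Both branches call the same function with the same parameters, which is what "shared weights" means in practice.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical parameters theta of a one-hidden-layer fully connected subnetwork.
# Both SNN branches use this SAME dictionary; the sizes are illustrative only.
n_features, n_hidden, n_embed = 8, 16, 4
theta = {
    "W1": rng.normal(scale=0.1, size=(n_features, n_hidden)),
    "b1": np.zeros(n_hidden),
    "W2": rng.normal(scale=0.1, size=(n_hidden, n_embed)),
    "b2": np.zeros(n_embed),
}

def f_theta(x, params):
    """Shared subnetwork: maps a feature vector x to an embedding h."""
    hidden = np.maximum(0.0, x @ params["W1"] + params["b1"])  # ReLU
    return hidden @ params["W2"] + params["b2"]

x1 = rng.normal(size=n_features)
x2 = rng.normal(size=n_features)
h1 = f_theta(x1, theta)  # branch 1
h2 = f_theta(x2, theta)  # branch 2: same function, same parameters
```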

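The similarity between the two feature embeddings is computed using a distance function. The most commonly used metric is the Euclidean distance

$$D(h_1, h_2) = \lVert h_1 - h_2 \rVert_2 ,$$

which represents how far apart the two embeddings are. Alternatively, the cosine similarity can be used:

$$S(h_1, h_2) = \frac{h_1 \cdot h_2}{\lVert h_1 \rVert_2 \, \lVert h_2 \rVert_2},$$

which ranges from −1 (embeddings pointing in opposite directions, i.e., maximally dissimilar) to 1 (embeddings pointing in the same direction).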
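Both measures can be computed directly from a pair of embeddings. A minimal NumPy sketch (the function names are illustrative):

```python
import numpy as np

def euclidean_distance(h1, h2):
    """D(h1, h2) = ||h1 - h2||_2; equals 0 for identical embeddings."""
    return float(np.linalg.norm(h1 - h2))

def cosine_similarity(h1, h2):
    """S(h1, h2) = (h1 . h2) / (||h1|| ||h2||); lies in [-1, 1]."""
    return float(np.dot(h1, h2) / (np.linalg.norm(h1) * np.linalg.norm(h2)))

a = np.array([3.0, 4.0])
print(euclidean_distance(a, np.zeros(2)))  # 5.0
print(cosine_similarity(a, 2 * a))         # 1.0, same direction
print(cosine_similarity(a, -a))            # -1.0, opposite direction
```

Note that cosine similarity ignores vector length (`a` and `2 * a` score 1.0), whereas the Euclidean distance between them is nonzero.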

To optimize the Siamese network, the contrastive loss function is used, which pulls the embeddings of similar pairs together and pushes dissimilar pairs apart until their distance is at least the margin:

$$\mathcal{L}(Y, D) = (1 - Y)\,\frac{1}{2} D^2 + Y\,\frac{1}{2} \max(0,\, m - D)^2,$$

where $Y$ is the pair label, with 0 indicating similar pairs and 1 indicating dissimilar pairs; $D = D(h_1, h_2)$ is the Euclidean distance between the embeddings; and $m > 0$ is the margin parameter, ensuring that dissimilar pairs are separated by at least $m$.
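As a sketch, the contrastive loss can be evaluated over a batch of precomputed distances. This assumes the label convention above (0 = similar, 1 = dissimilar) and the common 1/2 scaling; the default margin `m=1.0` is an arbitrary illustrative choice, not a value taken from the study.

```python
import numpy as np

def contrastive_loss(D, Y, m=1.0):
    """Mean contrastive loss over a batch.

    D : Euclidean distances between paired embeddings
    Y : pair labels, 0 = similar, 1 = dissimilar
    m : margin; dissimilar pairs closer than m are penalized
    """
    D = np.asarray(D, dtype=float)
    Y = np.asarray(Y, dtype=float)
    similar_term = (1.0 - Y) * 0.5 * D ** 2                    # pull similar pairs together
    dissimilar_term = Y * 0.5 * np.maximum(0.0, m - D) ** 2    # push dissimilar pairs beyond m
    return float(np.mean(similar_term + dissimilar_term))

print(contrastive_loss([0.0, 2.0], [0, 1]))  # both pairs already "correct": 0.0
print(contrastive_loss([1.0, 0.0], [0, 1]))  # both pairs penalized
```

A similar pair at distance 0 and a dissimilar pair already farther apart than `m` contribute no loss; only similar pairs that are apart, or dissimilar pairs inside the margin, produce gradients.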

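At inference time, the sigmoid activation function

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

is usually applied to scale the network's distance-based output $z$ into the range $(0, 1)$, giving a similarity score $p = \sigma(z)$. A threshold $T$ is then applied to classify whether two samples are similar or dissimilar:

$$\hat{y} = \begin{cases} \text{similar}, & p > T, \\ \text{dissimilar}, & p \le T, \end{cases}$$

where $T$ is a predefined hyperparameter that can be tuned on validation data.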
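The scoring and thresholding step can be sketched as follows. It assumes, consistent with the pair-based training in Section 2.4, that a score above the threshold means "similar"; `z` stands for whatever raw output the final layer produces, and the default `T = 0.5` is only illustrative.

```python
import math

def sigmoid(z):
    """Logistic function sigma(z) = 1 / (1 + exp(-z)); output lies in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def classify_pair(z, T=0.5):
    """Map the raw network output z to a score p and apply threshold T.

    Returns ("similar" if p > T else "dissimilar", p).
    """
    p = sigmoid(z)
    return ("similar" if p > T else "dissimilar"), p

print(classify_pair(2.0))   # high score -> similar
print(classify_pair(-2.0))  # low score -> dissimilar
```

Because `T` is a hyperparameter, raising it trades fewer pairs labeled "similar" for higher confidence in those that are.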

2.2. Proposed Method

In this study, we focus on evaluating the effectiveness of the SNN as a feature extractor for anomaly detection in structured data. Instead of performing pairwise similarity comparisons as in the classic SNN approach, our implementation uses the trained fully connected subnetwork $f_\theta$ as a feature extractor. For a given input sample $x$, we obtain the learned feature representation as $h = f_\theta(x)$.

This extracted feature representation is then passed to a subsequent classifier for further analysis and prediction, $\hat{y} = g(h)$, where $g$ is the chosen classifier, mapping feature embeddings to final class predictions.
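The two-stage design, extracting $h = f_\theta(x)$ and then predicting $\hat{y} = g(h)$, can be sketched with scikit-learn. Because no trained SNN is available here, a fixed random ReLU projection stands in for the trained subnetwork; this stand-in, the synthetic data, and all sizes are hypothetical. In the proposed method, `f_theta` would be the trained SNN branch.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Hypothetical stand-in for the trained subnetwork f_theta: a fixed random
# ReLU projection from 20 input features to 8 "embedding" features.
W = rng.normal(scale=0.3, size=(20, 8))

def f_theta(X):
    return np.maximum(0.0, X @ W)

# Synthetic imbalanced data (about 5% anomalies, label 1), for illustration only.
X, y = make_classification(n_samples=1000, n_features=20, weights=[0.95], random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y, random_state=0)

# Stage 1: map raw features to embeddings h = f_theta(x).
H_tr, H_te = f_theta(X_tr), f_theta(X_te)

# Stage 2: y_hat = g(h), with g an SVM or RF using default hyperparameters.
for g in (SVC(), RandomForestClassifier(random_state=0)):
    g.fit(H_tr, y_tr)
    y_hat = g.predict(H_te)
    print(type(g).__name__, "test accuracy:", round(float(np.mean(y_hat == y_te)), 3))
```

Any scikit-learn classifier with `fit`/`predict` can play the role of `g`, which is what lets the extracted features be paired with SVM, RF, or other downstream models.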

In the architecture of the subnetwork, each layer is followed by a ReLU activation function to introduce non-linearity, and Batch Normalization and Dropout layers are applied to stabilize training and reduce overfitting. Batch Normalization standardizes the output of the previous layer before passing it to the next layer, while Dropout randomly deactivates a proportion of neurons during training to prevent co-adaptation of weights and enhance generalization. The structure of the subnetwork is summarized in Table 1.
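A single hidden layer of this kind can be sketched in NumPy as below. The layer order (Dense, ReLU, Batch Normalization, Dropout), the dropout rate, and the sizes are assumptions for illustration; Table 1 gives the actual layout. For brevity, Batch Normalization always uses the current batch statistics; a full implementation would switch to running averages at inference time.

```python
import numpy as np

rng = np.random.default_rng(0)

def hidden_block(X, W, b, gamma, beta, drop_rate=0.2, training=True, eps=1e-5):
    """Hypothetical sub-network layer: Dense -> ReLU -> BatchNorm -> Dropout."""
    Z = np.maximum(0.0, X @ W + b)                    # dense layer + ReLU
    mu, var = Z.mean(axis=0), Z.var(axis=0)           # per-feature batch statistics
    Z = gamma * (Z - mu) / np.sqrt(var + eps) + beta  # batch normalization
    if training:
        # Inverted dropout: zero a random subset and rescale the survivors,
        # so no rescaling is needed when dropout is disabled at inference.
        keep = rng.random(Z.shape) >= drop_rate
        Z = Z * keep / (1.0 - drop_rate)
    return Z

X = rng.normal(size=(32, 10))                          # a batch of 32 samples
W, b = rng.normal(scale=0.3, size=(10, 16)), np.zeros(16)
out = hidden_block(X, W, b, gamma=np.ones(16), beta=np.zeros(16))
print(out.shape)  # (32, 16)
```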

2.3. Structured Dataset

Given the relatively limited research on the SNN’s application to structured data, this study seeks to investigate its performance and compare it with other widely used anomaly detection methods. Our goal is to determine whether the SNN can serve as an effective alternative for detecting anomalies in structured datasets, particularly in scenarios where labeled anomaly samples are scarce.

To ensure clarity and maintain focus on our core contribution, we chose structured datasets that exhibit high-dimensional features, imbalanced class distributions, and rare anomaly occurrences—key characteristics that are also commonly observed in biological datasets. While we did not include biological datasets in our experiments, our methodology is generalizable and can be extended to bioinformatics applications. Many biological datasets, such as genomic sequences, proteomics data, and medical imaging datasets, share similar challenges. Given these similarities, the proposed approach provides a promising foundation for future research in bioinformatics anomaly detection, where labeled anomaly cases are often scarce, and feature representation is critical for accurate classification.

2.4. Data Preprocessing: Pairwise Data Construction

Supervised anomaly detection methods typically classify data into normal and anomalous categories, framing the task as binary classification. Traditional classifiers require labeled training data, where each data point is assigned a class label. However, SNNs differ in that they learn from paired data samples, with each pair labeled as similar or dissimilar. Therefore, prior to training an SNN, it is essential to construct a dataset of paired samples. The process of constructing these pairs in our approach is as follows:

- 1.

Define a random binary variable $Z$, where $Z \in \{0, 1\}$ and both values are equally likely.

- 2.

If $Z = 0$, randomly select two samples from the normal class to form a pair and label them as similar. If $Z = 1$, randomly select one sample from the normal class and one from the anomalous class to form a pair and label them as dissimilar.
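A minimal sketch of this pair-construction procedure in plain Python follows. To guarantee the exact 1:1 ratio stated for the resulting dataset, it shuffles an equal number of $Z = 0$ and $Z = 1$ values instead of drawing each one independently; this design choice, the function name `build_pairs`, and the tuple layout are assumptions.

```python
import random

def build_pairs(normal, anomalous, n_pairs, seed=0):
    """Return a list of (sample_a, sample_b, label) tuples.

    label 0 = similar (two normal samples),
    label 1 = dissimilar (one normal sample + one anomaly).
    n_pairs should be even so that both labels occur equally often.
    """
    rng = random.Random(seed)
    # Equal numbers of Z = 0 and Z = 1 in random order (exact 1:1 ratio).
    labels = [0] * (n_pairs // 2) + [1] * (n_pairs // 2)
    rng.shuffle(labels)
    pairs = []
    for z in labels:
        if z == 0:
            a, b = rng.sample(normal, 2)                      # two distinct normal samples
        else:
            a, b = rng.choice(normal), rng.choice(anomalous)  # normal + anomaly
        pairs.append((a, b, z))
    return pairs

normal = [[0.1, 0.2], [0.0, 0.3], [0.2, 0.1], [0.1, 0.1]]  # toy normal samples
anomalous = [[3.0, 2.5], [2.8, 3.1]]                       # toy anomalies
pairs = build_pairs(normal, anomalous, n_pairs=10)
```

Because anomalies only ever appear in dissimilar pairs, the scarce anomalous samples are reused many times, which is how the pairing step multiplies the effective amount of training signal.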

Through this process, similar and dissimilar pairs are randomly generated in equal numbers. The resulting dataset, with a 1:1 ratio of similar to dissimilar pairs, is used to train the SNN model. During training, the output of the model for each pair is compared to a threshold value of 0.5:

- If the predicted similarity score is greater than 0.5, the sample compared against the normal reference is classified as normal.

- Otherwise, it is classified as anomalous.

As training progresses, the model iteratively refines its decision boundary, improving its accuracy. Each training batch maintains a controlled ratio of similar to dissimilar pairs, which keeps the learning process stable. The number of sample pairs and the feature dimensions are set based on the characteristics of the original dataset; multiple paired datasets are generated for training, and the one yielding the highest accuracy is retained.

2.5. Siamese Neural Network Prediction Framework

During evaluation, two prediction methods are used, as shown in Figure 1:

- 1.

Distance-Based Prediction [23]: Each test sample is paired with all normal training samples, and the Mean Euclidean Distance (MED) between the test sample's embedding and the embeddings of these normal samples is computed. If the MED exceeds 0.5, the test sample is classified as anomalous; otherwise, it is classified as normal.

- 2.

Feature Extraction for Classification: Instead of using MED for direct classification, the output of the last hidden layer of the SNN is extracted as a feature representation. These feature-mapped data are then used as input for another classifier, such as a Support Vector Machine (SVM) [24] or RF, to improve prediction performance.

Figure 1.

Prediction framework using a Siamese Neural Network. The left panel shows the Mean Euclidean Distance (MED) used as a classifier; the right panel shows the Siamese Neural Network used as a feature extractor.


By leveraging these two approaches, the SNN can be effectively used both as an anomaly detector and as a feature extractor for downstream classification tasks.
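The distance-based rule can be sketched in NumPy, assuming the embeddings of the test samples and of the normal training samples have already been produced by the trained subnetwork. The 0.5 threshold follows the text; the function name `med_predict` and the toy embeddings are made up for illustration.

```python
import numpy as np

def med_predict(test_emb, normal_train_emb, threshold=0.5):
    """Distance-based prediction: returns (labels, med), with 1 = anomalous, 0 = normal.

    For each test embedding, average its Euclidean distance to every normal
    training embedding (the MED) and compare the result with the threshold.
    """
    # Broadcast to all (test, normal) pairs: shape (n_test, n_normal, n_dims).
    diffs = test_emb[:, None, :] - normal_train_emb[None, :, :]
    med = np.linalg.norm(diffs, axis=2).mean(axis=1)  # shape (n_test,)
    return (med > threshold).astype(int), med

normal_emb = np.array([[0.0, 0.0], [0.2, 0.0], [0.0, 0.2]])
test_emb = np.array([[0.1, 0.1], [2.0, 2.0]])
labels, med = med_predict(test_emb, normal_emb)
print(labels)  # [0 1]
```

The cost grows with the number of normal training samples, since every test sample is compared against all of them; the feature-extraction route avoids this at prediction time.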

2.6. Compared Algorithms and Oversampling Methods

To validate the performance of the proposed method, we evaluate it using three supervised learning models (SNN, Support Vector Machine (SVM) [24], and Random Forest (RF) [9]) and two anomaly detection algorithms (Isolation Forest (IF) [25] and One-Class Support Vector Machine (OCSVM) [26]). This study implements classifier algorithms using the Scikit-Learn 1.1.1 package [27] in Python 3.9.13, with all models trained using their default hyperparameters.
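A minimal sketch of how the four scikit-learn baselines can be instantiated with default hyperparameters is shown below. The synthetic data are hypothetical, and fitting IF and OCSVM on the unlabeled features (and predicting on the same data) is a simplification for brevity. Note that scikit-learn's `IsolationForest` and `OneClassSVM` return −1 for outliers and +1 for inliers, so their outputs are remapped to the 1 = anomaly convention.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import IsolationForest, RandomForestClassifier
from sklearn.svm import SVC, OneClassSVM

# Hypothetical imbalanced data: label 1 = anomaly (about 5%).
X, y = make_classification(n_samples=500, n_features=10, weights=[0.95], random_state=1)

supervised = {"RF": RandomForestClassifier(), "SVM": SVC()}  # trained with labels
detectors = {"IF": IsolationForest(), "OCSVM": OneClassSVM()}  # fitted without labels

predictions = {}
for name, model in supervised.items():
    predictions[name] = model.fit(X, y).predict(X)
for name, model in detectors.items():
    # scikit-learn: -1 = outlier, +1 = inlier; remap to 1 = anomaly, 0 = normal.
    predictions[name] = (model.fit(X).predict(X) == -1).astype(int)

for name, pred in predictions.items():
    print(name, "flagged as anomalous:", int(pred.sum()))
```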

Additionally, we evaluate these models in combination with the Synthetic Minority Over-sampling Technique (SMOTE) [28] to investigate its impact on anomaly detection performance. Proposed by Chawla et al. [28], SMOTE was designed to mitigate the overfitting that arises when the minority class is oversampled simply by duplicating existing samples. The algorithm generates synthetic samples by interpolating between existing minority class samples and their nearest minority-class neighbors, balancing the class distribution and improving model performance on imbalanced datasets.
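The interpolation step at the core of SMOTE can be sketched in NumPy as follows. This is a simplified illustration (brute-force neighbor search, hypothetical function name `smote_sketch`), not the reference implementation; in practice a library class such as `imblearn.over_sampling.SMOTE` from imbalanced-learn is commonly used, and which implementation the study used is not stated.

```python
import numpy as np

def smote_sketch(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic minority samples by SMOTE-style interpolation.

    For a randomly chosen minority sample x_i, pick one of its k nearest
    minority neighbours x_nn and return x_i + lam * (x_nn - x_i), lam ~ U(0, 1).
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    # Pairwise distances among minority samples; exclude each sample itself.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(d, axis=1)[:, :k]
    synthetic = np.empty((n_new, X_min.shape[1]))
    for j in range(n_new):
        i = rng.integers(len(X_min))
        nn = X_min[rng.choice(neighbours[i])]
        lam = rng.random()
        synthetic[j] = X_min[i] + lam * (nn - X_min[i])  # point on the segment x_i -> x_nn
    return synthetic

X_min = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
S = smote_sketch(X_min, n_new=50, k=2)
```

Every synthetic point lies on a line segment between two real minority samples, so the new data stay inside the region already occupied by the minority class rather than duplicating exact points.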

The nine evaluated algorithmic combinations used in the study are as follows:

- 1.

SNN (as feature extractor) + SVM;

- 2.

SNN (as feature extractor) + RF;

- 3.

Traditional SNN with MED for classification;

- 4.

SMOTE + RF;

- 5.

SMOTE + SVM;

- 6.

RF;

- 7.

SVM;

- 8.

IF;

- 9.

OCSVM.

By comparing these methods, we aim to assess the effectiveness of SNN-based anomaly detection and investigate the potential benefits of integrating oversampling techniques.

2.7. Evaluation Metrics

This section introduces the evaluation metrics used in this study. Since anomaly detection tasks inherently involve class imbalance, the selection of appropriate metrics is crucial. Standard accuracy is often insufficient for evaluating performance on imbalanced datasets, as it can favor the majority class while overlooking anomalies. Therefore, we employ Recall, Specificity, Precision, F1-Score, G-Mean, and Balanced Accuracy as our evaluation metrics.

Before detailing these metrics, we first introduce the confusion matrix in Table 2.

For instance, given the confusion matrix shown in Table 3, the model correctly classified 950 anomalous instances (true positives) and 80 normal instances (true negatives). However, it misclassified 50 anomalous instances as normal (false negatives) and 20 normal instances as anomalous (false positives).

Using the four values from the confusion matrix, we define the following performance metrics:

- 1.

True Positive Rate (TPrate): Also known as Recall or Sensitivity, it measures the proportion of actual anomalous samples correctly classified as anomalies:

$$\text{TPrate} = \frac{TP}{TP + FN}$$

- 2.

True Negative Rate (TNrate): Also referred to as Specificity, it represents the proportion of actual normal samples correctly classified as normal:

$$\text{TNrate} = \frac{TN}{TN + FP}$$

- 3.

Precision: It quantifies how many of the samples predicted as anomalous are truly anomalous:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Building upon these three fundamental metrics, we further define F1-Score, G-Mean, and Balanced Accuracy, which offer more robust evaluation criteria for imbalanced classification tasks. For the confusion matrix in Table 3, a Precision of 0.98 indicates that 98% of the instances predicted as anomalies are truly anomalous, so false positives are rare. A Recall of 0.95 means the model correctly identifies 95% of actual anomalies, demonstrating strong sensitivity. A Specificity of 0.80 shows that 80% of normal instances are correctly classified, corresponding to a false alarm rate of 20%. The F1-Score of 0.96, the harmonic mean of Precision and Recall, summarizes the model's performance on the anomaly class. The G-Mean of 0.87, the geometric mean of sensitivity and specificity, indicates how well the model balances performance on both classes under class imbalance. Lastly, the Balanced Accuracy of 0.88, the arithmetic mean of sensitivity and specificity, suggests that the model performs well across both the anomaly and normal classes, making it suitable for imbalanced data scenarios.
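For reference, the standard definitions of the three composite metrics, which we assume match those summarized in Table 4, are given below together with the arithmetic for the Table 3 example (TP = 950, FN = 50, FP = 20, TN = 80):

```latex
\begin{aligned}
\text{Precision} &= \frac{950}{950 + 20} \approx 0.979, \qquad
\text{TPrate} = \frac{950}{950 + 50} = 0.95, \qquad
\text{TNrate} = \frac{80}{80 + 20} = 0.80,\\[4pt]
\text{F1-Score} &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
  = \frac{2 \cdot 0.979 \cdot 0.95}{0.979 + 0.95} \approx 0.96,\\[4pt]
\text{G-Mean} &= \sqrt{\text{TPrate} \cdot \text{TNrate}} = \sqrt{0.95 \cdot 0.80} = \sqrt{0.76} \approx 0.87,\\[4pt]
\text{Balanced Accuracy} &= \frac{\text{TPrate} + \text{TNrate}}{2} = \frac{0.95 + 0.80}{2} = 0.875 \approx 0.88.
\end{aligned}
```

Unlike F1-Score, which depends only on the anomaly class, G-Mean and Balanced Accuracy both include TNrate, so they drop when the model produces many false alarms on normal data.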

Table 4 summarizes the six performance metrics used in this study.
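The same computations can be checked in plain Python. The following minimal sketch derives all six metrics from confusion-matrix counts, treating the anomaly class as positive and using the Table 3 example; the function name `imbalance_metrics` is illustrative.

```python
import math

def imbalance_metrics(tp, fn, fp, tn):
    """Six metrics from confusion-matrix counts (positive class = anomaly)."""
    recall = tp / (tp + fn)          # TPrate / Sensitivity
    specificity = tn / (tn + fp)     # TNrate
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    g_mean = math.sqrt(recall * specificity)
    balanced_acc = (recall + specificity) / 2
    return {"Recall": recall, "Specificity": specificity, "Precision": precision,
            "F1": f1, "G-Mean": g_mean, "Balanced Accuracy": balanced_acc}

# Counts from the Table 3 example: TP = 950, FN = 50, FP = 20, TN = 80.
for name, value in imbalance_metrics(950, 50, 20, 80).items():
    print(f"{name}: {value:.3f}")
```

Rounded to two decimals, the printed values agree with those quoted in the text (0.95, 0.80, 0.98, 0.96, 0.87, 0.88).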

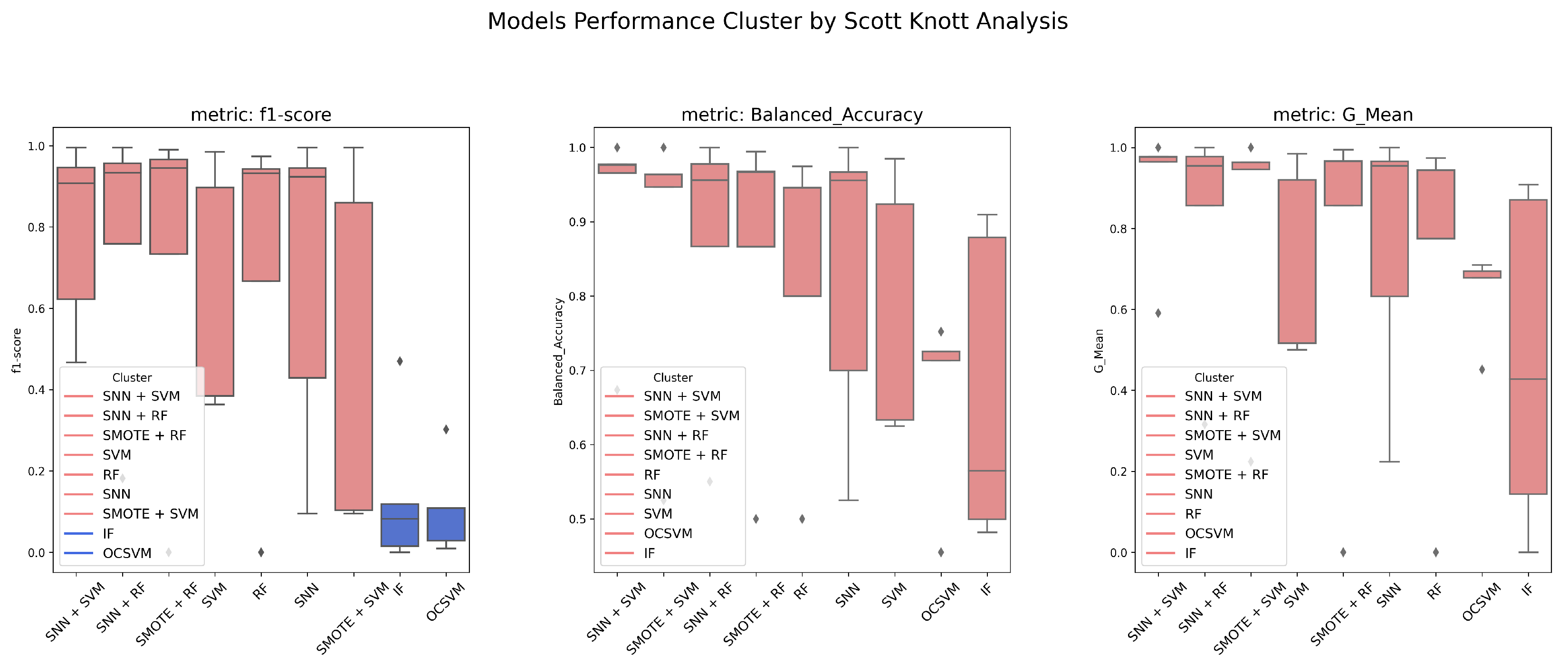

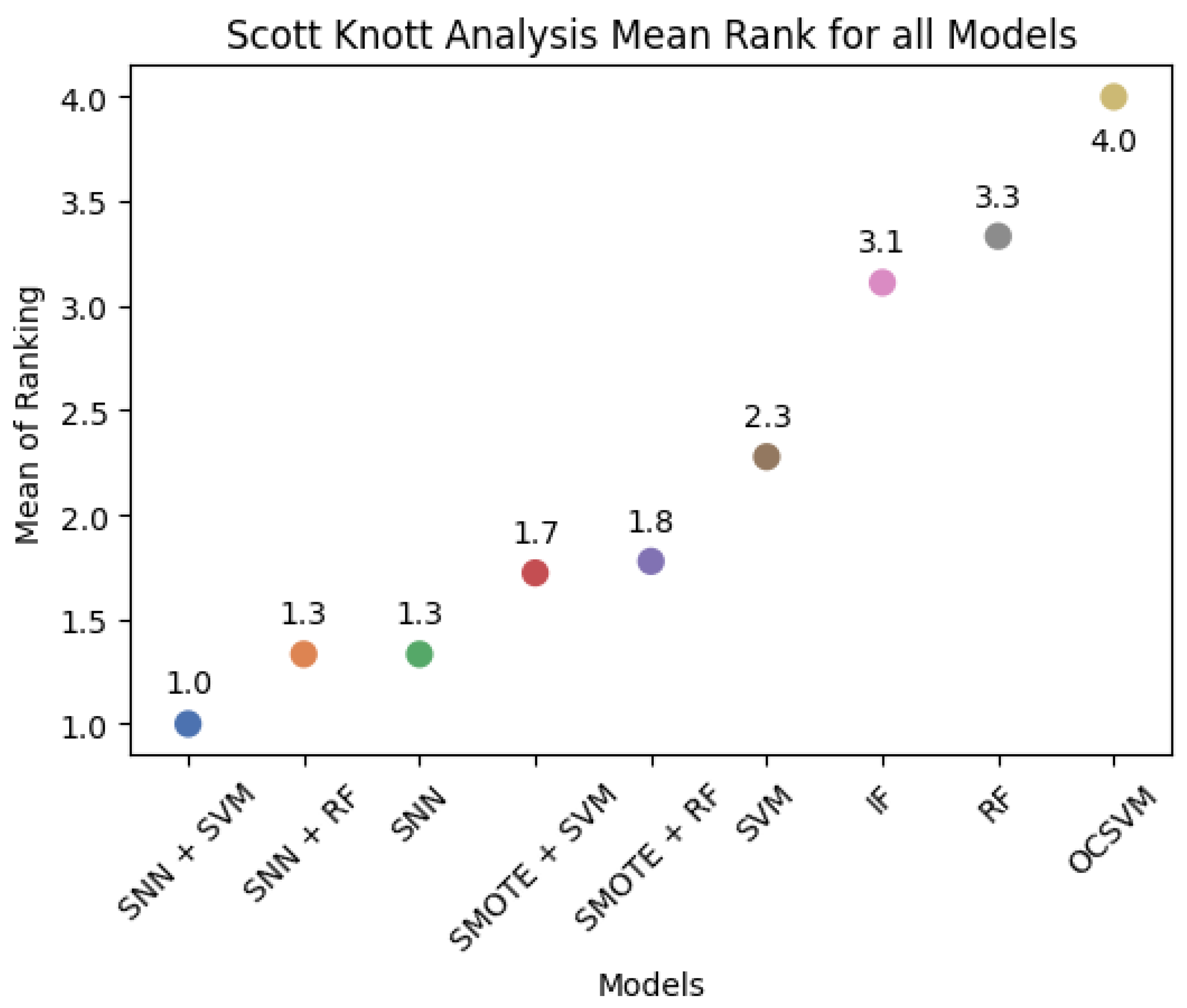

2.8. Scott–Knott Analysis

This study evaluates model performance using six different metrics and applies the Scott–Knott hierarchical clustering method [29] to rank and group models based on their F1-Score, G-Mean, and Balanced Accuracy. The Scott–Knott method partitions models into statistically distinct groups by performing one-way analysis of variance (ANOVA) to detect significant differences between groups. Models with statistically similar performance are clustered together to provide meaningful rankings.

For each dataset, we perform five independent random resampling procedures to obtain multiple prediction results for each model. The Scott–Knott statistical method is then applied to identify performance-based model clusters. The detailed steps of the Scott–Knott process are as follows:

- 1.

Rank all models based on their performance across evaluation metrics, such as Recall and Precision.

- 2.

After ranking, compute the average value of each model and group them into two clusters based on their mean performance (e.g., the average Recall of all models).

- 3.

Perform one-way ANOVA to test for statistically significant differences between the two clusters. If a significant difference is detected, the clusters are further subdivided iteratively until no significant differences remain.

- 4.

The final step assigns each model to a specific performance group, providing a ranking that reflects the statistical differences among models.

By using the Scott–Knott method, we can effectively rank and categorize models based on statistically meaningful performance differences, enabling a robust comparison of their effectiveness.
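The recursive procedure above can be sketched in Python with SciPy. This is a simplified variant that follows the description in the steps (sort by mean, choose the split that maximizes the between-group sum of squares, test it with one-way ANOVA, recurse); the original Scott–Knott test uses a likelihood-ratio statistic, and the study's exact implementation is not stated. The model names and scores are hypothetical.

```python
import numpy as np
from scipy.stats import f_oneway

def scott_knott(scores, alpha=0.05):
    """Group models whose scores are not significantly different.

    scores: dict model_name -> list of metric values (e.g. F1 over 5 resamples).
    Returns a list of groups (lists of model names), best mean first.
    """
    names = sorted(scores, key=lambda n: np.mean(scores[n]), reverse=True)

    def split(group):
        if len(group) < 2:
            return [group]
        means = np.array([np.mean(scores[n]) for n in group])
        grand = means.mean()
        # Choose the cut that maximizes the between-group sum of squares.
        best_k, best_ss = 1, -1.0
        for k in range(1, len(group)):
            left, right = means[:k], means[k:]
            ss = len(left) * (left.mean() - grand) ** 2 + len(right) * (right.mean() - grand) ** 2
            if ss > best_ss:
                best_k, best_ss = k, ss
        # One-way ANOVA on the pooled per-run scores of the two candidate groups.
        left_vals = np.concatenate([scores[n] for n in group[:best_k]])
        right_vals = np.concatenate([scores[n] for n in group[best_k:]])
        _, p_value = f_oneway(left_vals, right_vals)
        if p_value < alpha:
            return split(group[:best_k]) + split(group[best_k:])
        return [group]

    return split(names)

f1_scores = {  # hypothetical F1 values over five resampling runs
    "model_A": [0.90, 0.91, 0.92, 0.90, 0.91],
    "model_B": [0.89, 0.90, 0.91, 0.90, 0.90],
    "model_C": [0.60, 0.62, 0.61, 0.59, 0.60],
    "model_D": [0.58, 0.60, 0.61, 0.59, 0.60],
}
print(scott_knott(f1_scores))
```

With these toy scores the first split separates the two strong models from the two weak ones, and neither half splits further because the within-pair differences are not significant at `alpha = 0.05`.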

2.9. Datasets

Five widely used anomaly detection datasets were used in this study. The Wafer dataset [30] originates from the UEA & UCR Time Series Classification Repository and represents semiconductor wafer data. The Credit Card Fraud dataset [31] contains credit card transactions, including fraudulent ones, collected from European financial institutions. The Backdoor dataset [32] consists of network intrusion detection data related to backdoor cyberattacks. The Speech dataset [33] comprises speech recordings, representing voice-based demographic data collected from the U.S. population. Lastly, the Census dataset [31] is derived from the U.S. Census Bureau's income survey, capturing demographic records of individuals with an annual income exceeding USD 50,000.

Due to computational constraints, a random subset of each dataset was sampled for analysis. The dataset characteristics are summarized in Table 5.

Anomaly Ratio Adjustment: Wafer Dataset

The Wafer dataset used in this study originates from the UEA & UCR Time Series Classification Repository and was introduced by Olszewski (2001) [30]. This dataset has been widely used for time-series feature extraction research and represents sensor measurements collected during semiconductor manufacturing processes. Each wafer contains 152 sensor features, capturing readings from multiple monitoring points throughout the production line. Based on these features, wafers are classified into normal and anomalous categories.

For this study, we extracted a subset of 5789 samples for training and 1000 samples for testing, maintaining the original 5.28% anomaly ratio in the training set.

Table 6 summarizes the number of normal and anomalous samples in the training and testing sets.

To evaluate how class imbalance affects model performance, we systematically adjusted the anomaly ratio in the Wafer dataset. The anomaly ratio is the proportion of anomalous samples in the dataset, i.e., the number of anomalies divided by the total number of samples. A lower anomaly ratio indicates a more severely imbalanced dataset in which anomalies are rare, making detection more challenging. Conversely, a higher anomaly ratio indicates a more balanced distribution between normal and anomalous instances.

Table 7 lists the different anomaly ratios tested in our study, along with the corresponding number of normal and anomalous samples.

By systematically reducing the anomaly ratio, we aim to observe how different models adapt to varying degrees of class imbalance and evaluate their robustness in detecting rare anomalies.
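One way to reach a target anomaly ratio $r$ is sketched below, assuming the normal samples are kept fixed and only anomalies are subsampled. Solving $r = a / (n + a)$ for the number of anomalies $a$, given $n$ normal samples, yields $a = r\,n / (1 - r)$. The study's actual counts are those in Table 7, not the output of this code; the function name, sample IDs, and counts here are hypothetical.

```python
import random

def subsample_anomalies(normal, anomalous, target_ratio, seed=0):
    """Keep every normal sample and randomly keep just enough anomalies so that
    n_anom / (n_normal + n_anom) is as close as possible to target_ratio."""
    if not 0.0 < target_ratio < 1.0:
        raise ValueError("target_ratio must be in (0, 1)")
    # Solve r = a / (n + a) for a:  a = r * n / (1 - r)
    n_keep = round(target_ratio * len(normal) / (1.0 - target_ratio))
    if n_keep > len(anomalous):
        raise ValueError("not enough anomalies for the requested ratio")
    kept = random.Random(seed).sample(anomalous, n_keep)
    return normal, kept

normal = list(range(950))            # hypothetical normal sample IDs
anomalous = list(range(1000, 1050))  # hypothetical anomaly IDs (5% of 1000)
for r in (0.05, 0.02, 0.01):
    n, a = subsample_anomalies(normal, anomalous, r)
    print(r, len(a), round(len(a) / (len(n) + len(a)), 4))
```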

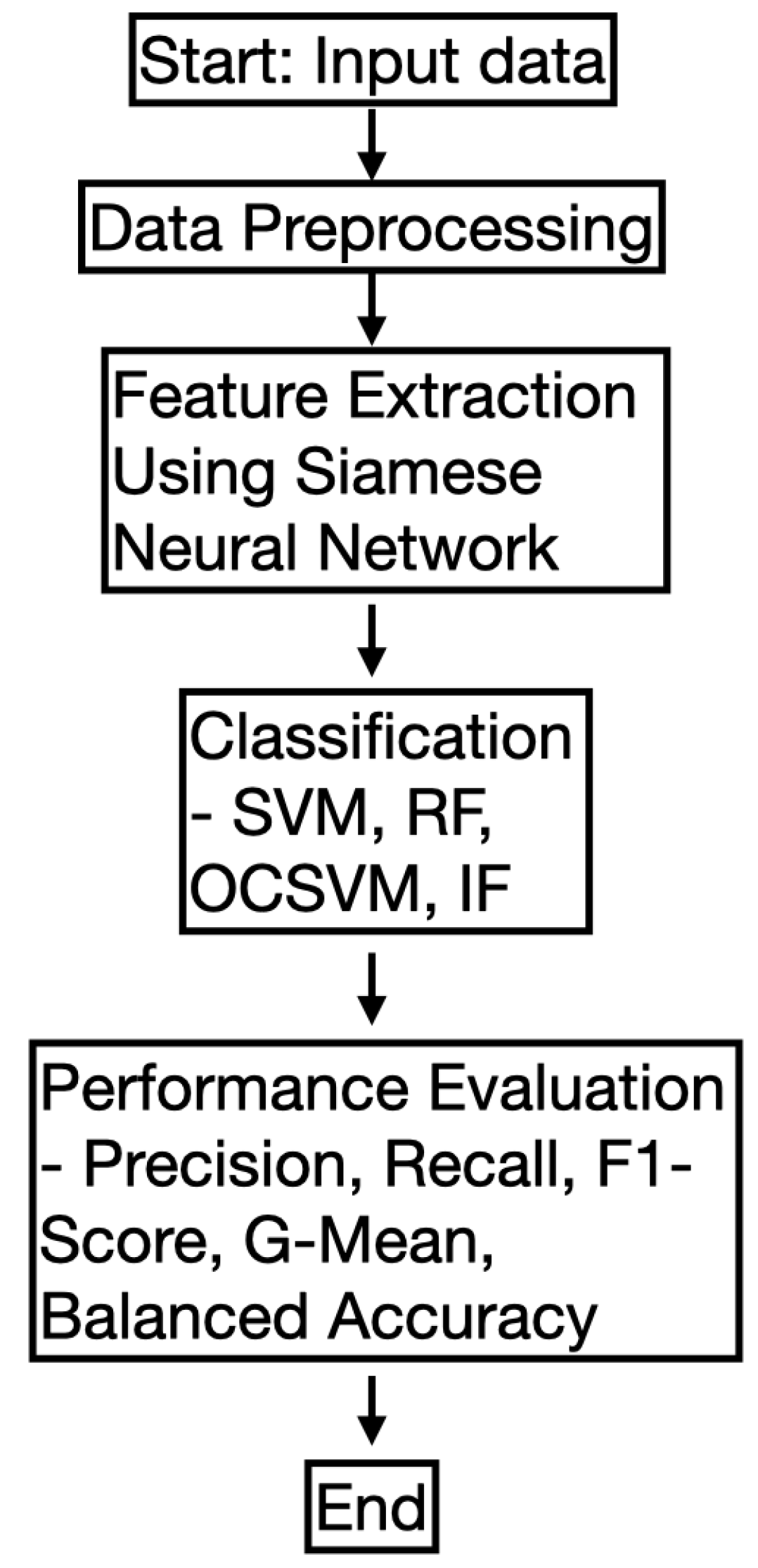

2.10. Flowchart of the Proposed Methodology

In this subsection, we provide a flowchart of the proposed methodology to enhance clarity. The workflow consists of several key steps, including data preprocessing, feature extraction using a Siamese Neural Network (SNN), classification using various models, and performance evaluation. The flowchart in Figure 2 visually represents the overall process.

4. Conclusions

This study investigated the use of Siamese Neural Networks (SNNs) for anomaly detection in structured datasets, demonstrating their effectiveness in learning meaningful feature representations and improving detection accuracy, particularly in imbalanced data scenarios. Our work contributes to the field by repurposing SNNs as feature extractors, an approach rarely explored in structured anomaly detection, and demonstrating their ability to transform raw data into a more discriminative feature space. By addressing challenges such as limited labeled anomalies and class imbalance, our study fulfills its objective of proposing a few-shot learning-based anomaly detection framework that enhances classification performance.

We systematically compared SNN-based feature extraction with conventional classifiers, anomaly detection models, and oversampling techniques. Our results indicate that SNNs outperform traditional methods, such as OCSVM and Isolation Forest, which, despite their high Recall, suffer from increased false positives. Additionally, SNNs maintain stability in highly imbalanced environments, unlike SVM and Random Forest, which are more susceptible to class distribution skew. The Scott–Knott analysis further revealed that model rankings vary across datasets, emphasizing the importance of pre-processing and feature selection in optimizing anomaly detection performance.

Despite these contributions, our study has certain limitations. First, while SNNs demonstrated strong predictive performance, overfitting remains a concern, particularly in smaller datasets. Optimizing neural network architectures, such as appropriate neuron allocation and gradual layer reduction, may mitigate this issue. Second, our study did not evaluate computational efficiency, which is crucial for real-time anomaly detection applications. Future work should include a runtime analysis to assess model scalability.

Future research should explore stochastic generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), for feature augmentation to further enhance anomaly detection in structured data. Given the prevalence of sequential and time-series data in bioinformatics, integrating Recurrent Neural Networks (RNNs) or Long Short-Term Memory (LSTM) models could improve anomaly detection in sequential biomedical datasets, such as genomic data, biosensor readings, and medical signals.

In conclusion, SNNs provide a promising approach for anomaly detection in structured data by leveraging few-shot learning and feature transformation. This study contributes to the development of more robust and generalizable anomaly detection frameworks, with potential applications in bioinformatics, medical diagnostics, and computational biology. By addressing current limitations and integrating advanced deep learning techniques, future research can further refine SNN-based models for structured anomaly detection in complex, real-world environments.