Abstract

Deep learning network models are crucial in processing images acquired from optical, laser, and acoustic sensors in ocean intelligent perception and target detection. This work comprehensively reviews ocean intelligent perception and image processing technology, including ocean intelligent perception devices and image acquisition, image recognition and detection models, adaptive image processing processes, and coping methods for nonlinear noise interference. As the core tasks of ocean image processing, image recognition and detection network models are the research focus of this article, particularly the development of deep learning network models for ocean image recognition and detection, such as SSD, the R-CNN series, and the YOLO series. A detailed analysis of the mathematical structure of the YOLO model and of the differences between its versions, which determine the detection accuracy and inference speed, provides a deeper understanding of these models. This work also reviews adaptive image processing processes and their critical support for ocean image recognition and detection, such as image annotation, feature enhancement, and image segmentation. Research and practical applications show that nonlinear noise significantly affects underwater image processing. When combined with image enhancement, data augmentation, and transfer learning methods, deep learning algorithms can effectively address the challenges of underwater image degradation and nonlinear noise interference. This work offers a distinct perspective by highlighting the mathematical structure of the network models for ocean intelligent perception and image processing. It also discusses the benefits of DL-based denoising methods in signal–noise separation and noise suppression, and it is expected to inspire and motivate more valuable research in related fields.

MSC:

68T07

1. Introduction

With the development of artificial intelligence, sensors, and information communication, ocean environment intelligent perception and target detection [,] technology has been comprehensively developed and applied. Intelligent perception of the marine environment relies on intelligent perception devices [,], ocean image datasets [,,], and deep learning algorithms. Intelligent detection devices perceive the marine environment by sending detection signals and acquiring and processing target images. In order to enhance the environmental perception abilities of intelligent detection devices in complex marine environments, vision-based ocean object detection algorithms have been used as a key technical tool []. Zha et al. [] proposed a deep learning-based computer vision deblurring method to improve the spatial resolution of beamforming images. Sattar et al. [] presented a vision-processing method for underwater target tracking using various image-processing tools, such as color segmentation, color histograms, and the mean shift. Underwater image processing is a critical issue in ocean intelligent perception []. Xu et al. [] introduced the image pre-processing subsystem (IMPS) of the Geostationary Ocean Color Imager (GOCI) for the Communication, Ocean, and Meteorological Satellite (COMS) and described its functions, development status, and operational concepts. Chen and Liu [] established a VQA model with an attention mechanism and studied its application in ocean image processing. Furthermore, the advancement of ocean image processing technology is significantly driven by the use of deep learning algorithms. For example, Xue et al. [] proposed an underwater acoustic recognition and image processing technology based on deep learning, adopting a feature classification method based on one-dimensional convolution to identify target images. Gong et al. [] proposed a classification method based on deep separable convolutional feature fusion to improve the accuracy of underwater target classification.

However, underwater target detection faces many problems and challenges, such as insufficient sample data, low image resolutions [], poor signal-to-noise ratios [], and signal loss in complex underwater environments []. Chen et al. [] pointed out that the interference of ocean turbulence can cause severe information degradation when a laser beam carrying image information is transmitted in seawater. The measures to address these issues mainly include image enhancement and interference reduction. Multi-scale image feature fusion [,,] provides a practical approach for underwater image enhancement and reconstruction []. For example, Gong et al. [] proposed an underwater image enhancement method based on color feature fusion, which introduces an attention mechanism to design a residual enhancement module that strengthens the feature expression ability of underwater images. Chen et al. [] proposed an underwater crack image enhancement network (UCE CycleGAN) that utilizes multi-feature fusion to reduce blur and enhance texture details. Regarding interference reduction, DL-based wave suppression algorithms are effectively applied in ocean engineering; for example, researchers use them to reconstruct or improve SAR image resolution [,]. Jiang et al. [] designed an improved deep convolutional generative adversarial network (DCGAN) for automatically detecting internal waves in the ocean and reducing their interference. Compared with traditional methods, deep learning algorithms can achieve higher accuracy and better image processing performance in ocean intelligent perception, especially for radar and sonar detection [].

Several scholars have reviewed the underwater ocean object detection technologies based on deep learning [] and image processing with deep learning []. Li et al. [] summarized the key advances in the application of DL algorithms to the visual recognition and detection of aquatic animals, including the datasets, algorithms, and performance. Researchers have also conducted relevant review work [,] on ocean intelligent detection and image processing, referring to both acoustic [,] and optical image processing []. For example, Chai et al. [] reviewed the research on DL-based sonar image algorithms, including key technologies in denoising, feature extraction, classification, detection, and segmentation. The YOLO series has been widely developed and extensively researched, being a well-known DL-based network model for the processing of ocean intelligent detection images. Wang et al. [] reviewed the development of YOLOv1 to YOLOv10, focusing on the functional structure improvements. Hussain [] reviewed the progressive improvements spanning YOLOv1 to YOLOv8, focusing on their key architectural innovations and application performance. Jiao and Abdullah [] discussed the relevant capabilities of the YOLOv1 to YOLOv7 series algorithms and compared their algorithmic performance. This study comprehensively describes the mathematical structures of the YOLO models, the differences in their network architectures and detection algorithms, and the influence of nonlinear noise from the ocean environment on signal image processing, as well as potential countermeasures. This work provides unique research perspectives and reference value for researchers in related fields. 
It includes the following areas of focus: ocean intelligent perception devices and image acquisition (Section 2), image recognition and detection models (Section 3), adaptive image processing processes supporting image recognition and detection (Section 4), and coping methods for nonlinear noise interference in image detection (Section 5). Section 6 presents the conclusions and discusses future research directions.

2. Ocean Intelligent Perception Devices and Image Acquisition

Ocean intelligent perception devices, such as autonomous underwater vehicles (AUVs) [], sonar sensors, LiDAR, and optical cameras, perceive the ocean environment by sending and receiving detection signals. Thus, the acquisition and analysis of signal images have become important aspects of ocean intelligent perception.

2.1. Autonomous Underwater Vehicles with Multiple Sensors

AUVs have been widely applied in the ocean intelligent detection field, enabling the automatic identification and location of targets in diverse marine environments. Integrated with multiple sensors and devices, AUVs can automatically complete various ocean intelligent detection tasks, including multi-target detection, mobile target detection, remote target detection, and real-time detection.

Optical sensors sense the marine environment by sending and receiving optical signals. An optical camera can provide high-resolution real-time images and is suitable for clear-water areas and short-range detection. Wang et al. [] pointed out that optical sensors can provide more detailed and comprehensive depictions than acoustic sensors in short-range underwater detection. Cameras are widely used to detect and track underwater targets []; Rao et al. [] further proposed a visual detection and tracking system for moving maritime targets using a multi-camera collaborative approach. Yang et al. [] summarized the use of RGB cameras in intelligent ocean target detection, analyzing their performance in terms of accuracy, speed, and robustness. Combined with multi-sensor systems and advanced machine vision techniques [], such intelligent marine detection equipment demonstrates powerful detection capabilities.

Acoustic sensors sense the marine environment by sending and receiving acoustic signals. In turbid waters, complex environments, and long-distance detection, the application value of acoustic signal detection in ocean exploration far exceeds that of electromagnetic waves and visual signals [,]. Thus, AUVs are usually equipped with sonar sensors [,,] to detect targets and perceive the complex underwater environment of the ocean. They collect image data and combine them with data from other sensors for a comprehensive analysis, providing more extensive marine environmental information. Acoustic sensors represent a powerful tool and method for ocean intelligent detection, particularly when AUVs are supported by multi-sensor devices and deep learning algorithms [,].

Laser sensors perceive the marine environment by sending and receiving laser signals. Usually implemented in AUVs and LiDAR, they stand out due to their ability to quickly acquire high-resolution and accurate detection images during ocean observation and surveys [,]. This efficiency makes them more suitable for long-distance and real-time detection, highlighting their superiority in specific applications, such as underwater laser ranging [], underwater laser imaging [], and underwater laser mapping [].

In summary, AUVs equipped with multi-sensor systems demonstrate remarkable adaptability, as they utilize various signals for ocean target detection, including acoustic, light, electromagnetic, and laser signals. Sonar is the most widely used type of detection signal and is applied in various areas of ocean environment perception. The technical principles and methods of acoustic signal detection are detailed below.

2.2. Sonar Detection Image Acquisition

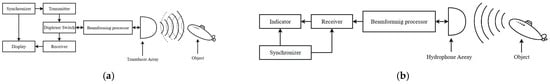

Sound navigation and ranging (sonar) is the most widely used sensing technology in ocean detection. It harnesses sound waves for a multitude of tasks, from ocean exploration to target recognition, encompassing both active and passive sonar detection, as depicted in Figure 1. Active sonar is a key tool in ocean exploration. It includes forward-looking sonar (FLS), side-scan sonar (SSS), and synthetic aperture sonar (SAS).

Figure 1.

Sonar detection types: (a) active sonar; (b) passive sonar [].

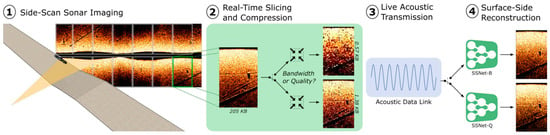

In ocean intelligent detection, AUVs are equipped with various sensors to perform different tasks; among these, forward-looking sonar (FLS) [,], side-scan sonar (SSS) [], and dual-frequency identification sonar (DIDSON) [] are the most common sonar technologies. FLS and SSS represent basic mapping sensors in underwater detection. Early sonar detection technology enabled the construction of two-dimensional maps for AUVs. For example, the 2D mapping performed via FLS allows AUVs to obtain environmental information in the direction of navigation. FLS is mainly used for forward detection and enables real-time imaging and measurement in front of an AUV. In addition, high-frequency FLS is useful in detecting and recognizing small underwater objects due to the high-resolution maps that it generates. SSS is mainly used for side detection in AUVs; it can provide high-resolution signal images for underwater target recognition and detection. Together, these sonar sensors provide high-resolution underwater images that assist AUVs in detecting underwater terrain and landforms. By combining FLS and SSS, AUVs can obtain 3D mapping information from sonar detection signals [] as well as real-time detection information in different directions in complex underwater environments. Taking SSS as an example, the real-time acoustic detection process of sonar sensors is shown in Figure 2.

Figure 2.

The real-time acoustic detection process of side-scan sonar [].  represents the image compression process.


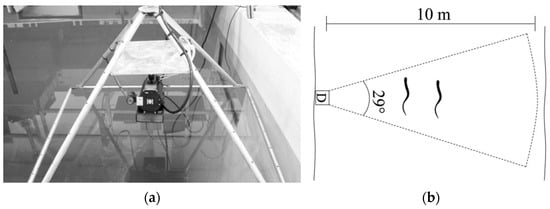

Dual-frequency identification sonar (DIDSON) is a sonar technology that uses sound waves at two different frequencies (1.8 and 1.1 MHz) for detection and identification. It was first developed by the Applied Physics Laboratory of the University of Washington for the United States Navy []. By using the two frequencies simultaneously or alternately, DIDSON can obtain more information, providing real-time underwater images with near-video quality []. Combined with a coaxially mounted low-light video camera (Figure 3a), DIDSON can obtain relatively high-quality detection images by using a more extensive sampling window (Figure 3b); this has been widely applied in marine fish detection [,,].

Figure 3.

Working process of DIDSON: (a) low-light video camera []; (b) larger sampling window of the dual-frequency identification sonar [].

2.3. Resource Limitations of Ocean Intelligent Perception Devices

Underwater sensors have significant resource constraints from energy storage and communication methods when detecting underwater targets []. For example, the wireless communication performance of underwater wireless sensor network (UWSN) nodes is poor in underwater environments []. The complex and ever-changing marine environment makes it difficult for underwater fiber-optic acoustic sensors to achieve high-precision identification, positioning, and tracking []. The insufficient real-time performance and refresh rate of underwater acoustic positioning and navigation systems further exacerbate the situation []. The fusion problem of multi-source information data makes it difficult for autonomous underwater vehicles (AUVs) to perceive the external environment accurately []. Insufficient capacity of edge devices for underwater data collection entities leads to difficulties in processing complex data []. Lastly, the nonlinear noise interference caused by underwater radiated noise (URN) [] significantly affects the detection accuracy of underwater sensing equipment.

The above resource constraints pose significant challenges to high-precision detection, real-time detection, and multi-modal fusion detection of ocean intelligent perception, requiring more advanced intelligent image processing algorithms, multi-sensor fusion methods [], denoising methods, and lightweight AI [,] models to address these challenges.

3. Ocean Image Recognition and Detection Models

DL-based network models are used in ocean intelligent perception and detection to analyze and process the signal images received by optical, acoustic, or laser sensors. Deep learning network models have played an important role in ocean detection signal processing, encompassing tasks such as image denoising [], feature extraction [], classification [], recognition [], and detection [] across sonar images [,], optical images [], and laser images []. Chai et al. [] reviewed the applications of various deep learning networks in image processing, providing a thorough analysis of the relevant types involved.

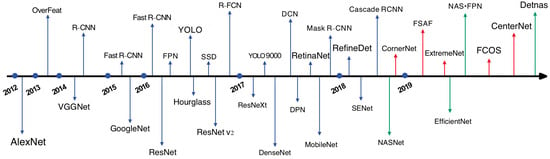

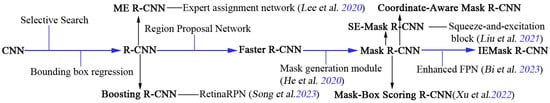

From the perspective of technological development, underwater target feature extraction and recognition technology has progressed from traditional time–frequency-domain methods to deep learning algorithms and end-to-end network models. The former type of method relies on a manually designed feature extraction process, which can easily lead to information loss and poor environmental adaptability. The latter two types of methods both rely on deep learning network models. Yang et al. [] presented a significant advancement in the field, namely the end-to-end underwater acoustic target recognition model (1DCTN), which could achieve a relatively high recognition rate. Multi-target detection typically relies on deep learning models and devices such as cameras and uncrewed boats to detect ocean targets. Underwater object detection technology is evolving rapidly, as shown in Figure 4, and the 1DCTN model is a key player in this transformation, providing crucial support for autonomous devices in ocean environmental perception and detection [].

Figure 4.

The object detection milestones [].

3.1. Deep Convolutional Neural Networks

Deep convolutional neural networks (DCNNs) [,,,] are the most well-known network models used for image feature extraction and recognition. In ocean target detection, they are typically used to extract image features that capture local and global visual information. CNNs are commonly applied in marine acoustic detection and image analysis [,,], such as signal image recognition and classification for sonar or synthetic aperture radar (SAR) []. These models can learn features from a large amount of annotated data, significantly improving the accuracy and robustness of detection. For example, Ashok and Latha studied the use of convolutional neural networks to extract underwater acoustic signal image features by constructing a DNN called the Audio Perspective Region-Based Convolutional Neural Network (APRCNN) []. Wang and Li [] discussed the application of CNNs and their variants in the processing and analysis of ocean remote sensing data. By contrast, conventional object detection algorithms rely on hand-crafted feature methods such as HOG, SIFT, and SURF and are unsuitable for dynamic and real-time target detection in complex scenes. When basic CNNs are applied to ocean image recognition using small samples, challenges arise due to the marine environment’s complexity, background interference, the diversity and variability of marine targets, uneven datasets, and the requirements for real-time detection. Despite these challenges, CNNs have clear potential to improve the accuracy and robustness of detection in complex underwater environments. Nevertheless, a DCNN is a complex model with many convolutional and pooling layers, and it requires large amounts of inference time and computation when detecting ocean targets. Thus, more lightweight and accurate models are needed for real-time detection in complex underwater environments.

3.2. Two-Stage Detection Network Model

Due to their high detection precision, efficiency, and flexibility, two-stage detection network models have been widely developed and applied in ocean intelligent detection. Taking the original R-CNN as an example, the workflow includes the following steps. The first step uses the selective search algorithm [] to generate a series of candidate region proposals from the input image, each of which may contain a target object. The second step extracts features from each candidate region using pre-trained convolutional neural networks such as AlexNet [] and VGG []. These features describe the content within the region. In the last step, the extracted features are input into a support vector machine (SVM) [] for classification to determine whether the candidate region contains the target object. At the same time, a regression model performs bounding box regression on the candidate regions, adjusting the bounding box coordinates to fit the object better and thus improving the accuracy of the target position. The R-CNN series consists of several well-known two-stage object detection algorithms [,], including Faster R-CNN, Mask R-CNN, Cascade R-CNN, and other improved models. Ocean target detection based on R-CNN can thus be divided into selective search, feature extraction, classification, and regression. However, the main limitation of R-CNN in ocean target detection is that its computational efficiency is relatively low, because each region proposal requires separate feature extraction through the CNN. In addition, the computational cost of the selective search algorithm is significant.
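The bounding box regression step can be illustrated with a minimal sketch. It assumes the center-size parameterization of the original R-CNN formulation, in which boxes are written as (cx, cy, w, h); the function names `encode_box` and `decode_box` and the numerical values are hypothetical and are not taken from the works reviewed here.

```python
import math

def encode_box(proposal, target):
    """Regression targets that map a proposal onto a ground-truth box.

    Both boxes are (cx, cy, w, h). Center shifts are normalized by the
    proposal size, and size changes are expressed in log space.
    """
    px, py, pw, ph = proposal
    gx, gy, gw, gh = target
    return ((gx - px) / pw,
            (gy - py) / ph,
            math.log(gw / pw),
            math.log(gh / ph))

def decode_box(proposal, deltas):
    """Apply predicted offsets to a proposal to obtain the refined box."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = deltas
    return (px + tx * pw,
            py + ty * ph,
            pw * math.exp(tw),
            ph * math.exp(th))

# Hypothetical proposal and ground-truth box, in pixels.
proposal = (50.0, 60.0, 40.0, 20.0)
target = (54.0, 58.0, 48.0, 25.0)

deltas = encode_box(proposal, target)   # what the regressor is trained to output
refined = decode_box(proposal, deltas)  # recovers the ground-truth box
```

Encoding and decoding are inverse operations, so a regressor that predicts the encoded offsets exactly would move the proposal onto the ground-truth box.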

Faster R-CNN has been improved based on R-CNN through the introduction of the Region Proposal Network (RPN). The RPN generates region proposals directly through the CNN, avoiding the selective search and significantly improving the speed. Faster R-CNN still uses the CNN for feature extraction and classification but integrates the region proposal generation process into the network. Therefore, Faster R-CNN allows significant improvements in both speed and accuracy, making it more suitable for multi-target and real-time detection. Moreover, it can quickly adapt to different marine environments and target types, such as SAR image detection [], underwater target detection [], and marine debris and biological exploration [,,].

Mask R-CNN [,] is a versatile extension of Faster R-CNN, significantly enhancing its capabilities by adding an instance segmentation functionality. Pixel-level segmentation is enabled by introducing a branch based on Faster R-CNN to produce binary masks for each target. Due to its excellent image segmentation abilities, Mask R-CNN has been widely adopted in various marine target detection tasks, such as SAR image detection [,], marine debris exploration [], and marine biological detection [,]. For instance, Mana and Sasipraba [] demonstrated the power of Mask R-CNN by developing a deep learning-based classification technique using a mask-based region convolutional neural network for fish detection and classification.

Furthermore, the development of improved R-CNN models for object detection has been undertaken as a collaborative effort, with practical applications in various fields. For example, Bi et al. [] contributed the Information-Enhanced Mask R-CNN (IEMask R-CNN), which uses the FPN structure; this enhances the valuable channel information and global information of feature maps. This model is particularly useful for segmentation tasks in computer vision. Lee et al. [] proposed an ME R-CNN with an expert assignment network (EAN), which captures various areas of interest in computer vision. Liu et al. [] explored an SE-Mask R-CNN by introducing a squeeze-and-excitation block into the ResNet-50 backbone, improving the speed and accuracy of fruit detection and segmentation. These advancements in R-CNN models have been further extended to enhance the performance of underwater target detection models, such as Boosting R-CNN [] for two-stage underwater exploration, Mask-Box Scoring R-CNN [] for sonar image instance segmentation, and Coordinate-Aware Mask R-CNN [] for fish detection in commercial trawl nets. Figure 5 illustrates the development of R-CNN and its partially improved models in ocean target detection.

Figure 5.

The development of R-CNN and its partially improved models in ocean target detection [,,,,,].

3.3. Single-Stage Detection Network Model

The network models mentioned above enable the accurate identification of targets, but their computational complexity is too high for embedded systems, which reduces the inference speed. Even Faster R-CNN, the fastest of these high-precision detectors, runs at only 7 frames per second, which significantly limits the update rate in real-time detection. Therefore, end-to-end single-stage detection algorithms have been developed, of which the SSD and YOLO series are well-known network models.

Single-Shot Multi-Box Detector (SSD) [,] is a single-stage detection algorithm that differs from traditional two-stage detection algorithms such as Faster R-CNN. SSD does not require the generation of candidate regions; it only needs an input image and ground truth boxes for each object. It performs well in applications requiring high real-time performance, striking an appropriate balance between speed and accuracy. SSD excels in real-time detection [] and multi-target detection []. SSD exhibits a faster inference speed and is superior in tasks such as video surveillance, autonomous driving, and ocean exploration. Moreover, SSD uses multi-scale feature maps to detect targets, enabling it to capture targets of varying sizes by predicting feature maps of different levels, maintaining relatively high accuracy.
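The way SSD assigns different object sizes to different feature maps can be sketched as follows. The sketch assumes the linear scale rule and the default values s_min = 0.2 and s_max = 0.9 of the original SSD formulation, which are not stated in this review; the function names are hypothetical.

```python
import math

def ssd_scales(num_maps, s_min=0.2, s_max=0.9):
    """Default-box scale for each feature map, from finest to coarsest.

    Scales are fractions of the input image size and grow linearly,
    so shallow maps handle small targets and deep maps large ones.
    """
    if num_maps < 2:
        raise ValueError("at least two feature maps are required")
    step = (s_max - s_min) / (num_maps - 1)
    return [s_min + step * k for k in range(num_maps)]

def default_box_shape(scale, aspect_ratio):
    """Width and height of a default box with the given aspect ratio."""
    root = math.sqrt(aspect_ratio)
    return scale * root, scale / root

scales = ssd_scales(6)                       # six prediction layers
wide_w, wide_h = default_box_shape(scales[0], 2.0)
```

Changing the aspect ratio reshapes a default box without changing its area, which is why each feature map can cover several object shapes at one scale.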

YOLO (You Only Look Once) is a well-known computer vision model for real-time object detection that was developed by Joseph Redmon and his team []. With continuous upgrading and improvement, YOLO has evolved into a fast, real-time, multi-target detection algorithm, making it a reliable choice for real-time detection tasks such as autonomous driving and robot vision. Due to its robust real-time detection and image analysis capabilities, YOLO has been widely used in marine object detection [,]. The YOLO model differs significantly from other network models in terms of network structure and inference methods: it generally has a higher inference speed but, in its early versions, a lower detection accuracy. Table 1 compares YOLO with the two-stage detection network Faster R-CNN and the single-stage detection network SSD. By improving its mathematical structure, however, successive YOLO versions have steadily raised the detection accuracy.

Table 1.

Comparative study between YOLO and other common detection models.

3.4. Mathematical Structures of YOLO Model

In ocean image detection tasks, the superiority of the YOLO model over other object detection models, such as Faster R-CNN, SSD, and RetinaNet [], is mainly reflected in the following aspects. First is the real-time detection ability. Due to the adoption of a single-stage detection architecture, the inference speed has been dramatically improved, making it suitable for ocean real-time monitoring. Second is the multi-scale prediction and small object detection capability. Due to the use of multi-scale feature fusion and anchor prediction mechanism, its detection ability of small targets is greatly enhanced, which is suitable for detecting marine plankton and floating garbage. Third is the lightweight network architecture design. Some YOLO variants, such as YOLOv5s and YOLOv8n, compress models through channel pruning, quantization, and other techniques, with parameter sizes of only 1–7 M, and can be deployed on edge devices such as buoys and underwater robots. Thus, the mathematical structure of the YOLO model achieves efficient detection through a lightweight backbone network, multi-scale feature fusion and grid prediction, and composite loss function.

1. Lightweight backbone network

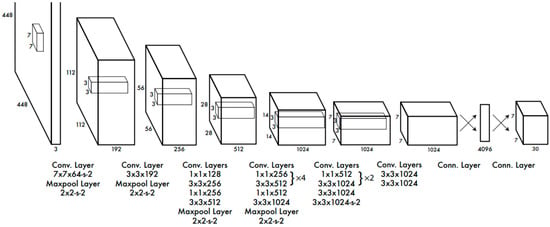

YOLO uses convolutional neural networks (CNN) as its essential backbone. The earliest YOLO model (YOLOv1) includes 24 convolutional layers followed by two fully connected layers, as shown in Figure 6.

Figure 6.

The YOLO architecture []. The “-s-” means the stride.

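Regardless of the network structure, the core algorithm is the convolution operation, yielding

$$y_{i,j} = f\left(\sum_{m}\sum_{n} w_{m,n}\, x_{i+m,\,j+n} + b\right) \tag{1}$$

where $x_{i,j}$ and $y_{i,j}$ represent the input and output pixels, respectively; $w_{m,n}$ represents the convolutional kernel weights at the kernel offsets $m$ and $n$; $b$ is the offset value; and $f(\cdot)$ is the activation function, e.g., Leaky ReLU, yielding

$$f(z) = \begin{cases} z, & z > 0 \\ 0.1\,z, & \text{otherwise} \end{cases} \tag{2}$$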

Subsequent YOLO versions have built more complex backbone networks on this foundation. They reduce computational complexity and improve inference speed by introducing Darknet and CSPDarknet, the latter of which adopts cross-stage partial connections. They also integrate multi-scale contextual information by introducing SPP/ASPP modules to enhance robustness against complex ocean backgrounds.
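The convolution and activation at the core of the backbone can be sketched in a few lines. This is a minimal single-channel illustration without padding, assuming a Leaky ReLU slope of 0.1 as in the original YOLO; the function names and the toy input are hypothetical, and a real backbone would use an optimized multi-channel implementation.

```python
def leaky_relu(z, slope=0.1):
    """Leaky ReLU: identity for positive inputs, small slope otherwise."""
    return z if z > 0 else slope * z

def conv2d(x, w, b=0.0, stride=1):
    """Single-channel 2D convolution (no padding) followed by Leaky ReLU.

    x is the input image and w the kernel, both given as lists of rows.
    """
    kh, kw = len(w), len(w[0])
    out_h = (len(x) - kh) // stride + 1
    out_w = (len(x[0]) - kw) // stride + 1
    y = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = b
            # Weighted sum over the kernel window anchored at (i, j).
            for m in range(kh):
                for n in range(kw):
                    acc += w[m][n] * x[i * stride + m][j * stride + n]
            row.append(leaky_relu(acc))
        y.append(row)
    return y

# Toy 4x4 input whose value is 4*row + col, and a 2x2 difference kernel.
image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
kernel = [[1.0, 0.0], [0.0, -1.0]]
feature_map = conv2d(image, kernel)
```

With this kernel, every window gives a pre-activation value of -5, which the Leaky ReLU scales to -0.5 instead of clipping to zero.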

2. Multi-scale feature fusion and grid prediction

- Multi-scale prediction;

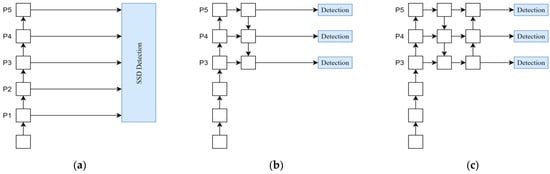

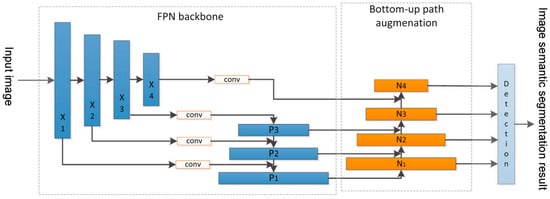

Multi-scale prediction is a critical method for YOLO models that enables the model to detect large and small targets simultaneously. Through multi-scale feature fusion, it improves the model’s adaptability to targets of different sizes and its detection accuracy. The implementation of multi-scale prediction involves the introduction of anchor boxes, multi-scale training, and dedicated network structures. YOLOv3 and later versions achieve multi-scale prediction by integrating feature pyramid networks (FPNs) [] or path aggregation networks (PANs) []. These networks improve feature aggregation: by aggregating feature maps from different layers, they capture context information in images. Dong et al. [] analyzed the structures of FPN and PAN, as shown in Figure 7. The FPN introduces a top-down pathway for the fusion of multi-scale features on top of the SSD-style multi-scale feature maps, while the PAN adds a bottom-up pathway on top of the FPN.

Figure 7.

Different types of feature fusion networks: (a) SSD; (b) FPN; (c) PAN.

Multi-scale feature fusion, the basis of multi-scale prediction, integrates features from different levels so that the model can handle targets of various scales. Signal image processing network models usually incorporate multi-scale feature fusion structures after the convolutional layers, pooling feature maps of different scales to extract multi-scale feature information. This design overcomes the limitations of traditional convolutional neural networks in processing images of different sizes.
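The top-down pathway of an FPN can be sketched with single-channel feature maps. This is a simplified illustration under stated assumptions: each level has half the resolution of the previous one, fusion is nearest-neighbor upsampling followed by element-wise addition, and the 1x1 lateral and 3x3 smoothing convolutions of a full FPN are omitted. All names and values are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbor upsampling: repeat every value twice per axis."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def add_maps(a, b):
    """Element-wise sum of two feature maps of identical shape."""
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]

def top_down_fusion(levels):
    """Fuse backbone maps ordered from fine to coarse.

    The coarsest map is passed through unchanged; every finer map
    receives the upsampled fused map from the level above it.
    """
    fused = [levels[-1]]
    for lateral in reversed(levels[:-1]):
        fused.append(add_maps(lateral, upsample2x(fused[-1])))
    fused.reverse()
    return fused

# Toy pyramid: 4x4, 2x2, and 1x1 maps with constant values.
c3 = [[1.0] * 4 for _ in range(4)]
c4 = [[10.0] * 2 for _ in range(2)]
c5 = [[100.0]]
p3, p4, p5 = top_down_fusion([c3, c4, c5])
```

After fusion, the finest map carries contributions from all three levels, which is what allows small targets to benefit from the semantic context of deeper layers. A PAN would add a second pass in the opposite direction, from fine to coarse.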

- Grid prediction;

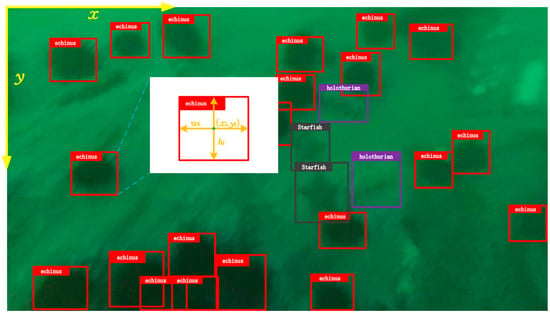

YOLO models use the anchor mechanism to solve the ambiguity problem of dense targets in marine scenes. This mechanism dynamically allocates positive samples by jointly measuring the predicted box against the ground truth, ensuring dynamic prediction and adaptive labeling. The image is divided into a grid, and each grid cell predicts multiple bounding boxes, each corresponding to a pre-defined anchor box with a specific aspect ratio and scale. The locations and categories of targets are predicted relative to these anchor boxes: bounding box regression adjusts the coordinates of the candidate regions so that they surround the target, and the process also includes target classification and label assignment. Taking YOLOv3 as an example, the center point coordinates of the predicted bounding box can be represented as

$$b_x = \sigma(t_x) + c_x, \qquad b_y = \sigma(t_y) + c_y \tag{3}$$

where ($b_x$, $b_y$) and ($t_x$, $t_y$) denote the center point coordinates of the final bounding box and the raw center offsets predicted by the network, respectively. ($c_x$, $c_y$) is the coordinate of the upper-left corner of the grid cell. $\sigma(\cdot)$ represents the sigmoid function. The predicted bounding box size can be expressed as

$$b_w = p_w e^{t_w}, \qquad b_h = p_h e^{t_h} \tag{4}$$

where ($p_w$, $p_h$) represents the preset anchor size, ($t_w$, $t_h$) represents the raw width and height values output by the model, and ($b_w$, $b_h$) represents the resulting bounding box size. A detailed explanation of this process is shown in Figure 8.

Figure 8.

Process of predicting the bounding box and category label for an object of interest, where $(b_x, b_y)$ denotes the coordinates of the center point of the bounding box predicted by the model [].
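The YOLOv3 decoding step can be sketched in a few lines. This is a minimal illustration in standard YOLOv3 notation (raw outputs `t_*`, grid cell corner `c_*`, anchor size `p_*`), with all quantities assumed to be in grid-cell units; the function names are hypothetical.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def decode_box(t_x, t_y, t_w, t_h, c_x, c_y, p_w, p_h):
    """Turn raw network outputs into a box (center x, center y, width, height)."""
    b_x = sigmoid(t_x) + c_x   # center stays inside the responsible cell
    b_y = sigmoid(t_y) + c_y
    b_w = p_w * math.exp(t_w)  # anchor size rescaled by an exponential factor
    b_h = p_h * math.exp(t_h)
    return b_x, b_y, b_w, b_h


# Zero raw outputs give the cell center and the unmodified anchor size.
print(decode_box(0.0, 0.0, 0.0, 0.0, c_x=3, c_y=4, p_w=2.0, p_h=1.5))
```

The sigmoid bounds the center offset to the interval (0, 1), so a cell can only place the box center inside itself, while the exponential keeps the predicted width and height positive.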

- 3. Composite loss function

The design of the loss function is crucial for the YOLO model’s object detection performance. The loss function usually consists of positioning loss, classification loss, and object confidence loss.

- Positioning loss;

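The positioning loss calculates the difference between the predicted bounding box and the truth bounding box, guiding the model to adjust the center coordinates $(x, y)$ and dimensions $(w, h)$ of the bounding box so that it moves closer to the truth target position. The mean squared error (MSE) [] and CIoU loss functions are usually used to calculate the positioning loss. For example, YOLOv1 to YOLOv3 use an MSE-type loss function to calculate the positioning loss; in the YOLOv1 form, this yields

$$L_{loc} = \lambda_{coord} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{obj} \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 \right] + \lambda_{coord} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{obj} \left[ \left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right]$$

where $(x_i, y_i)$ and $(\hat{x}_i, \hat{y}_i)$ denote the center point coordinates of the $i$-th true and predicted bounding boxes, as mentioned in Equations (3) and (4), and $(w_i, h_i)$ and $(\hat{w}_i, \hat{h}_i)$ denote the corresponding widths and heights. $S^2$ represents the number of grid cells that the image is divided into ($S \times S$), and $B$ is the number of boxes predicted per cell; $\mathbb{1}_{ij}^{obj}$ is the indicator function used to screen out negative samples, equal to 1 when the $j$-th box of cell $i$ is responsible for a target and 0 otherwise. $\lambda_{coord}$ is a weight coefficient used to amplify the importance of the localization loss.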

YOLOv4 to YOLOv8 use the CIoU loss function to calculate the positioning loss, yielding

$$L_{CIoU} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v$$

where $IoU$ is the Intersection over Union of the predicted and truth bounding boxes; $\rho(b, b^{gt})$ represents the Euclidean distance between the predicted box center point $b$ and the truth box center point $b^{gt}$; $c$ is the diagonal length of the smallest rectangle enclosing the predicted box and the truth box; $\alpha$ is the weight coefficient used to balance the aspect ratio penalty term; and $v$ is the aspect ratio penalty term, which yields

$$v = \frac{4}{\pi^2} \left( \arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h} \right)^2, \qquad \alpha = \frac{v}{(1 - IoU) + v}$$

where $(w, h)$ and $(w^{gt}, h^{gt})$ represent the width and height of the predicted box and truth box, respectively.
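A small numerical sketch can make the three CIoU terms concrete. It assumes boxes given as (center x, center y, width, height) with positive sizes and uses the standard CIoU definition (overlap term, normalized center distance, aspect ratio penalty); the function name `ciou_loss` is illustrative and not taken from any YOLO code base.

```python
import math


def ciou_loss(pred, truth):
    """CIoU loss for two boxes given as (cx, cy, w, h) with positive w and h."""
    px, py, pw, ph = pred
    tx, ty, tw, th = truth
    # Corner coordinates of both boxes.
    p_x1, p_y1, p_x2, p_y2 = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    t_x1, t_y1, t_x2, t_y2 = tx - tw / 2, ty - th / 2, tx + tw / 2, ty + th / 2
    # Intersection over Union.
    inter_w = max(0.0, min(p_x2, t_x2) - max(p_x1, t_x1))
    inter_h = max(0.0, min(p_y2, t_y2) - max(p_y1, t_y1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + tw * th - inter)
    # Squared center distance over squared diagonal of the enclosing rectangle.
    rho2 = (px - tx) ** 2 + (py - ty) ** 2
    c2 = ((max(p_x2, t_x2) - min(p_x1, t_x1)) ** 2
          + (max(p_y2, t_y2) - min(p_y1, t_y1)) ** 2)
    # Aspect ratio penalty and its balancing weight.
    v = (4.0 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    denom = (1.0 - iou) + v
    alpha = v / denom if denom > 0 else 0.0
    return 1.0 - iou + rho2 / c2 + alpha * v


print(ciou_loss((0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 2.0, 2.0)))  # shifted box, same shape
print(ciou_loss((5.0, 5.0, 2.0, 2.0), (5.0, 5.0, 2.0, 2.0)))  # identical boxes
```

Unlike a plain IoU loss, the distance term still provides a useful signal when the boxes do not overlap at all, because it shrinks as the centers approach each other.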

- Classification loss;

The classification loss measures the difference between the class probability distribution predicted by the model and the truth class labels. It guides the model in adjusting its category predictions so that each target’s category prediction is as accurate as possible. The binary cross-entropy (BCE) loss function [] is the method most commonly used to calculate the classification loss, which yields

$$L_{cls} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log p_i + (1 - y_i) \log (1 - p_i) \right]$$

where $y_i$ represents the true label of the $i$-th box, with a value of 0 or 1; $p_i$ represents the probability predicted by the model for the $i$-th box, ranging from 0 to 1; and $N$ is the number of samples.
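The BCE classification loss is simple enough to compute by hand. The sketch below is a plain-Python illustration; the clipping constant `eps` is an added assumption that avoids taking the logarithm of zero and is not part of the formula itself.

```python
import math


def bce_loss(labels, probs, eps=1e-12):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite (assumption, see lead-in)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)


# An uninformative prediction of 0.5 costs log(2) per sample.
print(bce_loss([1, 0], [0.5, 0.5]))
# Confident, correct predictions cost much less.
print(bce_loss([1, 0], [0.95, 0.05]))
```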

- Confidence loss;

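The confidence loss is used to evaluate the model’s prediction accuracy, i.e., whether the predicted bounding box contains the target. In the YOLO series, the MSE or BCE loss function can be used to calculate the confidence loss. Calculating the confidence loss with the MSE loss function yields

$$L_{conf} = \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{obj} \left( C_i - \hat{C}_i \right)^2 + \lambda_{noobj} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{noobj} \left( C_i - \hat{C}_i \right)^2$$

where $\lambda_{noobj}$ is a weight coefficient used to balance the confidence loss of positive and negative samples, and the indicators $\mathbb{1}_{ij}^{obj}$ and $\mathbb{1}_{ij}^{noobj}$ select the boxes with and without a target. $C_i$ represents the true confidence score of the $i$-th box, and $\hat{C}_i$ represents the predicted confidence score of the $i$-th box. The confidence score is defined as

$$C = \Pr(\text{Object}) \times IoU_{pred}^{truth}$$

where $\Pr(\text{Object})$ denotes the probability that the bounding box contains the target. In the training targets, both the indicators and $\Pr(\text{Object})$ take a value of 0 or 1. $IoU_{pred}^{truth}$ is the intersection over union ratio of the true and predicted bounding boxes.

Calculating the confidence loss with the BCE loss function yields

$$L_{conf} = -\sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{obj} \left[ C_i \log \hat{C}_i + (1 - C_i) \log (1 - \hat{C}_i) \right] - \lambda_{noobj} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{noobj} \left[ C_i \log \hat{C}_i + (1 - C_i) \log (1 - \hat{C}_i) \right]$$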
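The role of the weight that balances positive and negative samples is easiest to see numerically. The sketch below implements the MSE variant over a flat list of boxes; the default `lambda_noobj=0.5` follows the value used in the original YOLOv1 paper, and the flat-list layout and function name are simplifying assumptions.

```python
def confidence_loss_mse(targets, preds, has_object, lambda_noobj=0.5):
    """Squared-error confidence loss with down-weighted background boxes.

    targets    -- true confidence per box (IoU with the truth box, or 0)
    preds      -- predicted confidence per box
    has_object -- True where the box is responsible for a target
    """
    loss = 0.0
    for c, c_hat, obj in zip(targets, preds, has_object):
        err = (c - c_hat) ** 2
        loss += err if obj else lambda_noobj * err
    return loss


# One positive box (target 0.8) and one background box (target 0.0).
print(confidence_loss_mse([0.8, 0.0], [0.6, 0.4], [True, False]))
```

Because most boxes in an image contain no target, down-weighting the background term keeps those boxes from dominating the gradient.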

3.5. Development and Comparative Study of YOLO Models

The mathematical structure of the YOLO model plays a crucial role in determining its detection accuracy and inference speed. This has led to the development of multiple versions of the YOLO network model. The official versions range from YOLOv1 to YOLOv8 and have been widely applied in diverse fields, including ocean intelligent detection. The latest versions, YOLOv9, YOLOv10, and YOLOv11, are unofficial (community-improved) versions that adopt the backbone network architecture of YOLOv8 and optimize it further. YOLOv9 introduces reversible networks to achieve a lightweight design []. In YOLOv10 and YOLOv11, the models’ lightweight nature and real-time detection capabilities are optimized [].

The different YOLO versions vary in their mathematical structure, which is evident in their network architecture and detection algorithms, as shown in Table 2.

Table 2.

Mathematical structure of YOLO official versions from YOLOv1 to YOLOv8.

Furthermore, due to the differences in mathematical structures between different versions, their detection performance also varies. This is specifically manifested in the adaptability of the network architectures of different YOLO versions to different tasks in ocean target detection, localization, classification, and image recognition, as shown in Table 3.

Table 3.

Performance of YOLO official versions from YOLOv1 to YOLOv8.

For the unofficial versions from YOLOv9 to YOLOv11, the detection performance needs to be verified in specific scenarios. Sharma and Kumar [] compared the detection performance and efficiency of YOLOv8 to YOLOv11 in practical applications, where these versions serve as practical tools. Yuan et al. [] explored the application of YOLOv9 in signal processing for underwater detection scanning sonar; their ablation experiments confirmed its superiority over other models such as Faster R-CNN and YOLOv5. Similarly, Tu et al. [] developed a fully optimized YOLOv10 model for diseased fish detection, demonstrating the potential of these models in real-world scenarios.

4. Adaptive Image Processing Processes Supporting Ocean Image Recognition and Detection

Adaptive image processing processes provide critical support for ocean image recognition and detection, forming a complete pipeline from data preprocessing to image analysis. These processes are realized by introducing related DL-based network structures.

4.1. Adaptive Image Annotation

As an important part of the data training and preparation phase, underwater image data annotation refers to manually or semi-automatically labeling ocean environment images or video data obtained through underwater detection equipment. This process aims to provide structured and labeled training data for deep learning models to support ocean image recognition and target detection tasks. It either labels target object images with a class or marks the target’s position (bounding box), contour (semantic segmentation), or pixel-level mask (instance segmentation) for target tracking and recognition.

However, traditional manual image annotation has drawbacks: it is time- and labor-intensive and therefore inefficient, annotation standards are inconsistent, environmental interference degrades annotation quality, and small and dense targets are difficult to annotate. Intelligent methods and tools have been developed and widely used to cope with these issues in underwater image analysis and annotation, such as marine image annotation software (MIAS) []. Schoening et al. [] considered past observations in manual ocean image annotation (MIA), analyzed the performance of human expert annotation, and compared it with computational methods using a statistical framework. Giordano et al. [] explored a diversity-based clustering technique for image annotation. They achieved remarkable testing performance on a large fish image dataset, ensuring the diversity of annotation items while maintaining accuracy. In adaptive image annotation, pre-trained network models such as Mask R-CNN [,,] are usually used to guide underwater image annotation automatically, significantly improving efficiency. For example, Wu et al. [] proposed an image localization perception intelligent annotation algorithm, LACP AL, based on the Faster R-CNN active learning framework to improve the recognition accuracy of underwater image detection models.

4.2. Adaptive Image Feature Enhancement

Underwater target detection and image recognition encounter many challenges, such as signal attenuation caused by the seawater environment, which results in low contrast, poor clarity, and color degradation in underwater images. Image feature enhancement technology strengthens image features and mitigates the impact of environmental interference, improving the recognition and detection capabilities of the relevant network models. Deep learning network models play an important role in the adaptive enhancement of ocean images. Lisani et al. [] evaluated seven state-of-the-art techniques for underwater image enhancement and ranked their practicality in the annotation process: InfoLoss, BAL, Fusion, UCM, ARC, UDCP, and MSR. In detection models, image features are often enhanced by introducing attention modules. Taking the well-known YOLOv7 as an example, scholars have introduced attention mechanisms to enhance its image detection performance, as shown in Table 4.

Table 4.

Attention mechanisms introduced in the YOLO model and their enhancement effects.

4.3. Adaptive Image Segmentation

As the core task of ocean image recognition and detection, image segmentation provides structured data support for subsequent analysis and decision-making in ocean image detection by separating targets and backgrounds. This technology divides the image into several subregions with similar attributes by leveraging the features of pixels or regions, such as color, texture, shape, and brightness. It achieves adaptive segmentation of ocean images through deep learning network models, including instance segmentation and semantic segmentation.

- Instance segmentation

Instance segmentation is pixel-level segmentation of individual targets, achieved through joint learning of target localization, classification, and pixel-level segmentation. It provides high-precision data for ocean image detection by accurately distinguishing and labeling each independent target instance in the image. The best-known adaptive instance segmentation models include Mask R-CNN [], YOLACT [], and the SOLO series []. By contrast, the primary purpose of semantic segmentation is to classify different regions of an image by assigning a category label to each pixel.

- Semantic segmentation

Semantic segmentation is a technique that classifies each pixel in an image into a specific category, such as “coral”, “seaweed”, “sand”, “fish”, and “plastic waste”. Unlike instance segmentation, semantic segmentation does not distinguish individual instances of the same target type but focuses on semantic parsing of the global scene. The best-known adaptive semantic segmentation models include FCN [], path aggregation network (PAN) [], PSPNet [], and SegNet []. These models make it possible to segment targets of interest from signal detection images and to process images with complex backgrounds. For example, Yu et al. [] proposed a multi-attention PAN with a pyramid network for the semantic segmentation of marine images, as shown in Figure 9. In this model, the intelligent perception network combines the FPN backbone with a bottom-up path enhancement module to significantly improve image recognition and semantic segmentation capabilities.

Figure 9.

Multi-attention path aggregation network with pyramid network.

- Scene applicability of adaptive image segmentation models

Different network structures are suitable for different semantic segmentation tasks. For example, UNet [,,] is suitable for small-sample image semantic segmentation; Hasimoto-Beltran et al. [] proposed a multi-channel DNN model based on UNet and ResNet [] to realize the pixel-level segmentation of marine oil pollution detection images. The DeepLab series [], in contrast, is suitable for high-precision image semantic segmentation. Among them, DeepLabv3+ is the most popular advanced network model and is widely used in ocean intelligent detection [,,]. However, high-precision and complex semantic segmentation tasks consume a significant amount of computational resources. To address this challenge, lightweight end-to-end semantic segmentation networks combined with attention and feature fusion modules have been developed and applied []. For example, Wang et al. [] developed a lightweight multi-level attention adaptive feature fusion segmentation network (MA2Net) that integrates the proposed lightweight attention network (LAN) with multi-scale feature pyramid (MASPP) and adaptive feature fusion (AFF) models. This model enhances the ability of neural networks to segment target images in an end-to-end manner, without relying on complex DCNN models, and it realizes fast inference and high-precision semantic segmentation.
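The difference between the two segmentation outputs can be illustrated without any neural network. In the sketch below, a binary semantic mask for one class (for example “fish”) is split into numbered instances by 4-connected component labeling. This is only a crude stand-in for what a learned instance segmentation model produces, since touching targets would be merged; the function name `label_instances` is hypothetical.

```python
def label_instances(mask):
    """Split a binary semantic mask into instance ids via 4-connected components.

    Returns (labels, count): labels has the shape of mask, with 0 for
    background and 1..count for the individual connected regions.
    """
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] and labels[r][c] == 0:
                count += 1
                labels[r][c] = count
                stack = [(r, c)]
                while stack:  # flood fill from the seed pixel
                    y, x = stack.pop()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            stack.append((ny, nx))
    return labels, count


semantic_mask = [[1, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 0, 1]]
labels, count = label_instances(semantic_mask)
print(count)   # number of separate regions
print(labels)  # same pixels, now carrying an instance id
```

The semantic mask answers "which pixels are fish", while the labeled output additionally answers "which fish does each pixel belong to".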

5. Impact of Nonlinear Noise on Ocean Signal Detection and Countermeasures

5.1. Impact of Nonlinear Noise on Ocean Signal Detection

The generation of ocean nonlinear noise [] involves multiple complex physical processes, mainly derived from nonlinear effects in fluid dynamics, sound wave propagation, and environmental interactions. Ocean nonlinear noise, whether induced by natural environmental changes [,] or human activities [,], may adversely affect ocean signal detection, presenting a complex challenge in this field. Ainslie and McColm [] systematically explored the basic principles, techniques, and applications of underwater acoustics, as well as the impact of underwater noise on ecology and detection systems.

Practical experience in ocean engineering has shown that ocean nonlinear noise affects ocean signal detection by sonar or radar. Underwater background noise interferes with underwater target detection, causing signal distortion and attenuation. Because nonlinear noise can distort target echo signals and reduce the signal-to-noise ratio (SNR), it becomes difficult to separate and identify the target signal. This challenge is particularly pronounced for sonar detection signals, as nonlinear noise interference affects the accuracy of target image feature extraction and recognition. The energy loss caused by nonlinear noise interference can also lead to uneven signal image intensity, reducing the accuracy and efficiency of target detection.
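For reference, the SNR mentioned above is commonly expressed in decibels as ten times the base-10 logarithm of the ratio of signal power to noise power. The sketch below assumes the clean signal and the noise are available as separate sample lists, which is only the case in simulation; the function name is illustrative.

```python
import math


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels from mean signal and noise power."""
    p_signal = sum(s * s for s in signal) / len(signal)
    p_noise = sum(n * n for n in noise) / len(noise)
    return 10.0 * math.log10(p_signal / p_noise)


echo = [1.0, -1.0, 1.0, -1.0]
weak_noise = [0.1, -0.1, 0.1, -0.1]
strong_noise = [0.2, -0.2, 0.2, -0.2]
print(snr_db(echo, weak_noise))    # noise amplitude one tenth of the signal
print(snr_db(echo, strong_noise))  # doubling the noise amplitude costs about 6 dB
```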

Furthermore, the nonlinear noise generated by ocean intelligent detection devices and sensors themselves can affect their performance. Therefore, marine environmental noise prediction has become a valuable method for characterizing the detection performance of sonar systems []. The intrinsic causal relationship between wind speed and noise intensity can be used to quantify the impact of underwater background noise on marine sonar systems []. Da et al. [] have pointed out that the nonlinear noise of the ocean determines the detection range of passive and active sonar. Similarly, Audoly & Lantéri [] have highlighted that self-noise can reduce the detection performance of underwater sonar.

5.2. Coping Methods for Nonlinear Noise Impacts on Ocean Image Detection

5.2.1. Traditional Denoising Methods

Traditional underwater image denoising methods mainly rely on mathematical or physical models, such as spatial domain filtering, transform domain filtering, statistical modeling, and physical model-based methods.

In spatial domain filtering, local or global operations are performed in the image pixel space to suppress noise through neighborhood pixel weighting or sorting. This method can be used for denoising underwater sonar images or video [,]. In laser detection, spatial optical coherent filtering is the industry-standard technique for reducing the interference of nonlinear noise in underwater laser detection and improving detection performance [].
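The "sorting" variant of neighborhood filtering corresponds to the median filter. The following is a minimal sketch on a nested-list grayscale image; truncating the window at the image border is one of several possible border conventions and is an assumption of this example.

```python
def median_filter(image, radius=1):
    """Replace each pixel by the median of its (2*radius+1)^2 neighborhood.

    Windows are truncated at the image border; for an even number of
    samples the upper of the two middle values is used.
    """
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = sorted(image[rr][cc]
                            for rr in range(max(0, r - radius), min(h, r + radius + 1))
                            for cc in range(max(0, c - radius), min(w, c + radius + 1)))
            out[r][c] = window[len(window) // 2]
    return out


# A single impulse ("salt" pixel) in a flat region is removed entirely.
noisy = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]
print(median_filter(noisy))
```

A mean filter would smear the outlier over its neighbors, whereas the median discards it, which is why sorting-based filters are popular for impulsive noise.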

The transform domain method converts an image to the frequency domain or time-frequency domain and removes noise through threshold processing or coefficient correction. This includes wavelet threshold denoising [,] and Fourier filtering []. The former is usually used to remove high-frequency noise from blurred underwater images, and the latter is usually used to eliminate band noise in satellite remote sensing images.
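Wavelet threshold denoising can be sketched on a one-dimensional signal with a single-level Haar transform. This is a toy illustration under stated assumptions: practical pipelines use two-dimensional, multi-level transforms and a data-driven threshold, and the function name `haar_denoise` is hypothetical.

```python
import math


def haar_denoise(signal, threshold):
    """One-level Haar transform, soft-threshold the details, then invert."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    # Forward transform: pairwise averages (approximation) and differences (detail).
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    # Soft thresholding shrinks every detail coefficient toward zero.
    detail = [math.copysign(max(abs(d) - threshold, 0.0), d) for d in detail]
    # Inverse transform.
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / s)
        out.append((a - d) / s)
    return out


x = [4.0, 2.0, 5.0, 5.0]
print(haar_denoise(x, 0.0))   # zero threshold reproduces the input (up to rounding)
print(haar_denoise(x, 10.0))  # large threshold leaves only the pairwise averages
```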

The statistical modeling methods are based on modeling the statistical characteristics of noise or images and restoring clean images through optimal estimation, which includes Wiener filtering [] and Bayesian estimation []. The former is usually used for low-frequency electronic noise suppression in sonar images, and the latter is usually used for color correction and noise joint optimization of underwater images.
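As an illustration of the statistical approach, the sketch below implements a locally adaptive Wiener filter on a one-dimensional signal: each sample is pulled toward its local mean by an amount that depends on how much of the local variance is attributed to noise. The noise variance is assumed to be known, the border windows are truncated, and the function name is illustrative.

```python
def adaptive_wiener_1d(signal, noise_var, radius=1):
    """Locally adaptive Wiener filter with a known noise variance."""
    n = len(signal)
    out = []
    for k in range(n):
        window = signal[max(0, k - radius):min(n, k + radius + 1)]
        mean = sum(window) / len(window)
        var = sum((v - mean) ** 2 for v in window) / len(window)
        if var <= noise_var:
            # Local variation is explained by noise alone: output the mean.
            out.append(mean)
        else:
            # Keep the share of the deviation not attributed to noise.
            gain = (var - noise_var) / var
            out.append(mean + gain * (signal[k] - mean))
    return out


print(adaptive_wiener_1d([0.0, 3.0, 0.0], noise_var=100.0))  # heavy smoothing
print(adaptive_wiener_1d([0.0, 3.0, 0.0], noise_var=0.0))    # signal kept as is
```

Flat regions are smoothed strongly while high-variance regions such as edges are largely preserved, which is the main advantage over a fixed averaging kernel.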

The physics model-based approach uses ocean optical or acoustic propagation models to invert the process of noise formation. The key method is the underwater image restoration model, which estimates the attenuation and backscattering components of light to restore clear images based on the Jaffe–McGlamery equation. In this method, underwater observation images can be represented as

$$I(x) = J(x)\, e^{-\beta d(x)} + B_\infty \left( 1 - e^{-\beta d(x)} \right)$$

where $I(x)$ and $J(x)$ represent the observation and clear images at pixel $x$, respectively. $B_\infty$ represents the background scattered light; $\beta$ is the attenuation coefficient; and $d(x)$ is the distance from the target object to the camera. Based on this, the underwater image restoration method can be represented as

$$J(x) = \frac{I(x) - B_\infty \left( 1 - e^{-\beta d(x)} \right)}{e^{-\beta d(x)}}$$
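The restoration step amounts to inverting the simplified image formation model: the observed intensity is the clear intensity attenuated by a transmission factor exp(-beta * d) plus background light weighted by one minus that factor. The per-pixel sketch below assumes that the background light `b_inf`, the attenuation coefficient `beta`, and the distance `d` are already known or estimated. The lower bound `t_min` on the transmission is an added safeguard against noise amplification at long range, not part of the model.

```python
import math


def degrade(j, b_inf, beta, d):
    """Forward model: attenuate the clear intensity j and add backscatter."""
    t = math.exp(-beta * d)  # transmission along the path of length d
    return j * t + b_inf * (1.0 - t)


def restore(i, b_inf, beta, d, t_min=0.1):
    """Invert the forward model for one pixel intensity i."""
    t = max(math.exp(-beta * d), t_min)  # clamp: assumption, see lead-in
    return (i - b_inf * (1.0 - t)) / t


clear = 0.8
observed = degrade(clear, b_inf=0.3, beta=0.2, d=5.0)
print(observed)                                        # pulled toward the background light
print(restore(observed, b_inf=0.3, beta=0.2, d=5.0))   # recovers the clear intensity
```

In practice, the hard part is estimating the background light and the per-pixel transmission from the degraded image itself; the inversion is straightforward once they are available.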

In practical applications, multiple traditional methods are often combined to process different types of noise in stages. Common processes are filtering denoising, physical model restoration, and wavelet denoising in sequence.

5.2.2. DL-Based Denoising Methods

However, traditional mathematical or physical denoising methods have limitations, such as insufficient modeling of complex noise, manual parameter tuning dependency, and image detail loss. Deep learning algorithms provide a more comprehensive and effective method to address the interference of nonlinear noise in ocean intelligent detection. They achieve automatic noise reduction and perform better in complex noise modeling and detail preservation.

Deep learning algorithms achieve efficient image denoising in ocean target detection and image recognition by automatically learning and processing the complex relationship between noise and effective signals. The noise reduction principle is mainly reflected in noise learning, signal reconstruction, and image enhancement. The first is noise residual or distribution learning with deep learning networks combined with data augmentation and transfer learning []. The second is signal reconstruction with deep learning algorithms to enhance the signal features [,]. The third is distinguishing between noise and effective signals by automatically extracting multi-scale features using deep learning network structures. These principles are not mutually exclusive and can be combined as a task requires.

The first noise reduction principle is typically reflected in commonly used deep learning methods such as denoising convolutional neural networks (DnCNNs) [] and denoising diffusion probabilistic models (DDPMs) [,]. The second noise reduction principle is typically reflected in methods such as denoising generative adversarial networks (DnGANs) [,]. DnCNN is an end-to-end denoising model based on a convolutional neural network (CNN), which directly learns the mapping from noisy images to clean images through multi-layer convolution and nonlinear activation functions. DDPM is a diffusion model, which simulates the forward process of gradually adding noise to clean images and then learns the backward denoising process. DnGAN achieves noise-to-clean image mapping through generator–discriminator adversarial training. Table 5 shows the core innovations, denoising strategies for dealing with complex marine environments, applicable scenarios, and limitations of the above three methods.

Table 5.

Algorithm and application analysis of DnCNN, DDPM and DnGAN.
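The forward (noising) half of a DDPM has a closed form, which makes it easy to illustrate. The sketch below draws a noisy sample at step `t` directly from the clean data using the cumulative product of `1 - beta`. The linear schedule from 1e-4 to 0.02 over 1000 steps is the one from the original DDPM paper, used here as an assumed default; the learned reverse (denoising) network is not shown.

```python
import math
import random


def linear_betas(steps=1000, start=1e-4, end=0.02):
    """Linear noise schedule (assumed default, see lead-in)."""
    return [start + (end - start) * k / (steps - 1) for k in range(steps)]


def alpha_bar(t, betas):
    """Cumulative product of (1 - beta) over the first t steps."""
    prod = 1.0
    for beta in betas[:t]:
        prod *= 1.0 - beta
    return prod


def forward_diffuse(x0, t, betas, rng):
    """Sample x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps with eps ~ N(0, 1)."""
    a_bar = alpha_bar(t, betas)
    return [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0)
            for x in x0]


betas = linear_betas()
rng = random.Random(0)
clean = [1.0, 2.0, 3.0]
print(forward_diffuse(clean, 0, betas, rng))     # step 0: data unchanged
print(forward_diffuse(clean, 1000, betas, rng))  # final step: almost pure noise
print(alpha_bar(1000, betas))                    # remaining signal fraction is tiny
```

Training then consists of teaching a network to predict the injected noise at a randomly chosen step, so that the process can be run in reverse to denoise.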

The third noise reduction principle is usually realized by specific deep learning network structures with attention mechanisms or other image enhancement modules. This approach reduces noise by enhancing and extracting multi-scale image features to achieve signal-to-noise separation. It is particularly useful for reducing the interference of nonlinear noise in underwater images derived from sonar or other sensors with intense noise and low resolution. Table 6 shows related deep learning network structures and denoising methods based on this principle in ocean detection applications.

Table 6.

Deep learning network structures used for signal-to-noise separation and denoising.

6. Conclusions

This work reviews key technological developments in ocean intelligent perception and image processing. It covers ocean intelligent perception devices and image acquisition, image recognition and detection models, adaptive image processing processes, and coping methods for nonlinear noise interference. The review begins with a description of ocean intelligent detection technology and image acquisition via various sensors such as optical, acoustic, and laser sensors. It then shifts its focus to image processing network models, from the basic DCNN to one-stage and two-stage detection network models. The article provides a detailed analysis of the mathematical structures of the YOLO models and their improvements. It also reviews adaptive image processing processes and their critical support for ocean image detection, such as image annotation, feature enhancement, and image segmentation. Theoretical research and practical application have shown that nonlinear noise from the seawater environment and the equipment significantly influences signal image processing. This work also focuses on countermeasures to cope with nonlinear noise impact, with deep learning methods for image feature enhancement, signal–noise separation, and noise suppression.

This review highlights that the most significant challenges stem from technological and environmental factors and present as underwater image quality degradation, annotated data scarcity, underwater sensor device resource limitations, and nonlinear noise interference. Solutions to underwater image quality degradation and ocean annotation data scarcity will focus on image enhancement, data generation, and transfer learning. Considering the device resource and environmental constraints, the optimization scheme based on segmentation technology mainly includes adaptive preprocessing enhancement and lightweight segmentation model design. On the one hand, underwater image restoration networks can be introduced as pre-segmentation modules to enhance the image data preprocessing capability of the detection model. On the other hand, lightweight architectures can be used to optimize the model by reducing the number of parameters through depthwise separable convolution. Furthermore, it is necessary to combine nonlinear modeling, multi-modal data fusion, and physics-guided deep learning to develop more robust marine environmental noise suppression technologies in the future.

Author Contributions

Conceptualization, H.L., Y.L. and Y.T.; Methodology, H.L., Y.T. and T.Q.; Investigation, H.L. and Y.L.; Validation, H.L., Y.L. and T.Q.; Data curation, Y.L. and Y.T.; Writing—original draft preparation, Y.L. and T.Q.; Software, Y.L. and T.Q.; Formal analysis, T.Q. and Y.T.; Project administration, H.L. and Y.L.; Writing—review and editing, Y.L. and T.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Excellent Youth Program of Philosophy and Social Science of Anhui Universities (no. 2023AH030025); Graduate Education Innovation Fund of Anhui Polytechnic University (no. Xjky2022178, no. Xjky2022194); and Key Projects of Humanities and Social Sciences in Anhui Province’s Universities (no. 2022AH010459).

Data Availability Statement

The datasets supporting the conclusion of this article are included within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| DL | Deep learning |
| RUV | Remote underwater video |
| AUV | Autonomous underwater vehicle |
| DnCNN | Denoising Convolutional Neural Network |
| DnGAN | Denoising Generative Adversarial Network |
| YOLO | You Only Look Once |
| LiDAR | Light Detection and Ranging |
| Sonar | Sound Navigation and Ranging |
| FLS | Forward-looking sonar |
| SSS | Side-scan sonar |
| SAS | Synthetic aperture sonar |
| DIDSON | Dual-frequency identification sonar |
| 1DCTN | End-to-end underwater acoustic target recognition model |
| DCNN | Deep convolutional neural network |
| APRCNN | Audio Perspective Region-based Convolutional Neural Network |
| SVM | Support vector machine |
| R-CNN | Region-based Convolutional Neural Network |
| SSD | Single Shot MultiBox Detector |
| FCN | Fully Convolutional Network |
| MSE | Mean Squared Error |
| BCE | Binary Cross Entropy |
| PAN | Path aggregation networks |
| FPN | Feature pyramid networks |
| GPA | Gated path aggregate |
| SNR | Signal-to-noise ratio |

References

- Lu, F.Q.; Gao, X.Y.; Ma, J.; Xu, J.F.; Xue, Q.S.; Cao, D.S.; Quan, X.Q. Intelligent marine detection based on spectral imaging and neural network modeling. Ocean. Eng. 2024, 310, 118640. [Google Scholar] [CrossRef]

- Yan, Y.J.; Liu, Y.D.; Fang, J.; Lu, Y.F.; Jiang, X.C. Application status and development trends for intelligent perception of distribution network. High Volt. 2021, 6, 938–954. [Google Scholar] [CrossRef]

- Torres, A.; Abril, A.M.; Clua, E.E.G. A Time-Extended (24 h) Baited Remote Underwater Video (BRUV) for monitoring pelagic and nocturnal marine species. J. Mar. Sci. Eng. 2020, 8, 208. [Google Scholar] [CrossRef]

- Wang, Y.Y.; Ma, X.R.; Wang, J.; Hou, S.L.; Dai, J.; Gu, D.B.; Wang, H.Y. Robust AUV visual loop-closure detection based on Variational Autoencoder Network. IEEE Trans. Ind. Inform. 2022, 18, 8829–8838. [Google Scholar]

- Lee, S.M.; Roh, M.I.; Jisang, H.; Lee, W. A method of estimating the locations of other ships from ocean images. Korean J. Comput. Des. Eng. 2020, 25, 320–328. [Google Scholar] [CrossRef]

- Cheng, S.J.; Shi, X.C.; Mao, W.J.; Alkhalifah, T.A.; Yang, T.; Liu, Y.Z.; Sun, H.P. Elastic seismic imaging enhancement of sparse 4C ocean-bottom node data using deep learning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5910214. [Google Scholar] [CrossRef]

- Wang, C.; Stopa, J.E.; Vandemark, D.; Foster, R.; Ayet, A.; Mouche, A.; Chapron, B.; Sadowski, P. A multi-tagged SAR ocean image dataset identifying atmospheric boundary layer structure in winter tradewind conditions. Geosci. Data J. 2025, 12, e282. [Google Scholar] [CrossRef]

- Dong, K.Y.; Liu, T.; Shi, Z.; Zhang, Y. Accurate and real-time visual detection algorithm for environmental perception of USVS under all-weather conditions. J. Real-Time Image Process. 2024, 21, 36. [Google Scholar] [CrossRef]

- Zha, Z.J.; Ping, X.B.; Wang, S.L.; Wang, D.L. Deblurring of beamformed images in the ocean acoustic waveguide using deep learning-based deconvolution. Remote Sens. 2024, 16, 2411. [Google Scholar] [CrossRef]

- Sattar, J.; Giguère, P.; Dudek, G. Sensor-based behavior control for an autonomous underwater vehicle. Int. J. Robot. Res. 2009, 28, 701–713. [Google Scholar]

- Di Ciaccio, F. The supporting role of artificial intelligence and machine/deep learning in monitoring the marine environment: A bibliometric analysis. Ecol. Quest. 2024, 35, 1–30. [Google Scholar]

- Xu, X.P.; Lin, X.X.; An, X.R. Introduction to image pro-processing subsystem of geostationary ocean color imager (GOCI). Korean J. Remote Sens. 2010, 26, 167–173. [Google Scholar]

- Chen, S.D.; Liu, Y. Migration learning based on computer vision and its application in ocean image processing. J. Coast. Res. 2020, S104, 281–285. [Google Scholar] [CrossRef]

- Xue, L.Z.; Zeng, X.Y.; Jin, A.Q. A novel deep-learning method with channel attention mechanism for underwater target recognition. Sensors 2022, 22, 5492. [Google Scholar] [CrossRef] [PubMed]

- Gong, W.J.; Tian, J.; Liu, J.Y. Underwater object classification method based on depthwise separable convolution feature fusion in sonar images. Appl. Sci. 2022, 12, 3268. [Google Scholar] [CrossRef]

- Huang, Y.; Li, W.; Yuan, F. Speckle noise reduction in sonar imagebased on adaptive redundant dictionary. J. Mar. Sci. Eng. 2020, 8, 761. [Google Scholar]

- Belcher, E.; Matsuyama, B.; Trimble, G. Object identification with acoustic lenses. In Proceedings of the Annual Conference of the Marine-Technology-Society, Honolulu, HI, USA, 5–8 November 2001. [Google Scholar]

- Shin, Y.S.; Cho, Y.G.; Choi, H.T.; Kim, A. Comparative study of sonar image processing for underwater navigation. J. Ocean. Eng. Technol. 2016, 30, 214–220. [Google Scholar]

- Chen, Y.H.; Liu, X.Y.; Jiang, J.Y.; Gao, S.Y.; Liu, Y.; Jiang, Y.Q. Reconstruction of degraded image transmitting through ocean turbulence via deep learning. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2023, 40, 2215–2222. [Google Scholar] [CrossRef]

- Yang, C.; Zhang, C.; Jiang, L.Y.; Zhang, X.W. Underwater image object detection based on multi-scale feature fusion. Mach. Vis. Appl. 2024, 35, 124. [Google Scholar] [CrossRef]

- Wang, Z.; Guo, J.X.; Zeng, L.Y.; Zhang, C.L.; Wang, B.H. MLFFNet: Multilevel feature fusion network for object detection in sonar images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5119119. [Google Scholar]

- Li, G.Y.; Liao, X.F.; Chao, B.H.; Jin, Y. Multi-scale feature fusion algorithm for underwater remote object detection in forward-looking sonar images. J. Electron. Imaging 2024, 33, 063031. [Google Scholar]

- Xu, T.; Zhou, J.Y.; Guo, W.T.; Cai, L.; Ma, Y.K. Fine reconstruction of underwater images for environmental feature fusion. Int. J. Adv. Robot. Syst. 2021, 18, 17298814211039687. [Google Scholar] [CrossRef]

- Gong, T.Y.; Zhang, M.M.; Zhou, Y.; Bai, H.H. Underwater image enhancement based on color feature fusion. Electronics 2023, 12, 4999.

- Chen, D.; Kang, F.; Li, J.J.; Zhu, S.S.; Liang, X.W. Enhancement of underwater dam crack images using multi-feature fusion. Autom. Constr. 2024, 167, 105727.

- Zhu, Y.T.; Chao, X.P.; Wang, X.Q.; Chen, J.; Huang, H.F. An unsupervised ocean surface waves suppression algorithm based on sub-aperture SAR images. Int. J. Remote Sens. 2023, 44, 1460–1483.

- Chao, X.P.; Wang, Q.S.; Wang, X.Q.; Chen, J.; Zhu, Y.T. Ocean-wave suppression for synthetic aperture radar images by depth counteraction method. Remote Sens. Environ. 2024, 305, 114086.

- Jiang, Z.Y.; Gao, X.; Shi, L.; Li, N.; Zou, L. Detection of ocean internal waves based on modified deep convolutional generative adversarial network and WaveNet in moderate resolution imaging spectroradiometer images. Appl. Sci. 2023, 13, 11235.

- Liu, F.; Song, Q.Z.; Jin, G.H. Expansion of restricted sample for underwater acoustic signal based on Generative Adversarial Networks. In Proceedings of the 10th International Conference on Graphics and Image Processing, Chengdu, China, 12–14 December 2018.

- Er, M.J.; Chen, M.J.; Zhang, Y.; Gao, W.X. Research challenges, recent advances, and popular datasets in deep learning-based underwater marine object detection: A review. Sensors 2023, 23, 1990.

- Li, X.B.; Yan, L.; Qi, P.F.; Zhang, L.P.; Goudail, F.; Liu, T.G.; Zhai, J.S.; Hu, H.F. Polarimetric imaging via deep learning: A review. Remote Sens. 2023, 15, 1540.

- Li, J.; Xu, W.K.; Deng, L.M.; Xiao, Y.; Han, Z.Z.; Zheng, H.Y. Deep learning for visual recognition and detection of aquatic animals: A review. Rev. Aquac. 2023, 15, 409–433.

- Saleh, A.; Sheaves, M.; Azghadi, M.R. Computer vision and deep learning for fish classification in underwater habitats: A survey. Fish Fish. 2022, 23, 977–999.

- Neupane, D.; Seok, J. A review on deep learning-based approaches for automatic sonar target recognition. Electronics 2020, 9, 1972.

- Whitaker, S.; Barnard, A.; Anderson, G.D.; Havens, T.C. Through-ice acoustic source tracking using vision transformers with ordinal classification. Sensors 2022, 22, 4703.

- Lei, Z.F.; Lei, X.F.; Na, W.; Zhang, Q.Y. Present status and challenges of underwater acoustic target recognition technology: A review. Front. Phys. 2022, 10, 1044890.

- Kim, H.G.; Seo, J.-M.; Kim, S.M. Comparison of GAN deep learning methods for underwater optical image enhancement. J. Ocean. Eng. Technol. 2022, 36, 32–40.

- Chai, Y.Q.; Yu, H.H.; Xu, L.; Li, D.L.; Chen, Y.Y. Deep learning algorithms for sonar imagery analysis and its application in aquaculture: A review. IEEE Sens. J. 2023, 23, 28549–28563.

- Wang, C.Y.; Liao, H.Y.M. YOLOv1 to YOLOv10: The fastest and most accurate real-time object detection systems. APSIPA Trans. Signal Inf. Process. 2024, 13, e29.

- Hussain, M. YOLOv1 to v8: Unveiling Each Variant-A Comprehensive Review of YOLO. IEEE Access 2024, 12, 42816–42833.

- Jiao, L.; Abdullah, M.I. YOLO series algorithms in object detection of unmanned aerial vehicles: A survey. Serv. Oriented Comput. Appl. 2024, 18, 269–298.

- Liu, S.; Xu, H.L.; Lin, Y.; Gao, L. Visual navigation for recovering an AUV by another AUV in shallow water. Sensors 2019, 19, 1889.

- Wang, X.M.; Zerr, B.; Thomas, H.; Clement, B.; Xie, Z.X. Pattern formation of multi-AUV systems with the optical sensor based on displacement-based formation control. Int. J. Syst. Sci. 2020, 51, 348–367.

- Auster, P.J.; Lindholm, J.; Plourde, M.; Barber, K.; Singh, H. Camera configuration and use of AUVs to census mobile fauna. Mar. Technol. Soc. J. 2007, 41, 49–52.

- Rao, J.J.; Xu, K.; Chen, J.B.; Lei, J.T.; Zhang, Z.; Zhang, Q.Y.; Giernacki, W.; Liu, M. Sea-surface target visual tracking with a multi-camera cooperation approach. Sensors 2022, 22, 693.

- Yang, D.F.; Solihin, M.I.; Zhao, Y.W.; Yao, B.C.; Chen, C.R.; Cai, B.Y.; Machmudah, A. A review of intelligent ship marine object detection based on RGB camera. IET Image Process. 2024, 18, 281–297.

- Sahoo, A.; Dwivedy, S.K.; Robi, P. Advancements in the field of autonomous underwater vehicle. Ocean. Eng. 2019, 181, 145–160.

- Alaie, H.K.; Farsi, H. Passive sonar target detection using statistical classifier and adaptive threshold. Appl. Sci. 2018, 8, 61.

- Abu, A.; Diamant, R. Enhanced fuzzy-based local information algorithm for sonar image segmentation. IEEE Trans. Image Process. 2020, 29, 445–460.

- Kim, S.H. A study on the position control system of the small ROV using sonar sensors. J. Soc. Nav. Archit. Korea 2008, 45, 579–589.

- Jiang, J.J.; Wang, X.Q.; Duan, F.J.; Fu, X.; Huang, T.T.; Li, C.Y.; Ma, L.; Bu, L.R.; Sun, Z.B. A sonar-embedded disguised communication strategy by combining sonar waveforms and whale call pulses for underwater sensor platforms. Appl. Acoust. 2019, 145, 255–266.

- Yuan, X.; Li, N.; Gong, X.B.; Yu, C.L.; Zhou, X.T.; Ortega, J.F.M. Underwater wireless sensor network-based delaunay triangulation (UWSN-DT) algorithm for sonar map fusion. Comput. J. 2023, 67, 1699–1709.

- Xi, M.; Wang, Z.J.; He, J.Y.; Wang, Y.B.; Wen, J.B.; Xiao, S.; Yang, J.C. High-Precision underwater perception and path planning of AUVs based on Quantum-Enhanced. IEEE Trans. Consum. Electron. 2024, 70, 5607–5617.

- Chen, G.J.; Cheng, D.G.; Chen, W.; Yang, X.; Guo, T.Z. Path planning for AUVs based on improved APF-AC algorithm. CMC Comput. Mater. Contin. 2024, 78, 3721–3741.

- Jangir, P.K.; Ewans, K.C.; Young, I.R. On the functionality of radar and laser ocean wave sensors. J. Mar. Sci. Eng. 2022, 10, 1260.

- Kincade, K. Sensors and lasers map ebb and flow of ocean life. Laser Focus World 2003, 39, 91–96.

- Laux, A.; Mullen, L.; Perez, P.; Zege, E. Underwater laser range finder. In Ocean Sensing and Monitoring IV, Proceedings of the SPIE Defense, Security, and Sensing, Baltimore, MD, USA, 23–27 April 2012; SPIE: Bellingham, WA, USA, 2012; Volume 8372, p. 83721B.

- Fournier, G.R.; Bonnier, D.; Forand, J.L.; Pace, P.W. Range-gated underwater laser imaging-system. Opt. Eng. 1993, 32, 2185–2190.

- Klepsvik, J.O.; Bjarnar, M.L. Laser-radar technology for underwater inspection, mapping. Sea Technol. 1996, 37, 49–53.

- Long, H.; Shen, L.Q.; Wang, Z.Y.; Chen, J.B. Underwater forward-looking sonar images target detection via speckle reduction and scene prior. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5604413.

- Franchi, M.; Ridolfi, A.; Allotta, B. Underwater navigation with 2D forward looking SONAR: An adaptive unscented Kalman filter-based strategy for AUVs. J. Field Robot. 2021, 38, 355–385.

- Greene, A.; Rahman, A.F.; Kline, R.; Rahman, M.S. Side scan sonar: A cost-efficient alternative method for measuring seagrass cover in shallow environments. Estuar. Coast. Shelf Sci. 2018, 207, 250–258.

- Belcher, E.; Hanot, W.; Burch, J. Dual-frequency identification sonar (DIDSON). In Proceedings of the 2002 International Symposium on Underwater Technology, Tokyo, Japan, 16–19 April 2002.

- Joe, H.; Cho, H.; Sung, M.; Kim, J.; Yu, S.-c. Sensor fusion of two sonar devices for underwater 3D mapping with an AUV. Auton. Robot. 2021, 45, 543–560.

- Christensen, J.H.; Mogensen, L.V.; Ravn, O. Side-Scan Sonar imaging: Real-time acoustic streaming. In Proceedings of the 13th IFAC Conference on Control Applications in Marine Systems, Robotics, and Vehicles, Oldenburg, Germany, 22–24 September 2021.

- Zacchini, L.; Franchi, M.; Ridolfi, A. Sensor-driven autonomous underwater inspections: A receding-horizon RRT-based view planning solution for AUVs. J. Field Robot. 2022, 39, 499–527.

- Li, X.H.; Li, Y.A.; Yu, J.; Chen, X.; Dai, M. PMHT approach for multi-target multi-sensor sonar tracking in clutter. Sensors 2015, 15, 28177–28192.

- Moursund, R.A.; Carlson, T.J.; Peters, R.D. A fisheries application of a dual-frequency identification sonar acoustic camera. In Proceedings of the ICES Symposium on Acoustics in Fisheries and Aquatic Ecology, Montpellier, France, 10–14 June 2002.

- Able, K.W.; Grothues, T.M.; Rackovan, J.L.; Buderman, F.E. Application of Mobile Dual-frequency Identification Sonar (DIDSON) to Fish in Estuarine Habitats. Northeast. Nat. 2014, 21, 192–209.

- Handegard, N.O.; Williams, K. Automated tracking of fish in trawls using the DIDSON (Dual frequency IDentification SONar). ICES J. Mar. Sci. 2008, 65, 636–644.

- Nichols, O.C.; Eldredge, E.; Cadrin, S.X. Gray seal behavior in a fish weir observed using Dual-Frequency identification sonar. Mar. Technol. Soc. J. 2014, 48, 72–78.

- McCann, E.L.; Johnson, N.S.; Hrodey, P.J.; Pangle, K.L. Characterization of sea lamprey stream entry using Dual-Frequency identification sonar. Trans. Am. Fish. Soc. 2018, 147, 514–524.

- Zhao, W.; Li, X.; Pang, Z.Q.; Hao, C.P. A novel distributed bearing-only target tracking algorithm for underwater sensor networks with resource constraints. IET Radar Sonar Navig. 2024, 18, 1161–1177.

- Tang, M.Q.; Ren, C.J.; Xin, Y.L. Efficient resource allocation algorithm for underwater wireless sensor networks based on improved stochastic gradient descent method. Ad Hoc Sens. Wirel. Netw. 2021, 49, 207–222.

- Wu, H.J.; Wang, X.L.; Liao, H.B.; Jiao, X.B.; Liu, Y.Y.; Shu, X.J.; Wang, J.L.; Rao, Y.J. Signal processing in smart fiber-optic distributed acoustic sensor. Acta Opt. Sin. 2024, 44, 0106009.

- Zhao, D.D.; Mao, W.B.; Chen, P.; Dang, Y.J.; Liang, R.H. FPGA-based real-time synchronous parallel system for underwater acoustic positioning and navigation. IEEE Trans. Ind. Electron. 2024, 71, 3199–3207.

- Li, C.Y.; Guo, S.X. Characteristic evaluation via multi-sensor information fusion strategy for spherical underwater robots. Inf. Fusion 2023, 95, 199–214.

- Periola, A.A.; Alonge, A.A.; Ogudo, K.A. Edge computing for big data processing in underwater applications. Wirel. Netw. 2022, 28, 2255–2271.

- Fuentes, A.J.; Suchy, M.; Palomo, P.B. The greatest challenge for URN reduction in the oceans by means of engineering. In Proceedings of the MTS/IEEE Oceans Seattle Conference, Seattle, WA, USA, 27–31 October 2019.

- Zheng, L.Y.; Liu, M.Q.; Zhang, S.L.; Liu, Z.A.; Dong, S.L. End-to-end multi-sensor fusion method based on deep reinforcement learning in UASNs. Ocean. Eng. 2024, 305, 117904.

- Bhattacharjee, S.; Shanmugam, P.; Das, S. A deep-learning-based lightweight model for ship localizations in SAR Images. IEEE Access 2023, 11, 94415–94427.

- Huang, J.F.; Zhang, T.J.; Zhao, S.J.; Zhang, L.; Zhou, Y.C. An underwater organism image dataset and a lightweight module designed for object detection networks. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 147.

- Lu, Y.; Yang, M.; Liu, R.W. DSPNet: Deep learning-enabled blind reduction of speckle noise. In Proceedings of the 25th International Conference on Pattern Recognition, Electronic Network, Milan, Italy, 10–15 January 2021.

- Wang, H.; Gao, N.; Xiao, Y.; Tang, Y. Image feature extraction based on improved FCN for UUV side-scan sonar. Mar. Geophys. Res. 2020, 41, 18.

- Cheng, Z.; Huo, G.; Li, H. A multi-domain collaborative transfer learning method with multi-scale repeated attention mechanism for underwater side-scan sonar image classification. Remote Sens. 2022, 14, 355.

- Ribeiro, P.O.C.S.; dos Santos, M.M.; Drews, P.L.J.; Botelho, S.S.C.; Longaray, L.M.; Giacomo, G.G.; Pias, M.R. Underwater place recognition in unknown environments with triplet based acoustic image retrieval. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications, Orlando, FL, USA, 17–20 December 2018.

- Wang, Z.; Guo, J.; Huang, W.; Zhang, S. Side-scan sonar image segmentation based on multi-channel fusion convolution neural networks. IEEE Sens. J. 2022, 22, 5911–5928.

- Yu, S.B. Sonar image target detection based on deep learning. Math. Probl. Eng. 2022, 2022, 5294151.

- Dai, Z.Z.; Liang, H.; Duan, T. Small-sample sonar image classification based on deep learning. J. Mar. Sci. Eng. 2022, 10, 1820.

- Ge, H.L.; Dai, Y.W.; Zhu, Z.Y.; Liu, R.B. A deep learning model applied to optical image target detection and recognition for the identification of underwater biostructures. Machines 2022, 10, 809.

- Zhang, Y.; Wang, X.W.; Sun, L.; Lei, P.S.; Chen, J.N.; He, J.; Zhou, Y.; Liu, Y.L. Mask-guided deep learning fishing net detection and recognition based on underwater range gated laser imaging. Opt. Laser Technol. 2024, 171, 110402.

- Yang, K.; Wang, B.; Fang, Z.D.; Cai, B.G. An end-to-end underwater acoustic target recognition model based on One-Dimensional Convolution and transformer. J. Mar. Sci. Eng. 2024, 12, 1793.

- Dong, K.Y.; Liu, T.; Shi, Z.; Zhang, Y. Visual detection algorithm for enhanced environmental perception of unmanned surface vehicles in complex marine environments. J. Intell. Robot. Syst. 2024, 110, 1.

- Li, L.L.; Zhang, S.J.; Wang, B. Plant disease detection and classification by deep learning—A review. IEEE Access 2021, 9, 56683–56698.

- Li, Y.; Tang, Y. Design on intelligent feature graphics based on convolution operation. Mathematics 2022, 10, 384.

- Singh, O.D.; Malik, A.; Yadav, V.; Gupta, S.; Dora, S. Deep segmenter system for recognition of micro cracks in solar cell. Multimed. Tools Appl. 2021, 80, 6509–6533.

- Liu, H.Y.; Li, Y. Interaction of Asymmetric Adaptive Network Structures and Parameter Balance in Image Feature Extraction and Recognition. Symmetry 2024, 16, 1651.

- Ma, Y.X.; Zhang, X.B.; Jiang, F.K.; Wei, Z.R.; Liu, C.G. Near-field geoacoustic inversion using bottom reflection signals via self-attention mechanism. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 10545–10558.

- Liu, Y.F.; Zhao, Y.J.; Gerstoft, P.; Zhou, F.; Qiao, G.; Yin, J.W. Deep transfer learning-based variable Doppler underwater acoustic communications. J. Acoust. Soc. Am. 2023, 154, 232–244.

- Scaradozzi, D.; de Marco, R.; Li Veli, D.; Lucchetti, A.; Screpanti, L.; Di Nardo, F. Convolutional Neural Networks for enhancing detection of Dolphin whistles in a dense acoustic environment. IEEE Access 2024, 12, 127141–127148.

- Li, X.W.; Huang, W.M.; Peters, D.K.; Power, D. Assessment of synthetic aperture radar image preprocessing methods for iceberg and ship recognition with Convolutional Neural Networks. In Proceedings of the IEEE Radar Conference, Boston, MA, USA, 22–26 April 2019.

- Ashok, P.; Latha, B. Feature extraction of underwater acoustic signal target using machine learning technique. Trait. du Signal 2024, 41, 1303–1314.

- Wang, H.Y.; Li, X.F. DeepBlue: Advanced convolutional neural network applications for ocean remote sensing. IEEE Geosci. Remote Sens. Mag. 2024, 12, 138–161.

- Uijlings, J.R.; van de Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Yuan, Z.W.; Zhang, J. Feature extraction and image retrieval based on AlexNet. In Proceedings of the 8th International Conference on Digital Image Processing, Chengdu, China, 20–23 May 2016.