1. Introduction

Traffic classification refers to the process of categorizing network traffic into different types or applications based on the features of network packets. This technology has been widely applied in various domains, including Quality of Service (QoS) control, pricing, resource planning for network service providers, as well as malware detection and intrusion detection [1,2]. Due to the growing demand for privacy and security from users and businesses, an increasing number of online applications are adopting encryption protocols (such as SSL/TLS) to protect data. According to the Google Internet Transparency Report of January 2025 [3], over 97% of internet traffic is currently encrypted via HTTPS. These encryption protocols offer privacy protection to users but also pose greater challenges and difficulties in traffic analysis and classification. Moreover, some malicious actors exploit encryption technologies for illegal activities, which not only disrupt the stability of cyberspace but also present significant threats to national security [4]. Therefore, to address new environments or unknown encryption strategies, capturing effective features from diverse encrypted traffic and supporting accurate and generalizable traffic classification is crucial for achieving network security and efficient management [5,6].

With the evolution of network technologies and growing application demands, significant progress has been made in network traffic classification techniques, and researchers have proposed various solutions and frameworks to meet diverse classification needs. The earliest solutions classified traffic by port number, identifying specific ports to distinguish between traffic types [2]. However, with the widespread use of port randomization and obfuscation techniques, the accuracy of this method has gradually declined, making it unsuitable for complex application scenarios. Nevertheless, because of their simplicity and low computational cost, port-based methods are still used as auxiliary means in some encrypted traffic classification systems. To address the limitations of port-based classification, methods based on Deep Packet Inspection (DPI) have been proposed [7]. DPI performs precise traffic classification by matching patterns or keywords within data packets, effectively identifying unencrypted traffic. However, because it relies heavily on plaintext data, this method has gradually lost its effectiveness in an increasingly encrypted network environment.

In recent years, with the rapid development of machine learning and deep learning, these methods have been widely applied to network traffic classification, opening new research directions [8]. Current mainstream encrypted traffic classification methods fall into two categories: machine learning methods based on statistical features and deep learning methods based on self-learned features. The former rely on manually designed statistical features of traffic to train classification models, which makes them applicable to encrypted traffic. However, their performance depends on the quality of feature design, and their generalization ability is limited. In contrast, deep learning methods based on self-learned features, such as convolutional neural networks (CNNs), automatically extract features directly from raw traffic data, eliminating the reliance on manually designed features [9]. These methods show significant advantages in feature extraction, but their performance relies heavily on the quantity and distribution of labeled data, which can lead the model to learn biased features.

How to obtain unbiased and generalizable features from a large amount of unlabeled data has become a key research issue. In this context, a significant breakthrough in the field of natural language processing is the Bidirectional Encoder Representations from Transformers (BERT), proposed by the Google AI Language Team [10]. BERT learns universal features from large-scale unlabeled data through pre-training and is then fine-tuned with a small amount of labeled data, significantly improving the model's generalization performance. This technology has recently been introduced into the field of encrypted traffic classification [6,11,12], achieving good results. However, most existing BERT-based encrypted traffic classification methods primarily focus on packet-level features (the overall structure of traffic) and make limited use of byte-level features (which focus on the byte sequences within each packet and the dependencies between bytes). For instance, methods like PERT [11] and ET-BERT [6] rely solely on the packet-level features extracted by BERT for classification without fully exploiting the underlying structural information of the traffic data. This approach performs poorly on new encrypted traffic classification tasks, especially when there is a significant distribution shift between the pre-training data and the fine-tuning data.

Therefore, how to combine packet-level features and byte-level features to fully explore the deep characteristics of data packets has become an urgent research direction in the field of encrypted traffic classification. To address this issue, in this paper, we propose the CLA-BERT model to overcome the limitations of previous pre-trained models in encrypted traffic classification. We implement the following strategies: first, the BERT model is used to extract the packet-level feature vector representation of the traffic; then, by combining convolutional neural networks (CNN) and Bidirectional Long Short-Term Memory networks (BiLSTM), we can capture both the local features and global dependencies of byte-level traffic features; finally, a multi-head attention mechanism is employed to fuse the packet-level feature vector and byte-level feature vector into the final traffic feature representation. This makes the feature representation more comprehensive and improves the classification performance, as demonstrated by experiments.

The main contributions of this paper are summarized as follows:

A novel encrypted traffic classification model, CLA-BERT, is proposed. By combining packet-level and byte-level features, the model effectively extracts multi-layered information from encrypted traffic, significantly improving classification performance. Compared to existing methods, CLA-BERT demonstrates stronger expressiveness and accuracy when handling complex encrypted traffic.

Small-sample experiments validate the robustness of CLA-BERT in data-scarce scenarios. Under different data volume conditions (5%, 10%, 30%, 50%, 70%), the F1 scores of CLA-BERT are 93.51%, 94.79%, 97.10%, 97.78%, and 98.09%, respectively. This result indicates that CLA-BERT can maintain highly stable classification performance with small datasets, overcoming the performance bottleneck of traditional models in small-sample scenarios.

CLA-BERT demonstrates strong generalization ability in cross-task scenarios, achieving F1 scores of 99.02%, 99.49%, and 97.78% in VPN encryption service classification, VPN encryption application classification, and TLS 1.3 encryption application classification tasks, respectively. This indicates its strong adaptability across different encrypted traffic classification tasks. However, the performance of CLA-BERT heavily relies on the parameters of the pre-trained model. Future research could further optimize adversarial training strategies to improve the model’s robustness in environments with distribution shifts while also exploring more efficient domain adaptation methods to further enhance the model’s robustness and applicability in cross-task scenarios.

4. Method

In recent years, the combination of BERT and LSTM models has achieved remarkable success in the field of Natural Language Processing (NLP), particularly in sentiment analysis and text classification tasks [29,30]. Based on this, existing studies have verified the feasibility of applying BERT to encrypted traffic classification. However, most existing methods [6,11,28] primarily focus on packet-level features and overlook the effective utilization of byte-level features within the packets. Inspired by these studies, this paper proposes the CLA-BERT model, which effectively integrates both packet-level and byte-level features of traffic.

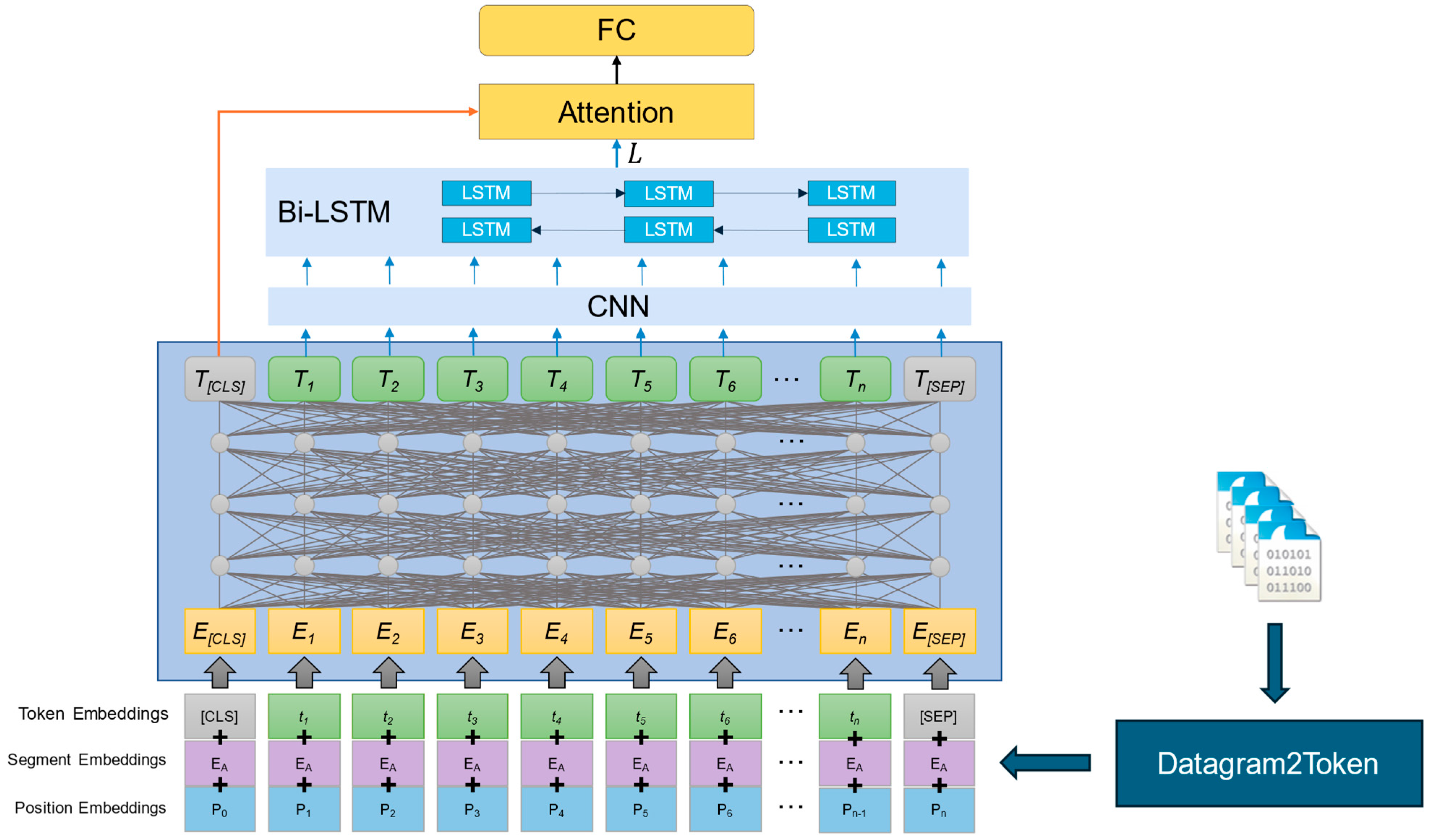

The encrypted traffic classification method proposed in this paper consists of three main steps: data preprocessing, traffic representation, and traffic classification. The model structure is shown in Figure 1. First, the raw PCAP files undergo data preprocessing, including traffic segmentation, data cleaning, length normalization, format conversion, and sequence generation. The entire preprocessing process is completed using the Datagram2Token tool [6]. Next, the preprocessed data undergo token embedding, positional embedding, and segment embedding to form the model input. The BERT self-attention mechanism is used to extract information containing packet-level features ($T_1$, …, $T_{[SEP]}$). Among the output tokens, $T_{[CLS]}$ fuses the semantic information of every token in the data packet more comprehensively than the other tokens under the control of the attention mechanism, and therefore represents the features of the entire packet more accurately. Then, the byte-level feature vectors are passed through a convolutional neural network (CNN) and a Bidirectional Long Short-Term Memory network (BiLSTM) in turn to capture the local features and global dependencies of byte-level traffic, which yields the byte-level output of the BiLSTM. In the classification phase, $T_{[CLS]}$ and the BiLSTM output are input into the multi-head attention mechanism, where information is learned in parallel across multiple feature spaces and the features are fused. Finally, classification is performed through a fully connected layer to obtain the final prediction result. The pseudo-code of CLA-BERT is shown in Algorithm 1.

Algorithm 1: CLA-BERT pseudo-code

Input:

- Source sequence: the input token sequence of each sample.
- Target labels: one class label per sample.
- Segment information: the segment information for the sequence.
- Soft targets (optional): soft targets over the classes.

Output: the predicted logits and, when target labels are provided, the loss.

Steps:

- 1. Embedding layer processing: convert the input source sequence and segment information into embeddings.
- 2. Encoder processing: pass the embeddings through the encoder to obtain the encoder output.
- 3. Separation of [CLS] and token outputs: extract the [CLS] output and the remaining token outputs from the encoder output.
- 4. CNN layer processing: apply a 1D convolution to the token outputs to obtain the convolutional output, then adjust its dimensions for the next layer.
- 5. Bi-LSTM layer processing: pass the convolutional output through a bidirectional LSTM to obtain the BiLSTM output.
- 6. Attention mechanism: use the [CLS] output as the query and pass it through a linear layer; then apply the multi-head attention mechanism to compute the attention output and the attention weights.
- 7. Concatenating outputs: concatenate the packet-level and attention-fused features to form the final representation.
- 8. Classification layer: pass the concatenated output through a fully connected layer to obtain the predicted logits.
- 9. Loss calculation: if target labels are provided, compute the loss. If soft targets are enabled, compute the mixed loss; otherwise, compute the standard cross-entropy loss.
- 10. Prediction: if no target labels are provided, return only the predicted logits.
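To make the data flow of Algorithm 1 easier to follow, the sketch below traces tensor shapes through the ten steps. It is only an illustration: the source does not give the intermediate sizes, so the padding choice of the convolution, the width of the query projection, and the choice of which vectors are concatenated are all assumptions, and the names `Shapes` and `trace_shapes` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Shapes:
    batch: int = 64          # batch size reported in the experimental setup
    seq_len: int = 128       # maximum input length in tokens
    hidden: int = 768        # BERT token embedding dimension
    cnn_channels: int = 64   # number of CNN channels selected in the paper
    lstm_hidden: int = 256   # BiLSTM hidden units per direction
    num_classes: int = 12    # e.g. the 12 ISCX-VPN-Service categories


def trace_shapes(s: Shapes) -> Dict[str, Tuple[int, ...]]:
    """Return the assumed tensor shape after each step of Algorithm 1."""
    shapes = {}
    shapes["embeddings"] = (s.batch, s.seq_len, s.hidden)          # step 1
    shapes["encoder_output"] = (s.batch, s.seq_len, s.hidden)      # step 2
    shapes["cls_output"] = (s.batch, s.hidden)                     # step 3
    shapes["token_outputs"] = (s.batch, s.seq_len - 1, s.hidden)   # step 3
    # Assumption: the convolution is padded so the length is unchanged.
    shapes["cnn_output"] = (s.batch, s.seq_len - 1, s.cnn_channels)       # step 4
    # A bidirectional LSTM concatenates both directions.
    shapes["bilstm_output"] = (s.batch, s.seq_len - 1, 2 * s.lstm_hidden)  # step 5
    # Assumption: the query is projected to the BiLSTM output width.
    shapes["query"] = (s.batch, 1, 2 * s.lstm_hidden)              # step 6
    shapes["attention_output"] = (s.batch, 2 * s.lstm_hidden)      # step 6
    # Assumption: the [CLS] output and the attention output are concatenated.
    shapes["fused"] = (s.batch, s.hidden + 2 * s.lstm_hidden)      # step 7
    shapes["logits"] = (s.batch, s.num_classes)                    # step 8
    return shapes
```

Under these assumptions the fused representation has 768 + 512 = 1280 features per sample before the fully connected layer.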

4.1. Data Preprocessing

To minimize the impact of noise and redundant information in the original dataset on model performance and to keep the data samples consistent with the model input format, we use the Datagram2Token tool for data preprocessing. First, the raw PCAP files are segmented at the packet level. Then, invalid packets smaller than 80 bytes are removed, as they do not contain payload information. Traffic unrelated to the transmitted content, such as ARP, ICMP, and DHCP packets, is also discarded. Since strong identifiers (e.g., port numbers and IP addresses) do not carry valuable discriminative information and may lead to model overfitting, we avoid relying on them during model training. To reduce bias and interference, we also remove the Ethernet header and the protocol port information in the IP and TCP headers. Next, the packet data are read in hexadecimal format, and the hexadecimal traffic sequences are consolidated into two-byte units, each consisting of four hexadecimal digits. The processed data are shown in Table 1.
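The length filter and the two-byte unit generation described above can be sketched as follows. This is not the Datagram2Token implementation; `packet_to_tokens` is a hypothetical helper, header stripping is left out, and the `stride` parameter is an assumption because the text does not say whether consecutive units overlap.

```python
from typing import List


def packet_to_tokens(packet: bytes, min_len: int = 80, stride: int = 2) -> List[str]:
    """Turn one packet into tokens of four hexadecimal digits (two bytes).

    Packets shorter than `min_len` bytes are treated as invalid and yield
    no tokens. `stride` is counted in bytes: 2 gives non-overlapping units,
    1 gives overlapping units (an assumption, not stated in the text).
    A trailing byte that cannot fill a whole unit is dropped.
    """
    if len(packet) < min_len:
        return []
    hex_str = packet.hex()          # two hexadecimal digits per byte
    step = 2 * stride
    return [hex_str[i:i + 4] for i in range(0, len(hex_str) - 3, step)]
```

For an 80-byte packet this gives 40 tokens with `stride=2` and 79 tokens with `stride=1`.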

4.2. Traffic Representation

4.2.1. Packet-Level Feature Extraction Module Based on BERT

ET-BERT [6] models the context relationships at the byte level and BURST level using two self-supervised learning tasks: the network traffic-specific masked BURST task and the homogeneous BURST prediction task. This method leverages large-scale unlabeled data for pre-training, thereby optimizing the universal semantic representation of data packets. Based on this, this paper designs a packet-level feature extraction module based on ET-BERT. The module consists of 12 layers of Transformers, with the representation dimension of each input token set to 768 to enhance the expressive power of packet-level features. The workflow of the BERT model is divided into two main stages: the token embedding stage and the encoding stage.

In the token embedding stage, the model performs token embedding, positional embedding, and segment embedding:

- (1)

Token Embedding: Token embedding converts each token in the sequence into a vector by looking it up in a dictionary table. The value of each token ranges from 0 to 65,535, so the dictionary size is 65,536. Additionally, two special tokens are added to the input sequence during token embedding: [CLS] at the beginning of the sequence and [SEP] at the end. When the input is shorter than the length required by the model, the token [PAD] is appended to the end of the sequence; when the input is longer than the required length, it is truncated.

- (2)

Positional Embedding: Positional embedding assigns a position number to each token in the sequence. Given the sequential nature of traffic data transmission, positional embedding ensures that the model attends to the temporal relationships between tokens. Each input token is assigned a 768-dimensional vector that represents its position in the sequence.

- (3)

Segment Embedding: Segment embedding distinguishes the two sentences in an input sentence pair and classifies them based on the semantic similarity between the sentences. This embedding is particularly important in the pre-training phase, although the specific pre-training process is not addressed in this paper.
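As a small illustration of how an input sequence is assembled before embedding, the sketch below adds the special tokens and pads or truncates to the maximum length of 128 tokens used in this paper. `build_input` and `position_ids` are hypothetical helper names, and truncating the body so that [SEP] stays at the end is an assumption about how truncation is applied.

```python
from typing import List

CLS, SEP, PAD = "[CLS]", "[SEP]", "[PAD]"


def build_input(tokens: List[str], max_len: int = 128) -> List[str]:
    """Wrap tokens as [CLS] ... [SEP], then pad or truncate to max_len."""
    body = tokens[: max_len - 2]            # leave room for [CLS] and [SEP]
    seq = [CLS] + body + [SEP]
    seq += [PAD] * (max_len - len(seq))     # adds nothing if already full
    return seq


def position_ids(seq: List[str]) -> List[int]:
    """Position number of each token, used to look up positional embeddings."""
    return list(range(len(seq)))
```

Each entry of the resulting sequence would then be mapped to the sum of its token, positional, and segment embedding vectors.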

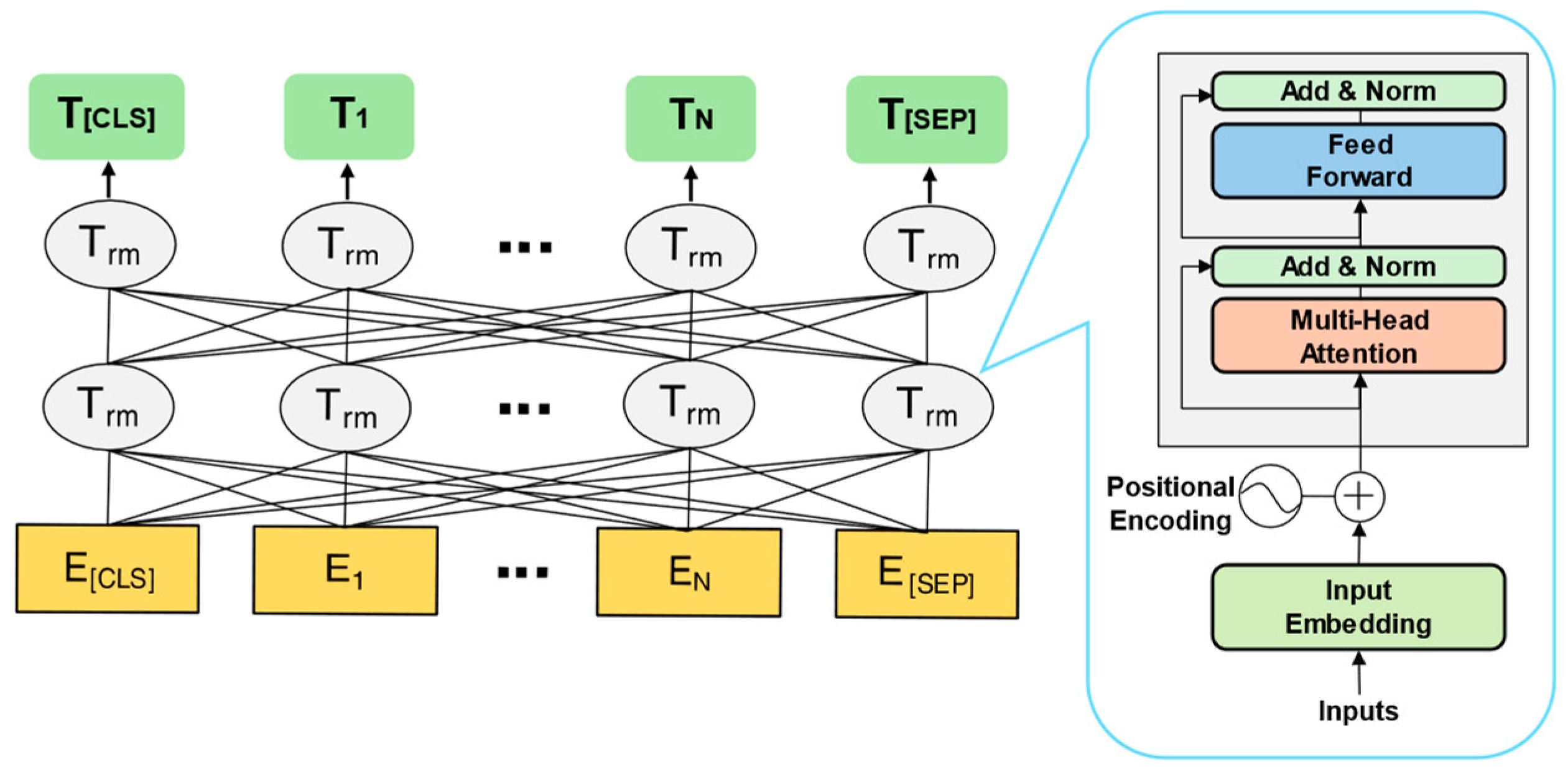

In the encoding stage, the BERT model uses multiple layers of bidirectional Transformer encoders to encode the input vectors. The input vector is ($E_{[CLS]}$, $E_1$, …, $E_{[SEP]}$), and the corresponding output vector is ($T_{[CLS]}$, $T_1$, …, $T_{[SEP]}$). In this paper, the special token [CLS] from the BERT model is used to represent the packet-level features of traffic. As the starting token of the sequence input, the hidden state of [CLS] is iteratively aggregated through the multi-layer Transformer attention mechanism, effectively capturing the global semantic relationships between the data units within the traffic packet. This design has two advantages: (1) The self-attention mechanism allows the [CLS] token to establish dynamic weighted connections with all other tokens in the sequence, achieving fine-grained information fusion. (2) Through the multi-layered encoding process, [CLS] ultimately forms a vector representation that contains the full semantic information of the sequence, providing a highly discriminative packet-level feature representation for encrypted traffic classification. The BERT model architecture uses multi-layer bidirectional Transformer encoders, as shown in Figure 2.

The core of the Transformer is the self-attention mechanism, which captures the relationships between any two tokens in the sequence. The main idea of the self-attention mechanism is as follows: for each input token, the model computes its relationship with other tokens in the sequence and updates each token's representation based on these relationships. The computation process of the self-attention mechanism [24] can be expressed by Equations (1) and (2):

$$Q = XW^{Q}, \quad K = XW^{K}, \quad V = XW^{V} \tag{1}$$

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{2}$$

where $Q$ is the query matrix, $K$ is the key matrix, $V$ is the value matrix, $X$ represents the input vector, $d_k$ denotes the dimension of the key vectors, and $W$ (that is, $W^{Q}$, $W^{K}$, and $W^{V}$) represents the learned weight matrix.
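The scaled dot-product step of the self-attention mechanism can be illustrated with a few lines of plain Python. This is a minimal sketch that takes the query, key, and value matrices as given, so the learned projections from the input are left out; the function names are illustrative only.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V))
                    for j in range(len(V[0]))])
    return out
```

With an all-zero query, every key receives the same weight, so the output is the plain average of the value vectors.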

BERT enhances the model's expressive capability through the use of multi-head self-attention [10]. Multi-head self-attention is an extension of the self-attention mechanism, which captures representations from different subspaces by computing multiple self-attention "heads" in parallel. Each "head" is an independent self-attention mechanism that processes the input using different query, key, and value matrices. The computation process of the multi-head self-attention mechanism [10] can be expressed by Equations (3) and (4):

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)W^{O} \tag{3}$$

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V}) \tag{4}$$

where $h$ is the number of attention heads, $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the independent weight matrices for each head, and $W^{O}$ represents the final output matrix.

Through the multi-head mechanism, BERT can simultaneously learn multiple different attention patterns, with each attention head focusing on different parts or features of the sequence. This enables BERT to have stronger modeling capabilities compared to a single self-attention mechanism, allowing it to capture richer semantic information.

4.2.2. Byte-Level Feature Extraction Module Based on CNN-BiLSTM

In recent years, methods combining CNN and LSTM have performed well in tasks such as natural language processing and time-series processing, including sentiment analysis [31]. Research has shown that using models like CNN on top of the BERT model can extract deeper features from sequences. Inspired by this, we use a combination of CNN and BiLSTM after the BERT model to extract byte-level features of the traffic. The proposed byte-level feature learning module consists of CNN and BiLSTM layers, aiming to extract byte-level feature representations from the raw byte data of the input packets.

In this paper, the CNN layer is first used to capture the local features of the traffic by using the output data from BERT ($T_1$, …, $T_{[SEP]}$) as input, enhancing the model's sensitivity to local patterns. The CNN layer is composed of one-dimensional convolutional layers and ReLU activation functions, and the computation process of the CNN layer can be expressed [9] by Equations (5) and (6):

$$c_i = W \cdot x_{i:i+s-1} + b \tag{5}$$

$$y_i = \mathrm{ReLU}(c_i) = \max(0, c_i) \tag{6}$$

where $x$ represents the input data to the CNN, $W$ is the convolution kernel, $b$ is the bias, $s$ denotes the size of the convolution kernel, and $y$ represents the output data. Here $x_{i:i+s-1}$ is the window of $s$ consecutive inputs starting at position $i$.
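A one-dimensional convolution followed by ReLU can be sketched for a single channel as below. It assumes no padding and a stride of one, neither of which is specified in the text, and it uses the sliding dot product that deep learning frameworks call convolution; `conv1d_relu` is a hypothetical name.

```python
from typing import List


def conv1d_relu(x: List[float], kernel: List[float], bias: float = 0.0) -> List[float]:
    """Slide a kernel of size s over x, add the bias, and apply ReLU."""
    s = len(kernel)
    out = []
    for i in range(len(x) - s + 1):
        # Dot product of the kernel with the window x[i : i + s].
        c = sum(w * v for w, v in zip(kernel, x[i:i + s])) + bias
        out.append(max(0.0, c))   # ReLU keeps only positive responses
    return out
```

For example, the kernel `[-1.0, 1.0]` responds only where the input rises from one position to the next, which is the kind of local pattern the CNN layer is meant to pick up.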

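Subsequently, the output from the CNN layer is passed to the LSTM layer to capture the forward and backward dependencies of the traffic sequence. The LSTM network controls the flow of information through four main gating mechanisms, namely, the forget gate $f_t$, input gate $i_t$, candidate cell state $\tilde{c}_t$, and output gate $o_t$, as shown in Figure 3. The computation process [25] is as follows:

$$f_t = \sigma(W_f[h_{t-1}, x_t] + b_f)$$

$$i_t = \sigma(W_i[h_{t-1}, x_t] + b_i)$$

$$\tilde{c}_t = \tanh(W_c[h_{t-1}, x_t] + b_c)$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

$$o_t = \sigma(W_o[h_{t-1}, x_t] + b_o)$$

$$h_t = o_t \odot \tanh(c_t)$$

where $x_t$ represents the input vector at the current time step $t$, $h_{t-1}$ represents the hidden state from the previous time step, $c_{t-1}$ denotes the cell state from the previous time step, $W$ is the weight matrix, and $b$ represents the bias.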
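The gate computations of a single LSTM time step can be written out for the scalar case as follows. This is a sketch of the standard LSTM cell rather than the exact layer used in the experiments; the dictionary layout of the weights and the name `lstm_step` are assumptions made for illustration.

```python
import math
from typing import Dict, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t: float, h_prev: float, c_prev: float,
              W: Dict[str, Tuple[float, float]],
              b: Dict[str, float]) -> Tuple[float, float]:
    """One LSTM step with scalar input and state.

    W[g] = (weight on h_prev, weight on x_t) and b[g] is the bias,
    for g in "f" (forget), "i" (input), "c" (candidate), "o" (output).
    Returns the new hidden state and cell state.
    """
    def pre(g: str) -> float:
        w_h, w_x = W[g]
        return w_h * h_prev + w_x * x_t + b[g]

    f_t = sigmoid(pre("f"))            # how much of the old cell state to keep
    i_t = sigmoid(pre("i"))            # how much of the candidate to write
    c_tilde = math.tanh(pre("c"))      # candidate cell state
    o_t = sigmoid(pre("o"))            # how much of the cell state to expose
    c_t = f_t * c_prev + i_t * c_tilde
    h_t = o_t * math.tanh(c_t)
    return h_t, c_t
```

With all weights and biases set to zero, every gate equals 0.5 and the candidate is 0, so the cell state is simply halved at each step.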

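In the LSTM layer, this paper selects BiLSTM as the structure for traffic feature extraction, as shown in Figure 4. BiLSTM is a variant of LSTM, consisting of a forward LSTM and a backward LSTM, which can concatenate the forward and backward temporal information of traffic data. The computation process [25] is as follows:

$$\overrightarrow{h}_t = \overrightarrow{\mathrm{LSTM}}(x_t, \overrightarrow{h}_{t-1}), \quad t = 1, \dots, T$$

$$\overleftarrow{h}_t = \overleftarrow{\mathrm{LSTM}}(x_t, \overleftarrow{h}_{t+1}), \quad t = T, \dots, 1$$

$$h_t = [\overrightarrow{h}_t; \overleftarrow{h}_t]$$

where $x_t$ represents the input, $t$ denotes the time step, $T$ is the sequence length, $\overrightarrow{h}_t$ represents the output of the forward LSTM, $\overleftarrow{h}_t$ is the output of the backward LSTM, and $h_t$ represents the concatenated output of the bidirectional LSTM.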

The temporal information of encrypted traffic not only involves past behavior but also encompasses future trends. BiLSTM, by simultaneously considering both forward and backward temporal information, enables the model to establish connections between the past and future byte states, thereby providing a more comprehensive feature representation.
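The bidirectional wiring itself is independent of the cell that is used. The sketch below runs one recurrent step function from left to right and another from right to left, then pairs the two states at each position. The step functions are placeholders supplied by the caller (a real BiLSTM would use two LSTM cells with separate parameters), and `run_bidirectional` is a hypothetical name.

```python
from typing import Callable, List, Tuple

Step = Callable[[float, float], float]   # (input, previous state) -> new state


def run_bidirectional(xs: List[float], fwd_step: Step, bwd_step: Step,
                      h0: float = 0.0) -> List[Tuple[float, float]]:
    """Return (forward state, backward state) for every position in xs."""
    fwd, h = [], h0
    for x in xs:                      # t = 1, ..., T
        h = fwd_step(x, h)
        fwd.append(h)

    bwd, h = [], h0
    for x in reversed(xs):            # t = T, ..., 1
        h = bwd_step(x, h)
        bwd.append(h)
    bwd.reverse()                     # realign with the original positions

    return list(zip(fwd, bwd))
```

With a running sum as a toy cell, position `t` ends up holding the sum of everything up to `t` together with the sum of everything from `t` onward, which shows how each output sees both directions of the sequence.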

4.3. Feature Fusion and Classification Based on Attention Mechanism

This paper uses an attention mechanism-based feature fusion module to combine the outputs of the packet-level feature extraction module and the byte-level feature extraction module into a single feature vector. This approach captures the inherent dependencies between different data positions within the traffic from a global perspective and highlights key byte features. The module includes a multi-head attention layer and a feature concatenation operation, where the attention layer contains four attention heads. Specifically, the output of the BERT-based packet-level feature extraction module is used to construct the query matrix $Q$, while the output of the CNN-BiLSTM-based byte-level feature extraction module is used to construct the key matrix $K$ and the value matrix $V$. The main computation formula [10] of this module is as follows:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)W^{O}$$

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$$

where $W^{O}$ represents the linear mapping, $\mathrm{Concat}$ denotes the concatenation operation, and $\mathrm{head}_i$ represents the computation of each individual attention.
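The fusion step can be illustrated with a single packet-level query attending over a sequence of byte-level vectors using four heads. This sketch is deliberately simplified: the learned projection matrices are replaced by identity mappings, each head just works on its own slice of the feature dimension, the byte-level vectors serve as both keys and values, and the query is assumed to already have the same width as those vectors. `multi_head_fuse` is a hypothetical name.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(v - m) for v in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_fuse(query: List[float], byte_feats: List[List[float]],
                    num_heads: int = 4) -> List[float]:
    """Single-query multi-head attention over byte-level feature vectors."""
    d = len(query)
    assert d % num_heads == 0, "feature width must divide evenly into heads"
    assert all(len(v) == d for v in byte_feats)
    d_head = d // num_heads

    fused: List[float] = []
    for h in range(num_heads):
        lo, hi = h * d_head, (h + 1) * d_head
        q = query[lo:hi]
        # Scaled dot-product scores of this head against every position.
        scores = [sum(a * b for a, b in zip(q, v[lo:hi])) / math.sqrt(d_head)
                  for v in byte_feats]
        weights = softmax(scores)
        # Weighted sum of this head's slice; heads are concatenated in order.
        fused.extend(sum(w * v[j] for w, v in zip(weights, byte_feats))
                     for j in range(lo, hi))
    return fused
```

The returned vector has the same width as the byte-level features and would then be concatenated with the packet-level vector before classification.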

Based on the feature fusion, we perform classification through a fully connected layer. The final output $y$ represents [6] the scores of each input sample across the categories, as shown in Equation (7):

$$y = WF + b \tag{7}$$

where $F$ represents the concatenated feature vector, $W$ denotes the mapping from the concatenated feature vector to the number of categories, and $b$ represents the bias of the categories.
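The classification layer is a single affine map from the fused feature vector to one score per category. A minimal sketch follows, with `classify` as a hypothetical name and the highest score taken as the predicted category:

```python
from typing import List, Tuple


def classify(features: List[float], W: List[List[float]],
             b: List[float]) -> Tuple[List[float], int]:
    """Fully connected layer: one row of W and one bias per category.

    Returns the score of every category and the index of the best one.
    """
    logits = [sum(w * f for w, f in zip(row, features)) + bias
              for row, bias in zip(W, b)]
    predicted = max(range(len(logits)), key=lambda k: logits[k])
    return logits, predicted
```

During training, these scores would be passed to the loss function rather than reduced to a single index.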

5. Experiment

5.1. Experimental Environment and Setup

The experiments were conducted on a computer running the Ubuntu 20.04 operating system and Python 3.8, equipped with an Intel(R) Xeon(R) Platinum 8481C processor and an NVIDIA GeForce RTX 4090D GPU (24 GB VRAM). The model is implemented and fine-tuned using the Universal Encoder Representation (UER) framework [32] and the PyTorch 1.11.0 framework. UER is an open-source framework designed for training and fine-tuning pre-trained language models, supporting a variety of pre-trained models, including BERT, GPT, and others.

The model's parameter settings are as follows: In the BERT layer, the maximum input length is set to 128 tokens, and the token embedding dimension is 768. The batch size is set to 64, the learning rate is $2 \times 10^{-5}$, the number of training epochs is set to 16, and the Adam optimizer is used.

5.2. Dataset

To comprehensively evaluate the performance of the CLA-BERT model in different encrypted traffic classification scenarios, this study selects two representative scenarios: TLS 1.3 encrypted application classification and VPN traffic detection. Experiments were conducted on two commonly used public datasets, with three encrypted traffic classification tasks, as shown in Table 2.

In the TLS 1.3 application identification task, the experiment used the CSTNET-TLS 1.3 dataset [6] released by the Chinese Academy of Sciences. This dataset was collected from real network environments between March and July 2021, covering traffic samples from 120 applications supporting the TLS 1.3 protocol from the Alexa Top 5000 sites.

For the VPN encrypted traffic classification task, the ISCX-VPN dataset [33] was used, which was captured by the Canadian Institute for Cybersecurity. The dataset contains six types of communication applications, covering both VPN and non-VPN traffic. To further assess the model's performance at different service and application levels, the dataset was subdivided into two datasets based on service type and application type: the ISCX-VPN-Service dataset [33], which includes 12 service categories, and the ISCX-VPN-App dataset [33], which includes 17 application categories. Through experiments on these subdivided datasets, we can more comprehensively test the adaptability of the CLA-BERT model in different traffic scenarios.

To mitigate the bias caused by data imbalance in the aforementioned dataset, we randomly selected 5000 samples from each category. For categories with fewer than 5000 samples, the sample size remained unchanged. Since the majority of categories have more than 5000 samples, and only a small number of categories have fewer than 5000 samples, undersampling or oversampling methods were not applied to handle these minority categories. Maintaining the current sample sizes helps avoid excessive artificial intervention in the minority categories, thereby ensuring the authenticity and representativeness of the dataset. Finally, the dataset was divided into training, validation, and test sets in an 8:1:1 ratio.
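The per-category cap and the 8:1:1 split can be sketched as follows. `cap_and_split` is a hypothetical helper; the fixed random seed and the choice to shuffle all kept samples together before splitting (rather than splitting each category separately) are assumptions, since the text does not describe these details.

```python
import random
from collections import defaultdict
from typing import List, Sequence, Tuple

Sample = Tuple[object, str]   # (item, category label)


def cap_and_split(samples: Sequence[Sample], cap: int = 5000,
                  seed: int = 0) -> Tuple[List[Sample], List[Sample], List[Sample]]:
    """Keep at most `cap` random samples per category, then split 8:1:1."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append((item, label))

    kept: List[Sample] = []
    for label in sorted(by_label):
        group = by_label[label]
        # Categories at or below the cap are left unchanged.
        kept.extend(rng.sample(group, cap) if len(group) > cap else group)

    rng.shuffle(kept)
    n_train = len(kept) * 8 // 10
    n_val = len(kept) // 10
    train = kept[:n_train]
    val = kept[n_train:n_train + n_val]
    test = kept[n_train + n_val:]
    return train, val, test
```

A category with 30 samples and a cap of 15 contributes 15 samples, while a category with 5 samples contributes all 5.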

5.3. Evaluation Metrics

To evaluate the performance of the CLA-BERT model in different encrypted traffic classification tasks, we employed four evaluation metrics: accuracy, precision, recall, and F1 score. These metrics assess the model's classification performance on encrypted traffic from different perspectives. The calculation formulas are as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where $TP$ represents the number of samples correctly classified as the target category, $FP$ represents the number of samples incorrectly classified as the target category, $TN$ represents the number of samples correctly classified as non-target categories, and $FN$ represents the number of samples incorrectly classified as non-target categories.
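For one target category, the four metrics can be computed directly from the true and predicted labels. The helper below is a hypothetical sketch; how the per-category values are averaged across categories is not specified in the text and is left out.

```python
from typing import Dict, Sequence


def category_metrics(y_true: Sequence[str], y_pred: Sequence[str],
                     target: str) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for one target category."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == target and p == target)
    fp = sum(1 for t, p in pairs if t != target and p == target)
    fn = sum(1 for t, p in pairs if t == target and p != target)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

For instance, if one of two target samples is found and nothing else is mislabeled as the target, precision is 1.0, recall is 0.5, and F1 is 2/3.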

5.4. Hyperparameter Experiment

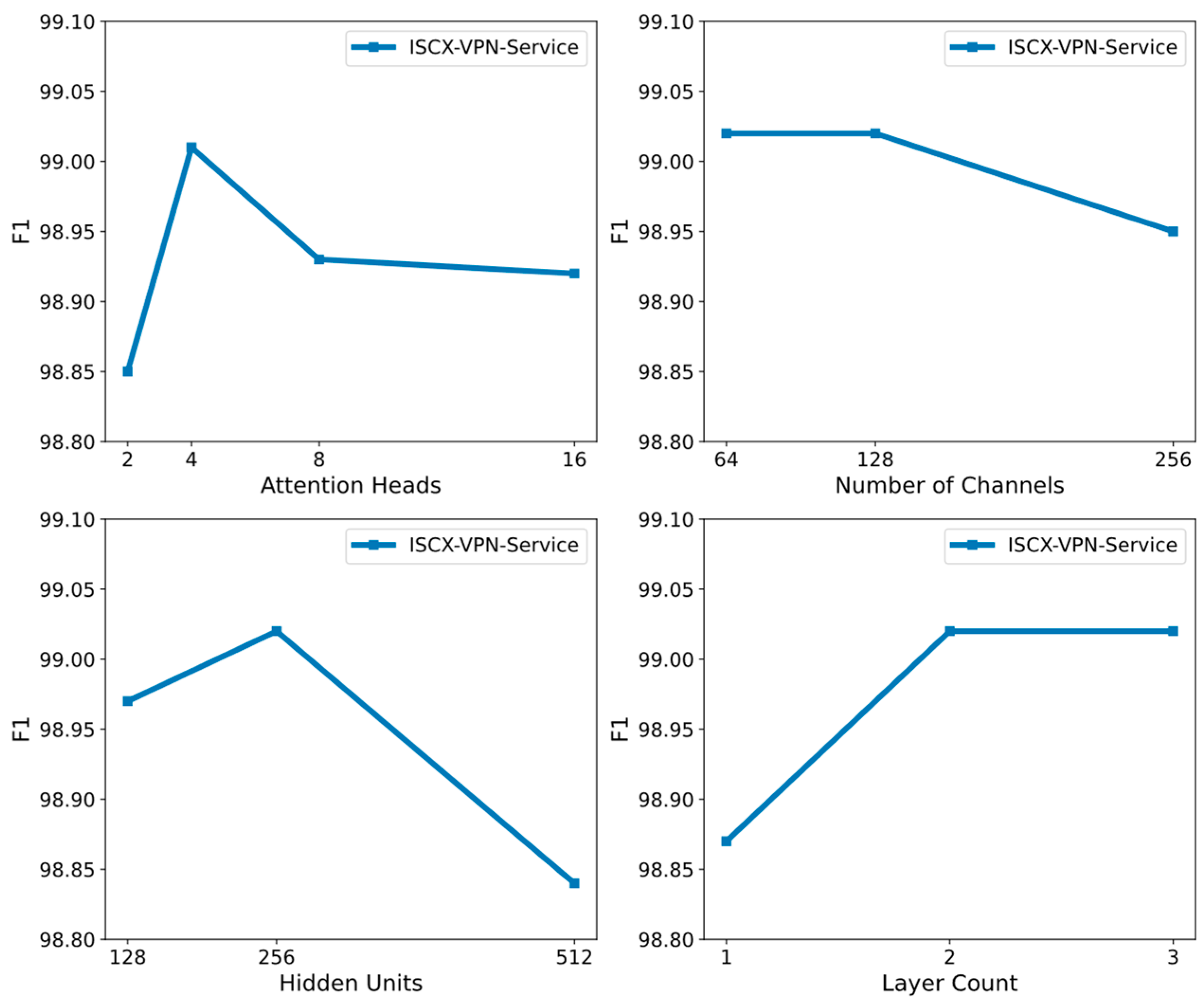

To investigate the impact of hyperparameters on model performance, we conducted multiple experiments on the ISCX-VPN-Service dataset, adjusting four key hyperparameters: the number of attention heads, the number of CNN channels, the number of BiLSTM hidden units, and the number of BiLSTM layers. The goal of these adjustments is to optimize the model’s performance in encrypted traffic classification tasks.

The experimental results (see Table 3 and Figure 5) show that using four attention heads yields the best model performance, with an accuracy of 0.9902. Increasing the number of attention heads allows the model to capture more features and relationships, thereby enhancing its representational ability. However, a larger number of attention heads (such as 8 or 16) does not lead to significant performance improvement and may slightly reduce performance due to the introduction of redundant information and computational overhead. For the number of CNN channels, both 64 and 128 channels performed well. Increasing the number of channels helps extract more features, but the computational burden also increases, so 64 channels provide a good balance between performance and computational efficiency. In terms of BiLSTM hidden units, 256 units provided the best performance, balancing high accuracy and recall with reasonable computational cost. Regarding the number of BiLSTM layers, the model performance gradually improved as the number of layers increased from 1 to 3. Considering the computational cost, 2 BiLSTM layers are the most suitable choice.

In summary, we selected four attention heads, 64 CNN channels, 256 BiLSTM hidden units, and a 2-layer BiLSTM configuration, which balances computational efficiency and classification performance.
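For reference, the settings reported in Sections 4.2.1, 4.3, 5.1, and 5.4 can be gathered in one configuration object. The class and field names below are hypothetical and do not correspond to options of the UER framework; only the values come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClaBertConfig:
    # BERT encoder and training settings
    max_seq_len: int = 128
    hidden_size: int = 768
    num_transformer_layers: int = 12
    batch_size: int = 64
    learning_rate: float = 2e-5
    epochs: int = 16
    optimizer: str = "adam"
    # Hyperparameters selected in the hyperparameter experiment
    attention_heads: int = 4
    cnn_channels: int = 64
    bilstm_hidden_units: int = 256
    bilstm_layers: int = 2

    def bilstm_output_size(self) -> int:
        """Width of the BiLSTM output: forward and backward states concatenated."""
        return 2 * self.bilstm_hidden_units
```

Keeping the values in a frozen dataclass makes it harder to change a setting by accident between runs.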

5.5. Ablation Experiment

To systematically evaluate the contribution of each component to the model's performance, this study designs an ablation experiment on the ISCX-VPN-App dataset. Stratified random sampling is used to select 300 samples from each category to construct the training set, ensuring a balanced category distribution. Four model configurations are compared: (1) removing the CNN module (w/o CNN); (2) removing the BiLSTM module (w/o BiLSTM); (3) removing both the CNN and BiLSTM modules (w/o C&L); (4) the complete CLA-BERT model (default). All other settings are held fixed so that differences in performance can be attributed to the removed modules.

The experimental results are shown in Table 4. After removing the CNN component, the model's performance slightly decreases, with an accuracy of 0.9798. After removing the BiLSTM component, the model exhibits a decrease in accuracy, precision, and recall, with the F1 score dropping to 0.9688. In the configuration where both CNN and BiLSTM components are removed (w/o C&L), the model's performance further deteriorates, with the F1 score at 0.9563. This indicates that the CNN and BiLSTM components play a crucial role in improving the model's performance.

Finally, the complete CLA-BERT model achieves the best performance, with an accuracy of 0.9824. In summary, the CNN and BiLSTM modules both contribute to the encrypted traffic classification task: removing either one lowers performance, and removing both lowers it further, which supports keeping both modules in the byte-level feature extraction branch.

5.6. Comparative Experiment

In this section, we evaluate the performance of our proposed CLA-BERT method through comparative experiments on three different traffic classification datasets: ISCX-VPN-Service, ISCX-VPN-App, and CSTNET-TLS 1.3. We selected 14 existing traffic classification methods as baselines for comparison with CLA-BERT. These methods can be categorized into the following four groups: fingerprint-based methods (e.g., FlowPrint [15]); statistical feature-based methods (e.g., AppScanner [16], CUMUL [34], BIND [19], K-fp [35], and DF [36]); deep learning-based methods (e.g., FS-Net [22], GraphDApp [37], TSCRNN [23], Deeppacket [20], and ICLSTM [25]); and BERT-based methods (e.g., PERT [11] and ET-BERT [6]). Through these comparative experiments, we comprehensively assess the performance of CLA-BERT on different datasets.

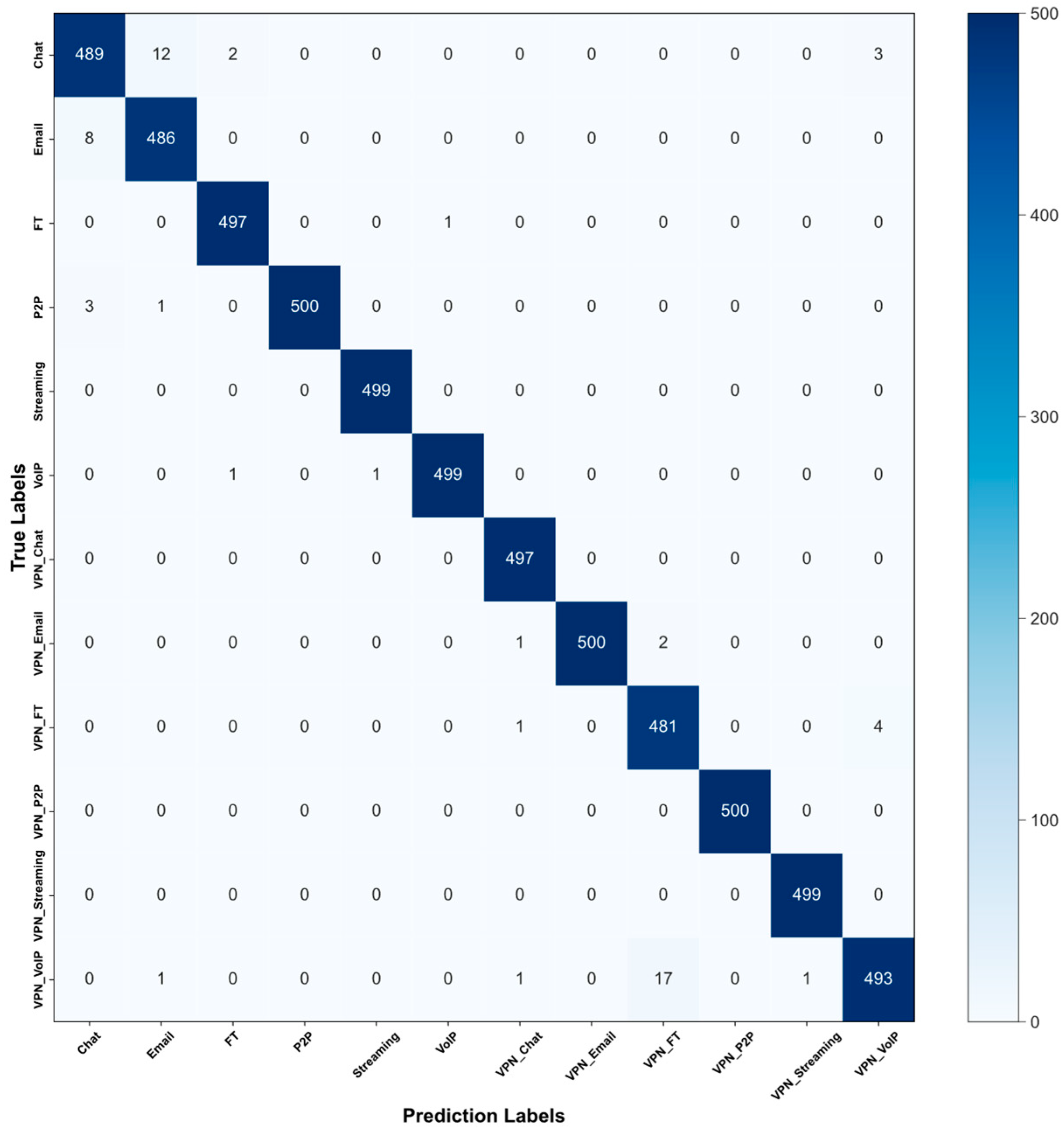

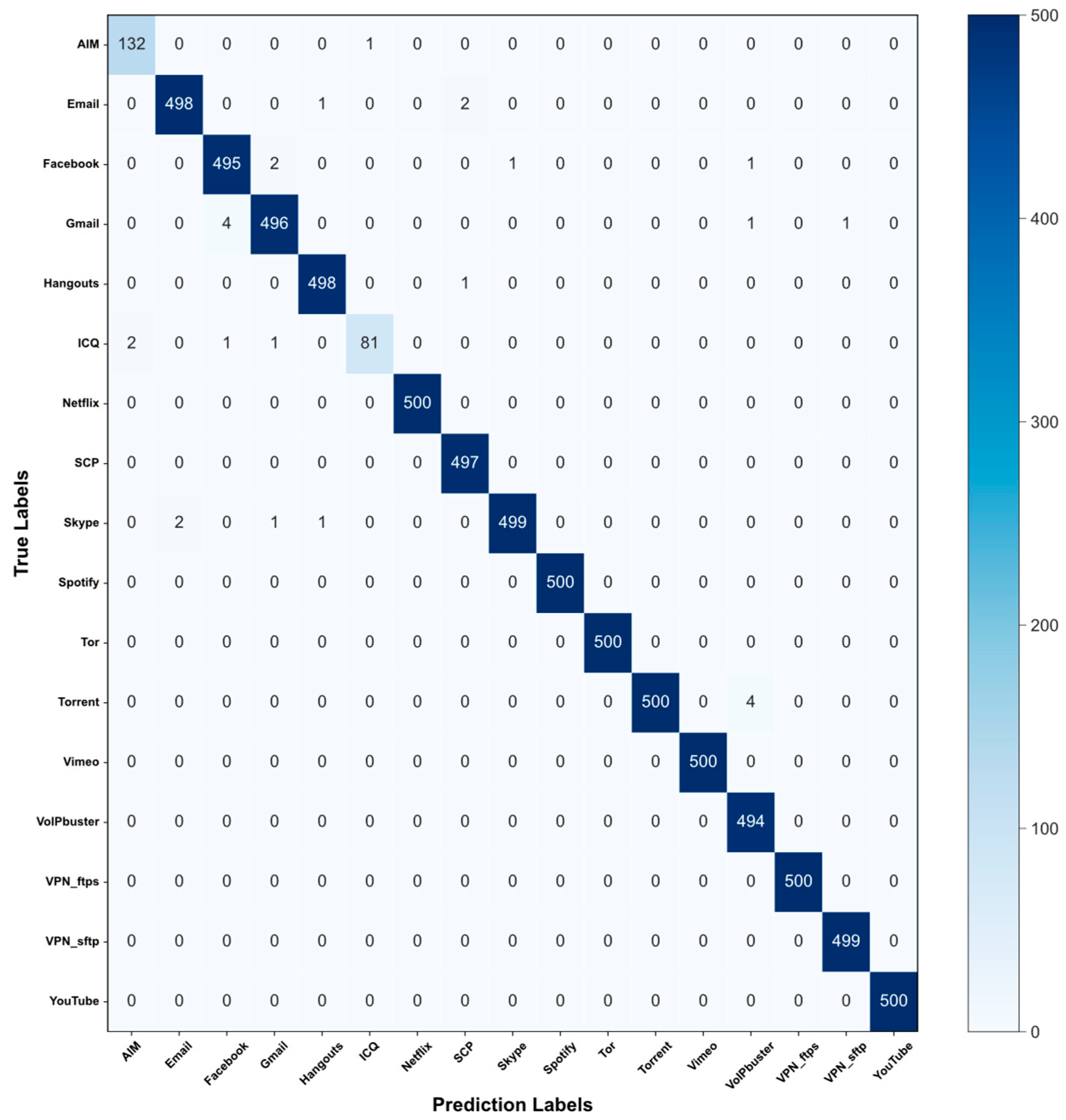

The experimental results (see Tables 5 and 6 and Figures 6 and 7) show that on the ISCX-VPN-Service, ISCX-VPN-App, and CSTNET-TLS 1.3 datasets, CLA-BERT outperforms existing methods on all evaluation metrics. Specifically, CLA-BERT achieves F1 scores of 99.02%, 99.49%, and 97.78% on these three datasets, respectively. For example, compared to traditional statistical feature-based methods (such as AppScanner and BIND), CLA-BERT performs significantly better. On the ISCX-VPN-Service dataset, CLA-BERT's accuracy is 27.2% and 23.68% higher than that of AppScanner and BIND, respectively. On the ISCX-VPN-App dataset, CLA-BERT improves accuracy by 2.07% compared to the best-performing deep learning method, Deeppacket. This result shows that CLA-BERT performs exceptionally well in VPN-encrypted traffic classification tasks.

On the CSTNET-TLS 1.3 dataset, CLA-BERT was compared with BERT-based traffic classification methods (e.g., PERT and ET-BERT), and the results show that CLA-BERT improves accuracy by 8.6% and 0.38% over PERT and ET-BERT, respectively. This demonstrates that CLA-BERT, compared to using BERT alone, more effectively integrates packet-level and byte-level features, thereby enhancing the performance of encrypted traffic classification.

5.7. Small-Sample Experiment

To validate the effectiveness and robustness of CLA-BERT in small-sample scenarios, we designed comparative experiments with different data proportions on the ISCX-VPN-Service dataset. The number of samples in each category was set to 1000, and random selections of 70%, 50%, 30%, 10%, and 5% of the samples were used for the small-sample experiments. This design allows us to systematically evaluate the performance changes in CLA-BERT as the data volume decreases.

The comparison results in Table 7 and Figure 8 show that CLA-BERT maintains relatively stable performance as the data volume decreases. Specifically, with 5%, 10%, 30%, 50%, and 70% of the data, CLA-BERT achieves F1 scores of 93.51%, 94.79%, 97.10%, 97.78%, and 98.09%, respectively. Reducing the data from 70% to 5% therefore lowers the F1 score by only 4.58 percentage points (from 98.09% to 93.51%). Even with a very small dataset (5%), CLA-BERT still maintains a high F1 score, demonstrating its robustness in small-sample environments.
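To make the size of the degradation concrete, the short sketch below computes, for each data proportion, the absolute F1 drop relative to the 70% setting and the share of that F1 score that is retained. It uses only the F1 values quoted above; the variable names are illustrative.

```python
# F1 scores (%) quoted in the text, keyed by the fraction of data used
f1_by_fraction = {0.05: 93.51, 0.10: 94.79, 0.30: 97.10, 0.50: 97.78, 0.70: 98.09}

baseline = f1_by_fraction[0.70]
for fraction, f1 in sorted(f1_by_fraction.items(), reverse=True):
    drop = round(baseline - f1, 2)            # absolute drop in percentage points
    retained = round(100 * f1 / baseline, 2)  # share of the 70% F1 that is kept
    print(f"{fraction:>4.0%} of data: F1={f1:.2f}%  drop={drop:.2f}  retained={retained:.2f}%")

total_drop = round(baseline - f1_by_fraction[0.05], 2)
```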

CLA-BERT can therefore address the challenges of small-sample encrypted traffic classification, which supports its use in real-world tasks where labeled encrypted traffic is scarce.

6. Conclusions

This paper proposes a novel encrypted traffic classification method, CLA-BERT, to address the issues of single-feature dependence and insufficient exploration of deep packet-level characteristics in existing methods. The approach combines the advantages of packet-level and byte-level features, aiming to improve the performance of encrypted traffic classification.

The main conclusions of this study are summarized as follows:

- (1) Feature Extraction and Fusion Strategy: This study proposes a multi-level feature extraction and fusion strategy, which effectively enhances the representation capability of encrypted traffic. We extract packet-level semantic features using the BERT model, combine convolutional neural networks (CNNs) to extract byte-level local features, and introduce a Bidirectional Long Short-Term Memory network (BiLSTM) to capture sequential dependencies. This multi-granularity feature extraction framework deeply explores both packet-level and byte-level information, overcoming the limitations of traditional single-feature extraction methods. Furthermore, a multi-head attention mechanism is employed to adaptively weight and fuse packet-level and byte-level features, significantly improving the overall feature representation capability.

- (2) Experimental Design and Performance Evaluation: To comprehensively evaluate the performance of the CLA-BERT model, we conducted multiple experiments on two publicly available datasets, ISCX-VPN and CSTNET-TLS 1.3. The experiments cover three challenging encrypted traffic classification tasks: VPN encryption service classification, VPN encryption application classification, and TLS 1.3 encryption application classification. The experimental results show that CLA-BERT performs well in these tasks, with accuracy rates of 99.02%, 99.65%, and 97.78%, respectively, outperforming existing mainstream methods. These results validate the proposed method’s capability in handling complex encrypted traffic classification tasks.

- (3) Model Analysis and Robustness Verification: To further analyze the internal mechanism of the CLA-BERT model, we conducted ablation experiments and robustness tests. The ablation experiments show that the BERT feature extraction module, CNN-BiLSTM feature extraction module, and multi-head attention fusion module all contribute to the model’s performance, demonstrating the effectiveness and rationality of the model architecture. Additionally, we designed small-sample experiments to verify the robustness of the model in data-scarce situations. The results show that even with fewer training samples, CLA-BERT can maintain stable classification performance. Specifically, with 5%, 10%, 30%, 50%, and 70% of the data, CLA-BERT’s F1 scores are 93.51%, 94.79%, 97.10%, 97.78%, and 98.09%, respectively.
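As an illustration of the fusion idea summarized in conclusion (1), the sketch below applies multi-head scaled dot-product self-attention to a packet-level and a byte-level feature vector. It is a simplified toy under stated assumptions, not the CLA-BERT implementation: the query, key, and value projections are identity maps (a trained model would learn them), the two attended outputs are averaged into one vector, and the function name `attention_fuse` and the input values are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def attention_fuse(packet_feat, byte_feat, num_heads=2):
    """Fuse two equal-length feature vectors with multi-head self-attention.

    Assumption: identity Q/K/V projections instead of learned weights.
    """
    dim = len(packet_feat)
    assert dim == len(byte_feat) and dim % num_heads == 0
    head_dim = dim // num_heads
    fused = []
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        # Treat the two feature slices as a sequence of two tokens
        tokens = [packet_feat[lo:hi], byte_feat[lo:hi]]
        outputs = []
        for query in tokens:
            weights = softmax([dot(query, key) / math.sqrt(head_dim) for key in tokens])
            outputs.append([sum(w * tok[i] for w, tok in zip(weights, tokens))
                            for i in range(head_dim)])
        # Average the two attended tokens into one fused slice for this head
        fused.extend((a + b) / 2 for a, b in zip(*outputs))
    return fused

packet = [0.2, 0.4, 0.1, 0.3]  # hypothetical packet-level features
byte = [0.5, 0.1, 0.3, 0.2]    # hypothetical byte-level features
fused = attention_fuse(packet, byte)
print(fused)
```

Because each attended output is a softmax-weighted combination of the two inputs, every fused value lies between the corresponding packet-level and byte-level values; the attention weights decide how far it leans toward either source.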

In future research, we plan to further expand the application scope of CLA-BERT and extend it to a wider range of encrypted traffic classification tasks (such as different encryption protocols) to comprehensively evaluate its generalization capability and robustness. Additionally, we will introduce lightweight optimization techniques, such as model pruning and low-rank decomposition, to enhance computational efficiency and reduce resource consumption, thereby improving the model’s applicability in real-world scenarios.