Abstract

The Gram–Schmidt process (GSP) plays an important role in algebra. It provides a theoretical and practical approach for generating an orthonormal basis, QR decomposition, unitary matrices, etc. It also facilitates applications in communication, machine learning, feature extraction, etc. The typical GSP is self-referential, while the non-self-referential GSP is based on the Gram determinant, which has exponential complexity. The motivation for this article is to find a way to convert a set of linearly independent vectors into a set of orthogonal vectors via a non-self-referential GSP (NsrGSP). Our approach is to derive a method that uses the recursive property of the standard GSP to obtain an NsrGSP. The individual orthogonal vector form we obtain is , and the collective orthogonal vectors, in a matrix form, are . This approach could reduce the exponential computational complexity to a polynomial one. It also has a neat representation. We also apply our approach to a classification problem based on real data. In this experiment, the classification produced by our method is more persuasive than those of the other methods we compare against.

MSC:

65F25; 08-02

1. Introduction

The Gram–Schmidt method plays an important role in both theory and practice. It has been applied to construct orthogonal elements in some algebraic systems [1], in signal processing [2], and in QR decomposition [3,4] (although there are other approaches to the QR problem [5]). In addition, it can be applied to machine learning and feature extraction [6,7] via a modified Gram–Schmidt method [8]. However, one would like to have a non-self-referential form of the GSP, and the typical one is a formal determinant, whose computational complexity is exponentially high and whose form is not succinct. There is a research gap in bridging the typical (self-referential) and the non-self-referential GSP. This motivates our search for a non-self-referential method that reduces the complexity and improves the presentation. The novelty of this article lies in finding the internal recursive relation between the input vectors U and the converted orthogonal vectors V, in hypothesizing the non-self-referential form, and in proving that the hypothesis is correct. Let $\mathbb{R}$ denote the set of real numbers. Let $(\mathcal{V}, \langle \cdot, \cdot \rangle)$ denote an inner product space, where the inner product satisfies $\langle x, y \rangle = \langle y, x \rangle$, $\langle x + y, z \rangle = \langle x, z \rangle + \langle y, z \rangle$, $\langle a x, y \rangle = a \langle x, y \rangle$, and $\langle x, x \rangle \geq 0$ for all $x, y, z \in \mathcal{V}$ and all $a \in \mathbb{R}$. Let $U = \{u_1, u_2, \ldots, u_n\}$ be a set of independent vectors, i.e., U is a basis. Then, one associates $V = \{v_1, v_2, \ldots, v_n\}$ by the Gram–Schmidt method as follows:

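- $v_1 = u_1$;

- $v_2 = u_2 - \frac{\langle u_2, v_1 \rangle}{\langle v_1, v_1 \rangle} v_1$;

- $v_3 = u_3 - \frac{\langle u_3, v_1 \rangle}{\langle v_1, v_1 \rangle} v_1 - \frac{\langle u_3, v_2 \rangle}{\langle v_2, v_2 \rangle} v_2$;

- ⋮

- $v_k = u_k - \sum_{j=1}^{k-1} \frac{\langle u_k, v_j \rangle}{\langle v_j, v_j \rangle} v_j$;

- $v_{k+1} = u_{k+1} - \sum_{j=1}^{k} \frac{\langle u_{k+1}, v_j \rangle}{\langle v_j, v_j \rangle} v_j$;

- ⋮

- $v_n = u_n - \sum_{j=1}^{n-1} \frac{\langle u_n, v_j \rangle}{\langle v_j, v_j \rangle} v_j$.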

This can also be represented in a matrix form:

This is a typical self-referential (or where is explicitly dependent on ) version of the Gram–Schmidt process (GSP). For the non-self-referential version of the Gram–Schmidt process (see [9], Chapter 7), one often resorts to the Gram determinant in the following formula:

where stands for the formal determinant and is defined by the normalized , where by matrix and is the determinant of . For example, suppose ; ; . Then, the normalized is ; the normalized is ; and the normalized is the normalization of the formal determinant , i.e., the normalized is . This computation, however, has exponential complexity when the determinant is expanded over permutations. In order to reduce this complexity and to make the process easier to follow, we kept track of all the indices of the typical GSP and found the pattern for an inductive computation. Our approach, i.e., the NsrGSP (non-self-referential Gram–Schmidt process), shows (see Theorems 1 and 3 for more details) the following: , where ; ; and where and .
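The self-referential recursion described in this section can be illustrated with a minimal Python sketch. It assumes the standard Euclidean inner product and the unnormalized form of the process; the function names and the toy input are chosen for this illustration only and do not come from the article or its repository.

```python
from typing import List

Vector = List[float]


def dot(x: Vector, y: Vector) -> float:
    """Standard Euclidean inner product (an assumption of this sketch)."""
    return sum(a * b for a, b in zip(x, y))


def classical_gram_schmidt(U: List[Vector]) -> List[Vector]:
    """Return orthogonal (not normalized) vectors v_1, ..., v_n.

    Each v_k is built from u_k and the previously computed v_1, ..., v_{k-1},
    which is what makes this form self-referential.
    """
    V: List[Vector] = []
    for u in U:
        v = list(u)
        for w in V:
            coeff = dot(u, w) / dot(w, w)  # projection coefficient of u on w
            v = [vi - coeff * wi for vi, wi in zip(v, w)]
        V.append(v)
    return V


# Toy input chosen for this sketch; it is not data from the article.
U = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
V = classical_gram_schmidt(U)
```

Every later vector in `V` depends on the earlier entries of `V`, which is the dependence the non-self-referential form is meant to remove.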

2. Theories and Methods

To show each , for all , it suffices to show .

Proposition 1.

For all .

Proof.

If , then . Assume for all . Now, let be arbitrary such that . If , then it is true by the assumption. Now, let and . Then, , since for all by the induction assumption. □

Since , we can focus on finding the pattern of the latter part. A formal definition of this part is given in Definition 1. Thus, it could be written as

Let denote all the connected paths, whose nodes are all natural numbers and whose edges consist of decreasing natural numbers, from p to q, where and . For example, . Indeed, is unique, which could be verified by the following mathematical induction: suppose is unique; then consists of two parts: the paths from to q that do not go through the node p, and the paths from to q that go through the node p. Since the two sets are disjoint and both are unique by the induction assumption, is unique and the size is . Let denote the sum of the -indexed multiplicative values, i.e.,

This definition is generalized in Definition 2.
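The path structure described above can be explored with a small Python sketch. It enumerates every strictly decreasing node sequence from p to q and sums the products of edge weights over all such paths. The function names and the `weight` callback are hypothetical, introduced only for illustration; the count checked here, 2^(p-q-1) paths for p > q, follows from choosing any subset of the intermediate nodes.

```python
from typing import Callable, List, Tuple

Path = Tuple[int, ...]


def decreasing_paths(p: int, q: int) -> List[Path]:
    """All strictly decreasing node sequences that start at p and end at q."""
    if p < q:
        raise ValueError("p must be at least q")
    if p == q:
        return [(p,)]
    paths: List[Path] = []
    for nxt in range(p - 1, q - 1, -1):  # first hop goes to any lower node >= q
        for tail in decreasing_paths(nxt, q):
            paths.append((p,) + tail)
    return paths


def path_value_sum(p: int, q: int, weight: Callable[[int, int], float]) -> float:
    """Sum, over all decreasing paths from p to q, of the product of edge weights.

    `weight(a, b)` is a hypothetical stand-in for the value attached to the
    edge from node a to node b.
    """
    total = 0.0
    for path in decreasing_paths(p, q):
        product = 1.0
        for a, b in zip(path, path[1:]):
            product *= weight(a, b)
        total += product
    return total


paths_4_to_1 = decreasing_paths(4, 1)
```

With every edge weight set to 1, `path_value_sum` simply counts the paths, which gives a quick consistency check on the enumeration.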

Definition 1.

(Projection value)

If the meaning could be understood from the context, then is written as .

Definition 2.

(β multiplicative value) The β multiplicative value from node k to node i is defined by , if and , if .

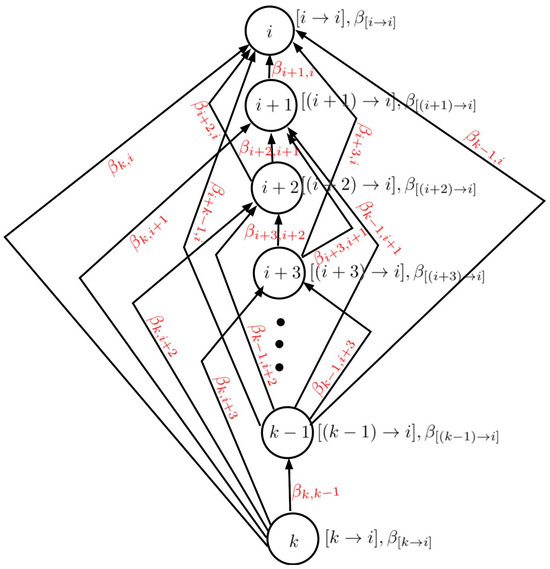

A tree-like expression of these two definitions could be referred to, as shown in Figure 1 and Figure 2.

Figure 1.

Beta directional tree: we name this structure a beta directional tree in the sense that all the directed edges are only feasible from a higher-indexed node to a lower-indexed node. There are nodes, or . Each node p is associated with two quantities: , the feasible paths from p to i and ; and the multiplicative values of all the -indexed values. The edge from p to q () is assigned the weight , which is defined as .

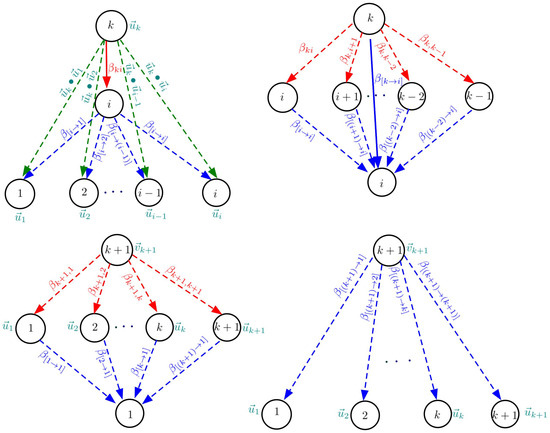

Figure 2.

Structures of and : the (top-left) figure is a visual description of Proposition 3 . The (top-right) figure depicts the matrix multiplication in Definition 2: . The (bottom) figures depict the result from Lemma 2: .

Definition 3.

(β multiplicative vector) The set of β multiplicative distributive values from node k to nodes is defined by .

Definition 4.

(Projection vector) .

Lemma 1.

.

Proof.

From Definitions 2 and 3, we have

From this definition, we could have the following matrix representation:

Let be a vector with length n. Let . Let Let us investigate some terms of and then find their general non-self-referential expressions.

- ;

- ;

- ;

This process could keep on going. The main purpose of this article is to find out the pattern for any arbitrary terms and put them into succinct forms.

Definition 5.

(Multiplicative path) Suppose the j’th path is denoted by . Define the beta value associated with the j’th path by .

Definition 6.

Suppose the set of all paths is denoted by . Define its associated beta value by .

Next, we extend these cases and form the following claims and lemmas:

Proposition 2.

.

Proof.

The set of all the paths from node to node i consists of the paths from to each of the other nodes, combined with the existing paths from each such node to the node i. □

Corollary 1.

The number of paths from node n to node i, or , is for all , i.e., there are paths from node n to node i.

Lemma 2.

(Non-self-referential representation) , where .

Proof.

Let us show this by mathematical induction. Assume is true for all . By Equation (2) and the preceding assumption, one has

Then, the result follows immediately from Definition 2. □

Definition 7.

1. ;

2. , where and are both p-by-q matrices and where denotes the j’th column of matrix ;

3. , where and the square matrix , whose elements are all non-zero.

4. For any vector , we use to denote

Theorem 1.

Proof.

It follows immediately from Lemma 2 and Definition 7. □

Lemma 3.

The k-by-k matrix is .

For example, . Moreover, . For the tree structure, see Figure 1.

Proposition 3.

.

Proof.

By Equation (1) and Lemma 2, we have . □

The visual depiction of this proposition is also presented in the top-left figure in Figure 2.

Corollary 2.

1. ;

2. ;

3. ;

4. .

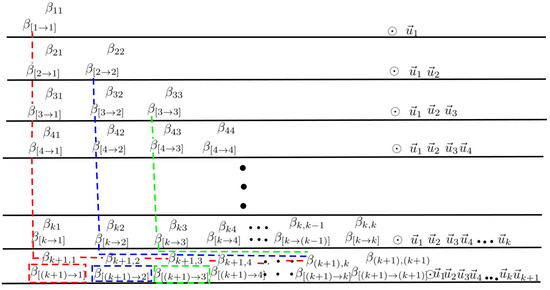

The interaction between them could be seen in Figure 3.

Figure 3.

The inductive Gram–Schmidt Table for coefficients: given the premises ; and , this table tabulates how to inductively find the results of ; and . Inductively, the following then applies: . In the table, they are represented by notation ⊙. Here, let us demonstrate how to resolve some of the terms of the coefficients: ; ; .

Lemma 4.

Proof.

In general, one has the following:

□

Lemma 5.

.

Proof.

From the definition of and Definition 7, one has

. Then, by Theorem 1, the result follows immediately. □

Theorem 2.

.

Proof.

By Lemmas 1, 3, 4, and 5, we have the following:

Observe that is a k-by-k square matrix.

Theorem 3.

(Non-self-referential GSP recursion) The set of GSP orthogonal vectors is calculated by , where and .

Proof.

This follows immediately from Theorems 1 and 2. □
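To make the idea of a non-self-referential form concrete, the following Python sketch expresses each orthogonal vector directly as a linear combination of the input vectors, using only the inner products between the inputs. It is a sketch of one standard way to do this, under the assumption of the Euclidean inner product; the names `gram_matrix`, `nsr_coefficients`, and `combine` are hypothetical, and the coefficient matrix `C` is not claimed to coincide with the article's notation.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dot(x: Vector, y: Vector) -> float:
    return sum(a * b for a, b in zip(x, y))


def gram_matrix(U: List[Vector]) -> Matrix:
    """G[i][j] = <u_i, u_j> for the input vectors."""
    return [[dot(a, b) for b in U] for a in U]


def nsr_coefficients(G: Matrix) -> Matrix:
    """Unit lower-triangular C such that v_k = sum_j C[k][j] * u_j.

    Only inner products of the inputs (the Gram matrix G) are used.
    """
    n = len(G)
    C = [[0.0] * n for _ in range(n)]
    for k in range(n):
        C[k][k] = 1.0
        for i in range(k):
            # <u_k, v_i> expanded through the coefficients of v_i
            num = sum(C[i][j] * G[k][j] for j in range(i + 1))
            # <v_i, v_i> equals <u_i, v_i> because v_i is orthogonal to v_1..v_{i-1}
            den = sum(C[i][j] * G[i][j] for j in range(i + 1))
            r = num / den
            for j in range(i + 1):
                C[k][j] -= r * C[i][j]
    return C


def combine(C: Matrix, U: List[Vector]) -> List[Vector]:
    """Evaluate v_k = sum_j C[k][j] * u_j for every k."""
    dim = len(U[0])
    return [[sum(C[k][j] * U[j][d] for j in range(len(U))) for d in range(dim)]
            for k in range(len(U))]


# Toy input chosen for this sketch; it is not data from the article.
U = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
C = nsr_coefficients(gram_matrix(U))
V = combine(C, U)
```

Once `C` is known, each row of `V` is read off from the inputs alone, without referring to the other orthogonal vectors.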

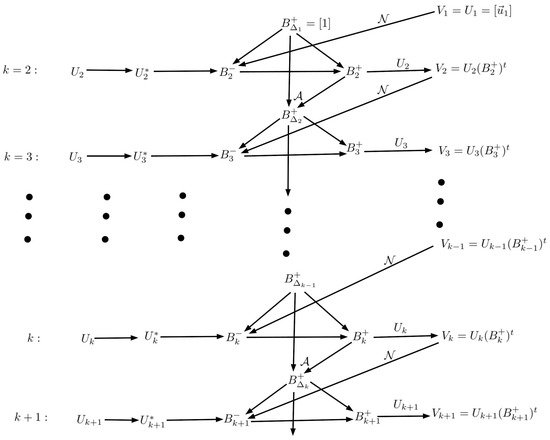

The inductive steps are illustrated in Figure 4.

Figure 4.

Inductive procedures for calculating : this figure demonstrates the inductive output of non-self-referential representations of orthogonal vectors given the input vector-based matrix . In the figure, ; .

Example 1.

Suppose .

Then, and . Other terms are found in the following inductive steps:

- 1.

- ; ; ;; ;

- 2.

- ; ;;; ;

- 3.

- ; ; ;;

- 4.

- ; ; ; .

Observe that for all .

3. Application One

Since the obtained orthonormal bases V are completely dependent on the given input vectors U, we could reduce such dependence. Let denote the set of all permutations of unit , i.e., , where each denotes the j’th permutation of and each denotes the unit vector of . Let denote the set of all corresponding orthonormal bases with respect to U, i.e., , where denotes the obtained orthonormal basis from , i.e., . Let us represent and by tensor products, i.e., and . We could set up some criteria to locate some of the optimal bases among , for example, a natural one based on distances between an unordered . Thus, the candidate basis is

where and is defined by the Euclidean norm, i.e.,

Observe that .

Let us take , for example, and render a precise decision procedure. Let ; ; and (or ). Then, the following applies:

Next,

- ;

- ;

- ;

- .

In order to decide which GSP result is much more acceptable under the uncertainty (or permutations), it suffices to calculate the value of

If , then we should choose the optimal GSP result to be , and, if , then we should choose to be the optimal one.

This result could be captured by the following statement:

where .
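The selection procedure over permutations can be sketched in Python as follows. The scoring rule used here is a hypothetical stand-in, not the article's criterion: each candidate basis is scored by the sum of its Euclidean distances to all other candidate bases, with the vectors inside each basis sorted so that their order is ignored. All function names and the toy input are assumptions made for this illustration.

```python
import math
from itertools import permutations
from typing import List, Tuple

Vector = List[float]


def dot(x: Vector, y: Vector) -> float:
    return sum(a * b for a, b in zip(x, y))


def orthonormal_basis(U: List[Vector]) -> List[Vector]:
    """Classical Gram-Schmidt followed by normalization of each vector."""
    basis: List[Vector] = []
    for u in U:
        v = list(u)
        for w in basis:  # w already has unit length
            c = dot(u, w)
            v = [vi - c * wi for vi, wi in zip(v, w)]
        norm = math.sqrt(dot(v, v))
        basis.append([vi / norm for vi in v])
    return basis


def unordered_distance(A: List[Vector], B: List[Vector]) -> float:
    """Hypothetical distance: Euclidean norm after sorting the vectors of each basis."""
    flat_a = [x for vec in sorted(A) for x in vec]
    flat_b = [x for vec in sorted(B) for x in vec]
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(flat_a, flat_b)))


def select_basis(U: List[Vector]) -> Tuple[Tuple[int, ...], List[Vector], List[float]]:
    """Run the process on every ordering of U and keep the lowest-scoring basis."""
    orders = list(permutations(range(len(U))))
    bases = [orthonormal_basis([U[i] for i in order]) for order in orders]
    scores = [sum(unordered_distance(b, other) for other in bases) for b in bases]
    best = min(range(len(bases)), key=scores.__getitem__)
    return orders[best], bases[best], scores


# Toy input chosen for this sketch; it is not data from the article.
U = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
best_order, best_basis, scores = select_basis(U)
```

The number of candidates grows as n!, so this exhaustive search is only practical for a small number of input vectors.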

Let us take the U in Example 1 and demonstrate how to find the optimal basis (or bases).

After a sequence of numerical computations in R 4.2.2 (https://github.com/raymingchen/Gram-Schimdt-Process.git (accessed on 10 February 2025)), we obtain the results according to Equation (4) (see Figure 5): , and the corresponding input (permuted) vector is

Figure 5.

Computed results of . The minimal deviation lies in the 83rd permutation, and the value is , i.e., the optimal GSP result is .

A somewhat related optimization problem is to minimize the distance between the input vectors and the converted orthogonal vectors. Readers interested in extending our method to such a problem could also refer to LLL, a lattice basis reduction algorithm [10,11].

4. Application Two

In this section, we show how to apply our non-self-referential GSP approach to a classification problem. The targets are countries, and the criteria are the governance indicators. The goal is to classify countries with similar governance systems into the same category. In our case, there are 6 categories into which the countries are classified. The procedure is as follows:

- Collect time-series data (from Year 2013 to Year 2023) for 202 countries (see Numbered 202 countries.pdf in https://github.com/raymingchen/Gram-Schimdt-Process/blob/ae4e4e7d98059e4dc1cd08b3591b884d4acdc13a/Numbered%20202%20countries.pdf (accessed on 10 February 2025)) from Data Bank. The source data are further processed to fit our purpose and saved as data.csv (https://github.com/raymingchen/Gram-Schimdt-Process/blob/920de0ef528c06058cf0178b2b1718d99862c254/data.csv (accessed on 10 February 2025)). There are six indicators to be studied:

- () Control of Corruption: Estimate;

- () Government Effectiveness: Estimate;

- () Political Stability and Absence of Violence/Terrorism: Estimate;

- () Regulatory Quality: Estimate;

- () Rule of Law: Estimate;

- () Voice and Accountability: Estimate.

For the source data, the meaning of the indicators, and how they are collected/computed in the database, please refer to Worldwide Governance Indicators, in particular its Metadata, or https://databank.worldbank.org/source/worldwide-governance-indicators?l=en# (accessed on 10 February 2025). We could use the set of column vectors to denote the set of all the time-series data collected, where each column vector is a 6-by-1 column vector (matrix) recording the values of for country j at Year t.

- Single out 6 representative countries as benchmarks: China (), France (), Germany (), India (), Russian Federation (), USA (). For the exact content, see 6 representatives.pdf (https://github.com/raymingchen/Gram-Schimdt-Process/blob/920de0ef528c06058cf0178b2b1718d99862c254/6%20representatives.pdf (accessed on 10 February 2025)). Let column vectors denote the values of at Year t, and define a 6-by-6 matrix ; for example, is the matrix form of the data tabulated in Table 1.

Table 1. The values of 6 governance indicators for China, France, Germany, India, Russia, and the USA at Year 2013.

- For each Year t, define a 202-by-6 matrix , where T denotes the transpose. For example (rounded to 2 decimal places), , and implement the matrix multiplication (regarded as a set of projections from the vectors in onto the vectors in ) to form a 202-by-6 matrix that records the projection values of all the 202 countries onto the 6 representatives at Year t. We partially demonstrate (rounded to 2 decimal places) the 202-by-6 matrix, where each row vector represents the projection of vector on the representative vectors . For the full matrix, see the file named Y_2013ontoU2013.pdf (https://github.com/raymingchen/Gram-Schimdt-Process/blob/3443a9e08ab603e7754d959acfd1189f1e30e248/Y_2013ontoU2013.pdf (accessed on 10 February 2025)). We skip the presentations of all the other ;

- For each year t, find the set of orthogonal vectors of the 6 representatives to yield the maximally independent vectors (or hidden features) in terms of the 6 representatives via the non-self-referential representation presented in this article. The implementation of this conversion is given in Non-self-referential GSP.R at https://github.com/raymingchen/Gram-Schimdt-Process/blob/3d28a5349bf514770c23a5df8950f8fc674aa9d7/Non_self_referential%20GSP.R (accessed on 10 February 2025). For the complete results from to , please refer to https://github.com/raymingchen/Gram-Schimdt-Process/blob/e6f2c27c9ab16a17e0370d1e1c7bd0382f49aeef/Vk_2013to2023.pdf (accessed on 10 February 2025). For example (rounded to 2 decimal places), ;

- Since (by Lemma 2), we compute . Indeed, we could recursively compute these values directly by evaluating each via our method (this is implemented in the file named code and data for application Two_19.R at https://github.com/raymingchen/Gram-Schimdt-Process/blob/5c8327b7782a16fcd06092814b9192a1fdf8182e/code%20and%20data%20for%20application%20Two_19.R (accessed on 10 February 2025)). For example (rounded to 2 decimal places and read as row vectors), , where each is the projection of the vector onto . For the complete computed result of , please refer to the file Vk_2013to2023.pdf at https://github.com/raymingchen/Gram-Schimdt-Process/blob/8edd29d1b755559b5755a491422f534dd30dd8fa/Vk_2013to2023.pdf (accessed on 10 February 2025);

- For each year t, compute the set of cosine values . For a partial demonstration (round to 2 decimal places), where reflects the similarity of governance systems between country j and the other 6 orthogonal features.

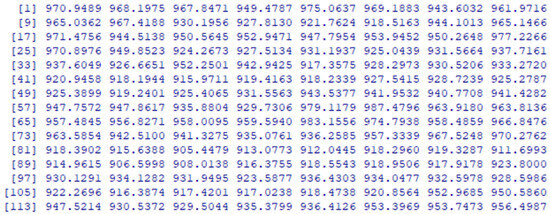

- Assign a category (from 1 to 6) to each country by its maximal cosine value among all the 6 features. For each year t, find the positions (among columns) that yield the maximal values of , row by row; let us name the categorization at year t by . For example, in the previous case, . The detailed categorization for all is presented in Listing A1 of Appendix A. The categorization of the 6 representative countries is singled out in Table 2. The statistics of the categorization distribution for the other countries are shown in Figure 6.
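The last two steps of the procedure (cosine values followed by a row-wise maximum) can be sketched in Python as follows. The two-dimensional feature vectors and the three sample rows are toy values invented for this illustration, not the governance data, and the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cosine(x: Vector, y: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    inner = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return inner / (norm_x * norm_y)


def categorize(rows: List[Vector], features: List[Vector]) -> List[int]:
    """Assign to each row the 1-based index of the feature with the largest cosine."""
    categories: List[int] = []
    for row in rows:
        scores = [cosine(row, f) for f in features]
        best = max(range(len(scores)), key=scores.__getitem__)
        categories.append(best + 1)
    return categories


# Toy values for illustration only (two orthogonal features, three sample rows).
features = [[1.0, 0.0], [0.0, 1.0]]
rows = [[2.0, 0.1], [0.3, 5.0], [-1.0, 4.0]]
cats = categorize(rows, features)
```

In the setting of this section, `features` would hold the 6 orthogonal vectors for a given year and `rows` the 202 country vectors, so `cats` would play the role of the yearly categorization.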

Table 2. The orthogonal features associated with each representative country from the year 2013 to 2023: The orthogonal features associated with each country are extremely stable—this indicates the governance systems in the representatives are consistent and also indicates their representations are reliable for the classification problem.

Figure 6. Frequency distribution of six categories from Year 2013 to 2023 by NsrGSP: These frequencies represent the relative proportion of countries that lie in each category. In total, there are six categories: 1, 2, 3, 4, 5, and 6. Each of the 202 countries was assigned a distinct category, and the overall distribution was captured by these histograms. The underlying method (NsrGSP) adopted is described as an algorithm in Section Application Two. The features for the six representative countries (China, France, Germany, India, Russia, and the USA) were further analyzed by another six orthogonal (hidden) features; one could regard these six new features as six new dummy countries. By associating (see Table 2) these six dummy countries with the original six chosen representative countries, some of the dummy countries become immediately explainable. Dummy Country 1 is associated with China, and Dummy Country 2 is associated with the USA. This is useful, since we can find the dominating factors/features/countries to represent the clustering/classification. These temporal histograms, which are named the Histogram of catxxxx, show that the China-style and USA-style governance systems dominate, with no marked difference between the two.

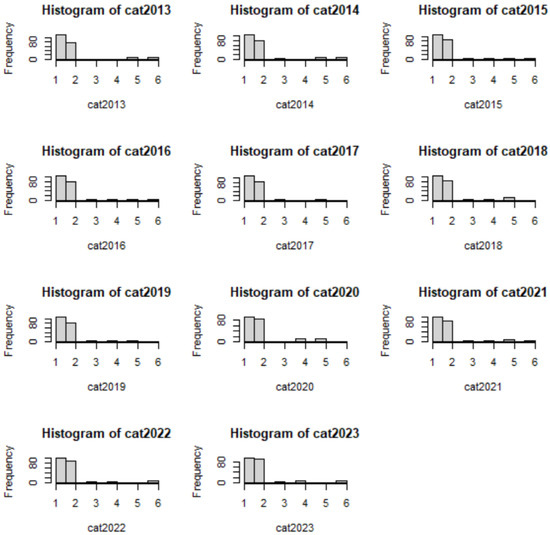

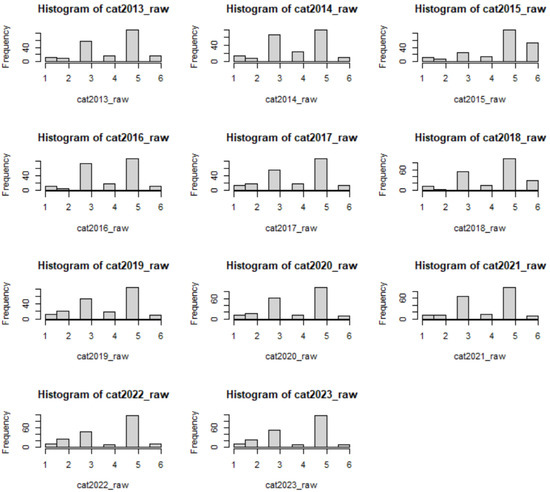

Now let us evaluate and compare several methods for the classification problem, all based on cosine values and some bases. To this end, together with our method (NsrGSP), we add two other approaches: one without any GSP involved (NonGSP) and the other based on the classical GSP (CGSP). The results for NonGSP are shown in Figure 7. They show that the 3rd (Germany) and 5th (Russia) styles of governance dominate, and the same holds for CGSP (the results are shown in Figure 8). This classification is not what we anticipate: we would like the classification to preserve distinct features that differentiate one category from another. This is achieved by NsrGSP, since the distinct features are now related to China and the USA, a result that polarizes the classification more.

Figure 7.

Frequency distribution of the six categories from Year 2013 to 2023 by the nonGSP: These frequencies represent the relative proportion of countries that lie in each category. In total, there are six categories: 1, 2, 3, 4, 5, and 6. Each of the 202 countries was assigned a distinct category, and the overall distribution was captured by these histograms. The underlying method adopted was named nonGSP analysis (or nonGSP): the usual approach adopted in calculating cosine values to analyze the similarities between vectors/data. In our case, the representative vectors were six subjectively chosen countries (China, France, Germany, India, Russia, and the USA). Then, we calculated the cosine values of the 202 countries’ IDs with these six representative IDs and picked the most similar country (among the six representative countries) for each of the 202 countries. The results were then plotted as a histogram of , where raw means there is no further processing regarding the given IDs for the representative countries. From the temporal histograms, one could observe that Germany and Russia dominate the main representatives since their systems mix with various other factors that are also shared with other countries. Since their IDs were not further processed, such classification was less informative; we could not perceive the underlying differences easily.

Figure 8.

Frequency distribution of the six categories from Year 2013 to 2023 by CGSP: These frequencies represent the relative proportion of countries that lie in each category. In total, there were six categories: 1, 2, 3, 4, 5, and 6. Each of the 202 countries was assigned a distinct category, and the overall distribution was captured by these histograms. The underlying method adopted was named Classical GSP analysis (or CGSP): this approach converts/projects all the original IDs into/onto the new (sub-)space spanned by the orthogonal vectors derived from the self-referential (or classical) GSP. The cosine values for the similarity computation are based on the coefficient vectors in this newly constructed space. Theoretically, it is appealing; but experimentally, it has pretty much the same classifying results as nonGSP. It is also much more time consuming because we need to project the IDs of all the 202 countries onto the newly constructed space.

5. Conclusions

In this paper, we derived a non-self-referential inductive method for Gram–Schmidt processes based on the typical Gram–Schmidt process (TGSP). The main idea is to keep track of all indices of the TGSP and find the patterns of change between these indices. The results show that we could largely reduce the computational complexity of GSP and also keep the theoretical advantage that all of the generated orthonormal vectors are non-self-referential. This gives us another perspective on GSP. The results show that

where and . Unlike the other non-self-referential GSP, or FDET (see Equation (1)), whose complexity is exponential due to the permutations, our method has only polynomial complexity. This brings some theoretical and practical advantages; for example, in Application Two, we showed that it could provide a much more persuasive classification while maintaining polynomial complexity. There are also some disadvantages to this approach. First, the presentation of our method is succinct, but the computation of the coefficients still needs to go through a set of recursive procedures. Second, we derive our method from the classical GSP, which tends to cause computational error [12,13]. For better performance, readers could derive a new version of our method based on the modified GSP [14,15]. Furthermore, more experiments and research are needed on its applicability to other real-world problems.

Funding

This research was funded by the Internal (Faculty/Staff) Start-Up Research Grant of Wenzhou-Kean University (Project No. ISRG2023029) and the 2023 Student Partnering with Faculty/Staff Research Program (Project No. WKUSPF2023035).

Data Availability Statement

The original contributions presented in this study are included in the article.

Conflicts of Interest

The author declares that he has no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendix A. Categorization of the 202 Countries Year by Year: 2013–2023

| Listing A1. The categories assigned to the 202 countries from Year 2013 (cat2013) to 2023 (cat2023). In total, there were six categories—1, 2, 3, 4, 5, and 6—for the 202 countries (in which square brackets are used to identify the order of these countries). |

| > cat2013: [1] 1 1 1 2 1 2 1 1 2 2 2 1 2 2 1 2 1 2 1 1 1 1 1 2 5 2 5 1 1 2 1 1 2 2 [35] 1 1 2 1 1 1 1 1 2 1 2 1 2 2 2 1 2 1 1 1 1 1 1 2 1 1 1 2 2 2 1 1 2 2 [69] 5 2 2 2 1 1 1 1 1 1 2 2 2 1 1 1 1 2 2 2 5 2 2 2 1 1 6 1 2 1 1 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 6 1 2 5 6 1 1 5 1 1 1 2 6 1 2 2 1 1 1 5 [137] 2 2 1 6 5 1 1 1 1 2 2 2 2 2 1 1 2 1 1 1 1 2 1 2 2 2 1 1 2 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 1 5 1 2 1 6 1 1 2 2 2 2 1 6 1 1 1 1 1 1. > cat2014: [1] 1 3 1 2 1 2 1 1 2 2 2 1 2 2 1 2 1 2 1 1 2 1 1 2 5 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 5 1 1 1 2 1 2 1 2 2 2 1 2 1 1 1 5 1 1 2 1 1 1 2 2 2 1 1 2 2 [69] 6 2 2 2 1 1 1 1 1 1 2 2 2 1 1 1 1 2 2 2 5 2 2 2 1 1 6 1 2 1 1 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 6 1 2 1 6 1 1 2 1 1 1 2 1 1 2 2 1 1 1 2 [137] 2 2 1 6 2 1 1 5 1 2 2 2 2 2 1 2 2 1 1 6 3 2 1 2 2 2 1 1 2 1 2 1 3 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 6 5 1 2 1 6 1 1 2 2 2 2 1 6 1 1 1 1 1 1. > cat2015: [1] 1 3 1 2 1 2 1 1 2 2 2 1 2 2 1 2 1 2 1 1 2 1 1 2 1 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 2 1 1 1 2 1 2 1 2 2 2 1 2 1 1 1 1 1 1 2 1 1 1 2 2 2 1 1 2 2 [69] 6 2 2 2 1 1 1 1 1 1 2 2 2 1 1 1 1 2 2 2 4 2 2 2 1 1 5 1 2 1 1 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 5 1 2 1 5 1 1 2 1 1 1 2 1 1 2 2 1 1 1 2 [137] 2 2 1 2 2 1 1 3 1 2 2 2 2 2 1 2 2 1 1 5 3 2 1 2 2 2 1 1 2 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 6 4 1 2 1 6 1 1 2 2 2 2 1 6 1 1 1 1 1 1. > cat2016: [1] 1 3 1 2 1 2 1 1 2 2 2 1 2 2 1 2 1 2 1 1 2 1 1 2 1 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 4 1 1 1 2 1 2 1 2 2 2 1 2 1 1 1 1 1 1 2 1 1 5 2 2 2 1 1 2 2 [69] 6 2 2 2 1 1 1 1 1 1 2 2 2 1 1 1 1 2 2 2 4 2 2 2 1 1 5 1 2 1 1 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 5 1 2 1 5 1 4 2 1 1 1 2 1 1 2 2 1 1 1 3 [137] 2 2 1 2 3 1 1 3 1 2 2 2 2 2 1 2 2 1 1 5 4 2 1 2 2 2 1 1 2 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 6 4 1 1 1 6 1 1 2 2 2 2 1 6 1 1 1 1 1 1. 
> cat2017: [1] 1 3 1 2 1 2 1 1 2 2 2 1 2 2 1 2 1 2 1 1 2 1 1 2 1 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 4 1 1 1 2 1 2 1 2 2 2 1 2 1 1 1 1 1 1 2 1 1 2 2 2 2 1 1 2 2 [69] 5 2 2 2 1 1 1 1 1 1 2 2 2 1 1 1 1 2 2 2 2 2 2 2 1 1 5 1 2 1 1 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 5 1 2 1 1 1 1 2 1 1 1 2 1 1 2 2 1 1 1 2 [137] 2 2 1 2 2 1 1 3 1 2 2 2 2 2 1 2 2 1 1 1 1 2 1 2 2 2 1 1 2 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 5 5 1 1 1 5 1 1 2 2 2 2 1 6 1 1 1 1 1 1. > cat2018: [1] 1 5 1 2 1 2 5 3 2 2 2 1 2 2 1 2 1 2 1 1 2 1 1 2 1 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 4 1 1 1 2 1 2 1 2 2 2 1 2 1 1 1 1 1 1 2 1 1 2 2 2 2 1 1 2 2 [69] 5 2 2 3 1 1 1 1 1 1 2 2 2 1 1 1 1 2 2 2 2 2 2 2 1 1 5 1 2 1 1 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 5 1 2 1 5 1 5 2 1 1 1 2 2 1 2 2 1 1 1 2 [137] 2 2 1 2 5 1 1 3 1 2 2 2 2 2 1 2 2 1 1 4 1 2 1 2 2 2 1 1 5 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 5 5 1 1 1 6 1 1 2 2 2 2 1 5 1 1 1 1 1 1. > cat2019: [1] 1 5 1 2 1 2 1 2 2 2 2 1 2 2 1 2 1 2 1 1 2 1 1 2 1 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 2 1 1 1 2 1 2 1 2 2 2 1 2 1 1 1 1 1 1 2 1 1 2 2 2 2 1 1 2 2 [69] 4 2 2 3 1 1 1 1 1 1 2 2 2 1 1 1 1 2 2 2 2 2 2 1 1 1 3 1 2 1 1 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 3 1 2 1 3 1 5 2 1 1 1 2 3 1 2 2 1 1 1 2 [137] 2 2 1 2 2 1 1 2 1 2 2 2 2 2 1 1 2 1 1 1 1 2 1 2 2 2 1 1 5 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 6 4 1 1 1 3 1 1 2 2 2 2 1 4 1 1 1 1 1 1. > cat2020: [1] 1 5 1 2 1 2 4 2 2 2 2 1 2 2 1 2 1 2 4 1 2 1 1 2 4 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 5 1 1 1 2 1 2 1 2 2 2 1 2 5 1 1 5 1 1 2 1 1 2 2 2 2 1 1 2 2 [69] 4 2 2 2 1 1 1 1 1 1 2 2 2 1 5 1 1 2 2 2 2 2 2 2 1 1 2 1 2 1 2 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 4 1 2 1 4 5 5 2 1 1 1 2 2 1 2 2 1 1 1 2 [137] 2 2 1 2 2 1 5 2 1 2 2 2 2 2 1 1 2 1 1 4 5 2 1 2 2 2 4 1 5 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 4 1 6 4 4 1 1 3 1 1 2 2 2 2 1 4 1 1 1 1 1 1. 
> cat2021: [1] 1 5 1 2 1 2 1 2 2 2 2 1 2 2 1 2 1 2 1 1 1 1 1 2 4 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 5 1 1 1 2 1 2 1 2 2 2 1 2 5 1 1 1 1 1 2 1 1 2 2 2 2 1 1 2 2 [69] 4 2 2 2 1 1 1 1 1 1 2 2 2 1 2 1 1 2 2 2 2 2 2 2 1 1 3 1 2 1 2 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 1 1 2 1 1 5 4 2 1 1 1 2 2 1 2 2 1 1 1 2 [137] 2 2 1 2 2 1 1 5 1 2 2 2 2 2 1 2 2 1 2 1 5 2 1 2 2 2 1 1 4 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 1 1 1 6 5 1 1 1 3 1 1 2 2 2 2 1 6 1 1 1 1 1 1. > cat2022: [1] 1 2 1 2 1 2 1 4 2 2 2 1 2 2 1 2 1 2 1 1 2 1 1 2 1 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 6 1 1 1 2 1 2 1 2 2 2 1 2 6 1 1 1 1 1 2 1 1 2 2 2 2 1 1 2 2 [69] 1 2 2 2 1 1 1 1 1 1 2 2 2 1 2 1 1 2 2 2 2 2 2 2 1 1 3 1 2 1 2 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 3 1 2 1 1 6 1 2 1 1 1 2 2 1 2 2 1 1 1 2 [137] 2 2 1 2 6 1 1 6 6 2 2 2 2 2 1 2 2 1 2 1 6 2 1 2 2 2 1 1 4 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 2 1 1 4 4 1 1 1 2 1 1 2 2 2 2 1 3 1 1 1 1 1 1. > cat2023: [1] 1 2 1 2 1 2 1 5 2 2 2 1 2 2 1 2 1 2 4 1 2 1 1 2 4 2 2 1 1 2 1 1 2 2 [35] 1 1 2 1 6 1 1 1 2 1 2 1 2 2 2 1 2 2 1 1 1 1 1 2 1 1 2 2 2 2 1 1 2 2 [69] 4 2 2 2 1 1 1 1 1 1 2 2 2 1 2 1 1 2 2 2 2 2 2 2 1 1 3 1 2 1 2 1 1 2 [103] 1 1 1 1 2 2 2 2 1 1 2 1 1 2 4 1 2 1 3 6 4 2 1 1 1 2 2 1 2 2 1 1 1 2 [137] 2 2 1 2 6 1 6 6 6 2 2 2 2 2 1 2 2 1 2 1 6 2 1 2 2 2 1 1 4 1 2 1 2 2 [171] 2 1 1 2 2 1 2 1 1 6 1 1 4 4 1 1 1 2 1 1 2 2 2 2 1 3 1 1 1 1 1 1. |

Appendix B. Raw Categorization of 202 Countries Year by Year: 2013–2023

| > cat2013_raw |

| [1] 5 5 5 3 5 3 5 5 3 3 3 5 3 1 5 3 1 3 4 5 3 5 5 3 4 6 3 5 5 3 5 5 3 3 |

| [35] 5 5 3 1 4 5 5 5 3 5 6 1 2 6 3 5 3 5 5 5 5 5 5 3 1 5 1 3 2 2 5 5 2 3 |

| [69] 3 2 3 3 5 5 5 5 5 5 6 3 3 4 5 5 5 3 2 3 2 3 3 1 5 5 3 5 6 5 1 5 5 6 |

| [103] 5 5 5 5 3 3 3 6 5 5 6 5 4 3 4 5 6 4 3 5 5 6 5 5 5 3 4 5 3 3 5 5 5 5 |

| [137] 3 1 5 3 4 5 5 4 4 3 6 3 6 6 5 1 3 5 1 5 4 3 5 6 3 3 5 5 2 5 2 5 3 3 |

| [171] 3 5 4 3 3 5 6 5 5 4 5 5 3 2 4 4 5 3 5 5 6 3 6 3 5 3 5 1 5 5 5 5 |

| > cat2014_raw |

| [1] 5 5 5 6 5 3 4 5 3 3 3 5 3 1 5 3 1 3 4 4 3 5 5 3 4 3 3 5 5 2 5 5 3 3 |

| [35] 5 5 3 1 4 5 5 5 3 5 3 1 3 3 3 5 3 5 5 5 5 5 5 3 1 5 1 3 2 3 5 5 2 3 |

| [69] 4 2 3 3 5 5 5 5 5 5 3 3 3 4 4 5 5 3 2 3 4 6 3 1 1 4 4 5 6 5 1 5 1 3 |

| [103] 5 4 5 5 3 3 3 3 5 5 6 5 4 3 4 5 3 5 3 5 5 3 5 5 5 3 4 5 3 3 5 4 5 3 |

| [137] 3 3 5 4 3 5 5 4 4 3 3 3 6 3 5 1 3 4 1 4 3 6 5 6 3 3 4 5 2 5 2 1 3 3 |

| [171] 3 5 4 3 3 5 3 5 5 5 5 5 3 2 4 5 5 3 5 4 6 6 6 3 5 3 5 1 5 5 5 5 |

| > cat2015_raw |

| [1] 5 5 5 6 5 6 4 5 3 3 6 5 6 1 5 6 5 3 4 5 6 5 5 6 4 6 3 5 5 6 5 5 6 6 |

| [35] 5 5 3 1 4 5 5 5 3 5 6 1 3 6 3 5 6 5 5 5 5 5 5 3 1 5 3 3 2 3 5 5 2 3 |

| [69] 4 2 6 3 5 5 5 5 5 5 6 6 6 4 4 5 5 3 2 3 4 6 6 1 5 5 6 5 2 5 1 5 5 3 |

| [103] 5 5 5 5 6 6 6 6 5 5 6 5 5 6 6 5 6 5 6 5 5 3 5 5 5 6 6 5 3 6 5 5 5 5 |

| [137] 6 1 5 6 3 5 5 5 4 6 6 6 6 3 5 1 6 5 1 4 6 6 5 6 6 6 5 5 2 5 2 1 3 6 |

| [171] 6 5 4 3 6 5 6 5 5 1 5 5 4 6 4 4 5 6 5 5 6 3 6 3 5 4 5 1 5 5 5 5 |

| > cat2016_raw |

| [1] 5 5 5 3 5 3 4 5 3 3 3 5 3 1 5 3 5 3 4 5 3 5 5 3 4 3 3 4 5 3 5 5 3 3 |

| [35] 5 5 3 1 4 5 5 5 3 5 3 1 3 3 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 6 5 5 2 3 |

| [69] 4 2 3 3 5 5 5 4 5 5 6 3 3 4 4 5 5 3 2 3 3 3 3 1 5 4 3 5 6 5 1 5 5 6 |

| [103] 5 5 5 5 3 3 3 3 5 5 6 5 5 3 3 5 3 5 3 5 3 3 5 5 5 3 3 5 3 3 5 5 5 5 |

| [137] 3 1 5 3 6 5 5 4 4 3 3 6 3 6 5 1 3 5 1 4 4 3 5 3 3 3 5 5 2 5 6 5 3 3 |

| [171] 3 5 4 3 3 5 3 5 5 1 5 5 3 3 4 5 5 3 5 4 3 3 6 3 5 3 5 1 5 5 5 5 |

| > cat2017_raw |

| [1] 5 5 5 3 5 3 4 5 3 3 3 5 3 1 5 3 5 3 5 5 3 5 5 3 4 6 3 4 5 3 5 5 3 3 |

| [35] 5 5 3 1 4 5 5 5 3 5 3 1 3 3 3 5 3 5 5 5 5 5 5 3 1 5 3 3 2 2 5 5 6 3 |

| [69] 4 2 3 3 5 5 5 4 5 5 6 3 3 4 4 5 5 3 6 3 2 3 2 1 1 5 2 5 6 5 1 5 5 6 |

| [103] 5 5 5 5 3 3 3 6 5 5 6 5 4 3 2 5 3 5 2 5 5 6 5 5 5 2 3 5 3 3 5 5 5 5 |

| [137] 3 1 5 2 3 5 5 4 4 3 3 3 1 2 5 1 2 5 1 4 5 3 5 6 3 2 4 5 4 5 2 5 3 3 |

| [171] 3 5 4 3 3 5 3 5 5 1 5 5 2 4 4 5 5 2 5 4 6 3 6 3 5 2 5 1 5 5 5 5 |

| > cat2018_raw |

| [1] 5 5 5 6 5 3 4 5 3 3 3 5 3 1 5 3 5 3 5 5 3 5 5 3 4 6 6 4 5 3 5 5 3 6 |

| [35] 5 5 3 1 4 5 5 5 3 5 6 1 3 6 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 6 5 5 6 3 |

| [69] 4 3 3 3 5 5 5 5 5 5 6 6 3 4 4 5 5 3 2 3 6 6 3 1 1 5 3 5 6 5 1 5 5 6 |

| [103] 5 5 5 5 3 6 3 6 5 5 6 5 5 3 3 5 6 5 3 5 5 6 5 5 5 3 3 5 3 3 5 5 5 5 |

| [137] 3 1 5 3 6 5 5 5 4 3 3 6 6 6 5 1 3 5 1 4 5 3 5 6 3 3 5 5 4 5 6 5 3 3 |

| [171] 3 5 5 3 3 5 6 5 5 1 5 5 3 4 4 5 5 3 5 4 6 3 6 3 5 3 5 1 5 5 5 5 |

| > cat2019_raw |

| [1] 5 5 5 3 5 3 4 4 3 3 3 5 3 1 5 3 1 3 5 5 3 5 5 3 4 6 2 4 5 3 5 5 3 3 |

| [35] 5 5 2 1 4 5 5 5 3 5 2 1 2 2 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 2 5 5 6 3 |

| [69] 4 2 3 3 5 5 5 4 5 5 6 2 3 4 4 5 5 3 6 2 2 3 3 1 1 5 3 5 6 5 1 5 5 2 |

| [103] 5 5 5 5 3 2 3 3 5 5 6 5 4 2 3 5 2 4 3 5 5 2 5 5 5 3 3 5 3 3 5 5 5 5 |

| [137] 3 1 5 3 2 5 5 4 4 3 3 2 6 2 5 1 3 5 1 4 5 3 5 3 2 3 5 5 4 5 2 5 3 3 |

| [171] 3 5 4 3 3 5 2 5 5 1 5 5 3 4 4 5 5 3 5 4 6 3 6 3 5 3 5 1 5 5 5 5 |

| > cat2020_raw |

| [1] 5 5 5 3 5 3 4 4 3 3 2 5 3 1 5 3 5 3 5 5 3 5 5 3 4 3 3 4 5 3 5 5 3 3 |

| [35] 5 5 3 1 4 5 5 5 3 5 3 5 2 2 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 2 5 5 6 3 |

| [69] 3 2 3 3 5 5 5 5 5 5 6 3 3 4 4 5 5 3 6 3 2 3 3 1 1 5 3 5 2 5 1 5 5 2 |

| [103] 5 5 5 5 3 3 3 3 5 5 6 5 5 2 3 5 2 5 3 5 5 6 5 5 5 3 3 5 3 3 5 5 5 5 |

| [137] 3 1 5 3 2 5 5 4 5 3 3 3 6 3 5 1 3 5 1 4 5 3 5 3 3 2 5 5 4 5 2 5 3 3 |

| [171] 3 5 5 3 3 5 2 5 5 1 5 5 2 4 4 5 5 3 5 5 6 3 6 3 5 3 5 1 5 5 5 5 |

| > cat2021_raw |

| [1] 5 5 5 3 5 3 5 4 3 3 2 5 3 1 5 3 5 3 5 5 3 5 5 3 4 3 3 4 5 3 5 5 3 3 |

| [35] 5 5 3 1 4 5 5 5 3 5 3 5 2 3 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 2 5 5 6 3 |

| [69] 4 2 3 3 5 5 5 5 5 5 6 3 3 4 4 5 5 3 6 3 2 3 3 1 5 5 3 5 2 5 1 5 5 3 |

| [103] 5 5 5 5 3 3 3 6 5 5 6 1 5 3 3 5 3 5 3 5 5 6 5 5 5 3 3 5 3 3 5 5 5 4 |

| [137] 3 1 5 3 4 5 5 5 4 3 3 3 6 3 5 1 3 5 1 4 5 3 5 3 3 2 5 5 4 5 2 5 3 3 |

| [171] 3 5 5 3 3 5 2 5 5 1 5 5 3 2 4 5 5 3 5 4 6 3 6 3 5 3 5 1 5 5 5 5 |

| > cat2022_raw |

| [1] 5 4 5 3 5 3 5 5 3 3 2 5 3 1 5 3 5 3 5 5 3 5 5 3 5 3 3 5 5 3 5 5 2 2 |

| [35] 5 5 3 1 4 5 5 5 3 5 2 5 2 2 3 5 3 4 5 5 5 5 5 3 5 5 3 3 2 2 5 5 6 3 |

| [69] 4 2 3 3 5 5 5 5 5 5 6 2 3 4 4 5 5 2 6 3 2 2 3 1 1 5 3 5 2 5 1 5 5 2 |

| [103] 5 5 5 5 3 2 2 6 5 5 6 5 5 2 3 5 2 5 3 5 5 6 5 5 5 3 3 5 3 3 5 5 5 6 |

| [137] 2 1 5 3 5 5 5 5 5 3 2 3 6 3 5 1 3 5 1 5 4 3 5 3 2 2 5 5 4 5 2 5 3 3 |

| [171] 3 5 5 3 2 5 2 5 5 1 5 5 3 2 5 5 5 3 5 5 6 3 6 3 5 3 5 1 5 5 5 5 |

| > cat2023_raw |

| [1] 5 4 5 3 5 3 5 4 3 3 2 5 3 1 5 3 5 3 5 5 3 5 5 3 5 3 3 5 5 3 5 5 3 2 |

| [35] 5 5 2 1 5 5 5 5 3 5 2 5 2 2 2 5 3 4 5 5 5 5 5 3 5 5 3 3 2 2 5 5 6 3 |

| [69] 4 3 3 3 5 5 5 5 5 5 6 3 3 4 4 5 5 2 6 3 2 2 3 1 1 5 3 5 2 5 1 5 5 3 |

| [103] 5 5 5 5 3 2 2 6 5 5 6 5 5 3 3 5 3 5 3 4 5 2 5 5 5 3 3 5 2 3 5 5 5 2 |

| [137] 3 1 5 3 5 5 5 5 4 3 2 3 6 3 5 1 3 5 1 5 5 3 5 6 3 2 5 5 4 5 2 5 3 3 |

| [171] 3 5 5 3 2 5 2 5 5 1 5 5 3 2 5 5 5 3 5 5 6 3 6 3 5 3 5 1 5 5 5 5 |

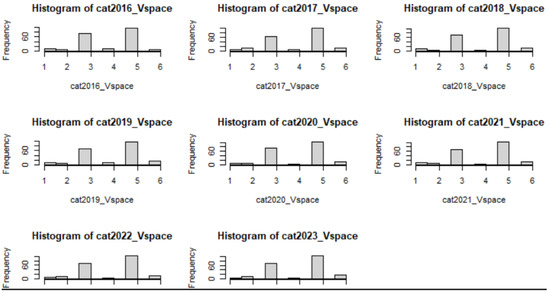

Appendix C. V-Space Categorization of 202 Countries Year by Year: 2013–2023

| > cat2013_Vspace; |

| [1] 5 5 5 3 5 3 5 1 3 3 3 5 3 1 5 3 5 3 4 5 3 |

| [22] 5 5 3 2 3 3 5 5 3 5 5 3 3 5 5 6 1 4 5 5 5 |

| [43] 3 5 3 1 6 3 3 5 3 5 5 5 5 5 5 3 1 5 5 3 2 |

| [64] 2 5 5 2 3 2 2 3 3 5 5 5 5 5 5 6 3 3 4 5 5 |

| [85] 5 3 2 3 3 3 3 1 5 5 4 5 2 5 1 5 5 3 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 4 5 3 4 3 5 5 3 5 5 |

| [127] 5 3 4 5 3 3 5 5 5 5 3 6 5 3 2 5 5 5 4 3 3 |

| [148] 2 3 2 5 1 3 5 1 5 4 3 5 6 3 3 5 5 2 5 2 5 |

| [169] 3 3 3 5 4 3 3 5 3 5 5 5 5 5 5 2 4 4 5 4 5 |

| [190] 5 6 6 6 3 5 3 5 1 5 5 5 5 |

| > cat2014_Vspace; |

| [1] 5 5 5 3 5 3 5 5 3 3 3 5 3 1 5 3 5 3 4 5 3 |

| [22] 5 5 3 4 6 3 5 5 3 5 5 3 3 5 5 3 1 4 5 5 5 |

| [43] 3 5 3 5 3 3 3 5 3 5 5 5 5 5 5 3 5 5 5 3 2 |

| [64] 3 5 5 2 3 4 2 3 3 5 5 5 5 5 5 6 3 3 4 4 5 |

| [85] 5 3 2 3 2 6 3 1 5 5 3 5 2 5 1 5 5 3 5 4 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 4 5 3 5 3 5 5 3 5 5 |

| [127] 5 3 4 5 3 3 5 5 5 3 3 6 5 3 2 5 5 4 4 3 3 |

| [148] 3 6 2 5 1 3 5 1 4 3 6 5 6 3 3 5 5 2 5 2 1 |

| [169] 3 3 3 5 4 3 3 5 6 5 5 1 5 5 3 2 4 1 5 3 5 |

| [190] 5 6 6 6 3 5 3 5 5 5 5 5 5 |

| > cat2015_Vspace; |

| [1] 5 5 5 6 5 3 5 5 3 3 3 5 3 1 5 3 5 3 5 5 6 |

| [22] 5 5 6 4 6 2 5 5 3 5 5 6 6 5 5 3 1 4 5 5 5 |

| [43] 3 5 3 5 3 3 6 5 3 5 5 5 5 5 5 3 5 5 5 6 2 |

| [64] 3 5 5 2 3 4 2 3 3 5 5 5 5 5 5 6 3 3 4 5 5 |

| [85] 5 6 2 3 2 6 3 1 5 5 3 5 2 5 1 5 5 3 5 5 5 |

| [106] 5 6 3 6 6 5 5 6 5 5 3 3 5 6 5 3 5 5 3 5 5 |

| [127] 5 3 3 5 3 6 5 5 5 5 3 6 5 3 3 5 5 5 4 3 3 |

| [148] 3 6 3 5 1 3 5 1 5 3 6 5 6 3 3 5 5 2 5 2 5 |

| [169] 3 3 3 5 5 3 6 5 6 5 5 1 5 5 5 3 4 1 5 3 5 |

| [190] 5 6 6 6 3 5 4 5 1 5 5 5 5 |

| > cat2016_Vspace; |

| [1] 5 5 5 3 5 3 4 5 3 3 3 5 3 1 5 3 5 3 5 5 3 |

| [22] 5 5 3 4 3 3 5 5 3 5 5 3 3 5 5 3 1 4 5 5 5 |

| [43] 3 5 3 5 3 3 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 |

| [64] 3 5 5 2 3 4 2 3 3 5 5 5 5 5 5 6 3 3 4 5 5 |

| [85] 5 3 2 3 3 3 3 1 5 5 3 5 6 5 5 5 5 3 5 5 5 |

| [106] 5 3 3 3 3 5 5 6 5 5 3 3 5 3 5 3 5 5 3 5 5 |

| [127] 5 3 3 5 3 3 5 5 5 5 3 3 5 3 3 5 5 5 4 3 3 |

| [148] 3 3 3 5 1 3 5 1 5 5 3 5 3 3 3 5 5 2 5 3 5 |

| [169] 3 3 3 5 5 3 3 5 3 5 5 1 5 5 3 3 4 1 5 3 5 |

| [190] 5 6 6 6 3 5 4 5 1 5 5 5 5 |

| > cat2017_Vspace; |

| [1] 5 5 5 3 5 3 4 5 3 3 3 5 3 1 5 3 5 2 5 5 3 |

| [22] 5 5 3 4 6 3 5 5 3 5 5 3 3 5 5 3 1 5 5 5 5 |

| [43] 3 5 3 5 3 3 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 |

| [64] 2 5 5 6 3 4 2 3 3 5 5 5 5 5 5 6 3 3 4 5 5 |

| [85] 5 3 6 2 2 3 3 1 5 5 3 5 6 5 5 5 5 3 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 3 5 3 5 3 5 5 6 5 5 |

| [127] 5 3 3 5 3 3 5 5 5 5 3 6 5 3 2 5 5 5 5 3 3 |

| [148] 3 6 2 5 1 3 5 1 4 5 3 5 6 3 3 5 5 2 5 2 5 |

| [169] 3 3 3 5 5 3 3 5 3 5 5 1 5 5 3 2 4 5 5 3 5 |

| [190] 5 6 3 6 3 5 3 5 5 5 5 5 5 |

| > cat2018_Vspace; |

| [1] 5 5 5 3 5 3 4 5 3 3 3 5 3 1 5 3 5 3 5 5 3 |

| [22] 5 5 3 5 6 3 5 5 3 5 5 3 6 5 5 3 1 5 5 5 5 |

| [43] 3 5 3 5 3 3 3 5 3 5 5 5 5 5 5 3 5 5 6 3 2 |

| [64] 3 5 5 2 3 4 3 3 3 5 5 5 5 5 5 6 3 3 4 5 5 |

| [85] 5 3 2 3 3 3 3 1 5 5 3 5 6 5 1 5 5 6 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 3 5 3 5 3 5 5 6 5 5 |

| [127] 5 3 3 5 3 3 5 5 5 1 3 6 5 3 3 5 5 5 5 3 3 |

| [148] 3 6 3 5 1 3 5 1 5 5 3 5 6 3 3 5 5 2 5 3 5 |

| [169] 3 3 3 5 5 3 3 5 3 5 5 1 5 5 3 3 5 5 5 3 5 |

| [190] 5 6 3 6 3 5 3 5 5 5 5 5 5 |

| > cat2019_Vspace; |

| [1] 5 5 5 3 5 3 4 4 3 3 3 5 3 1 5 3 5 3 5 5 3 |

| [22] 5 5 3 4 6 3 5 5 3 5 5 3 3 5 5 2 1 4 5 5 5 |

| [43] 3 5 3 5 3 3 3 5 3 5 5 5 5 5 5 3 5 5 6 3 2 |

| [64] 2 5 5 6 3 4 3 3 3 5 5 5 5 5 5 6 3 3 4 5 5 |

| [85] 5 3 6 3 3 3 3 1 1 5 3 5 6 5 1 5 5 2 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 3 5 3 5 3 5 5 6 5 5 |

| [127] 5 3 3 5 3 3 5 5 5 5 3 6 5 3 3 5 5 4 5 3 3 |

| [148] 3 6 3 5 1 3 5 1 5 5 3 5 6 3 3 5 5 2 5 3 5 |

| [169] 3 3 3 5 5 3 3 5 3 5 5 1 5 5 3 4 4 5 5 3 5 |

| [190] 5 6 3 6 3 5 5 5 5 5 5 5 5 |

| > cat2020_Vspace; |

| [1] 5 5 5 3 5 3 5 4 3 3 3 5 3 1 5 3 5 3 5 5 3 |

| [22] 5 5 3 5 6 3 5 5 3 5 5 3 3 5 5 3 1 5 5 5 5 |

| [43] 3 5 3 5 2 3 3 5 3 5 5 5 5 5 5 3 5 5 3 3 2 |

| [64] 2 5 5 6 3 3 3 3 3 5 5 5 5 5 5 6 3 3 4 4 5 |

| [85] 5 3 6 3 3 3 3 1 5 5 3 5 2 5 1 5 5 3 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 3 5 3 5 3 5 5 3 5 5 |

| [127] 5 3 3 5 3 3 5 5 5 5 3 6 5 3 3 5 5 5 5 3 3 |

| [148] 3 6 3 5 1 3 5 1 5 5 3 5 6 3 3 5 5 3 5 3 5 |

| [169] 3 3 3 5 5 3 3 5 2 5 5 1 5 5 3 3 5 5 5 3 5 |

| [190] 5 6 3 6 3 5 3 5 5 5 5 5 5 |

| > cat2021_Vspace; |

| [1] 5 5 5 3 5 3 5 4 3 3 3 5 3 1 5 3 5 3 5 5 3 |

| [22] 5 5 3 5 6 3 5 5 3 5 5 3 3 5 5 3 1 4 5 5 5 |

| [43] 3 5 3 5 3 3 2 5 3 5 5 5 5 5 5 3 5 5 6 2 2 |

| [64] 2 5 5 6 3 5 3 3 3 5 5 5 5 5 5 6 3 3 4 4 5 |

| [85] 5 3 6 3 3 3 3 1 5 5 3 5 2 5 1 5 5 3 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 3 5 3 5 3 5 5 3 5 5 |

| [127] 5 3 3 5 3 3 5 5 5 5 3 1 5 3 3 5 5 5 5 3 3 |

| [148] 3 6 3 5 1 3 5 1 5 5 3 5 6 3 3 5 5 3 5 3 5 |

| [169] 3 3 3 5 5 3 2 5 2 5 5 1 5 5 3 3 5 5 5 3 5 |

| [190] 5 6 3 6 3 5 3 5 5 5 5 5 5 |

| > cat2022_Vspace; |

| [1] 5 5 5 3 5 3 5 5 3 3 2 5 3 6 5 3 5 3 5 5 3 |

| [22] 5 5 3 5 6 3 5 5 3 5 5 3 3 5 5 3 1 5 5 5 5 |

| [43] 3 5 3 5 3 3 2 5 3 5 5 5 5 5 5 3 5 5 6 3 2 |

| [64] 2 5 5 6 3 5 3 3 3 5 5 5 5 5 5 6 3 3 4 4 5 |

| [85] 5 3 6 3 3 2 3 1 1 5 3 5 2 5 6 5 5 3 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 3 5 3 5 3 5 5 3 5 5 |

| [127] 5 3 3 5 3 3 5 5 5 3 3 1 5 3 5 5 5 5 5 3 3 |

| [148] 3 6 3 5 6 3 5 4 5 5 3 5 6 3 3 5 5 5 5 3 5 |

| [169] 3 3 3 5 5 3 3 5 2 5 5 1 5 5 3 3 5 5 5 3 5 |

| [190] 5 6 2 6 3 5 3 5 1 5 5 5 5 |

| > cat2023_Vspace; |

| [1] 5 3 5 3 5 3 5 5 3 3 3 5 3 6 5 3 5 3 5 5 3 |

| [22] 5 5 3 5 6 3 5 5 3 5 5 3 3 5 5 3 1 5 5 5 5 |

| [43] 3 5 3 5 3 3 2 5 3 3 5 5 5 5 5 3 5 5 3 2 2 |

| [64] 2 5 5 6 3 5 3 3 3 5 5 5 5 5 5 6 3 3 4 4 5 |

| [85] 5 3 6 3 3 2 3 4 5 5 3 5 2 5 6 5 5 3 5 5 5 |

| [106] 5 3 3 3 6 5 5 6 5 5 3 3 5 3 5 3 5 5 3 5 5 |

| [127] 5 3 3 5 2 3 5 5 5 3 3 6 5 3 5 5 5 5 5 3 3 |

| [148] 3 6 3 5 6 3 5 6 5 5 3 5 6 3 3 5 5 5 5 3 5 |

| [169] 3 3 3 5 5 3 2 5 2 5 5 1 5 5 3 5 5 5 5 3 5 |

| [190] 5 6 3 6 3 5 3 5 5 5 5 5 5 |

References

1. Deng, Y. On p-adic Gram–Schmidt Orthogonalization Process. Front. Math. 2024.

2. Huang, X.; Caron, M.; Hindson, D. A recursive Gram-Schmidt orthonormalization procedure and its application to communications. In Proceedings of the 2001 IEEE Third Workshop on Signal Processing Advances in Wireless Communications (SPAWC’01), Taiwan, China, 20–23 March 2001 (Cat. No. 01EX471); pp. 340–343.

3. Balabanov, O.; Grigori, L. Randomized Gram-Schmidt process with application to GMRES. SIAM J. Sci. Comput. 2022, 44, A1450–A1474.

4. Ford, W. Numerical Linear Algebra with Applications: Using MATLAB and Octave; Academic Press: Cambridge, MA, USA, 2014.

5. Morrison, D.D. Remarks on the Unitary Triangularization of a Nonsymmetric Matrix. J. ACM 1960, 7, 185–186.

6. Skogholt, J.; Liland, K.H.; Næs, T.; Smilde, A.K.; Indahl, U.G. Selection of principal variables through a modified Gram–Schmidt process with and without supervision. J. Chemom. 2023, 37, e3510.

7. Robinson, P.J.; Saranraj, A. Intuitionistic Fuzzy Gram-Schmidt Orthogonalized Artificial Neural Network for Solving MAGDM Problems. Indian J. Sci. Technol. 2024, 17, 2529–2537.

8. Dax, A. A modified Gram–Schmidt algorithm with iterative orthogonalization and column pivoting. Linear Algebra Its Appl. 2000, 310, 25–42.

9. Trefethen, L.N.; Bau, D. Numerical Linear Algebra; SIAM: Philadelphia, PA, USA, 1997.

10. Bremner, M.R. Lattice Basis Reduction: An Introduction to the LLL Algorithm and Its Applications; CRC Press: Boca Raton, FL, USA, 2012.

11. Available online: http://www.noahsd.com/mini_lattices/02__GS_and_LLL.pdf (accessed on 10 February 2025).

12. Giraud, L.; Langou, J.; Rozložník, M.; Eshof, J.V.D. Rounding error analysis of the classical Gram–Schmidt orthogonalization process. Numer. Math. 2005, 101, 87–100.

13. Giraud, L.; Langou, J.; Rozložník, M. The loss of orthogonality in the Gram–Schmidt orthogonalization process. Comput. Math. Appl. 2005, 50, 1069–1075.

14. Imakura, A.; Yamamoto, Y. Efficient implementations of the modified Gram–Schmidt orthogonalization with a non-standard inner product. Jpn. J. Indust. Appl. Math. 2019, 36, 619–641.

15. Sreedharan, V.P. A note on the modified Gram-Schmidt process. Int. J. Comput. Math. 1988, 24, 277–290.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).