Convergence Analysis for Differentially Private Federated Averaging in Heterogeneous Settings

Abstract

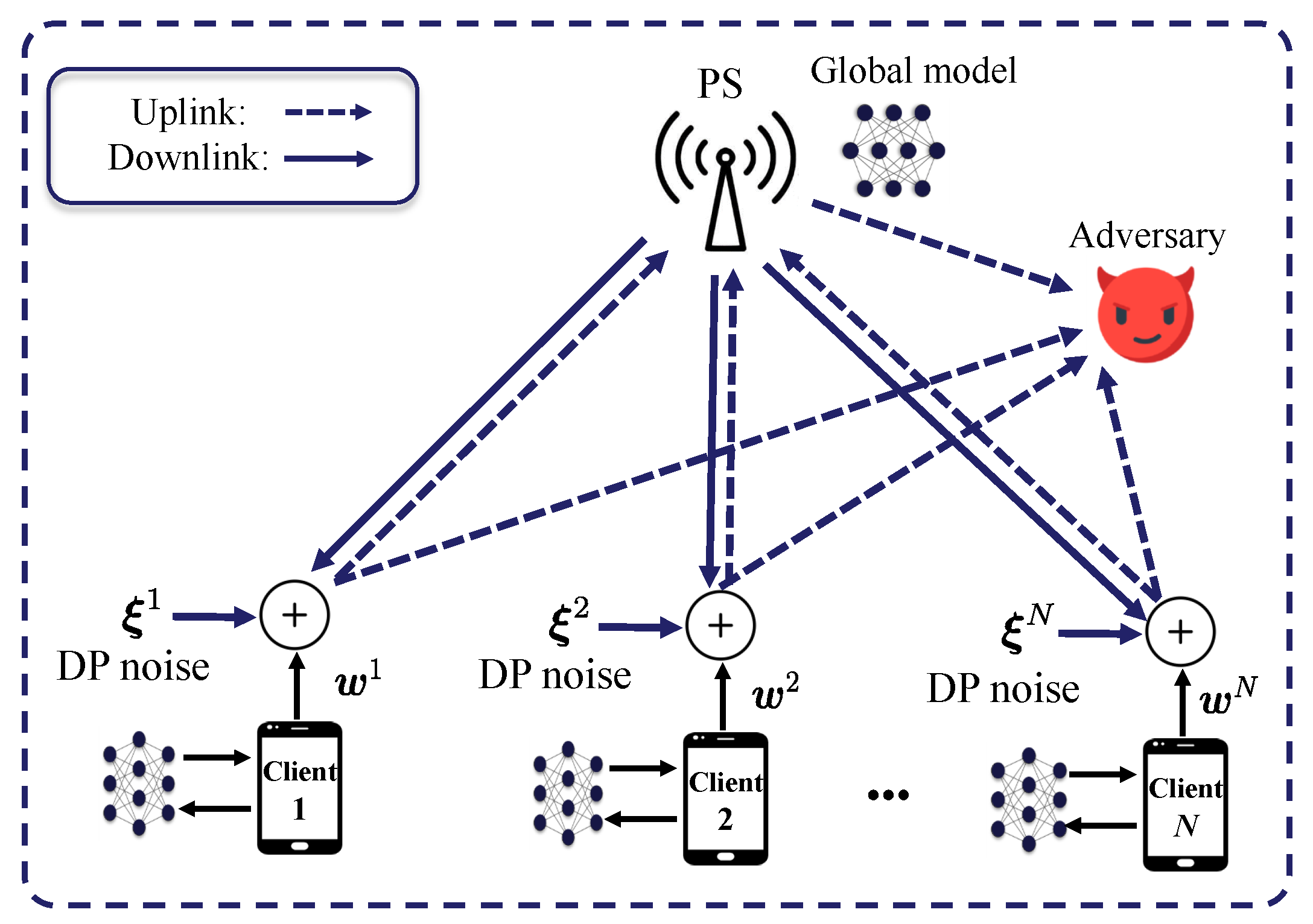

1. Introduction

1.1. Related Work

- FL in the presence of data heterogeneity: A substantial body of research has tackled the challenges posed by non-i.i.d. data in FL. Studies such as [6,19] indicate that FL models converge significantly more slowly in non-i.i.d. settings than in i.i.d. ones. These works also provide theoretical analyses suggesting that effective client selection schemes can improve convergence in non-i.i.d. scenarios. Many existing FL frameworks [5,11,20] rely on random client selection strategies [19]. Additionally, some works [21,22] consider device heterogeneity by accounting for factors such as wireless channel capacity and computational power. However, a unified theoretical analysis that examines both non-i.i.d. data and client sampling strategies within a single framework has yet to be developed.

- FL with privacy considerations: Privacy protection has become a critical focus in FL systems. Works such as [23,24] have explored DP as a robust mechanism for protecting data privacy in FL, showing that it provides formal privacy guarantees without incurring excessive computational overhead. Numerous DP-based privacy protection techniques have been investigated in FL, including DP-SGD [25], DP-FedAvg [1,26], and the DP-based primal-dual method (DP-PDM) [27], in which additive noise is applied to the local gradients or models. Notably, the impact of this additive noise on learning performance can be quantified through convergence analysis, and the total privacy loss can be readily tracked during training. However, few of these studies consider privacy amplification techniques [28,29], which could mitigate the adverse effect of DP noise and thereby improve the privacy–utility tradeoff.
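To make the additive-noise mechanism concrete, the following is a minimal Python sketch of how a client might clip its local model update and perturb it with Gaussian noise before uploading it. It is not the paper's Algorithm 1: the function names (`clip_l2`, `gaussian_sigma`, `privatize_update`), the choice to privatize the whole model update rather than individual gradients, and the conservative sensitivity of twice the clipping level are all illustrative assumptions. The noise scale follows the classic Gaussian mechanism described in [14], whose guarantee only holds for ε < 1.

```python
import math
import random


def clip_l2(update, clip_norm):
    """Rescale `update` so that its Euclidean norm is at most `clip_norm`."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def gaussian_sigma(sensitivity, epsilon, delta):
    """Noise standard deviation of the classic Gaussian mechanism.

    sigma = sensitivity * sqrt(2 ln(1.25 / delta)) / epsilon, valid only for
    0 < epsilon < 1 (Dwork and Roth, Theorem A.1). The theorem asks for a
    strictly larger constant; the boundary value is used here for simplicity.
    """
    if not (0.0 < epsilon < 1.0 and 0.0 < delta < 1.0):
        raise ValueError("the classic bound needs 0 < epsilon < 1 and 0 < delta < 1")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def privatize_update(update, clip_norm, epsilon, delta, rng=random):
    """Clip a local model update, then add i.i.d. Gaussian noise to every coordinate."""
    clipped = clip_l2(update, clip_norm)
    # Assumption: any two clipped updates differ by at most 2 * clip_norm in l2 norm,
    # so 2 * clip_norm is used as the l2-sensitivity.
    sigma = gaussian_sigma(2.0 * clip_norm, epsilon, delta)
    noisy = [c + rng.gauss(0.0, sigma) for c in clipped]
    return noisy, sigma


# Usage: a hypothetical 2-dimensional update with norm 5 is clipped to norm 1 before noising.
rng = random.Random(0)
noisy_update, sigma = privatize_update([3.0, 4.0], clip_norm=1.0, epsilon=0.5, delta=1e-5, rng=rng)
```

The sketch makes the tradeoff visible: a smaller ε or a larger clipping level increases σ and hence the perturbation of every coordinate.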

1.2. Contributions

- Theoretical framework: We propose a novel theoretical framework to analyze the convergence of DP-FedAvg under various client sampling strategies, with a particular focus on the impact of privacy protection and non-i.i.d. data. This framework enables us to quantify how client sampling methods and data heterogeneity influence the algorithm’s convergence rate and privacy guarantees.

- Privacy–utility tradeoff: We explore the tradeoff between privacy protection and learning performance, taking into account the effects of privacy amplification. Our theoretical results demonstrate how various system parameters influence this tradeoff and highlight that the privacy protection level and gradient clipping play a crucial role in determining the privacy–utility tradeoff in practical FL systems.

- Empirical validation: We empirically validate our theoretical findings through extensive experiments on real-world datasets, demonstrating the behavior of DP-FedAvg under non-i.i.d. data conditions.
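Privacy amplification by subsampling, one of the amplification effects referred to above, can be illustrated with a short calculation. The sketch below assumes that each client participates independently with probability q (Poisson sampling) and that the per-round mechanism is (ε, δ)-DP with respect to adding or removing one client; under these assumptions the standard bound of [29] gives (ln(1 + q(e^ε − 1)), qδ)-DP. The function name is hypothetical, and the amplification argument used in the paper's own analysis may differ.

```python
import math


def amplify_by_poisson_sampling(epsilon, delta, q):
    """Amplified (epsilon, delta) when each client is sampled independently with probability q.

    For a mechanism that is (epsilon, delta)-DP w.r.t. adding/removing one client,
    running it on a Poisson subsample is (log(1 + q * (e^epsilon - 1)), q * delta)-DP.
    """
    if not 0.0 <= q <= 1.0:
        raise ValueError("sampling probability q must lie in [0, 1]")
    # log1p/expm1 keep the computation accurate when q or epsilon is small.
    eps_amplified = math.log1p(q * math.expm1(epsilon))
    return eps_amplified, q * delta


# Usage: lower participation rates yield a smaller per-round privacy loss.
for q in (1.0, 0.5, 0.1):
    print(q, amplify_by_poisson_sampling(1.0, 1e-5, q))
```

With q = 1 the bound reduces to the unamplified (ε, δ), which is a quick sanity check on the formula.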

2. Preliminaries

2.1. Federated Learning

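1. At the beginning of round $t$, the parameter server (PS) selects a subset of clients $\mathcal{S}_t$ to participate and sends them the latest global model $\mathbf{x}^{t}$.

2. Each selected client $k \in \mathcal{S}_t$ initializes its local model as $\mathbf{x}_k^{t,0} = \mathbf{x}^{t}$ and then performs $Q$ iterations of stochastic gradient descent (SGD) on its local dataset:
$$\mathbf{x}_k^{t,q} = \mathbf{x}_k^{t,q-1} - \gamma \nabla f_k\big(\mathbf{x}_k^{t,q-1}; \mathcal{B}_k^{t,q}\big), \quad q = 1, \ldots, Q,$$
where $\gamma$ represents the learning rate, $\mathcal{B}_k^{t,q}$ is the mini-batch data with $|\mathcal{B}_k^{t,q}| = b$, and $\nabla f_k(\cdot\,; \mathcal{B}_k^{t,q})$ denotes the stochastic gradient.

3. Each client uploads its final local model update $\Delta_k^{t} = \mathbf{x}_k^{t,Q} - \mathbf{x}^{t}$ to the PS.

4. The PS aggregates the local model updates from all participating clients and updates the global model as
$$\mathbf{x}^{t+1} = \mathbf{x}^{t} + \frac{1}{|\mathcal{S}_t|} \sum_{k \in \mathcal{S}_t} \Delta_k^{t}.$$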
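The four FedAvg steps above can be traced in a small, non-private Python sketch. Everything here is an illustrative assumption rather than the paper's implementation: the toy quadratic loss, the helper names (`minibatch_grad`, `local_sgd`, `fedavg_round`), sampling K clients uniformly without replacement, and aggregating by a plain average of the uploaded updates.

```python
import random


def minibatch_grad(x, batch):
    """Gradient of the toy loss 0.5 * ||x - a||^2 averaged over the points a in the batch."""
    mean = [sum(a[j] for a in batch) / len(batch) for j in range(len(x))]
    return [xj - mj for xj, mj in zip(x, mean)]


def local_sgd(global_x, data, Q, lr, b, rng):
    """Steps 2-3: start from the global model, run Q mini-batch SGD steps, return the update."""
    x = list(global_x)
    for _ in range(Q):
        batch = rng.sample(data, min(b, len(data)))  # mini-batch of size b
        g = minibatch_grad(x, batch)
        x = [xj - lr * gj for xj, gj in zip(x, g)]
    return [xj - x0j for xj, x0j in zip(x, global_x)]  # final local model minus global model


def fedavg_round(global_x, client_data, K, Q, lr, b, rng):
    """One round: sample K clients (step 1), train locally, average the updates (step 4)."""
    selected = rng.sample(range(len(client_data)), K)
    updates = [local_sgd(global_x, client_data[k], Q, lr, b, rng) for k in selected]
    avg_update = [sum(u[j] for u in updates) / K for j in range(len(global_x))]
    return [xj + uj for xj, uj in zip(global_x, avg_update)]


# Usage: two clients with very different local data (a toy non-i.i.d. setting).
rng = random.Random(0)
clients = [[[0.0], [1.0]], [[9.0], [11.0]]]
x = [0.0]
for t in range(50):
    x = fedavg_round(x, clients, K=2, Q=5, lr=0.1, b=2, rng=rng)
```

In this toy example every client's loss has the same curvature, so with both clients selected and full mini-batches the global model settles at 5.25, the minimizer of the average objective, even though the two local optima (0.5 and 10) are far apart.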

2.2. Differential Privacy

2.3. The Typical DP-FedAvg Algorithm

Algorithm 1. Proposed DP-FedAvg algorithm.

3. Theoretical Analysis for DP-FedAvg

3.1. Assumptions

3.2. Privacy Analysis

3.3. Convergence Analysis for DP-FedAvg

4. Numerical Experiments

4.1. Experimental Setting

- For the MNIST dataset, following the heterogeneous data partition method in [6], each client was allocated data samples from only four different labels, which made the local datasets highly non-i.i.d. across clients.

- For the Adult dataset, following [1], all the training samples were uniformly distributed among a total of $N$ clients such that each client contained data from only one class.
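A label-skewed split of this kind can be sketched as follows. This is a simplified stand-in, not necessarily the exact procedure of [6] or [1]: the function `label_skew_partition` is hypothetical, labels are assigned to clients round-robin, and each label's samples are divided evenly among the clients that hold it. Setting `labels_per_client=4` mimics the MNIST setting and `labels_per_client=1` the Adult setting.

```python
import random
from collections import defaultdict


def label_skew_partition(labels, num_clients, labels_per_client, seed=0):
    """Split sample indices so that each client only holds a few distinct labels."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for idx, y in enumerate(labels):
        by_label[y].append(idx)
    classes = sorted(by_label)
    # Round-robin label assignment: every label gets at least one holder
    # whenever num_clients * labels_per_client >= len(classes).
    client_labels = [
        [classes[(c * labels_per_client + j) % len(classes)] for j in range(labels_per_client)]
        for c in range(num_clients)
    ]
    holders = defaultdict(list)
    for c, labs in enumerate(client_labels):
        for y in labs:
            holders[y].append(c)
    parts = [[] for _ in range(num_clients)]
    for y, idxs in by_label.items():
        rng.shuffle(idxs)
        owners = holders.get(y, [])
        # Deal this label's samples out evenly; labels without a holder are dropped.
        for i, idx in enumerate(idxs):
            if owners:
                parts[owners[i % len(owners)]].append(idx)
    return parts


# Usage: 1000 hypothetical samples with 10 labels, 20 clients holding 4 labels each.
labels = [i % 10 for i in range(1000)]
parts = label_skew_partition(labels, num_clients=20, labels_per_client=4)
```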

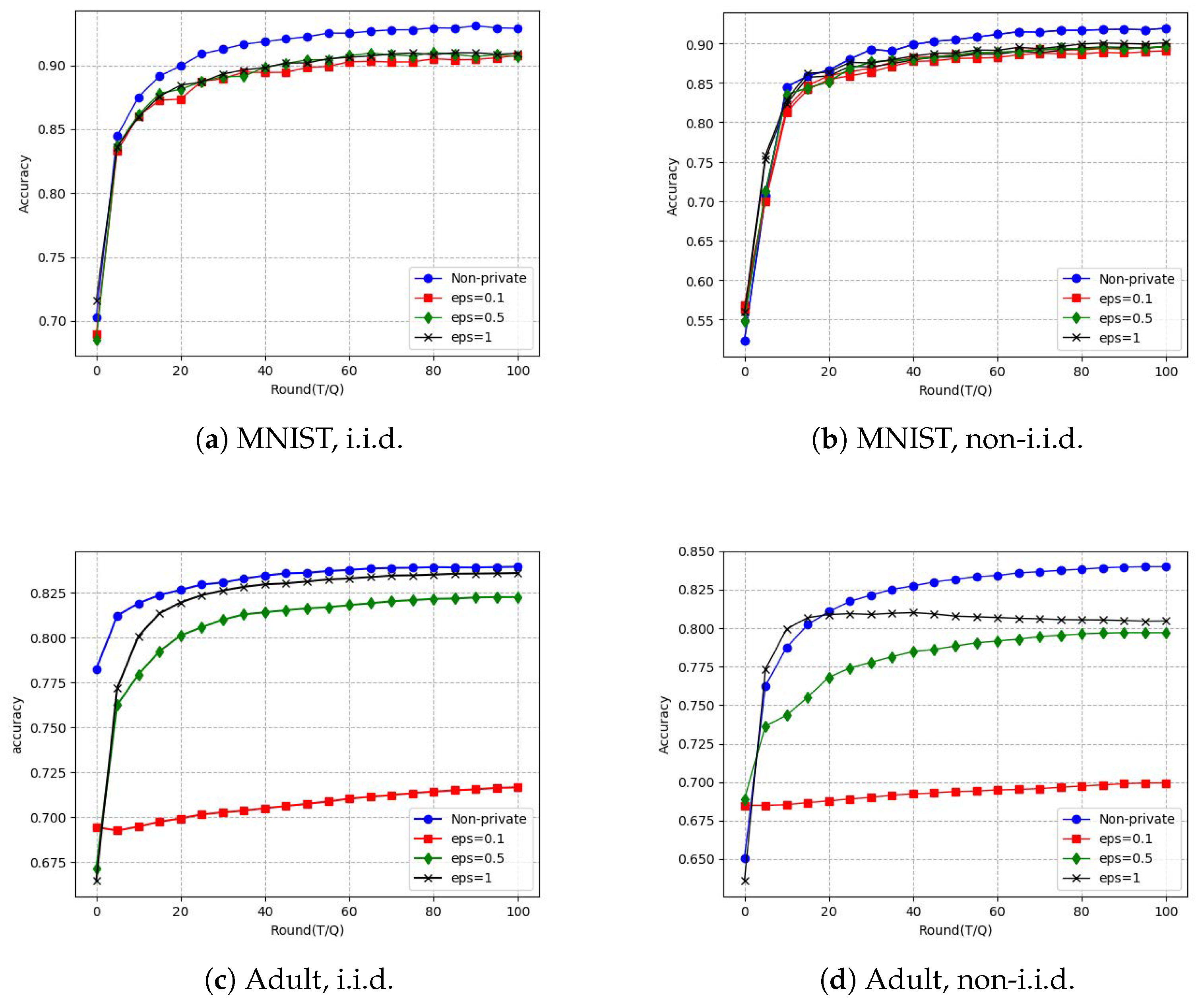

4.2. Impact of the Privacy Protection Level

- Impact of non-i.i.d. data: Non-i.i.d. data across clients amplifies the impact of DP noise in FL. The Adult dataset, which contains categorical and numerical features, is more sensitive to such heterogeneity. Conversely, the MNIST dataset, which consists of grayscale images of handwritten digits with simpler patterns, is more resilient to non-i.i.d. data.

- Inherent data structure: The MNIST dataset benefits from the spatial coherence of pixel intensities: patterns such as digit shapes remain discernible even when DP noise is introduced. This property of image data enables models to identify meaningful features despite perturbations. In contrast, the Adult dataset is tabular, with categorical and numerical features, and lacks such spatial structure. DP noise in tabular data can obscure critical relationships between features and labels, making it harder for the FL model to extract meaningful patterns under noisy conditions.

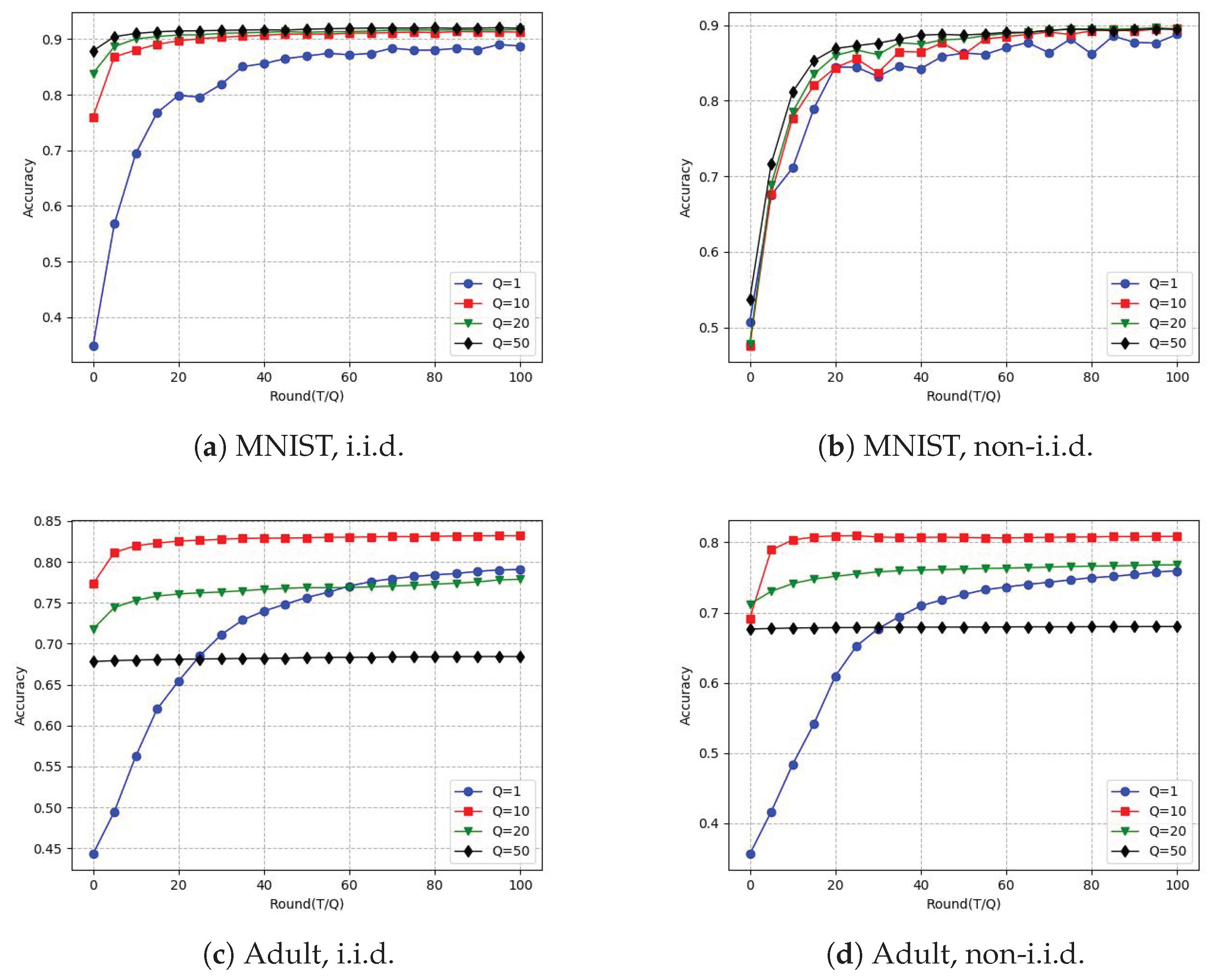

4.3. Impact of Local Epoch Length Q

5. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Proof of Lemma 2

Appendix B. Proof of Theorem 3

Appendix B.1. Key Lemmas

Appendix B.2. Completing the Proof of Theorem 3

Appendix C. Proofs of Key Lemmas in Theorem 3

Appendix C.1. Proof of Lemma A1

Appendix C.2. Proof of Lemma A2

Appendix C.3. Proof of Lemma A3

Appendix C.4. Proof of Lemma A4

Appendix D. Proof of Theorem 4

Appendix D.1. Key Lemmas

Appendix D.2. Completing the Proof of Theorem 4

References

1. Li, Y.; Chang, T.H.; Chi, C.Y. Secure Federated Averaging Algorithm with Differential Privacy. In Proceedings of the IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Espoo, Finland, 17–20 September 2020; pp. 1–6.

2. Sattler, F.; Wiedemann, S.; Müller, K.R.; Samek, W. Robust and communication-efficient federated learning from non-iid data. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3400–3413.

3. Wang, S.; Chang, T.-H. Federated Matrix Factorization: Algorithm Design and Application to Data Clustering. IEEE Trans. Signal Process. 2022, 70, 1625–1640.

4. Wang, S.; Xu, Y.; Yuan, Y.; Quek, T.Q.S. Toward Fast Personalized Semi-Supervised Federated Learning in Edge Networks: Algorithm Design and Theoretical Guarantee. IEEE Trans. Wireless Commun. 2024, 23, 1170–1183.

5. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

6. Li, X.; Huang, K.; Yang, W.; Wang, S.; Zhang, Z. On the Convergence of FedAvg on non-IID Data. In Proceedings of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 26–30 April 2020; pp. 1–26.

7. Li, Y.; Wang, S.; Chi, C.Y.; Quek, T.Q.S. Differentially Private Federated Clustering Over Non-IID Data. IEEE Internet Things J. 2024, 11, 6705–6721.

8. Glasgow, M.R.; Yuan, H.; Ma, T. Sharp bounds for federated averaging (local SGD) and continuous perspective. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 28–30 March 2022; pp. 9050–9090.

9. Woodworth, B.E.; Patel, K.K.; Srebro, N. Minibatch vs local SGD for heterogeneous distributed learning. In Proceedings of the Advances in Neural Information Processing Systems 33 (NeurIPS 2020), Virtual, 6–12 December 2020; pp. 6281–6292.

10. Hsu, T.M.H.; Qi, H.; Brown, M. Measuring the effects of non-identical data distribution for federated visual classification. arXiv 2019, arXiv:1909.06335.

11. Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450.

12. Karimireddy, S.P.; Kale, S.; Mohri, M.; Reddi, S.J.; Stich, S.U.; Suresh, A.T. Scaffold: Stochastic controlled averaging for on-device federated learning. In Proceedings of the International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 26–30 April 2020; pp. 5132–5143.

13. Geiping, J.; Bauermeister, H.; Dröge, H.; Moeller, M. Inverting gradients-how easy is it to break privacy in federated learning? In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Virtual, 6–12 December 2020; pp. 16937–16947.

14. Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends® Theor. Comput. Sci. 2014, 9, 211–407.

15. Li, Y.; Huang, C.W.; Wang, S.; Chi, C.Y.; Quek, T.Q. Privacy-Preserving Federated Primal-Dual Learning for Non-Convex and Non-Smooth Problems With Model Sparsification. IEEE Internet Things J. 2024, 11, 25853–25866.

16. Wang, X.; Wang, S.; Li, Y.; Fan, F.; Li, S.; Lin, X. Differentially Private and Heterogeneity-Robust Federated Learning with Theoretical Guarantee. IEEE Trans. Artif. Intell. 2024, 5, 6369–6384.

17. Li, Z.; He, Y.; Yu, H.; Kang, J.; Li, X.; Xu, Z.; Niyato, D. Data heterogeneity-robust federated learning via group client selection in industrial IoT. IEEE Internet Things J. 2022, 9, 17844–17857.

18. Wang, S.; Xu, Y.; Wang, Z.; Chang, T.-H.; Quek, T.Q.S.; Sun, D. Beyond ADMM: A Unified Client-Variance-Reduced Adaptive Federated Learning Framework. In Proceedings of the AAAI, Washington, DC, USA, 7–14 February 2023; pp. 10175–10183.

19. Wu, H.; Wang, P. Fast-convergent federated learning with adaptive weighting. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 1078–1088.

20. Wu, H.; Tang, X.; Zhang, Y.J.A.; Gao, L. Incentive Mechanism for Federated Learning With Random Client Selection. IEEE Trans. Netw. Sci. Eng. 2024, 11, 1922–1933.

21. Saha, R.; Misra, S.; Chakraborty, A.; Chatterjee, C.; Deb, P.K. Data-centric client selection for federated learning over distributed edge networks. IEEE Trans. Parallel Distrib. Syst. 2022, 34, 675–686.

22. Pang, J.; Yu, J.; Zhou, R.; Lui, J.C. An incentive auction for heterogeneous client selection in federated learning. IEEE Trans. Mob. Comput. 2022, 22, 5733–5750.

23. Shen, X.; Liu, Y.; Zhang, Z. Performance-enhanced Federated Learning with Differential Privacy for Internet of Things. IEEE Internet Things J. 2022, 9, 24079–24094.

24. Li, Y.; Wang, S.; Chi, C.Y.; Quek, T.Q. Differentially Private Federated Learning in Edge Networks: The Perspective of Noise Reduction. IEEE Netw. 2022, 36, 167–172.

25. Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

26. McMahan, H.B.; Ramage, D.; Talwar, K.; Zhang, L. Learning Differentially Private Recurrent Language Models. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

27. Huang, Z.; Hu, R.; Guo, Y.; Chan-Tin, E.; Gong, Y. DP-ADMM: ADMM-based distributed learning with differential privacy. IEEE Trans. Inf. Forensics Secur. 2019, 15, 1002–1012.

28. Erlingsson, Ú.; Feldman, V.; Mironov, I.; Raghunathan, A.; Talwar, K.; Thakurta, A. Amplification by shuffling: From local to central differential privacy via anonymity. In Proceedings of the ACM-SIAM Symposium on Discrete Algorithms, San Diego, CA, USA, 6–9 January 2019; pp. 2468–2479.

29. Balle, B.; Barthe, G.; Gaboardi, M. Privacy amplification by subsampling: Tight analyses via couplings and divergences. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 3–8 December 2018; pp. 6277–6287.

30. Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.S.; Poor, H.V. Federated Learning With Differential Privacy: Algorithms and Performance Analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

31. Noble, M.; Bellet, A.; Dieuleveut, A. Differentially Private Federated Learning on Heterogeneous Data. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 28–30 March 2022; pp. 10110–10145.

32. Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership inference attacks against machine learning models. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 3–18.

33. Zhang, Y.; Duchi, J.C.; Wainwright, M.J. Communication-efficient algorithms for statistical optimization. J. Mach. Learn. Res. 2013, 14, 3321–3363.

34. Blake, C.L.; Merz, C.J. UCI Repository of Machine Learning Databases. 1996. Available online: https://archive.ics.uci.edu/dataset/2/adult (accessed on 30 April 1996).

35. LeCun, Y.; Cortes, C.; Burges, C. The MNIST Database. 1998. Available online: http://yann.lecun.com/exdb/mnist (accessed on 1 November 1998).

| Notation | Definition |
|---|---|
| $\lVert\cdot\rVert$ | Euclidean norm. |
| $\langle\cdot,\cdot\rangle$ | The inner product operator. |
| $\epsilon$, $\delta$ | The DP parameters. |
|  | The $\ell_2$-norm sensitivity. |
| $N$ | The total number of clients. |
| $T$ | The total number of iterations. |
| $Q$ | The number of local SGD updates. |
| $\lvert\cdot\rvert$ | The size of a set. |
| $(\cdot)^{\top}$ | Transpose of a vector. |
|  | The global model at the $t$-th iteration. |
|  | The local model of the $k$-th client at the $t$-th iteration. |
|  | The objective function of client $k$. |
|  | The mini-batch gradient computed on the data at client $k$. |
|  | The degree of non-i.i.d. data. |
|  | The gradient clipping level. |
| $n$, $n_k$ | The number of data samples over all clients and at client $k$, respectively. |
| $b$ | The size of the mini-batch data. |
| $d$ | The dimension of the model parameters. |
|  | The variance of the stochastic gradients at the $k$-th client. |
| $L$ | The $L$-smoothness parameter. |
|  | The strong convexity parameter. |
|  | The statistical expectation and the probability function, respectively. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Wang, S.; Wu, Q. Convergence Analysis for Differentially Private Federated Averaging in Heterogeneous Settings. Mathematics 2025, 13, 497. https://doi.org/10.3390/math13030497
