1. Introduction

Among the many evolutionary optimization methods, differential evolution (DE) is a heuristic stochastic search algorithm based on population differences. Owing to its simple structure, easy implementation, fast convergence, and robustness, DE is widely used in fields such as data mining [1], pattern recognition [2], digital filter design [3], artificial neural networks [4], and electromagnetism [5]. However, the performance of DE depends heavily on its control parameters and mutation strategies. Inappropriate parameters or mutation strategies slow the convergence of the algorithm or cause it to fall into a local optimum. Therefore, many scholars have studied the update strategy and algorithmic structure of DE in recent years. Current improvements in DE can mainly be categorized as follows: multi-population [6], multi-evolutionary model [7], parameter adaptive [8,9], strategy and search space adaptive [10,11,12,13], variant strategy updating [14,15,16,17], online algorithmic configurations [18], parameter free [19], etc.

Incorporating multi-population or multi-evolution modules into the algorithm can improve the global search capability and convergence rate of the evolutionary algorithm. For example, DSPPDE [6] employs the idea of isolated evolution and information exchange from parallel DE algorithms within a serial program structure; MEDE [7] realizes multi-strategy cooperation based on the fact that the evolution strategies of DE share common features but have different characteristics; MCDE [8] uses a multi-population structure so that covariance learning can establish a proper rotational coordinate system for the crossover operation, and it uses adaptive control parameters to balance exploration and convergence; BMEA-DE [9] divides the population into three sub-populations based on fitness and uses separate mutation strategies and control parameters for each sub-population.

On the other hand, the control parameters are critical to the accuracy and convergence rate of DE. For example, if the crossover rate CR is too small, the global search ability of the algorithm is reduced and it eventually falls into a local optimum; if CR is too large, the computation becomes expensive and convergence slows down. Therefore, many scholars have introduced adaptive operators that adjust the parameters based on certain information. For example, ADE [10] introduces an adaptive mutation operator based on a comparison between each individual’s fitness and the best individual’s fitness, and it adds a competition operation with a random new population to improve the global search capability. ISADE [11] incorporates space-adaptive ideas into DE to search automatically for a suitable space and to improve the convergence rate and accuracy. IDE [12] generates the initial population with a reverse learning strategy and selects individuals via the Metropolis criterion of simulated annealing. APSDE [13] optimizes the mutation strategy and control parameters through an accompanying population whose individuals are suboptimal solutions.

The mutation operator of DE is highly random and mainly follows a single evolutionary direction. This makes DE prone to problems such as premature convergence and slow convergence speed. Moreover, DE cannot perform a global search of the space and local optimization at the same time. As a result, many studies have examined the update strategy of DE. SSDE [14] employs an opposition-based learning method when producing the initial population to enhance global convergence performance. GSDE [15] improves exploitation with a Gaussian random walk and improves local search with simplified crossover and mutation based on individuals’ performance. SEFDE [16] achieves feedback adjustment of mutation strategies according to the stage of each individual, which is determined self-adaptively by a state judgment factor. Consequently, the algorithm can obtain a trade-off between exploration and exploitation. HHSDE [17] introduces a new mutation operation to improve the efficiency of mutation within the new-harmony generation mechanism of the harmony search (HS) algorithm.

In addition, some algorithmic configuration methods have been developed in recent years. For example, OAC [18] selects a trial strategy for vector generation via the multi-armed bandit algorithm, in which kernel density estimation is employed to tune the control parameters during the search process.

In summary, most of the studies on the improvements in DE are focused on the strategies of mutation operators and control parameters, often disregarding the algorithm’s distinctive search characteristics across various stages.

In general, the search of an evolutionary algorithm can be divided into two stages. The main task of the first stage is to explore the target region globally. In the second stage, the focus shifts to narrowing the search region to the neighborhood of the better-performing particles, with the aim of finding the locally optimal solutions in that region while avoiding premature convergence. Thus, in this paper, a novel two-stage algorithm is proposed. This algorithm has two kinds of populations and constructs new mutation patterns with adaptive direction vector weight factors and crossover factors. As a result, it is able to converge quickly and avoid local optima.

2. A Two-Stage Adaptive Differential Evolution Algorithm (APDE) with Accompanying Populations

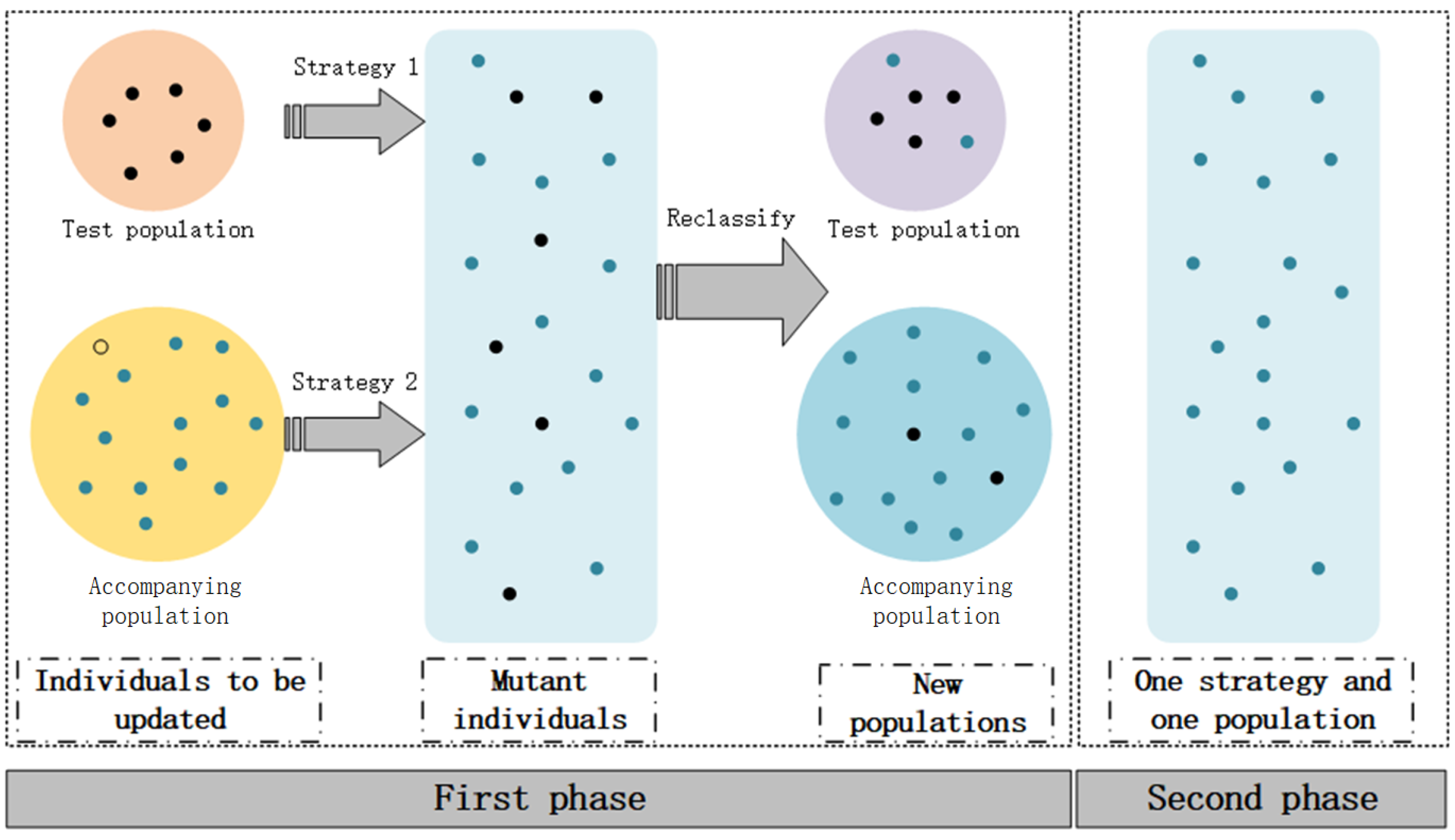

In this section, a two-stage adaptive differential evolution algorithm with accompanying populations is proposed. In this algorithm, we use different mutation strategies for the test and accompanying populations in order to maximize the mutation efficiency.

The updated individuals are reclassified according to their fitness values, which ensures evolutionary diversity and achieves information exchange between populations. In addition, APDE divides the algorithm into two stages according to the search characteristics of evolutionary algorithms. The algorithm can therefore converge quickly in the early stage and escape local optima efficiently in the later stage by enhancing its stochastic mutability. This helps improve computing efficiency and maintain the convergence speed.

Figure 1 depicts the specific operation of each stage in APDE.

2.1. Initialization

APDE uses the Good Point Set Method [20] to generate the initial population. As a result, the initial individuals are evenly distributed in the search space, which overcomes blindness and randomness and improves population diversity. The initialization formulas are as follows:

Here, , . and are the upper and lower bounds of the range of values taken by the jth component of the individual.
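Because the initialization formulas did not survive in the text above, the following is only a minimal sketch of one commonly used good-point-set construction, not necessarily the exact variant used by APDE. It assumes the generator r_j = 2cos(2πj/p), with p the smallest prime satisfying (p − 3)/2 ≥ D, and maps the fractional parts onto the per-dimension bounds. The names `good_point_set` and `smallest_prime_at_least` are hypothetical.

```python
import numpy as np


def smallest_prime_at_least(n):
    """Return the smallest prime p with p >= n (simple trial division)."""
    def is_prime(k):
        if k < 2:
            return False
        for d in range(2, int(k ** 0.5) + 1):
            if k % d == 0:
                return False
        return True

    while not is_prime(n):
        n += 1
    return n


def good_point_set(pop_size, lower, upper):
    """Sketch of a good-point-set initial population (assumed construction).

    lower, upper: per-dimension bounds of the search space.
    Returns an array of shape (pop_size, D) inside [lower, upper].
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    # Assumption: p is the smallest prime with (p - 3) / 2 >= D.
    p = smallest_prime_at_least(2 * dim + 3)
    j = np.arange(1, dim + 1)
    r = 2.0 * np.cos(2.0 * np.pi * j / p)          # one generator per dimension
    i = np.arange(1, pop_size + 1).reshape(-1, 1)  # one row per individual
    frac = np.mod(i * r, 1.0)                      # fractional parts in [0, 1)
    return lower + frac * (upper - lower)
```

The construction is deterministic, so repeated calls with the same arguments return the same population; any randomness in APDE would enter only in later steps.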

2.2. Population Segmentation

The population of APDE is divided into two classes. One is the test population, whose main function is to explore the optimal solution based on the information of the optimal individuals. The other is the accompanying population, which has lower fitness values and is randomly distributed throughout the entire search space. Its primary function is to explore uncharted regions and augment population diversity. After multiple trials, APDE uses an empirical 3:7 ratio to divide the population, and the individuals are reordered according to their fitness values at the end of each iteration. The segmentation operation can be specifically expressed as follows:

where

is the population classification information for the

ith individual. Here, 0 indicates the test population, and 1 indicates the accompanying population.
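The segmentation rule can be sketched as below. Since the formula itself is missing from the text, two points are assumptions: the problem is treated as a minimization, and the 3:7 ratio is read as 30% test population (the best-ranked individuals) to 70% accompanying population. The function name `segment_population` is hypothetical.

```python
import numpy as np


def segment_population(fitness, test_fraction=0.3):
    """Label each individual: 0 = test population, 1 = accompanying population.

    Assumptions (not confirmed by the source): smaller fitness is better,
    and the best `test_fraction` of individuals form the test population.
    """
    fitness = np.asarray(fitness, dtype=float)
    n_test = int(round(test_fraction * fitness.size))
    order = np.argsort(fitness, kind="stable")   # best individuals first
    labels = np.ones(fitness.size, dtype=int)    # default: accompanying
    labels[order[:n_test]] = 0                   # best n_test become the test population
    return labels
```

Calling this at the end of every iteration re-ranks the individuals, which is how information would flow between the two populations under these assumptions.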

2.3. Improved Mutation Operator

In 2022, Civicioglu [19] proposed an elitist mutation operator in the Bernstein–Levy differential evolution (BDE) algorithm, which uses three vectors to generate mutation directions. The generation process of this mutation operator is partially elitist. This structure enhances the algorithm’s local search capability and improves the algorithm’s ability to generate effective mutation patterns. The first two direction vectors

and

are each generated as the difference between two distinct individuals selected at random. The generation method is as follows:

where

denotes the vector replacement function.

is generated via the location information about the current optimal solution. The information can be used directly or after numerical perturbation within the limits of the relevant search space.

(1) When current optimal solution

is used directly,

(2) When current optimal solution

is used after applying a numerical perturbation,

and are random numbers obeying a uniform distribution in .

BDE selects each of these two generation methods with a probability of 50%. However, this strategy depends too heavily on randomness and does not match the search characteristics of the algorithm. As a result, it slows down the convergence of the algorithm in the early stage and wastes computational resources. The generation in (1) helps the algorithm approach the current optimal solution quickly, while the generation in (2) is better suited to enhancing the global search capability of the algorithm. Therefore, the algorithm in this paper modifies the mutation strategy as follows.

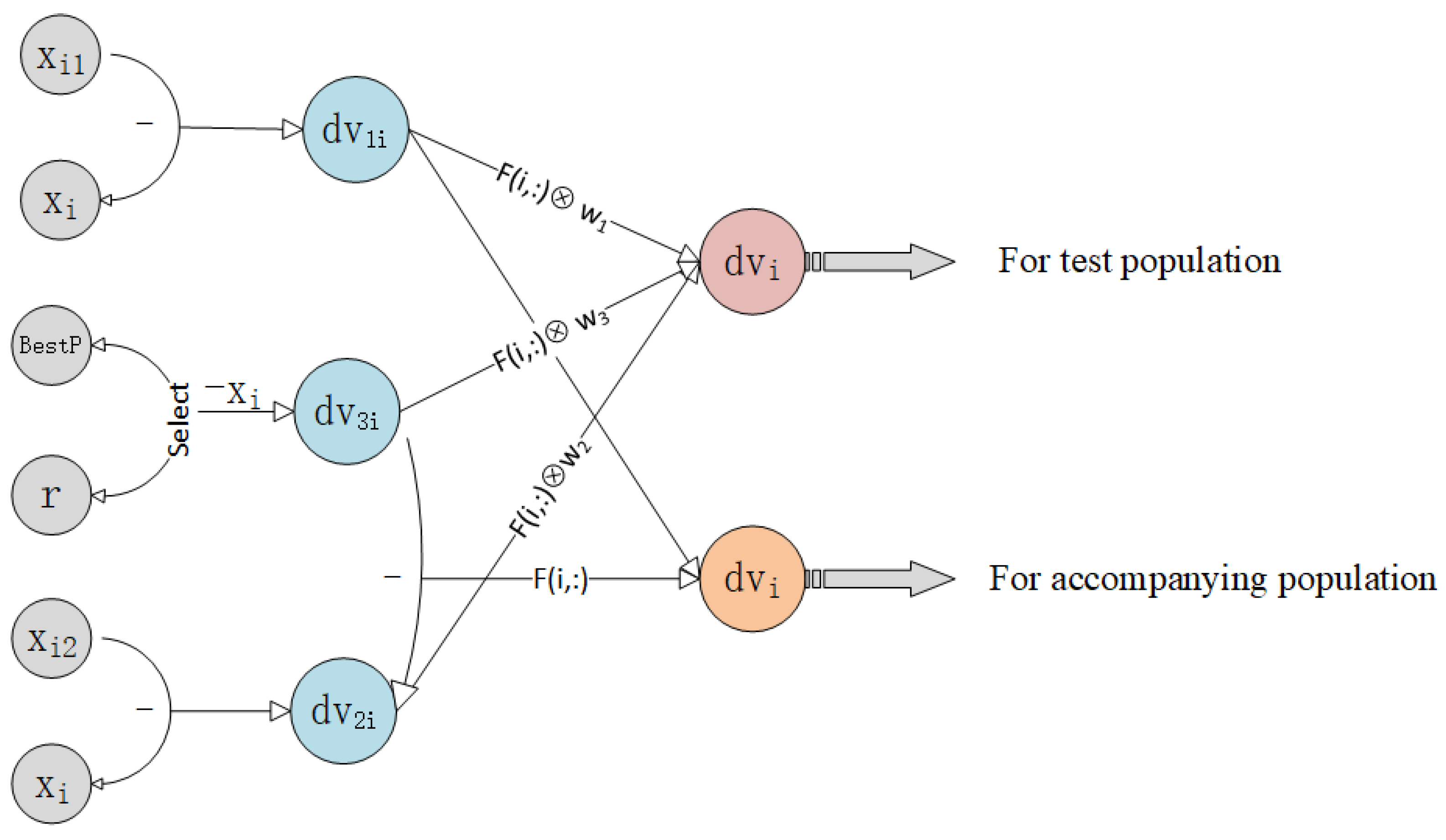

To synthesize the three direction vectors in the test population, an adaptive weight factor is proposed in this paper according to the iterative process of the algorithm:

where

,

, and

are the combined weight factors of

,

, and

;

is the current iteration number;

is the maximum number of iterations. It follows from the above equations that

and

are generated in the same way, so they have the same weights. In order to fully utilize the leadership of the current optimal solution, the weight of

should be higher than

and

and increase with the number of iterations. As a result,

is a direction vector with elite strategies.

For the accompanying population, its combined direction vector consists of the difference between direction vectors and . This structure enables the accompanying population to have a stronger exploration ability.

In summary, the combined direction vector

is defined as follows:

where ⊗ denotes the Hadamard product.

is the value of the evolutionary step size of the

ith individual. The step size is generated by the Levy distribution, which has more powerful exploratory capabilities.
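The text does not say how the Levy-distributed step sizes are drawn. One widely used option is Mantegna's algorithm, sketched below; the choice of this generator, the stability index `beta=1.5`, and the name `levy_steps` are all assumptions made for illustration.

```python
import math

import numpy as np


def levy_steps(size, beta=1.5, rng=None):
    """Draw heavy-tailed step sizes with Mantegna's algorithm (assumed generator).

    beta is the Levy stability index (0 < beta <= 2); 1.5 is a common default,
    not a value taken from the source.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Scale of the numerator Gaussian, as prescribed by Mantegna's method.
    sigma_u = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, size)
    v = rng.normal(0.0, 1.0, size)
    return u / np.abs(v) ** (1 / beta)
```

Most draws are small, but occasional very large steps occur, which is the property the text associates with stronger exploration.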

Figure 2 exemplifies the specific way in which the mutation operates.

2.4. Crossover and Selection

The crossover rate plays a critical role in both the global search ability and the convergence speed of DE, and these two goals conflict. Thus, the key is to find a crossover rate that balances the convergence rate and the global search ability. In APDE, the crossover rate is generated from an adaptive exponential distribution rather than set to the traditional constant value, which enables the algorithm to escape local optima efficiently in the later stages.

The crossover process is controlled by the mapping matrix from BDE, in which the permutation operation is employed to embed the crossover rate CR into the searching directions of each individual. This embedding map is initialized as an

zero matrix. At each BDE iteration step, the numerical values of the map elements are updated via the following Equation (15):

where

, and

is an offspring generation after crossover and mutation. When the value of

is outside the search region, it is replaced by a random number within the search region.

Finally, we can update the populations as follows.
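The update formula is missing from the text, so the sketch below shows only the two steps that the surrounding prose describes: out-of-range components are replaced by random values inside the search region, and a trial individual replaces its parent only if it is better. Greedy one-to-one selection on a minimization problem is the standard DE rule and is assumed here; the names `repair_bounds` and `greedy_select` are hypothetical.

```python
import numpy as np


def repair_bounds(trial, lower, upper, rng):
    """Replace every out-of-range component with a uniform random value in range."""
    trial = np.array(trial, dtype=float)               # work on a copy
    lower_b = np.broadcast_to(lower, trial.shape)
    upper_b = np.broadcast_to(upper, trial.shape)
    out = (trial < lower_b) | (trial > upper_b)
    random_points = lower_b + rng.random(trial.shape) * (upper_b - lower_b)
    trial[out] = random_points[out]
    return trial


def greedy_select(pop, pop_fit, trial, trial_fit):
    """Keep a trial individual only where it is strictly better (assumed minimization)."""
    improved = trial_fit < pop_fit
    new_pop = np.where(improved[:, None], trial, pop)
    new_fit = np.where(improved, trial_fit, pop_fit)
    return new_pop, new_fit
```

Under this selection rule the best fitness in the population never gets worse from one iteration to the next.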

2.5. Two-Stage Evolutionary Strategy

It is well known that evolutionary algorithms struggle to avoid falling into a local optimum without reducing their convergence rate. The core difficulty is balancing the randomness of the search against its optimization pressure within the algorithmic process. Thus, we address the problem by setting different search goals for the early and later stages of the algorithm.

A multi-population strategy can enhance the algorithm’s search ability and population diversity, enabling rapid convergence in the early stage, while the later stage emphasizes the random selection of mutation strategies.

In the two stages of the proposed algorithm, we use half of the maximum number of iterations as the dividing point, and we implement different mutation operator selection strategies. In the first stage, APDE applies different mutation strategies to the different populations, thus achieving effective information exchange between populations. In the second stage, the quality of individuals in the test and accompanying populations increases, and the efficiency of the multi-population strategy decreases. As a result, the test and accompanying populations are merged in this stage, and the mutation strategies are selected with equal probability. This operation increases the algorithmic randomness to enhance the search capability of the algorithm in the later stage. The pseudo-code of APDE is shown in Algorithm 1.

Algorithm 1 APDE Algorithm.

- Initialization;
- …;
- …;
- 1:NP;
- for … do
  - Population Segmentation;
  - …;
  - if … then
    - …
  - else
    - …
  - end if
  - Generation of Random Direction Vectors;
  - …
  - …;
  - Generation of Evolutionary Step Size Value;
  - for … to … do
    - …);
    - …
  - end for
  - First Phase;
  - if … then
    - if … then
      - …;
      - …;
    - else
      - …;
      - …;
    - end if
  - end if
  - Second Phase;
  - if … then
    - if … then
      - …;
    - else
      - …;
    - end if
    - if … then
      - …;
    - else
      - …;
    - end if
  - end if
  - Crossing and Selection;
  - …;
  - …;
  - for … to … do
    - …;
  - end for
  - Generation of Trial Pattern Vectors;
  - …;
  - Boundary Control Mechanism;
  - for … to … do
    - for … to D do
      - if … then
        - …;
      - end if
    - end for
  - end for
  - Update;
  - for … to … do
    - if … then
      - …;
    - end if
  - end for
  - …;
  - ….
- end for
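The stage-dependent choice of mutation strategy described in Section 2.5 can be sketched as follows. This illustrates only the selection logic, not the mutation formulas, which are not recoverable from the text. Treating the midpoint iteration as part of the first stage, the strategy labels, and the name `choose_strategy` are assumptions.

```python
import numpy as np


def choose_strategy(iteration, max_iter, label, rng):
    """Pick which mutation strategy an individual uses at a given iteration.

    label: 0 = test population, 1 = accompanying population.
    Assumption: the first stage covers iterations up to max_iter / 2 inclusive.
    """
    if iteration <= max_iter / 2:
        # First stage: each population keeps its own dedicated strategy.
        return "test" if label == 0 else "accompanying"
    # Second stage: populations are merged and strategies are equally likely.
    return "test" if rng.random() < 0.5 else "accompanying"
```

In the second stage the population label is ignored, which reflects the merging of the two populations and the increased randomness that the text describes.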

3. Convergence Analysis of APDE

In this section, the convergence of APDE is analyzed using Markov chains [21]. It is shown that the presented algorithm converges to the global optimum in probability.

Definition 1. Let a random sequence consist of random variables taking values on a discrete set; the totality of the discrete values is denoted as . is called the state space. If for , , there is Then, is called a Markov Chain [21]. The state space of the random sequence can either be finite or infinite states as needed, and for the differential evolution class of algorithms, we consider to be finite.
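For reference, the Markov property that Definition 1 invokes is usually written as below. The symbols $X_n$ for the random sequence, $S$ for the state space, and $i_0, \dots, i_{n+1}$ for the states are notational assumptions and may differ from the original formula.

```latex
% Standard Markov property (notation assumed, not taken from the source):
% the next state depends only on the current state.
\[
  P\bigl(X_{n+1} = i_{n+1} \mid X_0 = i_0,\, X_1 = i_1,\, \dots,\, X_n = i_n\bigr)
  = P\bigl(X_{n+1} = i_{n+1} \mid X_n = i_n\bigr),
  \qquad i_0, \dots, i_{n+1} \in S,\; n \ge 0 .
\]
```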

Definition 2. Let m and n be positive integers. If the Markov Chain is in state i at time m, the probability of transferring to state j after n steps is . If the corresponding transfer probabilities are independent of time m, that is, , the Markov chain is said to be time-homogeneous [21].

Lemma 1. When the crossover rate is fixed, the population sequence of APDE is a finite time-homogeneous Markov Chain.

Proof. Let the length of individuals be M, the population size be N, and the dimension of the state space be v; then, the size of the state space is . As the individuals in APDE take real values and the state space of the population sequence is a finite set under finite precision conditions, the sequence of populations is finite. Also, the mutation, crossover, and selection operators of the population sequence in APDE are independent of time t when the crossover rate is a constant. The next state is solely dependent on the current state . Therefore, the sequence of populations is time-homogeneous. As a result, is a finite time-homogeneous Markov Chain. □

Let

be a population sequence during the

tth iteration.

is an individual in

, and

f is the fitness function on

. Let

be the global optimal fitness value; then, we have the following definition.

Definition 3. Let be a sequence of random variables. The variables in the sequence denote the best fitness of the population in the state at time t. An algorithm is said to be convergent if and only if This definition indicates that algorithm convergence means that the probability of containing the global optimal solution in the population is almost 1, as the algorithm iterates through a sufficient number of generations.

Theorem 1. When the number of iterations is large enough, the APDE algorithm converges to the global optimum of the problem with a probability of 1.

Proof. Let

be the probability that population

is in state

,

be the probability that population

moves from state

to state

,

S be the state collection,

I be a set consisting of optimal states, and

be a constant value. Note that

; by the nature of Markov chains, we have

After crossover and mutation, an individual will not be selected when its fitness value is worse than the current optimal solution, and there is

□

In APDE,

is adaptive in the range of

. The probability

is determined primarily by the probability of state transfers resulting from the mutation process. It is calculated as follows:

Here, denotes the mutation locus generated for the nth dimension of the ith individual. N denotes the dimension of the optimization problem.

From the previous proof, we can conclude that the probability that the population contains the global optimal solution converges to 1 when

takes a lower bound of 0.1 or an upper bound of 0.9. Additionally, we have

It can be shown that when

is taken adaptively in

, the conclusion can still be reached using the Squeeze Theorem [22].

In summary, APDE can find the optimal solution with a probability of 1 under the condition that the number of iterations is sufficiently large.

4. Numerical Experiment

In this paper, we employ the test functions in CEC2005 and CEC2017 to verify the performance of APDE; their specific information is listed in Appendix A and Appendix B. The proposed algorithm is compared with six DE-type variants and three other types of intelligent optimization algorithms. Each algorithm is run 30 times independently, with the maximum number of function evaluations set at

. The values of the dimension

D are given in Table A1 and Table A2 in Appendix A. Finally, we use the Wilcoxon signed-rank test to assess whether the differences between APDE and the competing algorithms are significant.

4.1. Comparative Analysis with APDE and DE-Type Algorithms

In this section, CoDE [23], DE [24], BDE, JADE [25], NSDE [26], and SaDE [27] are selected for comparison to verify the performance of APDE. The initial values of their internal parameters are listed in Table 1, and the experimental results are shown in Table 2 and Table 3. The results show that APDE achieves optimal values on 16 test functions in CEC2005 and 13 test functions in CEC2017. Although APDE fails to achieve the best results on the remaining test functions, it remains competitive with the comparison algorithms. Among the 16 multimodal test functions of CEC2005, APDE fails to find the optimal solution on only three. This indicates that APDE has a strong ability to escape local optima and maintains good optimization performance on multimodal problems.

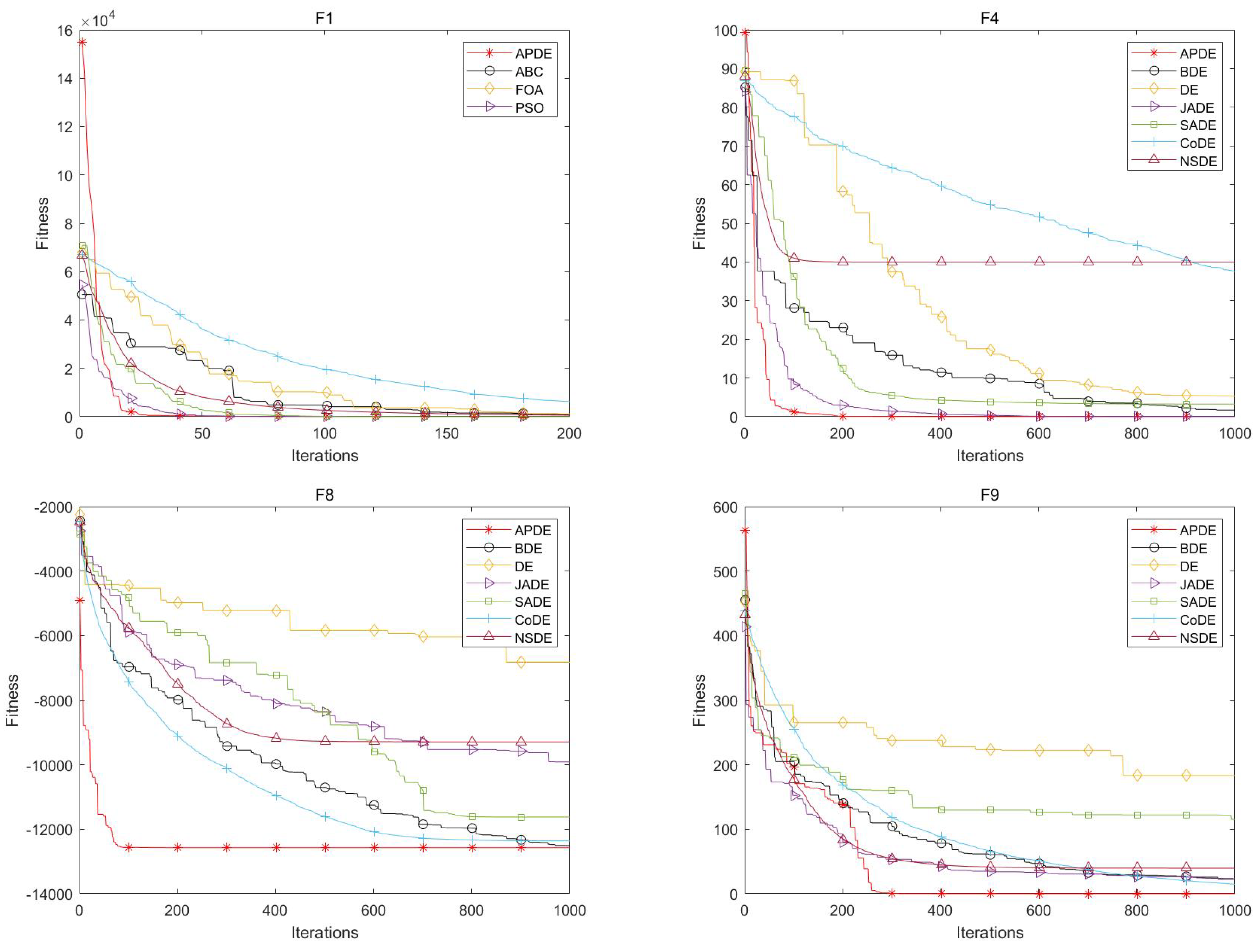

The convergence curves of the six comparison algorithms on test functions F1, F4, F8, and F9 in CEC2005 are illustrated in Figure 3. The horizontal coordinate is the number of iterations, and the vertical coordinate is the fitness value. The figure shows that APDE converges significantly faster than the comparison algorithms, especially on F8. On F8, APDE converges to the optimal solution more quickly, while the other algorithms all appear to fall into a local optimum, which results in slow convergence or failure to reach the optimal solution.

Figure 4 shows the convergence curves of the six comparison algorithms on test functions F5, F8, F20, and F26 in CEC2017. APDE converges faster than the comparison algorithms and shows a strong global search capability. Taking F20 as an example, APDE appears to fall into a local optimum at about 100 iterations and its convergence slows down significantly, but it soon escapes the local optimum and rapidly approaches the optimal solution. In contrast, the other algorithms converge much more slowly after falling into a local optimum and fail to leave that search region.

4.2. Comparative Analysis with APDE and Non-DE Algorithms

In this section, three algorithms, FOA [28], PSO [29], and ABC [30], are selected for comparison to verify the performance of APDE. The initial values of the internal parameters of the comparison methods are given in Table 4. The experimental results in Table 5 and Table 6 show that APDE achieved optimal values for 17 test functions in CEC2005 and 27 test functions in CEC2017. Although APDE failed to achieve the best results on the remaining eight functions, it still ranked second or found the optimal value among several comparative algorithms.

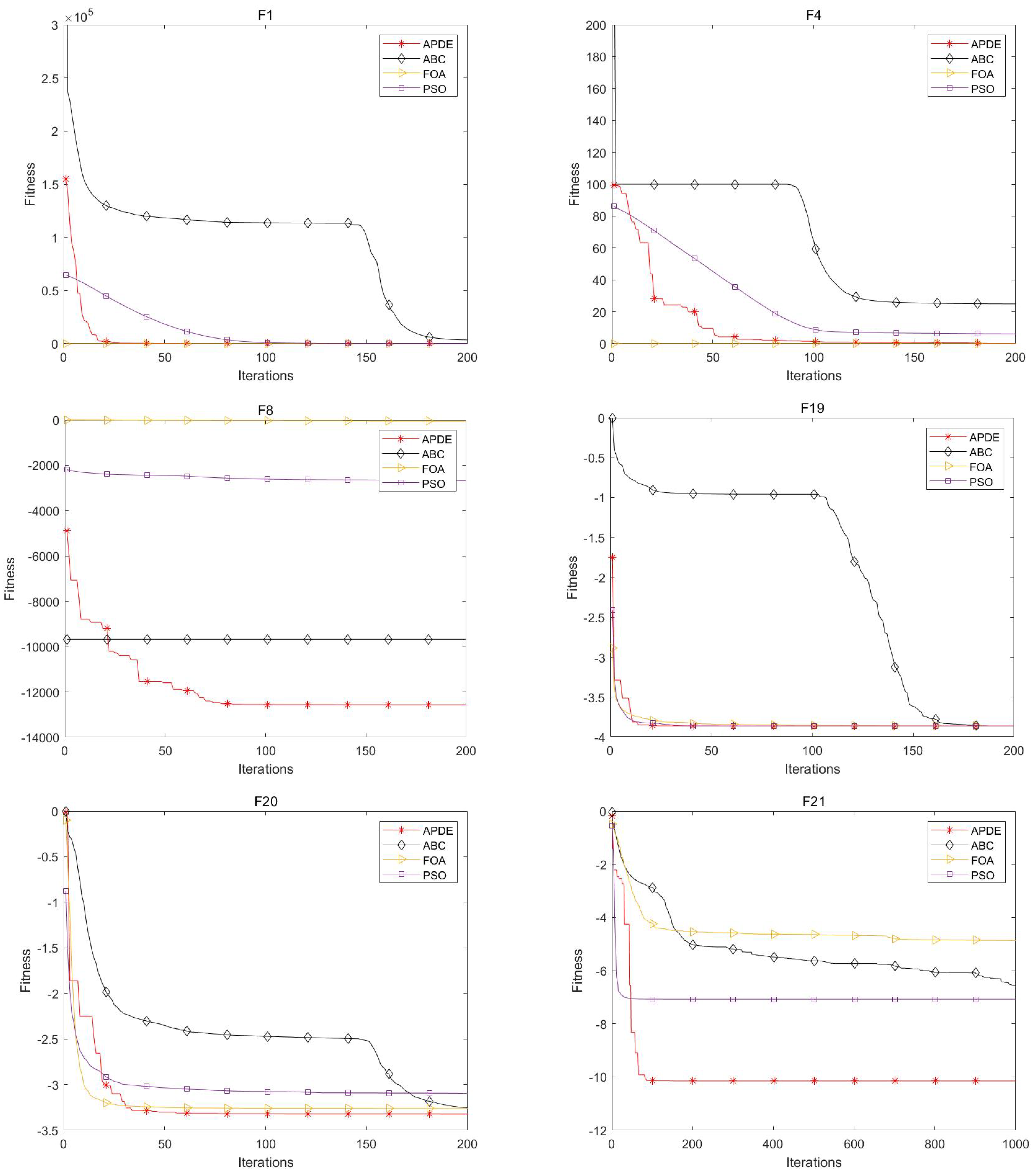

In Figure 5, the convergence curves of the three comparison algorithms on test functions F1, F4, F8, F19, F20, and F21 in CEC2005 are presented. The figure shows that APDE not only converges more quickly but also finds better solutions than the other algorithms. On F1 and F4, the convergence of APDE is second only to that of FOA, because the initial fitness value of FOA is closer to the optimal solution 0. On F8, F19, F20, and F21, the optimal value of the test function is negative, and FOA has no initial solution advantage. In these cases, APDE achieves the fastest convergence rate, as well as the smallest fitness value, through its powerful global exploration capability.

In Figure 6, the convergence curves of the three comparison algorithms on test functions F1, F5, F9, F22, F24, and F28 in CEC2017 are presented. APDE has a unique two-stage structure and a mutation strategy that is more conducive to global search. As a result, APDE is able to jump out of the current search region after briefly falling into a local optimum, which enhances the vitality of the algorithm. As can be seen in Figure 6, APDE has the fastest convergence rate, as well as the best fitness value, especially on F22. After APDE and PSO fall into a local optimum at the same point, PSO gradually stops updating the optimal solution, whereas APDE regains its search capability by jumping out of the current search region.

4.3. Wilcoxon Signed-Rank Test

To further analyze the performance of APDE, the Wilcoxon signed-rank test is employed in this paper to compare, pair by pair, the experimental results on CEC2005 and CEC2017 obtained by APDE and the other algorithms. The Wilcoxon signed-rank test ranks the absolute values of the differences between the observed values and the center of the null hypothesis, and it sums these ranks separately by sign to form its test statistic. It suits paired comparisons and does not require the differences between pairs of data to be normally distributed. Table A3 and Table A4 in Appendix B give the results of the significance comparisons based on the Wilcoxon signed-rank test with

. The comparison results are represented as (+, =, -) in the last row of Table A3 and Table A4. Here, (+) indicates that APDE is statistically superior to the comparison algorithm on the current benchmark function, (=) indicates that APDE is statistically equivalent to the comparison algorithm, and (-) indicates that APDE is statistically inferior to the comparison algorithm on the current benchmark function.
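As an illustration of the statistic described above, the following is a minimal sketch of a paired Wilcoxon signed-rank test. It uses the normal approximation for the two-sided p-value without tie or continuity corrections, which is a simplification and not necessarily the exact procedure used for Table A3 and Table A4. The name `wilcoxon_signed_rank` is hypothetical.

```python
import math

import numpy as np


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, p) for paired samples x and y.

    Simplifications (assumptions): zero differences are dropped, tied
    absolute differences share their average rank, and the two-sided
    p-value comes from the normal approximation without corrections.
    """
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    d = d[d != 0]
    n = d.size
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_d = np.abs(d)
    order = np.argsort(abs_d, kind="stable")
    sorted_abs = abs_d[order]
    ranks = np.empty(n)
    i = 0
    while i < n:
        j = i
        while j + 1 < n and sorted_abs[j + 1] == sorted_abs[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1   # average rank of the tie group
        i = j + 1
    w_plus = float(ranks[d > 0].sum())            # rank sum of positive differences
    w_minus = float(ranks[d < 0].sum())           # rank sum of negative differences
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))          # two-sided normal tail
    return w_plus, w_minus, p
```

For one benchmark function, `x` and `y` would hold the results of the 30 independent runs of APDE and of one comparison algorithm; a small p-value together with the sign of the larger rank sum decides between (+) and (-), and otherwise the outcome is (=).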

The Wilcoxon signed-rank test results on the CEC2005 test set can be expressed as follows: CoDE (15,7,1), DE (15,6,2), BDE (16,7,0), JADE (16,4,3), NSDE (19,3,1), SaDE (16,5,2), FOA (21,0,2), PSO (21,2,0), and ABC (19,2,2). APDE outperforms the comparison algorithm in 158 of the 207 pairwise comparisons on CEC2005 (23 functions against 9 algorithms), and it is statistically equivalent to the comparison algorithm in 36 of them.

The results on CEC2017 are as follows: CoDE (29,0,0), DE (27,0,2), BDE (26,0,3), JADE (24,0,5), NSDE (21,0,8), SaDE (22,0,7), FOA (29,0,0), PSO (26,0,3), and ABC (29,0,0). Here, APDE outperforms the comparison algorithm in 233 of the 261 pairwise comparisons (29 functions against 9 algorithms), and it performs statistically worse than the comparison algorithm in only 28 of them.
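The totals quoted for both benchmark suites can be checked directly from the per-algorithm (+, =, -) triples. The short script below only re-adds the numbers given in the text; the dictionary and function names are illustrative.

```python
# (+, =, -) tallies of APDE against each comparison algorithm, as quoted in the text.
cec2005 = {
    "CoDE": (15, 7, 1), "DE": (15, 6, 2), "BDE": (16, 7, 0),
    "JADE": (16, 4, 3), "NSDE": (19, 3, 1), "SaDE": (16, 5, 2),
    "FOA": (21, 0, 2), "PSO": (21, 2, 0), "ABC": (19, 2, 2),
}
cec2017 = {
    "CoDE": (29, 0, 0), "DE": (27, 0, 2), "BDE": (26, 0, 3),
    "JADE": (24, 0, 5), "NSDE": (21, 0, 8), "SaDE": (22, 0, 7),
    "FOA": (29, 0, 0), "PSO": (26, 0, 3), "ABC": (29, 0, 0),
}


def totals(results):
    """Sum wins, ties, and losses over all comparison algorithms."""
    wins = sum(r[0] for r in results.values())
    ties = sum(r[1] for r in results.values())
    losses = sum(r[2] for r in results.values())
    return wins, ties, losses, wins + ties + losses


print(totals(cec2005))  # (158, 36, 13, 207)
print(totals(cec2017))  # (233, 0, 28, 261)
```

Each triple sums to the number of functions in its suite (23 for CEC2005, 29 for CEC2017), so the grand totals of 207 and 261 are numbers of pairwise comparisons rather than numbers of distinct test functions.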

4.4. Computational Complexity Analysis

The time complexity of APDE and the other comparison algorithms in this paper is

. To analyze the computational cost of APDE in more detail, Table 7 provides the execution times of each algorithm running 20,000 times on CEC2017’s F18. The table indicates that, among the 10 algorithms evaluated, only PSO is faster than APDE, which may be attributed to the simple architecture of PSO. Nevertheless, PSO requires considerable additional time for parameter tuning. Figure 7 shows that APDE is stable across problems of varying dimensions, with its runtime not changing significantly as the dimensionality increases. This further supports the advantage of APDE in addressing high-dimensional problems.