Abstract

This study uses accelerated life tests to examine the durability of highly reliable products. These tests apply stress levels higher than those of normal use, such as increased temperature, voltage, or pressure, to induce early failures. The power half-logistic (PHL) distribution is adopted because its probability density and hazard rate functions are flexible enough to represent various data patterns commonly encountered in practical applications. The step stress partially accelerated life testing model is analyzed under an adaptive type II progressive censoring scheme, with lifetimes following the PHL distribution. Maximum likelihood is used to estimate the model parameters and to construct asymptotic confidence intervals. Bayesian estimates are also obtained using Lindley’s approximation and the Markov Chain Monte Carlo (MCMC) method under different loss functions. Additionally, D- and A-optimality criteria are applied to determine the optimal stress-changing time. Simulation studies are conducted to evaluate the performance of the estimation methods and the optimality criteria. Finally, real-world datasets are analyzed to demonstrate the practical usefulness of the proposed model.

Keywords:

power half-logistic distribution; partially accelerated life testing; adaptive type II progressive censoring; optimal design; Lindley technique; MCMC; simulation

MSC:

62P30; 62L15; 62N01; 62N02

1. Introduction

Manufacturers are designing and producing more reliable products as a result of today’s heightened consumer demands and growing market competition. With shrinking time-to-market, it is critical to evaluate and estimate a product’s reliability during the design and development phase. In addition, technological advances mean that product designs change constantly. As a result, it is increasingly difficult to obtain lifetime information for highly reliable goods or materials tested under normal conditions, because few failures occur within a practical test period. To address this, the industrial sector often uses accelerated life testing (ALT) or partially accelerated life testing (PALT) to gather sufficient failure data quickly and to understand the relationship between failures and external stress factors. These tests can substantially reduce time, labor, resources, and costs.

In ALTs, all units are subjected to stress levels higher than usual to induce failures more quickly. In contrast, PALTs test units under both normal and elevated stress conditions, with the aim of collecting more failure data within a constrained time frame without subjecting all units to high stress. PALTs are particularly beneficial when time and cost are pressing concerns. The information obtained from such tests can be used to estimate the failure behavior of the units under normal conditions. DeGroot and Goel [1] developed the statistical modeling of PALTs based on the tampered random variable model.

Nelson [2] suggested various methods of applying stress under accelerated testing conditions, with the constant stress and step stress models being the most widely used. In a step stress partially accelerated life test (SSPALT), the stress level applied to the units is increased either at predetermined times or once a specified number of failures has occurred. Such a test often involves two stress levels: units start at the normal stress level and are switched to a higher level after a fixed duration. Additional details about step stress accelerated life testing can be found in [3]. Several researchers have studied SSPALT, including [4,5,6,7,8].

Censored data arise naturally in survival, reliability, and medical studies because of cost or time constraints. Although traditional type I and type II censoring methods are widely used, progressive censoring schemes (type I and type II) offer significant advances in data collection in lifetime studies, because they overcome the limited flexibility of conventional methods. The type II progressive censoring (TIIPC) scheme, specifically, generalizes the traditional type II approach by allowing units to be removed throughout the experiment, not just at the endpoint. In the TIIPC scheme, $n$ units are placed on test. Let $Y_{1:m:n} < Y_{2:m:n} < \cdots < Y_{m:m:n}$ denote the times at which failures are recorded for $m$ of the $n$ units. Immediately after the first failure $Y_{1:m:n}$, $R_1$ units are randomly removed from the $n-1$ surviving units. At the time of the second failure $Y_{2:m:n}$, $R_2$ of the $n-2-R_1$ remaining surviving units are randomly removed, and so on. The test continues until the $m$th failure time $Y_{m:m:n}$, at which point the experiment ends and all $R_m = n - m - \sum_{i=1}^{m-1} R_i$ remaining surviving units are removed. The removals are predetermined such that $n = m + \sum_{i=1}^{m} R_i$. References [9,10] discussed the advantages of TIIPC schemes.
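The removal mechanism just described can be mimicked directly, which is a useful sanity check on any faster generator. The Python sketch below is illustrative only: the function name `simulate_tiipc` is hypothetical, and it simply places all $n$ lifetimes on test and withdraws $R_i$ randomly chosen survivors at each observed failure.

```python
import numpy as np


def simulate_tiipc(lifetimes, removals, rng):
    """Simulate type II progressive censoring by direct random removal.

    lifetimes: lifetimes of all n units placed on test
    removals:  censoring scheme (R_1, ..., R_m) with n = m + sum(R_i)
    Returns the m observed failure times in increasing order.
    """
    lifetimes = np.asarray(lifetimes, dtype=float)
    removals = [int(r) for r in removals]
    n, m = lifetimes.size, len(removals)
    if n != m + sum(removals):
        raise ValueError("the scheme must satisfy n = m + sum(R_i)")
    alive = list(range(n))  # indices of units still on test
    observed = []
    for r in removals:
        # the next failure is the smallest lifetime among the surviving units
        k = min(alive, key=lambda idx: lifetimes[idx])
        observed.append(lifetimes[k])
        alive.remove(k)
        if r > 0:
            # immediately withdraw r of the surviving units at random
            drop = set(rng.choice(len(alive), size=r, replace=False).tolist())
            alive = [idx for pos, idx in enumerate(alive) if pos not in drop]
    return np.array(observed)


rng = np.random.default_rng(1)
life = rng.exponential(size=10)
obs = simulate_tiipc(life, [2, 0, 1, 0, 2], rng)
```

With $n = 10$ and the scheme $(2, 0, 1, 0, 2)$, the function returns five increasing failure times, and the condition $n = m + \sum R_i$ is checked before the test starts.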

The TIIPC scheme offers flexibility in the design of an experiment by allowing predetermined numbers of units, $(R_1, R_2, \ldots, R_m)$, to be removed during the test. However, a crucial limitation of TIIPC, highlighted in [11], is that the total test duration is random and can be much longer than under conventional type II censoring. Such an extended time frame may be unacceptable in practice because of time constraints or unexpected developments. Consequently, the authors of [11] proposed the adaptive type II progressive censoring (ATIIPC) scheme, which allows experimenters to adjust the planned removal schedule of the surviving units during the test. Recently, SSPALT models under the ATIIPC scheme have attracted considerable attention; see [12,13,14,15,16].

Statistical analysis and modeling of lifetime data play a vital role in various applied sciences, including engineering, economics, insurance, and biological sciences. To address these needs, numerous continuous distributions have been proposed in the statistical literature, such as exponential, Lindley, gamma, log-normal, half-logistic, and Weibull distributions. Among these, the power half-logistic (PHL) distribution has been extensively utilized to analyze lifetime and reliability data. The PHL distribution, introduced by Krishnarani [17], is defined as follows:

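Let $X$ be a random variable following the half-logistic distribution with survival function

$$S_X(x) = \frac{2e^{-\lambda x}}{1 + e^{-\lambda x}}, \quad x > 0,\ \lambda > 0. \tag{1}$$

Consider the transformation $Y = X^{1/\alpha}$, $\alpha > 0$; then $Y$ follows the PHL distribution, which is characterized by a scale parameter $\lambda$ and a shape parameter $\alpha$. Its probability density function (pdf) $f(y)$, survival function $S(y)$, and hazard rate function (hf) $h(y)$ are defined as follows:

$$f(y) = \frac{2\alpha\lambda\, y^{\alpha-1} e^{-\lambda y^{\alpha}}}{\left(1 + e^{-\lambda y^{\alpha}}\right)^{2}}, \tag{2}$$

$$S(y) = \frac{2e^{-\lambda y^{\alpha}}}{1 + e^{-\lambda y^{\alpha}}}, \tag{3}$$

and

$$h(y) = \frac{\alpha\lambda\, y^{\alpha-1}}{1 + e^{-\lambda y^{\alpha}}}, \tag{4}$$

where $y > 0$ and $\alpha, \lambda > 0$, and the cumulative distribution function (cdf) is $F(y) = 1 - S(y)$.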
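The Python sketch below evaluates these functions, assuming the parameterization $f(y) = 2\alpha\lambda y^{\alpha-1}e^{-\lambda y^{\alpha}}/(1+e^{-\lambda y^{\alpha}})^2$ with shape $\alpha$ and scale $\lambda$. It also inverts the cdf: setting $w = e^{-\lambda y^{\alpha}}$ in $F(y) = (1-w)/(1+w) = u$ gives $y = \{\lambda^{-1}\log[(1+u)/(1-u)]\}^{1/\alpha}$, which is convenient for simulation. The function names are hypothetical.

```python
import numpy as np

# Assumed PHL parameterization: shape alpha > 0, scale lam > 0, support y > 0.


def phl_sf(y, alpha, lam):
    """Survival function S(y) = 2 e^{-lam y^alpha} / (1 + e^{-lam y^alpha})."""
    w = np.exp(-lam * np.power(y, alpha))
    return 2.0 * w / (1.0 + w)


def phl_cdf(y, alpha, lam):
    """Cumulative distribution function F(y) = 1 - S(y)."""
    return 1.0 - phl_sf(y, alpha, lam)


def phl_pdf(y, alpha, lam):
    """Density 2 alpha lam y^(alpha-1) e^{-lam y^alpha} / (1 + e^{-lam y^alpha})^2."""
    w = np.exp(-lam * np.power(y, alpha))
    return 2.0 * alpha * lam * np.power(y, alpha - 1.0) * w / (1.0 + w) ** 2


def phl_hazard(y, alpha, lam):
    """Hazard rate f(y) / S(y) = alpha lam y^(alpha-1) / (1 + e^{-lam y^alpha})."""
    w = np.exp(-lam * np.power(y, alpha))
    return alpha * lam * np.power(y, alpha - 1.0) / (1.0 + w)


def phl_ppf(u, alpha, lam):
    """Quantile function: solves F(y) = u for 0 <= u < 1."""
    return (np.log((1.0 + u) / (1.0 - u)) / lam) ** (1.0 / alpha)
```

Feeding uniform random numbers to `phl_ppf` gives PHL draws by inversion, and evaluating `phl_hazard` on a grid for several shape values reproduces curves of the kind shown in Figure 1.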

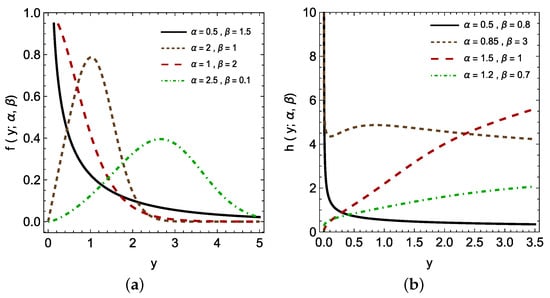

The PHL distribution is a versatile model capable of capturing a wide range of lifetime data, including symmetric and asymmetric types, which makes it effective at representing various data patterns commonly encountered in practical applications. To illustrate this flexibility, Figure 1 shows the pdf and the hazard rate function for different values of the parameters $\alpha$ and $\lambda$. The PHL distribution has been applied successfully in various fields. For example, Alomani et al. [18] demonstrated the flexibility of the PHL distribution in modeling a wide range of datasets, including COVID-19 data [19], times between failures of secondary reactor pumps [20], and active repair times for 40 airborne communication transceivers [21]. Alomani et al. [18] also addressed various estimation techniques and data analysis methods for constant partially accelerated life tests under the PHL distribution. El-Awady et al. [22] extended this work by investigating the properties and parameter estimation of a generalization of the PHL distribution, the exponentiated half-logistic Weibull (EHLW) distribution, and examined the stress-strength reliability parameter $R = P(Y < X)$ within the context of the EHLW distribution.

Figure 1.

(a) The pdf and (b) hazard rate function of the PHL distribution for different values of $\alpha$ and $\lambda$.

This study is motivated by the growing need for robust statistical modeling techniques in reliability and life testing, particularly under complex experimental conditions such as the SSPALT model and the ATIIPC scheme. These settings are highly relevant in engineering and industrial applications, where understanding the reliability of components under varying stress levels is essential. The ATIIPC scheme, in particular, offers enhanced flexibility by allowing experimenters to adapt the removal schedule of units during the test, making it well suited to real-world applications with time and resource constraints.

Although the PHL distribution has proven flexible in modeling a wide range of lifetime data, including symmetric and asymmetric failure patterns, and in reliability analysis under accelerated testing conditions, there remains a gap in the literature regarding its application under complex experimental conditions such as the SSPALT model with the ATIIPC scheme. This study addresses this gap by estimating the parameters of the PHL distribution and the acceleration factor when the data are obtained from the ATIIPC scheme under the SSPALT model.

To achieve this, both maximum likelihood estimation (MLE) and Bayesian methods are employed. MLE is used for its well-established theoretical properties, such as consistency and asymptotic efficiency, which make it a standard choice for parameter estimation in reliability studies. For Bayesian inference, Lindley’s approximation and Markov Chain Monte Carlo (MCMC) techniques are used. Lindley’s approximation provides approximate Bayesian estimates when closed-form solutions are unavailable and is computationally simple and fast. MCMC, on the other hand, is a powerful and flexible technique for sampling from complex posterior distributions, especially in high-dimensional parameter spaces. Because the chain converges to the exact posterior distribution as the number of iterations grows, the resulting estimates do not rely on large-sample approximations, which is valuable for small sample sizes. Furthermore, this study explores the problem of determining the optimal stress change time using two distinct optimality criteria.

The structure of this paper is organized as follows. Section 2 introduces the SSPALT model under the ATIIPC scheme using the PHL distribution. Section 3 provides maximum likelihood estimates (MLEs) and asymptotic confidence intervals (ACIs). In Section 4, we discuss the derivation of Bayesian estimates using Lindley’s approximation and the Markov Chain Monte Carlo (MCMC) technique. Section 5 examines two optimality criteria to determine the optimal timing for changing the stress level. Section 6 presents Monte Carlo simulation studies to showcase the effectiveness of the point and interval estimates for the parameters, as well as the optimal time for altering the stress level. Section 7 details the data analysis conducted for specific case studies. Finally, Section 8 concludes the paper with a summary of the findings.

2. Model Assumptions

In the ATIIPC scheme, an initial estimate of the experiment duration, $T$, is set, which can be exceeded if necessary. The desired number of failures $m$ is specified. Furthermore, the censoring scheme $(R_1, R_2, \ldots, R_m)$ is predetermined, but its values can be adapted during the experiment. The ATIIPC scheme balances a shorter experiment time against the need for sufficient data (including extreme lifetimes) for reliable statistical inference. This is achieved through a dynamic termination strategy based on two cases:

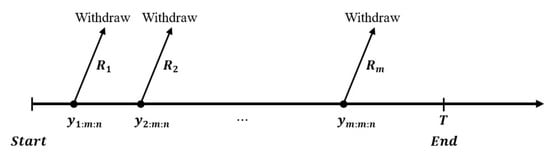

- Case (i): If $Y_{m:m:n} < T$, all $m$ failures are observed before $T$, and the experiment ends at the $m$th failure time $Y_{m:m:n}$. The preassigned censoring scheme $(R_1, R_2, \ldots, R_m)$ is followed.

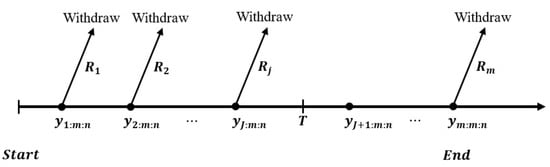

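- Case (ii): If $Y_{m:m:n} > T$, the experiment continues beyond the planned duration $T$. Suppose $J$ failures have occurred before $T$, that is, $Y_{J:m:n} < T < Y_{J+1:m:n}$, where $J < m$. In this scenario, no further units are removed after $T$ until the $m$th failure $Y_{m:m:n}$, at which point the test is terminated and all remaining surviving units are removed. Consequently, the censoring scheme is adjusted to $(R_1, \ldots, R_J, 0, \ldots, 0, R_m^{*})$, where $R_m^{*} = n - m - \sum_{i=1}^{J} R_i$. Figure 2 and Figure 3 illustrate the two cases of the ATIIPC scheme.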

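Under SSPALT, the lifetime of a unit is given by

$$Y = \begin{cases} Z, & Z \le \tau,\\[4pt] \tau + \dfrac{Z - \tau}{\beta}, & Z > \tau, \end{cases}$$

where $Z$ is the lifetime of the unit under normal use conditions, $\tau$ is the stress change time, $\beta$ is the acceleration factor, and $Y$ is the total lifetime under SSPALT. This variable transformation was proposed by DeGroot and Goel [1]. The pdf of $Y$ under the SSPALT model can be expressed as

$$f(y) = \begin{cases} 0, & y \le 0,\\ f_1(y), & 0 < y \le \tau,\\ f_2(y), & y > \tau, \end{cases}$$

where $f_1(y)$ is the pdf of the PHL distribution defined in Equation (2), with the corresponding survival function $S_1(y)$ given in Equation (3), and $f_2(y)$ is given by

$$f_2(y) = \beta f_1(\psi) = \frac{2\alpha\lambda\beta\, \psi^{\alpha-1} e^{-\lambda \psi^{\alpha}}}{\left(1 + e^{-\lambda \psi^{\alpha}}\right)^{2}},$$

where $\psi = \tau + \beta(y - \tau)$ and $y > \tau$. The corresponding survival function is

$$S(y) = \begin{cases} S_1(y) = \dfrac{2e^{-\lambda y^{\alpha}}}{1 + e^{-\lambda y^{\alpha}}}, & 0 < y \le \tau,\\[8pt] S_2(y) = \dfrac{2e^{-\lambda \psi^{\alpha}}}{1 + e^{-\lambda \psi^{\alpha}}}, & y > \tau. \end{cases}$$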
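As a numerical check on the tampered random variable model, the Python sketch below implements the piecewise pdf and survival function of $Y$, assuming the PHL form $S_1(y) = 2e^{-\lambda y^{\alpha}}/(1+e^{-\lambda y^{\alpha}})$ for the use-condition lifetime $Z$, together with the transformation $Y = Z$ for $Z \le \tau$ and $Y = \tau + (Z - \tau)/\beta$ otherwise. The function names and parameter values are hypothetical. Integrating the pdf over $(0, \infty)$ should return 1, because $\int_\tau^\infty \beta f_1(\psi)\,dy = S_1(\tau)$.

```python
import numpy as np
from scipy.integrate import quad


def phl_pdf(y, alpha, lam):
    w = np.exp(-lam * y**alpha)
    return 2.0 * alpha * lam * y ** (alpha - 1.0) * w / (1.0 + w) ** 2


def phl_sf(y, alpha, lam):
    w = np.exp(-lam * y**alpha)
    return 2.0 * w / (1.0 + w)


def sspalt_pdf(y, alpha, lam, beta, tau):
    """f_1(y) for y <= tau; f_2(y) = beta * f_1(psi), psi = tau + beta (y - tau), otherwise."""
    if y <= tau:
        return phl_pdf(y, alpha, lam)
    return beta * phl_pdf(tau + beta * (y - tau), alpha, lam)


def sspalt_sf(y, alpha, lam, beta, tau):
    """S_1(y) for y <= tau; S_1(psi) after the stress change."""
    psi = y if y <= tau else tau + beta * (y - tau)
    return phl_sf(psi, alpha, lam)


def trv_lifetime(z, beta, tau):
    """Tampered random variable: Y = Z if Z <= tau, else tau + (Z - tau) / beta."""
    z = np.asarray(z, dtype=float)
    return np.where(z <= tau, z, tau + (z - tau) / beta)


alpha, lam, beta, tau = 1.5, 0.8, 2.0, 1.0
args = (alpha, lam, beta, tau)
total = (quad(sspalt_pdf, 0.0, tau, args=args)[0]
         + quad(sspalt_pdf, tau, np.inf, args=args)[0])
```

Splitting the integral at $\tau$ keeps the quadrature away from the kink in the pdf at the stress change time.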

Figure 2.

The test time $Y_{m:m:n} < T$.

Figure 3.

The test time $Y_{m:m:n} > T$.

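Under SSPALT with the ATIIPC scheme, $n$ units are tested with the progressive censoring scheme $(R_1, R_2, \ldots, R_m)$. Each unit operates under normal conditions and, if it has not failed by time $\tau$, is switched to accelerated conditions. The experiment proceeds according to the planned scheme until time $T$. If the $m$th failure occurs after $T$, no units are withdrawn after $T$ except at the time of the $m$th failure, when all remaining surviving units are removed. The applied scheme in this case is $(R_1, \ldots, R_J, 0, \ldots, 0, R_m^{*})$ with $R_m^{*} = n - m - \sum_{i=1}^{J} R_i$, and the observed data take the form

$$\left(y_{1:m:n}, R_1\right), \ldots, \left(y_{J:m:n}, R_J\right), \left(y_{J+1:m:n}, 0\right), \ldots, \left(y_{m-1:m:n}, 0\right), \left(y_{m:m:n}, R_m^{*}\right).$$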

If $T \to 0$, the ATIIPC scheme reduces to the conventional type II censoring scheme. If $T \to \infty$, it reduces to the conventional TIIPC scheme.
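The adaptive rule can be sketched as a small Python function that, given the observed failure times, the planned scheme, $T$, and $n$, returns $J$ and the scheme actually applied. It is a minimal sketch under our own naming (`adapt_scheme` is hypothetical); in Case (i) it returns $J = m$ and the planned scheme unchanged.

```python
def adapt_scheme(failure_times, removals, T, n):
    """Return (J, applied scheme) under adaptive type II progressive censoring.

    failure_times: the m ordered failure times y_{1:m:n} < ... < y_{m:m:n}
    removals:      planned scheme (R_1, ..., R_m)
    T:             planned (threshold) test time
    n:             number of units placed on test
    """
    m = len(removals)
    if failure_times[-1] < T:
        # Case (i): all m failures occur before T, so the planned scheme is kept
        return m, list(removals)
    # Case (ii): J failures occurred before T, i.e. y_J < T < y_{J+1}
    J = sum(1 for t in failure_times if t < T)
    # no withdrawals after T until the m-th failure, where all survivors are removed
    applied = list(removals[:J]) + [0] * (m - J - 1)
    applied.append(n - m - sum(removals[:J]))
    return J, applied
```

Setting $T = 0$ gives $J = 0$ and the scheme $(0, \ldots, 0, n - m)$, that is, conventional type II censoring, while a very large $T$ leaves the TIIPC scheme untouched.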

The statistical inference based on the ATIIPC scheme under SSPALT will be described in the following sections.

3. Maximum Likelihood Estimation

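Based on the ATIIPC scheme, the likelihood function of the parameter vector $\boldsymbol{\theta} = (\alpha, \lambda, \beta)$ is given by

$$L(\boldsymbol{\theta}) = C \prod_{i=1}^{n_u} f_1(y_i) \prod_{i=n_u+1}^{m} f_2(y_i) \prod_{i=1}^{J} \left[S(y_i)\right]^{R_i} \left[S(y_m)\right]^{R_m^{*}}, \tag{7}$$

where $y_i \equiv y_{i:m:n}$, $n_u$ is the number of failures observed before $\tau$, $S(\cdot)$ is the SSPALT survival function ($S_1$ for $y \le \tau$ and $S_2$ for $y > \tau$), $R_m^{*} = n - m - \sum_{i=1}^{J} R_i$ (in Case (i), $J = m$ and $R_m^{*} = 0$), and $C$ is a constant that does not depend on $\boldsymbol{\theta}$.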

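Maximum likelihood is a popular method for estimating unknown parameters because, under regularity conditions, its estimators are consistent and asymptotically efficient. Taking the natural logarithm of both sides of Equation (7) gives the log-likelihood function $\ell(\boldsymbol{\theta})$.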

To obtain the MLEs of the parameters $\alpha$, $\lambda$, and $\beta$, the following system of nonlinear equations must be solved:

and

where , and . These nonlinear equations have no closed-form solution, so they are solved numerically with an iterative method such as Newton–Raphson.
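A minimal Python sketch of the numerical maximization is shown below. It assumes the usual progressive-censoring likelihood $\prod_{i=1}^{m} f(y_i)\,[S(y_i)]^{R_i}$ evaluated with the scheme actually applied (so the adjusted $R_m^{*}$ and the zeros after $J$ are already in `R`), the SSPALT pdf $f_2(y) = \beta f_1(\psi)$ with $\psi = \tau + \beta(y - \tau)$ for $y > \tau$, and the PHL form $f_1(y) = 2\alpha\lambda y^{\alpha-1}e^{-\lambda y^{\alpha}}/(1+e^{-\lambda y^{\alpha}})^2$. For simplicity it uses SciPy's derivative-free Nelder–Mead method in place of Newton–Raphson; the function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def phl_logpdf(z, alpha, lam):
    """log f_1(z) for the assumed PHL form."""
    u = lam * z**alpha
    return (np.log(2.0 * alpha * lam) + (alpha - 1.0) * np.log(z)
            - u - 2.0 * np.log1p(np.exp(-u)))


def phl_logsf(z, alpha, lam):
    """log S_1(z) = log 2 - u - log(1 + e^{-u}) with u = lam z^alpha."""
    u = lam * z**alpha
    return np.log(2.0) - u - np.log1p(np.exp(-u))


def neg_loglik(theta, y, R, tau):
    """Negative log-likelihood of SSPALT data under the applied censoring scheme R."""
    alpha, lam, beta = theta
    if alpha <= 0 or lam <= 0 or beta <= 0:
        return np.inf
    y = np.asarray(y, dtype=float)
    R = np.asarray(R, dtype=float)
    after = y > tau
    psi = np.where(after, tau + beta * (y - tau), y)  # age on the normal-use time scale
    log_f = phl_logpdf(psi, alpha, lam) + np.where(after, np.log(beta), 0.0)
    log_s = phl_logsf(psi, alpha, lam)
    return -(log_f.sum() + (R * log_s).sum())


def fit_mle(y, R, tau, start=(1.0, 1.0, 1.0)):
    """Minimize the negative log-likelihood with the Nelder-Mead simplex method."""
    res = minimize(neg_loglik, x0=np.asarray(start, dtype=float), args=(y, R, tau),
                   method="Nelder-Mead",
                   options={"maxiter": 5000, "xatol": 1e-8, "fatol": 1e-10})
    return res.x, res
```

A Newton–Raphson implementation would additionally require the score functions and second derivatives given in this section; the simplex method trades speed for not needing them.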

Approximate Confidence Intervals

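By the large-sample properties of the MLEs, under regularity conditions, the MLEs of $\alpha$, $\lambda$, and $\beta$ are asymptotically normally distributed. Specifically, $(\hat\alpha, \hat\lambda, \hat\beta)$ is approximately distributed as $N_3\big((\alpha, \lambda, \beta), \mathbf{V}\big)$, where $\mathbf{V}$ is the variance–covariance matrix. The inverse of the Fisher information matrix (FIM), denoted $\mathbf{I}(\boldsymbol{\theta})$, provides an estimate of $\mathbf{V}$. The FIM is obtained by taking the expected values of the negative second-order partial derivatives of the log-likelihood function with respect to $\alpha$, $\lambda$, and $\beta$:

$$\mathbf{I}(\boldsymbol{\theta}) = -E\left[\frac{\partial^{2}\ell(\boldsymbol{\theta})}{\partial\theta_i\,\partial\theta_j}\right]_{i,j=1,2,3}, \quad (\theta_1, \theta_2, \theta_3) = (\alpha, \lambda, \beta).$$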

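In practice, because the pdf is complex and the expectations involve intractable integrals, the observed FIM is used as an approximation. The observed FIM is computed at the MLEs $\hat\alpha$, $\hat\lambda$, and $\hat\beta$:

$$\mathbf{I}_0(\hat{\boldsymbol{\theta}}) = -\left[\frac{\partial^{2}\ell(\boldsymbol{\theta})}{\partial\theta_i\,\partial\theta_j}\right]_{\boldsymbol{\theta} = \hat{\boldsymbol{\theta}}},$$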

where

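The asymptotic $100(1-\gamma)\%$ two-sided confidence intervals for the unknown parameters are

$$\hat\theta_k \pm z_{\gamma/2}\sqrt{v_{kk}}, \quad k = 1, 2, 3,$$

where $(\theta_1, \theta_2, \theta_3) = (\alpha, \lambda, \beta)$, $v_{kk}$ are the diagonal elements of the inverse of the observed FIM, and $z_{\gamma/2}$ is the upper $(\gamma/2)$th percentile of the standard normal distribution.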
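The intervals above can be computed once the log-likelihood and the MLEs are available. The Python sketch below approximates the observed FIM by central finite differences, a numerical substitute for the analytical second derivatives, and returns $\hat\theta_k \pm z_{\gamma/2}\sqrt{v_{kk}}$ with $\gamma = 1 - \text{level}$. The helper names, the step size `h`, and the exponential example are our own assumptions.

```python
import numpy as np
from scipy.stats import norm


def numerical_hessian(f, x, h=1e-4):
    """Central-difference Hessian of a scalar function f at the point x."""
    x = np.asarray(x, dtype=float)
    k = x.size
    H = np.empty((k, k))
    for i in range(k):
        for j in range(k):
            ei = np.zeros(k)
            ei[i] = h
            ej = np.zeros(k)
            ej[j] = h
            H[i, j] = (f(x + ei + ej) - f(x + ei - ej)
                       - f(x - ei + ej) + f(x - ei - ej)) / (4.0 * h * h)
    return H


def wald_intervals(loglik, mle, level=0.95):
    """Asymptotic CIs theta_k +/- z * sqrt(v_kk), with V the inverse observed FIM."""
    obs_fim = -numerical_hessian(loglik, mle)
    V = np.linalg.inv(obs_fim)
    se = np.sqrt(np.diag(V))
    z = norm.ppf(0.5 + level / 2.0)
    mle = np.asarray(mle, dtype=float)
    return np.column_stack([mle - z * se, mle + z * se]), V


# Hypothetical check: exponential rate with n = 100 failures and total time s = 50,
# whose MLE n / s = 2 has observed information n / theta^2 = 25.
n, s = 100, 50.0
loglik = lambda th: n * np.log(th[0]) - th[0] * s
ci, V = wald_intervals(loglik, [n / s])
```

For the PHL model, `loglik` would be the log-likelihood in Equation (8) evaluated at $(\alpha, \lambda, \beta)$, and `mle` the numerical solution of the likelihood equations.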

4. Bayesian Estimation

In this section, we derive Bayes estimates of the parameters $\alpha$ and $\lambda$ and the acceleration factor $\beta$. A loss function measures the cost of the difference between an estimate and the true value of the parameter. Symmetric loss functions such as the squared error (SE) loss are commonly used, but asymmetric loss functions are more appropriate when overestimation and underestimation have different consequences, as is often the case in reliability studies. In addition to the SE loss, this study uses the linear exponential (LINEX) loss function, a common choice among asymmetric loss functions.

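Under the SE loss, the Bayes estimate of a parameter is its posterior mean. The LINEX loss can be written in the form

$$L(\hat\theta, \theta) = e^{c(\hat\theta - \theta)} - c(\hat\theta - \theta) - 1, \quad c \ne 0,$$

where the sign of $c$ determines the direction of the asymmetry; for $c > 0$, overestimation is penalized more heavily than underestimation. Under the LINEX loss function, the Bayes estimate of $\theta$, denoted $\hat\theta_{L}$, is given by

$$\hat\theta_{L} = -\frac{1}{c}\log E\left[e^{-c\theta} \mid \text{data}\right],$$

assuming that the posterior expectation of $e^{-c\theta}$ exists and is finite.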
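Given draws from the posterior, both estimators reduce to sample averages. The Python sketch below (the helper name is hypothetical) computes the posterior mean and $-c^{-1}\log\big[\tfrac{1}{N}\sum_j e^{-c\theta^{(j)}}\big]$, using SciPy's `logsumexp` to avoid overflow when $c\theta$ is large.

```python
import numpy as np
from scipy.special import logsumexp


def bayes_estimates(samples, c):
    """Posterior-mean (SE) and LINEX Bayes estimates from posterior draws."""
    s = np.asarray(samples, dtype=float)
    se = s.mean()
    # -(1/c) * log(mean(exp(-c * theta))), evaluated on the log scale
    linex = -(logsumexp(-c * s) - np.log(s.size)) / c
    return se, linex


# Hypothetical check: a gamma posterior with shape 20 and rate 10
rng = np.random.default_rng(7)
draws = rng.gamma(shape=20.0, scale=0.1, size=200_000)
se_est, linex_est = bayes_estimates(draws, c=1.0)
```

For this gamma posterior the exact values are 2 (SE) and $20\log(1.1) \approx 1.906$ (LINEX with $c = 1$); with $c > 0$ the LINEX estimate falls below the posterior mean because overestimation is penalized more.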

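To derive Bayes estimates, we assume independent gamma priors for $\alpha$, $\lambda$, and $\beta$ with densities $\pi_1(\alpha) \propto \alpha^{a_1-1}e^{-b_1\alpha}$, $\pi_2(\lambda) \propto \lambda^{a_2-1}e^{-b_2\lambda}$, and $\pi_3(\beta) \propto \beta^{a_3-1}e^{-b_3\beta}$, respectively. The joint prior distribution is then given by

$$\pi(\alpha, \lambda, \beta) \propto \alpha^{a_1-1}\lambda^{a_2-1}\beta^{a_3-1}\exp\left[-(b_1\alpha + b_2\lambda + b_3\beta)\right], \tag{20}$$

where the hyperparameters $a_i, b_i > 0$ for $i = 1, 2, 3$. The joint posterior density of $\alpha$, $\lambda$, and $\beta$ is given by

$$\pi^{*}(\alpha, \lambda, \beta \mid \text{data}) = \frac{L(\boldsymbol{\theta})\,\pi(\alpha, \lambda, \beta)}{\int_0^\infty\!\int_0^\infty\!\int_0^\infty L(\boldsymbol{\theta})\,\pi(\alpha, \lambda, \beta)\,d\alpha\,d\lambda\,d\beta}, \tag{21}$$

where $L(\boldsymbol{\theta})$ is the likelihood function given by Equation (7). Based on Equation (21), the Bayes estimate of any function $u(\alpha, \lambda, \beta)$ under the SE loss function and the LINEX loss function can be written as

$$\hat u_{SE} = \frac{\iiint u(\alpha, \lambda, \beta)\,L(\boldsymbol{\theta})\,\pi(\alpha, \lambda, \beta)\,d\alpha\,d\lambda\,d\beta}{\iiint L(\boldsymbol{\theta})\,\pi(\alpha, \lambda, \beta)\,d\alpha\,d\lambda\,d\beta} \tag{22}$$

and

$$\hat u_{LINEX} = -\frac{1}{c}\log\left[\frac{\iiint e^{-c\,u(\alpha, \lambda, \beta)}\,L(\boldsymbol{\theta})\,\pi(\alpha, \lambda, \beta)\,d\alpha\,d\lambda\,d\beta}{\iiint L(\boldsymbol{\theta})\,\pi(\alpha, \lambda, \beta)\,d\alpha\,d\lambda\,d\beta}\right], \tag{23}$$

respectively. The ratios of integrals in Equations (22) and (23) cannot be evaluated analytically. Therefore, we adopt two techniques to compute the Bayes estimates of $\alpha$, $\lambda$, and $\beta$, which are discussed in the following subsections.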

4.1. Lindley’s Approximation

In this subsection, approximate Bayes estimators of $\alpha$, $\lambda$, and $\beta$ are derived using Lindley’s approximation technique [23]. Lindley’s approximation is used in Bayesian inference to approximate posterior expectations when exact integration is computationally challenging. It is based on a Taylor series expansion of the integrands in the posterior ratio about the MLE, retaining terms up to the third-order derivatives of the log-likelihood, so that the expectations can be computed analytically. Compared with simulation-based methods such as MCMC, it is computationally inexpensive; because it expands about the MLE, its accuracy improves as the sample size increases. In this study, Lindley’s approximation is applied to derive approximate Bayes estimators of the model parameters under the squared error and LINEX loss functions, avoiding computationally expensive numerical integration.

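Under the squared error loss function, the posterior mean of a function $u(\boldsymbol{\theta})$ is given by

$$E[u(\boldsymbol{\theta}) \mid \text{data}] = \frac{\int u(\boldsymbol{\theta})\, e^{\ell(\boldsymbol{\theta}) + \rho(\boldsymbol{\theta})}\, d\boldsymbol{\theta}}{\int e^{\ell(\boldsymbol{\theta}) + \rho(\boldsymbol{\theta})}\, d\boldsymbol{\theta}}, \tag{24}$$

where $\ell(\boldsymbol{\theta})$ is the log-likelihood function in Equation (8), $\rho(\boldsymbol{\theta})$ is the logarithm of the prior density, and $\boldsymbol{\theta} = (\theta_1, \theta_2, \theta_3)$. Applying Lindley’s approximation and expanding about the MLE $\hat{\boldsymbol{\theta}}$, the posterior mean is approximated as

$$E[u(\boldsymbol{\theta}) \mid \text{data}] \approx u(\hat{\boldsymbol{\theta}}) + \frac{1}{2}\sum_{i}\sum_{j}\left(u_{ij} + 2u_i\rho_j\right)\sigma_{ij} + \frac{1}{2}\sum_{i}\sum_{j}\sum_{k}\sum_{l} L_{ijk}\,\sigma_{ij}\,\sigma_{kl}\,u_l, \tag{25}$$

where $u_i = \partial u/\partial\theta_i$, $u_{ij} = \partial^{2} u/\partial\theta_i\partial\theta_j$, $\rho_j = \partial\rho/\partial\theta_j$, $L_{ijk} = \partial^{3}\ell/\partial\theta_i\partial\theta_j\partial\theta_k$, and $\sigma_{ij}$ is the $(i,j)$th element of the inverse of the matrix $[-L_{ij}]$, with $L_{ij} = \partial^{2}\ell/\partial\theta_i\partial\theta_j$.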

For the case of three parameters, $\boldsymbol{\theta} = (\alpha, \lambda, \beta)$, Equation (25) simplifies to the following posterior mean of $u(\boldsymbol{\theta})$ under the squared error loss function:

where , , , and

where $L_{ijk}$, $i, j, k = 1, 2, 3$, are the third-order derivatives of the log-likelihood function (8), obtained by differentiating Equations (14)–(19) with respect to $\alpha$, $\lambda$, and $\beta$. These derivatives are given in Appendix A.

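Using the joint prior density function from Equation (20), we have

$$\rho_1 = \frac{a_1 - 1}{\alpha} - b_1, \quad \rho_2 = \frac{a_2 - 1}{\lambda} - b_2, \quad \rho_3 = \frac{a_3 - 1}{\beta} - b_3.$$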

Finally, the Bayes estimates of $\alpha$, $\lambda$, and $\beta$ under the SE loss function are obtained from Equation (26) as follows:

Under the LINEX loss function, applying Lindley’s approximation to the ratio of integrals in Equation (23), the posterior mean can be approximated as

The approximate Bayes estimates of $\alpha$, $\lambda$, and $\beta$ under the LINEX loss function are then derived as follows:

Note that all of the above quantities are evaluated at the MLEs $(\hat\alpha, \hat\lambda, \hat\beta)$.
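To make Lindley’s approximation concrete, the Python sketch below implements its one-parameter special case, $E[u \mid \text{data}] \approx u + \tfrac12(u'' + 2u'\rho')\sigma^2 + \tfrac12 u' L_3 \sigma^4$ with $\sigma^2 = -1/L_2$, rather than the three-parameter expression used in this paper. The illustration uses a hypothetical exponential model with a gamma prior, chosen only because its exact posterior mean $(a+n)/(b+s)$ is known.

```python
def lindley_one_param(u_hat, u1, u2, rho1, L2, L3):
    """One-parameter Lindley approximation of E[u(theta) | data].

    Every argument is evaluated at the MLE: u_hat = u(theta_hat); u1, u2 are the
    first and second derivatives of u; rho1 is the first derivative of the log-prior;
    L2, L3 are the second and third derivatives of the log-likelihood.
    """
    sigma2 = -1.0 / L2
    return u_hat + 0.5 * (u2 + 2.0 * u1 * rho1) * sigma2 + 0.5 * u1 * L3 * sigma2**2


# Hypothetical illustration: n exponential lifetimes with total time s, rate theta,
# and a Gamma(a, b) prior. The log-likelihood is n*log(theta) - theta*s.
n, s, a, b = 20, 10.0, 3.0, 2.0
theta_hat = n / s
approx = lindley_one_param(
    u_hat=theta_hat, u1=1.0, u2=0.0,     # u(theta) = theta
    rho1=(a - 1.0) / theta_hat - b,      # derivative of (a - 1) log theta - b theta
    L2=-n / theta_hat**2,
    L3=2.0 * n / theta_hat**3,
)
exact = (a + n) / (b + s)                # conjugate posterior mean
```

With $n = 20$, $s = 10$, $a = 3$, and $b = 2$, the approximation gives 1.9 against an exact posterior mean of $23/12 \approx 1.917$.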

4.2. MCMC Algorithm

In Bayesian inference, MCMC techniques are essential tools for sampling from complex posterior distributions, particularly when analytical computation is intractable. For example, for the posterior in Equation (21), MCMC methods generate samples that approximate the posterior distribution. Two common MCMC methods are Gibbs sampling and the Metropolis–Hastings (MH) algorithm. Gibbs sampling draws each parameter in turn from its full conditional distribution, which requires these conditionals to be easy to sample from. The MH algorithm is more general: it draws candidate values from a proposal distribution and accepts or rejects them so that the chain has the target posterior as its stationary distribution. The resulting samples are used to compute Bayes estimates and credible intervals (CRIs).

In this study, MCMC is employed because the conditional densities of $\alpha$, $\lambda$, and $\beta$ (Equations (35)–(37)) do not have standard distributional forms. The MH algorithm is chosen for its flexibility in sampling from such nonstandard conditional densities. The samples it generates approximate the posterior distribution and are used to compute Bayes estimates under the squared error and LINEX loss functions, as well as credible intervals. Unlike Lindley’s approximation, this approach can handle complex posterior distributions and yields results that become exact as the number of MCMC samples increases.

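The joint posterior distribution of $\alpha$, $\lambda$, and $\beta$, obtained from Equations (7) and (20), is expressed as

$$\pi^{*}(\alpha, \lambda, \beta \mid \text{data}) \propto L(\boldsymbol{\theta})\,\alpha^{a_1-1}\lambda^{a_2-1}\beta^{a_3-1}\exp\left[-(b_1\alpha + b_2\lambda + b_3\beta)\right].$$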

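The conditional densities of $\alpha$, $\lambda$, and $\beta$ are derived as follows:

$$\pi_1^{*}(\alpha \mid \lambda, \beta, \text{data}) \propto L(\boldsymbol{\theta})\,\alpha^{a_1-1}e^{-b_1\alpha}, \tag{35}$$

$$\pi_2^{*}(\lambda \mid \alpha, \beta, \text{data}) \propto L(\boldsymbol{\theta})\,\lambda^{a_2-1}e^{-b_2\lambda}, \tag{36}$$

and

$$\pi_3^{*}(\beta \mid \alpha, \lambda, \text{data}) \propto L(\boldsymbol{\theta})\,\beta^{a_3-1}e^{-b_3\beta}. \tag{37}$$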

Equations (35)–(37) do not have explicit distributional forms, and Bayesian estimates of the parameters are obtained using the MCMC algorithm with MH steps as follows:

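- Step 1: Start with initial values $(\alpha^{(0)}, \lambda^{(0)}, \beta^{(0)})$ and set $j = 1$.

- Step 2: Use the following MH algorithm to generate $\alpha^{(j)}$ from $\pi_1^{*}(\alpha \mid \lambda^{(j-1)}, \beta^{(j-1)}, \text{data})$:
  - (i) Generate a proposal $\alpha^{*}$ from the normal distribution $N(\alpha^{(j-1)}, \sigma_\alpha^{2})$.
  - (ii) Calculate the acceptance probability $r_\alpha = \min\left\{1, \dfrac{\pi_1^{*}(\alpha^{*} \mid \lambda^{(j-1)}, \beta^{(j-1)}, \text{data})}{\pi_1^{*}(\alpha^{(j-1)} \mid \lambda^{(j-1)}, \beta^{(j-1)}, \text{data})}\right\}$.
  - (iii) Generate $u \sim U(0, 1)$ and set $\alpha^{(j)} = \alpha^{*}$ if $u \le r_\alpha$; otherwise, set $\alpha^{(j)} = \alpha^{(j-1)}$.

- Step 3: Use the following MH algorithm to generate $\lambda^{(j)}$ from $\pi_2^{*}(\lambda \mid \alpha^{(j)}, \beta^{(j-1)}, \text{data})$:
  - (i) Generate a proposal $\lambda^{*}$ from $N(\lambda^{(j-1)}, \sigma_\lambda^{2})$.
  - (ii) Calculate the acceptance probability $r_\lambda = \min\left\{1, \dfrac{\pi_2^{*}(\lambda^{*} \mid \alpha^{(j)}, \beta^{(j-1)}, \text{data})}{\pi_2^{*}(\lambda^{(j-1)} \mid \alpha^{(j)}, \beta^{(j-1)}, \text{data})}\right\}$.
  - (iii) Generate $u \sim U(0, 1)$ and set $\lambda^{(j)} = \lambda^{*}$ if $u \le r_\lambda$; otherwise, set $\lambda^{(j)} = \lambda^{(j-1)}$.

- Step 4: Use the following MH algorithm to generate $\beta^{(j)}$ from $\pi_3^{*}(\beta \mid \alpha^{(j)}, \lambda^{(j)}, \text{data})$:
  - (i) Generate a proposal $\beta^{*}$ from $N(\beta^{(j-1)}, \sigma_\beta^{2})$.
  - (ii) Calculate the acceptance probability $r_\beta = \min\left\{1, \dfrac{\pi_3^{*}(\beta^{*} \mid \alpha^{(j)}, \lambda^{(j)}, \text{data})}{\pi_3^{*}(\beta^{(j-1)} \mid \alpha^{(j)}, \lambda^{(j)}, \text{data})}\right\}$.
  - (iii) Generate $u \sim U(0, 1)$ and set $\beta^{(j)} = \beta^{*}$ if $u \le r_\beta$; otherwise, set $\beta^{(j)} = \beta^{(j-1)}$.

- Step 5: Set $j = j + 1$ and repeat Steps 2–4 until $N$ iterations have been completed, obtaining $(\alpha^{(j)}, \lambda^{(j)}, \beta^{(j)})$ for $j = 1, \ldots, N$.

- Step 6: Compute Bayes estimates under the squared error and LINEX loss functions as follows:
  - For squared error loss: $\hat\theta_{SE} = \dfrac{1}{N - M}\displaystyle\sum_{j=M+1}^{N}\theta^{(j)}$.
  - For LINEX loss: $\hat\theta_{LINEX} = -\dfrac{1}{c}\log\left[\dfrac{1}{N - M}\displaystyle\sum_{j=M+1}^{N}e^{-c\,\theta^{(j)}}\right]$.

  Here, $\theta$ stands for $\alpha$, $\lambda$, or $\beta$, and $M$ is the number of burn-in iterations discarded to reduce the effect of the initial values.

- Step 7: Construct $100(1-\gamma)\%$ credible intervals (CRIs) using the $\gamma/2$ and $1-\gamma/2$ quantiles of the empirical posterior distribution of the retained MCMC samples.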

For more details regarding the MH algorithm, see the work of Robert et al. [24].
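The steps above can be sketched in Python as a component-wise random-walk Metropolis–Hastings sampler. This is a minimal sketch, not the implementation used in this study: `log_post` stands for any function returning the log of the joint posterior up to a constant (for example, the log-likelihood plus the log gamma priors) and returning `-inf` outside the positive parameter space, and the proposal standard deviations are tuning choices we assume. Because each full conditional is proportional to the joint posterior with the other parameters fixed, the acceptance ratio can be computed from the joint density.

```python
import numpy as np


def mh_within_gibbs(log_post, init, prop_sd, n_iter, burn_in, thin=1, seed=0):
    """Component-wise random-walk Metropolis-Hastings sampler.

    log_post: returns the log joint posterior up to a constant (-inf outside the support)
    init:     starting values, which must lie inside the support
    prop_sd:  standard deviation of the normal proposal for each parameter
    Returns the draws kept after discarding burn_in iterations and thinning.
    """
    rng = np.random.default_rng(seed)
    theta = np.asarray(init, dtype=float).copy()
    current = log_post(theta)
    if not np.isfinite(current):
        raise ValueError("initial values must have positive posterior density")
    chain = np.empty((n_iter, theta.size))
    for j in range(n_iter):
        for k in range(theta.size):
            proposal = theta.copy()
            proposal[k] = rng.normal(theta[k], prop_sd[k])
            candidate = log_post(proposal)
            # accept with probability min(1, pi(proposal) / pi(current))
            if rng.uniform() < np.exp(min(0.0, candidate - current)):
                theta, current = proposal, candidate
        chain[j] = theta
    return chain[burn_in::thin]


def log_post(t):
    """Hypothetical test target: Gamma(5, rate 2) and standard normal coordinates."""
    if t[0] <= 0:
        return -np.inf
    return 4.0 * np.log(t[0]) - 2.0 * t[0] - 0.5 * t[1] ** 2


draws = mh_within_gibbs(log_post, [1.0, 0.0], [1.0, 1.0], 20000, 2000, thin=3, seed=1)
```

The retained rows can be passed to the SE and LINEX formulas above, and `np.quantile(draws[:, k], [0.025, 0.975])` gives a 95% CRI for the $k$th parameter.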

5. Optimal Time of Changing Stress Level

In addition to parameter estimation, this paper addresses the problem of selecting the optimal stress-changing time for SSPALT under the PHL distribution and the ATIIPC scheme. We determine the optimal value $\tau^{*}$ using two criteria, D-optimality and A-optimality; see [5,25,26,27,28,29]. The practical implications of the optimal stress change time are as follows:

- Choosing $\tau^{*}$ by optimizing a function of the Fisher information matrix yields precise estimates of the model parameters and hence an efficient experimental design.

- By minimizing either the generalized asymptotic variance (D-optimality) or the sum of the parameter variances (A-optimality), $\tau^{*}$ makes the test as informative as possible for a given sample size and censoring scheme. Equivalently, a target precision can be reached with fewer test units, which reduces cost.

- In practical accelerated life tests, switching the stress level at the optimal time helps avoid unnecessary wear or overstress on components during testing, so that the test results remain realistic and applicable to real-world operating conditions.

- Determining $\tau^{*}$ gives test planners a systematic way to design experiments that combine precision with efficiency. This can lead to better-informed decisions on product reliability, warranty periods, and maintenance schedules.

5.1. D-Optimality

The D-optimality criterion, widely used in designing life tests, ensures high precision in parameter estimation by considering all model parameters jointly. It is based on the determinant of the Fisher information matrix $\mathbf{I}(\tau)$: maximizing this determinant minimizes the generalized asymptotic variance (GAV) of the MLEs of the model parameters. Since the GAV is the determinant of the asymptotic variance–covariance matrix $\mathbf{I}^{-1}(\tau)$, it can be expressed as

$$\mathrm{GAV}(\tau) = \left|\mathbf{I}^{-1}(\tau)\right| = \frac{1}{\left|\mathbf{I}(\tau)\right|}.$$

Thus, the optimal stress-changing time maximizes $|\mathbf{I}(\tau)|$ or, equivalently, minimizes $\mathrm{GAV}(\tau)$:

$$\tau^{*}_{D} = \arg\min_{\tau}\ \mathrm{GAV}(\tau).$$

5.2. A-Optimality

A-optimality minimizes the trace of the asymptotic variance–covariance matrix $\mathbf{I}^{-1}(\tau)$, which is why it is also known as the trace criterion. The optimal stress-changing time is determined by

$$\tau^{*}_{A} = \arg\min_{\tau}\ \operatorname{tr}\left[\mathbf{I}^{-1}(\tau)\right],$$

where the trace is the sum of the diagonal entries of the variance–covariance matrix:

$$\operatorname{tr}\left[\mathbf{I}^{-1}(\tau)\right] = \operatorname{Var}(\hat\alpha) + \operatorname{Var}(\hat\lambda) + \operatorname{Var}(\hat\beta).$$

Minimizing the trace therefore minimizes the total asymptotic variance of the parameter estimates, providing a measure of the overall efficiency of the test design.

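Minimizing $\mathrm{GAV}(\tau)$ (or $\operatorname{tr}[\mathbf{I}^{-1}(\tau)]$) requires solving $\partial\,\mathrm{GAV}(\tau)/\partial\tau = 0$ (or $\partial\,\operatorname{tr}[\mathbf{I}^{-1}(\tau)]/\partial\tau = 0$). Because of the complexity of these equations, no explicit closed-form solution is available, so an iterative numerical method is required to find the optimal value of $\tau$.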
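A grid search is a simple way to carry out this numerical minimization. In the Python sketch below, `fim_of_tau` is a hypothetical user-supplied function returning the $3 \times 3$ Fisher information matrix for a given $\tau$ (for instance, an observed or Monte Carlo averaged FIM); the toy matrix in the example is invented purely to illustrate the mechanics and has its information peak at $\tau = 2$.

```python
import numpy as np


def optimal_tau(fim_of_tau, grid):
    """Grid search for the D-optimal and A-optimal stress change times.

    D-optimality minimizes GAV(tau) = det(I^{-1}(tau));
    A-optimality minimizes trace(I^{-1}(tau)).
    """
    gav, trace = [], []
    for tau in grid:
        cov = np.linalg.inv(fim_of_tau(tau))  # asymptotic variance-covariance matrix
        gav.append(np.linalg.det(cov))
        trace.append(np.trace(cov))
    grid = np.asarray(grid, dtype=float)
    return grid[int(np.argmin(gav))], grid[int(np.argmin(trace))]


def toy_fim(tau):
    """Hypothetical 3x3 information matrix, largest at tau = 2 (illustration only)."""
    return np.diag([5.0 - (tau - 2.0) ** 2, 3.0, 4.0]) + 0.5


grid = np.linspace(0.5, 3.5, 31)
tau_d, tau_a = optimal_tau(toy_fim, grid)
```

Because the two criteria weight the covariance matrix differently, they can select different $\tau$ values for a real FIM; for this toy matrix both select $\tau = 2$. A finer grid or a one-dimensional optimizer can refine the result.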

6. Simulation

This section presents a Monte Carlo simulation study that assesses the performance of maximum likelihood and Bayesian estimation of the model parameters $\boldsymbol{\theta} = (\alpha, \lambda, \beta)$. Bayesian estimation is implemented with two techniques, Lindley’s approximation and MCMC, under the squared error and LINEX loss functions, where and . The performance of the point estimates is evaluated using the mean squared error (MSE) and average bias (AB), while the average width (AW) and coverage probability (CP) are used for the interval estimates (ACIs and CRIs). Three cases are considered, each with different values of the parameters, the stress change time $\tau$, and the experiment duration $T$:

- Case 1:

- , and .

- Case 2:

- , and .

- Case 3:

- , and .

For each case, 1000 samples were generated using Mathematica 14 with varying sample sizes and censoring levels , , , and . Additionally, three censoring schemes (CSs) were explored, as detailed below:

- CS I:

- for .

- CS II:

- for (for odd m);for (for even m).

- CS III:

- for .

To simulate data from the proposed model under the ATIIPC scheme, we followed an algorithm based on [11], with the detailed steps outlined below:

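- Determine the values of $n$, $m$, $\tau$, and $T$ and the values of the parameters $\alpha$, $\lambda$, and $\beta$.

- Generate the TIIPC sample with the censoring scheme $(R_1, R_2, \ldots, R_m)$ based on the method proposed by [30].

- Generate $m$ independent observations $W_1, W_2, \ldots, W_m$ from $U(0, 1)$.

- Calculate $V_i = W_i^{1/\left(i + \sum_{k=m-i+1}^{m} R_k\right)}$ for $i = 1, 2, \ldots, m$.

- Set $U_i = 1 - V_m V_{m-1} \cdots V_{m-i+1}$ for $i = 1, 2, \ldots, m$. Then, $U_1, U_2, \ldots, U_m$ is a TIIPC sample of size $m$ from the $U(0, 1)$ distribution.

- Under SSPALT with the TIIPC scheme, calculate
$$y_{i:m:n} = \begin{cases} F_1^{-1}(U_i), & U_i \le F_1(\tau),\\[4pt] \tau + \dfrac{F_1^{-1}(U_i) - \tau}{\beta}, & U_i > F_1(\tau), \end{cases}$$
where $F_1$ is the cdf of the PHL distribution, for $i = 1, 2, \ldots, m$.

- If $y_{m:m:n} < T$, the sample is complete (Case (i)). Otherwise, determine the value of $J$ such that $y_{J:m:n} < T < y_{J+1:m:n}$, and discard the sample $y_{J+2:m:n}, \ldots, y_{m:m:n}$.

- Generate the first $m - J - 1$ order statistics from the distribution truncated on the left at $y_{J+1:m:n}$, with pdf $f(y)/[1 - F(y_{J+1:m:n})]$, $y > y_{J+1:m:n}$, and sample size $n - J - 1 - \sum_{i=1}^{J} R_i$, and take them as $y_{J+2:m:n}, \ldots, y_{m:m:n}$.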
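The algorithm can be sketched in Python as follows. This is an illustrative implementation under stated assumptions, not the authors’ Mathematica code: the PHL quantile function is obtained by inverting $F_1(y) = (1 - e^{-\lambda y^{\alpha}})/(1 + e^{-\lambda y^{\alpha}})$, the SSPALT quantile uses the fact that $Y$ is an increasing function of $Z$, and the truncated draws use uniforms on $(U_{J+1}, 1)$, since $F(y_{J+1:m:n}) = U_{J+1}$ by construction. The function names are hypothetical.

```python
import numpy as np


def phl_ppf(u, alpha, lam):
    """Inverse of F_1(y) = (1 - e^{-lam y^alpha}) / (1 + e^{-lam y^alpha})."""
    return (np.log((1.0 + u) / (1.0 - u)) / lam) ** (1.0 / alpha)


def sspalt_ppf(u, alpha, lam, beta, tau):
    """Y is increasing in Z, so F_Y^{-1}(u) is the TRV transform of F_1^{-1}(u)."""
    z = phl_ppf(u, alpha, lam)
    return np.where(z <= tau, z, tau + (z - tau) / beta)


def simulate_atiipc(n, R, T, alpha, lam, beta, tau, rng):
    """Return (sample, applied scheme, J) for SSPALT data under the ATIIPC scheme."""
    R = np.asarray(R, dtype=int)
    m = R.size
    if n != m + R.sum():
        raise ValueError("the scheme must satisfy n = m + sum(R_i)")
    # Balakrishnan-Sandhu: progressive type II sample U_1 < ... < U_m from U(0, 1)
    W = rng.uniform(size=m)
    expo = np.arange(1, m + 1) + np.cumsum(R[::-1])  # i + R_m + ... + R_{m-i+1}
    V = W ** (1.0 / expo)
    U = 1.0 - np.cumprod(V[::-1])  # U_i = 1 - V_m V_{m-1} ... V_{m-i+1}
    y = sspalt_ppf(U, alpha, lam, beta, tau)
    if y[-1] < T:  # Case (i): the planned scheme is kept
        return y, R.copy(), m
    J = int(np.sum(y < T))  # y_{J:m:n} < T < y_{J+1:m:n}
    applied = np.concatenate([R[:J], np.zeros(m - J, dtype=int)])
    applied[-1] = n - m - R[:J].sum()
    k = m - J - 1
    if k > 0:
        # first k order statistics of the surviving units, truncated at y_{J+1:m:n}
        n_left = n - J - 1 - R[:J].sum()
        draws = sspalt_ppf(rng.uniform(U[J], 1.0, size=n_left), alpha, lam, beta, tau)
        y = np.concatenate([y[:J + 1], np.sort(draws)[:k]])
    return y, applied, J


rng = np.random.default_rng(3)
R = [2] * 10
y, applied, J = simulate_atiipc(30, R, 0.5, 1.5, 0.8, 2.0, 1.0, rng)
```

Repeating this call for each replicate and passing `y` and `applied` to the estimation routines reproduces the structure of the simulation design described above.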

For the Bayesian estimation approach, informative priors are employed, where the hyperparameters are determined using the method of moments. This ensures that the Gamma prior distribution’s mean matches the corresponding parameter’s true value, with a prior variance set to 1. The MCMC procedure was run for 10,000 iterations, discarding the initial 1000 iterations as a burn-in period. In addition, a thinning process was applied, retaining every third observation to reduce autocorrelation.
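For a gamma prior with shape $a$ and rate $b$, the mean is $a/b$ and the variance is $a/b^2$, so matching a mean $\mu$ and variance $v$ gives $b = \mu/v$ and $a = \mu^2/v$. The short Python sketch below encodes this, together with the number of draws left after burn-in and thinning; the function names and the example mean 1.5 are hypothetical.

```python
def gamma_hyperparameters(mean, var=1.0):
    """Shape a and rate b of a gamma prior with the given mean and variance.

    For Gamma(a, b) with rate b: mean = a / b and variance = a / b**2,
    hence b = mean / var and a = mean**2 / var.
    """
    return mean**2 / var, mean / var


def retained_draws(n_iter, burn_in, thin):
    """Number of MCMC draws kept after discarding burn_in iterations and thinning."""
    return len(range(burn_in, n_iter, thin))


a, b = gamma_hyperparameters(1.5)  # hypothetical true parameter value 1.5
```

With 10,000 iterations, a burn-in of 1000, and thinning by 3, 3000 draws remain; the light bulb settings in Section 7.1 (100,000 iterations, 10,000 burn-in, every 10th draw) give 9000.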

The simulation results are presented in Table 1, Table 2, Table 3, Table 4, Table 5, Table 6 and Table 7. Various conclusions about the performance of estimators are drawn as follows:

Table 1.

MSEs of the parameter estimates for Case 1.

Table 2.

ABs of the parameter estimates for Case 1.

Table 3.

MSEs of the parameter estimates for Case 2.

Table 4.

ABs of the parameter estimates for Case 2.

Table 5.

MSEs of the parameter estimates for Case 3.

Table 6.

ABs of the parameter estimates for Case 3.

Table 7.

AW and CP for confidence intervals for the parameters for all cases.

- As the sample size increases, the MSEs and ABs across all estimation methods and parameters tend to decrease.

- Censoring Scheme I generally shows lower MSEs for most parameter estimates compared to Schemes II and III. This trend implies that Scheme I might be more favorable for achieving accurate estimates.

- Across all estimation methods, parameter tends to exhibit larger MSE and AB values compared to parameters and .

- For the Bayesian estimates obtained through Lindley’s approximation or MCMC, the choice of loss function affects the MSE and AB: the symmetric SE loss consistently yields better results than the asymmetric LINEX loss.

- The MLE and MCMC with SE loss offer the most balanced performance in terms of AB and MSE across all cases, with MCMC slightly outperforming in larger samples. Lindley’s method, while effective for and , appears less reliable for .

- Censoring Scheme III, which tends to remove units later in the test, consistently shows higher MSEs and AB across all parameters and estimation methods, particularly in smaller samples. In contrast, Schemes I and II provide more reliable performance with generally lower MSEs and AB, supporting more accurate estimation.

- According to the interval estimation results in Table 7, the AWs of the ACIs and CRIs decrease as the sample size increases, and the CPs are mostly close to the nominal level. The CRIs are narrower than the ACIs and provide better coverage probabilities.

- The average values of the optimal stress change time $\tau^{*}$ based on A-optimality (AO) and D-optimality (DO) are listed in Table 8. Within each scheme, the values of $\tau^{*}$ obtained by the AO and DO methods are relatively close.

Table 8. The average optimal transition stress changing time using D-optimality and A-optimality.

- The MLE demonstrates competitive performance across all cases, particularly for smaller sample sizes. Its simplicity and computational efficiency make it a practical choice for applications where quick and reliable estimates are needed.

- The MCMC Bayesian estimates with SE loss perform slightly better in larger samples, while the MLE remains robust and consistent, especially for parameters and .

7. Application to Real Data

This section explores three examples based on real-world data: two from engineering and one from medicine. The engineering cases analyze failure times under normal and accelerated stress conditions, illustrating how to determine the optimal stress change time $\tau^{*}$ to reduce testing duration while ensuring accurate reliability assessments. They also demonstrate the use of maintenance optimization in repairable systems. The medical example applies the methods to disease progression data, incorporating censored observations, with the aim of informing treatment strategies and improving patient outcomes.

7.1. Light Bulb Data

The dataset utilized in this analysis originates from an SSPALT experiment conducted by [31]. In this experiment, a total of 64 light bulbs were subjected to a life test at an initial stress level of , representing normal operating conditions. At a predetermined time of h, the stress level increased to an accelerated condition of . During the experiment, failures were recorded out of the 64 tested samples. These failure times are presented in Table 9.

Table 9.

Failure times observed under normal operating conditions () and accelerated stress conditions (), with a stress change point at h.

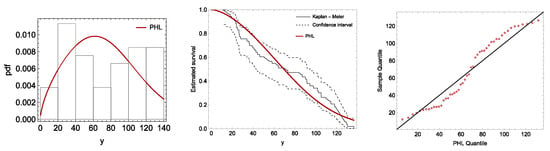

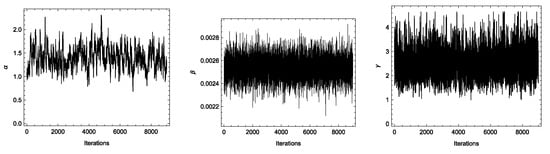

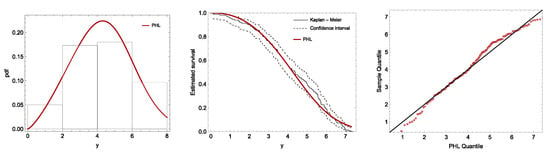

To validate the PHL distribution as a suitable model for the complete dataset, we performed a goodness-of-fit analysis. This step establishes the PHL distribution as the baseline model before the SSPALT model under the ATIIPC scheme is applied. Table 10 presents the fitting results, including the MLEs of the parameters, the Kolmogorov–Smirnov (KS) statistic, and its p-value. The p-value exceeded , which suggests that the data are well modeled by the PHL distribution. This result is further supported by Figure 4, which displays the estimated pdf over the histogram of the data, the estimated survival function compared with the Kaplan–Meier curve, and QQ-plots assessing the distributional fit.
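A goodness-of-fit check of this kind can be sketched in Python with SciPy’s `minimize` and `kstest`. The sketch below fits the PHL distribution to a complete sample by maximum likelihood, assuming the parameterization $F(y) = (1 - e^{-\lambda y^{\alpha}})/(1 + e^{-\lambda y^{\alpha}})$, and then computes the KS statistic and p-value. It is demonstrated on synthetic data, not on the light bulb data, and the function names are hypothetical. Strictly, the standard KS p-value is conservative when the parameters are estimated from the same data.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import kstest


def phl_cdf(y, alpha, lam):
    w = np.exp(-lam * np.power(y, alpha))
    return (1.0 - w) / (1.0 + w)


def phl_negloglik(theta, y):
    alpha, lam = theta
    if alpha <= 0 or lam <= 0:
        return np.inf
    u = lam * np.power(y, alpha)
    log_f = (np.log(2.0 * alpha * lam) + (alpha - 1.0) * np.log(y)
             - u - 2.0 * np.log1p(np.exp(-u)))
    return -log_f.sum()


def fit_and_ks(y):
    """Fit the PHL distribution by maximum likelihood, then run a KS test."""
    y = np.asarray(y, dtype=float)
    res = minimize(phl_negloglik, x0=[1.0, 1.0], args=(y,), method="Nelder-Mead")
    alpha, lam = res.x
    ks = kstest(y, lambda t: phl_cdf(t, alpha, lam))
    return (alpha, lam), ks.statistic, ks.pvalue


# synthetic example: 500 draws from PHL(alpha = 1.5, lam = 0.8) via the quantile function
rng = np.random.default_rng(11)
u = rng.uniform(size=500)
sample = (np.log((1.0 + u) / (1.0 - u)) / 0.8) ** (1.0 / 1.5)
(alpha_hat, lam_hat), ks_stat, p_value = fit_and_ks(sample)
```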

Table 10.

The MLE, confidence interval, KS statistic, and the p-value for light bulb data.

Figure 4.

Fitting plots of PHL distribution to the light bulb data.

To generate the ATIIPC sample, we consider , , , and a predetermined experiment duration of . The censoring scheme , where means that a is repeated k times consecutively, was employed. The generated ATIIPC sample for the SSPALT experiment is presented in Table 11. Because the ATIIPC scheme permits modifications to the censoring scheme during the experiment, the observations extended beyond the predetermined duration of . Consequently, the censoring scheme was adjusted to to account for the extended experimental period.

Table 11.

The generated ATIIPC sample from light bulb data.

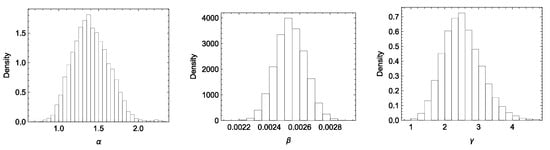

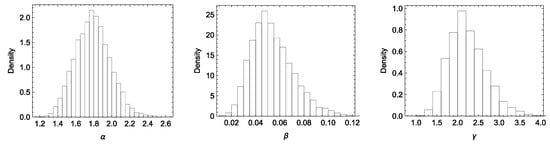

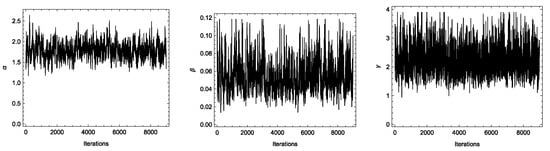

Table 12 reports the MLEs, the Bayesian estimates, the corresponding confidence intervals, and the optimal values of under the two optimality criteria, and . The Bayesian estimates were obtained using informative gamma priors for the parameters, with hyperparameters chosen so that each prior mean equals the corresponding MLE and the prior variance equals . The MCMC algorithm was run for 100,000 iterations, and the first 10,000 iterations were discarded as burn-in. Every 10th subsequent iteration was retained to reduce autocorrelation, yielding 9000 sampled values per chain. To assess convergence, kernel density estimates of the posterior samples of each parameter are presented in Figure 5, and the corresponding trace plots are shown in Figure 6. These figures indicate that the MCMC chains mix well and converge to their respective posterior distributions.

By analyzing failure times under normal and accelerated stress conditions, this example shows how the optimal stress change time can be determined so that the test yields precise parameter estimates within a limited testing duration. By optimizing , manufacturers can design accelerated life tests that provide reliable results in a shorter time frame, enabling faster product development and quality assurance. This is especially important in industries such as electronics, where rapid innovation and competitive market pressures demand quick yet accurate reliability assessments.
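The prior elicitation and chain post-processing described above reduce to a few lines. A Gamma(a, b) prior with rate b has mean a/b and variance a/b², so matching a target mean and variance gives b = mean/var and a = mean²/var. The retained sample size follows from (100,000 − 10,000)/10 = 9000. The summaries use squared-error (posterior mean) and LINEX losses as representative symmetric and asymmetric losses, together with equal-tailed credible intervals. These are assumptions, since this section does not restate the exact loss functions or interval type. The LINEX shape c, the helper names, and the stand-in chain are hypothetical.

```python
import numpy as np

def gamma_hyperparameters(mean, var):
    """Shape a and rate b of a Gamma(a, b) prior with the given mean and variance."""
    return mean**2 / var, mean / var

def postprocess(chain, burn_in=10_000, thin=10):
    """Discard the burn-in draws, then keep every `thin`-th remaining draw."""
    return np.asarray(chain)[burn_in::thin]

def bayes_estimates(draws, c=0.5, level=0.95):
    """Posterior summaries of one parameter from its retained MCMC draws."""
    se = draws.mean()                                 # Bayes estimate under squared-error loss
    linex = -np.log(np.mean(np.exp(-c * draws))) / c  # Bayes estimate under LINEX loss (shape c)
    lo, hi = np.quantile(draws, [(1 - level) / 2, (1 + level) / 2])  # equal-tailed interval
    return se, linex, (lo, hi)

rng = np.random.default_rng(0)
a, b = gamma_hyperparameters(mean=1.2, var=0.5)  # hypothetical prior mean and variance
chain = rng.gamma(a, 1 / b, size=100_000)         # stand-in for a real MCMC chain
draws = postprocess(chain)
print(len(draws), bayes_estimates(draws))
```

For c > 0, the LINEX estimate never exceeds the posterior mean (by Jensen's inequality). This reflects the heavier penalty that LINEX places on overestimation.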

Table 12.

Point and interval estimates of the parameters, and optimal values of for the light bulb data.

Figure 5.

Kernel densities for the posterior samples of the parameters for light bulb data.

Figure 6.

Trace plots for the posterior samples of the parameters for light bulb data.

7.2. Time-Between-Failures Data

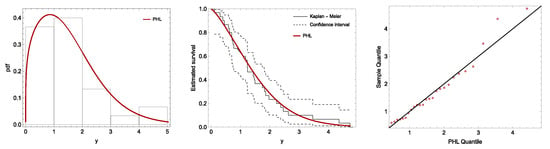

The dataset represents the times between failures of a repairable system, obtained from [32]. First, we assessed the adequacy of the PHL distribution by estimating its parameters via maximum likelihood and computing the KS test statistic and its p-value. The goodness-of-fit results, presented in Table 13, indicate that the PHL distribution fits the data well. We also assessed the fit visually by plotting a histogram of the data with the fitted density, comparing the Kaplan–Meier curve with the estimated survival curve, and constructing a QQ-plot for the PHL distribution (see Figure 7).
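The comparison between the Kaplan–Meier curve and the fitted survival function can be sketched as follows. The product-limit estimator is Ŝ(t) = ∏_{t_(i) ≤ t} (1 − d_i/n_i), where d_i is the number of failures at t_(i) and n_i is the number of units still at risk just before t_(i). The PHL survival function is S(t) = 1 − F(t) = 2e^{−λt^α}/(1 + e^{−λt^α}). This is a minimal sketch: the function names are hypothetical, and the toy input below is not the time-between-failures data.

```python
import numpy as np

def kaplan_meier(times, observed):
    """Kaplan-Meier estimate of S(t) at each distinct failure time.

    times    : follow-up times
    observed : 1 for an observed failure, 0 for a right-censored time
    """
    times = np.asarray(times, dtype=float)
    observed = np.asarray(observed, dtype=int)
    fail_times = np.unique(times[observed == 1])
    surv, s = [], 1.0
    for t in fail_times:
        at_risk = np.sum(times >= t)                      # n_i: units at risk just before t
        deaths = np.sum((times == t) & (observed == 1))   # d_i: failures at t
        s *= 1.0 - deaths / at_risk
        surv.append(s)
    return fail_times, np.array(surv)

def phl_survival(t, alpha, lam):
    """PHL survival function S(t) = 2 e^{-lam t^alpha} / (1 + e^{-lam t^alpha})."""
    e = np.exp(-lam * np.power(t, alpha))
    return 2.0 * e / (1.0 + e)

# Toy input: times 1, 2, 2, 3, 4 with the second 2 and the 4 censored.
print(kaplan_meier([1, 2, 2, 3, 4], [1, 1, 0, 1, 0]))
```

For complete data, such as a full record of times between failures, every entry of `observed` is 1 and the estimate reduces to the empirical survival function. A simple numerical summary of the visual comparison is the largest gap between `kaplan_meier` and `phl_survival` evaluated at the failure times.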

Table 13.

The MLE, confidence interval, KS statistic, and p-value for time-between-failures data.

Figure 7.

Fitting plots of PHL distribution to time-between-failures data.

To generate the ATIIPC sample under SSPALT, we set , , , and , with the censoring scheme . The generated data are presented in Table 14. Because the observations extended beyond the predetermined duration of , the censoring scheme was adjusted to . Under these conditions, the MLEs, Bayesian estimates, and confidence intervals are shown in Table 15. Kernel density plots and trace plots (Figure 8 and Figure 9) indicate that the MCMC chains for , , and mix well and converge to their posterior distributions. This analysis highlights the utility of the methods for maintenance optimization of repairable systems: by modeling the time between failures, the results can inform maintenance schedules, reducing downtime and improving operational efficiency in industries such as manufacturing and transportation.
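Visual checks such as trace and density plots can be complemented by numerical diagnostics computed from each chain. The sketch below computes sample autocorrelations and a rough effective sample size, n/(1 + 2Σρ_k), with the sum truncated at the first non-positive autocorrelation. This is a simplified variant of standard ESS estimators. The AR(1) series is a hypothetical stand-in for sampler output with strong autocorrelation. It also shows how discarding a burn-in and keeping every 10th draw lowers the lag-1 autocorrelation.

```python
import numpy as np

def autocorr(chain, max_lag=20):
    """Sample autocorrelation of a chain at lags 0, 1, ..., max_lag."""
    x = np.asarray(chain, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array([np.dot(x[: len(x) - k], x[k:]) / denom for k in range(max_lag + 1)])

def effective_sample_size(chain, max_lag=200):
    """Rough ESS: n / (1 + 2 * sum of autocorrelations before the first non-positive lag)."""
    rho = autocorr(chain, max_lag)[1:]
    cut = int(np.argmax(rho <= 0)) if np.any(rho <= 0) else rho.size
    return len(chain) / (1.0 + 2.0 * rho[:cut].sum())

# Stand-in for a sampler's output: an AR(1) series with coefficient 0.9.
rng = np.random.default_rng(1)
n, phi = 100_000, 0.9
eps = rng.normal(size=n)
chain = np.empty(n)
chain[0] = eps[0]
for i in range(1, n):
    chain[i] = phi * chain[i - 1] + eps[i]

thinned = chain[10_000::10]  # burn-in of 10,000, then every 10th draw
print(autocorr(chain, 1)[1], autocorr(thinned, 1)[1])
print(effective_sample_size(chain), effective_sample_size(thinned))
```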

Table 14.

The generated ATIIPC sample from time-between-failures data.

Table 15.

Point and interval estimates of the parameters, and optimal values of for the time-between-failures data.

Figure 8.

Kernel densities for the posterior samples of the parameters for time-between-failures data.

Figure 9.

Trace plots for the posterior samples of the parameters for time-between-failures data.

7.3. HIV Infection to AIDS

This section examines a real-world dataset that tracks the progression of HIV infection to AIDS among men over nearly 15 years. The data, derived from the US Centers for Disease Control and Prevention (CDC), highlight that by 1996, of diagnosed adult AIDS cases in the United States were attributed to close contact with HIV-positive individuals. Furthermore, of the new AIDS cases reported in that same year were related to similar exposures. Among the , people who also used injection drugs accounted for of the cumulative cases and of new cases.

This dataset, collected around the advent of combination antiretroviral therapy in 1996, has been cited frequently, including in [33,34], for its value in understanding disease progression. Some observations correspond to patients whose results were censored or who did not develop AIDS during the study period. In this analysis, we applied the SSPALT model to a predetermined number of observed failures, 150, out of the complete dataset of 222 individuals. These observations were extracted from [7].

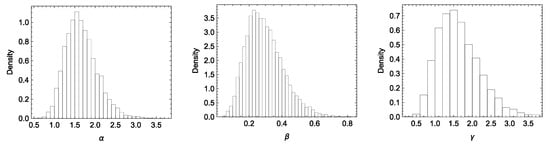

To confirm that the PHL distribution is a suitable model for the complete dataset, we performed a goodness-of-fit analysis, which establishes the PHL distribution as the baseline model before more advanced modeling is applied. Table 16 presents the fitting results, including the MLEs of the parameters, the KS distance, and its p-value. The p-value exceeded , so the KS test provides no evidence against the PHL model, suggesting that the data are well described by the PHL distribution. This conclusion is further supported by Figure 10, which displays the estimated pdf overlaid on the histogram of the data, the estimated survival function compared with the Kaplan–Meier curve, and QQ-plots to assess the distributional fit.
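The confidence intervals reported with the MLEs are asymptotic (Wald-type) intervals, θ̂ ± z_{1−γ/2}·se(θ̂). The standard errors are the square roots of the diagonal of the inverse observed Fisher information, that is, the Hessian of the negative log-likelihood at the MLE. The sketch below makes simplifying assumptions: it uses the complete-data PHL likelihood rather than the censored SSPALT likelihood, and it uses a central finite-difference Hessian, whereas analytic second derivatives could be used instead. The helper names and simulated data are hypothetical.

```python
import numpy as np
from scipy import optimize, stats

def phl_nll(params, x):
    """Negative log-likelihood of the PHL model for complete data x."""
    alpha, lam = params
    z = lam * np.power(x, alpha)
    return -np.sum(np.log(2 * alpha * lam) + (alpha - 1) * np.log(x) - z - 2 * np.log1p(np.exp(-z)))

def num_hessian(f, p, h=1e-4):
    """Central finite-difference Hessian of a scalar function f at p."""
    p = np.asarray(p, dtype=float)
    k = p.size
    H = np.empty((k, k))
    for i in range(k):
        for j in range(k):
            ei = np.zeros(k); ei[i] = h
            ej = np.zeros(k); ej[j] = h
            H[i, j] = (f(p + ei + ej) - f(p + ei - ej) - f(p - ei + ej) + f(p - ei - ej)) / (4 * h * h)
    return H

def asymptotic_ci(x, level=0.95):
    """MLEs of (alpha, lam) with Wald intervals from the observed information matrix."""
    x = np.asarray(x, dtype=float)
    res = optimize.minimize(lambda q: phl_nll(np.exp(q), x), x0=np.zeros(2), method="Nelder-Mead")
    mle = np.exp(res.x)
    cov = np.linalg.inv(num_hessian(lambda p: phl_nll(p, x), mle))  # inverse observed information
    se = np.sqrt(np.diag(cov))
    z = stats.norm.ppf(0.5 + level / 2)
    return mle, mle - z * se, mle + z * se

u = np.random.default_rng(11).uniform(size=400)
x_sim = (np.log((1 + u) / (1 - u)) / 0.5) ** (1 / 2.0)  # simulated PHL data, alpha = 2, lam = 0.5
print(asymptotic_ci(x_sim))
```

Wald intervals on the natural scale can extend below zero for small samples. Computing the interval on the log scale and then exponentiating is a common alternative that keeps the bounds positive.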

Table 16.

The MLE, confidence interval, KS statistic, and its p-value for HIV infection to AIDS data.

Figure 10.

Fitting plots of PHL distribution to HIV infection to AIDS data.

To generate ATIIPC data under SSPALT, we set the stress change time to and the experiment duration to . The number of failures was fixed at , out of a total of observations, with the censoring scheme . The generated data are presented in Table 17. Because the observations extended beyond the predetermined duration of , the censoring scheme was adjusted to . The MLEs, Bayesian estimates, and 95% confidence intervals are shown in Table 18. Kernel density plots and trace plots (Figure 11 and Figure 12) indicate that the MCMC chains for , , and mix well and converge to their posterior distributions. This analysis illustrates the application of these methods in medical research: by modeling disease progression while accounting for censored data, the findings can guide treatment strategies and improve patient outcomes. This is particularly relevant in long-term follow-up studies, where censoring is common.
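Each application reports optimal stress change times under the A- and D-optimality criteria. A-optimality minimizes the trace of the inverse Fisher information, which is the sum of the asymptotic variances. D-optimality maximizes the determinant of the Fisher information. Because the PHL/ATIIPC information matrix is not restated in this section, the sketch below substitutes a simpler model so that the search over τ can be shown end to end. This substitute model is an assumption and is not the model of this study. It uses exponential lifetimes with rate λ at normal stress, a tampered random variable model with acceleration factor β after τ, and Type-I censoring at η. For this model the per-unit expected information for (λ, β) has entries (p₁+p₂)/λ², p₂/(βλ), and p₂/β². Here p₁ = 1 − e^{−λτ} is the probability of failing before τ, and p₂ = e^{−λτ}(1 − e^{−βλ(η−τ)}) is the probability of failing in (τ, η]. It follows that det I = p₁p₂/(λβ)². The planning values are hypothetical.

```python
import numpy as np

def fisher_info(tau, lam, beta, eta):
    """Expected per-unit Fisher information for (lam, beta).

    Illustrative stand-in model, NOT the PHL/ATIIPC model of this study:
    exponential lifetimes with rate lam at normal stress, a tampered random
    variable model with acceleration factor beta after tau, and Type-I
    censoring at eta > tau.
    """
    p1 = 1.0 - np.exp(-lam * tau)                                        # P(fail before tau)
    p2 = np.exp(-lam * tau) * (1.0 - np.exp(-beta * lam * (eta - tau)))  # P(fail in (tau, eta])
    return np.array([[(p1 + p2) / lam**2, p2 / (beta * lam)],
                     [p2 / (beta * lam), p2 / beta**2]])

def optimal_tau(lam, beta, eta, grid_size=999):
    """Grid search for the A-optimal and D-optimal stress change times."""
    taus = np.linspace(0.0, eta, grid_size + 2)[1:-1]  # interior points of (0, eta)
    a_crit = [np.trace(np.linalg.inv(fisher_info(t, lam, beta, eta))) for t in taus]
    d_crit = [np.linalg.det(fisher_info(t, lam, beta, eta)) for t in taus]
    return taus[int(np.argmin(a_crit))], taus[int(np.argmax(d_crit))]

# Hypothetical planning values for the parameters and the test duration.
tau_a, tau_d = optimal_tau(lam=1.0, beta=2.0, eta=3.0)
print(f"A-optimal tau = {tau_a:.3f}, D-optimal tau = {tau_d:.3f}")
```

In practice, the planning values of the parameters would come from prior information or preliminary estimates. For the PHL model under ATIIPC, the closed-form matrix above would be replaced by the corresponding expected or observed information, typically evaluated numerically.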

Table 17.

The generated ATIIPC sample from HIV infection to AIDS data.

Table 18.

Point and interval estimates of the parameters, and optimal values of for the HIV infection to AIDS data.

Figure 11.

Kernel densities for the posterior samples of the parameters for HIV infection to AIDS data.

Figure 12.

Trace plots for the posterior samples of the parameters for HIV infection to AIDS data.

8. Conclusions

In this study, we applied the SSPALT model to units whose lifetimes follow the PHL distribution under the ATIIPC scheme. Statistical inferences for the model parameters were derived using maximum likelihood and Bayesian estimation under symmetric and asymmetric loss functions, with the Bayesian estimates computed via Lindley’s approximation and the MCMC technique. Additionally, we determined optimal stress change times using two optimality criteria, A-optimality and D-optimality, to improve the efficiency of the testing procedure. A comprehensive Monte Carlo simulation study was conducted to assess the performance of the estimation methods and the optimality criteria across various scenarios. The results indicate that all estimation methods perform well; however, we recommend the MLE method, since it offered the most consistent and reliable performance across all scenarios. The optimal stress change times obtained under the A-optimality and D-optimality criteria were relatively close, suggesting that the optimal design is robust to the choice of criterion.

To further illustrate the applicability of the proposed model, we analyzed three datasets under SSPALT. The analyses showed how the ATIIPC scheme responds when observations extend beyond the predetermined experiment duration and how the censoring scheme is adjusted accordingly. We also determined the optimal stress change time for each dataset. The findings indicate that the PHL distribution, combined with the SSPALT model under ATIIPC, provides a flexible and efficient framework for such complex experimental conditions.

While this study explores the SSPALT model’s effectiveness under the PHL distribution and the ATIIPC scheme, several limitations should be noted. The analysis assumes PHL-distributed lifetimes, and extending the model to other distributions could broaden its applicability. The optimal stress change time is determined numerically because no closed-form solution is available; future work could explore approximate analytical methods to improve computational efficiency. Additionally, the robustness of the model under varying levels of censoring, small sample sizes, and alternative prior assumptions in the Bayesian framework remains to be investigated. Extending the study to adaptive stress-testing schemes and real-time monitoring could also provide further practical insights. Incorporating covariates, such as environmental factors and material properties, into the SSPALT model to study the effect of external variables on lifetimes is another open direction. Lastly, validating the proposed methods on diverse real-world datasets and across industries would further demonstrate their generalizability and reliability.

Author Contributions

Conceptualization, M.M.E.-A.; Methodology, M.M.E.-A.; Software, M.M.E.-A.; Validation, H.H.A.; Formal analysis, M.M.E.-A. and H.H.A.; Investigation, M.M.E.-A. and H.H.A.; Resources, M.M.E.-A.; Data curation, M.M.E.-A. and H.H.A.; Writing—original draft, M.M.E.-A.; Writing—review and editing, H.H.A.; Funding acquisition, H.H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia [GRANT No. KFU250266].

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- DeGroot, M.; Goel, P. Bayesian estimation and optimal designs in partially accelerated life testing. Nav. Res. Logist. Q. 1979, 26, 223–235.

- Nelson, W. Accelerated life testing-step-stress models and data analyses. IEEE Trans. Reliab. 1980, 29, 103–108.

- Kundu, D.; Ganguly, A. Analysis of Step-Stress Models: Existing Results and Some Recent Developments; Academic Press: Cambridge, MA, USA, 2017.

- Abd-Elfattah, A.; Hassan, A.; Nassr, S. Estimation in step-stress partially accelerated life tests for the Burr type XII distribution using type I censoring. Stat. Methodol. 2008, 5, 502–514.

- Abdel-Hamid, A.; Al-Hussaini, E. Inference and optimal design based on step—Partially accelerated life tests for the generalized Pareto distribution under progressive type-I censoring. Commun. Stat.-Simul. Comput. 2015, 44, 1750–1769.

- Ismail, A. On designing step-stress partially accelerated life tests under failure-censoring scheme. Proc. Inst. Mech. Eng. Part O J. Risk Reliab. 2013, 227, 662–670.

- Ahmad, H.H.; Ramadan, D.A.; Almetwally, E.M. Tampered Random Variable Analysis in Step-Stress Testing: Modeling, Inference, and Applications. Mathematics 2024, 12, 1248.

- Ahmad, H.H.; Almetwally, E.M.; Ramadan, D.A. Competing Risks in Accelerated Life Testing: A Study on Step-Stress Models with Tampered Random Variables. Axioms 2025, 14, 32.

- Balakrishnan, N.; Aggarwala, R. Progressive Censoring: Theory, Methods, and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2000.

- Balakrishnan, N. Progressive censoring methodology: An appraisal. Test 2007, 16, 211–259.

- Ng, H.T.; Kundu, D.; Chan, P. Statistical analysis of exponential lifetimes under an adaptive Type-II progressive censoring scheme. Nav. Res. Logist. 2009, 56, 687–698.

- Kamal, M.; Rahman, A.; Zarrin, S.; Kausar, H. Statistical inference under step stress partially accelerated life testing for adaptive type-II progressive hybrid censored data. J. Reliab. Stat. Stud. 2021, 14, 585–614.

- Alotaibi, R.; Almetwally, E.; Hai, Q.; Rezk, H. Optimal test plan of step stress partially accelerated life testing for alpha power inverse Weibull distribution under adaptive progressive hybrid censored data and different loss functions. Mathematics 2022, 10, 4652.

- Alam, I.; Kamal, M.; Rahman, A.; Nayabuddin. A study on step stress partially accelerated life test under adaptive type-II progressive hybrid censoring for inverse Lomax distribution. Int. J. Reliab. Saf. 2024, 18, 1–18.

- Nassar, M.; Nassr, S.; Dey, S. Analysis of burr Type-XII distribution under step stress partially accelerated life tests with Type-I and adaptive Type-II progressively hybrid censoring schemes. Ann. Data Sci. 2017, 4, 227–248.

- Zhang, H.; Wu, J.; Gui, W. Inferences on the generalized inverted exponential distribution in constant stress partially accelerated life tests using generally progressively Type-II censored samples. Appl. Sci. 2024, 14, 6050.

- Krishnarani, S. On a Power Transformation of Half-Logistic Distribution. J. Probab. Stat. 2016, 2016, 2084236.

- Alomani, G.; Hassan, A.; Al-Omari, A.; Almetwally, E. Different estimation techniques and data analysis for constant-partially accelerated life tests for power half-logistic distribution. Sci. Rep. 2024, 14, 20865.

- Abu El Azm, W.; Almetwally, E.; Naji AL-Aziz, S.; El-Bagoury, A.; Alharbi, R.; Abo-Kasem, O. A New Transmuted Generalized Lomax Distribution: Properties and Applications to COVID-19 Data. Comput. Intell. Neurosci. 2021, 2021, 5918511.

- Suprawhardana, M.; Prayoto, S. Total time on test plot analysis for mechanical components of the RSG-GAS reactor. Atom Indones 1999, 25, 81–90.

- Oguntunde, P.; Khaleel, M.; Okagbue, H.; Odetunmibi, O. The Topp–Leone Lomax (TLLo) distribution with applications to airbone communication transceiver dataset. Wirel. Pers. Commun. 2019, 109, 349–360.

- El-Awady, M.; Abd El-Monsef, M.; Elbaz, I.M. Exponentiated half-logistic Weibull distribution with reliability inference. Qual. Reliab. Eng. Int. 2024, 40, 1875–1903.

- Lindley, D. Approximate bayesian methods. Trab. Estadística Investig. Oper. 1980, 31, 223–245.

- Robert, C.; Casella, G. Monte Carlo Statistical Methods; Springer: Berlin/Heidelberg, Germany, 1999; Volume 2.

- Xiaolin, S.; Pu, L.; Yimin, S. Inference and optimal design on step-stress partially accelerated life test for hybrid system with masked data. J. Syst. Eng. Electron. 2018, 29, 1089–1100.

- Abdel-Hamid, A.; Hashem, A. Inference for the exponential distribution under generalized progressively hybrid censored data from partially accelerated life tests with a time transformation function. Mathematics 2021, 9, 1510.

- Zhang, W.; Gui, W. Statistical inference and optimal design of accelerated life testing for the Chen distribution under progressive type-II censoring. Mathematics 2022, 10, 1609.

- Alrumayh, A.; Weera, W.; Khogeer, H.A.; Almetwally, E.M. Optimal analysis of adaptive type-II progressive censored for new unit-lindley model. J. King Saud-Univ.-Sci. 2023, 35, 102462.

- Newer, H.; Abd-El-Monem, A.; Al-Shbeil, I.; Emam, W.; Nower, M. Optimal test plans for accelerated life tests based on progressive type-I censoring with engineering applications. Alex. Eng. J. 2024, 87, 604–621.

- Balakrishnan, N.; Sandhu, R. A simple simulational algorithm for generating progressive Type-II censored samples. Am. Stat. 1995, 49, 229–230.

- Zhu, Y. Optimal Design and Equivalency of Accelerated Life Testing Plans; Rutgers The State University of New Jersey, School of Graduate Studies: New Brunswick, NJ, USA, 2010.

- Murthy, D.P.; Xie, M.; Jiang, R. Weibull Models; John Wiley & Sons: Hoboken, NJ, USA, 2004.

- Dukers, N.; Renwick, N.; Prins, M.; Geskus, R.; Schulz, T.; Weverling, G.; Coutinho, R.; Goudsmit, J. Risk factors for human herpesvirus 8 seropositivity and seroconversion in a cohort of homosexual men. Am. J. Epidemiol. 2000, 151, 213–224.

- Xiridou, M.; Geskus, R.; De Wit, J.; Coutinho, R.; Kretzschmar, M. The contribution of steady and casual partnerships to the incidence of HIV infection among homosexual men in Amsterdam. Aids 2003, 17, 1029–1038.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).